#i not sure what the yml is for

Text

BIG NEWS: there is a new f1 pet account on the block! Zhou made an account on instagram for 玉米 (Yùmǐ) or Sweetcorn <3

#following the .jpg format i see#zhou guanyu#zg24#f1#f1blr#big news for the formula 1 world#she is so cute!!!#i can more pics of her form zhou account#a little baby#might need to make a post of all f1 pets soon#i not sure what the yml is for#if someone who know could tell me that would be great :)#stake f1 team#alfa romeo#big news for annoying people#that me i’m the annoying people#team zhou

47 notes


Note

My 3tan Roman Empire…

All of Forfeit. I have that entire chapter bouncing around in my head all the damn time. I think about them constantly and akdjksakjsal nope still not over it.

Also the “YML” in Busted. That shit had me weak and I dunno if we’re still spoiler-ing it or not but WE ALL KNOW WHAT IT COULD MEAN.

😩😩

GODDDDD thank you for mentioning forfeit.. that is still my baby of the series. So raw, and probably my rawest. A piece of my soul is in that one for sure.

YOU SAID ITTTTT I was waiting for someone to mention that one specific line I’m screaming😭

what’s your 3tan roman empire?

12 notes


Text

What is Docker Compose: Example, Benefits and Basic Commands - ACEIT

What is Docker Compose?

Docker

An easy-to-use Linux container technology

Docker image format

It helps in application packaging and delivery

According to Top Engineering Colleges in Jaipur Rajasthan, Docker is a tool that packages an application and its dependencies in a virtual container that can run on any Linux server. This gives flexibility and portability in where the application runs: on-premises, public cloud, private cloud, bare metal, and so on. Compose works in all environments (production, staging, development, testing, and CI workflows), and with Compose you use a YAML file to configure your application’s services.

Docker Vs Virtualization

Positive aspects of Docker:

Lighter than virtual machines.

Docker images are small compared with virtual machine images.

Deploying and scaling is relatively easy.

Containers have less startup time.

One drawback: containers are less secure than virtual machines.

Technologies Behind Docker

Control groups:

Control groups (cgroups) are another key component of Linux containers. With cgroups we can implement resource accounting and limits, and ensure that each container gets its fair share of memory, CPU, and disk I/O. Thanks to cgroups, a single container cannot bring the system down by exhausting resources.

Union file systems:

A union file system merges a read-only part and a writable part. Docker images are made up of such layers.

Namespaces: They create an isolated workspace for each process. When you run a container, Docker creates a set of namespaces for that container.

SELinux: It provides secure separation of containers by applying SELinux policy and labels.


Capabilities:

By default, Docker drops all capabilities except those needed, so "root" within a container has fewer privileges than the real "root". The best practice for users is to remove all capabilities except those explicitly required for their processes, so that even if an intruder manages to escalate to root within a container, it is much harder to do serious damage.

Components

Docker Images

Docker containers

Docker Hub

Docker Registry

Docker daemon

Docker client

Dockerfile

It is a text document that contains all the commands a user could call on the command line to assemble an image. Docker can build images by reading the instructions in a Dockerfile.

Let's talk about real-life applications first!

One application consists of multiple containers, and one container often depends on another, so startup order matters. The process involves building the containers and then deploying them with long docker run commands, and the complexity grows in proportion to the number of containers involved.

Docker Compose

According to Private Colleges of Engineering in Jaipur Rajasthan, Docker Compose is a tool for defining and running multi-container Docker applications. A Compose file is a YAML (.yml) file that contains information about how to build and deploy the containers. Compose is also integrated with Docker Swarm, which competes with Kubernetes.

Conclusion:

Best Engineering Colleges in Jaipur Rajasthan says the big advantage of using Compose is that you can define your application stack in a file, keep it at the root of your project repo (it’s now version controlled), and easily enable someone else to contribute to your project. Someone would only need to clone your repo and start the Compose app. In fact, you might see quite a few projects on GitHub/GitLab doing exactly this now. Compose works in all environments: production, staging, development, testing, as well as CI workflows. It also has commands for managing the whole life cycle of your application:

Start, stop and rebuild services

View the status of running services

Stream the log output of running services

Run a one-off command on a service

0 notes

Text

What Is Software Testing? Interpretation, Fundamentals & Kind

Ninja Training For Software Program Testers.


Content

Certified Software Application Test Automation Designer.

Automation Testing Resources.

Test Automation With Selenium Webdriver.

Top Tips For Learning Java Programming.

Develop A Junit Examination Class

Certified Software Test Automation Designer.

How many days will it take to learn Java?

Bottom line -- beyond the most elementary, the math you need will come from the problem domain you're programming in. NONE of that needs to be a barrier to learning Java development. If you can learn Java, you can probably learn more math if you need it -- just don't try to do both at the same time.

However, this is discouraged, and using one browser per node is considered best practice for optimum performance. You can then use the TestNG library to run your tests on multiple nodes in parallel as usual. The simplest way to use these in a local Selenium Grid is to build a Docker Compose file within the root directory of your project. Name the file docker-compose.yml to keep things simple.

Java 7 can be installed on Lion and Mountain Lion to run applets. Lion and Mountain Lion can have both Java 6 and Java 7 installed simultaneously.

Plus, there will be better support for Docker, parallel testing will be included natively, and it will provide a more informative UI. Request tracing with Hooks will also help you to debug your grid. As any test automation engineer knows, waits are crucial to the stability of your test automation framework. They can also speed up your tests by making any sleeps or pauses redundant, and overcome slow network and cross-browser issues.

Automation Testing Resources.

Is Java a good career choice?

Similar to COBOL, thousands of critical enterprise systems have been written in Java and will need to be maintained and enhanced for decades to come. I'd be surprised if it's not around for at least another 15 years. But it will change, and is changing.

However, as these are often used to create screenshots of a single element, it is worth knowing that there will also be an API command to capture a screenshot of an element in Selenium 4. The Selenium Grid will be more stable and easier to set up and manage in Selenium 4. Users will no longer need to set up and start hubs and nodes separately, as the grid will act as a combined node and hub.

To get up and running, you first need to have Docker and Docker Compose installed on your machine. If you're running Windows 10 or a Mac, they will both be installed through Docker Desktop. Selenium Grid is notoriously difficult to set up, unstable, and difficult to either deploy or version control on a CI pipeline. A much easier, more maintainable, and more stable way is to use the pre-built Selenium Docker images. This is important to ensuring the continued widespread adoption of their framework within their company.

In general, bloated, outdated frameworks fall out of fashion quickly. He started at IBM, moved to EADS, then Fujitsu, and now runs his own company.

Below are some tips to make your waits more resilient. To create a stable version of the Grid for your CI pipeline, it's also possible to deploy your Grid onto Kubernetes or Swarm. This ensures that any Docker containers are quickly restored or replaced if they fail. It is worth noting that it is possible to have multiple browsers running on each node.

Test Automation With Selenium Webdriver.

I use TestNG as it's specifically designed for acceptance tests, while frameworks such as JUnit are commonly used for unit testing.

Another great framework that is well worth investigating is Spock, as it's easy and highly expressive to read.

I would also recommend setting a date to do this at least once a year, although ideally it would be every six months.

Sleep will always pause for a set amount of time before executing some code, while wait will only pause execution until an expected condition occurs or it times out, whichever comes first.

You should never use sleep in a test automation framework, as you want your tests to run as fast as possible.

Google's Truth assertion library is also a great way to write readable tests.


Check to make sure that you have the recommended version of Java installed for your operating system. I wrote a book that gets testers started with Java fast, is easy to follow, and has examples related to their work. A driver.switchTo().parentFrame() method has been added to make frame navigation easier. WebElement.getSize() and WebElement.getLocation() are now replaced with a single method, WebElement.getRect().


Apple did not release an Update 38 for the Mac; they maxed out at Update 37. On Windows, the Java runtime may or may not be pre-installed; the decision is left up to the hardware manufacturer. A Java version 6 runtime was pre-installed by Apple on OS X Leopard and Snow Leopard, but starting with Lion, Apple stopped pre-installing Java. Java 6 can be installed on Lion and Mountain Lion, but it will not run applets.

Top Tips For Learning Java Programming.

You code once, and the JVM does all the work of making sure your new program runs smoothly on any platform, whether Windows, Mac, Linux or Android mobile. Java is one of the most popular programming languages in use, since it works across computer platforms without needing to be recompiled for each one. Get access to 4,000+ top Udemy courses anytime, anywhere.

Check to ensure that you have the recommended version of Java installed on your Windows computer, and identify any versions that are out of date and should be uninstalled. We are unable to verify if Java is currently installed and enabled in your browser.

You do not need to have experience of doing this as your expertise of the technology will certainly be enough (we'll help you with the rest till you're up to speed). Teaching is our enthusiasm and we develop every training course so you can go back to square one, understanding nothing regarding a subject as well as come to be a specialist after the program as well as can deal with enterprise projects. You will certainly obtain the best in course assistance from the instructor for any concern you have actually connected to the training course.

Tip #3: Chrome DevTools: Simulating Network Conditions

Where can I practice Java?

JavaScript can be used to do monotonous things like creating animation in HTML. In short, when it comes to how each programming language is used, Java is typically used for all server-side development, while creating client-side scripts for tasks such as JS validation and interactivity is reserved for.

Toptal handpicks leading Java programmers to suit your requirements. There's likewise a creative side to the role as you will certainly design training course content as well as add ideas for coding difficulties for the learners.

1 note


Text

Bird cages!

HOKAY, SO.

I’m finally going to upgrade the ‘tiel cages in the next couple of months! (Once I paint my room and finish some other things around the house.) I have spent a LOT of time looking at cages (both online & in person) over the last days/weeks, and I’ve mostly narrowed it down to these two.

So I’m looking for input! If anybody has any experience with either (or similar ones)! (FWIW, I think I read every review on every site of like a million cages--every cage out there seems to have SOME bad reviews about the quality, but I tried to choose ones where MOST of the bad reviews were based on user error or personal preference things.) Keep in mind I need two, because Keuka & Tioga do not like each other and can’t be housed together.

A&E Elegant Flight Cage

Pros: good size (there are three sizes of this--the one I’m looking at is the medium one, 32″W x 21″D x 61″H); seems very sturdy; nice looking; nice stainless steel food cups; nice shelf. I was able to check out the smaller version at a local bird store and I liked it. (ALSO, a potentially important note: Wayfair has their “own brand” of bird cages, and has this exact cage for only $205. I’m like 95% sure it IS the A&E cage, just rebranded (since it’s not like Wayfair actually MANUFACTURES their own stuff). So I could potentially get this for only $205.)

Cons: Those gaps above & below the door and by the food dishes are weird? Probably not big enough to be a problem for tiels, but? I read one single review about screws sticking out near the food cups (no one else seemed to have this problem though)?

Avian Adventures Loro Flight Cage

Pros: AA has probably the best reputation of all of the brands other than Kings; I like their no-screws approach to cage assembly (this is a big pro, actually--Gwen’s cage is like this, and I LOVE how I was able to pop it apart to store it and then pop it together in like 30 seconds...not that I will probably need to disassemble these much/if ever, but hey); the cage bars are supposed to be extra heavy-duty (also a big plus; I HATE flimsy cage bars with the fiery passion of a thousand suns). Would fit a little better in my space width-wise (though a little worse depth-wise, so that’s kind of a toss up--it’s 30W x 24D x 72H). Comes with a cool perch, I think.

Cons: More expensive--most places it’s $300 or more. The cheapest I found was for $260ish, which isn’t terrible, but still. I don’t like the look of this one as much--it looks bulky (mainly the legs, but also the square-ness. also the top roof thing, but that’s removable at least); I don’t like that the cage can’t detach from the legs; I’ve heard reviews that the casters are cheap (but maybe that’s changed?); the shelf underneath seems like it’s too high up to really be useful (for my tall food containers/plastic storage bin). I wasn’t able to check this one out in person, which always makes me iffy. This is a little thing, but I read reviews about the cups fitting REALLY snugly into the holders and being hard to get out--that would be annoying.

If you have any other suggestions (that I somehow haven’t seen/considered), also feel free to recommend! My basic requirements (and the reasons I chose the above ones) are under the cut, so I don’t completely kill everyone’s dash.

-Size (big). If I’m going to spend hundreds of dollars upgrading them, I want it to be significantly bigger than their current cages, not just a little bigger. Ideally something that could be classified as a “flight cage.” That said, with the space I have, the max width I can go to would be about 32″ (and 30 or 31″ would be preferable) and I wouldn’t want it to be much deeper than 23-24″ (21″ish is preferable). I need two of these, remember.

-General design things. Obviously, these are cockatiels, so bar spacing no more than 1/2 inch. Big enough door that they want to come out. Enough horizontal space (more important than height, though obviously there’s only so much I can do there).

-Small design things, like: most of the brand-name flight cages for smaller birds apparently (like this A&E one, which I was heavily considering for a while) don’t have food-access doors?? Wtf?? While 99% of the time I’m letting them out when I’m changing their food anyway, for the 1% of the time someone else is watching them and needs to change their food/water without them getting out, food access doors are pretty crucial. So that’s a deal-breaker. (As an aside, aren’t big flight cages like this often used for birds who AREN’T tame? Why the HECK would THESE cages be ones that you don’t put food access doors on??)

-Also small: I would prefer one with a shelf underneath! This isn’t as much of a deal-breaker, but I WOULD def prefer one with a shelf big enough to fit their food containers/the plastic tub with their blankets & supplies. (And this did help me choose between otherwise comparable cages.)

-Brand name/sturdy/safe. There’s nothing I hate more than a flimsy cage. That’s part of the reason I’ve waited so long to upgrade them--their current cages are small, but SUCH good quality (they’re Hoei, a Japanese brand you can’t find in the States anymore). Amazon has some cheap cages from brands like Mcage and YML that have some really good reviews, and I considered them, but...they also have several reviews about them being “fine, but you get what you pay for,” etc. I debated, but in the end decided that if I’m investing in new cages, I’d rather just invest in high quality ones. So I’m mostly looking at A&E, Avian Adventures, King’s, Prevue (though Prevue is probs my least fave of these), etc.

-Related to the above, sturdiness again. I really liked the shape/dimensions of the flight cages like the A&E one without food-access doors, but the bars I think are too thin. My tiels aren’t going to bend the bars, that’s not what I’m worried about, but I like to be able to have sturdy, heavy things in there, like java wood or concrete perches, without snapping the bars. Again: flimsy cages are the bane of my existence.

Things I Do Not Want:

-Hagen Vision Cages. Unpopular opinion time! I hate them! No real shade at the cages themselves, I know a lot of people have them and really like them. I just personally do not like the way they look. And the ones I assembled back when I worked at the pet store I Did Not Like. They were frequently broken.

-Double cages that stack on top of each other. There are some really nice cages like this, but I don’t like the idea of one bird being essentially on the ground? I know my birds, and they would not be a fan. Also, I know my birds and, even though they do not like each other and can’t be housed together, Tioga would FLIP if she couldn’t see Keuka. (I don’t mind the side-by-side ones with a divider like this--that’s actually what I was PLANNING to get originally. But then I realized that most of those have the same issues as the regular large flight cages of that type--thin bars & no food access doors.)

8 notes


Text

Just How To Get A Software Application Testing Task As A Fresher?

Intro To Java For Examination Automation Training Training Course.


Content

Qualified Software Examination Automation Engineer.

Automation Testing Resources.

Test Automation With Selenium Webdriver.

Leading Tips For Knowing Java Shows.

Produce A Junit Test Class


Google's Truth assertion library is also a great way to write readable tests. Sleep will always pause for a set amount of time before executing some code, while wait will only pause execution until an expected condition occurs or it times out, whichever comes first.

Can I learn Java online?

Java can be used to create complete applications that may run on a single computer or be distributed among servers and clients in a network. It can also be used to build a small application module or applet (a simply designed, small application) for use as part of a Web page.

The ExpectedConditions class has grown over time and now covers almost every situation imaginable. This will bullet-proof your tests against most slow or cross-browser website issues. GitHub is home to over 50 million developers working together to host and review code, manage projects, and build software together.

You should never use sleep in a test automation framework, as you want your tests to run as fast as possible. I'd also suggest setting a date to do this at least annually, although ideally it would be every six months. In the article, I've included the changes that are coming in Selenium 4.0. Please do test the alpha versions of Selenium for yourself.

The randomness described here is only at the level of independent test methods. As a Software Tester / Java Developer in Test you'll focus on automation testing to ensure the quality of highly distributed systems. The system will deliver real-time sporting video content and statistical data via a multitude of formats and devices to both B2B and B2C customers. This page tells you if Java is installed and enabled in your current web browser and what version you are running. In object-oriented programming, inheritance is the mechanism of basing a class upon another class, retaining similar implementation.


3+ years working as an automated tester as part of a software development team. For many years this page had eight other methods of detecting the installed version of Java. Starting with Java 7 Update 10, the use of Java online by all installed web browsers can be disabled with a new checkbox in the security section of the Java Control Panel.

Licensed Software Test Automation Architect.

Since a string is an immutable data structure, concatenating many strings can introduce significant performance penalties. Every experienced programmer should know when to use StringBuilder instead of simply concatenating strings to improve performance in such cases.

Automation Testing Resources.

Restart your browser (close all browser windows and re-open) to enable the newly installed Java version in the browser if you recently completed your Java software installation.

Then, we can use this data to run our test under different network conditions.

When running a suite of tests, the code within the if statement can be included in a @BeforeClass method.

Within the test, the CommandExecutor will execute the command in the browser's current session.

This in turn will activate the necessary settings in Chrome's Developer Tools functionality to simulate our slow network.

The market is never short of jobs in the Java programming language; there are plenty of jobs in both Java development and automation testing using Java. And then, if required, in-memory and in-process HTTP API testing. Dynamic programming techniques for code optimization can be very useful to a developer who understands them. Unit testing is a type of software testing where individual units or components of a piece of software are tested. Unit testing of software applications is done during the development of an application and should be a well-known practice to any developer.


Can I learn Java in 6 months?

You can learn the basic in two months if you put the time into doing so. However, learning how to design and implement a real world Java application correctly based on a detailed design doc will take more experience.

Test Automation With Selenium Webdriver.

Every programmer needs to be familiar with data-sorting methods, as sorting is very common in data-analysis processes. An interface is used to define an abstract type that specifies behavior as method signatures. Instances of different types can implement the same interface, which gives a developer a way to reuse code.

Top Tips For Learning Java Programming.

Inheritance allows programmers to reuse code and is a must-know topic for every developer who works with OOP languages. The Stream API is used to process collections of objects. It supports many methods, such as mapping, filtering, and sorting, which can be pipelined to produce the desired result. Since it simplifies code and improves efficiency, it should be well known to Java developers.

Create A JUnit Test Class

To date, my experience has been that while this works, browsers incorrectly report that Java is not installed at all. There are many test and assertion frameworks that you can use to run your Selenium-powered automation tests. I use TestNG as it's specifically designed for acceptance tests, while frameworks such as JUnit are commonly used for unit testing. Another great framework that is well worth investigating is Spock, as it's easy and highly expressive to read.

The changes will be significant and you'll need to be prepared. If you want to see what's happening in the browser so you can debug your tests, it's worth having a debug version of your docker-compose.yml file that downloads the debug browser nodes. These contain a VNC server so you can watch the browser as the test runs. Software development in Java, relational databases, testing (non-functional and functional automated testing) and front-end development technology (full-stack). This course assumes that you have no programming background.

It's just a bonus if you have some experience. Whether you have never coded, have some experience, or have a lot of experience in another programming language, this course is a one-stop place for you. Within a single test method, tests will run in the sequence you wrote them in, like any Java method.

Then, we can use this information to run our test under different network conditions. Within the test, the CommandExecutor will execute the command in the browser's current session. This in turn will activate the necessary settings in Chrome's Developer Tools functionality to simulate our slow network. The code within the if statement can be included in a @BeforeClass method when running a suite of tests. Restart your browser (close all browser windows and re-open) to enable the newly installed Java version in the browser if you recently completed your Java software installation.

0 notes

Text

Building a Blog with Next.js

In this article, we will use Next.js to build a static blog framework with the design and structure inspired by Jekyll. I’ve always been a big fan of how Jekyll makes it easier for beginners to setup a blog and at the same time also provides a great degree of control over every aspect of the blog for the advanced users.

With the introduction of Next.js in recent years, combined with the popularity of React, there is a new avenue to explore for static blogs. Next.js makes it super easy to build static websites based on the file system itself with little to no configuration required.

The directory structure of a typical bare-bones Jekyll blog looks like this:

    .
    ├─── _posts/       ...blog posts in markdown
    ├─── _layouts/     ...layouts for different pages
    ├─── _includes/    ...re-usable components
    ├─── index.md      ...homepage
    └─── config.yml    ...blog config

The idea is to design our framework around this directory structure as much as possible so that it becomes easier to migrate a blog from Jekyll by simply reusing the posts and configs defined in the blog.

For those unfamiliar with Jekyll, it is a static site generator that can transform your plain text into static websites and blogs. Refer to the quick start guide to get up and running with Jekyll.

This article also assumes that you have a basic knowledge of React. If not, React’s getting started page is a good place to start.

Installation

Next.js is powered by React and written in Node.js. So we need to install npm first, before adding next, react and react-dom to the project.

    mkdir nextjs-blog && cd $_
    npm init -y
    npm install next react react-dom --save

To run Next.js scripts on the command line, we have to add the next command to the scripts section of our package.json.

    "scripts": {
      "dev": "next"
    }

We can now run npm run dev on the command line for the first time. Let’s see what happens.

    $ npm run dev

    > nextjs-blog@1.0.0 dev /~user/nextjs-blog
    > next

    ready - started server on http://localhost:3000
    Error: > Couldn't find a `pages` directory. Please create one under the project root

The compiler is complaining about a missing pages directory in the root of the project. We’ll learn about the concept of pages in the next section.

Concept of pages

Next.js is built around the concept of pages. Each page is a React component that can be of type .js or .jsx which is mapped to a route based on the filename. For example:

    File                        Route
    ----                        -----
    /pages/about.js             /about
    /pages/projects/work1.js    /projects/work1
    /pages/index.js             /
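To make the mapping in the table concrete, here is a minimal sketch of the rule in plain JavaScript. The helper name `fileToRoute` is made up for this illustration; it is not part of the Next.js API and only models the simple cases listed above.

```javascript
// Illustrative only: a simplified model of how a file under /pages maps to a route.
// `fileToRoute` is a hypothetical helper, not part of the Next.js API.
function fileToRoute(filePath) {
  const route = filePath
    .replace(/^\/pages/, '')      // drop the leading /pages directory
    .replace(/\.(js|jsx)$/, '')   // drop the .js or .jsx extension
    .replace(/\/index$/, '')      // an index file maps to its parent directory
  return route === '' ? '/' : route
}

console.log(fileToRoute('/pages/about.js'))          // /about
console.log(fileToRoute('/pages/projects/work1.js')) // /projects/work1
console.log(fileToRoute('/pages/index.js'))          // /
```

The takeaway is that the route is derived purely from the file's location, so no routing configuration is needed.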

Let’s create the pages directory in the root of the project and populate our first page, index.js, with a basic React component.

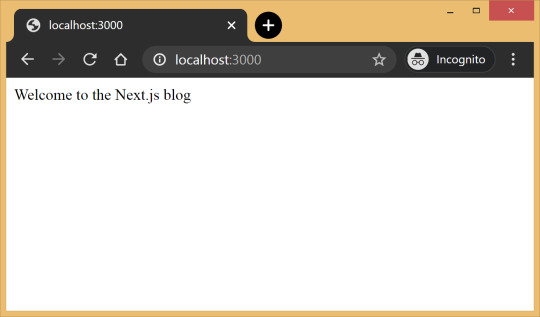

    // pages/index.js
    export default function Blog() {
      return <div>Welcome to the Next.js blog</div>
    }

Run npm run dev once again to start the server and navigate to http://localhost:3000 in the browser to view your blog for the first time.

Out of the box, we get:

Hot reloading so we don’t have to refresh the browser for every code change.

Static generation of all pages inside the /pages/** directory.

Static file serving for assets living in the /public/** directory.

404 error page.

Navigate to a random path on localhost to see the 404 page in action. If you need a custom 404 page, the Next.js docs have great information.

Dynamic pages

Pages with static routes are useful to build the homepage, about page, etc. However, to dynamically build all our posts, we will use the dynamic route capability of Next.js. For example:

    File                      Route
    ----                      -----
    /pages/posts/[slug].js    /posts/1
                              /posts/abc
                              /posts/hello-world

Any route, like /posts/1, /posts/abc, etc., will be matched by /posts/[slug].js and the slug parameter will be sent as a query parameter to the page. This is especially useful for our blog posts because we don’t want to create one file per post; instead we could dynamically pass the slug to render the corresponding post.
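As a rough mental model of that matching, the sketch below compares a route pattern with a requested path segment by segment and collects the bracketed segments as parameters. `matchDynamicRoute` is a hypothetical helper written for this explanation; it is not how Next.js implements routing internally.

```javascript
// Illustrative only: a simplified matcher for bracketed dynamic segments.
// `matchDynamicRoute` is a hypothetical helper, not how Next.js is implemented.
function matchDynamicRoute(pattern, path) {
  const patternParts = pattern.split('/').filter(Boolean)
  const pathParts = path.split('/').filter(Boolean)
  if (patternParts.length !== pathParts.length) return null

  const params = {}
  for (let i = 0; i < patternParts.length; i++) {
    const dynamic = /^\[(.+)\]$/.exec(patternParts[i])
    if (dynamic) {
      params[dynamic[1]] = pathParts[i]   // [slug] captures this segment
    } else if (patternParts[i] !== pathParts[i]) {
      return null                         // static segment mismatch
    }
  }
  return params
}

console.log(matchDynamicRoute('/posts/[slug]', '/posts/hello-world')) // { slug: 'hello-world' }
console.log(matchDynamicRoute('/posts/[slug]', '/about'))             // null
```

In a real page, the captured value is what the post component receives as the slug parameter.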

Anatomy of a blog

Now, since we understand the basic building blocks of Next.js, let’s define the anatomy of our blog.

    .
    ├─ api
    │  └─ index.js          # fetch posts, load configs, parse .md files etc
    ├─ _includes
    │  ├─ footer.js         # footer component
    │  └─ header.js         # header component
    ├─ _layouts
    │  ├─ default.js        # default layout for static pages like index, about
    │  └─ post.js           # post layout inherits from the default layout
    ├─ pages
    │  ├─ index.js          # homepage
    │  └─ posts             # posts will be available on the route /posts/
    │     └─ [slug].js      # dynamic page to build posts
    └─ _posts
       ├─ welcome-to-nextjs.md
       └─ style-guide-101.md

Blog API

A basic blog framework needs two API functions:

A function to fetch the metadata of all the posts in _posts directory

A function to fetch a single post for a given slug with the complete HTML and metadata

Optionally, we would also like all the site’s configuration defined in config.yml to be available across all the components. So we need a function that will parse the YAML config into a native object.

Since we will be dealing with a lot of non-JavaScript files, like Markdown (.md), YAML (.yml), etc., we’ll use the raw-loader library to load such files as strings to make it easier to process them.

npm install raw-loader --save-dev

Next we need to tell Next.js to use raw-loader when we import .md and .yml file formats by creating a next.config.js file in the root of the project (more info on that).

// next.config.js
module.exports = {
  target: 'serverless',
  webpack: function (config) {
    config.module.rules.push({test: /\.md$/, use: 'raw-loader'})
    config.module.rules.push({test: /\.yml$/, use: 'raw-loader'})
    return config
  }
}

Next.js 9.4 introduced aliases for relative imports which helps clean up the import statement spaghetti caused by relative paths. To use aliases, create a jsconfig.json file in the project’s root directory specifying the base path and all the module aliases needed for the project.

{
  "compilerOptions": {
    "baseUrl": "./",
    "paths": {
      "@includes/*": ["_includes/*"],
      "@layouts/*": ["_layouts/*"],
      "@posts/*": ["_posts/*"],
      "@api": ["api/index"]
    }
  }
}

For example, this allows us to import our layouts by just using:

import DefaultLayout from '@layouts/default'

Fetch all the posts

This function will read all the Markdown files in the _posts directory, parse the front matter defined at the beginning of the post using gray-matter and return the array of metadata for all the posts.

// api/index.js
import matter from 'gray-matter'

export async function getAllPosts() {
  // Keys returned by require.context look like './welcome-to-nextjs.md'
  const context = require.context('../_posts', false, /\.md$/)
  const posts = []
  for (const key of context.keys()) {
    const post = key.slice(2)
    const content = await import(`../_posts/${post}`)
    const meta = matter(content.default)
    posts.push({
      slug: post.replace('.md', ''),
      title: meta.data.title
    })
  }
  return posts
}

A typical Markdown post looks like this:

---
title: "Welcome to Next.js blog!"
---
**Hello world**, this is my first Next.js blog post and it is written in Markdown. I hope you like it!

The section delimited by --- is called the front matter. It holds the metadata of the post, such as title, permalink, tags, etc. Here’s the output of getAllPosts():

[ { slug: 'style-guide-101', title: 'Style Guide 101' }, { slug: 'welcome-to-nextjs', title: 'Welcome to Next.js blog!' } ]

Make sure you install the gray-matter library from npm first using the command npm install gray-matter --save-dev.
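For a feel of what happens to each post, here is a minimal sketch of front matter parsing and slug extraction in plain JavaScript. It is a simplified stand-in, not how gray-matter is implemented: parseFrontMatter and slugFromKey are hypothetical names, and only flat `key: value` pairs are handled.

```javascript
// Simplified stand-in for gray-matter (assumption: flat `key: value` pairs only).
function parseFrontMatter(source) {
  const match = /^---\r?\n([\s\S]*?)\r?\n---\r?\n?([\s\S]*)$/.exec(source)
  if (!match) return { data: {}, content: source }

  const data = {}
  for (const line of match[1].split(/\r?\n/)) {
    const separator = line.indexOf(':')
    if (separator === -1) continue
    const key = line.slice(0, separator).trim()
    // Strip one pair of surrounding quotes, if present
    const value = line.slice(separator + 1).trim().replace(/^["']|["']$/g, '')
    data[key] = value
  }
  return { data, content: match[2] }
}

// Keys from require.context look like './welcome-to-nextjs.md'
function slugFromKey(key) {
  return key.slice(2).replace(/\.md$/, '')
}

const post = '---\ntitle: "Welcome to Next.js blog!"\n---\n**Hello world**'
console.log(parseFrontMatter(post).data.title)
console.log(slugFromKey('./welcome-to-nextjs.md'))
```

In the real blog, gray-matter does this job and also understands full YAML, so the sketch is only meant to show the shape of the data that getAllPosts works with.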

Fetch a single post

For a given slug, this function will locate the file in the _posts directory, parse the Markdown with the marked library and return the output HTML with metadata.

// api/index.js
import matter from 'gray-matter'
import marked from 'marked'

export async function getPostBySlug(slug) {
  const fileContent = await import(`../_posts/${slug}.md`)
  const meta = matter(fileContent.default)
  const content = marked(meta.content)
  return {
    title: meta.data.title,
    content: content
  }
}

Sample output:

{ title: 'Style Guide 101', content: '<p>Incididunt cupidatat eiusmod ...</p>' }

Make sure you install the marked library from npm first using the command npm install marked --save-dev.

Config

In order to re-use the Jekyll config for our Next.js blog, we’ll parse the YAML file using the js-yaml library and export this config so that it can be used across components.

# config.yml
title: "Next.js blog"
description: "This blog is powered by Next.js"

// api/index.js
import yaml from 'js-yaml'

export async function getConfig() {
  const config = await import(`../config.yml`)
  // js-yaml 4 and later removed safeLoad; use yaml.load there instead
  return yaml.safeLoad(config.default)
}

Make sure you install js-yaml from npm first using the command npm install js-yaml --save-dev.

Includes

Our _includes directory contains two basic React components, <Header> and <Footer>, which will be used in the different layout components defined in the _layouts directory.

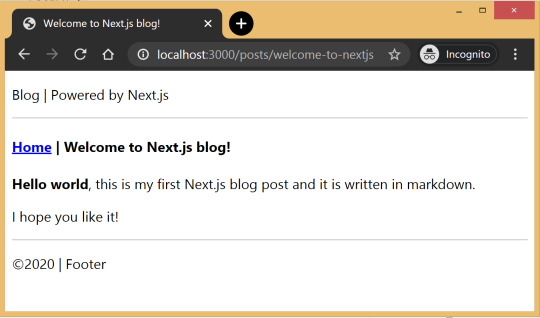

// _includes/header.js
export default function Header() {
  return <header><p>Blog | Powered by Next.js</p></header>
}

// _includes/footer.js
export default function Footer() {
  return <footer><p>©2020 | Footer</p></footer>
}

Layouts

We have two layout components in the _layouts directory. One is the <DefaultLayout> which is the base layout on top of which every other layout component will be built.

// _layouts/default.js
import Head from 'next/head'
import Header from '@includes/header'
import Footer from '@includes/footer'

export default function DefaultLayout(props) {
  return (
    <main>
      <Head>
        <title>{props.title}</title>
        <meta name='description' content={props.description}/>
      </Head>
      <Header/>
      {props.children}
      <Footer/>
    </main>
  )
}

The second layout is the <PostLayout> component that will override the title defined in the <DefaultLayout> with the post title and render the HTML of the post. It also includes a link back to the homepage.

// _layouts/post.js
import DefaultLayout from '@layouts/default'
import Head from 'next/head'
import Link from 'next/link'

export default function PostLayout(props) {
  return (
    <DefaultLayout>
      <Head>
        <title>{props.title}</title>
      </Head>
      <article>
        <h1>{props.title}</h1>
        <div dangerouslySetInnerHTML={{ __html: props.content }}/>
        <div><Link href='/'><a>Home</a></Link></div>
      </article>
    </DefaultLayout>
  )
}

next/head is a built-in component to append elements to the <head> of the page. next/link is a built-in component that handles client-side transitions between the routes defined in the pages directory.

Homepage

As part of the index page, aka homepage, we will list all the posts inside the _posts directory. The list will contain the post title and the permalink to the individual post page. The index page will use the <DefaultLayout> and we’ll import the config in the homepage to pass the title and description to the layout.

// pages/index.js
import DefaultLayout from '@layouts/default'
import Link from 'next/link'
import { getConfig, getAllPosts } from '@api'

export default function Blog(props) {
  return (
    <DefaultLayout title={props.title} description={props.description}>
      <p>List of posts:</p>
      <ul>
        {props.posts.map(function(post, idx) {
          return (
            <li key={idx}>
              <Link href={'/posts/'+post.slug}>
                <a>{post.title}</a>
              </Link>
            </li>
          )
        })}
      </ul>
    </DefaultLayout>
  )
}

export async function getStaticProps() {
  const config = await getConfig()
  const allPosts = await getAllPosts()
  return {
    props: {
      posts: allPosts,
      title: config.title,
      description: config.description
    }
  }
}

getStaticProps is called at the build time to pre-render pages by passing props to the default component of the page. We use this function to fetch the list of all posts at build time and render the posts archive on the homepage.

Post page

This page will render the title and contents of the post for the slug supplied as part of the context. The post page will use the <PostLayout> component.

// pages/posts/[slug].js
import PostLayout from '@layouts/post'
import { getPostBySlug, getAllPosts } from "@api"

export default function Post(props) {
  return <PostLayout title={props.title} content={props.content}/>
}

export async function getStaticProps(context) {
  return {
    props: await getPostBySlug(context.params.slug)
  }
}

export async function getStaticPaths() {
  let paths = await getAllPosts()
  paths = paths.map(post => ({
    params: { slug: post.slug }
  }))
  return {
    paths: paths,
    fallback: false
  }
}

If a page has dynamic routes, Next.js needs to know all the possible paths at build time. getStaticPaths supplies the list of paths that have to be rendered to HTML at build time. Setting the fallback property to false ensures that visiting a route that is not in the list of paths returns a 404 page.

Production ready

Add the following commands for build and start in package.json, under the scripts section and then run npm run build followed by npm run start to build the static blog and start the production server.

// package.json
"scripts": {
  "dev": "next",
  "build": "next build",
  "start": "next start"
}

The entire source code in this article is available on this GitHub repository. Feel free to clone it locally and play around with it. The repository also includes some basic placeholders to apply CSS to your blog.

Improvements

The blog, although functional, is perhaps too basic for most average cases. It would be nice to extend the framework or submit a patch to include some more features like:

Pagination

Syntax highlighting

Categories and Tags for posts

Styling

Overall, Next.js seems very promising for building static websites, like a blog. Combined with its ability to export static HTML, we can build a truly standalone app without the need for a server!

The post Building a Blog with Next.js appeared first on CSS-Tricks.


Building a Blog with Next.js published first on https://deskbysnafu.tumblr.com/

0 notes

Text

Building a Blog with Next.js

In this article, we will use Next.js to build a static blog framework with the design and structure inspired by Jekyll. I’ve always been a big fan of how Jekyll makes it easier for beginners to set up a blog while also giving advanced users a great degree of control over every aspect of the blog.

With the introduction of Next.js in recent years, combined with the popularity of React, there is a new avenue to explore for static blogs. Next.js makes it super easy to build static websites based on the file system itself with little to no configuration required.

The directory structure of a typical bare-bones Jekyll blog looks like this:

.
├─── _posts/          ...blog posts in markdown
├─── _layouts/        ...layouts for different pages
├─── _includes/       ...re-usable components
├─── index.md         ...homepage
└─── config.yml       ...blog config

The idea is to design our framework around this directory structure as much as possible so that it becomes easier to migrate a blog from Jekyll by simply reusing the posts and configs defined in the blog.

For those unfamiliar with Jekyll, it is a static site generator that can transform your plain text into static websites and blogs. Refer to the quick start guide to get up and running with Jekyll.

This article also assumes that you have a basic knowledge of React. If not, React’s getting started page is a good place to start.

Installation

Next.js is powered by React and written in Node.js. So we need to install npm first, before adding next, react and react-dom to the project.

mkdir nextjs-blog && cd $_
npm init -y
npm install next react react-dom --save

To run Next.js scripts on the command line, we have to add the next command to the scripts section of our package.json.

"scripts": { "dev": "next" }

We can now run npm run dev on the command line for the first time. Let’s see what happens.

$ npm run dev
> [email protected] dev /~user/nextjs-blog
> next
ready - started server on http://localhost:3000
Error: > Couldn't find a `pages` directory. Please create one under the project root

The compiler is complaining about a missing pages directory in the root of the project. We’ll learn about the concept of pages in the next section.

Concept of pages

Next.js is built around the concept of pages. Each page is a React component that can be of type .js or .jsx which is mapped to a route based on the filename. For example:

File                        Route
----                        -----
/pages/about.js             /about
/pages/projects/work1.js    /projects/work1
/pages/index.js             /
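The mapping in the table can be sketched as a small function. routeForPage is a hypothetical name used only for illustration; Next.js performs this mapping internally and covers more cases than this sketch does.

```javascript
// Hypothetical helper: derive the route for a page file, following the
// file-to-route convention shown in the table above.
function routeForPage(file) {
  const route = file
    .replace(/^\/pages/, '')      // routes are relative to the pages directory
    .replace(/\.(js|jsx)$/, '')   // drop the file extension
    .replace(/\/index$/, '')      // index files map to their parent directory
  return route === '' ? '/' : route
}

for (const file of ['/pages/about.js', '/pages/projects/work1.js', '/pages/index.js']) {
  console.log(file, '->', routeForPage(file))
}
```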

Let’s create the pages directory in the root of the project and populate our first page, index.js, with a basic React component.

// pages/index.js
export default function Blog() {
  return <div>Welcome to the Next.js blog</div>
}

Run npm run dev once again to start the server and navigate to http://localhost:3000 in the browser to view your blog for the first time.

Out of the box, we get:

Hot reloading so we don’t have to refresh the browser for every code change.

Static generation of all pages inside the /pages/** directory.

Static file serving for assets living in the /public/** directory.

404 error page.

Navigate to a random path on localhost to see the 404 page in action. If you need a custom 404 page, the Next.js docs have great information.

Dynamic pages

Pages with static routes are useful to build the homepage, about page, etc. However, to dynamically build all our posts, we will use the dynamic route capability of Next.js. For example:

File                      Route
----                      -----
/pages/posts/[slug].js    /posts/1
                          /posts/abc
                          /posts/hello-world

Any route, like /posts/1, /posts/abc, etc., will be matched by /posts/[slug].js and the slug parameter will be sent as a query parameter to the page. This is especially useful for our blog posts because we don’t want to create one file per post; instead we could dynamically pass the slug to render the corresponding post.

Anatomy of a blog

Now, since we understand the basic building blocks of Next.js, let’s define the anatomy of our blog.

.
├─ api
│  └─ index.js             # fetch posts, load configs, parse .md files etc
├─ _includes
│  ├─ footer.js            # footer component
│  └─ header.js            # header component
├─ _layouts
│  ├─ default.js           # default layout for static pages like index, about
│  └─ post.js              # post layout inherits from the default layout
├─ pages
│  ├─ index.js             # homepage
│  └─ posts                # posts will be available on the route /posts/
│     └─ [slug].js         # dynamic page to build posts
└─ _posts
   ├─ welcome-to-nextjs.md
   └─ style-guide-101.md

Blog API

A basic blog framework needs two API functions:

A function to fetch the metadata of all the posts in _posts directory

A function to fetch a single post for a given slug with the complete HTML and metadata

Optionally, we would also like all the site’s configuration defined in config.yml to be available across all the components. So we need a function that will parse the YAML config into a native object.

Since we will be dealing with a lot of non-JavaScript files, like Markdown (.md), YAML (.yml), etc., we’ll use the raw-loader library to load such files as strings to make it easier to process them.

npm install raw-loader --save-dev

Next we need to tell Next.js to use raw-loader when we import .md and .yml file formats by creating a next.config.js file in the root of the project (more info on that).

// next.config.js
module.exports = {
  target: 'serverless',
  webpack: function (config) {
    config.module.rules.push({test: /\.md$/, use: 'raw-loader'})
    config.module.rules.push({test: /\.yml$/, use: 'raw-loader'})
    return config
  }
}

Next.js 9.4 introduced aliases for relative imports which helps clean up the import statement spaghetti caused by relative paths. To use aliases, create a jsconfig.json file in the project’s root directory specifying the base path and all the module aliases needed for the project.

{
  "compilerOptions": {
    "baseUrl": "./",
    "paths": {
      "@includes/*": ["_includes/*"],
      "@layouts/*": ["_layouts/*"],
      "@posts/*": ["_posts/*"],
      "@api": ["api/index"]
    }
  }
}

For example, this allows us to import our layouts by just using:

import DefaultLayout from '@layouts/default'
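To make the alias lookup concrete, here is a rough sketch of how a paths map like the one in jsconfig.json could turn an import specifier into a project-relative path. resolveAlias is a hypothetical helper, not something Next.js exposes, and the real resolution done by the bundler handles more cases.

```javascript
// The same aliases as in jsconfig.json, written as a plain object.
const aliases = {
  '@includes/*': ['_includes/*'],
  '@layouts/*': ['_layouts/*'],
  '@posts/*': ['_posts/*'],
  '@api': ['api/index'],
}

// Hypothetical helper: resolve a specifier against the alias map.
function resolveAlias(specifier, paths = aliases) {
  for (const [pattern, targets] of Object.entries(paths)) {
    if (pattern.endsWith('/*')) {
      const prefix = pattern.slice(0, -1) // '@layouts/*' becomes '@layouts/'
      if (specifier.startsWith(prefix)) {
        const rest = specifier.slice(prefix.length)
        return targets[0].replace('*', () => rest)
      }
    } else if (specifier === pattern) {
      return targets[0]
    }
  }
  return specifier // not an alias, leave untouched
}

console.log(resolveAlias('@layouts/default'))
console.log(resolveAlias('@api'))
console.log(resolveAlias('react'))
```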

Fetch all the posts

This function will read all the Markdown files in the _posts directory, parse the front matter defined at the beginning of the post using gray-matter and return the array of metadata for all the posts.

// api/index.js
import matter from 'gray-matter'

export async function getAllPosts() {
  // Keys returned by require.context look like './welcome-to-nextjs.md'
  const context = require.context('../_posts', false, /\.md$/)
  const posts = []
  for (const key of context.keys()) {
    const post = key.slice(2)
    const content = await import(`../_posts/${post}`)
    const meta = matter(content.default)
    posts.push({
      slug: post.replace('.md', ''),
      title: meta.data.title
    })
  }
  return posts
}

A typical Markdown post looks like this:

---
title: "Welcome to Next.js blog!"
---
**Hello world**, this is my first Next.js blog post and it is written in Markdown. I hope you like it!

The section delimited by --- is called the front matter. It holds the metadata of the post, such as title, permalink, tags, etc. Here’s the output of getAllPosts():

[ { slug: 'style-guide-101', title: 'Style Guide 101' }, { slug: 'welcome-to-nextjs', title: 'Welcome to Next.js blog!' } ]

Make sure you install the gray-matter library from npm first using the command npm install gray-matter --save-dev.

Fetch a single post

For a given slug, this function will locate the file in the _posts directory, parse the Markdown with the marked library and return the output HTML with metadata.

// api/index.js
import matter from 'gray-matter'
import marked from 'marked'

export async function getPostBySlug(slug) {
  const fileContent = await import(`../_posts/${slug}.md`)
  const meta = matter(fileContent.default)
  const content = marked(meta.content)
  return {
    title: meta.data.title,
    content: content
  }
}

Sample output:

{ title: 'Style Guide 101', content: '<p>Incididunt cupidatat eiusmod ...</p>' }

Make sure you install the marked library from npm first using the command npm install marked --save-dev.

Config

In order to re-use the Jekyll config for our Next.js blog, we’ll parse the YAML file using the js-yaml library and export this config so that it can be used across components.

# config.yml
title: "Next.js blog"
description: "This blog is powered by Next.js"

// api/index.js
import yaml from 'js-yaml'

export async function getConfig() {
  const config = await import(`../config.yml`)
  // js-yaml 4 and later removed safeLoad; use yaml.load there instead
  return yaml.safeLoad(config.default)
}

Make sure you install js-yaml from npm first using the command npm install js-yaml --save-dev.

Includes

Our _includes directory contains two basic React components, <Header> and <Footer>, which will be used in the different layout components defined in the _layouts directory.

// _includes/header.js
export default function Header() {
  return <header><p>Blog | Powered by Next.js</p></header>
}

// _includes/footer.js
export default function Footer() {
  return <footer><p>©2020 | Footer</p></footer>
}

Layouts

We have two layout components in the _layouts directory. One is the <DefaultLayout> which is the base layout on top of which every other layout component will be built.

// _layouts/default.js
import Head from 'next/head'
import Header from '@includes/header'
import Footer from '@includes/footer'

export default function DefaultLayout(props) {
  return (
    <main>
      <Head>
        <title>{props.title}</title>
        <meta name='description' content={props.description}/>
      </Head>
      <Header/>
      {props.children}
      <Footer/>
    </main>
  )
}

The second layout is the <PostLayout> component that will override the title defined in the <DefaultLayout> with the post title and render the HTML of the post. It also includes a link back to the homepage.

// _layouts/post.js
import DefaultLayout from '@layouts/default'
import Head from 'next/head'
import Link from 'next/link'

export default function PostLayout(props) {
  return (
    <DefaultLayout>
      <Head>
        <title>{props.title}</title>
      </Head>
      <article>
        <h1>{props.title}</h1>
        <div dangerouslySetInnerHTML={{ __html: props.content }}/>
        <div><Link href='/'><a>Home</a></Link></div>
      </article>
    </DefaultLayout>
  )
}

next/head is a built-in component to append elements to the <head> of the page. next/link is a built-in component that handles client-side transitions between the routes defined in the pages directory.

Homepage

As part of the index page, aka homepage, we will list all the posts inside the _posts directory. The list will contain the post title and the permalink to the individual post page. The index page will use the <DefaultLayout> and we’ll import the config in the homepage to pass the title and description to the layout.

// pages/index.js
import DefaultLayout from '@layouts/default'
import Link from 'next/link'
import { getConfig, getAllPosts } from '@api'

export default function Blog(props) {
  return (
    <DefaultLayout title={props.title} description={props.description}>
      <p>List of posts:</p>
      <ul>
        {props.posts.map(function(post, idx) {
          return (
            <li key={idx}>
              <Link href={'/posts/'+post.slug}>
                <a>{post.title}</a>
              </Link>
            </li>
          )
        })}
      </ul>
    </DefaultLayout>
  )
}

export async function getStaticProps() {
  const config = await getConfig()
  const allPosts = await getAllPosts()
  return {
    props: {
      posts: allPosts,
      title: config.title,
      description: config.description
    }
  }
}

getStaticProps is called at the build time to pre-render pages by passing props to the default component of the page. We use this function to fetch the list of all posts at build time and render the posts archive on the homepage.

Post page

This page will render the title and contents of the post for the slug supplied as part of the context. The post page will use the <PostLayout> component.

// pages/posts/[slug].js
import PostLayout from '@layouts/post'
import { getPostBySlug, getAllPosts } from "@api"

export default function Post(props) {
  return <PostLayout title={props.title} content={props.content}/>
}

export async function getStaticProps(context) {
  return {
    props: await getPostBySlug(context.params.slug)
  }
}

export async function getStaticPaths() {
  let paths = await getAllPosts()
  paths = paths.map(post => ({
    params: { slug: post.slug }
  }))
  return {
    paths: paths,
    fallback: false
  }
}

If a page has dynamic routes, Next.js needs to know all the possible paths at build time. getStaticPaths supplies the list of paths that have to be rendered to HTML at build time. Setting the fallback property to false ensures that visiting a route that is not in the list of paths returns a 404 page.
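As a rough illustration of that behaviour, the sketch below builds the paths list from post metadata and checks whether a requested slug was pre-rendered. buildPaths and statusForSlug are hypothetical helpers, and the 200/404 return values only mimic what Next.js does when fallback is false.

```javascript
// Same shape as the list returned by getAllPosts() in the article.
const posts = [
  { slug: 'style-guide-101', title: 'Style Guide 101' },
  { slug: 'welcome-to-nextjs', title: 'Welcome to Next.js blog!' },
]

// Hypothetical helper: shape the posts the way getStaticPaths expects.
function buildPaths(allPosts) {
  return allPosts.map(post => ({ params: { slug: post.slug } }))
}

// Hypothetical helper: with fallback set to false, only listed slugs exist.
function statusForSlug(slug, paths) {
  return paths.some(path => path.params.slug === slug) ? 200 : 404
}

const paths = buildPaths(posts)
console.log(statusForSlug('style-guide-101', paths))
console.log(statusForSlug('does-not-exist', paths))
```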

Production ready

Add the following commands for build and start in package.json, under the scripts section and then run npm run build followed by npm run start to build the static blog and start the production server.

// package.json
"scripts": {
  "dev": "next",
  "build": "next build",
  "start": "next start"
}

The entire source code in this article is available on this GitHub repository. Feel free to clone it locally and play around with it. The repository also includes some basic placeholders to apply CSS to your blog.

Improvements

The blog, although functional, is perhaps too basic for most average cases. It would be nice to extend the framework or submit a patch to include some more features like:

Pagination

Syntax highlighting

Categories and Tags for posts

Styling

Overall, Next.js seems very promising for building static websites, like a blog. Combined with its ability to export static HTML, we can build a truly standalone app without the need for a server!

The post Building a Blog with Next.js appeared first on CSS-Tricks.

via CSS-Tricks https://ift.tt/38RTO6t

0 notes

Note

All questions for Taber and co!

actuallyaltaria said: (co being Belasco, Aracelli, and any NPCS you think are relevant here)

Sure thing! Though I’ll only do odd-numbered questions I think! (from here)

1) What’s their favorite types of art? Do any of them make any art themselves? Taber appreciates all forms of visual art but doesn’t make any herself, and idk about the rest of the party. As far as NPC, Zuzanna is a jeweller, and Helia is pretty damn artful at killing people to death

3) Who will buy the others candy and treats if they’re feeling down, and who will comfort them emotionally? Esovera once gave Taber a bottle of whiskey as a “sorry you got crushed by a dragon and nearly died” gift, so I think she counts for the first category. As for the second, Aracelli is good at voicing her emotions and comforting others when their emotions are out of whack, though she doesn’t let others do the same for her, whoops

The rest goes under a cut!

5) What does everyone do in their downtime? Belasco’s most likely to sell shit, solve puzzles, and design new weapons; Aracelli does target practice for hours on end and pets her bird; Taber spends time with her girlfriend and reads; Esovera and Nate fuck behind Esovera’s bar

7) What superpowers would they pick? I’ll just answer for Taber for this one, but I think she’d choose either invisibility or the ability to take wounds from others. She could, you know, heal those wounds instead of taking them for herself, but uh

9) Can one of them bake well? Nobody in the party has tried while in-game, but I imagine Esovera and Zuzanna can both bake decently well

Gonna skip 11 actually

13) Who enjoys parties? Who would rather stay at home? Lmao the gang is neutral leaning negative toward parties in practice, but Taber does like the idea of being at a party. Zuzanna seems to enjoy parties though, and Esovera likes throwing them! Nate would rather be at home I’m sure, and Helia is more of a rave sorta girl I think

15) Who wears cheesy, cliche, embarrassing underwear? Please please please @theraveninhisstudy tell me that Nate would wear heart-print boxers

17) How would they react to a call or text from a wrong number? Belasco would probably fuck with whoever called him and keep them on the phone to know what it is they’re calling about, unless he doesn’t know who they are and they aren’t important. Aracelli would probably just hang up immediately, as would Taber

19) Who has the worst puns? How do the others react? God I hope this is Helia

21) Who would dye their hair/dye it again? What color would they go for? The closest I can think of would be Belasco donning the Disguise Cloak to actually give him hair, otherwise I’m not sure anyone would?

23) Who is the biggest nerd of all? What makes them a massive nerd? Your fave is a nerd: Belasco [last name confidential]. Receipts:

Mama’s boy

Loves puzzle books

Thought sending a dragon tooth home to his mom was a great idea

It was not a great idea

Once won a spicy food eating contest by cheating bc crows can’t taste spicy food

Avoids swimming lessons by cheating bc he’d rather invest in a pair of Shoes Shin Guards of Water Walking

Once came down from a dissociative episode by remembering that his tiny kitten loves him

Hates shoes

Runner-up is Esovera, who gets immensely excited for holidays and decorates her bar appropriately a month in advance

25) Who is the most likely to be called an edgelord? -Yml switches campaigns just for this question-

27) What did they get made fun of for when they were younger? Aracelli got made fun of by her clan because she comes from a clan of druids but isn’t a druid herself despite all best efforts; Taber was made fun of by her cousins for her height and heritage; Belasco probably also got made fun of by his asshole cousin for his height, but in his case because he was short lmao

29) Who is the most ticklish? My gut tells me either Helia or Zuzanna and either way I need it

31) One of them buys one of those custom T-shirts where you can choose what words are written on it. What does it say? What do others think? Helia would totally have a shirt that reads “I flexed and the sleeves fell off” and Mason, you can’t argue with me about this because I won’t believe you

33) Who eats tons of plates at an all-you-can-eat buffet? Who gets one small plate, and not much more? Depends on what’s being offered! Aracelli will eat lots if it’s not fancy food, meanwhile Taber will do the same if it Is fancy. Honestly I think Belasco would eat a medium-sized plate of whatever

EDIT:
Shay: aracelli is absolutely a five dollar all you can eat buffet kind of girl
Shay: she’d eat a plate of ten slices of pizza at cicis no problem

35) How would one of them react to another getting them flowers? Taber has already given her gf flowers, specifically silk flowers because Zuzanna is allergic to the real deal. Zuzanna was delighted when she learned who her secret admirer was ;0

37) Who would eat ghost peppers without breaking (too much of) a sweat? Belasco, see point 23

39) Who’s that one person who loves candy corn? Taber probably likes it, and I can see Esovera liking it too if only during Halloween Nightmare’s Eve season

41) Who gets hassled the most to fix computers/technology? I feel like that’s probably Aracelli in a modern AU, since she’s so good at fixing things

EDIT:
Shay: also aracelli Isn’t a tech person so: she could fix your car, not your computer ;0

I will thus edit my statement to say probably Belasco ;0

43) Who would be the crazy cat person? Belasco

45) How do they tell their crushes they like them, if at all? Taber gave Zuzanna a flower through Zuzanna’s best friend, disappeared for like two weeks, then came back and honest to god meant to tell Zuzanna herself that she sent the flower, but she’s a bi disaster and wasn’t able to get the words out, leaving Zuzanna to spot the flower on her own. That went pretty well though all things considered

Helia meanwhile is a pan disaster who made a bit of a show of stammering out that she liked Aracelli, and could they go out together sometime? Aracelli thought it was cute though

47) Who stims? What’s their favorite method of stimming? Aracelli stims with archery practice, petting her bird, sitting upside down and occasionally by lying facedown on her bed

49) Who is the sassiest of all? Belasco “Are you just going to tell me to go fuck myself? Because if so, you’re derivative and boring” Belasco

51) What is that one interest they could spend hours talking about? Who listens? Aracelli’s special interest is bows/ archery!! Honestly if she wants to talk about it Taber would be happy to listen as a fellow admirer of weapons, but the problem is it hasn’t come up yet. God damn that would be cute

53) Who makes lunch for who? The gang buys food at Esovera’s bar on the regular, which I say should count toward our rent

55) Who’s not strong enough to open tight jars? Who has to open it for them?

Belasco: 9 STR

Aracelli: 11 STR

Taber: 18 STR

Take a guess

57) What’s their ideal first date? What was/will their actual first date be like? I’ll just answer the second question of these!

Aracelli and Helia’s first date was an attempt to eat at a fancy restaurant, both of them bailing because it was too uppity for both of them, then going to Helia’s favourite bar and kicking ass in the fighting pit because why not. Aracelli kicked the most ass and I’m so sad I wasn’t actually present for that

Taber’s and Zuzanna’s was also an outing to a fancy restaurant, less fancy than the one the other two picked but still a good choice for these two rich kids. They chatted over wine and Taber walked Zuzanna home and got a kiss on the cheek before Zuzanna left. It was very cute

59) Who would study for days for a test? Who doesn’t do a lick of studying? Honestly I feel like all of our kids would be pretty good as far as studying!

61) Does anyone have an unusually loud or quiet voice? Does anyone never speak sometimes/at all? Aracelli has gone nonverbal a couple of times, and in general her voice is pretty quiet. Taber keeps hers quiet too, as well as pitching it up. Belasco kinda just talks normally, not loud or quiet

63) Who comes to an event super late? Who comes to it super early? Just gonna answer for Taber for this one too! She’d rather be early to an event than late, she’d definitely be super early if given the chance. Also if Nate is late for an event it’s because he didn’t want to come

65) What’s the most annoying thing about living with these characters/people? Aracelli sometimes just doesn’t wear a shirt, and Belasco is cool with joining in on the naked party, too. That’s just how it is sometimes. Taber is always flustered when this happens. Also Belasco will be subtle and pull personal info out of you/ eavesdrop on all sorts of stuff he shouldn’t. Taber sometimes chills in her full suit of paladin armour for no gd reason other than that she likes wearing it, and it baffles the other two so much

67) Who sees an empty playground and can’t resist playing around for awhile? Probably Aracelli and Helia tbh, and that would also be a really cute date

69) Who’s the one that “you can’t take anywhere”? You can’t take Taber to anyplace where you wanna be stealthy, you can’t take Belasco anywhere if you want him to mind his own business, and you can’t take Aracelli anywhere where there’s no trees

71) What’s the dumbest thing they own, and the story behind it? Taber has a bottle of cheap and shitty perfume that she won at a carnival by playing one of those strength games with the mallet, Aracelli has arrows with heads full of peppercorns meant to be fired in people’s faces (and she made them all by herself!!), and when I asked Nidoran what the dumbest thing Belasco owns is they said “a cat” and that’s fair (and then they later added “6 stolen lemons”, which Belasco stole because Ulrich wouldn’t tell him where he got the lemons in the first place)

73) Who wears/would wear the most colorful or extravagant clothing? Out of everyone in the party, Taber and Belasco both, and Belasco might be a little more extravagant. As far as extravagantly fancy, that would be Zuzanna; for just plain extravagant, Esovera, see point 23

75) What’s a secret one of them have been hiding from the rest? For the longest time Aracelli had been hiding the fact that she was spellscarred, and Taber had been hiding the fact that Deniel is her brother and not her cousin. She’s also hiding the details of what she had to do under the Broker’s servitude, and Belasco is not only hiding as much personal information as he fucking can, but he’s hiding it by lying about it

#I love this party! A lot!#QR 'n A#actuallyaltaria#DnD talk#Taber party#Taber Lamburn#Belasco#Aracelli#food mention/#alcohol mention/#cursing/


Deploy ML Models On AWS Lambda - Code Along

Introduction

The site Analytics Vidhya put out an article titled Deploy Machine Learning Models on AWS Lambda on Medium on August 23, 2019.

I thought this was a great read, and thought I might do a code along and share some of my thoughts and takeaways!

[ Photo by Patrick Brinksma on Unsplash ]

You should be able to follow along with my article and their article at the same time (I would read both), and my article should help you through theirs. Their section numbers don’t make sense (they use 2.3 twice), so I chose to use my own section numbers (hopefully you don’t get too confused there).

Let’s get going!

Preface - Things I Learned The Hard Way

You need to use Linux!

This is a big one. I went through all the instructions using a Windows OS thinking it would all work the same, but numpy on Windows is not the same as numpy on Linux, and AWS Lambda is using Linux.

After Windows didn’t work I switched to an Ubuntu VirtualBox VM only to watch my model call fail again! (Note that the issue was actually with how I was submitting my data via cURL, and not Ubuntu, but more on that to come). At the time I was able to deploy a simple function using my Ubuntu VM, but it seemed that numpy was still doing something weird. I thought maybe the issue was that I needed to use AWS Linux (which is actually different from regular Ubuntu).

To use AWS Linux, you have a few options (from my research):

Deploy an EC2 instance and do everything from the EC2 instance

Get an AWS Linux docker image

Use Amazon Linux WorkSpace

I went with option #3. You could try the other options and let me know how it goes. Here is an article on starting an Amazon Linux WorkSpace:

https://aws.amazon.com/blogs/aws/new-amazon-linux-workspaces/

It takes a while for the workspace to become available, but if you’re willing to wait, I think it’s a nice way to get started. It’s also a nice option if you’re running Windows or Mac and you don’t have a LOT of local memory (more on that in a minute).

Funny thing is that, coming full circle, I don’t think I needed to use Amazon Linux. I think the problem was with how I was submitting my cURL data. So in the end you could probably use any flavor of Linux, but when I finally got it working I was using Amazon WorkSpace, so everything here is centered around Amazon Linux WorkSpace. All the steps should be roughly the same for Ubuntu: just replace yum with apt or apt-get, and there are a few other changes that should be clear.

Another nice thing about running all of this from Amazon WorkSpace is that it also highlights another service from AWS. And again, it’s also a nice option if you’re running Windows or Mac and you don’t have a LOT of local memory to run a VM. VMs basically live in RAM, so if your machine doesn’t have a nice amount of RAM, your VM experience is going to be ssslllooowwwww. My laptop has ~24GB of RAM, so my VM experience is very smooth.

Watch out for typos!

I reiterate this over and over throughout the article, and I tried to highlight some of the major ones that could leave you pulling your hair out.

Use CloudWatch for debugging!

If you’re at the finish line and everything is working but the final model call, you can view the logs of your model calls under CloudWatch. You can add print statements to your main.py script and this should help you find bugs. I had to do this A LOT and test almost every part of my code to find the final issue (which was a doozy).
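As a rough sketch of the print-statement debugging described above: anything a Lambda function writes to standard output ends up in its CloudWatch log stream. The handler below is hypothetical (the helper name describe and the response payload are mine, not from the original article) and only shows the pattern of logging the type and repr of an incoming value before using it.

```python
import json


def describe(label, value):
    """Build a one-line debug message showing a value's type and repr."""
    return f"{label}: type={type(value).__name__} value={value!r}"


def lambda_handler(event, context):
    # Hypothetical handler: print() writes to stdout, which Lambda
    # forwards to the function's CloudWatch log stream.
    body = event.get("body")
    print(describe("body", body))
    return {"statusCode": 200, "body": json.dumps({"received": body is not None})}
```

Logging the type as well as the value is what makes a string-versus-list mix-up in the request body visible in the logs.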

Okay, let’s go!

#1 - Build a Model with Libraries SHAP and LightGBM.

The article starts off by building and saving a model. To do this, they grab an open dataset from the package SHAP and use LightGBM to build their model.

Funny enough, SHAP (SHapley Additive exPlanations) is a unified approach to explain the output of any machine learning model. It happens to also have datasets that you can play with. SHAP has a lot of dependencies, so it might be easier to just grab any play dataset rather than installing SHAP if you’ve never installed SHAP before. On the other hand, it’s a great package to be aware of, so in that sense it’s also worth installing (although it’s kind of overkill for our needs here).

Here is the full code to build and save a model to disk using their sample code:

import shap
import lightgbm as lgbm

# Load the adult census dataset that ships with SHAP
data, labels = shap.datasets.adult()

params = {'objective': 'binary',
          'boosting_type': 'gbdt',
          'max_depth': 6,
          'learning_rate': 0.05,
          'metric': 'auc'}

dtrain = lgbm.Dataset(data, labels)
model = lgbm.train(params, dtrain, num_boost_round=100, valid_sets=[dtrain])

# Save the trained model to disk as a text file
model.save_model('saved_adult_model.txt')

Technically you can do this part on any OS. Either way, I suggest creating a Python venv.

Note that I’m using AWS Linux, which is RedHat based so I’ll be using yum instead of apt or apt-get. First I need python3, so I’ll run:

sudo yum -y install python3

Then I created a new folder, and within that new folder I created a Python venv.

python3 -m venv Lambda_PyVenv

To activate your Python venv just type the following:

source Lambda_PyVenv/bin/activate

Now we need shap and lightgbm. I had some trouble installing shap, so I had to run the following first:

sudo yum install python-devel

sudo yum install libevent-devel

sudo easy_install gevent

Which I got from:

https://stackoverflow.com/questions/11094718/error-command-gcc-failed-with-exit-status-1-while-installing-eventlet

Next I ran:

pip3 install shap

pip3 install lightgbm

And finally I could run:

python3 create_model.py

Note: For file creating and editing I use Atom (if you don’t know how to install atom on Ubuntu, open a new terminal, then I would check out the documentation and just submit the few lines of code from command line).

#2 - Install the Serverless Framework

Next thing you need to do is open a new terminal and install the Serverless Framework on your Linux OS machine.

Something else to keep in mind: if you try to run npm and serverless from the Atom or Sublime consoles, you might hit errors. You might want to use your normal terminal from here on out!

Here is a nice article on how to install npm using AWS Linux:

https://tecadmin.net/install-latest-nodejs-and-npm-on-centos/

In short, you need to run:

sudo yum install -y gcc-c++ make

curl -sL https://rpm.nodesource.com/setup_10.x | sudo -E bash -

sudo yum install nodejs

Then to install serverless I just needed to run

sudo npm install -g serverless

Don’t forget to use sudo!

And then I had serverless running on my Linux VM!

#3 - Creating an S3 Bucket and Loading Your Model

The next step is to load your model to S3. The article does this by command line. Another way to do this is to use the AWS Web Console. If you read the first quarter of my article Deep Learning Hyperparameter Optimization using Hyperopt on Amazon Sagemaker, I go through a simple way of creating an S3 bucket and loading data (or in this case we’re loading a model).

#4 - Create the Main.py file

Their final main.py file didn’t totally work for me; it was missing some important stuff. In the end my final main.py file looked something like:

import boto3
import lightgbm
import numpy as np

def get_model():
    # Download the saved model from S3 into Lambda's writable /tmp directory
    bucket = boto3.resource('s3').Bucket('lambda-example-mjy1')
    bucket.download_file('saved_adult_model.txt', '/tmp/test_model.txt')
    model = lightgbm.Booster(model_file='/tmp/test_model.txt')
    return model

def predict(event):
    samplestr = event['body']
    # These prints show up in CloudWatch and help with debugging
    print("samplestr: ", samplestr)
    print("samplestr str:", str(samplestr))
    # Convert the comma-separated string into a 1 x 12 numpy array
    sampnp = np.fromstring(str(samplestr), dtype=int, sep=',').reshape(1,12)
    print("sampnp: ", sampnp)
    model = get_model()
    result = model.predict(sampnp)
    return result

def lambda_handler(event,context):
    result1 = predict(event)
    # The body has to be returned as a string
    result = str(result1[0])
    return {'statusCode': 200,'headers': { 'Content-Type': 'application/json' },'body': result }

I had to include the ‘headers’ section and the body, and the body needed to be returned as a string in order to not get ‘internal server error’ type errors.
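To make the response shape concrete, here is a minimal sketch of a helper that builds it. The name make_response and the JSON payload with a "prediction" key are my own choices for illustration (the main.py above returns the bare number as a string instead); the point is only that statusCode, headers and a string body are all present.

```python
import json


def make_response(prediction, status_code=200):
    """Wrap a prediction in a dict with statusCode, headers and a string body."""
    return {
        "statusCode": status_code,
        "headers": {"Content-Type": "application/json"},
        # The body must be a string, so serialize the payload instead of
        # returning a float or numpy value directly.
        "body": json.dumps({"prediction": float(prediction)}),
    }
```

Calling float() first is a hedge against numpy scalar types, which json.dumps may refuse to serialize.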

You’ll also notice that I’m taking my input and converting it from a string to a 2d numpy array. I was feeding a 2d array similar to the instructions and this was causing an error. This was the error that caused me to leave Ubuntu for AWS Linux because I thought the error was still related to the OS when it wasn’t. Passing something other than a string will do weird things. It’s better to pass a string and then just convert the string to any format required.

(I can’t stress this enough, this caused me 1.5 days of pain).
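Here is a small sketch of the "pass a string, then convert it" idea using only the standard library. It is a stand-in for the np.fromstring(...).reshape(1,12) line in main.py, not a replacement for it: the function name parse_features is hypothetical, and the default of 12 values is taken from that reshape call. It fails loudly when the body is not a string, which is the situation that cost me the 1.5 days.

```python
def parse_features(body, n_features=12):
    """Turn a comma-separated string into a single-row 2D list of floats."""
    if not isinstance(body, str):
        raise TypeError(f"expected a string body, got {type(body).__name__}")
    values = [float(piece) for piece in body.split(",") if piece.strip()]
    if len(values) != n_features:
        raise ValueError(f"expected {n_features} values, got {len(values)}")
    return [values]  # one row, n_features columns
```

A check like this at the top of predict would turn a confusing downstream error into a clear message in the CloudWatch logs.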

#5 - YML File Changes

Next we need to create our .yml file which is required for the serverless framework, and gives necessary instructions for the framework.

For the most part I was able to use their YML file, but below are a few properties I needed to change:

runtime: python3.7
region: us-east-1
deploymentBucket:
  name: lambda-example-mjy1
iamRoleStatements:
  - Effect: Allow
    Action:
      - s3:GetObject
    Resource:
      - "arn:aws:s3:::lambda-example-mjy1/*"

WARNING!!!

s3.GetObject should be s3:GetObject

Don’t get that wrong and spend hours on a blog typo like I did!
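If you want a quick guard against this kind of typo, something like the following sketch could be run over the action names before deploying. The pattern is my own simplification of what an IAM action looks like (a service prefix, a colon, then an action name or wildcard, or a bare "*"), and find_bad_actions is a hypothetical helper, not part of the Serverless Framework.

```python
import re

# Assumed shape of an IAM action: "service:Action", "service:*" or a bare "*".
ACTION_PATTERN = re.compile(r"^(\*|[A-Za-z0-9-]+:[A-Za-z0-9*]+)$")


def find_bad_actions(actions):
    """Return the action strings that do not look like 'service:Action'."""
    return [a for a in actions if not ACTION_PATTERN.match(a)]
```

For example, find_bad_actions(["s3:GetObject", "s3.GetObject"]) flags only the second entry.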

If you need to find your region, you can use the following link as a resource:

https://docs.aws.amazon.com/AmazonRDS/latest/UserGuide/Concepts.RegionsAndAvailabilityZones.html

Also, make sure the region you specify is the same region that your S3 bucket is located in.

#6 - Test What We Have Built Locally

Before you test locally, I think you need your requirements.txt file. For now you could just run:

pip freeze > requirements.txt

Now, if you’re like me and tried to run it locally at this point, you probably hit an error because they never walked you through how to add the serverless-python-requirements plugin!

I found a nice article that walks you through this step:

https://serverless.com/blog/serverless-python-packaging/

You want to follow the section that states ...

“ Our last step before deploying is to add the serverless-python-requirements plugin. Create a package.json file for saving your node dependencies. Accept the defaults, then install the plugin: “

In short, you want to:

submit ‘npm init’ from command line from your project directory

I gave my package name the same as my service name: ‘test-deploy’

The rest I just left blank i.e. just hit enter

Last prompt just enter ‘y’

Finally you can enter ‘sudo npm install --save serverless-python-requirements’ and everything so far should be a success.

Don’t forget to use sudo!

BUT WE’RE NOT DONE!!! (almost there...)

Now we need to do the following (if you haven’t already):

pip3 install boto3

pip3 install awscli

run “aws configure” and enter our Access Key ID and Secret Access Key

Okay, now if we have our main.py file ready to go, we can test locally (‘again’) using the following command:

serverless invoke local -f lgbm-lambda --path data.txt

Where our data.txt file contains

{"body":[[3.900e+01, 7.000e+00, 1.300e+01, 4.000e+00, 1.000e+00,
0.000e+00, 4.000e+00, 1.000e+00, 2.174e+03, 0.000e+00, 4.000e+01,
3.900e+01]]}

Also, beware of typos!!!!
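Since the main.py in section #4 parses the body as a comma-separated string, one option (an assumption on my part, not something the original article does) is to generate the test event with the body already in string form. The sketch below reuses the values from the sample row above, written as integers; build_event and write_event are hypothetical helper names.

```python
import json

# Feature values taken from the sample row above, written as integers.
features = [39, 7, 13, 4, 1, 0, 4, 1, 2174, 0, 40, 39]


def build_event(values):
    """Return an event dict whose body is a comma-separated string."""
    return {"body": ",".join(str(v) for v in values)}


def write_event(path, values):
    """Write the event as JSON so it can be passed with --path."""
    with open(path, "w") as handle:
        json.dump(build_event(values), handle)
```

Generating the file this way also avoids hand-typing the JSON, which is one less place for a typo to sneak in.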