#ipython

Explore tagged Tumblr posts

Text

we have the technology. we can make this meme less janky.

21K notes

·

View notes

Text

It's been a while since I've done A Thing on a Computer and I forgot how annoying it is

#T#Anyway let's hope reinstalling cmake fixes my problem#I just wanna install this software 😔#I just wanna run this ipython notebook 😔#I just wanna make the tutorial graphs 😔#Whatever I should read this paper in the meantime

0 notes

Text

made a gimmick blog of my own (inspired by the linux blogs)

not affiliated with the ipython or anaconda projects

12 notes

·

View notes

Text

sometimes I'm like. "I want like. a thing that does all of being shell + a ipython notebook + easy way to make qt buttons or something. (So it can do shell things but also it's easy to set up monitoring and macros and then have them go away when you're working on something else.)"

but I think ultimately it's kinda "grass is greener" shit. id chafe against any interface. ultimately what I want there not to be a computer standing in the way between me and the problems.

4 notes

·

View notes

Text

Ed's parental leave: Day 4 (Feb 7) - Ed hot reloads a car

Yesterday was a rest day, I bought a new laptop and played around with deep research. I also had a lovely chat with some folks at Cursor, Sualeh kindly nudged me into using Composer, which is what I did a lot of today.

What I did:

I tried the puppeteer MCP to see if it can do direct scraping with just Cursor Agent. It sure seems like it's doing something! But as best I can tell, the actual HTML on the page never makes it back to the model, so the LLM is just inventing actions to take (which ALMOST works, probably because the site I am scraping is well known.)

Ran a bunch of Deep Researches about all sorts of things. I did spent some time looking at Browser-Use and LaVague, but I didn't like that they heavily relied on LLMs in the actual crawl loop.

Spent a bunch of time thinking about how I wanted to architect my scraper. This was actually spinning me in circles: I could not think of a generic workflow that (1) avoided using LLMs in the actual code, (2) had a really nice UX for programming the scraping actions, even under the simplifying assumption that I was OK with specializing to one website. I ended up punting here; I'm going to treat this as a pure coding project, not going to try to get fancy with agents, and get some more experience here, maybe I'll get an idea when I'm done.

Unsuccessfully tried to get Sonnet to rewrite my program to have a daemonized browser that I would reconnect to when I ran commands. This also included an attempt where I moved just the browser creation to its own file and checked if it could be one shot. I also attempted to ask Sonnet to make a plan before coding, but this didn't seem to help much.

Rearchitected the program to be used with ipython + autoreload, now I can just keep the browser around in the persistent Python process, no muss no fuss. I think this will be really good! And probably I should write an MCP to ipython too.

Reflections

There's a lot of annoying typing you normally have to do that Cursor melts away, like adding import lines and easy refactors of code. This is really good, well worth the price of admission. I can also envision a simple agentic flow that also helps fill in types after you write your code, I want to experiment with this later (for now, generation seems to do a good job adding types without me bothering them, and I haven't setup my favorite type checker yet).

Large scale editing is basically the same as just chatting with Claude directly. And here the model can get itself into a lot of trouble even on a program that's not so big, if you ask it to do something tricky (like daemonize your browser). My current approach is to just ask for less. However, people claim that detailed implementation plans can make the model less likely to trip over itself (perhaps because it has more tokens to think). I have to try this more carefully.

Therefore, my mental model for Cursor is that it is some really important UI affordances, but on how to code with it, that's still a Sonnet 3.5 badgering thing.

0 notes

Text

Software testing is a good option for adopting as a career. We tell you here some information of software testing. You can get here information about the future scope of software testing

What is python

Python is a high-level, object oriented programming language that is dynamically typed. It is simple and has fairly easy syntax, yet very powerful, making it one of the most popular programming languages for various applications.

What are the key features of Python

Python is an interpreted language. This means that codes are interpreted line by line and not compiled as is the case in C. See an example below.

print('This will be printed even though the next line is an error') Print()#this should return an error

Output:>

This will be printed even though the next line is an error

Traceback (most recent call last): File "< ipython-input-21-1390f7d30421 >", line 2, in < module >Print() NameError: name 'Print' isnot defined

As seen above, the first line was printed, then the second line returned the error.

Python is dynamically typed: This means that the variable type does not have to be explicitly stated when defining a variable and these variables can be changed at any point in time. In Python, the line of code below works perfectly.

x = 3 #this is an integer

x = ‘h2kinfosys’ #this is a string

x has been dynamically changed from an integer to a string

Everything in Python is an object. Python supports Object Oriented Programming (OOP) and that means you can create classes, functions, methods. Python also supports the 3 key features of OOP – Inheritance, Polymorphism and Encapsulation.

Python is a general-purpose language. Python can be used for various applications including web development, hacking, machine learning, game development, test automation, etc

#software testing training#automation testing training in ahmedabad#software testing#unicodetechnologies

0 notes

Text

Numerical Python: Scientific Computing and Data Science Applications with Numpy, SciPy and Matplotlib, Third Edition

Numerical Python: Scientific Computing and Data Science Applications with Numpy, SciPy and Matplotlib, Third Edition #python

Numerical Python: Scientific Computing and Data Science Applications with Numpy, SciPy and Matplotlib, Third Edition Robert Johansson Table of Contents Chapter 1: Introduction to Computing with Python ……………………………………….. 1 Environments for Computing with Python ………. 4 Python …………….. 4 Interpreter …………………………….4 IPython Console … 5 Input and Output Caching ……….6 Autocompletion and Object…

0 notes

Text

Machine Learning Training Online

You need to learn it to train machines like humans through trial-and-error methods. There are multiple other things you need to learn, including how reinforcement learning works, Markov Decision Processes Q-learning algorithm. Then our machine learning course syllabus will give you a structured outline of subjects and topics to learn. Collaborates with leading educational organizations to expand the reach of deep learning training to developers worldwide - online data science course.

Machine Learning courses are basically outlined at developed and novice levels and may be pursued at the student's convenience and against a particular enrolment cost. While not all the courses require you to learn Python, C++, having some familiarity and background with these programming languages is worthwhile. Originating from the IPython project, it offers a comprehensive framework for interactive computing, including notebooks, code, and data management.

What distinguishes machine learning from other computer guided decision processes is that it builds prediction algorithms using data. Some of the most popular products that use machine learning include the handwriting readers implemented by the postal service, speech recognition, movie recommendation systems, etc. Machine Learning is a pathway to the most exciting careers in data analysis today - Best data science institute in delhi.

Machine Learning course, available on our online website, is a popular course option that has introduced countless learners to the world of machine learning. As one of the pioneering online courses in the field, it provides a comprehensive introduction to machine learning algorithms, including supervised and unsupervised learning, and deep learning. Learners get hands-on experience with Octave/MATLAB for coding assignments and gain a solid foundation in machine learning theory. For more information, please visit our site https://eagletflysolutions.com/

0 notes

Text

Is Python a good language for people who are new to programming?

Yes, Python really can be called a great language for beginners. Why?

Readability: Python has relatively clean and easily understandable syntax, so it's much less scary for beginners.

Community and Resources: The world of Python has a huge community and lots of online resources: tutorials, courses, and forums.

Versatility: From web development to data analysis and machine learning, all functions in Python can be easily done.

Interactive Environment: Python has a really interactive environment, IPython/Jupyter Notebook, which allows you to play around with code and see the immediate results. There are many libraries for doing hard things really easily: numerical computation with NumPy, data manipulation with Pandas, and others. Although this Python is a great starting point, it is necessary to practice regularly and explore different projects to make sure you understand properly and build confidence.

0 notes

Text

ISBN: 978-960-02-4104-4 Συγγραφέας: Jake VanderPlas Εκδότης: Εκδόσεις Παπαζήση Σελίδες: 682 Ημερομηνία Έκδοσης: 2023-10-10 Διαστάσεις: 24x17 Εξώφυλλο: Μαλακό εξώφυλλο

0 notes

Text

Automatic image tagging with Gemini AI

I used multimodal generative AI to tag my archive of 2,500 unsorted images. It was surprisingly effective.

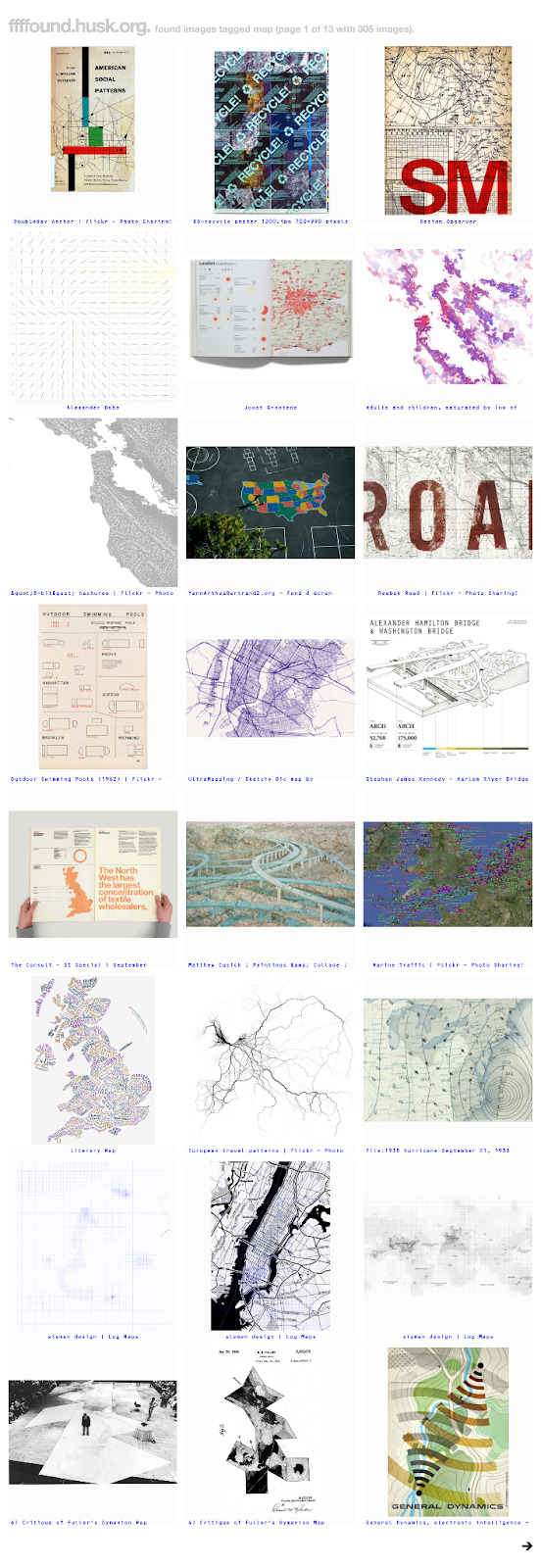

I’m a digital packrat. Disk space is cheap, so why not save everything? That goes double for things out on the internet, especially those on third party servers, where you can’t be sure they’ll live forever. One of the sites that hasn’t lasted is ffffound!, a pioneering image bookmarking website, which I was lucky enough to be a member of.

Back around 2013 I wrote a quick Ruby web scraper to download my images, and ever since I’ve wondered what to do with the 2,500 or so images. ffffound was deliberately minimal - you got only the URL of the site it was saved from and a page title - so organising them seemed daunting.

A little preview- this is what got pulled out tagged "maps".

The power of AI compels you!

As time went on, I thought about using machine learning to write tags or descriptions, but the process back then involved setting up models, training them yourself, and it all seemed like a lot of work. It's a lot less work now. AI models are cheap (or at least, for the end user, subsidised) and easy to access via APIs, even for multimodal queries.

After some promising quick explorations, I decided to use Google’s Gemini API to try tagging the images, mainly because they already had my billing details in Google Cloud and enabling the service was really easy.

Prototyping and scripting

My usual prototyping flow is opening an iPython shell and going through tutorials; of course, there’s one for Gemini, so I skipped to “Generate text from image and text inputs”, replaced their example image with one of mine, tweaked the prompt - ending up with ‘Generate ten unique, one to three word long, tags for this image. Output them as comma separated, without any additional text’ - and was up and running.

With that working, I moved instead to writing a script. Using the code from the interactive session as a core, I wrapped it in some loops, added a little SQL to persist the tags alongside the images in an existing database, and set it off by passing in a list of files on the command line. (The last step meant I could go from running it on the six files matching images/00\*.jpg up to everything without tweaking the code.) Occasionally it hit rather baffling errors, which weren’t well explained in the tutorial - I’ll cover how I handled them in a follow up post.

You can see the resulting script on GitHub. Running it over the entire set of images took a little while - I think the processing time was a few seconds per image, so I did a few runs of maybe an hour each to get all of them - but it was definitely much quicker than tagging by hand. Were the tags any good, though?

Exploring the results

I coded up a nice web interface so I was able to surf around tags. Using that, I could see what the results were. On the whole? Between fine and great. For example, it turns out I really like maps, with 308 of the 2,580 or so images ending up with the tag ‘map’ which are almost all, if not actual maps, do at least look cartographic in some way.

The vast majority of the most common tags I ended up with were the same way - the tag was generally applicable to all of the images in some way, even if it wasn’t totally obvious at first why. However, it definitely wasn’t perfect. One mistake I noticed was this diagram of roads tagged “rail” - and yet, I can see how a human would have done the same.

Another small criticism? There was a lack of consistency across tags. I can think of a few solutions, including resubmitting the images as a group, making the script batch images together, or adding the most common tags to the prompt so the model can re-use them. (This is probably also a good point to note it might also be interesting to compare results with other multimodal models.)

Finally, there were some odd edge cases to do with colour. I can see why most of these images are tagged ‘red’, but why is the telephone box there? While there do turn out to be specks of red in the diagram at the bottom right, I’d also go with “black and white” myself over “black”, “white”, and “red” as distinct tags.

Worth doing?

On the whole, though, I think this experiment was pretty much a success. Tagging the images cost around 25¢ (US) in API usage, took a lot less time than doing so manually, and nudged me into exploring and re-sharing the archive. If you have a similar library, I’d recommend giving this sort of approach a try.

1 note

·

View note

Text

--------------------------------------------------------------------------- FileNotFoundError Traceback (most recent call last) <ipython-input-2-a1af17f750a5> in <module> 1 import pandas as pd 2 import numpy as np ----> 3 df=pd.read_csv("iris.csv") 4 df /opt/conda/lib/python3.7/site-packages/pandas/io/parsers.py in parser_f(filepath_or_buffer, sep, delimiter, header, names, index_col, usecols, squeeze, prefix, mangle_dupe_cols, dtype, engine, converters, true_values, false_values, skipinitialspace, skiprows, skipfooter, nrows, na_values, keep_default_na, na_filter, verbose, skip_blank_lines, parse_dates, infer_datetime_format, keep_date_col, date_parser, dayfirst, cache_dates, iterator, chunksize, compression, thousands, decimal, lineterminator, quotechar, quoting, doublequote, escapechar, comment, encoding, dialect, error_bad_lines, warn_bad_lines, delim_whitespace, low_memory, memory_map, float_precision) 674 ) 675 --> 676 return _read(filepath_or_buffer, kwds) 677 678 parser_f.__name__ = name /opt/conda/lib/python3.7/site-packages/pandas/io/parsers.py in _read(filepath_or_buffer, kwds) 446 447 # Create the parser. --> 448 parser = TextFileReader(fp_or_buf, **kwds) 449 450 if chunksize or iterator: /opt/conda/lib/python3.7/site-packages/pandas/io/parsers.py in __init__(self, f, engine, **kwds) 878 self.options["has_index_names"] = kwds["has_index_names"] 879 --> 880 self._make_engine(self.engine) 881 882 def close(self): /opt/conda/lib/python3.7/site-packages/pandas/io/parsers.py in _make_engine(self, engine) 1112 def _make_engine(self, engine="c"): 1113 if engine == "c": -> 1114 self._engine = CParserWrapper(self.f, **self.options) 1115 else: 1116 if engine == "python": /opt/conda/lib/python3.7/site-packages/pandas/io/parsers.py in __init__(self, src, **kwds) 1889 kwds["usecols"] = self.usecols 1890 -> 1891 self._reader = parsers.TextReader(src, **kwds) 1892 self.unnamed_cols = self._reader.unnamed_cols 1893 pandas/_libs/parsers.pyx in pandas._libs.parsers.TextReader.__cinit__() pandas/_libs/parsers.pyx in pandas._libs.parsers.TextReader._setup_parser_source() FileNotFoundError: [Errno 2] File iris.csv does not exist: 'iris.csv'

1 note

·

View note

Text

PyCharm vs. VS Code: A Comprehensive Comparison of Leading Python IDEs

Introduction: Navigating the Python IDE Landscape in 2024

As Python continues to dominate the programming world, the choice of an Integrated Development Environment (IDE) holds significant importance for developers. In 2024, the market is flooded with a plethora of IDE options, each offering unique features and capabilities tailored to diverse coding needs. Considering the kind support of Learn Python Course in Hyderabad, Whatever your level of experience or reason for switching from another programming language, learning Python gets much more fun.

This guide dives into the leading Python IDEs of 2024, showcasing their standout attributes to help you find the perfect fit for your coding endeavors.

1. PyCharm: Unleashing the Power of JetBrains' Premier IDE

PyCharm remains a cornerstone in the Python development realm, renowned for its robust feature set and seamless integration with various Python frameworks. With intelligent code completion, advanced code analysis, and built-in version control, PyCharm streamlines the development process for both novices and seasoned professionals. Its extensive support for popular Python frameworks like Django and Flask makes it an indispensable tool for web development projects.

2. Visual Studio Code (VS Code): Microsoft's Versatile Coding Companion

Visual Studio Code has emerged as a formidable player in the Python IDE landscape, boasting a lightweight yet feature-rich design. Armed with a vast array of extensions, including Python-specific ones, VS Code empowers developers to tailor their coding environment to their liking. Offering features such as debugging, syntax highlighting, and seamless Git integration, VS Code delivers a seamless coding experience for Python developers across all proficiency levels.

3. JupyterLab: Revolutionizing Data Science with Interactive Exploration

For data scientists and researchers, JupyterLab remains a staple choice for interactive computing and data analysis. Its support for Jupyter notebooks enables users to blend code, visualizations, and explanatory text seamlessly, facilitating reproducible research and collaborative work. Equipped with interactive widgets and compatibility with various data science libraries, JupyterLab serves as an indispensable tool for exploring complex datasets and conducting in-depth analyses. Enrolling in the Best Python Certification Online can help people realise Python's full potential and gain a deeper understanding of its complexities.

4. Spyder: A Dedicated IDE for Scientific Computing and Analysis

Catering specifically to the needs of scientific computing, Spyder provides a user-friendly interface and a comprehensive suite of tools for machine learning, numerical simulations, and statistical analysis. With features like variable exploration, profiling, and an integrated IPython console, Spyder enhances productivity and efficiency for developers working in scientific domains.

5. Sublime Text: Speed, Simplicity, and Customization

Renowned for its speed and simplicity, Sublime Text offers a minimalistic coding environment with powerful customization options. Despite its lightweight design, Sublime Text packs a punch with an extensive package ecosystem and adaptable interface. With support for multiple programming languages and a responsive developer community, Sublime Text remains a top choice for developers seeking a streamlined coding experience.

Conclusion: Choosing Your Path to Python IDE Excellence

In conclusion, the world of Python IDEs in 2024 offers a myriad of options tailored to suit every developer's needs and preferences. Whether you're a web developer, data scientist, or scientific researcher, there's an IDE designed to enhance your coding journey and boost productivity. By exploring the standout features and functionalities of each IDE, you can make an informed decision and embark on a path towards coding excellence in Python.

0 notes

Text

JUPYTER, LLM, AND PYTHON

Creating a new Jupyter notebook output code cell from IPython magic and popluating it with code generated by local LLM

View On WordPress

0 notes

Text

NSIC Technical Services Centre offers career-focused software, hardware, networking, and computer application courses with placement aid and payment plans.

New Post has been published on https://www.jobsarkari.in/nsic-technical-services-centre-offers-career-focused-software-hardware-networking-and-computer-application-courses-with-placement-aid-and-payment-plans/

NSIC Technical Services Centre offers career-focused software, hardware, networking, and computer application courses with placement aid and payment plans.

NSIC Technical Services Centre, a division of the National Small Industries Corporation (NSIC), is inviting applications for various job-oriented courses. The courses offered include Advanced Diploma in Software Technology, Advanced Diploma in Computer Hardware and Networking, NIELIT ‘O’ Level, Computer Hardware and Networking, Advanced Diploma in Computer Application, Computerised Accounting and Tally, Laptop Repairing, Mobile Repairing, MCP & CCNA, ICCNA, Linux Administration, Core Java, C, C++ & Oops, Office & Internet, Python Programming, SQL Server, IMS, Advance Excel / VBA, and Project Training. The duration and fees vary for each course. The center also provides placement assistance and offers an installment facility. Interested individuals can contact NSIC Technical Services Centre located near Govind Puri Metro Station in Okhla Phase-III, New Delhi. Contact details are provided on the website www.nsic.co.in.

NSIC Technical Services Centre offers Job Oriented Courses in various fields.

The courses range from Diploma to Advance Diploma and cover subjects like Software Technology, Computer Hardware & Networking, Computer Application, etc.

The duration of the courses varies from 6 months to 2 years.

Eligibility for the courses is either 10th pass or 12th pass.

Placement assistance is available and installment facility is provided.

GST is charged extra and interested individuals can request a callback by sending an SMS.

NSIC Technical Services Centre offers Job Oriented Courses

Diploma and Advance Diploma courses available

Courses include Software Technology, Computer Hardware & Networking, Computer Application, etc.

Duration of courses ranges from 6 months to 2 years

IAdv. Diploma in Software Technology

Duration: 1 Year

Fee: Rs. 48,000/-

Eligibility: 12 Pass

IAdv. Diploma in Computer H/w and Networking

Duration: 1 Year

Fee: Rs. 32,000/-

Eligibility: 12 Pass

INIELIT ‘O’ Level

Duration: 15 Months

Fee: Rs. 32,000/-

Eligibility: 12 Pass

IComputer Hardware & Networking

Duration: 1 Year

Fee: Rs. 24,000/-

Eligibility: 10 Pass

IAdvance Diploma in Computer Application

Duration: 1 Year

Fee: Rs. 25,000/-

Eligibility: 12 Pass

IDiploma in Computer Application

Duration: 6 Months

Fee: Rs. 15,000/-

Eligibility: 10 Pass

IComputerised Accounting & Tally

Duration: 120 Hrs.

Fee: Rs. 10,000/-

Eligibility: 12 Pass

ILaptop Repairing

Duration: 120 Hrs.

Fee: Rs. 8,000/-

Eligibility: 10 Pass

IMobile Repairing

Duration: 80 Hrs.

Fee: Rs. 7,000/-

Eligibility: 10 Pass

IMCP & CCNA

Duration: 120 Hrs.

Fee: Rs. 8,000/-

Eligibility: 12 Pass

ICCNA

Duration: 80 Hrs.

Fee: Rs. 6,000/-

Eligibility: 12 Pass

ILinux Administration

Duration: 80 Hrs.

Fee: Rs. 6,000/-

Eligibility: 12 Pass

ICore Java

Duration: 60 Hrs.

Fee: Rs. 6,000/-

Eligibility: 12 Pass

/C, C++ & Oops

Duration: 60 Hrs.

Fee: Rs. 5,000/-

Eligibility: 10 Pass

Office & Internet

Duration: 60 Hrs.

Fee: Rs. 4,000/-

Eligibility: 10 Pass

IPython Programming

Duration: 80 Hrs.

Fee: Rs. 6,000/-

Eligibility: 10 Pass

ISQL Server

Duration: 40 Hrs.

Fee: Rs. 5,000/-

Eligibility: 10 Pass

IMS

Duration: 40 Hrs.

Fee: Rs. 6,000/-

Eligibility: Undergoing BCA/MCA/BE/B.Tech

I Advance Excel / VBA

Duration: 1 Month

Fee: Rs. 5,000/-

Eligibility: Undergoing BCA/MCA/BE/B.Tech

Explore Job Oriented Courses at NSIC Technical Services Centre

NSIC Technical Services Centre offers a wide range of job-oriented courses in various fields

Eligibility for courses is either 10th pass or 12th pass

Placement assistance and installment facility available

Request a callback by sending SMS ‘COMP’ to 9654578062

You see the following because WP_DEBUG and WP_DEBUG_DISPLAY are enabled on this site. Please disabled these to prevent the display of these developers' debug messages.

The `the_content` filter applied.

The `the_content` filter applied.

The `the_content` filter applied.

true

Not an instance if `WP_Post`.

Not an instance if `WP_Post`.

Not an instance if `WP_Post`.

NULL

Post eligible.

Post eligible.

Post eligible.

false

0 notes