#java remove duplicate characters

Explore tagged Tumblr posts

Text

SQLi Potential Mitigation Measures

Phase: Architecture and Design

Strategy: Libraries or Frameworks

Use a vetted library or framework that prevents this weakness or makes it easier to avoid. For example, persistence layers like Hibernate or Enterprise Java Beans can offer protection against SQL injection when used correctly.

Phase: Architecture and Design

Strategy: Parameterization

Use structured mechanisms that enforce separation between data and code, such as prepared statements, parameterized queries, or stored procedures. Avoid constructing and executing query strings with "exec" to prevent SQL injection [REF-867].
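As a minimal sketch of the parameterization strategy above, the following Java snippet uses a JDBC `PreparedStatement`. The `users` table, its `id` and `name` columns, and the `UserLookup` class are hypothetical names chosen for illustration; only the `java.sql` calls are real API.

```java
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.util.ArrayList;
import java.util.List;

final class UserLookup {
    // The query text is a constant; user input never becomes part of it.
    // Table and column names are hypothetical.
    static final String FIND_BY_NAME = "SELECT id FROM users WHERE name = ?";

    static List<Long> findIdsByName(Connection conn, String name) throws SQLException {
        try (PreparedStatement ps = conn.prepareStatement(FIND_BY_NAME)) {
            // The driver sends the value as data, separate from the SQL code.
            ps.setString(1, name);
            try (ResultSet rs = ps.executeQuery()) {
                List<Long> ids = new ArrayList<>();
                while (rs.next()) {
                    ids.add(rs.getLong("id"));
                }
                return ids;
            }
        }
    }
}
```

Because the value is bound with `setString`, an input such as `x' OR '1'='1` is compared as a literal name rather than interpreted as SQL.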

Phases: Architecture and Design; Operation

Strategy: Environment Hardening

Run your code with the minimum privileges necessary for the task [REF-76]. Limit user privileges to prevent unauthorized access if an attack occurs, such as by ensuring database applications don’t run as an administrator.

Phase: Architecture and Design

Duplicate client-side security checks on the server to avoid CWE-602. Attackers can bypass client checks by altering values or removing checks entirely, making server-side validation essential.

Phase: Implementation

Strategy: Output Encoding

Avoid dynamically generating query strings, code, or commands that mix control and data. If this is unavoidable, use strict allowlists, escape or filter special characters, and properly quote arguments; this also helps against related weaknesses such as argument injection (CWE-88).

Phase: Implementation

Strategy: Input Validation

Assume all input is malicious. Use strict input validation with allowlists for specifications and reject non-conforming inputs. For SQL queries, limit characters based on parameter expectations for attack prevention.
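A small illustration of allowlist validation in Java follows. The rule itself (3 to 32 letters, digits, or underscores) and the `UsernameValidator` class are assumptions made up for this sketch; a real application would derive the pattern from what each parameter is expected to contain.

```java
import java.util.regex.Pattern;

final class UsernameValidator {
    // Hypothetical allowlist: 3 to 32 ASCII letters, digits, or underscores.
    private static final Pattern ALLOWED = Pattern.compile("[A-Za-z0-9_]{3,32}");

    // Returns true only when the whole input conforms; everything else is rejected.
    static boolean isValid(String input) {
        return input != null && ALLOWED.matcher(input).matches();
    }
}
```

Validation of this kind complements parameterized queries rather than replacing them, since some legitimate values (for example the surname O'Reilly) must contain characters that an overly strict allowlist would reject.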

Phase: Architecture and Design

Strategy: Enforcement by Conversion

For limited sets of acceptable inputs, map fixed values like numeric IDs to filenames or URLs, rejecting anything outside the known set.
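The conversion strategy above could be sketched in Java as a fixed lookup table. The IDs, file names, and the `ReportLocator` class are hypothetical; the point is that the caller only ever supplies a key, never a path.

```java
import java.util.Map;
import java.util.Optional;

final class ReportLocator {
    // Hypothetical fixed mapping from numeric IDs to file names.
    private static final Map<Integer, String> FILES = Map.of(
            1, "summary.pdf",
            2, "details.pdf");

    // Anything outside the known set yields an empty result instead of a path.
    static Optional<String> fileFor(int id) {
        return Optional.ofNullable(FILES.get(id));
    }
}
```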

Phase: Implementation

Ensure error messages reveal only necessary details, avoiding cryptic language or excessive information. Store sensitive error details in logs but be cautious with content visible to users to prevent revealing internal states.

Phase: Operation

Strategy: Firewall

Use an application firewall to detect attacks against weaknesses in cases where the code can’t be fixed. Firewalls offer defense in depth, though they may require customization and won’t cover all input vectors.

Phases: Operation; Implementation

Strategy: Environment Hardening

In PHP, avoid using register_globals to prevent weaknesses like CWE-95 and CWE-621. Avoid emulating this feature to reduce risks.


Text

hi

Longest Substring Without Repeating Characters Problem: Find the length of the longest substring without repeating characters.

Median of Two Sorted Arrays Problem: Find the median of two sorted arrays.

Longest Palindromic Substring Problem: Find the longest palindromic substring in a given string.

Zigzag Conversion Problem: Convert a string to a zigzag pattern on a given number of rows.

Three Sum LeetCode #15: Find all unique triplets in the array which gives the sum of zero.

Container With Most Water LeetCode #11: Find two lines that together with the x-axis form a container that holds the most water.

Longest Substring Without Repeating Characters LeetCode #3: Find the length of the longest substring without repeating characters.

Product of Array Except Self LeetCode #238: Return an array such that each element is the product of all the other elements.

Valid Anagram LeetCode #242: Determine if two strings are anagrams.

Linked Lists

Reverse Linked List LeetCode #206: Reverse a singly linked list.

Merge Two Sorted Lists LeetCode #21: Merge two sorted linked lists into a single sorted linked list.

Linked List Cycle LeetCode #141: Detect if a linked list has a cycle in it.

Remove Nth Node From End of List LeetCode #19: Remove the nth node from the end of a linked list.

Palindrome Linked List LeetCode #234: Check if a linked list is a palindrome.

Trees and Graphs

Binary Tree Inorder Traversal LeetCode #94: Perform an inorder traversal of a binary tree.

Lowest Common Ancestor of a Binary Search Tree LeetCode #235: Find the lowest common ancestor of two nodes in a BST.

Binary Tree Level Order Traversal LeetCode #102: Traverse a binary tree level by level.

Validate Binary Search Tree LeetCode #98: Check if a binary tree is a valid BST.

Symmetric Tree LeetCode #101: Determine if a tree is symmetric.

Dynamic Programming

Climbing Stairs LeetCode #70: Count the number of ways to reach the top of a staircase.

Longest Increasing Subsequence LeetCode #300: Find the length of the longest increasing subsequence.

Coin Change LeetCode #322: Given a set of coins, find the minimum number of coins to make a given amount.

Maximum Subarray LeetCode #53: Find the contiguous subarray with the maximum sum.

House Robber LeetCode #198: Maximize the amount of money you can rob without robbing two adjacent houses.

Collections and Hashing

Group Anagrams LeetCode #49: Group anagrams together using Java Collections.

Top K Frequent Elements LeetCode #347: Find the k most frequent elements in an array.

Intersection of Two Arrays II LeetCode #350: Find the intersection of two arrays, allowing for duplicates.

LRU Cache LeetCode #146: Implement a Least Recently Used (LRU) cache.

Valid Parentheses LeetCode #20: Check if a string of parentheses is valid using a stack.

Sorting and Searching

Merge Intervals LeetCode #56: Merge overlapping intervals.

Search in Rotated Sorted Array LeetCode #33: Search for a target value in a rotated sorted array.

Kth Largest Element in an Array LeetCode #215: Find the kth largest element in an array.

Median of Two Sorted Arrays LeetCode #4: Find the median of two sorted arrays.


Text

Remove Duplicate Characters From a String In C#


Console.Write("Enter a String : ");
string inputString = Console.ReadLine();
string resultString = string.Empty;
for (int i = 0; i < inputString.Length; i++)
{
    // Append each character only the first time it is seen.
    if (!resultString.Contains(inputString[i]))
    {
        resultString += inputString[i];
    }
}
Console.WriteLine(resultString);
Console.ReadKey();

Happy Programming!!! -Ravi Kumar Gupta.


#.NET #c# #Coding #java #Program #program in c# #Programming #Remove Duplicate Characters From a String #Remove Duplicate Characters From a String In C# #Technology


Text

Hire me under false pretences, then fire me under more fallacies? Welp; OK then

First post; TL;DR at the end

Background.init()

After leaving 6th form (college for my family over the pond), I started a job as a Full Stack Java Developer for a small company in the city I currently reside, study and work (more on that later). For those not in the know, a "Full Stack" Developer, is someone that develops the application/website that controls an application, the middleware "brain", and the back-end, usually a Database of some kind.

In the contract, it stated that "All development projects developed within Notarealcompany's offices are the sole property of the company". I was new to the scene and assumed this was the norm (turns out it is - Important later).

The Story.main()

My "Training" was minimalistic, and expectations were insanely high. I was placed on a client project within the first month, and was told that this was to be a trial by fire. Oh boy.

Having spoken to the client, their expectations had already been set by the owner; let's call him Berk (Berk is an English term for moron); "Whatever you need, our developers can accommodate". Their requirements were as follows;

The Intranet software MUST match the production, public site in functionality, including JQuery and other technologies I was unfamiliar with

MUST accommodate their inventory and shipping database, including prior version functionality (which included loading a 400k+ database table into a webpage in one shot)

MUST look seamless on ALL internal assets, regardless of browser (THIS is important)

ABSOLUTELY MUST USE THE STRONGEST SECURITY MONEY CAN BUY (without requiring external sources)

Having asked what the oldest machine on their network was, I realised it was a nightmare given form. They wanted advanced webtoys to work on WINDOWS XP SP1 (which did not, and does not, support HTML5, let alone the version of JavaScript/JQuery the main website does).

I was given a time-line of 2 months to build this by the client, who were already under the impression that all would be ok.

Having spent a few days researching and prototyping, it was clear that their laundry list of demands was impossible. I told them in plenty of time, providing evidence with Virtual Machines, using their "golden images". The website looked clunky, the database loader crashed the entire machine, the JavaScript flat-out refused to work.

Needless to say, they weren't happy. I was ordered to fix the issue, or "my ass is out on the street".

Spending every waking moment outside of work, I built something that, still to this day, I am insanely proud of. The Database was built robust; built to British and German security standards around Information Security. The Password management system was NUKE-proof (I calculated it would take until the Sun died to crack a single password), and I managed to get the Database to load into the page flawlessly, using "pagination", the same technology Amazon uses to slide through pages, and AJAX (not important; my fellow devs will know). I managed to get the project completed a DAY before the deadline. Gave the customer a deadline, and plugged their live data into it. Everything worked fine, BUT, their DB had multiple duplicate records, with no way to filter through them. I told them that I could fix this issue with a 100% success rate, and would build dupe-protection into the software (it was easy); without losing pertinent information. The SQL script was dirty, but functional.

Shortly after completing the project, I was told it was "too slow". Now bear in mind; the longest action took 0.0023 of a second; EVEN ON XP. Nevertheless, I built it faster, giving benchmarking data for the before and after (only 0.0001 of a second's improvement).

Shortly after, I was told to pack my "shit", as I'd failed my peer review.

The nightmare continues

Because I'd built the software outside of work, on my own time, on my own devices; they had no rights over it, as the only version they saw were the second-to-last, and final commits from my private github.

Shortly after leaving, I'm served papers, summoning me to court for "corporate espionage". Wait, WHAT?

Turned up to court with all relevant documents, a copy of my development system on an ISO for evidence, and a court-issued solicitor. Their claim was that I'd purposely engineered the application to be insecure, causing their client to be hacked, losing an inordinate amount of money. They presented the source code as "evidence", citing that the password functionality for the management interface was using MD5 (you can google an MD5 hash and find out what it is; see here: https://md5.gromweb.com/?md5=1f3870be274f6c49b3e31a0c6728957f ).

I show the court the source code I have from the final version (which had only been altered once within work premises to improve speed and provide benchmarking information). They then accuse me of theft, despite showing IP-trace information from Git, the commit hashes from Github, correlating with my PC, and all the time logs from editing and committing (all out of hours).

The Aftermath

To cut an already long story short; I got a payout for defamation of character and time wasted, they paid all the court costs, and I was let go with the summons removed from my record.

The story doesn't end there though...

Currently, I am doing a Degree in Information Security, and working for a Managed Service Provider for security products and monitoring. I was asked to do a site visit and perform;

Full "Black Box" Penetration Test (I'm given no knowledge on the network to be attacked, and can use almost any means to gain access)

Full Compliance test for PCI DSS (Payment card industry for Debit/Credit payments)

GDPR (Information storage and management)

ISO 27001 FULL audit

All in all, this is a very highly paid job. Sat in the car park with a laptop, I gained FULL ADMIN ACCESS within about 20 minutes, cloned the access cards to my phone over the air, and locked their systems down (all within the contract). Leaving for the day, I compiled a report with pure glee. Their contract with us stipulates that the analyst on site would remain to remediate any and all issues, would have total jurisdiction over the network whilst on the job, and would return 6 months later for further assessment and to remedy any and all issues, persistent or new.

The report put the company on blast; outlining every single fault, every blind spot, and provided evidence of previous compromise. The total cost of repairs was more than the company was willing to pay (they were able, I saw the finances after all). The company went into liquidation, but not before trying to have me fired for having a prior vendetta. The legal team for my current employers not-so-politely tore them to shreds, suing for defamation of character (sounds familiar right?), forcing them to liquidate even more assets than they intended; ultimately costing them their second home.

TL;DR: Got a job, got told I was fired after doing an exemplary job; then had the company liquidated due to MANY flaws when working for their security contractors.

submitted by /u/BenignReaver


Text

Java program to remove duplicate characters from a String


In this article, we will discuss how to remove duplicate characters from a String. Here is the expected output for some given inputs:

Input : topjavatutorial
Output : topjavuril

Input : hello
Output : helo

The program below loops through each character of the String, checking whether it has already been encountered and skipping it if so.

package com.topjavatutorial; public…
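The original listing is cut off in this excerpt, so the following is only a minimal sketch of the approach the post describes, not the author's code. The class and method names are hypothetical, and it works on `char` values, so characters outside the Basic Multilingual Plane (surrogate pairs) are not handled.

```java
import java.util.HashSet;
import java.util.Set;

final class DuplicateCharRemover {
    // Keeps the first occurrence of every character and preserves the original order.
    static String removeDuplicates(String input) {
        Set<Character> seen = new HashSet<>();
        StringBuilder result = new StringBuilder(input.length());
        for (char c : input.toCharArray()) {
            // Set.add returns false when the character was already encountered.
            if (seen.add(c)) {
                result.append(c);
            }
        }
        return result.toString();
    }
}
```

Calling `DuplicateCharRemover.removeDuplicates("topjavatutorial")` returns `topjavuril`, and `removeDuplicates("hello")` returns `helo`, matching the expected outputs listed in the post.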


#delete duplicate characters in a string #How to remove duplicate characters from a string in java #java - function to remove duplicate characters in a string #Java - Remove duplicates from a string #java LinkedHashSet #java remove duplicate characters #java remove duplicate characters preserving order #java remove duplicate chars from String #java remove duplicates #Remove all duplicates from a given string #Remove duplicate values from a string in java #remove duplicates from String in java #Removing duplicates from a String in Java


Text

Notepad++ Download

Notepad++ comes with a clean and simple interface that does not look crowded in spite of its huge array of functions, thanks to the fact that they are organized into appropriate menus, with some of them accessible from the context menu in the main window.

As mentioned, Notepad++ supports a number of programming languages and includes syntax highlighting for most of them. Plus, it can work with multiple documents at the same time, while remaining very light on hardware resources.

In Notepad++ we can program in many languages, including C++, Java, C#, XML, HTML, PHP, JavaScript, RC file, makefile, INI file, batch file, ASP, VB/VBS, SQL, Objective-C, CSS, Pascal, Perl, Python, Lua, OS shell script, Fortran, NSIS and Flash ActionScript. Notepad++'s main features are: syntax highlighting and syntax folding, regular expression search, printing (if you have a color printer, print your source code in color), Unicode support, full drag-and-drop support, brace and indent guideline highlighting, two edits and synchronized view of the same document, and a user language define system.

As you know, these days there is a lot of competition, so we need to learn more about our field. Programming is my passion and I want to understand more, which is possible with the straightforward interface of an editor like Notepad++.

WHAT'S NEW IN VERSION OF NOTEPAD++

Upgrade Scintilla from 4.1.4 to 4.2.0.

Fix non-Unicode encoding problem in non-Western languages (Chinese or Turkish).

Add "No to All" and "Yes to All" options in Save dialog.

Add the command line argument -openFoldersAsWorkspace to open folders in the "Folder as Workspace" panel. Example: notepad++ -openFoldersAsWorkspace c:\src\myProj01 c:\src\myProj02

Enhance plugin system: allow any plugin to load private DLL files from the plugin folder.

Fix File-Rename failing when the new name is on a different drive.

Make "Clear all marks", "Remove Consecutive Duplicate Lines" & "Find All Current Document" macro-recordable.

Make Command Argument Help MessageBox modal.

Fix Folder as Workspace crash and "queue overflow" problems.

Make Combobox font monospace in Find dialog.

Fix folding in user-defined languages for non-Windows line endings.

Fix crash of Folder as Workspace when too many directory changes happen.

Fix and overwrites config.xml issue.

Improve interface in Find dialog for Find Previous and Find Next buttons.

Fix sort lines as integer regression.

Add more OS information to debug info.

Fix tab dragging issues under WINE and ReactOS.

Fix indent indicators continuing into following code blocks for Python.

Fix Python folding collapse issue.

Fix crash when sorting.

Fix finding the same occurrence twice in both original and cloned documents.

Fix command line issues where filenames have multiple white spaces in them.

Fix Document Peeker constantly changing focus problem.

Make the backward direction checkbox also available on the Find dialog's Mark tab.

Add two new columns for HTML Code in the Character Panel.

Fix clear all marks in find dialog to boot removes issue.

Enhance supported languages (on function list or auto-completion): LISP, BaanC, (PL/)SQL.


Text

Stabila (STB)

The STABILA consensus mechanism uses a Delegated Proof of Stake approach in which the network's blocks are produced by 21 Governors (Gs). STB account holders who CD their accounts can vote for a selection of Executives, with the top 21 Executives designated the Governors. At regular intervals, the STABILA protocol network produces a new block.

Decentralized exchange features are built into the STABILA network. A decentralized exchange is made up of multiple trading pairs. A trading pair is an exchange market between SRC-10 tokens, or between an SRC-10 token and STB. Any account can create a trading pair between any tokens. The STABILA blockchain code is written in Java and was originally a fork of TRON TVM.

Many new networks proposed the Proof of Stake (PoS) consensus mechanism. Token holders in PoS networks lock their token holdings in order to become block validators. The validators take turns proposing and voting on the next block. The problem with standard PoS is that validator influence is proportional to the number of tokens locked up. As a result, parties holding large amounts of the network's base currency wield excessive influence over the network ecosystem.

There are three types of accounts in the STABILA network:

Regular accounts are used for standard transactions.

Token accounts are used for storing SRC-10 tokens.

Contract accounts are smart contract accounts that are created by regular accounts and can also be triggered by them.

Account Creation

A STABILA account can be created in one of three ways:

Use the API to create a new account.

Transfer STB to a new account address.

Transfer any SRC-10 token to a new account address.

Private Key and Address Generation

A key pair consisting of an address (public key) and a private key can be generated offline. The user address generation algorithm starts with generating a key pair, followed by extracting the public key. Hash the public key using the SHA3-256 function (the SHA3 protocol used is KECCAK-256) and extract the last 20 bytes of the result. Prepend 3F to the byte array; the initial address should then be 21 bytes long. Hash the address twice using the SHA3-256 function and take the first four bytes as a verification code. Append the verification code to the end of the initial address and encode the result with base58 to obtain the address in base58check format. An encoded Stabila Mainnet address starts with S and is 34 characters long.
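The address steps above can be sketched in Java as follows. This is an illustration under loud assumptions, not Stabila's implementation: the JDK has no KECCAK-256, so the JDK's NIST `SHA3-256` digest (Java 9 and later) stands in for it, which means the output will not match real network addresses. The class and method names are hypothetical, and the public key is taken as an opaque byte array.

```java
import java.math.BigInteger;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.Arrays;

final class AddressSketch {
    private static final String ALPHABET =
            "123456789ABCDEFGHJKLMNPQRSTUVWXYZabcdefghijkmnopqrstuvwxyz";

    // Assumption: NIST SHA3-256 stands in for KECCAK-256, which the JDK lacks.
    static byte[] hash(byte[] data) {
        try {
            return MessageDigest.getInstance("SHA3-256").digest(data);
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException(e);
        }
    }

    // Plain base58 encoding; each leading zero byte becomes a leading '1'.
    static String base58(byte[] input) {
        BigInteger value = new BigInteger(1, input);
        BigInteger base = BigInteger.valueOf(58);
        StringBuilder out = new StringBuilder();
        while (value.signum() > 0) {
            BigInteger[] qr = value.divideAndRemainder(base);
            out.append(ALPHABET.charAt(qr[1].intValue()));
            value = qr[0];
        }
        for (int i = 0; i < input.length && input[i] == 0; i++) {
            out.append('1');
        }
        return out.reverse().toString();
    }

    static String toAddress(byte[] publicKey) {
        byte[] digest = hash(publicKey);
        byte[] initial = new byte[21];
        initial[0] = 0x3F;                                   // prefix byte from the text
        System.arraycopy(digest, digest.length - 20, initial, 1, 20); // last 20 bytes
        byte[] check = hash(hash(initial));                  // hash twice
        byte[] full = Arrays.copyOf(initial, 25);
        System.arraycopy(check, 0, full, 21, 4);             // first 4 bytes as check code
        return base58(full);
    }
}
```

Whatever hash is plugged in, a 25-byte payload beginning with 0x3F always encodes to 34 base58 characters starting with `S`, which is consistent with the address format described above.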

Signing

STABILA uses the standard ECDSA cryptographic algorithm with the SECP256K1 curve for transaction signatures. The private key is a random number, while the public key is a point on the elliptic curve. To obtain a public key, first generate a random number as the private key, and then multiply the private key by the base point of the elliptic curve. When a transaction occurs, the raw transaction data is first converted into byte format. The raw data is then hashed using the SHA-256 algorithm. The result of the SHA-256 hash is then signed with the private key associated with the contract address. The signature result is then added to the transaction.

Bandwidth

Bandwidth Model

Smart contract operations consume both UCR (units of customary assets) and BP (bandwidth points), while standard transactions consume only bandwidth points. There are two types of bandwidth points: users can obtain bandwidth points by creating Contracts of Deposit (CD) with STB, and there are also 500 free bandwidth points available every day. When an STB transaction is broadcast, it is transmitted and stored across the network as a byte array. The number of bandwidth points required by one transaction equals the number of transaction bytes multiplied by the bandwidth points rate.

For SRC-10 token transfers, the network first checks whether the issued token asset's total free bandwidth points are sufficient. If not, the bandwidth points obtained through CDing STB are consumed. If there are still not enough bandwidth points, the transaction initiator's STB is used.
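The bandwidth arithmetic and the fallback order described above can be written out as a short Java sketch. All names here (`BandwidthSketch`, `pointsNeeded`, `payer`, and the enum constants) are hypothetical, and the rate used in the usage note is an arbitrary illustrative value, since the text does not state one.

```java
final class BandwidthSketch {
    // Which resource ends up covering an SRC-10 transfer, in the order the text gives.
    enum Source { ISSUER_FREE_BANDWIDTH, CD_BANDWIDTH, INITIATOR_STB }

    // Points required = transaction bytes x bandwidth points rate.
    static long pointsNeeded(long txBytes, long pointsPerByte) {
        return Math.multiplyExact(txBytes, pointsPerByte);
    }

    static Source payer(long needed, long issuerFreePoints, long cdPoints) {
        if (issuerFreePoints >= needed) {
            return Source.ISSUER_FREE_BANDWIDTH; // token issuer's free points suffice
        }
        if (cdPoints >= needed) {
            return Source.CD_BANDWIDTH;          // points obtained through CDing STB
        }
        return Source.INITIATOR_STB;             // fall back to the initiator's STB
    }
}
```

For example, with an assumed rate of 1 point per byte, a 250-byte transfer needs `pointsNeeded(250, 1)` = 250 points; `payer(250, 100, 300)` then selects `CD_BANDWIDTH` because the issuer's 100 free points are not enough but the 300 CD points are.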

Fees

Most transactions on the STABILA network are free, although bandwidth usage and transactions are subject to fees owing to system restrictions and fairness.

Normal transactions cost bandwidth points.

Smart contracts cost UCR, but they also require bandwidth points for the transaction to be broadcast and confirmed.

There is no charge for any query transaction. It costs neither UCR nor bandwidth. The STABILA network also defines a set of fixed fees for the following transactions:

Creating an Executive node: 1000 STB

Issuing an SRC-10 token: 1000 STB

Creating a new account: 1385 UNIT

Setting up a trading pair: 14 STB

Website : https://stabilascan.org/ Whitepaper : https://stabilascan.org/api/static/Stabila_Whitepaper_(7).pdf Twitter : https://twitter.com/moneta_holdings Facebook : https://www.facebook.com/stabilacrypto Telegram : https://t.me/stabilastb Linkedin : https://www.linkedin.com/company/stabilacrypto Instagram : https://www.instagram.com/monetaholdings/ YouTube : https://www.youtube.com/channel/UChFtE8tAVlkWGkFrUb-7KOQ

UserName : mairacute Link BTT : https://bitcointalk.org/index.php?action=profile;u=3334914 Wallet Stabila : SW3zYnjLnC7wxErqcFSGK9nmoXZAvMjHf9


Text

Nemesis, 1992 - ★★★

As I suspected, VHS did this one dirtier than it deserves. That's clear from the initial action sequence on, as it frequently shoots quite wide to fit everyone in frame, or has the cast all tinily in the background of a foreground character’s action, which gets lost in VHS resolution & aspect ratio.

I also wanted to scrape off some of the sludge to better enjoy the hard-to-describe visual tone of the movie's early sequences, which bring to mind 90’s male sunglasses models & the ads they’d appear in. A warmish, overexposed beige filter over everything; a classic 90's aesthetic. The outdoor shooting in Hawaii (doubling for Java) often looks really good, ditto the shooting on existing interiors. With the built sets, it’s not the cheapness that’s so bad, as the lighting that call for a fine haze filling up most rooms. Could see just how close various performers are to lots of the action, too: explosions, huge frenzies of squibs & sparks, toppling silo towers. Impressive for the budget.

It’s still paced oddly at times, but it’s also true that removing the carnival atmosphere of B-Movie Bingo lets a couple of the low-energy dialog scenes in hotel rooms show some merit. A couple plot points emerged too that I had completely missed, such as the body-hopping of Sam (icy blonde-wigged woman who shoots the hero’s dog) into a cyber-duplicate of Farnsworth (hero’s former police boss). Gives the villain's villainy a much more unique flavor!

The accent situation is still a mess though. In the Java scenes, it seems they’re using something like the local Hawaiian Pidgin to reinforce a future where Japan and the US have merged. Cary-Hiroyuki Tagawa carries it well, but on the other hand it renders the “Max Impact” character just intolerable. Coupled with the strange Peter Pan energy of the actor's performance, with body language like a feral kid or great ape, and the character just doesn't work. The lead is pretty stiff too, & many line readings are awful, but at least some of the affectlessness works for a character losing his emotions as his body gets mechanized.


Text

Jira Service Desk 4.0.0 Release Notes

We're excited to present Jira Service Desk 4.0.

In our latest platform release, Jira Service Desk harnesses an upgraded engine that provides faster performance, increased productivity, and greater scalability. Dive in and discover all the goodies awaiting your service desk teams.

Highlights

Lucene upgrade

jQuery upgrade

Everyday tasks, take less time

Accessible priority icons

New options in advanced search

AUI upgrade

AdoptOpenJDK support

End of support for fugue

New customer portal in the works

Resolved issues

Get the latest version

Read the upgrade notes for important details about this release and see the full list of issues resolved.

Compatible applications

If you're looking for compatible Jira applications, look no further:

Jira Software 8.0 release notes

Lucene upgrade to power performance

A welcome change we inherit from Jira 8.0, is the upgrade of Lucene. This change brings improvements to indexing, which makes it easier to administer and maintain your service desk. Here’s an overview of what to expect:

Faster reindexing

Reindexing is up to 11% faster. This means less time spent on reindexing after major configuration changes, and quicker upgrades.

Smaller indexes

Index size has shrunk by 47% (in our tests, the index size dropped from 4.5GB to 2.4GB). This means a faster, more stable, Jira that is easier to maintain and troubleshoot.

jQuery upgrade

Outdated libraries can lead to security vulnerabilities, so we've upgraded jQuery from version 1.7.2 to 2.2.4. This new version includes two security patches, and the jQuery migrate plugin 1.4.1 for a simplified upgrade experience. Learn more

Everyday tasks, take less time

We know how important it is for agents to move through their service desk with ease, and for admins to scale their service desk as their team grows. The back end upgrade combined with a bunch of front end improvements, makes work more efficient for admins, agents, and customers alike:

Viewing queues is twice as fast in 4.0 compared to 3.16.

Opening the customer page is 36% faster in 4.0 compared to 3.16

Project creation for an instance with 100 projects, is twice as fast in 4.0 compared to JSD 3.15.

Project creation for an instance with up to 200 projects, is six times faster in 4.0 compared to JSD 3.15.

We'll continue to take performance seriously, so that your service desk team can get the most out of their day!

Accessible priority icons

We’ve updated our priority icons to make them more distinctive and accessible. These changes allow Jira users with color blindness, to instantly recognize the relationship between icons, and their level of importance. Here’s a comparison of old and new icons that you'll be seeing around Jira Service Desk:

New options in advanced search

Check out these new options for when you are using advanced search:

Find contributors and date ranges (updatedBy)

Search for issues that were updated by a specific user, and within a specified date range. Whether you're looking for issues updated in the last 8 hours, two months, or between June and September 2017 – we've got you covered. Learn more

Find link types (issueLinkType)

Search for issues that are linked with other issues by particular link types, like blocks or is duplicated by. This will help you quickly find any related blockers, duplicates, and other issues that affect your work. Learn more

Atlassian User Interface (AUI) upgrade

AUI is a frontend library that contains all you need for building beautiful and functional Atlassian Server products and apps. We've upgraded AUI to the more modular 8.0, making it easier to use only the pieces that you need.

AdoptOpenJDK comes to Jira

Oracle stopped providing public updates for Oracle JDK 8 in January 2019. This means that only Oracle customers with a paid subscription or support contract will be eligible for updates.

In order to provide you with another option, we now support running Jira Service Desk 4.0 with AdoptOpenJDK 8. We'll continue to bundle Jira Service Desk with Oracle JDK / JRE 8.

End of support for fugue

In Jira Service Desk 4.0, we've removed com.atlassian.fugue, and updated our APIs to use Core Java Data types and Exceptions.

You can read the full deprecation notice for next steps, and if you have any questions – post them in the Atlassian Developer Community.

Get ready for the new customer portal experience

We're giving you more ways to customize your help center and portals, alongside a fresh new look that will brighten up your customer's day. This first-class, polished experience will be available in the not too distant future, so keep an eye on upcoming release notes. In the meantime, here’s a taster…

Added extras

4-byte characters

Jira now supports 4-byte characters with MySQL 5.7 and later. This means you can finally use all the emojis you've dreamed about!

Here's a guide that will help you connect Jira to a MySQL 5.7 database.
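To illustrate what "4-byte characters" means here, the short Java sketch below measures the UTF-8 encoded length of a string. The `FourByteDemo` class is a hypothetical name; the emoji is written as a surrogate-pair escape so the source file's encoding does not matter.

```java
import java.nio.charset.StandardCharsets;

final class FourByteDemo {
    // Number of bytes the string occupies once encoded as UTF-8.
    static int utf8Length(String s) {
        return s.getBytes(StandardCharsets.UTF_8).length;
    }
}
```

`FourByteDemo.utf8Length("\uD83D\uDE00")` (the grinning-face emoji, U+1F600) is 4, whereas `utf8Length("\u00E9")` (é) is 2. MySQL's legacy `utf8` character set stores at most 3 bytes per character, which is why emoji need the `utf8mb4` character set on the database side.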

Add-ons are now apps

We're renaming add-ons to apps. This changed in our Universal Plugin Manager some time ago, and now Jira Service Desk follows suit. This change shouldn't really affect you, but we're letting you know so you're not surprised when seeing this new name in Jira administration, and other pages.

Issues in Release

Fixed: The SLA "within" field keeps increasing when the SLA is paused

Fixed: The SLA information is not included in the Excel and HTML export

Fixed: Anonymous comments cause display issues in Activity section in Issue Navigator

Fixed: Portal Settings - Highlight and Text on highlights values are swapped on subsequent edits

Fixed: Inconsistent JIRA Service Desk REST API Pagination

Fixed: JSON export doesn't differentiate public from internal comments

Fixed: Asynchronous cache replication queue - leaking file descriptor when queue file corrupted

Fixed: New line character in issue summary can break issue search detailed view

Fixed: Jira incorrectly sorts options from Select List custom fields with multiple contexts

Fixed: DefaultShareManager.isSharedWith performs user search 2x times

Fixed: Unknown RPC service: update_security_token

Fixed: Copying of SearchRequest performs user search

Fixed: Data Center - Reindex snapshot file fails to create if greater than 8GB

Fixed: The VerifyPopServerConnection resource was vulnerable to SSRF - CVE-2018-13404

Fixed: XSS in the two-dimensional filter statistics gadget on a Jira dashboard - CVE-2018-13403

Fixed: DC index copy does not clean up old files when Snappy archiver is used

Fixed: SQL for checking usages of version in custom fields is slow even if no version picker custom fields exist

Suggestion: Improve FieldCache memory utilisation for Jira instances with large Lucene

Fixed: Search via REST API might fail due to ClassCastException

Fixed: Upgrade Tomcat to the version 8.5.32

Suggestion: Webhook for Project Archive on JIRA Software

Fixed: Deprecate support for authenticating using os_username, os_password as url query parameters

Fixed: JQL input missing from saved filter

Fixed: CachingFieldScreenStore unnecessary flushes fieldScreenCache for create/remove operation

Suggestion: Favourite filters missing in Mobile browser view

Fixed: DC node reindex is run in 5s intervals instead of load-dependent intervals

Suggestion: Don't log Jira events to STDOUT - catalina.out

Fixed: Adding .trigger-dialog class to dropdown item doesn't open a dialog

Fixed: Issue view/create page freeze

Suggestion: Add Additional Logging Related to Index Snapshot Backup

Suggestion: Add additional logging related to index copy between nodes

Fixed: JIRA performance is impacted by slow queries pulling data from the customfieldvalue table

Fixed: Projects may be reverted to the default issue type scheme during the change due to race condition

Suggestion: As an JIRA Datacenter Administrator I want use default ehcache RMI port

Fixed: Two dimensional filter statistics gadget does not show empty values

Suggestion: Embed latest java critical security update (1.8.0.171 or higher) into the next JIRA (sub)version

Fixed: JIRA inefficiently populates fieldLayoutCache due to slow loading of FieldLayoutItems

Suggestion: Provide New look Of Atlassian Product For Server Hosting As Well

Fixed: Startup Parameter "upgrade.reindex.allowed" Not Taking Effect

Fixed: Remote Linking of Issues doesn't reindex issues

Fixed: Index stops functioning because org.apache.lucene.store.AlreadyClosedException is not handled correctly

Suggestion: Add Multi-Column Index to JIRA Tables

Fixed: 'entity_property' table is slow with high number of rows and under high load

Fixed: JIRA Data Center will skip replication operations in case of index exception

Fixed: Improve database indexes for changeitem and changegroup tables.

Suggestion: CSV export should also include SLA field values

Fixed: Priority icons on new projects are not accessible for red-green colour blind users

Fixed: Two Dimensional Filter Statistics Gadget fails when YAxis is a Custom Field restricted to certain issue types

Fixed: Unable to remove user from Project Roles in project administration when the username starts with 0

Suggestion: The jQuery version used in JIRA needs to be updated

Fixed: MSSQL Selection in config.sh Broken (CLI)

Suggestion: Upgrade Lucene to version 7.3

Fixed: User Directory filter throws a 'value too long' error when Filter exceeds 255 chars

Suggestion: Add support for 4 byte characters in MySQL connection

Fixed: xml view of custom field of multiversion picker type display version ID instead of version text

Fixed: Filter gadgets take several minutes to load after a field configuration context change

Suggestion: Ship JIRA with defaults that enable log rotation

Fixed: The xml for version picker customfield provides the version id instead of the version name

Suggestion: JQL function for showing all issues linked to any issue by a given issue link type

Suggestion: Ability to search for issues with blockers linked to them

Suggestion: Reduce JIRA email chatiness


Text

Database Interview Questions for java

What’s the Difference between a Primary Key and a Unique Key?

Both a primary key and a unique key enforce uniqueness on the column (or columns) on which they are defined. In SQL Server, a primary key creates a clustered index on the column by default, whereas a unique key creates a non-clustered index by default. Another major difference is that a primary key does not allow NULLs, while a unique key allows a single NULL. A primary key is the identifier used to address each row of the table; a unique key need not play that role.

What are the Different Index Configurations a Table can have?

A table can have one of the following index configurations:

No indexes

A clustered index

A clustered index and many non-clustered indexes

A non-clustered index

Many non-clustered indexes

What is Difference between DELETE and TRUNCATE Commands?

The DELETE command is used to remove rows from a table. A WHERE clause can be used to only remove some rows. If no WHERE condition is specified, all rows will be removed. After performing a DELETE operation you need to COMMIT or ROLLBACK the transaction to make the change permanent or to undo it. Note that this operation will cause all DELETE triggers on the table to fire.

TRUNCATE removes all rows from a table. In databases such as Oracle, the operation cannot be rolled back and no triggers will be fired. As such, TRUNCATE is faster and doesn’t use as much undo space as a DELETE.

TRUNCATE

TRUNCATE is faster and uses fewer system and transaction log resources than DELETE.

TRUNCATE removes the data by deallocating the data pages used to store the table’s data, and only the page deallocations are recorded in the transaction log.

TRUNCATE removes all the rows from a table, but the table structure, its columns, constraints, indexes and so on remain.

TRUNCATE resets the counter used by an identity column for new rows to the seed for the column.

You cannot use TRUNCATE TABLE on a table referenced by a FOREIGN KEY constraint.

In T-SQL, TRUNCATE can be rolled back only when it is issued inside an explicit transaction (BEGIN TRANSACTION … ROLLBACK).

TRUNCATE is a DDL command.

DELETE

DELETE removes rows one at a time and records an entry in the transaction log for each deleted row.

DELETE can be used with or without a WHERE clause.

DELETE activates triggers if they are defined on the table.

DELETE can be rolled back.

DELETE is a DML command.

DELETE does not reset the identity of the table.

What are Different Types of Locks?

Shared Locks: Used for operations that do not change or update data (read-only operations), such as a SELECT statement.

Update Locks: Used on resources that can be updated. It prevents a common form of deadlock that occurs when multiple sessions are reading, locking, and potentially updating resources later.

Exclusive Locks: Used for data-modification operations, such as INSERT, UPDATE, or DELETE. It ensures that multiple updates cannot be made to the same resource at the same time.

What are Pessimistic Lock and Optimistic Lock?

Optimistic Locking is a strategy where you read a record, take note of a version number and check that the version hasn’t changed before you write the record back. If the record is dirty (i.e. different version to yours), then you abort the transaction and the user can re-start it. Pessimistic Locking is when you lock the record for your exclusive use until you have finished with it. It has much better integrity than optimistic locking but requires you to be careful with your application design to avoid Deadlocks.
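As a rough illustration of the optimistic strategy, here is a minimal in-memory Java sketch. The class VersionedStore and its methods are hypothetical names invented for this example; in a real database the same check is usually expressed as an UPDATE whose WHERE clause includes the version that was read.

```java
import java.util.HashMap;
import java.util.Map;

class VersionedStore {
    // A stored value together with the version number it was written at.
    static final class Row {
        final String value;
        final int version;

        Row(String value, int version) {
            this.value = value;
            this.version = version;
        }
    }

    private final Map<String, Row> rows = new HashMap<>();

    synchronized void insert(String id, String value) {
        rows.put(id, new Row(value, 1));
    }

    synchronized Row read(String id) {
        return rows.get(id);
    }

    // Writes only if the row still carries the version the caller read earlier.
    // Returns false when another writer got there first (the "dirty" case),
    // in which case the caller aborts and may start over.
    synchronized boolean update(String id, int expectedVersion, String newValue) {
        Row current = rows.get(id);
        if (current == null || current.version != expectedVersion) {
            return false;
        }
        rows.put(id, new Row(newValue, current.version + 1));
        return true;
    }
}
```

Two writers that both read version 1 will see only the first update succeed; the second receives false and has to re-read the record before trying again.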

What is the Difference between a HAVING clause and a WHERE clause?

Both specify a search condition, but they apply at different stages of a query. The WHERE clause is applied to each row before the rows become part of a group, whereas the HAVING clause specifies a search condition for a group or an aggregate and is applied after grouping. HAVING can be used only with the SELECT statement and is typically used together with a GROUP BY clause. When GROUP BY is not used, HAVING behaves like a WHERE clause.

What is NOT NULL Constraint?

A NOT NULL constraint enforces that the column will not accept null values. NOT NULL constraints are used to enforce domain integrity, as are CHECK constraints.

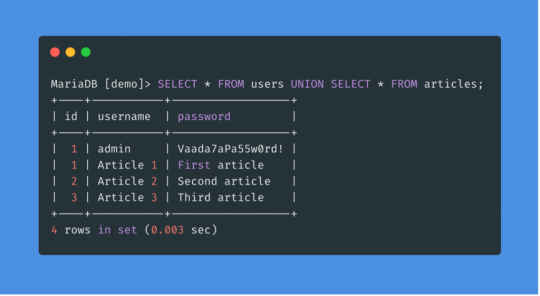

What is the difference between UNION and UNION ALL?

UNION: The UNION command combines the result sets of two SELECT statements into one result set. Unlike a JOIN, which combines columns from related tables, UNION stacks rows, so both queries must select the same number of columns with compatible data types. With UNION, only distinct rows are returned.

UNION ALL: The UNION ALL command is the same as the UNION command, except that UNION ALL returns all rows, including duplicates.

The difference between UNION and UNION ALL is that UNION ALL will not eliminate duplicate rows, instead it just pulls all rows from all the tables fitting your query specifics and combines them into a table.

What is B-Tree?

The database server uses a B-tree structure to organize index information. B-Tree generally has following types of index pages or nodes:

Root node: A root node contains node pointers to branch nodes.

Branch nodes: A branch node contains pointers to leaf nodes or other branch nodes, which can be two or more.

Leaf nodes: A leaf node contains index items and horizontal pointers to other leaf nodes, which can be many.

What are the Advantages of Using Stored Procedures?

Stored procedures can reduce network traffic and latency, boosting application performance. Stored procedure execution plans can be reused; they stay cached in SQL Server’s memory, reducing server overhead. Stored procedures help promote code reuse. Stored procedures can encapsulate logic. You can change stored procedure code without affecting clients. Stored procedures provide better security to your data.

What is SQL Injection? How to Protect Against SQL Injection Attack?

SQL injection is an attack in which malicious code is inserted into strings that are later passed to an instance of SQL Server for parsing and execution. Any procedure that constructs SQL statements should be reviewed for injection vulnerabilities because SQL Server will execute all syntactically valid queries that it receives. Even parameterized data can be manipulated by a skilled and determined attacker. Here are a few methods which can be used to protect against SQL injection attacks:

Use Type-Safe SQL Parameters

Use Parameterized Input with Stored Procedures

Use the Parameters Collection with Dynamic SQL

Filter Input Parameters

Use the Escape Character in LIKE Clauses

Wrap Parameters with QUOTENAME() and REPLACE()
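Two of the methods above, parameterized input and escaping in LIKE clauses, can be sketched in Java with the standard JDBC API. This is only an illustration under stated assumptions: the Employee table, the Name column, the class name SafeQueries, and the choice of '!' as the escape character are all made up for the example.

```java
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.util.ArrayList;
import java.util.List;

class SafeQueries {
    // Escapes the LIKE wildcards %, _ and [ (plus the escape character itself)
    // so that user input is matched literally. '!' is the escape character
    // chosen for this sketch.
    static String escapeLike(String input) {
        StringBuilder out = new StringBuilder();
        for (char c : input.toCharArray()) {
            if (c == '!' || c == '%' || c == '_' || c == '[') {
                out.append('!');
            }
            out.append(c);
        }
        return out.toString();
    }

    // The user-supplied fragment is bound as a parameter and never
    // concatenated into the SQL text. Table and column names are hypothetical.
    static List<String> findNamesContaining(Connection conn, String fragment) throws SQLException {
        String sql = "SELECT Name FROM Employee WHERE Name LIKE ? ESCAPE '!'";
        List<String> names = new ArrayList<>();
        try (PreparedStatement ps = conn.prepareStatement(sql)) {
            ps.setString(1, "%" + escapeLike(fragment) + "%");
            try (ResultSet rs = ps.executeQuery()) {
                while (rs.next()) {
                    names.add(rs.getString(1));
                }
            }
        }
        return names;
    }
}
```

Because the value travels separately from the statement text, a fragment such as `' OR 1=1 --` is treated as data to search for rather than as SQL.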

What is the Correct Order of the Logical Query Processing Phases?

The correct order of the Logical Query Processing Phases is as follows: 1. FROM 2. ON 3. OUTER 4. WHERE 5. GROUP BY 6. CUBE | ROLLUP 7. HAVING 8. SELECT 9. DISTINCT 10. TOP 11. ORDER BY

What are Different Types of Join?

Cross Join: A cross join that does not have a WHERE clause produces the Cartesian product of the tables involved in the join. The size of a Cartesian product result set is the number of rows in the first table multiplied by the number of rows in the second table. A common example is when a company wants to combine each product with a pricing table to analyze each product at each price.

Inner Join: A join that displays only the rows that have a match in both joined tables is known as an Inner Join. This is the default type of join in the Query and View Designer.

Outer Join: A join that includes rows even if they do not have related rows in the joined table is an Outer Join. You can create three different outer joins to specify the unmatched rows to be included:

Left Outer Join: In a Left Outer Join, all the rows in the first-named table, i.e. the “left” table, which appears leftmost in the JOIN clause, are included. Unmatched rows in the right table do not appear.

Right Outer Join: In a Right Outer Join, all the rows in the second-named table, i.e. the “right” table, which appears rightmost in the JOIN clause, are included. Unmatched rows in the left table are not included.

Full Outer Join: In a Full Outer Join, all the rows in all joined tables are included, whether they are matched or not.

Self Join: This is a particular case when one table joins to itself, with one or two aliases to avoid confusion. A self join can be of any type, as long as the joined tables are the same. A self join is rather unique in that it involves a relationship with only one table. A common example is when a company has a hierarchical reporting structure whereby one member of staff reports to another. A Self Join can be an Outer Join or an Inner Join.

What is a View?

A simple view can be thought of as a subset of a table. It can be used for retrieving data as well as updating or deleting rows. Rows updated or deleted in the view are updated or deleted in the table the view was created with. It should also be noted that as data in the original table changes, so does the data in the view as views are the way to look at parts of the original table. The results of using a view are not permanently stored in the database. The data accessed through a view is actually constructed using standard T-SQL select command and can come from one to many different base tables or even other views.

What is an Index?

An index is a physical structure containing pointers to the data. Indexes are created on an existing table to locate rows more quickly and efficiently. It is possible to create an index on one or more columns of a table, and each index is given a name. The users cannot see the indexes; they are just used to speed up queries. Effective indexes are one of the best ways to improve performance in a database application. A table scan happens when there is no index available to help a query. In a table scan, SQL Server examines every row in the table to satisfy the query results. Table scans are sometimes unavoidable, but on large tables, scans have a severe impact on performance.

Can a view be updated/inserted/deleted? If Yes – under what conditions ?

A view can generally be updated, inserted into, or deleted from if it has only one base table. If the view is based on columns from more than one table, then insert, update and delete are not possible.

What is a Surrogate Key?

A surrogate key is a substitute for the natural primary key. It is just a unique identifier or number for each row that can be used as the primary key of the table. The only requirement for a surrogate primary key is that it should be unique for each row in the table. It is useful because the natural primary key can change, and this makes updates more difficult. Surrogate keys are usually integer or numeric.

How to remove duplicates from a table?

    DELETE FROM TableName
    WHERE ID NOT IN (
        SELECT MAX(ID)
        FROM TableName
        GROUP BY Column1, Column2, Column3, ..., ColumnN
        HAVING MAX(ID) IS NOT NULL
    )

Note: the combination of Column1, Column2, Column3, … ColumnN defines the uniqueness of a record.
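The same idea, keeping only the row with the highest ID in each group of identical column values, can be mirrored in Java on an in-memory list. This is a sketch for illustration only: the Row class, its two columns, and the method name are assumptions and do not correspond to any particular table.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class DedupRows {
    // A hypothetical row with an ID and two columns that define uniqueness.
    static final class Row {
        final int id;
        final String column1;
        final String column2;

        Row(int id, String column1, String column2) {
            this.id = id;
            this.column1 = column1;
            this.column2 = column2;
        }
    }

    // For each (column1, column2) combination, keeps only the row with the
    // highest id, like the MAX(ID) ... GROUP BY subquery in the SQL version.
    static List<Row> keepMaxIdPerGroup(List<Row> rows) {
        Map<List<String>, Row> best = new LinkedHashMap<>();
        for (Row row : rows) {
            List<String> key = Arrays.asList(row.column1, row.column2);
            best.merge(key, row, (a, b) -> a.id >= b.id ? a : b);
        }
        return new ArrayList<>(best.values());
    }
}
```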

How to find the N’th Maximum salary using SQL query?

Using a subquery:

    SELECT *
    FROM Employee E1
    WHERE (N-1) = (
        SELECT COUNT(DISTINCT(E2.Salary))
        FROM Employee E2
        WHERE E2.Salary > E1.Salary
    )

Another way to get the 2nd maximum salary:

    SELECT MAX(Salary)
    FROM Employee
    WHERE Salary < (SELECT MAX(Salary) FROM Employee);
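For comparison, the same question can be answered on the Java side once the salaries are in memory. The sketch below is one possible approach, with a hypothetical class and method name: it removes duplicate values, sorts in descending order, and skips the first N-1 entries.

```java
import java.util.Comparator;
import java.util.List;
import java.util.Optional;

class NthSalary {
    // Returns the n-th highest distinct salary (n = 1 is the maximum),
    // or an empty Optional when there are fewer than n distinct values.
    static Optional<Integer> nthHighest(List<Integer> salaries, int n) {
        if (n < 1) {
            return Optional.empty();
        }
        return salaries.stream()
                .distinct()
                .sorted(Comparator.reverseOrder())
                .skip(n - 1)
                .findFirst();
    }
}
```

Like the COUNT(DISTINCT ...) subquery, this treats equal salaries as a single rank.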


Text

SOLVED:P2: Interactive PlayList Analysis Solution

In this assignment, you will develop an application that manages a user's songs in a simplified playlist.

Objectives

Write a class with a main method (PlayList).

Use an existing, custom class (Song).

Use classes from the Java standard library.

Work with numeric data.

Work with standard input and output.

Specification

Existing class that you will use: Song.java

Class that you will create: PlayList.java

Song (provided class)

You do not need to modify this class. You will just use it in your PlayList driver class. You are given a class named Song that keeps track of the details about a song. Each song instance will keep track of the following data.

Title of the song. The song title must be specified when the song is created.

Artist of the song. The song artist must be specified when the song is created.

The play time (in seconds) of the song. The play time must be specified when the song is created.

The file path of the song. The file path must be specified when the song is created.

The play count of the song (e.g. how many times has the song been played). This is set to 0 by default when the song is created.

The Song class has the following methods available for you to use:

public Song(String title, String artist, int playTime, String filePath) -- (The Constructor)

public String getTitle()

public String getArtist()

public int getPlayTime()

public String getFilePath()

public void play()

public void stop()

public String toString()

To find out what each method does, look at the documentation for the Song class: java docs for Song class

PlayList (the driver class)

You will write a class called PlayList. It is the driver class, meaning, it will contain the main method. In this class, you will gather song information from the user by prompting and reading data from the command line. Then, you will store the song information by creating song objects from the given data.
You will use the methods available in the song class to extract data from the song objects, calculate statistics for song play times, and print the information to the user. Finally, you will use conditional statements to order songs by play time. The ordered "playlist" will be printed to the user.

Your PlayList code should not duplicate any data or functionality that belongs to the Song objects. Make sure that you are accessing data using the Song methods. Do NOT use a loop or arrays to generate your Songs. We will discuss how to do this later, but please don't over complicate things now. If you want to do something extra, see the extra credit section below.

User input

Console input should be handled with a Scanner object. Create a Scanner using the standard input as follows:

    Scanner in = new Scanner(System.in);

You should never make more than one Scanner from System.in in any program. Creating more than one Scanner from System.in crashes the script used to test the program. There is only one keyboard; there should only be one Scanner.

Your program will prompt the user for the following values and read the user's input from the keyboard.

A String for the song title.

A String for the song artist.

A String for the song play time (converted to an int later).

A String for the song file path.

Here is a sample input session for one song. Your program must read input in the order below. If it doesn't, it will fail our tests when grading.

    Enter title: Big Jet Plane
    Enter artist: Julia Stone
    Enter play time (mm:ss): 3:54
    Enter file name: sounds/westernBeat.wav

You can use the nextLine() method of the Scanner class to read each line of input.

Creating Song objects from input

As you read in the values for each song, create a new Song object using the input values. Use the following process to create each new song.

Read the input values from the user into temporary variables. You will need to do some manipulation of the playing time data.
The play time will be entered in the format mm:ss, where mm is the number of minutes and ss is the number of seconds. You will need to convert this string value to the total number of seconds before you can store it in your Song object. (Hint: use the String class's indexOf() method to find the index of the ':' character, then use the substring() method to get the minutes and seconds values.) All other input values are String objects, so you can store them directly in your Song object.

Use the Song constructor to instantiate a new song, passing in the variables containing the title, artist, play time, and file path. Because a copy of the values will be stored in your song objects, you can re-use the same temporary variables when reading input for the next song.

Before moving to the next step, print your song objects to make sure everything looks correct. To print a song, you may use the provided toString method. Look at the Song documentation for an example of how to print a song.

NOTE: Each Song instance you create will store its own data, so you will use the methods of the Song class to get that data when you need it. Don't use the temporary variables that you created for reading input from the user. For example, once you create a song, you may retrieve the title using song1.getTitle();

Calculate the average play time.

Use the getPlayTime() method to retrieve the play time of each song and calculate the average play time in seconds. Print the average play time formatted to two decimal places. Format the output as shown in example below.

    Average play time: 260.67

Use the DecimalFormat formatter to print the average. You may use this

Find the song with play time closest to 4 minutes

Determine which song has play time closest to 4 minutes. Print the title of the song formatted as shown in the output below.
    Song with play time closest to 240 secs is: Big Jet Plane

Build a sorted play list

Build a play list of the songs from the shortest to the longest play time and print the songs in order. To print the formatted song data, use the toString() method in the Song class. Print the play list as shown in the output below.

    ==============================================================================
    Title                Artist           File Name                  Play Time
    ==============================================================================
    Where you end        Moby             sounds/classical.wav             198
    Big Jet Plane        Julia Stone      sounds/westernBeat.wav           234
    Last Tango in Paris  Gotan Project    sounds/newAgeRhythm.wav          350
    ==============================================================================

Extra Credit (5 points)

You may notice that you are repeating the same code to read in the song data from the user for each song (Yuck! This would get really messy if we had 10 songs). Modify your main method to use a loop to read in the songs from the user. As you read and create new songs, you will need to store your songs in an ArrayList. This can be quite challenging if you have never used ArrayLists before, so we recommend saving a backup of your original implementation before attempting the extra credit.

Getting Started

Create a new Eclipse project for this assignment and import Song.java into your project.

Create a new Java class called PlayList and add a main method.

Read the Song documentation and (if you are feeling adventurous) look through the Song.java file to familiarize yourself with what it contains and how it works before writing any code of your own.

Start implementing your PlayList class according to the specifications.

Test often! Run your program after each task so you can find and fix problems early. It is really hard for anyone to track down problems if the code was not tested along the way.

Sample Input/Output

Make sure you at least test all of the following inputs.
    Last Tango in Paris
    Gotan Project
    05:50
    sounds/newAgeRhythm.wav
    Where you end
    Moby
    3:18
    sounds/classical.wav
    Big Jet Plane
    Julia Stone
    3:54
    sounds/westernBeat.wav

    Where you end
    Moby
    3:18
    sounds/classical.wav
    Last Tango in Paris
    Gotan Project
    05:50
    sounds/newAgeRhythm.wav
    Big Jet Plane
    Julia Stone
    3:54
    sounds/westernBeat.wav

    Big Jet Plane
    Julia Stone
    3:54
    sounds/westernBeat.wav
    Last Tango in Paris
    Gotan Project
    05:50
    sounds/newAgeRhythm.wav
    Where you end
    Moby
    3:18
    sounds/classical.wav

Submitting Your Project

Testing

Once you have completed your program in Eclipse, copy your Song.java, PlayList.java and README files into a directory on the onyx server, with no other files (if you did the project on your computer). Test that you can compile and run your program from the console in the lab to make sure that it will run properly for the grader.

    javac PlayList.java
    java PlayList

Documentation

If you haven't already, add a javadoc comment to your program. It should be located immediately before the class header. If you forgot how to do this, go look at the Documenting Your Program section from lab.

Have a class javadoc comment before the class (as you did in the first lab).

Your class comment must include the @author tag at the end of the comment. This will list you as the author of your software when you create your documentation.

Include a plain-text file called README that describes your program and how to use it. Expected formatting and content are described in README_TEMPLATE. See README_EXAMPLE for an example.

Submission

You will follow the same process for submitting each project.

Open a console and navigate to the project directory containing your source files.

Remove all the .class files using the command, rm *.class.

In the same directory, execute the submit command for your section as shown in the following table.

Look for the success message and timestamp.
If you don't see a success message and timestamp, make sure the submit command you used is EXACTLY as shown.

Required Source Files

Required files (be sure the names match what is here exactly):

PlayList.java (main class)

Song.java (provided class)

README

    Section  Instructor                           Submit Command
    1        Luke Hindman (TuTh 1:30 - 2:45)      submit lhindman cs121-1 p2
    2        Greg Andersen (TuTh 9:00 - 10:15)    submit gandersen cs121-2 p2
    3        Luke Hindman (TuTh 10:30 - 11:45)    submit lhindman cs121-3 p2
    4        Marissa Schmidt (TuTh 4:30 - 5:45)   submit marissa cs121-4 p2
    5        Jerry Fails (TuTh 1:30 - 2:45)       submit jfails cs121-5 p2

After submitting, you may check your submission using the "check" command. In the example below, replace submit -check
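The play time conversion and the average formatting described in the assignment can be sketched as follows. This is a minimal illustration of the indexOf()/substring() hint and of DecimalFormat, not a full PlayList solution; the class name PlayTimeSketch and its helper methods are invented for the example, and the decimal symbols are pinned to Locale.US so the output uses a period regardless of the machine's locale.

```java
import java.text.DecimalFormat;
import java.text.DecimalFormatSymbols;
import java.util.Locale;

class PlayTimeSketch {
    // Converts "mm:ss" (for example "3:54") to a total number of seconds.
    static int toSeconds(String playTime) {
        int colon = playTime.indexOf(':');
        int minutes = Integer.parseInt(playTime.substring(0, colon));
        int seconds = Integer.parseInt(playTime.substring(colon + 1));
        return minutes * 60 + seconds;
    }

    // Average of three play times, formatted to two decimal places.
    static String formatAverage(int first, int second, int third) {
        double average = (first + second + third) / 3.0; // 3.0 avoids integer division
        DecimalFormat twoPlaces =
                new DecimalFormat("0.00", DecimalFormatSymbols.getInstance(Locale.US));
        return twoPlaces.format(average);
    }

    public static void main(String[] args) {
        int first = toSeconds("05:50");
        int second = toSeconds("3:18");
        int third = toSeconds("3:54");
        System.out.println("Average play time: " + formatAverage(first, second, third));
    }
}
```

With the three sample songs (350, 198 and 234 seconds) the average is 782 / 3, which formats as 260.67 and matches the sample output in the assignment.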


Video

youtube

In today's episode, learn how to remove duplicate characters in a String using Maps in Java.

About the trainer: Mr. Manjunath Aradhya is a technocrat by profession, a teacher by choice and an educationist by passion. Under his leadership, ABC for Technology Training has become a national brand enabling the creation of thousands of careers annually in the IT sector. He has extensive experience working as a business associate with Wipro Technologies. He has also served as a corporate trainer to many other leading software firms, and has provided technical assistance to the placement cells of various engineering colleges. He has authored numerous engineering and other textbooks published by Pearson Education, an international publishing house headquartered in London. Other international publishers, such as Cengage Learning, headquartered in Boston, United States, have also published books authored by him. His C Programming and Data Structures book, published by Cengage India Private Limited, is the prescribed book at Dr. Hari Singh Gour University, a Central University and the oldest university in the state of Madhya Pradesh (MP), India.

To learn more about Technology, subscribe to our YouTube Channel: http://bit.ly/2jREy4a
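The video itself is not reproduced here, so the following is only one plausible way to remove duplicate characters with a Map, not necessarily the approach taken in the episode. It relies on LinkedHashMap keeping keys in insertion order; the class and method names are invented for this sketch.

```java
import java.util.LinkedHashMap;
import java.util.Map;

class DuplicateChars {
    // Keeps the first occurrence of each character and preserves the
    // original order. The map value counts occurrences, which is handy
    // if you also want to report how often each character appeared.
    static String removeDuplicates(String input) {
        Map<Character, Integer> counts = new LinkedHashMap<>();
        for (char c : input.toCharArray()) {
            counts.merge(c, 1, Integer::sum);
        }
        StringBuilder result = new StringBuilder();
        for (Character c : counts.keySet()) {
            result.append(c);
        }
        return result.toString();
    }

    public static void main(String[] args) {
        System.out.println(removeDuplicates("programming"));
    }
}
```

Note that this works on Java char values, so characters outside the Basic Multilingual Plane (stored as surrogate pairs) would need a code-point-based variant.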

#java#java tutorial#learn java#hindi#java tutorial for beginners#java programming tutorial#java full course#abc for java#abc tech training#abc for technology training


Photo

New Post has been published on https://cryptomoonity.com/open-source-ekyc-blockchain-built-on-hyperledger-sawtooth/

Open source eKYC blockchain built on Hyperledger Sawtooth

Guest post: Rohas Nagpal, Primechain Technologies

1. Introduction

Financial and capital markets use the KYC (Know Your Customer) system to identify “bad” customers and minimize money laundering, tax evasion, and terrorism financing. Efforts to prevent money laundering and the financing of terrorism are costing the financial sector billions of dollars. Banks are also exposed to huge penalties for failure to follow KYC guidelines. Costs aside, KYC can delay transactions and lead to duplication of effort between banks.

Blockchain-eKYC is a permissioned Hyperledger Sawtooth blockchain for sharing corporate KYC records amongst banks and other financial institutions.

The records are stored in the blockchain in an encrypted form and can only be viewed by entities that have been “whitelisted” by the issuer entity. This ensures data privacy and confidentiality while at the same time ensuring that records are shared only between entities that trust each other.

Blockchain-eKYC is maintained by Rahul Tiwari, Blockchain Developer, Primechain Technologies Pvt. Ltd.

The source code of Blockchain-eKYC is available on GitHub at:

https://github.com/Primechain/blockchain-ekyc-sawtooth

Primary benefits

Removes duplication of effort, automates processes and reduces compliance errors.

Enables the distribution of encrypted updates to client information in real time.

Provides the historical record of all compliance activities undertaken for each customer.

Provides the historical record of all documents pertaining to each customer.

Establishes records that can be used as evidence to prove to regulators that the bank has complied with all relevant regulations.

Enables identification of entities attempting to create fraudulent histories.

Enables data and records to be analyzed to spot criminal activities.

2. Uploading records

Records can be uploaded in any format (doc, pdf, jpg etc.) up to a maximum of 10 MB per record. These records are automatically encrypted using the AES symmetric encryption algorithm, and the decryption keys are automatically stored in the exclusive web application of the uploading entity.

When a new record is uploaded to the blockchain, the following information must be provided:

Corporate Identity Number (CIN) of the entity to which this document relates – this information is stored in the blockchain in plain text / un-encrypted form and cannot be changed.

Document category – this information is stored in the blockchain in plain text / un-encrypted form and cannot be changed.

Document type – this information is stored in the blockchain in plain text / un-encrypted form and cannot be changed.

A brief description of the document – this information is stored in the blockchain in plain text / un-encrypted form and cannot be changed.

The document – this can be in pdf, word, excel, image or other format and is stored in the blockchain in AES-encrypted form and cannot be changed. The decryption key is stored in the relevant bank’s dedicated database and does NOT go into the blockchain.

When the above information is provided, this is what happens:

Hash of the uploaded file is calculated.

The file is digitally signed using the private key of the uploader bank.

The file is encrypted using AES symmetric encryption.

The encrypted data is converted into hexadecimal.

The non-encrypted data is converted into hexadecimal.

Hexadecimal content is uploaded to the blockchain.

Sample output:

    file_hash: 84a9ceb1ee3a8b0dc509dded516483d1c4d976c13260ffcedf508cfc32b52fbe
    file_txid: 2e770002051216052b3fdb94bf78d43a8420878063f9c3411b223b38a60da81d
    data_txid: 85fc7ff1320dd43d28d459520fe5b06ebe7ad89346a819b31a5a61b01e7aac74
    signature: IBJNCjmclS2d3jd/jfepfJHFeevLdfYiN22V0T2VuetiBDMH05vziUWhUUH/tgn5HXdpSXjMFISOqFl7JPU8Tt8=
    secrect_key: ZOwWyWHiOvLGgEr4sTssiir6qUX0g3u0
    initialisation_vector: FAaZB6MuHIuX
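The hashing, encryption, and hexadecimal conversion steps listed above can be sketched in Java with the standard java.security and javax.crypto APIs. Several details are assumptions, since the post does not state them: SHA-256 as the hash function, AES in GCM mode with a 12-byte initialisation vector, and a 128-bit key. The digital signature step is left out, and the class name UploadSketch is invented for this example.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.MessageDigest;
import java.security.SecureRandom;
import javax.crypto.Cipher;
import javax.crypto.KeyGenerator;
import javax.crypto.SecretKey;
import javax.crypto.spec.GCMParameterSpec;

class UploadSketch {
    // Converts bytes to lowercase hexadecimal, two characters per byte.
    static String toHex(byte[] bytes) {
        StringBuilder sb = new StringBuilder(bytes.length * 2);
        for (byte b : bytes) {
            sb.append(String.format("%02x", b & 0xff));
        }
        return sb.toString();
    }

    // Hash of the file contents (SHA-256 is an assumption of this sketch).
    static String sha256Hex(byte[] data) {
        try {
            return toHex(MessageDigest.getInstance("SHA-256").digest(data));
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }

    // AES-GCM encryption or decryption, depending on the Cipher mode passed in.
    static byte[] aesGcm(int mode, SecretKey key, byte[] iv, byte[] input) {
        try {
            Cipher cipher = Cipher.getInstance("AES/GCM/NoPadding");
            cipher.init(mode, key, new GCMParameterSpec(128, iv));
            return cipher.doFinal(input);
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) throws GeneralSecurityException {
        byte[] file = "sample KYC record".getBytes(StandardCharsets.UTF_8);

        KeyGenerator generator = KeyGenerator.getInstance("AES");
        generator.init(128);
        SecretKey key = generator.generateKey();
        byte[] iv = new byte[12];
        new SecureRandom().nextBytes(iv); // a fresh IV for every encryption

        System.out.println("file_hash: " + sha256Hex(file));
        System.out.println("encrypted (hex): " + toHex(aesGcm(Cipher.ENCRYPT_MODE, key, iv, file)));
    }
}
```

In this sketch the key and IV stay with the caller, mirroring the description above in which the decryption key is kept in the bank's own database and only the encrypted hexadecimal content goes to the blockchain.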

3. Transaction Processor and State

This section uses the following terminology:

Transaction Processor – this is the business logic / smart contracts layer.

Validator Process – this is the Global State Store layer.

Client Application (User) – this implies a user of the solution; the user’s public key executes the transactions.

The Transaction Processor of the eKYC application is written in Java. It contains all the business logic of the application. Hyperledger Sawtooth stores data within a Merkle Tree. Data is stored in leaf nodes and each node is accessed using an addressing scheme that is composed of 35 bytes, represented as 70 hex characters.

Using the Corporate Identity Number (CIN) provided by the user while uploading, a 70-character (35-byte) address is created for uploading a record to the blockchain. To understand the address creation and namespace design process, see the documentation regarding Address and Namespace Design.

Below is the address creation logic in the application:

Note:

uniqueValue is the type of data (can be any value)

kycAddress is the CIN of the uploaded document.
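As a rough illustration of how a 70-character address could be derived from these two values, the sketch below follows the convention used in the Sawtooth example transaction families: a 6-character namespace prefix followed by 64 characters taken from a SHA-512 hash. How the application actually combines uniqueValue and kycAddress is an assumption here, and the class and method names are hypothetical.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

// Hypothetical sketch; the real address logic of the application may differ.
class KycAddressSketch {

    /** SHA-512 of a UTF-8 string as 128 lower-case hex characters. */
    static String sha512Hex(String value) {
        try {
            byte[] digest = MessageDigest.getInstance("SHA-512")
                    .digest(value.getBytes(StandardCharsets.UTF_8));
            StringBuilder sb = new StringBuilder(digest.length * 2);
            for (byte b : digest) {
                sb.append(String.format("%02x", b));
            }
            return sb.toString();
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException(e);
        }
    }

    /**
     * Assumed layout: 6 hex characters from the hash of uniqueValue (namespace prefix)
     * plus 64 hex characters from the hash of kycAddress (the CIN) = 70 hex characters.
     */
    static String createAddress(String uniqueValue, String kycAddress) {
        return sha512Hex(uniqueValue).substring(0, 6)
                + sha512Hex(kycAddress).substring(0, 64);
    }

    public static void main(String[] args) {
        // Both arguments are made-up placeholder values.
        String address = createAddress("kyc", "U12345MH2018PTC000001");
        System.out.println(address + " (" + address.length() + " characters)");
    }
}
```

Because the address depends only on the two inputs, anyone who knows the CIN can recompute it, which is what allows a client to look a record up again later.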

The User can upload multiple files using the same CIN. However, the state at an address holds only the value written last, so by default only the latest uploaded document would be returned. To get all the documents uploaded to the same address, additional business logic is written in the Transaction Processor.

The else { branch handles uploading multiple documents to the same address and fetching every uploaded document from the state.
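The idea of keeping every document at one address can be sketched as follows. This is not the application's Transaction Processor: a plain map stands in for the Sawtooth state, the names are hypothetical, and the comma delimiter is an assumption (it is safe for hexadecimal payloads, which never contain a comma).

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical sketch: a HashMap stands in for the Sawtooth global state.
class MultiDocumentStateSketch {

    /** Assumed separator between hex payloads stored at one address. */
    static final String DELIMITER = ",";

    private final Map<String, String> state = new HashMap<>();

    /** Store the first payload as-is; append every later payload to what is already there. */
    void apply(String address, String payloadHex) {
        String existing = state.get(address);
        if (existing == null || existing.isEmpty()) {
            state.put(address, payloadHex);
        } else {
            state.put(address, existing + DELIMITER + payloadHex);
        }
    }

    /** Current value at the address, or null if nothing was uploaded. */
    String get(String address) {
        return state.get(address);
    }

    public static void main(String[] args) {
        MultiDocumentStateSketch processor = new MultiDocumentStateSketch();
        processor.apply("addr", "aa11");
        processor.apply("addr", "bb22");
        System.out.println(processor.get("addr"));
    }
}
```

Writing the whole list back on every upload keeps reads simple, at the cost of a state entry that grows with each document.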

4. Client Application

The client application uses REST API endpoints to upload (POST) and get (GET) documents on the Sawtooth blockchain platform. It is written in Node.js. When uploading, a few steps need to be considered:

Creating and encoding transactions having a header, a header signature, and a payload. (Transaction payloads are composed of binary-encoded data that is opaque to the validator.)

Creating BatchHeader, Batch, and encoding Batches.

Submitting batches to the validator.

When getting uploaded data from the blockchain, the following steps need to be considered:

1. Creating the same address from the CIN given by the User, and using the GET method to fetch the data stored at that address. As shown in the following code snippet, updatedAddress is created from input that comes either from the User (a search by CIN in the network) or from the private database of the user (records uploaded by the user). Similarly, splitStringArray splits the data returned from an address. The split is needed because the Transaction Processor logic for uploading multiple documents to the same address updates the state with the list of all the uploaded data, not only the current payload.

2. The client-side logic then converts splitStringArray by decoding it to the required format and gives the User an option to download the result as a file.
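The split-and-decode step can be illustrated with the sketch below. It is written in Java for consistency with the Transaction Processor, although the client itself is written in Node.js. The comma delimiter and all names are assumptions, and decryption of the decoded bytes is left out.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch of splitting a state value and decoding each hex payload.
class SplitAndDecodeSketch {

    /** Assumed separator between the hex payloads stored at one address. */
    static final String DELIMITER = ",";

    /** Decode a hexadecimal string into raw bytes. */
    static byte[] fromHex(String hex) {
        if (hex.length() % 2 != 0) {
            throw new IllegalArgumentException("hex string must have an even length");
        }
        byte[] out = new byte[hex.length() / 2];
        for (int i = 0; i < out.length; i++) {
            int hi = Character.digit(hex.charAt(2 * i), 16);
            int lo = Character.digit(hex.charAt(2 * i + 1), 16);
            if (hi < 0 || lo < 0) {
                throw new IllegalArgumentException("not a hex digit in byte " + i);
            }
            out[i] = (byte) ((hi << 4) | lo);
        }
        return out;
    }

    /** Split the value read from one address and decode every document in it. */
    static List<byte[]> decodeAll(String stateValue) {
        List<byte[]> documents = new ArrayList<>();
        for (String part : stateValue.split(DELIMITER)) {
            documents.add(fromHex(part));
        }
        return documents;
    }

    public static void main(String[] args) {
        List<byte[]> documents = decodeAll("6869,6f6b");
        System.out.println(documents.size() + " documents decoded");
    }
}
```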

5. Installation and setup

Please refer to the guide here: https://github.com/Primechain/blockchain-ekyc-sawtooth/blob/master/setup.MD

6. Third party software and components

Third party software and components: bcryptjs, body-parser, connect-flash, cookie-parser, express, express-fileupload, express-handlebars, express-session, express-validator, mongodb, mongoose, multichain, passport, passport-local, sendgrid/mail.

7. License

Blockchain-eKYC is available under Apache License 2.0. This license does not extend to third party software and components.

The post Open source eKYC blockchain built on Hyperledger Sawtooth appeared first on Hyperledger.



Text

Structured JUnit 5 testing

Automated tests, in Java most commonly written with JUnit, are critical to any reasonable software project. Some even say that test code is more important than production code, because it’s easier to recreate the production code from the tests than the other way around. Anyway, they are valuable assets, so it’s necessary to keep them clean.

Adding new tests is something you’ll be doing every day, but keep yourself from slipping into a write-only mode: it’s overwhelmingly tempting to simply duplicate an existing test method and change just a few details for the new test. But when you refactor the production code, you often have to change some test code, too. If the affected code is scattered all over your tests, you will have a hard time; and what’s worse, you will be tempted to skip the refactoring or even to stop writing tests. So in your test code, too, you’ll have to reduce code duplication to a minimum from the very beginning. It’s so little extra work to do now, while you’re into the subject, that it will pay off in no time.

JUnit 5 gives us some opportunities to do this even better, and I’ll show you some techniques here.

Any examples I can come up with are necessarily simplified, as they can’t have the full complexity of a real system. So bear with me while I try to contrive examples with enough complexity to show the effects; and allow me to challenge your imagination: some things that look over-engineered at this scale will prove useful when things get bigger.

If you like, you can follow the refactorings done here by looking at the tests in this Git project. They are numbered to match the order presented here.

Example: Testing a parser with three methods for four documents

Let’s take a parser for a stream of documents (like in YAML) as an example test subject. It has three methods:

    public class Parser {
        /**
         * Parse the one and only document in the input.
         *
         * @throws ParseException if there is none or more than one.
         */
        public static Document parseSingle(String input);

        /**
         * Parse only the first document in the input.
         *
         * @throws ParseException if there is none.
         */
        public static Document parseFirst(String input);

        /** Parse the list of documents in the input; may be empty, too. */
        public static Stream parseAll(String input);
    }

We use static methods only to make the tests simpler; normally this would be a regular object with instance methods.

We write tests for four input files (to keep things from getting too complex): one is empty, one contains a document with only one space character, one contains a single document containing only a comment, and one contains two documents, each containing only a comment. With three parser methods, that makes a total of 12 tests looking similar to this one:

    class ParserTest {
        @Test void shouldParseSingleInDocumentOnly() {
            String input = "# test comment";

            Document document = Parser.parseSingle(input);

            assertThat(document).isEqualTo(new Document().comment(new Comment().text("test comment")));
        }
    }

Following the BDD given-when-then schema, I first have a test setup part (given), then an invocation of the system under test (when), and finally a verification of the outcome (then). These three parts are delimited with empty lines.

For the verification, I use AssertJ.

To reduce duplication, we extract the given and when parts into methods:

    class ParserTest {
        @Test void shouldParseSingleInDocumentOnly() {
            String input = givenCommentOnlyDocument();

            Document document = whenParseSingle(input);

            assertThat(document).isEqualTo(COMMENT_ONLY_DOCUMENT);
        }
    }

Or when the parser is expected to fail:

    class ParserTest {
        @Test void shouldParseSingleInEmpty() {
            String input = givenEmptyDocument();

            ParseException thrown = whenParseSingleThrows(input);

            assertThat(thrown).hasMessage("expected exactly one document, but found 0");
        }
    }

The given... methods are called three times each, once for every parser method. The when... methods are called four times each, once for every input document, minus the cases where the tests expect exceptions. There is actually not so much reuse for the then... methods; we only extract some constants for the expected documents here, e.g. COMMENT_ONLY.
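The bodies of the when... helpers are not shown above. One plausible plain-Java shape for whenParseSingleThrows is sketched here; the nested ParseException and Parser types are simplified, hypothetical stand-ins so that the sketch compiles on its own, and the stub returns a String instead of a Document.

```java
// Hypothetical sketch of the when... helpers; not taken from the post's Git project.
class WhenHelpersSketch {

    /** Stand-in for the parser's exception type (assumed to be unchecked). */
    static class ParseException extends RuntimeException {
        ParseException(String message) {
            super(message);
        }
    }

    /** Stand-in parser: only knows that empty input contains no document. */
    static class Parser {
        static String parseSingle(String input) {
            if (input.isEmpty()) {
                throw new ParseException("expected exactly one document, but found 0");
            }
            return input;
        }
    }

    /** Happy path: simply delegate to the system under test. */
    static String whenParseSingle(String input) {
        return Parser.parseSingle(input);
    }

    /** Failure path: return the exception, or fail if none was thrown. */
    static ParseException whenParseSingleThrows(String input) {
        try {
            Parser.parseSingle(input);
        } catch (ParseException e) {
            return e;
        }
        throw new AssertionError("expected a ParseException, but parseSingle returned normally");
    }

    public static void main(String[] args) {
        System.out.println(whenParseSingleThrows("").getMessage());
    }
}
```

In a real JUnit 5 test, the try/catch can be replaced by Assertions.assertThrows, which also returns the thrown exception.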

But reuse is not the most important reason to extract a method. It’s more about hiding complexity and staying at a single level of abstraction. As always, you’ll have to find the right balance: Is whenParseSingle(input) better than Parser.parseSingle(input)? The call is so simple, and so unlikely to ever need changing by hand, that it’s probably better not to extract it. If you want to go into more detail, read the Clean Code book by Robert C. Martin; it’s worth it!

You can see that the given... methods all return an input string, while all when... methods take that string as an argument. When tests get more complex, they produce or require more than one object, so you’ll have to pass them via fields. But normally I wouldn’t do this in such a simple case. Let’s do that here anyway, as a preparation for the next step:

    class ParserTest {
        private String input;

        @Test void shouldParseAllInEmptyDocument() {
            givenEmptyDocument();

            Stream stream = whenParseAll();

            assertThat(stream.documents()).isEmpty();
        }

        private void givenEmptyDocument() {
            input = "";
        }

        private Stream whenParseAll() {
            return Parser.parseAll(input);
        }
    }

Adding structure

It would be nice to group all tests with the same input together, so it’s easier to find them in a larger test base, and to more easily see if there are some setups missing or duplicated. To do so in JUnit 5, you can surround all tests that call, e.g., givenTwoCommentOnlyDocuments() with an inner class GivenTwoCommentOnlyDocuments. To have JUnit still recognize the nested test methods, we’ll have to add a @Nested annotation:

    class ParserTest {
        @Nested class GivenOneCommentOnlyDocument {
            @Test void shouldParseAllInDocumentOnly() {
                givenOneCommentOnlyDocument();

                Stream stream = whenParseAll();

                assertThat(stream.documents()).containsExactly(COMMENT_ONLY);
            }
        }
    }

In contrast to having separate top-level test classes, JUnit runs these tests as nested groups, so we see the test run structured like this:

Nice, but we can go a step further. Instead of calling the respective given... method from each test method, we can call it in a @BeforeEach setup method, and as there is now only one call for each given... method, we can inline it:

    @Nested class GivenTwoCommentOnlyDocuments {
        @BeforeEach void givenTwoCommentOnlyDocuments() {
            input = "# test comment\n---\n# test comment 2";
        }
    }

We could have a little bit less code (which is generally a good thing) by using a constructor like this:

    @Nested class GivenTwoCommentOnlyDocuments {
        GivenTwoCommentOnlyDocuments() {
            input = "# test comment\n---\n# test comment 2";
        }
    }

…or even an anonymous initializer like this:

    @Nested class GivenTwoCommentOnlyDocuments {
        {
            input = "# test comment\n---\n# test comment 2";
        }
    }

But I prefer methods to have names that say what they do, and as you can see in the first variant, setup methods are no exception. I sometimes even have several @BeforeEach methods in a single class, when they do separate setup work. This gives me the advantage that I don’t have to read the method body to understand what it does, and when some setup doesn’t work as expected, I can start by looking directly at the method that is responsible for that. But I must admit that this is actually some repetition in this case; probably it’s a matter of taste.
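All three variants rely on the same Java mechanism: a non-static inner class can read and write the fields of its enclosing instance, and JUnit 5 creates each @Nested instance on top of an instance of the enclosing test class. The sketch below shows just that mechanism in plain Java, without JUnit; the class names are hypothetical.

```java
// Hypothetical plain-Java sketch: an inner class initializer sets a field of the outer instance.
class InnerClassSetupSketch {

    /** Shared fixture field, like the input field of ParserTest. */
    String input;

    /** Non-static inner class, as required for @Nested test classes. */
    class GivenTwoCommentOnlyDocuments {
        {
            // Instance initializer: runs whenever the inner class is instantiated.
            input = "# test comment\n---\n# test comment 2";
        }

        String currentInput() {
            return input;
        }
    }

    public static void main(String[] args) {
        InnerClassSetupSketch outer = new InnerClassSetupSketch();
        System.out.println("before: " + outer.input);

        // Creating the inner instance on 'outer' runs the setup against outer's field.
        GivenTwoCommentOnlyDocuments nested = outer.new GivenTwoCommentOnlyDocuments();
        System.out.println("after: " + nested.currentInput().length() + " characters");
    }
}
```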

Now the test method names still describe the setup they run in, i.e. the InDocumentOnly part in shouldParseSingleInDocumentOnly. In the code structure as well as in the output provided by the JUnit runner, this is redundant, so we should remove it: shouldParseSingle.

The JUnit runner now looks like this:

Most real-world tests share only part of the setup with other tests. You can extract the common setup and simply add the specific setup in each test. I often use objects with all fields set up with reasonable dummy values, and only modify those relevant for each test, e.g. setting one field to null to test that specific outcome. Just make sure to express any additional setup steps in the name of the test, or you may overlook them.
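A minimal sketch of this dummy-value approach, using a made-up Customer type that has nothing to do with the parser example; every name and value in it is hypothetical.

```java
// Hypothetical fixture sketch: valid defaults, with each test changing only what it cares about.
class FixtureSketch {

    /** Made-up domain object whose fields all default to reasonable dummy values. */
    static class Customer {
        String name = "Jane Doe";
        String email = "jane@example.com";
        Integer age = 42;

        boolean isValid() {
            return name != null && email != null && age != null && age >= 0;
        }
    }

    /** Common setup shared by all tests: a customer that is valid in every respect. */
    static Customer givenValidCustomer() {
        return new Customer();
    }

    public static void main(String[] args) {
        Customer customer = givenValidCustomer();
        System.out.println("valid by default: " + customer.isValid());

        // Test-specific setup: break exactly one field and observe the outcome.
        customer.email = null;
        System.out.println("valid without email: " + customer.isValid());
    }
}
```

A test built on this fixture would then carry the extra step in its name, for example shouldRejectCustomerWithoutEmail.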

When things get more complex, it’s probably better to nest several layers of Given... classes, even when they have only one test, just to make all setup steps visible in one place, the class names, and not some in the class names and some in the method names.