#kubernetes platform

Explore tagged Tumblr posts

Text

Guide on How to Build Microservices

Microservices are quickly becoming the go-to architectural and organizational strategy for application development. The days of monolithic approaches are behind us as software becomes more complex. Microservices provide greater flexibility and software resilience while creating a push for innovation.

At its core, microservices are about having smaller, independent processes that communicate with one another through a well-designed interface and lightweight API. In this guide, we'll provide a quick breakdown of how to build easy microservices.

Create Your Services

To perform Kubernetes microservices testing, you need to create services. The goal is to have independent containerized services and establish a connection that allows them to communicate.

Keep things simple by developing two web applications. Many developers experimenting with microservices use the iconic "Hello World" test to understand how this architecture works.

Start by building your file structure. You'll need a directory, subdirectories and files to set up the blueprint for your microservices application. The amount of code you'll write for a true microservices application is great. But to keep things simple for experimentation, you can use prewritten codes and directory structures.

The first service you should create is your "hello-world-service." This flask-based application has two endpoints. The first is the welcome page. It includes a button to test the connection to your second service. The second endpoint communicates with your second service.

The second service is the REST-based "welcome service." It delivers a message, allowing you to test that your two services communicate effectively.

The concept is simple: You have one service that you can interact with directly and a second service with the sole function of delivering a message. Using Kubernetes microservices testing, you can force the first service to send a GET request to your REST-based second service.

Containerizing

Before testing, you must make your microservices independent from the hosting environment. To do that, you encapsulate them in Docker containers. You can then set the build path for each service, put them in a running state and start testing.

The result should be a simple web-based application. When you click the "test connection" button, your first service will connect to the second, delivering your "Hello World" message.

Explore the future of scalability – click to harness the power of our Kubernetes platform!

#kubernetes platform#kubernetes preview environments#kubernetes microservices testing#microservice local development environments

0 notes

Text

Deploying Large Language Models on Kubernetes: A Comprehensive Guide

New Post has been published on https://thedigitalinsider.com/deploying-large-language-models-on-kubernetes-a-comprehensive-guide/

Deploying Large Language Models on Kubernetes: A Comprehensive Guide

Large Language Models (LLMs) are capable of understanding and generating human-like text, making them invaluable for a wide range of applications, such as chatbots, content generation, and language translation.

However, deploying LLMs can be a challenging task due to their immense size and computational requirements. Kubernetes, an open-source container orchestration system, provides a powerful solution for deploying and managing LLMs at scale. In this technical blog, we’ll explore the process of deploying LLMs on Kubernetes, covering various aspects such as containerization, resource allocation, and scalability.

Understanding Large Language Models

Before diving into the deployment process, let’s briefly understand what Large Language Models are and why they are gaining so much attention.

Large Language Models (LLMs) are a type of neural network model trained on vast amounts of text data. These models learn to understand and generate human-like language by analyzing patterns and relationships within the training data. Some popular examples of LLMs include GPT (Generative Pre-trained Transformer), BERT (Bidirectional Encoder Representations from Transformers), and XLNet.

LLMs have achieved remarkable performance in various NLP tasks, such as text generation, language translation, and question answering. However, their massive size and computational requirements pose significant challenges for deployment and inference.

Why Kubernetes for LLM Deployment?

Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. It provides several benefits for deploying LLMs, including:

Scalability: Kubernetes allows you to scale your LLM deployment horizontally by adding or removing compute resources as needed, ensuring optimal resource utilization and performance.

Resource Management: Kubernetes enables efficient resource allocation and isolation, ensuring that your LLM deployment has access to the required compute, memory, and GPU resources.

High Availability: Kubernetes provides built-in mechanisms for self-healing, automatic rollouts, and rollbacks, ensuring that your LLM deployment remains highly available and resilient to failures.

Portability: Containerized LLM deployments can be easily moved between different environments, such as on-premises data centers or cloud platforms, without the need for extensive reconfiguration.

Ecosystem and Community Support: Kubernetes has a large and active community, providing a wealth of tools, libraries, and resources for deploying and managing complex applications like LLMs.

Preparing for LLM Deployment on Kubernetes:

Before deploying an LLM on Kubernetes, there are several prerequisites to consider:

Kubernetes Cluster: You’ll need a Kubernetes cluster set up and running, either on-premises or on a cloud platform like Amazon Elastic Kubernetes Service (EKS), Google Kubernetes Engine (GKE), or Azure Kubernetes Service (AKS).

GPU Support: LLMs are computationally intensive and often require GPU acceleration for efficient inference. Ensure that your Kubernetes cluster has access to GPU resources, either through physical GPUs or cloud-based GPU instances.

Container Registry: You’ll need a container registry to store your LLM Docker images. Popular options include Docker Hub, Amazon Elastic Container Registry (ECR), Google Container Registry (GCR), or Azure Container Registry (ACR).

LLM Model Files: Obtain the pre-trained LLM model files (weights, configuration, and tokenizer) from the respective source or train your own model.

Containerization: Containerize your LLM application using Docker or a similar container runtime. This involves creating a Dockerfile that packages your LLM code, dependencies, and model files into a Docker image.

Deploying an LLM on Kubernetes

Once you have the prerequisites in place, you can proceed with deploying your LLM on Kubernetes. The deployment process typically involves the following steps:

Building the Docker Image

Build the Docker image for your LLM application using the provided Dockerfile and push it to your container registry.

Creating Kubernetes Resources

Define the Kubernetes resources required for your LLM deployment, such as Deployments, Services, ConfigMaps, and Secrets. These resources are typically defined using YAML or JSON manifests.

Configuring Resource Requirements

Specify the resource requirements for your LLM deployment, including CPU, memory, and GPU resources. This ensures that your deployment has access to the necessary compute resources for efficient inference.

Deploying to Kubernetes

Use the kubectl command-line tool or a Kubernetes management tool (e.g., Kubernetes Dashboard, Rancher, or Lens) to apply the Kubernetes manifests and deploy your LLM application.

Monitoring and Scaling

Monitor the performance and resource utilization of your LLM deployment using Kubernetes monitoring tools like Prometheus and Grafana. Adjust the resource allocation or scale your deployment as needed to meet the demand.

Example Deployment

Let’s consider an example of deploying the GPT-3 language model on Kubernetes using a pre-built Docker image from Hugging Face. We’ll assume that you have a Kubernetes cluster set up and configured with GPU support.

Pull the Docker Image:

bashCopydocker pull huggingface/text-generation-inference:1.1.0

Create a Kubernetes Deployment:

Create a file named gpt3-deployment.yaml with the following content:

apiVersion: apps/v1 kind: Deployment metadata: name: gpt3-deployment spec: replicas: 1 selector: matchLabels: app: gpt3 template: metadata: labels: app: gpt3 spec: containers: - name: gpt3 image: huggingface/text-generation-inference:1.1.0 resources: limits: nvidia.com/gpu: 1 env: - name: MODEL_ID value: gpt2 - name: NUM_SHARD value: "1" - name: PORT value: "8080" - name: QUANTIZE value: bitsandbytes-nf4

This deployment specifies that we want to run one replica of the gpt3 container using the huggingface/text-generation-inference:1.1.0 Docker image. The deployment also sets the environment variables required for the container to load the GPT-3 model and configure the inference server.

Create a Kubernetes Service:

Create a file named gpt3-service.yaml with the following content:

apiVersion: v1 kind: Service metadata: name: gpt3-service spec: selector: app: gpt3 ports: - port: 80 targetPort: 8080 type: LoadBalancer

This service exposes the gpt3 deployment on port 80 and creates a LoadBalancer type service to make the inference server accessible from outside the Kubernetes cluster.

Deploy to Kubernetes:

Apply the Kubernetes manifests using the kubectl command:

kubectl apply -f gpt3-deployment.yaml kubectl apply -f gpt3-service.yaml

Monitor the Deployment:

Monitor the deployment progress using the following commands:

kubectl get pods kubectl logs <pod_name>

Once the pod is running and the logs indicate that the model is loaded and ready, you can obtain the external IP address of the LoadBalancer service:

kubectl get service gpt3-service

Test the Deployment:

You can now send requests to the inference server using the external IP address and port obtained from the previous step. For example, using curl:

curl -X POST http://<external_ip>:80/generate -H 'Content-Type: application/json' -d '"inputs": "The quick brown fox", "parameters": "max_new_tokens": 50'

This command sends a text generation request to the GPT-3 inference server, asking it to continue the prompt “The quick brown fox” for up to 50 additional tokens.

Advanced topics you should be aware of

While the example above demonstrates a basic deployment of an LLM on Kubernetes, there are several advanced topics and considerations to explore:

_*]:min-w-0″ readability=”131.72387362124″>

1. Autoscaling

Kubernetes supports horizontal and vertical autoscaling, which can be beneficial for LLM deployments due to their variable computational demands. Horizontal autoscaling allows you to automatically scale the number of replicas (pods) based on metrics like CPU or memory utilization. Vertical autoscaling, on the other hand, allows you to dynamically adjust the resource requests and limits for your containers.

To enable autoscaling, you can use the Kubernetes Horizontal Pod Autoscaler (HPA) and Vertical Pod Autoscaler (VPA). These components monitor your deployment and automatically scale resources based on predefined rules and thresholds.

2. GPU Scheduling and Sharing

In scenarios where multiple LLM deployments or other GPU-intensive workloads are running on the same Kubernetes cluster, efficient GPU scheduling and sharing become crucial. Kubernetes provides several mechanisms to ensure fair and efficient GPU utilization, such as GPU device plugins, node selectors, and resource limits.

You can also leverage advanced GPU scheduling techniques like NVIDIA Multi-Instance GPU (MIG) or AMD Memory Pool Remapping (MPR) to virtualize GPUs and share them among multiple workloads.

3. Model Parallelism and Sharding

Some LLMs, particularly those with billions or trillions of parameters, may not fit entirely into the memory of a single GPU or even a single node. In such cases, you can employ model parallelism and sharding techniques to distribute the model across multiple GPUs or nodes.

Model parallelism involves splitting the model architecture into different components (e.g., encoder, decoder) and distributing them across multiple devices. Sharding, on the other hand, involves partitioning the model parameters and distributing them across multiple devices or nodes.

Kubernetes provides mechanisms like StatefulSets and Custom Resource Definitions (CRDs) to manage and orchestrate distributed LLM deployments with model parallelism and sharding.

4. Fine-tuning and Continuous Learning

In many cases, pre-trained LLMs may need to be fine-tuned or continuously trained on domain-specific data to improve their performance for specific tasks or domains. Kubernetes can facilitate this process by providing a scalable and resilient platform for running fine-tuning or continuous learning workloads.

You can leverage Kubernetes batch processing frameworks like Apache Spark or Kubeflow to run distributed fine-tuning or training jobs on your LLM models. Additionally, you can integrate your fine-tuned or continuously trained models with your inference deployments using Kubernetes mechanisms like rolling updates or blue/green deployments.

5. Monitoring and Observability

Monitoring and observability are crucial aspects of any production deployment, including LLM deployments on Kubernetes. Kubernetes provides built-in monitoring solutions like Prometheus and integrations with popular observability platforms like Grafana, Elasticsearch, and Jaeger.

You can monitor various metrics related to your LLM deployments, such as CPU and memory utilization, GPU usage, inference latency, and throughput. Additionally, you can collect and analyze application-level logs and traces to gain insights into the behavior and performance of your LLM models.

6. Security and Compliance

Depending on your use case and the sensitivity of the data involved, you may need to consider security and compliance aspects when deploying LLMs on Kubernetes. Kubernetes provides several features and integrations to enhance security, such as network policies, role-based access control (RBAC), secrets management, and integration with external security solutions like HashiCorp Vault or AWS Secrets Manager.

Additionally, if you’re deploying LLMs in regulated industries or handling sensitive data, you may need to ensure compliance with relevant standards and regulations, such as GDPR, HIPAA, or PCI-DSS.

7. Multi-Cloud and Hybrid Deployments

While this blog post focuses on deploying LLMs on a single Kubernetes cluster, you may need to consider multi-cloud or hybrid deployments in some scenarios. Kubernetes provides a consistent platform for deploying and managing applications across different cloud providers and on-premises data centers.

You can leverage Kubernetes federation or multi-cluster management tools like KubeFed or GKE Hub to manage and orchestrate LLM deployments across multiple Kubernetes clusters spanning different cloud providers or hybrid environments.

These advanced topics highlight the flexibility and scalability of Kubernetes for deploying and managing LLMs.

Conclusion

Deploying Large Language Models (LLMs) on Kubernetes offers numerous benefits, including scalability, resource management, high availability, and portability. By following the steps outlined in this technical blog, you can containerize your LLM application, define the necessary Kubernetes resources, and deploy it to a Kubernetes cluster.

However, deploying LLMs on Kubernetes is just the first step. As your application grows and your requirements evolve, you may need to explore advanced topics such as autoscaling, GPU scheduling, model parallelism, fine-tuning, monitoring, security, and multi-cloud deployments.

Kubernetes provides a robust and extensible platform for deploying and managing LLMs, enabling you to build reliable, scalable, and secure applications.

#access control#Amazon#Amazon Elastic Kubernetes Service#amd#Apache#Apache Spark#app#applications#apps#architecture#Artificial Intelligence#attention#AWS#azure#Behavior#BERT#Blog#Blue#Building#chatbots#Cloud#cloud platform#cloud providers#cluster#clusters#code#command#Community#compliance#comprehensive

0 notes

Text

Kubescape 3.0 elevates open-source Kubernetes security - Help Net Security

Targeted at the DevSecOps practitioner or platform engineer, Kubescape, the open-source Kubernetes security platform has reached version 3.0. Vulnerability scan results Kubescape 3.0 features Kubescape 3.0 adds new features that make it easier for organizations to secure their Kubernetes clusters, including: Compliance and container scan results stored as Kubernetes resources inside API objects:…

View On WordPress

0 notes

Text

Rafay Systems Named As A Cool Vendor In The 2023 Gartner Cool Vendors In Container Management

Rafay Systems, a prominent platform provider for Kubernetes management and operations, has recently earned recognition as a "Cool Vendor" in the 2023 Gartner Cool Vendors in Container Management report. This recognition underscores Rafay's commitment to enabling enterprises to accelerate digital transformation initiatives and enhance developer productivity while ensuring the necessary controls for enterprise-wide adoption.

One of the key aspects of Rafay's approach is its SaaS-first methodology, which empowers enterprise platform teams to streamline complex Kubernetes infrastructure operations across both private and public cloud environments. This efficiency boost aids developers and data scientists in bringing new applications and features to market more rapidly. Gartner's research indicates a growing trend, predicting that "by 2027, more than 90% of G2000 organizations running containerized applications in hybrid deployments will be leveraging container management tooling, up from fewer than 20% in 2023."

Haseeb Budhani, the CEO and co-founder of Rafay Systems, expressed pride in being recognized as a Gartner Cool Vendor, emphasizing that this acknowledgment validates Rafay's mission to enable enterprises to deliver innovative applications quickly. Rafay's Kubernetes Operations Platform aligns with the fast pace of innovation by providing self-service capabilities for developers and data scientists while offering the automation, standardization, and governance that platform teams require.

Read More - https://bit.ly/3RpOFe9

#Rafay Systems#Kubernetes Management And Operations#Kubernetes Operations Platform#Container Management

0 notes

Photo

Upbound Spaces brings managed control planes to self-hosted computing environments Upbound Inc., the startup behind the popular open-source Crossplane project, today announced a new self-hosting feature for its flagship control plane technology, enabling users to deploy managed control planes in self-managed computing environments. Upbound Spaces enables customers with rigorous compliance and data sovereignty requirements to benefit from the company’s Crossplane control plane technology. The launch of […] The post Upbound Spaces brings managed control planes to self-hosted computing environments appeared first on SiliconANGLE. https://siliconangle.com/2023/09/06/upbound-spaces-brings-managed-control-planes-self-hosted-computing-environments/

#Cloud#NEWS#The-Latest#applications#cloud-native technologies#control plane#Crossplane#internal development platforms#Kubernetes#managed control planes#managed environments#multicloud#self-hosted environments#Upbound#Upbound Spaces#Mike Wheatley#SiliconANGLE

0 notes

Text

Want to learn the secret of #CloudNative #Development WITHOUT #Kubernetes ⁉

Then check out my session VMware Explore Las Vegas!! Register here

#developer#platform engineering#springboot#vmware#vmwaretanzu#kubernetes#spring#vmwareexplore#SpringOne#CloudFoundry

0 notes

Text

using git and a … wikipedia says that the generic term for what github and gitlab are is 'software forge' for document editing is pretty great

you can use your favorite text editor! you can track a multi-file project! the default workflow encourages you to keep copies both on your computer and in the cloud! you can log what changes you're making!

if you accidentally edit with the wrong account you can go edit the history, it'll be a pain but you can.

the other options for "edit a document and show it to your friends as you edit" are, like:

google docs. it's a weird proprietary format and if you export to html it will be a horrible mess of html that needs cleanup. you don't by default end up with your file constantly up to date on your computer. it's super easy to end up viewing a doc with the wrong account.

edit in some platform on your computer and upload files to share them with your friends. you will have to upload the files a lot to lots of people if you want to keep them all up to date.

use some weird other web text service. it might also randomly go down and delete everything and there's way less of a robust advice ecosystem

unfortunately if you use a software forge they might ask you if you want to add a kubernetes cluster. and also they often won't enable word wrap on plaintext.

(im currently writing stuff on gitgud.io which has a fairly lenient ToS)

7 notes

·

View notes

Text

Ubuntu is a popular open-source operating system based on the Linux kernel. It's known for its user-friendliness, stability, and security, making it a great choice for both beginners and experienced users. Ubuntu can be used for a variety of purposes, including:

Key Features and Uses of Ubuntu:

Desktop Environment: Ubuntu offers a modern, intuitive desktop environment that is easy to navigate. It comes with a set of pre-installed applications for everyday tasks like web browsing, email, and office productivity.

Development: Ubuntu is widely used by developers due to its robust development tools, package management system, and support for programming languages like Python, Java, and C++.

Servers: Ubuntu Server is a popular choice for hosting websites, databases, and other server applications. It's known for its performance, security, and ease of use.

Cloud Computing: Ubuntu is a preferred operating system for cloud environments, supporting platforms like OpenStack and Kubernetes for managing cloud infrastructure.

Education: Ubuntu is used in educational institutions for teaching computer science and IT courses. It's free and has a vast repository of educational software.

Customization: Users can customize their Ubuntu installation to fit their specific needs, with a variety of desktop environments, themes, and software available.

Installing Ubuntu on Windows:

The image you shared shows that you are installing Ubuntu using the Windows Subsystem for Linux (WSL). This allows you to run Ubuntu natively on your Windows machine, giving you the best of both worlds.

Benefits of Ubuntu:

Free and Open-Source: Ubuntu is free to use and open-source, meaning anyone can contribute to its development.

Regular Updates: Ubuntu receives regular updates to ensure security and performance.

Large Community: Ubuntu has a large, active community that provides support and contributes to its development.

4 notes

·

View notes

Text

Build the Future of Tech: Enroll in the Leading DevOps Course Online Today

In a global economy where speed, security, and scalability are parameters of success, DevOps has emerged as the pulsating core of contemporary IT operations. Businesses are not recruiting either developers or sysadmins anymore—employers need DevOps individuals who can seamlessly integrate both worlds.

If you're willing to accelerate your career and become irreplaceable in the tech world, then now is the ideal time to sign up for Devops Course Online. And ReferMe Group's AWS DevOps Course is the one to take you there—quicker.

Why DevOps? Why Now?

The need for DevOps professionals is growing like crazy. As per current industry reports, job titles such as DevOps Engineer, Cloud Architect, and Site Reliability Engineer are among the best-paying and safest careers in technology today.

Why? Because DevOps helps businesses to:

Deploy faster using continuous integration and delivery (CI/CD)

Boost reliability and uptime

Automate everything-from infrastructure to testing

Scale apps with ease on cloud platforms like AWS

And individuals who develop these skills are rapidly becoming the pillars of today's tech teams.

Why Learn a DevOps Online?

Learning DevOps online provides more than convenience—it provides liberation. As a full-time professional, student, or career changer, online learning allows you:

✅ To learn at your own pace

✅ To access world-class instructors anywhere

✅ To develop real-world, project-based skills

✅ To prepare for globally recognized certifications

✅ J To join a growing network of DevOps learners and mentors

It’s professional-grade training—without the classroom limitations.

What Makes ReferMe Group’s DevOps Course Stand Out?

The AWS DevOps Course from ReferMe Group isn’t just a course—it’s a career accelerator. Here's what sets it apart:

Hands-On Labs & Projects: You’ll work on live AWS environments and build end-to-end DevOps pipelines using tools like Jenkins, Docker, Terraform, Git, Kubernetes, and more.

Training from Experts: Learn from experienced industry experts who have used DevOps at scale.

Resume-Reinforcing Certifications: Train to clear AWS and DevOps certification exams confidently.

Career Guidance: From resume creation to interview preparation, we prepare you for jobs, not course completion.

Lifetime Access: Come back to the content anytime with future upgrades covered.

Who Should Take This Course?

This DevOps course is ideal for:

Software Developers looking to move into deployment and automation

IT Professionals who want to upskill in cloud infrastructure

System Admins transitioning to new-age DevOps careers

Career changers entering the high-demand cloud and DevOps space

Students and recent graduates seeking a future-proof skill set

No experience in DevOps? No worries. We take you from the basics to advanced tools.

Final Thoughts: Your DevOps Journey Starts Here

As businesses continue to move to the cloud and automate their pipelines, DevOps engineers are no longer a nicety—they're a necessity. Investing in a high-quality DevOps course online provides you with the skills, certification, and confidence to compete and succeed in today's tech industry.

Start building your future today.

Join ReferMe Group's AWS DevOps Course today and become the architect of tomorrow's technology.

2 notes

·

View notes

Text

How Python Powers Scalable and Cost-Effective Cloud Solutions

Explore the role of Python in developing scalable and cost-effective cloud solutions. This guide covers Python's advantages in cloud computing, addresses potential challenges, and highlights real-world applications, providing insights into leveraging Python for efficient cloud development.

Introduction

In today's rapidly evolving digital landscape, businesses are increasingly leveraging cloud computing to enhance scalability, optimize costs, and drive innovation. Among the myriad of programming languages available, Python has emerged as a preferred choice for developing robust cloud solutions. Its simplicity, versatility, and extensive library support make it an ideal candidate for cloud-based applications.

In this comprehensive guide, we will delve into how Python empowers scalable and cost-effective cloud solutions, explore its advantages, address potential challenges, and highlight real-world applications.

Why Python is the Preferred Choice for Cloud Computing?

Python's popularity in cloud computing is driven by several factors, making it the preferred language for developing and managing cloud solutions. Here are some key reasons why Python stands out:

Simplicity and Readability: Python's clean and straightforward syntax allows developers to write and maintain code efficiently, reducing development time and costs.

Extensive Library Support: Python offers a rich set of libraries and frameworks like Django, Flask, and FastAPI for building cloud applications.

Seamless Integration with Cloud Services: Python is well-supported across major cloud platforms like AWS, Azure, and Google Cloud.

Automation and DevOps Friendly: Python supports infrastructure automation with tools like Ansible, Terraform, and Boto3.

Strong Community and Enterprise Adoption: Python has a massive global community that continuously improves and innovates cloud-related solutions.

How Python Enables Scalable Cloud Solutions?

Scalability is a critical factor in cloud computing, and Python provides multiple ways to achieve it:

1. Automation of Cloud Infrastructure

Python's compatibility with cloud service provider SDKs, such as AWS Boto3, Azure SDK for Python, and Google Cloud Client Library, enables developers to automate the provisioning and management of cloud resources efficiently.

2. Containerization and Orchestration

Python integrates seamlessly with Docker and Kubernetes, enabling businesses to deploy scalable containerized applications efficiently.

3. Cloud-Native Development

Frameworks like Flask, Django, and FastAPI support microservices architecture, allowing businesses to develop lightweight, scalable cloud applications.

4. Serverless Computing

Python's support for serverless platforms, including AWS Lambda, Azure Functions, and Google Cloud Functions, allows developers to build applications that automatically scale in response to demand, optimizing resource utilization and cost.

5. AI and Big Data Scalability

Python’s dominance in AI and data science makes it an ideal choice for cloud-based AI/ML services like AWS SageMaker, Google AI, and Azure Machine Learning.

Looking for expert Python developers to build scalable cloud solutions? Hire Python Developers now!

Advantages of Using Python for Cloud Computing

Cost Efficiency: Python’s compatibility with serverless computing and auto-scaling strategies minimizes cloud costs.

Faster Development: Python’s simplicity accelerates cloud application development, reducing time-to-market.

Cross-Platform Compatibility: Python runs seamlessly across different cloud platforms.

Security and Reliability: Python-based security tools help in encryption, authentication, and cloud monitoring.

Strong Community Support: Python developers worldwide contribute to continuous improvements, making it future-proof.

Challenges and Considerations

While Python offers many benefits, there are some challenges to consider:

Performance Limitations: Python is an interpreted language, which may not be as fast as compiled languages like Java or C++.

Memory Consumption: Python applications might require optimization to handle large-scale cloud workloads efficiently.

Learning Curve for Beginners: Though Python is simple, mastering cloud-specific frameworks requires time and expertise.

Python Libraries and Tools for Cloud Computing

Python’s ecosystem includes powerful libraries and tools tailored for cloud computing, such as:

Boto3: AWS SDK for Python, used for cloud automation.

Google Cloud Client Library: Helps interact with Google Cloud services.

Azure SDK for Python: Enables seamless integration with Microsoft Azure.

Apache Libcloud: Provides a unified interface for multiple cloud providers.

PyCaret: Simplifies machine learning deployment in cloud environments.

Real-World Applications of Python in Cloud Computing

1. Netflix - Scalable Streaming with Python

Netflix extensively uses Python for automation, data analysis, and managing cloud infrastructure, enabling seamless content delivery to millions of users.

2. Spotify - Cloud-Based Music Streaming

Spotify leverages Python for big data processing, recommendation algorithms, and cloud automation, ensuring high availability and scalability.

3. Reddit - Handling Massive Traffic

Reddit uses Python and AWS cloud solutions to manage heavy traffic while optimizing server costs efficiently.

Future of Python in Cloud Computing

The future of Python in cloud computing looks promising with emerging trends such as:

AI-Driven Cloud Automation: Python-powered AI and machine learning will drive intelligent cloud automation.

Edge Computing: Python will play a crucial role in processing data at the edge for IoT and real-time applications.

Hybrid and Multi-Cloud Strategies: Python’s flexibility will enable seamless integration across multiple cloud platforms.

Increased Adoption of Serverless Computing: More enterprises will adopt Python for cost-effective serverless applications.

Conclusion

Python's simplicity, versatility, and robust ecosystem make it a powerful tool for developing scalable and cost-effective cloud solutions. By leveraging Python's capabilities, businesses can enhance their cloud applications' performance, flexibility, and efficiency.

Ready to harness the power of Python for your cloud solutions? Explore our Python Development Services to discover how we can assist you in building scalable and efficient cloud applications.

FAQs

1. Why is Python used in cloud computing?

Python is widely used in cloud computing due to its simplicity, extensive libraries, and seamless integration with cloud platforms like AWS, Google Cloud, and Azure.

2. Is Python good for serverless computing?

Yes! Python works efficiently in serverless environments like AWS Lambda, Azure Functions, and Google Cloud Functions, making it an ideal choice for cost-effective, auto-scaling applications.

3. Which companies use Python for cloud solutions?

Major companies like Netflix, Spotify, Dropbox, and Reddit use Python for cloud automation, AI, and scalable infrastructure management.

4. How does Python help with cloud security?

Python offers robust security libraries like PyCryptodome and OpenSSL, enabling encryption, authentication, and cloud monitoring for secure cloud applications.

5. Can Python handle big data in the cloud?

Yes! Python supports big data processing with tools like Apache Spark, Pandas, and NumPy, making it suitable for data-driven cloud applications.

#Python development company#Python in Cloud Computing#Hire Python Developers#Python for Multi-Cloud Environments

2 notes

·

View notes

Text

Top 10 In- Demand Tech Jobs in 2025

Technology is growing faster than ever, and so is the need for skilled professionals in the field. From artificial intelligence to cloud computing, businesses are looking for experts who can keep up with the latest advancements. These tech jobs not only pay well but also offer great career growth and exciting challenges.

In this blog, we’ll look at the top 10 tech jobs that are in high demand today. Whether you’re starting your career or thinking of learning new skills, these jobs can help you plan a bright future in the tech world.

1. AI and Machine Learning Specialists

Artificial Intelligence (AI) and Machine Learning are changing the game by helping machines learn and improve on their own without needing step-by-step instructions. They’re being used in many areas, like chatbots, spotting fraud, and predicting trends.

Key Skills: Python, TensorFlow, PyTorch, data analysis, deep learning, and natural language processing (NLP).

Industries Hiring: Healthcare, finance, retail, and manufacturing.

Career Tip: Keep up with AI and machine learning by working on projects and getting an AI certification. Joining AI hackathons helps you learn and meet others in the field.

2. Data Scientists

Data scientists work with large sets of data to find patterns, trends, and useful insights that help businesses make smart decisions. They play a key role in everything from personalized marketing to predicting health outcomes.

Key Skills: Data visualization, statistical analysis, R, Python, SQL, and data mining.

Industries Hiring: E-commerce, telecommunications, and pharmaceuticals.

Career Tip: Work with real-world data and build a strong portfolio to showcase your skills. Earning certifications in data science tools can help you stand out.

3. Cloud Computing Engineers: These professionals create and manage cloud systems that allow businesses to store data and run apps without needing physical servers, making operations more efficient.

Key Skills: AWS, Azure, Google Cloud Platform (GCP), DevOps, and containerization (Docker, Kubernetes).

Industries Hiring: IT services, startups, and enterprises undergoing digital transformation.

Career Tip: Get certified in cloud platforms like AWS (e.g., AWS Certified Solutions Architect).

4. Cybersecurity Experts

Cybersecurity professionals protect companies from data breaches, malware, and other online threats. As remote work grows, keeping digital information safe is more crucial than ever.

Key Skills: Ethical hacking, penetration testing, risk management, and cybersecurity tools.

Industries Hiring: Banking, IT, and government agencies.

Career Tip: Stay updated on new cybersecurity threats and trends. Certifications like CEH (Certified Ethical Hacker) or CISSP (Certified Information Systems Security Professional) can help you advance in your career.

5. Full-Stack Developers

Full-stack developers are skilled programmers who can work on both the front-end (what users see) and the back-end (server and database) of web applications.

Key Skills: JavaScript, React, Node.js, HTML/CSS, and APIs.

Industries Hiring: Tech startups, e-commerce, and digital media.

Career Tip: Create a strong GitHub profile with projects that highlight your full-stack skills. Learn popular frameworks like React Native to expand into mobile app development.

6. DevOps Engineers

DevOps engineers help make software faster and more reliable by connecting development and operations teams. They streamline the process for quicker deployments.

Key Skills: CI/CD pipelines, automation tools, scripting, and system administration.

Industries Hiring: SaaS companies, cloud service providers, and enterprise IT.

Career Tip: Earn key tools like Jenkins, Ansible, and Kubernetes, and develop scripting skills in languages like Bash or Python. Earning a DevOps certification is a plus and can enhance your expertise in the field.

7. Blockchain Developers

They build secure, transparent, and unchangeable systems. Blockchain is not just for cryptocurrencies; it’s also used in tracking supply chains, managing healthcare records, and even in voting systems.

Key Skills: Solidity, Ethereum, smart contracts, cryptography, and DApp development.

Industries Hiring: Fintech, logistics, and healthcare.

Career Tip: Create and share your own blockchain projects to show your skills. Joining blockchain communities can help you learn more and connect with others in the field.

8. Robotics Engineers

Robotics engineers design, build, and program robots to do tasks faster or safer than humans. Their work is especially important in industries like manufacturing and healthcare.

Key Skills: Programming (C++, Python), robotics process automation (RPA), and mechanical engineering.

Industries Hiring: Automotive, healthcare, and logistics.

Career Tip: Stay updated on new trends like self-driving cars and AI in robotics.

9. Internet of Things (IoT) Specialists

IoT specialists work on systems that connect devices to the internet, allowing them to communicate and be controlled easily. This is crucial for creating smart cities, homes, and industries.

Key Skills: Embedded systems, wireless communication protocols, data analytics, and IoT platforms.

Industries Hiring: Consumer electronics, automotive, and smart city projects.

Career Tip: Create IoT prototypes and learn to use platforms like AWS IoT or Microsoft Azure IoT. Stay updated on 5G technology and edge computing trends.

10. Product Managers

Product managers oversee the development of products, from idea to launch, making sure they are both technically possible and meet market demands. They connect technical teams with business stakeholders.

Key Skills: Agile methodologies, market research, UX design, and project management.

Industries Hiring: Software development, e-commerce, and SaaS companies.

Career Tip: Work on improving your communication and leadership skills. Getting certifications like PMP (Project Management Professional) or CSPO (Certified Scrum Product Owner) can help you advance.

Importance of Upskilling in the Tech Industry

Stay Up-to-Date: Technology changes fast, and learning new skills helps you keep up with the latest trends and tools.

Grow in Your Career: By learning new skills, you open doors to better job opportunities and promotions.

Earn a Higher Salary: The more skills you have, the more valuable you are to employers, which can lead to higher-paying jobs.

Feel More Confident: Learning new things makes you feel more prepared and ready to take on tougher tasks.

Adapt to Changes: Technology keeps evolving, and upskilling helps you stay flexible and ready for any new changes in the industry.

Top Companies Hiring for These Roles

Global Tech Giants: Google, Microsoft, Amazon, and IBM.

Startups: Fintech, health tech, and AI-based startups are often at the forefront of innovation.

Consulting Firms: Companies like Accenture, Deloitte, and PwC increasingly seek tech talent.

In conclusion, the tech world is constantly changing, and staying updated is key to having a successful career. In 2025, jobs in fields like AI, cybersecurity, data science, and software development will be in high demand. By learning the right skills and keeping up with new trends, you can prepare yourself for these exciting roles. Whether you're just starting or looking to improve your skills, the tech industry offers many opportunities for growth and success.

#Top 10 Tech Jobs in 2025#In- Demand Tech Jobs#High paying Tech Jobs#artificial intelligence#datascience#cybersecurity

2 notes

·

View notes

Text

Top Trends in Software Development for 2025

The software development industry is evolving at an unprecedented pace, driven by advancements in technology and the increasing demands of businesses and consumers alike. As we step into 2025, staying ahead of the curve is essential for businesses aiming to remain competitive. Here, we explore the top trends shaping the software development landscape and how they impact businesses. For organizations seeking cutting-edge solutions, partnering with the Best Software Development Company in Vadodara, Gujarat, or India can make all the difference.

1. Artificial Intelligence and Machine Learning Integration:

Artificial Intelligence (AI) and Machine Learning (ML) are no longer optional but integral to modern software development. From predictive analytics to personalized user experiences, AI and ML are driving innovation across industries. In 2025, expect AI-powered tools to streamline development processes, improve testing, and enhance decision-making.

Businesses in Gujarat and beyond are leveraging AI to gain a competitive edge. Collaborating with the Best Software Development Company in Gujarat ensures access to AI-driven solutions tailored to specific industry needs.

2. Low-Code and No-Code Development Platforms:

The demand for faster development cycles has led to the rise of low-code and no-code platforms. These platforms empower non-technical users to create applications through intuitive drag-and-drop interfaces, significantly reducing development time and cost.

For startups and SMEs in Vadodara, partnering with the Best Software Development Company in Vadodara ensures access to these platforms, enabling rapid deployment of business applications without compromising quality.

3. Cloud-Native Development:

Cloud-native technologies, including Kubernetes and microservices, are becoming the backbone of modern applications. By 2025, cloud-native development will dominate, offering scalability, resilience, and faster time-to-market.

The Best Software Development Company in India can help businesses transition to cloud-native architectures, ensuring their applications are future-ready and capable of handling evolving market demands.

4. Edge Computing:

As IoT devices proliferate, edge computing is emerging as a critical trend. Processing data closer to its source reduces latency and enhances real-time decision-making. This trend is particularly significant for industries like healthcare, manufacturing, and retail.

Organizations seeking to leverage edge computing can benefit from the expertise of the Best Software Development Company in Gujarat, which specializes in creating applications optimized for edge environments.

5. Cybersecurity by Design:

With the increasing sophistication of cyber threats, integrating security into the development process has become non-negotiable. Cybersecurity by design ensures that applications are secure from the ground up, reducing vulnerabilities and protecting sensitive data.

The Best Software Development Company in Vadodara prioritizes cybersecurity, providing businesses with robust, secure software solutions that inspire trust among users.

6. Blockchain Beyond Cryptocurrencies:

Blockchain technology is expanding beyond cryptocurrencies into areas like supply chain management, identity verification, and smart contracts. In 2025, blockchain will play a pivotal role in creating transparent, tamper-proof systems.

Partnering with the Best Software Development Company in India enables businesses to harness blockchain technology for innovative applications that drive efficiency and trust.

7. Progressive Web Apps (PWAs):

Progressive Web Apps (PWAs) combine the best features of web and mobile applications, offering seamless experiences across devices. PWAs are cost-effective and provide offline capabilities, making them ideal for businesses targeting diverse audiences.

The Best Software Development Company in Gujarat can develop PWAs tailored to your business needs, ensuring enhanced user engagement and accessibility.

8. Internet of Things (IoT) Expansion:

IoT continues to transform industries by connecting devices and enabling smarter decision-making. From smart homes to industrial IoT, the possibilities are endless. In 2025, IoT solutions will become more sophisticated, integrating AI and edge computing for enhanced functionality.

For businesses in Vadodara and beyond, collaborating with the Best Software Development Company in Vadodara ensures access to innovative IoT solutions that drive growth and efficiency.

9. DevSecOps:

DevSecOps integrates security into the DevOps pipeline, ensuring that security is a shared responsibility throughout the development lifecycle. This approach reduces vulnerabilities and ensures compliance with industry standards.

The Best Software Development Company in India can help implement DevSecOps practices, ensuring that your applications are secure, scalable, and compliant.

10. Sustainability in Software Development:

Sustainability is becoming a priority in software development. Green coding practices, energy-efficient algorithms, and sustainable cloud solutions are gaining traction. By adopting these practices, businesses can reduce their carbon footprint and appeal to environmentally conscious consumers.

Working with the Best Software Development Company in Gujarat ensures access to sustainable software solutions that align with global trends.

11. 5G-Driven Applications:

The rollout of 5G networks is unlocking new possibilities for software development. Ultra-fast connectivity and low latency are enabling applications like augmented reality (AR), virtual reality (VR), and autonomous vehicles.

The Best Software Development Company in Vadodara is at the forefront of leveraging 5G technology to create innovative applications that redefine user experiences.

12. Hyperautomation:

Hyperautomation combines AI, ML, and robotic process automation (RPA) to automate complex business processes. By 2025, hyperautomation will become a key driver of efficiency and cost savings across industries.

Partnering with the Best Software Development Company in India ensures access to hyperautomation solutions that streamline operations and boost productivity.

13. Augmented Reality (AR) and Virtual Reality (VR):

AR and VR technologies are transforming industries like gaming, education, and healthcare. In 2025, these technologies will become more accessible, offering immersive experiences that enhance learning, entertainment, and training.

The Best Software Development Company in Gujarat can help businesses integrate AR and VR into their applications, creating unique and engaging user experiences.

Conclusion:

The software development industry is poised for significant transformation in 2025, driven by trends like AI, cloud-native development, edge computing, and hyperautomation. Staying ahead of these trends requires expertise, innovation, and a commitment to excellence.

For businesses in Vadodara, Gujarat, or anywhere in India, partnering with the Best Software Development Company in Vadodara, Gujarat, or India ensures access to cutting-edge solutions that drive growth and success. By embracing these trends, businesses can unlock new opportunities and remain competitive in an ever-evolving digital landscape.

#Best Software Development Company in Vadodara#Best Software Development Company in Gujarat#Best Software Development Company in India#nividasoftware

5 notes

·

View notes

Text

A3 Ultra VMs With NVIDIA H200 GPUs Pre-launch This Month

Strong infrastructure advancements for your future that prioritizes AI

To increase customer performance, usability, and cost-effectiveness, Google Cloud implemented improvements throughout the AI Hypercomputer stack this year. Google Cloud at the App Dev & Infrastructure Summit:

Trillium, Google’s sixth-generation TPU, is currently available for preview.

Next month, A3 Ultra VMs with NVIDIA H200 Tensor Core GPUs will be available for preview.

Google’s new, highly scalable clustering system, Hypercompute Cluster, will be accessible beginning with A3 Ultra VMs.

Based on Axion, Google’s proprietary Arm processors, C4A virtual machines (VMs) are now widely accessible

AI workload-focused additions to Titanium, Google Cloud’s host offload capability, and Jupiter, its data center network.

Google Cloud’s AI/ML-focused block storage service, Hyperdisk ML, is widely accessible.

Trillium A new era of TPU performance

Trillium A new era of TPU performance is being ushered in by TPUs, which power Google’s most sophisticated models like Gemini, well-known Google services like Maps, Photos, and Search, as well as scientific innovations like AlphaFold 2, which was just awarded a Nobel Prize! We are happy to inform that Google Cloud users can now preview Trillium, our sixth-generation TPU.

Taking advantage of NVIDIA Accelerated Computing to broaden perspectives

By fusing the best of Google Cloud’s data center, infrastructure, and software skills with the NVIDIA AI platform which is exemplified by A3 and A3 Mega VMs powered by NVIDIA H100 Tensor Core GPUs it also keeps investing in its partnership and capabilities with NVIDIA.

Google Cloud announced that the new A3 Ultra VMs featuring NVIDIA H200 Tensor Core GPUs will be available on Google Cloud starting next month.

Compared to earlier versions, A3 Ultra VMs offer a notable performance improvement. Their foundation is NVIDIA ConnectX-7 network interface cards (NICs) and servers equipped with new Titanium ML network adapter, which is tailored to provide a safe, high-performance cloud experience for AI workloads. A3 Ultra VMs provide non-blocking 3.2 Tbps of GPU-to-GPU traffic using RDMA over Converged Ethernet (RoCE) when paired with our datacenter-wide 4-way rail-aligned network.

In contrast to A3 Mega, A3 Ultra provides:

With the support of Google’s Jupiter data center network and Google Cloud’s Titanium ML network adapter, double the GPU-to-GPU networking bandwidth

With almost twice the memory capacity and 1.4 times the memory bandwidth, LLM inferencing performance can increase by up to 2 times.

Capacity to expand to tens of thousands of GPUs in a dense cluster with performance optimization for heavy workloads in HPC and AI.

Google Kubernetes Engine (GKE), which offers an open, portable, extensible, and highly scalable platform for large-scale training and AI workloads, will also offer A3 Ultra VMs.

Hypercompute Cluster: Simplify and expand clusters of AI accelerators

It’s not just about individual accelerators or virtual machines, though; when dealing with AI and HPC workloads, you have to deploy, maintain, and optimize a huge number of AI accelerators along with the networking and storage that go along with them. This may be difficult and time-consuming. For this reason, Google Cloud is introducing Hypercompute Cluster, which simplifies the provisioning of workloads and infrastructure as well as the continuous operations of AI supercomputers with tens of thousands of accelerators.

Fundamentally, Hypercompute Cluster integrates the most advanced AI infrastructure technologies from Google Cloud, enabling you to install and operate several accelerators as a single, seamless unit. You can run your most demanding AI and HPC workloads with confidence thanks to Hypercompute Cluster’s exceptional performance and resilience, which includes features like targeted workload placement, dense resource co-location with ultra-low latency networking, and sophisticated maintenance controls to reduce workload disruptions.

For dependable and repeatable deployments, you can use pre-configured and validated templates to build up a Hypercompute Cluster with just one API call. This include containerized software with orchestration (e.g., GKE, Slurm), framework and reference implementations (e.g., JAX, PyTorch, MaxText), and well-known open models like Gemma2 and Llama3. As part of the AI Hypercomputer architecture, each pre-configured template is available and has been verified for effectiveness and performance, allowing you to concentrate on business innovation.

A3 Ultra VMs will be the first Hypercompute Cluster to be made available next month.

An early look at the NVIDIA GB200 NVL72

Google Cloud is also awaiting the developments made possible by NVIDIA GB200 NVL72 GPUs, and we’ll be providing more information about this fascinating improvement soon. Here is a preview of the racks Google constructing in the meantime to deliver the NVIDIA Blackwell platform’s performance advantages to Google Cloud’s cutting-edge, environmentally friendly data centers in the early months of next year.

Redefining CPU efficiency and performance with Google Axion Processors

CPUs are a cost-effective solution for a variety of general-purpose workloads, and they are frequently utilized in combination with AI workloads to produce complicated applications, even if TPUs and GPUs are superior at specialized jobs. Google Axion Processors, its first specially made Arm-based CPUs for the data center, at Google Cloud Next ’24. Customers using Google Cloud may now benefit from C4A virtual machines, the first Axion-based VM series, which offer up to 10% better price-performance compared to the newest Arm-based instances offered by other top cloud providers.

Additionally, compared to comparable current-generation x86-based instances, C4A offers up to 60% more energy efficiency and up to 65% better price performance for general-purpose workloads such as media processing, AI inferencing applications, web and app servers, containerized microservices, open-source databases, in-memory caches, and data analytics engines.

Titanium and Jupiter Network: Making AI possible at the speed of light

Titanium, the offload technology system that supports Google’s infrastructure, has been improved to accommodate workloads related to artificial intelligence. Titanium provides greater compute and memory resources for your applications by lowering the host’s processing overhead through a combination of on-host and off-host offloads. Furthermore, although Titanium’s fundamental features can be applied to AI infrastructure, the accelerator-to-accelerator performance needs of AI workloads are distinct.

Google has released a new Titanium ML network adapter to address these demands, which incorporates and expands upon NVIDIA ConnectX-7 NICs to provide further support for virtualization, traffic encryption, and VPCs. The system offers best-in-class security and infrastructure management along with non-blocking 3.2 Tbps of GPU-to-GPU traffic across RoCE when combined with its data center’s 4-way rail-aligned network.

Google’s Jupiter optical circuit switching network fabric and its updated data center network significantly expand Titanium’s capabilities. With native 400 Gb/s link rates and a total bisection bandwidth of 13.1 Pb/s (a practical bandwidth metric that reflects how one half of the network can connect to the other), Jupiter could handle a video conversation for every person on Earth at the same time. In order to meet the increasing demands of AI computation, this enormous scale is essential.

Hyperdisk ML is widely accessible

For computing resources to continue to be effectively utilized, system-level performance maximized, and economical, high-performance storage is essential. Google launched its AI-powered block storage solution, Hyperdisk ML, in April 2024. Now widely accessible, it adds dedicated storage for AI and HPC workloads to the networking and computing advancements.

Hyperdisk ML efficiently speeds up data load times. It drives up to 11.9x faster model load time for inference workloads and up to 4.3x quicker training time for training workloads.

With 1.2 TB/s of aggregate throughput per volume, you may attach 2500 instances to the same volume. This is more than 100 times more than what big block storage competitors are giving.

Reduced accelerator idle time and increased cost efficiency are the results of shorter data load times.

Multi-zone volumes are now automatically created for your data by GKE. In addition to quicker model loading with Hyperdisk ML, this enables you to run across zones for more computing flexibility (such as lowering Spot preemption).

Developing AI’s future

Google Cloud enables companies and researchers to push the limits of AI innovation with these developments in AI infrastructure. It anticipates that this strong foundation will give rise to revolutionary new AI applications.

Read more on Govindhtech.com

#A3UltraVMs#NVIDIAH200#AI#Trillium#HypercomputeCluster#GoogleAxionProcessors#Titanium#News#Technews#Technology#Technologynews#Technologytrends#Govindhtech

2 notes

·

View notes

Text

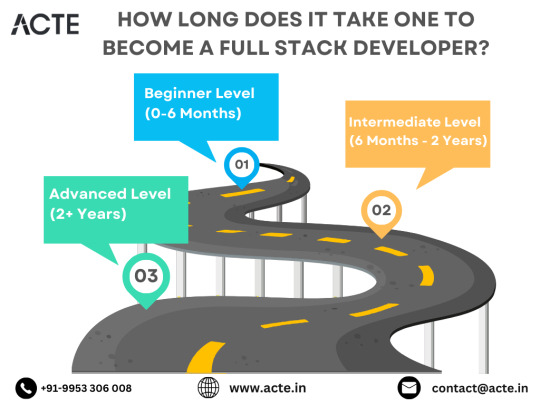

The Roadmap to Full Stack Developer Proficiency: A Comprehensive Guide

Embarking on the journey to becoming a full stack developer is an exhilarating endeavor filled with growth and challenges. Whether you're taking your first steps or seeking to elevate your skills, understanding the path ahead is crucial. In this detailed roadmap, we'll outline the stages of mastering full stack development, exploring essential milestones, competencies, and strategies to guide you through this enriching career journey.

Beginning the Journey: Novice Phase (0-6 Months)

As a novice, you're entering the realm of programming with a fresh perspective and eagerness to learn. This initial phase sets the groundwork for your progression as a full stack developer.

Grasping Programming Fundamentals:

Your journey commences with grasping the foundational elements of programming languages like HTML, CSS, and JavaScript. These are the cornerstone of web development and are essential for crafting dynamic and interactive web applications.

Familiarizing with Basic Data Structures and Algorithms:

To develop proficiency in programming, understanding fundamental data structures such as arrays, objects, and linked lists, along with algorithms like sorting and searching, is imperative. These concepts form the backbone of problem-solving in software development.

Exploring Essential Web Development Concepts:

During this phase, you'll delve into crucial web development concepts like client-server architecture, HTTP protocol, and the Document Object Model (DOM). Acquiring insights into the underlying mechanisms of web applications lays a strong foundation for tackling more intricate projects.

Advancing Forward: Intermediate Stage (6 Months - 2 Years)

As you progress beyond the basics, you'll transition into the intermediate stage, where you'll deepen your understanding and skills across various facets of full stack development.

Venturing into Backend Development:

In the intermediate stage, you'll venture into backend development, honing your proficiency in server-side languages like Node.js, Python, or Java. Here, you'll learn to construct robust server-side applications, manage data storage and retrieval, and implement authentication and authorization mechanisms.

Mastering Database Management:

A pivotal aspect of backend development is comprehending databases. You'll delve into relational databases like MySQL and PostgreSQL, as well as NoSQL databases like MongoDB. Proficiency in database management systems and design principles enables the creation of scalable and efficient applications.

Exploring Frontend Frameworks and Libraries:

In addition to backend development, you'll deepen your expertise in frontend technologies. You'll explore prominent frameworks and libraries such as React, Angular, or Vue.js, streamlining the creation of interactive and responsive user interfaces.

Learning Version Control with Git:

Version control is indispensable for collaborative software development. During this phase, you'll familiarize yourself with Git, a distributed version control system, to manage your codebase, track changes, and collaborate effectively with fellow developers.

Achieving Mastery: Advanced Phase (2+ Years)

As you ascend in your journey, you'll enter the advanced phase of full stack development, where you'll refine your skills, tackle intricate challenges, and delve into specialized domains of interest.

Designing Scalable Systems:

In the advanced stage, focus shifts to designing scalable systems capable of managing substantial volumes of traffic and data. You'll explore design patterns, scalability methodologies, and cloud computing platforms like AWS, Azure, or Google Cloud.

Embracing DevOps Practices:

DevOps practices play a pivotal role in contemporary software development. You'll delve into continuous integration and continuous deployment (CI/CD) pipelines, infrastructure as code (IaC), and containerization technologies such as Docker and Kubernetes.

Specializing in Niche Areas:

With experience, you may opt to specialize in specific domains of full stack development, whether it's frontend or backend development, mobile app development, or DevOps. Specialization enables you to deepen your expertise and pursue career avenues aligned with your passions and strengths.

Conclusion:

Becoming a proficient full stack developer is a transformative journey that demands dedication, resilience, and perpetual learning. By following the roadmap outlined in this guide and maintaining a curious and adaptable mindset, you'll navigate the complexities and opportunities inherent in the realm of full stack development. Remember, mastery isn't merely about acquiring technical skills but also about fostering collaboration, embracing innovation, and contributing meaningfully to the ever-evolving landscape of technology.

#full stack developer#education#information#full stack web development#front end development#frameworks#web development#backend#full stack developer course#technology

9 notes

·

View notes

Text

What are the latest trends in the IT job market?

Introduction

The IT job market is changing quickly. This change is because of new technology, different employer needs, and more remote work.

For jobseekers, understanding these trends is crucial to positioning themselves as strong candidates in a highly competitive landscape.

This blog looks at the current IT job market. It offers insights into job trends and opportunities. You will also find practical strategies to improve your chances of getting your desired role.

Whether you’re in the midst of a job search or considering a career change, this guide will help you navigate the complexities of the job hunting process and secure employment in today’s market.

Section 1: Understanding the Current IT Job Market

Recent Trends in the IT Job Market

The IT sector is booming, with consistent demand for skilled professionals in various domains such as cybersecurity, cloud computing, and data science.

The COVID-19 pandemic accelerated the shift to remote work, further expanding the demand for IT roles that support this transformation.

Employers are increasingly looking for candidates with expertise in AI, machine learning, and DevOps as these technologies drive business innovation.

According to industry reports, job opportunities in IT will continue to grow, with the most substantial demand focused on software development, data analysis, and cloud architecture.

It’s essential for jobseekers to stay updated on these trends to remain competitive and tailor their skills to current market needs.

Recruitment efforts have also become more digitized, with many companies adopting virtual hiring processes and online job fairs.

This creates both challenges and opportunities for job seekers to showcase their talents and secure interviews through online platforms.

NOTE: Visit Now

Remote Work and IT

The surge in remote work opportunities has transformed the job market. Many IT companies now offer fully remote or hybrid roles, which appeal to professionals seeking greater flexibility.

While remote work has increased access to job opportunities, it has also intensified competition, as companies can now hire from a global talent pool.

Section 2: Choosing the Right Keywords for Your IT Resume

Keyword Optimization: Why It Matters

With more employers using Applicant Tracking Systems (ATS) to screen resumes, it’s essential for jobseekers to optimize their resumes with relevant keywords.

These systems scan resumes for specific words related to the job description and only advance the most relevant applications.

To increase the chances of your resume making it through the initial screening, jobseekers must identify and incorporate the right keywords into their resumes.

When searching for jobs in IT, it’s important to tailor your resume for specific job titles and responsibilities. Keywords like “software engineer,” “cloud computing,” “data security,” and “DevOps” can make a huge difference.

By strategically using keywords that reflect your skills, experience, and the job requirements, you enhance your resume’s visibility to hiring managers and recruitment software.

Step-by-Step Keyword Selection Process

Analyze Job Descriptions: Look at several job postings for roles you’re interested in and identify recurring terms.

Incorporate Specific Terms: Include technical terms related to your field (e.g., Python, Kubernetes, cloud infrastructure).

Use Action Verbs: Keywords like “developed,” “designed,” or “implemented” help demonstrate your experience in a tangible way.

Test Your Resume: Use online tools to see how well your resume aligns with specific job postings and make adjustments as necessary.

Section 3: Customizing Your Resume for Each Job Application

Why Customization is Key

One size does not fit all when it comes to resumes, especially in the IT industry. Jobseekers who customize their resumes for each job application are more likely to catch the attention of recruiters. Tailoring your resume allows you to emphasize the specific skills and experiences that align with the job description, making you a stronger candidate. Employers want to see that you’ve taken the time to understand their needs and that your expertise matches what they are looking for.

Key Areas to Customize:

Summary Section: Write a targeted summary that highlights your qualifications and goals in relation to the specific job you’re applying for.

Skills Section: Highlight the most relevant skills for the position, paying close attention to the technical requirements listed in the job posting.

Experience Section: Adjust your work experience descriptions to emphasize the accomplishments and projects that are most relevant to the job.

Education & Certifications: If certain qualifications or certifications are required, make sure they are easy to spot on your resume.

NOTE: Read More

Section 4: Reviewing and Testing Your Optimized Resume

Proofreading for Perfection

Before submitting your resume, it’s critical to review it for accuracy, clarity, and relevance. Spelling mistakes, grammatical errors, or outdated information can reflect poorly on your professionalism.

Additionally, make sure your resume is easy to read and visually organized, with clear headings and bullet points. If possible, ask a peer or mentor in the IT field to review your resume for content accuracy and feedback.

Testing Your Resume with ATS Tools

After making your resume keyword-optimized, test it using online tools that simulate ATS systems. This allows you to see how well your resume aligns with specific job descriptions and identify areas for improvement.

Many tools will give you a match score, showing you how likely your resume is to pass an ATS scan. From here, you can fine-tune your resume to increase its chances of making it to the recruiter’s desk.

Section 5: Trends Shaping the Future of IT Recruitment

Embracing Digital Recruitment

Recruiting has undergone a significant shift towards digital platforms, with job fairs, interviews, and onboarding now frequently taking place online.

This transition means that jobseekers must be comfortable navigating virtual job fairs, remote interviews, and online assessments.

As IT jobs increasingly allow remote work, companies are also using technology-driven recruitment tools like AI for screening candidates.

Jobseekers should also leverage platforms like LinkedIn to increase visibility in the recruitment space. Keeping your LinkedIn profile updated, networking with industry professionals, and engaging in online discussions can all boost your chances of being noticed by recruiters.

Furthermore, participating in virtual job fairs or IT recruitment events provides direct access to recruiters and HR professionals, enhancing your job hunt.

FAQs

1. How important are keywords in IT resumes?

Keywords are essential in IT resumes because they ensure your resume passes through Applicant Tracking Systems (ATS), which scans resumes for specific terms related to the job. Without the right keywords, your resume may not reach a human recruiter.

2. How often should I update my resume?

It’s a good idea to update your resume regularly, especially when you gain new skills or experience. Also, customize it for every job application to ensure it aligns with the job’s specific requirements.

3. What are the most in-demand IT jobs?

Some of the most in-demand IT jobs include software developers, cloud engineers, cybersecurity analysts, data scientists, and DevOps engineers.

4. How can I stand out in the current IT job market?

To stand out, jobseekers should focus on tailoring their resumes, building strong online profiles, networking, and keeping up-to-date with industry trends. Participation in online forums, attending webinars, and earning industry-relevant certifications can also enhance visibility.

Conclusion

The IT job market continues to offer exciting opportunities for jobseekers, driven by technological innovations and changing work patterns.

By staying informed about current trends, customizing your resume, using keywords effectively, and testing your optimized resume, you can improve your job search success.

Whether you are new to the IT field or an experienced professional, leveraging these strategies will help you navigate the competitive landscape and secure a job that aligns with your career goals.

NOTE: Contact Us

2 notes

·

View notes

Text

I have seen a lot of mentions about "alternatives" to #kubernetes lately... Just here to remind you about #CloudFoundry and #VMwareTanzu Application Service, running apps at scale since 2011 😎

#developer#vmwaretanzu#vmware#cloud computing#platform engineering#platform#springboot#java#kubernetes#k8s#open source

1 note

·

View note