#learn web crawling in python

Big Companies using Python – Infographic

Python is one of the most popular programming languages today.

Many big companies rely on it for various tasks. It is flexible, easy to learn, and powerful. Let’s explore how major companies use Python to improve their services.

Google

Google is a strong supporter of Python. The company uses it for multiple applications, including web crawling, AI, and machine learning. Python powers parts of Google Search and YouTube. The company also supports Python through open-source projects like TensorFlow.

Uber

Uber uses Python for data analysis and machine learning. The company relies on Python’s efficiency to improve its ride-matching system. Python also plays a role in Uber’s surge pricing algorithms. The company uses SciPy and NumPy for predictive modelling.

Pinterest

Pinterest relies on Python for backend development. The company uses it for image processing, data analytics, and content discovery. Python helps Pinterest personalise user recommendations. The platform also uses Django, a Python framework, for rapid development.

Instagram

Instagram’s backend is built with Python and Django. Python helps Instagram handle millions of users efficiently. The company uses it to scale infrastructure and improve search features. Python’s simple syntax helps Instagram developers work faster.

Netflix

Netflix uses Python for content recommendations. The company’s algorithm suggests what users should watch next. Python is also used for automation, security, and data analysis. The company relies on Python libraries like Pandas and NumPy for big data processing.

Spotify

Spotify uses Python for data analysis and machine learning. The company processes vast amounts of music data to suggest songs. Python helps with backend services, automation, and A/B testing. Spotify also uses Python to improve its ad-serving algorithms.

Udemy

Udemy, the online learning platform, relies on Python for its backend. The company uses it for web development, data processing, and automation. Python helps Udemy personalise course recommendations. The language also improves search functionality on the platform.

Reddit

Reddit’s core infrastructure is built using Python. The platform uses it for backend development, content ranking, and spam detection. Python’s flexibility allows Reddit to quickly deploy new features. The platform also benefits from Python’s vast ecosystem of libraries.

PayPal

PayPal relies on Python for fraud detection and risk management. The company uses machine learning algorithms to detect suspicious transactions. Python also helps improve security and automate processes. PayPal benefits from Python’s scalability and efficiency.

Conclusion

Python is a powerful tool for big companies. It helps with automation, data analysis, AI, and web development. Companies like Google, Uber, and Netflix depend on Python to improve their services. As Python continues to evolve, more businesses will adopt it for their needs.

First Published: https://dcpweb.co.uk/blog/big-companies-using-python-infographic

A Guide to Extracting Data from Websites

Extracting data from websites, also known as web scraping, is a powerful technique for gathering information from the web automatically. This guide covers:

✅ Web Scraping Basics
✅ Tools & Libraries (Python’s BeautifulSoup, Scrapy, Selenium)
✅ Step-by-Step Example
✅ Best Practices & Legal Considerations

1️⃣ What is Web Scraping?

Web scraping is the process of automatically extracting data from websites. It is useful for:

🔹 Market Research – Extracting competitor pricing, trends, and reviews.
🔹 Data Analysis – Collecting data for machine learning and research.
🔹 News Aggregation – Fetching the latest articles from news sites.
🔹 Job Listings & Real Estate – Scraping job portals or housing listings.

2️⃣ Choosing a Web Scraping Tool

There are multiple tools available for web scraping. Some popular Python libraries include:

| Library | Best For | Pros | Cons |
| --- | --- | --- | --- |
| Beautiful Soup | Simple HTML parsing | Easy to use, lightweight | Not suitable for JavaScript-heavy sites |
| Scrapy | Large-scale scraping | Fast, built-in crawling tools | Higher learning curve |
| Selenium | Dynamic content (JS) | Interacts with websites like a user | Slower, high resource usage |

3️⃣ Web Scraping Step-by-Step with Python

🔗 Step 1: Install Required Libraries

First, install BeautifulSoup and requests using:

```bash
pip install beautifulsoup4 requests
```

🔗 Step 2: Fetch the Web Page

Use the requests library to download a webpage’s HTML content.

```python
import requests

url = "https://example.com"
headers = {"User-Agent": "Mozilla/5.0"}
# A timeout keeps the script from hanging on an unresponsive server
response = requests.get(url, headers=headers, timeout=10)

if response.status_code == 200:
    print("Page fetched successfully!")
else:
    print("Failed to fetch page")
```

🔗 Step 3: Parse HTML with BeautifulSoup

```python
from bs4 import BeautifulSoup

soup = BeautifulSoup(response.text, "html.parser")

# Extract the title of the page (soup.title is None when there is no <title>)
title = soup.title.get_text(strip=True) if soup.title else ""
print("Page Title:", title)

# Extract all links on the page
links = [a["href"] for a in soup.find_all("a", href=True)]
print("Links found:", links)
```

🔗 Step 4: Extract Specific Data

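For example, extracting article headlines from a blog:

```python
# "post-title" is an example class name; adjust it to the target site's markup
articles = soup.find_all("h2", class_="post-title")
for article in articles:
    print("Article Title:", article.get_text(strip=True))
```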

4️⃣ Handling JavaScript-Rendered Content (Selenium Example)

If a website loads content dynamically using JavaScript, use Selenium.

```bash
pip install selenium
```

Example using Selenium with Chrome WebDriver:

```python
from selenium import webdriver

options = webdriver.ChromeOptions()
options.add_argument("--headless")  # Run without opening a browser window
driver = webdriver.Chrome(options=options)

try:
    driver.get("https://example.com")
    page_source = driver.page_source  # HTML after JavaScript has run
finally:
    driver.quit()  # Always release the browser process
```

5️⃣ Best Practices & Legal Considerations

✅ Check Robots.txt – Websites may prohibit scraping (e.g., example.com/robots.txt).
✅ Use Headers & Rate Limiting – Mimic human behavior to avoid being blocked.
✅ Avoid Overloading Servers – Use delays (time.sleep(1)) between requests.
✅ Respect Copyright & Privacy Laws – Do not scrape personal or copyrighted data.
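
The robots.txt and delay points above can be sketched with Python's standard library alone. This is a minimal illustration rather than a complete crawler: the robots.txt rules, the my-scraper user agent name, and the polite_urls helper are assumptions made up for the example, and the rules are parsed from an in-memory string so the snippet runs without network access.

```python
import time
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real crawler would download it from
# the site's /robots.txt (for example with RobotFileParser.set_url + read).
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

def polite_urls(urls, robots_txt, user_agent="my-scraper", delay=1.0):
    """Yield only the URLs robots.txt allows, pausing between them."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    first = True
    for url in urls:
        if not parser.can_fetch(user_agent, url):
            continue  # Skip paths the site asks crawlers to avoid
        if not first:
            time.sleep(delay)  # Rate limit: wait before the next request
        first = False
        yield url

allowed = list(polite_urls(
    ["https://example.com/blog", "https://example.com/private/data"],
    ROBOTS_TXT,
    delay=0.1,
))
print(allowed)
```

In a real scraper the fetch call would sit inside the loop, so the delay applies between actual requests.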

🚀 Conclusion

Web scraping is an essential skill for data collection, analysis, and automation. Using BeautifulSoup for static pages and Selenium for JavaScript-heavy sites, you can efficiently extract and process data.

WEBSITE: https://www.ficusoft.in/python-training-in-chennai/

You need a specific set of skills if you want to succeed as an SEO specialist. At the very least, you need to know how to do keyword research and create eye-catching content. And to make sure you give your clients top-notch Port St. Lucie SEO services, you need to know how to code. It doesn’t take much effort to learn a few programming languages. Still, if you need a helping hand, check out the simple guide below.

Why Learn Programming

First, let’s talk about the benefits you can enjoy from learning how to code. By arming yourself with a few useful programming languages, you’ll basically be able to do a kickass job as an SEO pro. And as if that’s not enough, you’ll be able to enjoy the following:

Automated Tasks

Providing quality SEO services entails many tasks, some of which are repetitive and time-consuming. Coding languages like JavaScript and Python can help you make a program that will do these tasks for you. This frees up your time and energy for other important matters.

Improved Technical SEO Audits

Regular technical SEO audits help search engines like Google crawl and index your pages more effectively. And to make sure you accomplish precisely that, you need to learn a few programming languages along the way.

Better Keyword Insertion

Keywords are usually strategically placed in the website’s content, alongside backlinks that will help boost its reputation and rankings. But as you probably already know, you need to insert the keywords in other places as well, including the images’ alt text and the subheadings. By knowing how to write HTML code, you can make sure the keywords are placed seamlessly.

Enhanced Problem-Solving Skills

Lastly, you can significantly improve your ability to solve problems by learning how to code. With this skill, you’ll be able to deal with any issue you encounter head-on, thus enabling you to enjoy a better career in the SEO industry.

Top Computer Programming Languages to Learn

Next, let’s check out some of the most useful programming languages for SEO specialists. Through these languages, you’ll be able to optimize not only your clients’ websites but also your own career. So without further ado:

HTML

Although it’s technically not a programming language, HTML (HyperText Markup Language) can nevertheless help you give any website a decent structure. This markup language provides you with a series of tags that enable you to organize and place content on web pages. From your articles’ paragraphs and headings to their images and videos, HTML will aid you in making all of them come together.

CSS

While HTML provides the structure, CSS focuses on the website’s style. By using this language, you can make your clients’ pages look more visually appealing. This will ultimately help their website provide a better user experience, as well as make it easier for people to navigate through it. HTML and CSS should be used together.

JavaScript

As the most used programming language out there, JavaScript is responsible for providing infrastructure to countless websites. Even well-known social media platforms like Facebook, Instagram, and YouTube rely on this language. In short, not only will it help you improve your clients’ rankings on search engines, but it will provide you with various frameworks to enhance their pages, too.

PHP

Aside from JavaScript, a lot of websites also rely on PHP. Clear and easy to learn, this programming language also offers a lot of useful frameworks to make your life as an SEO specialist easier. Take Laravel, for example. With its advanced development tools and other features, it can easily outperform other web frameworks. It will help you simplify your entire web development process, and it can help you create cleaner, reusable code.

Python

As mentioned above, Python can help you automate some of the tasks you need to finish while providing quality SEO services. However, that’s not the only reason why it’s so hot right now. This fast-growing language can also improve your clients’ rankings by improving their technical SEO, connecting their site to Google APIs, and more. The best part is, Python is extremely easy to learn and use.

SQL

Finally, you can employ SQL to improve how you do SEO audits. Since it’s designed to help users organize and analyze data, this language can help you create better keyword profiles, automated category tagging systems, monthly SEO reports, and more. SQL will turn you into a very formidable SEO specialist alongside other tools like Ahrefs, Google Analytics, and Semrush.

Start Learning and Coding

In this day and age, equipping yourself with the right skills and tools can improve the services you offer as well as give you an edge over your competition. By learning the programming languages mentioned above, you’ll be able to outplay your rivals in the SEO industry. So if you haven’t yet, start learning a programming language today.
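
To make the automation and alt-text points above a little more concrete, here is a small sketch of one audit task in Python: listing images that have no alt text. It uses only the standard library. The sample page, the MissingAltFinder class, and the find_images_missing_alt helper are invented for illustration and are not part of any SEO tool.

```python
from html.parser import HTMLParser

class MissingAltFinder(HTMLParser):
    """Collect the src of every <img> tag without a non-empty alt attribute."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        alt = attributes.get("alt") or ""  # Missing or valueless alt counts as empty
        if not alt.strip():
            self.missing.append(attributes.get("src", "(no src)"))

def find_images_missing_alt(html):
    """Return the src values of images that still need alt text."""
    finder = MissingAltFinder()
    finder.feed(html)
    return finder.missing

# Hypothetical page fragment used only for this example
SAMPLE_PAGE = """
<h1>Port St. Lucie SEO services</h1>
<img src="team.jpg" alt="Our SEO team at work">
<img src="logo.png">
<img src="banner.jpg" alt="">
"""

print(find_images_missing_alt(SAMPLE_PAGE))
```

Run against a site's saved HTML, a check like this turns a repetitive manual review into a list of images to fix.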

Top Mobile Proxy Scraping Tools for Data Extraction in 2024

Web scraping continues to be a vital strategy for businesses looking to gather competitive intelligence, conduct market research, or monitor pricing. Mobile proxies provide a level of anonymity and efficiency unmatched by other types of proxies, making them ideal for web scraping activities. In 2024, several tools and services stand out for their effectiveness, flexibility, and integration with mobile proxies, offering robust data extraction solutions.

1. Bright Data Scraping Browser and API

Bright Data (formerly Luminati) remains a leading choice for businesses that need powerful scraping tools combined with a vast network of over 7 million mobile IPs. Bright Data provides a web scraping browser and scraping API that are fully integrated with their proxy network. These tools offer seamless automation, robust data handling capabilities, and scalability, making them suitable for high-volume data extraction tasks.

- Key Features:
  - Advanced proxy rotation and session management.
  - Precise geo-targeting options (city, ZIP code, carrier, ASN).
  - Built-in scraping API for integrating with custom software.
  - Pay-as-you-go plans for cost flexibility.
- Benefits:
  - Allows for scalable and efficient data extraction with minimal risk of detection.
  - Suitable for both small and large-scale web scraping projects.

For more information, visit Bright Data's website.

2. Apify Platform

Apify is a comprehensive web scraping platform that offers a range of tools designed for developers and data scientists. The platform is well-known for its Apify Proxy service, which integrates seamlessly with various mobile proxy providers. Apify supports serverless computation, data storage, and distributed queues, allowing for high scalability in web scraping projects.

- Key Features:
  - Integration with major proxy providers, including mobile proxies.
  - Open-source libraries like Crawlee for generating human-like browser fingerprints and managing user sessions.
  - Flexible pricing with a free plan available.
  - Extensive API support for custom development.
- Benefits:
  - Provides a developer-friendly environment for building complex scraping workflows.
  - Reduces the risk of being blocked by efficiently managing proxies and sessions.

Check out Apify's website for more details.

3. Scrapy

Scrapy is a popular open-source web scraping framework written in Python, widely used for large-scale data extraction tasks. While it does not come with built-in mobile proxies, it can be easily integrated with proxy providers like SOAX, Oxylabs, and Smartproxy to enhance anonymity and bypass scraping restrictions.

- Key Features:
  - Middleware support for integrating various proxy services.
  - High-performance data extraction with asynchronous networking.
  - Extensive community support and numerous plugins available.
- Benefits:
  - Highly customizable and flexible, making it a top choice for developers.
  - Strong support for proxy rotation and IP management.

Learn more about Scrapy on its official documentation page.

4. Octoparse

Octoparse is a no-code scraping tool that is popular among non-developers for its user-friendly interface and robust scraping capabilities. Octoparse supports mobile proxies and provides easy-to-use proxy settings for rotating IPs, managing sessions, and geo-targeting, making it highly effective for data extraction from websites with strong anti-scraping mechanisms.

- Key Features:
  - Drag-and-drop interface for easy scraping setup.
  - Integrated proxy settings, including support for mobile proxies.
  - Cloud-based platform for scheduling and automation.
- Benefits:
  - Suitable for both beginners and professionals who need to conduct complex scraping tasks without coding.
  - Reliable customer support and comprehensive tutorials.

Visit Octoparse’s website to explore its features.

5. ProxyCrawl

ProxyCrawl is a specialized service for crawling and scraping web pages anonymously. It supports a wide range of proxies, including mobile proxies, and offers an API for direct integration with scraping applications. ProxyCrawl provides a Crawler API and a Scraper API, making it ideal for dynamic and large-scale web scraping projects.

- Key Features:
  - Seamless integration with mobile proxy networks.
  - Built-in tools for handling CAPTCHAs and IP bans.
  - Extensive API documentation and support.
- Benefits:
  - Enhances data extraction success rates by mitigating common blocking techniques.
  - Pay-as-you-go pricing ensures cost-efficiency for varied scraping needs.

Explore ProxyCrawl's services for more information.

6. Zyte (formerly Scrapinghub)

Zyte offers a complete scraping solution that includes the Zyte Smart Proxy Manager for mobile proxies and an advanced scraping API. This platform is widely recognized for its reliability and is often chosen for projects requiring high levels of anonymity and extensive data scraping.

- Key Features:
  - Integrated with multiple proxy types, including mobile proxies.
  - Automatic proxy management and IP rotation.
  - Built-in anti-bot protection features.
- Benefits:
  - Minimizes the risk of detection while maximizing data extraction efficiency.
  - Supports enterprise-level scraping projects with large datasets.

Learn more about Zyte at their official website.

Conclusion

Choosing the right mobile proxy scraping tool depends on the specific needs of your data extraction project, such as the level of anonymity required, the complexity of the data, and the need for integration with other tools or custom scripts. Tools like Bright Data, Apify, Scrapy, Octoparse, ProxyCrawl, and Zyte provide robust solutions for 2024, each offering unique features to optimize data extraction while mitigating risks associated with scraping activities.
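
Several of the tools above advertise proxy rotation. As a rough illustration of the idea, independent of any of these vendors, the sketch below cycles through a pool of proxy endpoints and builds the proxies mapping that Python's requests library accepts. The proxy URLs and the proxy_rotation helper are placeholders invented for the example.

```python
from itertools import cycle

# Placeholder proxy endpoints; real values come from your proxy provider.
PROXY_POOL = [
    "http://user:pass@proxy1.example.com:8000",
    "http://user:pass@proxy2.example.com:8000",
    "http://user:pass@proxy3.example.com:8000",
]

def proxy_rotation(pool):
    """Yield a requests-style proxies mapping, cycling through the pool."""
    for proxy in cycle(pool):
        yield {"http": proxy, "https": proxy}

rotation = proxy_rotation(PROXY_POOL)
for page in range(1, 5):
    proxies = next(rotation)
    # With the requests library this mapping would be passed as:
    #   requests.get(url, proxies=proxies, timeout=10)
    print(page, proxies["https"])
```

Round-robin rotation is the simplest policy. Commercial services typically add session stickiness and health checks on top, which this sketch does not attempt.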

What is the use of learning the Python language?

Python has become one of the most essential tools a data scientist can have because it is adaptable, readable, and backed by an extensive ecosystem of libraries and frameworks. Here are some reasons why learning Python is useful for data science:

1. Data Analysis and Manipulation

NumPy: The basic library for numerical computations and arrays.

Pandas: Provides data structures such as DataFrames for efficient data manipulation and analysis.

Matplotlib: A very powerful plotting library for data visualization.

2. Machine Learning

Scikit-learn is a machine learning library that provides algorithms for classification, regression, clustering, and so on.

TensorFlow is a deep learning framework to build and train neural networks.

PyTorch is another widely used deep learning framework, known for its flexibility and dynamic computational graph.

3. Data Visualization

Seaborn is a high-level data visualization library built on top of Matplotlib, offering informative and attractive plots.

Plotly: An interactive plotting library allowing multiple types of plots; can also be applied to build a dashboard.

4. Natural Language Processing

NLTK: Library that provides basic functions to perform NLP tasks such as tokenization, stemming, and part-of-speech tagging.

Gensim: A Python library for document similarity analysis and topic modeling.

5. Web Scraping and Data Extraction

Beautiful Soup: A parsing library for HTML and XML documents.

Scrapy: A web crawling framework that can be used to extract data from websites.

6. Data Engineering

Airflow: A workflow management platform for scheduling and monitoring data pipelines.

Dask: A parallel computing library to scale data science computations.

7. Rapid Prototyping

Python's simplicity and interactive environment make it well suited to fast experimentation with various data science techniques and algorithms.

8. Large Community and Ecosystem

Python has a huge and active community that invests heavily in detailed documentation, tutorials, and forums.

Also, the rich ecosystem of libraries and frameworks means you will have access to the latest tools and techniques.

In short, knowledge of Python equips a data scientist to handle, analyze, and retrieve insights from data efficiently. Its versatility, readability, and strong libraries make it an invaluable tool in the area of data science.

What to do about website anti-crawlers? Five effective measures

With the popularity of web crawler technology, more and more websites have adopted anti-crawler strategies to protect their data from large-scale collection. If you work in data collection, you will certainly run into anti-crawler mechanisms that get in your way. Here are five common and effective ways to deal with them and get the data you need more easily.

1. Use high-quality proxy IPs

Websites usually watch for frequent requests from the same IP address to determine whether a crawler is at work. Therefore, using a high-quality proxy IP is the first step in dealing with anti-crawlers. By using a proxy service, you can change the IP address for each request and reduce the risk of being blocked by the website. We recommend using 711Proxy, which provides high-quality proxy IPs from multiple nodes around the world to ensure that your crawler requests are more stealthy and stable.

2. Simulate human behavior

Websites usually identify crawlers by checking request frequency, page dwell time, and similar signals. When writing a crawler, it is therefore especially important to simulate human browsing behavior. Specifically, you can add random time intervals between requests and perform actions such as scrolling pages and clicking links, which can effectively reduce the risk of being recognized as a crawler.
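
The random-interval idea can be sketched in a few lines of Python. The human_like_pause helper, its default bounds, and the example URLs are assumptions chosen for illustration. The sleep function is passed in as a parameter only so the delay logic can be exercised without actually waiting.

```python
import random
import time

def human_like_pause(min_seconds=1.0, max_seconds=4.0, sleep=time.sleep):
    """Sleep for a random interval in [min_seconds, max_seconds] and return it."""
    delay = random.uniform(min_seconds, max_seconds)
    sleep(delay)
    return delay

for url in ["https://example.com/page/1", "https://example.com/page/2"]:
    # A real crawler would fetch and process the page here
    waited = human_like_pause(0.1, 0.3)
    print(f"Visited {url}, then waited {waited:.2f}s")
```

A uniform random delay is only the simplest choice. Real browsing has a longer tail of pauses, so some crawlers draw delays from other distributions.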

3. Handling captcha

Captcha is one of the common means used by many websites to block automated programs. If you encounter captchas during the crawling process, you can consider the following solutions:

· Use third-party captcha recognition services that rely on human solvers or machine learning algorithms to recognize captcha content.

· Bypass the captcha trigger condition by changing the IP address through a proxy.

· If the captcha is too complex, it may need to be solved manually or in combination with other technical means.

4. Avoid using default crawler libraries

Websites may recognize crawlers by inspecting HTTP headers such as User-Agent. If you use an HTTP library such as Python's requests with its default settings, its default User-Agent (python-requests followed by the version number) is easily recognized. Therefore, customizing the User-Agent in the request header and mimicking the headers of common browsers is the key to bypassing this anti-crawler tactic.

5. Rotation of Request Headers and Sessions

Besides User-Agent, other HTTP headers such as Referer, Accept-Language, etc. may also be used to detect crawler behavior. By regularly rotating these request headers or switching between different sessions, you can further confuse the anti-crawler mechanism and reduce the probability of being blocked.
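
As a rough sketch of header rotation, the snippet below picks a browser-like User-Agent and Accept-Language at random for each request. The header values, the default Referer, and the build_headers helper are examples made up for illustration, and any such list needs to be kept current in real use.

```python
import random

# Example browser-like values; keep this list current for real use.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.0 Safari/605.1.15",
]
ACCEPT_LANGUAGES = ["en-US,en;q=0.9", "en-GB,en;q=0.8"]

def build_headers(referer="https://www.google.com/"):
    """Return a fresh set of request headers with randomly chosen values."""
    return {
        "User-Agent": random.choice(USER_AGENTS),
        "Accept-Language": random.choice(ACCEPT_LANGUAGES),
        "Referer": referer,
    }

# With the requests library, each new session could start from fresh headers:
#   session = requests.Session()
#   session.headers.update(build_headers())
print(build_headers())
```

Creating a new session per batch of requests also resets cookies, which is one way to read "switching between different sessions" above.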

Tackling a site's anti-crawler tactics requires a combination of techniques to ensure that the crawler's behavior is as close as possible to the normal browsing habits of a human user. If you want to be efficient while remaining stealthy, we strongly recommend you try 711Proxy, which will provide strong support for your crawler project and help you easily deal with various anti-crawler challenges.

How to Scrape Product Reviews from eCommerce Sites?

Know More>>https://www.datazivot.com/scrape-product-reviews-from-ecommerce-sites.php

Introduction

In the digital age, eCommerce sites have become treasure troves of data, offering insights into customer preferences, product performance, and market trends. One of the most valuable data types available on these platforms is product reviews. Scraping product review data from eCommerce sites can provide businesses with detailed customer feedback, helping them enhance their products and services. This blog will guide you through the process of scraping review data from eCommerce sites, exploring the tools, techniques, and best practices involved.

Why Scrape Product Reviews from eCommerce Sites?

Scraping product reviews from eCommerce sites is essential for several reasons:

Customer Insights: Reviews provide direct feedback from customers, offering insights into their preferences, likes, dislikes, and suggestions.

Product Improvement: By analyzing reviews, businesses can identify common issues and areas for improvement in their products.

Competitive Analysis: Scraping reviews from competitor products helps in understanding market trends and customer expectations.

Marketing Strategies: Positive reviews can be leveraged in marketing campaigns to build trust and attract more customers.

Sentiment Analysis: Understanding the overall sentiment of reviews helps in gauging customer satisfaction and brand perception.

Tools for Scraping eCommerce Sites Reviews Data

Several tools and libraries can help you scrape product reviews from eCommerce sites. Here are some popular options:

BeautifulSoup: A Python library designed to parse HTML and XML documents. It generates parse trees from page source code, enabling easy data extraction.

Scrapy: An open-source web crawling framework for Python. It provides a powerful set of tools for extracting data from websites.

Selenium: A web testing library that can be used for automating web browser interactions. It's useful for scraping JavaScript-heavy websites.

Puppeteer: A Node.js library that gives a higher-level API to control Chromium or headless Chrome browsers, making it ideal for scraping dynamic content.

Steps to Scrape Product Reviews from eCommerce Sites

Step 1: Identify Target eCommerce Sites

First, decide which eCommerce sites you want to scrape. Popular choices include Amazon, eBay, Walmart, and Alibaba. Ensure that scraping these sites complies with their terms of service.

Step 2: Inspect the Website Structure

Before scraping, inspect the webpage structure to identify the HTML elements containing the review data. Most browsers have built-in developer tools that can be accessed by right-clicking on the page and selecting "Inspect" or "Inspect Element."

Step 3: Set Up Your Scraping Environment

Install the necessary libraries and tools. For example, if you're using Python, you can install BeautifulSoup, Scrapy, and Selenium using pip:

```bash
pip install beautifulsoup4 scrapy selenium
```

Step 4: Write the Scraping Script

Here's a basic example of how to scrape product reviews from an eCommerce site using BeautifulSoup and requests:
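
A minimal sketch of such a script follows. It assumes a hypothetical review markup (a div with class review containing review-author, review-rating, and review-text elements) and parses an inline HTML sample so it runs offline. The class names and the parse_reviews helper are illustrative and not taken from any real site.

```python
from bs4 import BeautifulSoup

# Hypothetical markup; real sites use their own tags and class names.
SAMPLE_HTML = """
<div class="review">
  <span class="review-author">Alice</span>
  <span class="review-rating">5</span>
  <p class="review-text">Great product, works as described.</p>
</div>
<div class="review">
  <span class="review-author">Bob</span>
  <span class="review-rating">3</span>
  <p class="review-text">Decent, but shipping was slow.</p>
</div>
"""

def parse_reviews(html):
    """Extract author, rating, and text from each review block."""
    soup = BeautifulSoup(html, "html.parser")
    reviews = []
    for block in soup.find_all("div", class_="review"):
        reviews.append({
            "author": block.find("span", class_="review-author").get_text(strip=True),
            "rating": int(block.find("span", class_="review-rating").get_text(strip=True)),
            "text": block.find("p", class_="review-text").get_text(strip=True),
        })
    return reviews

# For a live page, the HTML would come from something like:
#   html = requests.get(url, headers={"User-Agent": "..."}, timeout=10).text
for review in parse_reviews(SAMPLE_HTML):
    print(review)
```

On a real site, the selectors come from the inspection done in Step 2, and missing elements should be handled rather than assumed present.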

Step 5: Handle Pagination

Most eCommerce sites paginate their reviews. You'll need to handle this to scrape all reviews. This can be done by identifying the URL pattern for pagination and looping through all pages:
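
The loop might look like the sketch below. It assumes a ?page=N URL pattern and stops at the first empty page. Both are assumptions, since every site paginates differently. The fetch function is passed in so the loop can be tried against a fake site. FAKE_SITE, fake_fetch, and scrape_all_pages are names invented for the example.

```python
def scrape_all_pages(fetch_page, base_url, max_pages=50):
    """Collect reviews page by page until a page comes back empty."""
    all_reviews = []
    for page in range(1, max_pages + 1):
        url = f"{base_url}?page={page}"  # Assumed URL pattern
        reviews = fetch_page(url)
        if not reviews:
            break  # No more reviews: stop paginating
        all_reviews.extend(reviews)
    return all_reviews

# Stand-in for a real fetch-and-parse function (hypothetical data)
FAKE_SITE = {
    "https://example.com/product/123/reviews?page=1": ["Great!", "Works well"],
    "https://example.com/product/123/reviews?page=2": ["Too expensive"],
}

def fake_fetch(url):
    return FAKE_SITE.get(url, [])

reviews = scrape_all_pages(fake_fetch, "https://example.com/product/123/reviews")
print(reviews)
```

The max_pages cap is a safety net so that a site which never returns an empty page cannot keep the loop running forever.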

Step 6: Store the Extracted Data

Once you have extracted the reviews, store them in a structured format such as CSV, JSON, or a database. Here's an example of how to save the data to a CSV file:
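
One way to do this with Python's built-in csv module is sketched below. The sample reviews, the column names, the reviews.csv filename, and the write_reviews_csv helper are assumptions for illustration.

```python
import csv

# Hypothetical extracted data
reviews = [
    {"author": "Alice", "rating": 5, "text": "Great product, works as described."},
    {"author": "Bob", "rating": 3, "text": "Decent, but shipping was slow."},
]

def write_reviews_csv(rows, file_obj):
    """Write review dictionaries to an open text file as CSV with a header row."""
    writer = csv.DictWriter(file_obj, fieldnames=["author", "rating", "text"])
    writer.writeheader()
    writer.writerows(rows)

# newline="" lets the csv module control line endings (avoids blank rows on Windows)
with open("reviews.csv", "w", newline="", encoding="utf-8") as f:
    write_reviews_csv(reviews, f)
```

The csv module quotes fields that contain commas, so review text does not need to be escaped by hand.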

Step 7: Use a Reviews Scraping API

For more advanced needs or if you prefer not to write your own scraping logic, consider using a Reviews Scraping API. These APIs are designed to handle the complexities of scraping and provide a more reliable way to extract eCommerce review data.

Step 8: Best Practices and Legal Considerations

Respect the site's terms of service: Ensure that your scraping activities comply with the website’s terms of service.

Use polite scraping: Implement delays between requests to avoid overloading the server. This is known as "polite scraping."

Handle CAPTCHAs and anti-scraping measures: Be prepared to handle CAPTCHAs and other anti-scraping measures. Using services like ScraperAPI can help.

Monitor for changes: Websites frequently change their structure. Regularly update your scraping scripts to accommodate these changes.

Data privacy: Ensure that you are not scraping any sensitive personal information and respect user privacy.
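Some of these practices can be partly automated. The sketch below, using only Python's standard library, reads a site's robots.txt rules, skips disallowed paths, and pauses between requests. The `USER_AGENT` name, the 2-second default, and the `fetch` callable are assumptions for illustration. Note that robots.txt is not the same thing as a site's terms of service, which still need to be read separately.

```python
import time
from urllib.robotparser import RobotFileParser

USER_AGENT = "review-research-bot"  # hypothetical name for your scraper


def load_rules(robots_txt):
    """Parse the text of a robots.txt file into a rule set."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return rules


def polite_delay(rules, default=2.0):
    """Use the site's Crawl-delay if it declares one, else a default pause."""
    declared = rules.crawl_delay(USER_AGENT)
    return float(declared) if declared is not None else default


def crawl(rules, urls, fetch):
    """Fetch each permitted URL in turn, pausing between requests."""
    delay = polite_delay(rules)
    results = {}
    for url in urls:
        if not rules.can_fetch(USER_AGENT, url):
            continue  # skip paths the site asks crawlers to avoid
        results[url] = fetch(url)
        time.sleep(delay)
    return results
```

In practice you would download the robots.txt text once per site and reuse the parsed rules for every request to that site.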

Conclusion

Scraping product reviews from eCommerce sites can provide valuable insights into customer opinions and market trends. By using the right tools and techniques, you can efficiently extract and analyze review data to enhance your business strategies. Whether you choose to build your own scraper using libraries like BeautifulSoup and Scrapy or leverage a Reviews Scraping API, the key is to approach the task with a clear understanding of the website structure and a commitment to ethical scraping practices.

By following the steps outlined in this guide, you can successfully scrape product reviews from eCommerce sites and gain the competitive edge you need to thrive in today's digital marketplace. Trust Datazivot to help you unlock the full potential of review data and transform it into actionable insights for your business. Contact us today to learn more about our expert scraping services and start leveraging detailed customer feedback for your success.

#ScrapeProduceReviewsFromECommerce#ExtractProductReviewsFromECommerce#ScrapingECommerceSitesReviews Data#ScrapeProductReviewsData#ScrapeEcommerceSitesReviewsData

Text

Amazon is a leading global online shopping site with a huge range of products for sale and an almost limitless volume of customer feedback. For businesses and researchers, it can serve as an encyclopedia of the information needed to make the right decision when investing in a particular item or creating a product line.

As sellers fill online stores with products, customers can be picky and easily switch between brands and items until they find exactly what they're looking for. They are also not discreet about it: they share their experiences with products and, quite often, write reviews to help other people decide what to purchase next. These reviews give companies direct insight into what customers think, which they can use to improve their products. This blog looks more closely at how product review scraping works on one of the largest retail e-commerce websites, Amazon.

What is Amazon Review Scraping?

Web scraping Amazon reviews is the process of automatically gathering reviews from Amazon product pages using web scraping tools. Such a tool crawls through the code of the website, scans the reviews, and extracts the pertinent information, such as the author of the review, the rating given, the comment, and the date the comment was posted. It is a very efficient way to collect many opinions in one place. Nonetheless, these tools must be used correctly and in line with Amazon's guidelines and the relevant laws to avoid legal problems and account termination.

Scraping Amazon reviews with Python involves making requests to an item's review pages, analyzing the structure of each page, and then extracting data such as the reviewer's name, star rating, and comments. It is similar to training a computer to navigate the Amazon website and collect review data without manual input. In general, this process lets you gather more Amazon product review data in less time.
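As a rough illustration of the extraction step, the sketch below turns the raw strings pulled from one review into a typed record. The `Review` class and the helper names are hypothetical, and the "4.0 out of 5 stars" wording is an assumption about how the rating text is displayed on the page.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Review:
    reviewer: str
    rating: Optional[float]
    comment: str


def parse_rating(text):
    """Pull the leading number out of text such as '4.0 out of 5 stars'."""
    match = re.search(r"(\d+(?:\.\d+)?)\s+out of\s+5", text)
    return float(match.group(1)) if match else None


def build_review(reviewer, rating_text, comment):
    """Normalize whitespace and convert the rating to a number."""
    return Review(
        reviewer=reviewer.strip(),
        rating=parse_rating(rating_text),
        comment=" ".join(comment.split()),
    )
```

Converting the rating to a number at this stage makes later steps, such as averaging or filtering by star rating, much simpler.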

How Does Amazon Review Scraping Help Businesses?

Amazon review scraping involves using automated tools to collect customer reviews from Amazon product pages. This practice offers several benefits that can help businesses in various ways. Here’s a detailed explanation of how Amazon product review scraping can boost business operations:

Product Improvement

Amazon reviews often mention specific problems or suggestions for products. Scraping these reviews lets businesses see common issues that need fixing. For instance, if several reviews mention that a blender’s motor is weak, the company can focus on making it stronger in the upcoming version.

Competitive Analysis

By also scraping reviews of competitors' products, businesses can learn what their competitors are doing well or poorly. They can then improve their own products by avoiding competitors' mistakes and adopting the traits that work.

Sentiment Analysis

Analyzing the emotions and opinions expressed in reviews helps businesses understand how customers feel about their products. Positive sentiments can indicate what’s working well, while negative sentiments can signal areas that need improvement. This helps in quickly addressing any issues and maintaining customer satisfaction.
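To give a feel for how this can work, here is a deliberately naive word-counting sketch. The word lists are tiny, made-up examples; real sentiment analysis usually relies on a trained model or a much larger lexicon, so treat this only as an illustration of the idea.

```python
import re

# Tiny illustrative word lists; a real lexicon would be far larger.
POSITIVE = {"great", "love", "excellent", "sturdy", "easy"}
NEGATIVE = {"weak", "broke", "poor", "difficult", "loud"}


def sentiment_score(review_text):
    """Return (number of positive words) minus (number of negative words)."""
    words = re.findall(r"[a-z']+", review_text.lower())
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)


def label(review_text):
    """Map the score to a coarse positive / negative / neutral label."""
    score = sentiment_score(review_text)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"
```

A counting approach like this misses negation and sarcasm ("not great" still counts as positive), which is one reason trained models are preferred for real analysis.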

Enhanced Customer Service

Review data shows what problems customers frequently face. This information helps businesses provide better customer service by anticipating issues and creating improved how-to manuals. For example, if many reviews mention difficulties with assembly, the company can create clearer instructions or instructional videos.

Informed Marketing Strategies

Scraping Amazon reviews shows what customers appreciate about a product, which helps businesses create better marketing messages. For example, if reviews highlight that a product is particularly useful for families, the marketing team can emphasize this in their advertising campaigns.

Boosting Sales

Insights from Product Review Scraping can improve product descriptions by highlighting features and products that customers prefer. Dealing with negative reviews openly shows potential customers that the company appreciates their feedback, which might increase sales and build confidence.

Identifying Brand Advocates

Review data can also reveal customers who consistently leave detailed, positive feedback. Businesses can recognize these customers and engage with them as potential brand advocates.

Strategic Decision Making

Detailed review data provides valuable insights that help businesses make informed decisions. Whether deciding to launch a new product, discontinue a failing one, or enter a new market, review data provides evidence to support these choices.

Cost-Effective Data Collection

Automated Amazon review scraping tools can collect vast amounts of review data quickly and efficiently, saving time and resources compared to manual collection. As a result, businesses can focus less on collecting data and more on analyzing it.

In-Depth Market Research

By gathering a large number of customer reviews from automated tools that scrape Amazon product reviews, businesses can understand what customers like and dislike about their products. This information helps companies see trends and patterns in customer preferences. For example, if many customers praise a product's durability, the company knows that this is a strong selling point.

What is the Process of Amazon Review Scraping?

When you scrape Amazon product ratings and reviews, you’re essentially extracting large datasets of information about customer satisfaction, market trends, and product quality. However, scraping Amazon reviews is not easy, because Amazon enforces strict guidelines and security measures.

Content Source https://www.reviewgators.com/how-to-scrape-amazon-product-reviews-behind-a-login.php

Text

Which are The Best Scraping Tools For Amazon Web Data Extraction?

In the vast expanse of e-commerce, Amazon stands as a colossus, offering an extensive array of products and services to millions of customers worldwide. For businesses and researchers, extracting data from Amazon's platform can unlock valuable insights into market trends, competitor analysis, pricing strategies, and more. However, manual data collection is time-consuming and inefficient. Enter web scraping tools, which automate the process, allowing users to extract large volumes of data quickly and efficiently. In this article, we'll explore some of the best scraping tools tailored for Amazon web data extraction.

Scrapy: Scrapy is a powerful and flexible web crawling framework written in Python. It provides a robust set of tools for extracting data from websites, including Amazon. Its high-level architecture makes it relatively straightforward to scrape product listings, reviews, prices, and other relevant information from Amazon's pages, although it does not run JavaScript on its own, so dynamic content typically needs an add-on such as scrapy-playwright or scrapy-splash. Its extensibility and scalability make it an excellent choice for both small-scale and large-scale data extraction projects.

Octoparse: Octoparse is a user-friendly web scraping tool that offers a point-and-click interface, making it accessible to users with limited programming knowledge. It allows you to create custom scraping workflows by visually selecting the elements you want to extract from Amazon's website. Octoparse also provides advanced features such as automatic IP rotation, CAPTCHA solving, and cloud extraction, making it suitable for handling complex scraping tasks with ease.

ParseHub: ParseHub is another intuitive web scraping tool that excels at extracting data from dynamic websites like Amazon. Its visual point-and-click interface allows users to build scraping agents without writing a single line of code. ParseHub's advanced features include support for AJAX, infinite scrolling, and pagination, ensuring comprehensive data extraction from Amazon's product listings, reviews, and more. It also offers scheduling and API integration capabilities, making it a versatile solution for data-driven businesses.

Apify: Apify is a cloud-based web scraping and automation platform that provides a range of tools for extracting data from Amazon and other websites. Its actor-based architecture allows users to create custom scraping scripts using JavaScript or TypeScript, leveraging the power of headless browsers like Puppeteer and Playwright. Apify offers pre-built actors for scraping Amazon product listings, reviews, and seller information, enabling rapid development and deployment of scraping workflows without the need for infrastructure management.

Beautiful Soup: Beautiful Soup is a Python library for parsing HTML and XML documents, often used in conjunction with web scraping frameworks like Scrapy or Selenium. While it lacks the built-in web crawling capabilities of Scrapy, Beautiful Soup excels at extracting data from static web pages, including Amazon product listings and reviews. Its simplicity and ease of use make it a popular choice for beginners and Python enthusiasts looking to perform basic scraping tasks without a steep learning curve.

Selenium: Selenium is a powerful browser automation tool that can be used for web scraping Amazon and other dynamic websites. It allows you to simulate user interactions, such as clicking buttons, filling out forms, and scrolling through pages, making it ideal for scraping JavaScript-heavy sites like Amazon. Selenium's Python bindings provide a convenient interface for writing scraping scripts, enabling you to extract data from Amazon's product pages with ease.

In conclusion, the best scraping tool for Amazon web data extraction depends on your specific requirements, technical expertise, and budget. Whether you prefer a user-friendly point-and-click interface or a more hands-on approach using Python scripting, there are plenty of options available to suit your needs. By leveraging the power of web scraping tools, you can unlock valuable insights from Amazon's vast trove of data, empowering your business or research endeavors with actionable intelligence.


Text

A Complete Guide to Web Scraping Using AI

Data is readily available online in large amounts, and it is an important resource in today's digital world. However, collecting information from websites by hand can be inefficient, time-consuming, and prone to errors. This is where AI web scraping, a powerful method for extracting valuable information from webpages, comes in. This tutorial will guide you through the process of using Bardeen.ai to scrape webpages, explain popular AI tools, and discuss how AI enhances web scraping.

AI web scraping is the term used to describe automatically gathering information from websites with the help of artificial intelligence. Traditional web scraping involves writing programs that fetch data from websites according to fixed rules. This technique can work well but proves inefficient on interactive web pages that rely on JavaScript and change their contents frequently.

An AI website scraper makes the scraping process smarter and more adaptable. With AI, information is extracted more reliably, the context of the data is better understood, and trends can be spotted. AI scrapers are also more robust and efficient than traditional scraping techniques at adapting to changes in website structure.

Why Use AI for Web Scraping?

The use of artificial intelligence in web scraping has several benefits that make it an attractive choice for businesses, researchers, and developers:

1. Adaptability: When a website's structure changes, an AI web scraper can adjust accordingly, so data extraction is not interrupted and constant manual updates are not needed.

2. Efficiency: Automated extraction tools can collect large volumes of information in far less time than manual collection.

3. Accuracy: By using machine learning and natural language processing, AI better understands what data means, which helps it extract unstructured or dynamic data accurately.

4. Scalability: Projects vary in scope, so the ability to scale up easily to larger datasets is an important feature of AI-driven web scraping.

How does AI Web Scraping Work?

An AI web scraper works by imitating the way a human browses the internet. When crawling, it uses algorithms to navigate websites and collect data that may be useful for a range of purposes. The basic process is laid out below.

1. Crawling the site - The AI web scraper starts by browsing the website it has been pointed at. It crawls the site and follows links to other pages to understand the site's architecture and find pages that could be of interest.

2. Data extraction - During this step the scraper finds, distinguishes, and extracts the data it was designed to collect, such as text, images, and videos.

3. Processing and structuring the data - The extracted data is then processed and structured into a format that can be easily analyzed, such as a JSON or CSV file.

4. Resiliency - Websites can change their content or design at any time, so it’s very important that the AI is able to adjust to these changes and continue scraping without issues.
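The crawling step in this process does not itself require AI. As a rough sketch, the code below follows links breadth-first within a single site using only Python's standard library. The `fetch` argument is a hypothetical callable that returns a page's HTML for a given URL, and the `max_pages` limit is an arbitrary safety cap; the AI-driven extraction described in step 2 is not shown.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit


class LinkCollector(HTMLParser):
    """Collect the href of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def crawl_site(start_url, fetch, max_pages=20):
    """Breadth-first crawl of same-host pages; returns {url: html}."""
    host = urlsplit(start_url).netloc
    queue, pages = deque([start_url]), {}
    while queue and len(pages) < max_pages:
        url = queue.popleft()
        if url in pages:
            continue  # already visited
        html = fetch(url)
        pages[url] = html
        collector = LinkCollector()
        collector.feed(html)
        for href in collector.links:
            absolute = urljoin(url, href)  # resolve relative links
            if urlsplit(absolute).netloc == host and absolute not in pages:
                queue.append(absolute)
    return pages
```

The returned dictionary of pages is what an extraction step, AI-based or otherwise, would then process and structure.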

Popular AI Tools for Web Scraping

Several AI-based web scraping technologies have emerged recently, each with unique functionality to meet a variety of applications. Here are some of the AI web scraping technologies that are regularly mentioned in discussions.

- Diffbot: Diffbot automatically analyzes and extracts data from web pages using machine learning. It can handle complicated websites with dynamic content and returns data in a structured format.

- Scrapy with AI Integration: Scrapy is a popular Python framework for web scraping. When integrated with AI models, it can do more complicated data extraction tasks, such as reading JavaScript-rendered text.

- Octoparse: This no-code solution employs artificial intelligence to automate the data extraction procedure. It is user-friendly, allowing non-developers to simply design web scraping processes.

- Bardeen.ai: Bardeen.ai is an artificial intelligence platform that automates repetitive operations such as web scraping. It works with major web browsers and provides an easy interface for pulling data from webpages without the need to write code.

What Data Can Be Extracted Using AI Web Scrapers?

Depending on what you want to collect, AI web scraping allows you to collect a wide range of data.

The most popular types are:

- Text data includes articles, blog entries, product descriptions, and customer reviews.

- Multimedia content includes photographs, videos, and infographics.

- Structured data includes prices, product details, and stock availability.

- User-generated content includes comments, ratings, social media posts, and forum threads.

These data sources can take many qualitatively different forms, such as multimedia or dynamic data. That gives you more information to work with and, in turn, a better basis for strategy and planning.

How to Use Bardeen.ai for Web Scraping

Bardeen.ai is a versatile tool that makes it simple to scrape site data without requiring you to know any coding. Here is how to apply Bardeen.ai to web scraping:

1. Create an Account and Install the Bardeen.ai Extension:

- Visit the bardeen.ai website and create an account.

- Install the Bardeen.ai browser extension, which is available for Google Chrome and other Chromium-based browsers.

2. Create a New Playbook:

- Once installed, click the Bardeen.ai icon in your browser to open the dashboard, then click Create New Playbook to start a new automated workflow.

3. Set Up the Scraping Task:

- Select “Scrape a website” from the list of templates.

- Enter the URL of the website you want to scrape. Bardeen.ai will load the page automatically and let you choose the elements to be extracted.

4. Select Elements:

- Use the point-and-click interface to choose the exact data elements you would like extracted, such as text, images, or links.

- Bardeen.ai defines the extraction rules according to your selection.

5. Run the Scraping Playbook:

- After choosing the data elements, execute the scraping playbook by hitting “Run”.

- Bardeen.ai will automatically scrape the data according to these rules and save it as CSV or JSON.

6. Export and Use the Data:

- Once scraping is complete, Bardeen.ai lets you either download the extracted data or integrate it directly into your workflows, with integration options for tools like Google Sheets, Airtable, or Notion.

Bardeen.ai simplifies the web scraping process, making it accessible even to those without technical expertise. Its integration with popular productivity tools also allows for seamless data management and analysis.

Challenges of AI Data Scraping

While AI data scraping has many advantages, there are pitfalls too, which users must be conscious of:

Website Changes: Websites occasionally change their structure or content, which can make them difficult to scrape. Nonetheless, compared to traditional methods, most AI-driven scrapers are more adaptable to these changes.

Legal and Ethical Considerations: When scraping a website, you should adhere to the legal clauses in its terms of service. It is important to know these terms and operate within them, since violations can result in lawsuits.

Resource Intensity: There may be times when using AI models for web scraping requires massive computational resources that can discourage small businesses or individual users.

Benefits Of Artificial Intelligence-Powered Automated Web Scraping:

Despite the problems, the advantages of Automated Web Scraping with AI are impressive. These include:

Fast and Efficient – AI-supported tools can scrape large volumes of data quickly, saving time and resources.

Accuracy – When it comes to unstructured or complex datasets, AI improves the reliability of data extraction.

Scalable – AI-powered scraping tools can handle growing data volumes and bigger scraping challenges, which makes them applicable to projects of any size.

Tips for Successful AI Web Scraping

To make the most out of AI web scraping, consider these tips:

1. Choose the Right Tool: AI web scrapers are not all the same; they range from simple to complex. Select a tool based on your requirements, for example whether you need to scrape multimedia or deal with dynamic pages.

2. Regularly Update Your Scrapers: Website layouts and structures change over time. Scraping models need to be updated from time to time to ensure they keep providing the latest data.

3. Respect The Bounds Of The Law: You should always scrape data within the confines of the law. This means adhering to website terms of service as well as any other relevant data protection regulations.

4. Optimize For Performance: Make sure that your AI models and scraping processes are optimized so as to reduce computational costs while improving efficiency at the same time.

Conclusion

AI web scraping is one of the most significant ways we now obtain information from the web. Because the process is automated and uses artificial intelligence, these tools are a more efficient, accurate, and scalable way of gathering such information. It suits a business that wishes to explore the market, a researcher gathering data to analyze, or a developer who wants to incorporate such data into an application.

Tools like Bardeen.ai illustrate this: its web scraper is designed to be used by anyone, even those who do not know how to code, so anyone can make use of web data. And as organizations rely more and more on facts and data, integrating AI into web scraping will become a must-have strategy for businesses.

To obtain such services, visit Enterprise Web Scraping


Text

You need a specific set of skills if you want to succeed as an SEO specialist. At the very least, you need to know how to do keyword research and create eye-catching content. And to make sure you give your clients top-notch Port St. Lucie SEO services, you need to know how to code. It doesn’t take much effort to learn a few programming languages. Still, if you need a helping hand, then best check out the simple guide provided below.

Why Learn Programming

First, let’s talk about the benefits you can enjoy from learning how to code. By arming yourself with a few useful programming languages, you’ll basically be able to do a kickass job as an SEO pro. And as if that’s not enough, you’ll be able to enjoy the following:

Automated Tasks

Providing quality SEO services entails many tasks, some of which are repetitive and time-consuming. Coding languages like JavaScript and Python can help you make a program that will do these tasks for you. This frees up your time and energy for other important matters.

Improved Technical SEO Audits

Regularly doing technical SEO audits helps search engines like Google crawl and index your pages more effectively. And to make sure you accomplish precisely that, you need to learn a few programming languages along the way.

Better Keyword Insertion

Keywords are usually strategically placed in the website’s content, alongside backlinks that will help boost its reputation and rankings. But as you probably already know, you need to insert the keywords in other places as well, including the images’ alt text sections and the subheadings. By knowing how to write HTML code, you can make sure the keywords are placed seamlessly.

Enhanced Problem-Solving Skills

Lastly, you can significantly improve your ability to solve problems by learning how to code. With this skill, you’ll be able to deal with any issue you encounter head-on, thus enabling you to enjoy a better career in the SEO industry.

Top Computer Programming Languages to Learn

Next, let’s check out some of the most useful programming languages for SEO specialists. Through these languages, you’ll be able to optimize not only your clients’ websites but also your own career. So without further ado:

HTML

Although it’s technically not a programming language, HTML (HyperText Markup Language) can nevertheless help you give any website a decent structure. This web-based language provides you with a series of tags that enable you to organize and place content on the web pages. From your articles’ paragraphs and headings to their images and videos, HTML will aid you in making all of them come together.

CSS

While HTML provides the structure, CSS focuses on the website’s style. By using this language, you can make your clients’ pages look more visually appealing. This will ultimately help their website provide a better user experience, as well as make it easier for people to navigate through it. HTML and CSS should be used together.

JavaScript

As the most used programming language out there, JavaScript is responsible for providing infrastructure to countless websites. Even well-known social media platforms like Facebook, Instagram, and YouTube rely on this language. In short, not only will it help you improve your clients’ rankings on search engines, but it will provide you with various frameworks to enhance their pages, too.

PHP

Aside from JavaScript, a lot of websites also rely on PHP. Clear and easy to learn, this programming language also offers a lot of useful frameworks to make your life as an SEO specialist easier. Take Laravel for example. With its advanced development tools and other features, it can easily outperform other web frameworks. It will help you simplify your entire web development process, and it can help you create cleaner, reusable code.

Python

As mentioned above, Python can help you automate some of the tasks you need to finish while providing quality SEO services. However, that’s not the only reason why it’s so hot right now. This fast-growing language can also improve your clients’ rankings by improving their technical SEO, connecting their site to Google API, and more. The best part is, Python is extremely easy to learn and use.

SQL

Finally, you can employ SQL to improve how you do SEO audits. Since it’s designed to help users organize and analyze data, this programming language can help you create better keyword profiles, automated category tagging systems, SEO monthly reports, and what-not. SQL will turn you into a very formidable SEO specialist alongside other tools like Ahrefs, Google Analytics, and Semrush.

Start Learning and Coding

In this day and age, equipping yourself with the right skills and tools can improve the services you offer as well as give you an edge over your competition. By learning the programming languages mentioned above, you’ll be able to outplay your rivals in the SEO industry. So if you haven’t yet, start learning a programming language today.
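To make the automation idea concrete, here is a small sketch of one audit task touched on above: finding images that have no alt text. It uses only Python's standard library; the class and function names are made up for this example, and a real audit would fetch live pages and check much more.

```python
from html.parser import HTMLParser


class AltTextAudit(HTMLParser):
    """Record the src of every <img> whose alt attribute is missing or empty."""

    def __init__(self):
        super().__init__()
        self.missing_alt = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            attributes = dict(attrs)
            # Treat a missing, valueless, or whitespace-only alt as missing.
            if not (attributes.get("alt") or "").strip():
                self.missing_alt.append(attributes.get("src", "(no src)"))


def images_missing_alt(html):
    """Return the src of each image in the HTML that lacks alt text."""
    audit = AltTextAudit()
    audit.feed(html)
    return audit.missing_alt
```

Running `images_missing_alt` over a page's HTML gives you a list of images to fix, which is the kind of repetitive check that is tedious to do by hand.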


Text

Winter 2024! (newsletter)

(a copypasta from the newsletter, saved here for ease)

Happy 2024 ! :) ✩

Here’s a round-up of everything I’m working on in the upcoming Winter season ❄️

2023 Highlights →

Octavia Butler AI with Dr. Beth Coleman

This was my second (and a half) year working with Beth on her AI project inspired by Octavia Butler’s writing. With a bespoke AI Image GAN, we created images series inspired by Alice Coltrane, oceanic jellyfish, and Black Panther Party gatherings. Images were reproduced as oil paintings, and documented through a web archive, an exhibition and symposium, and (most excitingly) a published book.

UNCG

I returned to the College of Visual and Performing Arts at University of North Carolina Greensboro as an instructor of two classes, Intro to Web Design & Professional Practices. The latter focused on portfolio & CV building to support students as they graduate into careers as working artists.

Artivive AR Residency

In April, I wrapped up a year-long mentorship residency with Artivive, an AR platform focused on artists. My first time using Blender, I worked with my mentor Agnes Michalczyk, and produced an AR sculpture PaperCuts that has since exhibited in Vienna at Artivive headquarters and in the Geary Art Crawl 2023.

InterAccess

First complete year with InterAccess as Programming Manager (and first day job!) Very grateful for the opportunity to work full-time in a permanent arts position. Highlights include starting an Open HDMI event series, an after hours event inviting visual artists to plug into InterAccess’s in-house immersive projection system, IA 360°, in an open deck style. Several Open HDMI events of the same name have been popping up around the new media scene since the first IA event in April! (1) (2)

Upcoming →

Did You Eat? | Group Exhibition @ Ed Video | January 15 – February 15, 2024

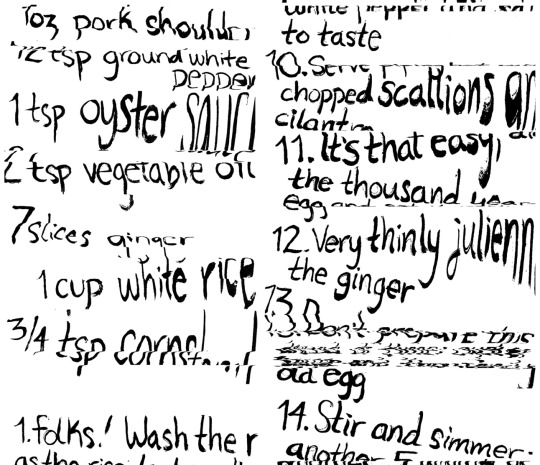

I’ll be showing a series of remixed recipes on acrylic, Ju/uu/ook, as part of a symposium focused on ✩FOOD✩ (including food production vs insecurity, cultural dishes’ role in nostalgia and tradition, and consumerism vs consumption)

Ju/uu/ook looks at the shifts in traditional recipes - whether through generations, miscommunication, or flirtation with shared or adjacent cultures - as something to be welcomed, rather than lamented as culture lost.

section of the distorted scan

Recipes for juuk, char siu, and joong were run through a custom Python script, randomizing the ingredient measurements and instruction order to create a copy, reminiscent of the dish, but nonsensical. The new recipe was then written by hand with traditional calligraphy tools, digitally scanned with physical distortion, cut into acrylic, and then handpainted once again.

painting process! the text was cut in reverse on the back so it appears to float from the front

This project started over a year ago through a School for Poetic Computation course, Digital Love Languages, where I developed the Python script that remixes the recipe text.

snapshot of the code

Did You Eat? | Closing Reception @ Ed Video | Feb 9 2024, time TBA

Closing event to celebrate the exhibition – including a potluck!

Did You Eat? | Artist Panel @ Online | Feb 10 2024, 3PM

Online panel discussion with the other exhibiting artists; email [email protected] to register.

Topics in Communication, Culture, Information & Technology | Design Consultant @ UTM | Winter semester

I’ll be working with Dr. Beth Coleman supporting her class researching conceptual data visualization with a fabrication focus.

Solidarity Infrastructures | School for Poetic Computation

I’ve been accepted to a course (on scholarship, big thank you to equity pricing) with SFPC, starting next week! We’ll be learning about “slow web, organic Internet, right-to-repair, data sovereignty, minimal computing and anti-computing”. Very excited!

Day job updates / InterAccess →

Media Arts Prize Programming | InterAccess | Jan 17 – Feb 17, 2024

↪ Exhibition: once more, once again | Ghislan Sutherland-Timm | January 17 – February 17, 2024

↪ Exhibition: Kaboos: An exhibition of nightmares | Mehrnaz Abdoos | January 17 – February 17, 2024

↪ Workshop: DIY Vacuum Formed Lamps | Audrey Ammann | January 30, 2024, 6 – 9PM

↪ Artist Talk: Farming the Future | Murley Herrle-Fanning | February 3, 2024, 2 – 3:30PM

Text

Essential Python Tools for Modern Data Science: A Comprehensive Overview

Python has established itself as a leading language in data science due to its simplicity and the extensive range of libraries and frameworks it offers. Here's a list of commonly used data science tools in Python:

Data Manipulation and Analysis:

pandas: A cornerstone library for data manipulation and analysis.

NumPy: Provides support for working with arrays and matrices, along with a large library of mathematical functions.

SciPy: Used for more advanced mathematical and statistical operations.

Data Visualization:

Matplotlib: A foundational plotting library.

Seaborn: Built on top of Matplotlib, it offers a higher level interface for creating visually pleasing statistical plots.

Plotly: Provides interactive graphing capabilities.

Bokeh: Designed for creating interactive visualizations for use in web browsers.

Machine Learning:

scikit-learn: A versatile library offering simple and efficient tools for data mining and data analysis.

Statsmodels: Used for estimating and testing statistical models.

TensorFlow and Keras: For deep learning and neural networks.

PyTorch: Another powerful library for deep learning.

Natural Language Processing:

NLTK (Natural Language Toolkit): Provides libraries for human language data processing.

spaCy: Industrial-strength natural language processing with pre-trained models for various languages.

Gensim: Used for topic modeling and similarity detection.

Big Data Processing:

PySpark: Python API for Apache Spark, which is a fast, in-memory data processing engine.

Web Scraping:

Beautiful Soup: Used for pulling data out of HTML and XML files.

Scrapy: An open-source and collaborative web crawling framework.

Requests: For making various types of HTTP requests.

Database Integration:

SQLAlchemy: A SQL toolkit and Object-Relational Mapping (ORM) library.

SQLite: A C-language library that offers a serverless, zero-configuration, transactional SQL database engine. Python ships with the built-in sqlite3 module for working with it.

PyMongo: A Python driver for MongoDB.
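To make the "serverless, zero-configuration" description of SQLite concrete, here is a small sketch using Python's built-in sqlite3 module with an in-memory database. The table name, columns, and rows are made up for illustration.

```python
import sqlite3

# ":memory:" creates a temporary database in RAM: no server, no config file.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE jobs (title TEXT, city TEXT)")

# Placeholder rows invented for this example.
conn.executemany(
    "INSERT INTO jobs VALUES (?, ?)",
    [
        ("Python Developer", "Pune"),
        ("Data Analyst", "Delhi"),
        ("Python Developer", "Delhi"),
    ],
)
conn.commit()

# Parameterized query: count matching jobs per city.
rows = conn.execute(
    "SELECT city, COUNT(*) FROM jobs WHERE title = ? GROUP BY city ORDER BY city",
    ("Python Developer",),
).fetchall()
print(rows)
conn.close()
```

The `?` placeholders let sqlite3 handle quoting of the values, which is generally safer than building SQL strings by hand.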

Others:

Jupyter Notebook: An open-source web application that allows for the creation and sharing of documents containing live code, equations, visualizations, and narrative text.

Joblib: For saving and loading Python objects, useful when working with large datasets or models.

The Python ecosystem for data science is vast, and the tools mentioned above are just the tip of the iceberg. Depending on the specific niche or requirement, data scientists might opt for more specialized tools. It's also worth noting that the Python data science community is active and continually innovating, leading to new tools and libraries emerging regularly.

Text

Web Crawling in Python 3

Steps Involved in Web Crawling

To follow this tutorial step by step, you’ll need Python 3 already set up on your local development machine. Set up everything you need beforehand, then come back and continue.

Creating a Basic Web Scraper

Web Scraping is a two-step process:

You send an HTTP request and get the source code of a web page.

You take that source code and extract information from it.

Both steps can be implemented in many ways and in many languages, but we will use Python’s urllib.request module and the bs4 package. urllib.request ships with Python, so only bs4 needs to be installed:

pip install beautifulsoup4

If you want to install BeautifulSoup4 without using pip or you face any issues during installation you can always refer to the official documentation.

Create a new folder 📂: With bs4 ready to use, let’s create a new folder for our lab in any code editor you like (I will be using Microsoft Visual Studio Code).

First, we import the request module from Python’s urllib package (a collection of modules for working with URLs and HTTP). It provides the function we will use to send an HTTP request to the website we want to scrape and get the complete source code of its web page.

import urllib.request as req

Import BeautifulSoup4 package

Next, we bring in the bs4 package that we installed using pip. Think of bs4 as a specialized package for reading HTML or XML data. It has methods that let us extract data from the web page source code we give it, but it doesn’t know what data to look for or where to look.

We will help it to gather information from the webpage and return that info back to us.

import bs4

Provide the URL for webpage

Finally, we provide the crawler with the URL of the web page where we want to start gathering data: https://www.indeed.co.in/python-jobs.

If you paste this URL into your browser, you will reach Indeed’s search results page, showing the most relevant of about 11K jobs that list Python as a required skill.

Next, we will send an HTTP request to this URL.

URL = "https://www.indeed.co.in/python-jobs"

Making an HTTP request

Now let’s request the search results page from Indeed over HTTP(S). You typically make this request with urlopen() from Python’s urllib.request module. The HTTP response we get back is a response object rather than readable text, so we will hand it over to bs4 to extract the source code and work with it. Send the request like this:

response = req.urlopen(URL)
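In practice a request can fail or be rejected, so it may help to wrap the call. The following is only a sketch and not part of the original walkthrough: the `fetch` helper, the User-Agent string, and the 10-second timeout are assumptions chosen for illustration.

```python
import urllib.error
import urllib.request as req


def fetch(url, timeout=10):
    """Return the decoded body of `url`, or None if the request fails."""
    # Some sites reject urllib's default User-Agent; this value is a placeholder.
    request = req.Request(url, headers={"User-Agent": "learning-crawler/0.1"})
    try:
        with req.urlopen(request, timeout=timeout) as response:
            # Use the charset declared by the server, falling back to UTF-8.
            charset = response.headers.get_content_charset() or "utf-8"
            return response.read().decode(charset, errors="replace")
    except urllib.error.URLError as error:  # HTTPError is a subclass of URLError
        print(f"Request failed: {error}")
        return None
```

Calling `fetch(URL)` would then return the page source as a string, or `None` if the site could not be reached or refused the request.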

Extracting the source code

Now let’s extract the source code from the response object. You generally do this by passing the response object to the BeautifulSoup class inside the bs4 package, along with the name of the parser to use. The resulting source code is very large and tedious to read through, so we will want to filter information out of it later on. Hand the response object over to BeautifulSoup by writing the following line:

htmlSourceCode = bs4.BeautifulSoup(response, "html.parser")
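Since the full source is too large to read by eye, the natural next step is to pull out only the parts of interest. The sketch below runs on a small inline HTML string rather than the live page; the tags and the `jobtitle` class are invented for illustration and do not reflect Indeed's real markup.

```python
import bs4

# Made-up HTML standing in for a search results page.
SAMPLE_HTML = """
<html><head><title>Python Jobs</title></head>
<body>
  <a class="jobtitle" href="/job/1">Python Developer</a>
  <a class="jobtitle" href="/job/2">Data Engineer</a>
  <a href="/about">About</a>
</body></html>
"""

# Naming the parser explicitly avoids bs4's "no parser specified" warning.
soup = bs4.BeautifulSoup(SAMPLE_HTML, "html.parser")

# The text of the <title> tag.
page_title = soup.title.string

# Text and link of every <a> tag carrying the (hypothetical) jobtitle class.
jobs = [
    (a.get_text(strip=True), a["href"])
    for a in soup.find_all("a", class_="jobtitle")
]

print(page_title)
print(jobs)
```

On a real page you would first inspect the HTML in your browser's developer tools to find which tags and classes actually hold the data you want.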

Testing the crawler

Now let’s test the code. You can run a Python file with a command like python <filename> in the integrated terminal of VS Code. VS Code also has a graphical play button that runs the file currently open in the editor. Here, run your file with the following command:

python crawler.py

Read Full Article Here - Web Crawling in Python 3
