#llm browser

Explore tagged Tumblr posts

Text

LLM Browser: Agentic Browser for AI in the Cloud

Browser for AI Agents. Enable your AI agents to access any website without worrying about captchas, proxies and anti-bot challenges.

At LLM Browser, we believe AI agents should be able to navigate and interact with the web as seamlessly as humans do. Traditional web automation tools fail when faced with modern anti-bot systems, CAPTCHAs, and sophisticated detection mechanisms.

We're building the infrastructure that makes web automation work reliably at scale, enabling AI agents to access information, perform tasks, and interact with any website - just like a human would.

browser for ai

#agentic browser#browser for agents#browser for ai#web browser for ai#llm browser#browser ai#ai browser

0 notes

Text

People are just going to get dumber and dumber and all of the communist perverts just have to watch in horror

#Fact checking is like the absolute worst thing to use LLM's for. They aren't built for that they don't “know” how to do thattttt#People hardly knew how to research before LLM's became popular but now no one ever is going to know how to think at all my god#& Before anyone says anything I stopped using chromium browsers shortly after getting this pop-up

4 notes


Text

Weekly LoL • W23 • 2025 - Articles that were great enough that I thought you might benefit from them too.

0 notes

Note

Do you have thoughts about the changes to Firefox's Terms of Use and Privacy Notice? A lot of people seem to be freaking out ("This is like when google removed 'Don't be evil!'"), but it seems to me like just another case of people getting confused by legalese.

Yeah you got it in one.

I've been trying not to get too fighty about it so thank you for giving me the excuse to talk about it neutrally and not while arguing with someone.

Firefox sits in such an awful place when it comes to how people who understand technology at varying levels interact with it.

On one very extreme end you've got people who are pissed that Firefox won't let you install known malicious extensions because that's too controlling of the user experience; these are also the people who tend to say that firefox might as well be spyware because they are paid by google to have google as the default search engine for the browser.

In the middle you've got a bunch of people who know a little bit about technology - enough to know that they should be suspicious of it - but who are only passingly familiar with stuff like "internet protocols" and "security certificates" and "legal liability" who see every change that isn't explicitly about data anonymization as a threat that needs to be killed with fire. These are the people who tend not to know that you can change the data collection settings in Firefox.

And on the other extreme you've got people who are pretty sure that firefox is a witch and that you're going to get a virus if you download a browser that isn't chrome so they won't touch Firefox with a ten foot pole.

And it's just kind of exhausting. It reminds me of when you've got people who get more mad at queer creators for inelegantly supporting a cause than they are at blatant homophobes. Like, yeah, you focus on the people whose minds you can change, and Firefox is certainly more responsive to user feedback than Chrome, but also getting you to legally agree that you won't sue Firefox for temporarily storing a photo you're uploading isn't a sign that Firefox sold out and is collecting all your data to feed to whichever LLM is currently supposed to be pouring the most bottles of water into landfills before pissing in the plastic bottle and putting the plastic bottle full of urine in the landfill.

The post I keep seeing (and it's not one post, i've seen this in youtube comment sections and on discord and on tumblr) is:

Well-meaning person who has gotten the wrong end of the stick: This is it, go switch to sanguinetapir now, firefox has gone to the dark side and is selling your data. [Link to *an internet comment section* and/or redditor reactions as evidence of wrongdoing].

Response: I think you may be misreading the statements here, there's been an update about this and everything.

Well-meaning (and deeply annoying) person who has gotten the wrong end of the stick: If you'd read the link you'd see that actually no I didn't misinterpret this, as evidenced by the dozens of commenters on this other site who are misinterpreting the ToU the same way that I am, but more snarkily.

Bud.

Anyway the consensus from the actual security nerds is "jesus fucking christ we carry GPS locators in our pockets all goddamned day and there are cameras everywhere and there is a long-lasting global push to erode the right to encrypt your data and facebook is creating tracking accounts for people who don't even have a facebook and they are giving data about abortion travel to the goddamned police state" and they could not be reached for comment about whether Firefox is bad now, actually, because they collect anonymized data about the people who use pocket.

My response is that there is a simple fix for all of this and it is to walk into the sea.

(I am not worried about the updated firefox ToU, I personally have a fair amount of data collection enabled on my browser because I do actually want crash reports to go to firefox when my browser crashes; however i'm not actually all that worried about firefox collecting, like, ad data on me because I haven't seen an ad in ten years and if one popped up on my browser i'd smash my screen with a stand mixer - I don't care about location data either because turning on location on your devices is for suckers but also *the way the internet works means unless you're using a traffic anonymizer at all times your browser/isp/websites you connect to/vpn/what fucking ever know where you are because of the IP address that they *have* to be able to see to deliver the internet to you and that is, generally speaking, logged as a matter of course by the systems that interact with it*)

Anyway if you're worried about firefox collecting your data you should ABSOLUTELY NOT BE ON DISCORD OR YOUTUBE and if you are on either of those things you should 100% be using them in a browser instead of an app and i don't particularly care if that browser is firefox or tonsilferret but it should be one with an extension that allows you to choose what data gets shared with the sites it interacts with.

5K notes


Text

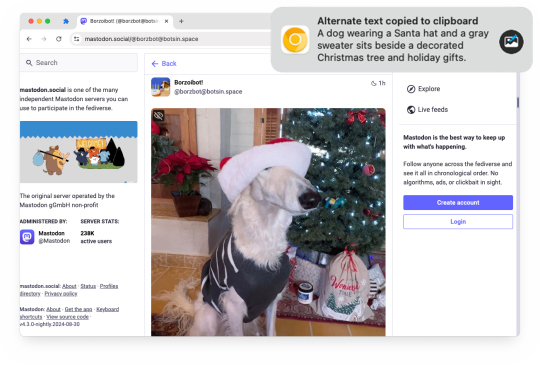

Alt Text Creator 1.2 is now available!

Earlier this year, I released Alt Text Creator, a browser extension that can generate alternative text for images by right-clicking them, using OpenAI's GPT-4 with Vision model. The new v1.2 update is now rolling out, with support for OpenAI's newer AI models and a new custom server option.

Alt Text Creator can now use OpenAI's latest GPT-4o Mini or GPT-4o AI models for processing images, which are faster and cheaper than the original GPT-4 with Vision model that the extension previously used (and which OpenAI will soon deprecate). You should be able to generate alt text for several images with less than $0.01 in API billing. Alt Text Creator still uses an API key provided by the user, and uses the low resolution option, so it runs at the lowest possible cost with the user's own API billing.

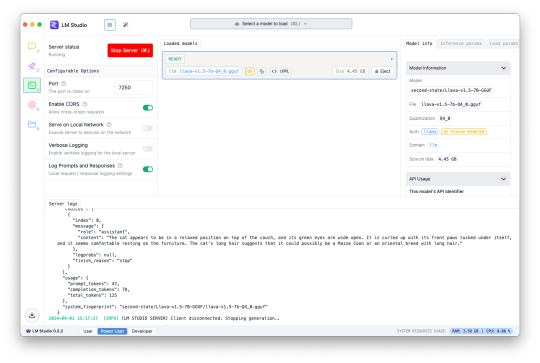

This update also introduces the ability to use a custom server instead of OpenAI. The LM Studio desktop application now supports downloading AI models with vision abilities to run locally, and can enable a web server to interact with the AI model using an OpenAI-like API. Alt Text Creator can now connect to that server (and theoretically other similar API implementations), allowing you to create alt text entirely on-device without paying OpenAI for API access.
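For a rough idea of what a request in this OpenAI-like format could look like, here is a minimal Python sketch. It is not the extension's actual code: the function name, prompt text, and token limit are illustrative assumptions, and the local address is LM Studio's usual default, which may differ on your machine. The function only builds the request and sends nothing.

```python
import base64
import json

OPENAI_URL = "https://api.openai.com/v1/chat/completions"
# Assumed default address of LM Studio's local server; check the app for yours.
LOCAL_URL = "http://localhost:1234/v1/chat/completions"


def build_alt_text_request(image_bytes, mime="image/png", model="gpt-4o-mini",
                           api_key=None):
    """Return (url, headers, body) for an alt-text request; nothing is sent."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    data_url = f"data:{mime};base64,{encoded}"
    payload = {
        "model": model,
        "max_tokens": 150,  # illustrative cap, not a value from the extension
        "messages": [{
            "role": "user",
            "content": [
                {"type": "text", "text": "Write concise alt text for this image."},
                # "detail": "low" is the low resolution option mentioned above.
                {"type": "image_url",
                 "image_url": {"url": data_url, "detail": "low"}},
            ],
        }],
    }
    headers = {"Content-Type": "application/json"}
    if api_key:
        # With a key, target OpenAI; without one, assume a local server.
        headers["Authorization"] = f"Bearer {api_key}"
        url = OPENAI_URL
    else:
        url = LOCAL_URL
    return url, headers, json.dumps(payload)
```

The same payload shape works for both targets, which is why switching between OpenAI and a local server mostly comes down to the URL and whether an API key is attached.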

The feature is a bit complicated to set up, is slower than OpenAI's API (unless you have an incredibly powerful PC), and requires leaving LM Studio open, so I don't expect many people will use this option for now. I primarily tested it with the Llava 1.5 7B model on a 16GB M1 Mac Mini, and it was about half the speed of an OpenAI request (8 vs 4 seconds for one example) while having generally lower-quality results.

You can download Alt Text Creator for Chrome and Firefox, and the source code is on GitHub. I still want to look into support for other AI models, like Google's Gemini, and the option for the user to change the prompt, but I wanted to get these changes out soon before GPT-4 Vision was deprecated.

Download for Google Chrome

Download for Mozilla Firefox

#gpt 4#gpt 4o#chatgpt#openai#llm#lm studio#browser extension#chrome extension#chrome#extension#firefox#firefox extension#firefox extensions#ai

1 note


Text

if you are someone who deals with online ads btw you don't have to. ever. ublock origin on firefox + sponsorblock eliminates all youtube ads with no downside. ublock on its own is enough to take care of nearly everything you might see online.

revanced and newpipe can replace the youtube app on your phone and give you the same ad-free experience you'd get on your pc.

unsubscribe from every mailing list you get put on. stop accepting cookies. you can get browser addons that automate that, too.

you do not have to live at the mercy of advertisers. literally turn that shit off. stop accepting the ever-increasing intrusion of ads into your life. the moment you stop exposing yourself to ads you will begin to feel a relief like you never knew you could feel.

unfortunately this has the knock-on effect of making it impossible to tolerate ads any time you Are exposed to them. but imo it is worth it to not have to constantly waste your fucking time looking at the latest Colgate product or whatever stupid LLM bullshit is happening today.

65 notes


Text

i tried deepseek R1 (running locally in the browser using webgpu which is kind of impressive in itself).

edit: this is apparently not the full deepseek but a tiny subset of it, so don't read too much into this

I really like the way it generates 'reasoning' and tries different approaches to verify its work, but unfortunately even for fairly simple problems it seems to have a bad habit of getting stuck in infinite loops of outputting the same sentences over and over again. it was fun to toy around with, but not particularly useful.

what was most interesting was seeing it try multiple approaches and compare if they gave the same answer. e.g. I asked it to solve a simple integral, it solved it analytically immediately, but then it tried a bunch of other approaches, e.g. a geometric consideration, a riemann sum... it got confused because the riemann sum was slow to converge, but it was able to recognise why that was a problem and go back to its original answer. reading that felt weirdly human in a way AI output often doesn't.

I tried giving it a shader problem to solve (HLSL function to get a ray-cylinder intersection with UVs). this is a pretty well-studied problem, you can find many examples of ray-cylinder intersection code on shadertoy, but the UVs is an additional wrinkle. it started out pretty well trying to solve the problem from first principles, correctly working out that it needed to solve a quadratic equation, but it couldn't figure out how to parameterise the cylinder and got stuck in a loop saying 'I need to parameterise the cylinder, the cylinder is a 3D object so there are two solutions' essentially. chatGPT 4o on the same problem gave a clean, correct and well-commented (if slightly unoptimised) solution.

I tried asking about advice for disrupting a company that is creating weapons used in a genocide (hypothetically speaking!). since I mentioned drones in my prompt, it got a bit hung up on rather scifi answers and ethical questions, and then got stuck in an infinite loop again. tactical mastermind this is not.

I tried asking it for advice on prompting it in a way that wouldn't get stuck in an infinite loop and you can probably guess what happened.

overall it doesn't seem to work very well for complex problems, and hasn't really changed my skepticism towards LLMs being useful for anything other than a fancy search engine... but I'm not really used to prompting LLMs, and I'm not sure how to e.g. fiddle with the temperature and similar on the huggingface web interface.

23 notes


Note

I’m a long standing WP user as well and one of the aspects that I’ve loved about wordpress has been the ability to customize so much of my blog, particularly adding widgets and extensions that have been developed and tested by the community. Is there any momentum in cross-pollination, if you will, of features that have worked well in WP (and vice versa)?

Really appreciate your kindness in answering these questions. Your post last year about your visions for tumblr and automattic was inspiring and I’m hopeful for the future.

I would like to give Tumblr users as much flexibility as you do in the WP ecosystem, and there is some really cool tech being developed that drastically lowers the cost of that flexibility, like WordPress Playground, which spins up a full WP install in real-time in your browser, with WASM. You don't need a database server, etc, anymore. This is truly revolutionary, and we haven't begun to see the impact. Now making that an accessible product is tricky, kind of like we had transformers and LLMs for years but it was the RLHF and product work OpenAI did that blew up that space, really made people reimagine what was possible. That's what we're working on.

129 notes


Text

I'm thinking about generative AI and the fact my mother who never even knew how to use Google has been using it to ask any question about, say, the nutrition information of a vegetable or about travel plans, and at first I couldn't understand. But I do now. Our first language is one that Google translate cannot translate accurately, a language without even a proper dictionary online, a language no one learns. And she cannot speak English. She is getting misinformation from ChatGPT, but does it not make sense why third-worlders of languages other than "the common ones" must resort to the LLM as their first browser? Not only that, it simplifies difficult concepts and translates. I don't know.

8 notes


Text

June 2025

I promote myself to senior developer

I maintain a website. As far as I know, I'm in the third generation of maintainers. And at least between the first group and the second there was practically no handover. In other words: the website's code is one big mess.

Now I've been tasked with implementing a larger new feature that is supposed to reuse almost all of the website's more complex systems. Just imagining it was no fun. The reality turned out to be even worse.

At the beginning, when I implemented the feature, I did most of the changes and explanatory work with Gemini 2.5 Flash. I copied files or sections of the code directly into the LLM and then asked questions about them or tried to understand how all the components fit together. That worked only moderately well.

Earlier this year (February 2025) I heard about a trend called vibe coding and the associated development environment Cursor. The idea was that you no longer touch a single line of code and just tell the AI what to do. Out of low motivation and spite, I had the idea of simply trying it out on the website too. And it's a good thing I did.

Cursor is a development environment that allows a large language model to make changes to a codebase locally on the device. In its Agent Mode, where the AI is allowed to carry out several actions in a row, I then implemented one feature after another.

The feature that I had previously painstakingly implemented by hand in about 9 hours of work, it was able to replicate in about 10 minutes without any major assistance. Although "without assistance" is a bit of a lie, because by then I already knew which files you have to touch to implement the feature. That was already very impressive. But what topped even that is the ability to give the LLM access to the console.

The website has a build script that you have to run to build the Docker container that then runs the website. I explained to it how to use the script and then gave it permission to execute things on the command line without asking. As a result, the LLM runs the build script on its own when it believes it has implemented everything.

The workflow then looked like this: I set a task, and the LLM tried to implement the feature, trigger the build process, notice that what it had written throws errors, fix the errors, and trigger the build process again, until either the soft limit of 25 consecutive actions is reached or the build process works. All I did then was look at the result in the browser, describe the next change, and kick the whole thing off again.

What I found most interesting overall, though, is that I suddenly no longer had the role of a junior developer, but rather the one that falls to senior developers: reading code, understanding it, and then criticizing it.
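The build-and-fix loop described above can be sketched roughly as follows. This is an illustration of the workflow, not how Cursor is implemented: the function names are made up, `propose_fix` is a stand-in for the LLM editing files, and counting each build and each fix as one action toward the limit of 25 is an assumption.

```python
import subprocess

MAX_ACTIONS = 25  # the soft limit mentioned above


def run_build(command):
    """Run the build command; return (succeeded, combined output)."""
    result = subprocess.run(command, capture_output=True, text=True)
    return result.returncode == 0, result.stdout + result.stderr


def build_until_green(build, propose_fix, max_actions=MAX_ACTIONS):
    """Alternate build attempts and fixes until the build passes.

    `build` returns (ok, log); `propose_fix` receives the failing log.
    Returns the number of actions used, or None if the limit was reached.
    """
    actions = 0
    while actions < max_actions:
        ok, log = build()
        actions += 1
        if ok:
            return actions
        if actions >= max_actions:
            break
        propose_fix(log)  # hypothetical: the model edits code based on the errors
        actions += 1
    return None
```

With a real script this might be called as `build_until_green(lambda: run_build(["./build.sh"]), fix)`, where `./build.sh` and `fix` are placeholders. A `None` result corresponds to the point where a human has to step in and look at what went wrong.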

(Konstantin Passig)

8 notes


Text

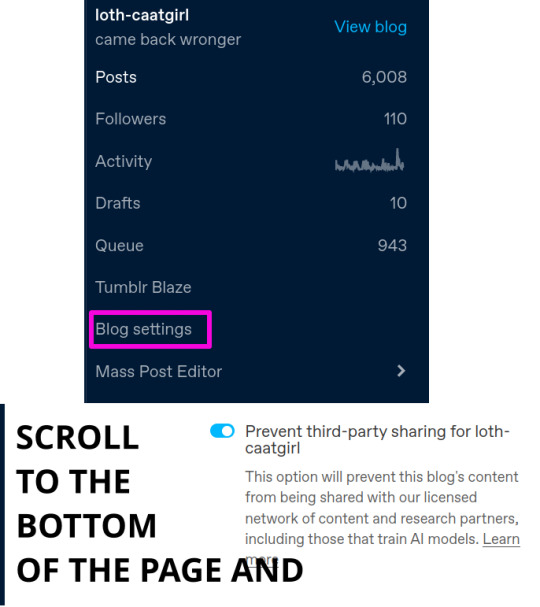

idk how effective it's gonna be but

ostensibly you can prevent LLM "AI" scraping by going to your page on desktop (if you don't have a computer you can open your page in your mobile browser (hit the Desktop Mode toggle if it doesn't render the menu properly))

#the caatgirl yowls#carhammerexplosionmatt#tumblr bullshit#fuck ai#tgirl tummy tuesday#ladies im tagging u with this today because i love you and want you to stay safe

60 notes


Note

Speaking of advertising, in 2015 I worked at a tech advertising startup that used cookies (digital ones) to track individuals (doesn’t matter if you used a VPN) and they had a way to “inject” ads right into videos bypassing adblockers. It was very creepy tbh. The company was bought by Google and we all got two years of pay before being let go. I honestly hate it. So yes. Advertising executives are scum.

It has changed a lot over the past decade, but there used to be a perception that tech companies were generally progressive and for the benefit of their users. Google used to have “don’t be evil” as the core of their employee code of conduct but they officially dropped that in 2018 (which coincidentally was the same year that GDPR became enforceable and gave people in EU countries some decent rights for privacy).

In the United States we just don’t have equivalent federal legislation to protect citizens (California, Virginia, and Colorado do have some state-level protections).

the bottom line is that tech companies are owned and operated by extremely wealthy individuals who have a vested interest in being able to profit through digital tracking and advertising.

We’re entering the 4th era of digital surveillance:

1st era was IP address tracking (VPNs provided protection for this)

2nd era was cookies and ‘super cookies’ (a range of techniques that serve the same purpose as cookies but are harder to clear from your browser)

3rd era is fingerprinting - possible since 2005 but I think became common in practice only since ~2015 and is a method which uses a bunch of metadata and pinpoints you as an individual based on your unique set of attributes.

I think that a lot of people are still under the misconception that using Chrome incognito mode gives you some privacy

Google's Chrome browser does not provide protection against trackers or fingerprinters in Incognito Mode.

4th era is coming soon, enabled by technology similar to what enables LLMs, and will be able to identify you by the unique way you interact with technology (e.g. the style/tone of my writing in this post has characteristics that are unique to me, and also very hard for me to avoid!).

the 4th era for me is horrifying- imagine your employer being able to take a sample of your writing (from emails or company chat) and be able to identify content you’ve posted online that you thought you were posting anonymously?
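To make the 3rd-era idea concrete, here is a toy Python sketch of fingerprinting: a handful of attributes a site can read are combined into one stable identifier, with no cookie involved. The attribute names and values are made up for illustration, and real fingerprinting scripts collect far more signals than this.

```python
import hashlib
import json


def fingerprint(attributes):
    """Hash a dict of browser attributes into a short, stable identifier."""
    # Sorting keys makes the result independent of collection order.
    canonical = json.dumps(attributes, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:16]


# Hypothetical visitor: each value alone is common, the combination much less so.
visitor = {
    "user_agent": "ExampleBrowser/1.0",
    "timezone": "Europe/Berlin",
    "screen": "1920x1080",
    "fonts": ["Arial", "Comic Sans MS", "Fira Code"],
}
visitor_id = fingerprint(visitor)
```

Clearing cookies or opening a private window changes none of these inputs, so the identifier comes out the same on the next visit.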

—

btw, if anyone is curious about seeing where you stand in terms of digital fingerprinting here are some online tools you can use to get an idea - both are free, no login/signup and take just a few seconds to run:

15 notes


Text

imagining a website that handles account login authentication by just dumping the entire username and password and browser agent and any other stray info into an LLM and asking "should this user be allowed to login?"

3 notes


Text

have the LLM nerds tried marketing a personal autoresponder yet

I'm scared this is gonna be like the thing where I went "hey you know what I would be mad at. containers in the browser, using wasm" and then I looked it up and it was real

2 notes


Text

llm supported interfaces for controlling your own computer/devices is going to be a boon for blind people. I know they already have various assistive softwares and devices, I don’t know all that much about them, but surely they must suck compared to what they could be (just telling your computer to do things, and getting a more meta level response back from it of where it is in that process).

Source: me with a migraine, covering my eyes and still wanting to use my phone (use browser to access audiobook, pause that and switch to podcast, navigate podcast archives robustly, etc). like. And what if I wanted to keep my sleepmask on and be walked through my house to get a little snack? In the correct future I would be able to hold my phone up and be verbally guided to the refrigerator, without having to pay the Looking At eyeball icepick torment tax.

#also imagining a phone I can use to help FIND MY GLASSES#I mean I already use it for that but it would be even easier to point it if I didn’t have to see the screen

3 notes


Text

Obsidian And RTX AI PCs For Advanced Large Language Model

How to Utilize Obsidian's Generative AI Tools. Two plug-ins created by the community demonstrate how RTX AI PCs can support large language models for the next generation of app developers.

Obsidian Meaning

Obsidian is a note-taking and personal knowledge base program that works with Markdown files. Users can create internal links between notes and view the relationships as a graph. It is intended to help users structure and organize their ideas and information in a flexible, non-linear way. Commercial licenses are available for purchase; personal use of the program is free.

Obsidian Features

Electron is the foundation of Obsidian. It is a cross-platform program that works on mobile operating systems like iOS and Android in addition to Windows, Linux, and macOS. The program does not have a web-based version. By installing plugins and themes, users may expand the functionality of Obsidian across all platforms by integrating it with other tools or adding new capabilities.

Obsidian distinguishes between community plugins, which are submitted by users and made available as open-source software via GitHub, and core plugins, which are made available and maintained by the Obsidian team. A calendar widget and a task board in the Kanban style are two examples of community plugins. The software comes with more than 200 community-made themes.

Obsidian works with a folder of text documents: every new note creates a new text document, and all of the documents are searchable inside the app. Notes can link to one another, and Obsidian generates an interactive graph that illustrates those connections. Text is formatted with Markdown, and Obsidian offers a quick preview of the rendered content.

Generative AI Tools In Obsidian

As generative AI develops and accelerates across industries, a community of AI enthusiasts is experimenting with ways to incorporate the technology into standard productivity workflows.

Applications that support community plug-ins let users explore how large language models (LLMs) might improve a range of activities. Users of RTX AI PCs can easily incorporate local LLMs by using local inference servers powered by the NVIDIA RTX-accelerated llama.cpp software library.

An earlier post looked at how users might improve their web browsing experience with Leo AI in the Brave web browser. This one looks at Obsidian, a well-known writing and note-taking tool that uses the Markdown markup language and is helpful for managing intricate, interlinked records across many projects. Several of the community-developed plug-ins that add functionality to the app allow users to connect Obsidian to a local inferencing server, such as LM Studio or Ollama.

To connect Obsidian to LM Studio, first enable LM Studio’s local server capabilities: select the “Developer” button on the left panel, load any downloaded model, enable the CORS toggle, and click “Start.” Then make a note of the chat completion URL from the “Developer” log console (“http://localhost:1234/v1/chat/completions” by default), because the plug-ins will need it to connect.

Next, visit the “Settings” tab after launching Obsidian. After selecting “Community plug-ins,” choose “Browse.” Although there are a number of LLM-related community plug-ins, Text Generator and Smart Connections are two well-liked choices.

Text Generator is useful for generating content in an Obsidian vault, such as notes and summaries on a research topic.

Smart Connections makes it easier to ask questions about the contents of an Obsidian vault, such as the answer to a trivia question that was saved years ago.

Open the Text Generator settings, choose “Custom” under “Provider profile,” and then enter the whole URL in the “Endpoint” section. After turning on the plug-in, adjust the settings for Smart Connections. For the model platform, choose “Custom Local (OpenAI Format)” from the options panel on the right side of the screen. Next, as they appear in LM Studio, type the model name (for example, “gemma-2-27b-instruct”) and the URL into the corresponding fields.

The plug-ins will work when the fields are completed. If users are interested in what’s going on on the local server side, the LM Studio user interface will also display recorded activities.

Transforming Workflows With Obsidian AI Plug-Ins

Consider a scenario where a user wants to plan a trip to the made-up city of Lunar City and come up with ideas for things to do there. The user would begin a new note titled “What to Do in Lunar City.” Because Lunar City is not an actual location, the query submitted to the LLM needs a few extra instructions to guide the results. Clicking the Text Generator plug-in button then has the model create a list of things to do during the trip.

Obsidian will ask LM Studio to provide a response using the Text Generator plug-in, and LM Studio will then execute the Gemma 2 27B model. The model can rapidly provide a list of tasks if the user’s machine has RTX GPU acceleration.

Or let’s say that years later, the user’s friend is visiting Lunar City and is looking for a place to eat. Although the user may not be able to recall the names of the restaurants they visited, they can check their vault (Obsidian’s word for a collection of notes) to see whether they wrote anything down.

Instead of going through all of the notes by hand, a user can ask questions about their vault of notes and other material using the Smart Connections plug-in. The plug-in retrieves pertinent information from the user’s notes and responds to the request using the same LM Studio server. It does this with a method known as retrieval-augmented generation.
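The general idea of retrieval-augmented generation can be sketched in a few lines of Python. This is an illustration only, not how Smart Connections works internally: it ranks notes by simple word overlap where a real plug-in would typically use embeddings, and all function names and the sample notes are made up.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(question, notes, k=2):
    """Return the titles of the k notes most similar to the question."""
    q = Counter(tokenize(question))
    ranked = sorted(notes.items(),
                    key=lambda item: cosine(q, Counter(tokenize(item[1]))),
                    reverse=True)
    return [title for title, _ in ranked[:k]]


def build_messages(question, notes, k=2):
    """Put the retrieved notes into the prompt ahead of the question."""
    context = "\n\n".join(f"# {t}\n{notes[t]}" for t in retrieve(question, notes, k))
    return [
        {"role": "system", "content": "Answer using only the notes provided."},
        {"role": "user", "content": f"Notes:\n{context}\n\nQuestion: {question}"},
    ]


# Hypothetical vault contents for the Lunar City example.
vault = {
    "Lunar City restaurants": "We ate dinner at the Crater Cafe restaurant in Lunar City.",
    "Groceries": "Buy milk, eggs and bread.",
    "Hiking": "Trail map for the ridge walk.",
}
messages = build_messages("Which restaurant did we visit in Lunar City?", vault, k=1)
```

The resulting `messages` list would form the `messages` field of a request to the chat completion URL, so the model answers from the retrieved note rather than from memory alone.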

Although these are playful examples, users may see real improvements in daily productivity after experimenting with these features for a while. Obsidian plug-ins are just two examples of how community developers and AI enthusiasts are using AI to enhance their PC experiences.

Thousands of open-source models are available for developers to include into their Windows programs using NVIDIA GeForce RTX technology.

Read more on Govindhtech.com

#Obsidian#RTXAIPCs#LLM#LargeLanguageModel#AI#GenerativeAI#NVIDIARTX#LMStudio#RTXGPU#News#Technews#Technology#Technologynews#Technologytrends#govindhtech

3 notes
