#llmtools


Text

Memory in AI Agents: Short-Term, Long-Term, and Episodic

Memory allows AI agents to retain context, learn from experience, and behave coherently across sessions. There are typically three types of memory systems in modern agents:

Short-term memory holds information during a session—like a conversation buffer.

Long-term memory stores facts or learned patterns across many sessions.

Episodic memory records structured experiences for later retrieval, similar to how humans remember events.
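As a rough illustration of how the three memory types differ in scope, here is a minimal Python sketch. The class and method names (`AgentMemory`, `Episode`, `observe`, and so on) are hypothetical and not taken from any particular agent framework. The buffer size of 4 is an arbitrary choice for the example.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Episode:
    """One structured experience: what happened, what was done, how it went."""
    situation: str
    action: str
    outcome: str


@dataclass
class AgentMemory:
    # Short-term: a bounded buffer holding the current session's turns.
    short_term: deque = field(default_factory=lambda: deque(maxlen=4))
    # Long-term: facts that persist across sessions.
    long_term: dict = field(default_factory=dict)
    # Episodic: an append-only log of structured experiences.
    episodes: list = field(default_factory=list)

    def observe(self, turn: str) -> None:
        # When the buffer is full, the oldest turn is dropped automatically.
        self.short_term.append(turn)

    def remember_fact(self, key: str, value: str) -> None:
        self.long_term[key] = value

    def record_episode(self, episode: Episode) -> None:
        self.episodes.append(episode)

    def end_session(self) -> None:
        # Only the short-term buffer is session-scoped.
        self.short_term.clear()
```

In this sketch, ending a session clears the conversation buffer but leaves stored facts and episodes in place, which is the distinction the three definitions above draw.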

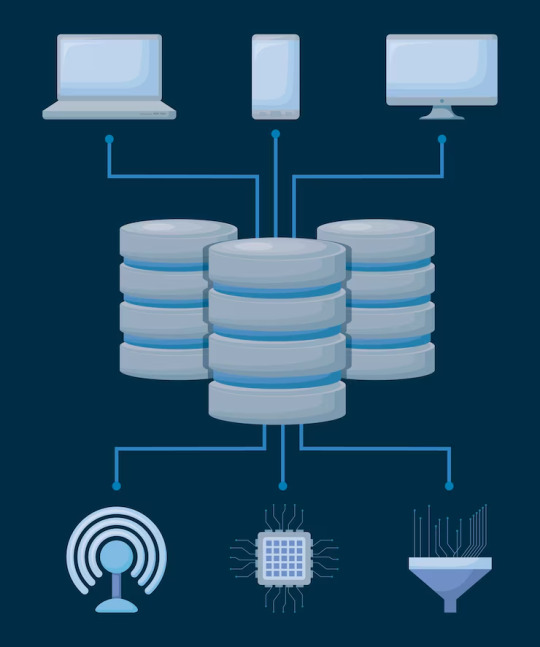

LLM-based agents increasingly rely on memory augmentation strategies—vector databases for recall, memory pruning for relevance, and embedding-based retrieval.

Understanding when and how to use memory modules is crucial for building scalable, context-aware agents. Dive into examples on the AI agents service page.

Memory without context ranking can degrade performance—always include relevance scoring or recency weighting.
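One way to combine relevance scoring with recency weighting is sketched below. It assumes each memory already has an embedding vector (here, tiny hand-written vectors stand in for real embeddings), uses cosine similarity for relevance, and uses an exponential half-life decay for recency. The names `MemoryItem`, `score`, and `retrieve`, along with the default half-life and weight, are illustrative assumptions and not a standard API.

```python
import math
from dataclasses import dataclass
from typing import Sequence


@dataclass
class MemoryItem:
    text: str
    embedding: Sequence[float]  # assumed to come from some embedding model
    age_hours: float            # time since the memory was stored


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def score(item: MemoryItem, query: Sequence[float],
          half_life_hours: float = 24.0, recency_weight: float = 0.3) -> float:
    """Blend relevance with a recency term that halves every half_life_hours."""
    relevance = cosine(item.embedding, query)
    recency = 0.5 ** (item.age_hours / half_life_hours)
    return (1 - recency_weight) * relevance + recency_weight * recency


def retrieve(items, query, k=2, **kwargs):
    """Return the k highest-scoring memories for the query embedding."""
    ranked = sorted(items, key=lambda it: score(it, query, **kwargs), reverse=True)
    return ranked[:k]
```

With these defaults, a memory that is both relevant and recent outranks one that is relevant but ten days old, and both outrank a fresh but unrelated memory. The weight and half-life would need tuning for a real agent.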


Text

instagram

💁🏻♀️If you’re still juggling tools, context windows, and messy pull requests…

Let me put you on something better 👇

✅ Plug-and-play with your stack Qodo lives inside your IDE + Git. No switching tabs, no workflow chaos. Just clean, fast integration.

🧠 Quality-first AI This isn’t just autocomplete. Qodo understands architecture, catches edge cases, and writes code you’d actually review and approve.

🛠️ Built-in smart code reviews Find issues before they hit your PR. It’s like having a senior dev on standby.

👥 Team-friendly from day one Stay consistent across large teams and complex repos — Qodo Merge makes collaboration frictionless.

✨ Been using it for a year. Not looking back.

🤩If you’re a dev, you owe it to yourself to try Qodo Merge.

✨Qodo’s RAG system brings real-time, scalable context to your engineering workflow. From monorepos to microservices, get the right code insights, right when you need them. Less guesswork. More clarity. Better code. 🚀

#qodoai#aifordevelopers#aiworkflow#devtools#codereview#llmtools#genai#developerlife#cleancode#buildsmarter#aiassistant#techstack#programmer#freetools#tools#programming#github#git#extension#datascience#java#flutter#python#Instagram


Text

Meet Your New AI Muse: How LLM Tools Can Spark Inspiration

Large Language Models (LLMs) have evolved into effective tools that can produce human-quality prose, translate languages, and compose original material. However, they are only as good as the data they are trained on. Two key threats can seriously undermine the trustworthiness of artificial intelligence (AI) systems: data starvation and data poisoning.


The Case of Data Starvation: A Situation of Feast or Famine

Consider an LLM trained on a small dataset consisting only of children’s novels. It would be quite good at writing fanciful tales, but it would struggle with complicated subjects or precise facts. This is data starvation in a nutshell: an LLM given too little data, or data that lacks variety, will be limited in its capabilities.

A lack of data has several consequences:

Limited Understanding: LLM tools confined to a narrow data diet have difficulty with subtle topics and may misread sophisticated queries.

Biased Outputs: If the training data leans towards a specific viewpoint, the LLM tool will reflect that bias in its replies, which may lead to discriminatory or offensive outputs.

Factual Inaccuracy: Because such LLMs have not seen a broad range of material, they are more likely to produce content that is factually wrong or misleading.

An Unsavoury Turn of Events: Data Poisoning

Data poisoning occurs when malicious actors intentionally insert biased or inaccurate data into the training dataset. Manipulating an LLM’s outputs to serve a particular agenda in this way can have devastating effects.

Data poisoning poses several significant dangers:

Spreading Misinformation: A poisoned LLM tool can become a potent instrument for spreading false information, eroding trust in sources that are considered reputable.

Amplification of Bias: Poisoning can intensify biases already present in the training data, resulting in discriminatory outputs and the perpetuation of socioeconomic disparities.

Security Vulnerabilities: Poisoning an LLM that is used in security applications can introduce vulnerabilities that attackers may exploit.

Creating Artificial Intelligence That Can Be Trusted: Reducing the Risks

Organisations can protect themselves against data poisoning and data starvation with a multi-pronged strategy.

Data Diversity is Key: LLMs need large volumes of high-quality data from a wide variety of sources to build broad knowledge and minimise bias. This includes data that challenges preexisting ideas and reflects the complexity of the real world.

Continuous Monitoring and Cleaning: Training data should be monitored regularly for mistakes, biases, and malicious insertions. Techniques such as anomaly detection, combined with human review, can identify unusual data points so that they can be removed.

Transparency in Training and Deployment: Organisations should be transparent about the data used to train LLMs and about the steps taken to ensure its quality. This openness builds confidence in AI solutions and makes open criticism and improvement possible.
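The monitoring step above can be sketched with a very simple anomaly check. The example below is an illustration under stated assumptions, not a production data-cleaning pipeline: it flags records whose word count is a strong outlier (using a modified z-score based on the median absolute deviation) and records that repeat suspiciously often, then leaves the final decision to a human reviewer. The function name `flag_suspicious` and both thresholds are hypothetical choices.

```python
from collections import Counter
from statistics import median


def flag_suspicious(records, z_threshold=3.5, max_repeats=3):
    """Return (index, reasons) pairs for records worth a human look."""
    lengths = [len(r.split()) for r in records]
    med = median(lengths)
    # Median absolute deviation: a spread measure that outliers barely affect.
    mad = median(abs(n - med) for n in lengths)
    counts = Counter(r.strip().lower() for r in records)

    flagged = []
    for i, (rec, n) in enumerate(zip(records, lengths)):
        reasons = []
        # Modified z-score; skipped when MAD is zero (all lengths near-identical).
        if mad > 0 and 0.6745 * abs(n - med) / mad > z_threshold:
            reasons.append("length outlier")
        # Many identical copies can indicate injected content.
        if counts[rec.strip().lower()] > max_repeats:
            reasons.append("repeated record")
        if reasons:
            flagged.append((i, reasons))
    return flagged
```

Length and repetition are crude signals, and a real poisoning attack may evade both. The sketch only shows the general shape: compute cheap statistics, flag what looks unusual, and route the flagged records to human review.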

The Trust Factor: The Influence on the Adoption of Artificial Intelligence

Data starvation and data poisoning directly affect the reliability of AI solutions. Inaccurate, biased, or easily manipulated results undermine user trust and slow the wider adoption of artificial intelligence. Users become reluctant to interact with AI-powered services when they cannot rely on the information that Large Language Models (LLMs) provide.

Organisations can support the responsible development and deployment of LLMs by taking active measures to mitigate these risks. Trustworthy AI solutions built on diverse, high-quality data will ultimately lead to a future in which people and machines work together effectively for the benefit of society.

FAQs

What are Large Language Models (LLMs)?

LLMs are powerful AI systems trained on large-scale text data. They can produce human-quality prose, translate between languages, create a variety of imaginative material, and give informative answers to your questions.

What is data starvation?

Consider an LLM that has only read children’s literature. It would be great at making up tales, but it would struggle with difficult ideas or facts. This is data starvation. An LLM will be limited if its data is insufficient or not diverse enough.

What is data poisoning?

Data poisoning is the act of introducing tainted data into an LLM’s training set. This data may be skewed or inaccurate. It would be like feeding a finicky eater nothing but chocolates: they would get ill!

Read more on Govindhtech.com

#LLM#llmtools#llmtool#govindhtech#generativeai#AISolutions#artificialintelligence#news#technology#technews#technologynews#technologytrends


Text

youtube

JOIN US! TONIGHT 9PM ET Sept 3, 2023 - Desk of Ladyada - ChatGPT-assisted driver programming & I2C Infrared Proximity Sensors 🔧🤖🔍

This week at the Desk of Ladyada, we've been running a "try to use ChatGPT every day" experiment to see how LLM tools can be used for engineering. We had fun using it to write Arduino drivers for chips by uploading the chip datasheet for analysis and guiding the LLM through writing an 'adafruit'-style driver. After all, it's been trained on hundreds of ladyada-written libraries on GitHub: https://github.com/orgs/adafruit/repositories

Here's the ChatGPT log for a video we published last night https://chat.openai.com/c/f740eb57-17a6-41e3-ae0a-12da959a1f4c - and here's a previous one that is more 'complete' https://chat.openai.com/share/f44dc335-7555-4758-b2f9-487f9409d556. ChatGPT takes about as long to write a driver as it would take to write one manually, and you definitely need to be eagle-eyed to redirect the AI if it starts making mistakes... but we think that with some prompt hacking, we can speed things up by front-loading some of our requirements. It's still very early in our experiments, and many things are hard for GPT-4 to do, so you just have to try them!

The Great Search - I2C Infrared Proximity Sensor

The driver that we used ChatGPT 4 to write is for the VCNL4020 https://www.digikey.com/short/dpz897jj, an 'all in one' IR proximity sensor. These sensors work by bouncing bright IR light off a surface and measuring how much returns. The IR light is modulated, which makes it relatively resistant to ambient light interference. One of the first sensors we ever used was the Sharp GP2Y https://www.adafruit.com/product/164. It was famous for its ease of use in the days before cheap microcontrollers: provide 5V power, and it emits an analog signal that roughly maps to distance. While you can still get analog distance sensors https://www.digikey.com/short/d21wnm5c, I2C interfacing lets you get data quickly and adjust for lower power usage. Let's look at some digital IR proximity sensors and other distance sensors (and the differences between them!)
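To give a feel for what an I2C proximity read involves, here is a rough Python sketch. It is not the driver from the video. The register addresses and command bits are assumptions recalled from the VCNL4010/VCNL4020 family register map and must be checked against the datasheet before use. `FakeBus` is a hypothetical stand-in so the example runs without hardware; its method names mirror the common SMBus-style `read_byte_data` / `write_byte_data` interface.

```python
# All constants below are ASSUMPTIONS to verify against the datasheet.
I2C_ADDRESS = 0x13         # assumed 7-bit device address
REG_COMMAND = 0x80         # assumed command register
REG_PROX_HIGH = 0x87       # assumed proximity result, high byte
REG_PROX_LOW = 0x88        # assumed proximity result, low byte
CMD_PROX_ON_DEMAND = 0x08  # assumed bit: start a single proximity measurement
CMD_PROX_READY = 0x20      # assumed bit: proximity data is ready


class FakeBus:
    """Hypothetical in-memory bus that imitates the sensor's registers."""

    def __init__(self, registers):
        self.registers = dict(registers)

    def read_byte_data(self, address, register):
        return self.registers.get(register, 0)

    def write_byte_data(self, address, register, value):
        # Pretend the measurement completes instantly once requested.
        if register == REG_COMMAND and value & CMD_PROX_ON_DEMAND:
            value |= CMD_PROX_READY
        self.registers[register] = value


def read_proximity(bus, max_polls=100):
    """Request one measurement, wait for the ready bit, return a 16-bit count."""
    bus.write_byte_data(I2C_ADDRESS, REG_COMMAND, CMD_PROX_ON_DEMAND)
    for _ in range(max_polls):
        if bus.read_byte_data(I2C_ADDRESS, REG_COMMAND) & CMD_PROX_READY:
            break
    else:
        raise TimeoutError("proximity measurement did not complete")
    high = bus.read_byte_data(I2C_ADDRESS, REG_PROX_HIGH)
    low = bus.read_byte_data(I2C_ADDRESS, REG_PROX_LOW)
    # A larger count means more reflected IR, i.e. a closer or brighter surface.
    return (high << 8) | low
```

The returned value is a raw reflectance count, not a distance. Turning it into a distance needs calibration against the target surface.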

#adafruit#openai#chatgpt#digikey#deskofladyada#driverprogramming#i2cinfrared#proximitysensors#llmtools#arduino#githublibraries#aiassisted#gpt4challenges#digitalir#analogdistance#Youtube
