#mark I perceptron

Text

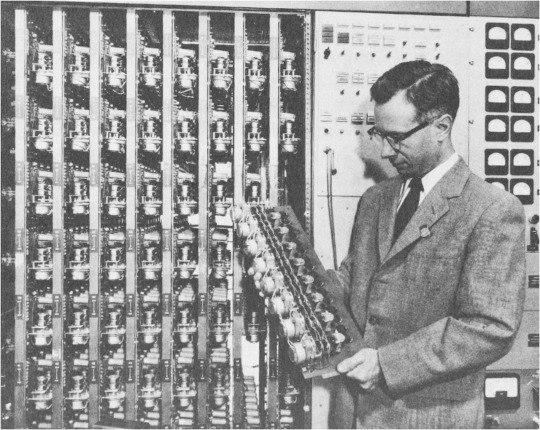

Mark I Perceptron, 1958

Frank Rosenblatt, Cornell Aeronautical Laboratory

"The Mark I Perceptron was a pioneering supervised image classification learning system developed by Frank Rosenblatt in 1958. It was the first implementation of an Artificial Intelligence (AI) machine. It differs from the Perceptron which is a software architecture proposed in 1943 by Warren McCulloch and Walter Pitts, which was also employed in Mark I, and enhancements of which have continued to be an integral part of cutting edge AI technologies."

Wikipedia

#1950s#50s#50s tech#artificial intelligence#mark I perceptron#ai#a.i.#1958#fifties#state of technology#50s computers#frank rosenblatt#cornell aeronautical university#wikipedia

59 notes

Text

Frank Rosenblatt, often cited as the Father of Machine Learning, photographed in 1960 alongside his most notable invention: the Mark I Perceptron machine, a hardware implementation of the perceptron algorithm. The perceptron is one of the earliest artificial neural networks and builds on the artificial neuron model that Warren McCulloch and Walter Pitts proposed in 1943.

#frank rosenblatt#tech history#machine learning#neural network#artificial intelligence#AI#perceptron#60s#black and white#monochrome#technology#u

820 notes

Photo

Massimo on X: "In this picture from circa 1960, Frank Rosenblatt works on the Mark I Perceptron machine – what he described as the first machine “capable of having an original idea” [full story: https://t.co/SCSK9sGPKg] https://t.co/zQfIFD72AN" / Twitter

0 notes

Photo

Frank Rosenblatt with a Mark I Perceptron computer in 1960

1 note

Text

This Mark I Perceptron could learn to recognize simple patterns, a definite breakthrough for its time. It was also characterized by two other features that have been pretty continuous over the history of AI: first, that this breakthrough was over-hyped and subsequent developments were disappointing, and second, that AI research was funded by the military for its own purposes. Dan McQuillan. 2022. Resisting AI: An Anti-Fascist Approach to Artificial Intelligence. Bristol: Bristol University Press.

0 notes

Link

Mass Effect: Andromeda’s soundtrack marks the first time the company has brought in local musical talent to make some tunes. In the past, BioWare outsourced a lot of its music production to licensed vendors.

“The results from that, I think, have been pretty mixed. They’ve been maybe a little bit lower-quality, definitely not custom made,” said Jeremie Voillot, BioWare’s director of audio, adding it was also a chance to showcase some Edmonton-made music.

John Paesano, who did the scores for Netflix’s Daredevil series and the Maze Runner films, created the main score for the game, which covers in-game combat, cinematics, etc. But the local electronic music makers got a chance to score game scenes taking place in night clubs or other public in-game venues.

“We call this music the ‘diegetic’ music. That’s the music that’s in the world and not, you know, breaking the fourth wall, so to speak,” Voillot said.

The game takes players to different planets and, as such, the artists took different approaches to the music, while still working under the electronic music umbrella.

“Within that genre, there’s definitely some diversity. Some of it’s aggressive drum and bass, some of it’s tropical house, and we matched that with the esthetic of the world and the location we’re actually going to implement it in the game,” he said.

BioWare approached the DJs around the beginning of 2016 and, of the acts approached, Better Living DJs, Comaduster, Deep Six, Dr. Perceptron, KØBA, Nada Deva and Senseless Live all made it onto the soundtrack. Voillot has been DJ’ing in Alberta and British Columbia for more than 20 years, and used his contacts in the industry to scout potential talent.

The musicians were brought into BioWare and shown clips of gameplay and concept art to help get the juices flowing.

Kurtis Schultz of Better Living DJs played the first Mass Effect, but admits he’s fallen off playing video games in the past five years, focusing instead on making music.

Schultz and his DJ-partner Keith Walton finished their tracks around two months ago. Walton recalls this was done on a beach in Mexico, which they were visiting to meet with some friends and also to play a few gigs at BPM Festival.

The Edmonton-based duo are excited that they got the chance to have their music appear in the game.

“I think it’s only a positive thing. Kurtis and I have been at this for about five years, and, you know, it’s been a grind for sure … Anything like this is a huge opportunity. There’s the DJ side of it and producing music to play out, but then producing music for people to sit in their place and listen to and play in a game or a movie, I think it’s really going to push us forward,” Walton said.

“I think it gave us the ability to look a bit more big picture,” Schultz added.

John Paesano: Twitter | IMDB | Wiki

It’s nice that they’re supporting and showcasing local talent in other aspects of the game’s music. I’m sure we can hear some of those tracks in the Vortex Lounge.

128 notes

Text

An Old Technique Could Put Artificial Intelligence in Your Hearing Aid

Dag Spicer is expecting a special package soon, but it’s not a Black Friday impulse buy. The fist-sized motor, greened by corrosion, is from a historic room-sized computer intended to ape the human brain. It may also point toward artificial intelligence's future.

Spicer is senior curator at the Computer History Museum in Mountain View, California. The motor in the mail is from the Mark 1 Perceptron, built by Cornell researcher Frank Rosenblatt in 1958. Rosenblatt's machine learned to distinguish shapes such as triangles and squares seen through its camera. When shown examples of different shapes, it built “knowledge” using its 512 motors to turn knobs and tune its connections. "It was a major milestone," says Spicer.
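The article does not spell out how the machine adjusted its connections. As a rough software illustration only, the classic perceptron learning rule can be sketched as below. The toy 2x2 "images", the function names `train_perceptron` and `predict`, and the learning rate are assumptions made for this example; the sketch does not model the Mark 1's motor-driven knobs.

```python
from typing import List, Sequence, Tuple


def predict(weights: Sequence[float], bias: float, x: Sequence[float]) -> int:
    """Return 1 if the weighted sum of the inputs exceeds zero, else 0."""
    activation = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(
    samples: Sequence[Sequence[float]],
    labels: Sequence[int],
    epochs: int = 20,
    learning_rate: float = 1.0,
) -> Tuple[List[float], float]:
    """Classic perceptron rule: nudge the weights only when a sample is misclassified."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, target in zip(samples, labels):
            error = target - predict(weights, bias, x)
            if error != 0:
                # Move each connection strength toward the correct answer.
                weights = [w + learning_rate * error * xi for w, xi in zip(weights, x)]
                bias += learning_rate * error
                mistakes += 1
        if mistakes == 0:  # every training sample is classified correctly
            break
    return weights, bias


# Hypothetical 2x2 "images" flattened to four pixels:
# label 1 = left column lit, label 0 = right column lit.
samples = [[1, 0, 1, 0], [0, 1, 0, 1], [1, 0, 0, 0], [0, 1, 0, 0]]
labels = [1, 0, 1, 0]

weights, bias = train_perceptron(samples, labels)
predictions = [predict(weights, bias, x) for x in samples]
print(weights, bias, predictions)
```

In this sketch the list `weights` plays the role that the article assigns to the knobs: each misclassified example shifts the connection strengths a little.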

Computers today don’t log their experiences—or ours—using analog parts like the Perceptron’s self-turning knobs. They store and crunch data digitally, using the 1s and 0s of binary numbers. But 11 miles away from the Computer History Museum, a Redwood City, California, startup called Mythic is trying to revive analog computing for artificial intelligence. CEO and cofounder Mike Henry says it’s necessary if we’re to get the full benefits of artificial intelligence in compact devices like phones, cameras, and hearing aids.

Mythic's analog chips are designed to run artificial neural networks in small devices.

Mythic

Mythic uses analog chips to run artificial neural networks, or deep-learning software, which drive the recent excitement about AI. The technique requires large volumes of mathematical and memory operations that are taxing for computers—and particularly challenging for small devices with limited chips and battery power. It’s why the most powerful AI systems reside on beefy cloud servers. That’s limiting, because some places AI could be useful have privacy, time, or energy constraints that mean handing off data to a distant computer is impractical.

You might say Mythic’s project is an exercise in time travel. “By the time I went to college analog computers were gone,” says Eli Yablonovitch, a professor at University of California Berkeley who got his first degree in 1967. “This brings back something that had been soundly rejected.” Analog circuits have long been relegated to certain niches, such as radio signal processing.

Henry says internal tests indicate Mythic chips make it possible to run more powerful neural networks in a compact device than a conventional smartphone chip. "This can help deploy deep learning to billions of devices like robots, cars, drones, and phones," he says.

Henry likes to show the difference his chips could make with a demo in which simulations of his chip and a smartphone chip marketed as tuned for AI run software that spots pedestrians in video from a camera mounted on a car. The chips Mythic has made so far are too small to run a full video processing system. In the demo, Mythic’s chip can spot people from a greater distance, because it doesn’t have to scale down the video to process it. The suggestion is clear: you’ll be more comfortable sharing streets with autonomous vehicles that boast analog inside.

Digital computers work by crunching binary numbers through clockwork-like sequences of arithmetic. Analog computers operate more like a plumbing system, with electrical current in place of water. Electrons flow through a maze of components like amplifiers and resistors that do the work of mathematical operations by changing the current or combining it with others. Measuring the current that emerges from the pipeline reveals the answer.
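One way to picture the plumbing analogy is a weighted sum computed by circuit laws. In the sketch below, each input is a voltage, each weight is a resistor's conductance, Ohm's law gives the current in each branch, and the currents add where the branches join (Kirchhoff's current law). The function names and component values are illustrative assumptions, not details of any real chip.

```python
from typing import List, Sequence


def branch_currents(voltages: Sequence[float], conductances: Sequence[float]) -> List[float]:
    """Ohm's law per branch: current = conductance * voltage."""
    return [g * v for g, v in zip(conductances, voltages)]


def summed_current(voltages: Sequence[float], conductances: Sequence[float]) -> float:
    """Kirchhoff's current law: currents meeting at one node add up."""
    return sum(branch_currents(voltages, conductances))


# Hypothetical values: inputs encoded as volts, weights encoded as siemens.
voltages = [1.0, 0.5, 0.25]
conductances = [2.0, 4.0, 8.0]

currents = branch_currents(voltages, conductances)
total = summed_current(voltages, conductances)
print(currents, total)
```

Measuring `total` corresponds to reading the answer off the end of the pipeline: the multiply-and-add happens in the physics rather than in a sequence of digital arithmetic steps.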

That approach burns less energy than an equivalent digital device on some tasks because it requires fewer circuits. A Mythic chip can also do all the work of running a neural network without having to tap a device's memory, which can interfere with other functions. The analog approach isn't great for everything, not least because it's more difficult to control noise, which can affect the precision of numbers. But that's not a problem for running neural networks, which are prized for their ability to make sense of noisy data like images or sound. "Analog math is great for neural networks, but I wouldn't balance my check book with it," Henry says.
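The trade-off between noise and precision can be illustrated with a small simulation. The sketch below perturbs each stored weight by bounded random noise and compares the exact and noisy weighted sums. The noise model (uniform, bounded by `max_error`), the weights, and the function names are assumptions chosen for illustration, not measurements of analog hardware.

```python
import random
from typing import Sequence


def exact_dot(weights: Sequence[float], inputs: Sequence[float]) -> float:
    """Weighted sum computed without any noise."""
    return sum(w * x for w, x in zip(weights, inputs))


def noisy_dot(
    weights: Sequence[float],
    inputs: Sequence[float],
    max_error: float,
    rng: random.Random,
) -> float:
    """Weighted sum where every weight is off by at most max_error."""
    return sum(
        (w + rng.uniform(-max_error, max_error)) * x
        for w, x in zip(weights, inputs)
    )


def fires(activation: float) -> bool:
    """A neuron-style yes/no decision based on the sign of the sum."""
    return activation > 0.0


weights = [0.8, -0.5, 0.3]
inputs = [1.0, 1.0, 1.0]
max_error = 0.05
rng = random.Random(0)

exact = exact_dot(weights, inputs)
noisy = noisy_dot(weights, inputs, max_error, rng)
# The noisy sum can differ from the exact one by at most this much.
worst_case = max_error * sum(abs(x) for x in inputs)
print(exact, noisy, fires(exact), fires(noisy))
```

The noisy sum is not something to balance a checkbook with, but as long as the exact sum sits further from zero than `worst_case`, the yes/no decision comes out the same.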

If analog comes back, it won't be the first aspect of the Mark 1 Perceptron to get a second life. The machine was one of the earliest examples of a neural network, but the idea was mostly out of favor until the current AI boom started in 2012.

Objects identified in video by a simulation of a conventional smartphone chip tuned for artificial intelligence.

Mythic

A simulation of Mythic's chip can identify more objects from a greater distance because it doesn't have to scale down the video to process it.

Mythic

Mythic's analog plumbing is more compact than the Perceptron Mark 1's motorized knobs. The company's chips are repurposed flash memory chips like those inside a thumb drive—a hack that turns digital storage into an analog computer.

The hack involves writing out the web of a neural network for a task such as processing video onto the memory chip's transistors. Data is passed through the network by flowing analog signals around the chip. Those signals are converted back into digital to complete the processing and allow the chip to work inside a conventional digital device. Mythic has a partnership with Fujitsu, which makes flash memory, and aims to get final chip designs to customers for testing next year. The company will initially target the camera market, where applications include consumer gadgets, cars, and surveillance systems.

Mythic hopes its raise-the-dead strategy will keep it alive in a crowded field of companies working on custom silicon for neural networks. Apple and Google have added custom silicon to power neural networks into their latest smartphones.

Yablonovitch of Berkeley guesses that Mythic won't be the last company that tries to revive analog. He gave a talk this month highlighting the opportune match between analog computing and some of today's toughest, and most lucrative, computing problems.

“The full potential is even bigger than deep learning,” Yablonovitch says. He says there is evidence analog computers might also help with the notorious traveling-salesman problem, which limits computers planning delivery routes, and in other areas including pharmaceuticals and investing.

Something that hasn’t changed over the decades since analog computers went out of style is engineers’ fondness for dreaming big. Rosenblatt told the New York Times in 1958 that “perceptrons might be fired to the planets as mechanical space explorers.” Henry has extra-terrestrial hopes, too, saying his chips could help satellites understand what they see. He may be on track to finally prove Rosenblatt right.

0 notes

Text

The Perceptron - 61 years ago it was sai

The Perceptron: 61 years ago, the New York Times said that this machine was “the embryo of an electronic computer that will be able to walk, talk, see, write, reproduce itself and be conscious of its existence.” The machine was called the “Mark I Perceptron”. It was designed for image recognition and had a …

0 notes

Text

Data Mining Using SAS Enterprise Miner

A Fresh Take on Conventional Wisdom. Data mining is an analytical tool used to solve critical business decisions by analyzing large amounts of data in order to discover relationships and unknown patterns in the data. SAS Enterprise Miner is designed for SEMMA data mining, and its SEMMA methodology is specifically designed to handle enormous data sets in preparation for subsequent data analysis. Explore: explore the data sets to look for unexpected trends, relationships, patterns, or unusual observations while getting familiar with the data. The graphs are designed to reveal trends, patterns, and extreme values among the variables in the active training data set. In stratified sampling, observations are randomly selected within each non-overlapping group or strata that are d. For categorical-valued targets or stratified interval-valued targets, various numerical target-specified consequences can be predetermined when creating business modeling scenarios that are designed to maximize expected profit or minimize expected loss in the validation data set. The default neural network architecture is the multilayer perceptron (MLP) network with one hidden layer consisting of three hidden units.

The purpose of the Time Series node is to prepare the data for time series modeling by condensing the data into chronological order of equally spaced time intervals. Therefore, the node requires both a target variable and a time identifier variable. The purpose of the Two Stage Model node is to predict an interval-valued target variable based on the estimated probabilities of the target event from the categorical target variable. However, the node can apply a wide variety of modeling techniques to both stages of the two-stage modeling design, such as decision-tree modeling, regression modeling, MLP and RBF neural network modeling, and GLIM modeling. The surrogate input splitting variable is an entirely different input variable that will generate a similar split as the primary input variable. As an example, the number of connections from multiple data sets to every modeling node can be reduced by connecting each Input Data Source node for each respective data set to the Control Point node, which is then connected to each of the modeling nodes. The size of the two-dimensional squared grid is determined by the number of rows and columns. The score code can be used within the SAS editor to generate new prediction estimates by providing a new set of values for the input variables. I hope that after reading this article, Enterprise Miner v3 will become an easy SAS analytical tool for you to use and incorporate into your SAS analysis tools.

In Opportunity, the author covers a variety of phenomena, from what makes a successful professional hockey player to Bill Gates' rise in technology to the perception of Mozart as a musical prodigy. Once again, he uses social psychology to turn conventional wisdom upside down and presents the facts based on his research. www. . . . sasenterpriseminer.
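The stratified sampling idea mentioned in the post (drawing observations at random within each non-overlapping group) can be sketched in a few lines. This is a plain-Python illustration under stated assumptions, not SAS Enterprise Miner code: the function name `stratified_sample`, the `fraction` parameter, and the toy records are all hypothetical.

```python
import random
from collections import defaultdict
from typing import Callable, Hashable, List, Sequence, Tuple

Record = Tuple[str, int]


def stratified_sample(
    records: Sequence[Record],
    key: Callable[[Record], Hashable],
    fraction: float,
    seed: int = 0,
) -> List[Record]:
    """Draw the same fraction of records, at random, from every stratum."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for record in records:
        strata[key(record)].append(record)  # each record lands in exactly one group
    sample: List[Record] = []
    for group in strata.values():
        size = max(1, round(len(group) * fraction))  # keep at least one per stratum
        sample.extend(rng.sample(group, size))
    return sample


# Hypothetical data: eight records in stratum "a" and four in stratum "b".
records = [("a", i) for i in range(8)] + [("b", i) for i in range(4)]
sample = stratified_sample(records, key=lambda r: r[0], fraction=0.5)
print(sample)
```

Sampling within each group keeps the group proportions of the sample close to those of the full data set, which a simple random draw does not guarantee.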

0 notes

Text

Book Review: Outliers By Malcolm Gladwell

An Overview of SAS Enterprise Miner. The following article is in regard to Enterprise Miner v. Data mining is an analytical tool used to solve critical business decisions by analyzing large amounts of data in order to discover relationships and unknown patterns in the data. SAS Enterprise Miner is designed for SEMMA data mining, and its SEMMA methodology is specifically designed to handle enormous data sets in preparation for subsequent data analysis. Explore: explore the data sets to look for unexpected trends, relationships, patterns, or unusual observations while getting familiar with the data. The graphs are designed to reveal trends, patterns, and extreme values among the variables in the active training data set. In stratified sampling, observations are randomly selected within each non-overlapping group or strata that are d. For categorical-valued targets or stratified interval-valued targets, various numerical target-specified consequences can be predetermined when creating business modeling scenarios that are designed to maximize expected profit or minimize expected loss in the validation data set. For categorical variables, the catalog of metadata information contains information such as the class level values, class level frequencies, number of missing values for each class level, its ordering information, and the target profile information. The default neural network architecture is the multilayer perceptron (MLP) network with one hidden layer consisting of three hidden units.

The purpose of the Two Stage Model node is to predict an interval-valued target variable based on the estimated probabilities of the target event from the categorical target variable. However, the node can apply a wide variety of modeling techniques to both stages of the two-stage modeling design, such as decision-tree modeling, regression modeling, MLP and RBF neural network modeling, and GLIM modeling. The node is designed so that you may impute missing values or redefine values according to predetermined intervals or class levels within the active training data set. In addition, the node performs a wide selection of analyses, including regression modeling, logistic regression modeling, multivariate analysis, and principal component analysis. In addition, the node is capable of creating separate cluster groupings. The size of the two-dimensional squared grid is determined by the number of rows and columns. I hope that after reading this article, Enterprise Miner v3 will become an easy SAS analytical tool for you to use and incorporate into your SAS analysis tools.

In Opportunity, the author covers a variety of phenomena, from what makes a successful professional hockey player to Bill Gates' rise in technology to the perception of Mozart as a musical prodigy. Once again, he uses social psychology to turn conventional wisdom upside down and presents the facts based on his research. www. . . . sasenterpriseminer.
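To make the default architecture named in the post concrete (a multilayer perceptron with one hidden layer of three hidden units), here is a minimal forward pass in plain Python. The `tanh` hidden activation, the linear output, the weight values, and the name `mlp_forward` are assumptions made for illustration; this is not SAS Enterprise Miner code.

```python
import math
from typing import List, Sequence, Tuple


def mlp_forward(
    x: Sequence[float],
    hidden_weights: Sequence[Sequence[float]],
    hidden_biases: Sequence[float],
    output_weights: Sequence[float],
    output_bias: float,
) -> Tuple[List[float], float]:
    """One hidden layer: each hidden unit squashes a weighted sum of the inputs."""
    hidden = [
        math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
        for row, b in zip(hidden_weights, hidden_biases)
    ]
    # The output is a linear combination of the hidden unit values.
    output = sum(w * h for w, h in zip(output_weights, hidden)) + output_bias
    return hidden, output


# Hypothetical network: two inputs, three hidden units, one output.
hidden_weights = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.3]]
hidden_biases = [0.0, 0.1, -0.1]
output_weights = [1.0, -1.0, 0.5]
output_bias = 0.25

hidden, output = mlp_forward([1.0, 2.0], hidden_weights, hidden_biases, output_weights, output_bias)
print(hidden, output)
```

With three hidden units the network has only a handful of weights to estimate, which keeps the default model small.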

0 notes

Text

3D Optical Metrology Market 2021 SWOT Analysis, Competitive Landscape and Significant Growth | Top Brands: Capture 3D, Carl Zeiss, Faro Technologies, Gom, Hexagon Metrology, Leica Microsystems, Nikon…

This report studies the 3D Optical Metrology Market with many aspects of the industry such as market size, market status, market trends and forecast. The report also provides brief information on competitors and opportunities for specific growth with the key market drivers. Find the comprehensive analysis of the 3D Optical Metrology market segmented by company, region, type and applications in the report.

The report provides valuable insight into the development of the 3D Optical Metrology market and related methods for the 3D Optical Metrology market with analysis of each region. The report then examines the dominant aspects of the market and examines each segment.

The report provides an accurate and professional study of the global trading scenarios for the 3D Optical Metrology market. The complex analysis of opportunities, growth factors and future forecasts is presented in simple and easy-to-understand formats. The report covers the 3D Optical Metrology market by developing technology dynamics, financial position, growth strategy and product portfolio during the forecast period.

Get FREE Sample copy of this Report with Graphs and Charts at: https://reportsglobe.com/download-sample/?rid=271134

The segmentation chapters enable readers to understand aspects of the market such as its products, available technology and applications. These chapters are written to describe their development over the years and the course they are likely to take in the coming years. The research report also provides detailed information on new trends that may define the development of these segments in the coming years.

3D Optical Metrology Market Segmentation:

3D Optical Metrology Market, By Application (2016-2027)

Application I

Application II

3D Optical Metrology Market, By Product (2016-2027)

3D Automated Optical Inspection System

Optical Digitizer

Scanner

Laser Scanning

Major Players Operating in the 3D Optical Metrology Market:

Capture 3D, Carl Zeiss, Faro Technologies, Gom, Hexagon Metrology, Leica Microsystems, Nikon Metrology, Perceptron, Sensofar USA, SteinbichlerOptotechnik, Zeta Instruments, and Zygo.

Carl Zeiss

Nikon Metrology

Gom

Leica Microsystems

Perceptron

Faro Technologies

Sensofar USA

Hexagon Metrology

SteinbichlerOptotechnik

Company Profiles – This is a very important section of the report that contains accurate and detailed profiles for the major players in the global 3D Optical Metrology market. It provides information on the main business, markets, gross margin, revenue, price, production and other factors that define the market development of the players studied in the 3D Optical Metrology market report.

Global 3D Optical Metrology Market: Regional Segments

A separate section on regional segmentation covers the regional aspects of the worldwide 3D Optical Metrology market. This chapter describes the regulatory structure that is likely to affect the whole market. It highlights the political landscape in the market and predicts its influence on the 3D Optical Metrology market globally.

North America (US, Canada)

Europe (Germany, UK, France, Rest of Europe)

Asia Pacific (China, Japan, India, Rest of Asia Pacific)

Latin America (Brazil, Mexico)

Middle East and Africa

Get up to 50% discount on this report at: https://reportsglobe.com/ask-for-discount/?rid=271134

The Study Objectives are:

To analyze global 3D Optical Metrology status, future forecast, growth opportunity, key market and key players.

To present the 3D Optical Metrology development in North America, Europe, Asia Pacific, Latin America & Middle East and Africa.

To strategically profile the key players and comprehensively analyze their development plan and strategies.

To define, describe and forecast the market by product type, market applications and key regions.

This report includes estimates of market size by value (million USD) and volume (K Units). Both top-down and bottom-up approaches have been used to estimate and validate the size of the 3D Optical Metrology market and to estimate the size of various dependent submarkets within it. Key players in the market have been identified through secondary research, and their market shares have been determined through primary and secondary research. All percentage shares, splits, and breakdowns have been determined using secondary sources and verified against primary sources.

Some Major Points from Table of Contents:

Chapter 1. Research Methodology & Data Sources

Chapter 2. Executive Summary

Chapter 3. 3D Optical Metrology Market: Industry Analysis

Chapter 4. 3D Optical Metrology Market: Product Insights

Chapter 5. 3D Optical Metrology Market: Application Insights

Chapter 6. 3D Optical Metrology Market: Regional Insights

Chapter 7. 3D Optical Metrology Market: Competitive Landscape

Ask your queries regarding customization at: https://reportsglobe.com/need-customization/?rid=271134

How Reports Globe is different than other Market Research Providers:

Reports Globe was founded to give clients a holistic view of market conditions and future opportunities, so that they can maximize profits and make better-informed decisions. Our team of in-house analysts and consultants works tirelessly to understand your needs and suggest the best possible solutions to fulfill your research requirements.

Our team at Reports Globe follows a rigorous process of data validation, which allows us to publish reports from publishers with minimum or no deviations. Reports Globe collects, segregates, and publishes more than 500 reports annually that cater to products and services across numerous domains.

Contact us:

Mr. Mark Willams

Account Manager

US: +1-970-672-0390

Email: [email protected]

Website: Reportsglobe.com


0 notes

Text

Top 7 Data Science Institutes In Hyderabad

Determine the probability of a purchase based on the given features in the dataset. The dataset consists of feature vectors belonging to 12,330 online sessions. The purpose of this project is to identify user behaviour patterns in order to understand the features that influence sales. If you are serious about a career in Data Science, then you are in the right place. ExcelR is considered to be one of the best Data Science training institutes in Hyderabad. We have built the careers of hundreds of Data Science professionals in various MNCs in India and abroad. Our expert trainers will help you with upskilling on the concepts and with completing the assignments and live projects. This module will teach you about the theorem and how to solve problems using it. Misclassification, Probability, AUC, R-Square: this module will teach everyone how to work with Misclassification, Probability, AUC, and R-Square. We start with a high-level idea of Object-Oriented Programming and later learn the essential vocabulary (keywords), grammar (syntax) and sentence formation (usable code) of this language. In addition to these offices, ExcelR believes in building and nurturing future entrepreneurs through its Franchise verticals and has therefore awarded in excess of 30 franchises across the globe. This ensures that our quality education and related services reach all corners of the world. Furthermore, this resonates with our global strategy of bridging the gap between industry and academia. In this model, participants can attend classroom, instructor-led live online and e-learning sessions with a single enrolment. A combination of these three will produce a synergistic impact on the learning. One can attend multiple instructor-led live online sessions for one year from different trainers at no extra cost with the all new and exclusive JUMBO PASS.
The perceptron algorithm is defined on the basis of a biological brain model. You will discuss the parameters used in the perceptron algorithm, which is the foundation for developing much more complex neural network models for AI applications. Understand the application of perceptron algorithms to classify binary data in a linearly separable scenario. Decision Tree and Random Forest are some of the most powerful classifier algorithms based on classification rules. The data that is acquired needs to be analysed, and decisions need to be taken. Various statistical techniques such as Classification, Regression, Hypothesis Testing and Time Series Analysis are used to build data models. With the help of Statistics, a Data Scientist can gain better insights, which helps to streamline the decision-making process effectively. Skills in working with programming languages like R and Python. The selection will be based on written tests conducted at IIT Hyderabad at the end of May, followed by online interviews. The written test schedule will be published on the website after the last date for submitting applications has passed. Candidates will be shortlisted for interviews based on written test performance. However, because of the COVID-19 situation, there are uncertainties regarding the dates of the written examination. If travel advisories from the Government or decisions taken by the institute/department prohibit travel and gathering, then the selection will be based on interviews only. In that case stricter shortlisting criteria will be followed, and the list of candidates shortlisted for the interviews will be put up on the website. If we go ahead with this interview-only approach, not all candidates may be interviewed even if they satisfy the basic eligibility criteria. We are allocating a suitable domain expert to help you out with program details.
Build your proficiency in the hottest industry of the 21st century. We encourage all candidates to acquire complete information about this program before enrolling. Therefore, I hope this article helps you in finding the best Data Science training institutes so that you can fulfil your goals. These programs are for highly motivated working professionals who want to become data science practitioners. Data Science is currently a fundamental part of decision making in every organization. It is also known as predictive analytics for business purposes. Digital Nest should be chosen for an exceptionally comprehensive Data Science course in Hyderabad, designed with industry, students, and experts in mind. They have built a curriculum so strong that no other Data Science institute can catch up with it. Topic models using the LDA algorithm and emotion mining using lexicons are discussed as part of the NLP module. Learn about improving the reliability and accuracy of decision tree models using ensemble techniques. Bagging and Boosting are the go-to techniques in ensemble methods. The parallel and sequential approaches taken in Bagging and Boosting methods are discussed in this module. Prime Classes, a renowned Data Science institute in Hyderabad, offers quality skill-improvement services for students and corporates. 360DigiTMG has a global head office in the USA, and Hyderabad is its headquarters in India. It is a leading Data Science institute in Hyderabad, established in 2013 with the aim of bridging the gap between industry needs and academia. With international accreditations from UTM, Malaysia, City and Guilds, UK, and IBM, 360DigiTMG boasts a world-class curriculum. The salaries for these professions are set by the recruiting company and are subjective in nature. It is estimated that the average salary for fresher roles in Data Analytics (0-3 years) varies between 3 and 8 lakhs.
Our corporate partners are deeply involved in curriculum design, ensuring that it meets the current industry requirements for data science professionals. In this post-graduate program in Data Science, the teaching and content of the course are provided by faculty from Great Lakes and by other practising data scientists and experts in the industry. The more data we use, the more accurate the results we derive. Hence, data science has made a mark in the digital world by deriving the best results from huge datasets. The PGP-management candidates come with a range of work experience from four to 10+ years. The class consists of CXOs, CEOs, Vice Presidents, Business Unit Heads, mid-level managers, and young professionals who bring their unique experiences to the fore. Once you receive an offer of admission, the admission office will assist you in applying for loans. We do not provide any accommodation for candidates at our learning centre. Outstation candidates are requested to arrange accommodation for themselves.

Navigate to Address: 360DigiTMG - Data Analytics, Data Science Course Training Hyderabad, 2-56/2/19, 3rd floor, Vijaya towers, near Meridian school, Ayyappa Society Rd, Madhapur, Hyderabad, Telangana 500081, 099899 94319

0 notes

Text

Explained: Neural networks

In the past 10 years, the best-performing artificial-intelligence systems (such as the speech recognizers on smartphones or Google's latest automatic translator) have resulted from a technique called "deep learning."

Deep learning is in fact a new name for an approach to artificial intelligence called neural networks, which have been going in and out of fashion for more than 70 years. Neural networks were first proposed in 1943 by Warren McCulloch and Walter Pitts, two University of Chicago researchers who moved to MIT in 1952 as founding members of what's sometimes called the first cognitive science department.

Neural nets were a major area of research in both neuroscience and computer science until 1969, when, according to computer science lore, they were killed off by the MIT mathematicians Marvin Minsky and Seymour Papert, who a year later would become co-directors of the new MIT Artificial Intelligence Laboratory.

The technique then enjoyed a resurgence in the 1980s, fell into eclipse again in the first decade of the new century, and has returned like gangbusters in the second, fueled largely by the increased processing power of graphics chips.

"There's this idea that ideas in science are a bit like epidemics of viruses," says Tomaso Poggio, the Eugene McDermott Professor of Brain and Cognitive Sciences at MIT, an investigator at MIT's McGovern Institute for Brain Research, and director of MIT's Center for Brains, Minds, and Machines. "There are apparently five or six basic strains of flu viruses, and apparently each one comes back with a period of around 25 years. People get infected, and they develop an immune response, and so they don't get infected for the next 25 years. And then there is a new generation that is ready to be infected by the same strain of virus. In science, people fall in love with an idea, get excited about it, hammer it to death, and then get immunized: they get tired of it. So ideas should have the same kind of periodicity!"

Weighty matters

Neural nets are a means of doing machine learning, in which a computer learns to perform some task by analyzing training examples. Usually, the examples have been hand-labeled in advance. An object recognition system, for instance, might be fed thousands of labeled images of cars, houses, coffee cups, and so on, and it would find visual patterns in the images that consistently correlate with particular labels.

Modeled loosely on the human brain, a neural net consists of thousands or even millions of simple processing nodes that are densely interconnected. Most of today's neural nets are organized into layers of nodes, and they're "feed-forward," meaning that data moves through them in only one direction. An individual node might be connected to several nodes in the layer beneath it, from which it receives data, and several nodes in the layer above it, to which it sends data.

To each of its incoming connections, a node will assign a number known as a "weight." When the network is active, the node receives a different data item (a different number) over each of its connections and multiplies it by the associated weight. It then adds the resulting products together, yielding a single number. If that number is below a threshold value, the node passes no data to the next layer. If the number exceeds the threshold value, the node "fires," which in today's neural nets generally means sending the number (the sum of the weighted inputs) along all its outgoing connections.
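The behaviour of a single node described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `node_output` and the use of `None` to stand for "passes no data" are hypothetical choices, not part of any particular library.

```python
def node_output(inputs, weights, threshold):
    """Sketch of one node: weighted sum of inputs, gated by a threshold.

    Returns the weighted sum if it exceeds the threshold (the node "fires"),
    otherwise None to represent passing no data to the next layer.
    """
    # Multiply each incoming number by the weight on its connection and add up.
    total = sum(x * w for x, w in zip(inputs, weights))
    # Fire only when the sum exceeds the threshold.
    return total if total > threshold else None


# Example call with made-up numbers: 1.0*0.5 + 2.0*0.25 = 1.0
fired = node_output([1.0, 2.0], [0.5, 0.25], threshold=0.9)
silent = node_output([1.0, 2.0], [0.5, 0.25], threshold=1.5)
```

With the same inputs and weights, raising the threshold from 0.9 to 1.5 is enough to switch the node from firing to staying silent.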

When a neural net is being trained, all of its weights and thresholds are initially set to random values. Training data is fed to the bottom layer (the input layer) and it passes through the succeeding layers, getting multiplied and added together in complex ways, until it finally arrives, radically transformed, at the output layer. During training, the weights and thresholds are continually adjusted until training data with the same labels consistently yield similar outputs.

Brains and machines

The neural nets described by McCulloch and Pitts in 1943 had thresholds and weights, but they weren't arranged into layers, and the researchers didn't specify any training mechanism. What McCulloch and Pitts showed was that a neural net could, in principle, compute any function that a digital computer could. The result was more neuroscience than computer science: The point was to suggest that the human brain could be thought of as a computing device.

Neural nets continue to be a valuable tool for neuroscientific research. For instance, particular network layouts or rules for adjusting weights and thresholds have reproduced observed features of human neuroanatomy and cognition, an indication that they capture something about how the brain processes information.

The first trainable neural network, the Perceptron, was demonstrated by the Cornell University psychologist Frank Rosenblatt in 1957. The Perceptron's design was much like that of the modern neural net, except that it had only one layer with adjustable weights and thresholds, sandwiched between input and output layers.
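To make the idea of a single trainable layer concrete, here is a minimal Python sketch of the classic perceptron learning rule applied to one output node. It is an illustration under assumptions rather than a reconstruction of Rosenblatt's machine: the function names are hypothetical, the threshold is folded into a `bias` term, the weights start at zero instead of random values so the run is reproducible, and the toy data set (logical AND) is chosen only because it is linearly separable.

```python
def predict(weights, bias, x):
    """Output 1 if the weighted sum plus bias is positive, else 0."""
    total = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if total > 0 else 0


def train_perceptron(samples, epochs=20, lr=1.0):
    """Classic perceptron rule: nudge weights toward each misclassified example."""
    n_inputs = len(samples[0][0])
    weights = [0.0] * n_inputs  # assumption: zero start for reproducibility
    bias = 0.0                  # plays the role of a (negated) threshold
    for _ in range(epochs):
        errors = 0
        for x, target in samples:
            delta = target - predict(weights, bias, x)
            if delta != 0:
                errors += 1
                weights = [w + lr * delta * xi for w, xi in zip(weights, x)]
                bias += lr * delta
        if errors == 0:  # every training example is classified correctly
            break
    return weights, bias


# Toy, linearly separable training set: logical AND of two binary inputs.
and_samples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(and_samples)
predictions = [predict(weights, bias, x) for x, _ in and_samples]
```

Because the data is linearly separable, the rule settles on weights that classify all four examples correctly well within the 20-epoch limit.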

Perceptrons were an active area of research in both psychology and the fledgling discipline of computer science until 1969, when Minsky and Papert published a book titled "Perceptrons," which demonstrated that executing certain fairly common computations on Perceptrons would be impractically time consuming.

"Of course, all of these limitations kind of disappear if you take machinery that is a little more complicated, like, two layers," Poggio says. But at the time, the book had a chilling effect on neural-net research.

"You have to put these things in historical context," Poggio says. "They were arguing for programming, for languages like Lisp. Not many years before, people were still using analog computers. It was not clear at all at the time that programming was the way to go. I think they went a little bit overboard, but as usual, it's not black and white. If you think of this as this competition between analog computing and digital computing, they fought for what at the time was the right thing."

Periodicity

By the 1980s, however, researchers had developed algorithms for modifying neural nets' weights and thresholds that were efficient enough for networks with more than one layer, removing many of the limitations identified by Minsky and Papert. The field enjoyed a renaissance.
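A small example shows why a second layer matters. The exclusive-or (XOR) function is a standard textbook case, chosen here as an illustration and not taken from the text above, of a computation that no single threshold node can perform but that two layers handle easily. The sketch below uses hand-picked weights and thresholds, not trained ones, and the function names are hypothetical.

```python
def step(total, threshold):
    """Threshold unit: 1 if the input sum exceeds the threshold, else 0."""
    return 1 if total > threshold else 0


def xor_two_layer(x1, x2):
    """XOR built from two layers of threshold units with hand-set weights."""
    # Hidden layer: one node detects "at least one input on" (OR),
    # the other detects "both inputs on" (AND).
    h_or = step(x1 + x2, 0.5)
    h_and = step(x1 + x2, 1.5)
    # Output layer: fire when OR is on but AND is off (weights +1 and -1).
    return step(h_or - h_and, 0.5)


truth_table = [xor_two_layer(a, b) for a in (0, 1) for b in (0, 1)]
```

Here `truth_table` covers the inputs (0,0), (0,1), (1,0), (1,1) in that order. Finding such weights automatically, instead of by hand, is what the multi-layer training algorithms of the 1980s made practical.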

But intellectually, there's something unsatisfying about neural nets. Enough training may revise a network's settings to the point that it can usefully classify data, but what do those settings mean? What image features is an object recognizer looking at, and how does it piece them together into the distinctive visual signatures of cars, houses, and coffee cups? Looking at the weights of individual connections won't answer that question.

In recent years, computer scientists have begun to come up with ingenious methods for deducing the analytic strategies adopted by neural nets. But in the 1980s, the networks' strategies were indecipherable. So around the turn of the century, neural networks were supplanted by support vector machines, an alternative approach to machine learning that's based on some very clean and elegant mathematics.

The recent resurgence in neural networks (the deep-learning revolution) comes courtesy of the computer-game industry. The complex imagery and rapid pace of today's video games require hardware that can keep up, and the result has been the graphics processing unit (GPU), which packs thousands of relatively simple processing cores on a single chip. It didn't take long for researchers to realize that the architecture of a GPU is remarkably like that of a neural net.

Modern GPUs enabled the one-layer networks of the 1960s and the two- to three-layer networks of the 1980s to blossom into the 10-, 15-, even 50-layer networks of today. That's what the "deep" in "deep learning" refers to: the depth of the network's layers. And currently, deep learning is responsible for the best-performing systems in almost every area of artificial-intelligence research.

Under the hood

The networks' opacity is still unsettling to theorists, but there's headway on that front, too. In addition to directing the Center for Brains, Minds, and Machines (CBMM), Poggio leads the center's research program in Theoretical Frameworks for Intelligence. Recently, Poggio and his CBMM colleagues have released a three-part theoretical study of neural networks.

The first part, which was published last month in the International Journal of Automation and Computing, addresses the range of computations that deep-learning networks can execute and when deep networks offer advantages over shallower ones. Parts two and three, which have been released as CBMM technical reports, address the problems of global optimization, or guaranteeing that a network has found the settings that best accord with its training data, and overfitting, or cases in which the network becomes so attuned to the specifics of its training data that it fails to generalize to other instances of the same categories.

There are still plenty of theoretical questions to be answered, but CBMM researchers' work could help ensure that neural networks finally break the generational cycle that has brought them in and out of favor for seven decades.

0 notes