#monitoring solutions for Docker

Text

Docker Setup: Monitoring Synology with Prometheus and Grafana

In this article, we discuss “Docker Setup: Monitoring Synology with Prometheus and Grafana”. We will use Portainer, a lightweight, open-source management tool that simplifies working with Docker containers, instead of the Container Manager on Synology. Please see How to use Prometheus for Monitoring, how to Install Grafana on Windows and Windows Server,…

#Accessing Grafana and Prometheus#Add Portainer Registries#Configure and Run Prometheus Container#docker#Docker Containers on Synology#Enter5yourownpasswordhere123456#Enter5yourownpasswordhere2345#Grafana monitoring#Grafana/Prometheus Monitoring#How To Install Prometheus And Grafana On Docker#install portainer#Modify Synology Firewall Rules#monitoring solutions for Docker#portainer#portainer server#Prometheus Grafana integration#Prometheus metrics#Pull Docker Images for Grafana and Prometheus#Set Up Grafana Data Source#Synology monitoring setup#Your Portainer instance timed out for Security Purposes

0 notes

Text

Self Hosting

I haven't posted here in quite a while, but the last year+ for me has been a journey of learning a lot of new things. This is a kind of 'state-of-things' post about what I've been up to for the last year.

I put together a small home lab with 3 HP EliteDesk SFF PCs, an old gaming desktop running an i7-6700k, and my new gaming desktop running an i7-11700k and an RTX-3080 Ti.

"Using your gaming desktop as a server?" Yep, sure am! It's running Unraid with ~7TB of storage, and I'm passing the GPU through to a Windows VM for gaming. I use Sunshine/Moonlight to stream from the VM to my laptop in order to play games, though I've definitely been playing games a lot less...

On to the good stuff: I have 3 Proxmox nodes in a cluster, running the majority of my services. Jellyfin, Audiobookshelf, Calibre Web Automated, etc. are all running on Unraid to have direct access to the media library on the array. All told there are 23 Docker containers running on Unraid, most of which are media management and streaming services. Across my lab, I have a whopping 57 containers running. Some of them are for things like monitoring which I wouldn't really count, but hey, I'm not going to bother making the effort to count properly.

The Proxmox nodes each have a VM for docker which I'm managing with Portainer, though that may change at some point as Komodo has caught my eye as a potential replacement.

All the VMs and LXC containers on Proxmox get backed up daily and stored on the array, and physical hosts are backed up with Kopia and also stored on the array. I haven't quite figured out backups for the main storage array yet (redundancy != backups), because cloud solutions are kind of expensive.

You might be wondering what I'm doing with all this, and the answer is not a whole lot. I make some things available for my private Discord server to take advantage of, the main thing being game servers for Minecraft, Valheim, and a few others. For all that stuff I have to try and do things mostly the right way, so I have users managed in Authentik and all my other stuff connects to that. I've also written some small things here and there to automate tasks around the lab, like SSL certs, which I might make a separate post on, and a custom dashboard to view and start the various game servers I host. Otherwise it's really just a few things here and there to make my life a bit nicer, like RSSHub to collect all my favorite art accounts in one place (fuck you Instagram, piece of shit).

It's hard to go into detail on a whim like this so I may break it down better in the future, but assuming I keep posting here everything will probably be related to my lab. As it's grown it's definitely forced me to be more organized, and I promise I'm thinking about considering maybe working on documentation for everything. Bookstack is nice for that, I'm just lazy. One day I might even make a network map...

5 notes


Text

How Python Powers Scalable and Cost-Effective Cloud Solutions

Explore the role of Python in developing scalable and cost-effective cloud solutions. This guide covers Python's advantages in cloud computing, addresses potential challenges, and highlights real-world applications, providing insights into leveraging Python for efficient cloud development.

Introduction

In today's rapidly evolving digital landscape, businesses are increasingly leveraging cloud computing to enhance scalability, optimize costs, and drive innovation. Among the myriad of programming languages available, Python has emerged as a preferred choice for developing robust cloud solutions. Its simplicity, versatility, and extensive library support make it an ideal candidate for cloud-based applications.

In this comprehensive guide, we will delve into how Python empowers scalable and cost-effective cloud solutions, explore its advantages, address potential challenges, and highlight real-world applications.

Why Is Python the Preferred Choice for Cloud Computing?

Python's popularity in cloud computing is driven by several factors, making it the preferred language for developing and managing cloud solutions. Here are some key reasons why Python stands out:

Simplicity and Readability: Python's clean and straightforward syntax allows developers to write and maintain code efficiently, reducing development time and costs.

Extensive Library Support: Python offers a rich set of libraries and frameworks like Django, Flask, and FastAPI for building cloud applications.

Seamless Integration with Cloud Services: Python is well-supported across major cloud platforms like AWS, Azure, and Google Cloud.

Automation and DevOps Friendly: Python supports infrastructure automation through tools like Ansible and Boto3, and Python scripts are often used alongside Terraform.

Strong Community and Enterprise Adoption: Python has a massive global community that continuously improves and innovates cloud-related solutions.

How Does Python Enable Scalable Cloud Solutions?

Scalability is a critical factor in cloud computing, and Python provides multiple ways to achieve it:

1. Automation of Cloud Infrastructure

Python's compatibility with cloud service provider SDKs, such as AWS Boto3, Azure SDK for Python, and Google Cloud Client Library, enables developers to automate the provisioning and management of cloud resources efficiently.

2. Containerization and Orchestration

Python integrates seamlessly with Docker and Kubernetes, enabling businesses to deploy scalable containerized applications efficiently.

3. Cloud-Native Development

Frameworks like Flask, Django, and FastAPI support microservices architecture, allowing businesses to develop lightweight, scalable cloud applications.

4. Serverless Computing

Python's support for serverless platforms, including AWS Lambda, Azure Functions, and Google Cloud Functions, allows developers to build applications that automatically scale in response to demand, optimizing resource utilization and cost.
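As a rough illustration of the serverless model, the sketch below shows a function in the `(event, context)` shape that AWS Lambda uses for Python handlers. The function name `handler` and the `name` field in the event are assumptions made for this example, not part of any particular application.

```python
import json


def handler(event, context):
    """Entry point in the (event, context) shape AWS Lambda uses for Python."""
    # The "name" key is a hypothetical payload field chosen for this sketch.
    name = event.get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local invocation; on the platform, the runtime supplies event and context.
    print(handler({"name": "cloud"}, None))
```

Because the function keeps no state between calls, the platform can run as many copies as demand requires, which is where the automatic scaling comes from.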

5. AI and Big Data Scalability

Python’s dominance in AI and data science makes it an ideal choice for cloud-based AI/ML services like AWS SageMaker, Google AI, and Azure Machine Learning.

Looking for expert Python developers to build scalable cloud solutions? Hire Python Developers now!

Advantages of Using Python for Cloud Computing

Cost Efficiency: Python’s compatibility with serverless computing and auto-scaling strategies minimizes cloud costs.

Faster Development: Python’s simplicity accelerates cloud application development, reducing time-to-market.

Cross-Platform Compatibility: Python runs seamlessly across different cloud platforms.

Security and Reliability: Python-based security tools help in encryption, authentication, and cloud monitoring.

Strong Community Support: Python developers worldwide contribute to continuous improvements, making it future-proof.

Challenges and Considerations

While Python offers many benefits, there are some challenges to consider:

Performance Limitations: Python is an interpreted language, so CPU-bound code typically runs slower than in compiled languages like Java or C++.

Memory Consumption: Python applications might require optimization to handle large-scale cloud workloads efficiently.

Learning Curve for Beginners: Though Python is simple, mastering cloud-specific frameworks requires time and expertise.

Python Libraries and Tools for Cloud Computing

Python’s ecosystem includes powerful libraries and tools tailored for cloud computing, such as:

Boto3: AWS SDK for Python, used for cloud automation.

Google Cloud Client Library: Helps interact with Google Cloud services.

Azure SDK for Python: Enables seamless integration with Microsoft Azure.

Apache Libcloud: Provides a unified interface for multiple cloud providers.

PyCaret: Simplifies machine learning deployment in cloud environments.

Real-World Applications of Python in Cloud Computing

1. Netflix - Scalable Streaming with Python

Netflix extensively uses Python for automation, data analysis, and managing cloud infrastructure, enabling seamless content delivery to millions of users.

2. Spotify - Cloud-Based Music Streaming

Spotify leverages Python for big data processing, recommendation algorithms, and cloud automation, ensuring high availability and scalability.

3. Reddit - Handling Massive Traffic

Reddit uses Python and AWS cloud solutions to manage heavy traffic while optimizing server costs efficiently.

Future of Python in Cloud Computing

The future of Python in cloud computing looks promising with emerging trends such as:

AI-Driven Cloud Automation: Python-powered AI and machine learning will drive intelligent cloud automation.

Edge Computing: Python will play a crucial role in processing data at the edge for IoT and real-time applications.

Hybrid and Multi-Cloud Strategies: Python’s flexibility will enable seamless integration across multiple cloud platforms.

Increased Adoption of Serverless Computing: More enterprises will adopt Python for cost-effective serverless applications.

Conclusion

Python's simplicity, versatility, and robust ecosystem make it a powerful tool for developing scalable and cost-effective cloud solutions. By leveraging Python's capabilities, businesses can enhance their cloud applications' performance, flexibility, and efficiency.

Ready to harness the power of Python for your cloud solutions? Explore our Python Development Services to discover how we can assist you in building scalable and efficient cloud applications.

FAQs

1. Why is Python used in cloud computing?

Python is widely used in cloud computing due to its simplicity, extensive libraries, and seamless integration with cloud platforms like AWS, Google Cloud, and Azure.

2. Is Python good for serverless computing?

Yes! Python works efficiently in serverless environments like AWS Lambda, Azure Functions, and Google Cloud Functions, making it an ideal choice for cost-effective, auto-scaling applications.

3. Which companies use Python for cloud solutions?

Major companies like Netflix, Spotify, Dropbox, and Reddit use Python for cloud automation, AI, and scalable infrastructure management.

4. How does Python help with cloud security?

Python offers robust security libraries like PyCryptodome and pyOpenSSL (a wrapper around OpenSSL), enabling encryption, authentication, and cloud monitoring for secure cloud applications.

5. Can Python handle big data in the cloud?

Yes! Python supports big data processing with tools like Apache Spark (through PySpark), Pandas, and NumPy, making it suitable for data-driven cloud applications.

#Python development company#Python in Cloud Computing#Hire Python Developers#Python for Multi-Cloud Environments

2 notes


Text

Cloud Agnostic: Achieving Flexibility and Independence in Cloud Management

As businesses increasingly migrate to the cloud, they face a critical decision: which cloud provider to choose? While AWS, Microsoft Azure, and Google Cloud offer powerful platforms, the concept of "cloud agnostic" is gaining traction. Cloud agnosticism refers to a strategy where businesses avoid vendor lock-in by designing applications and infrastructure that work across multiple cloud providers. This approach provides flexibility, independence, and resilience, allowing organizations to adapt to changing needs and avoid reliance on a single provider.

What Does It Mean to Be Cloud Agnostic?

Being cloud agnostic means creating and managing systems, applications, and services that can run on any cloud platform. Instead of committing to a single cloud provider, businesses design their architecture to function seamlessly across multiple platforms. This flexibility is achieved by using open standards, containerization technologies like Docker, and orchestration tools such as Kubernetes.

Key features of a cloud agnostic approach include:

Interoperability: Applications must be able to operate across different cloud environments.

Portability: The ability to migrate workloads between different providers without significant reconfiguration.

Standardization: Using common frameworks, APIs, and languages that work universally across platforms.

Benefits of Cloud Agnostic Strategies

Avoiding Vendor Lock-In: The primary benefit of being cloud agnostic is avoiding vendor lock-in. Once a business builds its entire infrastructure around a single cloud provider, it can be challenging to switch or expand to other platforms. This could lead to increased costs and limited innovation. With a cloud agnostic strategy, businesses can choose the best services from multiple providers, optimizing both performance and costs.

Cost Optimization: Cloud agnosticism allows companies to choose the most cost-effective solutions across providers. As cloud pricing models are complex and vary by region and usage, a cloud agnostic system enables businesses to leverage competitive pricing and minimize expenses by shifting workloads to different providers when necessary.

Greater Resilience and Uptime: By operating across multiple cloud platforms, organizations reduce the risk of downtime. If one provider experiences an outage, the business can shift workloads to another platform, ensuring continuous service availability. This redundancy builds resilience, ensuring high availability in critical systems.

Flexibility and Scalability: A cloud agnostic approach gives companies the freedom to adjust resources based on current business needs. This means scaling applications horizontally or vertically across different providers without being restricted by the limits or offerings of a single cloud vendor.

Global Reach: Different cloud providers have varying levels of presence across geographic regions. With a cloud agnostic approach, businesses can leverage the strengths of various providers in different areas, ensuring better latency, performance, and compliance with local regulations.
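The resilience benefit above, shifting work to another platform when one has an outage, can be sketched in a few lines. This is a minimal illustration only: `call_with_failover` and the provider callables are hypothetical names, and a real setup would also need health checks, retries, and data replication.

```python
def call_with_failover(providers, request):
    """Try each (name, send) pair in order and return the first success.

    `providers` is a list of (name, callable) pairs; each callable is a
    hypothetical stand-in for a client that talks to one cloud provider.
    """
    errors = []
    for name, send in providers:
        try:
            return name, send(request)
        except Exception as exc:  # any failure moves on to the next provider
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all providers failed: " + "; ".join(errors))
```

The caller lists providers in order of preference, so the secondary platform is only used when the primary raises an error.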

Challenges of Cloud Agnosticism

Despite the advantages, adopting a cloud agnostic approach comes with its own set of challenges:

Increased Complexity: Managing and orchestrating services across multiple cloud providers is more complex than relying on a single vendor. Businesses need robust management tools, monitoring systems, and teams with expertise in multiple cloud environments to ensure smooth operations.

Higher Initial Costs: The upfront costs of designing a cloud agnostic architecture can be higher than those of a single-provider system. Developing portable applications and investing in technologies like Kubernetes or Terraform requires significant time and resources.

Limited Use of Provider-Specific Services: Cloud providers often offer unique, advanced services (such as machine learning tools, proprietary databases, and analytics platforms) that may not be easily portable to other clouds. Being cloud agnostic could mean missing out on some of these specialized services, which may limit innovation in certain areas.

Tools and Technologies for Cloud Agnostic Strategies

Several tools and technologies make cloud agnosticism more accessible for businesses:

Containerization: Docker and similar containerization tools allow businesses to encapsulate applications in lightweight, portable containers that run consistently across various environments.

Orchestration: Kubernetes is a leading tool for orchestrating containers across multiple cloud platforms. It ensures scalability, load balancing, and failover capabilities, regardless of the underlying cloud infrastructure.

Infrastructure as Code (IaC): Tools like Terraform and Ansible enable businesses to define cloud infrastructure using code. This makes it easier to manage, replicate, and migrate infrastructure across different providers.

APIs and Abstraction Layers: Using APIs and abstraction layers helps standardize interactions between applications and different cloud platforms, enabling smooth interoperability.
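To make the abstraction-layer idea concrete, here is a minimal sketch in Python. The `ObjectStore` interface, the `InMemoryStore` backend, and `archive_report` are all hypothetical names invented for this example; a real backend would wrap a provider SDK behind the same two methods.

```python
from typing import Protocol


class ObjectStore(Protocol):
    """Provider-neutral interface the application codes against."""

    def put(self, key: str, data: bytes) -> None: ...

    def get(self, key: str) -> bytes: ...


class InMemoryStore:
    """Stand-in backend for the sketch; keeps objects in a dict."""

    def __init__(self) -> None:
        self._objects = {}

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data

    def get(self, key: str) -> bytes:
        return self._objects[key]


def archive_report(store: ObjectStore, name: str, text: str) -> str:
    """Application code: depends only on the interface, not on a provider."""
    key = f"reports/{name}.txt"
    store.put(key, text.encode("utf-8"))
    return key
```

Swapping providers then means writing another class with the same `put` and `get` methods, while `archive_report` stays unchanged.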

When Should You Consider a Cloud Agnostic Approach?

A cloud agnostic approach is not always necessary for every business. Here are a few scenarios where adopting cloud agnosticism makes sense:

Businesses operating in regulated industries that need to maintain compliance across multiple regions.

Companies that require high availability and fault tolerance across different cloud platforms for mission-critical applications.

Organizations with global operations that need to optimize performance and cost across multiple cloud regions.

Businesses that aim to avoid long-term vendor lock-in and maintain flexibility for future growth and scaling needs.

Conclusion

Adopting a cloud agnostic strategy offers businesses unparalleled flexibility, independence, and resilience in cloud management. While the approach comes with challenges such as increased complexity and higher upfront costs, the long-term benefits of avoiding vendor lock-in, optimizing costs, and enhancing scalability are significant. By leveraging the right tools and technologies, businesses can achieve a truly cloud-agnostic architecture that supports innovation and growth in a competitive landscape.

Embrace the cloud agnostic approach to future-proof your business operations and stay ahead in the ever-evolving digital world.

2 notes


Text

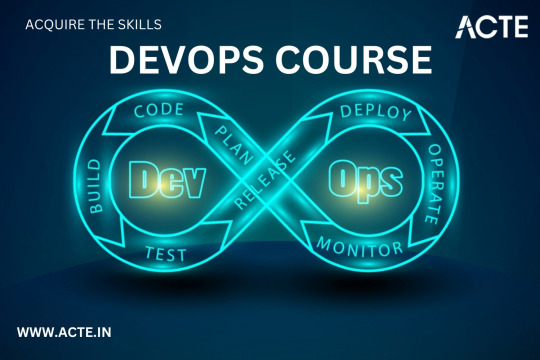

Level Up Your Software Development Skills: Join Our Unique DevOps Course

Would you like to increase your knowledge of software development? Look no further! Our unique DevOps course is the perfect opportunity to upgrade your skillset and pave the way for accelerated career growth in the tech industry. In this article, we will explore the key components of our course, reasons why you should choose it, the remarkable placement opportunities it offers, and the numerous benefits you can expect to gain from joining us.

Key Components of Our DevOps Course

Our DevOps course is meticulously designed to provide you with a comprehensive understanding of the DevOps methodology and equip you with the necessary tools and techniques to excel in the field. Here are the key components you can expect to delve into during the course:

1. Understanding DevOps Fundamentals

Learn the core principles and concepts of DevOps, including continuous integration, continuous delivery, infrastructure automation, and collaboration techniques. Gain insights into how DevOps practices can enhance software development efficiency and communication within cross-functional teams.

2. Mastering Cloud Computing Technologies

Immerse yourself in cloud computing platforms like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform. Acquire hands-on experience in deploying applications, managing serverless architectures, and leveraging containerization technologies such as Docker and Kubernetes for scalable and efficient deployment.

3. Automating Infrastructure as Code

Discover the power of infrastructure automation through tools like Ansible, Terraform, and Puppet. Automate the provisioning, configuration, and management of infrastructure resources, enabling rapid scalability, agility, and error-free deployments.

4. Monitoring and Performance Optimization

Explore various monitoring and observability tools, including Elasticsearch, Grafana, and Prometheus, to ensure your applications are running smoothly and performing optimally. Learn how to diagnose and resolve performance bottlenecks, conduct efficient log analysis, and implement effective alerting mechanisms.

5. Embracing Continuous Integration and Delivery

Dive into the world of continuous integration and delivery (CI/CD) pipelines using popular tools like Jenkins, GitLab CI/CD, and CircleCI. Gain a deep understanding of how to automate build processes, run tests, and deploy applications seamlessly to achieve faster and more reliable software releases.

Reasons to Choose Our DevOps Course

There are numerous reasons why our DevOps course stands out from the rest. Here are some compelling factors that make it the ideal choice for aspiring software developers:

Expert Instructors: Learn from industry professionals who possess extensive experience in the field of DevOps and have a genuine passion for teaching. Benefit from their wealth of knowledge and practical insights gained from working on real-world projects.

Hands-On Approach: Our course emphasizes hands-on learning to ensure you develop the practical skills necessary to thrive in a DevOps environment. Through immersive lab sessions, you will have opportunities to apply the concepts learned and gain valuable experience working with industry-standard tools and technologies.

Tailored Curriculum: We understand that every learner is unique, so our curriculum is strategically designed to cater to individuals of varying proficiency levels. Whether you are a beginner or an experienced professional, our course will be tailored to suit your needs and help you achieve your desired goals.

Industry-Relevant Projects: Gain practical exposure to real-world scenarios by working on industry-relevant projects. Apply your newly acquired skills to solve complex problems and build innovative solutions that mirror the challenges faced by DevOps practitioners in the industry today.

Benefits of Joining Our DevOps Course

By joining our DevOps course, you open up a world of benefits that will enhance your software development career. Here are some notable advantages you can expect to gain:

Enhanced Employability: Acquire sought-after skills that are in high demand in the software development industry. Stand out from the crowd and increase your employability prospects by showcasing your proficiency in DevOps methodologies and tools.

Higher Earning Potential: With the rise of DevOps practices, organizations are willing to offer competitive remuneration packages to skilled professionals. By mastering DevOps through our course, you can significantly increase your earning potential in the tech industry.

Streamlined Software Development Processes: Gain the ability to streamline software development workflows by effectively integrating development and operations. With DevOps expertise, you will be capable of accelerating software deployment, reducing errors, and improving the overall efficiency of the development lifecycle.

Continuous Learning and Growth: DevOps is a rapidly evolving field, and by joining our course, you become a part of a community committed to continuous learning and growth. Stay updated with the latest industry trends, technologies, and best practices to ensure your skills remain relevant in an ever-changing tech landscape.

In conclusion, our unique DevOps course at ACTE institute offers unparalleled opportunities for software developers to level up their skills and propel their careers forward. With a comprehensive curriculum, remarkable placement opportunities, and a host of benefits, joining our course is undoubtedly a wise investment in your future success. Don't miss out on this incredible chance to become a proficient DevOps practitioner and unlock new horizons in the world of software development. Enroll today and embark on an exciting journey towards professional growth and achievement!

10 notes


Text

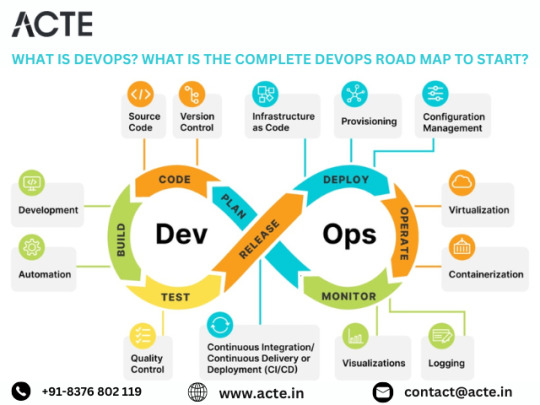

Navigating the DevOps Landscape: A Comprehensive Roadmap for Success

Introduction: In the rapidly evolving realm of software development, DevOps stands as a pivotal force reshaping the way teams collaborate, deploy, and manage software. This detailed guide delves into the essence of DevOps, its core principles, and presents a step-by-step roadmap to kickstart your journey towards mastering DevOps methodologies.

Exploring the Core Tenets of DevOps: DevOps transcends mere toolsets; it embodies a cultural transformation focused on fostering collaboration, automation, and continual enhancement. At its essence, DevOps aims to dismantle barriers between development and operations teams, fostering a culture of shared ownership and continuous improvement.

Grasping Essential Tooling and Technologies: To embark on your DevOps odyssey, familiarizing yourself with the key tools and technologies within the DevOps ecosystem is paramount. From version control systems like Git to continuous integration servers such as Jenkins and containerization platforms like Docker, a diverse array of tools awaits exploration.

Mastery in Automation: Automation serves as the cornerstone of DevOps. By automating routine tasks like code deployment, testing, and infrastructure provisioning, teams can amplify efficiency, minimize errors, and accelerate software delivery. Proficiency in automation tools and scripting languages is imperative for effective DevOps implementation.

Crafting Continuous Integration/Continuous Delivery Pipelines: Continuous Integration (CI) and Continuous Delivery (CD) lie at the heart of DevOps practices. CI/CD pipelines automate the process of integrating code changes, executing tests, and deploying applications, ensuring rapid, reliable software changes with minimal manual intervention.
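The core behavior of a pipeline, running stages in order and stopping at the first failure, can be sketched as follows. This is an illustrative toy, not how Jenkins or any other CI server is implemented; `run_pipeline` and the stage names are assumptions for the example.

```python
from typing import Callable, List, Tuple

# A stage is a name plus a callable that returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order and stop at the first failure.

    Returns a log with one line per stage that was executed.
    """
    log = []
    for name, step in stages:
        ok = step()
        log.append(f"{name}: {'ok' if ok else 'failed'}")
        if not ok:
            break  # later stages (for example deploy) never run
    return log
```

Stopping early is the point of the design: a failed test stage prevents the deploy stage from ever running.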

Embracing Infrastructure as Code (IaC): Infrastructure as Code (IaC) empowers teams to define and manage infrastructure through code, fostering consistency, scalability, and reproducibility. Treating infrastructure as code enables teams to programmatically provision, configure, and manage infrastructure resources, streamlining deployment workflows.
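One way to picture the declarative style behind IaC is a comparison between the desired state written in code and the state that currently exists. The sketch below is a simplified illustration under that assumption; the `plan` function and the resource dictionaries are hypothetical and do not reflect the internals of any specific tool.

```python
def plan(desired: dict, actual: dict) -> dict:
    """Compare desired and actual resources and list the changes needed.

    Both arguments map a resource name to its configuration.
    """
    create = sorted(name for name in desired if name not in actual)
    delete = sorted(name for name in actual if name not in desired)
    update = sorted(
        name for name in desired
        if name in actual and desired[name] != actual[name]
    )
    return {"create": create, "update": update, "delete": delete}
```

Because the plan is computed from data, running it twice against an unchanged environment yields the same result, which is what makes deployments reproducible.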

Fostering Collaboration and Communication: DevOps champions collaboration and communication across development, operations, and other cross-functional teams. By nurturing a culture of shared responsibility, transparency, and feedback, teams can dismantle silos and unite towards common objectives, resulting in accelerated delivery and heightened software quality.

Implementing Monitoring and Feedback Loops: Monitoring and feedback mechanisms are integral facets of DevOps methodologies. Establishing robust monitoring and logging solutions empowers teams to monitor application and infrastructure performance, availability, and security in real-time. Instituting feedback loops enables teams to gather insights and iteratively improve based on user feedback and system metrics.
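A simple alerting rule gives a feel for how metrics feed back into action. The sketch below fires only when a metric stays above a threshold for several consecutive samples, which avoids alerting on a single spike. The function name, the default of three samples, and the sample values are assumptions for illustration.

```python
from typing import Sequence


def should_alert(samples: Sequence[float], threshold: float, consecutive: int = 3) -> bool:
    """Return True when the last `consecutive` samples all exceed `threshold`."""
    if consecutive <= 0 or len(samples) < consecutive:
        return False  # not enough data to decide
    return all(value > threshold for value in samples[-consecutive:])
```

For example, with CPU utilization samples of 0.2, 0.95, 0.96, and 0.97 and a threshold of 0.9, the rule fires because the last three readings are all above the threshold.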

Embracing Continuous Learning and Growth: DevOps thrives on a culture of continuous learning and improvement. Encouraging experimentation, learning, and knowledge exchange empowers teams to adapt to evolving requirements, technologies, and market dynamics, driving innovation and excellence.

Remaining Current with Industry Dynamics: The DevOps landscape is dynamic, with new tools, technologies, and practices emerging regularly. Staying abreast of industry trends, participating in conferences, webinars, and engaging with the DevOps community are essential for staying ahead. By remaining informed, teams can leverage the latest advancements to enhance their DevOps practices and deliver enhanced value to stakeholders.

Conclusion: DevOps represents a paradigm shift in software development, enabling organizations to achieve greater agility, efficiency, and innovation. By following this comprehensive roadmap and tailoring it to your organization's unique needs, you can embark on a transformative DevOps journey and drive positive change in your software delivery processes.

2 notes


Text

Software Development Process—Definition, Stages, and Methodologies

In the rapidly evolving digital era, software applications are the backbone of business operations, consumer services, and everyday convenience. Behind every high-performing app or platform lies a structured, strategic, and iterative software development process. This process isn't just about writing code—it's about delivering a solution that meets specific goals and user needs.

This blog explores the definition, key stages, and methodologies used in software development—providing you a clear understanding of how digital solutions are brought to life and why choosing the right software development company matters.

What is the software development process?

The software development process is a series of structured steps followed to design, develop, test, and deploy software applications. It encompasses everything from initial idea brainstorming to final deployment and post-launch maintenance.

It ensures that the software meets user requirements, stays within budget, and is delivered on time while maintaining high quality and performance standards.

Key Stages in the Software Development Process

While models may vary based on methodology, the core stages remain consistent:

1. Requirement Analysis

At this stage, the development team gathers and documents all requirements from stakeholders. It involves understanding:

Business goals

User needs

Functional and non-functional requirements

Technical specifications

Tools such as interviews, surveys, and use-case diagrams help in gathering detailed insights.

2. Planning

Planning is crucial for risk mitigation, cost estimation, and setting timelines. It involves:

Project scope definition

Resource allocation

Scheduling deliverables

Risk analysis

A solid plan keeps the team aligned and ensures smooth execution.

3. System Design

Based on requirements and planning, system architects create a blueprint. This includes:

UI/UX design

Database schema

System architecture

APIs and third-party integrations

The design must balance aesthetics, performance, and functionality.

4. Development (Coding)

Now comes the actual building. Developers write the code using chosen technologies and frameworks. This stage may involve:

Front-end and back-end development

API creation

Integration with databases and other systems

Version control tools like Git ensure collaborative and efficient coding.

5. Testing

Testing ensures the software is bug-free and performs well under various scenarios. Types of testing include:

Unit Testing

Integration Testing

System Testing

User Acceptance Testing (UAT)

QA teams identify and document bugs for developers to fix before release.
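As an illustration of the first level, unit testing, here is a small sketch using Python's built-in `unittest` module. The `apply_discount` function is a hypothetical unit under test, invented for this example.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical unit under test: reduce price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    def test_typical_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_rejects_invalid_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(100.0, 120)
```

Saved as a file, the tests can be run with `python -m unittest`. Integration, system, and acceptance tests then build on the same idea at progressively larger scope.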

6. Deployment

Once tested, the software is deployed to a live environment. This may include:

Production server setup

Launch strategy

Initial user onboarding

Deployment tools like Docker or Jenkins automate parts of this stage to ensure smooth releases.

7. Maintenance & Support

After release, developers provide regular updates and bug fixes. This stage includes:

Performance monitoring

Addressing security vulnerabilities

Feature upgrades

Ongoing maintenance is essential for long-term user satisfaction.

Popular Software Development Methodologies

The approach you choose significantly impacts how flexible, fast, or structured your development process will be. Here are the leading methodologies used by modern software development companies:

🔹 Waterfall Model

A linear, sequential approach where each phase must be completed before the next begins. Best for:

Projects with clear, fixed requirements

Government or enterprise applications

Pros:

Easy to manage and document

Straightforward for small projects

Cons:

Not flexible for changes

Late testing could delay bug detection

🔹 Agile Methodology

Agile breaks the project into smaller iterations, or sprints, typically 2–4 weeks long. Features are developed incrementally, allowing for flexibility and client feedback.

Pros:

High adaptability to change

Faster delivery of features

Continuous feedback

Cons:

Requires high team collaboration

Difficult to predict final cost and timeline

🔹 Scrum Framework

Scrum is a framework for practicing Agile. It defines roles like Scrum Master and Product Owner, and work is done in sprint cycles with daily stand-up meetings.

Best For:

Complex, evolving projects

Cross-functional teams

🔹 DevOps

Combines development and operations to automate and integrate the software delivery process. It emphasizes:

Continuous integration

Continuous delivery (CI/CD)

Infrastructure as code
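As a rough illustration of the CI/CD idea, the sketch below models a pipeline as an ordered list of stages that stops at the first failure, so a broken build never reaches deployment. The stage names and the lambda placeholders are assumptions made for the example; real stages would call a build tool, a test runner, and a deployment script.

```python
from typing import Callable

Stage = tuple[str, Callable[[], bool]]


def run_pipeline(stages: list[Stage]) -> list[str]:
    """Run stages in order and stop at the first one that fails.

    Returns a log with one "name: ok" or "name: failed" entry per stage run.
    """
    log = []
    for name, step in stages:
        ok = step()
        log.append(f"{name}: {'ok' if ok else 'failed'}")
        if not ok:
            break  # later stages (such as deploy) never run on a failed step
    return log


# Hypothetical stages: the test stage fails, so deploy is skipped.
stages = [
    ("build", lambda: True),
    ("test", lambda: False),
    ("deploy", lambda: True),
]
print(run_pipeline(stages))
```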

Pros:

Faster time-to-market

Reduced deployment failures

🔹 Lean Development

Lean focuses on minimizing waste while maximizing productivity. Ideal for startups or teams on a tight budget.

Principles include:

Empowering the team

Delivering as fast as possible

Building integrity in

Why Partnering with a Professional Software Development Company Matters

No matter how refined your idea is, turning it into a working software product requires deep expertise. A reliable software development company can guide you through every stage with:

Technical expertise: They offer full-stack developers, UI/UX designers, and QA professionals.

Industry knowledge: They understand market trends and can tailor solutions accordingly.

Agility and flexibility: They adapt to changes and deliver incremental value quickly.

Post-deployment support: From performance monitoring to feature updates, support never ends.

Partnering with professionals ensures your software is scalable, secure, and built to last.

Conclusion: Build Smarter with a Strategic Software Development Process

The software development process is a strategic blend of analysis, planning, designing, coding, testing, and deployment. Choosing the right development methodology—and more importantly, the right partner—can make the difference between success and failure.

Whether you're developing a mobile app, enterprise software, or SaaS product, working with a reputed software development company will ensure your vision is executed flawlessly and efficiently.

📞 Ready to build your next software product? Connect with an expert software development company today and turn your idea into an innovation-driven reality!

0 notes

Text

Containerization and Test Automation Strategies

Containerization is revolutionizing how software is developed, tested, and deployed. It allows QA teams to build consistent, scalable, and isolated environments for testing across platforms. When paired with test automation, containerization becomes a powerful tool for enhancing speed, accuracy, and reliability. Genqe plays a vital role in this transformation.

What is Containerization?

Containerization is a lightweight virtualization method that packages software code and its dependencies into containers. These containers run consistently across different computing environments. This consistency makes it easier to manage environments during testing. Tools like Genqe automate testing inside containers to maximize efficiency and repeatability in QA pipelines.

Benefits of Containerization

Containerization provides numerous benefits like rapid test setup, consistent environments, and better resource utilization. Containers reduce conflicts between environments, speeding up the QA cycle. Genqe supports container-based automation, enabling testers to deploy faster, scale better, and identify issues in isolated, reproducible testing conditions.

Containerization and Test Automation

Containerization complements test automation by offering isolated, predictable environments. It allows tests to be executed consistently across various platforms and stages. With Genqe, automated test scripts can be executed inside containers, enhancing test coverage, minimizing flakiness, and improving confidence in the release process.

Effective Testing Strategies in Containerized Environments

To test effectively in containers, focus on statelessness, fast test execution, and infrastructure-as-code. Adopt microservice testing patterns and parallel execution. Genqe enables test suites to be orchestrated and monitored across containers, ensuring optimized resource usage and continuous feedback throughout the development cycle.
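Statelessness is what makes parallel execution safe: checks that share no state can run in any order or at the same time. The sketch below illustrates this with Python's standard `concurrent.futures`. The three check functions are trivial placeholders invented for the example, and threads stand in for what would be separate containers in a real setup.

```python
from concurrent.futures import ThreadPoolExecutor


def check_addition() -> bool:
    return 1 + 1 == 2


def check_upper() -> bool:
    return "qa".upper() == "QA"


def check_sorting() -> bool:
    return sorted([3, 1, 2]) == [1, 2, 3]


def run_in_parallel(checks, max_workers: int = 3) -> dict:
    """Run independent checks concurrently and map each name to pass/fail."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {check.__name__: pool.submit(check) for check in checks}
        return {name: future.result() for name, future in futures.items()}


results = run_in_parallel([check_addition, check_upper, check_sorting])
print(results)
```

Because no check reads or writes anything the others depend on, the result is the same regardless of scheduling order.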

Implementing a Containerized Test Automation Strategy

Start with containerizing your application and test tools. Integrate your CI/CD pipelines to trigger tests inside containers. Use orchestration tools like Docker Compose or Kubernetes. Genqe simplifies this with container-native automation support, ensuring smooth setup, execution, and scaling of test cases in real-time.

Best Approaches for Testing Software in Containers

Use service virtualization, parallel testing, and network simulation to reflect production-like environments. Ensure containers are short-lived and stateless. With Genqe, testers can pre-configure environments, manage dependencies, and run comprehensive test suites that validate both functionality and performance under containerized conditions.

Common Challenges and Solutions

Testing in containers presents challenges like data persistence, debugging, and inter-container communication. Solutions include using volume mounts, logging tools, and health checks. Genqe addresses these by offering detailed reporting, real-time monitoring, and support for mocking and service stubs inside containers, easing test maintenance.
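A health check in a test setup often comes down to polling a dependency until it reports ready, then starting the tests. The following is a minimal sketch of that polling loop. The `wait_until_healthy` helper and the `fake_probe` function are hypothetical names for illustration; a real probe might send an HTTP request to a service's health endpoint.

```python
import time
from typing import Callable


def wait_until_healthy(probe: Callable[[], bool],
                       timeout: float = 5.0,
                       interval: float = 0.1) -> bool:
    """Poll probe() until it returns True or timeout seconds elapse."""
    deadline = time.monotonic() + timeout
    while True:
        if probe():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


# Stand-in probe that reports healthy on its third call.
calls = {"count": 0}


def fake_probe() -> bool:
    calls["count"] += 1
    return calls["count"] >= 3


print(wait_until_healthy(fake_probe, timeout=2.0, interval=0.01))
```

Using `time.monotonic()` rather than wall-clock time keeps the timeout correct even if the system clock is adjusted while waiting.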

Advantages of Genqe in a Containerized World

Genqe enhances containerized testing by providing scalable test execution, seamless integration with Docker/Kubernetes, and cloud-native automation capabilities. It ensures faster feedback, better test reliability, and simplified environment management. Genqe’s platform enables efficient orchestration of parallel and distributed test cases inside containerized infrastructures.

Conclusion

Containerization, when combined with automated testing, empowers modern QA teams to test faster and more reliably. With tools like Genqe, teams can embrace DevOps practices and deliver high-quality software consistently. The future of testing is containerized, scalable, and automated, and Genqe is leading the way.

0 notes

Text

Best Software Development Company in Chennai | Leading Software Solutions

When searching for the best software development company in Chennai, businesses of all sizes look for a partner who combines technical expertise, a customer-centric approach, and proven delivery. A leading Software Development Company in Chennai offers end-to-end solutions—from ideation and design to development, testing, deployment, and maintenance—ensuring your software is scalable, secure, and aligned with your strategic goals.

Why Choose the Best Software Development Company in Chennai?

Local Expertise, Global Standards: Chennai has emerged as a thriving IT hub, home to talented engineers fluent in cutting-edge technologies. By selecting the best software development company in Chennai, you tap into deep local expertise guided by global best practices, ensuring your project stays on time and within budget.

Proven Track Record: The top Software Development Company in Chennai showcases a rich portfolio of successful projects across industries, including finance, healthcare, e-commerce, education, and more. Their case studies demonstrate on-point requirements gathering, agile delivery, and robust support.

Cost-Effective Solutions: Chennai offers competitive rates without compromising quality. The best software development company in Chennai provides flexible engagement models (fixed price, time & materials, or dedicated teams) so you can choose the structure that best fits your budget and timeline.

Cultural Alignment & Communication: Teams in Chennai often work in overlapping time zones with North America, Europe, and Australia, enabling real-time collaboration. A leading Software Development Company in Chennai emphasizes transparent communication, regular status updates, and seamless integration with your in-house team.

Core Services Offered

A comprehensive Software Development Company in Chennai typically delivers:

Custom Software Development: Tailor-made applications built from the ground up to address unique business challenges, whether it’s a CRM, ERP, inventory system, or specialized B2B software.

Mobile App Development: Native and cross-platform iOS/Android apps designed for performance, usability, and engagement. Ideal for startups and enterprises aiming to reach customers on the go.

Web Application Development: Responsive, SEO-friendly, and secure web apps using frameworks like React, Angular, and Vue.js, backed by scalable back-end systems in Node.js, .NET, Java, or Python.

UI/UX Design: User-centered design that drives adoption. Wireframes, prototypes, and high-fidelity designs ensure an intuitive interface that delights end users.

Quality Assurance & Testing: Automated and manual testing (functional, performance, security, and usability) to deliver a bug-free product that scales under real-world conditions.

DevOps & Cloud Services: CI/CD pipelines, containerization with Docker/Kubernetes, and deployments on AWS, Azure, or Google Cloud for high availability and rapid release cycles.

Maintenance & Support: Post-launch monitoring, feature enhancements, and 24/7 support to keep your software running smoothly and securely.

The Development Process

Discovery & Planning: Workshops and stakeholder interviews to define scope, objectives, and success metrics.

Design & Prototyping: Rapid prototyping of wireframes and UI mockups for early feedback and iterative refinement.

Agile Development: Two-week sprints with sprint demos, ensuring transparency and adaptability to changing requirements.

Testing & QA: Continuous testing throughout development to catch issues early and deliver a stable release.

Deployment & Go-Live: Seamless rollout with thorough planning, user training, and post-deployment support.

Maintenance & Evolution: Ongoing enhancements, performance tuning, and security updates to keep your application competitive.

Benefits of Partnering Locally

Speedy Onboarding: Proximity to Chennai’s tech ecosystem speeds up recruitment of additional talent.

Cultural Synergy: Shared cultural context helps in understanding your business nuances faster.

Time-Zone Overlap: Real-time collaboration during key business hours reduces turnaround times.

Networking & Events: Access to local tech meetups, hackathons, and startup incubators for continuous innovation.

Conclusion

Choosing the best software development company in Chennai means entrusting your digital transformation to a partner with deep technical skills, transparent processes, and a client-first ethos. Whether you’re a startup looking to disrupt the market or a large enterprise aiming to modernize legacy systems, the right Software Development Company in Chennai will guide you from concept to success—delivering high-quality software on schedule and within budget. Start your journey today and experience why Chennai stands out as a premier destination for software development excellence.

0 notes

Text

Powering Progress – Why an IT Solutions Company India Should Be Your Technology Partner

In today’s hyper‑connected world, agile technology is the backbone of every successful enterprise. From cloud migrations to cybersecurity fortresses, an IT Solutions Company India has become the go‑to partner for businesses of every size. India’s IT sector, now worth over USD 250 billion, delivers world‑class solutions at unmatched value, helping startups and Fortune 500 firms alike turn bold ideas into reality.

1 | A Legacy of Tech Excellence

The meteoric growth of the Indian IT industry traces back to the early 1990s when reform policies sparked global outsourcing. Three decades later, an IT Solutions Company India is no longer a mere offshore vendor but a full‑stack innovation hub. Indian engineers lead global code commits on GitHub, contribute to Kubernetes and TensorFlow, and spearhead R&D in AI, blockchain, and IoT.

2 | Comprehensive Service Portfolio

Your business can tap into an integrated bouquet of services without juggling multiple vendors:

Custom Software Development – Agile sprints, DevOps pipelines, and rigorous QA cycles ensure robust, scalable products.

Cloud & DevOps – Migrate legacy workloads to AWS, Azure, or GCP and automate deployments with Jenkins, Docker, and Kubernetes.

Cybersecurity & Compliance – SOC 2, ISO 27001, GDPR: an IT Solutions Company India hardens your defenses and meets global regulations.

Data Analytics & AI – Transform raw data into actionable insights using ML algorithms, predictive analytics, and BI dashboards.

Managed IT Services – 24×7 monitoring, incident response, and helpdesk support slash downtime and boost productivity.

3 | Why India Wins on the Global Stage

Talent Pool – Over four million skilled technologists graduate each year.

Cost Efficiency – Competitive rates without compromising quality.

Time‑Zone Advantage – Overlapping work windows enable real‑time collaboration with APAC, EMEA, and the Americas.

Innovation Culture – Government initiatives like “Digital India” and “Startup India” fuel continuous R&D.

Proven Track Record – Case studies show a 40‑60 % reduction in TCO after partnering with an IT Solutions Company India.

4 | Success Story Snapshot

A U.S. healthcare startup needed HIPAA‑compliant telemedicine software within six months. Partnering with an IT Solutions Company India, they:

Deployed a microservices architecture on AWS using Terraform

Integrated real‑time video via WebRTC with 99.9 % uptime

Achieved HIPAA compliance in the first audit cycle

The result? A 3× increase in user adoption and Series B funding secured in record time.

5 | Engagement Models to Fit Every Need

Dedicated Development Team – Ideal for long‑term projects needing continuous innovation.

Fixed‑Scope, Fixed‑Price – Best for clearly defined deliverables and budgets.

Time & Material – Flexibility for evolving requirements and rapid pivots.

6 | Future‑Proofing Your Business

Technologies like edge AI, quantum computing, and 6G will reshape industries. By aligning with an IT Solutions Company India, you gain a strategic partner who anticipates disruptions and prototypes tomorrow’s solutions today.

7 | Call to Action

Ready to accelerate digital transformation? Choose an IT Solutions Company India that speaks the language of innovation, agility, and ROI. Schedule a free consultation and turn your tech vision into a competitive edge.

Plot No 9, Sarwauttam Complex, Manwakheda Road, Anand Vihar, Behind Vaishali Apartment, Sector 4, Hiran Magri, Udaipur, Udaipur, Rajasthan 313002

1 note

Text

Inside Turkey’s Most Promising App Development Firms

As the global shift toward digital innovation accelerates, Turkey is emerging as a powerhouse in mobile app development. Once known primarily for its tourism and textiles, the nation is now carving a niche in cutting-edge technology. Today, companies from around the world are turning to a mobile app development company in Turkey to bring their digital visions to life.

So, what makes these firms so promising? The secret lies in a combination of skilled talent, cost-effectiveness, and a deep commitment to innovation. In this blog, we’ll dive into the inner workings of Turkey’s most exciting tech companies, including Five Programmers, and explore why they’re leading the charge in mobile technology.

Why Turkey? The Rise of Mobile Innovation

Turkey offers a unique blend of Eastern resilience and Western modernization. With a young, tech-savvy population and supportive policies for startups, the country has become fertile ground for digital transformation.

Here’s why global businesses are choosing a mobile app development firm in Turkey:

High-Quality Developers: Turkish universities produce thousands of computer science graduates yearly.

Affordable Excellence: Labor costs are significantly lower than in the US or EU, yet the quality of work rivals top-tier global firms.

Strategic Location: Situated between Europe and Asia, Turkey enjoys time-zone flexibility and cultural diversity.

Bilingual Communication: Most teams operate fluently in English, which facilitates smooth global partnerships.

Inside the Work Culture of Turkish App Firms

The top firms in Turkey thrive on collaboration, transparency, and client-first development. From daily scrums to milestone-based deliveries, Turkish teams follow Agile methodologies to ensure efficient workflows.

These companies prioritize:

Transparent timelines

Real-time updates via Slack or Jira

Rapid prototyping with Figma and Adobe XD

End-to-end testing using automated QA tools

One standout example is Five Programmers, a company that has gained a reputation for offering scalable, robust, and beautifully designed mobile applications for clients across the globe.

Core Technologies Used by Turkey’s App Developers

A trusted mobile app development firm in Turkey doesn’t just deliver code—they build experiences. Their tech stacks are modern, diverse, and reliable:

Frontend: React Native, Flutter, Swift, Kotlin

Backend: Node.js, Firebase, Django, Laravel

Database: PostgreSQL, MongoDB, MySQL

DevOps & Deployment: Docker, AWS, GitHub Actions

Design Tools: Figma, Sketch, InVision

These tools enable the seamless creation of iOS and Android apps that are fast, responsive, and feature-rich.

Industries Fueling the Mobile Boom in Turkey

App development firms in Turkey cater to a wide range of industries, including:

Healthcare: Apps for patient monitoring, doctor consultations, and e-pharmacies

Education: Mobile learning apps, digital classrooms, and exam portals

E-commerce: Platforms for online shopping, inventory tracking, and payment integration

Logistics: Fleet tracking apps, warehouse management tools, and smart delivery systems

Finance: Mobile banking, crypto wallets, and investment management apps

These solutions are not only functional but also aligned with global UI/UX trends.

Five Programmers – Setting the Bar for App Excellence

Among the many app development companies in Turkey, Five Programmers has positioned itself as a premium choice for scalable and user-centric mobile solutions. Known for delivering apps with high performance, minimal bugs, and intuitive designs, the firm caters to both startups and established enterprises.

Whether you're launching a fintech app or building a health-tech platform, Five Programmers ensures the final product is ready for real-world challenges. With a global client base and a collaborative mindset, they transform ideas into digital success stories.

Custom Mobile Solutions Tailored for Every Business

Every industry has its own pain points—and Turkey’s top app firms understand that well. That’s why they emphasize customization at every stage:

Discovery & Consultation

Wireframing and UI/UX prototyping

Frontend and backend development

Continuous QA and performance testing

App Store Optimization (ASO) and marketing integration

This detailed approach ensures that every app isn’t just built—it’s engineered for success.

FAQs – Mobile App Development Firms in Turkey

Q1: How long does it take to develop a mobile app in Turkey?

A: A basic app may take 4–6 weeks, while complex platforms can span up to 3–4 months. Timelines are always discussed upfront.

Q2: Is it cost-effective to hire a mobile app development firm in Turkey?

A: Yes, significantly. Compared to developers in North America or Western Europe, Turkish firms offer competitive pricing without compromising quality.

Q3: What platforms do Turkish firms develop for?

A: Most firms build cross-platform solutions using Flutter or React Native and also offer native development for iOS and Android.

Q4: Can I get post-launch support from Turkish developers?

A: Absolutely. Firms like Five Programmers offer long-term maintenance, performance monitoring, and feature upgrades.

Q5: Are Turkish apps internationally compliant?

A: Yes. Turkish firms build apps to comply with GDPR, HIPAA, or ISO standards, depending on the target market.

Let’s Build Something Great – Contact Us Today

If you're planning to develop a robust, user-friendly mobile application, there’s no better time to partner with a leading mobile app development firm in Turkey. The teams here are creative, committed, and constantly pushing the boundaries of innovation.

📩 Get a Quote from Five Programmers – Our team will analyze your idea, provide timelines, and propose a cost-effective development roadmap.

🌐 Ready to transform your app idea into reality? Reach out to Five Programmers today and take the first step toward digital success.

0 notes

Text

Legacy Software Modernization Services In India – NRS Infoways

In today’s hyper‑competitive digital landscape, clinging to outdated systems is no longer an option. Legacy applications can slow innovation, inflate maintenance costs, and expose your organization to security vulnerabilities. NRS Infoways bridges the gap between yesterday’s technology and tomorrow’s possibilities with comprehensive Software Modernization Services In India that revitalize your core systems without disrupting day‑to‑day operations.

Why Modernize?

Boost Performance & Scalability

Legacy architectures often struggle under modern workloads. By re‑architecting or migrating to cloud‑native frameworks, NRS Infoways unlocks the flexibility you need to scale on demand and handle unpredictable traffic spikes with ease.

Reduce Technical Debt

Old codebases are costly to maintain. Our experts refactor critical components, streamline dependencies, and implement automated testing pipelines, dramatically lowering long‑term maintenance expenses.

Strengthen Security & Compliance

Obsolete software frequently harbors unpatched vulnerabilities. We embed industry‑standard security protocols and data‑privacy controls to safeguard sensitive information and keep you compliant with evolving regulations.

Enhance User Experience

Customers expect snappy, intuitive interfaces. We upgrade clunky GUIs into sleek, responsive designs—whether for web, mobile, or enterprise portals—boosting user satisfaction and retention.

Our Proven Modernization Methodology

1. Deep‑Dive Assessment

We begin with an exhaustive audit of your existing environment: code quality, infrastructure, DevOps maturity, integration points, and business objectives. The resulting roadmap pinpoints pain points, ranks priorities, and plots the most efficient modernization path.

2. Strategic Planning & Architecture

Armed with data, we design a future‑proof architecture. Whether it’s containerization with Docker/Kubernetes, serverless microservices, or hybrid-cloud setups, each blueprint aligns performance goals with budget realities.

3. Incremental Refactoring & Re‑engineering

To mitigate risk, we adopt a phased approach. Modules are refactored or rewritten in modern languages—often leveraging Java Spring Boot, .NET Core, or Node.js—while maintaining functional parity. Continuous integration pipelines ensure rapid, reliable deployments.
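One way to check functional parity during a phased rewrite is to run the old and new implementations side by side on the same inputs and flag any disagreement. The sketch below shows the idea. The shipping-cost rule and all function names are invented for illustration and do not describe any actual client system.

```python
def legacy_shipping_cost(weight_kg: float) -> float:
    """Stand-in for an old routine (hypothetical pricing rule)."""
    cost = 5.0
    if weight_kg > 10:
        cost = cost + (weight_kg - 10) * 0.5
    return cost


def modern_shipping_cost(weight_kg: float) -> float:
    """Refactored version that is meant to behave identically."""
    return 5.0 + max(0.0, weight_kg - 10) * 0.5


def find_parity_mismatches(old, new, inputs):
    """Return the inputs for which the two implementations disagree."""
    return [x for x in inputs if old(x) != new(x)]


samples = [0, 5, 10, 10.5, 25, 100]
mismatches = find_parity_mismatches(legacy_shipping_cost, modern_shipping_cost, samples)
print(mismatches)  # an empty list means the sampled inputs agree
```

A check like this only covers the sampled inputs, so boundary values (here, weights around 10 kg) deserve explicit samples.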

4. Data Migration & Integration

Smooth, loss‑less data transfer is critical. Our team employs advanced ETL processes and secure APIs to migrate databases, synchronize records, and maintain interoperability with existing third‑party solutions.
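The extract, transform, load sequence can be sketched in a few lines with Python's built-in `sqlite3` module. In this illustration, two in-memory databases stand in for the legacy and target systems, and the `customers` table, its columns, and the sample rows are all made up for the example. A row-count comparison at the end serves as a basic loss check.

```python
import sqlite3

# Two in-memory databases stand in for the legacy and target systems.
source = sqlite3.connect(":memory:")
target = sqlite3.connect(":memory:")

source.execute("CREATE TABLE customers (id INTEGER, full_name TEXT)")
source.executemany(
    "INSERT INTO customers VALUES (?, ?)",
    [(1, "  ada lovelace "), (2, "GRACE HOPPER"), (3, "alan turing")],
)
target.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")

# Extract: read every row from the legacy table.
rows = source.execute("SELECT id, full_name FROM customers").fetchall()

# Transform: trim whitespace and normalize capitalization.
cleaned = [(row_id, name.strip().title()) for row_id, name in rows]

# Load: insert inside one transaction so a failure leaves the target unchanged.
with target:
    target.executemany("INSERT INTO customers VALUES (?, ?)", cleaned)

# Verify: row counts must match before the migration is considered complete.
source_count = source.execute("SELECT COUNT(*) FROM customers").fetchone()[0]
target_count = target.execute("SELECT COUNT(*) FROM customers").fetchone()[0]
assert source_count == target_count, "row counts differ after migration"
print(target.execute("SELECT name FROM customers ORDER BY id").fetchall())
```

Real migrations usually add stronger verification, such as per-row checksums, but the count comparison shows where such a check would sit.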

5. Rigorous Quality Assurance

Automated unit, integration, and performance tests catch issues early. Penetration testing and vulnerability scans validate that the revamped system meets stringent security and compliance benchmarks.

6. Go‑Live & Continuous Support

Once production‑ready, we orchestrate a seamless rollout with minimal downtime. Post‑deployment, NRS Infoways provides 24 × 7 monitoring, performance tuning, and incremental enhancements so your modernized platform evolves alongside your business.

Key Differentiators

Domain Expertise: Two decades of transforming systems across finance, healthcare, retail, and logistics.

Certified Talent: AWS, Azure, and Google Cloud‑certified architects ensure best‑in‑class cloud adoption.

DevSecOps Culture: Security baked into every phase, backed by automated vulnerability management.

Agile Engagement Models: Fixed‑scope, time‑and‑material, or dedicated team options adapt to your budget and timeline.

Result‑Driven KPIs: We measure success via reduced TCO, improved response times, and tangible ROI, not just code delivery.

Success Story Snapshot

A leading Indian logistics firm grappled with a decade‑old monolith that hindered real‑time shipment tracking. NRS Infoways migrated the application to a microservices architecture on Azure, consolidating disparate data silos and introducing RESTful APIs for third‑party integrations. The results? A 40 % reduction in server costs, 60 % faster release cycles, and a 25 % uptick in customer satisfaction scores within six months.

Future‑Proof Your Business Today

Legacy doesn’t have to mean liability. With NRS Infoways’ Legacy Software Modernization Services In India, you gain a robust, scalable, and secure foundation ready to tackle tomorrow’s challenges—whether that’s AI integration, advanced analytics, or global expansion.

Ready to transform?

Contact us for a free modernization assessment and discover how our Software Modernization Services In India can accelerate your digital journey, boost operational efficiency, and drive sustainable growth.

0 notes

Text

Fix Deployment Fast with a Docker Course in Ahmedabad

Are you tired of hearing or saying, "It works on my machine"? That phrase signals a broken deployment process: code works fine locally but breaks on staging and production.

For developers and DevOps teams, this is exasperating and drains resources. The solution is containerisation, and a local Docker Course Ahmedabad promises the quickest way to master it.

The Benefits of Docker for Developers

Docker is more than a trendy term; it solves the problem of inconsistent environments. It lets you package your application together with all of its dependencies into a container that runs the same way anywhere. With that, “works on my machine” stops being a problem.

Using locally tailored exercises, a Docker Course Ahmedabad teaches you how to write Dockerfiles, manage your containers, and push your images to Docker Hub. The course gives you the chance to build, deploy, and scale containerised apps.

Combining Docker with DevOps Classes and Training in Ahmedabad for Seamless Deployment

DevOps practices take Docker to the next level and make errors less likely. Unlike courses that give only a broad overview of containers, DevOps Classes and Training in Ahmedabad dive into automation, CI/CD pipelines, monitoring, and orchestration, using tools such as Kubernetes and Jenkins.

Docker skills combined with DevOps practices mean that you’re no longer simply coding but rather deploying with greater speed while reducing errors. Companies, especially those with siloed systems, appreciate this multifaceted skill set.

Real-World Impact: What You’ll Gain

Speed: Deployment time can drop by up to 80%.

Reliability: Your application behaves consistently across dev, test, and production environments.

Confidence: Deployment problems are caught and resolved well before they reach end users.

Building these skills can significantly advance your career.

Conclusion: Transform Every DevOps Weakness into a Strategic Advantage

Fewer bugs and a faster release cadence are goals every team shares, and every developer wants confidence in each deployment. A comprehensive Docker Course in Ahmedabad or DevOps Classes and Training in Ahmedabad can help achieve both. Don’t let deployment obstacles hold you back. Highsky IT Solutions turns deployment challenges into successes through practical training focused on boosting your career with Docker and DevOps.

#linux certification ahmedabad#red hat certification ahmedabad#linux online courses in ahmedabad#data science training ahmedabad#rhce rhcsa training ahmedabad#aws security training ahmedabad#docker training ahmedabad#red hat training ahmedabad#microsoft azure cloud certification#python courses in ahmedabad

0 notes

Text

Mastering AWS DevOps Certification on the First Attempt: A Professional Blueprint

Embarking on the journey to AWS DevOps certification can be both challenging and rewarding. Drawing on insights from Fusion Institute’s guide, here’s a polished, professional article designed to help you pass the AWS Certified DevOps Engineer – Professional exam on your first try.

Read this: AWS Certifications

1. Why AWS DevOps Certification Matters

In today’s cloud-driven landscape, the AWS DevOps Professional certification stands as a prestigious validation of your skills in automation, continuous delivery, and agile operations. Successfully earning this credential on your first attempt positions you as a leader capable of handling real-world DevOps environments efficiently.

2. Solidify Your Foundation

Before diving in, ensure you have:

Associate-level AWS certifications (Solutions Architect, Developer, or SysOps)

Hands-on experience with core AWS services such as EC2, S3, IAM, CloudFormation

A working knowledge of DevOps practices like CI/CD, Infrastructure-as-Code, and Monitoring

Start by reviewing key AWS services and reinforcing your familiarity with the terminology and core concepts.

3. Structured Study Path

Follow this comprehensive roadmap:

Domain Mastery: Break down the certification domains and assign focused study sessions to cover concepts like CI/CD pipelines, logging & monitoring, security, deployment strategies, and fault-tolerant systems.

Hands-on Practice: Create and use practice environments with CloudFormation, CodePipeline, CodeDeploy, CodeCommit, Jenkins, and Docker to learn by doing.

Deep Dives: Revisit intricate topics, particularly fault tolerance, blue/green deployments, and operational best practices, to build clarity and confidence.

Mock Exams & Cheat Sheets: Integrate revision materials and timed practice tests from reliable sources. Address incorrect answers immediately to reinforce weak spots.

Read This for More Info: Top DevOps Tools

Conclusion

Achieving the AWS DevOps Professional certification on your first attempt is ambitious, but eminently doable with:

Strong foundational AWS knowledge

Hands-on experimentation and lab work

High-quality study resources and structured planning

Strategic exam-day execution

Fusion Institute’s guide articulates a clear, results-driven path to certification success, mirroring the approach shared by multiple first-time passers. With focused preparation and disciplined study, your AWS DevOps Professional badge is well within reach.

Your AWS DevOps Success Starts Here! Join Fusion Institute’s comprehensive DevOps program and get the guidance, tools, and confidence you need to crack the certification on your first attempt.

📞 Call us at 9503397273 / 7498992609 or 📧 email: [email protected]

0 notes

Text

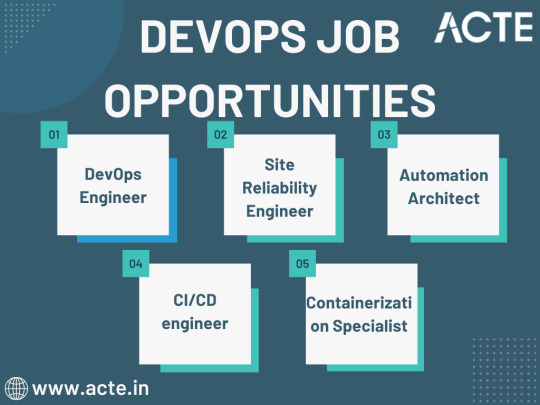

Embarking on a Digital Journey: Your Guide to Learning Coding

In today's fast-paced and ever-evolving technology landscape, DevOps has emerged as a crucial and transformative field that bridges the gap between software development and IT operations. The term "DevOps" is a portmanteau of "Development" and "Operations," emphasizing the importance of collaboration, automation, and efficiency in the software delivery process. DevOps practices have gained widespread adoption across industries, revolutionizing the way organizations develop, deploy, and maintain software. This paradigm shift has led to a surging demand for skilled DevOps professionals who can navigate the complex and multifaceted DevOps landscape.

Exploring DevOps Job Opportunities

DevOps has given rise to a spectrum of job opportunities, each with its unique focus and responsibilities. Let's delve into some of the key DevOps roles that are in high demand:

1. DevOps Engineer

At the heart of DevOps lies the DevOps engineer, responsible for automating and streamlining IT operations and processes. DevOps engineers are the architects of efficient software delivery pipelines, collaborating closely with development and IT teams. Their mission is to accelerate the software delivery process while ensuring the reliability and stability of systems.

2. Site Reliability Engineer (SRE)

Site Reliability Engineers, or SREs, are a subset of DevOps engineers who specialize in maintaining large-scale, highly reliable software systems. They focus on critical aspects such as availability, latency, performance, efficiency, change management, monitoring, emergency response, and capacity planning. SREs play a pivotal role in ensuring that applications and services remain dependable and performant.
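Availability targets of the kind SREs manage are usually stated as a percentage, which translates into a concrete downtime allowance. The sketch below shows that arithmetic only; the 99.9% target, the 30-day window, and the function name `allowed_downtime_minutes` are illustrative assumptions, not figures or names from this post.

```python
def allowed_downtime_minutes(availability_target: float, window_days: int = 30) -> float:
    """Return the downtime allowance, in minutes, for an availability target."""
    if not 0.0 < availability_target <= 1.0:
        raise ValueError("availability_target must be in (0, 1]")
    total_minutes = window_days * 24 * 60
    # Whatever fraction of the window is not covered by the target is the allowance.
    return total_minutes * (1.0 - availability_target)


if __name__ == "__main__":
    # Hypothetical target: 99.9% availability over a 30-day window.
    print(f"{allowed_downtime_minutes(0.999):.1f} minutes of downtime allowed")
```

Tightening the target by one "nine" cuts the allowance by a factor of ten, which is why capacity planning and emergency response matter so much in this role.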

3. Automation Architect

Automation is a cornerstone of DevOps, and automation architects are experts in this domain. These professionals design and implement automation solutions that optimize software development and delivery processes. By automating repetitive and manual tasks, they enhance efficiency and reduce the risk of human error.

4. Continuous Integration/Continuous Deployment (CI/CD) Engineer

CI/CD engineers specialize in creating, maintaining, and optimizing CI/CD pipelines. The CI/CD pipeline is the backbone of DevOps, enabling the automated building, testing, and deployment of code. CI/CD engineers ensure that the pipeline operates seamlessly, enabling rapid and reliable software delivery.
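To make the build, test, and deploy sequence concrete, here is a minimal sketch of a pipeline runner that executes stages in order and stops at the first failure. It is an illustration only: `run_pipeline` and the three stage functions are hypothetical stand-ins, not the API of Jenkins or any other CI/CD tool.

```python
from typing import Callable, List, Tuple

# A stage is a name plus a callable that returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order, stop at the first failure, return the stages that passed."""
    passed: List[str] = []
    for name, step in stages:
        if not step():
            print(f"stage '{name}' failed; stopping pipeline")
            break
        passed.append(name)
    return passed


# Hypothetical stand-ins for real build, test, and deploy commands.
def build() -> bool:
    return True


def run_tests() -> bool:
    return True


def deploy() -> bool:
    return True


if __name__ == "__main__":
    print(run_pipeline([("build", build), ("test", run_tests), ("deploy", deploy)]))
```

Stopping at the first failed stage is the property that keeps untested code from reaching the deploy step.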

5. Containerization Specialist

The rise of containerization technologies like Docker and orchestration tools such as Kubernetes has revolutionized software deployment. Containerization specialists focus on managing and scaling containerized applications, making them an integral part of DevOps teams.

Navigating the DevOps Learning Journey

To embark on a successful DevOps career, individuals often turn to comprehensive training programs and courses that equip them with the necessary skills and knowledge. The DevOps learning journey typically involves the following courses:

1. DevOps Foundation

A foundational DevOps course covers the basics of DevOps practices, principles, and tools. It serves as an excellent starting point for beginners, providing a solid understanding of the DevOps mindset and practices.

2. DevOps Certification

Advanced certification courses are designed for those who wish to delve deeper into DevOps methodologies, CI/CD pipelines, and various tools like Jenkins, Ansible, and Terraform. These certifications validate your expertise and enhance your job prospects.

3. Docker and Kubernetes Training

Containerization and container orchestration are two essential skills in the DevOps toolkit. Courses focused on Docker and Kubernetes provide in-depth knowledge of these technologies, enabling professionals to effectively manage containerized applications.

4. AWS or Azure DevOps Training

Specialized DevOps courses tailored to cloud platforms like AWS or Azure are essential for those working in a cloud-centric environment. These courses teach how to leverage cloud services in a DevOps context, further streamlining software development and deployment.

5. Advanced DevOps Courses

For those looking to specialize in specific areas, advanced DevOps courses cover topics like DevOps security, DevOps practices for mobile app development, and more. These courses cater to professionals who seek to expand their expertise in specific domains.

As the DevOps landscape continues to evolve, the need for high-quality training and education becomes increasingly critical. This is where ACTE Technologies steps into the spotlight as a reputable choice for comprehensive DevOps training.

ACTE Technologies offers carefully designed courses intended to impart both foundational knowledge and real-world, practical experience. Under the direction of knowledgeable educators, students can advance quickly on their path to becoming skilled DevOps engineers. The courses go beyond theory, providing practical insights into industry practices and challenges.

Your journey toward mastering DevOps practices and pursuing a successful career begins here. In the digital realm, where possibilities are limitless and innovation knows no bounds, ACTE Technologies serves as a gateway to a thriving DevOps career. With a diverse array of courses and expert instruction, you'll find the resources you need to thrive in this ever-evolving domain.

3 notes

Text

Edge-Native Custom Apps: Why Centralized Cloud Isn’t Enough Anymore

The cloud has transformed how we build, deploy, and scale software. For over a decade, centralized cloud platforms have powered digital transformation by offering scalable infrastructure, on-demand services, and cost efficiency. But as digital ecosystems grow more complex and data-hungry, especially at the edge, cracks are starting to show. Enter edge-native custom applications—a paradigm shift addressing the limitations of centralized cloud computing in real-time, bandwidth-sensitive, and decentralized environments.

The Problem with Centralized Cloud

Centralized cloud infrastructures still have their strengths, especially for storage, analytics, and orchestration. However, they're increasingly unsuited for scenarios that demand:

Ultra-low latency

High availability at remote locations

Reduced bandwidth usage

Compliance with local data regulations

Real-time data processing

Industries like manufacturing, healthcare, logistics, autonomous vehicles, and smart cities generate massive data volumes at the edge. Sending all of it back to a centralized data center for processing leads to lag, inefficiency, and potential regulatory risks.

What Are Edge-Native Applications?

Edge-native applications are custom-built software solutions that run directly on edge devices or edge servers, closer to where data is generated. Unlike traditional apps that rely heavily on a central cloud server, edge-native apps are designed to function autonomously, often in constrained or intermittent network environments.

These applications are built with edge-computing principles in mind—lightweight, fast, resilient, and capable of processing data locally. They can be deployed across a variety of hardware—from IoT sensors and gateways to edge servers and micro data centers.

Why Build Custom Edge-Native Apps?

Every organization’s edge environment is unique—different devices, network topologies, workloads, and compliance demands. Off-the-shelf solutions rarely offer the granularity or adaptability required at the edge.

Custom edge-native apps are purpose-built for specific environments and use cases. Here’s why they’re gaining momentum:

1. Real-Time Performance

Edge-native apps minimize latency by processing data on-site. In mission-critical scenarios—like monitoring patient vitals or operating autonomous drones—milliseconds matter.

2. Offline Functionality

When connectivity is spotty or non-existent, edge apps keep working. For remote field operations or rural infrastructure, uninterrupted functionality is crucial.
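One common way to keep working through an outage is a store-and-forward buffer: messages queue locally and are delivered in order once the link returns. The sketch below assumes a caller-supplied `send` function that returns `True` on success; the class name `StoreAndForward` and the `max_buffered` limit are hypothetical choices for illustration.

```python
from collections import deque
from typing import Callable, Deque


class StoreAndForward:
    """Buffer messages locally while offline; deliver them in order when sending succeeds."""

    def __init__(self, send: Callable[[str], bool], max_buffered: int = 1000) -> None:
        self._send = send  # assumed to return True on successful delivery
        # Bounded queue: when full, the oldest message is dropped first.
        self._queue: Deque[str] = deque(maxlen=max_buffered)

    def submit(self, message: str) -> None:
        """Queue a message and attempt delivery right away."""
        self._queue.append(message)
        self.flush()

    def flush(self) -> int:
        """Try to deliver queued messages in order; return how many were sent."""
        sent = 0
        while self._queue:
            if not self._send(self._queue[0]):
                break  # still offline; keep the message for the next attempt
            self._queue.popleft()
            sent += 1
        return sent

    @property
    def pending(self) -> int:
        return len(self._queue)
```

A bounded queue trades completeness for predictable memory use, which matters on constrained devices; a real deployment might persist the queue to disk instead.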

3. Data Sovereignty & Privacy

By keeping sensitive data local, edge-native apps help organizations comply with GDPR, HIPAA, and similar regulations without compromising on performance.

4. Reduced Bandwidth Costs

Not all data needs to be sent to the cloud. Edge-native apps filter and process data locally, transmitting only relevant summaries or alerts, significantly reducing bandwidth usage.
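As a rough illustration of local filtering, the sketch below reduces a window of raw sensor readings to a single summary record, so only that record would need to leave the device. The function name `summarize_window`, the field names, and the `alert_above` threshold are assumptions made for the example.

```python
from statistics import mean
from typing import List, Optional


def summarize_window(readings: List[float], alert_above: float) -> Optional[dict]:
    """Reduce a window of raw readings to one small summary record."""
    if not readings:
        return None  # nothing to report for an empty window
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": mean(readings),
        # Number of readings above the (hypothetical) alert threshold.
        "alerts": sum(1 for r in readings if r > alert_above),
    }


if __name__ == "__main__":
    window = [20.0, 21.0, 95.0]
    print(summarize_window(window, alert_above=90.0))
```

However many readings arrive in a window, the uplink carries one fixed-size record, which is where the bandwidth saving comes from.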

5. Tailored for Hardware Constraints

Edge-native custom apps are optimized for low-power, resource-constrained environments—whether it's a rugged industrial sensor or a mobile edge node.

Key Technologies Powering Edge-Native Development

Developing edge-native apps requires a different stack and mindset. Some enabling technologies include:

Containerization (e.g., Docker, Podman) for packaging lightweight services.

Edge orchestration tools like K3s or Azure IoT Edge for deployment and scaling.

Machine Learning on the Edge (TinyML, TensorFlow Lite) for intelligent local decision-making.

Event-driven architecture to trigger real-time responses.

Zero-trust security frameworks to secure distributed endpoints.
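The event-driven item in the list above can be illustrated with a very small in-process publish/subscribe bus, in which handlers run as soon as an event is published. This is a sketch of the pattern only; `EventBus` and the topic name used in the example are hypothetical and do not correspond to any specific edge framework.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List

Handler = Callable[[Any], None]


class EventBus:
    """Minimal in-process publish/subscribe bus."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        """Register a handler to be called for every event on the topic."""
        self._handlers[topic].append(handler)

    def publish(self, topic: str, payload: Any) -> int:
        """Call every handler registered for the topic; return how many ran."""
        handlers = self._handlers.get(topic, [])
        for handler in handlers:
            handler(payload)
        return len(handlers)


if __name__ == "__main__":
    bus = EventBus()
    bus.subscribe("temperature.high", lambda value: print(f"alert: {value}"))
    bus.publish("temperature.high", 98.6)
```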

Use Cases in Action

Smart Manufacturing: Real-time anomaly detection and predictive maintenance using edge AI to prevent machine failures.

Healthcare: Medical devices that monitor and respond to patient data locally, without relying on external networks.

Retail: Edge-based checkout and inventory management systems to deliver fast, reliable customer experiences even during network outages.

Smart Cities: Traffic and environmental sensors that process data on the spot to adjust signals or issue alerts in real time.
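The real-time anomaly detection mentioned for smart manufacturing can be approximated on-device with a rolling z-score: each new reading is compared with the mean and standard deviation of a recent window. This is one simple technique among many, swapped in here for illustration; the class name, window size, and threshold are assumptions, and production systems often use trained models instead.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flag readings that deviate strongly from a rolling window of recent values."""

    def __init__(self, window: int = 20, threshold: float = 3.0) -> None:
        self._values = deque(maxlen=window)
        self._threshold = threshold

    def observe(self, value: float) -> bool:
        """Return True if value is an outlier relative to the current window."""
        is_anomaly = False
        if len(self._values) >= 2:
            mu = mean(self._values)
            sigma = pstdev(self._values)
            if sigma > 0:
                # z-score: distance from the window mean in standard deviations
                is_anomaly = abs(value - mu) / sigma > self._threshold
        # The reading joins the window whether or not it was flagged.
        self._values.append(value)
        return is_anomaly
```

Because the detector keeps only a fixed-size window, its memory and compute cost stay constant, which suits the resource-constrained hardware described earlier.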

Future Outlook

The rise of 5G, AI, and IoT is only accelerating the demand for edge-native computing. As computing moves outward from the core to the periphery, businesses that embrace edge-native custom apps will gain a significant competitive edge—pun intended.

We're witnessing the dawn of a new software era. It’s no longer just about the cloud—it’s about what happens beyond it.

Need help building your edge-native solution? At Winklix, we specialize in custom app development designed for today’s distributed digital landscape—from cloud to edge. Let’s talk: www.winklix.com

#custom software development company in melbourne#software development company in melbourne#custom software development companies in melbourne#top software development company in melbourne#best software development company in melbourne#custom software development company in seattle#software development company in seattle#custom software development companies in seattle#top software development company in seattle#best software development company in seattle

0 notes