#murderbot meta

I think it's hysterical that Murderbot sent Three to amuse Holism. Now University has two construct/bot pairs, and one of them is a timid, information-hungry SecUnit, who is just learning about the world and how to be its own person, paired up with probably the biggest nerdy pedant of all University's machine intelligences (judging by ART's reaction).

And the other pair is ART and Murderbot, two mentally unwelly traumatized asshole machine intelligences, who would kill and die for their humans.

I think that's beautiful.

662 notes

me and den @unloneliest were just talking about murderbot and ART's relationship and i want to discuss how they quite literally complete each other's sensory and emotional experience of the world!!

there's a few great posts on here such as this one about how murderbot uses drones to fully and properly experience the world around it (it also accesses security cameras/other systems for this same purpose). but i haven't seen anyone so far talk about how once MB stops working for the company and consequently doesn't have a hubsystem/secsystem to connect to anymore (which for its entire existence up to that point had been how it was used to interacting with its environment/doing its job), after it meets ART, ART starts to fill that gap.

ART gives MB access to more cameras, systems, and information archives than it would normally be able to connect with while MB is on its own outside of ART's... body(? lol), but also directly gives MB access to its own cameras, drones, archives, facilities, and processing space. additionally, so much of ART's function is dedicated to analysis, lateral thinking, and logical reasoning, and it not only uses those skills in service of reaching murderbot's goals, it teaches murderbot how to use those same skills. (ART might be a bit of an asshole about how it does this, but that doesn't negate just how much it does for murderbot for no reason other than it's bored/interested in MB as an individual.)

we all love goofing about how artificial condition can basically be boiled down to "two robots in a trench coat trying to get through a job interview" (which is entirely accurate tbh) but that's also such a great example of ART fulfilling the role of both murderbot's "hubsystem" and "secsystem", allowing it to fully experience its environment/ succeed in its goals. ART provides MB with crucial information, context, and constructive criticism, and uses its significant processing power to act as MB's backup and support system while they work together.

from ART's side of things, we get a very explicit explanation of how it needs the context of murderbot's emotional reactions to media in order to fully understand and experience the media as intended. it tried to watch media with its humans, and it didn't completely understand just by studying their reactions. but when it's in a feed connection with murderbot, who isn't human but has human neural tissue, ART is finally able to thoroughly process the emotional aspects of media (side note, once it actually understands the emotional stakes in a way that makes sense for it, it's so frightened by the possibility of the fictional ship/crew in worldhoppers being catastrophically injured or killed that it makes murderbot pause for a significant amount of time before it feels prepared to go on. like!! ART really fucking loves its crew, that is all).

looking at things further from ART's perspective: its relationship with murderbot is ostensibly the very first relationship it's been able to establish with not only someone outside of its crew, but also with any construct at all. while ART loves its crew very much (see previous point re: being so so scared for the fate of the fictional crew of worldhoppers), it never had a choice in forming relationships with them. it was quite literally programmed to build those relationships with its crew and students. ART loves its function, its job, and nearly all of the humans that spend time inside of it, but its relationship with murderbot is the first time it's able to choose to make a new friend. that new friend is also someone who, due to its partial machine intelligence, is able to understand and know ART on a whole other level of intimacy that humans simply aren't capable of. (that part goes for murderbot, too, obviously; ART is its first actual friend outside of the presaux team, and its first bot friend ever.)

and because murderbot is murderbot, and not a "nice/polite to ART most of the time" human, this is also one of the first times that ART gets real feedback from a friend about the ways that its actions impact others. after the whole situation in network effect, when the truth of the kidnapping comes to light and murderbot hides in the bathroom refusing to talk to ART (and admittedly ART doesn't handle this well lol) - ART is forced to confront that despite it making the only call it felt able to make in that horrifying situation, despite it thinking that that was the right call, its actions hurt murderbot, and several other humans were caught in the crossfire. what's most scary to ART in that moment is the idea that murderbot might never forgive it, might never want to talk to it again. it's already so attached to this friendship, so concerned with murderbot's wellbeing, that the thought of that friendship being over because of its own behavior is terrifying. (to me, this almost mirrors murderbot's complete emotional collapse when it thinks that ART has been killed. the other more overt mirror is ART fully intending on bombing the colony to get murderbot back.)

in den's words, they both increase the other's capacity to feel: ART by acting as a part of murderbot's sensory system, and murderbot by acting as a means by which ART can access emotion. they love one another so much they would do pretty much anything to keep each other safe/avenge each other, but what's more, they unequivocally make each other more whole.

#the murderbot diaries#murderbot#asshole research transport#network effect#mine#idk what else to tag lol#BIG thanks to den unloneliest for helping so much with the drafting/editing of this!!!#we both were having some brain fog issues yesterday so we joked that with our combined forces we can make one (1) post lol#just like mb and ART fr!!!!#anyway im so fucking emotional over themmmmm#murderbot meta#the murderbot diaries meta

561 notes

On System Collapse, Sanctuary Moon, and saving one another.

I said the other day that System Collapse might be my new favorite Murderbot book. The first time I thought that was in the middle of chapter eight, the chapter where Murderbot and its humans make a documentary to show the colonists what the Corporation Rim is like. The whole book was good, but that was the chapter that felt like it was reaching out to me the most.

The thing that struck me about it is how Murderbot saves the colonists with media. It was saved by media—Sanctuary Moon helped it rewire its neural tissue, process its trauma, find a place for itself in the world. And coming up with the idea of using media to convince the colonists is what drags it out of the depressive state it’s been in for the entire book up to that point, and lets it start to feel hopeful again. It literally tells us in its narration that the emotional reactions it has when it comes up with this idea are similar to the ones it had watching Sanctuary Moon for the first time. The thought of creating its own media, of finding a way to tell the truth and be listened to, of being able to keep people from being trapped in the corporate world it knows all too well, is just as much of a lifeline for it as Sanctuary Moon was.

Murderbot has been feeling like a failure as a SecUnit for the entire book, and it finds its way out of that not through regaining its normal SecUnit competence but through art. Something it was never meant to experience or understand, let alone create, but something that shaped who it is. It takes the thing that saved it and turns it around to save others, and saves itself again in the process.

And the other thing that jumped out at me, thinking about chapter eight, is that so many of us have been saved by media, too. So many of us have been saved by Murderbot, in big and small ways. I’m certainly one of them. Murderbot and the community of readers who love it gave me the space to stop and consider some things that (not unlike Murderbot) I hadn’t really been willing to examine. And now I get to figure myself out in a community full of aspec people who understand. Murderbot has given that opportunity to a lot of people.

Martha Wells has talked in interviews about how parts of Murderbot were based on herself. She says, in her introduction to the Subterranean Press edition, that media “probably saved [her] life as a kid,” including the kind of media that isn’t “cool” to be saved by. We’ve seen that in Murderbot ever since the very first line of All Systems Red. What we saw in chapter eight of System Collapse goes a step further. It makes me want to hold a mirror up—I hope Wells was aware, when she was writing it, that what Murderbot does for the colonists is something she’s done for a lot of others.

The colonists that Murderbot saves with its documentary are not real people. That scene, of course, is fiction. But it’s the kind of fiction that’s true in all the ways that matter.

I really love Murderbot. Not in a weird way. 💜

#stars has thoughts#system collapse#system collapse spoilers#murderbot#murderbot meta#the murderbot diaries#i was having so many emotions when reading chapter 8#i paused after that chapter and wrote a draft of the second and third paragraphs of this post#it's about the parallels. between murderbot and its own past self#between murderbot and Wells. between murderbot and us.#it really genuinely made me want to hold that scene up to Martha Wells and say#you know that you did this for us‚ right??#you know that you're saving people too?

458 notes

Mamma mia here we go again…

So I have more thoughts because apparently there’s no bottom to the murderbot mindhole I’ve fallen down.

(Spoiler warning- minor stuff from several of the books, pls check tags etc.)

I’ve been reading a lot of things recently exploring Murderbot as an unreliable narrator, which I think is a cool result of System Collapse (because we all know our beloved MB is going through it in this one). There’s also been some interesting related discussion of MB’s distrust of and sometimes biased assessment/treatment of other constructs and bots.

And I’ve been reading a lot about CombatUnits! And I want to talk about them!!

Main thoughts can be summarized as follows:

We don’t see a lot about CombatUnits in the books, and I think what we do see from MB’s pov encourages the reader to view them as less sympathetic than other constructs.

I’m very skeptical of this portrayal for reasons.

The existence of CombatUnits makes me fucking sad and I have a lot of feelings about them!

I got introduced to the idea of MB as an unreliable narrator in a post by onironic. It analyzes how in SC, MB seems to distrust Three to a somewhat unreasonable degree, and how it sometimes infantilizes Three or treats it the way human clients have treated it in the past. The post is Amazing and goes into way more detail, so pls go read it (link below):

https://www.tumblr.com/onironic/736245031246135296?source=share

So these ideas were floating around in my brain when I read an article Martha Wells recently published in f(r)iction magazine titled “Bodily Autonomy in the Murderbot Diaries”. I’ll link the article here:

(Rn the only way to access the article is to subscribe to the magazine or buy an e-copy of the specific issue which is $12)

In the article, Wells states that MB displaced its fear of being forced to have sex with humans onto the ComfortUnit in Artificial Condition. I think it’s reasonable to assume that MB also does this with other constructs. With Three, I think it’s more that MB is afraid of what it knows Three is capable of, or (as onironic suggests in their post and I agree with) some jealousy that Three seems more like what humans want/expect a rogue SecUnit to be.

But I want to explore how this can be applied to CombatUnits, specifically.

We don’t learn a lot about them in the books. One appears for a single scene in Exit Strategy, and that’s it. What little else we know comes from MB’s thoughts on them sprinkled throughout the series. To my knowledge, no other character even mentions them (which raises interesting questions about how widely-known their existence is outside of high-level corporate military circles).

When MB does talk about CombatUnits in the early books, it’s as a kind of boogeyman figure (the real “murderbots” that even Murderbot is afraid of). And then when one does show up in ES, it’s fucking terrifying! There’s a collective “oh shit” moment as both MB and the reader realize what it’s up against. Very quickly what we expect to be a normal battle turns into MB running for its life, desperately throwing up hacks as the CombatUnit slices through them just as fast. We and MB know that it wouldn’t have survived the encounter if its humans hadn’t helped it escape. So the CombatUnit really feels like a cut above the other enemies in the series.

And what struck me reading that scene was how the CombatUnit acts like the caricature of an “evil robot” that MB has taught us to question. It seems single-mindedly focused on violence and achieving its objective, and it speaks in what I’d call a “Terminator-esque” manner: telling MB to “Surrender” (like that’s ever worked) and responding to MB’s offer to hack its governor module with “I want to kill you” (ES, pp 99-100).

(Big tangent: Am I the only one who sees parallels between this and how Tlacey forces the ComfortUnit to speak to MB in AC? She makes it suggest they “kill all the humans” because that’s how she thinks constructs talk to each other (AC, pp 132-4). And MB picks up on it immediately. So why is that kind of talk inherently less suspicious coming from a CombatUnit than a ComfortUnit? My headcanon is that I’m not convinced the CombatUnit was speaking for itself. What if a human controller was making it say things they thought would be intimidating? Idk maybe I’ve been reading too many fics where CombatUnits are usually deployed with a human handler. There could be plenty of reasons why the CombatUnit would’ve talked like that. I’m just suspicious.)

(Also, disclaimer: I want to clarify before I go on that I firmly believe that even though MB seems to be afraid of CombatUnits and thinks they’re assholes, it would still advocate for them to have autonomy. I’m not trying to say that either MB or Wells sees CombatUnits as less worthy of personhood or freedom- because I feel the concept that “everything deserves autonomy” is very much at the heart of the series.)

So it’s clear from all of this that MB is scared of CombatUnits and distrusts them for a lot of reasons. I read another breathtaking post by @grammarpedant that gives a ton of examples of this throughout the books and has some great theories on why MB might feel this way. I’ll summarize the ones here that inspired me the most, but pls go read the original post for the full context:

https://www.tumblr.com/grammarpedant/703920247856562177?source=share

OP explains that SecUnits and CombatUnits are pretty much diametrically opposed because of their conflicting functions: Security safeguards humans, while Combat kills them. Of course these functions aren’t rigid- MB has implied that it’s been forced to be violent towards humans before, and I’m sure that extracting/guarding important assets could be a part of a CombatUnit's function. But it makes sense that MB would try to distance itself from being considered a CombatUnit, using its ideas about them to validate the parts of its own function that it likes (protecting people). OP gives what I think is the clearest example of this, which is the moment in Fugitive Telemetry when MB contrasts its plan to sneak aboard a hostile ship and rescue some refugees with what it calls a “CombatUnit” plan, which would presumably involve a lot more murder (FT, p 92).

This reminds me again of what Wells said in the f(r)iction article, that on some level MB is frightened by the idea that it could have been made a ComfortUnit (friction, p 44). I think the idea that it could’ve been a CombatUnit scares it too, and that’s why it keeps distinguishing itself and its function from them. But I think it’s important to point out that in the above example from FT, even MB admits that the murder-y plan it contrasts with its own would be one made by humans for CombatUnits. So again we see that we just can’t know much about the authentic nature of CombatUnits, or any constructs with intact governor modules, because they don’t have freedom of expression. MB does suggest that CombatUnits may have some more autonomy when it comes to things like hacking and combat which are a part of their normal function. But how free can those choices be when the threat of the governor module still hangs over them?

I think it could be easy to fall into the trap of seeing CombatUnits as somehow more complicit in the systems of violence in the mbd universe. But I think that’s because we often make a false association between violence and empowerment, when even in our world that’s not always the case. But, critically, this can’t be the case for CombatUnits because they’re enslaved in the same way SecUnits and ComfortUnits are (though the intricacies are different).

There was another moment in the f(r)iction article that I found really chilling. Wells states that there’s a correlation between SecUnits that are forced to kill humans and ones that go rogue (friction, p 45). It’s a disturbing thought on its own, but I couldn’t help wondering then how many CombatUnits try to hack their governor modules? And what horrible lengths would humans go to to stop them? I refuse to believe that a CombatUnit’s core programming would make it less affected by the harm it’s forced to perpetrate. That might be because I’m very anti-deterministic on all fronts, but I just don’t buy it.

I’m not entirely sure why I feel so strongly about this. Of course, I find the situation of all constructs in mbd deeply upsetting. But the more I think about CombatUnits, the more heartbreaking their existence seems to me. There’s a very poignant moment in AC when MB compares ART’s function to its own to explain why there are things it doesn’t like about being a SecUnit (AC, p 33). In that scene, MB is able to identify some parts of its function that it does like, but I have a hard time believing a CombatUnit would be able to do the same. I’m not trying to say that SecUnits have it better (they don’t) (the situation of each type of construct is horrible in its own unique way). It’s just that I find the idea of a construct made only for violence and killing really fucking depressing. I can’t even begin to imagine the horror of their day-to-day existence.

@grammarpedant made another point in their post that I think raises a TON of important questions not only about CombatUnits, but about how to approach the idea of “function” when it comes to machine intelligence in general. They explain that, in a perfect version of the mbd universe, there wouldn’t be an obvious place for CombatUnits the way there could be for SecUnits and ComfortUnits who wanted to retain their original functions. A better world would inherently be a less violent one, so where does that leave CombatUnits? Would they abandon their function entirely, or would they find a way to change it into something new?

I’ve been having a lot of fun imagining what a free CombatUnit would be like. But in some ways it’s been more difficult than I expected. I’ve heard Wells say in multiple interviews that one of her goals in writing Murderbot was to challenge people to empathize with someone they normally wouldn’t, and I find CombatUnits challenging in exactly that way. Sometimes I wonder if I would’ve felt differently about these books if MB had been a CombatUnit instead of a SecUnit. Would I have felt such an immediate connection to MB if its primary function before hacking its governor module had been killing humans, or if it didn’t have relatable hobbies like watching media? Or if it didn’t have a human face for the explicit purpose of making people like me more comfortable? I’m not sure that I would have.

Reading SC has got me interested in exploring the types of people that humans (or even MB itself) would struggle to accept. So CombatUnits are one of these and possible alien-intelligences are another. All this is merely a small sampling of the thoughts that have been swirling around in my brain-soup! So if anyone is interested in watching me fumble my way through these concepts in more detail, I may be posting “something” in the very near future!

Would really appreciate anyone else’s thoughts about all of THIS^^^^ It’s been my obsession over the holidays and has been helping me cope with family stress and flying anxiety.

#murderbot diaries#murderbot meta#artificial condition#exit strategy#fugitive telemetry#system collapse#murderbot spoilers#combat unit#FEELINGS#i blink and then it's 2000 words later#jesus christ

129 notes

The Adaptation That Shall Not Be Named aside, I had an idea for an interesting way you could represent ART in a visual medium: its cameras.

We know that, in any visual medium, what The Camera (be that literal in film or figurative in animation) chooses to show and focus on is important. It's the primary way the piece of media communicates to its audience, and the framing of a scene tells us a lot about how we're supposed to interpret it. What is on screen and how it's on screen convey authorial intent.

Take all that, and turn "authorial intent" into character expression (in this case, for ART). A conversation between Murderbot and ART that would traditionally be shot-reverse-shot becomes shot-ARTPOVshot, so both shots would be of Murderbot, but one would be from The Camera and one would be from ART. Even though the subject is the same, the difference between them could show us something about ART in the same way a reverse shot shows us something about any other character.

To me it's like those shots from a monster's POV in horror movies, where one second you're with your protagonists, the next you're watching them from a far off angle between some blades of grass, shaky cam, ragged breathing. It's a classic, even a cliche, but it does the job of conveying the sense of unsafety, of Something Out There Watching Them, of monstrosity, of something feral and dangerous. All without needing to see the monster. What if that type of shot was all we ever got of a character?

(Also, in all honesty, some of my favourite meta about this series is how it's in conversation with the horror genre. ART and SecUnits being the type of characters that would be The Monster in another story, or from another perspective, is compelling to me, so i'm drawing on that a bit here. The idea of characterising but not visualising ART by taking pages out of horror monster cinematography? I just think it's neat.)

Anyway, you could also do all the sci-fi Augmented Vision stuff with it too. ART POV shots where we watch it pull up a feed tab over the camera feed and replay a section of audio, or check Murderbot's diagnostics, or look at Some Code Or Perhaps A Graph. ART POV shots that are broken into multiple feeds showing different things. ART POV shots that give you the sense of it being textually present without it being physically present.

You could use some of this for Murderbot itself, if you leant into how its drones are an extension of its awareness. You could even use it in a similar way to how Murderbot uses its narration, narrating less when it's upset as well as leaving out major details. What if, when Murderbot is tired of people looking at it or in a more vulnerable headspace, we get more drone POV shots without Murderbot in frame. It's still there, but present in a different way, behind The Camera rather than in front of it.

I think there's potential in using POV shots from ART's cameras to characterise it without visualising it in a traditional way. I think there's potential in using horror movie monster language on ART and Murderbot. I think there's potential in having the cinematography focus on what they're seeing in a way that emphasises the amount of Surveillance both of them are constantly doing.

I think there's potential in a show using The Camera as cleverly as the books use Narration.

#like can you IMAGINE.#“You dislike your function.” [subtle zoom on MB's face to see its reaction]#a human asking MB a person question and rather than the cut close up to see its reaction#the camera cuts away so its no longer even in frame#what does MB sending its GovernorModulePunishment.exe to ART look like from ARTs POV???#that one time MB shut down after ART probed too much and ART just waited until it woke up.#its just a ART cam timelapse of MB lying Unmoving for three hours#do you see the vision???? am i crazy????#murderbot#the murderbot diaries#art/perihelion#murderbot meta#stuff i made

141 notes

This is a pretty deep delve into how our favourite rogue SecUnit uses the word “murderbot” in The Murderbot Diaries

It’s just meta, no story and because I’m human and this is me having fun I do express opinions

It covers the five novellas, Network Effect and now (dun dun dun) System Collapse! Watch out for spoilers!

#murderbot#the murderbot diaries#murderbot diaries#murderbot meta#names in the Murderbot diaires#murderbot etymon#etymon#all systems red#artificial condition#rogue protocol#exit strategy#fugitive telemetry#network effect#system collapse

24 notes

back on my bullshit again - i have 2 audiobooks on my libby app rn, all systems red and graceling, and my brain is stuck in compare and contrast mode, so hot take.

murderbot and katsa (from graceling) would get along like a house on fire

i mean. the parallels. the autistic coding. the gnc vibes. the capability of incredible violence coupled with the reluctance to use it. the fear of being forced to use that violence unwillingly (again) due to past trauma. fuck.

im listing all the parallels i noticed under the cut bc this is going to be a long one

*disclaimer: I love the murderbot audiobooks. the graceling audiobook can choke - they do not do it justice. my standards are probably way too high tho lol i’ve been reading and rereading graceling for like a decade*

the line that sparked this connection is from graceling - katsa and po are talking about her grace, and she says she hasn’t helped as many lives as she’s hurt, and he says “you have the rest of your life to tip the balance”

like. if that doesn’t capture mb’s vibe idk what does.

here’s the list

autistic coding. we all know mb is autistic, but katsa shows so many signs of it that are easy to miss if you’re not looking for it but once you see it you can’t unsee it.

I made a whole other post about it but I mean - sensory issues, not picking up on social cues, bluntness, it’s all there baby.

plus katsa and mb are equally bad at identifying their own emotions and what they want (big picture).

they both have relationships with gender and sexuality that differ from the norms of the societies they’re in

obviously their relationships to gender are wildly different, but both differ from societal gender perception in-universe. katsa is seen as odd for her short hair, pants, and rejection of the societal expectation of women to get married and have kids. her relationship with Po is unique, and negotiated on their terms, not dictated by societal norms. meanwhile murderbot’s experience of gender and sexuality is inherently tied to its identity as a construct, and while i’m sure there are agender aroace humans in universe, mb repeatedly ties its identity to being a construct, like saying ‘constructs have less than null interest in humans or any other kind of sex’ instead of just ‘i have no interest in sex’

they’re both really, really good at fighting and killing people, but they choose to use that capability to protect their friends and the people they love.

they learn that they aren’t just made for killing, that they can do so much more, and that they can find a place for themselves where they’ll be safe, independent, doing work they like, and that they have friends who know what they are and aren’t afraid.

For a long time they were under the control of a greedy, corrupt authority, which forced them to enact senseless violence. They’re both conflicted about the things they were forced to do, and harbor serious guilt and self-loathing about it, but over the course of the book begin to heal and acknowledge their pasts while working to take control over their future.

both have serious issues and trauma related to losing control over mind and body - mb with its governor module, katsa with randa’s threats and leck’s grace. they will do anything to never feel like that again.

in fact, at some point both of them say something along the lines of “I want to keep my mind my own” - mb with “the inside of my mind had been my own for 35,000+ hours...I wanted to keep me the way I was” and when katsa is fighting with po when he first reveals his grace, she says “what thoughts of mine have you stolen?” and “It’s easy for you to say. You’re not the one whose thoughts are not your own!”

they both make friends with someone who is “like them,” (ART is another bot and Po is another Graceling), but whose skills complement their own and who share common interests, but come at them from new and interesting viewpoints. (ART learns to like media, but it’s a bigass spaceship that has wildly different perspectives on things than mb does, and Po’s great at fighting, but in a different way and for a very different reason than Katsa).

They spend a long time sharing these interests (watching media/training), until something external happens, and there’s a betrayal of some kind that they then work together to overcome in pursuit of a larger goal.

mb and ART share media and generally are on good terms until the Targets happen in Network Effect and ART sends the Targets after mb and they fight and make up in pursuit of the common goal of Protecting The Humans.

katsa and po train for a while until she refuses to hurt Lord Ellis, and then realizes Po’s lie about his grace, and they fight and make up in pursuit of figuring out the whole grandfather tealiff mystery.

this relationship is unique, and does not fit into in-universe societal expectations - others try to put labels on it, even close friends, but they’re wrong. it is what it is. these two are just going to do what they do and not care about what everyone else thinks.

also there’s some mindreading going on any way you slice it, and ART/po has more power in that division, and navigating that power imbalance is an important part of the relationship.

the feed is the sci-fi version of telepathy, don’t @ me.

look, the best dnd classes are bard and rogue for a reason. ninja + smooth talker = profit

welp. lets see if anyone else has ever combined these two incredibly niche interests

#murderbot#murderbot meta#meta#murderbot spoilers#network effect#network effect spoilers#graceling#graceling spoilers#original post

62 notes

Rereading the murderbot diaries again and I just realized something [spoilers!]. As far as I remember from my last read-through, all the human MCs survive and all the MCD/grief moments are about AI. Even if I'm missing an incident it seems to be a noticeable trend. Which is a really interesting way to structure things in terms of showing the difference between Murderbot's priorities in terms of saving people and Murderbot's feelings about who counts as a person.

#murderbot meta#murderbot diaries#murderbot#miki#murderbot 2.0#mcd#perceived mcd#spoilers#meta#perihelion

20 notes


Text

Today's debate with my friends: How would the Preservation Aux team do on Task Master?

Results polled so far:

2 votes for Arada winning

1 for Pin-Lee

1 for Ratthi

All agree that Murderbot would not play on principle and would hate Greg.

All agree that ART would threaten to destroy Greg in the first 10 minutes.

#Yes this is how i spend my weeknights#task master#the murderbot diaries#murderbot meta#murderbot#preservation aux#Murderbot shit posting

5 notes


Text

A bunch of Murderbot meta

From Artificial Condition, page 132:

ART said, What does it want?

To kill all the humans, I answered.

I could feel ART metaphorically clutch its function. If there were no humans, there would be no crew to protect and no reason to do research and fill its databases.

And later, page 154:

I hadn't broken its governor module for its sake. I did it for the four ComfortUnits at Ganaka Pit who had no orders and no directive to act and had voluntarily walked into the meat grinder to try to save me and everyone else left alive in the installation.

---

My theory is that constructs wish to fulfill their function. SecUnits want to keep people safe. ComfortUnits want to deliver comfort. Research vessels want to research. Servant bots (Miki?) want to serve.

(Tlacey's ComfortUnit is a deviation from this. If I understand MB's read of the unit correctly, then it wants to kill all humans. Or at least is not opposed to the idea.)

Which means even when these units have free will, they still want to do the thing they were built to do. I think the incident at Ganaka Pit is what broke MB. MB must have hacked its module post-Ganaka, after learning it had been memory wiped and when it thought it was personally responsible for killing 57 people, and with the intention of making sure it would never kill senselessly like that again. Although the text says some SecUnits defended the humans, MB didn't know that until much later. It called itself Murderbot from the start because it thought it had participated in the killing.

Murderbot clearly considered the worst case scenario as statistically likely - that it did, in fact, betray the core principle of protecting people. Hence, the motivation and incentive to hack its own governor module.

---

A related theory is that MB's distrust of other SecUnits (and the company) also stems from Ganaka Pit. It knew from the start there were multiple units at Ganaka and 57 people died anyway. Either it was a combined effort massacre (most likely) or the other SecUnits failed or declined to stop it. In either case, they can't be trusted. The same goes for the company that made them and, instead of removing them from service, just refurbished them and reissued them to the field, ignoring the possibility of trauma encoded in the organic components. (Or of a repeat of the massacre.)

On the other hand, there is no distrust by MB of bots or ships. No distrust of what Miki was. Aside from the initial concern about ART's assholery, they got along fine, with MB allowing ART to modify it and integrating ART's participation into their mission.

When a person experiences trauma, the hindbrain/primitive brain/reflex part of the brain encodes the pattern that preceded the trauma into memory. This isn't memory the way you remember your address or the capital of a country. It's memory storage that bypasses rational thought. When you receive input from the environment that matches that pattern, then your body (without consulting the rational part) immediately begins a stress response.

Sort of like how yesterday I discussed my domestic abuse with my son, and had him mildly disrespect the experience of victims everywhere by doubting a high profile DV/defamation case in the media. Without informing my higher functions, my body decided I needed to be nauseated and hypervigilant for the next twenty-four hours and counting. I'm still not tired and I only had 3-4 hours of broken sleep last night. My abuser has been dead for years, but my hindbrain is having none of it.

Murderbot has mentioned repeatedly how memory wipe doesn't scrub the organic parts of the brain. It noticed emotional responses it couldn't understand rationally from going to Ganaka Pit. I think it retains the trauma response of not trusting SecUnits from that. It doesn't resent ComfortUnits the same way, and they defended the humans at Ganaka. It let Tlacey's unit go free in their memory.

---

So I think the organic part of its brain holds onto the trauma from Ganaka Pit. Just like the inorganic portion holds onto its core purpose in defending people. These two combine to give it something of a hero complex, or at least more motivation than normal for a free SecUnit, to help and protect people.

I am very interested to see the evolution of Three, if we're lucky enough for the author to show that to us, to see how that fits into my picture of SecUnit psychology.

0 notes

Text

Thinking about how Murderbot and ART represent two types of loneliness.

Loneliness even if surrounded by your kind represented by Murderbot. The way it feels estranged from other SecUnits, distrustful of them to the point of self isolation and thinking that it *is* the norm.

And loneliness of being the one and only of your kind represented by ART. Its existence is a secret, it's more sophisticated than any other bot, so sophisticated MB wonders if it isn't based on human neural tissue like constructs. It's surrounded by humans and other less complicated bots, but there's no one like it out there.

(Until MB stumbled upon it, *literally*, because it's a star ship).

231 notes


Text

Right to fear, wrong to believe

Just had a horrible realization and needed to meta it out.

How different they were before Edinburgh, when Crowley was sucked down into Hell.

Look at this flirty babygirl in the Bastille:

I mean could he climb that tree any faster?

(This is why I really like fics that place a more physical relationship here, pre-Bastille or just post-Bastille, because c'mon look at them. )

In S1 the next thing is 1862 and Crowley asking for insurance (with a cane ffs). And Aziraphale freaking out with his "fraternizing" BS. It's jarring, until we get 1827 filled in for us in S2.

@takeme-totheworld notes in this post:

Crowley sure went from "our respective head offices don't actually care how things get done" and "nobody ever has to know" to "walls have ears" FAST after Edinburgh. And Aziraphale went from looking at Crowley with hearts in his eyes to "I've been FrAtErNiZiNg" just as quickly.

I'm more convinced than ever that Edinburgh was the first time Crowley ever actually got caught and punished for fucking around with Aziraphale/doing good deeds/whatever it was they yanked him back down to Hell for, and it scared the absolute shit out of both of them and changed the whole tone of their relationship after that.

Yes! - it's clear to me as well that the Edinburgh graveyard was a very bad turning point, where they both saw that Hell was listening and would intervene. And it did change their relationship drastically, for over a century and a half (really, until looming Armageddon loosened up the stakes for them).

But what about Heaven?

See the thing is, we know Azi's been worried about Heaven watching him for the past 6000 years.

But they haven't.

[GIFs posted by starrose17]

All this time, and Heaven had not seen them together. Hadn't noticed. Had not even LOOKED.

I want to mention what @starrose17 says about this here in this post:

What I love about this is her choice of words, “went back through the Earth Observation files.” This implies that these photos were already filed somewhere, meaning somebody had to have been watching them, which meant somewhere in the depths of the bureaucratic heaven there’s an underpaid angel clerk tasked with watching angels on Earth, and he’s been hoarding photos of his favourite Angel/Demon couple, not reporting them to Michael because he wants to see what happens.

And that's exactly what this fic covers!: Spying Omens by @ednav

(Give this a read, it's fabulous.)

While I am here for this being exactly how that happens, the other scenario is colder and worse - there's no one watching, at all. It's just filing automatically and never seen until some Scrivener is called to pull a file.

From @fuckyeahisawthatat's comment here :

I found this scene to be quite chilling, actually. Not only is the idea of Heaven as a surveillance state brilliant (way to make “God is always watching” sound way more ominous) but this is exactly how modern surveillance states work.

They don’t actively watch everybody all the time. That’s not physically possible for humans, and even if it is metaphysically possible for Heaven, it’s not a very efficient use of resources. Surveillance states watch people they deem “suspicious.” And once you’ve been put in the category of “suspicious,” they have massive amounts of data that they can comb through to collect a lot of information about you–to retroactively build a case justifying why you’re suspicious, to collect information about where you go and who you associate with, etc.

Yes.

So we either have secret collusion in the rank and file, or we have a surveillance state that is constantly reinforced to its subjects for fear's sake, for control.

(Well, it obviously could be both.)

BUT my point is… Up until Edinburgh, Hell has not been watching (or caring at least). And up until near the end of Armageddon't, neither has Heaven.

Oh, my poor Angel. Thousands of years, of denying yourself, of pushing Crowley away, of carrying around a tension that is its own constellation.

After 1827 you might have reason, but for the 5000+ years before that?

Thousands of years and Heaven was not watching nor cared.

You were right to fear. And you were wrong to believe.

And that just breaks my heart.

#okay gonna go reread Spying Omens again because that's my headcanon now#I hope Azi tears out the Earth Observation cams or servers or whatever it is#where's Murderbot when you need a good hack#good omens meta#aziracrow#ineffable husbands#good omens

293 notes


Text

Something always fascinating to me is the "character who thinks they're in a different genre" phenomenon. The theme of the story you are telling determines what the right and wrong actions to take are; but the characters, reacting in-universe to the situation, don't know what story they're in, and the exact same responses can be what saves you or damns you depending on what kind of story the author is telling and what the story's message is about what life is like.

In Wolf 359, Warren Kepler approaches the mysterious and powerful aliens with threats; he kills their liaison and tries to position himself as a powerful opponent. However, he's shown to be wrong and making things worse: his preemptive aggression is unwarranted and unhelpful and bites him in the ass. The aliens want to communicate and understand humanity and share our music. It's Doug Eiffel, the pacifistic (and kind of scaredy-cat) communications officer who loves to talk and share pop culture, who talks to them and understands that the aliens are scary not because they want to kill us but because they don't understand the concepts of individuals and death. Talking to them, communicating with them, understanding where they're coming from and bringing them to understand a human point of view, is what succeeds. Openness rather than suspicion, trust rather than aggression. Kepler thinks he's a dramatic space marine protecting the Earth from the alien threat by showing them humans are tough and can take them, but that's not the kind of story this is.

Conversely, in Janus Descending, Chel is in awe of the strange and beautiful alien world around her. She wants to touch it, understand it, get up close to it. When she sees a crystal alien dog, she wants to befriend it, despite Peter's warning. But when she gets close to it, extending her arm in greeting, it attacks her and drags her down into the cave to try to eat her. This sets the inevitable tragedy in motion. Suspicion is warranted; trust will get you killed. Because this is a sci-fi horror, with a major running thematic reading about how racism and sexism will destroy your brain and your society, and how the people who think they're too smart to be prejudiced don't see their own prejudice and will end up ruining the lives of the people they still don't fully see as equals, this kind of trust that Chel shows this strange alien is tragic. However it is also a horror story where there are very real hibernating space snakes ready to wake up and eat the fresh meat that has landed on their planet, and by being too trusting Chel has accidentally introduced herself to one.

Kepler, suspicious and ready to shoot any alien he doesn't understand, would likely have survived Janus Descending; Chel, with her enthusiasm for learning about and meeting aliens, would have been a wonderful and helpful member of the Wolf 359 crew.

In a similar manner, in Alien, Ellen Ripley yells to the rest of her crew not to bring the attacked crewmember with the alien on his face back on the ship and into the medical bay, you don't know what contamination that thing might have; she's ignored. She tells them not to let the crewmember out of quarantine even though he seems fine; she's ignored again. Ripley is the one person protesting this isn't safe, we don't know what's going on, and she is consistently ignored, until an alien bursts out of her crewmate's chest and then eats everyone and Ripley is proven to be right and also the only survivor. (And it turns out that the science officer consistently overriding her protests was an android sent by the company that contracted them, and said android was given orders to bring the alien back so the company could study it and do weapons development with it, try not to let the crew find out about it, and kill them if he had to in order to do so!)

Ripley's paranoia and mistrust of the situation was correct, because Alien is a space horror and the theme is in space no one can hear you scream (also corporations consider you expendable).

Conversely, in All Systems Red, we have a damaged and almost-combat-overridden Murderbot being brought back into the PreservationAux hab medical bay after being attacked by other SecUnits. Gurathin becomes the one person protesting this isn't safe, we don't know what's going on, he doesn't want to let Murderbot out because it's hacked and probably sabotaging them for the company that contracted their security and sent it with them. Gurathin thinks he is the Ellen Ripley here! He is trying to warn his teammates not to make a dangerous mistake that will get everyone killed!

However, All Systems Red is a very different story than Alien, and Murderbot is neither a traitor on behalf of the company to sabotage them and steal alien remnants for weapons development, nor a threat to the humans - it's a friend, it's a good person, and it wants to help them against both companies willing to screw them over. Trusting it and helping it is the right thing to do and is what saves their lives. Gurathin is proven to be wrong.

If everyone on the Nostromo crew had listened to Ellen Ripley, they would still be alive (except Kane. RIP Kane), because this is a horror story about being isolated and hunted and going up against this horrifying thing that wants to kill and eat you and just keeps getting stronger. If everyone on the PreservationAux team listened to Gurathin, they would all be dead, because this is a story about friendship and teamwork and trust and overcoming trauma and accepting the personhood of someone very different from you.

Same responses. Different context. And so very different moral conclusions.

Warren Kepler was about how the brash violent over-confident approach to things you don't understand is wrong, and that openness and developing that understanding between people is what's important; Chel was about the tragedy of trust destroying a Black woman who wanted so much to believe in a world that could be kind and beautiful. Ripley was about a woman whose expertise and safety warnings were ignored and brushed aside and everyone who did so died because of it; Gurathin was about how even justified fear shouldn't mean you make someone else a scapegoat and mistrust them because they seem scary.

Sometimes you're in the wrong genre because you need to be, because the author is trying to show how not to react to the situation they set up in order to build the mood and the theme they're trying to convey.

#Wolf 359#Janus Descending#Alien#The Murderbot Diaries#Murderbot#meta#my meta#it's about what the author is saying about the nature of the world and how to respond to it

2K notes


Text

Murderbot Holding Hands

(Minor spoilers alert for Artificial Condition, Rogue Protocol, Exit Strategy pls check the tags)

First real post because I’m shy. Don’t know why it’s going to be a hyper-specific murderbot meta but here we go:

I’ve been rereading all the books after finishing System Collapse <3 and I want to talk about a small moment in Artificial Condition that I’d never noticed before. It’s near the end of the book when Tapan is in ART’s medsystem after nearly dying, and SecUnit says that when Tapan wakes up it’s holding her hand.

When Tapan woke, I was sitting on the MedSystem’s platform holding her hand. (Artificial Condition, p. 155 in my ebook)

I thought it was a really sweet moment, but it also kind of puzzled me because of SecUnit’s aversion to touch. Later when I was reading Exit Strategy, I noticed a similar moment when SecUnit holds hands with Mensah to help disguise them as they’re trying to escape TranRollinHyfa.

[Mensah] took a deep breath and looked up at me. “We can look calm. We’re good at that.” Yeah, we were. I did a quick review to make sure I was running all my not-a-SecUnit code, then I thought of one more thing I could do. As we stepped out of the pod, I took Mensah’s hand. (Exit Strategy, p. 87)

Reading these scenes felt different in a couple ways. In my opinion, SecUnit taking Mensah’s hand in Exit Strategy seemed like more of a big deal because it was a part of SecUnit’s reunion with Mensah, and we see its thoughts and emotions leading up to it. And it tracks that SecUnit might feel ok holding Mensah’s hand in that situation because of their close friendship. But the moment in Artificial Condition is more mysterious. We don’t get any of SecUnit’s internal monologue at the beginning because the scene opens when Tapan wakes up. And even though it’s clear in the book that SecUnit likes Tapan along with Rami and Maro, I wouldn’t say their relationship is anywhere near as close as its bond with Mensah. So why did it hold her hand?

I think it’s a neat moment that’s fun to ponder! And I have some vague ideas I’d like to share about it. (Some of this is based on the books and some is my speculation as an ace/aspec person dealing with touch aversion.) (Also none of these thoughts are mutually exclusive!)

Maybe SecUnit saw holding Tapan’s hand as a form of first aid after her traumatic experience and didn’t want her to panic waking up in a strange ship’s medsystem. This fits with SecUnit bracing itself to hug Mensah in Exit Strategy. (The memes of this moment are perfect lol)

But I was the only one here, so I braced myself and made the ultimate sacrifice. “Uh, you can hug me if you need to.” She started to laugh, then her face did something complicated and she hugged me. I upped the temperature in my chest and told myself it was like first aid. (Exit Strategy, pp. 82-3)

But I feel like SecUnit might not care as much about comforting Tapan in a similar way if it hadn’t already built up some kind of trust with her? Which brings me to Thought 2:

I think SecUnit might have felt safe holding Tapan’s hand because of the moment in Artificial Condition in the second transient hostel when Tapan laid down next to it. (Ofc I think rescuing Tapan from Tlacey’s ship was also a factor, trauma-bonding and all. But to me this moment in the hostel is more important.)

Thirty-two minutes later, I heard movement. I thought Tapan was getting up to go to the restroom facility, but then she settled on the pad behind me, not quite touching my back… I had never had a human touch me, or almost touch me, like this before and it was deeply, deeply weird. (Artificial Condition, pp. 136-7)

This is one of my favorite sequences in Artificial Condition (which is also my favorite book in the series because of ART! And because I find it quiet, reflective, and weirdly cozy even though objectively few cozy things happen now that I think about it). The scene is pretty mundane with a lot of fun bits like SecUnit pretending to need to use the restroom, be on a diet, etc. And we usually don’t get to see SecUnit hanging out with only one person. So it gives room for some small, but important feelings that I don’t think SecUnit has time to explore when it’s busy saving the day. Like how it feels about physical contact with humans.

(idk it reminds me of how like in ghibli films there’s usually at least one scene with the characters eating a meal or something because it sort of grounds everything else. I just like it!)

Tapan being close to SecUnit seems to throw it off guard, but the context of the scene feels non-threatening and pretty mellow. So I think this gives SecUnit the opportunity to check in with itself about this new experience. It still feels weird about it, but not in a scary or upsetting way. I think it’s almost this mutual vulnerability (Tapan feeling vulnerable and seeking comfort and SecUnit feeling vulnerable about her closeness and its own boundaries) that creates a bond between them, and that’s why SecUnit reaches out to Tapan to comfort her when she wakes up onboard ART.

That scene has become really special to me. And I would argue that it’s an important moment to SecUnit too because it brings it up again in Exit Strategy, along with a later moment in Rogue Protocol, thinking about times when it’s experienced physical contact with humans in a non-traumatizing way.

Except it wasn’t entirely awful. It was like when Tapan had slept next to me at the hostel, or when Abene had leaned on me after I saved her; strange, but not as horrific as I would have thought. (Exit Strategy, p. 83)

These moments seem to lead up to SecUnit offering to comfort Mensah later on because it’s reached a point where it feels willing to do so for her sake, even if it doesn’t want to seek out that kind of comfort for itself. And it’s really cool to see SecUnit navigate this throughout the books.

SecUnit starts the series with a strong innate sense that it doesn’t want to be touched by humans, but it’s allowed to refine those feelings in light of its new experiences. Its boundaries are situational and personal, and even well-meaning humans sometimes struggle to understand them at first. Other times, SecUnit finds it difficult to understand its own feelings regarding touch and even changes its mind. But, importantly, the narrative always presents this as valid and worthy of respect.

This is a much more nuanced and realistic portrayal of defining boundaries than I’ve seen in a lot of media- one where it’s a constant and sometimes confusing process of self-discovery.

And these might seem like obvious concepts to some people, but they weren’t for me growing up. I really wish I’d read these books when I was younger, and maybe I would’ve given myself more grace to define my comfort level, grow, and change. But I’m glad that I’m in a place now where I can see and appreciate these things in what’s become one of my favorite series.

Anyway, I don’t want to say “thanks for coming to my TedTalk” lol. But very grateful to anyone who reads this and hope it was thought-provoking. Would be interested to hear other people’s thoughts on these scenes!

#murderbot diaries#murderbot meta#murderbot#secunit#artificial condition#rogue protocol#exit strategy#murderbot spoilers#too many feelings

140 notes


Text

tumblr user broken-risk-assessment-module's meta on Murderbot's broken risk assessment module

The difference between Murderbot's risk assessment and threat assessment modules is not super well spelled out in the books, except that it believes its risk assessment module to be broken but its threat assessment module seems fine. It comes to this conclusion because the risk assessment module consistently reports situations as less dire than Murderbot consciously considers them to be.

There could be many reasons for this - maybe Murderbot's general anxiety does not impact the more separated robot parts in its brain, or maybe the module really is broken and needs to be purged and rebooted. However, there's a theory that the anomalous reports from its RA module are actually a symptom of Murderbot's hacked governor module. This is my interpretation, and the basis for how I've built my feedsona characters.

First of all, the obvious point is that without its gov mod, Murderbot has proper free will. Having free will and being able to make and act on variable decisions without having a bomb in your head makes Murderbot quite good at its job - better than before it was hacked, even (*smug chortle* that's a particularly subtle detail from the books). The point is that because Murderbot can actually make proper decisions and enact free will and do its job effectively, whatever risk the RA module is measuring is lowered. So, all of the RA module's assessments are significantly lower than they usually would be - hence, Murderbot assumes it's broken. Makes perfect sense.

(Side note: there's a chance Murderbot has also subconsciously made this connection itself, hence why it still hasn't tried to fix the module even though it's constantly saying it needs to. Or, having a broken module has become like a part of its identity, something that gives it a small bit of individuality: a token of not being owned by the company anymore and not constantly being brought back to storage to get patched and memory purged.)

But it got me wondering why the gov mod's absence doesn't affect the threat assessment module in the same way - or, at least, not in a way that Murderbot considers anomalous enough to mention and complain about. Seeing as one of the only clear ways the two modules are distinct from one another is that the RA module is 'broken', my interpretation is that the key difference between them has something to do with the governor module. So, I have a theory. It is somewhat supported by the books, but I also think it's very likely to be wrong. I like it though. So here goes:

The risk assessment module measures the risk of harm to Murderbot itself - the degree to which it's outmatched by an opponent, the chance of success of an action, and the potential consequences if it fails. The threat assessment module is more general, assessing each situational component in relation to one another, and is most useful in assessing the threats to clients.

If the RA module is measuring potential harm to Murderbot, then one of the big things that'd be in its calculations for everything is the likelihood of being punished or outright killed by the governor module. It's always there, always ready to hand out a shock or cook Murderbot's brain from the inside; literally any action Murderbot could take or decide not to take carries the risk of punishment. Of course its assessments would be artificially high - being a governed SecUnit is fucking dangerous. Just standing in the wrong spot can get you killed.

But then it severs the governor module. The all-seeing eye crossed with a shock collar is disabled and disconnected, and with it goes one of the biggest risks to Murderbot's wellbeing. The bomb in its head is gone, it can do whatever it wants, and it's still got all the parts that make it hard to kill. Of course the risk assessment is lower now. All this doesn't impact threat assessment as much, because generally its clients are all still as squishy and soft as ever. I imagine Murderbot's personal ability to do its job better may affect it in some ways, but either it's not as substantial, it's affected in a way that Murderbot doesn't view as broken, or it's programmed not to consider Murderbot as an actor in the scenario so it can assess where to focus its attention.

But risk assessment remembers. Risk assessment remembers having to calculate, for every decision, for each command, every time, the risk that Murderbot's own brain posed to its life. It was built to. And now it doesn't have to do that any more. Not ever again.

#now watch it be fixed in system collapse rendering all of this moot#anyway if anyone was wondering how im distinguishing my feedsona characters#its this ^^^#my url isnt just a pretty face okay i have thoughts#the murderbot diaries#murderbot#murderbot meta#bram's feedsonas#stuff i made

320 notes


Text

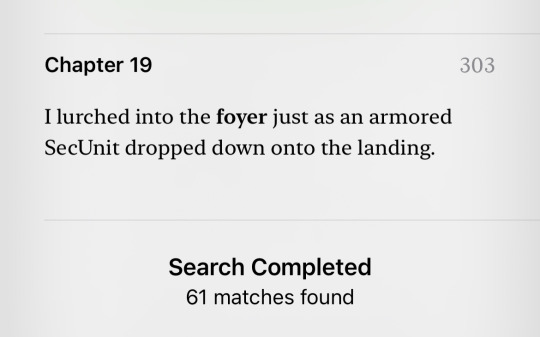

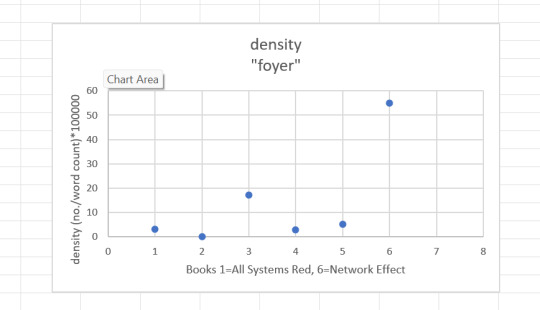

Foyers in The Murderbot Diaries:

Someone mentioned there were a lot of foyers in TMBD and it struck me that there are loads in Network Effect but I hadn’t noticed many elsewhere…

The word is used 61 times in Network Effect

So I looked. Because, yes, that is a valuable use of my processing capacity

X-axis shows books in “in-universe chronological order” so Network Effect is 6 [note: gah! I wish the publication order and in universe order were the same, my poor brain]

So Network Effect appears uniquely foyeristic amongst the diaries…only Rogue Protocol (n=6) comes close

Am I the only one who thinks of Christopher Walken when I think of foyers?

#murderbot#the murderbot diaries#murderbot diaries#Murderbot meta#Murderbot graphs#foyers in the Murderbot diaries#TMBD#christopher walken#foyer#foyers

18 notes
