#nodejs microservice in docker container

Run Nodejs Microservices in Docker Container | Deploy MongoDB in Docker ...

Full video link: https://youtu.be/ltNr8Meob4g

Hello friends, a new video tutorial on running NodeJS microservices in a Docker container, with MongoDB in a Docker container, is published on the codeonedigest YouTube channel. It is aimed at API developers and programmers and includes examples.

Apply Now!

https://www.disabledperson.com/jobs/41234520-cloud-software-engineering-advisor-evernorth

The Core Technology, Engineering & Solutions department is hiring an AWS Cloud Engineer to work on implementing AWS cloud integration solutions.

How you'll make a difference:

This individual will work directly with business partners and other IT team members to understand desired system requirements and to deliver effective solutions within the SAFe Agile framework methodology. The Software Engineering Advisor will participate in all phases of the development and system support life cycle. Primary responsibilities are to design and implement AWS cloud integration solutions that will interact with the TriZetto Facets software package. The ideal candidate is a technologist that brings a fresh perspective and passion to solve complex functional and technical challenges in a fast-paced and team-oriented environment.

Key Responsibilities:

Cloud Development including design, coding, unit testing, triaging and implementation.

Understand and practice CI/CD concepts and implementation - build automation, build pipelines, deployment automation, etc.

Participate in and conduct code reviews with scrum teams to approve changes for Production deployment

Establish/Improve/Maintain proactive monitoring and management of supported assets ensuring performance, availability, security, and capacity

Maintains a robust and collaborative relationship with delivery partners and business stakeholders

Able to work in a fast paced, demanding, and rapidly changing environment

Able to work with distributed/remote teams

Able to work independently as well as collaboratively

Able to focus and prioritize work

Required Experience/Qualifications:

5+ years in an equivalent role

Bachelor's degree in a related field or equivalent work experience

Agile trained 5+ Years

Understanding and experience with Agile development methodology and concepts

Experience with RESTful APIs and SOAP services

Experience with microservices architecture

Strong knowledge of Data Integration (e.g. Streaming, Batch, Error and Replay) and data analysis techniques

Experience with Security Concepts (e.g. Encryption, Identity, etc.)

Working knowledge of test automation concepts 3+ Years

Software development experience with Python and NodeJS

Experience with the following AWS Services: Glue, Lambda, Step Functions, EKS, EventBridge, SQS, SNS, S3, and RDS

Experience with Apache Spark and Kafka

Experience developing with containers (e.g. Docker, EKS, OpenShift, etc.)

Experience with GitHub, Jenkins, and Terraform

Experience with AWS API Gateway

Experience with NoSQL databases (MongoDB, DynamoDB, etc.)

Experience working with SQL Server

Must be a current contractor with Cigna, Express Scripts, or Evernorth. Evernorth is a new business within the Cigna Corporation.

This role is WAH/Flex which allows most work to be performed at home. Employees must be fully vaccinated if they choose to come onsite.

New post published on Strange Hoot (How To’s, Reviews, Comparisons, Top 10s, & Tech Guide): https://strangehoot.com/how-to-setup-microsoft-azure-console/

How to Setup Microsoft Azure Console

Introduction

Microsoft is a well-known information technology company based in the United States of America. It was founded in 1975 by Bill Gates and Paul Allen. The company has launched some very successful software products, among them the Windows operating system and the MS Office products. Microsoft (MS) Excel in particular is hard to replace. Over time, the company also began making its own PCs and tablets, the Xbox and so on.

Let us see the range of Microsoft products in the following categories.

Software

People know Microsoft for its software products, as mentioned above: the Windows operating system and the MS Office suite, which contains applications such as MS-Word, MS-Excel, MS-PowerPoint, MS-Outlook, MS-Access, MS-OneNote and so on. These are desktop applications used in everyday work.

After Google introduced the concept of Google Apps and a one-stop solution for all applications used in one account, Microsoft came up with the same ideology and packaged all its desktop applications and started providing the same on the Web.

Microsoft Teams and Skype are the most used solutions in these COVID-19 days for professionals working from home. They get connected via these apps for group meetings and discussions.

Office 365 is an email solution for small and large businesses that provides email services with an easy-to-use interface, together with online office products to work on. OneDrive is available for storing documents and files, which can be quickly attached to an email and sent to the recipients.

Hardware

Microsoft has also launched PCs, laptops and tablets for all types of users. For gamers, it has launched the Xbox, a widely used console. Gaming accessories are also available from Microsoft.

Development

For building desktop and Web applications, Microsoft offers developers the .NET platform. Visual Studio, Windows Server and other development solutions for machine learning (ML), game development, app development and microservices development are also part of the Microsoft product range.

Cloud Computing Platform

Azure is a cloud computing platform offered by Microsoft. It was launched in 2010 and has gradually expanded its range of solutions. The platform provides virtual instances for creating development environments on Windows and on Linux distributions such as CentOS and Ubuntu. In addition, it provides services to set up cache servers, configuration servers and many more. It lets you customize the Azure console based on your application needs.

MS Azure also provides high-end configuration environments. Based on your industry, you can:

Set up Azure for Machine Learning

Set up Azure for Blockchain

Set up Azure for Gaming Development

Set up Azure for Security Solutions

Set up Azure for Analytics Solutions

Set up Azure for Quantum Solutions

Set up Azure for Hybrid Cloud Solutions

Azure Console Features

Before we get into the detailed steps of setting up Azure, let us see some of the best features of Azure Console.

Azure Console has an easy-to-use interface where the new user can get easily acquainted with the features.

Azure Console dashboard gives an at-a-glance overview of the installed services and their setup.

Azure Console supports open source technology platforms such as Linux. This widens the scope of users getting onto the Azure platform.

Azure Console also provides easy scaling options of resources based on the usage of services.

Azure Console is a unified portal on which you can build, manage and monitor all the products.

Azure Console also supports Role Based Access Control (RBAC) where one can have admin rights to manage all the services whereas the other user can be restricted to only deploy the service.
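The idea behind role-based access can be illustrated with a few lines of Python. This is only a sketch: the role names `admin` and `deployer`, the permission names and the `is_allowed` helper are hypothetical and are not Azure's built-in role definitions.

```python
# Hypothetical roles and permissions, for illustration only; these are
# not Azure's built-in role definitions.
ROLE_PERMISSIONS = {
    "admin": {"create", "deploy", "delete", "manage_access"},
    "deployer": {"deploy"},
}


def is_allowed(role, action):
    """Return True if the given role grants the requested action."""
    return action in ROLE_PERMISSIONS.get(role, set())


print(is_allowed("admin", "delete"))     # True
print(is_allowed("deployer", "deploy"))  # True
print(is_allowed("deployer", "delete"))  # False
```

An unknown role falls back to an empty permission set, so access is denied by default.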

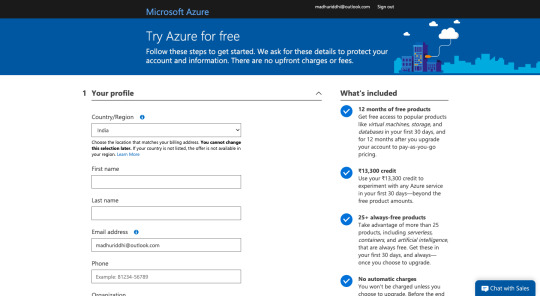

Setup Azure

It is easy to set up the Azure console. Let us see the steps to create an instance in the Microsoft Azure free tier service.

Open your browser and go to https://azure.microsoft.com/en-in/overview/. The Microsoft Azure home page appears.

Click the Free account link on the top right corner.

Click the Start free button. The Sign in page appears.

Enter your Outlook email address in the text box with the Email, phone or Skype placeholder.

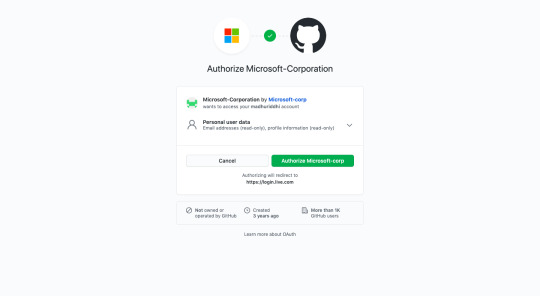

If your GitHub account is linked with an Outlook account, you can use the Sign in with GitHub option to proceed.

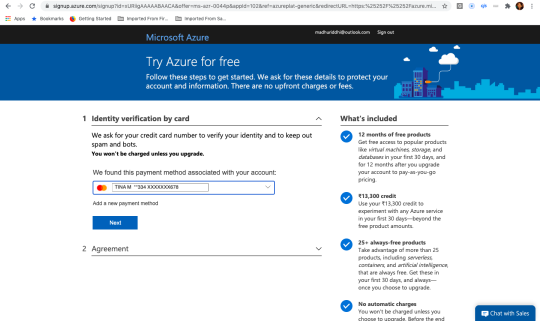

Click the Next button after you enter the email address. Enter the password in the Password box. Click Next. The Try Azure for free page appears as below.

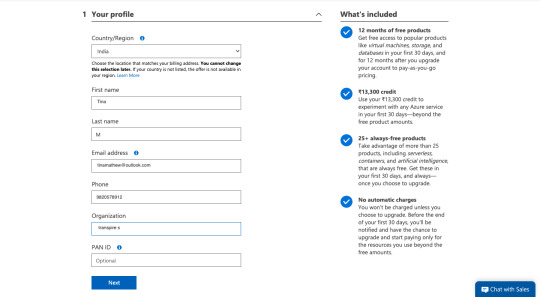

The first step is to enter your company details. Fill all the details as below.

Enter your first name, last name, email address, phone number and name of the company. Click the Next button.

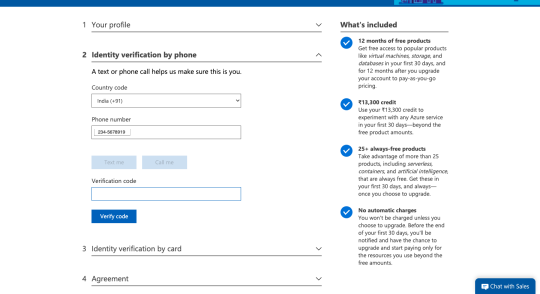

The Identity verification by phone section appears. Enter the phone number you wish to link with your Azure account.

Once you enter the number, the Text me and the Call me buttons are enabled.

If you wish to receive OTP (One Time Password) by SMS, click the Text me button.

If you wish to receive OTP (One Time Password) by getting a call, click the Call me button.

Enter the OTP in the Verification code box.

Click the Verify code button to verify OTP. Upon successful verification, you will get to step 3.

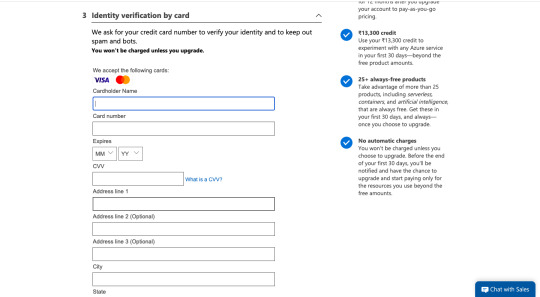

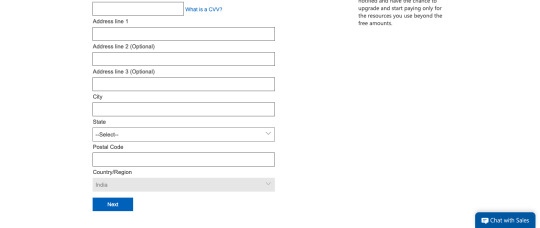

The Identity verification by card form appears as below.

Enter your credit card information in this form.

Enter your name in the Cardholder name box.

Select the month and the year of your card expiry in the Expires section. (This information is printed on your physical card.)

Enter the CVV number in the CVV box. (This is the 3-digit number on the back of your card.)

Enter the address information including City, State, Postal Code information.

Click the Next button. You will receive OTP (One Time Password) on your mobile number linked to the card.

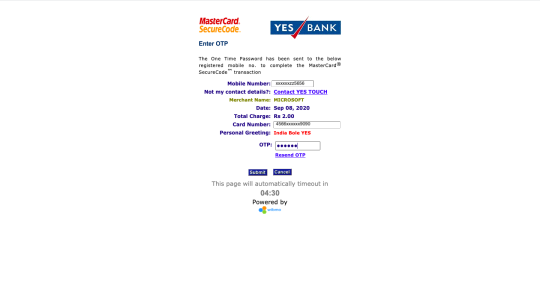

Enter the OTP in the page that appears as below.

In the OTP box, enter the code you have received.

Click the Submit button. The credit card information is saved successfully and shown as below.

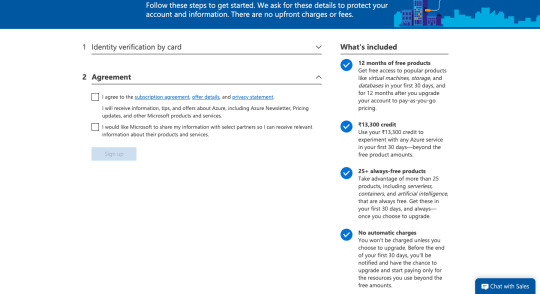

Click the Next button. The agreement section appears.

Select the I agree.. check box. The Sign up button is enabled.

Click the Sign up button. The welcome screen appears.

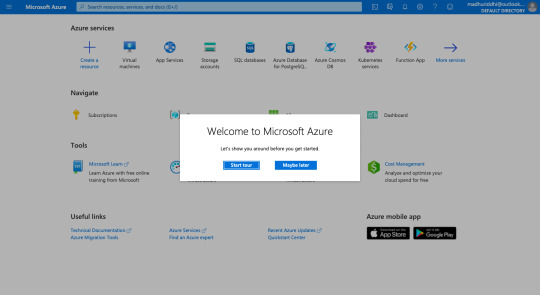

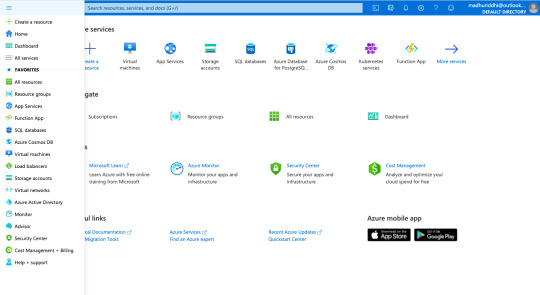

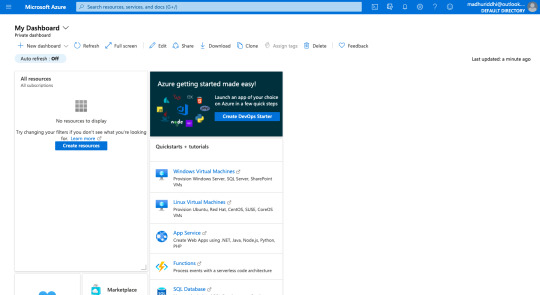

Click the Maybe later button to skip the tour. The dashboard screen appears. Click the icon on the top left corner. The panel on the left with all the services is shown.

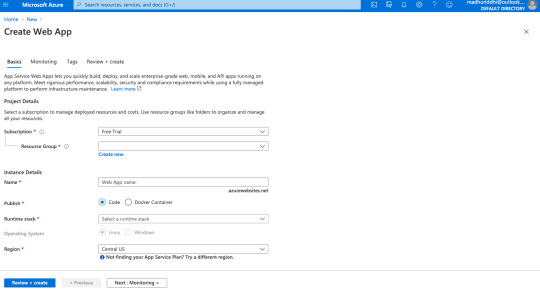

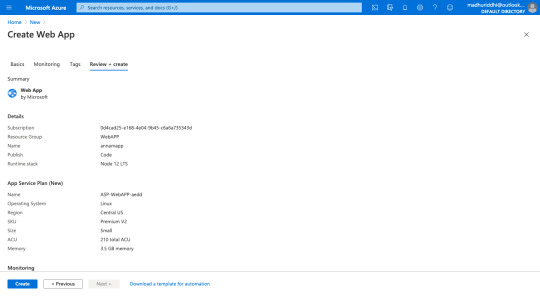

Click the Create a resource option and select Web app. The steps to create an instance for the Web app resource appear.

Enter the name of the resource group. If not created before, click the Create new link. The pop up with the text box appears. Enter the name you wish to give.

In the Instance details section, enter the name of your Web application. Select the Docker Container option if you wish to deploy your application with docker.

Select the runtime stack from the drop-down. If your application is built on NodeJS, select NodeJS. The operating system is selected automatically based on the chosen stack. If you have selected the NodeJS stack, the operating system will be Linux.

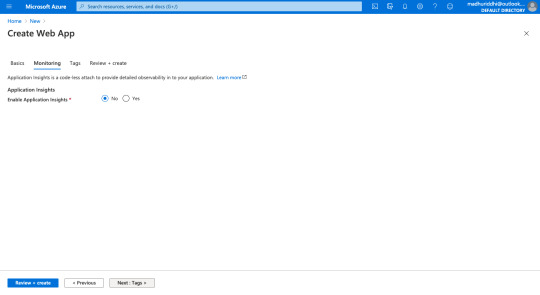

Click the Review+create button. The Monitoring tab appears.

Keep the default option and click the Review+create button. The Tags tab appears.

Leave the details blank and click the Review+create button. The Review+create tab appears. All the details of your instance appear on this page.

Click the Create button. The instance/console is created successfully.

You can manage the newly created instance from the dashboard.

Conclusion

Microsoft Azure is often positioned as cheaper than Amazon Web Services (AWS), although actual costs depend on the workload. For Microsoft developers and DevOps teams, it is convenient to set up Azure because the user interface (UI) is similar to other Microsoft portals.

Ultimately, the decision about the right platform for system deployments, maintenance and enhancements is in the hands of the organization and its DevOps team.

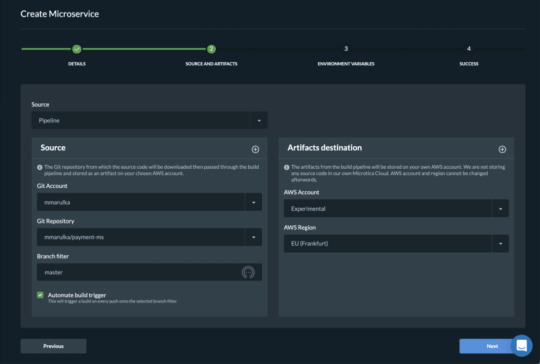

Cloud-native applications are on the rise thanks to the scalability and flexibility they provide. However, this type of architecture has its own challenges: defining delivery procedures, delivering applications independently, and gaining observability of the numerous building blocks in a system are some of them. Implementing a CI/CD pipeline will solve most of them.

A CI/CD pipeline is the key to automating the steps of the software delivery process. This includes initiating code builds, running automated tests, and deploying to a testing or production environment. One CI/CD pipeline consists of multiple steps executed one after another or in parallel.

There are two known pipeline syntaxes: Scripted and Declarative. The key difference between them is their flexibility. Although both syntaxes are based on Groovy DSL, the Scripted pipeline syntax is less restrictive and allows almost anything that can be done in Groovy. This means that the script can easily become hard to read and write. The Declarative syntax, on the other hand, is more restrictive and provides a well-defined structure, which is ideal for simpler CI/CD pipelines. This syntax supports the “pipeline as code” concept: you write the pipeline in a file that can be checked into a source control management system like Git.

To make it more convenient for developers to set up a CI/CD pipeline, Microtica supports the Declarative syntax to define build procedures along with the source code.

Declarative CI/CD Pipelines

For the pipeline process to work, each component/microservice should have a file named microtica.yaml on the root level in its source code. This file contains the specification of the build process. During the build process, Microtica extracts the specification from the code. Then, it creates a state machine to drive the defined process. In order to ensure a single source of truth for pipeline specification, Microtica does NOT allow changes in the Build pipelines from the UI. Changes will only take effect from the YAML file provided in each source code repository. We find this very helpful in avoiding possible confusion of definition, maintenance, and, most importantly, debugging problems in the process.

Define a CI/CD pipeline

There are no limitations to the steps of the build pipeline that you can define. Here is one example of a microtica.yaml file that defines a build pipeline for a NodeJS application. This pipeline executes the three commands defined in the commands section.

    Pipeline:
      StartAt: Build
      States:
        Build:
          Type: Task
          Resource: microtica.actions.cmd
          Parameters:
            commands:
              - npm install
              - npm test
              - npm prune --production
            sourceLocation: "$.trigger.source.location"
            artifacts: true
          End: true

Pipeline: the root key, which defines the start of the pipeline section.

StartAt: defines the first action of the pipeline.

States: defines a list of states for the particular pipeline.

Type: the type of pipeline action. Always set it to Task.

Resource: the action that the engine will use. Currently, we support microtica.actions.cmd, an action that executes bash scripts.

Parameters: a set of parameters that are given to the action.

commands: a list of bash commands. Here, you define your custom scripts for build, test, code quality checks, etc.

sourceLocation: the location where the action can find the source code. You should NOT change this. Once pulled from the Git repository, Microtica stores the artifacts in a location specified by the user for the particular component/microservice. $.trigger.source.location defines that location.

artifacts: a value that defines whether this build will produce artifacts that are stored in S3 and used during deployment. Set this value to false if the artifact of the build is a Docker image.

End: defines that this is the last action in the pipeline.

Microtica supports bash commands for the execution action. In the future, we are planning to allow developers to define their own custom actions.
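To make the structure of the specification concrete, here is a minimal Python sketch that checks a pipeline specification for the keys described above. It is not Microtica's implementation: the `check_pipeline` function and the `SUPPORTED_RESOURCES` set are hypothetical, the specification is written as a plain dictionary rather than parsed from YAML, and the checks only mirror the rules stated in this section.

```python
# Hypothetical sketch: sanity-check a pipeline specification that mirrors
# the microtica.yaml keys described in this section. Not Microtica's code.

SUPPORTED_RESOURCES = {"microtica.actions.cmd"}  # the only action named in the text


def check_pipeline(spec):
    """Return a list of problems found in the spec (empty list means OK)."""
    problems = []
    pipeline = spec.get("Pipeline", {})
    states = pipeline.get("States", {})
    start = pipeline.get("StartAt")

    if start not in states:
        problems.append(f"StartAt refers to unknown state: {start!r}")

    for name, state in states.items():
        if state.get("Type") != "Task":
            problems.append(f"{name}: Type must be Task")
        if state.get("Resource") not in SUPPORTED_RESOURCES:
            problems.append(f"{name}: unsupported Resource")
        commands = state.get("Parameters", {}).get("commands", [])
        if not commands:
            problems.append(f"{name}: no commands defined")

    if not any(state.get("End") for state in states.values()):
        problems.append("no state is marked End: true")
    return problems


# The NodeJS example from this section, written as a Python dictionary.
example = {
    "Pipeline": {
        "StartAt": "Build",
        "States": {
            "Build": {
                "Type": "Task",
                "Resource": "microtica.actions.cmd",
                "Parameters": {
                    "commands": ["npm install", "npm test", "npm prune --production"],
                    "sourceLocation": "$.trigger.source.location",
                    "artifacts": True,
                },
                "End": True,
            }
        },
    }
}

print(check_pipeline(example))  # []
```

Returning a list of problems, rather than raising on the first one, lets a developer see every issue in the file in a single run.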

Getting a Docker image ready for deployment

Let’s create an extended pipeline from the example above, adding an additional step to prepare a Docker image for deployment:

    Pipeline:
      StartAt: Build
      States:
        Build:
          Type: Task
          Resource: microtica.actions.cmd
          Parameters:
            environmentVariables:
              pipelineId: "$.pipeline.id"
              version: "$.commit.version"
            commands:
              - echo Starting build procedure...
              - npm install
              - npm test
              - echo Logging in to Amazon ECR...
              - $(aws ecr get-login --region $AWS_REGION --no-include-email)
              - echo Checking if repository exists in ECR. If not, create one
              - repoExists=`aws ecr describe-repositories --query "repositories[?repositoryName=='$pipelineId']" --output text`
              - if [ -z "$repoExists" ]; then aws ecr create-repository --repository-name $pipelineId; fi
              - awsAccountId=$(echo $CODEBUILD_BUILD_ARN | cut -d':' -f 5)
              - artifactLocation=$awsAccountId.dkr.ecr.$AWS_REGION.amazonaws.com/$pipelineId
              - echo Build Docker image...
              - docker build -t $pipelineId .
              - docker tag $pipelineId $artifactLocation:$version
              - docker tag $pipelineId $artifactLocation:latest
              - echo Push Docker image
              - docker push $artifactLocation
            sourceLocation: "$.source.location"
            artifacts: false
          End: true

In this example, we first inject environment variables into the step runtime with the environmentVariables parameter ($.pipeline.id and $.commit.version are both provided by Microtica). The CI/CD pipeline in this example starts by executing the instructions needed to build and test the code. Once this is done, Microtica creates an ECR repository if it doesn’t already exist. Once we have the ECR repository in place, the last step is to build a new Docker image and then push it to the repository.
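The way the pipeline derives the image location from the build ARN can be easier to follow outside of shell syntax. The Python sketch below mirrors those shell steps; the `ecr_image_uri` helper, the ARN, the region and the repository name are made-up example values, not anything provided by Microtica or AWS.

```python
# Hypothetical helper mirroring the shell steps in the pipeline above.
# The ARN and names below are made-up example values.

def ecr_image_uri(build_arn, region, repository, tag):
    """Build <account>.dkr.ecr.<region>.amazonaws.com/<repository>:<tag>."""
    # An ARN is colon-separated; the account ID is the fifth field,
    # which is what `cut -d':' -f 5` extracts in the shell version.
    account_id = build_arn.split(":")[4]
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repository}:{tag}"


arn = "arn:aws:codebuild:eu-central-1:123456789012:build/demo:abc123"
print(ecr_image_uri(arn, "eu-central-1", "my-pipeline", "1.0.0"))
# 123456789012.dkr.ecr.eu-central-1.amazonaws.com/my-pipeline:1.0.0
```

The pipeline tags the same image twice, once with the commit version and once with latest, so calling the helper with each tag gives both names.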

After you define the microtica.yaml file with the build pipeline, you can automate your build process in Microtica when you create the component or microservice with the wizard in the portal. This option will add a webhook to your repository. A webhook is a listener that triggers a build process whenever you push new code to your repository branch. This way, you can be sure that you’re always working with the newest changes. Building the Docker image gave us an artifact so we can deploy it in a Kubernetes cluster. You can do this from Microservice details — Add to Cluster, or in the Kubernetes Dashboard — Microservices — Deploy.
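The webhook behaviour described above comes down to one decision: does the incoming push target the branch being tracked? The sketch below assumes a GitHub-style push event, where the `ref` field holds the full reference name; the `should_trigger_build` function is hypothetical and does not describe how Microtica implements its listener.

```python
# Hypothetical sketch of the decision a webhook listener makes.
# Assumes a GitHub-style push event, where "ref" holds the full
# reference name, for example "refs/heads/master".

def should_trigger_build(payload, tracked_branch):
    """Return True when the push event targets the tracked branch."""
    return payload.get("ref") == f"refs/heads/{tracked_branch}"


push_event = {"ref": "refs/heads/master", "after": "0000000"}
print(should_trigger_build(push_event, "master"))   # True
print(should_trigger_build(push_event, "develop"))  # False
```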

When you deploy your microservice in a Kubernetes Cluster you can select the scaling options. Moreover, you can also set up continuous delivery for your microservice.

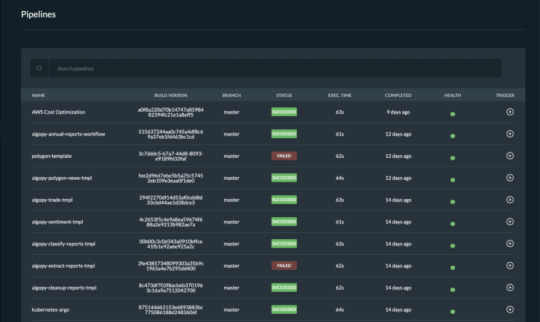

Pipelines overview

Once you trigger a build manually or automatically, you can follow the build process in the portal and see the events in real time. If the build fails, it is marked in red on the screen and the error is shown in the logs. Follow all the pipelines for your components and microservices on the Pipelines overview page and track their status. Microtica will mark a pipeline as not healthy if several failed builds are detected. From this page, you can access any build in the history of your project. More importantly, you can also inspect the logs to find the problems.

We are strong advocates for automated pipelines as they remove manual errors. Moreover, they are crucial for reliable and sustainable software delivery. They make software developers more productive, releasing them from the need to perform pipeline steps manually. Most importantly, they reduce the stress around new product releases.

Kubernetes and MEAN Stack for Microservices Development

This article focuses on an end-to-end solution using Angular4/Material for the UI, NodeJS/Express for middleware, MongoDB for storage, and Docker/Kubernetes for container-based deployment. While the solution is neither a strict 12-factor app nor a faithful follower of microservices guidelines, it lays a foundation and gives you a quick start with an end-to-end containerized sample. Having a fully functional end-to-end solution helps you see all the moving parts and quickly grasp the concepts. The article covers only critical and helpful details, to complement the wealth of documentation already available on the web. https://goo.gl/VMMZTc

How to deploy web application in openshift web console

OpenShift 4 is a recent DevOps technology that can benefit the enterprise in many ways. Build, development and deployment can be automated on the OpenShift 4 platform, which also offers autoscaling, support for a microservices architecture and more.

About: 00:00 How to deploy web application in openshift using web console | openshift 4 | red hat openshift

In this course we learn about deploying an application to an OpenShift 4 online cluster in different ways. The first method is to deploy the application using the web console. The second is to log in through oc, the OpenShift cluster command-line tool, on Windows. In this video we use an S2I OpenShift template to deploy GitHub source code directly to an OpenShift 4 cloud cluster. OpenShift 4 is a cloud-based container platform on which we build, deploy and test our application. In the next videos we will explore OpenShift 4 in detail.

Links: https://github.com/codecraftshop/nodejs-ex.git -l name=nodejs-cmd

Github: https://github.com/codecraftshop/nodejs-ex.git

Kubernetes 101 - Concepts and Why It Matters

Docker Containers Changed How We Deploy Software

In the old days, software deployment was hard, time-consuming, and error-prone. To install an application, you needed to purchase a number of physical machines and pay for more CPU and memory than you might actually need. A few years later, virtualization became dominant. This saved you some costs, as one powerful bare-metal server could host multiple virtual machines and share its CPU and memory among them. In modern days, machines can be split into even smaller parts than virtual servers: containers. Containers became popular only a few years ago. So, what exactly is a Linux container? And where does Docker fit?

[Figure: applications inside virtual machines]

A container provides a type of virtualization just like virtual machines. However, while a hypervisor provides a hardware isolation level, containers offer process isolation level. To understand this difference, let’s return to our example.

Instead of creating a virtual machine for Apache and another for MySQL, you decide to use containers. Now, your stack looks like the illustration below.

[Figure: applications inside Docker containers]

A container is nothing but a set of processes on the operating system. A container works in isolation from other processes and containers through Linux kernel features such as cgroups, chroot, UnionFS, and namespaces.

This means you’ll only pay for one physical host, install one OS, and run as many containers as your hardware can handle. Reducing the number of operating systems that you need to run on the same host means less storage, memory and CPU wasted.

In 2010, Docker was founded. Docker may refer to both the company and the product. Docker made it very easy for users and companies to utilize containers for software deployment. An important thing to note, though, is that Docker is not the only tool in the market that does this. Other applications exist like rkt, Apache Mesos, LXC among others. Docker is just the most popular one.

Containers And Microservices: The Need For An Orchestrator

The ability to run complete services in the form of processes (a.k.a containers) on the same OS was revolutionary. It brought a lot of possibilities of its own:

Because containers are way cheaper and faster than virtual machines, large applications could now be broken down into small, interdependent components, each running in its own container. This architecture became known as microservices.

With the microservices architecture becoming more dominant, applications had more freedom to get larger and richer. Previously, a monolithic application grew until it reached a limit where it became cumbersome, harder to debug, and very difficult to redeploy. However, with the advent of containers, all you need to do to add more features to an application is to build more containers/services. With IaC (Infrastructure as Code), deployment is as easy as running a command against a configuration file.

All the above encourages IT professionals to do one thing: create as many containers as possible. However, this also has its drawbacks:

Today, it is no longer acceptable to have downtime. The user simply does not care if your application is experiencing a network outage or your cluster nodes have crashed. If your system is not running, the user will simply switch to your competitor.

Containers are processes, and processes are ephemeral by nature. What happens if a container crashes?

To achieve high availability, you create more than one container for each component. For example, two containers for Apache, each hosting a web server. But which one of them will respond to client requests?

When you need to update your application, you want to make use of having multiple containers for each service. You will deploy the new code on a portion of the containers, recreate them, then do the same on the rest. But it’s very hard to do this manually. Not to mention, it’s error-prone.

For example, let’s say you have a microservices application that has multiple services running Apache, Ruby, Python, and NodeJS. You use containers to make the best use of the hardware at hand. However, with so many containers dispersed on your nodes without being managed, your infrastructure may look as shown in the illustration below.

[Figure: multiple applications inside containers]

You need a container orchestrator!

Please Welcome Kubernetes

Kubernetes is a container orchestration tool. Orchestration is another word for lifecycle management. A container orchestrator does many tasks, including:

Container provisioning.

Maintaining the state (and number) of running containers.

Distributing application load evenly on the hardware nodes by moving containers from one node to the other.

Load balancing among containers that host the same service.

Handling container persistent storage.

Ensuring that the application is always available even when rolling out updates.

Just as Docker is not the only container platform out there, Kubernetes is not the sole orchestration tool in the market. There are other tools like Docker Swarm, Apache Mesos, Marathon, and others. So, what makes Kubernetes the most used one?

Why Is Kubernetes So Popular?

Kubernetes was originally developed by the software and search giant Google, building on its experience with the internal Borg project. Since its inception, Kubernetes has gained a lot of momentum from the open source community. It is the main project of the Cloud Native Computing Foundation. Some of the biggest market players are backing it: Google, AWS, Azure, IBM, and Cisco, to name a few.

Kubernetes Architecture And Its Environment?

Kubernetes is a Greek word that stands for helmsman or captain. It is the governor of your cluster, the maestro of the orchestra. To be able to do this critical job, Kubernetes was designed in a highly modular manner. Each part of the technology provides the necessary foundation for the services that depend on it. The illustration below represents a high overview of how the application works. Each module is contained inside a larger one that relies on it to function. Let’s dig deeper into each one of these.

Let’s now have an overview of the landscape of Kubernetes as a system.

[Figure: Kubernetes ecosystem]

Kubernetes Core Features

Also referred to as the control plane, the core is the most basic part of the whole system. It offers a number of RESTful APIs that enable the cluster to do its most basic operations. The other part of the core is execution. Execution involves a number of controllers, such as the replication controller, ReplicaSet, and Deployment controllers. It also includes the kubelet, which is the module responsible for communicating with the container runtime.

The core is also responsible for contacting other layers (through kubelet) to fully manage containers. Let’s have a brief look at each of them:

Container runtime

Kubernetes uses the Container Runtime Interface (CRI) to transparently manage your containers without necessarily having to know (or deal with) the runtime used. When we discussed containers, we mentioned that Docker, despite its popularity, is not the only container management system available. Kubernetes commonly uses containerd (pronounced container d) as its container runtime. Because containerd is also the runtime underneath Docker, images built with standard Docker tooling run on Kubernetes unchanged. Other runtimes that implement CRI can be used instead; rkt, once offered as an alternative, has since been discontinued. Don’t be too confused by this part. These are the inner workings of Kubernetes that, although you need to understand them, you will almost never have to deal with directly. Kubernetes abstracts this layer through its rich set of APIs.

The Network Plugin

As we discussed earlier, a container orchestration system is responsible (among other things) for managing the network through which containers and services communicate. Kubernetes uses a library called Container Network Interface (CNI) as an interface between the cluster and various network providers. There are a number of network providers that can be used in Kubernetes. This number is constantly changing. To name a few:

Weave net

Contiv

Flannel

Calico

The list is too long to mention here. You might be asking: why does Kubernetes need more than one networking provider to choose from? Kubernetes was designed mainly to be deployed in diverse environments. A Kubernetes node can be anything from a bare-metal physical server to a virtual machine or a cloud instance. With such diversity, you have a virtually endless number of options for how your containers will communicate with each other, and no single network provider can cover all of them. That is why Kubernetes designers chose to abstract the network provider layer behind CNI.

The Volume Plugin

A volume broadly refers to the storage that is made available to the pod. A pod is one or more containers managed by Kubernetes as one unit. Because Kubernetes was designed to be deployed in multiple environments, there is a level of abstraction between the cluster and the underlying storage. Kubernetes uses the Container Storage Interface (CSI) to interact with the various storage plugins that are already available.

Image Registry

Kubernetes must contact an image registry (whether public or private) to be able to pull images and spin up containers.

Cloud Provider

Kubernetes can be deployed on almost any platform you can think of. However, the majority of users turn to cloud providers like AWS, Azure, or GCP to reduce costs. Kubernetes depends on the cloud provider APIs to perform scaling and resource provisioning tasks, such as provisioning load balancers, accessing cloud storage, and using the inter-node network.

Identity Provider

If you’re provisioning a Kubernetes cluster in a small company with a small number of users, authentication won’t be a big issue. You can create an account for each user and that’s it. But if you’re working in a large enterprise with hundreds or even thousands of developers, operators, testers, security professionals, and so on, then having to manually create an account for each person may quickly turn into a nightmare. The Kubernetes designers had that in mind when working on the authentication mechanism. You can use your own identity provider to authenticate your users to the cluster, as long as it supports OpenID Connect.

Kubernetes Controllers Layer

This is also referred to as the service fabric layer. It is responsible for some higher-level functions of the cluster: routing, self-healing, load balancing, service discovery, and basic deployment, among other things (for more information, see https://kubernetes.io/docs/concepts/services-networking/ and https://kubernetes.io/docs/concepts/workloads/controllers/deployment/).
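
The self-healing behaviour mentioned above is usually described as a reconciliation loop: a controller compares the desired state with the observed state and acts on the difference. The sketch below is a toy illustration in Python, not Kubernetes code; the function `reconcile`, the pod names, and the in-memory list are all hypothetical.

```python
import itertools


def reconcile(desired_replicas, running_pods, name_counter):
    """Return (pods_to_start, pods_to_stop) so the pod count matches the target.

    Toy model only: real controllers watch the API server and create or
    delete Pod objects; here pods are just strings in a list.
    """
    diff = desired_replicas - len(running_pods)
    if diff > 0:
        # Too few pods (for example, one crashed): start replacements.
        to_start = [f"web-{next(name_counter)}" for _ in range(diff)]
        return to_start, []
    if diff < 0:
        # Too many pods (for example, after scaling down): stop the extras.
        return [], running_pods[diff:]
    return [], []


counter = itertools.count(1)
pods = []

# First pass: nothing is running, so three pods are started.
start, stop = reconcile(3, pods, counter)
pods = [p for p in pods if p not in stop] + start
print(pods)  # ['web-1', 'web-2', 'web-3']

# Simulate a crash, then reconcile again: one replacement is started.
pods.remove("web-2")
start, stop = reconcile(3, pods, counter)
pods = [p for p in pods if p not in stop] + start
print(pods)  # ['web-1', 'web-3', 'web-4']
```

The point of the design is that the controller never needs to know why a pod disappeared; it only needs the gap between the desired and the actual count.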

Management Layer

This is where policy enforcement options are applied. In this layer, functions like metrics collection and autoscaling are performed. It also controls authorization and quotas for resources like the network and storage. You can learn more about resource quotas in the Kubernetes documentation.
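
As a rough illustration of the autoscaling mentioned above, the Kubernetes documentation describes the Horizontal Pod Autoscaler as scaling the replica count by the ratio of the current metric value to the target value. The Python sketch below applies that ratio; the function name and the minimum and maximum bounds are assumptions for illustration, and the real autoscaler adds tolerances and stabilization that are omitted here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Scale the replica count by current/target, then clamp to the bounds."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# 4 pods averaging 90% CPU against a 60% target: scale out to 6.
print(desired_replicas(4, 90, 60))  # 6
# 4 pods averaging 20% CPU against a 60% target: scale in to 2.
print(desired_replicas(4, 20, 60))  # 2
```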

The Interface Layer

In this layer, we have the client-facing tools that are used to interact with the cluster. kubectl is the most popular client-side program out there. Behind the scenes, it issues RESTful API requests to Kubernetes and displays the response either in JSON or YAML depending on the options provided. kubectl can be easily integrated with other higher level tools to facilitate cluster management.
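
To make the "RESTful API requests" more concrete, here is a sketch of the URL paths the API server exposes for namespaced resources. Core resources such as pods live under `/api/v1`, while grouped resources such as deployments live under `/apis/<group>/<version>`. The helper `resource_path` is hypothetical and only builds strings; it does not contact a cluster.

```python
def resource_path(resource, namespace="default", group="", version="v1", name=None):
    """Build the API server path for a namespaced resource.

    An empty group means the core API group, which is served under /api;
    named groups (for example "apps") are served under /apis/<group>.
    """
    prefix = f"/api/{version}" if group == "" else f"/apis/{group}/{version}"
    path = f"{prefix}/namespaces/{namespace}/{resource}"
    return f"{path}/{name}" if name else path


# Roughly the path behind `kubectl get pods` in the default namespace.
print(resource_path("pods"))
# /api/v1/namespaces/default/pods

# A single deployment named "web" (a placeholder) in the "staging" namespace.
print(resource_path("deployments", namespace="staging", group="apps", name="web"))
# /apis/apps/v1/namespaces/staging/deployments/web
```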

In the same area, we have Helm, which can be thought of as an application package manager running on top of Kubernetes. Using Helm charts, you can build a full application on Kubernetes just by defining its properties in a configuration file.

The DevOps and Infrastructure Environment

Kubernetes is one of the busiest open-source projects out there. It has a large, vibrant community and it’s constantly changing to adapt to new requirements and challenges. Kubernetes provides a tremendous number of features. Although it is only a few years old, it is able to support almost all types of environments. Kubernetes is used in many modern software building/deployment practices including:

DevOps: provisioning ephemeral environments for testing and QA is easier and faster.

CI/CD: building continuous integration/deployment, and even delivery pipelines is more seamless using Kubernetes-managed containers. You can easily integrate tools like Jenkins, TravisCI, Drone CI with the Kubernetes cluster to build/test/deploy your applications and other cloud components.

ChatOps: chat applications like Slack can easily be integrated with the rich API set provided by Kubernetes to monitor and even manage the cluster.

Cloud-managed Kubernetes: Most cloud providers offer products that already have Kubernetes installed, for example AWS EKS, Google GKE, and Azure AKS.

GitOps: Everything in Kubernetes is managed through code (YAML files). Using version control systems like Git, you can easily manage your cluster through pull requests. You don’t even have to use kubectl. [Source: https://www.magalix.com/blog/kubernetes-101-concepts-and-why-it-matters]
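
To illustrate the GitOps point that cluster state is described in files, the sketch below builds a minimal Deployment manifest as a Python dictionary and prints it as JSON, which Kubernetes accepts alongside YAML. The application name `web`, the image `example/web:1.0`, and the helper `deployment_manifest` are placeholders, not taken from the article.

```python
import json


def deployment_manifest(name, image, replicas=2):
    """Return a minimal apps/v1 Deployment as a plain dictionary."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


manifest = deployment_manifest("web", "example/web:1.0", replicas=3)
# Commit this output to Git; a reviewer sees any change as a normal diff.
print(json.dumps(manifest, indent=2))
```

A file like this could be applied with `kubectl apply -f` or picked up by a GitOps tool that watches the repository, so a change to `replicas` becomes a reviewed pull request rather than a manual command.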

Basic & Advanced Kubernetes Course using cloud computing, AWS, Docker etc. in Mumbai. Advanced Containers Domain is used for 25 hours Kubernetes Training.


.Net Architect

Position: .Net Architect/Tech Lead

Location: Tampa, FL

Duration: 12+ months

Job Description:

Demonstrates intimate abilities and/or a proven record of success as a team leader in strategic IT including the following areas:

Leveraging business and domain acumen to interpret and translate architecture plans to business plans

Supervising and establishing delivery of project initiatives

Building trusting and influential relationships and engaging with vendors and third parties as appropriate

Collaborating with other Architects and Developers to provide technical design guidance to align with strategy and applicable technical standards

Working independently to evaluate and make strategic decisions that will address specific technology design needs

Participating in the development of thought leadership to influence their sphere of technology in the use of innovation to advance business capabilities

Researching and evaluating emerging technologies and novel approaches in order to make recommendations to enhance business processes and/or create a competitive advantage

Collaborating with multidisciplinary teams in resolving complex technical issues

Providing design guidance that follows the enterprise architecture vision and adheres to applicable application technology guidelines

Mitigating the impact of technical design to security, performance and data privacy

Participating in application solutions including assisting with planning and architectural design, development, resolution of technical issues and application rationalization

Displaying initiative and an ability to lead others. Providing coaching and technical mentoring

Learning rapidly and continuously taking advantage of new technologies, concepts and business models

Demonstrating a history of staying current in emerging technology trends.

Demonstrates intimate abilities and/or a proven record of success in technical knowledge and skills in the following areas of application development:

Microsoft Stack (C#, ASP.NET, ASP.NET MVC, .NET Framework, MS SQL)

Angular and NodeJS

Web technologies (HTML5, CSS3, JSON, JQuery, AJAX, JavaScript, Bootstrap)

Cloud (Designing, building and deploying cloud-centric solutions on Azure and/or GCP)

Containers & Orchestration including Docker, Swarm and Kubernetes

CI/CD (VSTS RM, Jenkins)

Integration by building application integrations using REST and SOAP web services

Design Styles, Patterns and Methodologies such as MVC, SOA, Microservice, SPA, MVC/MVP & 12Factor

Development Lifecycles and methodologies such as Agile, Scrum, Lean, TDD, Iterative & Waterfall

SQL database technologies (e.g. MySQL, Oracle and/or PostgreSQL)

Reference: .Net Architect jobs from Latest listings added - LinkHello http://linkhello.com/jobs/technology/net-architect_i7943




[Packt] Building Microservices with Node.js [Video]

Build maintainable, testable & scalable Microservices with Node.js.

Video Description

Microservices enable us to develop software in small pieces that work together but can be developed separately, one of the reasons why enterprises have started embracing them. For the past few years, Node.js has emerged as a strong candidate for developing these microservices because of its ability to increase developers’ productivity and applications performance. This video is an end-to-end course on how to dismantle your monolith applications and embrace the microservice architecture. We delve into various solutions such as Docker Swarm and Kubernetes to scale our microservices. Testing and deploying these services while scaling is a real challenge; we’ll overcome this challenge by setting up deployment pipelines that break up the application build processes into several stages. The course will help you implement advanced microservice techniques and design patterns on an existing application built with microservices. You’ll delve into techniques that you can use today to build your own powerful microservices architecture.

The code files are placed at this link https://github.com/PacktPublishing/-Building-Microservices-with-Node.js

Style and Approach

Every step is followed by a walk-through on screen and on the console, and slides to help explain core concepts. Plus, a lot of complementary reads will be included with each video for those keen to know more in-depth.

What You Will Learn

Implement caching strategies to improve performance

Integrate logs for all microservices in one place

Execute long-running tasks in asynchronous microservices

Create sophisticated deploy pipelines

Deploy your microservices to containers

Create your own cluster of Docker hosts

Make all of this production-ready

Use a service mesh for resiliency

Authors

Shane Larson

Shane Larson is a professional software engineer and solutions architect with years of experience developing data-driven applications for the financial industry. He likes to work in an agile environment on applications using NodeJS, C#, MongoDB and cloud native services. He is currently working on voice-first design projects that involve automated alerting of events within financial markets. He also spends his free time building a small off-grid cabin in a remote area of Alaska.

http://www.grizzlypeaksoftware.com/

https://www.linkedin.com/in/shane-larson-889aa64/

source https://ttorial.com/building-microservices-nodejs-video

source https://ttorialcom.tumblr.com/post/176546052228


Top 16 DevOps blogs you should be reading

The software development process has changed a lot over the last 5 years. DevOps engineers have taken over the world: they drive change in IT culture, focusing on rapid IT service delivery, continuous deployment, and infrastructure improvements through the adoption of agile. They create collaboration between development, operations and testing. But as DevOps is still relatively new, there are always things that you can learn and improve! Today’s article is about the top 16 DevOps blogs that we definitely recommend reading.

DevOps teams see significantly higher performance numbers than their traditional deployment counterparts. In the 2017 State of DevOps report, Puppet found that high-performing DevOps teams had:

46 times more frequent code deployments

440 times faster lead time from commit to deploy

96 times faster mean time to recover from downtime

5 times lower change failure rate

Good DevOps engineers read, learn, and deploy new ideas constantly. Nowadays you can find a lot of information about DevOps, but you can never be sure whether it is any good. Today’s list includes some of the most popular, useful and interesting DevOps blogs we could find. We follow them ourselves and highlight interesting information on a regular basis. Whether you are just starting out in the industry or already have experience, these blogs are relevant to anyone who is interested in DevOps.

Top 16 DevOps blogs you should be reading

1. Atlassian DevOps blog If you’re a fan of Atlassian products – JIRA, Bitbucket, Confluence, Bamboo, Trello, etc., then you’re going to love this blog. They tackle important topics that cover the whole DevOps ecosystem, from IT management to compliance standards. There you may find topics like how to choose the right DevOps tools, how to build a DevOps culture, DevOps tips, etc.

2. DevOps Cube DevOps Cube is one of the best DevOps blogs available online. It is a blog full of great tutorials, tips, examples and trends. You will also find articles about the best DevOps tools, such as Docker, Jenkins, Google Cloud and more. These articles are aimed at beginners as well as experienced DevOps engineers.

3. DevOps.com DevOps.com is like the front page of the DevOps community. It is strictly about DevOps. You’ll find all types of commentary on the DevOps ecosystem, including news, product reviews, opinion pieces, strategies, best practices, case studies, events and insights from the Pros. They also publish a weekly podcast called DevOps Chat hosted by legendary IT Pro Alan Shimel.

If you want to go deep into the weeds, DevOps.com has the resources for you to do it. They host several webinars a month that cover specific DevOps tactics and strategies like “Automating Deployment for Enterprise Level Businesses” and “Setting up a Private Cloud”. They also have a huge repository of ebooks and PDFs for you to download.

4. Docker Blog Docker is a popular container tool that regularly updates their blog with valuable goodies for DevOps Pros. Almost all the information on the blog is product-specific, so it’s especially valuable for anyone who uses Docker.

5. DZone DevOps DZone is a tech site and education hub with loads of IT & Agile information. They publish several articles a day across multiple “Zones” like DevOps, Cloud, Java, Mobile, IoT, etc. Their DevOps section has a lot of very useful resources, and you may find famous DevOps engineers among the authors. In this section, you can find everything from theory to practical implementations of tools and practices from different industry verticals. It basically has everything you need if you are in the DevOps world.

6. Apiumhub blog A fast-growing tech blog with several sections. There you may find information about automation techniques, DevOps tools, Jenkins, Docker, continuous integration and much more. They have DevOps experts on their team who regularly post articles based on their experience.

7. IT Revolution A very good blog where you can basically find any kind of information related to DevOps: events, projects, tips, news, community, etc.

8. Infoworld Network World is an online magazine that covers data, networks, cloud computing and much more. They have a lot of interesting articles related to DevOps. This blog will keep you up to date with the latest industry news and take you deep into the weeds with technical how-tos and recommendations.

9. Pivotal DevOps A great destination if you’re just starting to explore the world of DevOps. They have easy-to-read guides for several high-level topics such as DevOps, agile, and containers.

10. The Register DevOps One of the main topics in The Register blog is DevOps, so there is plenty of useful material to check out every day.

11. Stackify DevOps The blog is perfect for developers who want to learn more about .NET, Application Performance Monitoring, DevOps, and running a more agile business. It also covers career advice for DevOps engineers, software developers, CIOs and CTOs.

12. The agile admin The agile admin blog is a very good choice when it comes to the DevOps world. The whole blog is about DevOps, and it has practically everything needed to become a DevOps professional.

13. DevOps Guys The DevOps Guys blog is all about DevOps culture and everything surrounding it. The blog is run by Steve Thair (the Ops guy) and James Smith (the Dev guy), and together they create very interesting posts. This blog is fun, and it understands the ever-changing culture and industry of DevOps. There’s a lot of emphasis out there on the mechanics of DevOps, whether it be tools or best practices.

14. The XebiaLabs DevOps The XebiaLabs Blog is built around industry thought leaders and their discussions about what is happening in the Continuous Delivery and DevOps world. With a ton of content posted every week, this is a blog where you can find in-depth technical discussions and ongoing blog series about DevOps. These authors discuss tough questions and bring up interesting points that will keep you thinking for days.

15. TechTarget DevOps The DevOps section of TechTarget has a curated list of blog posts related to DevOps. There you may find articles written by DevOps experts around the globe.

16. BMC DevOps Blog The blog site offers a wide range of high-quality articles varying in tone from informational to cutting-edge, with a special emphasis on the enterprise side of the business. Some key topics covered here are continuous delivery & deployment, everything as code, internet of things, containerization, and best practices.

I hope you found these blogs useful! If you know others that deserve to be on this list, feel free to share them in the comments section below!


The post Top 16 DevOps blogs you should be reading appeared first on Apiumhub.

Top 16 DevOps blogs you should be reading published first on https://koresolpage.tumblr.com/


How to deploy a web application in OpenShift from the command line

OpenShift 4 is a recent DevOps platform that can benefit the enterprise in many ways. Build, development and deployment can be automated using the OpenShift 4 platform, which also offers autoscaling, support for microservices architecture and many more features. Please like, watch and subscribe to my channel for the latest videos. https://www.youtube.com/channel/UCnIp4tLcBJ0XbtKbE2ITrwA?sub_confirmation=1&app=desktop

About: 00:00 How to deploy web application in openshift using openshift cli command line | red hat openshift

In this course we will learn about deploying an application to an OpenShift 4 online cluster in different ways. The first method is to deploy the application through the web console. The second is to log in through oc, the OpenShift cluster command-line tool, on Windows. In this video we will use an S2I OpenShift template to deploy GitHub source code directly to an OpenShift 4 cloud cluster. OpenShift 4 is a cloud-based container platform on which we can build, deploy and test our application. In the next videos we will explore OpenShift 4 in detail.

Links: https://github.com/codecraftshop/nodejs-ex.git -l name=nodejs-cmd

Github: https://github.com/codecraftshop/nodejs-ex.git

https://www.facebook.com/codecraftshop/

https://t.me/codecraftshop/

Please do like and subscribe to my YouTube channel "CODECRAFTSHOP". Follow us on Facebook | Instagram | Twitter at @CODECRAFTSHOP.


The Node.js Community was amazing in 2017! Here's the proof:

Let me just start by stating this:

2017 was a great year for the Node.js Community!

Our favorite platform has finally matured enough to be widely adopted in the enterprise. Also, a lot of great features have been merged into the core, like async/await & http2, just to mention a few.

The world runs on node ✨🐢🚀✨ #NodeInteractive http://pic.twitter.com/5PwDC2w1mE

— Franziska Hinkelmann (@fhinkel) October 4, 2017

It is not only the developers who contribute to Node that make this platform so great; those who create learning materials around it were also exceptional.

In our final article of 2017, we collected a bunch of longreads which were created by the Node community. We used data from Reddit, Hacker News, Twitter & Medium to search for the most read, most shared & liked stuff out there. We intentionally omitted articles written by RisingStack (except 1, couldn't resist) - if you're interested in those, please visit our top15 of 2017 post!

I'm sure we left a bunch of amazing articles out - so if you'd like to get attention to an exceptional one you read, please let us know in the comments section.

Also, keep in mind that this post is not a classic "best of" list, just a collection that shows how amazing the Node community was in 2017! I figured that a big shoutout to the developers who constantly advocate & teach Node would be a great way to say goodbye to this year.

So, here comes the proof I promised in the title:

1. We have insanely useful community curated lists:

If you'd like to gain useful knowledge quickly, you can count on the Node community. These lists were created in 2017, and contain so many best practices & shortcuts that you can definitely level up your skills by going through them.

The largest Node.JS best practices list is curated from the top ranked articles and always updated.

Awesome Node.js is an extensive list of delightful Node packages & resources.

The Modern JS Cheatsheet contains the knowledge needed to build modern projects.

These lists have over 300 contributors & gained around 50K stars on GitHub!

Also, we recommend checking out these articles written by Yoni Goldberg & Azat Mardan, which will help you become a better developer:

Node.JS production best practices

10 Node.js Best Practices: Enlightenment from the Node Gurus

2. There are actually insightful Case Studies available!

One of the best articles on using Node.js was written by Scott Nonnenberg in April. The author summarized his Node experiences from the past 5 years, discussing basic concepts, architectural issues, testing, the Node ecosystem & the reason why you shouldn't use New Relic.

"I’ve already shared a few stories, but this time I wanted to focus on the ones I learned the hard way. Bugs, challenges, surprises, and the lessons you can apply to your own projects!" - Scott

..

Also, did you know that Node.js Helps NASA to Keep Astronauts Safe? It's hard to come up with something cooler than that.

NASA chose Node.js for the following reasons:

The relative ease of developing data transfer applications with JavaScript, and the familiarity across the organization with the programming language, which keeps development time and costs low.

Node.js’ asynchronous event loop for I/O operations makes it the perfect solution for a cloud-based database system that sees queries from dozens of users who need data immediately.

The Node.js package manager, npm, pairs incredibly well with Docker to create a microservices architecture that allows each API, function and application to operate smoothly and independently.
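To make the second reason a bit more concrete, here is a minimal sketch of how the event loop lets many I/O waits overlap on a single thread. Everything in it is an assumption for illustration: `fakeQuery` is a hypothetical stand-in for a database call (it just resolves after a timer), and `serveAll` is a made-up helper. It is not taken from the NASA case study.

```typescript
// Hypothetical stand-in for a database call: resolves after delayMs
// without blocking the thread while it waits.
function fakeQuery(user: string, delayMs: number): Promise<string> {
  return new Promise((resolve) => {
    setTimeout(() => resolve(`rows for ${user}`), delayMs);
  });
}

// Start every query first, then wait for all of them.
// The waits overlap, so total time is roughly one delay, not one per user.
async function serveAll(users: string[], delayMs: number): Promise<string[]> {
  const pending = users.map((user) => fakeQuery(user, delayMs));
  return Promise.all(pending);
}

async function main(): Promise<void> {
  const started = Date.now();
  const results = await serveAll(["ana", "ben", "cy"], 50);
  console.log(results, `took ~${Date.now() - started} ms`);
}

main().catch(console.error);
```

With three simulated users and a 50 ms delay each, the whole batch should finish in roughly 50 ms rather than 150 ms, because the timers are all waiting at the same time.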

To learn more, read the full case study!

Also, shoutout to the Node Foundation who started to assemble and distribute these pretty interesting use-case whitepapers on a regular basis!

3. Node.js Authentication & Security was well covered in 2017:

When it comes to building Node.js apps (or any app..) security is crucial to get right. This is the reason why "Your Node.js authentication tutorial is (probably) wrong" written by micaksica got so much attention on HackerNoon.

tl;dr: The author went on a search of Node.js/Express.js authentication tutorials. All of them were incomplete or made a security mistake in some way that can potentially hurt new users. His post explores some common authentication pitfalls, how to avoid them, and what to do to help yourself when your tutorials don’t help you anymore.

If you plan on reading only one security related article (..but why would you do that?!), this is definitely one of the best ones!

..

Also, we recommend checking out the Damn Vulnerable NodeJS Application GitHub page, which aims to demonstrate the OWASP Top 10 Vulnerabilities and guides you on fixing and avoiding these vulnerabilities.

..

Other great articles that received a lot of praise were Securing Node.js RESTful APIs with JSON Web Tokens by Adnan Rahic, and Two-Factor Authentication with Node.js from David Walsh.

4. API development with Node.js has been made even easier:

One of the main strengths of Node.js is that you can build REST APIs with it in a very efficient way! There are a lot of articles covering that topic, but these were definitely the most popular ones:

RESTful API design with Node.js walks beginners through the whole process in a very thorough, easy to understand way.

Build a Node.js API in Under 30 Minutes achieves the same result, but it uses ES6 as well.

10 Best Practices for Writing Node.js REST APIs (written by us) goes a little further and includes topics like naming your routes, authentication, black-box testing & using proper cache headers for these resources.

5. We're constantly looking under the hood of Node.js.

Luckily, the Node/JS community delivers when you want to go deeper. In fact, there were so many interesting deep-dives that it was really hard to pick out the best ones, but one can try! These articles are really insightful:

Understanding Node.js Event-Driven Architecture

What you should know to really understand the Node.js Event Loop

Node.js Streams: Everything you need to know

How JavaScript works: inside the V8 engine + 5 tips on how to write optimized code

ES8 was Released and here are its Main New Features

6 Reasons Why JavaScript’s Async/Await Blows Promises Away (Tutorial)

What were the best articles that peeked under the hood of JS/Node in your opinion? Share them in the comments!

6. Awesome new Tools were made in 2017:

Two of the most hyped tools of the year were Prettier & Fastify!

In case you don't know, Prettier is a JavaScript formatter that works by compiling your code to an AST, and then pretty-printing the AST.

The result is good-looking code that is completely consistent no matter who wrote it. This solves the problem of programmers spending a lot of time manually moving around code in their editor and arguing about styles.
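If the "parse to an AST, then pretty-print it" idea sounds abstract, the toy sketch below shows the principle on tiny arithmetic expressions. To be clear, this is not Prettier's code or API: `Expr`, `tokenize`, `parse`, `print` and `format` are all names made up for this illustration, and the grammar only covers whole numbers, `+`, `*` and parentheses.

```typescript
// Toy illustration only: a tiny arithmetic "formatter", not Prettier's real implementation.
type Expr =
  | { kind: "num"; value: string }
  | { kind: "bin"; op: "+" | "*"; left: Expr; right: Expr };

// Split the source into number, operator and parenthesis tokens; whitespace is dropped.
function tokenize(src: string): string[] {
  return src.match(/\d+|[+*()]/g) ?? [];
}

// Recursive-descent parser: sum := product ('+' product)*, product := atom ('*' atom)*
function parse(src: string): Expr {
  const tokens = tokenize(src);
  let pos = 0;

  function atom(): Expr {
    const tok = tokens[pos++];
    if (tok === undefined) throw new Error("unexpected end of input");
    if (tok === "(") {
      const inner = sum();
      pos++; // skip the closing ")"
      return inner;
    }
    return { kind: "num", value: tok };
  }

  function product(): Expr {
    let left = atom();
    while (tokens[pos] === "*") {
      pos++;
      left = { kind: "bin", op: "*", left, right: atom() };
    }
    return left;
  }

  function sum(): Expr {
    let left = product();
    while (tokens[pos] === "+") {
      pos++;
      left = { kind: "bin", op: "+", left, right: product() };
    }
    return left;
  }

  return sum();
}

// Pretty-printer: the output depends only on the tree, never on the original spacing.
function print(node: Expr, parentOp?: "+" | "*"): string {
  if (node.kind === "num") return node.value;
  const text = `${print(node.left, node.op)} ${node.op} ${print(node.right, node.op)}`;
  // Parentheses are only needed when a sum sits inside a product.
  return node.op === "+" && parentOp === "*" ? `(${text})` : text;
}

function format(src: string): string {
  return print(parse(src));
}

console.log(format("( 1+2 )*3")); // prints "(1 + 2) * 3"
console.log(format("1   +(2*3)")); // prints "1 + 2 * 3"
```

Because the original whitespace and redundant parentheses are thrown away during parsing, two people who type the same expression in different styles get identical output, which is the whole point of the approach.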

Fastify was introduced by Matteo Collina, Node.js core technical committee member, during Node Interactive 2017 in Vancouver.

Fastify is a new web framework inspired by Hapi, Restify and Express. Fastify is built as a general-purpose web framework, but it shines when building extremely fast HTTP APIs that use JSON as the data format.

..just to mention a few.

7. There are Amazing Crash Courses Available for Free

If you'd like to start learning Node, you can count on the community to deliver free, high-quality resources that can speed up your journey!

One particular author - Adnan Rahić - received a huge amount of praise for releasing crash courses for different topics on Medium. Here they are:

A crash course on testing with Node.js

A crash course on Serverless with Node.js

We hope Adnan will continue this series and create crash courses on other areas of Node as well!

8. Miscellaneous Topics are Covered as Well

What do we mean by miscellaneous topics? Well, those are topics that go beyond the "traditional" use cases of Node.js, where the authors describe something interesting & fun they built with it.

For example, there were exceptional articles released that used the OpenCV library for either face recognition or just regular object recognition.

Chatbots were a hype topic too, and there was no shortage of blogposts describing how to build them using different languages.

Building A Simple AI Chatbot With Web Speech API And Node.js was one of the most praised articles. In this post Tomomi Imura walked us through building a chat-bot which can be controlled by voice. Yep, it's pretty cool.

We also recommend checking out Developing A Chatbot Using Microsoft's Bot Framework, LUIS And Node.js, which is the first part of a series dealing with the topic.

What other fun projects did you see? Add them to the comments section!

9. The community can attend great conferences, like Node Interactive!

Node Interactive Vancouver was a major event which provided great opportunities for its attendees to meet with fellow developers & learn a lot about their favorite subject.

Fortunately, every presentation is available on YouTube, so you can get up to date even if you couldn't participate this year (just like us).

The 10 most watched right now are:

Node.js Performance and Highly Scalable Micro-Services by Chris Bailey

New DevTools Features for JavaScript by Yang Guo

The V8 Engine and Node.js by Franzi Hinkelmann

High Performance JS in V8 by Peter Marshall

The Node.js Event Loop: Not So Single Threaded by Bryan Hughes

Welcome and Node.js Update by Mark Hinkle

Take Your HTTP Server to Ludicrous Speed by Matteo Collina

WebAssembly and the Future of the Web by Athan Reines

High Performance Apps with JavaScript and Rust by Amir Yasin

TypeScript - A Love Tale with JavaScript by Bowden Kelly

Of course, this list is just the tip of the iceberg, since there are 54 videos uploaded on the Node Foundation's YouTube channel, and most of them are really insightful and fun to watch.

10. Node.js is finally more sought after than Java!

Although Ryan Dahl recently stated in an interview that..

for a certain class of application, which is like, if you're building a server, I can't imagine using anything other than Go.

.. we have no reason to doubt the success of Node.js!

Mikeal Rogers, one of the core organizers of NodeConf and community manager & core contributor at the Node Foundation, stated in an interview with The New Stack this summer that Node.js will overtake Java within a year!

We are now at about 8 million estimated users and still growing at about 100 percent a year. We haven’t passed Java in terms of users yet, but by this time next year at the current growth, we will surpass. - Mikeal.

Mikeal is not alone in his opinion. There is hard data available to prove that Node is becoming more sought after than Java.

According to the data gathered by builtinnode, the demand for Node.js developers had already surpassed the demand for Java devs by the summer of 2017 in the Who is Hiring section of Hacker News!

Since Node has already been adopted and is being advocated by the greatest tech companies on Earth, there's no doubt that it will remain a leading technology for many years ahead!

We hope that the Node community will continue to thrive in 2018 as well, and produce a plethora of excellent tools & tutorials.

At RisingStack, we'll keep on advocating for Node & educating developers in 2018 as well, for sure! If you're interested in the best content we produced in 2017, take a look!

The Node.js Community was amazing in 2017! Here's the proof: published first on http://ift.tt/2fA8nUr
