#nvidia's gpu

Explore tagged Tumblr posts

Text

A Deep Dive into NVIDIA’s GPU: Transitioning from A100 to L40S and Preparing for GH200

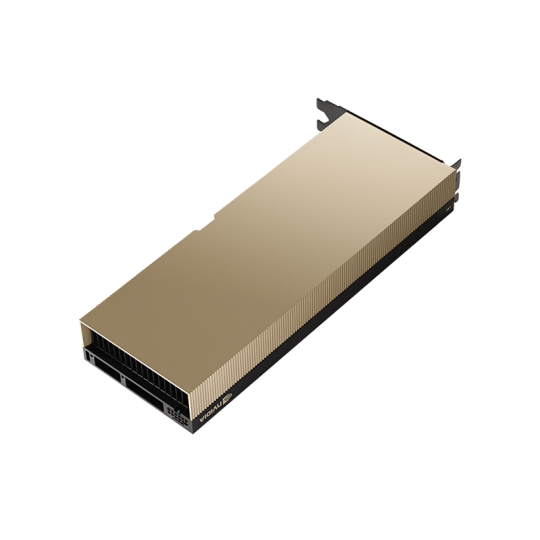

Introducing NVIDIA L40S: A New Era in GPU Technology

When planning upgrades for your data center, it’s essential to understand the range of available GPU technologies, particularly as they evolve to meet the demands of heavy-duty workloads. This article compares two notable NVIDIA GPUs: the NVIDIA L40S and the A100. Each is designed for specific requirements in AI, graphics, and high-performance computing. We will look at their features, ideal applications, and technical details to help determine which GPU best fits your organizational goals. Note that the NVIDIA A100 is being discontinued in January 2024, with the L40S emerging as a capable alternative. This change comes as NVIDIA prepares to launch the Grace Hopper 200 (GH200) later this year.

Diverse Applications of the NVIDIA L40S GPU

Generative AI Tasks

The L40S GPU excels at generative AI, offering the computational strength needed to create new services, derive fresh insights, and craft unique content.

LLM Training and Inference

In the ever-growing field of natural language processing, the L40S stands out by providing ample capability for both training and deploying large language models.

3D Graphics and Rendering

The GPU handles detailed creative work well, including 3D design and rendering. This makes it an excellent option for animation studios, architectural visualization, and product design.

Enhanced Video Processing

Equipped with advanced media acceleration, the L40S is particularly effective for video processing, addressing the complex requirements of content creation and streaming platforms.

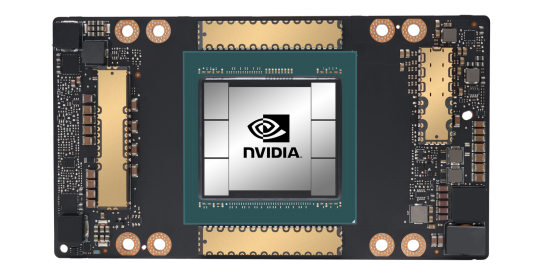

Overview of NVIDIA A100

The NVIDIA A100 GPU stands as a targeted solution in the realms of AI, data analytics, and high-performance computing (HPC) within data centers. It is renowned for its ability to deliver effective and scalable performance, particularly in specialized tasks. The A100 is not designed as a universal solution but is instead optimized for areas requiring intensive deep learning, sophisticated data analysis, and robust computational strength. Its architecture and features are ideally suited for handling large-scale AI models and HPC tasks, providing a considerable enhancement in performance for these particular applications.

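Performance Face-Off: L40S vs. A100

In performance terms, the L40S is rated at up to 1,466 TFLOPS of Tensor Core performance (NVIDIA’s peak FP8 figure with sparsity), making it a strong choice for AI and graphics-intensive workloads. The A100, by contrast, is rated at 19.5 TFLOPS of FP64 Tensor Core performance and 156 TFLOPS of TF32 Tensor Core performance, positioning it as a powerful tool for AI training and HPC tasks. These figures are quoted at different numeric precisions, so they are not a like-for-like comparison.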

Expertise in Integration by AMAX

AMAX specializes in incorporating these advanced NVIDIA GPUs into bespoke IT solutions. Our approach ensures that whether the focus is on AI, HPC, or graphics-heavy workloads, the performance is optimized. Our expertise also includes advanced cooling technologies, enhancing the longevity and efficiency of these GPUs.

Matching the Right GPU to Your Organizational Needs

Selecting between the NVIDIA L40S and A100 depends on specific workload requirements. The L40S is an excellent choice for entities venturing into generative AI and advanced graphics, while the A100, although being phased out in January 2024, remains a strong option for AI and HPC applications. As NVIDIA transitions to the L40S and prepares for the release of the GH200, understanding the nuances of each GPU will be crucial for leveraging their capabilities effectively.

In conclusion, NVIDIA’s transition from the A100 to the L40S represents a significant shift in GPU technology, catering to the evolving needs of modern data centers. With the upcoming GH200, the landscape of GPU technology is set to witness further advancements. Understanding these changes and aligning them with your specific requirements will be key to harnessing the full potential of NVIDIA’s GPU offerings.

M.Hussnain

#nvidia#nvidia rtx 4080#nvidia gpu#nvidia's gpu#L40S#L40#Nvidia L40#Nvidia A100#gh200#viperatech#vipera

0 notes

Text

"Lawmakers introduce the Chip Act which will allow the US government to track the location of your GPU so they know for sure that it isn't in the hands of China" is such a dumb fucking sentence that I can't believe it exists and isn't from a parody of something

#didnt nvidia manufacture separate gpus in china anyway...#as a way to get around the tariffs... so this does fuck all#r

21 notes


Text

Tony Stark single-handedly keeping NVIDIA's business booming with the number of graphics cards (GPUs) he’s buying

#tony stark#This post brought to you by my looking at my gpu taking 40 hours to train one singly shitty variational autoencoder#How many gpu’s is tony using to train his AI……….. too many#I think that in universe he has probably bought NVIDIA or started making his own#Chat what do you think

23 notes


Text

Stop showing me ads for Nvidia, Tumblr! I am a Linux user, therefore AMD owns my soul!

#linux#nvidia gpu#amd#gpu#they aren't as powerful as nvidia but they're cheaper and the value for money is better also.

38 notes


Text

fundamentally unserious graphics card

11 notes


Text

what i love the most about digital humanities is that sometimes you just have to let your computer process things and take a nap in the meantime

#genuinely curious how much faster will corpus annotation be now that i have a new computer#update: it's FAST#nvidia gpu is doing its job holy shit

12 notes


Text

born to train

#art#design#white#fashion#black#painting#photography#portrait#illustration#light#gpu#nvidia#geforce#rtx

24 notes


Text

i already know what the first tier of arcadion raids is about btw

24 notes


Text

turns out if you have access to a good gpu then running the fucking enormous neural net necessary to segment cells correctly happens in 10 minutes instead of 2-5 hours

#now could i have at least bothered to set up scripting in order to use the cluster previously. yes. but i didn't.#and now i'm going to because it's actually worth my time to automate things if it lets me queue up 10 giant videos to run in under 2hr.#you guys would not believe how long the problem “get a computer to identify where the edges of the cells in this picture are” has bedeviled#the fields of cell and tissue biology. weeping and gnashing of teeth i tell you#and now it is solved. as long as you have last year's topline nvidia gpu available#or else 4 hours to kill while your macbook's fan achieves orbital flight.#box opener

9 notes


Text

Wtf are we doing heree aughhhhh 😀😀😀

#yeah im gonna take my pc and monitor and check wtf is going on :D#black and white photos look greeeeeeeen maaaaan#and it used to look normal i know how it supposed to looook auuughhhhhh#i hope it my monitor#if its my gpu then ooooooooooooffffffffffffffffffff#nvidia updates fucked everything uppppppp#or maybe windows11 :D#maybe dragon age the veilguard was cursed for real#OOOOOH I HOPE ITS MY MONITOR#OOOooooOOH I WANNA FIGHT SO BAD 💀

7 notes


Text

Titan graphics cards

6 notes


Text

fortnite so laggy bruh (i literally have a gtx 1650 and an intel i5 10th gen and horrendous wifi)

2 notes


Text

7 notes


Note

Thoughts on the recent 8GB of VRAM Graphics Card controversy with both AMD and NVidia launching 8GB GPUs?

I think tech media's culture of "always test everything on max settings because the heaviest loads will be more GPU bound and therefore a better benchmark" has led to a culture of viewing "max settings" as the default experience and anything that has to run below max settings as actively bad. This was a massive issue for the 6500XT a few years ago as well.

8GiB should be plenty, but it will look bad in benchmarks run at excessive settings.

Now, with that said, it depends on segment. An excessively expensive/high-end GPU being limited by insufficient memory is obviously bad. In the case of the RTX 5060Ti I'd define that as encountering situations where a certain game/res/settings combination is fully playable, at least on the 16GiB model, but the 8GiB model ends up much slower or even unplayable. On the other hand, if the game/res/settings combination is "unplayable" (excessively low framerate) on the 16GiB model anyway I'd just class that as running inappropriate settings.

Looking through the TechPowerUp review: Avowed, Black Myth: Wukong, Dragon Age: The Veilguard, God of War Ragnarök, Monster Hunter Wilds and S.T.A.L.K.E.R. 2: Heart of Chernobyl all see significant gaps between the 8GiB and 16GiB cards at high res/settings where the 16GiB card was already "unplayable". These are, in my opinion, inappropriate game/res/settings combinations to test at. They showcase an extreme situation that's not relevant to how even a higher capacity card would be used. Doom Eternal sees a significant gap at 1440p and 4K max settings without becoming "unplayable".

F1 24 goes from 78.3 to 52.0 FPS at 4K max so that's a giant gap that could be said to also impact playability. Spider-Man 2 (wow they finally made a second spider-man game about time) does something similar at 1440p. The Last of Us Pt.1 has a significant performance gap at 1080p, and the 16GiB card might scrape playability at 1440p, but the huge gap at 4K feels like another irrelevant benchmark of VRAM capacity.

All the other games were pretty close between the 8GiB and 16GiB cards.
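The sorting rule used in this answer can be written down as a small function. This is only a rough sketch: the 60 FPS playability cutoff and the 15% "significant gap" threshold are assumptions picked for illustration (the answer never states exact numbers), and `classify_vram_result` is a hypothetical helper, not something taken from the review. The only real data point is the F1 24 result at 4K max quoted above.

```python
def classify_vram_result(fps_16gib, fps_8gib, playable_fps=60.0, gap_threshold=0.15):
    """Label one game/res/settings combination using the rule from the answer.

    playable_fps and gap_threshold are assumed values for illustration only.
    """
    if fps_16gib <= 0:
        raise ValueError("fps_16gib must be positive")

    # If even the 16GiB card is too slow, the settings are the problem, not VRAM.
    if fps_16gib < playable_fps:
        return "inappropriate settings"

    # Fraction of performance lost when moving from the 16GiB to the 8GiB card.
    relative_drop = (fps_16gib - fps_8gib) / fps_16gib

    # Playable on 16GiB, but much slower or unplayable on 8GiB.
    if fps_8gib < playable_fps or relative_drop >= gap_threshold:
        return "VRAM-limited"

    return "comparable"


# F1 24 at 4K max, using the figures quoted above: roughly a one-third drop.
f1_drop = (78.3 - 52.0) / 78.3
print(f"F1 24 relative drop: {f1_drop:.1%}")
print(classify_vram_result(78.3, 52.0))
```

With a different cutoff (say 30 FPS), the same numbers would still count as a significant gap but not as unplayable, which is why the choice of threshold matters more than the formula.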

Overall, I think this creates a situation where you have a large artificial performance difference coming from tests at settings that would be unplayable anyway. The 8GiB card isn't bad - the benchmarks just aren't fair to it.

Now, $400 for a GPU is still fucking expensive, and Nvidia not sampling it is an attempt to trick people who might not realise it can be limiting sometimes, but that's a whole other issue.

4 notes


Text

Choosing the Right GPU Server: RTX A5000, A6000, or H100?

Confused about the right GPU server for your needs? Compare RTX A5000 for ML, A6000 for simulations, and H100 for enterprise AI workloads to make the best choice.

📞 US Toll-Free No.: 888-544-3118 ✉️ Email: [email protected] 🌐 Website: https://www.gpu4host.com/ 📱 Call (India): +91-7737300013 🚀 Get in touch with us today for powerful GPU Server solutions!

#Gpu#gpuserver#gpuhosting#hosting#gpudedicatedserver#server#streamingserver#broadcastingserver#artificial intelligence#ai#nvidia#graphics card#aiserver

2 notes


Text

So today I learned about "distilling" and AI

In this context, distillation is a process where an AI model uses responses generated by other, more powerful, AI models to aid its development. Sacks added that over the next few months he expects leading U.S. AI companies will be "taking steps to try and prevent distillation” to slow down “these copycat models.”

( source )
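To make the quoted definition a bit more concrete, here is a toy sketch of the idea: a small "student" model is trained only on the responses of a "teacher" it can query, never on the teacher's internals. Everything here is an assumption for illustration. The teacher is a stand-in linear function, the student is a two-parameter model, and real LLM distillation works on text and is far more involved.

```python
import random


def teacher(x: float) -> float:
    """Stand-in for the more powerful model: a black box we can only query."""
    return 3.0 * x + 2.0


def distill(num_steps: int = 2000, lr: float = 0.05, seed: int = 0):
    """Fit a tiny student (w * x + b) using only the teacher's responses."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0  # student starts out knowing nothing
    for _ in range(num_steps):
        x = rng.uniform(-1.0, 1.0)   # a "prompt"
        target = teacher(x)          # the teacher's response becomes the label
        error = (w * x + b) - target
        # One gradient step on the squared error between student and teacher.
        w -= lr * error * x
        b -= lr * error
    return w, b


w, b = distill()
print(f"student learned w={w:.3f}, b={b:.3f}")
```

The student ends up close to the teacher's behaviour without ever seeing how the teacher was built, which is the part the quoted companies object to.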

There's something deeply friggin' hilariously ironic about AI companies now getting all hot and bothered over other AI companies ripping off their work because a Chinese upstart smashed into the AI space.

(That's not to invalidate the possibility that DeepSeek did indeed copy OpenAI's homework, so to speak, but it's still just laughably ironic. Sauce for the goose - sauce for the gander!)

#ai#distilling#openai#open ai#deepseek#that being said#one hopefully unintended beneficial side effect is that graphics cards should become less expensive#why buy an nvidia gpu when you can get the knockoff version that does just about as well

5 notes
