#openshift cli


Master Advanced OpenShift Operations with Red Hat DO380

In today’s dynamic DevOps landscape, container orchestration platforms like OpenShift have become the backbone of modern enterprise applications. For professionals looking to deepen their expertise in managing OpenShift clusters at scale, Red Hat OpenShift Administration III: Scaling Deployments in the Enterprise (DO380) is a game-changing course.

🎯 What is DO380?

The DO380 course is designed for experienced OpenShift administrators and site reliability engineers (SREs) who want to extend their knowledge beyond basic operations. It focuses on day-2 administration tasks in Red Hat OpenShift Container Platform 4.12 and above, including automation, performance tuning, security, and cluster scaling.

📌 Key Highlights of DO380

🔹 Advanced Cluster Management: Learn how to manage large-scale OpenShift environments using tools like the OpenShift CLI (oc), the web console, and GitOps workflows.

🔹 Performance Tuning: Analyze cluster performance metrics and implement tuning configurations to optimize workloads and resource utilization.

🔹 Monitoring & Logging: Use the OpenShift monitoring stack and log aggregation tools to troubleshoot issues and maintain visibility into cluster health.

🔹 Security & Compliance: Implement advanced security practices, including custom SCCs (Security Context Constraints), Network Policies, and OAuth integrations.

🔹 Cluster Scaling: Master techniques to scale infrastructure and applications dynamically using horizontal and vertical pod autoscaling, and custom metrics.

🔹 Backup & Disaster Recovery: Explore methods to back up and restore OpenShift components using tools like Velero.

🧠 Who Should Take This Course?

This course is ideal for:

Red Hat Certified System Administrators (RHCSA) and Engineers (RHCE)

Kubernetes administrators

Platform engineers and SREs

DevOps professionals managing OpenShift clusters in production environments

📚 Prerequisites

To get the most out of DO380, learners should have completed:

Red Hat OpenShift Administration I (DO180)

Red Hat OpenShift Administration II (DO280)

Or possess equivalent knowledge and hands-on experience with OpenShift clusters

🏅 Certification Pathway

After completing DO380, you’ll be well-prepared to pursue the Red Hat Certified Specialist in OpenShift Automation and Integration exam (EX380) and progress toward the prestigious Red Hat Certified Architect (RHCA) credential.

📈 Why Choose HawkStack for DO380?

At HawkStack Technologies, we offer:

✅ Certified Red Hat instructors

✅ Hands-on labs and real-world scenarios

✅ Corporate and individual learning paths

✅ Post-training mentoring & support

✅ Flexible batch timings (weekend/weekday)

🚀 Ready to Level Up?

If you're looking to scale your OpenShift expertise and manage enterprise-grade clusters with confidence, DO380 is your next step.

For more details, visit www.hawkstack.com


Integrating Virtual Machines with Containers Using OpenShift Virtualization

As organizations increasingly adopt containerization to modernize their applications, they often encounter challenges integrating traditional virtual machine (VM)-based workloads with containerized environments. OpenShift Virtualization bridges this gap, enabling organizations to run VMs alongside containers seamlessly within the same platform. This blog explores how OpenShift Virtualization helps achieve this integration and why it’s a game-changer for hybrid environments.

What is OpenShift Virtualization?

OpenShift Virtualization is a feature of Red Hat OpenShift that allows you to manage VMs as first-class citizens alongside containers. It leverages KubeVirt, an open-source virtualization extension for Kubernetes, enabling VM workloads to run natively on the OpenShift platform.

Benefits of Integrating VMs and Containers

Unified Management: Manage VMs and containers through a single OpenShift interface.

Resource Efficiency: Consolidate workloads on the same infrastructure to reduce operational costs.

Simplified DevOps: Use Kubernetes-native tools like kubectl and OpenShift’s dashboards to manage both VMs and containers.

Hybrid Workload Modernization: Gradually transition legacy VM-based applications to containerized environments without disrupting operations.

Key Use Cases

Modernizing Legacy Applications: Migrate monolithic applications running on VMs to OpenShift, enabling container adoption without rewriting the entire codebase.

Hybrid Workloads: Run VM-based databases alongside containerized microservices for better performance and management.

Development and Testing: Spin up VMs for testing or sandbox environments while running production-ready workloads in containers.

How to Integrate VMs with Containers in OpenShift

Install OpenShift Virtualization:

Use the OpenShift OperatorHub to install the OpenShift Virtualization Operator.

Verify the installation by checking the openshift-cnv namespace (the default namespace for OpenShift Virtualization; upstream KubeVirt uses kubevirt) and related components.

Create Virtual Machines:

Use the OpenShift web console or CLI to create VMs.

Define VM specifications like CPU, memory, and storage in YAML files.

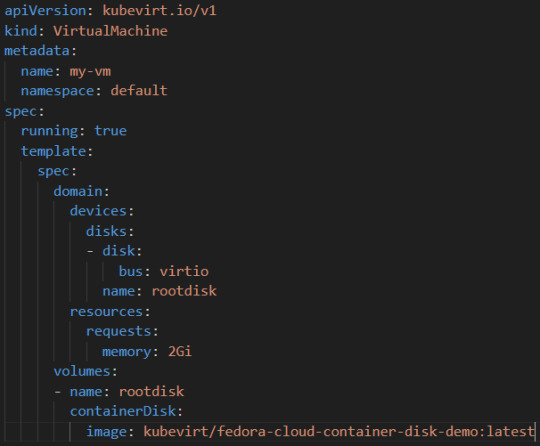

Example YAML for a VM:

Connect Containers and VMs:

Use OpenShift’s networking capabilities to establish communication between VMs and containerized workloads.

For example, deploy a containerized application that interacts with a VM-based database over a shared network.

Monitor and Manage:

Use OpenShift’s monitoring tools to observe the performance and health of both VMs and containers.

Manage workloads using the OpenShift console or CLI tools like oc and kubectl.
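The VM specification mentioned in the steps above (CPU, memory, and storage) can be sketched as a KubeVirt VirtualMachine manifest. The following is a minimal illustration, not the post's original example: the VM name `demo-vm`, the sizing values, and the container disk image are assumptions chosen for the sketch. It builds the manifest as a Python dictionary and prints it as JSON, which `oc apply -f -` accepts in place of YAML.

```python
import json


def build_vm_manifest(name, cpu_cores, memory, container_disk_image):
    """Return a minimal KubeVirt VirtualMachine manifest as a plain dict."""
    return {
        "apiVersion": "kubevirt.io/v1",
        "kind": "VirtualMachine",
        "metadata": {"name": name},
        "spec": {
            # Create the VM in a stopped state; it can be started later.
            "running": False,
            "template": {
                "metadata": {"labels": {"kubevirt.io/domain": name}},
                "spec": {
                    "domain": {
                        "cpu": {"cores": cpu_cores},
                        "resources": {"requests": {"memory": memory}},
                        "devices": {
                            # The disk name must match a volume name below.
                            "disks": [
                                {"name": "rootdisk", "disk": {"bus": "virtio"}}
                            ]
                        },
                    },
                    "volumes": [
                        {
                            "name": "rootdisk",
                            "containerDisk": {"image": container_disk_image},
                        }
                    ],
                },
            },
        },
    }


# All values below are illustrative assumptions, not taken from the post.
manifest = build_vm_manifest(
    name="demo-vm",
    cpu_cores=2,
    memory="2Gi",
    container_disk_image="quay.io/containerdisks/fedora:latest",
)
print(json.dumps(manifest, indent=2))
```

Saving the printed JSON to a file and running `oc apply -f <file>` in a project where OpenShift Virtualization is installed should create the VM definition; a container disk is ephemeral, so a persistent workload such as a database would normally use a PersistentVolumeClaim-backed volume instead.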

Example: Integrating a VM-Based Database with a Containerized Application

Deploy a VM with a Database:

Create a VM running MySQL.

Expose the database service using OpenShift’s networking capabilities.

Deploy a Containerized Application:

Use OpenShift to deploy a containerized web application that connects to the MySQL database.

Verify Connectivity:

Test the application’s ability to query the database.
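As a first step in verifying connectivity, it can help to confirm that the database port is reachable at all before testing real queries. The sketch below is one way to do that with a plain TCP connection attempt. It only shows that something is listening on the port, not that MySQL accepts the application's credentials. To stay runnable anywhere, it checks a local stand-in listener; inside a cluster you would pass the Service name exposing the VM (a hypothetical example would be `mysql-vm.demo.svc.cluster.local`) and port 3306.

```python
import socket


def can_connect(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS resolution failures.
        return False


# Local stand-in for the database endpoint so the sketch runs without a cluster.
listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
listener.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
listener.listen(1)
demo_port = listener.getsockname()[1]

reachable = can_connect("127.0.0.1", demo_port)
listener.close()
closed_reachable = can_connect("127.0.0.1", demo_port)

print("while listening:", reachable)
print("after close:", closed_reachable)
```

A successful port check followed by a failing application query usually points at credentials, database grants, or the MySQL bind address rather than at cluster networking.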

Best Practices

Resource Planning: Allocate resources efficiently to avoid contention between VMs and containers.

Security: Leverage OpenShift’s built-in security features like Role-Based Access Control (RBAC) to manage access to workloads.

Automation: Use OpenShift Pipelines or Ansible to automate the deployment and management of hybrid workloads.

Conclusion

OpenShift Virtualization is a powerful tool for organizations looking to unify their infrastructure and modernize applications. By seamlessly integrating VMs and containers, it provides flexibility, efficiency, and scalability, enabling businesses to harness the best of both worlds. Whether you’re modernizing legacy systems or building hybrid applications, OpenShift Virtualization simplifies the journey and accelerates innovation.

For more details, visit https://www.hawkstack.com/


Becoming a Red Hat Certified OpenShift Application Developer (DO288)

In today's dynamic IT landscape, containerization has become a crucial skill for developers and system administrators. Red Hat's OpenShift platform is at the forefront of this revolution, providing a robust environment for managing containerized applications. For professionals aiming to validate their skills and expertise in this area, the Red Hat Certified OpenShift Application Developer (DO288) certification is a prestigious and highly valued credential. This blog post will delve into what the DO288 certification entails, its benefits, and tips for success.

What is the Red Hat Certified OpenShift Application Developer (DO288) Certification?

The certification focuses on developing, deploying, and managing applications on Red Hat OpenShift Container Platform. OpenShift is a Kubernetes-based platform that automates the process of deploying and scaling applications. The associated exam (EX288; DO288 is the code of the matching training course) tests your ability to design, build, and deploy cloud-native applications on OpenShift.

Why Pursue the DO288 Certification?

Industry Recognition: Red Hat certifications are globally recognized and respected in the IT industry. Obtaining the DO288 credential can significantly enhance your professional credibility and open up new career opportunities.

Skill Validation: The certification validates your expertise in OpenShift, ensuring you have the necessary skills to handle real-world challenges in managing containerized applications.

Career Advancement: With the increasing adoption of containerization and Kubernetes, professionals with OpenShift skills are in high demand. This certification can lead to roles such as OpenShift Developer, DevOps Engineer, and Cloud Architect.

Competitive Edge: In a competitive job market, having the DO288 certification on your resume sets you apart from other candidates, showcasing your commitment to staying current with the latest technologies.

Exam Details and Preparation

The exam (EX288) is performance-based, meaning you will be required to perform tasks on a live system rather than answering multiple-choice questions. This format ensures that certified professionals possess practical, hands-on skills.

Key Exam Topics:

Managing application source code with Git.

Creating and deploying applications from source code.

Managing application builds and image streams.

Configuring application environments using environment variables, ConfigMaps, and Secrets.

Implementing health checks to ensure application reliability.

Scaling applications to meet demand.

Securing applications with OpenShift’s security features.

Preparation Tips:

Training Courses: Enroll in Red Hat's official DO288 training course. This course provides comprehensive coverage of the exam objectives and includes hands-on labs to practice your skills.

Hands-on Practice: Set up a lab environment to practice the tasks outlined in the exam objectives. Familiarize yourself with the OpenShift web console and command-line interface (CLI).

Study Guides and Resources: Utilize Red Hat’s official study guides and documentation. Online communities and forums can also be valuable resources for tips and troubleshooting advice.

Mock Exams: Take practice exams to assess your readiness and identify areas where you need further study.

Real-World Applications

Achieving the DO288 certification equips you with the skills to:

Develop and deploy microservices and containerized applications.

Automate the deployment and scaling of applications using OpenShift.

Enhance application security and reliability through best practices and OpenShift features.

These skills are crucial for organizations looking to modernize their IT infrastructure and embrace cloud-native development practices.

Conclusion

The Red Hat Certified OpenShift Application Developer (DO288) certification is an excellent investment for IT professionals aiming to advance their careers in the field of containerization and cloud-native application development. By validating your skills with this certification, you can demonstrate your expertise in one of the most sought-after technologies in the industry today. Prepare thoroughly, practice diligently, and take the leap to become a certified OpenShift Application Developer.

For more information about the DO288 certification and training courses, visit www.hawkstack.com


This Week in Rust 501

Hello and welcome to another issue of This Week in Rust! Rust is a programming language empowering everyone to build reliable and efficient software. This is a weekly summary of its progress and community. Want something mentioned? Tag us at @ThisWeekInRust on Twitter or @ThisWeekinRust on mastodon.social, or send us a pull request. Want to get involved? We love contributions.

This Week in Rust is openly developed on GitHub and archives can be viewed at this-week-in-rust.org. If you find any errors in this week's issue, please submit a PR.

Updates from Rust Community

Official

Improved API tokens for crates.io

Project/Tooling Updates

rust-analyzer changelog #187

Youki v0.1.0 has been released for use with Kubernetes and more

Build Zellij WebAssembly (Rust) plugins for your Terminal

Slint 1.1 Released with additional Royalty-Free License

Exograph: Declarative GraphQL backends with a Rust-powered runtime

Nutype v0.3

Observations/Thoughts

Rust fact vs. fiction: 5 Insights from Google's Rust journey in 2022

Escaping Macrophages

Code coverage in Rust

[video] Verus - Verified Rust for low-level systems code by Andrea Lattuada

[audio] Daily with Kwindla Hultman Kramer :: Rustacean Station

[audio] Fish Folk with Erlend Sogge Heggen :: Rustacean Station

Rust Walkthroughs

Serde Errors When Deserializing Untagged Enums Are Bad - But Easy to Make Better

Blowing up my compile times for dubious benefits

Walk-Through: Prefix Ranges in Rust, a Surprisingly Deep Dive

ESP32 Embedded Rust at the HAL: Remote Control Peripheral

Auto-Generating & Validating OpenAPI Docs in Rust: A Streamlined Approach with Utoipa and Schemathesis

[video] Writing a Rust-based ring buffer

[video] Supercharge your I/O in Rust with io_uring

[video] Graph Traversal with Breadth-First Search in Rust

[video] Nine Rules for Writing Python Extensions in Rust | PyData Seattle 2023

Research

Friend or Foe Inside? Exploring In-Process Isolation to Maintain Memory Safety for Unsafe Rust

Agile Development of Linux Schedulers with Ekiben

Miscellaneous

How to Deploy Cross-Platform Rust Binaries with GitHub Actions

Crate of the Week

This week's crate is Parsel, an easy to use parser generator.

Thanks to jacg for the suggestion!

Please submit your suggestions and votes for next week!

Call for Participation

Always wanted to contribute to open-source projects but did not know where to start? Every week we highlight some tasks from the Rust community for you to pick and get started!

Some of these tasks may also have mentors available, visit the task page for more information.

RustQuant - Issue 56: Implementing Logistic Regression: weights matrix becomes singular.

RustQuant - Issue 30: Error handling for the library.

RustQuant - Issue 22: Pricing model calibration module.

RustQuant - Issue 14: Add/improve documentation (esp. math related docs).

RustQuant - Issue 57: Increase test coverage (chore).

Ockam - Add API endpoint to retrieve the project's version

Ockam - Validate the credential before storing it

Ockam - Update CLI documentation for lease commands

Send File - create hotspot on Linux operating system

Send File - Get device storage information (used disk size and total memory)

Send File - Create hotspot on Windows Operating system

Send File - Use Tauri store plugin to persist app data

mirrord - Alert user when running on OpenShift

mirrord - Add integration test for listening on the same port again after closing

If you are a Rust project owner and are looking for contributors, please submit tasks here.

Updates from the Rust Project

400 pull requests were merged in the last week

syntactically accept become expressions (explicit tail calls experiment)

hir: Add Become expression kind (explicit tail calls experiment)

add esp-idf missing targets

accept ReStatic for RPITIT

account for sealed traits in privacy and trait bound errors

add support for NetBSD/aarch64-be (big-endian arm64)

always register sized obligation for argument

better error for non const PartialEq call generated by match

don't ICE on unnormalized struct tail in layout computation

don't structurally resolve during method ambiguity in probe

don't substitute a GAT that has mismatched generics in OpaqueTypeCollector

expose compiler-builtins-weak-intrinsics feature for -Zbuild-std

fix return type notation associated type suggestion when -Zlower-impl-trait-in-trait-to-assoc-ty

fix return type notation errors with -Zlower-impl-trait-in-trait-to-assoc-ty

add cfg diagnostic for unresolved import error

inline before merging cgus

liberate bound vars properly when suggesting missing async-fn-in-trait

make closure_saved_names_of_captured_variables a query

make queries traceable again

merge BorrowKind::Unique into BorrowKind::Mut

sort the errors from arguments checking so that suggestions are handled properly

suggest correct signature on missing fn returning RPITIT/AFIT

support Apple tvOS in libstd

test the cargo args generated by bootstrap.py

use ErrorGuaranteed instead of booleans in rustc_builtin_macros

various impl trait in assoc tys cleanups

warn on unused offset_of!() result

fix: generalize types before generating built-in Normalize clauses

support FnPtr trait

miri: mmap/munmap/mremap shims

Default: Always inline primitive data types

add alloc::rc::UniqueRc

make {Arc, Rc, Weak}::ptr_eq ignore pointer metadata

alter Display for Ipv6Addr for IPv4-compatible addresses

fix windows Socket::connect_timeout overflow

specialize StepBy<Range<{integer}>>

implement PartialOrd for Vecs over different allocators

implement Sync for mpsc::Sender

cargo: Support cargo Cargo.toml

cargo: add .toml file extension restriction for -Zconfig-include

cargo: allow embedded manifests in all commands

rustdoc: partially fix invalid files creation

rustdoc: fix union fields display

rustdoc: handle assoc const equalities in cross-crate impl-Trait-in-arg-pos

rustdoc: render the body of associated types before the where-clause

rustfmt: handling of numbered markdown lists

rustfmt: implement let-else formatting (finally!)

clippy: borrow_as_ptr: Ignore temporaries

clippy: format_push_string: look through match and if expressions

clippy: get_unwrap: include a borrow in the suggestion if argument is not an integer literal

clippy: items_after_test_module: Ignore in-proc-macros items

clippy: ptr_arg: Don't lint when return type uses Cow's lifetime

clippy: single_match: don't lint if block contains comments

clippy: type_repetition_in_bounds: respect MSRV for combining bounds

clippy: allow safety comment above attributes

clippy: avoid linting extra_unused_type_parameters on procedural macros

clippy: check if if conditions always evaluate to true in never_loop

clippy: don't lint excessive_precision on inf

clippy: don't lint iter_nth_zero in next

clippy: lint mem_forget if any fields are Drop

rust-analyzer: feature: assist delegate impl

rust-analyzer: fix some unsizing problems in mir

rust-analyzer: skip mutable diagnostics on synthetic bindings

rust-analyzer: support manual impl of fn traits in mir interpreter

rust-analyzer: support more intrinsics in mir interpreter

Rust Compiler Performance Triage

Relatively quiet week outside of a large win on one incremental benchmark in a regression test (i.e., not real world code). Bimodality in a number of benchmarks continues to be an issue.

Triage done by @simulacrum. Revision range: b9d608c9..b5e51db

5 Regressions, 6 Improvements, 3 Mixed; 5 of them in rollups

44 artifact comparisons made in total

Full report here

Approved RFCs

Changes to Rust follow the Rust RFC (request for comments) process. These are the RFCs that were approved for implementation this week:

No RFCs were approved this week.

Final Comment Period

Every week, the team announces the 'final comment period' for RFCs and key PRs which are reaching a decision. Express your opinions now.

RFCs

No RFCs entered Final Comment Period this week.

Tracking Issues & PRs

[disposition: close] Tracking issue for std::default::default()

[disposition: merge] Create unnecessary_send_constraint lint for &(dyn ... + Send)

[disposition: merge] Change default panic handler message format.

[disposition: close] MSVC and rustc disagree on minimum stack alignment on x86 Windows

[disposition: merge] style-guide: Add chapter about formatting for nightly-only syntax

[disposition: merge] rustdoc: Allow whitespace as path separator like double colon

[disposition: merge] Add internal_features lint

[disposition: merge] Don't require associated types with Self: Sized bounds in dyn Trait objects

[disposition: merge] Return Ok on kill if process has already exited

New and Updated RFCs

No New or Updated RFCs were created this week.

Call for Testing

An important step for RFC implementation is for people to experiment with the implementation and give feedback, especially before stabilization. The following RFCs would benefit from user testing before moving forward:

eRFC: single-file packages ("cargo script") integration

Testing steps

If you are a feature implementer and would like your RFC to appear on the above list, add the new call-for-testing label to your RFC along with a comment providing testing instructions and/or guidance on which aspect(s) of the feature need testing.

Upcoming Events

Rusty Events between 2023-06-28 - 2023-07-26 🦀

Virtual

2023-06-28 | Virtual (Cardiff, UK) | Rust and C++ Cardiff

Building Our Own 'Arc' in Rust (Atomics & Locks Chapter 6)

2023-06-28 | Virtual (Chicago, IL, US) | Chicago Healthcare Cloud Technology Community

Rust for Mission-Critical AI: A Journey into Healthcare's Safest Language

2023-06-29 | Virtual (Charlottesville, VA, US) | Charlottesville Rust Meetup

Crafting Interpreters in Rust Collaboratively

2023-07-01 | Virtual (Nürnberg, DE) | Rust Nuremberg

Deep Dive Session 4: Protohackers Exercises Mob Coding (Problem II onwards)

2023-07-04 | Virtual (Berlin, DE) | Berline.rs / OpenTechSchool Berlin

Rust Hack and Learn

2023-07-04 | Virtual (Buffalo, NY, US) | Buffalo Rust Meetup

Buffalo Rust User Group, First Tuesdays

2023-07-05 | Virtual (Indianapolis, IN, US) | Indy Rust

Indy.rs - with Social Distancing

2023-07-05 | Virtual (Stuttgart, DE) | Rust Community Stuttgart

Rust-Meetup

2023-07-06 | Virtual (Ciudad de México, MX) | Rust MX

Rust y Haskell

2023-07-11 | Virtual (Dallas, TX, US) | Dallas Rust

Second Tuesday

2023-07-13 - 2023-07-14 | Virtual | Scientific Computing in Rust

Scientific Computing in Rust workshop

2023-07-13 | Virtual (Edinburgh, UK) | Rust Edinburgh

Reasoning about Rust: an introduction to Rustdoc’s JSON format

2023-07-19 | Virtual (Vancouver, BC, CA) | Vancouver Rust

Rust Study/Hack/Hang-out

2023-07-20 | Virtual (Tehran, IR) | Iran Rust Meetup

Iran Rust Meetup #12 - Ownership and Memory management

Asia

2023-06-29 | Seoul, KR | T-RUST meetup

🦀 T-RUST Meetup 🦀

Europe

2023-06-28 | Bratislava, SK | Bratislava Rust Meetup Group

Rust Meetup by Sonalake

2023-06-29 | Augsburg, DE | Rust Meetup Augsburg

Augsburg Rust Meetup

2023-06-29 | Copenhagen, DK | Copenhagen Rust Community

Rust meetup #37 at Samsung!

2023-06-29 | Vienna, AT | Rust Vienna

Rust Vienna Meetup - June - final meetup before a summer break

2023-07-01 | Basel, CH | Rust Basel

(Beginner) Rust Workshop

2023-07-03 | Zurich, CH | Rust Zurich

Rust in the Linux Kernel - July Meetup

2023-07-05 | Lyon, FR | Rust Lyon

Rust Lyon Meetup #5

2023-07-11 | Breda, NL | Rust Nederland

Rust: Advanced Graphics and User Interfaces

2023-07-11 | Virtual | Mainmatter

Web-based Services in Rust, 3-day Workshop with Stefan Baumgartner

2023-07-13 | Reading, UK | Reading Rust Workshop

Reading Rust Meetup at Browns

North America

2023-06-21 | Somerville, MA, US | Boston Rust Meetup

Ball Square Rust Lunch, June 21

2023-06-22 | New York, NY, US | Rust NYC

Learn How to Use cargo-semver-checks and Closure Traits to Write Better Code

2023-06-24 | San Jose, CA, US | Rust Breakfast & Learn

Rust: breakfast & learn

2023-06-28 | Cambridge, MA, US | Boston Rust Meetup

Harvard Square Rust Lunch

2023-06-29 | Mountain View, CA, US | Mountain View Rust Meetup

Rust Meetup at Hacker Dojo

2023-07-01 | San Jose, CA, US | Rust Breakfast & Learn

Rust: breakfast & learn

2023-07-07 | Chicago, IL, US | Deep Dish Rust

Rust Lunch

2023-07-12 | Austin, TX, US | Rust ATX

Rust Lunch - Fareground

2023-07-12 | Waterloo, ON, CA | Rust KW

Overengineering FizzBuzz

2023-07-13 | Seattle, WA, US | Seattle Rust User Group Meetup

July Meetup

2023-07-18 | San Francisco, CA, US | San Francisco Rust Study Group

Rust Hacking in Person

Oceania

2023-07-11 | Christchurch, NZ | Christchurch Rust Meetup Group

Christchurch Rust meetup meeting

2023-07-11 | Melbourne, VIC, AU | Rust Melbourne

(Hybrid - in person & online) July 2023 Rust Melbourne Meetup

South America

2023-07-04 | Medellín, CO | Rust Medellín

Introduccion a rust, ownership and safety code

If you are running a Rust event please add it to the calendar to get it mentioned here. Please remember to add a link to the event too. Email the Rust Community Team for access.

Jobs

Please see the latest Who's Hiring thread on r/rust

Quote of the Week

It's a compiler not a Jedi, don't expect it to read minds.

– Nishant on GitHub

Thanks to Nishant for the self-suggestion!

Please submit quotes and vote for next week!

This Week in Rust is edited by: nellshamrell, llogiq, cdmistman, ericseppanen, extrawurst, andrewpollack, U007D, kolharsam, joelmarcey, mariannegoldin, bennyvasquez.

Email list hosting is sponsored by The Rust Foundation

Discuss on r/rust


Create a Project in OpenShift Using the Web Console and the Command-Line Tool

To create a project in OpenShift, you can use either the web console or the command-line interface (CLI). Create a project using the web console: Log in to the OpenShift web console. In the top navigation menu, click the “Projects” dropdown menu and select “Create Project”. Enter a name for your project and an optional display name and description. Select an optional project template and click…
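For the CLI route, the same three inputs (name, optional display name, optional description) map onto `oc new-project` and its `--display-name` and `--description` flags. The sketch below only assembles and prints the command so it can be reviewed before running; the project name and texts are made-up examples, and the helper function name is hypothetical.

```python
import shlex


def new_project_command(name, display_name=None, description=None):
    """Build the argument list for `oc new-project` with optional metadata."""
    cmd = ["oc", "new-project", name]
    if display_name:
        cmd.append(f"--display-name={display_name}")
    if description:
        cmd.append(f"--description={description}")
    return cmd


# Example values are assumptions for illustration only.
cmd = new_project_command(
    "demo-project",
    display_name="Demo Project",
    description="Sandbox for testing",
)

# shlex.join quotes arguments that contain spaces, so the line is copy-paste safe.
print(shlex.join(cmd))
```

After logging in with `oc login`, the list can be passed to `subprocess.run(cmd, check=True)` to create the project from a script.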


Use the Power of Kubernetes with OpenShift Origin

Get the most modern and powerful OpenShift OKD subscription with VENATRIX.

OpenShift Origin / OKD is an open source cloud development Platform as a Service (PaaS). This cloud-based platform allows developers to create, test and run their applications and deploy them to the cloud.

Automate the build, deployment and management of your applications with the OpenShift Origin platform.

OpenShift is suitable for any application, language, infrastructure, and industry. Using OpenShift helps developers use their resources more efficiently and flexibly, improve monitoring and maintenance, harden application security, and enjoy a better overall developer experience. Venatrix’s OpenShift services are infrastructure-independent, so any industry can benefit from them.

What is OpenShift Origin?

Red Hat OpenShift Origin is a multifaceted, open source container application platform from Red Hat Inc. for the development, deployment and management of applications. The OpenShift Origin container platform can be deployed on a public, private or hybrid cloud and runs applications in Docker containers. It is built on top of Kubernetes and gives you tools like a web console and CLI to manage features like load balancing and horizontal scaling. It simplifies operations and development for cloud-native applications.

Red Hat OpenShift Origin Container Platform helps organizations develop, deploy, and manage existing and container-based apps seamlessly across physical, virtual, and public cloud infrastructures. It’s built on proven open source technologies and helps application development and IT operations teams modernize applications, deliver new services, and accelerate development processes.

Developers can quickly and easily create applications and deploy them. With S2I (Source-to-Image), a developer can even deploy their code without needing to create a container image first. Operators can leverage placement and policy to orchestrate environments that meet their best practices. Combining development and operations in a single platform helps the two work together smoothly. Because it deploys Docker containers, the platform can run multiple languages, frameworks and databases side by side. Easily deploy microservices written in Java, Python, PHP or other languages.


In an OpenShift or OKD Kubernetes Cluster, the ClusterVersion custom resource holds important high-level information about your cluster. This information include cluster version, update channels and status of the cluster operators. In this article I’ll demonstrate how Cluster Administrator can check cluster version as well as the status of operators in OpenShift / OKD Cluster. Check Cluster Version in OpenShift / OKD You can easily retrieve the cluster version from the CLI using��oc command to verify that it is running the desired version, and also to ensure that the cluster uses the right subscription channel. # Red Hat OpenShift $ oc get clusterversion NAME VERSION AVAILABLE PROGRESSING SINCE STATUS version 4.8.10 True False 10d Cluster version is 4.8.10 # OKD Cluster $ oc get clusterversion NAME VERSION AVAILABLE PROGRESSING SINCE STATUS version 4.7.0-0.okd-2021-05-22-050008 True False 66d Cluster version is 4.7.0-0.okd-2021-05-22-050008 To obtain more detailed information about the cluster status run the oc describe clusterversion command: $ oc describe clusterversion ...output omitted... Spec: Channel: fast-4.8 Cluster ID: f3dc42b3-aeec-4f4c-780f-8a04d6951595 Desired Update: Force: false Image: quay.io/openshift-release-dev/ocp-release@sha256:53576e4df71a5f00f77718f25aec6ac7946eaaab998d99d3e3f03fcb403364db Version: 4.8.10 Status: Available Updates: Channels: candidate-4.8 candidate-4.9 fast-4.8 Image: quay.io/openshift-release-dev/ocp-release@sha256:c3af995af7ee85e88c43c943e0a64c7066d90e77fafdabc7b22a095e4ea3c25a URL: https://access.redhat.com/errata/RHBA-2021:3511 Version: 4.8.12 Channels: candidate-4.8 candidate-4.9 fast-4.8 stable-4.8 Image: quay.io/openshift-release-dev/ocp-release@sha256:26f9da8c2567ddf15f917515008563db8b3c9e43120d3d22f9d00a16b0eb9b97 URL: https://access.redhat.com/errata/RHBA-2021:3429 Version: 4.8.11 ...output omitted... Where: Channel: fast-4.8 – Displays the version of the cluster the channel being used. 
Cluster ID: f3dc42b3-aeec-4f4c-780f-8a04d6951595 – Displays the unique identifier for the cluster

Available Updates: Displays the updates available and channels

Review OpenShift / OKD Cluster Operators

OpenShift Container Platform / OKD cluster operators are top-level operators that manage the cluster. They are responsible for the main components, such as the web console, storage, API server, and SDN. All information about cluster operators is accessible through the ClusterOperator resource, which gives you either an overview of all cluster operators or detailed information on a given operator.

To retrieve the list of all cluster operators, run the following command:

    $ oc get clusteroperators
    NAME                                      VERSION   AVAILABLE   PROGRESSING   DEGRADED   SINCE
    authentication                            4.8.10    True        False         False      2d14h
    baremetal                                 4.8.10    True        False         False      35d
    cloud-credential                          4.8.10    True        False         False      35d
    cluster-autoscaler                        4.8.10    True        False         False      35d
    config-operator                           4.8.10    True        False         False      35d
    console                                   4.8.10    True        False         False      10d
    csi-snapshot-controller                   4.8.10    True        False         False      35d
    dns                                       4.8.10    True        False         False      35d
    etcd                                      4.8.10    True        False         False      35d
    image-registry                            4.8.10    True        False         False      35d
    ingress                                   4.8.10    True        False         False      35d
    insights                                  4.8.10    True        False         False      35d
    kube-apiserver                            4.8.10    True        False         False      35d
    kube-controller-manager                   4.8.10    True        False         False      35d
    kube-scheduler                            4.8.10    True        False         False      35d
    kube-storage-version-migrator             4.8.10    True        False         False      10d
    machine-api                               4.8.10    True        False         False      35d
    machine-approver                          4.8.10    True        False         False      35d
    machine-config                            4.8.10    True        False         False      35d
    marketplace                               4.8.10    True        False         False      35d
    monitoring                                4.8.10    True        False         False      3d5h
    network                                   4.8.10    True        False         False      35d
    node-tuning                               4.8.10    True        False         False      10d
    openshift-apiserver                       4.8.10    True        False         False      12d
    openshift-controller-manager              4.8.10    True        False         False      34d
    openshift-samples                         4.8.10    True        False         False      10d
    operator-lifecycle-manager                4.8.10    True        False         False      35d
    operator-lifecycle-manager-catalog        4.8.10    True        False         False      35d
    operator-lifecycle-manager-packageserver  4.8.10    True        False         False      18d
    service-ca                                4.8.10    True        False         False      35d
    storage                                   4.8.10    True        False         False      35d

Key columns in the output:

NAME – The name of the operator.

AVAILABLE – Whether the operator has been deployed successfully. True means the operator is deployed and available for use in the cluster.

PROGRESSING – Whether the operator is being updated to a newer version by the cluster version operator. True means an update is pending completion.

DEGRADED – The health of the operator. True means the operator has encountered an error that prevents it from working properly; in the degraded state, the current state has not matched the desired state over a period of time.

You can limit the output to a single operator:

    $ oc get co authentication
    NAME             VERSION   AVAILABLE   PROGRESSING   DEGRADED   SINCE
    authentication   4.8.10    True        False         False      2d14h

To get more information about an operator use:

    $ oc describe clusteroperators

Example:

    $ oc describe co authentication

If you are in the process of upgrading your OpenShift / OKD cluster from one minor version to another, we have a dedicated upgrade guide at the link below: How To Upgrade OpenShift / OKD Cluster Minor Version
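The column meanings above suggest a quick health filter. The following is a minimal sketch, assuming the six-column layout shown in the listing; `unhealthy_operators` is a hypothetical helper name, not part of the oc CLI, and the sample rows are made up for an offline demonstration.

```shell
# Print the names of operators that are unavailable or degraded.
# Reads `oc get clusteroperators` output on stdin (assumed column order:
# NAME VERSION AVAILABLE PROGRESSING DEGRADED SINCE).
unhealthy_operators() {
  awk 'NR > 1 && ($3 != "True" || $5 != "False") { print $1 }'
}

# Against a live cluster you would run:
#   oc get clusteroperators | unhealthy_operators
# Offline demonstration with invented sample rows:
sample='NAME VERSION AVAILABLE PROGRESSING DEGRADED SINCE
authentication 4.8.10 True False False 2d14h
console 4.8.10 False False True 10d
dns 4.8.10 True False False 35d'
printf '%s\n' "$sample" | unhealthy_operators
```

With the sample rows, only `console` is reported. An empty result on a real cluster would suggest that every operator is available and not degraded.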

0 notes

Text

Motorola flash tool for android

MOTOROLA FLASH TOOL FOR ANDROID HOW TO

MOTOROLA FLASH TOOL FOR ANDROID APK

MOTOROLA FLASH TOOL FOR ANDROID ANDROID

MOTOROLA FLASH TOOL FOR ANDROID PC

MOTOROLA FLASH TOOL FOR ANDROID FREE

Delete All BookMarks On Chrome at Once To delete all the google chrome bookmarks at once, you need to follow these steps: Bij BMW Tweaks kunt u uw CIC, NBT of NBT2 navigatie laten updaten naar de meest recente versie.

MOTOROLA FLASH TOOL FOR ANDROID HOW TO

If you are wondering how to delete all bookmarks on chrome in one click then here is how you can do this. So you need to delete them in together with one click.xHP Flashtool is the worlds only solution for your 6/8-Speed Automatic or 7-Speed DCT Transmission! Choose from pre-defined maps, or. Network Interface Card: A network interface controller (NIC) (also known as a network interface card, network adapter) is an electronic device that connects a computer to a computer network/ Modern NIC usually comes up with speed of 1-10Gbps. Basic Request Lets cover some basic terminologies before we dig into Receive Side Scaling and Receive Packet Steering. Xef (Deprecated) Even this api is still working, it has been deprecated in favor of the new REST one See Xef Catalog section one instead.

MOTOROLA FLASH TOOL FOR ANDROID FREE

Feel free to navigate through the docs and tell us if you feel that something can be improved. This need, along with the desire to own and manage my own data spurred. While Google would certainly offer better search results for most of the queries that we were interested in, they no longer offer a cheap and convenient way of creating custom search engines.

A research project I spent time working on during my master’s required me to scrape, index and rerank a largish number of websites.

If any of the index patterns listed there have no existing indexes in Elasticsearch, then the page will not respond all to you left clicking on those patterns, making it impossible to highlight the pattern you want to delete. In Kibana, go to Management->Index Patterns.

I saw this issue with ElasticStack 5.3.0.

GoToConnect comes packed with over 100 features across cloud VoIP and web, audio and video conferencing. Scroll down until you find the cover that matches the book that you wish to delete Set your view to "Large Icons" so you can see the pictures of the covers of your books 16. Duplicate Sweeper can delete duplicate files, photos, music and more.

MOTOROLA FLASH TOOL FOR ANDROID PC

Find and remove duplicate files on your PC or Mac. Both have the same engine inside (Truth is that CLI tool is just the program that uses the library under the hood). It is also available as a library for developers and as a CLI for terminal-based use cases.

What is CURL ? CURL is a tool for data transfer.

Today were reviewing Xdelete after 6 months of use on the 335! I'll be answering some questions and going over some new features from a recent update! Enjoy. As I noticed how incredible slow and ineffecient managing my woocommerce store was, I decided to build a very simple woocommerce store manager using Django. Hi everyone, In my free time I'm working on setting up a small wordpress + woocommerce webshop. DataWrangler xcopy - xdelete? Pixelab_Datman: 12/19/01 10:23 AM: Rather than trying to devise a convoluted batch file, I suggest the use of XXCOPY which is designed for common What is a wide ip?¶ A wide IP maps a fully-qualified domain name (FQDN) to one or more pools of virtual servers that host the content of a domain.

The latest Tweets from Sathyasarathi Python coder, Chatterbox, Anorexic, Linux Geek.

xDelete lets you take control of your BMW xDrive System! Worth the price just if you want a bit of fun or to solve an xdrive fault.$ oc exec -it elasticsearch-cdm-xxxx-1-yyyy-zzzz -n openshift-logging bash bash-4.2$ health Tue Nov 10 06:19: epoch timestamp cluster status node.total node.data shards pri relo init unassign pending_tasks max_task_wait_time active_shards_percent XDelete. My car is completely different and a whole new level of fun.

MOTOROLA FLASH TOOL FOR ANDROID ANDROID

apk dosyaları kurulmasına izin, o zaman güvenle RollingAPK üzerinde mevcut tüm Android Uygulamaları ve Oyunları yükleyebilirsiniz! When I finally installed xDelete on my e92 335i xdrive it worked like a charm. apk Uygulamasını yüklemek için: Cihazınızda Ayarlar menüsüne gidin ve bilinmeyen kaynaklardan. Eğer bazı kolay talimat yapmalıyım cihazınızda Bx xd Android.

MOTOROLA FLASH TOOL FOR ANDROID APK

Preamble Back in the windows 3.1, and for a long time there after, one of the powerful tools shipped with windows was xdel.exe!Then it stopped being included, I guess because you can now do the same 'function' using (a) the built-in 'del', with lots of enhanced abilities, or (b) in the GUI windows explorer.Android Uygulama - Bx xd APK üzerinde indirmek için kullanılabilir.

1 note


Text

A Primer on OpenShift CLI Tools


The command-line interface (CLI) is an effective text-based user interface (UI). Today, many users rely on graphical user interfaces and menu-driven interactions, but some programming and maintenance tasks have no GUI, and the GUI can at times be slow. In such scenarios, a command-line interface can be used instead. When working on the OpenShift Container Platform, a variety of tasks can be…


0 notes

Text

Integrating ROSA Applications with AWS Services (CS221)

In today's rapidly evolving cloud-native landscape, enterprises are looking for scalable, secure, and fully managed Kubernetes solutions that work seamlessly with existing cloud infrastructure. Red Hat OpenShift Service on AWS (ROSA) meets that demand by combining the power of Red Hat OpenShift with the scalability and flexibility of Amazon Web Services (AWS).

In this blog post, we’ll explore how you can integrate ROSA-based applications with key AWS services, unlocking a powerful hybrid architecture that enhances your applications' capabilities.

📌 What is ROSA?

ROSA (Red Hat OpenShift Service on AWS) is a managed OpenShift offering jointly developed and supported by Red Hat and AWS. It allows you to run containerized applications using OpenShift while taking full advantage of AWS services such as storage, databases, analytics, and identity management.

🔗 Why Integrate ROSA with AWS Services?

Integrating ROSA with native AWS services enables:

Seamless access to AWS resources (like RDS, S3, DynamoDB)

Improved scalability and availability

Cost-effective hybrid application architecture

Enhanced observability and monitoring

Secure IAM-based access control using AWS IAM Roles for Service Accounts (IRSA)

🛠️ Key Integration Scenarios

1. Storage Integration with Amazon S3 and EFS

Applications deployed on ROSA can use AWS storage services for persistent and object storage needs.

Use Case: A web app storing images to S3.

How: Use OpenShift’s CSI drivers to mount EFS or access S3 through SDKs or CLI.

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: efs-pvc
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: efs-sc
  resources:
    requests:
      storage: 5Gi

2. Database Integration with Amazon RDS

You can offload your relational database requirements to managed RDS instances.

Use Case: Deploying a Spring Boot app with PostgreSQL on RDS.

How: Store DB credentials in Kubernetes secrets and use RDS endpoint in your app’s config.


SPRING_DATASOURCE_URL=jdbc:postgresql://<rds-endpoint>:5432/mydb
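The approach above, credentials in a Secret and the RDS endpoint in the app's config, could be sketched as follows. This is an illustration under assumptions: the Secret name `rds-credentials`, the deployment name `spring-app`, and both helper function names are hypothetical, and the database name `mydb` is taken from the example URL.

```shell
# Build the JDBC URL from its parts: host, port, database name.
build_jdbc_url() {
  printf 'jdbc:postgresql://%s:%s/%s\n' "$1" "$2" "$3"
}

# Store the connection settings in a Secret and inject them into the
# deployment as environment variables. Defined only; call it against a
# cluster you are logged in to.
#   $1 = RDS endpoint, $2 = DB user, $3 = DB password
configure_datasource() {
  oc create secret generic rds-credentials \
    --from-literal=SPRING_DATASOURCE_URL="$(build_jdbc_url "$1" 5432 mydb)" \
    --from-literal=SPRING_DATASOURCE_USERNAME="$2" \
    --from-literal=SPRING_DATASOURCE_PASSWORD="$3"
  oc set env deployment/spring-app --from=secret/rds-credentials
}
```

Keeping the username and password in the Secret rather than in the deployment manifest means they stay out of version control.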

3. Authentication with AWS IAM + OIDC

ROSA supports IAM Roles for Service Accounts (IRSA), enabling fine-grained permissions for workloads.

Use Case: Granting a pod access to a specific S3 bucket.

How:

Create an IAM role with S3 access

Associate it with a Kubernetes service account

Use OIDC to federate access
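The second step, associating the role with a service account, might look like the sketch below. It assumes an STS-enabled ROSA cluster where the pod identity webhook honours the `eks.amazonaws.com/role-arn` annotation; the helper names, and every account, role, namespace, and service account value you pass in, are placeholders.

```shell
# Compose an IAM role ARN from an AWS account ID and a role name.
role_arn() {
  printf 'arn:aws:iam::%s:role/%s\n' "$1" "$2"
}

# Annotate a service account so its pods can assume the role via OIDC.
# Defined only; run it while logged in to the cluster.
#   $1 = namespace, $2 = service account, $3 = AWS account ID, $4 = role name
bind_role_to_service_account() {
  oc annotate serviceaccount "$2" -n "$1" \
    "eks.amazonaws.com/role-arn=$(role_arn "$3" "$4")"
}
```

The IAM role's trust policy still has to name the cluster's OIDC provider and the service account; that part is configured on the AWS side and is not shown here.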

4. Observability with Amazon CloudWatch and Prometheus

Monitor your workloads using Amazon CloudWatch Container Insights or integrate Prometheus and Grafana on ROSA for deeper insights.

Use Case: Track application metrics and logs in a single AWS dashboard.

How: Forward logs from OpenShift to CloudWatch using Fluent Bit.

5. Serverless Integration with AWS Lambda

Bridge your ROSA applications with AWS Lambda for event-driven workloads.

Use Case: Triggering a Lambda function on file upload to S3.

How: Use EventBridge or S3 event notifications with your ROSA app triggering the workflow.

🔒 Security Best Practices

Use IAM Roles for Service Accounts (IRSA) to avoid hardcoding credentials.

Use AWS Secrets Manager or OpenShift Vault integration for managing secrets securely.

Enable AWS PrivateLink to keep traffic within AWS private network boundaries.

🚀 Getting Started

To start integrating your ROSA applications with AWS:

Deploy your ROSA cluster using the AWS Management Console or CLI

Set up AWS CLI & IAM permissions

Enable the AWS services needed (e.g., RDS, S3, Lambda)

Create Kubernetes Secrets and ConfigMaps for service integration

Use ServiceAccounts, RBAC, and IRSA for secure access

🎯 Final Thoughts

ROSA is not just about running Kubernetes on AWS—it's about unlocking the true hybrid cloud potential by integrating with a rich ecosystem of AWS services. Whether you're building microservices, data pipelines, or enterprise-grade applications, ROSA + AWS gives you the tools to scale confidently, operate securely, and innovate rapidly.

If you're interested in hands-on workshops, consulting, or ROSA enablement for your team, feel free to reach out to HawkStack Technologies – your trusted Red Hat and AWS integration partner.

💬 Let's Talk!

Have you tried ROSA yet? What AWS services are you integrating with your OpenShift workloads? Share your experience or questions in the comments!

For more details www.hawkstack.com

0 notes

Text

Running Legacy Applications on OpenShift Virtualization: A How-To Guide

Organizations looking to modernize their IT infrastructure often face a significant challenge: legacy applications. These applications, while critical to operations, may not be easily containerized. Red Hat OpenShift Virtualization offers a solution, enabling businesses to run legacy virtual machine (VM)-based applications alongside containerized workloads. This guide provides a step-by-step approach to running legacy applications on OpenShift Virtualization.

Why Use OpenShift Virtualization for Legacy Applications?

OpenShift Virtualization, powered by KubeVirt, integrates VM management into the Kubernetes ecosystem. This allows organizations to:

Preserve Investments: Continue using legacy applications without expensive rearchitecture.

Simplify Operations: Manage VMs and containers through a unified OpenShift Console.

Bridge the Gap: Modernize incrementally by running VMs alongside microservices.

Enhance Security: Leverage OpenShift’s built-in security features like SELinux and RBAC for both containers and VMs.

Preparing Your Environment

Before deploying legacy applications on OpenShift Virtualization, ensure the following:

OpenShift Cluster: A running OpenShift Container Platform (OCP) cluster with sufficient resources.

OpenShift Virtualization Operator: Installed and configured from the OperatorHub.

VM Images: A QCOW2, OVA, or ISO image of your legacy application.

Storage and Networking: Configured storage classes and network settings to support VM operations.

Step 1: Enable OpenShift Virtualization

Log in to your OpenShift Web Console.

Navigate to OperatorHub and search for "OpenShift Virtualization".

Install the OpenShift Virtualization Operator.

After installation, verify the "KubeVirt" custom resources are available.

Step 2: Create a Virtual Machine

Access the Virtualization Dashboard: Go to the Virtualization tab in the OpenShift Console.

New Virtual Machine: Click on "Create Virtual Machine" and select "From Virtual Machine Import" or "From Scratch".

Define VM Specifications:

Select the operating system and size of the VM.

Attach the legacy application’s disk image.

Allocate CPU, memory, and storage resources.

Configure Networking: Assign a network interface to the VM, such as a bridge or virtual network.

Step 3: Deploy the Virtual Machine

Review the VM configuration and click "Create".

Monitor the deployment process in the OpenShift Console or use the CLI with:

oc get vmi

Once deployed, the VM will appear under the Virtual Machines section.

Step 4: Connect to the Virtual Machine

Access via Console: Open the VM’s console directly from the OpenShift UI.

SSH Access: If configured, connect to the VM using SSH.

Test the legacy application to ensure proper functionality.

Step 5: Integrate with Containerized Services

Expose VM Services: Create a Kubernetes Service to expose the VM to other workloads, using the virtctl client.

virtctl expose vmi <vm-name> --name <service-name> --port=8080 --target-port=80

Connect Containers: Use Kubernetes-native networking to allow containers to interact with the VM.

Best Practices

Resource Allocation: Ensure the cluster has sufficient resources to support both VMs and containers.

Snapshots and Backups: Use OpenShift’s snapshot capabilities to back up VMs.

Monitoring: Leverage OpenShift Monitoring to track VM performance and health.

Security Policies: Implement network policies and RBAC to secure VM access.

Conclusion

Running legacy applications on OpenShift Virtualization allows organizations to modernize at their own pace while maintaining critical operations. By integrating VMs into the Kubernetes ecosystem, businesses can manage hybrid workloads more efficiently and prepare for a future of cloud-native applications. With this guide, you can seamlessly bring your legacy applications into the OpenShift environment and unlock new possibilities for innovation.

For more details visit: https://www.hawkstack.com/

0 notes

Text

Deploying Your First Application on OpenShift

Deploying an application on OpenShift can be straightforward with the right guidance. In this tutorial, we'll walk through deploying a simple "Hello World" application on OpenShift. We'll cover creating an OpenShift project, deploying the application, and exposing it to the internet.

Prerequisites

OpenShift CLI (oc): Ensure you have the OpenShift CLI installed. You can download it from the OpenShift CLI Download page.

OpenShift Cluster: You need access to an OpenShift cluster. You can set up a local cluster using Minishift or use an online service like OpenShift Online.

Step 1: Log In to Your OpenShift Cluster

First, log in to your OpenShift cluster using the oc command.

oc login https://<your-cluster-url> --token=<your-token>

Replace <your-cluster-url> with the URL of your OpenShift cluster and <your-token> with your OpenShift token.

Step 2: Create a New Project

Create a new project to deploy your application.

oc new-project hello-world-project

Step 3: Create a Simple Hello World Application

For this tutorial, we'll use a simple Node.js application. Create a new directory for your project and initialize a new Node.js application.

mkdir hello-world-app
cd hello-world-app
npm init -y

Create a file named server.js and add the following content:

const express = require('express');
const app = express();
const port = 8080;

app.get('/', (req, res) => res.send('Hello World from OpenShift!'));

app.listen(port, () => {
  console.log(`Server running at http://localhost:${port}/`);
});

Install the necessary dependencies.

npm install express

Step 4: Create a Dockerfile

Create a Dockerfile in the same directory with the following content:

FROM node:14
WORKDIR /app
COPY package*.json ./
RUN npm install
COPY . .
EXPOSE 8080
CMD ["node", "server.js"]

Step 5: Build and Push the Docker Image

Log in to your Docker registry (e.g., Docker Hub), then build and push the Docker image.

docker login
docker build -t <your-dockerhub-username>/hello-world-app .
docker push <your-dockerhub-username>/hello-world-app

Replace <your-dockerhub-username> with your Docker Hub username.

Step 6: Deploy the Application on OpenShift

Create a new application in your OpenShift project using the Docker image.

oc new-app <your-dockerhub-username>/hello-world-app

OpenShift will automatically create the necessary deployment configuration, service, and pod for your application.

Step 7: Expose the Application

Expose your application to create a route, making it accessible from the internet.

oc expose svc/hello-world-app

Step 8: Access the Application

Get the route URL for your application.

oc get routes

Open the URL in your web browser. You should see the message "Hello World from OpenShift!".
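The same check can be done from the terminal. This is a minimal sketch, assuming the route is named `hello-world-app` (the name `oc expose svc/hello-world-app` would give it by default) and is served over plain HTTP; both helper names are hypothetical.

```shell
# Turn a route host name into the URL to request.
route_url() {
  printf 'http://%s/\n' "$1"
}

# Look up the route host and fetch the page. Defined only; run it while
# logged in to the cluster.
check_app() {
  host=$(oc get route hello-world-app -o jsonpath='{.spec.host}') || return 1
  curl -fsS "$(route_url "$host")"
}
```

Calling `check_app` should print the greeting returned by server.js; `curl -f` makes the call fail on an HTTP error status instead of printing an error page.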

Conclusion

Congratulations! You've successfully deployed a simple "Hello World" application on OpenShift. This tutorial covered the basic steps, from setting up your project and application to exposing it on the internet. OpenShift offers many more features for managing applications, so feel free to explore its documentation for more advanced topics.

For more details click www.qcsdclabs.com

#redhatcourses#information technology#docker#container#linux#kubernetes#containersecurity#containerorchestration#dockerswarm#aws

0 notes

Text

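Comparing On-Premises Kubernetes Deployment Models

from https://qiita.com/tmurakam99/items/b27d1055f7c881a03ba0

I compared the on-premises deployment models and architectures of various Kubernetes distributions, focusing mostly on the ones I have actually tried deploying. Each distribution may also support cloud deployment, but this article covers only the on-premises side and skips cloud deployment entirely.

This is my own rough classification, but they fall broadly into the following types.

a) Software-on-Linux type

b) Docker type

c) Custom OS type

d) VM type

a) Software-on-Linux type

You prepare nodes with Linux installed and install Kubernetes on top as software. I think this is the most basic form.

Its defining trait is that kubelet runs as a daemon on Linux, and the other components are started as containers by kubelet.

Kubeadm

With Kubeadm, the user must install the Linux OS, a container runtime (Docker, containerd, CRI-O, etc.), and kubelet beforehand. Running kubeadm then deploys the Control Plane and Worker components, which kubelet starts.

With Kubeadm, the user has to install kubeadm on every node and run the deployment on each one, which is laborious when there are many nodes.

Kubespray

Kubespray uses Ansible to deploy to all nodes at once. Because Kubespray uses Kubeadm internally, the resulting layout is basically the same as with Kubeadm (with minor differences, such as etcd not being started by kubelet).

It also deploys the container runtime and kubelet, so all the user needs to do beforehand is install Linux, enable ssh login, and enable sudo.

Lightweight distributions

I think the following lightweight distributions can also be classified under a).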

b) Docker タイプ

Docker に依存するタイプです。実際のところ MKE や RKE は Linux には依存しているので、この分類はちょっと無理があるかもしれません。一応、kubelet が Docker の中で動くか、という観点で分類してみました。

kind (Kubernetes in Docker)

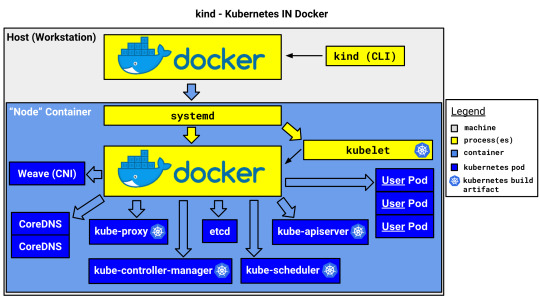

kind はその名の通り Docker コンテナ内で Kubernetes を動作させます。Docker コンテナ1つがノード(VM)1つ、というイメージです。

kind は開発・テスト用で、本番環境で使うものではありません。マルチノードクラスタに対応していますが、これは1つの物理or仮想マシン上で複数のVM(ノードコンテナ)をエミュレートできるという意味で、複数のマシンに跨ってクラスタを構成できるわけではありません。

MKE (Mirantis Kubernetes Engine)

MKE は Docker EE を買収した Mirantis の製品です。インストール手順はこちら

launchpad CLI から全ノードに対して ssh でログインして一斉にデプロイ可能で、Docker EE (MCR) のインストール、k8s のデプロイが実施されます。

RKE (Rancher Kubernetes Engine)

RKE は Rancher 社の製品です。

rke up コマンドで全ノードに対して ssh でログインして一斉にデプロイします。MKE と違い、Docker は事前にインストールしておかなければなりません。

なお、RKE の操作は CLI オンリーですが、Rancher Server を使用するとRKEクラスタ含む各種 Kubernetes クラスタの管理を GUI で行うことができます。

なお、上記は RKE1 の話で、RKE2 からは Docker には依存せず、containerd ベースになるようです。また Control plane は kubelet から static Pod として起動する形になるようです。

本題とずれますが Rancher/RKE は 100% OSS なのが良いです。他の商用製品は評価用はありますが本番で使うならライセンス購入が必要です。

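c) Custom OS type

With a) and b) the user supplies the OS; with this type, the OS that runs Kubernetes is itself part of the distribution.

OCP (OpenShift Container Platform)

OCP is a Red Hat product (there is also the open-source OKD). It uses Red Hat CoreOS as the OS.

For a bare-metal install, you install CoreOS on the physical machines and then install OpenShift on top. The bare-metal installation procedure is here. There are two installation methods.

IPI (Installer Provisioned Infrastructure)

The installer builds the infrastructure automatically

Each node is PXE-booted (network-booted) to install the OS

UPI (User Provisioned Infrastructure)

The user prepares the infrastructure beforehand

The user prepares a CoreOS CD-ROM and boots each node from it

Either way, you have to set up an external provisioning machine, a DHCP server, a DNS server, and so on, so the preparation is laborious.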

d) VM タイプ

Kubernetes を稼働させるために専用の VM をデプロイするタイプです。

Minikube

Minikube は、Kubernetesが入った VM を立てるタイプです。Hyper-V や VirtualBox, VMware が使えます。また Docker も使えるので b) もできます。

なお、マルチノードクラスタは構成できません。シングルのみです。

Charmed Kubernetes

Charmed Kubernetes は Ubuntu で有名な Canonical の製品。

インストール方法は何通りかあるのですが、シングルノードにインストールするときは LXD を使って VM を立ててデプロイするという形になります。デプロイ用のツールは Juju というものを使います。

マルチノードデプロイする方法としては MAAS(Metal as a Service) を使うようです。これはローカルにクラウド環境を構築するようなものなので、VMware vSphere に似ている感じです(試してはいないですが)

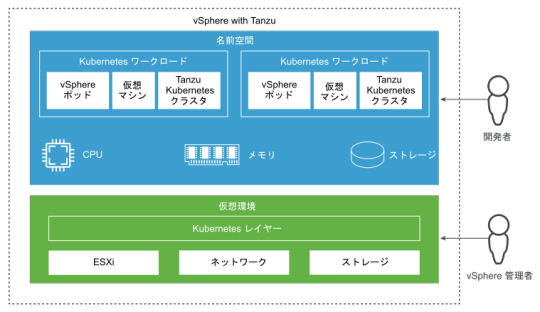

VMware vSphere with Tanzu

VMware vSphere with Tanzu は VMware 社の製品。仮想化基盤の vSphere を用意し、その上に Kubernetes 用の VM を立てるという方式になります。VM 内で動作する OS は Photon OS なので、ある意味 c) にも近いとも言えます。

物理ノードにハイパーバイザの ESXi をインストールし、この上に VM を立てます。クラスタノード全体の管理には vCenter Server を使います。⇒ アーキテクチャ

すでに vSphere を導入しているところに立てる場合は良さげですが、オンプレ Kubernetes クラスタ1個だけのためにこれを使うのはさすがに(運用が)重いかな、という印象。


1 note


Text

Login to openshift cluster in different ways | openshift 4

There are several ways to log in to an OpenShift cluster, depending on your needs and preferences. Here are some of the most common ways to log in to an OpenShift 4 cluster: Using the Web Console: OpenShift provides a web-based console that you can use to manage your cluster and applications. To log in to the console, open your web browser and navigate to the URL for the console. You will be…


#openshift openshift4 containerization openshiftonline openshiftcluster openshiftlogin webconsole commandlinetool Login to openshift#container platform#Introduction to openshift online cluster#openshift#openshift 4#Openshift architecture#openshift cli#openshift connector#openshift container platform#OpenShift development#openshift login#openshift login web console command line tool openshift 4.2#openshift online#openshift paas#openshift tutorial#red hat openshift#redhat openshift online#web application openshift online#what is openshift online

0 notes

Text

Do you have a running OpenShift cluster powering your production microservices, and are you worried about etcd data backup? In this guide we show you how to back up etcd and push the backup data to an AWS S3 object store. etcd is the key-value store for OpenShift Container Platform, which persists the state of all resource objects.

In any OpenShift cluster administration, it is good practice to back up your cluster's etcd data regularly and store it in a secure location. The ideal location is outside the OpenShift Container Platform environment: an NFS server share, a secondary server in your infrastructure, or a cloud environment.

The other recommendation is to take etcd backups during non-peak usage hours, as the action is blocking in nature. Also take an etcd backup after any OpenShift cluster upgrade, because during cluster restoration an etcd backup taken from the same z-stream release must be used. For example, an OpenShift Container Platform 4.6.3 cluster must use an etcd backup that was taken from 4.6.3.

Step 1: Log in to one Master Node in the Cluster

The etcd cluster backup is performed with a single invocation of the backup script on one master host. Do not take a backup on each master host. Log in to one master node through either SSH or a debug session:

    # SSH Access
    $ ssh core@

    # Debug session
    $ oc debug node/

For a debug session you need to change your root directory to the host:

    sh-4.6# chroot /host

If the cluster-wide proxy is enabled, be sure that you have exported the NO_PROXY, HTTP_PROXY, and HTTPS_PROXY environment variables.

Step 2: Perform etcd Backup on OpenShift 4.x

Access to the OpenShift cluster as a user with the cluster-admin role is required to perform this operation. Before you proceed, check whether the proxy is enabled:

    $ oc get proxy cluster -o yaml

If the proxy is enabled, the httpProxy, httpsProxy, and noProxy fields will have values set.
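The proxy check above can also be scripted rather than read off the YAML. The sketch below assumes the httpProxy field sits under `.spec` of the `cluster` Proxy object; the function names are hypothetical helpers, not oc subcommands.

```shell
# Succeeds when the given httpProxy value is non-empty.
proxy_is_set() {
  [ -n "$1" ]
}

# Query the cluster-wide proxy and print a reminder. Defined only; run it
# while logged in as a cluster-admin user.
check_cluster_proxy() {
  http_proxy_value=$(oc get proxy cluster -o jsonpath='{.spec.httpProxy}') || return 1
  if proxy_is_set "$http_proxy_value"; then
    echo "proxy enabled: export HTTP_PROXY, HTTPS_PROXY, and NO_PROXY before the backup"
  else
    echo "no cluster-wide proxy configured"
  fi
}
```

Only httpProxy is inspected here; a cluster that sets just httpsProxy would need the same test on that field.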
Run the cluster-backup.sh script to start the etcd backup process, passing the path where the backup should be saved.

    $ mkdir /home/core/etcd_backups
    $ sudo /usr/local/bin/cluster-backup.sh /home/core/etcd_backups

Here is my command execution output:

    3e53f83f3c02b43dfa8d282265c1b0f9789bcda827c4e13110a9b6f6612d447c
    etcdctl version: 3.3.18
    API version: 3.3
    found latest kube-apiserver-pod: /etc/kubernetes/static-pod-resources/kube-apiserver-pod-115
    found latest kube-controller-manager-pod: /etc/kubernetes/static-pod-resources/kube-controller-manager-pod-24
    found latest kube-scheduler-pod: /etc/kubernetes/static-pod-resources/kube-scheduler-pod-26
    found latest etcd-pod: /etc/kubernetes/static-pod-resources/etcd-pod-11
    Snapshot saved at /home/core/etcd_backups/snapshot_2021-03-16_134036.db
    snapshot db and kube resources are successfully saved to /home/core/etcd_backups

List files in the backup directory:

    $ ls -1 /home/core/etcd_backups/
    snapshot_2021-03-16_134036.db
    static_kuberesources_2021-03-16_134036.tar.gz

    $ du -sh /home/core/etcd_backups/*
    1.5G /home/core/etcd_backups/snapshot_2021-03-16_134036.db
    76K /home/core/etcd_backups/static_kuberesources_2021-03-16_134036.tar.gz

There will be two files in the backup:

snapshot_.db: This file is the etcd snapshot.

static_kuberesources_.tar.gz: This file contains the resources for the static pods. If etcd encryption is enabled, it also contains the encryption keys for the etcd snapshot.

Step 3: Push the Backup to AWS S3 (From Bastion Server)

Log in to the bastion server and copy the backup files.

    scp -r core@serverip:/home/core/etcd_backups ~/

Download the AWS CLI installer:

    curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"

Install unzip tool:

    sudo yum -y install unzip

Extract downloaded file:

    unzip awscliv2.zip

Install AWS CLI:

    $ sudo ./aws/install
    You can now run: /usr/local/bin/aws --version

Confirm installation by checking the version:

    $ aws --version
    aws-cli/2.1.30 Python/3.8.8 Linux/3.10.0-957.el7.x86_64 exe/x86_64.rhel.7 prompt/off

Create OpenShift Backups bucket:

    $ aws s3 mb s3://openshiftbackups
    make_bucket: openshiftbackups

Create an IAM User:

    $ aws iam create-user --user-name backupsonly

Create AWS Policy for Backups user – user able to write to S3 only:

    cat >aws-s3-uploads-policy.json

0 notes