#python web scraping apps


One effective method for obtaining data from websites is web scraping. It's especially useful for data analysis, market research, and automating repetitive tasks. This Python web scraping guide will walk you through the essentials of web scraping using Python, focusing on simplicity and clarity to get you started quickly and effectively.
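The guide itself is not included in this post. As a minimal sketch of the core idea, the example below parses an HTML page with Python's standard-library `html.parser` and collects the text of every `<h2>` heading. The sample HTML, the `HeadingScraper` class, and the choice of `<h2>` as the target element are assumptions for illustration. A real scraper would first fetch the page (for example with `urllib.request.urlopen`) and should respect the site's terms and robots.txt.

```python
from html.parser import HTMLParser


class HeadingScraper(HTMLParser):
    """Collects the text inside every <h2> element of a page (hypothetical helper)."""

    def __init__(self) -> None:
        super().__init__()
        self.headings: list[str] = []
        self._in_h2 = False
        self._buffer: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "h2":
            self._in_h2 = True
            self._buffer = []

    def handle_endtag(self, tag):
        if tag == "h2" and self._in_h2:
            # Join text fragments, including text from nested tags such as <em>.
            self.headings.append("".join(self._buffer).strip())
            self._in_h2 = False

    def handle_data(self, data):
        if self._in_h2:
            self._buffer.append(data)


def scrape_headings(html: str) -> list[str]:
    parser = HeadingScraper()
    parser.feed(html)
    parser.close()
    return parser.headings


# Hypothetical sample page; in practice this HTML would come from an HTTP request.
SAMPLE_HTML = """
<html><body>
  <h2>First headline</h2><p>Body text</p>
  <h2>Second <em>headline</em></h2>
</body></html>
"""

if __name__ == "__main__":
    print(scrape_headings(SAMPLE_HTML))  # ['First headline', 'Second headline']
```

For messier real-world pages, libraries such as BeautifulSoup offer a more forgiving API, but the fetch, parse, extract pattern stays the same.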


NewsData.io News API is a news extraction API that can gather news from up to the past 5 years and covers over 150 countries in 77+ languages. You can filter results by country and language for a particular keyword or topic, which makes it a strong alternative to the Google News feed API. NewsData.io provides a free API that gives access to real-time and historical news data from sources worldwide; its free plan allows up to 200 API credits per day. NewsData.io also provides an API documentation page that helps Python and PHP developers integrate the NewsData.io API into their applications or projects.

How to Get NewsData API

Visit the NewsData.io website and click Log-in.

Then choose a plan from the pricing page. Besides the free plan, the paid plans are cheaper than many other news APIs on the market.

You will get your personalized API key, with which you can access the dashboard.

Then type your keyword and filter the results as needed, for example by country and language.

You can download the data in JSON or Excel format.
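A minimal sketch of querying the API from Python is shown below. It assumes the `https://newsdata.io/api/1/news` endpoint with `apikey`, `q`, `country`, and `language` query parameters, and a JSON response whose articles sit in a `results` list with `title` and `link` fields; check these names against the NewsData.io documentation before relying on them. The helper names are hypothetical, and the example only builds the request URL and parses a hand-written sample response, so it runs without an API key.

```python
import json
from urllib.parse import urlencode

# Assumed endpoint; verify against the NewsData.io documentation.
NEWSDATA_ENDPOINT = "https://newsdata.io/api/1/news"


def build_news_url(api_key: str, keyword: str, country: str = "us", language: str = "en") -> str:
    """Build a query URL filtered by keyword, country, and language (assumed parameter names)."""
    params = {"apikey": api_key, "q": keyword, "country": country, "language": language}
    return f"{NEWSDATA_ENDPOINT}?{urlencode(params)}"


def extract_headlines(response_text: str) -> list[tuple[str, str]]:
    """Return (title, link) pairs from a JSON response body with an assumed 'results' list."""
    payload = json.loads(response_text)
    return [(item.get("title", ""), item.get("link", "")) for item in payload.get("results", [])]


if __name__ == "__main__":
    url = build_news_url("YOUR_API_KEY", "electric cars", country="in", language="en")
    print(url)
    # To actually send the request: urllib.request.urlopen(url).read().decode()

    # Hand-written sample response, shaped like the assumed API output.
    sample = '{"status": "success", "results": [{"title": "EV sales rise", "link": "https://example.com/ev"}]}'
    print(extract_headlines(sample))  # [('EV sales rise', 'https://example.com/ev')]
```

Keeping URL construction and response parsing in separate functions makes each piece easy to test without spending API credits.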

#programming#news api#marketing#api#python#branding#data science#coding#google news api#newsapi#newsdata#web scraping#news app


Your All-in-One AI Web Agent: Save $200+ a Month, Unleash Limitless Possibilities!

Imagine having an AI agent that costs you nothing monthly, runs directly on your computer, and is unrestricted in its capabilities. OpenAI Operator charges up to $200/month for limited API calls and restricts access to many tasks like visiting thousands of websites. With DeepSeek-R1 and Browser-Use, you:

• Save money while keeping everything local and private.

• Automate visiting 100,000+ websites, gathering data, filling forms, and navigating like a human.

• Gain total freedom to explore, scrape, and interact with the web like never before.

You may have heard about OpenAI's Operator, which runs on OpenAI's computers in the cloud and requires you to pass private information to their AI before it can do anything useful. And you pay for the privilege. It is not paranoid to want to keep your passwords, logins, and personal details private. OpenAI also charges a substantial amount of money for a service that limits which sites you can visit, YouTube for example. With this method, you tell an AI exactly what you want it to do in plain language and watch it navigate the web, gather information, and make decisions, all without writing a single line of code.

In this guide, we’ll show you how to build an AI agent that performs tasks like scraping news, analyzing social media mentions, and making predictions using DeepSeek-R1 and Browser-Use, but instead of writing a Python script, you’ll interact with the AI directly using prompts.

These instructions are under constant revision, as DeepSeek-R1 is only days old; Browser-Use has been a standard for quite a while. This method is suitable for people who are new to AI and programming. It may seem technical at first, but by the end of this guide, you’ll feel confident using your AI agent to perform a variety of tasks, all by talking to it. However, if these instructions seem too overwhelming, wait: we will have a single-download app soon. It is in testing now.

This is version 3.0 of these instructions, dated January 26, 2025.

This guide will walk you through setting up DeepSeek-R1 8B (4-bit) and Browser-Use Web UI, ensuring even the most novice users succeed.

What You’ll Achieve

By following this guide, you’ll:

1. Set up DeepSeek-R1, a reasoning AI that works privately on your computer.

2. Configure Browser-Use Web UI, a tool to automate web scraping, form-filling, and real-time interaction.

3. Create an AI agent capable of finding stock news, gathering Reddit mentions, and predicting stock trends—all while operating without cloud restrictions.

A Deep Dive At ReadMultiplex.com Soon

We will have a deep dive into how you can use this platform for very advanced AI use cases that few have thought of, let alone seen before. Join us at ReadMultiplex.com and become a member who not only sees the future earlier but also gains practical and pragmatic ways to profit from it.

System Requirements

Hardware

• RAM: 8 GB minimum (16 GB recommended).

• Processor: Quad-core (Intel i5/AMD Ryzen 5 or higher).

• Storage: 5 GB free space.

• Graphics: GPU optional for faster processing.

Software

• Operating System: macOS, Windows 10+, or Linux.

• Python: Version 3.11 or higher (required by Browser-Use).

• Git: Installed.

Step 1: Get Your Tools Ready

We’ll need Python, Git, and a terminal/command prompt to proceed. Follow these instructions carefully.

Install Python

1. Check Python Installation:

• Open your terminal/command prompt and type:

python3 --version

• If Python is installed, you’ll see a version like:

Python 3.11.9

2. If Python Is Not Installed:

• Download Python from python.org.

• During installation, ensure you check “Add Python to PATH” on Windows.

3. Verify Installation:

python3 --version

Install Git

1. Check Git Installation:

• Run:

git --version

• If installed, you’ll see:

git version 2.34.1

2. If Git Is Not Installed:

• Windows: Download Git from git-scm.com and follow the instructions.

• Mac/Linux: Install via terminal:

sudo apt install git -y # For Ubuntu/Debian

brew install git # For macOS

Step 2: Download and Build llama.cpp

We’ll use llama.cpp to run the DeepSeek-R1 model locally.

1. Open your terminal/command prompt.

2. Navigate to a clear location for your project files:

mkdir ~/AI_Project

cd ~/AI_Project

3. Clone the llama.cpp repository:

git clone https://github.com/ggerganov/llama.cpp.git

cd llama.cpp

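4. Build the project with CMake (current versions of llama.cpp have deprecated the old make build):

• Install CMake and a C++ compiler first:

• Mac: brew install cmake (the Xcode Command Line Tools provide the compiler)

• Linux (Ubuntu/Debian): sudo apt install build-essential cmake -y

• Windows: Install a C++ compiler (e.g., MSVC or MinGW) and CMake.

• Then run, on any platform:

cmake -B build

cmake --build build --config Release

• The compiled programs are placed in build/bin (build\bin\Release on Windows with MSVC).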

Step 3: Download DeepSeek-R1 8B 4-bit Model

1. Visit Hugging Face and find the DeepSeek-R1 8B distilled model. The 8B distill is based on Llama, so it is named DeepSeek-R1-Distill-Llama-8B; 4-bit GGUF conversions of it are published by community repositories.

2. Download the 4-bit quantized model file:

• Example: DeepSeek-R1-Distill-Llama-8B-Q4_K_M.gguf.

3. Move the model to your llama.cpp folder:

mv ~/Downloads/DeepSeek-R1-Distill-Llama-8B-Q4_K_M.gguf ~/AI_Project/llama.cpp

Step 4: Start DeepSeek-R1

1. Navigate to your llama.cpp folder:

cd ~/AI_Project/llama.cpp

2. Run the model with a sample prompt. llama.cpp renamed its main program from main to llama-cli; after a CMake build it is in build/bin (older make builds place it in the repository root):

• Mac/Linux:

./build/bin/llama-cli -m DeepSeek-R1-Distill-Llama-8B-Q4_K_M.gguf -p "What is the capital of France?"

• Windows:

build\bin\Release\llama-cli.exe -m DeepSeek-R1-Distill-Llama-8B-Q4_K_M.gguf -p "What is the capital of France?"

3. Expected Output (R1 models usually print their reasoning between <think> tags before the answer):

The capital of France is Paris.

Step 5: Set Up Browser-Use Web UI

1. Go back to your project folder:

cd ~/AI_Project

2. Clone the Browser-Use repository:

git clone https://github.com/browser-use/browser-use.git

cd browser-use

3. Create a virtual environment:

python3 -m venv env

4. Activate the virtual environment:

• Mac/Linux:

source env/bin/activate

• Windows:

env\Scripts\activate

5. Install dependencies:

pip install -r requirements.txt

6. Start the Web UI:

python examples/gradio_demo.py

7. Open the local URL in your browser:

http://127.0.0.1:7860

Step 6: Configure the Web UI for DeepSeek-R1

1. Go to the Settings panel in the Web UI.

2. Specify the DeepSeek model path:

~/AI_Project/llama.cpp/DeepSeek-R1-Distill-Llama-8B-Q4_K_M.gguf

3. Adjust Timeout Settings:

• Increase the timeout to 120 seconds for larger models.

4. Enable Memory-Saving Mode if your system has less than 16 GB of RAM.

Step 7: Run an Example Task

Let’s create an agent that:

1. Searches for Tesla stock news.

2. Gathers Reddit mentions.

3. Predicts the stock trend.

Example Prompt:

Search for "Tesla stock news" on Google News and summarize the top 3 headlines. Then, check Reddit for the latest mentions of "Tesla stock" and predict whether the stock will rise based on the news and discussions.

--

Congratulations! You’ve built a powerful, private AI agent capable of automating the web and reasoning in real time. Unlike costly, restricted tools like OpenAI Operator, you’ve spent nothing beyond your time. Unleash your AI agent on tasks that were once impossible and imagine the possibilities for personal projects, research, and business. You’re not limited anymore. You own the web—your AI agent just unlocked it! 🚀

Stay tuned for a FREE, simple-to-use single app that will do all this and more.


URL Shortener

Image Caption Generator

Weather Forecast App

Music Player

Sudoku Solver

Web Scraping with BeautifulSoup

Chatbot

Password Manager

Stock Price Analyzer

Automated Email Sender


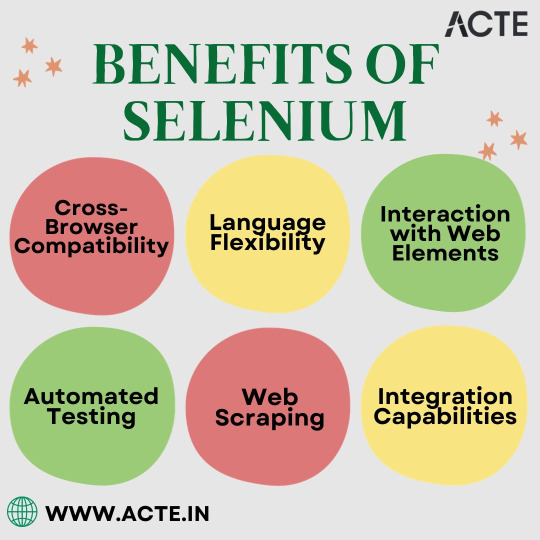

Why Choose Selenium? Exploring the Key Benefits for Testing and Development

In today's digitally driven world, where speed, accuracy, and efficiency are paramount, web automation has emerged as the cornerstone of success for developers, testers, and organizations. At the heart of this automation revolution stands Selenium, an open-source framework that has redefined how we interact with web browsers. In this comprehensive exploration, we embark on a journey to uncover the multitude of advantages that Selenium offers and how it empowers individuals and businesses in the digital age.

The Selenium Advantage: A Closer Look

Selenium, often regarded as the crown jewel of web automation, offers a variety of advantages that play important roles in simplifying complex web tasks and elevating web application development and testing processes. Let's explore the main advantages in more detail:

1. Cross-Browser Compatibility: Bridging Browser Gaps

Selenium's remarkable ability to support various web browsers, including but not limited to Chrome, Firefox, Edge, and more, ensures that web applications function consistently across different platforms. This cross-browser compatibility is invaluable in a world where users access websites from a variety of devices and browsers. Whether it's a responsive e-commerce site or a mission-critical enterprise web app, Selenium bridges the browser gaps seamlessly.

2. Language Flexibility: Your Language of Choice

One of Selenium's standout features is its language flexibility. It doesn't impose restrictions on developers, allowing them to harness the power of Selenium using their preferred programming language. Whether you're proficient in Java, Python, C#, Ruby, or another language, Selenium welcomes your expertise with open arms. This language flexibility fosters inclusivity among developers and testers, ensuring that Selenium adapts to your preferred coding language.

3. Interaction with Web Elements: User-like Precision

Selenium empowers you to interact with web elements with pinpoint precision, mimicking human user actions. It can click buttons, fill in forms, navigate dropdown menus, scroll through pages, and simulate a wide range of user interactions accurately. This level of precision is critical for automating complex web tasks, ensuring that the actions performed by Selenium closely mirror those executed by a human user.

4. Automated Testing: Quality Assurance Simplified

Quality assurance professionals and testers rely heavily on Selenium for automated testing of web applications. By identifying issues, regressions, and functional problems early in the development process, Selenium streamlines the testing phase, reducing both the time and effort required for comprehensive testing. Automated testing with Selenium not only enhances the efficiency of the testing process but also improves the overall quality of web applications.

5. Web Scraping: Unleashing Data Insights

In an era where data reigns supreme, Selenium emerges as an effective tool for web scraping tasks. It enables you to extract data from websites, scrape valuable information, and store it for analysis, reporting, or integration into other applications. This capability is particularly valuable for businesses and organizations seeking to leverage web data for informed decision-making. Whether it's gathering pricing data for competitive analysis or extracting news articles for sentiment analysis, Selenium's web scraping capabilities are invaluable.

6. Integration Capabilities: The Glue in Your Tech Stack

Selenium's integration with a wide range of testing frameworks, continuous integration (CI) tools, and other technologies makes it the glue that binds your tech stack together. This integration facilitates the orchestration of automated tests, ensuring that they fit seamlessly into your development workflow. Selenium's compatibility with popular CI/CD (Continuous Integration/Continuous Deployment) platforms like Jenkins, Travis CI, and CircleCI streamlines the testing and validation processes, making Selenium an indispensable component of the software development lifecycle.

In conclusion, the advantages of using Selenium in web automation are substantial, and they significantly contribute to efficient web development, testing, and data extraction processes. Whether you're a seasoned developer looking to streamline your web applications or a cautious tester aiming to enhance the quality of your products, Selenium stands as a versatile tool that can cater to your diverse needs.

At ACTE Technologies, we understand the key role that Selenium plays in the ever-evolving tech landscape. We offer comprehensive training programs designed to empower individuals and organizations with the knowledge and skills needed to harness the power of Selenium effectively. Our commitment to continuous learning and professional growth ensures that you stay at the forefront of technology trends, equipping you with the tools needed to excel in a rapidly evolving digital world.

So, whether you're looking to upskill, advance your career, or simply stay competitive in the tech industry, ACTE Technologies is your trusted partner on this transformative journey. Join us and unlock a world of possibilities in the dynamic realm of technology. Your success story begins here, where Selenium's advantages meet your aspirations for excellence and innovation.


Scraping Grocery Apps for Nutritional and Ingredient Data

Introduction

With health trends becoming more widespread, consumers are focusing heavily on nutrition and on accurate ingredient and nutritional information. Grocery applications provide detailed information about food products, but collecting and comparing this data manually takes an inordinate amount of time. Scraping grocery applications for nutritional and ingredient data therefore offers an automated, fast way for any stakeholder, be it customers, businesses, or researchers, to obtain that information.

This blog discusses the importance of scraping nutritional data from grocery applications, how it works technically, its major challenges, and best practices for extracting reliable information. Whether for tracking diets, regulatory purposes, or customized shopping, nutritional data scraping is extremely valuable.

Why Scrape Nutritional and Ingredient Data from Grocery Apps?

1. Health and Dietary Awareness

Consumers rely on scraped nutritional and ingredient data to monitor calorie intake, macronutrients, and allergen warnings.

2. Product Comparison and Selection

Web scraping nutritional and ingredient data helps to compare similar products and make informed decisions according to dietary needs.

3. Regulatory & Compliance Requirements

Companies require nutritional and ingredient data extraction to be compliant with food labeling regulations and ensure a fair marketing approach.

4. E-commerce & Grocery Retail Optimization

Web scraping nutritional and ingredient data is used by retailers for better filtering, recommendations, and comparative analysis of similar products.

5. Scientific Research and Analytics

Nutritionists and health professionals use scraped nutritional data for research on diet planning, food safety, and consumer behavior trends.

How Web Scraping Works for Nutritional and Ingredient Data

1. Identifying Target Grocery Apps

Popular grocery apps with extensive product details include:

Instacart

Amazon Fresh

Walmart Grocery

Kroger

Target Grocery

Whole Foods Market

2. Extracting Product and Nutritional Information

Scraping grocery apps involves making HTTP requests to retrieve HTML data containing nutritional facts and ingredient lists.

3. Parsing and Structuring Data

Using Python tools like BeautifulSoup, Scrapy, or Selenium, structured data is extracted and categorized.

4. Storing and Analyzing Data

The cleaned data is stored in JSON, CSV, or databases for easy access and analysis.

5. Displaying Information for End Users

Extracted nutritional and ingredient data can be displayed in dashboards, diet tracking apps, or regulatory compliance tools.
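The extract, parse, and store steps above can be sketched with the standard library alone. The product page markup, the `NutritionTableParser` class, and the product name below are invented for illustration; real grocery apps differ, and many render content with JavaScript or serve it through their own APIs. The parser reads a two-column nutrition table into a dictionary and serializes the record as JSON.

```python
import json
from html.parser import HTMLParser


class NutritionTableParser(HTMLParser):
    """Reads <tr><td>nutrient</td><td>amount</td></tr> rows into a dict (hypothetical markup)."""

    def __init__(self) -> None:
        super().__init__()
        self.facts: dict[str, str] = {}
        self._row: list[str] = []
        self._in_cell = False

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag == "td":
            self._in_cell = True
            self._row.append("")

    def handle_endtag(self, tag):
        if tag == "td":
            self._in_cell = False
        elif tag == "tr" and len(self._row) == 2:
            # Only two-cell rows are treated as "nutrient, amount" pairs.
            name, amount = (cell.strip() for cell in self._row)
            self.facts[name] = amount

    def handle_data(self, data):
        if self._in_cell:
            self._row[-1] += data


# Hypothetical product page fragment (step 2: the HTML an HTTP request would return).
PAGE = """
<table class="nutrition">
  <tr><td>Calories</td><td>150</td></tr>
  <tr><td>Sodium</td><td>140 mg</td></tr>
  <tr><td>Protein</td><td>3 g</td></tr>
</table>
"""

if __name__ == "__main__":
    parser = NutritionTableParser()
    parser.feed(PAGE)  # step 3: parse and structure
    record = {"product": "Example Crackers", "nutrition": parser.facts}
    # Step 4: store. Printed here; in practice write it to a file or database.
    print(json.dumps(record, indent=2))
```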

Essential Data Fields for Nutritional Data Scraping

1. Product Details

Product Name

Brand

Category (e.g., dairy, beverages, snacks)

Packaging Information

2. Nutritional Information

Calories

Macronutrients (Carbs, Proteins, Fats)

Sugar and Sodium Content

Fiber and Vitamins

3. Ingredient Data

Full Ingredient List

Organic/Non-Organic Label

Preservatives and Additives

Allergen Warnings

4. Additional Attributes

Expiry Date

Certifications (Non-GMO, Gluten-Free, Vegan)

Serving Size and Portions

Cooking Instructions
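One way to hold these fields in code is a small record type. The sketch below is a hypothetical schema: the class and field names are illustrative, not taken from any particular app, and it covers only a subset of the fields listed above. Using `Optional` values keeps "not shown on the page" distinct from zero.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Optional


@dataclass
class NutritionFacts:
    calories: Optional[float] = None   # kcal per serving
    carbs_g: Optional[float] = None
    protein_g: Optional[float] = None
    fat_g: Optional[float] = None
    sugar_g: Optional[float] = None
    sodium_mg: Optional[float] = None
    fiber_g: Optional[float] = None


@dataclass
class ProductRecord:
    name: str
    brand: str
    category: str                        # e.g. "dairy", "beverages", "snacks"
    serving_size: str = ""
    nutrition: NutritionFacts = field(default_factory=NutritionFacts)
    ingredients: list[str] = field(default_factory=list)
    allergens: list[str] = field(default_factory=list)
    certifications: list[str] = field(default_factory=list)  # e.g. "Vegan", "Gluten-Free"
    organic: bool = False
    expiry_date: Optional[str] = None    # ISO date string, if the app exposes one

    def to_json(self) -> str:
        # asdict() converts the nested NutritionFacts dataclass into a plain dict too.
        return json.dumps(asdict(self), sort_keys=True)


if __name__ == "__main__":
    item = ProductRecord(
        name="Greek Yogurt",
        brand="ExampleBrand",
        category="dairy",
        serving_size="170 g",
        nutrition=NutritionFacts(calories=100, protein_g=17, sodium_mg=60),
        ingredients=["cultured pasteurized nonfat milk"],
        allergens=["milk"],
    )
    print(item.to_json())
```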

Challenges in Scraping Nutritional and Ingredient Data

1. Anti-Scraping Measures

Many grocery apps implement CAPTCHAs, IP bans, and bot detection mechanisms to prevent automated data extraction.

2. Dynamic Webpage Content

JavaScript-based content loading complicates extraction without using tools like Selenium or Puppeteer.

3. Data Inconsistency and Formatting Issues

Different brands and retailers display nutritional information in varied formats, requiring extensive data normalization.

4. Legal and Ethical Considerations

Ensuring compliance with data privacy regulations and robots.txt policies is essential to avoid legal risks.
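Python's standard library includes `urllib.robotparser` for checking robots.txt rules before fetching a page. The sketch below parses an invented robots.txt body directly so it runs offline; against a real site you would call `set_url(...)` and `read()` instead. The user-agent name, domain, and paths are hypothetical. robots.txt is only one part of compliance: terms of service and data privacy regulations still apply.

```python
from urllib.robotparser import RobotFileParser

# Invented robots.txt content for illustration.
ROBOTS_TXT = """
User-agent: *
Disallow: /checkout/
Disallow: /account/
Crawl-delay: 5
"""


def build_robot_rules(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text that has already been downloaded."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return rules


if __name__ == "__main__":
    rules = build_robot_rules(ROBOTS_TXT)
    agent = "NutritionResearchBot"  # hypothetical user-agent name
    for path in ("/products/greek-yogurt", "/checkout/cart"):
        url = "https://grocery.example.com" + path
        print(path, "allowed" if rules.can_fetch(agent, url) else "disallowed")
    # Seconds to wait between requests, if the site specifies one.
    print("crawl delay:", rules.crawl_delay(agent))
```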

Best Practices for Scraping Grocery Apps for Nutritional Data

1. Use Rotating Proxies and Headers

Changing IP addresses and user-agent strings helps avoid detection and blocking.

2. Implement Headless Browsing for Dynamic Content

Selenium or Puppeteer ensures seamless interaction with JavaScript-rendered nutritional data.

3. Schedule Automated Scraping Jobs

Frequent scraping ensures updated and accurate nutritional information for comparisons.

4. Clean and Standardize Data

Using data cleaning and NLP techniques helps resolve inconsistencies in ingredient naming and formatting.

5. Comply with Ethical Web Scraping Standards

Respecting robots.txt directives and seeking permission where necessary ensures responsible data extraction.
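As an example of the cleaning and standardization step, the sketch below converts nutrient amounts written in different formats ("140mg", "0.14 g", "1,200 mg") into milligrams with a regular expression. The function name and the set of units handled are assumptions for illustration; real data will need more rules (percent daily values, "<1 g", missing values).

```python
import re
from typing import Optional

# Conversion factors to milligrams for the units this sketch recognizes.
TO_MG = {"mg": 1.0, "g": 1000.0, "mcg": 0.001, "ug": 0.001}

# A number (optionally with thousands separators and decimals) followed by a unit.
AMOUNT_RE = re.compile(r"^\s*(\d[\d,]*(?:\.\d+)?)\s*(mg|mcg|ug|g)\s*$", re.IGNORECASE)


def to_milligrams(text: str) -> Optional[float]:
    """Parse strings like '140mg', '0.14 g' or '1,200 mg' into milligrams.

    Returns None when the string does not match a recognized format.
    """
    match = AMOUNT_RE.match(text)
    if not match:
        return None
    number = float(match.group(1).replace(",", ""))
    unit = match.group(2).lower()
    return number * TO_MG[unit]


if __name__ == "__main__":
    for raw in ["140mg", "0.14 g", "1,200 mg", "25 mcg", "trace"]:
        print(raw, "->", to_milligrams(raw))
```

Returning `None` for unrecognized strings, rather than guessing, lets a later review step flag those values instead of silently storing wrong numbers.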

Building a Nutritional Data Extractor Using Web Scraping APIs

1. Choosing the Right Tech Stack

Programming Language: Python or JavaScript

Scraping Libraries: Scrapy, BeautifulSoup, Selenium

Storage Solutions: PostgreSQL, MongoDB, Google Sheets

APIs for Automation: CrawlXpert, Apify, Scrapy Cloud

2. Developing the Web Scraper

A Python-based scraper using Scrapy or Selenium can fetch and structure nutritional and ingredient data effectively.

3. Creating a Dashboard for Data Visualization

A user-friendly web interface built with React.js or Flask can display comparative nutritional data.

4. Implementing API-Based Data Retrieval

Using APIs ensures real-time access to structured and up-to-date ingredient and nutritional data.
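For the storage part of this stack, the sketch below swaps in Python's built-in `sqlite3` module as a lightweight stand-in for PostgreSQL (the SQL is similar but not identical across databases). The table layout, function names, and sample products are hypothetical; the example stores per-product nutrient values and runs the kind of comparison query a dashboard might display.

```python
import sqlite3


def create_store(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) a database with a hypothetical nutrition table."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS nutrition (
               product TEXT NOT NULL,
               brand TEXT NOT NULL,
               category TEXT NOT NULL,
               calories REAL,
               sodium_mg REAL,
               PRIMARY KEY (product, brand)
           )"""
    )
    return conn


def upsert(conn: sqlite3.Connection, product: str, brand: str, category: str,
           calories: float, sodium_mg: float) -> None:
    # INSERT OR REPLACE keeps one row per (product, brand) across repeated scrapes.
    conn.execute(
        "INSERT OR REPLACE INTO nutrition VALUES (?, ?, ?, ?, ?)",
        (product, brand, category, calories, sodium_mg),
    )
    conn.commit()


def lowest_sodium(conn: sqlite3.Connection, category: str, limit: int = 3) -> list[tuple]:
    """Return (product, brand, sodium_mg) rows, lowest sodium first."""
    return conn.execute(
        "SELECT product, brand, sodium_mg FROM nutrition "
        "WHERE category = ? AND sodium_mg IS NOT NULL "
        "ORDER BY sodium_mg ASC LIMIT ?",
        (category, limit),
    ).fetchall()


if __name__ == "__main__":
    conn = create_store()
    upsert(conn, "Salted Crackers", "BrandA", "snacks", 150, 240)
    upsert(conn, "Rice Cakes", "BrandB", "snacks", 70, 50)
    upsert(conn, "Pretzels", "BrandC", "snacks", 110, 450)
    print(lowest_sodium(conn, "snacks", limit=2))
```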

Future of Nutritional Data Scraping with AI and Automation

1. AI-Enhanced Data Normalization

Machine learning models can standardize nutritional data for accurate comparisons and predictions.

2. Blockchain for Data Transparency

Decentralized food data storage could improve trust and traceability in ingredient sourcing.

3. Integration with Wearable Health Devices

Future innovations may allow direct nutritional tracking from grocery apps to smart health monitors.

4. Customized Nutrition Recommendations

With the help of AI, grocery applications will be able to offer personalized meal planning based on nutritional and ingredient data collected from the web.

Conclusion

Automated web scraping of grocery applications for nutritional and ingredient data provides consumers, businesses, and researchers with accurate dietary information. Not just a tool for price-checking, web scraping touches all aspects of modern-day nutritional analytics.

If you are looking for an advanced nutritional data scraping solution, CrawlXpert is your trusted partner. We provide web scraping services that scrape, process, and analyze grocery nutritional data. Work with CrawlXpert today and let web scraping drive your nutritional and ingredient data for better decisions and business insights!

Know More : https://www.crawlxpert.com/blog/scraping-grocery-apps-for-nutritional-and-ingredient-data

#scrapingnutritionaldatafromgrocery#ScrapeNutritionalDatafromGroceryApps#NutritionalDataScraping#NutritionalDataScrapingwithAI


Beyond the Books: Real-World Coding Projects for Aspiring Developers

Arya College of Engineering & I.T. is one of the best colleges in Jaipur. Transitioning from theoretical learning to hands-on coding is a crucial step in a computer science education. Real-world projects bridge this gap, enabling students to apply classroom concepts, build portfolios, and develop industry-ready skills. Here are impactful project ideas across various domains that every computer science student should consider:

Web Development

Personal Portfolio Website: Design and deploy a website to showcase your skills, projects, and resume. This project teaches HTML, CSS, JavaScript, and optionally frameworks like React or Bootstrap, and helps you understand web hosting and deployment.

E-Commerce Platform: Build a basic online store with product listings, shopping carts, and payment integration. This project introduces backend development, database management, and user authentication.

Mobile App Development

Recipe Finder App: Develop a mobile app that lets users search for recipes based on ingredients they have. This project covers UI/UX design, API integration, and mobile programming languages like Java (Android) or Swift (iOS).

Personal Finance Tracker: Create an app to help users manage expenses, budgets, and savings, integrating features like OCR for receipt scanning.

Data Science and Analytics

Social Media Trends Analysis Tool: Analyze data from platforms like Twitter or Instagram to identify trends and visualize user behavior. This project involves data scraping, natural language processing, and data visualization.

Stock Market Prediction Tool: Use historical stock data and machine learning algorithms to predict future trends, applying regression, classification, and data visualization techniques.

Artificial Intelligence and Machine Learning

Face Detection System: Implement a system that recognizes faces in images or video streams using OpenCV and Python. This project explores computer vision and deep learning.

Spam Filtering: Build a model to classify messages as spam or not using natural language processing and machine learning.
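A spam filter makes a good first NLP and machine learning project because a classic baseline, multinomial naive Bayes, fits in a few dozen lines. The sketch below is a from-scratch, standard-library version with add-one (Laplace) smoothing; the class name and the tiny training set are invented for illustration. A real project would use a labeled dataset and a library such as scikit-learn.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesSpamFilter:
    """Multinomial naive Bayes with Laplace (add-one) smoothing."""

    def __init__(self) -> None:
        self.word_counts = {"spam": Counter(), "ham": Counter()}
        self.doc_counts: Counter = Counter()

    def train(self, text: str, label: str) -> None:
        self.doc_counts[label] += 1
        self.word_counts[label].update(tokenize(text))

    def _log_score(self, words: list[str], label: str, vocab_size: int) -> float:
        if self.doc_counts[label] == 0:
            return float("-inf")  # label never seen in training
        total_docs = sum(self.doc_counts.values())
        score = math.log(self.doc_counts[label] / total_docs)  # log prior
        total_words = sum(self.word_counts[label].values())
        for word in words:
            count = self.word_counts[label][word]
            # Add-one smoothing so unseen words do not zero out the probability.
            score += math.log((count + 1) / (total_words + vocab_size))
        return score

    def classify(self, text: str) -> str:
        words = tokenize(text)
        vocab = set(self.word_counts["spam"]) | set(self.word_counts["ham"])
        scores = {label: self._log_score(words, label, len(vocab)) for label in ("spam", "ham")}
        return max(scores, key=scores.get)


if __name__ == "__main__":
    spam_filter = NaiveBayesSpamFilter()
    training = [
        ("Win a free prize now, click here", "spam"),
        ("Limited offer, claim your free cash", "spam"),
        ("Are we still meeting for lunch tomorrow", "ham"),
        ("Please review the attached project report", "ham"),
    ]
    for text, label in training:
        spam_filter.train(text, label)
    print(spam_filter.classify("Claim your free prize"))
    print(spam_filter.classify("Can you review the report before lunch"))
```

Working in log space avoids numeric underflow when multiplying many small word probabilities.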

Cybersecurity

Virtual Private Network (VPN): Develop a simple VPN to understand network protocols and encryption. This project enhances your knowledge of cybersecurity fundamentals and system administration.

Intrusion Detection System (IDS): Create a tool to monitor network traffic and detect suspicious activities, requiring network programming and data analysis skills.

Collaborative and Cloud-Based Applications

Real-Time Collaborative Code Editor: Build a web-based editor where multiple users can code together in real time, using technologies like WebSocket, React, Node.js, and MongoDB. This project demonstrates real-time synchronization and operational transformation.

IoT and Automation

Smart Home Automation System: Design a system to control home devices (lights, thermostats, cameras) remotely, integrating hardware, software, and cloud services.

Attendance System with Facial Recognition: Automate attendance tracking using facial recognition and deploy it with hardware like Raspberry Pi.

Other Noteworthy Projects

Chatbots: Develop conversational agents for customer support or entertainment, leveraging natural language processing and AI.

Weather Forecasting App: Create a user-friendly app displaying real-time weather data and forecasts, using APIs and data visualization.

Game Development: Build a simple 2D or 3D game using Unity or Unreal Engine to combine programming with creativity.

Tips for Maximizing Project Impact

Align With Interests: Choose projects that resonate with your career goals or personal passions for sustained motivation.

Emphasize Teamwork: Collaborate with peers to enhance communication and project management skills.

Focus on Real-World Problems: Address genuine challenges to make your projects more relevant and impressive to employers.

Document and Present: Maintain clear documentation and present your work effectively to demonstrate professionalism and technical depth.

Conclusion

Engaging in real-world projects is the cornerstone of a robust computer science education. These experiences not only reinforce theoretical knowledge but also cultivate practical abilities, creativity, and confidence, preparing students for the demands of the tech industry.


Python App Development by NextGen2AI: Building Intelligent, Scalable Solutions with AI Integration

In a world where digital transformation is accelerating rapidly, businesses need applications that are not only robust and scalable but also intelligent. At NextGen2AI, we harness the power of Python and Artificial Intelligence to create next-generation applications that solve real-world problems, automate processes, and drive innovation.

Why Python for Modern App Development?

Python has emerged as a go-to language for AI, data science, automation, and web development due to its simplicity, flexibility, and an extensive library ecosystem.

Advantages of Python:

Clean, readable syntax for rapid development

Large community and support

Seamless integration with AI/ML frameworks like TensorFlow, PyTorch, Scikit-learn

Ideal for backend development, automation, and data handling

Our Approach: Merging Python Development with AI Intelligence

At NextGen2AI, we specialize in creating custom Python applications infused with AI capabilities tailored to each client's unique requirements. Whether it's building a data-driven dashboard or an automated chatbot, we deliver apps that learn, adapt, and perform.

Key Features of Our Python App Development Services

AI & Machine Learning Integration

We embed predictive models, classification engines, and intelligent decision-making into your applications.

Scalable Architecture

Our solutions are built to grow with your business using frameworks like Flask, Django, and FastAPI.

Data-Driven Applications

We build tools that process, visualize, and analyze large datasets for smarter business decisions.

Automation & Task Management

From scraping web data to automating workflows, we use Python to improve operational efficiency.

Cross-Platform Compatibility

Our Python apps are designed to function seamlessly across web, mobile, and desktop environments.

Use Cases We Specialize In

AI-Powered Analytics Dashboards

Chatbots & NLP Solutions

Image Recognition Systems

Business Process Automation

Custom API Development

IoT and Sensor Data Processing

Tools & Technologies We Use

Python 3.x

Flask, Django, FastAPI

TensorFlow, PyTorch, OpenCV

Pandas, NumPy, Matplotlib

Celery, Redis, PostgreSQL, MongoDB

REST & GraphQL APIs

Why Choose NextGen2AI?

AI-First Development Mindset

End-to-End Project Delivery

Agile Methodology & Transparent Process

Focus on Security, Scalability, and UX

We don’t just build Python apps—we build intelligent solutions that evolve with your business.

Ready to Build Your Intelligent Python Application?

Let NextGen2AI bring your idea to life with custom-built, AI-enhanced Python applications designed for today’s challenges and tomorrow’s scale.

🔗 Explore our services: https://nextgen2ai.com

#PythonDevelopment#nextgen2ai#aiapps#PythonWithAI#MachineLearning#businessautomation#DataScience#AppDevelopment#CustomSoftware


Top Programming Languages to Learn for Freelancing in India

The gig economy in India is blazing a trail, and so is the demand for skilled programmers and developers. Among the biggest advantages of freelancing are flexibility, independence, and earning potential, which lead many techies to choose it as a career option. However, with an endless list of languages to choose from, which ones should you master to thrive as a freelance developer in India?

Deciding on the language is of paramount importance because at the end of the day, it needs to get you clients, lucrative projects that pay well, and the foundation for your complete freelance career. Here is a list of some of the top programming languages to learn for freelancing in India along with their market demand, types of projects, and earning potential.

Why Freelance Programming is a Smart Career Choice

Before getting to the languages, here is a quick look at the benefits of freelance programming in India:

Flexibility: Work from any place, on the hours you choose, and with the workload of your preference.

Diverse Projects: Working across different industries and technologies puts your skills to the test.

Increased Earning Potential: Many people who shift to freelancing find that, as they gain experience, their rates can surpass typical salaries.

Skill Growth: You keep learning new technologies and new ways of solving problems.

Autonomy: You are your own boss and can build your own brand.

Top Programming Languages for Freelancing in India:

Python:

Why it's great for freelancing: Python's versatility is its superpower. It's used for web development (Django, Flask), data science, machine learning, AI, scripting, automation, and even basic game development. This wide range of applications means a vast pool of freelance projects. Clients often seek Python developers for data analysis, building custom scripts, or developing backend APIs.

Freelance Project Examples: Data cleaning scripts, AI model integration, web scraping, custom automation tools, backend for web/mobile apps.

JavaScript (with Frameworks like React, Angular, Node.js):

Why it's great for freelancing: JavaScript is indispensable for web development. As the language of the internet, it allows you to build interactive front-end interfaces (React, Angular, Vue.js) and powerful back-end servers (Node.js). Full-stack JavaScript developers are in exceptionally high demand.

Freelance Project Examples: Interactive websites, single-page applications (SPAs), e-commerce platforms, custom web tools, APIs.

PHP (with Frameworks like Laravel, WordPress):

Why it's great for freelancing: While newer languages emerge, PHP continues to power a significant portion of the web, including WordPress – which dominates the CMS market. Knowledge of PHP, especially with frameworks like Laravel or Symfony, opens up a massive market for website development, customization, and maintenance.

Freelance Project Examples: WordPress theme/plugin development, custom CMS solutions, e-commerce site development, existing website maintenance.

Java:

Why it's great for freelancing: Java is a powerhouse for enterprise-level applications, Android mobile app development, and large-scale backend systems. Many established businesses and startups require Java expertise for robust, scalable solutions.

Freelance Project Examples: Android app development, enterprise software development, backend API development, migration projects.

SQL (Structured Query Language):

Why it's great for freelancing: While not a full-fledged programming language for building applications, SQL is the language of databases, and almost every application relies on one. Freelancers proficient in SQL can offer services in database design, optimization, data extraction, and reporting. It often complements other languages.

Freelance Project Examples: Database design and optimization, custom report generation, data migration, data cleaning for analytics projects.
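As a rough illustration of the "custom report generation" work mentioned above, the sketch below runs a typical SQL reporting query (an aggregate with GROUP BY) from Python's built-in sqlite3 module. The `orders` table, its columns, and the `revenue_by_product` helper are hypothetical and were made up for this example. A client's real schema will differ.

```python
import sqlite3
from typing import List, Tuple


def revenue_by_product(conn: sqlite3.Connection) -> List[Tuple[str, float]]:
    """Return (product, total revenue) pairs, highest revenue first."""
    query = """
        SELECT product, SUM(quantity * unit_price) AS revenue
        FROM orders
        GROUP BY product
        ORDER BY revenue DESC
    """
    return conn.execute(query).fetchall()


if __name__ == "__main__":
    # In-memory database with a few hypothetical orders
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (product TEXT, quantity INTEGER, unit_price REAL)")
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?, ?)",
        [("Notebook", 3, 40.0), ("Pen", 10, 5.0), ("Notebook", 1, 40.0)],
    )
    for product, revenue in revenue_by_product(conn):
        print(f"{product}: {revenue:.2f}")
```

The SELECT statement itself is standard SQL, so a similar query would work on MySQL or PostgreSQL. Only the connection code would change.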

Swift/Kotlin (for Mobile Development):

Why it's great for freelancing: With the explosive growth of smartphone usage, mobile app development remains a goldmine for freelancers. Swift is for iOS (Apple) apps, and Kotlin is primarily for Android. Specializing in one or both can carve out a lucrative niche.

Freelance Project Examples: Custom mobile applications for businesses, utility apps, game development, app maintenance and updates.

How to Choose Your First Freelance Language:

Consider Your Interests: What kind of projects excite you? Web, mobile, data, or something else?

Research Market Demand: Look at popular freelance platforms (Upwork, Fiverr, Freelancer.in) for the types of projects most requested in India.

Start with a Beginner-Friendly Language: Python and JavaScript are excellent starting points thanks to their extensive learning resources and helpful communities.

Focus on a Niche: Instead of trying to learn everything, go extremely deep on one or two languages within a domain (e.g., Python for data science, JavaScript for MERN stack development).

To succeed as a freelance programmer in India, you need to combine technical skills with strong communication, project management, and self-discipline. By mastering one or more of these top programming languages, you will be ready to seize exciting opportunities and establish yourself as an independent professional in the ever-evolving digital domain.

Contact us

Location: Bopal & Iskcon-Ambli in Ahmedabad, Gujarat

Call now on +91 9825618292

Visit Our Website: http://tccicomputercoaching.com/

#Freelance Programming#Freelance India#Programming Languages#Coding for Freelancers#Learn to Code#Python#JavaScript#Java#PHP#SQL#Mobile Development#Freelance Developer#TCCI Computer Coaching


Unlock Your Programming Potential with a Python Course in Bangalore

In today’s digital era, learning to code isn’t just for computer scientists — it's an essential skill that opens doors across industries. Whether you're aiming to become a software developer, data analyst, AI engineer, or web developer, Python is the language to start with. If you're located in or near India’s tech capital, enrolling in a Python course in Bangalore is your gateway to building a future-proof career in tech.

Why Python?

Python is one of the most popular and beginner-friendly programming languages in the world. Known for its clean syntax and versatility, Python is used in:

Web development (using Django, Flask)

Data science & machine learning (NumPy, Pandas, Scikit-learn)

Automation and scripting

Game development

IoT applications

Finance and Fintech modeling

Artificial Intelligence (AI) & Deep Learning

Cybersecurity tools

In short, Python is the “Swiss army knife” of programming — easy to learn, powerful to use.

Why Take a Python Course in Bangalore?

Bangalore — India’s leading IT hub — is home to top tech companies like Google, Microsoft, Infosys, Wipro, Amazon, and hundreds of fast-growing startups. The city has a massive demand for Python developers, especially in roles related to data science, machine learning, backend development, and automation engineering.

By joining a Python course in Bangalore, you get:

Direct exposure to real-world projects

Trainers with corporate experience

Workshops with startup founders and hiring partners

Proximity to the best placement opportunities

Peer learning with passionate tech learners

Whether you're a fresher, student, or working professional looking to upskill, Bangalore offers the best environment to learn Python and get hired.

What’s Included in a Good Python Course?

A high-quality Python course in Bangalore typically covers:

✔ Core Python

Variables, data types, loops, and conditionals

Functions, modules, and file handling

Object-Oriented Programming (OOP)

Error handling and debugging

✔ Advanced Python

Iterators, generators, decorators

Working with APIs and databases

Web scraping (with Beautiful Soup and Selenium)

Multi-threading and regular expressions

✔ Real-World Projects

Build a dynamic website using Flask or Django

Create a weather forecasting app

Automate Excel and file management tasks

Develop a chatbot using Python

Analyze datasets using Pandas and Matplotlib

✔ Domain Specializations

Web Development – Django/Flask-based dynamic sites

Data Science – NumPy, Pandas, Matplotlib, Seaborn, Scikit-learn

Machine Learning – Supervised & unsupervised learning models

Automation – Scripts to streamline manual tasks

App Deployment – Heroku, GitHub, and REST APIs
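To show what a few of the advanced topics above look like in practice, here is a small, self-contained sketch that combines a decorator, a generator, and basic error handling. The function names (`timed`, `read_numbers`, `total`) are illustrative only and are not taken from any particular course.

```python
import functools
import time
from typing import Iterable, Iterator


def timed(func):
    """Decorator: print how long the wrapped function took to run."""
    @functools.wraps(func)  # keep the original function's name and docstring
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        result = func(*args, **kwargs)
        print(f"{func.__name__} took {time.perf_counter() - start:.4f}s")
        return result
    return wrapper


def read_numbers(lines: Iterable[str]) -> Iterator[int]:
    """Generator: lazily yield integers, skipping blank or malformed lines."""
    for line in lines:
        line = line.strip()
        if not line:
            continue
        try:
            yield int(line)
        except ValueError:
            continue  # error handling: ignore lines that are not integers


@timed
def total(lines: Iterable[str]) -> int:
    """Sum every valid integer found in `lines`."""
    return sum(read_numbers(lines))


if __name__ == "__main__":
    print(total(["10", "", "abc", "32"]))
```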

Many training providers also help prepare for Python certifications, such as PCAP (Certified Associate in Python Programming) or Microsoft’s Python certification.

Who Can Join a Python Course?

Python is extremely beginner-friendly. It’s ideal for:

Students (Engineering, BCA, MCA, BSc IT, etc.)

Career switchers from non-tech backgrounds

Working professionals in IT/analytics roles

Startup founders and entrepreneurs

Freelancers and job seekers

There are no prerequisites except basic logical thinking and eagerness to learn.

Career Opportunities after Learning Python

Bangalore has a booming job market for Python developers. Completing a Python course in Bangalore opens opportunities in roles like:

Python Developer

Backend Web Developer

Data Analyst

Data Scientist

AI/ML Engineer

Automation Engineer

Full Stack Developer

DevOps Automation Specialist

According to job portals, Python developers in Bangalore earn ₹5 to ₹15 LPA depending on skillset and experience. Data scientists and ML engineers with Python expertise can earn even higher.

Top Institutes Offering Python Course in Bangalore

You can choose from various reputed institutes offering offline and online Python courses. Some top options include:

Simplilearn – Online + career support

JSpiders / QSpiders – For freshers and job seekers

Intellipaat – Weekend batches with projects

Besant Technologies – Classroom training + placement

Coding Ninjas / UpGrad / Edureka – Project-driven, online options

Ivy Professional School / AnalytixLabs – Python for Data Science specialization

Most of these institutes offer flexible timings, EMI payment options, and placement support.

Why Python is a Must-Have Skill in 2025

Here’s why you can’t ignore Python anymore:

Most taught first language in top universities worldwide

Used by companies like Google, Netflix, NASA, and IBM

Dominates Data Science & AI ecosystems

Huge job demand and salary potential

Enables rapid prototyping and startup MVPs

Whether your goal is to land a job in tech, build a startup, automate tasks, or work with AI models — Python is the key.

Final Thoughts

If you want to break into tech or supercharge your coding journey, there’s no better place than Bangalore — and no better language than Python.

By enrolling in a Python course in Bangalore, you position yourself in the heart of India’s tech innovation, backed by world-class mentorship and career growth.

Ready to transform your future?

Start your Python journey today in Bangalore and code your way to success.


🏡 Real Estate Web Scraping — A Simple Way to Collect Property Info Online

Looking at houses online is fun… but trying to keep track of all the details? Not so much.

If you’ve ever searched for homes or rental properties, you know how tiring it can be to jump from site to site, writing down prices, addresses, and details. Now imagine if there was a way to automatically collect that information in one place. Good news — there is!

It’s called real estate web scraping, and it makes life so much easier.

🤔 What Is Real Estate Web Scraping?

Real estate web scraping is a technique, usually carried out with a software tool, that helps you gather information from property websites such as Zillow, Realtor.com, Redfin, or local listing sites, all without doing it by hand.

Instead of copying and pasting, the tool goes to the website, reads the page, and pulls out things like:

The home’s price

Location and zip code

Square footage and number of rooms

Photos

Description

Contact info for the seller or agent

And it puts all that data in a nice, clean file you can use.
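If you are curious what such a tool does under the hood, here is a minimal sketch that pulls a few of the fields listed above out of listing HTML and writes them to CSV. It assumes a hypothetical page layout (`div.listing` elements containing `span.price`, `span.address`, and so on). Real sites use their own markup, and that markup changes often. The sketch uses Python's built-in `html.parser`, so it runs without extra packages.

```python
import csv
import io
from html.parser import HTMLParser
from typing import Dict, List, Optional

# Hypothetical class names; real listing sites use their own markup.
FIELDS = ["price", "address", "beds", "sqft"]

SAMPLE_HTML = """
<div class="listing">
  <span class="price">$450,000</span>
  <span class="address">12 Oak St, Austin, TX 78701</span>
  <span class="beds">3</span>
  <span class="sqft">1,850</span>
</div>
<div class="listing">
  <span class="price">$1,200/mo</span>
  <span class="address">5 Elm Ave, Denver, CO 80202</span>
</div>
"""


class ListingParser(HTMLParser):
    """Collect one dict per <div class="listing"> block."""

    def __init__(self) -> None:
        super().__init__()
        self.listings: List[Dict[str, str]] = []
        self._field: Optional[str] = None  # field whose text we are currently inside

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "div" and "listing" in classes:
            self.listings.append({})  # start a new property record
        elif self.listings:
            self._field = next((f for f in FIELDS if f in classes), None)

    def handle_endtag(self, tag):
        self._field = None

    def handle_data(self, data):
        if self._field and self.listings:
            record = self.listings[-1]
            record[self._field] = record.get(self._field, "") + data.strip()


def listings_to_csv(html: str) -> str:
    """Parse listings out of `html` and return them as CSV text."""
    parser = ListingParser()
    parser.feed(html)
    parser.close()
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, restval="")  # missing fields -> ""
    writer.writeheader()
    writer.writerows(parser.listings)
    return buffer.getvalue()


if __name__ == "__main__":
    # In practice you might write this to a file opened with newline=""
    print(listings_to_csv(SAMPLE_HTML))
```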

🧑‍💼 Who Is It For?

Real estate web scraping is useful for anyone who wants to collect a lot of property data quickly:

Buyers and investors looking for the best deals

Real estate agents tracking listings in their area

Developers building property websites or apps

People comparing prices in different cities

Marketing teams trying to find leads

It saves time and gives you a better view of what’s happening in the market.

🛠️ How Can You Do It?

If you’re comfortable with code, you can build your own scraper in Python using libraries like Scrapy and Selenium.

But if you’re not into tech stuff, no worries. There are ready-made tools that do everything for you. One of the easiest options is this real estate web scraping solution. It works in the cloud, is beginner-friendly, and gives you the data you need without the stress.

🛑 Is It Legal?

Great question. In most cases, yes, as long as you’re careful, though the rules vary by country and by website.

Scraping publicly available information (like listings on a website) is generally acceptable if you follow a few ground rules:

Don’t overload the website with too many requests

Avoid collecting private info

Follow the website’s rules (terms of service)

Be respectful — don’t spam or misuse the data

Using a trusted tool (like the one linked above) helps keep things safe and easy.
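If you write your own scraper, one simple way to put the "don't overload the website" and "follow the website's rules" points into practice is to honor the site's robots.txt and pause between requests. The sketch below uses Python's standard `urllib.robotparser`. The robots.txt content, the user-agent name `my-research-bot`, and the 2-second fallback delay are all assumptions chosen for illustration.

```python
import time
import urllib.robotparser
from typing import Callable, Iterable, Iterator

# Hypothetical robots.txt for illustration. For a real site you would call
#   rp.set_url("https://example.com/robots.txt") and then rp.read()
ROBOTS_TXT = """
User-agent: *
Disallow: /account/
Crawl-delay: 5
"""


def make_checker(robots_txt: str) -> urllib.robotparser.RobotFileParser:
    """Build a robots.txt checker from the file's text."""
    rp = urllib.robotparser.RobotFileParser()
    rp.parse(robots_txt.splitlines())
    return rp


def polite_urls(
    urls: Iterable[str],
    rp: urllib.robotparser.RobotFileParser,
    user_agent: str = "my-research-bot",
    default_delay: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Iterator[str]:
    """Yield only the URLs robots.txt allows, pausing between them.

    Uses the site's Crawl-delay when present, otherwise `default_delay`.
    The caller performs the actual HTTP request for each yielded URL.
    """
    delay = rp.crawl_delay(user_agent) or default_delay
    first = True
    for url in urls:
        if not rp.can_fetch(user_agent, url):
            continue  # disallowed path: skip it entirely
        if not first:
            sleep(delay)  # space requests out so the server is not flooded
        first = False
        yield url


if __name__ == "__main__":
    checker = make_checker(ROBOTS_TXT)
    pages = ["https://example.com/homes/1", "https://example.com/account/me"]
    for page in polite_urls(pages, checker):
        print("would fetch", page)
```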

💡 Why Use Real Estate Scraping?

Here are some real-life examples:

You’re a property investor comparing house prices in 10 cities — scraping gives you all the prices in one spreadsheet.

You’re a developer building a housing app — scraping provides live listings to show your users.

You’re just curious about trends — scraping lets you track how prices change over time.

It’s all about saving time and seeing the full picture.
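The "track how prices change over time" example comes down to comparing two scraped snapshots. Here is a minimal sketch that assumes each snapshot is a dict mapping a listing ID (hypothetical) to its price.

```python
from typing import Dict


def price_changes(old: Dict[str, float], new: Dict[str, float]) -> Dict[str, float]:
    """Percent change in price for listings present in both snapshots.

    Listings that are new (absent from `old`) or had a zero price are skipped.
    """
    changes = {}
    for listing_id, new_price in new.items():
        old_price = old.get(listing_id)
        if old_price:
            changes[listing_id] = round((new_price - old_price) / old_price * 100, 2)
    return changes


if __name__ == "__main__":
    # Hypothetical weekly snapshots keyed by made-up listing IDs
    last_week = {"austin-12-oak": 450_000, "denver-5-elm": 390_000}
    this_week = {"austin-12-oak": 435_000, "denver-5-elm": 390_000, "boise-9-pine": 310_000}
    print(price_changes(last_week, this_week))
```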

✅ In Short…

Real estate web scraping helps you collect a lot of property data from the internet without doing it all manually. It’s fast, smart, and incredibly helpful—whether you’re buying, building, or just exploring.

And the best part? You don’t need to be a tech expert. This real estate web scraping solution makes it super simple to get started.

Give it a try and see how much easier your real estate research can be.


Why CodingBrushup is the Ultimate Tool for Your Programming Skills Revamp

In today's fast-paced tech landscape, staying current with programming languages and frameworks is more important than ever. Whether you're a beginner looking to break into the world of development or a seasoned coder aiming to sharpen your skills, Coding Brushup is the perfect tool to help you revamp your programming knowledge. With its user-friendly features and comprehensive courses, Coding Brushup offers specialized resources to enhance your proficiency in languages like Java and Python and libraries such as React JS. In this blog, we’ll explore why Coding Brushup is the ultimate platform for improving your coding skills and boosting your career.

1. A Fresh Start with Java: Master the Fundamentals and Advanced Concepts

Java remains one of the most widely used programming languages in the world, especially for building large-scale applications, enterprise systems, and Android apps. However, it can be challenging to master Java’s syntax and complex libraries. This is where Coding Brushup shines.

For newcomers to Java or developers who have been away from the language for a while, CodingBrushup offers structured, in-depth tutorials that cover everything from basic syntax to advanced concepts like multithreading, file I/O, and networking. These interactive lessons help you brush up on core Java principles, making it easier to get back into coding without feeling overwhelmed.

The platform’s practice exercises and coding challenges further help reinforce the concepts you learn. You can start with simple exercises, such as writing a “Hello World” program, and gradually work your way up to more complicated tasks like creating a multi-threaded application. This step-by-step progression ensures that you gain confidence in your abilities as you go along.

Additionally, for those looking to prepare for Java certifications or technical interviews, CodingBrushup’s Java section is designed to simulate real-world interview questions and coding tests, giving you the tools you need to succeed in any professional setting.

2. Python: The Versatile Language for Every Developer

Python is another powerhouse in the programming world, known for its simplicity and versatility. From web development with Django and Flask to data science and machine learning with libraries like NumPy, Pandas, and TensorFlow, Python is a go-to language for a wide range of applications.

CodingBrushup offers an extensive Python course that is perfect for both beginners and experienced developers. Whether you're just starting with Python or need to brush up on more advanced topics, CodingBrushup’s interactive approach makes learning both efficient and fun.

One of the unique features of CodingBrushup is its ability to focus on real-world projects. You'll not only learn Python syntax but also build projects that involve web scraping, data visualization, and API integration. These hands-on projects allow you to apply your skills in real-world scenarios, preparing you for actual job roles such as a Python developer or data scientist.

For those looking to improve their problem-solving skills, CodingBrushup offers daily coding challenges that encourage you to think critically and efficiently, which is especially useful for coding interviews or competitive programming.

3. Level Up Your Front-End Development with React JS

In the world of front-end development, React JS has emerged as one of the most popular JavaScript libraries for building user interfaces. React is widely used by top companies like Facebook, Instagram, and Airbnb, making it an essential skill for modern web developers.

Learning React can sometimes be overwhelming due to its unique concepts such as JSX, state management, and component lifecycles. That’s where Coding Brushup excels, offering a structured React JS course designed to help you understand each concept in a digestible way.

Through CodingBrushup’s React JS tutorials, you'll learn how to:

Set up React applications using Create React App

Work with functional and class components

Manage state and props to pass data between components

Use React hooks like useState, useEffect, and useContext for cleaner code and better state management

Incorporate routing with React Router for multi-page applications

Optimize performance with React memoization techniques

The platform’s interactive coding environment lets you experiment with code directly, making learning React more hands-on. By building real-world projects like to-do apps, weather apps, or e-commerce platforms, you’ll learn not just the syntax but also how to structure complex web applications. This is especially useful for front-end developers looking to add React to their skillset.

4. Coding Brushup: The All-in-One Learning Platform

One of the best things about Coding Brushup is its all-in-one approach to learning. Instead of jumping between multiple platforms or textbooks, you can find everything you need in one place. CodingBrushup offers:

Interactive coding environments: Code directly in your browser with real-time feedback.

Comprehensive lessons: Detailed lessons that guide you from basic to advanced concepts in Java, Python, React JS, and other programming languages.

Project-based learning: Build projects that add to your portfolio, proving that you can apply your knowledge in practical settings.

Customizable difficulty levels: Choose courses and challenges that match your skill level, from beginner to advanced.

Code reviews: Get feedback on your code to improve quality and efficiency.

This structured learning approach allows developers to stay motivated, track progress, and continue to challenge themselves at their own pace. Whether you’re just getting started with programming or need to refresh your skills, Coding Brushup tailors its content to suit your needs.

5. Boost Your Career with Certifications

CodingBrushup isn’t just about learning code—it’s also about helping you land your dream job. After completing courses in Java, Python, or React JS, you can earn certifications that demonstrate your proficiency to potential employers.

Employers are constantly looking for developers who can quickly adapt to new languages and frameworks. By adding Coding Brushup certifications to your resume, you stand out in the competitive job market. Plus, the projects you build and the coding challenges you complete serve as tangible evidence of your skills.

6. Stay Current with Industry Trends

Technology is always evolving, and keeping up with the latest trends can be a challenge. Coding Brushup stays on top of these trends by regularly updating its content to include new libraries, frameworks, and best practices. For example, with the growing popularity of React Native for mobile app development or TensorFlow for machine learning, Coding Brushup ensures that developers have access to the latest resources and tools.

Additionally, Coding Brushup provides tutorials on new programming techniques and best practices, helping you stay at the forefront of the tech industry. Whether you’re learning about microservices, cloud computing, or containerization, CodingBrushup has you covered.

Conclusion

In the world of coding, continuous improvement is key to staying relevant and competitive. Coding Brushup offers the perfect solution for anyone looking to revamp their programming skills. With comprehensive courses on Java, Python, and React JS, interactive lessons, real-world projects, and career-boosting certifications, CodingBrushup is your one-stop shop for mastering the skills needed to succeed in today’s tech-driven world.

Whether you're preparing for a new job, transitioning to a different role, or just looking to challenge yourself, Coding Brushup has the tools you need to succeed.


Python Trending Topics

Want to stay ahead in tech? These Python skills are in high demand:

✅ Automation & scripting

✅ Web scraping & chatbots

✅ AI & Machine Learning

✅ FastAPI & async web apps

Whether you're a beginner or looking to upskill, these are the areas to focus on. Learn them all in our Python Full Stack Development course!

📲 DM us or click the link in bio to join our Python Full Stack Developer Course.

🎯 Start Your Journey Today!

📞 +91 9704944488 🌐 www.pythonfullstackmasters.in 📍 Location: Hyderabad, Telangana

#PythonTrends#PythonLearning#FullStackPython#PythonMasters#AIwithPython#PythonAutomation#MachineLearningWithPython#FastAPI#PythonForBeginners#CodeNewbie#WebDevelopmentIndia#LearnToCode


A Complete Guide to Web Scraping Blinkit for Market Research

Introduction

In the fast-paced e-commerce world, access to accurate and timely data is vital for making the best business decisions. Blinkit, one of the top quick-commerce players in the Indian market, holds vast amounts of data, including product listings, prices, delivery details, and customer reviews. Extracting this data through web scraping can give businesses valuable insight into market trends, competitor activity, and optimization opportunities.

This blog will walk you through the complete process of web scraping Blinkit for market research: tools, techniques, challenges, and best practices. We're going to show how a legitimate service like CrawlXpert can assist you effectively in automating and scaling your Blinkit data extraction.

1. What is Blinkit Data Scraping?

Scraping Blinkit data is the automated process of extracting structured information from the Blinkit website or app. A scraper collects data useful for market research by programmatically crawling through the website's HTML content.

>Key Data Points You Can Extract:

Product Listings: Names, descriptions, categories, and specifications.

Pricing Information: Current prices, original prices, discounts, and price trends.

Delivery Details: Delivery time estimates, service availability, and delivery charges.

Stock Levels: In-stock, out-of-stock, and limited availability indicators.

Customer Reviews: Ratings, review counts, and customer feedback.

Categories and Tags: Labels, brands, and promotional tags.

2. Why Scrape Blinkit Data for Market Research?

Extracting data from Blinkit provides businesses with actionable insights for making smarter, data-driven decisions.

>(a) Competitor Pricing Analysis

Track Price Fluctuations: Monitor how prices change over time to identify trends.

Compare Competitors: Benchmark Blinkit prices against competitors like BigBasket, Swiggy Instamart, Zepto, etc.

Optimize Your Pricing: Use Blinkit’s pricing data to develop dynamic pricing strategies.

>(b) Consumer Behavior and Trends

Product Popularity: Identify which products are frequently bought or promoted.

Seasonal Demand: Analyze trends during festivals or seasonal sales.

Customer Preferences: Use review data to identify consumer sentiment and preferences.

>(c) Inventory and Supply Chain Insights

Monitor Stock Levels: Track frequently out-of-stock items to identify high-demand products.

Predict Supply Shortages: Identify potential inventory issues based on stock trends.

Optimize Procurement: Make data-backed purchasing decisions.
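To sketch the stock-level monitoring idea, the function below computes how often each product was out of stock across repeated scrapes. It assumes you have already reduced each scrape to a dict of product name → in-stock flag. The function name and the data shape are hypothetical.

```python
from collections import Counter
from typing import Dict, List


def stockout_rates(snapshots: List[Dict[str, bool]]) -> Dict[str, float]:
    """Share of snapshots in which each product was out of stock, highest first.

    Each snapshot maps product name -> True (in stock) / False (out of stock).
    Products are only counted in the snapshots where they appear.
    """
    seen: Counter = Counter()
    out_of_stock: Counter = Counter()
    for snapshot in snapshots:
        for product, in_stock in snapshot.items():
            seen[product] += 1
            if not in_stock:
                out_of_stock[product] += 1
    rates = {product: out_of_stock[product] / seen[product] for product in seen}
    return dict(sorted(rates.items(), key=lambda item: item[1], reverse=True))


if __name__ == "__main__":
    scrapes = [
        {"Milk 1L": False, "Bread": True},
        {"Milk 1L": False, "Bread": True},
        {"Milk 1L": True, "Bread": False},
    ]
    print(stockout_rates(scrapes))
```

Products with a consistently high rate are candidates for the "high-demand" items described above.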

>(d) Marketing and Promotional Strategies

Targeted Advertising: Identify top-rated and frequently purchased products for marketing campaigns.

Content Optimization: Use product descriptions and categories to inform your SEO strategy.

Identify Promotional Trends: Extract discount patterns and promotional offers.
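Extracting discount patterns usually starts with comparing the original (MRP) price to the current price. Below is a minimal sketch that assumes both prices have already been parsed into numbers. The 20% threshold and the sample products are arbitrary example values.

```python
from typing import Dict, List, Union

Number = Union[int, float]


def discount_percent(original: Number, current: Number) -> float:
    """Discount of `current` relative to `original` (e.g. MRP), in percent."""
    if original <= 0:
        raise ValueError("original price must be positive")
    return round((original - current) / original * 100, 1)


def deep_discounts(products: List[Dict], threshold: float = 20.0) -> List[str]:
    """Names of products discounted by at least `threshold` percent."""
    return [
        p["name"]
        for p in products
        if discount_percent(p["original"], p["current"]) >= threshold
    ]


if __name__ == "__main__":
    # Hypothetical scraped records
    sample = [
        {"name": "Atta 5kg", "original": 300, "current": 240},
        {"name": "Milk 1L", "original": 68, "current": 66},
    ]
    print(deep_discounts(sample))
```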

3. Tools and Technologies for Scraping Blinkit

To scrape Blinkit effectively, you’ll need the right combination of tools, libraries, and services.

>(a) Python Libraries for Web Scraping

BeautifulSoup: Parses HTML and XML documents to extract data.

Requests: Sends HTTP requests to retrieve web page content.

Selenium: Automates browser interactions for dynamic content rendering.

Scrapy: A Python framework for large-scale web scraping projects.

Pandas: For data cleaning, structuring, and exporting in CSV or JSON formats.

>(b) Proxy Services for Anti-Bot Evasion

Bright Data: Provides residential IPs with CAPTCHA-solving capabilities.

ScraperAPI: Handles proxies, IP rotation, and bypasses CAPTCHAs automatically.

Smartproxy: Residential proxies to reduce the chances of being blocked.

>(c) Browser Automation Tools

Playwright: A modern web automation tool for handling JavaScript-heavy sites.

Puppeteer: A Node.js library for headless Chrome automation.

>(d) Data Storage Options

CSV/JSON: For small-scale data storage.

MongoDB/MySQL: For large-scale structured data storage.

Cloud Storage: AWS S3, Google Cloud, or Azure for scalable storage solutions.

4. Setting Up a Blinkit Scraper

>(a) Install the Required Libraries

First, install the necessary Python libraries:

pip install requests beautifulsoup4 selenium pandas

>(b) Inspect Blinkit’s Website Structure

Open Blinkit in your browser.

Right-click the page → Inspect → open the Elements tab.

Identify product containers, pricing, and delivery details.

>(c) Fetch the Blinkit Page Content

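import requests
from bs4 import BeautifulSoup

url = 'https://www.blinkit.com'
# Browser-like User-Agent; some sites reject the default python-requests one
headers = {'User-Agent': 'Mozilla/5.0'}
response = requests.get(url, headers=headers, timeout=10)
response.raise_for_status()  # stop early on 4xx/5xx responses
soup = BeautifulSoup(response.content, 'html.parser')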

>(d) Extract Product and Pricing Data

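# 'product-card', 'price', and 'availability' are example class names;
# use the ones you found while inspecting the page
products = soup.find_all('div', class_='product-card')
data = []
for product in products:
    try:
        title = product.find('h2').get_text(strip=True)
        price = product.find('span', class_='price').get_text(strip=True)
        availability = product.find('div', class_='availability').get_text(strip=True)
        data.append({'Product': title, 'Price': price, 'Availability': availability})
    except AttributeError:
        # find() returned None: this card lacks one of the expected elements
        continue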

5. Bypassing Blinkit’s Anti-Scraping Mechanisms

Blinkit uses several anti-bot mechanisms, including rate limiting, CAPTCHAs, and IP blocking. Here’s how to bypass them.

>(a) Use Proxies for IP Rotation

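# url is https://, so it needs an 'https' entry as well as 'http'
proxies = {
    'http': 'http://user:pass@proxy-server:port',
    'https': 'http://user:pass@proxy-server:port',
}
response = requests.get(url, headers=headers, proxies=proxies, timeout=10)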
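The snippet above sends every request through a single proxy. To actually rotate IPs, one common approach is to cycle through a pool of proxies, and the sketch below does this with `itertools.cycle`. The proxy addresses are placeholders and the round-robin order is an assumption. Services such as ScraperAPI, listed earlier, handle rotation on their side instead.

```python
import itertools
from typing import Dict, Iterator, List


def proxy_cycle(proxy_urls: List[str]) -> Iterator[Dict[str, str]]:
    """Endlessly yield requests-style proxies dicts, rotating through the pool."""
    for proxy in itertools.cycle(proxy_urls):
        # Route both http:// and https:// requests through the same proxy
        yield {"http": proxy, "https": proxy}


# Hypothetical placeholder endpoints; substitute your provider's addresses.
PROXY_POOL = [
    "http://user:pass@proxy1.example.com:8000",
    "http://user:pass@proxy2.example.com:8000",
]
proxies_iter = proxy_cycle(PROXY_POOL)

# Usage with requests (not executed here):
# response = requests.get(url, headers=headers, proxies=next(proxies_iter), timeout=10)
```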

>(b) User-Agent Rotation

import random

user_agents = [
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64)',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7)',
]
headers = {'User-Agent': random.choice(user_agents)}

>(c) Use Selenium for Dynamic Content

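from bs4 import BeautifulSoup
from selenium import webdriver

options = webdriver.ChromeOptions()
options.add_argument('--headless')
driver = webdriver.Chrome(options=options)
try:
    driver.get(url)
    html = driver.page_source  # HTML after JavaScript has run
finally:
    driver.quit()  # always close the browser, even if get() fails
# named html (not data) so the scraped product list is not overwritten
soup = BeautifulSoup(html, 'html.parser')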

6. Data Cleaning and Storage

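After scraping the data, clean and store it:

import pandas as pd

df = pd.DataFrame(data)  # data is the list of product dicts built above
df = df.drop_duplicates()  # the same product may appear in several sections
df.to_csv('blinkit_data.csv', index=False)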
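Scraped prices arrive as text, for example with a rupee sign and thousands separators, so numeric analysis usually needs a cleaning step first. A minimal sketch of such a helper follows. The name `parse_price` and the sample formats are assumptions, because the exact text depends on Blinkit's markup.

```python
import re
from typing import Optional


def parse_price(text: Optional[str]) -> Optional[float]:
    """Convert a scraped price string such as '₹1,299' or 'MRP ₹ 45.50' to a float.

    Returns None when no number is found (e.g. 'Out of stock').
    """
    if not text:
        return None
    cleaned = text.replace(",", "")  # drop thousands separators
    match = re.search(r"\d+(?:\.\d+)?", cleaned)
    return float(match.group()) if match else None


if __name__ == "__main__":
    for raw in ["₹1,299", "MRP ₹ 45.50", "Out of stock"]:
        print(raw, "->", parse_price(raw))
```

With pandas, the helper can be applied to the scraped column via `df['Price'] = df['Price'].map(parse_price)`.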

7. Why Choose CrawlXpert for Blinkit Data Scraping?

While building your own Blinkit scraper is possible, it comes with challenges like CAPTCHAs, IP blocking, and dynamic content rendering. This is where CrawlXpert can help.

>Key Benefits of CrawlXpert:

Accurate Data Extraction: Reliable and consistent Blinkit data scraping.

Large-Scale Capabilities: Efficient handling of extensive data extraction projects.

Anti-Scraping Evasion: Advanced techniques to bypass CAPTCHAs and anti-bot systems.

Real-Time Data: Access fresh, real-time Blinkit data with high accuracy.

Flexible Delivery: Multiple data formats (CSV, JSON, Excel) and API integration.

Conclusion

Web scraping Blinkit gives businesses valuable information on price trends, product availability, and consumer preferences. With efficient tools and techniques, you can extract Blinkit data and analyze it effectively. However, Blinkit's anti-scraping measures can make do-it-yourself extraction unreliable, so partnering with a trusted provider such as CrawlXpert can help ensure reliable, accurate, and compliant extraction.

CrawlXpert will further benefit you by providing powerful market insight, improved pricing strategies, and even better business decisions using higher quality Blinkit data.

Know more: https://www.crawlxpert.com/blog/web-scraping-blinkit-for-market-research


How to Leverage Python Skills to Launch a Successful Freelance Career

The demand for Python developers continues to grow in 2025, opening exciting opportunities—not just in full-time employment, but in freelancing as well. Thanks to Python’s versatility, freelancers can offer services across multiple industries, from web development and data analysis to automation and AI.

Whether you're looking to supplement your income or transition into full-time freelancing, here's how you can use Python to build a thriving freelance career.

Master the Core Concepts

Before stepping into the freelance market, it's essential to build a solid foundation in Python. Make sure you're comfortable with:

Data types and structures (lists, dictionaries, sets)

Control flow (loops, conditionals)

Functions and modules

Object-oriented programming

File handling and error management

Once you’ve nailed the basics, move on to specialized areas based on your target niche.

Choose a Niche That Suits You

Python is used in many domains, but as a freelancer, it helps to specialize. Some profitable freelance niches include:

Web Development: Use frameworks like Django or Flask to build custom websites and web apps.

Data Analysis: Help clients make data-driven decisions using tools like Pandas and Matplotlib.

Automation Scripts: Streamline repetitive client tasks by developing efficient Python automation tools.

Web Scraping: Use tools such as BeautifulSoup or Scrapy to extract data from websites quickly and effectively.

Machine Learning: Offer insights, models, or prototypes using Scikit-learn or TensorFlow.

Choosing a niche allows you to brand yourself as an expert rather than a generalist, which can attract higher-paying clients.

Build a Portfolio

A portfolio is your online resume and a powerful trust builder. Create a personal website or use GitHub to showcase projects that demonstrate your expertise. Some project ideas include:

A simple blog built with Flask

A script that scrapes data and exports it to Excel

A dashboard that visualizes data from a CSV file

An automated email responder

The key is to show clients that you can solve real-world problems using Python.
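For the "automated email responder" idea, the core piece is building a well-formed reply. Below is a minimal sketch using the standard library's `email.message.EmailMessage`. The function name and reply text are hypothetical. Reading the inbox and actually sending the message (for example with `smtplib`) are left out.

```python
from email.message import EmailMessage


def build_auto_reply(incoming: EmailMessage, sender: str, body: str) -> EmailMessage:
    """Create a reply to `incoming` that threads correctly in mail clients."""
    reply = EmailMessage()
    reply["From"] = sender
    # Prefer the Reply-To address when the sender provided one
    reply["To"] = incoming.get("Reply-To", incoming["From"])
    subject = incoming.get("Subject", "")
    reply["Subject"] = subject if subject.lower().startswith("re:") else f"Re: {subject}"
    if incoming["Message-ID"]:
        # These headers let mail clients group the reply with the original
        reply["In-Reply-To"] = incoming["Message-ID"]
        reply["References"] = incoming["Message-ID"]
    reply.set_content(body)
    return reply


if __name__ == "__main__":
    msg = EmailMessage()
    msg["From"] = "client@example.com"
    msg["Subject"] = "Project quote"
    msg["Message-ID"] = "<abc123@example.com>"
    print(build_auto_reply(msg, "me@example.com", "Thanks! I'll reply within 24 hours."))
```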

Create Profiles on Freelance Platforms

Once your portfolio is ready, the next step is to start reaching out to potential clients. Create profiles on platforms like:

Upwork

Freelancer

Fiverr

Toptal

PeoplePerHour

When setting up your profile, write a compelling bio, list your skills, and upload samples from your portfolio. Use keywords clients might search for, like "Python automation," "Django developer," or "data analyst."

Start Small and Build Your Reputation

Landing your first few clients as a new freelancer can take some patience and persistence. Consider offering competitive rates or working on smaller projects initially to gain reviews and build credibility. Positive feedback and completed jobs on your profile will help you attract better clients over time. Deliver quality work, communicate clearly, and meet deadlines—these soft skills matter as much as your technical expertise.

Upskill with Online Resources

The tech landscape changes fast, and staying updated is crucial. Set aside time to explore new tools, frameworks, and libraries so that your skill set keeps growing. Many freelancers also benefit from structured courses that help them level up efficiently. If you're serious about freelancing as a Python developer, enrolling in a comprehensive Python training course in Pune can help solidify your knowledge. A trusted Python training institute in Pune will offer hands-on projects, expert mentorship, and practical experience that align with the demands of the freelance market.

Market Yourself Actively

Don’t rely solely on freelance platforms. Expand your reach by:

Sharing coding tips or projects on LinkedIn and Twitter

Writing blog posts about your Python solutions

Networking in communities like Reddit, Stack Overflow, or Discord

Attending local freelancing or tech meetups to network with like-minded professionals

The more visible you are, the more likely clients are to find you organically.

Set Your Rates Wisely

Pricing is a common challenge for freelancers. Begin by exploring the rates others in your field are offering to get a sense of standard pricing. Factor in your skill level, project complexity, and market demand. You can charge hourly, per project, or even offer retainer packages for ongoing work. As your skills and client list grow, don’t hesitate to increase your rates.
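One common way to ground the pricing advice above is to work backward from your income goal. The sketch below assumes you know your target annual income, your yearly business expenses, and your realistic billable hours per week. The default of 46 working weeks (allowing for holidays and gaps between projects) is an assumption, not a standard.

```python
def minimum_hourly_rate(
    target_annual_income: float,
    annual_expenses: float,
    billable_hours_per_week: float,
    working_weeks: int = 46,
) -> float:
    """Hourly rate at which billable hours cover the income goal plus business costs."""
    billable_hours = billable_hours_per_week * working_weeks
    if billable_hours <= 0:
        raise ValueError("billable hours must be positive")
    return (target_annual_income + annual_expenses) / billable_hours


if __name__ == "__main__":
    # Example: ₹12,00,000 income goal, ₹1,80,000 expenses, 25 billable hours/week
    print(minimum_hourly_rate(1_200_000, 180_000, 25))
```

In the example, 25 hours × 46 weeks gives 1,150 billable hours, and ₹13,80,000 ÷ 1,150 works out to ₹1,200 per hour. This is a floor to compare against market rates, not a final price.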

Stay Organized and Professional

Treat freelancing like a business. Use productivity tools to streamline time tracking, invoicing, and client communication; apps like Trello, Notion, and Toggl can help you stay organized. Create professional invoices, use contracts, and maintain clear communication with clients to build long-term relationships.

Building a freelance career with Python is not only possible—it’s a smart move in today’s tech-driven world. With the right skills, mindset, and marketing strategy, you can carve out a successful career that offers flexibility, autonomy, and unlimited growth potential.

Start by mastering the language, building your portfolio, and gaining real-world experience. Whether you learn through self-study or a structured path like a Python training institute in Pune, your efforts today can lead to a rewarding freelance future.


Why Python Could Be Your Best Career Move

Technology is transforming every part of our lives. Whether it’s how we work, shop, travel, or communicate, coding is the invisible engine running it all. Among all programming languages, Python stands out for being beginner-friendly yet incredibly powerful. For those living in Cochin and looking to future-proof their careers, a Python training course could be the ideal starting point.

The Rise of Python

Python isn’t just another programming language—it’s a global tech phenomenon. With its clear syntax and vast ecosystem of libraries, Python has become the go-to language for developers, data scientists, and engineers worldwide.

Some of the most in-demand industries are powered by Python:

Data science

Artificial intelligence

Web development

Automation

Fintech and blockchain

Cybersecurity

Python is also used for testing software, scraping data, analyzing trends, and much more.
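To make "scraping data" a little more concrete, here is a minimal sketch that uses only Python's standard-library `html.parser` module to pull every link out of a page. The `LinkCollector` class and `extract_links` helper are hypothetical names chosen for illustration, not part of any course material. The sketch parses an HTML string already in memory; fetching a live page (for example with `urllib.request`) is deliberately left out.

```python
from __future__ import annotations

from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Hypothetical helper: collects the href of every <a> tag it sees."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[str] = []

    def handle_starttag(self, tag: str, attrs: list[tuple[str, str | None]]) -> None:
        # HTMLParser lowercases tag and attribute names before calling this.
        if tag == "a":
            for name, value in attrs:
                # Skip bare attributes like <a href>, whose value is None.
                if name == "href" and value:
                    self.links.append(value)


def extract_links(html: str) -> list[str]:
    """Hypothetical wrapper: return all link targets found in an HTML string."""
    parser = LinkCollector()
    parser.feed(html)
    parser.close()
    return parser.links


if __name__ == "__main__":
    sample = '<p><a href="/courses">Courses</a> <a href="/contact">Contact</a></p>'
    print(extract_links(sample))  # ['/courses', '/contact']
```

Real scraping projects often reach for third-party parsers instead, but the standard library is enough to show the core idea: feed HTML in, react to the tags you care about, and collect the data.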

What Makes Cochin a Great Place to Learn Python?

Cochin has steadily grown into a tech-forward city with the presence of IT parks, startups, and tech-enabled industries. Many educational institutions now partner with companies for internships, workshops, and live projects.

Learning Python in Cochin gives you direct access to:

Experienced mentors

Job-ready curriculum

Internships and placement support

Opportunities to work on local and global tech projects

Skills You’ll Gain in a Python Course

An effective Python course goes beyond theory. It focuses on practical learning and problem-solving. Here’s what you’ll learn:

Fundamentals: Data types, conditionals, loops, and functions

Object-Oriented Programming: Building reusable and scalable code

Web Development: Building web apps with Django

Data Handling: Managing and analyzing data with pandas and NumPy

API Integration: Connect different services and build full-stack apps

Capstone Projects: Implement everything you’ve learned in a final project
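As a rough illustration of the first two modules, here is a minimal sketch combining the fundamentals (a function with a loop and a conditional) with a small reusable class. The names `count_passing` and `Student` are hypothetical, chosen only for this example, and do not come from any particular syllabus.

```python
from dataclasses import dataclass


# Fundamentals: a function that combines a loop and a conditional.
# (Hypothetical example function, not from a course syllabus.)
def count_passing(scores: list[int], threshold: int = 50) -> int:
    """Return how many scores are at or above the threshold."""
    passing = 0
    for score in scores:
        if score >= threshold:
            passing += 1
    return passing


# Object-oriented programming: a small, reusable class.
# (Hypothetical example class.)
@dataclass
class Student:
    name: str
    scores: list[int]

    def average(self) -> float:
        """Mean score, or 0.0 when there are no scores yet."""
        if not self.scores:
            return 0.0
        return sum(self.scores) / len(self.scores)


if __name__ == "__main__":
    student = Student("Asha", [72, 45, 88])
    print(count_passing(student.scores))  # 2
    print(round(student.average(), 2))   # 68.33
```

Using a `dataclass` keeps the class short: Python generates the constructor and a readable representation, so the example can focus on the behavior in `average`.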

Who Should Enroll?

Students preparing for job placements

Working professionals aiming to switch to tech

Start-up founders who want to understand development

Freelancers interested in automation or data

No matter your background, Python can be your entry point into tech.

Real-World Benefits of Python Training

Python isn’t just about writing code. It’s about solving problems, automating tasks, and creating real-world applications. After training, students often find themselves working in roles such as:

Full-Stack Developer

Data Analyst

Python Programmer

AI/ML Intern

QA Tester using Python scripts

Some even go on to become freelance developers or build their own applications.

Why Choose Zoople Technologies?

At Zoople Technologies, we believe that learning should be practical, engaging, and future-oriented. Our Python training in Cochin is built to help you succeed in today’s tech-driven job market.

Course highlights:

Trainer-led sessions with industry experts

Real-time projects and assignments

Updated course material

Interview and placement support

Flexible schedules for students and professionals

Zoople Technologies is the best software training institute in Kochi, offering more than 12 in-demand IT courses designed for real-world success.

Your Python journey starts here. Learn Python in Cochin with Zoople. Let’s build your future, one line of code at a time.
