#so now i have to retrain my algorithm

Text

great first track on my discover weekly

#i dont usually use spotify but i was listening to some stuff someone rec'd to me the other day#so now i have to retrain my algorithm#SoundCloud

0 notes

Text

with the caveat that absolutely creators should be paid for their work, but also I'm pretty sure they get shit-all for every viewing of their video on youtube

I'm really annoyed because it knew which music I liked, and I can switch to spotify, but now I have to retrain a whole new algorithm, plus, there's a ton of music spotify doesn't have but youtube does because it's, like, a video of a performance or an unofficial upload

but if youtube thinks interrupting my long classical music video with "hey! pay money for youtube premium so that you won't have this ad!" is gonna make me give them money....they're wrong. Interrupting my video is 100% how they get me to not give them money.

meanwhile, itunes is a freaking shitshow that constantly needs to be restarted and so I hate everything.

3 notes

Text

I have somehow gotten off anime/weird TikTok and am now on girlytok. I don’t hate it 🤭 (so many pretty women) but uh where did my hyperfixations go 🙃

Currently trying to retrain my algorithm but she’s feisty and knows I’m a lesbo

0 notes

Text

I can’t really sincerely answer this based on the options provided. For the purposes of what you probably mean and how most people will interpret this, I’m definitely anti-AI. But that’s purely because of all the horrible and unethical consequences of THE WAY IT’S BEING HANDLED. Not the AI itself. But that doesn’t mean it “depends on the situation” because there is currently no situation in existence (that I’m aware of) where it’s being handled properly.

I think we all know the gist by now, but here’s what I’m specifically against:

• Training it on art/writing/etc without consent of the original creators. I can’t believe there wasn’t already some law that would have prevented that from happening.

• The widespread myth that it’s even “intelligent” in any way. It’s pattern recognition. That’s all. It frequently gets basic facts wrong because it’s not programmed to understand what it’s spitting out. It’s going to cause all kinds of problems for people who don’t understand the way it actually works and assume it always knows what it’s talking about.

• The prevalence of AI use among companies that are trying to replace workers to save a few dollars without caring about the consequences, both in quality of product and in jobs destroyed. If you want a concrete example of how this affects product quality, well, there are thousands, but look no further than auto-generated captions. I’m sure we’ve all seen that atrocity.

(If it was actually the case that AI could do things better/faster/cheaper than a human, I’d argue that we should consider doing it and then providing support for the people who lose their jobs to it so they can transition to different jobs, the same way that we should be retraining coal workers to build windmills and solar panels. But that’s not the case at all. AI output has limited usefulness, but for the most part it is vastly inferior to the exact same task done by a human with a brain.)

I don’t want books or movies written by a pattern-recognition algorithm with zero regard for the meaning in what it’s creating. Even if the results were really good (and we are definitely NOT there) I’d argue that it still doesn’t hit the same if it’s not written by a person. Entertainment is not defined by consumption. That’s incidental. It’s defined by intent (and not the intent of someone giving an AI a prompt - although I’d say there is SOME intent there, it’s not the same thing).

What I DO support is the concept of using AI as a tool. As in, using it to do very tedious tasks like reformatting text in ways that might be hard to accomplish with find-and-replace, or integrating it into illustration or animation software and giving it prompts like, idk, “change this person’s smile to a frown,” thereby saving hours of noncreative work on the part of the artist.

Like, as someone who does video edits for fun, I would LOVE to be able to feed an AI an animated video clip and tell it to, say, change the way a character’s lips move so they’re saying different words. The ideas about exactly what to do to the image/video would be mine as the artist, but if I could easily have the longest and most tedious tasks done for me and then move on to my next creative choice? That would be so liberating!

Unfortunately I have yet to find a way to replace anything in my typical creative process with AI in its current capability, but even more unfortunately, I could not in good conscience use it even if it progressed that far. Because the way the whole system is set up is unethical, exploitative, and backward.

To me it’s an enormous missed opportunity. I wish we were all more informed about what it is and what it ISN’T and how it should actually be used, and I wish we had the power to regulate it toward that end, instead of it being positioned as a threat to authors and artists and actors and actually probably everyone in the world for the sake of keeping a few more dollars in the hands of the people who need those dollars the least.

9 notes

Text

I know that most of my customers are patient and understanding, but I do want to remind everyone that it is almost impossible for me to complete custom* orders on time when I am usually not even making one sale a week. I have to make money elsewhere which takes away my time and energy. I still also need time and space to do exposure therapy currently, time to actually enjoy with my partner, work on new things so I can hopefully MAKE a sale lmfao.

This is not how I envisioned my store progressing, but I just can’t seem to keep up with the economy, the lack of interest, algorithms, trends, etc. It’s a lot for me to try and juggle while still trying to retrain my brain and body to be well and adjust back into society. Hopefully next year I will be participating in more markets to try and combat all of that, because it seems people just aren’t online as much. I know I have a great consistent group of customers and friends here, but most of us are in financial stress too.

Hopefully this will all even out soon (even DoorDash is not as profitable right now) but I just feel badly that I can’t seem to be a “normal” business. I have a lot of stock right now to try and combat Etsy’s priorities, lots of items on free shipping, sales, and none of it is really producing any change. I’ve been told to move platforms, buy a domain (I can’t afford the package with my pre-built website without sales) but all of those things are still found by interacting with my socials. I can see that I’m driving all my traffic to Etsy and it’s still not doing anything, so I don’t see how that would change anything on another platform.

I’m not looking for advice, I just think that a lot of us can’t buy things right now, especially when the majority of mine are $150+ and that’s okay. But it makes the experience worse for my actual customers, and I feel badly about that. It will pass, but I’m tired of it too. In 2020 I was doing 2-3k a month and now I’m lucky if I can manage $500. It’s just a different market now and I’m trying to expand as much as possible without a lot of money to do that.

Idk where I’m really going with this, but it’s just taking a really long time to recover from me being sick at the same time as inflation and job market shenanigans, etc. Lots of artists I know in Pittsburgh are struggling too this year. Hopefully it’ll start to even out next year.

12 notes

Note

tiktok anon - something really fascinating i found about the platform (i use it on a daily with no issues) is that if you're in the eu, or in europe in general, it's much easier to curate your algorithm to only show you what you wanna see and enjoy watching which is what ive done and why i have no issues

i just close the app whenever i see something i dont like and wait 15-20mins and then open it again

i did the same thing with instagram's explore page and youtube and it works, i now only get content on every platform that i really enjoy

but, i did visit egypt this summer and my feeds were totally off while i was there because egypt isn't under eu laws and regulations and it was impossible to curate it the same way. i also got ads after every two videos on tiktok which never happened before, in fact, i just dont get outright ads in europe when im scrolling my fyp

idk if you're in the us, but for any europeans i really recommend this strategy of closing the app when you get unwanted content and waiting a while before opening it up again. it even works on like a daily basis

i was on dubloon tiktok for a couple of days because it was fun, but i got bored and started closing the app whenever that type of video started showing up and the algorithm immediately understood that it shouldn't be showing me that content anymore and rn im back to my usual fyp filled with cute animal videos. when i get bored and see something interesting i watch it and like it, and get more of that kind until im bored and gotta retrain the algorithm for about an hour lmao

that is so interesting??? i do live in the us unfortunately—i definitely have somewhat of a curated algorithm on there, but i also think here they just try to feed us as much as we can take lol. i’m curious, how much time do you spend on it?

4 notes

Text

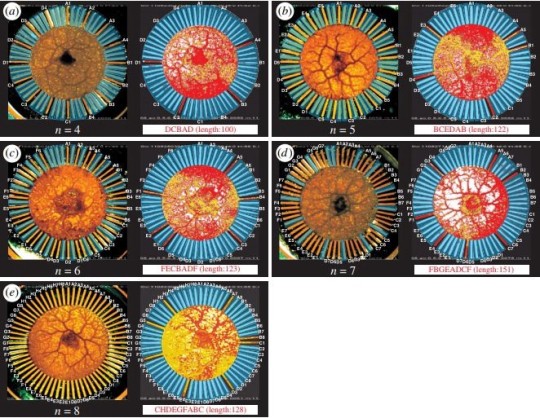

A.I.nktober: A neural net creates drawing prompts

There’s a game called Inktober where people post one drawing for every day in October. To help inspire people, the people behind Inktober post an official list of daily prompts, a word or phrase like Thunder, Fierce, Tired, or Friend. There’s no requirement to use the official lists, though, so people make their own. The other day, blog reader Kail Antonio posed the following question to me:

What would a neural network’s Inktober prompts be like?

Training a neural net on Inktober prompts is tricky, since there’s only been 4 years’ worth of prompts so far. A text-generating neural net’s job is to predict what comes next in its training data (for GPT-2, the next token, which is a word or a piece of a word), and if it can memorize its entire training dataset, that’s technically a perfect solution to the problem. Sure enough, when I trained the neural net GPT-2 345-M on the existing examples, it memorized them in just a few seconds. In fact, it was rather like melting an M&M with a flamethrower.

My strategy for getting around this was to increase the sampling temperature, which means that I forced the neural net to go not with its best prediction (which would just be something plagiarized from the existing list), but something it thought was a bit less likely.
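To make the temperature idea concrete: a language model gives every possible next token a score (a logit), and temperature sampling divides those scores by the temperature T before turning them into probabilities with softmax. With T above 1 the distribution flattens, so less likely choices get picked more often. This is a minimal Python sketch, not GPT-2's actual sampling code; the four whole-word "tokens" and their scores are made up, whereas GPT-2's real vocabulary has about 50,000 sub-word tokens.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, flattened (T > 1) or sharpened (T < 1)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    top = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(x - top) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next(tokens, logits, temperature, rng=random):
    """Draw one token according to the temperature-adjusted probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


# Hypothetical scores for the next word of a prompt list.
tokens = ["Thunder", "Fierce", "Tired", "squeakchugger"]
logits = [4.0, 2.0, 1.0, -1.0]

for t in (0.5, 1.0, 1.4):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])

print(sample_next(tokens, logits, 1.4))
```

As T rises, the top word's share of the probability drops and the odd word at the bottom gains share. That is the lever the post pulls to steer away from copying the training list, at the cost of more garbled output.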

Temperature 1.0

At a temperature setting of 1.0 (already relatively high), the algorithm tends to cycle through the same few copied words from the dataset, or else it fills the screen with dots, or with repeated words like “dig”. Occasionally it generates what look like tables of D&D stats, or a political article with lots of extra line breaks. Once it generated a sequence of other prompts, as if it had somehow made the connection to the overall concept of prompts.

The theme is: horror. Please submit a Horror graphic This can either be either a hit or a miss monster. Please spread horror where it counts. Let the horror begin... Please write a well described monster. Please submit a monster with unique or special qualities. Please submit a tall or thin punctuated or soft monster. Please stay the same height or look like a tall or thin Flying monster. Please submit a lynx she runs

This is strange behavior, but training a huge neural net on a tiny dataset does weird stuff to its performance apparently.

Where did these new words come from? GPT-2 is pretrained on a huge amount of text from the internet, so it’s drawing on words and letter combinations that are still somewhere in its neural connections, and which seem to match the Inktober prompts.

In this manner I eventually collected a list of newly-generated prompts, but it took a LONG time to sample these because I kept having to check which were copies and which were the neural net’s additions.
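The post describes checking by hand which sampled lines were copies. A hypothetical first-pass helper like the one below could separate verbatim copies from new lines. It is a Python sketch that only catches exact matches after lowercasing and collapsing whitespace, so near-copies (say, "Thundering") would still need a human eye. The sample lines are illustrative.

```python
def normalize(prompt):
    """Lowercase and collapse whitespace so ' thunder ' matches 'Thunder'."""
    return " ".join(prompt.lower().split())


def split_new_and_copied(generated, training_prompts):
    """Separate generated lines into novel prompts and verbatim training copies."""
    seen = {normalize(p) for p in training_prompts}
    novel, copied = [], []
    for line in generated:
        key = normalize(line)
        if not key:
            continue  # skip blank lines the sampler produced
        (copied if key in seen else novel).append(line.strip())
    return novel, copied


training = ["Thunder", "Fierce", "Tired", "Friend"]
samples = ["Fierce", "lynx she runs", "", "  thunder ", "Light dwelling"]
novel, copied = split_new_and_copied(samples, training)
print("new:", novel)
print("copied:", copied)
```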

Temperature 1.2

So, I tried an even higher sampling temperature, to try to nudge the neural net farther away from copying its training data. One unintended effect of this was that the phrases it generated started becoming longer, as the high temperature setting made it veer away from the frequent line breaks it had seen in the training data.

Temperature 1.4

At an even higher sampling temperature the neural net would tend to skip the line breaks altogether, churning out run-on chains of words rather than a list of prompts:

easily lowered very faint smeared pots anatomically modern proposed braided robe dust fleeting caveless few flee furious blasts competing angrily throws unauthorized age forming Light dwelling adventurous stubborn monster

It helped when I prompted it with the beginning of a list:

Computer Weirdness Thing

but still, I had to search through long stretches of AI garble for lines that weren’t ridiculously long.

So, now I know what you get when you give a ridiculously powerful neural net a ridiculously small training dataset. This is why I often rely on prompting a general purpose neural net rather than attempting to retrain one when I’ve got a dataset size of less than a few thousand items - it’s tough to thread that line between memorization and glitchy irrelevance.

One of these days I’m hoping for a neural net that can participate in Inktober itself. AttnGAN doesn’t quiiite have the vocabulary range.

Subscribers get bonus content: An extra list of 31 prompts sampled at temperature 1.4. I’m also including the full lists of prompts in list/text-only format so you can copy/print them more easily (and for those using screen readers).

And if you end up using these prompts for Inktober, please please let me know! I hereby give you permission to mix and match from the lists.

Update: My US and UK publishers are letting me give away some copies of my book to people who draw the AInktober prompts - tag your drawings with AInktober and every week I'll choose a few people based on *handwaves* criteria to get an advance copy of my book. (US, UK, and Canada only, sorry)

In the meantime, you can order my book You Look Like a Thing and I Love You! It’s out November 5 2019.

Amazon - Barnes & Noble - Indiebound - Tattered Cover - Powell’s

#neural networks#gpt-2#inktober#neuraltober#neuroctober#ainktober#intobot#artificial inktelligence#drawing prompts#take control of ostrich#squeakchugger

3K notes

Text

All you need to know for starting basic Machine Learning.

Both the world today and the world to come favor machines that learn by themselves, as humans do. We are not yet at the point where a machine can learn entirely on its own, but we are moving toward that era. Machine learning is the branch of computer science (or of statistics, some say) in which machines learn from their environment. That environment could be the real one we live in, or it could be the data we feed those machines. Thus machine learning will play an important part in our lives.

I am still learning the things I am teaching you. That may sound odd, but it is true. I am learning new things day after day, week after week, and month after month. Tremendous things are happening in this area that are worth learning about and sharing.

So let us start by understanding the basics that everyone should know before going deep into machine learning.

The Basic Question that everyone asks:

What is machine learning?

Everyone asks.

To this our trusted Wikipedia says:

“Machine learning (ML) is the study of computer algorithms that improve automatically through experience and by the use of data”

So what does this mean? Think of giving a child knowledge about the environment [all at the same time, a superman baby]: the child learns all of it and then improves as they grow. Not a perfect analogy, but it works.

So the whole aim of machine learning is to make machines do tasks that they are not explicitly programmed to do.

Now that we have given data to the machines, what do they do with it?

The answer is simple: they can make predictions and decisions or generate new things, as humans do, but of course in a different way.

There are usually three phases in the process of making machines learn and do incredible things they are not explicitly programmed to do: 1) Training 2) Testing 3) Deployment

Training: The first and foremost step is to train the machine. How do we do that? The million-dollar question? [Joking: not a million-dollar question now, but it once was.] It all starts with the data. For machines to learn, the most important thing is data. So in the training step, we give this data to the machines, they work their mantras on it, and they learn the relationships in the data.

Testing – Next comes testing. It is like making sure that an employee is ready for the real world after the probation [training] period. Machine learning has a wide range of applications, from predictions to critical decisions such as those in health care, so models need to be highly reliable at the tasks they are trained for. In this phase we make sure they are ready for the real world by making them sit an exam, like students do, on data they did not see during training. If they pass, they are a go; otherwise, they are retrained with some modifications.

Deployment – This is the phase where they are let loose in the wild to make predictions, decisions, and much more.

One more step can be added: as a machine performs in the wild, its performance occasionally decreases. When that happens, it is retrained with new data, tested, and deployed again.
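Here is the training, testing, deployment, and retraining cycle as a toy Python sketch. The "machine" is just a straight line fitted by least squares. The data, the 80/20 split, the drift in the true slope, and the 0.5 pass mark are all made-up assumptions. Real projects would normally use a library such as scikit-learn instead of hand-written math.

```python
import random


def fit_line(points):
    """Training: least-squares fit of y = a*x + b to a list of (x, y) pairs."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    var_x = sum((x - mean_x) ** 2 for x, _ in points)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = cov_xy / var_x
    return slope, mean_y - slope * mean_x


def mean_squared_error(model, points):
    """Average squared gap between the model's predictions and the true y values."""
    slope, intercept = model
    return sum((slope * x + intercept - y) ** 2 for x, y in points) / len(points)


def make_data(true_slope, n, rng):
    """Hypothetical data: y = true_slope * x + 1, plus a little Gaussian noise."""
    xs = [rng.uniform(0, 10) for _ in range(n)]
    return [(x, true_slope * x + 1 + rng.gauss(0, 0.1)) for x in xs]


rng = random.Random(42)
PASS_MARK = 0.5  # assumed maximum acceptable test error

# 1) Training on 80% of the data.
data = make_data(2.0, 200, rng)
train_set, test_set = data[:160], data[160:]
model = fit_line(train_set)

# 2) Testing: the "exam" uses the 20% the model never saw during training.
test_error = mean_squared_error(model, test_set)
print("passes exam:", test_error < PASS_MARK)

# 3) Deployment: the world changes (the true slope drifts to 3.0), so error
#    on fresh data rises; the model is retrained on new data and re-tested.
live = make_data(3.0, 100, rng)
live_train, live_test = live[:80], live[80:]
if mean_squared_error(model, live_test) > PASS_MARK:
    model = fit_line(live_train)
    print("passes re-test:", mean_squared_error(model, live_test) < PASS_MARK)
```

The key design point is that the exam data is held out. A model graded on the same data it trained on can pass simply by memorizing.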

The success in machine learning?

This field has made tremendous achievements since the day it was born. In the middle it fell ill [an analogy: its scope almost vanished], but then it rose again to great heights.

The initial successes, though small, were very important. Some of them are:

Anomaly detection – Detecting anomalies in networks, e.g. detecting attacks.

Clustering in markets – Splitting the market audience into groups and then targeting each group based on its interests.

Fraud detection – Detecting fraud, e.g. on banking websites, and detecting credit card fraud.

Spam filtering – Detecting spam in messages like emails, SMS, etc.
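To make the first item on that list concrete, here is one very basic form of anomaly detection: learn what "normal" looks like from past data, then flag new values that fall more than a few standard deviations (a z-score) away from it. This is a minimal Python sketch. The traffic numbers and the threshold of 3 are assumptions, and real network intrusion detection uses far richer features than a single count.

```python
import statistics


def fit_baseline(history):
    """'Training': learn what normal looks like from past observations."""
    return statistics.mean(history), statistics.pstdev(history)


def is_anomaly(value, baseline, z_threshold=3.0):
    """Flag a value lying more than z_threshold standard deviations from normal."""
    mean, stdev = baseline
    if stdev == 0:
        return value != mean  # history was constant: any change stands out
    return abs(value - mean) / stdev > z_threshold


# Hypothetical requests per minute to a server during normal operation.
normal_traffic = [100, 98, 103, 101, 99, 102, 97, 100]
baseline = fit_baseline(normal_traffic)

incoming = [101, 950, 96]
flagged = [v for v in incoming if is_anomaly(v, baseline)]
print(flagged)  # → [950]; a spike like this might be an attack
```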

Some more state-of-the-art achievements:

Image classification – Assigning an image to one of two or more categories. One common example is classifying images as cat images or dog images.

Text Generation

Language Translation – Translating from one language to another without human intervention.

How can you start machine learning?

Prerequisites

You should have a working knowledge of Python. Other languages can be used for machine learning, but Python makes it simple and easy, and there is a large community that is very happy to help you. You should also know the basics of machine learning, i.e. the theory I talked about above, plus some more concepts that I will cover in the future. Then proceed to the next section, i.e. books.

Books you should read

For learning Python, I would suggest watching one or two courses on YouTube or Udemy. That is enough to get you going. Don’t fall into tutorial hell.

If you want a book for Python, my suggestion is Al Sweigart’s Automate the Boring Stuff with Python. It is the best book to get you started in Python and covers all the aspects of the language you need to begin. https://amzn.to/3szYcyY

If you wish to go beyond the basics, there is his other book, Beyond the Basic Stuff with Python. https://amzn.to/3v5mlPB After that, pick one book that satisfies your needs. My suggestion is to choose books that teach hands-on, i.e. with examples, not just theory. Some books that I would recommend:

Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow: Concepts, Tools, and Techniques to Build Intelligent Systems by Aurélien Géron – Start with this book and complete all the exercises in it. You don’t need to work them all out by yourself: just write them out and try to understand what they do and how they do it. For the basics, you could read Part 1 of this book. https://amzn.to/3n4f8MO

How often should you practice?

It depends:

If you come from a non-machine-learning background: if you are studying full-time, you should practice the algorithms 2-3 hours daily and deepen your knowledge of them. If you are part-time and trying to acquire new skills, 1 to 1.5 hours daily is sufficient. These numbers are not hard-coded; after all, it is you who has to decide how to do things.

Where to go after basics?

There are many paths you could choose from here. You could master one of the traditional machine learning types, or you could do what is preferable, i.e. go for deep learning. But before that, get a good understanding of machine learning basics and of some of the libraries used in traditional machine learning.

Final Words.

Finally, it is you who has to take action and start doing what you intend to do. Don’t stop now: get up, open your system, and start learning.

Share with your Friends:)

3 notes

Text

Explicrypto

Explicrypto is a mobile application for the Android platform, built using Android Studio. It can encrypt all the explicit images in your gallery, thus protecting your privacy. Explicrypto can be trusted with your privacy because it does not need an internet connection to work. All image processing, including nudity detection, encryption, and decryption, happens on your device with no contact with the outside world, which makes it very safe to use. Explicrypto works in two modes: a manual mode and an automatic mode.

In manual mode, the user first grants the required permissions to the application (the first time only) and then takes a picture using the application. Once the picture is taken, it is displayed on the next screen. The user can then click the “Classify” button to check whether the image is safe. As soon as the user clicks Classify, the image is sent to a MobileNet model (converted to the TFLite format to run on Android). The MobileNet model outputs one of three categories, Safe, Unsafe, or Other, along with the confidence of its classification. If the model classifies an image as Unsafe, the app shows a message that the image is unsafe and should be encrypted. Based on this reading, it is up to the user to decide whether to encrypt the image. If the user decides to encrypt it, the image vanishes from the application and is encrypted. The encryption technique used was basic and was based on a randomly generated hash key. For decryption, the user selects the path of the encrypted files and clicks the “Decrypt” button. The image is decrypted using the same hash key that was used to encrypt it. Once the image is decrypted, it is displayed in the application window again.
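The report says only that the encryption was "basic" and used one randomly generated key for both encryption and decryption. The toy Python sketch below illustrates that symmetric idea; it is not Explicrypto's actual Android code. A keystream is derived by hashing the key with SHA-256 and a counter, then XORed with the file bytes. Because XOR undoes itself, the same call with the same key decrypts. This toy is NOT secure. It reuses one keystream for every file and has no integrity check. A real app would use a vetted cipher such as AES-GCM through javax.crypto, with the key held in the Android Keystore system.

```python
import hashlib
import secrets


def keystream(key: bytes, length: int) -> bytes:
    """Expand the key into `length` pseudo-random bytes: SHA-256(key || counter)."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])


def xor_with_key(data: bytes, key: bytes) -> bytes:
    """XOR is its own inverse, so this same function encrypts and decrypts."""
    return bytes(d ^ k for d, k in zip(data, keystream(key, len(data))))


key = secrets.token_bytes(32)  # randomly generated key, kept on the device
image_bytes = b"\x89PNG pretend image data for illustration"
encrypted = xor_with_key(image_bytes, key)
decrypted = xor_with_key(encrypted, key)
print(decrypted == image_bytes)
```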

Automatic mode uses the same architecture as manual mode. It also requires permission to read and write files. In this mode, the application works in the background and scans through the entire gallery. Each image in the gallery is sent as input to the MobileNet model, which outputs whether the image is safe along with a confidence level. Based on the confidence level, the app decides whether to encrypt the image: if the image falls into the Unsafe category, it is encrypted automatically. To decrypt an image, the user again opens the application and selects the path of the encrypted image.
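The automatic-mode decision can be sketched as follows. Take the highest-scoring of the three categories, then encrypt only images labelled Unsafe with at least some minimum confidence. This is a hypothetical Python illustration. The 0.8 threshold, the file names, and the scores are assumptions, and the real app runs a TFLite model on-device rather than reading precomputed scores. A higher threshold means fewer accidental encryptions (the problem raised in the remaining-work section below) but more explicit images left unencrypted.

```python
from dataclasses import dataclass

CATEGORIES = ("Safe", "Unsafe", "Other")


@dataclass
class Prediction:
    label: str
    confidence: float


def classify(scores):
    """Pick the highest-scoring category and report its score as the confidence."""
    best = max(range(len(CATEGORIES)), key=lambda i: scores[i])
    return Prediction(CATEGORIES[best], scores[best])


def images_to_encrypt(gallery_scores, min_confidence=0.8):
    """Automatic mode: return images classified Unsafe with enough confidence."""
    chosen = []
    for path, scores in gallery_scores.items():
        pred = classify(scores)
        if pred.label == "Unsafe" and pred.confidence >= min_confidence:
            chosen.append(path)
    return chosen


# Made-up model outputs (Safe, Unsafe, Other) for three hypothetical images.
gallery = {
    "IMG_001.jpg": (0.95, 0.03, 0.02),
    "IMG_002.jpg": (0.05, 0.92, 0.03),
    "IMG_003.jpg": (0.30, 0.55, 0.15),  # Unsafe, but not confident: left alone
}
print(images_to_encrypt(gallery))
```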

I decided that my project life cycle would follow the Agile approach because I am developing my project in different phases, so an iterative and incremental approach suits it best. Since each phase of my project is independent of the others, each can be delivered individually and frequently. This helps me get feedback from users so that I can enhance the application while delivering the next phase. The design and execution of the project are very simple and do not involve any complex architecture, which makes it well suited to an agile approach.

Explicrypto requires a very basic setup. The application runs only on Android devices with Android 5.0 or above. It is currently not supported on Apple devices but will be released for iOS in future updates. The application also requires the user’s permission to access the device’s storage and to read and write files on the device, which is necessary for encryption and decryption. Using the application in manual mode assumes that the camera is fully functional. The processing (including nudity detection, encryption, and decryption) depends solely on your phone’s hardware.

All the necessary information about Explicrypto, including its code, was kept in a public repository on GitHub. Every change and update made to the code, starting from its initial phase, was committed to the repository so that the project could be properly tracked. Each commit on GitHub clearly described the changes made or the new modules added to the code.

Explicrypto has a simple, user-friendly interface. It provides just four buttons for quick and easy interaction in manual mode, and with so few buttons, their functions are easy to guess. One button takes a picture while the application is open, and another scans the images in the background. Once an image is classified, the user can choose whether to encrypt it by pressing or not pressing the Encrypt button. To decrypt a file, the user just selects the path of the file by pressing the Decrypt button, and the file is decrypted back to its original form. The app is easy to use because, as soon as the user opens it, it goes directly to a page with the camera open and ready to take a picture, with three buttons at the bottom of the screen. As mentioned previously, those buttons let the user decide whether to classify, encrypt, or decrypt the image. These buttons make the application easy to use without any knowledge of the underlying technology.

The remaining work on my project involves only the automatic mode. The dataset I used to train my model is not huge, and users can take any kind of picture, so the model might not be 100% accurate all the time. This could lead to image files being encrypted by mistake. And since my gallery has thousands of images, it would be very tedious to manually decrypt all the images that the application encrypted accidentally. So my remaining work is to expand my dataset and retrain my model repeatedly so that it covers as many cases as possible for accurate classification.

TIMELINE

Week 1

In the first week of the project, I spent most of the time discussing which domain my project should be in. I was not sure whether it had to be related to machine learning or could be something else. According to the instructions given to us in the first week by our mentor and HOD, Dr. Deepak sir, it was not mandatory to make a machine learning project. He told us that our capstone project need not be limited to developing applications or doing research. This sparked a wide range of ideas in my mind, and I spent the week deciding among them.

Week 2

During the second week of the project, I again spent time talking to others about the domain, but this time I was taking the suggestions and advice of my friends. They gave practical suggestions on how I should focus on getting a better job while dedicating equal time to the project. As most of them were prioritizing jobs over the project, this left me confused about the domain of the project. During one-to-one interactions in our project lab, the faculty mentors suggested a domain for my project: Machine Learning, since I am specializing in Machine Learning and Data Analytics (MLDA).

Week 3

During week three of my project, I was busy deciding which sub-field of machine learning my project should be in and whom I should approach for mentorship. In the previous semester Dr. Shridhar sir used to take our Deep Learning classes, so I thought it would be best to approach him, as he could guide me efficiently on how to move ahead with my project. Initially it was up to me to bring an idea to him and get his opinion on it. After a few days of research, I decided to work on developing educational content on genetic algorithms in machine learning, as I was fascinated by how that algorithm works.

Week 4

In week four, I presented my idea about developing educational content to Shridhar sir, but he wasn’t very impressed, as the idea was not feasible in the market. Then I came up with the idea of an application that would tell you what to eat in your area based on people’s reviews. This idea was also unsuccessful, as there was no novelty in it. At last Dr. Shridhar sir suggested that I make a project to protect people’s private pictures from being leaked on the internet. He told me to make an application that detects explicit images on the phone and encrypts them automatically. So I took that suggestion, finalized my project idea and domain, and wrote my milestone one report on it.

Week 5

In the fifth week of the project, after I submitted my milestone one report, companies started coming for placements. Now I had to manage the research work on the project alongside placement exams and interviews. As I had applied to most of the companies, I was not getting enough time to devote to my project. But during the week I read some online papers and searched through GitHub for references from related projects. I also downloaded the setups necessary for developing the application, like Android Studio, Git Bash, etc.

Week 6

In the sixth week of the project, I bought some online courses on Android development and on applying machine learning on Android, as Android Studio does not support machine learning by default. First, I started with the basics of Android development and learnt how to design a basic application by adding elements like buttons, labels, text fields, background color, etc. Once I had gained the required knowledge, I started building the application, which so far has just the basic layout. Initially, when I was setting up Android Studio to support the machine learning algorithm, I encountered many errors and had to look them up on the internet several times to resolve them and set up the application.

Week 7

In the seventh week of the project, as in the sixth, I had very little time to devote to the project: during the day I was busy with interviews at different companies, and in the evenings I had to attend extra classes arranged to train us for the placement process. Apart from the placement process, I spent time deciding which model I should train for my application. Google's TensorFlow offers many models that can be trained to perform tasks like image classification and detection, so I decided it would be better to use a model from TensorFlow itself, as only those models can be deployed to a mobile device using the TensorFlow library.

Week 8

In the eighth week of the project, I cloned a few models from GitHub and tried to understand how they work by running them in Android Studio, figuring out in particular how they provide input to the model. I also had to write the milestone two documentation along with the research work, which is still in progress. Apart from that, I downloaded my dataset and trained a MobileNet model from TensorFlow on my laptop. To test the model, I gave it an image as input and it predicted the correct output. Then I downloaded a demo application from GitHub, imported my model into it, and tested it on live data; it predicted correctly most of the time.

Week 9

During the next week, i.e. week nine, I continued with more research and learnt more about the TensorFlow Lite library, its uses, and its functionality. As this is the first time I am working on a mobile application, I went through the online courses and brushed up my skills in developing and enhancing the user interface of my Android application. As I still hadn't figured out how to give input to the model in the Android application, I looked at some more projects to find the answer. I also referred to YouTube videos on the same topic.

Week 10

In week ten, I implemented the model in my application. While implementing it, I checked for errors or bugs that might occur while feeding input to the model. Once the model was implemented and working fine on all the input images, I retrained it on a bigger and better dataset, because it had been trained on a smaller dataset only for testing and compatibility. So, my primary focus in week ten was to implement the model in the application and make sure that it works correctly and performs the required task in real time.
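Weeks nine and ten turn on how to give input to the model. The sketch below shows one common way to prepare an image for a float MobileNet-style classifier, assuming the model expects a 224×224 RGB image in NHWC layout (batch, height, width, channels) with each channel normalized as (value - 127.5) / 127.5, i.e. into [-1, 1]. That convention is an assumption, not something this write-up states (a quantized model would instead take raw 0-255 bytes), and the helper names `resize_nearest` and `to_model_input` are hypothetical. It uses plain Python lists so it runs anywhere; in the Android app the same values would be written into the interpreter's input buffer.

```python
def resize_nearest(pixels, out_h, out_w):
    """Nearest-neighbour resize of an image stored as rows of (r, g, b) tuples."""
    in_h, in_w = len(pixels), len(pixels[0])
    return [
        [pixels[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
        for y in range(out_h)
    ]


def to_model_input(pixels, size=224, mean=127.5, std=127.5):
    """Resize to size x size and scale each 0-255 channel to (value - mean) / std.

    Returns a nested list shaped [1][size][size][3]; the outer list is the
    batch dimension. The mean/std defaults are an assumed MobileNet convention.
    """
    resized = resize_nearest(pixels, size, size)
    normalized = [
        [[(channel - mean) / std for channel in px] for px in row]
        for row in resized
    ]
    return [normalized]  # add the batch dimension


# A tiny 2x2 test image: black, white / red, green
tiny = [[(0, 0, 0), (255, 255, 255)], [(255, 0, 0), (0, 255, 0)]]
batch = to_model_input(tiny, size=4)
print(batch[0][0][0])  # [-1.0, -1.0, -1.0] (a black pixel)
```

On Android, the TensorFlow Lite Support Library's `ImageProcessor` (with its resize and normalize ops) is an existing alternative to hand-written loops for this step.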

Week 11

In week eleven, I focused on implementing encryption in my project. For that, I again did research to find an encryption technique that would be feasible for my project. Choosing the right technique is essential because the application must run in real time: it should not take much computation time to encrypt the images. I also referred to YouTube and GitHub whenever I needed help. Along with the encryption technique, I had to secure the file needed for decryption on the device itself so that hackers cannot easily get access to it.
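Week eleven weighs encryption speed against security. As one illustration (the write-up does not say which technique the project chose), the sketch below uses AES-GCM, a fast symmetric cipher mode that also detects tampering, through the third-party Python `cryptography` package, which is assumed to be installed. The function names `encrypt_image` and `decrypt_image` are hypothetical. Here the key simply lives in memory; on Android it would normally be generated and held in the Android Keystore rather than in an ordinary file, which is one way to keep the decryption material away from attackers.

```python
import os

from cryptography.hazmat.primitives.ciphers.aead import AESGCM

NONCE_SIZE = 12  # 96-bit nonce, the size recommended for AES-GCM


def encrypt_image(key: bytes, image_bytes: bytes) -> bytes:
    """Encrypt raw image bytes; a fresh random nonce is prepended to the output."""
    nonce = os.urandom(NONCE_SIZE)
    # encrypt() returns the ciphertext followed by a 16-byte authentication tag
    return nonce + AESGCM(key).encrypt(nonce, image_bytes, None)


def decrypt_image(key: bytes, blob: bytes) -> bytes:
    """Split off the nonce and decrypt; raises InvalidTag if the data was altered."""
    nonce, ciphertext = blob[:NONCE_SIZE], blob[NONCE_SIZE:]
    return AESGCM(key).decrypt(nonce, ciphertext, None)


key = AESGCM.generate_key(bit_length=256)  # in-memory key, for illustration only
blob = encrypt_image(key, b"raw image bytes")
assert decrypt_image(key, blob) == b"raw image bytes"
```

A symmetric cipher is used because it encrypts bulk data such as photos quickly; reusing a nonce with the same key breaks AES-GCM, which is why each call draws a new one.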

Week 12

During the last week of the project, I focused on final testing and debugging. I performed white-box testing on the project and examined the code for errors and bugs. Once the application was free of systematic errors, I performed black-box testing, similar to beta testing: I let my friends and family members use the application in whatever way they liked and recorded their feedback. After getting the feedback, I made the required changes. Once debugging and testing were done, I finalized my application for the final evaluation.

GitHub repository link: https://github.com/anubhavanand12qw/Explicrypto

#bennettuniversity#bu androidstudio privacy encryption decryption nudity machinelearning csebennettuniversity


Data Analytics courses

Data analytics is the lifeblood of any thriving business. As we've touched on, now could be something of a boom time in the world of analytics. With the abundance of data available at our fingertips these days, the opportunity to leverage insight from that data has never been bigger. This can have a few impacts, but primarily the value of data analysts will go up, creating even better job opportunities and career progression options.

Thus, building a career in a field that offers plenty of work across all sectors, with a reasonably high pay scale, is unquestionably a good career choice. Some roles use programming knowledge to maintain the data flow and data models. Others retrieve and analyze data and come up with better data processing methods using new algorithms and analysis. Still others design and structure the data in accordance with the database so as to develop strategies for the business.


The department was retrained when the company introduced desktop computers. The same happened in the sales depots, the finance and accounts department, the purchase department, etc. There was an interesting little advertisement in the Times of India offering training in the use of spreadsheets, which caught my attention for several reasons. As a young product manager in a pharma company, I had access to a good amount of data. However, I had to do a lot of the data analysis using calculators and large sheets of paper with columns and rows, creating my very own version of spreadsheets.

It's ideal for people working with, or with access to, data in a real context that allows project work to bring relevance to their day job. It's important to recognize that every language has its own strengths and weaknesses. Rather than competing options, languages are different tools to add to your toolbox over time. Solving problems in any language will help improve your general data analysis skills. If you're unsure which technology to start with, pick one language and stick with it for a while. Data analysis can mean different things depending on the person, company, or industry.

An advanced algebra course centered on the theoretical foundations and applications of algebra for machine learning. Upon completion of this course, students have a robust foundation in the theoretical algebra and linear analysis topics essential for the development of core machine learning and data processing ideas. Additionally, numerous real-life applications of algebra for data analytics are demonstrated. Data analytics certifications are designed to prove that you know data management and analytics concepts and are skilled in data analysis. On the other hand, data analytics certificates and certificate programs are designed to help you learn more about the field. The skills you can gain through this free online data analytics course include Python programming and data visualization.

The trainer had excellent knowledge of the topic, and the way the topics were explained was great. Definitely looking forward to more learning from ExcelR. The trainers are kept up to date on everything about AWS enablement and share it with their audience. The best curriculum is completely pointless without the will to execute it properly, consistently, and with intensity. You can still apply for this course in the usual way, using our postgraduate online application form. This course is intended for students with a numerate background as well as graduates already working in industry. If you enroll and for some reason wish to cancel before you've completed hr of the program, you get a prorated refund based on the remaining time and your payment plan details. Study at a flexible pace within the 8-month program period, working a minimum of fifteen hours per week. Your mentor, tutor, student adviser, and career specialist will help you stay on track and accountable. We offer a range of financing options, including installment plans, so you can focus on what counts: your education.

Data science is the application of structured mathematical and statistical techniques to collected data in order to detect underlying patterns as well as make predictions. Besides the above, there are many more advantages that data science and business analytics offer. You would be given a practical, real-time project to work on. After you get the desired output, you'll receive the data analysis certification, which is valid for life.

You can reach us at: ExcelR - Data Science, Data Analytics, Business Analytics Course Training Bangalore
Address: 49, 1st Cross, 27th Main, Behind Tata Motors, 1st Stage, BTM Layout, Bengaluru, Karnataka 560068
Phone: 096321 56744
Directions: Data Analytics courses
Email: [email protected]


15. Meadow Arts blog... 08/04/21

As part of my volunteering with Meadow Arts, they asked me to produce some content. At the end of my time with them I will write a blog about volunteering for them, but for now they wanted a personal insight into what I am up to. VERY happy to oblige! Please also note that they taught me about the importance of alt text descriptions for visually impaired users. This makes things like blogs much more accessible for everyone. It's also all to do with algorithms, along with the importance of ‘Headers’, which I must admit to not having mastered yet. Rome wasn’t built in a day!


Blog: All about me, Elley Westbrook

When the Government were recently encouraging us all to retrain, with the campaign “Fatima’s next job could be in cyber (She just doesn’t know it yet)” and the tag line ‘Rethink. Reskill. Reboot.’, I had already jumped ship and gone the other way. I was accompanying #3 son around the Hereford College of Arts open day and complaining (muttering) about how jealous I was of his opportunity, having already seen #2 son graduate from the Foundation course and go on to do a photography B.A. (Hons) in Bristol, when a beautiful Tech Dem tapped me on the shoulder and said, “you can do this too.” That was the start of my change in career. I am indeed rethinking, reskilling and rebooting. I don’t think it’s what the government campaign had in mind!

I started my creative journey (apologies for the cliché) on the Portfolio course. One chaotic, brilliant, rollercoaster ride (again, apologies) of creative madness. I loved it! I had thought that it would scratch that creative itch, but it didn’t; it just opened up the full range of possibilities. So, now I am in the second year (level 5) of my B.A. (Hons) Fine Art degree at HCA.

I have found myself writing this blog because part of our ‘Exploring Futures’ module commitment is to do some work experience with an arts organisation. How immensely lucky to be able to volunteer with Meadow Arts! I am immensely affirmed that at nearly 53 anyone is considering a future for me, so I am embracing all opportunities. They casually suggested that as part of my time with them I may like to write a blog explaining a bit about my work… I certainly don’t need asking twice!

Generally, I would describe myself as a stitch artist, or a multi-media artist. My work is always driven by a narrative.

This hanging is one I embroidered on a sewing machine, using free motion embroidery, as part of a project based around Hereford Cathedral. It now belongs to the Cathedral, and it documents the Stone Masons’ marks that can be discovered there if you look in the hidden places. Each Mason (and you’ll find examples in Hereford dating from the 12th Century) has his own unique mark. No names identify the hand, just the mark.

More recently, however, I have been exploring projection photography. Rooting around in my attic for something completely unrelated, I came across a cache of photographic slides. These slides were of me as a child at my Grand-Parents’ house in the 1970s, a house where I spent a great deal of time.

There was another box of slides that I have no memory of ever seeing before, pictures of my biological father as a baby taken in the same house. These photos were shot on technicolour film which was very rare in 1944.

My son Harry was staying with us at the time (in between lockdowns) and he was fascinated to see that the pictures of my father were ‘technically very accomplished’ (the lighting and composition etc). It was clear that the authors of the photos were different. My Grand-Father was the happy snapper of the ones with me in, but the ones of Peter (my father) were from a different hand. And so, a mystery unfolded… It turns out that an American Army Air Forces Major was stationed with my Grand-Mother during the war. He was Peter’s biological father, and a ‘Second Treader’, meaning he served in both the First and Second World Wars. My Grand-Mother adopted Peter in 1944. I’ll leave that there…there is so much to say but only really of interest to me and my family.

I contacted the current owners of the house in Cheltenham, and they gave me permission to project the photos onto their house in December 2020. I projected the past onto the present and the inside, outside.

It was an incredibly poignant experience and it turned out to be a spectator event, with passers-by coming over for chats and to discuss the project. One couple remembered my Grand-ma very well.

I am now moving on to a work about the loneliness experienced in lockdown. It's called ‘the lonely chair’ and I hope to exhibit it along with work by my classmates in an exhibition called ‘Art in Captivity’. We are utilising the windows of empty shops in Hereford High Town some time in May. Watch this space…

If you are toying with exploring a creative career, can I urge you to jump in with both feet and take advantage of the amazing Art College we have here in Hereford. I cannot begin to explain how much I am loving my life at the moment!

You can follow me on Instagram @elleywestbrookartpractice. Thanks for reading 😊

-------------------------------------------------------------------------------------------------------

I have emailed that to them today (8/4/21); we’ll see what edits they suggest, or if they even accept it!


I'm a 26-year-old guy and I've noticed the last three times I've had sex with a girl I struggle to sustain an erection. I mostly use porn to get off in between hook-ups and indulge usually 1-2x per week, less so recently. I think my problem is that I'm desensitized and/or my brain gets excited mostly when it knows I'm about to watch porn. The kicker here is that I'm hanging out with this girl Friday and there's a 99% chance we'll have sex. Any advice? I haven't watched porn for over a week.

Welp, certainly missed the mark on this one. But if you come back and read this, hopefully you were successful!

That being said, the harsh reality here is that it definitely sounds like you’re experiencing what scientists call porn-induced erectile dysfunction. It may not be the case - there are A LOT of issues that can cause various types of erectile dysfunction. But if YOU feel like there’s a relationship, that’s a good cause for alarm.

Firstly, what is it? Here’s a good review and understanding to get you started. It should also be stated straight out: PORN IS NOT ADDICTIVE. Addictive materials alter the chemistry of your body with the introduction of the addictive substance (alcohol, nicotine, cocaine, etc.). Instead, porn is a COMPULSIVE material, and although it can have real world effects, the compulsive behaviour is not built into the body, but is instead a reaction to experiences people have with the world around them (stress, anxiety, depression, etc. can all create reasons to compulsively use porn, similarly to other forms of escapism).

Essentially, ED caused by porn results from over-stimulation. Using porn all the time conditions the brain to associate the act of preparing for and then engaging with porn as a sexual act, and if you use high volumes of porn (LOTS of different videos of extreme, hardcore porn) it can train your brain to think that this is how sex is, with all that stimulation. And when you don’t get that experience in real life, your expectations for sex aren’t met, and thus you find it difficult to get aroused. More info from Laci Green here.


So for your particular meeting this weekend, there’s not much that could have been done. Retraining your brain can be a very difficult process depending on how severe the issue is, so much so that it might even require a sexologist or therapist to help you through the process. That is something you should keep in mind if this problem persists!

The general advice to “cure” ED from porn use is as follows:

CUT OUT THE PORN. The big issue here is that porn has become as vital to your sexual experience as an erection. Your brain is smart, and it runs a lot of automatic processes during sexual arousal, like speeding up heart rate and breathing, as well as all the processes that go into making the penis erect. The problem is that, if this is what you’re suffering from, the porn itself has become part of the process, and if your brain relates porn + automatic function = erection, then when that porn isn’t there, you won’t be aroused.

Now pay attention to what I said here. I didn’t say STOP MASTURBATING. I said stop watching porn when you masturbate. The porn is the problem, and you need to rewrite the algorithm in your brain to retrain it to understand that porn is not a requirement for arousal. You kinda already instinctively figured this out, and cut down on the masturbation time. But now I suggest you begin to try to masturbate without porn.

I know that probably sounds awful. If you can’t get it up with an actual person, how can you do it alone? That’s the goal. You have to retrain your brain to understand that erotic thoughts - and thoughts alone - are enough to make you aroused. Removing porn from the equation forces you to use your brain and imagination to become aroused. Think of arousing images in your mind, or think of attractive people in your mind, and then try to masturbate. This may not work! You may just be flapping away at a floppy dick. That’s okay. Don’t try to cum, don’t even try to get hard. Just have a fun, nice moment enjoying an erotic thought. Kind of like when you were a kid, and imagined a classmate in their underwear, and maybe impulsively touched yourself. This is the type of space in your mind you want to rediscover for yourself. Leave your computer and phone behind. Turn off all technology, get in bed, maybe light some candles, and imagine some super steamy, hot sexual scenarios, and see if over the period of a month or so, you can get aroused this way.

________

MASTURBATE DIFFERENTLY. This may sound kind of dumb, but it’s very important for those suffering from ED. Part of the arousal process is the way we do it. We experience pleasure in specific ways, and if a way that we experience pleasure doesn’t align with the way we are used to experiencing pleasure, our brain sometimes interprets that as “oh, this isn’t pleasurable.”

As an example, you can see this in a huge majority of young women, particularly pre-teens and teens just discovering their sexuality right after puberty. In western culture, men are taught from a young age that it’s okay to be more open about their perviness; however, lots of women are shamed from an early age into rejecting their perversions and sexual thoughts for a sense of idealized purity. This is obviously bullshit, but it’s how parenting and education work for prepubescent children.

The result of this conditioning is that there are LOTS of girls who suddenly hit puberty, start having all these horny feelings, but feel they are deeply strange and shameful. “I would never finger myself, that’s WRONG.” “It hurts to have sex, because I never masturbate.” “I don’t like to masturbate because it doesn’t feel good.” It’s not that any of these people are lying; it’s simply that they have been conditioned to think these various thoughts, through YEARS and even DECADES of negative reinforcement of sexual habits.

How does this relate to you? Well, for those who do masturbate, sometimes we can condition ourselves to appreciate pleasure in only one way. If all you do is drop onto your couch, turn the porn on, and surf until it’s time to cum, you condition your brain into the habit of THIS being the sexual experience. This is what sex looks like for your body 99% of the time, but then you meet with a girl... but you’re not naked on your couch, and there’s no porn on... this isn’t sex, this is some other situation, no arousal.

How to fix? CHANGE IT UP. Similarly to not using porn to find arousal, try to use different methods physically to become aroused. Do you use your right hand every time to masturbate? Try to use your left, or flip your hand upside down while stroking. Want a more “sex-like” experience? Try masturbating with condoms on (or with lube!), since you’re hopefully using those when hooking up anyway! Still not enough? HUMP THINGS! Couches, pillows, old stuffed animals, beds, blankets, and all sorts of things can give a full body sensation of sexual pleasure. Usually sit down at your computer to masturbate? Lie down in bed instead, or even stand up while you do it! Still want an extra special something? BUY SOME SEX TOYS! Go to Adam & Eve (not a sponsor, they’re just dope) and buy a toy that suits your needs. Too cheap? Make your own sex toys!

The point is back to that conditioning thing. You want to condition your brain to understand that there are LOTS of different places where it’s okay to be aroused, so when you find yourself in a new situation (aka, with a person, in real life) your body doesn’t feel like this is an inappropriate place to do the deed.


One more very important thing to say here at the end. You BELIEVE that this is your problem, but it is also entirely possible this ISN’T the problem. It’s possible you are experiencing anxiety in sexual encounters with people. If you feel scared or anxious in the moment, this requires a different method of recovery: you need to learn to calm your mind and remember that the pressure you feel about your performance is fair and valid, but nobody is actually holding you to those expectations; it’s all in your head.

Also, MEDICINE. Lots of medications can fuck with your ability to get hard. Research your medications and see if one of the downsides is erectile dysfunction. If yes, contact your doctor and tell them you’re experiencing these problems.

Also also, mind-altering substances! Alcohol, tobacco, and marijuana, just to name a few, have all been noted as substances that can decrease the ability to feel aroused or get aroused, especially in men. Maybe you struggle to get aroused because you’ve had too much to drink, or there’s too much THC in your system, or nicotine has decreased blood flow to your body. If you use any sort of drug, narcotic, or substance like this, maybe cut that out of your diet for a while!

The bottom line is that all this shit is way more complicated than we assume at first glance, and you should take the potential that porn is the problem seriously. But make sure you analyze your whole self during the process to figure out what the actual issue is, and don’t be afraid to approach doctors with these concerns.


CELEBRITIES VISITING KEY WEST

Big week for celebrities visiting Key West.

Venus Williams and The Real Housewives of Orange County!

Venus’ visit especially noteworthy. Wednesday night, she, her boyfriend and our own Laurie Thibaud sat together at Bobby’s Monkey Bar chatting, drinking and singing the evening away.

Lauri reports Venus and her boyfriend very friendly to other customers and Venus a noteworthy singer.

Venus and friend are staying at Sunset Key.

Venus’ week began in Miami. She is reported to have danced “all night long” 2 nights in a row. All night means till 5 in the morning. Then shot down to Key West for a few days of sun and fun.

The Real Housewives of Orange County have been in Key West since Wednesday. Flew to Miami. Drove Maserati convertibles down U.S. 1 to Key West.

They will be filming while in Key West.

Wednesday night found one of them at Bourbon Street Pub and 801: the “Original Gangsta” Vicki Gunvalson. The others remained in their hotel re-energizing. The ladies are booked into one of the new hotels on North Roosevelt Boulevard.

Bria Ansara was performing at Bourbon Street. Vicki and Bria got along famously. Took some selfies.

At some point early morning, Vicki moved across the street to 801.

Jeeps were rented for the ladies for Thursday. Whatever the filming may be, it involves them driving Jeeps round town. They also visited some of Key West’s haunted homes.

The big event for today is attending a key lime pie eating contest. Where, I do not know.

An exciting few days! Venus and The Housewives!

I had my excitement yesterday afternoon when I guested on Lauri Thibaud’s radio show Party Time at 107.5 FM, WGAY FM. Laurie could not wait to share her evening with Venus Williams.

Then to the Chart Room. Chatted with 4 men from the Portland, Oregon area. Fishermen. Came cross country to fish Key West waters.

Nice guys. We chatted about many things. One into politics as I am. Especially enjoyed his company.

They went out on Keys waters for the first time at 7 this morning.

A short article at page 3 of this morning’s Key West Citizen was titled: Pity The Fools Who Miss This Turtle’s Release.

Turtles loved in the Keys. Great concern for them. The Keys even has a Turtle Hospital in Marathon. A worldwide reputation. Sick turtles are flown in from everywhere.

Mr. T ended up in the Turtle Hospital in early February. Mr. T a 200 pound adult male loggerhead sea turtle. Pretty sick.

He was discovered off Tavernier. Floating. A fishing hook with trailing line lodged in his mouth. An endoscopy revealed Mr. T had a tear in one lung.

Mr. T was operated on. The hook removed and a blood patch applied to repair the lung tear.

Mr. T is ready to return Wednesday to his home in the sea. He will be released Tuesday at 3:30 on Sombrero Beach in Marathon. A couple hundred people will be there to see him off.

John Bolton is one of Trump’s henchmen. A war monger. Always looking to start a war.

Teddy Roosevelt said, “Speak softly and carry a big stick.” Adjusting the verbiage a bit to fit Bolton: “Walk ARROGANTLY and SWING a big stick.”

Kurt Nimmo recently wrote: “Bolton and the neocons now infesting the White House are all about war, mass murder, starvation, and the engineered immiseration of millions.”

Pompeo and Bolton are birds of a feather. They are looking for a war. Any war. Presently attempting to push the U.S. into one with Venezuela.

Trump generally acts in an adverse fashion. He is a bully. Uncaringly hurts people. An evil man.

It bothers me that few Republicans stand up to him. They seem more concerned with politics rather than doing the right thing.

John Kennedy quoted Edmund Burke in a speech: “The only thing necessary for the triumph of evil is for good men to do nothing.”

So true.

Republicans get off your asses! Do something. Do not permit Trump to continue going unbridled.

We fail to recognize the extent to which robots are taking over. In the very near future, significantly fewer human jobs will exist because robots will have replaced the workers. Government is failing us. There are few, if any, retraining programs as yet.

Robot use has developed even further than most are aware. Certain robots now decide who gets a job, continues working, or gets fired. With no human intervention!

Such robots primarily involved with low paid workers. Software and algorithms deciding who continues working.

Amazon.com already utilizing robots in such a fashion. The robot tracks the productivity of employees and regularly fires those who underperform, with next to no human intervention.

Another example involves industrial laundry services. Robots are used to track seconds in the pressing of a shirt.

Friday. Looking forward to tonight. Have nothing to do as yet.

Enjoy your day!

CELEBRITIES VISITING KEY WEST was originally published on Key West Lou


The Future You That You Least Suspect

The other night my teenage boys asked me what was on my mind (likely looking for material to make fun of me. Just kidding, they’re thoughtful kids).

Instead of trying to “kid proof” my thoughts or rush the conversation, I wrote them this letter. First, to explain that I’m consumed by how we think about and where we look for answers to the biggest questions of our time (listed below), and second, to propose an alternative way of finding answers (hint: I found inspiration in an amoeba).

How are we going to address climate change before it creates global chaos?

What jobs will be available for my kids when they finish school? What should they study?

Over the next few decades, how will we re-train ourselves fast enough — again and again — to remain employed and useful as technology becomes more capable?

Can the human race cooperate well enough to solve our biggest problems or will the future simply overwhelm us?

Most importantly, where do we look to find answers to these questions?

Hopefully I didn’t ruin the possibility that my kids will ever again ask me what’s on my mind 🙂

##

Boys,

There is an old joke where a man is looking for his keys under a street light. Another person walks by and inquires, “Sir, are you certain you lost your keys here?”

“No,” the man replies, “I lost them across the street.”

Confused, the stranger says, “Then why are you looking here?”

The man replies, “The light is much brighter here!”


This comic is as humorous as it is true. All too often, we each do this when we’re trying to solve something. It’s where our brains naturally take us first.

Our imaginations are constrained to the familiar (under the light), so we have a hard time finding answers to difficult questions and problems because the answers often lie in the unknown (or in the comic above, the darkness). Staying in the light is natural, easy, and intuitive, but this limits our discovery potential.

I. How to look in the dark?

History can give us some hints about how others found interesting things in the dark. For example, we discovered that:

the sun is the center of our planetary swarm

the earth is round

the physical world is a bunch of tiny, uncertain pieces governed by quantum physics

Before these became accepted truths, they were very difficult to imagine. This is partly because they are non-obvious and counter-intuitive to our everyday experience.

It’s also because we can’t know what is not known, which means we’re blind to what is yet to be discovered. Don’t believe me? Try to think of something you don’t already know. It’s impossible! That is, until you know it, and then it’s obvious.

Going back to the 5th question, how and where can we look today to find new unknowns (the dark) that help us solve our biggest problems? Where are today’s insights that are equivalent to “the sun is the center of our planetary swarm”?

I think the most exciting and consequential place to explore is not looking outside ourselves, but looking inside; in our own minds. This is where I see the most fruitful answers to the questions about your future and mine.

What if the next reality-busting revolution happened to our very reality and consciousness? And if that happened, could the future of being human be entirely unrecognizable from our vantage point today? I hope so, because the answers to our challenges don’t appear under the lights we have turned on so far.

You’re probably thinking, c’mon Dad, this is crazy talk.

Well, it’s happened before.

II. Thanks Homo Erectus, We’ll Take it From Here

Our ancestor Homo erectus lived two million years ago and wasn’t equipped with our kinds of languages, abstractions, or technology. Homo erectus was possibly an inflexible learner as evidenced by the fact that they made the same axe for over 1 million years.

Imagine trying to explain to Homo erectus a complex phenomenon of our modern society, such as the stock market. You’d have to explain capitalism, economics, math, money, computers, and corporations, after extensive language training and the inevitable discussion of new axe design possibilities (of course, trying not to offend).

The supporting technological, cultural, and legal layers that enable the stock market to exist are the engines and evidence of our prosperity. It’s taken us thousands of years to develop this collective intellectual complexity. The point is, our brains are incredibly capable of evolving and adapting to new and more complicated things.

That our cognition evolved from Homo erectus demonstrates that we have radically evolved before.

III. Amoeba, You’re So Smart!

A few months ago, Japanese researchers demonstrated that an amoeba, a single-celled organism, was able to find near-optimal solutions to the following question:

Given a list of cities and the distances between each pair of cities, what is the shortest possible route a salesperson could take that visits each city only once and returns to the origin city?

This is known as the Traveling Salesman Problem (TSP), and it is classified as NP-hard: no known algorithm solves it exactly in polynomial time, and the time needed by known exact methods grows exponentially as the number of cities increases.
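To make that growth concrete, here is a minimal Python sketch (an illustration, not anything from the amoeba study) of the naive exact approach: try every possible tour and keep the shortest. The function names and sample coordinates are hypothetical. Fixing the starting city leaves (n-1)! orderings to check, and ignoring direction there are (n-1)!/2 distinct tours.

```python
from itertools import permutations
from math import dist, factorial


def tour_length(cities, order):
    """Total length of a closed tour visiting cities in the given order."""
    return sum(dist(cities[order[i]], cities[order[(i + 1) % len(order)]])
               for i in range(len(order)))


def brute_force_tsp(cities):
    """Exact TSP by checking every tour that starts at city 0.

    Fixing the start city leaves (n-1)! orderings, which is why this
    approach becomes hopeless beyond a dozen or so cities.
    """
    n = len(cities)
    best = None
    for rest in permutations(range(1, n)):
        order = (0,) + rest
        length = tour_length(cities, order)
        if best is None or length < best[0]:
            best = (length, order)
    return best  # (shortest length, city order)


def distinct_tours(n):
    """Distinct closed tours for n >= 3 cities, ignoring start and direction."""
    return factorial(n - 1) // 2
```

For 10 cities, `distinct_tours(10)` is 181,440; for 20 cities it is about 6 × 10^16, which is why exhaustive search stops being practical so quickly.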

Humans can come up with near-optimal solutions using various heuristics, and computers can execute algorithms that solve the problem using their processing power.
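One of the simplest heuristics is the greedy nearest-neighbor rule: from wherever you are, go to the closest city you haven’t visited yet. The sketch below is an illustrative Python version with hypothetical names; it is not the method used by the researchers, and it trades any guarantee of the shortest route for speed.

```python
from math import dist


def nearest_neighbor_tour(cities, start=0):
    """Greedy heuristic: always travel to the closest unvisited city."""
    unvisited = set(range(len(cities))) - {start}
    order = [start]
    while unvisited:
        here = cities[order[-1]]
        nxt = min(unvisited, key=lambda j: dist(here, cities[j]))
        order.append(nxt)
        unvisited.remove(nxt)
    return order  # the tour implicitly returns to `start` at the end


def tour_length(cities, order):
    """Total length of the closed tour, including the leg back to the start."""
    return sum(dist(cities[order[i]], cities[order[(i + 1) % len(order)]])
               for i in range(len(order)))
```

It runs in O(n²) time instead of checking a factorial number of tours, at the price of sometimes returning a noticeably longer route.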

However, what’s unique is that Masashi Aono and his team demonstrated that the amoeba’s solution to the TSP is completely different from the way humans or computers have traditionally solved it.

That’s right, this amoeba is flexing on us.

(Note: it’s worth reading about the clever way they set up the experiment to allow the amoeba to solve the problem.)

This got me thinking: when we’re confronted with a problem, we use the tools at our disposal. For example, we can think, do math, or program a computer to solve it.

Professor Aono found a different tool for problem solving: a single-celled organism.

I know what you’re thinking, can the amoeba do my homework or take tests for me? It’s a good question!

Also, kudos to Aono and his team for searching in the dark — this experiment is non-obvious.

IV. Why Am I Telling You About Amoebas?

I strongly believe that we need a major cognitive revolution if we are to solve the global challenges we face. Our species evolved before and we can do it again, but we can’t wait a million years; we must accelerate this evolution.

What I’m saying is very hard to understand and imagine, because it’s in the dark. But bear with me.

The amoeba gives me hope because it didn’t evolve to solve the TSP. We augmented it with technology to accomplish something pretty amazing. Similarly, we haven’t evolved to cooperate on a global scale, to battle an invisible gas that warms our planet, or to retrain our brains every few years as AI takes over more of our work. How can we augment our own minds to allow us to take on these challenges?

Imagine a scenario where you are dressed head-to-toe in haptics (think Ready Player One) that allow you to experience and understand things by feeling changes in vibrations, temperature, and pressure.

Also imagine that you have a brain interface capable of both reading out neural activity and “writing” to your brain (meaning that certain communications can be sent directly into your brain), the kind of stuff I’m building at Kernel.

Let’s call this a mind/body/machine interface (MBMI). It would basically wire you up to be like the amoeba in the experiment.

Now, what if you were given certain problems, such as the TSP, that your conscious and subconscious mind started working to solve? Imagine that instead of “thinking” about the problem, you just let your brain figure things out on its own, like riding a bike.

Would you come up with novel solutions not previously identified by any other person, computer or amoeba?

If we actually had the technology to reimagine how our brains work, over time, I bet that we’d get really good at it and be surprised with all the new things we can do and come up with. To be clear, this is not just “getting smarter” by today’s standards, this is about using our brains in entirely new ways.

Maybe that means that your school today would be in the museum of the future.

People would likely use these MBMIs to invent and discover, solve disagreements, create new art and music, learn new skills, improve themselves in surprising ways and dozens of other things we can’t imagine now.

When thinking about the possibilities, hundreds of questions come to my mind. For example, could we:

minimize many of our less desirable proclivities, individually and collectively?

become more wise as a species?

come up with original solutions to climate change and other pressing problems?

accelerate the speed at which someone learns (e.g., earning a new kind of PhD at age 12, versus the average of 31 today)?

I wonder, is this what you will do at your job in 20 years? Would your mind change so much that it would be hard to recognize your 15-year-old self?

Ultimately, for our own survival, we are in a race against time. We need to identify the problems that pose the greatest risks and respond fast enough so that we avoid a zombie apocalypse situation. The most important variable to avoid that: we need to be able to adapt fast enough.

I’m sure at this moment you’re thinking, woah, Dad, calm down!!

V. Your New Job — Being Really Weird (in a good way)

You’re right in wondering what jobs computers will take — if not all of them. They’ll do the boring things that adults do to make money, except far better and for far less money. But imagine a scenario where AI relieves you of 75% of your current day-to-day responsibilities, and is much better at doing those things than you. (I imagined what this world could look like)

A lot has been written, and even movies made, about this scenario (e.g., WALL-E). If this happened, would you play fully immersive video games all day? Or live a life of pleasure, free of work? Certainly possible, although those are linear extrapolations of what we are familiar with today, meaning they simply take what we know today and map it into the future. That is the same thing as looking in the light.

What if millions or even billions of people could build careers by exploring new frontiers of reality and consciousness powered by MBMIs? These types of “weird” thought exercises may be breadcrumbs that extend the considerations we’re willing to make when thinking about our collective cognitive future.

These may be the starter tools that empower us to become Old World explorers setting out for the New World, journeying on the most exciting and consequential endeavor in human history: an expedition, inward, to discover ourselves.

Dad

Originally posted here:

https://medium.com/future-literacy/the-future-you-that-you-least-suspect-18cf63bd0061

The Future You That You Least Suspect was originally published on transhumanity.net

#climate change#kids#Parenting#Problem Solving#Thinking#crosspost#transhuman#transhumanitynet#transhumanism#transhumanist#thetranshumanity

Machine Learning: Regression with stochastic volatility

I got there by a long search that went from machine learning, to fast Kalman filters, to Bayesian conjugate linear regression, to representing uncertainty in the covariance using an inverse Wishart prior, to making it time-varying and allowing heteroskedasticity. I was discussing with friends which paper had all the components of an algorithm I think is well suited to forecasting intraday returns from signals. The features I like about the algorithm:

Analytic: it does not require sampling, which is very slow. It just runs a couple of matrix multiplications.

Online: it updates parameter values at every timestep. Offline/batch learning algorithms are typically too slow to retrain at every timestep within a backtest, which forces compromises.

Multivariate: I cannot do without this.

Adaptive regression coefficients: signals are weighted higher or lower depending on recent performance.

Entirely forgetful: every part is adaptive, unlike some learners that are partially adaptive and partially sticky.

Adaptive variance: ordinary least squares is inefficient, and its error estimates are misleading, when the noise is heteroskedastic, unless you reweight observations by the inverse of their variance.

Adaptive input correlations: in case signals become collinear.

Estimates prediction error: it outputs the estimated variance of the prediction, so you can choose how much to trust it. This value is interpretable, unlike some of the heuristic approaches to extracting confidence intervals from other learners.

Interpretable internals: every internal variable is understandable, and looking at them clearly explains what the model has learned.

Uni- or multivariate: it specializes or generalizes naturally. The only adjustment required is to increase or decrease the number of parameters; their values don’t need to change. 10 input variables work as well as 2, which work as well as 1.

Interpretable parameters: parameters are set from a-priori knowledge, not exclusively by cross-validation. The author re-parameterized the model to make it as intuitive as possible; the parameters are essentially exponential discount factors.

Minimal parameters: it has just the right number of parameters to form a useful family, with no extra parameters that are rarely useful or unintuitive.

Objective priors: incorrect starting values for internal parameters do not bias predictions for a long “burn-in” period.

Emphasis on first-order effects: alpha is a first-order effect. Higher-order effects are nice, but first-order effects are already so hard to detect that higher-order ones are not worth the additional parameters and wasted data points.

Bayesian: with a Bayesian model you understand all the assumptions that go into it.
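The post doesn’t name the paper, so the following is not the author’s algorithm. As a hedged illustration of several listed features (analytic updates, online learning, adaptive coefficients, adaptive variance, a prediction-variance output, and exponential discount factors as the only tuning knobs), here is a minimal pure-Python sketch of a textbook discount dynamic linear model in the style of West and Harrison. The simplifications are assumptions: a single output with a scalar noise variance (not the inverse Wishart covariance mentioned above), random-walk coefficients, and the hypothetical class name `DiscountDLM` with parameters `delta` and `beta`.

```python
class DiscountDLM:
    """Online Bayesian regression y_t = F_t . theta_t + noise (hypothetical sketch).

    delta: discount factor for coefficient drift, 0 < delta <= 1.
    beta:  discount factor for the noise variance, 0 < beta <= 1.
    Smaller values forget the past faster.
    """

    def __init__(self, n_inputs, delta=0.99, beta=0.98, prior_var=100.0, prior_s=1.0):
        self.delta = delta
        self.beta = beta
        self.m = [0.0] * n_inputs  # posterior mean of the coefficients
        # Posterior scale matrix of the coefficients (vague diagonal prior).
        self.C = [[prior_var if i == j else 0.0 for j in range(n_inputs)]
                  for i in range(n_inputs)]
        self.n = 1.0      # effective number of observations behind the variance estimate
        self.d = prior_s  # discounted sum of squares; S = d / n

    @property
    def S(self):
        """Current estimate of the observation-noise variance."""
        return self.d / self.n

    def _prior(self, F):
        # Discounting inflates uncertainty because coefficients may have drifted.
        R = [[c / self.delta for c in row] for row in self.C]
        RF = [sum(r * x for r, x in zip(row, F)) for row in R]
        f = sum(mi * x for mi, x in zip(self.m, F))         # forecast mean
        Q = sum(x * rfx for x, rfx in zip(F, RF)) + self.S  # forecast variance
        return R, RF, f, Q

    def predict(self, F):
        """Return (forecast mean, forecast variance) for input vector F."""
        _, _, f, Q = self._prior(F)
        return f, Q

    def update(self, F, y):
        """Learn from one observation; return the forecast made before seeing y."""
        R, RF, f, Q = self._prior(F)
        e = y - f
        A = [rfx / Q for rfx in RF]  # adaptive gain: how far to move each coefficient
        S_prev = self.S
        # Discounted variance learning: old squared errors fade by a factor beta.
        self.n = self.beta * self.n + 1.0
        self.d = self.beta * self.d + S_prev * e * e / Q
        scale = self.S / S_prev
        self.m = [mi + ai * e for mi, ai in zip(self.m, A)]
        k = len(A)
        self.C = [[scale * (R[i][j] - A[i] * A[j] * Q) for j in range(k)]
                  for i in range(k)]
        return f, Q
```

`update` returns the one-step forecast and its variance before learning from `y`, so the variance can be used to decide how much to trust each prediction. Values of `delta` and `beta` closer to 1 remember longer; smaller values adapt faster after a regime change.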

What the Digital Brains of the Future Might Be Like

An entrepreneur and long-time neuroscience hobbyist attempts to merge his two loves.

Alexis C. Madrigal - June 20, 2013

It is the rare entrepreneur who hits it truly big twice. Those who do -- such as Ev Williams, Ted Turner, and Elon Musk -- tend to stay within the original industry that made them. In recent memory, Steve Jobs sticks out for his success in entertainment (Pixar) and computing (Apple).

Which is what makes Jeff Hawkins so intriguing. Having founded Palm (of Pilot fame) and sold it, Hawkins turned his attention back to his long-time hobby... neuroscience. And now he's got another company, Grok, that tries to apply what he learned about neurons and brain processes to the data problems that companies have. While technology has been Hawkins' job for most of his adult life, it's clear that the brain is his passion. His book detailing his synthesis of neuroscience research, On Intelligence, received unexpectedly great reviews from the research community. Nobel laureate Eric Kandel even blurbed the book, calling it "a must-read for everyone who is curious about the brain and wonders how it works."

We spoke at the company's modest offices in Redwood City for a Q&A that was published in this month's magazine.

Here, you can find the extended remix.

What is Grok?

Grok is software that helps companies take automated action from streaming data. It does this by finding complex patterns in machine-generated data and making predictions. It might use smart-meter data to predict energy needs, or data from complex machinery to predict equipment failures. The underlying technology is based

So how does it actually work?

Grok is self-learning -- it finds patterns in data without human intervention. Feed Grok streams of data, and it automatically models the data the way a human analyst might -- by understanding which data streams are useful, trying to represent the data, and tuning complex algorithm parameters to improve results. Because it's automated, Grok is ideal for analyzing thousands of data streams. Grok also learns continuously. Unlike most other analytics techniques, Grok learns from every data point, versus having to be retrained. No analyst needs to make a decision about when to take models offline and update them.

How do most people work with data now?

What most people do today is put data in big databases and analyze the correlations. Say you have 1 billion users on Facebook, and you're trying to figure out what advertisement to feed to 20 percent of them. You want one big model on all these data. What we do is different: Say someone has 10,000 smart meters, and they're trying to figure out what energy consumption is going to be two hours from now. We build 10,000 models. You can't have a data analyst doing that. If you want to model every machine in a factory or every windmill in a windmill farm, it's all about automation. We build lots of little models -- that's the future of data.
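As a rough illustration of the “lots of little models” idea (not Grok’s actual technology, which this excerpt doesn’t describe), here is a hypothetical Python sketch that keeps one tiny online model per stream. A model is created automatically the first time a stream ID appears and is updated with every data point rather than retrained. The per-stream model is just an exponentially weighted mean and variance, chosen for brevity; the class names are made up.

```python
from collections import defaultdict


class EWMAForecaster:
    """Tiny online model for one stream: exponentially weighted mean and variance."""

    def __init__(self, alpha=0.1):
        self.alpha = alpha  # weight on the newest point; higher adapts faster
        self.mean = None
        self.var = 0.0

    def update(self, x):
        if self.mean is None:  # first point initializes the mean
            self.mean = x
            return
        diff = x - self.mean
        incr = self.alpha * diff
        self.mean += incr
        self.var = (1 - self.alpha) * (self.var + diff * incr)

    def predict(self):
        return self.mean  # naive forecast: the smoothed level


class StreamModels:
    """One independent model per stream ID (e.g. per smart meter)."""

    def __init__(self, alpha=0.1):
        self.models = defaultdict(lambda: EWMAForecaster(alpha))

    def observe(self, stream_id, value):
        self.models[stream_id].update(value)  # learns from every data point

    def forecast(self, stream_id):
        model = self.models.get(stream_id)  # .get does not create a new model
        return None if model is None else model.predict()
```

With 10,000 smart meters, `observe` would simply be called with each meter’s ID and reading as it arrives; no analyst decides when to rebuild a model. Calling `forecast` on an unseen ID returns `None`.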