#sql table alias



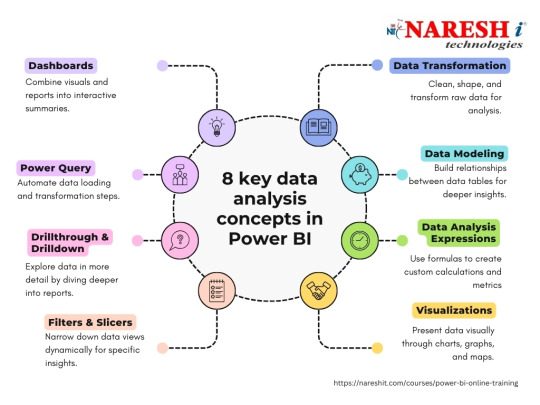

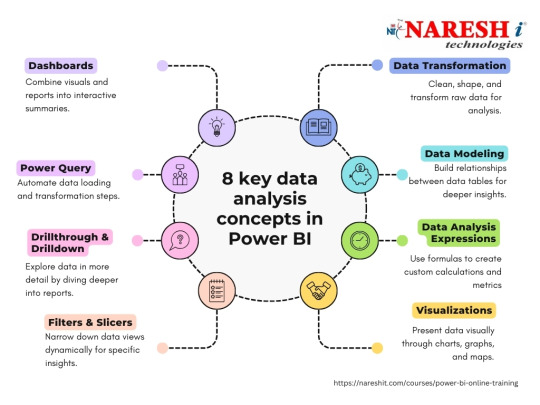

Unleash the Power of Data with Power BI Online Training at Naresh-IT!

Enroll now: https://nareshit.com/courses/power-bi-online-training

Course Overview

Naresh IT offers top-notch Power BI training, both online and in the classroom, aimed at equipping participants with an in-depth grasp of Microsoft Power BI, a premier business intelligence and data visualization platform. Our course delves into crucial facets of data analysis, visualization, and reporting utilizing Power BI. Through hands-on sessions, students will master the creation of dynamic dashboards, data source connectivity, and the extraction of actionable insights. Join Naresh IT for unrivaled expertise in Power BI.

Learn software skills with real experts, either in live classes with videos or without videos, whichever suits you best.

Description

The Power BI course begins with an introduction to business intelligence and the role of Power BI in transforming raw data into meaningful insights. Participants will learn about the Power BI ecosystem, including Power BI Desktop, Power BI Service, and Power BI Mobile. The course covers topics such as data loading, data transformation, creating visualizations, and sharing reports. Practical examples, hands-on projects, and real-world scenarios will be used to reinforce theoretical concepts.

Course Objectives

The primary objectives of the Power BI course are as follows:

Introduction to Business Intelligence and Power BI: Provide an overview of business intelligence concepts and the features of Power BI.

Power BI Ecosystem: Understand the components of the Power BI ecosystem, including Power BI Desktop, Service, and Mobile.

Data Loading and Transformation: Learn the process of loading data into Power BI and transforming it for analysis and visualization.

Data Modeling: Gain skills in creating data models within Power BI to establish relationships and hierarchies.

Creating Visualizations: Explore the various visualization options in Power BI and create interactive and informative reports and dashboards.

Advanced Analytics: Understand how to leverage advanced analytics features in Power BI, including DAX (Data Analysis Expressions) for calculations.

Power BI Service: Learn about the cloud-based service for sharing, collaborating, and publishing Power BI reports.

Data Connectivity: Explore the options for connecting Power BI to various data sources, including databases, cloud services, and Excel.

Sharing and Collaboration: Understand how to share Power BI reports with others, collaborate on datasets, and use workspaces.

Security and Compliance: Explore security measures and compliance considerations when working with sensitive data in Power BI.

Prerequisites

Basic understanding of data analysis concepts.

Familiarity with Microsoft Excel and its functions.

Knowledge of relational databases and SQL.

Understanding of data visualization principles.

Awareness of business intelligence (BI) concepts and tools.

Experience with basic data modeling and transformation techniques.

Course Curriculum

SQL (Structured Query Language)

What is SQL?

What is Database?

Difference between SQL and Database

Types of SQL Commands

Relationships in SQL

Comments in SQL

Alias in SQL

Database Commands

Datatypes in SQL

Table Commands

Constraints in SQL

Operators in SQL

Clauses in SQL

Functions in SQL

JOINS

Set operators

Sub Queries

Views

Synonyms

Case Statements

Window Functions

Introduction to Power BI

Power BI Introduction

Power BI Desktop (Power Query, Power Pivot, Power View, Data Modelling)

Power BI Service

Flow of Work in Power BI

Power BI Architecture

Power BI Desktop Installation

Installation through Microsoft Store

Download and Installation of Power BI Desktop

Power Query Editor / Power Query

Overview of Power Query Editor

Introduction to Power Query

UI of Power Query Editor

How to Open Power Query Editor

File Tab

Inbuilt Column Transformations

Inbuilt Row Transformations

Query Options

Home Tab Options

Transform Tab Options

Add Column Tab

Combine Queries (Merge and Append Queries)

View Tab Options

Tools Tab Options

Help Tab Options

Filters in Power Query

Data Modelling / Model View

What is an in-memory columnar database? Its advantages

What is a traditional database?

Difference between in-memory columnar databases and traditional databases

xVelocity In-memory Analytics Engine (VertiPaq Engine)

Data Connectivity modes in Power BI

What is Data Modelling?

What are relationships?

Types of Relationships/Cardinalities

One-to-One, One-to-many, Many-to-One, Many-to-Many

Why do we need a Relationship?

How to create a relationship in Power BI

Edit existing relationship

Delete relationship

AutoDetect Relationship

Make Relationship Active or Inactive

Cross filter direction (Single, Both)

Assume Referential Integrity

Apply Security Filter in Both Directions

Dimension Column, Fact Column

Dimension table, Fact Table

What is Schema?

Types of Schemas and Advantages

Power View / Report View

Introduction to Power View

What are visualizations, and why use them?

UI of Report View/Power View

Difference between Numeric data, Categorical data, Series of data

Difference between Quantitative data and Qualitative data

Categorical data Visuals

Numeric and Series of Data

Tabular Data

Geographical Data

KPI Data

Filtering data

Filters in Power View

Drill Reports

Visual Interactions

Grouping

Sorting

Bookmarks in Power BI

Selection Pane in Power BI

Buttons in Power BI

Tooltips

Power BI Service

Power BI Architecture

How to sign in to a Power BI Service account

Power BI Licences (Pro & Premium Licences)

Team Collaboration in Power BI using Workspace

Sharing Power BI Content using Basic Sharing, Content Packs and Apps

Refreshing the Data Source

Deployment Pipelines

Row Level Security (RLS)

#PowerBI#PowerBIDesktop#DataVisualization#DataAnalytics#BusinessIntelligence#PowerBIAI#DataStorytelling


Homework 5 Managing Data(bases) using SQL solved

Objective: Create SELECT statements involving multiple tables by using joins. Problem 1: Create a query displaying the employee_id, start_date, end_date and department_name using the old SQL join syntax (Where clause). Alias the departments table with d and the job_history table with jh. Order it by employee_id and start_date. Problem 2: Rewrite the previous query using the new SQL join syntax…
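Here is a minimal sketch of Problems 1 and 2. It runs against SQLite through Python's built-in sqlite3 module. It assumes, hypothetically, that job_history and departments share a department_id column, as in the common HR sample schema. The rows are made up. Both queries use the required aliases d and jh and should return the same rows.

```python
import sqlite3

# Hypothetical sample data: only the columns named in the homework are modeled,
# plus an assumed department_id join key.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE departments (department_id INTEGER PRIMARY KEY, department_name TEXT);
CREATE TABLE job_history (
    employee_id INTEGER, start_date TEXT, end_date TEXT, department_id INTEGER
);
INSERT INTO departments VALUES (10, 'Administration'), (20, 'Marketing');
INSERT INTO job_history VALUES
    (101, '2019-01-01', '2020-06-30', 20),
    (101, '2017-03-01', '2018-12-31', 10),
    (102, '2018-05-15', '2021-02-28', 10);
""")

# Problem 1 style: old (comma) join syntax, join condition in the WHERE clause.
old_syntax = """
SELECT jh.employee_id, jh.start_date, jh.end_date, d.department_name
FROM job_history jh, departments d
WHERE jh.department_id = d.department_id
ORDER BY jh.employee_id, jh.start_date
"""

# Problem 2 style: explicit JOIN ... ON in the FROM clause.
new_syntax = """
SELECT jh.employee_id, jh.start_date, jh.end_date, d.department_name
FROM job_history jh
JOIN departments d ON jh.department_id = d.department_id
ORDER BY jh.employee_id, jh.start_date
"""

old_rows = conn.execute(old_syntax).fetchall()
new_rows = conn.execute(new_syntax).fetchall()
for row in new_rows:
    print(row)
```

The old syntax joins on a WHERE condition. The new syntax moves that condition into the FROM clause. For an inner join, the results are the same.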


[Python] PySpark to M, SQL or Pandas

Some time ago I wrote an article on how to write, in pandas, some reference snippets from SQL or M (Power Query). While it was very useful at the time, today there is another language with a strong foothold in data analysis.

Spark has become the main player for reading data in data lakes. Although SparkSQL exists, I still wanted to bring these code analogies between PySpark, M, SQL and pandas, so that anyone familiar with one language can see how to perform the same action in the others.

First, let's agree on how to read this post.

Power Query runs in layers. Each line calls the previous one (which returns a table), producing this layered view. That is why, when the code reads #"Paso Anterior" ("previous step"), it refers to a table.

In Python, we assume "df" is an already loaded pandas DataFrame (pandas.DataFrame) and "spark_frame" is an already loaded PySpark DataFrame (spark.read).

The examples are listed in the following order: SQL, PySpark, pandas, Power Query.

In SQL:

SELECT TOP 5 * FROM table1  -- SQL Server syntax; MySQL and PostgreSQL use LIMIT 5

In PySpark:

spark_frame.limit(5)

In pandas:

df.head(5)

In Power Query:

Table.FirstN(#"Paso Anterior",5)

Count rows

SELECT COUNT(*) FROM table1

spark_frame.count()

len(df)  # or df.shape[0]; shape is an attribute, not a method

Table.RowCount(#"Paso Anterior")

Select columns

SELECT column1, column2 FROM table1

spark_frame.select("column1", "column2")

df[["column1", "column2"]]

#"Paso Anterior"[[column1], [column2]]
Or: Table.SelectColumns(#"Paso Anterior", {"column1", "column2"})

Filter rows

SELECT column1, column2 FROM table1 WHERE column1 = 2

spark_frame.filter("column1 = 2") # OR spark_frame.filter(spark_frame['column1'] == 2)

df[['column1', 'column2']].loc[df['column1'] == 2]

Table.SelectRows(#"Paso Anterior", each [column1] = 2)

Multiple row filters

SELECT * FROM table1 WHERE column1 > 1 AND column2 < 25

spark_frame.filter((spark_frame['column1'] > 1) & (spark_frame['column2'] < 25))
Or, with the OR and NOT operators:
spark_frame.filter((spark_frame['column1'] > 1) | ~(spark_frame['column2'] < 25))

df.loc[(df['column1'] > 1) & (df['column2'] < 25)]
Or, with the OR and NOT operators:
df.loc[(df['column1'] > 1) | ~(df['column2'] < 25)]

Table.SelectRows(#"Paso Anterior", each [column1] > 1 and [column2] < 25)
Or, with the OR and NOT operators:
Table.SelectRows(#"Paso Anterior", each [column1] > 1 or not ([column2] < 25))

Filters with complex operators

SELECT * FROM table1 WHERE column1 BETWEEN 1 and 5 AND column2 IN (20,30,40,50) AND column3 LIKE '%arcelona%'

from pyspark.sql.functions import col
spark_frame.filter((col('column1').between(1, 5)) & (col('column2').isin(20, 30, 40, 50)) & (col('column3').like('%arcelona%')))
# Or
spark_frame.where((col('column1').between(1, 5)) & (col('column2').isin(20, 30, 40, 50)) & (col('column3').contains('arcelona')))

df.loc[(df['column1'].between(1, 5)) & (df['column2'].isin([20, 30, 40, 50])) & (df['column3'].str.contains('arcelona'))]

Table.SelectRows(#"Paso Anterior", each ([column1] >= 1 and [column1] <= 5) and List.Contains({20,30,40,50}, [column2]) and Text.Contains([column3], "arcelona"))
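To check how the combined filter above behaves, here is a small sketch using SQLite through Python's sqlite3 module, with made-up rows in table1. BETWEEN includes both bounds, which is why the M version uses >= and <=. In SQLite, LIKE is case-insensitive for ASCII letters by default. By contrast, pandas str.contains, PySpark like and M Text.Contains are case-sensitive by default. The '%arcelona%' pattern sidesteps the B/b difference.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE table1 (column1 INTEGER, column2 INTEGER, column3 TEXT)")
conn.executemany(
    "INSERT INTO table1 VALUES (?, ?, ?)",
    [
        (1, 20, "Barcelona"),  # column1 on the lower bound: BETWEEN keeps it
        (5, 30, "barcelona"),  # upper bound, lower-case 'b'
        (6, 40, "Barcelona"),  # outside BETWEEN 1 AND 5
        (3, 25, "Barcelona"),  # column2 not in the IN list
        (2, 50, "Madrid"),     # fails the LIKE pattern
    ],
)

rows = conn.execute(
    """
    SELECT * FROM table1
    WHERE column1 BETWEEN 1 AND 5
      AND column2 IN (20, 30, 40, 50)
      AND column3 LIKE '%arcelona%'
    ORDER BY column1
    """
).fetchall()
print(rows)  # [(1, 20, 'Barcelona'), (5, 30, 'barcelona')]
```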

Join tables

SELECT t1.column1, t2.column1 FROM table1 t1 LEFT JOIN table2 t2 ON t1.column_id = t2.column_id

It is good practice to give same-named columns distinct aliases, like this:

spark_frame1.join(spark_frame2, spark_frame1["column_id"] == spark_frame2["column_id"], "left").select(spark_frame1["column1"].alias("column1_df1"), spark_frame2["column1"].alias("column1_df2"))

In pandas, two functions can help with this: merge and join.

df_joined = df1.merge(df2, on='column_id', how='left', suffixes=('_df1', '_df2'))
# Or, with join (df2 is indexed by the key first):
df_joined = df1.join(df2.set_index('column_id'), on='column_id', how='left', lsuffix='_df1', rsuffix='_df2')
Then select the two columns:
df_joined[['column1_df1', 'column1_df2']]

In Power Query, we first select the columns we need (including the join key) and then add the column from the second table.

#"Origen" = #"Paso Anterior"[[column1_t1], [column_t1_id]],
#"Paso Join" = Table.NestedJoin(#"Origen", {"column_t1_id"}, table2, {"column_t2_id"}, "Prefijo", JoinKind.LeftOuter),
#"Expansion" = Table.ExpandTableColumn(#"Paso Join", "Prefijo", {"column1_t2"}, {"Prefijo_column1_t2"})

Group By

SELECT column1, count(*) FROM table1 GROUP BY column1

from pyspark.sql.functions import count
spark_frame.groupBy("column1").agg(count("*").alias("count"))

df.groupby('column1').size()  # rows per group, like COUNT(*)

Table.Group(#"Paso Anterior", {"column1"}, {{"Alias de count", each Table.RowCount(_), type number}})

Filtering a grouped result

SELECT store, sum(sales) FROM table1 GROUP BY store HAVING sum(sales) > 1000

from pyspark.sql.functions import sum as spark_sum
spark_frame.groupBy("store").agg(spark_sum("sales").alias("total_sales")).filter("total_sales > 1000")

df_grouped = df.groupby('store')['sales'].sum()
df_grouped.loc[df_grouped > 1000]

#"Grouping" = Table.Group(#"Paso Anterior", {"store"}, {{"Alias de sum", each List.Sum([sales]), type number}}),
#"Final" = Table.SelectRows(#"Grouping", each [Alias de sum] > 1000)

Sort descending by a column

SELECT * FROM table1 ORDER BY column1 DESC

spark_frame.orderBy("column1", ascending=False)

df.sort_values(by=['column1'], ascending=False)

Table.Sort(#"Paso Anterior",{{"column1", Order.Descending}})

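Append one table to another with the same structure

SELECT * FROM table1 UNION ALL SELECT * FROM table2

spark_frame1.union(spark_frame2)

In pandas there are two well-known options: the append method and the concat function. DataFrame.append was deprecated and then removed in pandas 2.0, so use concat. PySpark's union, pd.concat and Table.Combine all keep duplicate rows, which is why the SQL equivalent is UNION ALL rather than UNION.

pd.concat([df1, df2])  # df1.append(df2) only works in pandas < 2.0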

Table.Combine({table1, table2})
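This quick sketch shows why the pandas, PySpark and Power Query versions line up with UNION ALL: UNION removes duplicate rows, while UNION ALL keeps them. It uses SQLite via Python's sqlite3 with hypothetical rows.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE table1 (id INTEGER, name TEXT);
CREATE TABLE table2 (id INTEGER, name TEXT);
INSERT INTO table1 VALUES (1, 'a'), (2, 'b');
INSERT INTO table2 VALUES (2, 'b'), (3, 'c');
""")

# UNION removes duplicate rows across both inputs.
union_rows = conn.execute(
    "SELECT * FROM table1 UNION SELECT * FROM table2 ORDER BY id"
).fetchall()

# UNION ALL keeps every row, like union(), pd.concat and Table.Combine.
union_all_rows = conn.execute(
    "SELECT * FROM table1 UNION ALL SELECT * FROM table2 ORDER BY id"
).fetchall()

print(union_rows)      # 3 rows
print(union_all_rows)  # 4 rows; (2, 'b') appears twice
```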

Transformations

The following transformations compare PySpark, pandas and Power Query directly, since they are less common in a query language such as SQL. The results may not be identical, but they are similar enough for the task at hand.

Profile a table's contents

spark_frame.summary()

df.describe()

Table.Profile(#"Paso Anterior")

Check a column's distinct values

spark_frame.groupBy("column1").count().show()

df.value_counts("column1")

Table.Profile(#"Paso Anterior")[[Column],[DistinctCount]]

Build a test table with hand-entered data

spark_frame = spark.createDataFrame([(1, "Boris Yeltsin"), (2, "Mikhail Gorbachev")], ["CustomerID", "Name"])

df = pd.DataFrame([[1, "Boris Yeltsin"], [2, "Mikhail Gorbachev"]], columns=["CustomerID", "Name"])

Table.FromRecords({[CustomerID = 1, Name = "Boris Yeltsin"], [CustomerID = 2, Name = "Mikhail Gorbachev"]})

Remove a column

spark_frame.drop("column1")

df.drop(columns=['column1'])  # or df.drop(['column1'], axis=1)

Table.RemoveColumns(#"Paso Anterior",{"column1"})

Apply a transformation to a column

from pyspark.sql.functions import col
spark_frame.withColumn("column1", col("column1") + 1)

df['column1'] = df['column1'] + 1

Table.TransformColumns(#"Paso Anterior", {{"column1", each _ + 1, type number}})

That completes this long tour of queries and transformations. It should make working in pure code with PySpark, SQL, pandas and Power Query easier: if you know one, you can use the others.

#spark#pyspark#python#pandas#sql#power query#powerquery#notebooks#ladataweb#data engineering#data wrangling#data cleansing


Create SELECT statements involving multiple tables by using joins

Problem 1: Create a query displaying the employee_id, start_date, end_date and department_name using the old SQL join syntax (Where clause). Alias the departments table with d and the job_history table with jh. Order it by employee_id and start_date. Problem 2: Rewrite the previous query using the new SQL join syntax (From clause). Problem 3: Rewrite the previous query using the following syntax…


OPTIMIZER USES SQL PROFILE WITH BAD PLAN EVEN THOUGH GOOD PLAN IS AVAILABLE

BASE STATISTICAL INFORMATION
Table Stats::
  Table: DSPM  Alias: DSPM
  #Rows: 291053  SSZ: 0  LGR: 0  #Blks: 5778  AvgRowLen: 114.00  NEB: 0  ChainCnt: 0.00  ScanRate: 0.00  SPC: 0  RFL: 0  RNF: 0  CBK: 0  CHR: 0  KQDFLG: 1
  #IMCUs: 0  IMCRowCnt: 0  IMCJournalRowCnt: 0  #IMCBlocks: 0  IMCQuotient: 0.000000
Index Stats::
  Index: DTYPE  Col#: 3  LVLS: 2  #LB:…


Introduction to SQL


One of the key concepts in data management is the programming language SQL (Structured Query Language). This language is widely known and used for database management by individuals and companies around the world. SQL is used to perform create, read, update, and delete (CRUD) tasks on databases, making it easy to store and query data. SQL was developed in the 1970s by Raymond Boyce and Donald Chamberlin. It was initially created for use within IBM's database management system but has since become an ANSI/ISO standard supported by many database systems. MySQL, an open-source database system now owned by Oracle, lets anyone write their own SQL to perform queries, which makes it a great place to start!

Types of Commands

There are three main types of commands in SQL:

Data Definition Language (DDL): DDL defines a database's structure through the CREATE, DROP, and ALTER TABLE commands, including establishing keys (primary, foreign, etc.).

Data Control Language (DCL): DCL controls who has access to the data (e.g., GRANT and REVOKE).

Data Manipulation Language (DML): DML commands are used to query and modify the data (SELECT, INSERT, UPDATE, DELETE).

Steps to Create a Table

The first step in creating a table is planning which columns it will contain and the data type of each. Once a plan is in place, the CREATE TABLE command is used, listing each column with its type and length. Next, identify which columns allow NULL values and which columns must be unique. Finally, identify all primary and foreign keys. INSERT INTO commands are then used to fill the empty table with rows of data. If a table needs to be changed, use the ALTER TABLE command. If it needs to be deleted, use DROP TABLE. It is sometimes helpful to drop a table at the beginning of a session (for example with DROP TABLE IF EXISTS) in case a table with the same name has already been created.
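The steps above might look like this in a minimal sketch using SQLite through Python's sqlite3 module. The customers and orders tables are hypothetical. Two SQLite-specific assumptions apply: VARCHAR lengths are not enforced, and foreign keys are only checked after PRAGMA foreign_keys = ON.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces FKs only when enabled

conn.executescript("""
-- Drop first so the script can be rerun safely.
DROP TABLE IF EXISTS orders;
DROP TABLE IF EXISTS customers;

CREATE TABLE customers (
    customer_id INTEGER PRIMARY KEY,
    email       VARCHAR(100) NOT NULL UNIQUE,
    name        VARCHAR(50)  NOT NULL
);

CREATE TABLE orders (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL,
    amount      DECIMAL(10, 2),
    FOREIGN KEY (customer_id) REFERENCES customers (customer_id)
);
""")

# INSERT INTO fills the empty tables with rows.
conn.execute("INSERT INTO customers VALUES (1, 'ana@example.com', 'Ana')")
conn.execute("INSERT INTO orders VALUES (100, 1, 25.50)")

# ALTER TABLE changes the structure of an existing table.
conn.execute("ALTER TABLE customers ADD COLUMN city VARCHAR(50)")

# The foreign key rejects an order for a customer that does not exist.
try:
    conn.execute("INSERT INTO orders VALUES (101, 99, 10.00)")
    fk_rejected = False
except sqlite3.IntegrityError:
    fk_rejected = True

columns = [row[1] for row in conn.execute("PRAGMA table_info(customers)")]
print(columns)      # ['customer_id', 'email', 'name', 'city']
print(fk_rejected)  # True
```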

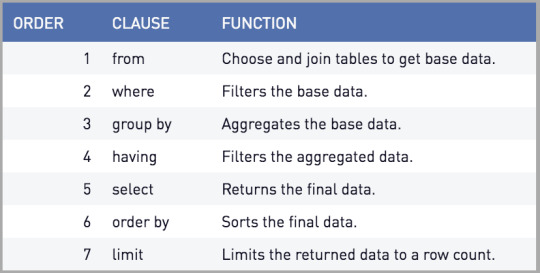

SQL Query Hierarchy

Querying data is essentially asking the database a question, or asking it for a specified output. Nearly all DML queries begin with the same commands. First, identify which columns the output should contain by listing the column names after SELECT, separated by commas. Aggregate functions such as SUM or COUNT may be used here as well. If the SELECT list mixes aggregates with non-aggregated columns, those non-aggregated columns must appear in a GROUP BY (expanded upon later). Next, identify the table the columns come from by writing its name after FROM. An alias may be given to the table if multiple tables are being joined, or just to stay organized. FROM is also where any joined tables are listed, together with the columns they are joined on. SELECT and FROM are the only two clauses required to query data.

To filter rows on conditions that do not involve an aggregate, the WHERE clause comes next. If aggregate functions are used in the SELECT list, a GROUP BY clause follows WHERE; one or more columns can be used to group the data. Next, a HAVING clause filters the groups when the condition is based on an aggregate, such as AVG. To return the results sorted, use ORDER BY, which sorts in ascending order by default. For descending order, write DESC after the column name. Finally, LIMIT restricts the number of rows returned.

These are the steps one takes when writing a query. However, the database processes the clauses in a different logical order: FROM, WHERE, GROUP BY, HAVING, SELECT, ORDER BY, LIMIT. It is also important to note that all these commands query existing data, so the tables must already be created to perform these operations.
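This small sketch shows the clauses in writing order. It uses SQLite via Python's sqlite3 and a hypothetical sales table. SELECT is logically processed after WHERE, GROUP BY and HAVING. For that reason, the column alias total_sales is used in ORDER BY, while the HAVING clause repeats SUM(s.amount). Most engines do not allow a SELECT alias in WHERE.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE sales (store TEXT, region TEXT, amount REAL);
INSERT INTO sales VALUES
    ('A', 'North', 400), ('A', 'North', 700),
    ('B', 'South', 300), ('B', 'South', 200),
    ('C', 'North', 900), ('C', 'North', 500),
    ('D', 'East', 2000),
    ('E', 'South', 1200);
""")

query = """
SELECT s.store, SUM(s.amount) AS total_sales  -- written first, evaluated late
FROM sales AS s                               -- table alias s
WHERE s.region <> 'East'                      -- row filter, before grouping
GROUP BY s.store
HAVING SUM(s.amount) > 1000                   -- filter on the aggregate
ORDER BY total_sales DESC                     -- DESC follows the column
LIMIT 2
"""
rows = conn.execute(query).fetchall()
print(rows)  # [('C', 1400.0), ('E', 1200.0)]
```

Store D is removed by WHERE before grouping. Store B (500) is removed by HAVING. Store A (1100) passes HAVING but is cut by LIMIT 2 after sorting.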

Is it Worth Learning SQL?

The short answer is: absolutely! SQL is one of the most widely used languages for working with data; it is nearly universal. It is also relatively easy to learn compared with general-purpose programming languages, and it is incredibly powerful when dealing with databases. The steps provided above cover only simple queries, though they are very useful. Much more advanced queries, such as nested queries, are possible with SQL. If you want to get started, DataCamp and other online platforms offer excellent beginner courses. Because MySQL is open source and easy to use, it is a great place to practice.


Dynamic Alias Names in SQL:

How can you return the dynamic alias name for the table columns?
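The video's own approach is not shown here; it is likely T-SQL dynamic SQL. As an alternative, this Python sketch with SQLite builds the alias at run time. Query placeholders can bind values but not identifiers. The alias is therefore quoted by hand (wrapped in double quotes, with embedded quotes doubled) before being spliced into the SQL text. The sales table, the helper names and the year value are all hypothetical.

```python
import sqlite3


def quote_identifier(name: str) -> str:
    """Quote an SQL identifier: wrap in double quotes, double any embedded quotes."""
    return '"' + name.replace('"', '""') + '"'


def total_with_alias(conn: sqlite3.Connection, alias: str) -> sqlite3.Cursor:
    # "?" placeholders bind values only, never identifiers, so the alias
    # is quoted and inserted into the SQL text itself.
    sql = f"SELECT SUM(amount) AS {quote_identifier(alias)} FROM sales"
    return conn.execute(sql)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (amount REAL)")
conn.executemany("INSERT INTO sales VALUES (?)", [(10,), (32,)])

year = 2024  # hypothetical value that drives the alias
cur = total_with_alias(conn, f"Total Sales {year}")
print(cur.description[0][0], cur.fetchone()[0])  # Total Sales 2024 42.0
```

Quoting the identifier, rather than pasting raw user text into the query, keeps a stray quote in the alias from breaking or injecting into the SQL.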

#sqltrick#sqlquery#dynamicsql#sqltips#sqlinterviewquestionsandanswers#interviewquestionsandanswers#sqlinterview#techpointfundamentals#techpointfunda#techpoint#Youtube


SQL Aliases

Aliases are temporary names given to a table or column for a specific SQL query. An alias lets you refer to a column or table by a different name, and the new name exists only for the duration of that query.

Syntax

Column alias syntax: SELECT columnname AS aliasname FROM tablename WHERE condition;

Table alias syntax: SELECT column1, column2, ... FROM tablename AS aliasname WHERE condition;

Example For…
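Here is a minimal sketch of both kinds of alias. It runs in SQLite via Python's sqlite3 with hypothetical employees and departments tables. Oracle does not accept AS before a table alias (write FROM employees e there). Most other engines accept both forms.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE employees (emp_id INTEGER, first_name TEXT, dept_id INTEGER);
CREATE TABLE departments (dept_id INTEGER, dept_name TEXT);
INSERT INTO employees VALUES (1, 'Asha', 10), (2, 'Ravi', 20);
INSERT INTO departments VALUES (10, 'Sales'), (20, 'Finance');
""")

cur = conn.execute("""
    SELECT e.first_name AS employee,    -- column alias
           d.dept_name  AS department   -- column alias
    FROM employees AS e                 -- table alias
    JOIN departments AS d               -- table alias
      ON e.dept_id = d.dept_id
    ORDER BY employee                   -- column aliases work in ORDER BY
""")
columns = [c[0] for c in cur.description]
rows = cur.fetchall()
print(columns)  # ['employee', 'department']
print(rows)     # [('Asha', 'Sales'), ('Ravi', 'Finance')]
```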


#alias#alias column#alias in oracle#alias in sql#alias in sql in hindi#alias in sql server#alias name#alias nedir#alias sql#alias sql ita#alias table#aliases#column alias#creando alias#mysql alias#oracle alias#oracle alias name#spalten alias#sql alias#sql alias as#sql alias ita#sql alias join#sql alias syntax#sql aliases#sql and alias#sql column alias#sql server alias#sql server aliases#sql table alias#table alias


100%OFF | SQL Server Interview Questions and Answers

If you are looking to crack SQL Server interviews, you are in the right course.

Working in SQL Server and cracking SQL Server interviews are different ball games. SQL Server professionals normally work on repetitive tasks such as backups, custom reporting and so on. So when they are asked simple questions like normalization or the types of triggers, they FUMBLE.

It's not that they do not know the answers; they just need a revision. That's exactly what this course does. It prepares you for a SQL Server interview in 2 days.

Below is the list of questions, with answers, demonstrations and detailed explanations. Happy learning. Happy job hunting.

SQL Interview Questions & Answers – Part 1 :-

Question 1 :- Explain normalization.

Question 2 :- How do you implement normalization?

Question 3 :- What is denormalization?

Question 4 :- Explain OLTP vs OLAP.

Question 5 :- Explain 1st, 2nd and 3rd normal form.

Question 6 :- Primary key vs unique key?

Question 7 :- Differentiate between Char and Varchar.

Question 8 :- Differentiate between Char and NChar.

Question 9 :- What's the size of Char vs NChar?

Question 10 :- What is the use of an index?

Question 11 :- How does it make search faster?

Question 12 :- What are the two types of indexes?

Question 13 :- Clustered vs non-clustered index

Question 14 :- Function vs stored procedure

Question 15 :- What are triggers and why do you need them?

Question 16 :- What are the types of triggers?

Question 17 :- Differentiate between AFTER and INSTEAD OF triggers.

Question 18 :- What is the need for Identity?

Question 19 :- Explain transactions and how to implement them.

Question 20 :- What are inner joins?

Question 21 :- Explain left join.

Question 22 :- Explain right join.

Question 23 :- Explain full outer joins.

Question 24 :- Explain cross joins.

SQL Interview Questions & Answers – Part 2 :-

Question 25 :- Why do we need UNION?

Question 26 :- Differentiate between UNION and UNION ALL.

Question 27 :- Can we have unequal columns in a UNION?

Question 28 :- Can columns have different data types in a UNION?

Question 29 :- Which aggregate functions have you used?

Question 30 :- When to use GROUP BY?

Question 31 :- Can we select a column which is not part of the GROUP BY?

Question 32 :- What is the HAVING clause?

Question 33 :- HAVING clause vs WHERE clause

Question 34 :- How can we sort records?

Question 35 :- What's the default sort order?

Question 36 :- How can we remove duplicates?

Question 37 :- How do you select the top X records?

Question 38 :- How to handle NULLs?

Question 39 :- What is the use of wildcards?

Question 40 :- What is the use of an alias?

Question 41 :- How to write a CASE statement?

Question 42 :- What are self-referencing tables?

Question 43 :- What is a self join?

Question 44 :- Explain the BETWEEN clause.

SQL Interview Questions & Answers – Part 3 :-

Question 45 :- Explain subqueries.

Question 46 :- Can an inner subquery return multiple results?

Question 47 :- What is a correlated query?

Question 48 :- Differentiate between joins and subqueries.

Question 49 :- Performance of joins vs subqueries?

SQL Interview Questions & Answers – Part 4 :-

Question 50 :- Find the Nth highest salary in SQL.

SQL Interview Questions & Answers – Part 5 :-

Question 51 :- Select the Nth highest salary using correlated queries.

Question 52 :- Select the top Nth using T-SQL.

Question 53 :- Performance comparison of all the methods.



Integrating Power BI with Azure Synapse Analytics for real-time data insights-NareshIT


Homework 3 Managing Data(bases) using SQL solved

Objective: Create SELECT queries using basic data types and associated functions. Problem 1: Create a query displaying the employee_id and the last name from the employees table. Create a calculated column with an alias of Weekly Salary (case and space required). Assume that the salary column contains monthly salary and that a year has 52 weeks. Round the weekly salary result to 2 decimals. Display…
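Here is a sketch of Problem 1 in SQLite via Python's sqlite3, with two made-up employees rows. An alias that contains a space must be quoted. Double quotes are the SQL-standard identifier quote; SQL Server also accepts [Weekly Salary], and MySQL uses backticks by default. Dividing by 52.0 avoids integer division in engines where salary is stored as an integer. The rest of the problem statement is truncated, so only the weekly-salary column is shown.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE employees (employee_id INTEGER, last_name TEXT, salary REAL)"
)
# Hypothetical rows; salary is a monthly amount, as the problem states.
conn.executemany(
    "INSERT INTO employees VALUES (?, ?, ?)",
    [(100, "King", 5200), (101, "Kochhar", 4000)],
)

cur = conn.execute("""
    SELECT employee_id,
           last_name,
           ROUND(salary * 12 / 52.0, 2) AS "Weekly Salary"  -- quoted: has a space
    FROM employees
    ORDER BY employee_id
""")
columns = [c[0] for c in cur.description]
rows = cur.fetchall()
print(columns)  # ['employee_id', 'last_name', 'Weekly Salary']
for row in rows:
    print(row)
```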


SQL Query Interview Questions

The function COUNT(email) returns the number of rows with a non-NULL value in the email field. The HAVING operator works in a similar way to WHERE, except that it is applied not to individual rows but to the groups produced by GROUP BY. The INSERT INTO ... SELECT statement copies data from one table and inserts it into another; the data types in the source and target tables must match. SQL aliases are used to give a temporary name to a table or column. Constraints are rules that restrict the type of data that can be stored in a table. An operation on the data is not carried out if it would violate the constraints. Of course, this isn't an exhaustive list of the questions you might be asked, but it's a good starting point. We have also compiled 40 real probability and statistics interview questions asked by FANG and Wall Street firms. The very first step of analytics in many workflows involves quickly slicing and dicing data in SQL. That's why being able to write basic queries efficiently is a very important skill. Although many might think that SQL simply involves SELECTs and JOINs, there are many other operators and details involved in effective SQL workflows. Data Control Language (DCL) statements set privileges, roles, and permissions for different users of the database (e.g., the GRANT and REVOKE statements). Query statements retrieve information from the database that matches the parameters of the request (e.g., the SELECT statement). Data Manipulation Language (DML) statements change the records in a database (e.g., the INSERT, UPDATE, and DELETE statements). The SELECT statement is used to select data from a database. Given the tables above, write a query that calculates the total commission per salesperson. A LEFT OUTER JOIN B is equivalent to B RIGHT OUTER JOIN A, with the columns in a different order. The INSERT statement adds new rows of data to a table.
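Here is a minimal sketch of three points above: COUNT on a nullable column, HAVING on groups, and INSERT INTO ... SELECT. It uses SQLite via Python's sqlite3 with a hypothetical customers table.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (id INTEGER, email TEXT, city TEXT);
INSERT INTO customers VALUES
    (1, 'a@example.com', 'Pune'),
    (2, NULL,            'Pune'),
    (3, 'c@example.com', 'Delhi');
CREATE TABLE customers_archive (id INTEGER, email TEXT, city TEXT);
""")

# COUNT(*) counts every row; COUNT(email) skips rows where email is NULL.
count_all, count_email = conn.execute(
    "SELECT COUNT(*), COUNT(email) FROM customers"
).fetchone()

# HAVING filters the groups produced by GROUP BY (WHERE would filter rows first).
busy_cities = conn.execute("""
    SELECT city, COUNT(*) AS n
    FROM customers
    GROUP BY city
    HAVING COUNT(*) > 1
""").fetchall()

# INSERT INTO ... SELECT copies rows; the column lists must line up.
conn.execute("""
    INSERT INTO customers_archive (id, email, city)
    SELECT id, email, city FROM customers WHERE city = 'Pune'
""")
archived = conn.execute("SELECT COUNT(*) FROM customers_archive").fetchone()[0]

print(count_all, count_email, busy_cities, archived)  # 3 2 [('Pune', 2)] 2
```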
Non-clustered indexes, or simply indexes, are created outside the table. SQL Server supports 999 non-clustered indexes per table, and each non-clustered index can have up to 1023 columns. A non-clustered index does not support the text, ntext, and image data types. A clustered index sorts and stores the data rows in the table based on their key values. Database normalization is the process of organizing the fields and tables of a relational database to minimize redundancy and dependency. Normalization usually involves dividing large tables into smaller tables and defining relationships among them. Normalization is a bottom-up technique for database design. In this post, we share 65 SQL Server interview questions and their answers. SQL is evolving rapidly and is one of the most widely used query languages for data extraction and analysis from relational databases. Despite the rise of NoSQL in recent years, SQL is still making its way back to become the dominant interface for data extraction and analysis. Simplilearn has many courses in SQL that can help you gain foundational as well as in-depth knowledge of SQL and eventually become a SQL expert. There are many more advanced features, including creating stored procedures or SQL scripts and views, and setting permissions on database objects. Views are used for security purposes because they encapsulate the names of the underlying tables. The data is in a virtual table and is not stored permanently. Sometimes, for security purposes, access to the tables, table structures, and table relationships is not given to the database user. All they have is access to a view, without knowing which tables actually exist in the database. A clustered index can be defined only once per table in a SQL Server database, because the data rows can be sorted in only one order.
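
A small sketch of the view-as-security-layer idea and of an ordinary index, again with sqlite3 and a hypothetical employees table. SQLite has no GRANT statement and no clustered/non-clustered distinction, so this only shows a view hiding the salary column and a secondary index being chosen by the query planner; in SQL Server you would additionally grant users access to the view rather than to the base table.

```python
import sqlite3


def build_hr_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE employees (
            employee_id INTEGER PRIMARY KEY,
            last_name   TEXT NOT NULL,
            department  TEXT,
            salary      REAL
        );
        INSERT INTO employees VALUES
            (1, 'King', 'Exec', 24000),
            (2, 'Ernst', 'IT', 6000);

        -- The view exposes only non-sensitive columns. Users given access to
        -- the view do not need to know the base table or its salary column.
        CREATE VIEW employee_directory AS
            SELECT employee_id, last_name, department FROM employees;

        -- An ordinary secondary index to speed up lookups by last name.
        CREATE INDEX idx_employees_last_name ON employees (last_name);
        """
    )
    return conn


def view_columns(conn):
    cur = conn.execute("SELECT * FROM employee_directory")
    return [col[0] for col in cur.description]


def lookup_plan(conn, last_name):
    # EXPLAIN QUERY PLAN reports whether SQLite will use the index;
    # the last column of each row is the human-readable plan step.
    rows = conn.execute(
        "EXPLAIN QUERY PLAN SELECT * FROM employees WHERE last_name = ?",
        (last_name,),
    ).fetchall()
    return [row[-1] for row in rows]
```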
Text, ntext, and image data are not allowed as a clustered index key. An index is one of the most powerful techniques for working with large volumes of data. In Oracle, ROWID is an 18-character pseudocolumn associated with each row of a database table. A primary key that is created on more than one column is known as a composite primary key. Auto-increment lets a unique number be generated automatically whenever a new record is inserted into the table. It helps keep the primary key unique for each row or record. MySQL uses the AUTO_INCREMENT keyword (Oracle traditionally uses sequences, and identity columns since 12c), while SQL Server uses the IDENTITY keyword. Data integrity is the overall accuracy, completeness, and consistency of the data stored in a database.
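
Composite primary keys and auto-increment columns, sketched in SQLite, where the keyword is AUTOINCREMENT (MySQL's AUTO_INCREMENT and SQL Server's IDENTITY play the same role). The enrollments and orders tables are hypothetical.

```python
import sqlite3


def build_keys_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        -- Composite primary key: the PAIR (student_id, course_id) must be unique.
        CREATE TABLE enrollments (
            student_id INTEGER NOT NULL,
            course_id  INTEGER NOT NULL,
            PRIMARY KEY (student_id, course_id)
        );
        -- Auto-increment: SQLite assigns order_id when it is omitted.
        CREATE TABLE orders (
            order_id INTEGER PRIMARY KEY AUTOINCREMENT,
            item     TEXT
        );
        """
    )
    return conn


def enroll(conn, student_id, course_id):
    """Return True if inserted, False if the pair already exists."""
    try:
        conn.execute(
            "INSERT INTO enrollments (student_id, course_id) VALUES (?, ?)",
            (student_id, course_id),
        )
        return True
    except sqlite3.IntegrityError:
        return False


def add_order(conn, item):
    cur = conn.execute("INSERT INTO orders (item) VALUES (?)", (item,))
    return cur.lastrowid  # the generated order_id
```

Either column of the composite key may repeat on its own; only a repeated pair is rejected.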

An RDBMS is software that stores data in a collection of tables that are related through common fields between the tables' columns. Relational database management systems are among the best and most commonly used databases, so SQL skills are essential in most job roles. In this SQL interview questions and answers blog, you will learn the most frequently asked questions on SQL. Here's a transcript/blog post, and here's a link to the Zoom webinar. If you're eager to start solving problems and get solutions TODAY, subscribe to Kevin's DataSciencePrep program to get 3 problems emailed to you every week. Alternatively, you can create a database using SQL Server Management Studio: right-click Databases, choose New Database, and follow the wizard steps. These SQL interview questions and answers are not enough on their own to pass interviews conducted by top companies, so it is highly recommended to keep practicing your theoretical knowledge to improve your performance. Remember, "practice makes a man perfect".

Aliases are necessary when the query involves two or more tables, or columns with complex names. In this case, for convenience, aliases are used in the query. An SQL alias exists only for the duration of the query. INNER JOIN returns records that have matching values in both tables, i.e. the intersection of the tables. SQL constraints are defined when creating or modifying a table. Database tables alone are not enough to retrieve data efficiently when there is a large amount of data. To retrieve data quickly, we need to index the column in a table. For example, to maintain data integrity, numeric columns/cells should not accept alphabetic data.
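
A sketch of table aliases combined with an INNER JOIN, using hypothetical customers and orders tables in SQLite. The aliases c and o exist only while the query runs, and the join keeps only rows whose customer_id appears in both tables.

```python
import sqlite3


def build_shop_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, full_customer_name TEXT);
        CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
        INSERT INTO customers VALUES (1, 'Ada'), (2, 'Grace'), (3, 'Linus');
        -- Order 13 points at a customer that does not exist.
        INSERT INTO orders VALUES (10, 1, 25.0), (11, 1, 5.0), (12, 2, 40.0), (13, 99, 1.0);
        """
    )
    return conn


# c and o are table aliases; "name" is a column alias. All three exist
# only for the duration of this query.
ALIAS_JOIN_QUERY = """
    SELECT c.full_customer_name AS name, o.amount
    FROM customers AS c
    INNER JOIN orders AS o ON o.customer_id = c.customer_id
    ORDER BY o.order_id
"""
```

Linus (no orders) and order 13 (no matching customer) both drop out, which is the "intersection" behavior described above.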
A unique index ensures that the index key column has unique values, and it is applied automatically when a primary key is defined. If the unique index has multiple columns, then the combination of values in those columns must be unique. A full (outer) join returns all rows from both tables, matching them where possible and filling in NULLs where there is no match. It combines the results of both the left and right table records, and it can return very large result sets. A foreign key is used to link two tables together; it is a field that refers to the primary key of another table. Structured Query Language is a programming language for accessing and manipulating relational database management systems. SQL is widely used in popular RDBMSs such as SQL Server, Oracle, and MySQL. The smallest unit of execution in SQL is a query. A SQL query is used to select, update, and delete data. Although ANSI has established SQL standards, there are many different versions of SQL for different types of databases. However, to comply with the ANSI standard, they need to support at least the major commands and clauses such as DELETE, INSERT, UPDATE, WHERE, and so on.
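
A sketch of a multi-column unique index in SQLite, on a hypothetical bookings table: each (room, day) combination may appear only once, while either column on its own may repeat.

```python
import sqlite3


def build_bookings_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE bookings (room TEXT, day TEXT, guest TEXT);
        -- The COMBINATION of room and day must be unique.
        CREATE UNIQUE INDEX ux_bookings_room_day ON bookings (room, day);
        """
    )
    return conn


def book(conn, room, day, guest):
    """Return True if the booking was stored, False if (room, day) is taken."""
    try:
        conn.execute("INSERT INTO bookings VALUES (?, ?, ?)", (room, day, guest))
        return True
    except sqlite3.IntegrityError:
        return False
```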


How to set up command-line access to Amazon Keyspaces (for Apache Cassandra) by using the new developer toolkit Docker image

Amazon Keyspaces (for Apache Cassandra) is a scalable, highly available, and fully managed Cassandra-compatible database service. Amazon Keyspaces helps you run your Cassandra workloads more easily by using a serverless database that can scale up and down automatically in response to your actual application traffic. Because Amazon Keyspaces is serverless, there are no clusters or nodes to provision and manage. You can get started with Amazon Keyspaces with a few clicks in the console or a few changes to your existing Cassandra driver configuration.

In this post, I show you how to set up command-line access to Amazon Keyspaces by using the keyspaces-toolkit Docker image. The keyspaces-toolkit Docker image contains commonly used Cassandra developer tooling. The toolkit comes with the Cassandra Query Language Shell (cqlsh) and is configured with best practices for Amazon Keyspaces. The container image is open source and also compatible with Apache Cassandra 3.x clusters.

A command line interface (CLI) such as cqlsh can be useful when automating database activities. You can use cqlsh to run one-time queries and perform administrative tasks, such as modifying schemas or bulk-loading flat files. You also can use cqlsh to enable Amazon Keyspaces features, such as point-in-time recovery (PITR) backups, and to assign resource tags to keyspaces and tables. The following screenshot shows a cqlsh session connected to Amazon Keyspaces and the code to run a CQL create table statement.

Build a Docker image

To get started, download and build the Docker image so that you can run the keyspaces-toolkit in a container. A Docker image is the template for the complete and executable version of an application. It’s a way to package applications and preconfigured tools with all their dependencies. To build and run the image for this post, install the latest Docker engine and Git on the host or local environment. The following command builds the image from the source.
docker build --tag amazon/keyspaces-toolkit --build-arg CLI_VERSION=latest https://github.com/aws-samples/amazon-keyspaces-toolkit.git

The preceding command includes the following parameters:

--tag – The name of the image in the name:tag format. Leaving out the tag results in latest.
--build-arg CLI_VERSION – This allows you to specify the version of the base container. Docker images are composed of layers. If you’re using the AWS CLI Docker image, aligning versions significantly reduces the size and build times of the keyspaces-toolkit image.

Connect to Amazon Keyspaces

Now that you have a container image built and available in your local repository, you can use it to connect to Amazon Keyspaces. To use cqlsh with Amazon Keyspaces, create service-specific credentials for an existing AWS Identity and Access Management (IAM) user. The service-specific credentials enable IAM users to access Amazon Keyspaces, but not other AWS services. The following command starts a new container running the cqlsh process.

docker run --rm -ti amazon/keyspaces-toolkit cassandra.us-east-1.amazonaws.com 9142 --ssl -u "SERVICEUSERNAME" -p "SERVICEPASSWORD"

The preceding command includes the following parameters:

run – The Docker command to start the container from an image. It’s the equivalent of running create and start.
--rm – Automatically removes the container when it exits and creates a container per session or run.
-ti – Allocates a pseudo-TTY (t) and keeps STDIN open (i) even if not attached (remove i when user input is not required).
amazon/keyspaces-toolkit – The image name of the keyspaces-toolkit.
cassandra.us-east-1.amazonaws.com – The Amazon Keyspaces endpoint.
9142 – The default SSL port for Amazon Keyspaces.

After connecting to Amazon Keyspaces, exit the cqlsh session and terminate the process by using the QUIT or EXIT command.

Drop-in replacement

Now, simplify the setup by assigning an alias (or DOSKEY for Windows) to the Docker command.
The alias acts as a shortcut, enabling you to use the alias keyword instead of typing the entire command. You will use cqlsh as the alias keyword so that you can use the alias as a drop-in replacement for your existing Cassandra scripts. The alias contains the parameter -v "$(pwd)":/source, which mounts the current directory of the host. This is useful for importing and exporting data with COPY or using the cqlsh --file command to load external cqlsh scripts.

alias cqlsh='docker run --rm -ti -v "$(pwd)":/source amazon/keyspaces-toolkit cassandra.us-east-1.amazonaws.com 9142 --ssl'

For security reasons, don’t store the user name and password in the alias. After setting up the alias, you can create a new cqlsh session with Amazon Keyspaces by calling the alias and passing in the service-specific credentials.

cqlsh -u "SERVICEUSERNAME" -p "SERVICEPASSWORD"

Later in this post, I show how to use AWS Secrets Manager to avoid using plaintext credentials with cqlsh. You can use Secrets Manager to store, manage, and retrieve secrets.

Create a keyspace

Now that you have the container and alias set up, you can use the keyspaces-toolkit to create a keyspace by using cqlsh to run CQL statements. In Cassandra, a keyspace is the highest-order structure in the CQL schema, which represents a grouping of tables. A keyspace is commonly used to define the domain of a microservice or isolate clients in a multi-tenant strategy. Amazon Keyspaces is serverless, so you don’t have to configure clusters, hosts, or Java virtual machines to create a keyspace or table. When you create a new keyspace or table, it is associated with an AWS Account and Region. Though a traditional Cassandra cluster is limited to 200 to 500 tables, with Amazon Keyspaces the number of keyspaces and tables for an account and Region is virtually unlimited.
The following command creates a new keyspace by using SingleRegionStrategy, which replicates data three times across multiple Availability Zones in a single AWS Region. Storage is billed by the raw size of a single replica, and there is no network transfer cost when replicating data across Availability Zones. Using keyspaces-toolkit, connect to Amazon Keyspaces and run the following command from within the cqlsh session.

CREATE KEYSPACE amazon WITH REPLICATION = {'class': 'SingleRegionStrategy'} AND TAGS = {'domain' : 'shoppingcart' , 'app' : 'acme-commerce'};

The preceding command includes the following parameters:

REPLICATION – SingleRegionStrategy replicates data three times across multiple Availability Zones.
TAGS – A label that you assign to an AWS resource. For more information about using tags for access control, microservices, cost allocation, and risk management, see Tagging Best Practices.

Create a table

Previously, you created a keyspace without needing to define clusters or infrastructure. Now, you will add a table to your keyspace in a similar way. A Cassandra table definition looks like a traditional SQL create table statement with an additional requirement for a partition key and clustering keys. These keys determine how data in CQL rows is distributed, sorted, and uniquely accessed. Tables in Amazon Keyspaces have the following unique characteristics:

Virtually no limit to table size or throughput – In Amazon Keyspaces, a table’s capacity scales up and down automatically in response to traffic. You don’t have to manage nodes or consider node density. Performance stays consistent as your tables scale up or down.
Support for “wide” partitions – CQL partitions can contain a virtually unbounded number of rows without the need for additional bucketing and sharding partition keys for size. This allows you to scale partitions “wider” than the traditional Cassandra best practice of 100 MB.
No compaction strategies to consider – Amazon Keyspaces doesn’t require defined compaction strategies. Because you don’t have to manage compaction strategies, you can build powerful data models without having to consider the internals of the compaction process. Performance stays consistent even as write, read, update, and delete requirements change.
No repair process to manage – Amazon Keyspaces doesn’t require you to manage a background repair process for data consistency and quality.
No tombstones to manage – With Amazon Keyspaces, you can delete data without the challenge of managing tombstone removal, table-level grace periods, or zombie data problems.
1 MB row quota – Amazon Keyspaces supports the Cassandra blob type, but storing large blob data greater than 1 MB results in an exception. It’s a best practice to store larger blobs across multiple rows or in Amazon Simple Storage Service (Amazon S3) object storage.
Fully managed backups – PITR helps protect your Amazon Keyspaces tables from accidental write or delete operations by providing continuous backups of your table data.

The following command creates a table in Amazon Keyspaces by using a cqlsh statement with custom properties specifying on-demand capacity mode, PITR enabled, and AWS resource tags. Using keyspaces-toolkit to connect to Amazon Keyspaces, run this command from within the cqlsh session.

CREATE TABLE amazon.eventstore(
id text,
time timeuuid,
event text,
PRIMARY KEY(id, time))
WITH CUSTOM_PROPERTIES = {
'capacity_mode':{'throughput_mode':'PAY_PER_REQUEST'},
'point_in_time_recovery':{'status':'enabled'}
} AND TAGS = {'domain' : 'shoppingcart' , 'app' : 'acme-commerce' , 'pii': 'true'};

The preceding command includes the following parameters:

capacity_mode – Amazon Keyspaces has two read/write capacity modes for processing reads and writes on your tables. The default for new tables is on-demand capacity mode (the PAY_PER_REQUEST flag).
point_in_time_recovery – When you enable this parameter, you can restore an Amazon Keyspaces table to a point in time within the preceding 35 days. There is no overhead or performance impact by enabling PITR.
TAGS – Allows you to organize resources, define domains, specify environments, allocate cost centers, and label security requirements.

Insert rows

Before inserting data, check if your table was created successfully. Amazon Keyspaces performs data definition language (DDL) operations asynchronously, such as creating and deleting tables. You also can monitor the creation status of a new resource programmatically by querying the system schema table. Also, you can use a toolkit helper for exponential backoff.

Check for table creation status

Cassandra provides information about the running cluster in its system tables. With Amazon Keyspaces, there are no clusters to manage, but it still provides system tables for the Amazon Keyspaces resources in an account and Region. You can use the system tables to understand the creation status of a table. The system_schema_mcs keyspace is a new system keyspace with additional content related to serverless functionality. Using keyspaces-toolkit, run the following SELECT statement from within the cqlsh session to retrieve the status of the newly created table.

SELECT keyspace_name, table_name, status FROM system_schema_mcs.tables WHERE keyspace_name = 'amazon' AND table_name = 'eventstore';

The following screenshot shows an example of output for the preceding CQL SELECT statement.

Insert sample data

Now that you have created your table, you can use CQL statements to insert and read sample data. Amazon Keyspaces requires all write operations (insert, update, and delete) to use the LOCAL_QUORUM consistency level for durability. With reads, an application can choose between eventual consistency and strong consistency by using LOCAL_ONE or LOCAL_QUORUM consistency levels.
The benefits of eventual consistency in Amazon Keyspaces are higher availability and reduced cost. See the following code.

CONSISTENCY LOCAL_QUORUM;
INSERT INTO amazon.eventstore(id, time, event) VALUES ('1', now(), '{eventtype:"click-cart"}');
INSERT INTO amazon.eventstore(id, time, event) VALUES ('2', now(), '{eventtype:"showcart"}');
INSERT INTO amazon.eventstore(id, time, event) VALUES ('3', now(), '{eventtype:"clickitem"}') IF NOT EXISTS;
SELECT * FROM amazon.eventstore;

The preceding code uses IF NOT EXISTS, a lightweight transaction, to perform a conditional write. With Amazon Keyspaces, there is no heavy performance penalty for using lightweight transactions; you get performance characteristics similar to those of standard insert, update, and delete operations. The following screenshot shows the output from running the preceding statements in a cqlsh session. The three INSERT statements added three unique rows to the table, and the SELECT statement returned all the data within the table.

Export table data to your local host

You now can export the data you just inserted by using the cqlsh COPY TO command. This command exports the data to the source directory, which you mounted earlier to the working directory of the Docker run when creating the alias. The following cqlsh statement exports your table data to the export.csv file located on the host machine.

CONSISTENCY LOCAL_ONE;
COPY amazon.eventstore(id, time, event) TO '/source/export.csv' WITH HEADER=false;

The following screenshot shows the output of the preceding command from the cqlsh session. After the COPY TO command finishes, you should be able to view the export.csv from the current working directory of the host machine. For more information about tuning export and import processes when using cqlsh COPY TO, see Loading data into Amazon Keyspaces with cqlsh.

Use credentials stored in Secrets Manager

Previously, you used service-specific credentials to connect to Amazon Keyspaces.
In the following example, I show how to use the keyspaces-toolkit helpers to store and access service-specific credentials in Secrets Manager. The helpers are a collection of scripts bundled with keyspaces-toolkit to assist with common tasks. By overriding the default entry point cqlsh, you can call the aws-sm-cqlsh.sh script, a wrapper around the cqlsh process that retrieves the Amazon Keyspaces service-specific credentials from Secrets Manager and passes them to the cqlsh process. This script allows you to avoid hard-coding the credentials in your scripts. The following diagram illustrates this architecture.

Configure the container to use the host’s AWS CLI credentials

The keyspaces-toolkit extends the AWS CLI Docker image, making keyspaces-toolkit extremely lightweight. Because you may already have the AWS CLI Docker image in your local repository, keyspaces-toolkit adds only an additional 10 MB layer extension to the AWS CLI. This is approximately 15 times smaller than using cqlsh from the full Apache Cassandra 3.11 distribution. The AWS CLI runs in a container and doesn’t have access to the AWS credentials stored on the container’s host. You can share credentials with the container by mounting the ~/.aws directory. Mount the host directory to the container by using the -v parameter. To validate a proper setup, the following command lists current AWS CLI named profiles.

docker run --rm -ti -v ~/.aws:/root/.aws --entrypoint aws amazon/keyspaces-toolkit configure list-profiles

The ~/.aws directory is a common location for the AWS CLI credentials file. If you configured the container correctly, you should see a list of profiles from the host credentials. For instructions about setting up the AWS CLI, see Step 2: Set Up the AWS CLI and AWS SDKs.
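
The wrapper idea described above (read the stored secret, then hand its fields to cqlsh) can be sketched in Python. This is not the actual aws-sm-cqlsh.sh script: both function names are hypothetical, the JSON keys are the ones stored by the create-secret command in the next section, and fetching the secret from Secrets Manager (for example with boto3) is deliberately left out so the sketch runs offline. It also shows building the --secret-string value with json.dumps and quoting it with shlex.quote, which avoids hand-escaping the JSON double quotes in a POSIX shell.

```python
import json
import shlex


def make_secret_string(username, password, host, port=9142):
    """Build the JSON value for --secret-string (hypothetical helper)."""
    # json.dumps produces valid JSON with correctly escaped double quotes.
    return json.dumps(
        {
            "username": username,
            "password": password,
            "engine": "cassandra",
            "host": host,
            "port": str(port),
        }
    )


def cqlsh_args_from_secret(secret_string):
    """Turn a stored secret into a cqlsh argument list (hypothetical helper).

    Mirrors the connection arguments used earlier in this post:
    cqlsh HOST PORT --ssl -u USER -p PASSWORD
    """
    secret = json.loads(secret_string)
    return [
        "cqlsh",
        secret["host"],
        str(secret["port"]),
        "--ssl",
        "-u",
        secret["username"],
        "-p",
        secret["password"],
    ]


if __name__ == "__main__":
    value = make_secret_string(
        "SERVICEUSERNAME", "SERVICEPASSWORD", "cassandra.us-east-1.amazonaws.com"
    )
    # shlex.quote makes the JSON safe to paste after --secret-string in a POSIX shell.
    print("--secret-string " + shlex.quote(value))
```

Keeping the credentials in an argument list (rather than a printed command line) also avoids echoing a real password to logs.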
Store credentials in Secrets Manager

Now that you have configured the container to access the host’s AWS CLI credentials, you can use the Secrets Manager API to store the Amazon Keyspaces service-specific credentials in Secrets Manager. The secret name keyspaces-credentials in the following command is also used in subsequent steps.

docker run --rm -ti -v ~/.aws:/root/.aws --entrypoint aws amazon/keyspaces-toolkit secretsmanager create-secret --name keyspaces-credentials --description "Store Amazon Keyspaces Generated Service Credentials" --secret-string '{"username":"SERVICEUSERNAME","password":"SERVICEPASSWORD","engine":"cassandra","host":"SERVICEENDPOINT","port":"9142"}'

The preceding command includes the following parameters:

--entrypoint – The default entry point is cqlsh, but this command uses this flag to access the AWS CLI.
--name – The name used to identify the key to retrieve the secret in the future.
--secret-string – Stores the service-specific credentials as a JSON string; the surrounding single quotes stop the shell from stripping the JSON double quotes. Replace SERVICEUSERNAME and SERVICEPASSWORD with your credentials. Replace SERVICEENDPOINT with the service endpoint for the AWS Region.

Creating and storing secrets requires CreateSecret and GetSecretValue permissions in your IAM policy. As a best practice, rotate secrets periodically when storing database credentials.

Use the Secrets Manager helper script

Use the Secrets Manager helper script to sign in to Amazon Keyspaces by replacing the user and password fields with the secret key from the preceding keyspaces-credentials command.

docker run --rm -ti -v ~/.aws:/root/.aws --entrypoint aws-sm-cqlsh.sh amazon/keyspaces-toolkit keyspaces-credentials --ssl --execute "DESCRIBE Keyspaces"

The preceding command includes the following parameters:

-v – Used to mount the directory containing the host’s AWS CLI credentials file.
--entrypoint – Use the helper by overriding the default entry point of cqlsh to access the Secrets Manager helper script, aws-sm-cqlsh.sh.
keyspaces-credentials – The key to access the credentials stored in Secrets Manager.
--execute – Runs a CQL statement.

Update the alias

You now can update the alias so that your scripts don’t contain plaintext passwords. You also can manage users and roles through Secrets Manager. The following code sets up a new alias by using the keyspaces-toolkit Secrets Manager helper, which retrieves the service-specific credentials from Secrets Manager and passes them to cqlsh.

alias cqlsh='docker run --rm -ti -v ~/.aws:/root/.aws -v "$(pwd)":/source --entrypoint aws-sm-cqlsh.sh amazon/keyspaces-toolkit keyspaces-credentials --ssl'

To have the alias available in every new terminal session, add the alias definition to your .bashrc file, which is executed on every new terminal window. You can usually find this file in $HOME/.bashrc or $HOME/.bash_aliases (loaded by $HOME/.bashrc).

Validate the alias

Now that you have updated the alias with the Secrets Manager helper, you can use cqlsh without the Docker details or credentials, as shown in the following code.

cqlsh --execute "DESCRIBE TABLE amazon.eventstore;"

The following screenshot shows the running of the cqlsh DESCRIBE TABLE statement by using the alias created in the previous section. In the output, you should see the table definition of the amazon.eventstore table you created in the previous step.

Conclusion

In this post, I showed how to get started with Amazon Keyspaces and the keyspaces-toolkit Docker image. I used Docker to build an image and run a container for a consistent and reproducible experience. I also used an alias to create a drop-in replacement for existing scripts, and used built-in helpers to integrate cqlsh with Secrets Manager to store service-specific credentials. Now you can use the keyspaces-toolkit with your Cassandra workloads. As a next step, you can store the image in Amazon Elastic Container Registry, which allows you to access the keyspaces-toolkit from CI/CD pipelines and other AWS services such as AWS Batch.
Additionally, you can control the image lifecycle of the container across your organization. You can even attach policies to expiring images based on age or download count. For more information, see Pushing an image.

Cheat sheet of useful commands

I did not cover the following commands in this blog post, but they will be helpful when you work with cqlsh, AWS CLI, and Docker.

--- Docker ---
#To view the logs from the container. Helpful when debugging
docker logs CONTAINERID
#Exit code of the container. Helpful when debugging
docker inspect createtablec --format='{{.State.ExitCode}}'

--- CQL ---
#Describe keyspace to view keyspace definition
DESCRIBE KEYSPACE keyspace_name;
#Describe table to view table definition
DESCRIBE TABLE keyspace_name.table_name;
#Select samples with limit to minimize output
SELECT * FROM keyspace_name.table_name LIMIT 10;

--- Amazon Keyspaces CQL ---
#Change provisioned capacity for tables
ALTER TABLE keyspace_name.table_name WITH custom_properties={'capacity_mode':{'throughput_mode': 'PROVISIONED', 'read_capacity_units': 4000, 'write_capacity_units': 3000}} ;
#Describe current capacity mode for tables
SELECT keyspace_name, table_name, custom_properties FROM system_schema_mcs.tables where keyspace_name = 'amazon' and table_name='eventstore';

--- Linux ---
#Count the files in the current directory and its subdirectories
find . -type f | wc -l
#Remove header from csv
sed -i '1d' myData.csv

About the Author

Michael Raney is a Solutions Architect with Amazon Web Services.

https://aws.amazon.com/blogs/database/how-to-set-up-command-line-access-to-amazon-keyspaces-for-apache-cassandra-by-using-the-new-developer-toolkit-docker-image/


SQL Interview Questions

If a WHERE clause is used in a cross join, the query will work like an INNER JOIN. A UNIQUE constraint ensures that all values in a column are different. This provides uniqueness for the column and helps identify each row uniquely. An RDBMS lets you manipulate the data stored in tables by using relational operators. Examples of relational database management systems are Microsoft Access, MySQL, SQL Server, Oracle Database, etc. A unique key constraint uniquely identifies each record in the database. This key provides uniqueness for the column or set of columns. A database cursor is a control structure that allows traversal of the records in a database. Cursors also facilitate processing after traversal, such as retrieval, addition, and deletion of database records. They can be viewed as a pointer to one row in a set of rows. An alias is a feature of SQL that is supported by most, if not all, RDBMSs. It is a temporary name assigned to a table or table column for the purpose of a particular SQL query. In addition, aliasing can be employed as an obfuscation technique to protect the real names of database fields. A table alias is also called a correlation name. Non-unique indexes, on the other hand, are not used to enforce constraints on the tables with which they are associated. Instead, non-unique indexes are used solely to improve query performance by maintaining a sorted order of frequently used data values. A database index is a data structure that provides quick lookup of data in a column or columns of a table. It improves the speed of operations that access data from a database table at the cost of additional writes and memory to maintain the index data structure.
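
To check the cross-join claim above, this sqlite3 sketch (hypothetical students and scores tables) runs the same filter once as CROSS JOIN ... WHERE and once as INNER JOIN ... ON, counts the unfiltered cross join, and walks a result set with a cursor. Python's DB-API cursor is a client-side iterator over a result set; it is analogous to, but not the same as, a server-side DECLARE CURSOR in SQL Server or PL/SQL.

```python
import sqlite3


def build_school_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        -- UNIQUE: no two students may share a name.
        CREATE TABLE students (student_id INTEGER PRIMARY KEY, name TEXT UNIQUE);
        CREATE TABLE scores (student_id INTEGER, subject TEXT, score INTEGER);
        INSERT INTO students VALUES (1, 'Asha'), (2, 'Ben'), (3, 'Chen');
        INSERT INTO scores VALUES (1, 'SQL', 90), (1, 'Math', 80), (2, 'SQL', 70);
        """
    )
    return conn


# Cross join filtered by WHERE ...
CROSS_WITH_WHERE = """
    SELECT s.name, sc.subject, sc.score
    FROM students AS s CROSS JOIN scores AS sc
    WHERE s.student_id = sc.student_id
    ORDER BY s.name, sc.subject
"""

# ... returns the same rows as the equivalent inner join.
INNER_JOIN = """
    SELECT s.name, sc.subject, sc.score
    FROM students AS s INNER JOIN scores AS sc ON s.student_id = sc.student_id
    ORDER BY s.name, sc.subject
"""


def cross_join_size(conn):
    # Unfiltered cross join = Cartesian product: 3 students x 3 score rows.
    return conn.execute("SELECT COUNT(*) FROM students CROSS JOIN scores").fetchone()[0]


def names_via_cursor(conn):
    # The cursor is positioned on one row at a time; fetchone() advances it.
    cur = conn.cursor()
    cur.execute("SELECT name FROM students ORDER BY student_id")
    names = []
    row = cur.fetchone()
    while row is not None:
        names.append(row[0])
        row = cur.fetchone()
    return names
```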
Candidates are likely to be asked anything from basic to advanced-level SQL interview questions, depending on their experience and various other factors. The list below covers SQL interview questions for freshers as well as for experienced candidates, along with some SQL query interview questions. A SQL clause helps to limit the result set by providing a condition to the query. A clause helps to filter the rows from the entire set of records. Our SQL Interview Questions blog is the one-stop resource where you can boost your interview preparation. It has a set of the top 65 questions that an interviewer plans to ask during an interview process. Unlike a primary key, there can be multiple unique constraints defined per table. The syntax for UNIQUE is quite similar to that of PRIMARY KEY, so the two are declared in the same way. Most modern database management systems, like MySQL, Microsoft SQL Server, Oracle, IBM DB2, and Amazon Redshift, are based on the relational model (RDBMS). A SQL clause is defined to limit the result set by providing a condition to the query; this usually filters some rows from the whole set of records. A cross join can be defined as the Cartesian product of the two tables included in the join. The table after the join contains as many rows as the product of the numbers of rows in the two tables. A self-join is a query used to compare a table to itself. It is used to compare values in a column with other values in the same column of the same table. Aliases can be used for the same-table comparison. JOIN is a keyword used to query data from multiple tables based on the relationship between the fields of the tables. A foreign key is a field in one table that can be related to the primary key of another table.
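
A self-join sketch: the hypothetical employees table below is joined to itself, with the aliases e (employee) and m (manager) telling the two copies apart, to list each employee with their manager.

```python
import sqlite3


def build_org_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE employees (
            employee_id INTEGER PRIMARY KEY,
            name        TEXT NOT NULL,
            manager_id  INTEGER  -- refers to another row of the same table
        );
        INSERT INTO employees VALUES
            (1, 'Maya', NULL), (2, 'Noah', 1), (3, 'Omar', 1), (4, 'Pia', 2);
        """
    )
    return conn


# The same table appears twice; the aliases e and m distinguish the copies.
SELF_JOIN_QUERY = """
    SELECT e.name AS employee, m.name AS manager
    FROM employees AS e
    JOIN employees AS m ON e.manager_id = m.employee_id
    ORDER BY e.employee_id
"""
```

Maya has no manager, so this inner self-join leaves her out; a LEFT JOIN would keep her with a NULL manager.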
A relationship needs to be created between the two tables by referencing the foreign key with the primary key of the other table. A unique key constraint uniquely identifies each record in the database. The list starts with basic SQL interview questions and later moves on to advanced questions based on your discussions and answers. These SQL interview questions will help candidates at different knowledge levels get the maximum benefit from this blog. A table has a specified number of columns, called fields, but can have any number of rows, which are called records. So, the columns in a database table are called fields, and they represent the attributes or characteristics of the entity in the record. Rows here refer to the tuples, which represent a single data item, and columns are the attributes of the data items present in a particular row. Columns can be categorized as vertical, and rows as horizontal. Given here are SQL interview questions and answers that have been asked in many companies. For PL/SQL interview questions, visit our next page. A view can combine data from several tables, depending on the relationship between them. Views are used to implement security mechanisms in SQL Server. A database view is a searchable object: we can query a view just as we query a table. RDBMS stands for Relational Database Management System. It is a database management system based on the relational model. An RDBMS stores data in a collection of tables and links those tables using relational operators whenever required. A unique key provides uniqueness for the column or set of columns. A table is a collection of data organized in a model with columns and rows.
A table has a specified number of columns, called fields, but can have any number of rows, which are called records. An RDBMS stores data in a collection of tables, which are related by common fields between the columns of the tables. It also provides relational operators to manipulate the data stored in the tables. Following is a curated list of SQL interview questions and answers that are likely to be asked during a SQL interview.
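
To illustrate a foreign-key relationship and a view that combines data from several tables, here is a sketch with hypothetical departments and staff tables in SQLite. SQLite only enforces foreign keys after PRAGMA foreign_keys = ON (set per connection), so the sketch enables it first.

```python
import sqlite3


def build_company_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    # SQLite enforces foreign keys only when this pragma is on (per connection).
    conn.execute("PRAGMA foreign_keys = ON")
    conn.executescript(
        """
        CREATE TABLE departments (
            dept_id   INTEGER PRIMARY KEY,
            dept_name TEXT NOT NULL
        );
        CREATE TABLE staff (
            staff_id INTEGER PRIMARY KEY,
            name     TEXT NOT NULL,
            dept_id  INTEGER REFERENCES departments (dept_id)  -- foreign key
        );
        INSERT INTO departments VALUES (1, 'Sales'), (2, 'IT');
        INSERT INTO staff VALUES (1, 'Ravi', 1), (2, 'Sara', 2);

        -- A view combining both tables through the key relationship.
        CREATE VIEW staff_with_dept AS
            SELECT s.name, d.dept_name
            FROM staff AS s
            JOIN departments AS d ON d.dept_id = s.dept_id;
        """
    )
    return conn


def add_staff(conn, staff_id, name, dept_id):
    """Return True if inserted, False if dept_id has no matching department."""
    try:
        conn.execute("INSERT INTO staff VALUES (?, ?, ?)", (staff_id, name, dept_id))
        return True
    except sqlite3.IntegrityError:
        return False
```

The view is queried exactly like a table, for example SELECT * FROM staff_with_dept.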
