#theorem for homogeneous functions


Text

Definition of a Metric Space

A metric space is a set with some notion of distance between points. It generalises the concept of distance in Euclidean space, i.e. the distance between two points in Euclidean space found using Pythagoras' Theorem.

More precisely, a set X along with a distance function d: X×X->ℝ is called a metric space if the following are satisfied ∀x,y,z∈X:

Positivity: d(x,y)≥0 and d(x,y)=0 ⇔ x=y

Symmetry: d(x,y)=d(y,x)

Triangle Inequality: d(x,y)≤d(x,z)+d(z,y)

We call this function d a metric and (X,d) is the metric space.

Examples under the cut:

Firstly I will show that Euclidean space with the standard distance function d(x,y)=|x-y| is indeed a metric space:

Recall the definition of |x| in ℝ^n:

|x| = √(x_1^2 + x_2^2 + ... + x_n^2)

where x_i is the ith component of x in the standard basis.

Clearly positivity holds since we are summing the squares of real numbers, which are always non-negative. If x=y, then each (x_i-y_i)=0, so |x-y|=0. And if |x-y|=0, then we must have that each (x_i-y_i)=0, since each (x_i-y_i)^2 is non-negative and that is the only way the sum can be 0.

We also have symmetry because (x_i-y_i)^2=(y_i-x_i)^2, so |x-y|=|y-x|.

The triangle inequality requires a bit more work and relies on the Cauchy-Schwarz Inequality (which I won't prove here but here is a video about a proof):

First, note that the definition of |x| can be rewritten using the dot product on ℝ^n:

|x| = √(x·x)

Then we have

|x+y|^2 = (x+y)·(x+y) = |x|^2 + 2(x·y) + |y|^2

Then recall the Cauchy-Schwarz Inequality:

|x·y| ≤ |x||y|

Using this gives us the following

|x+y|^2 ≤ |x|^2 + 2|x||y| + |y|^2 = (|x|+|y|)^2, so |x+y| ≤ |x|+|y|

This property (along with two others) makes | · | a norm on ℝ^n (see next example) and is indeed a form of the triangle inequality. We can then show the form of the triangle inequality we desire to show we have a metric:

d(x,y) = |x-y| = |(x-z)+(z-y)| ≤ |x-z|+|z-y| = d(x,z)+d(z,y)

-------

For the next example, I will first define what a normed vector space is then show we can define a metric in terms of a norm.

Let V be a vector space over F and let || · || : V -> ℝ be a map such that we have ∀u,v∈V, ∀a∈F:

Positivity: ||v||≥0 and ||v||=0 ⇔ v=0

Absolute Homogeneity: ||av||=|a|·||v||

Triangle Inequality: ||v+u||≤||v||+||u||

We call || · || a norm on V and we call (V,|| · ||) a normed vector space.

Then we can define d(x,y):=||x-y|| and this is indeed a metric on V:

Positivity: Positivity follows quite easily from the positivity of the norm, i.e. ||x-y||≥0 and ||x-y||=0 ⇔ x-y=0 ⇔ x=y.

Symmetry: this takes a few more steps but follows from absolute homogeneity:

||x-y|| = ||(-1)(y-x)|| = |-1|·||y-x|| = ||y-x||

Triangle Inequality: This is exactly the same as the proof in the last example:

||x-y|| = ||(x-z)+(z-y)|| ≤ ||x-z||+||z-y||

This is the more general case of the first example and shows that for any normed vector space we can define a metric!

-------

My last example is somewhat more unusual but is a metric space nonetheless! Let X be any finite non-empty set and define d: X×X->ℝ by

d(x,y) = 0 if x=y, and d(x,y) = 1 if x≠y.

This is known as the discrete metric and is indeed a metric:

Positivity: positivity follows very easily from this definition. Clearly d(x,y)≥0 and d(x,y) is only 0 if x=y

Symmetry: Again this follows very easily from the definition since x=y ⇔ y=x, and x≠y ⇔ y≠x

Triangle Inequality: If x=y then d(x,y)=0 so the inequality holds for any choice of z. If x≠y, then we must have that z is not equal to one of our points, i.e. z≠x or z≠y. Then d(x,z)+d(z,y)≥1=d(x,y)
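The three axioms can also be spot-checked numerically on a finite sample of points. The following is only a sketch and not a proof: it tests every pair and triple from a small list, and the names `euclidean`, `discrete` and `is_metric_on` are made up for this illustration.

```python
import itertools
import math


def euclidean(x, y):
    """Standard distance |x - y| between two points given as tuples."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))


def discrete(x, y):
    """Discrete metric: 0 for equal points, 1 otherwise."""
    return 0 if x == y else 1


def is_metric_on(points, d, tol=1e-12):
    """Check positivity, symmetry and the triangle inequality on a finite sample."""
    for x, y in itertools.product(points, repeat=2):
        if d(x, y) < 0:
            return False  # distances must be non-negative
        if (d(x, y) == 0) != (x == y):
            return False  # d(x, y) = 0 exactly when x = y
        if abs(d(x, y) - d(y, x)) > tol:
            return False  # symmetry
    for x, y, z in itertools.product(points, repeat=3):
        if d(x, y) > d(x, z) + d(z, y) + tol:
            return False  # triangle inequality
    return True


sample = [(0, 0), (1, 0), (0, 1), (3, 4)]
print(is_metric_on(sample, euclidean))
print(is_metric_on(sample, discrete))
# The squared difference on the real line is symmetric and positive,
# but it fails the triangle inequality, so it is not a metric.
print(is_metric_on([0, 1, 2], lambda x, y: (x - y) ** 2))
```

A check like this can only find counterexamples; passing on a sample does not establish that d is a metric on the whole set.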


Text

Test Bank For Elementary Differential Equations and Boundary Value Problems, 12th Edition William E. Boyce

TABLE OF CONTENTS

Preface

1 Introduction
1.1 Some Basic Mathematical Models; Direction Fields
1.2 Solutions of Some Differential Equations
1.3 Classification of Differential Equations

2 First-Order Differential Equations
2.1 Linear Differential Equations; Method of Integrating Factors
2.2 Separable Differential Equations
2.3 Modeling with First-Order Differential Equations
2.4 Differences Between Linear and Nonlinear Differential Equations
2.5 Autonomous Differential Equations and Population Dynamics
2.6 Exact Differential Equations and Integrating Factors
2.7 Numerical Approximations: Euler’s Method
2.8 The Existence and Uniqueness Theorem
2.9 First-Order Difference Equations

3 Second-Order Linear Differential Equations
3.1 Homogeneous Differential Equations with Constant Coefficients
3.2 Solutions of Linear Homogeneous Equations; the Wronskian
3.3 Complex Roots of the Characteristic Equation
3.4 Repeated Roots; Reduction of Order
3.5 Nonhomogeneous Equations; Method of Undetermined Coefficients
3.6 Variation of Parameters
3.7 Mechanical and Electrical Vibrations
3.8 Forced Periodic Vibrations

4 Higher-Order Linear Differential Equations
4.1 General Theory of nth Order Linear Differential Equations
4.2 Homogeneous Differential Equations with Constant Coefficients
4.3 The Method of Undetermined Coefficients
4.4 The Method of Variation of Parameters

5 Series Solutions of Second-Order Linear Equations
5.1 Review of Power Series
5.2 Series Solutions Near an Ordinary Point, Part I
5.3 Series Solutions Near an Ordinary Point, Part II
5.4 Euler Equations; Regular Singular Points
5.5 Series Solutions Near a Regular Singular Point, Part I
5.6 Series Solutions Near a Regular Singular Point, Part II
5.7 Bessel’s Equation

6 The Laplace Transform
6.1 Definition of the Laplace Transform
6.2 Solution of Initial Value Problems
6.3 Step Functions
6.4 Differential Equations with Discontinuous Forcing Functions
6.5 Impulse Functions
6.6 The Convolution Integral

7 Systems of First-Order Linear Equations
7.1 Introduction
7.2 Matrices
7.3 Systems of Linear Algebraic Equations; Linear Independence, Eigenvalues, Eigenvectors
7.4 Basic Theory of Systems of First-Order Linear Equations
7.5 Homogeneous Linear Systems with Constant Coefficients
7.6 Complex-Valued Eigenvalues
7.7 Fundamental Matrices
7.8 Repeated Eigenvalues
7.9 Nonhomogeneous Linear Systems

8 Numerical Methods
8.1 The Euler or Tangent Line Method
8.2 Improvements on the Euler Method
8.3 The Runge-Kutta Method
8.4 Multistep Methods
8.5 Systems of First-Order Equations
8.6 More on Errors; Stability

9 Nonlinear Differential Equations and Stability
9.1 The Phase Plane: Linear Systems
9.2 Autonomous Systems and Stability
9.3 Locally Linear Systems
9.4 Competing Species
9.5 Predator-Prey Equations
9.6 Liapunov’s Second Method
9.7 Periodic Solutions and Limit Cycles
9.8 Chaos and Strange Attractors: The Lorenz Equations

10 Partial Differential Equations and Fourier Series
10.1 Two-Point Boundary Value Problems
10.2 Fourier Series
10.3 The Fourier Convergence Theorem
10.4 Even and Odd Functions
10.5 Separation of Variables; Heat Conduction in a Rod
10.6 Other Heat Conduction Problems
10.7 The Wave Equation: Vibrations of an Elastic String
10.8 Laplace’s Equation
A Appendix
B Appendix

11 Boundary Value Problems and Sturm-Liouville Theory
11.1 The Occurrence of Two-Point Boundary Value Problems
11.2 Sturm-Liouville Boundary Value Problems
11.3 Nonhomogeneous Boundary Value Problems
11.4 Singular Sturm-Liouville Problems
11.5 Further Remarks on the Method of Separation of Variables: A Bessel Series Expansion
11.6 Series of Orthogonal Functions: Mean Convergence

Answers to Problems
Index


Text

AI and ML

This article was only an introduction to these machine learning algorithms. If you want to know more, check out our online Artificial Intelligence & Machine Learning Course, which contains a mix of theory, case studies, and extensive hands-on assignments designed to turn even a beginner into a pro by the end. Our ML and artificial intelligence certification courses let students and working professionals get mentored directly by industry experts, build practical knowledge, receive complete career coaching, and become certified AI and ML Engineers.

Top 10 Machine Learning Algorithms You should Know in 2021

Living in an era of speedy technological development isn't easy, especially when you are interested in Machine Learning!

New machine learning algorithms appear every day, at a pace that makes it hard to keep up with them! This article will help you grasp at least some of the algorithms commonly used in the data science community. Data scientists keep improving these data-crunching methods to build ever more sophisticated technology.

Here are the top 10 machine learning algorithms for you to learn in 2021:

1. Linear Regression

This is a fundamental algorithm used to model the relationship between a dependent variable and one or more independent variables by fitting a line to the data. This line is known as the regression line and is represented by the linear equation Y = aX + b, where a is the slope and b is the intercept.
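As a rough illustration of fitting the line Y = aX + b, here is a minimal sketch for a single independent variable using the ordinary least-squares formulas. The function name `fit_line` and the sample data are made up for this example; real projects would normally use a library.

```python
def fit_line(xs, ys):
    """Least-squares estimates of slope a and intercept b for Y = aX + b."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of squared deviations of X, and of the products of deviations of X and Y.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = sxy / sxx              # slope
    b = mean_y - a * mean_x    # intercept: the line passes through the means
    return a, b


# Made-up data lying exactly on Y = 2X + 1.
a, b = fit_line([0, 1, 2, 3], [1, 3, 5, 7])
print(a, b)
```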

2. Logistic Regression

This type of regression is very similar to linear regression, but it is used to model the probability of a discrete number of outcomes, typically two (binary values such as 0/1), from a set of independent variables. It calculates the probability of an event by fitting data to a logit function. This may sound complex, but it only has one extra step compared to linear regression!
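The "one extra step" can be sketched as follows: the linear score aX + b is passed through the logistic (sigmoid) function, which squeezes it into a probability between 0 and 1. This sketch assumes the coefficients a and b are already known (fitting them is not shown), and the function names and the 0.5 threshold are illustrative choices.

```python
import math


def sigmoid(z):
    """Logistic function: maps any real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_probability(x, a, b):
    """Probability of outcome 1: the linear score a*x + b pushed through the sigmoid."""
    return sigmoid(a * x + b)


def classify(x, a, b, threshold=0.5):
    """Turn the probability into a 0/1 label using a cut-off (0.5 is an assumption)."""
    return 1 if predict_probability(x, a, b) >= threshold else 0


# Hypothetical coefficients, chosen only for illustration.
print(predict_probability(0.0, 2.0, 0.0))
print(classify(3.0, 2.0, 0.0), classify(-3.0, 2.0, 0.0))
```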

3. Naive Bayes

This algorithm is a classifier. It assumes that the presence of a particular feature in a class is unrelated to the presence of any other feature. It may seem like a daunting algorithm because it requires some preliminary knowledge of conditional probability and Bayes' Theorem, but it's extremely simple to use.

4. KNN Algorithm

KNN can be applied to both classification and regression problems. The algorithm stores all the available cases and classifies any new case by taking a majority vote of its k nearest neighbours. The case is then assigned to the class with which it has the most in common.
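The majority vote can be sketched in a few lines. This is a minimal illustration that assumes Euclidean distance and a tiny made-up training set; the function name `knn_classify` is hypothetical.

```python
import math
from collections import Counter


def knn_classify(train, query, k):
    """Label a query point by majority vote among its k nearest stored cases.

    `train` is a list of (point, label) pairs; Euclidean distance is assumed.
    """
    # Sort the stored cases by distance to the query and keep the k closest.
    neighbours = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in neighbours)
    return votes.most_common(1)[0][0]


# Made-up training data: two well-separated clusters.
train = [
    ((0, 0), "a"), ((0, 1), "a"), ((1, 0), "a"),
    ((5, 5), "b"), ((5, 6), "b"), ((6, 5), "b"),
]
print(knn_classify(train, (0.5, 0.5), 3))
print(knn_classify(train, (5.5, 5.5), 3))
```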

5. Dimensionality Reduction Algorithm

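Dimensionality reduction algorithms cut down the number of variables in a dataset while keeping the relevant information. Techniques such as Decision Tree, Missing Value Ratio, Factor Analysis, and Random Forest can help you find the relevant variables.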

6. Random Forest Algorithm

Random forests are an ensemble learning technique that builds on decision trees. It generally involves creating multiple decision trees using bootstrapped datasets drawn from the original data, and randomly selecting a subset of variables at each step of each tree. Each tree produces a classification, so the tree "votes" for that class, and the forest chooses the class with the most votes.

7. SVM Algorithm

SVM stands for Support Vector Machine. In this algorithm, we plot raw data as points in an n-dimensional space (n = number of features you have). The value of each feature is tied to a particular coordinate, and the algorithm then looks for a boundary that separates the classes, which makes it easy to classify the data provided.

8. Decision Tree

This is a supervised learning algorithm used for classification problems. While using this algorithm, we split the population into two or more homogeneous sets based on the most significant attributes or independent variables.

9. Gradient Boosting Algorithm

Gradient boosting is a boosting algorithm: it combines many weak predictors into one strong predictor. It is used when massive data loads have to be handled to make predictions with high accuracy.

10. AdaBoost

AdaBoost, also known as Adaptive Boosting, is an ensemble algorithm that uses boosting to build an enhanced predictor. The predictions are taken from the decision trees, with each tree trained to give more weight to the cases the previous trees got wrong.


Text

Mathematics and Engineering Aptitude (Syllabus)

SYLLABUS PART A

General aptitude with emphasis on logical reasoning, graphical analysis, analytical and numerical ability, quantitative comparisons, series formation, puzzles, and so forth.

SYLLABUS PART B

Mathematics and Engineering Aptitude

Linear Algebra: Algebra of matrices, inverse, rank, system of linear equations, symmetric, skew-symmetric and orthogonal matrices. Hermitian, skew-Hermitian and unitary matrices. Eigenvalues and eigenvectors, diagonalisation of matrices.

Calculus: Functions of a single variable, limit, continuity and differentiability, Mean value theorems, Indeterminate forms and L'Hospital rule, Maxima and minima, Taylor's series, Newton’s method for finding roots of polynomials. Fundamental and mean value theorems of integral calculus. Numerical integration by trapezoidal and Simpson’s rule. Evaluation of definite and improper integrals, Beta and Gamma functions, Functions of two variables, limit, continuity, partial derivatives, Euler's theorem for homogeneous functions, total derivatives, maxima and minima, Lagrange method of multipliers, double integrals and their applications, sequences and series, tests for convergence, power series, Fourier Series, Half range sine and cosine series.

Complex Variables: Analytic functions, Cauchy-Riemann equations, Line integral, Cauchy's integral theorem and integral formula, Taylor’s and Laurent's series, Residue theorem and its applications.

Vector Calculus: Gradient, divergence and curl, vector identities, directional derivatives, line, surface and volume integrals, Stokes, Gauss and Green's theorems and their applications.

Ordinary Differential Equations: First order equations (linear and non-linear), second order linear differential equations with variable coefficients, Variation of parameters method, higher order linear differential equations with constant coefficients, Cauchy-Euler's equations, power series solutions, Legendre polynomials and Bessel's functions of the first kind and their properties. Numerical solutions of first order ordinary differential equations by Euler’s and Runge-Kutta methods.

Definitions of probability and simple theorems, conditional probability, Bayes Theorem.

Solid Body Motion and Fluid Motion: Particle dynamics; Projectiles; Rigid Body Dynamics; Lagrangian formulation; Eulerian formulation; Bernoulli’s Equation; Continuity equation; Surface tension; Viscosity; Brownian Motion.

Energetics: Laws of Thermodynamics; Concept of Free energy; Enthalpy, and Entropy; Equation of State; Thermodynamic relations.

Electron Transport: Structure of atoms, Concept of energy level, Bond Concept; Definition of conduction, Semiconductor and Insulators; Diode; Half wave & Full wave rectification; Amplifiers & Oscillators; Truth Table.

Electromagnetics: Theory of Electric and Magnetic potential & field; Biot and Savart’s Law; Theory of Dipole; Theory of Oscillation of electron; Maxwell’s equations; Transmission theory; Amplitude and Frequency Modulation.

Materials: Periodic table; Properties of elements; Reaction of materials; Metals and nonmetals (Inorganic materials), Elementary knowledge of monomeric and polymeric compounds; Organometallic compounds; Crystal structure and symmetry, Structure-property correlation: metals, ceramics, and polymers.


Text

If you did not already know

Latent Profile Analysis (LPA)

The main aim of latent class analysis (LCA) is to split seemingly heterogeneous data into subclasses of two or more homogeneous groups or classes. In contrast, LPA is a method that is conducted with continuously scaled data, the focus being on generating profiles of participants instead of testing a theoretical model in terms of a measurement model, path analytic model, or full structural model, as is the case, for example, with structural equation modeling. An example application of LCA and LPA is the study of sustainable and active travel behaviors among commuters, separating the respondents into classes based on the facilitators of, and hindrances to, certain modes of travel. Quick Example of Latent Profile Analysis in R …

nn-dependability-kit

nn-dependability-kit is an open-source toolbox to support safety engineering of neural networks. The key functionality of nn-dependability-kit includes (a) novel dependability metrics for indicating sufficient elimination of uncertainties in the product life cycle, (b) a formal reasoning engine for ensuring that the generalization does not lead to undesired behaviors, and (c) runtime monitoring for reasoning whether a decision of a neural network in operation time is supported by prior similarities in the training data. …

Information Based Control (IBC)

An information based method for solving stochastic control problems with partial observation has been proposed. First, the information-theoretic lower bounds of the cost function have been analysed. It has been shown, under rather weak assumptions, that the reduction of the expected cost with closed-loop control compared to the best open-loop strategy is upper bounded by a non-decreasing function of the mutual information between control variables and the state trajectory. On the basis of this result, an Information Based Control method has been developed. The main idea of the IBC consists in replacing the original control task by a sequence of control problems that are relatively easy to solve and such that information about the state of the system is actively generated. Two examples of the operation of the IBC are given. It has been shown that the IBC is able to find the optimal solution without using dynamic programming at least in these examples. Hence the computational complexity of the IBC is substantially smaller than the complexity of dynamic programming, which is the main advantage of the proposed method. …

Skipping Sampler

We introduce the Skipping Sampler, a novel algorithm to efficiently sample from the restriction of an arbitrary probability density to an arbitrary measurable set. Such conditional densities can arise in the study of risk and reliability and are often of complex nature, for example having multiple isolated modes and non-convex or disconnected support. The sampler can be seen as an instance of the Metropolis-Hastings algorithm with a particular proposal structure, and we establish sufficient conditions under which the Strong Law of Large Numbers and the Central Limit Theorem hold. We give theoretical and numerical evidence of improved performance relative to the Random Walk Metropolis algorithm. …

https://analytixon.com/2023/02/21/if-you-did-not-already-know-1972/?utm_source=dlvr.it&utm_medium=tumblr


Text

I’ve been reading part of Folland’s “Course in Abstract Harmonic Analysis”, after my advisor recommended it to me. I like the way it is written. I’m interested in chapters 2 and 3, which talk about locally compact groups and some representation theory, respectively. I’ll take some notes before I put it into my archive, but here are a few points which I consider noteworthy in chapter 2:

- Of course the (general) construction of Haar measures on locally compact groups, and uniqueness (there is a precise statement for this). I’m not so satisfied with the proof yet. I’m planning on working on the details to see if I can find a more satisfying argument.

- I now know what the modular function is, and understand the term “unimodular”. The point is that left and right Haar measures need not be the same, and the modular function measures this in a very nice way. Unimodular groups are the groups for which left and right measures are in fact the same. Trivial examples: abelian groups, finite groups, and more generally compact groups. For Lie groups, there is a formula for the modular function which displays it as the Jacobian of the adjoint representation.

- There is in fact a theorem for homogeneous spaces, and I wasn’t really far from it when I was thinking about it a while ago. A definitive statement is the following: for G locally compact, sigma compact, there is a correspondence between closed subgroups of G and (Hausdorff) homogeneous spaces. Any transitive action on X thus leads to an isomorphism of G-spaces X = G/stab(x_0) for x_0 any base point in X. The proof is really close to the argument that I had, and sigma compactness really makes it work. This is nice.

I’ll have a look at chapter 3 soon. Today has been emotionally draining. But there’s still so much work to be done.


Text

Test Bank For Calculus: Multivariable, 12th Edition Howard Anton

TABLE OF CONTENTS

PREFACE
SUPPLEMENTS
ACKNOWLEDGMENTS
THE ROOTS OF CALCULUS

11 Three-Dimensional Space; Vectors
11.1 Rectangular Coordinates in 3-Space; Spheres; Cylindrical Surfaces
11.2 Vectors
11.3 Dot Product; Projections
11.4 Cross Product
11.5 Parametric Equations of Lines
11.6 Planes in 3-Space
11.7 Quadric Surfaces
11.8 Cylindrical and Spherical Coordinates

12 Vector-Valued Functions
12.1 Introduction to Vector-Valued Functions
12.2 Calculus of Vector-Valued Functions
12.3 Change of Parameter; Arc Length
12.4 Unit Tangent, Normal, and Binormal Vectors
12.5 Curvature
12.6 Motion Along a Curve
12.7 Kepler's Laws of Planetary Motion

13 Partial Derivatives
13.1 Functions of Two or More Variables
13.2 Limits and Continuity
13.3 Partial Derivatives
13.4 Differentiability, Differentials, and Local Linearity
13.5 The Chain Rule
13.6 Directional Derivatives and Gradients
13.7 Tangent Planes and Normal Vectors
13.8 Maxima and Minima of Functions of Two Variables
13.9 Lagrange Multipliers

14 Multiple Integrals
14.1 Double Integrals
14.2 Double Integrals Over Nonrectangular Regions
14.3 Double Integrals in Polar Coordinates
14.4 Surface Area; Parametric Surfaces
14.5 Triple Integrals
14.6 Triple Integrals in Cylindrical and Spherical Coordinates
14.7 Change of Variables in Multiple Integrals; Jacobians
14.8 Centers of Gravity Using Multiple Integrals

15 Topics in Vector Calculus
15.1 Vector Fields
15.2 Line Integrals
15.3 Independence of Path; Conservative Vector Fields
15.4 Green's Theorem
15.5 Surface Integrals
15.6 Applications of Surface Integrals; Flux
15.7 The Divergence Theorem
15.8 Stokes' Theorem

Appendices
A Trigonometry Review (Summary)
B Functions (Summary)
C New Functions From Old (Summary)
D Families of Functions (Summary)
E Inverse Functions (Summary)

READY REFERENCE
ANSWERS TO ODD-NUMBERED EXERCISES
INDEX

Web Appendices (online only), available in WileyPLUS
A Trigonometry Review
B Functions
C New Functions From Old
D Families of Functions
E Inverse Functions
F Real Numbers, Intervals, and Inequalities
G Absolute Value
H Coordinate Planes, Lines, and Linear Functions
I Distance, Circles, and Quadratic Equations
J Solving Polynomial Equations
K Graphing Functions Using Calculators and Computer Algebra Systems
L Selected Proofs
M Early Parametric Equations Option
N Mathematical Models
O The Discriminant
P Second-Order Linear Homogeneous Differential Equations

Chapter Web Projects: Expanding the Calculus Horizon (online only), available in WileyPLUS
Blammo the Human Cannonball -- Chapter 12
Hurricane Modeling -- Chapter 15


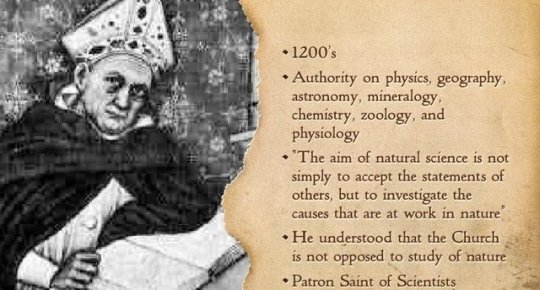

Photo

Catholic Physics - Reflections of a Catholic Scientist - Part 28

Philosophic Issues in Cosmology 2: Relativistic Theories for the Origin of the Universe

There was a young lady named Bright,
Whose speed was far faster than light;
She started one day
In a relative way,
And returned on the previous night.
A.H.R. Buller, Punch

This is the second of seven posts that attempt to summarize George Ellis's fine article, Issues in the Philosophy of Cosmology.

The usual exposition of Einstein's General Relativity Field Equations is very forbidding, full of Greek subscripts and tensor notation; a clear, simplified version has been given on the web by John Baez, and is appropriate for considering the Big Bang. The standard general relativity model for cosmology is that given by Friedmann-Lemaître-Robertson-Walker, usually designated by FLRW. The FLRW model proceeds from the following simplifying assumptions: a) the universe is isotropic (looks the same in every direction, from every point in space); b) there is a constant amount of matter in the universe; c) on a large scale (hundreds of times the distance between galaxies) the universe has a homogeneous matter density (matter is spread evenly throughout space); d) the effects of “pressure” (from radiation or the vacuum) can be neglected.

With these simplifying assumptions, the equation for the “size” of the universe, its radius R, becomes simple, and looks just like the equation of motion for a particle traveling under an inverse square law, like that of gravity. (Note: this is not to say the size of the universe is really given by some value R; the universe might possibly be infinite, more about that later. The point is to show how space is expanding.) The universe might expand and then contract in a “Big Crunch” (like a ball falling back to earth), corresponding to positively curved space (like a sphere); it might expand forever with an expansion rate that slows toward zero (like a projectile launched at exactly escape velocity), corresponding to flat space (like a plane); or it might expand forever with an expansion rate that levels off at a positive value (like a projectile launched faster than escape velocity), corresponding to a saddle-shaped curvature of space. It should also be emphasized that the FLRW solution to the Einstein General Relativity equations is by no means unique, nor is it the only solution with a singularity. It is a model, however, that is in accord with measured data (red shift, COBE microwave background radiation).
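To make the analogy with a particle under an inverse square law concrete, here is a short worked sketch. The positive constant $k$ is introduced only for this illustration (it absorbs the gravitational constant and the fixed amount of matter), and $E$ is a constant of integration; neither symbol appears in Ellis's article.

$$ \ddot{R} = -\frac{k}{R^{2}} $$

Multiplying by $\dot{R}$ and integrating once gives an energy-like conserved quantity:

$$ \frac{1}{2}\dot{R}^{2} - \frac{k}{R} = E $$

If $E<0$, the expansion stops at $R_{\max} = k/|E|$ and reverses, which is the recollapsing case. If $E\geq 0$, then $\dot{R}^{2} = 2E + 2k/R$ stays positive, so the expansion never stops, and $\dot{R}$ tends to $\sqrt{2E}$ as $R$ grows.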

The assumptions stated above do not apply rigorously. Observations have shown a filament or bubble-like structure to the universe with clusters and meta-clusters of galaxies. (A theoretical picture for this filament structure has been proposed.) In the early stages of the universe radiation pressure was very likely significant. More recently, measurements have shown that the expansion rate is increasing, which is presumed due to “dark energy”, possibly a pressure due to vacuum energy. Moreover, at some point in the expansion the scale of the universe gets so small that classical physics does not apply and quantum mechanics has to be used for theory. Unfortunately quantum mechanics and general relativity have not yet been reconciled into one general theory, so there is a fundamental difficulty with this melding of the two theories.

The simple solution above for FLRW models gives an acceleration of R proportional to 1/R^2, which signifies that there is a singularity at R=0, that is to say, if you try to plug in R=0 you'll get infinity. This would be the same as the infinity at the source for other forces proportional to 1/R^2, such as Coulomb attraction or gravity. Ellis has this to say about the significance and existence of the FLRW singularity:

“the universe starts at a space-time singularity ...This is not merely a start to matter — it is a start to space, to time, to physics itself. It is the most dramatic event in the history of the universe: it is the start of existence of everything. The underlying physical feature is the non-linear nature of the EFE (Einstein Field Equation): going back into the past, the more the universe contracts, the higher the active gravitational density, causing it to contract even more....a major conclusion is that a Hot Big Bang must have occurred; densities and temperatures must have risen at least to high enough energies that quantum fields were significant, at something like the GUT (Grand Unified Theory) energy. The universe must have reached those extreme temperatures and energies at which classical theory breaks down.” (emphasis in original).

Ellis is saying that even though we can't observe the universe at that time when it was so small and temperatures were so high that quantum properties would have been significant, we can infer that this was the case theoretically, that is to say that there was a “Hot Big Bang” at the beginning of the universe with extremely high temperatures (energies) and an extremely small volume.

Thus, given the contracting size of the universe as one goes back to the origin, there will be a time such that quantum effects must come into play. However, there are some basic limitations to using quantum mechanics as a theory for the origin of the universe. As Ellis points out:

“The attempt to develop a fully adequate quantum gravity approach to cosmology is of course hampered by the lack of a fully adequate theory of quantum gravity, as well as by the problems at the foundation of quantum theory (the measurement problem, collapse of the wave function, etc.)”

(Added later: The Hawking-Penrose Theorems show that a class of solutions to the General Relativity equations have a singularity in the solution. Also, the Borde-Guth-Vilenkin Theorem shows that a universe that is, on average, expanding has a beginning point. Since all such solutions are non-applicable at the singularity because quantum gravity enters the picture, the relevance of such theorems is perhaps questionable.)

See "Philosophic Issues in Cosmology 3: Mathematical Metaphysics--Quantum Mechanical Theories in Cosmology" for the ways physicists apply quantum mechanics to deal with theories of origin (or non-origin) of the universe.

Ed. Note:

I am sorry that I cannot properly display all the various pictures or tables on the post. They will, however, be displayed on the pamphlet containing this post, and a link will be provided for your convenience.

From a series of articles written by: Bob Kurland - a Catholic Scientist


Text

Random Flag Complexes and Asymptotic Syzygies

This talk was given by Jay Yang as a joint talk for this year’s CA+ conference and our usual weekly combinatorics seminar. He cited Daniel Erman as a collaborator.

------

Ein–Lazarsfeld Behavior

Yang began the talk with a fairly dense question: what is the asymptotic behavior of a Betti table? He then spent about 20 minutes doing some unpacking.

What is a Betti table? This has an “easy” answer, which is that it is an infinite matrix of numbers $\beta_{i,j}$ defined as $\dim \text{Tor}_i(M,k)_j$, where $k$ is the ground field, but this is maybe not the most readable thing if you’re not very well-versed in derived functors. Fortunately, exactly what the Betti table is is not super important for understanding the narrative of the talk.

But, for the sake of completeness we briefly give a quote-unquote elementary description: Given a module $M$, produce its minimal free resolution, that is, an exact sequence $\cdots\to F_2\to F_1\to F_0\to M\to 0$, where the $F_i$ are all free, and the maps, interpreted as matrices, contain no nonzero constant entries. If $M$ is a graded module over a graded ring, then the $F_i$ are also graded, and so we can count the elements of degree $j$ in a (homogeneous) basis of $F_i$. This number is the Betti table entry $\beta_{i,j}$.

What do we mean by asymptotic? We need to have something going off to infinity, clearly, but exactly what? There are several ways to answer this question: one which Yang did not explore was the idea of embedding by degree-$n$ line bundles, and sending $n\to\infty$. Instead of doing that, we will force our modules $M$ to come from random graphs, and then take asymptotic to mean sending the number of vertices to infinity.

What do we mean by behavior? Again, Yang deviates from the usual path: the most well-studied kind of long-term behavior is the “$N_p$” question “For how long is the resolution linear?” But instead of doing this, we will discuss the sorts of behaviors which were analyzed by Ein and Lazarsfeld.

One of these behaviors, which he spent most of the time talking about, concerns the support of the table, and stems from their 2012 result:

Theorem (Ein–Lazarsfeld). If $X$ is a $d$-dimensional smooth projective variety, and $A$ a very-ample divisor, then

$$ \lim_{n\to\infty} \frac{ \# \text{nonzero entries of the } k^\text{th} \text{ row of } X_n}{\text{projdim}(X_n) + 1} = 1$$

where $X_n$ is the homogeneous coordinate ring of $X$ embedded by $nA$, and $1\leq k\leq d$.

The same limit formula was reached in 2014 and 2016 for different classes of rings than $X_n$.

Ein and Lazarsfeld also showed another kind of asymptotic behavior together with Erman in a similar situation: namely, that the function $f_n$ sending $i$ to a Betti table element $\beta_{i,i+1}(S_n/I_n)$ converges to a binomial distribution (after appropriate normalization).

Yang examined both of these behaviors to see if they could be replicated in a different context: that of random flag complexes.

------

Random Flag Complexes

A random graph is, technically speaking, any random variable whose output is a graph. But most of the time when people talk about random graphs, they mean the Erdős–Rényi model of a random graph, denoted $G(n,p)$:

Any graph consists of vertices and edges. So pick how many vertices you want ($n$), and then pick a number $0\leq p\leq 1$, which represents the probability that any particular edge is in the graph. Then take the complete graph on $n$ vertices, assign an independent uniform random variable on $[0,1]$ to each edge, and remove each edge whose variable comes out larger than $p$.
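A minimal Python sketch of this sampling procedure, under a few assumptions: the function name `sample_gnp`, the vertex labels `0..n-1`, and the optional `seed` argument are illustrative choices and not part of the talk.

```python
import random
from itertools import combinations

def sample_gnp(n, p, seed=None):
    """Sample an Erdős–Rényi graph G(n, p) as a set of edges (u, v) with u < v."""
    rng = random.Random(seed)
    edges = set()
    # Walk over every edge of the complete graph on n vertices.
    for u, v in combinations(range(n), 2):
        # Draw an independent uniform variable on [0, 1) for this edge and
        # keep the edge only when the draw falls below p (ties have probability 0).
        if rng.random() < p:
            edges.add((u, v))
    return edges

if __name__ == "__main__":
    print(sorted(sample_gnp(5, 0.5, seed=1)))
```

With `p = 1` every edge of the complete graph survives, and with `p = 0` none do, which is a quick way to check the convention.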

This gives rise to the notion of an Erdős–Rényi random flag complex, denoted $\Delta(n,p)$, by taking a $G(n,p)$ and then constructing its flag complex:


And finally, we can describe an Erdős–Rényi random monomial ideal, denoted $I(n,p)$, by taking a $\Delta(n,p)$ and then constructing its Stanley–Reisner ideal.
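The two constructions can be sketched in Python for small graphs. This is an illustrative brute-force sketch, not an efficient implementation: the function names are hypothetical, faces are stored as sorted tuples of vertex labels, and a monomial $x_ix_j$ is represented by the pair `(i, j)`. It relies on the standard fact that the minimal non-faces of a flag complex are exactly the non-edges of the graph, so the Stanley–Reisner ideal is generated by the monomials $x_ix_j$ with $\{i,j\}$ not an edge.

```python
from itertools import combinations

def flag_complex(n, edges):
    """Return the non-empty faces of the flag (clique) complex of a graph on 0..n-1."""
    edge_set = {frozenset(e) for e in edges}
    faces = []
    for size in range(1, n + 1):
        # A vertex set is a face exactly when every pair in it is an edge.
        found = [s for s in combinations(range(n), size)
                 if all(frozenset(pair) in edge_set for pair in combinations(s, 2))]
        if not found:
            break  # no cliques of this size, so none of any larger size
        faces.extend(found)
    return faces

def stanley_reisner_generators(n, edges):
    """Generators x_i x_j of the Stanley-Reisner ideal, one per non-edge (i, j)."""
    edge_set = {frozenset(e) for e in edges}
    return [(i, j) for i, j in combinations(range(n), 2)
            if frozenset((i, j)) not in edge_set]

if __name__ == "__main__":
    edges = {(0, 1), (1, 2), (0, 2), (2, 3)}  # a triangle with a pendant edge
    print(flag_complex(4, edges))
    print(stanley_reisner_generators(4, edges))
```

For the example graph, the triangle `(0, 1, 2)` is filled in as a 2-dimensional face, and the ideal is generated by the two non-edges.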

The punchline is that $I(n,p)$ will, in nice cases, exhibit the Ein–Lazarsfeld behaviors:

Theorem (Erman–Yang). Fix $r>1$ and $F$ a field. Then for $1\leq k\leq r+1$ and $n^{-1/r} \ll p \ll 1$, we have

$$ \lim_{n\to\infty} \frac{ \# \text{nonzero entries of the } k^\text{th} \text{ row of } F[x_1,\dots, x_n]/I(n,p)}{\text{projdim}(F[x_1,\dots, x_n]/I(n,p)) + 1} = 1,$$

where the limit is taken in probability.

Theorem (Erman–Yang). For $0<c<1$ and $F$ a field, the function sequence $(f_n)$ defined by

$$ f_n(i) = \Bbb E \Big[\beta_{i,i+1}\big(F[x_1,\dots, x_n]/I(n,p)\big)\Big]$$ converges to a binomial distribution (after appropriate normalization).

The latter statement can be made considerably stronger, eliminating expected values in exchange for requiring convergence in probability. But he stated it in this generality so that he could conclude the talk by giving proof sketches for both statements (which I won’t reproduce here).

#math#maths#mathematics#mathema#combinatorics#algebra#commutative algebra#graph theory#random graph#caplus#caplus2017#cw homology


Text

300+ TOP Digital Signal Processing LAB VIVA Questions and Answers

Digital Signal Processing LAB VIVA Questions :-

1. Define discrete time and digital signal. A discrete-time signal is discrete in time but continuous in amplitude, whereas a digital signal is discrete in both time and amplitude.
2. Explain briefly the various methods of representing a discrete-time signal. Graphical, tabular, sequence, and functional representation.
3. Define sampling and aliasing. Sampling is the conversion of a continuous-time signal into a discrete-time signal. Aliasing is an effect that causes different signals to become indistinguishable once they are sampled.
4. What is the Nyquist rate? It is the sampling frequency equal to twice the highest frequency present in the continuous-time signal being sampled.
5. State the sampling theorem. To reconstruct a continuous-time signal from its discrete-time samples, the sampling frequency must be more than twice the highest frequency in the continuous-time signal.
6. Express the discrete-time signal x(n) as a summation of impulses. x(n) = Σ x(k) δ(n − k), where the sum runs over all integers k.
7. How will you classify discrete-time signals? Causal and non-causal, periodic and non-periodic, even and odd, energy and power signals.
8. When is a discrete-time signal called periodic? When its samples repeat after a fixed number of samples N, that is, x(n + N) = x(n) for all n.
9. What is a discrete-time system? A system whose excitation and response are both discrete-time signals.
10. What is the impulse response? Explain its significance. The response of a system when the excitation is a unit impulse is called the impulse response. For a linear time-invariant system it characterizes the system completely: the response to any input is the convolution of that input with the impulse response.
11. Write the expression for discrete convolution. y(n) = x(n)*h(n) = Σ x(k) h(n − k), where the sum runs over all integers k.
12. How are discrete-time systems classified? Causal and non-causal, time-variant and time-invariant, linear and nonlinear, stable and unstable systems.
13. Define a time-invariant system. A system whose operation is independent of time, i.e., the delayed response of the system equals its response to the delayed input.
14. What are linear and nonlinear systems? A system that satisfies homogeneity (scaling) and additivity, which together make up the superposition principle, is linear. Otherwise it is nonlinear.
15. What is the importance of causality? Causality means that the system's response depends only on present and past inputs, not on future inputs, so causal systems are realizable.
16. What is BIBO stability? What is the condition to be satisfied for stability? A system is BIBO stable if its response is bounded for every bounded excitation. For a stable system the impulse response must be absolutely summable.
17. What are FIR and IIR systems? FIR: the system's impulse response contains a finite number of samples. IIR: the system's impulse response contains an infinite number of samples.
18. What are recursive and non-recursive systems? Give examples. A recursive system is one in which the output depends on one or more of its past outputs, while a non-recursive system is one in which the output is independent of past outputs. Ex: any system with feedback is recursive; a system without feedback is non-recursive.
19. Write the properties of linear convolution. 1) x(n)*y(n) = y(n)*x(n) 2) [x(n)+y(n)]*z(n) = x(n)*z(n) + y(n)*z(n) 3) [x(n)*y(n)]*z(n) = x(n)*[y(n)*z(n)]
20. Define circular convolution. Circular convolution has the same form as linear convolution, but it applies to periodic signals: for two length-N sequences the time indices are taken modulo N.
21. What is the importance of linear and circular convolution in signals and systems? Convolution is used to calculate an LTI system's response to a given excitation.
22. How will you perform linear convolution via circular convolution? Zero-pad both sequences to the length of the linear convolution, l + m − 1, where l and m are the sequence lengths. Circular convolution at that length gives the linear convolution.
23. What is sectioned convolution? Why is it performed? If one of the two sequences is much longer than the other, the long sequence is split into sections that are convolved separately and then combined.
24. What are the two methods of sectioned convolution? 1) Overlap-add method. 2) Overlap-save method.
25. Define cross-correlation and auto-correlation. Auto-correlation is a measure of similarity between a signal and its delayed version as a function of the time delay. Cross-correlation is a measure of similarity between two signals as a function of the time delay between them.
26. What are the properties of correlation? 1) In general R12(T) ≠ R21(T) 2) R12(T) = R21*(−T) 3) If R12(T) = 0, the two signals are orthogonal to each other 4) The Fourier transform of the auto-correlation gives the energy spectral density.
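The relationship between linear and circular convolution can be checked with a short Python sketch. The function names and the sample sequences are illustrative assumptions; the sums are evaluated directly rather than with an FFT, so this is only meant for small inputs.

```python
def linear_convolution(x, h):
    """Direct linear convolution: y(n) = sum_k x(k) h(n - k)."""
    y = [0] * (len(x) + len(h) - 1)
    for i, xi in enumerate(x):
        for j, hj in enumerate(h):
            y[i + j] += xi * hj
    return y

def circular_convolution(x, h, n):
    """Length-n circular convolution: both inputs are zero-padded to n samples
    and the index of h is taken modulo n."""
    if n < max(len(x), len(h)):
        raise ValueError("n must be at least as long as both sequences")
    xp = list(x) + [0] * (n - len(x))
    hp = list(h) + [0] * (n - len(h))
    return [sum(xp[k] * hp[(m - k) % n] for k in range(n)) for m in range(n)]

if __name__ == "__main__":
    x, h = [1, 2, 3], [1, 1]
    full = len(x) + len(h) - 1               # l + m - 1 = 4
    print(linear_convolution(x, h))          # [1, 3, 5, 3]
    print(circular_convolution(x, h, full))  # [1, 3, 5, 3]
    print(circular_convolution(x, h, 3))     # [4, 3, 5]
```

At length 3 the last sample of the linear result wraps around and adds to the first, which is the time-domain aliasing that padding to l + m − 1 avoids.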


Text

IIT JAM 2020 Mathematics (MA) Syllabus | IIT JAM 2020 Mathematics (MA) Exam Pattern

Mathematics (MA): The syllabus is an important part of preparing for the examination, so all appearing candidates are advised to go through the Mathematics (MA) syllabus carefully before they start preparing.

Sequences and Series of Real Numbers: Sequence of real numbers, convergence of sequences, bounded and monotone sequences, convergence criteria for sequences of real numbers, Cauchy sequences, subsequences, Bolzano-Weierstrass theorem. Series of real numbers, absolute convergence, tests of convergence for series of positive terms (comparison test, ratio test, root test); Leibniz test for convergence of alternating series.

Functions of One Real Variable: Limit, continuity, intermediate value property, differentiation, Rolle's Theorem, mean value theorem, L'Hospital rule, Taylor's theorem, maxima and minima.

Functions of Two or Three Real Variables: Limit, continuity, partial derivatives, differentiability, maxima and minima.

Integral Calculus: Integration as the inverse process of differentiation, definite integrals and their properties, fundamental theorem of calculus. Double and triple integrals, change of order of integration, calculating surface areas and volumes using double integrals, calculating volumes using triple integrals.

Differential Equations: Ordinary differential equations of the first order of the form y'=f(x,y), Bernoulli's equation, exact differential equations, integrating factor, orthogonal trajectories, homogeneous differential equations, variable separable equations, linear differential equations of second order with constant coefficients, method of variation of parameters, Cauchy-Euler equation.

Vector Calculus: Scalar and vector fields, gradient, divergence, curl, line integrals, surface integrals, Green, Stokes and Gauss theorems.

Group Theory: Groups, subgroups, Abelian groups, non-Abelian groups, cyclic groups, permutation groups, normal subgroups, Lagrange's Theorem for finite groups, group homomorphisms and basic concepts of quotient groups.

Linear Algebra: Finite dimensional vector spaces, linear independence of vectors, basis, dimension, linear transformations, matrix representation, range space, null space, rank-nullity theorem. Rank and inverse of a matrix, determinant, solutions of systems of linear equations, consistency conditions, eigenvalues and eigenvectors for matrices, Cayley-Hamilton theorem.

Real Analysis: Interior points, limit points, open sets, closed sets, bounded sets, connected sets, compact sets, completeness of R. Power series (of real variable), Taylor's series, radius and interval of convergence, term-wise differentiation and integration of power series.


Text

Value, Price and Profit part 12 (chapter 6)

We begin at last on Marx’s explication of political economy. Marx warns us it will necessarily lack some detail:

Citizens, I have now arrived at a point where I must enter upon the real development of the question. I cannot promise to do this in a very satisfactory way, because to do so I should be obliged to go over the whole field of political economy. I can, as the French would say, but “effleurer la question,” touch upon the main points. The first question we have to put is: What is the value of a commodity? How is it determined?

Marx begins by distinguishing the value from the rate of exchange with another commodity.

Yet, its value remaining always the same, whether expressed in silk, gold, or any other commodity, it must be something distinct from, and independent of, these different rates of exchange with different articles. It must be possible to express, in a very different form, these various equations with various commodities.

He analogises value to the areas of plane figures: area abstracts away the particulars of a triangle, and allows us to compare them. So what is common to all commodities?

The next paragraph seems pretty important:

As the exchangeable values of commodities are only social functions of those things, and have nothing at all to do with the natural qualities, we must first ask: What is the common social substance of all commodities? It is labour. To produce a commodity a certain amount of labour must be bestowed upon it, or worked up in it.

And I say not only labour, but social labour. A man who produces an article for his own immediate use, to consume it himself, creates a product, but not a commodity. As a self-sustaining producer he has nothing to do with society. But to produce a commodity, a man must not only produce an article satisfying some social want, but his labour itself must form part and parcel of the total sum of labour expended by society. It must be subordinate to the division of labour within society. It is nothing without the other divisions of labour, and on its part is required to integrate them.

And to quantify that, Marx says, we should use the amount of social labour time.

A commodity has a value, because it is a crystallization of social labour. The greatness of its value, or its relative value, depends upon the greater or less amount of that social substance contained in it; that is to say, on the relative mass of labour necessary for its production. The relative values of commodities are, therefore, determined by the respective quantities or amounts of labour, worked up, realized, fixed in them. The correlative quantities of commodities which can be produced in the same time of labour are equal.

Marx raises the question of whether this is related to Weston’s idea of wages fixing prices. He says the value of labour, and the reward for labour, are different things. Wages are bounded from above by the value of labour, but that’s the only restriction placed.

Marx adds that it’s not just the immediate labour of turning materials into a product, but also

the quantity of labour previously worked up in the raw material of the commodity, and the labour bestowed on the implements, tools, machinery, and buildings, with which such labour is assisted

though, in the case of machines, the value of the labour used to build the machine is spread out over the machine’s lifetime.

Marx then forestalls the objection that this would imply a lazy worker’s work is more valuable: social labour is the necessary labour, not the actual labour.

You will recollect that I used the word “social labour,” and many points are involved in this qualification of “social.” In saying that the value of a commodity is determined by the quantity of labour worked up or crystallized in it, we mean the quantity of labour necessary for its production in a given state of society, under certain social average conditions of production, with a given social average intensity, and average skill of the labour employed.

To illustrate, Marx says that when power-looms halved the amount of labour needed for a quantity of cloth, hand-loom weavers had to work twice as long to produce the same value. On the other hand, if it became necessary to use worse soil and therefore more time was needed for agriculture, the value of produce would rise.

Marx distinguishes two classes of factors that determine the productive powers of labour: natural ones like the fertility of soil or availability of ore in a mine, and social ones to do with concentration of capital and scientific development. He proposes a simple expression:

The values of commodities are directly as the times of labour employed in their production, and are inversely as the productive powers of the labour employed.

At first I thought this could be interpreted as $$\text{value}\propto \frac{\text{labour time}}{\text{productive power}}$$ but actually I think ‘productive powers’ is just the inverse of ‘time per unit commodity’, so I think he means $$\text{value}\propto\text{labour time per unit}=\frac{1}{\text{productive power}}$$ and he’s just doing the good old Marx thing of saying everything several times.

Marx goes on to talk about price, which he calls “the monetary expression of value”. At the time he was writing, fiat currencies had not been established, and the UK used a gold standard while continental Europe used a silver standard.

You exchange a certain amount of your national products, in which a certain amount of your national labour is crystallized, for the produce of the gold and silver producing countries, in which a certain quantity of their labour is crystallized. It is in this way, in fact by barter, that you learn to express in gold and silver the values of all commodities, that is the respective quantities of labour bestowed upon them.

This seems straightforward enough, but I’m not entirely sure how to extend this analysis to fiat currencies.

Marx then goes on to talk about the difference between natural price (“the process by which you give to the values of all commodities an independent and homogeneous form”) and market prices. He argues that although market prices fluctuate, they (as previous economists had argued) fluctuate around the actual value as supply and demand change, though he won’t explain this in detail just now.

It suffices to say that if supply and demand equilibrate each other, the market prices of commodities will correspond with their natural prices, that is to say with their values, as determined by the respective quantities of labour required for their production.

But supply and demand must constantly tend to equilibrate each other, although they do so only by compensating one fluctuation by another, a rise by a fall, and vice versa.

If instead of considering only the daily fluctuations you analyze the movement of market prices for longer periods, as Mr. Tooke, for example, has done in his History of Prices, you will find that the fluctuations of market prices, their deviations from values, their ups and downs, paralyze and compensate each other; so that apart from the effect of monopolies and some other modifications I must now pass by, all descriptions of commodities are, on average, sold at their respective values or natural prices.

The average periods during which the fluctuations of market prices compensate each other are different for different kinds of commodities, because with one kind it is easier to adapt supply to demand than with the other.

Marx finishes by dismissing the idea that profits are made by selling products above their actual values.

...it is nonsense to suppose that profit, not in individual cases; but that the constant and usual profits of different trades spring from the prices of commodities, or selling them at a price over and above their value. The absurdity of this notion becomes evident if it is generalized. What a man would constantly win as a seller he would constantly lose as a purchaser.

He dismisses the possibility that some people are only buyers or only sellers.

It would not do to say that there are men who are buyers without being sellers, or consumers without being producers. What these people pay to the producers, they must first get from them for nothing. If a man first takes your money and afterwards returns that money in buying your commodities, you will never enrich yourselves by selling your commodities too dear to that same man. This sort of transaction might diminish a loss, but would never help in realizing a profit.

I think what he means by “they must first get from them for nothing” is that if you are paying for something with other commodities, you first have to obtain commodities somehow. You can’t just magically become a ‘buyer’.

Finally, he expresses the challenge he’s about to take on:

To explain, therefore, the general nature of profits, you must start from the theorem that, on an average, commodities are sold at their real values, and that profits are derived from selling them at their values, that is, in proportion to the quantity of labour realized in them. If you cannot explain profit upon this supposition, you cannot explain it at all.

Marx says this is unintuitive, but many scientific understandings are also unintuitive. (He actually uses the word ‘paradoxical’, but none of the examples he lists are paradoxes in the familiar sense, so I guess that word changes its meaning.)


Text

Trapped Bose–Einstein condensates with quadrupole–quadrupole interactions - IOPscience

1. Introduction

Inter-particle interactions in many-body systems play a key role in determining the fundamental properties of the systems. In ultracold atomic gases, neutral atoms interact through the van der Waals force, which can be described by a contact potential characterized by a single s-wave scattering length. This simplification has been highly successful in cold atomic physics.[1] For atoms possessing large magnetic moments, the long-range and anisotropic dipole–dipole interaction (DDI) may become comparable to the contact one, which leads to dipolar quantum gases.[2] So far, the experimentally realized dipolar systems include the ultracold gases of chromium,[3] dysprosium,[4,5] and erbium[6] atoms. It is also possible to realize dipolar quantum gases with ultracold polar molecules.[7–13] Compared to the short-range and isotropic contact interaction, the DDI gives rise to many remarkable phenomena, such as spontaneous demagnetization,[14] d-wave collapse,[15] droplet formation,[16] and Fermi surface deformation.[17]

Recently, a new quantum simulation platform based on atoms or molecules with electric quadrupole–quadrupole interaction (QQI) was theoretically proposed.[18–24] The quantum phases of quadrupolar Fermi gases in a two-dimensional (2D) optical lattice[18] and in two coupled one-dimensional (1D) pipes[20] were studied. Lahrz et al. proposed to detect quadrupolar interactions in ultracold Fermi gases via the interaction-induced mean-field shift.[19] For bosonic quadrupolar gases, Li et al. studied 2D lattice solitons with quadrupolar intersite interactions.[21] Lahrz[23] studied roton excitations of 2D quadrupolar Bose–Einstein condensates.
Andreev calculated the Bogoliubov spectrum of the Bose–Einstein condensates (BECs) with both dipolar and quadrupolar interactions using a non-integral Gross–Pitaevskii equation (GPE).[24] Experimentally, ultracold quadrupolar gases can potentially be realized with alkaline-earth and rare-earth atoms in the metastable ³P₂ states[25–33] and homonuclear diatomic molecules.[34–37]

In the present work, we explore the ground-state properties through full numerical calculations. In particular, we focus on the static properties, such as the condensate ground-state density profile and its stability. We also propose a scheme to quantitatively characterize the deformation of the condensate induced by the QQI. It is shown that, compared to the dipolar interaction, the quadrupole–quadrupole interaction can only induce a much smaller deformation due to its complicated angular dependence and short-range character.

This paper is organized as follows. In Section 2, we give a brief introduction to the QQI. The formulation for the quadrupolar condensates is presented in Section 3. We then explore the ground-state properties in Section 4, with particular attention paid to the deformation and stability of the quadrupolar condensates. Finally, we conclude in Section 5.

2. Quadrupole–quadrupole interactions

Here, we give a brief introduction to the QQI. As an example, we consider the classical quadrupole moment of a molecule, which is described by a traceless symmetric tensor
$$\Theta_{\alpha\beta} = \frac{1}{2}\sum_a e_a\left(3a_\alpha a_\beta - a^2\delta_{\alpha\beta}\right), \tag{1}$$
where α,β = x,y,z, (ax,ay,az) are the Cartesian coordinates of the a-th particle in the molecule, a² = ax² + ay² + az², and ea is its charge. Compared to a dipole moment (a vector described by a magnitude and two polar angles), the description of a general quadrupole requires five numbers. However, the situation is greatly simplified for linear molecules or symmetric tops because there is only one independent nonzero component.
Specifically, in a coordinate system with the z axis being along the molecular axis, such a molecule has Θzz = Θ, Θxx = Θyy = −Θ/2, and Θαβ = 0 (α ≠ β). After being transformed to a space-fixed coordinate system, the components of the quadrupole moment can then be expressed as
$$\Theta_{\alpha\beta} = \frac{\Theta}{2}\left(3\hat n_\alpha \hat n_\beta - \delta_{\alpha\beta}\right), \tag{2}$$
where n̂ is a unit vector in the direction of the molecular axis. For two quadrupoles Θ1 and Θ2 with molecular axes along n̂1 and n̂2, respectively, the QQI is[38] where r = |r|, , and ε0 is the vacuum permittivity. To further simplify the QQI, we assume that all particles possess the same quadrupole moment Θ and that the molecular axes are polarized along the z axis by an electric field gradient.[21] The QQI then reduces to
$$V_{qq}(\mathbf r) = \frac{\Theta^2}{\sqrt{\pi}\,\varepsilon_0}\,\frac{Y_{40}(\theta)}{r^5}, \tag{4}$$
where θ is the polar angle of r and Y40(θ) is the spherical harmonic. In Fig. 1, we plot the angular dependence of Vqq(r). As can be seen, the QQI is repulsive along both the axial (θ = 0) and radial (θ = π/2) directions, and it is most attractive along θ = θm ≈ 49.1°. Compared to the dipolar interaction, the angular dependence of the quadrupolar interaction is more complicated. Moreover, the 1/r⁵ dependence on the inter-particle distance indicates that the QQI is a short-range interaction, while the DDI is a long-range one.

It should be noted that, from the quantum mechanical point of view, because the quadrupole moment operator is of even parity, an atom with definite angular momentum quantum number J and magnetic quantum number M may carry a nonzero quadrupole moment. Consequently, one may effectively align the quadrupole moment with light or magnetic fields to prepare the atoms in a particular angular momentum eigenstate, |J,M⟩, or a superposition of such states.[18,19]

3. Formulation

We consider a trapped ultracold gas of N linear Bose molecules. In addition to the QQI [Eq. (4)] that was introduced in Section 2, we assume that molecules also interact via the contact interaction
$$V_0(\mathbf r) = \frac{4\pi\hbar^2 a_0}{m}\,\delta(\mathbf r), \tag{5}$$
where a0 is the s-wave scattering length and m is the mass of the molecules.
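The total interaction potential then becomes
$$V(\mathbf r) = V_0(\mathbf r) + V_{qq}(\mathbf r). \tag{6}$$
The confining harmonic potential is assumed to be axially symmetric, i.e.,
$$U(\mathbf r) = \frac{1}{2}m\left[\omega_\perp^2(x^2+y^2) + \omega_z^2 z^2\right], \tag{7}$$
where ω⊥ and ωz are the radial and axial trap frequencies, respectively. For convenience, we assume that the geometric average of the trap frequencies, ωho = (ω⊥²ωz)^{1/3}, is constant. Consequently, the external trap is expressed as
$$U(\mathbf r) = \frac{1}{2}m\omega_{\rm ho}^2\lambda^{-2/3}\left(x^2+y^2+\lambda^2 z^2\right), \tag{8}$$
where λ = ωz/ω⊥ is the trap aspect ratio.

Within the mean-field theory, a quadrupolar BEC is described by the condensate wave function Ψ(r,t), which satisfies the Gross–Pitaevskii equation (GPE)
$$i\frac{\partial\bar\Psi}{\partial\bar t} = \left[-\frac{\bar\nabla^2}{2} + \bar U(\bar{\mathbf r}) + g_0|\bar\Psi(\bar{\mathbf r},\bar t)|^2 + g_q\int d\bar{\mathbf r}'\,\frac{Y_{40}(\theta_{\bar{\mathbf r}-\bar{\mathbf r}'})}{|\bar{\mathbf r}-\bar{\mathbf r}'|^5}\,|\bar\Psi(\bar{\mathbf r}',\bar t)|^2\right]\bar\Psi(\bar{\mathbf r},\bar t), \tag{9}$$
where $\bar U(\bar{\mathbf r}) = \frac{1}{2}\lambda^{-2/3}(\bar x^2+\bar y^2+\lambda^2\bar z^2)$, $\theta_{\bar{\mathbf r}-\bar{\mathbf r}'}$ is the polar angle of $\bar{\mathbf r}-\bar{\mathbf r}'$, and g0 and gq characterize the strength of the contact and quadrupolar interactions, respectively. Here, for simplicity, we have introduced the dimensionless units: $a_{\rm ho}=\sqrt{\hbar/(m\omega_{\rm ho})}$ for length, $\hbar\omega_{\rm ho}$ for energy, $\omega_{\rm ho}^{-1}$ for time, and $\sqrt{N/a_{\rm ho}^3}$ for wave function. Consequently, $\bar{\mathbf r} = \mathbf r/a_{\rm ho}$, $\bar t = \omega_{\rm ho}t$, $g_0 = 4\pi N a_0/a_{\rm ho}$, $g_q = N\Theta^2/(\sqrt{\pi}\,\varepsilon_0\hbar\omega_{\rm ho}a_{\rm ho}^5)$, and $\bar\Psi = \sqrt{a_{\rm ho}^3/N}\,\Psi$ are all dimensionless quantities. The rescaled wave function is now normalized to unity, i.e., $\int d\bar{\mathbf r}\,|\bar\Psi|^2 = 1$. From the dimensionless equation (9), it can be seen that the free parameters of the system are the trap aspect ratio λ, the contact interaction strength g0, and the quadrupolar interaction strength gq. Given that we shall only deal with the dimensionless quantities from now on, the "bar" over all variables will be dropped for convenience.

The ground-state wave function can be obtained by numerically evolving Eq. (9) in imaginary time. The only numerical difficulty lies in the evaluation of the mean-field quadrupolar potential
$$\Phi_q(\mathbf r) = g_q\int d\mathbf r'\,\frac{Y_{40}(\theta_{\mathbf r-\mathbf r'})}{|\mathbf r-\mathbf r'|^5}\,|\Psi(\mathbf r')|^2. \tag{10}$$
Similar to the dipolar gases, $\Phi_q$ can be conveniently evaluated in momentum space by using the convolution theorem, i.e.,
$$\Phi_q(\mathbf r) = g_q\,\mathcal F^{-1}\left\{\mathcal F\left[\frac{Y_{40}(\theta)}{r^5}\right]\mathcal F\left[|\Psi|^2\right]\right\}, \tag{11}$$
where $\mathcal F$ and $\mathcal F^{-1}$ denote the Fourier and inverse Fourier transforms, respectively. Making use of the partial wave expansion $e^{i\mathbf k\cdot\mathbf r} = 4\pi\sum_{\ell m} i^\ell j_\ell(kr)\,Y_{\ell m}^*(\hat{\mathbf k})\,Y_{\ell m}(\hat{\mathbf r})$, it can be easily shown that
$$\mathcal F\left[\frac{Y_{40}(\theta)}{r^5}\right] = \frac{4\pi}{105}\,k^2\,Y_{40}(\theta_{\mathbf k}), \tag{12}$$
where k = |k| and $\theta_{\mathbf k}$ is the polar angle of k. Numerically, the evaluation of $\Phi_q$ can be performed using the fast Fourier transform.

Finally, we fix the interaction parameters based on realistic systems.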
Since the s-wave scattering length is easily tunable through Feshbach resonance, here we shall only focus on the quadrupolar interaction strength gq. The quadrupole moments of the metastable alkaline-earth and rare-earth atoms,[39–43] and the ground-state homonuclear diatomic molecules[44] can be calculated theoretically. In particular, for the Yb atom and homonuclear molecules, the quadrupole moment can be as large as 30 a.u.[43,44] Therefore, for a typical configuration with N = 10⁴, Θ = 20 a.u., ωho = (2π) 1000 Hz, and m = 150 amu, we find gq ≈ 66. As will be shown below, this QQI strength is large enough for experimental observations of the quadrupolar effects.

4. Results

In this section, we investigate ground-state properties of the quadrupolar condensates. To easily identify the quadrupolar effects, we will focus on pure quadrupolar condensates by letting g0 = 0. This reduces the control parameters to λ and gq. We remark that, in the presence of the contact interaction, the results presented below remain quantitatively valid as long as gq ≫ g0.

Since the QQI is partially attractive, stability is a particularly important issue for the system. Numerically, it is found that, for a given λ, the condensate always becomes unstable when gq exceeds a threshold value. In Fig. 2(a), we map out the stability diagram of a quadrupolar condensate on the (λ,gq) parameter space, in which the solid line shows the critical QQI strength. The stability of a quadrupolar condensate strongly depends on the trap geometry. In fact, it becomes more stable for both highly oblate and elongated traps. This observation is in agreement with the angular distribution of the QQI shown in Fig. 1, as in both cases, the overall QQI is repulsive.
To gain more insight into the stability of the quadrupolar condensates, we consider a homogeneous quadrupolar condensate, for which the dispersion relation of the collective excitations is[23] where n is the density of the gas and all quantities are in dimensionless form under the units that were previously defined. Then, by noting that the minimum value of Y40 is −9/(14√π), equation (13) leads to an analytical expression of the critical QQI strength which suggests that a homogeneous condensate becomes unstable when . The dashed line in Fig. 2(a) represents this analytic critical QQI strength. Here, for a given λ, the density n in Eq. (14) is taken as the numerically obtained peak condensate density corresponding to the parameters (λ,gq) on the solid line of Fig. 2(a). As can be seen, there is only a small discrepancy between the analytic and numerical stability boundaries. The inequality (14) underestimates the critical QQI strength because the zero-point energy in the trapping potential is neglected when we use the homogeneous result for the dispersion relation.

We now turn to study how the QQI deforms the condensates. Compared to dipolar gases, whose deformation is essentially characterized by a single parameter, the condensate aspect ratio,[45,46] the situation for a quadrupolar condensate is more complicated. Because it is easier to visualize the deformation if the trapping potential is isotropic, we need to rescale the coordinates such that the isodensity surface of the condensate is a sphere in the absence of the QQI. For this purpose, we note that the condensate wave function at gq = 0, $\Psi\propto\exp[-\lambda^{-1/3}(x^2+y^2+\lambda z^2)/2]$, can be transformed into a spherically symmetric form by rescaling the coordinates according to $\tilde x = \lambda^{-1/6}x$, $\tilde y = \lambda^{-1/6}y$, and $\tilde z = \lambda^{1/3}z$. This inspires us to consider the condensate density in the rescaled coordinates. In Figs. 2(b)–2(d), we present the contour plots of the rescaled density under three different pairs of parameters.
Careful examination of these contour lines reveals that the condensate is stretched mainly along the radial and axial directions for λ = 1/8 and 8, respectively, while for λ = 1 the condensate is stretched roughly along the direction that is most attractive for the QQI.

To characterize the deformation quantitatively, we expand at a given radius into where are the spherical coordinates for , βℓμ are the deformation parameters, and n0 is determined by . In this work, is so chosen that n0 is half of the peak condensate density. We note that because the ground-state wave function is axially symmetric, βℓμ is nonzero only if μ = 0. Figures 3(a)–3(c) show the gq dependence of the deformation parameters βℓ0 for ℓ = 2, 4, and 6 and for three different λ's. In all three cases, β60 is negligibly small. Furthermore, β20 dominates in highly anisotropic traps and β40 gives the largest contribution in an isotropic potential, which is in agreement with the observation in Fig. 2. To understand these results, we express the angular dependence of the QQI, Y40(θ, φ), in the rescaled coordinates. In Fig. 3(d), we plot it for different λ's. As can be seen, for λ = 1/8 and 8 the most attractive direction is shifted to 0.41π and 0.12π, respectively. Therefore, one can naturally observe that the condensates are stretched along the radial and axial directions for λ = 1/8 and 8, respectively.

We note that, compared to the DDI, the deformation induced by the QQI is much smaller. This can be attributed to two features of the QQI. The complicated angular distribution of the QQI makes it difficult to induce a global deformation. Moreover, the short-range character makes the condensate prone to collapse. Therefore, the strong interaction regime that may be required to generate large deformation is inaccessible.

In Figs. 4(a) and 4(b), we plot the gq dependence of the QQI energy, Eqq, and the peak condensate density, np, respectively.
As can be seen, the angular distribution of the density in an isotropic potential always makes the overall QQI attractive such that Eqq remains negative and np increases monotonically with gq. Meanwhile, in highly anisotropic potentials, the angular dependence of the density is mainly determined by the trap, which, for both elongated and oblate traps, leads to an overall repulsive QQI. Consequently, the peak density decreases with growing QQI strength in the small gq region. For large gq close to the stability boundary, the gq dependence of np depends on the value of λ and may exhibit distinct tendencies.

5. Conclusion

In conclusion, we have studied the ground-state properties of a trapped quadrupolar BEC. For the geometries of the ground states, we have quantitatively characterized different components of the deformation induced by QQI. In addition, we map out the stability diagram on the (λ,gq) parameter plane. Finally, we point out that the QQI strength required to induce the quadrupolar collapses is in principle accessible in, for example, a metastable Yb atom or homonuclear diatomic molecules, although the experimental realization of BECs of those atoms or molecules still remains challenging.

Acknowledgements

The computation of this work was partially supported by the HPC Cluster of ITP-CAS.

Text

Hilbert spaces, 1:

I’ve been reading Pedersen’s Analysis Now for a model-theoretic functional analysis reading course this term. We’re starting the section on Hilbert spaces (and the theory of operators on Hilbert spaces) and it’s my turn to present, so I’ve written some notes that I’ll also post here.

Definition. A sesquilinear form on a vector space \(X\) is a map \[( \cdot | \cdot) : X \times X \to \mathbb{F}\] which is linear in the first variable and conjugate linear in the second. To be precise, it’s additive in either variable, but compatible/\(\mathbb{F}\)-equivariant up to conjugation in the second variable.

Definition. To each sesquilinear form \((\cdot | \cdot)\) we define the adjoint form \(( \cdot | \cdot )^*\) by \[(x | y)^* \overset{\operatorname{df}}{=} \overline{(y | x)},\] for all \(x\) and \(y\) in \(X\). We say that the form is self-adjoint if it is its own adjoint. (When \(\mathbb{F} = \mathbb{R},\) the term symmetric is used.)

Claim. When \(\mathbb{F}\) is \(\mathbb{C},\) a calculation (or simply observing that \(S^1\) acts via isometries) shows that \[4(x | y) = \sum_{k = 0}^3 i^k(x + i^k y | x + i^k y).\]

Proof. Expanding the sum gives \[(x | x) + (y | x) + (x | y) + (y | y)\]\[+ i(x | x) + i(x|iy) + i(iy|x) + i(iy|iy)\]\[- (x|x) - (x|-y) - (-y|x) - (-y | -y)\]\[ -i (x | x) - i(x | -iy) - i(-iy | x) - i(-iy|-iy)\] \[= (x|x)(1 + i - 1 - i) + (y | x)(1 - 1 + 1 - 1)\]\[ + (x|y)(1 + 1 + 1 + 1) + (y|y)(1 -i^3 - 1 + i^3)\]\[= 4(x|y).\] \(\square\)
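As a sanity check on the expansion above, here is a minimal numerical sketch in plain Python. The matrix `M` and the vectors `x`, `y` are arbitrary illustrative choices (not from the text); `M` is deliberately not Hermitian, since the claim only needs sesquilinearity.

```python
# A sesquilinear form on C^2 given by an arbitrary (non-Hermitian) matrix M:
# (x | y) = sum_{i,j} M[i][j] * x_i * conj(y_j),
# which is linear in x and conjugate linear in y.
M = [[1 + 1j, 2], [-3j, 0.5]]

def form(x, y):
    return sum(M[i][j] * x[i] * y[j].conjugate()
               for i in range(2) for j in range(2))

def polarization_sum(x, y):
    # sum_{k=0}^{3} i^k (x + i^k y | x + i^k y)
    total = 0
    for w in (1, 1j, -1, -1j):  # the four powers of i
        v = [a + w * b for a, b in zip(x, y)]
        total += w * form(v, v)
    return total

x = [1 + 2j, -1j]
y = [2 - 1j, 4 + 0j]

lhs = 4 * form(x, y)
rhs = polarization_sum(x, y)
print(abs(lhs - rhs) < 1e-9)
```

The two sides agree up to floating-point error, which matches the cancellation pattern in the proof: only the \((x|y)\) terms survive.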

We say a sesquilinear form \(( \cdot | \cdot)\) is positive if \((x|x) \geq 0\) for every \(x \in X\); thus, for \(\mathbb{F} = \mathbb{C}\) a positive form is automatically self-adjoint, since the above claim shows (after a nearly-identical calculation) that a sesquilinear form is self-adjoint if and only if \((x | x) \in \mathbb{R}\) for every \(x \in X\).

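On a real space, a positive form might not be symmetric. For example, on \(\mathbb{R}^2\) the bilinear form \((x | y) = x_1 y_1 + x_2 y_2 + x_1 y_2 - x_2 y_1\) satisfies \((x | x) = x_1^2 + x_2^2 \geq 0\), but \((x | y) \neq (y | x)\) in general.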

Definition. An inner product on \(X\) is a positive, self-adjoint sesquilinear form such that \((x | x) = 0\) implies \(x = 0\) for all \(x \in X\), i.e. the form is positive-definite in addition to being positive.

Definition. Writing \(||x||^2\) for \((x | x)\), computations similar to the claim above give the following polarization identities: over \(\mathbb{C},\) \[4(x|y) = \sum_{k=0}^3 i^k || x + i^k y||^2,\] and over \(\mathbb{R}\) (for a symmetric form), \[4(x|y) = || x + y||^2 - ||x - y||^2.\]

Lemma. (Cauchy-Schwarz inequality) Let \(\alpha\) be a scalar in \(\mathbb{F}\). The formula \[|\alpha|^2 ||x||^2 + 2 \Re \alpha(x | y) + ||y||^2 = ||\alpha x + y||^2 \geq 0\] for \(x\) and \(y\) in \(X\) immediately leads to the Cauchy-Schwarz inequality: \[|(x|y)| \leq ||x|| ||y||.\]

Inserting this into the above formula, it follows that the function \(|| \cdot ||\) is subadditive, and therefore a seminorm on \(X\). In the case when \(( \cdot | \cdot)\) is an inner product, the homogeneous function \(||x|| \overset{\operatorname{df}}{=} (x | x)^{1/2}\) is a norm, and equality holds in the Cauchy-Schwarz inequality if and only if one of \(x\) and \(y\) is a scalar multiple of the other.
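For completeness, here is one way to spell out the "immediately leads to" step and the subadditivity, written as a sketch. The real parameter \(t\) and the particular choice of \(\alpha\) are my own bookkeeping, not notation from Pedersen.

```latex
% Assume (x|y) \neq 0 (otherwise there is nothing to prove) and, for real t, put
\[ \alpha = -t \, \frac{\overline{(x|y)}}{|(x|y)|}, \qquad \text{so that } |\alpha|^2 = t^2 \text{ and } \alpha (x|y) = -t\,|(x|y)|. \]
% The formula in the lemma then reads
\[ t^2 ||x||^2 - 2t\,|(x|y)| + ||y||^2 \geq 0 \quad \text{for every } t \in \mathbb{R}. \]
% If ||x|| = 0, letting t \to \infty forces |(x|y)| = 0. Otherwise this is a nonnegative
% real quadratic in t, so its discriminant is nonpositive:
\[ 4|(x|y)|^2 - 4||x||^2 ||y||^2 \leq 0, \qquad \text{i.e.} \qquad |(x|y)| \leq ||x||\,||y||. \]
% Subadditivity follows by taking \alpha = 1 in the formula:
\[ ||x+y||^2 = ||x||^2 + 2\Re(x|y) + ||y||^2 \leq ||x||^2 + 2||x||\,||y|| + ||y||^2 = \left(||x|| + ||y||\right)^2. \]
```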

Lemma. Elementary computations show that the norm arising from an inner product space satisfies the parallelogram law, namely \[||x+y||^2 + ||x - y||^2 = 2(||x||^2 + ||y||^2).\]

Conversely, one may verify that if a norm satisfies the parallelogram law, then the polarization identities from before induce inner products on \(X\) (which one depending, of course, on the ground field.)
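To see the parallelogram law acting as a test for "does this norm come from an inner product", here is a small sketch in plain Python. The helper name `parallelogram_defect` and the test vectors are illustrative choices of mine.

```python
import math

def norm2(v):
    # Euclidean norm, which comes from the standard inner product
    return math.sqrt(sum(t * t for t in v))

def norm1(v):
    # 1-norm (sum of absolute values)
    return sum(abs(t) for t in v)

def parallelogram_defect(norm, x, y):
    # ||x+y||^2 + ||x-y||^2 - 2(||x||^2 + ||y||^2); zero iff the law holds for x, y
    s = [a + b for a, b in zip(x, y)]
    d = [a - b for a, b in zip(x, y)]
    return norm(s) ** 2 + norm(d) ** 2 - 2 * (norm(x) ** 2 + norm(y) ** 2)

x = [1.0, 0.0]
y = [0.0, 1.0]

defect_euclidean = parallelogram_defect(norm2, x, y)
defect_one_norm = parallelogram_defect(norm1, x, y)
print(defect_euclidean, defect_one_norm)
```

The Euclidean defect vanishes up to rounding, while the 1-norm defect is 4 for this pair, so the 1-norm on \(\mathbb{R}^2\) cannot arise from any inner product.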

Definition. A Hilbert space is a vector space \(\mathfrak{H}\) with an inner product \(( \cdot | \cdot )\), such that \(\mathfrak{H}\) is a Banach space with respect to the associated norm.

Examples. - The Euclidean spaces are Hilbert spaces.

- The space of compactly-supported continuous functions on a Euclidean space \(\mathbb{R}^n\) is an inner product space (not yet complete) with inner product given by \((f | g ) \overset{\operatorname{df}}{=} \int f \overline{g} dx;\) the associated norm is the \(2\)-norm, and after completing we get the Hilbert space \(L^2(\mathbb{R}^n)\).

(More generally, you could replace the Lebesgue integral above with a Radon integral on a locally compact Hausdorff space and the same construction gives you a Hilbert space \(L^2 X.\))

- Algebraic direct sums are formed as in \(\mathbf{Ban}\), with the new inner product being defined pointwise.

Lemma. If \(\mathfrak{C}\) is a closed nonempty convex subset of a Hilbert space \(\mathfrak{H}\), there is for each \(y\) in \(\mathfrak{H}\) a unique \(x\) in \(\mathfrak{C}\) that minimizes the distance from \(y\) to \(\mathfrak{C}\).

Proof. By translation invariance, we can assume \(y = 0\). Let \(\alpha\) be the infimum of the norms of elements in \(\mathfrak{C}\). Choose a sequence \((x_n)\) from \(\mathfrak{C}\) with \(||x_n|| \to \alpha\).

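For any \(y\) and \(z\) in \(\mathfrak{C}\), the parallelogram law (and convexity, whence \(\frac{1}{2}(y+z) \in \mathfrak{C}\) and so \(||y+z||^2 \geq 4\alpha^2\)) gives \[2 \left( ||y||^2 + ||z||^2\right) = ||y+z||^2 + ||y - z||^2 \geq 4 \alpha^2 + ||y - z||^2.\] Hence, taking \(y = x_n\) and \(z = x_m\), we get \(||x_n - x_m||^2 \leq 2\left(||x_n||^2 + ||x_m||^2\right) - 4\alpha^2 \to 0\), so \((x_n)\) is a Cauchy sequence; by completeness of a Hilbert space it has a limit \(x\), which lies in \(\mathfrak{C}\) because \(\mathfrak{C}\) is closed, and \(||x|| = \alpha\).

Furthermore, the minimizer is unique: if \(y\) and \(z\) in \(\mathfrak{C}\) both have norm \(\alpha\), the same inequality gives \(||y - z||^2 \leq 0\). \(\square\)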

Theorem. For a closed subspace \(X\) of a Hilbert space \(\mathfrak{H}\), let \(X^{\perp}\) be the subspace of all elements orthogonal to all of \(X\). Then each vector \(y \in \mathfrak{H}\) has a unique decomposition \(y = x + x^{\perp}\) with \(x \in X\) and \(x^{\perp} \in X^{\perp}\), and \(x\) and \(x^{\perp}\) are the nearest points in \(X\) and \(X^{\perp}\) to \(y\); furthermore \(\mathfrak{H} = X \oplus X^{\perp}\) and \(\left(X^{\perp}\right)^{\perp} = X.\)

Proof. More or less automatic verification.

Given \(y\) in \(\mathfrak{H}\) we take \(x\) to be the nearest point in \(X\) to \(y\) gotten by the previous lemma; put \(x^{\perp}\) to be \(y - x\). For every \(z \in X\) and \(\epsilon > 0\),

\[||x^{\perp}||^2 = ||y - x||^2 \leq ||y - (x + \epsilon z)||^2\]

\[= ||x^{\perp} - \epsilon z||^2 = ||x^{\perp}||^2 - 2 \epsilon \Re(x^{\perp}|z) + \epsilon^2||z||^2.\]

It follows that \(2 \Re(x^{\perp}|z) \leq \epsilon ||z||^2\) for every \(\epsilon > 0\), whence \(\Re(x^{\perp}|z) \leq 0\) for every \(z \in X\). Since \(X\) is a linear subspace, we may replace \(z\) by \(-z\) (and by \(\pm iz\) in the complex case), and this implies that \((x^{\perp}|z)\) is zero, so that \(x^{\perp}\) deserves its name and is in \(X^{\perp}\). \(\square\)
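A finite-dimensional sketch of the decomposition \(y = x + x^{\perp}\), in plain Python. The helper `project` (Gram-Schmidt followed by coefficient extraction) and the particular subspace of \(\mathbb{R}^3\) are illustrative assumptions, not something from the text.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def project(y, basis):
    """Orthogonal projection of y onto span(basis), via Gram-Schmidt."""
    ortho = []
    for b in basis:
        w = list(b)
        for q in ortho:
            c = dot(w, q) / dot(q, q)
            w = [wi - c * qi for wi, qi in zip(w, q)]
        if dot(w, w) > 1e-12:  # skip vectors already in the span
            ortho.append(w)
    x = [0.0] * len(y)
    for q in ortho:
        c = dot(y, q) / dot(q, q)
        x = [xi + c * qi for xi, qi in zip(x, q)]
    return x

basis = [[1.0, 1.0, 0.0], [0.0, 1.0, 1.0]]  # spans the subspace X
y = [1.0, 2.0, 3.0]

x = project(y, basis)                       # nearest point in X
x_perp = [a - b for a, b in zip(y, x)]      # y - x

# x_perp should be orthogonal to every spanning vector of X
print([abs(dot(x_perp, b)) < 1e-9 for b in basis])
```

Any other point \(z\) of the subspace is at least as far from \(y\) as \(x\) is, which is the "nearest point" half of the theorem.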

Corollary. For every subset \(X \subset \mathfrak{H}\), the smallest closed subspace of \(\mathfrak{H}\) containing \(X\) is \(X\)-double perp. In particular, if \(X\) is a subspace of \(\mathfrak{H}\), then \(\overline{X}\) is \(X\)-double perp.

Proposition. The map \(\Phi : x \mapsto ( \cdot | x )\) is a conjugate linear isometry of \(\mathfrak{H}\) onto \(\mathfrak{H}^*\).

Proof. Tautologically conjugate-linear; by the Cauchy-Schwarz inequality, \(|(y | x)| \leq ||x||\) for \(y\) in the unit ball, with equality at \(y = x/||x||\), so the norm of \(\Phi(x)\) is precisely \(||x||\). Surjectivity is the content of the following remark. \(\square\)

Remark. Take \(\varphi\) in \(\mathfrak{H}^* \backslash \{0\}\), and put \(X = \ker \varphi\). Since \(X\) is a proper closed subspace of \(\mathfrak{H}\), there is a vector \(x \in X^{\perp}\) such that \(\varphi(x) = 1.\) For every \(y \in \mathfrak{H}\), we see that \(y - \varphi(y) x \in X\), hence \[(y | x) = (y - \varphi(y) x + \varphi(y) x | x) = \varphi(y) ||x||^2.\] It follows that \(\varphi = \Phi(||x||^{-2} x).\)
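The construction in the remark can be traced through in \(\mathbb{C}^3\). In this sketch the coefficient vector `a` defining the functional and the test vector `y` are hypothetical values chosen for illustration; `inner` follows the convention above (linear in the first slot, conjugate linear in the second).

```python
def inner(u, v):
    # (u | v): linear in u, conjugate linear in v
    return sum(complex(a) * complex(b).conjugate() for a, b in zip(u, v))

a = [2 - 1j, 3j, 1.0]  # hypothetical coefficients: phi(y) = sum_i a_i * y_i

def phi(y):
    return sum(ai * yi for ai, yi in zip(a, y))

# A vector x in (ker phi)^perp with phi(x) = 1: the conjugate of a, rescaled.
norm_a_sq = sum(abs(ai) ** 2 for ai in a)
x = [complex(ai).conjugate() / norm_a_sq for ai in a]

# Representing vector ||x||^{-2} x from the remark.
norm_x_sq = inner(x, x).real
rep = [xi / norm_x_sq for xi in x]

y = [1 + 1j, -2.0, 0.5j]
print(abs(phi(x) - 1) < 1e-12, abs(phi(y) - inner(y, rep)) < 1e-12)
```

Here `rep` works out to the entrywise conjugate of `a`, which is the familiar finite-dimensional form of the statement that every functional is \((\cdot | x)\) for some \(x\).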

Definition. We define the weak topology on a Hilbert space \(\mathfrak{H}\) as the initial topology corresponding to the family of functionals \(x \mapsto (x | y),\) so essentially the weak topology it induces…on itself. Since it is isometric with its dual, the weak topology on \(\mathfrak{H}\) is the weak-star topology on \(\mathfrak{H}^*\) pulled back via the isometry, and the unit ball in \(\mathfrak{H}\) is weakly compact.

We’ll see that every operator in \(\mathbf{B}(\mathfrak{H})\) is continuous as a function \(\mathfrak{H} \to \mathfrak{H}\) when both copies have the weak topology; we say that \(T\) is weak-weak continuous.

Conversely, if \(T\) is a weak-weak cts operator, we can invoke the closed graph theorem to see that \(T \in \mathbf{B}(\mathfrak{H})\). Indeed, if \(x_n \to x\) and \(T x_n \to y\) in the norm topology, then \(x_n \to x\) and \(T x_n \to y\) weakly since the norm topology refines the weak topology; by assumption \(T x_n \to Tx\) weakly, whence \(Tx = y,\) as was required.

Similarly, every norm-weak continuous operator on \(\mathfrak{H}\) is bounded. We’ll see later that an operator that is weak-norm continuous must have finite rank, which is neat; we’ll later also modify this to give a characterization of the compact operators (which are a good candidate for treating infinitesimal elements.)

Text

Differential Calculus Online Tutor

How to become a master of Differential Calculus in 21 days? Join online lectures for BSc Mathematics subjects of universities like Delhi University, IGNOU University, CCS University Merrut, Agra University, Jamia University, Aligarh Muslim University, and Lalit Narayan Mithila University.

BSc Online Coaching For Delhi University, BSc Online Coaching For IGNOU University

#Abstract Algebra II Online Tuition#Abstract Algebra Online Tuition#BSc Online Coaching For Agra University#BSc Online Coaching For Aligarh Muslim University#BSc Online Coaching For Amity University#BSc Online Coaching For CCS University Merrut#BSc Online Coaching For Delhi University#BSc Online Coaching For IGNOU University#BSc Online Coaching For Jamia University#BSc Online Coaching For Lalit Narayan Mithila University Darbhanga Topics Covered For Differential Calculus Limit and Continuity (ε and δ de#Calculus and Analytical Geometry Online Tuition#Differentiability of functions#Differential Calculus Online Tutor #Differential Equations I Online Tuition#Differential Equations II Online Tuition#Euler’s theorem on homogeneous functions#Introduction to MATLAB Programming Online Tuition#Leibnitz’s theorem#Linear Algebra I Tuition Class#Linear Algebra II Online Tuition#Metric Spaces and Complex Analysis Online Tuition#Multivariate Calculus Online Tuition#Numerical Methods Online Tuition#partial differentiation#Real Analysis Online Tuition#Riemann Integration and Series of Functions Tuition Class#successive differentiation#Theory of Real Functions Tuition Class#Types of discontinuities

Note

Care to enlighten us why the word "race" is #eeeeew? Because that's a pretty stupid thing to say.

wow SHOTS FIRED. i’m not sure which post you’re referring to, anon, but nevermind, it’s true i definitely dislike the word and all its connotations - as to why, here is the American Anthropological Association’s Statement on Race, aka Why *Race* Is An Awful Word We Would Do Better Without:

In the United States both scholars and the general public have been conditioned to viewing human races as natural and separate divisions within the human species based on visible physical differences. With the vast expansion of scientific knowledge in this century, however, it has become clear that human populations are not unambiguous, clearly demarcated, biologically distinct groups. Evidence from the analysis of genetics (e.g., DNA) indicates that most physical variation, about 94%, lies within so-called racial groups. Conventional geographic “racial” groupings differ from one another only in about 6% of their genes. This means that there is greater variation within “racial” groups than between them. In neighboring populations there is much overlapping of genes and their phenotypic (physical) expressions. Throughout history whenever different groups have come into contact, they have interbred. The continued sharing of genetic materials has maintained all of humankind as a single species.

Physical variations in any given trait tend to occur gradually rather than abruptly over geographic areas. And because physical traits are inherited independently of one another, knowing the range of one trait does not predict the presence of others. For example, skin color varies largely from light in the temperate areas in the north to dark in the tropical areas in the south; its intensity is not related to nose shape or hair texture. Dark skin may be associated with frizzy or kinky hair or curly or wavy or straight hair, all of which are found among different indigenous peoples in tropical regions. These facts render any attempt to establish lines of division among biological populations both arbitrary and subjective.