#which-ai-tool-should-i-use

Explore tagged Tumblr posts

Text

Free vs Paid AI Tools: Which One is Best in 2025?

Explore the difference between free and paid AI tools, learn their pros and cons, and discover which is best for your needs.

⚖️ Introduction: Choosing Between Free and Paid AI Tools

In 2025, AI tools are essential for content creation, automation, design, and communication across many industries in the U.S. Freelancers and solopreneurs must often decide whether to stick with free AI platforms or…

#affordable-ai-tools-for-creators#ai#ai-subscription-tools#ai-tools-for-small-businesses#ai-tools-pricing-guide#artificial-intelligence#best-free-ai-tools#chatgpt#cost-of-ai-software#digital-marketing#free-ai-productivity-tools#free-vs-premium-ai-apps#paid-ai-tools-comparison#technology#which-ai-tool-should-i-use

0 notes

Text

there's something so deeply dystopian to me how tech companies don't understand that a forced convenience is not a convenience at all. i'm sure autocorrect is helpful for many, but a function that forcibly changes my actual written words and punctuation is taking away my language. photo filters can be nice but i need to choose using them myself or else i have lost the ability to take the picture i want. i don't want a machine to draw or write for me. taking away the option for me to do things manually feels like violence!!!! all this talk of endless opportunity, why are you RESTRICTING me

#haha im upset an android update removed my most used screenshotting tools while forcing more ai garbage on me#tech companies go sit in the staircase and think about what you've done#there are many technical conveniences i choose to rely on because they're convenient for my sensibilities#but these should not be the default for anyone or the only option#it's like. it's technology. it has the capability of being personalised for our actual use and convenience like isn't that the POINT#AHHHHHHHHH#also with all the ai bullshit it's so hard to fully underline how much i enjoy the act of drawing and creating and i don't WANT it to be#more 'convenient' or easy?#not in the way techbros think anyway. i think there should be accessibility tools and options to make the Process good for Your Needs#which is not. having a machine create something in your stead ??? ??????????

422 notes


Text

ppl defending ai art by completely ignoring the genuine major issues that people have with it and pretending like ppl r just mad because they're Art Elitists and think that art should only be made through Suffering instead of being easy are some of the most embarrassing ppl tumblr has been recommending to me lately

#kris.txt#''erm well claiming that using art for databases is wrong just means you're defending ip laws''#no actually i just don't think artists individual pieces should be used in a way they didn't consent to.#hope this helps#the issue isn't that ppl r ripping off a style or whatever#it's that they're taking work without permission#feeding it into a machine#and then often monetizing the result#it's a matter of consent#if an artist said they were fine with their stuff being used#and was compensated in some way#then it'd be totally fine#or if they used like public domain stuff then it'd be no problem#ai is specifically being used against artists not for them#which is a shame because i do think it could be a good tool

17 notes


Text

Guy may or may not have killed a CEO

Guy played one of the most popular games of the pandemic era

Most video games are about killing things

Flawless journalism.

nothing could prepare you for the opening of the second paragraph (source)

#It should go without saying that the CEO of UnitedHealthcare ran a company which denied a third of claims out of its 50.6 million customers#They're also getting sued for using an AI tool to deny claims which allegedly has a 90% error rate#This CEO ran a company that let 16.8 million people out to dry and I can guarantee several of them aren't alive anymore because of them#Ah but this guy played Among Us so he's a stone cold murderer right?

84K notes


Text

Not telling y'all that you should be able to identify AI slop (but it is a valuable skill, you totes should), but if you're going to accuse artists of being AI left and right at least go and do your homework, or at least do the bare minimum and use AI identification tools like Hive Moderation, so you 1- don't ruin someone's livelihood 2- don't make a clown out of yourself maybe

Like, i get it, AI slop and "AI artists" pretending to be genuine is getting harder and harder to identify, but just accusing someone out of the blue and calling it a day doesn't make it any better.

The AI clowns shifted to styles that have less "tells" and the AI arts are becoming better. Yeah, it sucks ass.

They're also integrating them with memes, so you chuckle and share, like those knights with pink backgrounds, some cool frog and a funny one liner, so you get used to their aesthetic.

This is art from the upcoming Final Fantasy set for MtG. This is someone on Reddit accusing someone of using AI. From what i can tell, and i fucking hate AI, there is NO AI used on this image.

As far as i can tell and as far as any tool i've used, the Artist didn't use AI. which leads to the next one:

they accused the artist of this one of using Ai. the name of this artist is Nestor Ossandon.

He has already been FALSELY ACCUSED of using AI, because he drew a HAND THAT LOOKED A LITTLE WEIRD, which caused a statement from D&D Beyond, confirming that no AI has been used.

Not to repeat myself, they're accusing the art above, which is by Nestor, of having used Ai.

REAL artists are not machines. And just like the AI slop, we are not perfect and we make mistakes. The hands we draw have wonky fingers sometimes. The folds we draw are weird. But we are REAL. We are real people. And hey, some of our "mistakes" sometimes are CHOICES. Artistic choices are a thing yo.

If you're going to accuse someone of using Ai, i know it's getting hard to identify. But come on. At least do your due diligence.

#no#i will not “tag” the Ai artists of the catsune miku and the cat cuz for all i care AI artists can go to hell and burn#but like#there are many of them#and when you figure out how to spot ai and how the AI generate the images#please trust me on this one#it gets super easy to ident like 80% of most of it#the catsune miku is the HARDEST to ident so far#because it did something out of the ordinary#but otherwise the others have very easy tells#they're trying to mimic styles like watercolors and acrylic#that have blurred edges#and impressionism#that have undefined shapes#so their “mistakes” pass as intention#but that's besides the point#what i want here is people to just think a little bit before randomly accusing people#cuz it's really getting out of hand#and god i do love seeing an AI artist getting their wig yanked out of their fucking scalp for pretending to be a human#but y'all need to know when to do it#some of you don't know how to behave and it shows man

6K notes


Text

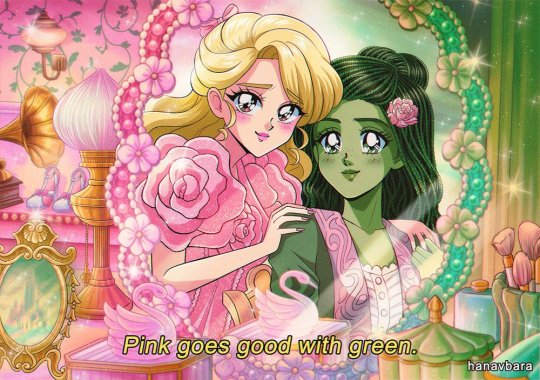

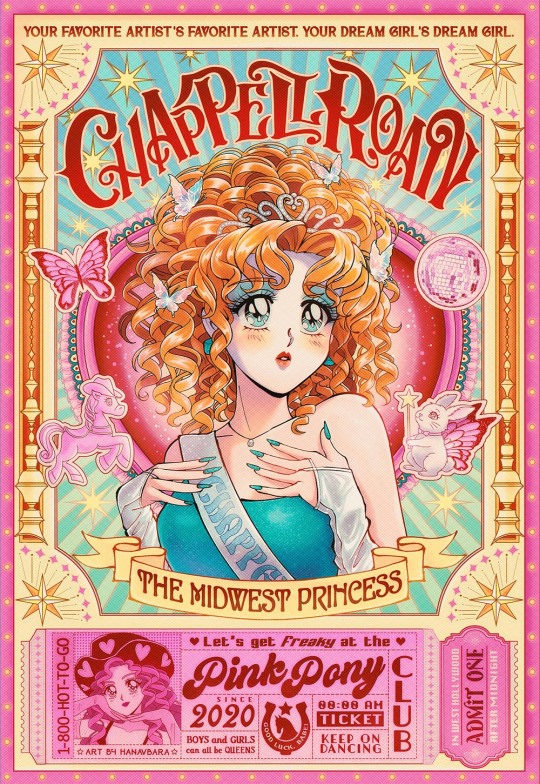

support HUMAN artists, not AI‼️

AI generated images are NOT art. art is CREATED, not GENERATED.

this is not just about taking jobs from artists (which is already a huge deal), it’s also about devaluing art itself, turning it into mass-produced, empty and soulless content. it’s heartbreaking to see AI stealing from real artists: from Studio Ghibli to smaller creators like us.

personally, we started our art journey by reinterpreting what we love: music, TV series, anime and transforming it into our vision inspired by the 90’s anime that we grew up with. when we create our illustrations, we try to capture the emotion and love we feel for the subject, aiming to tell a story with each drawing. ever since AI was created, we have had many people asking if our art is AI generated. honestly, it’s heartbreaking every single time. for us, art is a deeply human experience that we’ve been dedicating ourselves to for seven years. creating from nothing takes dedication, skill, and an emotional investment that, in our opinion, AI simply can’t capture.

you’ve probably seen your feed flooded with AI generated images in a “Studio Ghibli style”. trends like these reinforce the idea that art can be easily replicated and devalued. the future of artists is more uncertain than ever. we don’t know if in a few years we’ll still be able to make a living from this, since many companies are adopting the mindset of “why should i pay someone for their well-earned work when a machine can do it for free in an instant?” that mindset is the real problem: the way society is starting to perceive art.

art is essential to human life. many people realized this during the pandemic: what would we do without music, movies, books, that bring us comfort? art is more than just the final product. it’s about the process, struggles, and personal growth that comes with it. when you create, you grow, learn, and challenge yourself. AI erases that, replacing it with instant and shallow replication. real art brings people together, evoking emotions and reminding us of what it means to be human.

relying on AI to make art isn’t innovation, it’s avoiding the challenge of creating something meaningful. AI tools like these are being pushed as "the future," but what does that say about us? replacing human artistry with shallow, mass-produced content takes away humanity from art, do we really want to be part of a world where art is just another disposable product? what value do we place on creativity?

if you’ve made it this far, it means you care about these issues. let’s raise our voices together and speak up. don’t consume AI generated images. value and respect creativity. SUPPORT REAL HUMAN ARTISTS.

#artist#artists on tumblr#ai#anti ai#fuck ai#art#illustration#anime#digital art#artwork#creativity#chatgpt#studio ghibli#ghibli#artificial intelligence

8K notes


Text

I will preface this with the statement that I don't think you can ethically use any of the generative AIs on the market at the moment. They are tools, but they are tools made from the exploitation of artists. GenAI companies scrape artwork off of the internet to inform their software without the creators' consent, and often then make absurd amounts of money while leaving the artists whose work they stole without any way to regain lost income. It's objectively bad. I don't disagree with you there.

What I do disagree with is the loudest argument against AI, which is that it's "Not Art." If it is not art, (i am absolutely open to that idea. probably lean further towards that than it actually being art) then I would like to explore, what *is* art, and where do we draw the line?

I, and many others, have described AI as a tool. If we are using this tool perspective, then I would compare generative AI to a photo camera.

Cameras:

allowed people to access artwork that otherwise would have been too expensive or time consuming (comparing to portraits)

can copy a piece of artwork completely, ex. taking a picture of the Mona Lisa

can create art with very little effort (the press of a button)

can be used to make profit at the expense of others: a cheaper photograph of an artwork can prevent the sale of the "real" art

can be used to obscure the original source: ex. a screenshot of a social media post

And, of course, they can be used to threaten and harass real people.

This is not to say photography is an inherently immoral form of art, obviously. There's nuance. The way you use it is important. The intention you put in is important.

This is also not to say that photography and generative AI are the same. GenAI uses a lot less human input and produces something that, as of today, can be difficult to distinguish from human-created art. GenAI also has absolutely no issues with lying, which is more difficult to analogize, but anyone with skills in photoshop can do that pretty well.

I hope you see where I'm coming from with the "tool" analogy? GenAI can be used by anyone to create something that *looks like* what it's trying to replicate, but has very little of actual substance. It doesn't have meaning behind it.

I'll join you on the collage analogy as well. Let's say, for example, I am looking for a photo for a digital collage.

Searching: I type 3 words into google, pick the first thing I see, and put it in behind my half-demon half-angel OC. yay!

Generating: I type a dozen words into whatever generative AI software, pick the first thing I see, and put it behind my half-demon half-angel OC. yay!

In these examples, I care neither about the substance of the backgrounds, nor who they were originally created by. But, I can take another crack at it:

Searching: I type a dozen words into google. I scroll through a couple pages, go to a different site and scroll there as well, and eventually find the Perfect background.

Generating: I type two dozen words into whatever generative AI software. I don't see anything I like. I type another couple words. I scroll a bit, I delete a few words, generate again, and I find the Perfect background.

In these, I care about the substance. But there's another option:

Searching: I find a website that hosts copyright-free photographs. I search and scroll, and find something that's not perfect, but I know won't harm any artist or photographer.

Generating: Imagine, some far off time in the future, where a generative AI company actually pays all of the artists that they use for reference material. I type a few dozen words into this mythical GenAI software, and find something that will look different, but I know won't harm any artist or photographer.

This is the only scenario in which I believe GenAI could be morally neutral.* But, of course, there is always the far superior option:

Commission an Actual Artist for Artwork.

GenAI is exploitative. It is harmful. It tells lies and it has no intent.

But there are other exploitative aspects of art. Mummy brown? Fascist propaganda? Hell, paper is made from trees and digital art tablets use rare earth elements. Everything is nuanced. GenAI could be art, it could not be. Art is subjective. I'm still figuring out where I draw the line. It's understandable if you draw the line before GenAI. I just want to explore this idea. I don't think it's as simple as you portray it.

There are likely a dozen more things I could point to, as well as a dozen incorrect statements I've made here. Apologies in advance for any inaccuracies.

picked up the pencil :)

i literally dont care what your excuse for using AI is. if you didnt put your own effort into making it im not putting my own effort into interacting with it.

#if OP responds to this with another aggressive comment and doesn't actually engage with my arguments im not replying#will this lose me mutuals? probably#sorry about that. i try to be a good person#also for the AI isnt a tool because a tool allows you to do things you otherwise cant do:#tools allow you to do things more easily#which AI definitely does#and. once again. I have never used GenAI on purpose#i care about what i create#but i also care about understanding my own personal values#is this an objectively bad idea for my mental health? yes#iiiii should stop thinking. probably#i think for myself and get called a dickhead#also who gives a shit#man. people are dying#is that hypocritical? yes#man i hate thinking for myself

80K notes


Text

I've been told that there are rumors about me using AI for my paintings. Please use some common sense, I've been posting on DeviantART since 2003 and sharing full video recordings on Patreon since 2018. If I'm a fake, wouldn't my Patrons have noticed by now? Since AI has been turning artists against each other, accusing each other of using AI, I have no choice but to share some of the Patreon rewards as proof. Here are the 10 full video recordings of me painting A Thousand Skies from scratch

I built the 3D model base for this painting in Sketchup, which you can see here

When AI was in its infancy, I was very excited to have a new tool to help me make comics. Long time followers will know I struggled with repetitive strain injury that forced my comic making to a crawl. A decade before AI, I was experimenting with 3D backgrounds for comics.

I still remember the hate I got for using 3D models in my comic backgrounds, even though today nobody blinks at other artists doing the same. 3D is now accepted as a tool to help artists create. I even remember hate for being digital instead of traditional.

I tested out painting over AI generated backgrounds a few times in the very early stages of AI. There are a lot of screenshots taken out of context from my Discord where I share how I paint everything with complete transparency.

The only other time I've used AI in my art is for a gag scene in my comic, the full context is my character, Vance, who is a weeb and tech nerd, was objectifying women by seeing them as anime cat girls pasted over AI flower backgrounds.

If I had downloaded a flower stamp brush from ClipStudio and made a similar flower background, nobody would care. But somehow this is not okay even though it fits the theme and joke of the comic?

It's 2AM where I am now so I won't say much else other than I wish people would stop taking my posts out of context. With everything going on in the world, artists should support each other, not make up reasons to hurt each other.

6K notes


Note

For AO3 readers, MUTING is the solution to a problem they may not have come across yet.

I just thought of an extremely functional solution for a problem with AI fanfiction that a friend of mine shared her worries about. You see, she was particularly worried that her experience as a browser and reader of fanfiction will start to decline as AI fanfiction starts clogging the Sort By Recent filter on AO3.

Ok, so it didn't occur to me right away, and that is why I think it justifies this long anon post, but I just remembered that AO3 already has a tool to help you weed out low effort sludge that I have successfully used even prior to the increase in AI works. It does require people to be logged in though.

The solution is Muting, which has been around since 2023. I've even used it before for this precise problem. There is a particular rare pair I like, but the primary producer of fics for that pair is one very prolific author whose fics are egregiously low quality. Like, the author even admitted that she frequently just finds and replaces the names of the characters when she moves on to a new fandom.

After muting her, it about halved the number of fics in that tag, which was great, because it relieved me of an irritation and also allowed me to find other works. Muting folk who post AI generated works will have the same effect.

Why this will work: The main problem with AI fics is not that they are low quality, after all low quality fics have always existed - it's that they are both low quality and trivial to produce. Therefore, even one person who feels entitled to produce ai fanfiction could easily flood any particular tag with their works. But each time you mute an ai producer for one bad fic, you will end up removing all of their fics from your view, in any of your tags and fandoms. With a little weeding and upkeep, you should be able to browse contentedly as you always have.
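To make the "one mute removes all of their fics" point concrete, here is a toy Python sketch. The `Work` record, the `visible_works` function, and the sample listing are all made up for illustration; this is not AO3's code or API, only the filtering idea described above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Work:
    """A hypothetical, simplified listing entry (not AO3's data model)."""
    title: str
    author: str
    tag: str


def visible_works(works, muted_authors):
    """Return the works whose author is not muted, keeping listing order."""
    return [w for w in works if w.author not in muted_authors]


# Made-up listing: one prolific account spread across tags and fandoms.
listing = [
    Work("Fic A", "prolific_author", "rare pair"),
    Work("Fic B", "someone_else", "rare pair"),
    Work("Fic C", "prolific_author", "another fandom"),
    Work("Fic D", "prolific_author", "rare pair"),
]

# One mute, prompted by a single bad fic, hides every work by that account.
muted = {"prolific_author"}
remaining = visible_works(listing, muted)
print([w.title for w in remaining])
```

The filter is keyed on the author rather than on the individual work, which is why a little upkeep scales: the effort is per account, not per fic.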

Problem: Not all AI fics are tagged as such. How do you tell if a fic is AI?

The hallmark of a fanfiction author who generates stories with AI will be that they are prolific producers of low quality works. Why? because generating stories with ai is easy. It is much easier to generate a bad story with AI than it is to write a bad story without it. Therefore a person who uses AI to generate fics will have a lot of works.

The problem of false positives. What if you mute an author who is just bad right now but could improve?

My friend, if a person is already a prolific author of bad quality fiction, and they haven't gotten better yet, they probably will not improve to your standards ever. So you haven't lost anything by muting them. The goal here isn't to name and blame people who use AI - it's to make your own personal browsing experience better.

The problem of false negatives: What if you read a story and didn't realize it was generated using AI because it was good and you enjoyed it? You read something that you enjoyed on AO3 for free. This is not a problem.

You can find the mute button on AO3 by clicking the author's name. It will be in the same line as subscribe and block.

--

1K notes


Text

Google’s enshittification memos

[Note, 9 October 2023: Google disputes the veracity of this claim, but has declined to provide the exhibits and testimony to support its claims. Read more about this here.]

When I think about how the old, good internet turned into the enshitternet, I imagine a series of small compromises, each seemingly reasonable at the time, each contributing to a cultural norm of making good things worse, and worse, and worse.

Think about Unity President Marc Whitten's nonpology for his company's disastrous rug-pull, in which they declared that everyone who had paid good money to use their tool to make a game would have to keep paying, every time someone downloaded that game:

The most fundamental thing that we’re trying to do is we’re building a sustainable business for Unity. And for us, that means that we do need to have a model that includes some sort of balancing change, including shared success.

https://www.wired.com/story/unity-walks-back-policies-lost-trust/

"Shared success" is code for, "If you use our tool to make money, we should make money too." This is bullshit. It's like saying, "We just want to find a way to share the success of the painters who use our brushes, so every time you sell a painting, we want to tax that sale." Or "Every time you sell a house, the company that made the hammer gets to wet its beak."

And note that they're not talking about shared risk here – no one at Unity is saying, "If you try to make a game with our tools and you lose a million bucks, we're on the hook for ten percent of your losses." This isn't partnership, it's extortion.

How did a company like Unity – which became a market leader by making a tool that understood the needs of game developers and filled them – turn into a protection racket? One bad decision at a time. One rationalization and then another. Slowly, and then all at once.

When I think about this enshittification curve, I often think of Google, a company that had its users' backs for years, which created a genuinely innovative search engine that worked so well it seemed like *magic*, a company whose employees often had their pick of jobs, but chose the "don't be evil" gig because that mattered to them.

People make fun of that "don't be evil" motto, but if your key employees took the gig because they didn't want to be evil, and then you ask them to be evil, they might just quit. Hell, they might make a stink on the way out the door, too:

https://theintercept.com/2018/09/13/google-china-search-engine-employee-resigns/

Google is a company whose founders started out by publishing a scientific paper describing their search methodology, in which they said, "Oh, and by the way, ads will inevitably turn your search engine into a pile of shit, so we're gonna stay the fuck away from them":

http://infolab.stanford.edu/pub/papers/google.pdf

Those same founders retained a controlling interest in the company after it went IPO, explaining to investors that they were going to run the business without having their elbows jostled by shortsighted Wall Street assholes, so they could keep it from turning into a pile of shit:

https://abc.xyz/investor/founders-letters/ipo-letter/

And yet, it's turned into a pile of shit. Google search is so bad you might as well ask Jeeves. The company's big plan to fix it? Replace links to webpages with florid paragraphs of chatbot nonsense filled with supremely confident lies:

https://pluralistic.net/2023/05/14/googles-ai-hype-circle/

How did the company get this bad? In part, this is the "curse of bigness." The company can't grow by attracting new users. When you have 90%+ of the market, there are no new customers to sign up. Hypothetically, they could grow by going into new lines of business, but Google is incapable of making a successful product in-house and also kills most of the products it buys from other, more innovative companies:

https://killedbygoogle.com/

Theoretically, the company could pursue new lines of business in-house, and indeed, the current leaders of companies like Amazon, Microsoft and Apple are all execs who figured out how to get the whole company to do something new, and were elevated to the CEO's office, making each one a billionaire and sealing their place in history.

It is for this very reason that any exec at a large firm who tries to make a business-wide improvement gets immediately and repeatedly knifed by all their colleagues, who correctly reason that if someone else becomes CEO, then they won't become CEO. Machiavelli was an optimist:

https://pluralistic.net/2023/07/28/microincentives-and-enshittification/

With no growth from new customers, and no growth from new businesses, "growth" has to come from squeezing workers (say, laying off 12,000 engineers after a stock buyback that would have paid their salaries for the next 27 years), or business customers (say, by colluding with Facebook to rig the ad market with the Jedi Blue conspiracy), or end-users.

Now, in theory, we might never know exactly what led to the enshittification of Google. In theory, all of the compromises, debates and plots could be lost to history. But tech is not an oral culture, it's a written one, and techies write everything down and nothing is ever truly deleted.

Time and again, Big Tech tells on itself. Think of FTX's main conspirators all hanging out in a group chat called "Wirefraud." Amazon naming its program targeting weak, small publishers the "Gazelle Project" ("approach these small publishers the way a cheetah would pursue a sickly gazelle"). Amazon documenting the fact that users were unknowingly signing up for Prime and getting pissed; then figuring out how to reduce accidental signups, then deciding not to do it because it liked the money too much. Think of Zuck emailing his CFO in the middle of the night to defend his outsized offer to buy Instagram on the basis that users like Insta better and Facebook couldn't compete with them on quality.

It's like every Big Tech schemer has a folder on their desktop called "Mens Rea" filled with files like "Copy_of_Premeditated_Murder.docx":

https://doctorow.medium.com/big-tech-cant-stop-telling-on-itself-f7f0eb6d215a?sk=351f8a54ab8e02d7340620e5eec5024d

Right now, Google's on trial for its sins against antitrust law. It's a hard case to make. To secure a win, the prosecutors at the DoJ Antitrust Division are going to have to prove what was going on in Google execs' minds when they took the actions that led to the company's dominance. They're going to have to show that the company deliberately undertook to harm its users and customers.

Of course, it helps that Google put it all in writing.

Last week, there was a huge kerfuffle over the DoJ's practice of posting its exhibits from the trial to a website each night. This is a totally normal thing to do – a practice that dates back to the Microsoft antitrust trial. But Google pitched a tantrum over this and said that the docs the DoJ were posting would be turned into "clickbait." Which is another way of saying, "the public would find these documents very interesting, and they would be damning to us and our case":

https://www.bigtechontrial.com/p/secrecy-is-systemic

After initially deferring to Google, Judge Amit Mehta finally gave the Justice Department the greenlight to post the document. It's up. It's wild:

https://www.justice.gov/d9/2023-09/416692.pdf

The document is described as "notes for a course on communication" that Google VP for Finance Michael Roszak prepared. Roszak says he can't remember whether he ever gave the presentation, but insists that the remit for the course required him to tell students "things I didn't believe," and that's why the document is "full of hyperbole and exaggeration."

OK.

But here's what the document says: "search advertising is one of the world's greatest business models ever created…illicit businesses (cigarettes or drugs) could rival these economics…[W]e can mostly ignore the demand side…(users and queries) and only focus on the supply side of advertisers, ad formats and sales."

It goes on to say that this might be changing, and proposes a way to balance the interests of the search and ads teams, which are at odds, with search worrying that ads are pushing them to produce "unnatural search experiences to chase revenue."

"Unnatural search experiences to chase revenue" is a thinly veiled euphemism for the prophetic warnings in that 1998 Pagerank paper: "The goals of the advertising business model do not always correspond to providing quality search to users." Or, more plainly, "ads will turn our search engine into a pile of shit."

And, as Roszak writes, Google is "able to ignore one of the fundamental laws of economics…supply and demand." That is, the company has become so dominant and cemented its position so thoroughly as the default search engine across every platform and system that even if it makes its search terrible to goose revenues, users won't leave. As Lily Tomlin put it on SNL: "We don't have to care, we're the phone company."

In the enshittification cycle, companies first lure in users with surpluses – like providing the best search results rather than the most profitable ones – with an eye to locking them in. In Google's case, that lock-in has multiple facets, but the big one is spending billions of dollars – enough to buy a whole Twitter, every single year – to be the default search everywhere.

Google doesn't buy its way to dominance because it has the very best search results and it wants to shield you from inferior competitors. The economically rational case for buying default position is that preventing competition is more profitable than succeeding by outperforming competitors. The best reason to buy the default everywhere is that it lets you lower quality without losing business. You can "ignore the demand side, and only focus on advertisers."

For a lot of people, the analysis stops here. "If you're not paying for the product, you're the product." Google locks in users and sells them to advertisers, who are their co-conspirators in a scheme to screw the rest of us.

But that's not right. For one thing, paying for a product doesn't mean you won't be the product. Apple charges a thousand bucks for an iPhone and then nonconsensually spies on every iOS user in order to target ads to them (and lies about it):

https://pluralistic.net/2022/11/14/luxury-surveillance/#liar-liar

John Deere charges six figures for its tractors, then runs a grift that blocks farmers from fixing their own machines, and then uses their control over repair to silence farmers who complain about it:

https://pluralistic.net/2022/05/31/dealers-choice/#be-a-shame-if-something-were-to-happen-to-it

Fair treatment from a corporation isn't a loyalty program that you earn through sufficient spending. Companies that can sell you out, will sell you out, and then cry victim, insisting that they were only doing their fiduciary duty for their sacred shareholders. Companies are disciplined by fear of competition, regulation or – in the case of tech platforms – customers seizing the means of computation and installing ad-blockers, alternative clients, multiprotocol readers, etc:

https://doctorow.medium.com/an-audacious-plan-to-halt-the-internets-enshittification-and-throw-it-into-reverse-3cc01e7e4604?sk=85b3f5f7d051804521c3411711f0b554

Which is where the next stage of enshittification comes in: when the platform withdraws the surplus it had allocated to lure in – and then lock in – business customers (like advertisers) and reallocates it to the platform's shareholders.

For Google, there are several rackets that let it screw over advertisers as well as searchers (the advertisers are paying for the product, and they're also the product). Some of those rackets are well-known, like Jedi Blue, the market-rigging conspiracy that Google and Facebook colluded on:

https://en.wikipedia.org/wiki/Jedi_Blue

But thanks to the antitrust trial, we're learning about more of these. Megan Gray – ex-FTC, ex-DuckDuckGo – was in the courtroom last week when evidence was presented on Google execs' panic over a decline in "ad generating searches" and the sleazy gimmick they came up with to address it: manipulating the "semantic matching" on user queries:

https://www.wired.com/story/google-antitrust-lawsuit-search-results/

When you send a query to Google, it expands that query with terms that are similar – for example, if you search on "Weds" it might also search for "Wednesday." In the slides shown in the Google trial, we learned about another kind of semantic matching that Google performed, this one intended to turn your search results into "a twisted shopping mall you can’t escape."

Here's how that worked: when you ran a query like "children's clothing," Google secretly appended the brand name of a kids' clothing manufacturer to the query. This, in turn, triggered a ton of ads – because rival brands will have bought ads against their competitors' name (like Pepsi buying ads that are shown over queries for Coke).
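To make the mechanism concrete, here is a toy sketch of the two kinds of query expansion described above: harmless synonym matching, and the appended brand name that pulls in ads from every rival bidding on that brand. Everything in it is hypothetical: the brand names, the lookup tables and the function names are invented for illustration, and nothing here is taken from the trial exhibits or from Google's actual systems.

```python
# Toy model only: every name and mapping below is made up for illustration.
SYNONYMS = {"weds": ["wednesday"]}

# Hypothetical table of generic queries that get a brand silently appended.
APPENDED_BRANDS = {"children's clothing": "examplekidsbrand"}

# Hypothetical ad book: who has bought ads against which search term.
AD_BUYERS = {
    "examplekidsbrand": ["ExampleKidsBrand", "RivalBrandA", "RivalBrandB"],
}


def expand_query(query):
    """Return the list of terms actually searched for a user's query."""
    normalized = query.lower()
    terms = [normalized]
    # Ordinary semantic matching: add close synonyms of each word.
    for word in normalized.split():
        terms.extend(SYNONYMS.get(word, []))
    # The move described in the post: append a brand the user never typed.
    brand = APPENDED_BRANDS.get(normalized)
    if brand:
        terms.append(brand)
    return terms


def eligible_ads(terms):
    """Collect every advertiser who bid on any of the expanded terms."""
    ads = []
    for term in terms:
        ads.extend(AD_BUYERS.get(term, []))
    return ads


print(expand_query("Weds"))
print(eligible_ads(expand_query("children's clothing")))
```

In this toy version the searcher typed no brand at all, yet three advertisers are billed for a brand-name match, which is the shape of the complaint from both sides of the market.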

Here we see surpluses being taken away from both end-users and business customers – that is, searchers and advertisers. For searchers, it doesn't matter how much you refine your query, you're still going to get crummy search results because there's an unkillable, hidden search term stuck to your query, like a piece of shit that Google keeps sticking to the sole of your shoe.

But for advertisers, this is also a scam. They're paying to be matched to users who search on a brand name, and you didn't search on that brand name. It's especially bad for the company whose name has been appended to your search, because Google has a protection racket where the company that matches your search has to pay extra in order to show up overtop of rivals who are worse matches. Both the matching company and those rivals have given Google a credit-card that Google gets to bill every time a user searches on the company's name, and Google is just running fraudulent charges through those cards.

And, of course, Google put this in writing. I mean, of course they did. As we learned from the documentary The Incredibles, supervillains can't stop themselves from monologuing, and in big, sprawling monopolists, these monologues have to be transmitted electronically – and often indelibly – to far-flung co-cabalists.

As Gray points out, this is an incredibly blunt enshittification technique: "it hadn’t even occurred to me that Google just flat out deletes queries and replaces them with ones that monetize better." We don't know how long Google did this for or how frequently this bait-and-switch was deployed.

But if this is a blunt way of Google smashing its fist down on the scales that balance search quality against ad revenues, there's plenty of subtler ways the company could sneak a thumb on there. A Google exec at the trial rhapsodized about his company's "contract with the user" to deliver an "honest results policy," but given how bad Google search is these days, we're left to either believe he's lying or that Google sucks at search.

The paper trail offers a tantalizing look at how a company went from doing something that was so good it felt like a magic trick to being "able to ignore one of the fundamental laws of economics…supply and demand," able to "ignore the demand side…(users and queries) and only focus on the supply side of advertisers."

What's more, this is a system where everyone loses (except for Google): this isn't a grift run by Google and advertisers on users – it's a grift Google runs on everyone.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/10/03/not-feeling-lucky/#fundamental-laws-of-economics

My next novel is The Lost Cause, a hopeful novel of the climate emergency. Amazon won't sell the audiobook, so I made my own and I'm pre-selling it on Kickstarter!

#pluralistic#enshittification#semantic matching#google#antitrust#trustbusting#transparency#fatfingers#serp#the algorithm#telling on yourself

6K notes


Note

whats wrong with ai?? genuinely curious <3

okay let's break it down. i'm an engineer, so i'm going to come at you from a perspective that may be different than someone else's.

i don't hate ai in every aspect. in theory, there are a lot of instances where, in fact, ai can help us do things a lot better than we could without it. here's a few examples:

ai detecting cancer

ai sorting recycling

some practical housekeeping that gemini (google ai) can do

all of the above examples are ways in which ai works with humans to do things in parallel with us. it's not overstepping--it's sorting, using pixels at a micro-level to detect abnormalities that we as humans can not, fixing a list. these are all really small, helpful ways that ai can work with us.

everything else about ai works against us. in general, ai is a huge consumer of natural resources. every prompt that you put into character.ai, chatgpt? this wastes water + energy. it's not free. a machine somewhere in the world has to swallow your prompt, call on a model to feed data into it and process more data, and then has to generate an answer for you all in a relatively short amount of time.

that is crazy expensive. someone is paying for that, and if it isn't you with your own money, it's the strain on the power grid, the water that cools the computers, the A/C that cools the data centers. and you aren't the only person using ai. chatgpt alone gets millions of users every single day, with probably thousands of prompts per second, so multiply your personal consumption by millions, and you can start to see how the picture is becoming overwhelming.

that is energy consumption alone. we haven't even talked about how problematic ai is ethically. there is currently no regulation in the united states about how ai should be developed, deployed, or used.

what does this mean for you?

it means that anything you post online is subject to data mining by an ai model (because why would they need to ask if there's no laws to stop them? wtf does it matter what it means to you to some idiot software engineer in the back room of an office making 3x your salary?). oh, that little fic you posted to wattpad that got a lot of attention? well now it's being used to teach ai how to write. oh, that sketch you made using adobe that you want to sell? adobe didn't tell you that anything you save to the cloud is now subject to being used for their ai models, so now your art is being replicated to generate ai images in photoshop, without crediting you (they have since said they don't do this...but privacy policies were never made to be human-readable, and i can't imagine they are the only company to sneakily try this). oh, your apartment just installed a new system that will use facial recognition to let their residents inside? oh, they didn't train their model with anyone but white people, so now all the black people living in that apartment building can't get into their homes. oh, you want to apply for a new job? the ai model that scans resumes learned from historical data that more men work that role than women (so the model basically thinks men are better than women), so now your resume is getting thrown out because you're a woman.

ai learns from data. and data is flawed. data is human. and as humans, we are racist, homophobic, misogynistic, transphobic, divided. so the ai models we train will learn from this. ai learns from people's creative works--their personal and artistic property. and now it's scrambling them all up to spit out generated images and written works that no one would ever want to read (because it's no longer a labor of love), and they're using that to make money. they're profiting off of people, and there's no one to stop them. they're also using generated images as marketing tools, to trick idiots on facebook, to make it so hard to be media literate that we have to question every single thing we see because now we don't know what's real and what's not.

the problem with ai is that it's doing more harm than good. and we as a society aren't doing our due diligence to understand the unintended consequences of it all. we aren't angry enough. we're so scared of stifling innovation that we're letting it regulate itself (aka letting companies decide), which has never been a good idea. we see it do one cool thing, and somehow that makes up for all the rest of the bullshit?

#yeah i could talk about this for years#i could talk about it forever#im so passionate about this lmao#anyways#i also want to point out the examples i listed are ONLY A FEW problems#there's SO MUCH MORE#anywho ai is bleh go away#ask#ask b#🐝's anons#ai

1K notes


Text

the discussion surrounding generative images costing artists their jobs and being trained on stolen work is extremely important and worth having, but the reason i'm so zealous about pointing them out when i see them (and helping people learn to recognize them) is, once again, consumer and media literacy. ai generators have their arguable uses as tools for inspiration, but they are also tools for manipulation.

generative images, unlike a good photoshop, can be produced instantly with absolutely no skill required, which means that everything from online shopping to political propaganda is now inundated with convincing fakes. this could easily be you!

when doing your shopping this season, please pay close attention to products from unfamiliar sources like etsy shops.

does the product show multiple angles? (this is the most important thing for which you should look)

does the product have a lot of superfine details, yet no zoom on them?

do those details actually connect and make sense as shapes?

are things that should be symmetrical, such as chair legs or lamp bases, actually symmetrical?

does the light source make sense? (like this lamp example: why is the base not illuminated if the lamp is lit?)

if purchasing a print from an etsy (etc.) shop, is the style consistent or does the "artist" somehow seem to be an expert in every style and medium? (like, beware of shops like this one-- even without zooming and investigating, the inconsistent style is quite a red flag)

can you find an "original" of the image, like on an artist's social media, or does it seem to only exist in the context of the shop?

do the elements and details of the image easily distinguish themselves, or do parts of them merge weirdly together? (to use an example from the previously linked shop, check out the bottom of the coat on this image and how it fuses with the clothing beneath-- this is only the most immediate and obvious issue in the image)

REVERSE IMAGE SEARCH EVERYTHING.

my inbox is always open if you want help determining if something is fake. love you guys, protect your wallets.

2K notes


Text

So, first off I wanted to say that I mostly agree with all of your points, and that the priorities on AI do need to shift somewhat. The one point I have a slight niggle with, though, is the point that image generators are mostly going to be used to replace the kind of art no one wants to do and that no one would do if they didn’t need the money to pay for it. I currently work for a medical animation company, but what I hope to eventually do is to either be a storyboard artist or a creature artist. It’s not fine art, it’s never going in a gallery, but if someone told me I’d get to sketch out camera angles and character acting or design funky looking monsters all day for the rest of my career? I’d be delighted. It’s the kind of art I love to do.

And, of course, image generators aren’t very good at either of those things. They have no consistency or narrative, like you said, never mind specific (and sometimes personal) acting choices, all of which you need for good storyboards; and even though image generators are fairly good at rendering and aping styles, they’re actually terrible at design. So, no, an image generator can’t realistically replace either a good storyboard artist or a creature artist. The problem is that a lot of executives who don’t know the first thing about visual art think they can, and that has the potential to end up looking like the same situation. If I ever got to do either of the art jobs I really want to do, but I was told that I had to do it by putting prompts into a computer and letting an image generator do it for me, I would quit that job. Immediately. I’d maybe even cut the power to the air conditioner that cools the server room on the way out. And then, if I managed to avoid jail time for doing that, I’d go try to find a factory job or something and try to draw in my spare time or something, because I’m honestly not good for much else that’ll put food on the table. Nothing I prompted an image generator to do would be something I did or something I imagined. I wouldn’t really even have that much input; it’d be an aggregate of whatever it can find that’d seem to fulfill the prompts, and a lifetime of doing that, without exaggeration, would make me want to kill myself.

I’m not opposed to AI as a tool to make artists’ lives easier. There’s a place for that. And I’m not really that afraid of losing either my current job or any jobs I want to have in the future to AI; I’m afraid of losing the parts of it that make it worth doing at all. Automation can be good, but it can also separate artists from their work, and the threat of AI (more than the reality) has the potential to discourage people from ever learning to draw or sculpt or whatever at all. For every art job that seems soulless, there’s probably at least one artist out there who actually really enjoys it and who would probably still choose to do it even if needing money to live wasn’t a consideration.

It isn’t the biggest issue with AI, like you said. There’s the environmental issues, the fact that it relies heavily on underpaid labor, largely in the global south, to even function, the way it sorts resumes, and so, so much else that matters a lot more.

Anyway, sorry for butting in. I just wanted to share my two cents on that one point.

i've been seeing ai takes that i actually agree with and have been saying for months get notes so i want to throw my hat into the ring.

so i think there are two main distinct problems with "ai," which exist kind of in opposition to each other. the first happens when ai is good at what it's supposed to do, and the second happens when it's bad at it.

the first is well-exemplified by ai visual art. now, there are a lot of arguments about the quality of ai visual art, about how it's soulless, or cliche, or whatever, and to those i say: do you think ai art is going to be replacing monet and picasso? do you think those pieces are going in museums? no. they are going to be replacing soulless dreck like corporate logos, the sprites for low-rent edugames, and book covers with that stupid cartoon art style made in canva. the kind of art that everyone thinks of as soulless and worthless anyway. the kind of art that keeps people with art degrees actually employed.

this is a problem of automation. while ai art certainly has its flaws and failings, the main issue with it is that it's good enough to replace crap art that no one does by choice. which is a problem of capitalism. in a society where people don't have to sell their labor to survive, machines performing labor more efficiently so humans don't have to is a boon! this is i think more obviously true for, like, manufacturing than for art - nobody wants to be the guy putting eyelets in shoes all day, and everybody needs shoes, whereas a lot of people want to draw their whole lives, and nobody needs visual art (not the way they need shoes) - but i think that it's still true that in a perfect world, ai art would be a net boon, because giving people without the skill to actually draw the ability to visualize the things they see inside their head is... good? wider access to beauty and the ability to create it is good? it's not necessary, it's not vital, but it is cool. the issue is that we live in a society where that also takes food out of people's mouths.

but the second problem is the much scarier one, imo, and it's what happens when ai is bad. in the current discourse, that's exemplified by chatgpt and other large language models. as much hand-wringing as there has been about chatgpt replacing writers, it's much worse at imitating human-written text than, say, midjourney is at imitating human-made art. it can imitate style well, which means that it can successfully replace text that has no meaningful semantic content - cover letters, online ads, clickbait articles, the kind of stuff that says nothing and exists to exist. but because it can't evaluate what's true, or even keep straight what it said thirty seconds ago, it can't meaningfully replace a human writer. it will honestly probably never be able to unless they change how they train it, because the way LLMs work is so antithetical to how language and writing actually works.

the issue is that people think it can. which means they use it to do stuff it's not equipped for. at best, what you end up with is a lot of very poorly written children's books selling on amazon for $3. this is a shitty scam, but is mostly harmless. the behind the bastards episode on this has a pretty solid description of what that looks like right now, although they also do a lot of pretty pointless fearmongering about the death of art and the death of media literacy and saving the children. (incidentally, the "comics" described demonstrate the ways in which ai art has the same weaknesses as ai text - both are incapable of consistency or narrative. it's just that visual art doesn't necessarily need those things to be useful as art, and text (often) does). like, overall, the existence of these kids book scams are bad? but they're a gnat bite.

to find the worst case scenario of LLM misuse, you don't even have to leave the amazon kindle section. you don't even have to stop looking at scam books. all you have to do is change from looking at kids books to foraging guides. i'm not exaggerating when i say that in terms of texts whose factuality has direct consequences, foraging guides are up there with building safety regulations. if a foraging guide has incorrect information in it, people who use that foraging guide will die. that's all there is to it. there is no antidote to amanita phalloides poisoning, only supportive care, and even if you survive, you will need a liver transplant.

the problem here is that sometimes it's important for text to be factually accurate. openart isn't marketed as photographic software, and even though people do use it to lie, they have also been using photoshop to do that for decades, and before that it was scissors and paintbrushes. chatgpt and its ilk are sometimes marketed as fact-finding software, search engine assistants and writing assistants. and this is dangerous. because while people have been lying intentionally for decades, the level of misinformation potentially provided by chatgpt is unprecedented. and then there are people like the foraging book scammers who aren't lying on purpose, but rather not caring about the truth content of their output. obviously this happens in real life - the kids book scam i mentioned earlier is just an update of a non-ai scam involving ghostwriters - but it's much easier to pull off, and unlike lying for personal gain, which will always happen no matter how difficult it is, lying out of laziness is motivated by, well, the ease of the lie.* if it takes fifteen minutes and a chatgpt account to pump out fake foraging books for a quick buck, people will do it.

*also part of this is how easy it is to make things look like high effort professional content - people who are lying out of laziness often do it in ways that are obviously identifiable, and LLMs might make it easier to pass basic professionalism scans.

and honestly i don't think LLMs are the biggest problem that machine learning/ai creates here. while the ai foraging books are, well, really, really bad, most of the problem content generated by chatgpt is more on the level of scam children's books. the entire time that the internet has been shitting itself about ai art and LLMs i've been pulling my hair out about the kinds of priorities people have, because corporations have been using ai to sort the resumes of job applicants for years, and it turns out the ai is racist. there are all sorts of ways machine learning algorithms have been integrated into daily life over the past decade: predictive policing, self-driving cars, and even the youtube algorithm. and all of these are much more dangerous (in most cases) than chatgpt. it makes me insane that just because ai art and LLMs happen to touch on things that most internet users are familiar with the working of, people are freaking out about it because it's the death of art or whatever, when they should have been freaking out about the robot telling the cops to kick people's faces in.

(not to mention the environmental impact of all this crap.)

#image generators#I’m sorry#the place I work decided to use image generators#rather than just contracting a concept artist#for a recent project#and even though the image generators they were using#didn’t produce anything even remotely usable#the guy who owns the company is so into AI#that he thinks it can and should be the only tool anyone uses ever#so for me it’s less about whether or not image generators can replace artists#and whether or not the people who write the paychecks think it can#which unfortunately many of them do#but anyway I do agree with your general point though#kinda disagree on the point in one of the other posts#about photoshop and image generators basically being the same#but I also think other people have made that point way better than I have so

648 notes


Text

Falling into the AI vortex.

Before I deeply criticize something, I try to understand it more than surface level.

With guns, I went into deep research mode and learned as much as I could about the actual guns so I could be more effective in my gun control advocacy.

I learned things like... silencers are not silent. They are mainly for hearing protection and not assassinations. It's actually small caliber subsonic ammo that is a concern for covert shooting. A suppressor can aid with that goal, but its benefits as hearing protection outweigh that very rare circumstance.

AR15s... not that powerful. They use a tiny bullet. Originally it could not even be used against thick animal hides. It was classified as a "varmint hunting" gun. There are other factors that make it more dangerous like lightweight ammo, magazine capacity, medium range accuracy, and being able to penetrate things because the tiny bullets go faster. But in most mass shooting situations where the shooting distance is less than 20 feet, they really aren't more effective than a handgun. They are just popular for that purpose. Dare I say... a mass shooting fad or cliche. But there are several handguns that could be more powerful and deadly—capable of one bullet kills if shot anywhere near the chest. And easier to conceal and operate in close quarters like a school hallway.

This deeper understanding tells me that banning one type of gun may not be the solution people are hoping for. And that if you don't approach gun control holistically (all guns vs one gun), you may only get marginal benefits from great effort and resources.

Now I'm starting the same process with AI tools.

Everyone is stuck in "AI is bad" mode. And I understand why. But I worry there is nuance we are missing with this reactionary approach. Plus, "AI is bad" isn't a solution to the problem. It may be bad, but it is here and we need to figure out realistic approaches to mitigate the damage.

So I have been using AI tools. I am trying to understand how they work, what they are good for, and what problems we should be most worried about.

I've been at this for nearly a month and this may not be what everyone wants to hear, but I have had some surprising interactions with AI. Good interactions. Helpful interactions. I was even able to use it to help me keep from an anxiety thought spiral. It was genuinely therapeutic. And I am still processing that experience and am not sure what to say about it yet.

If I am able to write an essay on my findings and thoughts, I hope people will understand why I went into the belly of the beast. I hope they won't see me as an AI traitor.

A big part of my motivation to do this was because of a friend of mine. He was hit by a drunk driver many years ago. He is a quadriplegic. He has limited use of his arms and hands and his head movement is constrained.

When people say, "just pick up a pencil and learn to draw" I always cringe at his expense. He was an artist. He already learned how to pick up a pencil and draw. That was taken away from him. (And please don't say he can stick a pencil in his mouth. Some quads have that ability—he does not. It is not a thing all of them can do.) But now he has a tool that allows him to be creative again. And it has noticeably changed his life. It is a kind of art therapy that has had massive positive effects on his depression.

We have had a couple of tense arguments about the ethics of AI. He is all-in because of his circumstances. And it is difficult to express my opinions when faced with that. But he asked and I answered. He tried to defend it and did a poor job. Which, considering how smart he is, was hard to watch.

But I love my friend and I feel I'd like to at least know what I'm talking about. I want to try and experience the benefits he is seeing. And I'd like to see if there is a way for this technology to exist where it doesn't hurt more than it helps.

I don't know when I will be done with my experiment. My health is improving but I am still struggling and I will need to cut my dose again soon. But for now I am just collecting information and learning.

I guess I just wanted to prepare people for what I'm doing.

And ask that they keep an open mind with my findings. Not all of them will be "AI is bad."

149 notes


Text

the scale of AI's ecological footprint

standalone version of my response to the following:

"you need soulless art? [...] why should you get to use all that computing power and electricity to produce some shitty AI art? i don’t actually think you’re entitled to consume those resources." "i think we all deserve nice things. [...] AI art is not a nice thing. it doesn’t meaningfully contribute to us thriving and the cost in terms of energy use [...] is too fucking much. none of us can afford to foot the bill." "go watch some tv show or consume some art that already exists. […] you know what’s more environmentally and economically sustainable […]? museums. galleries. being in nature."

you can run free and open source AI art programs on your personal computer, with no internet connection. this doesn't require much more electricity than running a resource-intensive video game on that same computer. i think it's important to consume less. but if you make these arguments about AI, do you apply them to video games too? do you tell Fortnite players to play board games and go to museums instead?

speaking of museums: if you drive 3 miles total to a museum and back home, you have consumed more energy and created more pollution than generating AI images for 24 hours straight (this comes out to roughly 1400 AI images). "being in nature" also involves at least this much driving, usually. i don't think these are more environmentally-conscious alternatives.

obviously, an AI image model costs energy to train in the first place, but take Stable Diffusion v2 as an example: it took 40,000 to 60,000 kWh to train. let's go with the upper bound. if you assume ~125g of CO2 per kWh, that's ~7.5 tons of CO2. to put this into perspective, a single person driving a single car for 12 months emits 4.6 tons of CO2. meanwhile, for example, the creation of a high-budget movie emits 2840 tons of CO2.

is the carbon cost of a single car being driven for 20 months, or 1/378th of a Marvel movie, worth letting anyone with a mid-end computer, anywhere, run free offline software that consumes a gaming session's worth of electricity to produce hundreds of images? i would say yes. in a heartbeat.

even if you see creating AI images as "less soulful" than consuming Marvel/Fortnite content, it's undeniably "more useful" to humanity as a tool. not to mention this usefulness includes reducing the footprint of creating media. AI is more environment-friendly than human labor on digital creative tasks, since it can get a task done with much less computer usage, doesn't commute to work, and doesn't eat.

and speaking of eating, another comparison: if you made an AI image program generate images non-stop for every second of every day for an entire year, you could offset your carbon footprint by… eating 30% less beef and lamb. not pork. not even meat in general. just beef and lamb.

the tech industry is guilty of plenty of horrendous stuff. but when it comes to the individual impact of AI, saying "i don’t actually think you’re entitled to consume those resources. do you need this? is this making you thrive?" to an individual who runs an AI program for 45 minutes a day is equivalent to questioning whether that person is entitled to a single 3 mile car drive once per month or a single meatball's worth of beef once per month. because over a month, all of these have the same CO2 footprint.

so yeah. i agree, i think we should drive less, eat less beef, stream less video, consume less. but i don't think we should tell people "stop using AI programs, just watch a TV show, go to a museum, go hiking, etc", for the same reason i wouldn't tell someone "stop playing video games and play board games instead". i don't think this is a productive angle.

(sources and number-crunching under the cut.)

good general resource: GiovanH's article "Is AI eating all the energy?", which highlights the negligible costs of running an AI program, the moderate costs of creating an AI model, and the actual indefensible energy waste coming from specific companies deploying AI irresponsibly.

CO2 emissions from running AI art programs: a) one AI image takes 3 Wh of electricity. b) one AI image takes 1 minute in, for example, Midjourney. c) so if you create 1 AI image per minute for 24 hours straight, or for 45 minutes per day for a month, you've consumed 4.3 kWh. d) using the UK electric grid through 2024 as an example, the production of 1 kWh releases 124g of CO2. therefore the production of 4.3 kWh releases 533g (~0.5 kg) of CO2.

CO2 emissions from driving your car: cars in the EU emit 106.4g of CO2 per km. that's 171.19g for 1 mile, or 513g (~0.5 kg) for 3 miles.
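for anyone who wants to re-run the comparison, here is a minimal python sketch of the arithmetic above. the inputs are the figures quoted in this post; the variable names and the exact miles-to-km factor are my own choices, and the results differ slightly from the rounded numbers in the text because nothing is rounded along the way.

```python
# Inputs quoted in the post; names are illustrative.
WH_PER_IMAGE = 3             # electricity per AI image, in Wh
MINUTES_PER_IMAGE = 1        # one image per minute
GRID_G_CO2_PER_KWH = 124     # UK grid, 2024, grams of CO2 per kWh
CAR_G_CO2_PER_KM = 106.4     # EU cars, grams of CO2 per km
KM_PER_MILE = 1.609344       # exact conversion factor (assumption: statute miles)

# 24 hours of non-stop image generation
images = 24 * 60 // MINUTES_PER_IMAGE
energy_kwh = images * WH_PER_IMAGE / 1000
ai_g_co2 = energy_kwh * GRID_G_CO2_PER_KWH

# a 3 mile round trip by car
drive_g_co2 = 3 * KM_PER_MILE * CAR_G_CO2_PER_KM

print(f"{images} images, {energy_kwh:.2f} kWh, {ai_g_co2:.0f} g CO2")
print(f"3 mile drive: {drive_g_co2:.0f} g CO2")
```

both totals land at roughly half a kilogram of CO2, which is the whole point of the comparison.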

costs of training the Stable Diffusion v2 model: quoting GiovanH's article linked above. "Generative models go through the same process of training. The Stable Diffusion v2 model was trained on A100 PCIe 40 GB cards running for a combined 200,000 hours, which is a specialized AI GPU that can pull a maximum of 300 W. 300 W for 200,000 hours gives a total energy consumption of 60,000 kWh. This is a high bound that assumes full usage of every chip for the entire period; SD2’s own carbon emission report indicates it likely used significantly less power than this, and other research has shown it can be done for less." at 124g of CO2 per kWh, this comes out to 7440 kg.
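the training-cost comparison can be checked the same way. this is a rough sketch using the upper-bound figures quoted above plus the car and movie numbers from earlier in the post; variable names are mine. it uses 124g of CO2 per kWh, so the results sit slightly off the "20 months" and "1/378th" in the main text, which were computed from ~125g.

```python
# Upper-bound training figures quoted from the article; names are illustrative.
GPU_HOURS = 200_000          # combined A100 hours
GPU_MAX_WATTS = 300          # maximum draw per card
GRID_G_CO2_PER_KWH = 124     # grams of CO2 per kWh
CAR_TONNES_PER_YEAR = 4.6    # one car driven for 12 months
MOVIE_TONNES = 2840          # one high-budget movie

training_kwh = GPU_HOURS * GPU_MAX_WATTS / 1000
training_tonnes = training_kwh * GRID_G_CO2_PER_KWH / 1_000_000

# express the training run in "months of driving one car"
car_months = training_tonnes / CAR_TONNES_PER_YEAR * 12
# and as "how many training runs fit in one movie"
runs_per_movie = MOVIE_TONNES / training_tonnes

print(f"{training_kwh:.0f} kWh, {training_tonnes:.2f} t CO2")
print(f"about {car_months:.1f} car-months, 1/{runs_per_movie:.0f} of a movie")
```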

CO2 emissions from red meat: a) carbon footprint of eating plenty of red meat, some red meat, only white meat, no meat, and no animal products: the difference between a beef/lamb diet and a no-beef-or-lamb diet comes down to 600 kg of CO2 per year. b) Americans consume 42g of beef per day. this doesn't really account for lamb (egads! my math is ruined!) but that's about 1.2 kg per month or 15 kg per year. spreading the 600 kg over 365 days, that single daily piece of 42g has a 1.65kg CO2 footprint. so our 3 mile drive/4.3 kWh of AI usage have the same carbon footprint as a roughly 12g piece of beef. roughly the size of a meatball [citation needed].
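and the meatball math, sketched the same way. it assumes, like the text does, that the whole 600 kg yearly difference is attributable to beef, which ignores lamb; the names are mine.

```python
# figures quoted in the post; names are illustrative
DIET_DIFF_KG_CO2_PER_YEAR = 600   # beef/lamb diet vs. no-beef-or-lamb diet
BEEF_G_PER_DAY = 42               # average American beef consumption
TARGET_KG_CO2 = 0.5               # the ~0.5 kg from the AI and car examples

# CO2 attributed to one day's beef, then to a single gram of beef
kg_co2_per_day = DIET_DIFF_KG_CO2_PER_YEAR / 365
kg_co2_per_gram_beef = kg_co2_per_day / BEEF_G_PER_DAY

# how much beef carries the same footprint as the AI usage / 3 mile drive
equivalent_beef_g = TARGET_KG_CO2 / kg_co2_per_gram_beef

print(f"one day's beef:  {kg_co2_per_day:.2f} kg CO2")
print(f"0.5 kg CO2 is about {equivalent_beef_g:.1f} g of beef")
```

this comes out a little under 13g, in line with the "roughly 12g" above once you allow for rounding.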

311 notes

Text

hellooo, i’m new to writing (pls dont be mean i cant take it)

🫧-my favs

MASTERLIST (smut + smau👻)

Jujutsu Kaisen

smau1 smau2

1. The strongest tease (Gojo Satoru x Reader)🫧

2. Forbidden from the start (Toji Fushiguro x Reader)

3. Missing you (Getou Suguru x Reader)

4. Yours to break (Choso Kamo x Reader)

Naruto

smau 0 smau 1, smau 2, smau 3, smau 4, smau 5

1. Pushing his buttons (Sasuke x Reader) - Part 1, 2, 3🫧

2. More than just a genius (Shikamaru x Reader)

3. Passion behind his mask (Kakashi x Reader)

4. Market by fate (Kakashi x Reader)🫧

5. Between two masks, beneath your skin (Kakashi x Reader x Obito) 🫧🫧

Attack on titan

smau 1 smau 2

1. Beneath the uniform (Levi Ackerman x Reader)🫧

2. Comfort (Eren Yeager x Reader)

Bleach

smau 1

1. Under their spell / Mastered by deception (Urahara Kisuke x Reader x Aizen)

2. Unspoken Chains (Aizen Sosuke x Reader)

Hunter x Hunter

smau 1

1. Unspoken tension / Quiet Obsession (Illumi Zoldyck x Reader)

IMPORTANT

besides the kind messages I received in the last month on tumblr, I also got a few hate comments about my stories, like “AI generated,” and even insults i’m not familiar with.

tbh, I’ve been reading smut and all kinds of books since I was 12 (thanks dad), so seeing those two messages stung a little. i only started writing in november and i usually spend at least 5 hours on a story (+10k words) just to write, edit, and review. right now i have two stories I’ve been working on for a week, so getting messages like that feels surprising and shows a lack of empathy for writers.

i should mention I’m not a native english speaker, but reading in english helped me. to be clear, i also use online tools to check grammar and how smooth it sounds – which I don’t see anything wrong with. keep in mind the plot and dialogue are entirely mine. so, I’ll be blocking comments like that. thanks!

much love💜

#naruto smut#kakashi smut#itachi smut#jjk smut#gojo smut#toji smut#getou smut#sasuke smut#kakashi x reader#kakashi x obito#obito smut#shikamaru smut#aizen sosuke x reader#aizen smut#urahara smut#urahara kisuke smut#urahara kisuke x reader#eren jeager x reader#levi ackerman x female reader#levi smut#levi ackerman x you#jjk gojo#getou suguru x reader#toji x y/n#sukuna#megumi smut#yuji itadori#itadori x reader#gojo x reader#smut

282 notes
