Text

Grids of Sensors in Non-Commutative Space

There's an idea of "non-commutative geometry" which seeks to view non-commutative algebras as if they were dual to some "non-commutative space", in the same way that a commutative $C^*$-algebra (over the complex numbers) can be viewed as the algebra of continuous complex-valued functions on its "spectrum", a locally compact Hausdorff space (or, specifically, the algebra of such functions that "vanish at infinity").

Some have considered this in the context of quantum mechanics, with the idea that, just as the operators for position along some axis and momentum along that axis don't commute, perhaps the same is true for position along one axis and position along a different (orthogonal) axis. (I think there is the idea that this might help prevent some singularities in quantum gravity or something? idk. There's some kind of motivation for it.)

This idea seems rather counterintuitive to me. Position and momentum not commuting (with their commutator being a constant, rather than some operator that could be zero in some states), and things therefore not having a simultaneously well-defined position and momentum, isn't too hard to accept. Being able to say "here's the wavefunction in the position basis" and "here's the wavefunction in the momentum basis" seems nice enough. But for position along one axis and position along another axis to not be simultaneously well-definable seems harder to imagine. Like, if there was a maximum that the product of uncertainty in position and in momentum could be.

The idea comes to mind of those simulations of "how it would look if you were walking around some city, if the speed of light was super slow". And then the question becomes, "if the degree of non-commutativity between different axes was non-negligible, what would it look like?"

If one wants to consider the algebra for a quantum mechanical system with two non-commutative dimensions of space, one option is to consider the unital $C^*$-algebra generated by self-adjoint operators $q_x, q_y, p_x, p_y$, subject to the relations $[q_x, p_x] = i\hbar$, $[q_y, p_y] = i\hbar$, $[q_x, p_y] = 0$, $[q_y, p_x] = 0$, $[q_x, q_y] = i\theta$ (and, presumably, $[p_x, p_y] = 0$).

If one then takes the Hamiltonian for a 2D quantum simple harmonic oscillator, but using these $q_x, q_y$ and $p_x, p_y$ in place of the usual position and momentum operators, one can still do things like finding the spectrum of the Hamiltonian, etc. It turns out that one can even define some modified operators $Q_x, Q_y, P_x, P_y$ in terms of our $q_x, q_y, p_x, p_y$, such that $[Q_x, Q_y] = 0$, $P_x = p_x$, $P_y = p_y$, and the $Q_x, Q_y$ and the $P_x, P_y$ have the same commutation relations with each other as the normal position and momentum operators do. So one can end up describing wavefunctions in the $Q_x, Q_y$ basis or in the $P_x, P_y$ basis, with $Q_x$ and $Q_y$ acting sorta like position, even though they aren't our actual position operators. If we do this, then our simple harmonic oscillator defined in terms of $q_x, q_y, p_x, p_y$ ends up, when viewed in terms of $Q_x, Q_y, P_x, P_y$, being like a simple harmonic oscillator except with a term added for a magnetic field (proportional in strength to $\theta$, and inversely proportional to $\hbar$).
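For concreteness, one standard choice of such modified operators (not spelled out in what I wrote above, and often called a "Bopp shift") is $Q_x = q_x + \frac{\theta}{2\hbar} p_y$ and $Q_y = q_y - \frac{\theta}{2\hbar} p_x$. Since commutators are bilinear, the claimed relations can be checked by bookkeeping on coefficient vectors. The sketch below does that, assuming $[p_x, p_y] = 0$ and using made-up sample values for $\hbar$ and $\theta$; the names `OMEGA` and `commutator_over_i` are just for illustration.

```python
from fractions import Fraction

# Assumed sample values; Fractions keep the arithmetic exact.
HBAR = Fraction(1)
THETA = Fraction(3, 10)

# Basis order: q_x, q_y, p_x, p_y.
# OMEGA[a][b] holds [e_a, e_b] / i for the generators e_a.
# The entry for [p_x, p_y] is taken to be 0 (an assumption).
OMEGA = [
    [0, THETA, HBAR, 0],
    [-THETA, 0, 0, HBAR],
    [-HBAR, 0, 0, 0],
    [0, -HBAR, 0, 0],
]


def commutator_over_i(a, b):
    """Return [A, B] / i for linear combinations A, B given as coefficient lists."""
    return sum(a[r] * OMEGA[r][c] * b[c] for r in range(4) for c in range(4))


# Coefficient vectors of the four generators.
q_x, q_y, p_x, p_y = ([Fraction(int(r == c)) for c in range(4)] for r in range(4))

# The shifted "position-like" operators.
shift = THETA / (2 * HBAR)
Q_x = [q + shift * p for q, p in zip(q_x, p_y)]
Q_y = [q - shift * p for q, p in zip(q_y, p_x)]

print(commutator_over_i(Q_x, Q_y))  # the shifted operators commute
print(commutator_over_i(Q_x, p_x))  # canonical with p_x
```

Substituting $q_x = Q_x - \frac{\theta}{2\hbar} P_y$ and $q_y = Q_y + \frac{\theta}{2\hbar} P_x$ into an oscillator Hamiltonian $\frac{1}{2m}(p_x^2 + p_y^2) + \frac{m\omega^2}{2}(q_x^2 + q_y^2)$ (with $m$ and $\omega$ as assumed names) produces a cross term $-\frac{m\omega^2\theta}{2\hbar}(Q_x P_y - Q_y P_x)$, which has the same form as the angular-momentum coupling to a uniform magnetic field and carries the $\theta/\hbar$ dependence described above.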

This is all stuff that I read about just recently. I'm not sure how it works in 3D. I imagine something similar, but the way of defining the Q_x and Q_y is probably substantially more complicated?

My idea (which others may have had before and investigated thoroughly, idk, I haven't done a literature search) for thinking about "what things would look like" in a world with non-commutative geometry (where the non-commutativity is large enough to be noticeable at macroscopic scales) is to consider something like a "camera" made of a grid of detectors. Even though we don't really have a good notion of "points in space" in this kind of setting, we can still define an operator for "the distance between two particles", $\sqrt{(q_{x,i} - q_{x,j})^2 + (q_{y,i} - q_{y,j})^2}$, and use this to write a Hamiltonian with a potential energy term which encourages each particle ("sensor") to be around some prescribed distance from each of its immediate neighbors, and then consider a ground state of this system. (Oh, $q_{x,i}$ and $q_{x,j}$ should commute with each other btw. Operators for particle $i$ and operators for particle $j$ should commute with each other.) In order to say something to the effect of "our grid of sensors is located here in our lab, not moving", I think the grid should be labeled by pairs of integers between $-N$ and $N$, and the 4 corners should be "pinned" at certain coordinates (proportional to $N$) by replacing the position operators for those particles with constants, namely the intended coordinates of the particle in question. This is maybe somewhat unnatural, because it would imply that the sensors at these corners have well-defined coordinates in both x and y simultaneously (which would normally be impossible for particles in this non-commutative space), but we could just have those 4 particles not behave as sensors I guess. Anyway, if we take the limit as $N$ goes to infinity, they would be far away from the origin, so hopefully this unnatural pinning wouldn't matter too much to things near the middle of the grid?
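As a rough sketch of the kind of Hamiltonian meant here (the mass $M$, spring constant $k$, and preferred spacing $\ell$ are names I'm making up for illustration, and the quadratic form of the potential is just one possible choice, with the sum running over neighboring pairs):

```latex
\begin{align*}
d_{ij} &= \sqrt{(q_{x,i} - q_{x,j})^2 + (q_{y,i} - q_{y,j})^2}, \\
H &= \sum_i \frac{p_{x,i}^2 + p_{y,i}^2}{2M}
     + \frac{k}{2} \sum_{\langle i,j \rangle} (d_{ij} - \ell)^2, \\
[\,q_{x,i} - q_{x,j},\; q_{y,i} - q_{y,j}\,]
  &= [q_{x,i}, q_{y,i}] + [q_{x,j}, q_{y,j}] = 2 i \theta \qquad (i \neq j).
\end{align*}
```

The last line only uses the assumptions that operators for different particles commute and that every particle has the same $\theta$. It says the two components of the separation are themselves a conjugate pair, so (for $\theta > 0$) $d_{ij}^2$ has the harmonic-oscillator-like spectrum $2\theta(2n+1)$ for $n = 0, 1, 2, \dots$, and in particular $d_{ij}^2$ can never be below $2\theta$.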

Then, if we want to ask about the location of some particle that isn't part of our grid of sensors, we can look at the projections for "is the distance between the particle and sensor j less than (some distance)?". Of course, these projections might not commute with each-other..? But, even if they don't, we can still use them for a Hamiltonian that represents particles being detected at whatever sensor they are nearby, and look at the probability distribution over "what detector was it detected at" that way, without needing to have "it is at detector i" and "it is at detector j" be commuting projections.

Oh, also, maybe one should look at the limit where the masses of the detectors are large (and equal to each other), and/or where $\hbar$ is small, so that the position vs momentum uncertainty doesn't play as large a role, just the uncertainty between x and y position?

If anyone has any thoughts on this, I'd like to hear it. (If you are aware of someone who has done this already, I'd especially like to hear it. I want answers, and I don't have time to work through stuff to find them, at least for now.)

#physics #mathpost #non-commutative geometry #quantum mechanics #math #I should be finishing writing dissertation not thinking about distractions like this! #mathblr

1 note


Text

Did you miss the part about a person dieting by choosing what to buy at the store so they don’t have to choose not to eat certain things while at home? Where the idea was that people who believe they would be better off with a machine with such restrictions would choose to purchase machines with such restrictions? The idea wasn’t that people wouldn’t have the option to buy machines without these restrictions, but that they would have the choice, and pick the one they feel is better for them.

If you did catch that part, calling it a “hellscape” seems very strange to me.

You could still say that a person making that choice would be choosing to live under someone else’s rules, but that doesn’t really seem like a problem? They can change their mind and buy another computer instead. If you still find this objectionable, do you find “dieting by choosing not to buy sweets while at the store” to be objectionable?

[ learn-tilde-ath ]

A number of good thoughts here. A nitpick: spreadsheet software very much does support random number generation, and as such I don’t think that’s a good basis to make a distinction. However, effective randomness of “reward” might be. I’ve also thought things that might be called UBL might be good. Perhaps e-ink displays could be helpful for some of this?

Good point, I should have assumed that Excel/etc would have PRNG, although I suppose if a society were implementing something like this, a spreadsheet suite without one would appear pretty quickly.

"Reward" is more subjective, though. CAD software would be another issue. Overall, I think it's undesirable to have a mandatory software classification board, because then that would be used to choke out political dissent. (Dissent against who? Over what? Whoever's in charge at the time, over whatever issue is inconvenient for them.)

Also some people do recommend setting your smartphone to a monochrome display setting, although I have not tried this.

22 notes


Text

Idea for a set-up:

Suppose a standard S for a type of device, with the following constraints on how they send/receive data on the internet:

For information that is publicly readable by everyone, an S device will by default only display it to the user if it is at least 24 hours old. (Note: this doesn’t imply a delay between requesting the page and receiving it.)

Information published from an S device to be globally readable is made available when a certain (configurable) time of day passes (but an S device doesn’t let you use the time being configurable to make it sooner).

A user of an S device can add other S devices (or perhaps user IDs) to a whitelist, where adding to the whitelist takes effect after a 24 hour delay. Messages from a whitelisted user/device can be displayed immediately, provided that the whitelisting is reciprocated. There is a limit (of say, 1000? Maybe 10,000?) on the number of devices/addresses/ids that each device/id can whitelist. (This allows for chat rooms and video calls, etc., but both parties have to decide in advance to whitelist each other.)
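The three rules above could be sketched roughly as follows. This is only a toy model under my own assumptions: timestamps are integer seconds counted from some midnight, and the names `SDevice`, `may_display_public`, `may_display_message`, and `next_release` are made up for illustration. It also doesn't model the "can't reconfigure the release time to make it sooner" part.

```python
DAY = 24 * 60 * 60  # seconds


class SDevice:
    """Toy model of one S device and its whitelist."""

    def __init__(self, device_id, whitelist_limit=1000):
        self.device_id = device_id
        self.whitelist_limit = whitelist_limit
        self.whitelist = {}  # peer id -> time the peer was added

    def request_whitelist(self, peer_id, now):
        """Add a peer; returns False if the whitelist is already full."""
        if peer_id in self.whitelist:
            return True
        if len(self.whitelist) >= self.whitelist_limit:
            return False
        self.whitelist[peer_id] = now
        return True

    def trusts(self, peer_id, now):
        """A whitelist entry only counts once it is at least 24 hours old."""
        added = self.whitelist.get(peer_id)
        return added is not None and now - added >= DAY


def may_display_public(published_at, now):
    """Public content is shown only once it is at least 24 hours old."""
    return now - published_at >= DAY


def may_display_message(receiver, sender, now):
    """Real-time display requires matured whitelisting in both directions."""
    return (receiver.trusts(sender.device_id, now)
            and sender.trusts(receiver.device_id, now))


def next_release(submitted_at, release_offset):
    """First time strictly after submission at the configured time of day."""
    candidate = submitted_at - submitted_at % DAY + release_offset
    return candidate if candidate > submitted_at else candidate + DAY
```

For example, if device `a` whitelists `b` at time 0 and `b` whitelists `a` at time 100, then `may_display_message(a, b, now)` first becomes true at `now = DAY + 100`, when the later of the two entries has matured.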

There are some things that I’d like for such a setup to support but which these restrictions don’t quite support. For example, it doesn’t support online matchmaking in video games, or joining an irc channel about a technical topic in order to get help on it on the same day that one learns of the technical topic.

But, such a setup should allow traditional websites without issue (though RSS feeds would be a day behind. Eh.) including like, homestarrunner (“It’s dot com”), and even like, sharing a cool video one found online in a chat room one is a part of.

It seems somewhat compatible with web forums, but not necessarily entirely?

Ah, I guess it depends how you apply the requirements. If you say the forum is on an S device or has an id, then as long as the number of active users of the forum is below the limit, then it works without issue (well, the public view of the forum would be a day behind what the actual users of the forum would see, but that’s no big deal). But if you consider the messages to be from each of the other users directly, that’s a bit of a mess. I think the former way of applying the rule probably makes more sense. Though, in that case, there could end up being like, a hierarchy of ids which could be used to circumvent the limitation on the number of real-time connections, and there would need to be some way of either disallowing that, or at least giving a user ample warning before letting them whitelist (a part of) such a thing..

Oh, and, bonus: if chat rooms or forums under this paradigm were limited in the number of active real-time users they could have, they probably couldn’t really use on-the-fly targeted ads, because users not in the real-time whitelist could only receive versions that have existed for at least 24 hours, and the real-time active users probably wouldn’t be numerous enough to be worth doing real-time targeted ads for? So probably the ads would either have to be semi-static, or the site would have to be financed in a way other than ads?

Of course, that might just make them not financially viable? Buuut… idk how much more on-the-fly-targeted ads really helps the company being advertised over static ads?

I've been doing some conceptual sketching over the last several weeks, chewing on the limited technology zones idea that I've been working on. (It's not just an excuse for fictional detectives.)

Rather than proposing a single solution, this post covers some of that thinking, to provide you with concepts to chew on.

The Medieval/1900/1960s/1990s/2010s/+ tech zone poll was based on a general sense regarding the speed of movement and the speed of information, and noticing that in the year 2000, "the Internet" was still a place you went to (by sitting down at your computer), and not a cyberspace layer that surrounds the planet.

But what, exactly, would "1990s/Y2K computer limits" cash out to? 800x600 pixel resolutions? 800MHz processors?

Just what was this mysterious "it factor" we would be trying to bring back by making computers slower and less advanced?

From my notes:

One way to view virtual reality is that the VR environment has extremely low weight, and is therefore extremely mutable. Any physical good, such as a sports car, can be simulated, and in almost any number, which raises a question: why buy a real sports car when we can simulate them? People are at risk of getting lost in virtual reality, with simulations becoming more satisfying to them than real life, leading them to underinvest in their real life. We can think of technology as altering the ratio of effort to environment change. High effort is required to move dirt with a shovel. Low effort is required to move the same volume of dirt with a bulldozer.

The assumption behind the zones-by-tech-level question is that beyond a certain point, except for medical technology, additional high-tech development is superfluous, because virtual reality means that nearly arbitrary sensory experiences can be generated relative to an agent's sense limits.

Demand results when the expected value of a change in the environment loops back through the agent, resulting in a potential change in behavior.

The point of video games is to produce a high stimulus feedback relative to the amount of effort. (I read that in an article on Gamasutra once years ago, and it really stuck.)

In a virtual reality environment, reward signals can become disconnected from agent well-being.

Movies, television, and books appear to be less addictive than video games and social media. What separates them? Interactivity appears to be the primary difference.

With this in mind, I was then able to work backwards and develop an intermediate regulatory concept: interaction frames.

With a book, the content is static the entire time. With a DVD, you press a button and then the DVD may play to completion. You might skip back to a previous scene, but there is no need for further interaction.

A paged website is static until you update the page. With continuous-scroll social media, new content is always being added, and at any moment you may receive a notification to get into an argument or that someone liked your post. Video games in general tend to have continuous interaction.

This model does not adequately address the situation, and, importantly, cannot distinguish between a video game and a spreadsheet program like Microsoft Excel.

We can adapt a concept from gambling: human beings seek mastery or identification of patterns, and the randomness of gambling prevents mastery of the pattern from being achieved. A spreadsheet is very much not random, while loot drops in many video games are quite random!

We could at least measure commands to produce random numbers, or require registration of pseudorandom number generators.

However, this is still insufficiently general. Simulator games may generate complex behavior from simple rules. Are we stuck regulating "game-like elements" by committee? That doesn't sound right.

Still, people do get bored of single-player games, either by mastering the gameplay, or when they get used to the story elements of the game. A visual novel is more like a spreadsheet or a book than like a slot machine.

Also, not all social media seems to be equally addictive. Why were chatrooms seemingly less dangerous than Twitter?

There is an anticipation of information gain (informative tweets). There is an anticipation of exciting experiences (someone shows up to fight!). Also, from the user's perspective, it's somewhat random.

In a chatroom, there are fewer people, and they stay for longer. This means that (1) you have more information about them and their positions, (2) you have more incentive to be cordial, and (3) because there is a limit to how much they can have gotten up to while you weren't looking, there is a limit on the anticipated amount of new social information.

On Twitter, there is an endless stream of new people to argue with, and they can show up at any time. You can post about some chalupas you made yesterday, and some lunatic will show up to fight you. Thus, there is always the background anticipation of an (emotionally stimulating) attack.

In a chatroom, if someone would attack you over chalupas, you already know him as the chalupa guy. In real life, an argument is limited by space and time - the chalupa argument ends by default when the bar closes and the chalupa guy is no longer within earshot, and you cannot reopen it until you see him again.

Tumblr is slower-paced, but things like "likes" are continuous, so there is always the incentive to check in to see how your post is doing.

This allows us to get into a model based on food.

There is a dieting strategy involving not buying junk food at the store, so that it is not at your house when you get a craving for it.

This implies that the craving is a temporary impulse or peak, and that it just has to be outlasted. If the craving were uniform, the strategy wouldn't work, because the dieter would just buy the junk food at the store.

(This suggests that the baseline craving for drugs is higher, because drug addicts are willing to take much more extreme actions to feed their addictions, over a longer time period, and that the symptoms for withdrawal (a more constant negative stimulus) are worse.)

So is control in the hands of the agent, or is it in the environment? Is there choice, or not? The dieting strategy appears to split the difference - removing junk food from the cupboard is a kind of prosthetic self-control and willpower shifting. Willpower exists and can be exercised, but is limited, and reserves vary over time. Altering the environment at a point of high willpower can reduce willpower requirements in later contexts, until they are within the window of reliable feasibility.

This suggests a strategy of altering the digital environment to enable users to act on meta-preferences for prosthetic self-control.

For social media services, this suggests regulations imposing new usage modes. For example, it might be required to provide access to third-party user interfaces, which might do things like hide the number of likes. Alternatively, a user might receive all the tweets from a specific set of accounts as a daily summary. Since social media companies require revenue, this access might be a paid service based on average foregone ad revenue. It's a matter that would require a good deal more consideration.

For other items, as part of a broader social movement, we might imagine users being able to buy dedicated hardware. Attempting to control interactivity via software requires a great deal more regulation and is easier to bypass. By contrast, we might imagine a hardware module that writes to a virtual canvas at some rate. The user could then scroll the canvas without receiving updates until the next refresh.
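The "virtual canvas" behavior can be sketched in a few lines. This is a software stand-in for what the paragraph imagines as a hardware module, and the class name `RefreshLimitedCanvas`, the use of seconds since power-on as the clock, and the choice to refresh lazily on viewing are all assumptions made for illustration.

```python
class RefreshLimitedCanvas:
    """Toy model: new content reaches the visible canvas only at refresh times."""

    def __init__(self, refresh_interval):
        self.refresh_interval = refresh_interval  # seconds between refreshes
        self.pending = []       # content received since the last refresh
        self.visible = []       # content the user can scroll through
        self.last_refresh = 0   # time of the last refresh (device start = 0)

    def receive(self, item):
        """Incoming content is buffered, never shown immediately."""
        self.pending.append(item)

    def view(self, now):
        """Return what the user sees; refresh first if the interval has elapsed."""
        if now - self.last_refresh >= self.refresh_interval:
            self.visible.extend(self.pending)
            self.pending.clear()
            self.last_refresh = now
        return list(self.visible)
```

With a one-hour interval, an item received a few seconds after start-up stays invisible however often `view` is called, until the first call at or after the one-hour mark.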

The user could buy an appliance device with built-in limits, similar to not bringing junk food home from the store.

Regarding welfare...

If social media makes people insane, then refraining from social media is pro-social, but suffers from a coordination problem.

However, that's more speculative. More broadly, this is about the liberal concern of consent under capitalism, and what it means to consent to technology. There are two considerations, in tension.

If you have to either use a technology or be homeless, then can you really have been said to have "consented" to the technology?

On the other hand, why should everyone else be expected to subsidize some guy using a horse and buggy?

Maximization of pattern efficiency is likely to be hostile to the continued existence of the human species, not that differently from how hard drugs distort and kill people. On the other hand, insufficient pattern efficiency means reduced production, which may mean making hard moral choices and having wasteful suffering that could have been avoided.

(Regulated capitalism is actually pretty good about consent relative to production levels, compared to say, feudalism or command economies.)

The conventional liberal response is a universal basic income - this neatly ties up many questions of consent by removing the greatest point of leverage - but this is problematic, as it involves redistributing labor to able people who may not be working at all. I've been working on an alternative based on "universal basic land," but I'm not satisfied with it yet. The essential idea is that land and materials are scarce, while labor (directed effort) and capital (configurations of materials) are variable. If life support (sunshine, air, food, water) is guaranteed, then trade is a net benefit (rather than resulting in potential gradual loss of life support due to lack of leverage).

8 notes


Text

Cool! I tried to work out how gradient descent could be done with as few auxiliary bits as possible, but none of the things I tried worked. But I only tried a couple things.

But also, hm, if the gradient descent step is reversible, then it has to preserve volume (or, I guess just cardinality, as the numbers have finite bit depth), which seems like an obstacle if we want to end up in the region where the loss is low.

I guess if we define a probability distribution in terms of the loss, as like a Boltzmann distribution type thing, then maybe some kind of, “the number of auxiliary bits spent/erased corresponding to the difference between the uniform distribution and the distribution obtained from the loss function”? I think I saw a video claiming something similar about the distribution learned (rather than a distribution over possible parameters), but the argument I saw seemed unclear.

Ah, if we treat loss as like potential energy, then if instead of plain gradient descent, gradient descent with momentum is used, in a way such that energy is conserved, then that seems like it could be nice and reversible. It is in the continuum limit at least (assuming the loss is smooth) and I would imagine it could be made to be reversible when using discrete time-steps and finite bit depth as well. In the continuous limit at least the energy would be conserved, and probably also if things are set up for the process to be reversible in the discrete case? Then, maybe bits could be spent on decreasing the kinetic energy when the potential energy (the loss) is low? Is there some optimal way to do this?
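Here is a minimal sketch of how the discrete, finite-bit-depth version could be made exactly reversible, assuming parameters and momenta are stored as fixed-point integers. Each half-step adds to one variable a quantity computed only from the other, so it can be undone exactly, rounding included. The names `SCALE`, `kick`, `forward`, `backward`, and the toy quadratic loss are my own choices for illustration, not an established method from the thread.

```python
SCALE = 1 << 16  # fixed-point resolution: stored integer = real value * SCALE


def kick(q, grad, step):
    """Integer momentum change, computed from the positions q alone."""
    x = [qi / SCALE for qi in q]
    return [round(-step * g * SCALE) for g in grad(x)]


def forward(q, p, grad, step):
    """One momentum-style update: kick the momentum, then move by it."""
    p = [pi + ki for pi, ki in zip(p, kick(q, grad, step))]
    q = [qi + pi for qi, pi in zip(q, p)]
    return q, p


def backward(q, p, grad, step):
    """Exact inverse of forward: undo the move, then undo the kick."""
    q = [qi - pi for qi, pi in zip(q, p)]
    p = [pi - ki for pi, ki in zip(p, kick(q, grad, step))]
    return q, p


def quadratic_grad(x):
    """Gradient of the toy loss L(x) = 0.5 * sum(x_i ** 2)."""
    return list(x)
```

Running `forward` some number of times and then `backward` the same number of times returns exactly the starting integers. There is no friction term here, so nothing is being erased yet; any damping of the kinetic energy is where auxiliary bits would have to be spent.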

If we want our computation to start in some initial configuration which may-as-well-be random among N possible states (as far as it relates to the final answers we want), and we want it to end up in one of M states (with M < N), then by the generalized pigeonhole principle, at least 1 of the M desired final states is reached from at least N/M of the starting states, and so this requires spending at least log_2(N/M) bits to encode the information of which starting state was used.
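To make the counting concrete, here is the bound written out with made-up numbers (the specific values of $N$ and $M$ are only for illustration):

```latex
\[
\#\text{bits} \;\ge\; \log_2 \frac{N}{M},
\qquad \text{e.g. } N = 2^{20},\; M = 2^{12}
\;\Longrightarrow\; \log_2 \frac{2^{20}}{2^{12}} = 8 \text{ bits}.
\]
```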

So, for the size M of the set of configurations that are good enough, hm…

So if M/N is the fraction of the possible states which have loss less than some quantity… we only want to spend a bit if it will decrease the size of the set of “all points with loss less than or equal to the largest loss we could end up at” by half I guess? Uhhh idk how we would determine that though..

New piece on a new startup in a new venue:

3 notes


Photo

Thanks for writing this! Mentioning that the issue is the sign getting flipped intrigues me a bit. I like superalgebras. The candidate monoidal product failing to be associative due to a sign error reminds me of uh, supermonoidal categories? Where (A \otimes B) (C \otimes D) = (-1)^{|B| |C|} (A C) \otimes (B D) .

This wouldn’t happen to be anything like that, would it?

I guess not, as I guess what you described is more like, (A \otimes B) \otimes C = - A \otimes (B \otimes C) ?

But, still, I am led to wonder, “what if instead of trying to get rid of the sign issues, we embrace them somehow?” . Maybe that’s not a good idea here though.

the stable homotopy category by Cary Malkiewich

78 notes


Text

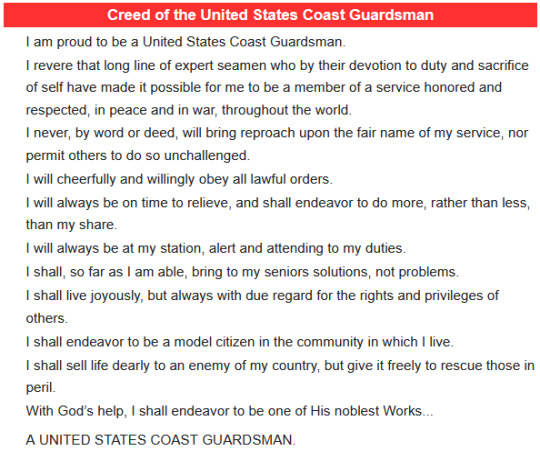

“note in passing the mathematical impossibility of everyone doing more than their share” : it says “endeavor to”, and says “rather than less”. The meaning of this is clear. It is meant to be a pledge to try to err on the side of doing more than one’s share, rather than erring on the side of doing less than one’s share. This is entirely reasonable. We do not say of people competing in a race that it is unreasonable for them to try to run faster than their competitors. If everyone tries to run faster than their competitors, everyone runs quickly and is challenged (provided that they have similar enough capabilities and are capable of running fast). If everyone tries to err on the side of doing more than their fair share rather than on the side of doing less, then all the work is more likely to get done.

“the claim that the noblest work of [G]od is creating the United States coastguard” : it both says “one of” and “endeavor to”.

Reads like it was written for 12 year olds, no disrespect

41 notes


Text

Could we take a countable nonstandard model of true arithmetic, and pick some nonstandard natural number n, and consider S^n ? (I suppose the elements of these spheres would have to be n-tuples of possibly-nonstandard reals rather than standard reals..? (n-tuples being functions with domain {all the possibly-nonstandard natural numbers strictly less than n}.) )

Would such a topological space remain a valid topological space when we remember the distinction between the standard and non-standard reals? I think they would… There are suddenly more unions and intersections one could possibly take (because you can have a union or intersection that is indexed over the standard natural numbers), but I think the topology should stay the same..

Wikipedia mentions that S^\infty as the unit sphere in “the” countably-infinitely-dimensional Hilbert space, is diffeomorphic to the Hilbert space itself, so, I don’t feel that a mild strengthening of requirements would do enough. Or at least, making things respect differential structure is insufficient.

Nonstandard natural numbers seem like they should allow you to make a number of infinite things behave somewhat as if they are finite, so that’s what came to mind for me.

Maybe another option could be adding some kind of symplectic and/or contact structure? That way when one would do the “add another dimension to push stuff away into”, you run into things where you are switching the parity (switching between symplectic and contact), and maybe that could somehow prevent the contractibility?

I’m fairly confident that for non-standard n, S^n (once defined properly…) won’t be contractible, but I don’t know how nicely (or, perhaps, how poorly) such spheres with non-standard-real coordinates would play with more ordinary topological spaces defined in terms of the standard reals. Which might make the idea not work for what you would like, idk.

Perhaps one could consider n-tuples of standard real numbers, but I think you then wouldn’t be able to get it being non-contractible just by virtue of being an n-sphere for finite n, because you can’t apply the transfer principle to stuff that uses the predicate “standard”?

Hm, another thing: If one has a non-standard model of the natural numbers and sets involving them,

externally, there exists a bijection from n-tuples of elements of a set A, to n-tuples of elements from the set A where the first entry is (some designated element, e.g. 0),

given by, for each standard natural number greater than zero, have the entry in it be whatever is in the entry before it on the other side of the bijection, and for the one at position zero have the designated element, and for the ones at non-standard positions just don’t change them,

I.e. (a,b,c,d,…,x,y,z) \mapsto (0,a,b,c,d,…,x,y,z)

but, this bijection doesn’t exist internally.

And, I think this bijection seems like it should be continuous, allowing pushing stuff out to infinity…

So, maybe the only reason S^n would be non-contractible for non-standard n is because the map implementing the contraction doesn’t exist internally, even though it does exist externally? Which seems like it could be disappointing..

You know how you define the set for the nth homotopy group πₙ(X,b) of a pointed topological space (X,b) to be set of homotopy classes of continuous maps of the form f:Sⁿ -> X : f(a)=b? Well what if we used S^∞ instead? Would we be able to turn it into a group? Would it yield anything non-trivial despite the fact S^∞ is contractible?

I should note that I've not studied homotopy groups in any detail, I'm just acquainted with the definition

15 notes


Text

Thanks! Hmm... I'm not sure that dropping this (P4) allows quite the branching that you were looking for in the OP, because omitting that axiom allows a number to have multiple predecessors, while I think what you were looking for in the OP is to allow a number to have multiple successors? This is why I suggested making S a relation symbol (with an extra condition so that there is always at least one successor) rather than a function symbol. Either change could be interesting though.

How might we define addition if numbers can have multiple successors? One question would be whether we want addition to still be a function, or whether it too should be multi-valued? If addition is to be single-valued, I suppose that instead of x+S(y)=S(x+y), we would say something about some logical combination of the statements S(y,z), S(x+y,w), x+z=w ? Maybe, "for all z such that S(y,z), there exists a w such that S(x+y,w) and x+z=w"? Or, a more neutral and weaker statement, "(for all x,y) there exist z,w such that x+z=w, S(x+y,w), and S(y,z)". Oh, wait, if we are assuming that addition is a function, then having x+z=w makes there be no separate choice of what w to use... So, I guess it would just be a combination of the statements S(y,z) and S(x+y,x+z). So, either, "for all x,y, there exists a z such that S(y,z) and S(x+y,x+z)", or, "for all x,y, for all z, if S(y,z), then S(x+y,x+z)". I'm not sure which of these would be more interesting.
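Written out symbolically, the two candidate replacements for the axiom x+S(y)=S(x+y) are as follows (the labels "weak" and "strong" are just mine, for reference):

```latex
\begin{align*}
\text{(weak)} \quad
  & \forall x\, \forall y\, \exists z\; \bigl( S(y,z) \wedge S(x+y,\; x+z) \bigr) \\
\text{(strong)} \quad
  & \forall x\, \forall y\, \forall z\; \bigl( S(y,z) \rightarrow S(x+y,\; x+z) \bigr)
\end{align*}
```

Given the extra condition that every number has at least one successor, the strong version implies the weak one: pick any z with S(y,z), and the strong axiom gives S(x+y, x+z).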

There should be divergent number lines. Sure you have the good old fashioned one, two, three… etc. but after three you can choose to go to four or you could go to grond and continue onwards from there, each line branching out on their own twisting ways.

27 notes


Text

While a lot of the Urbit stuff seems, uh, unreasonable, the idea of an OS of sorts written for a mathematically specified machine model, where the OS shouldn’t change much, and where one could transfer this from computer to computer as long as there is an emulator for the machine model written for the particular hardware, seems fairly appealing.

The need to separately re-establish all of one’s programs and settings when moving to a new device, seems like a waste, and like it shouldn’t be that way. (Of course, there are VMs which can emulate some other physically existing hardware, but then you have all these different possible emulated hardwares, and the... well, it seems like there is more complexity that way, and more edge-cases where some aspects of the hardware might not be emulated quite correctly)

But the specific stack of 3 weird programming languages that the project uses, with their deliberately weird-sounding names for things, ugh.

Why?

Also, I feel like, ideally, a project for such an “eternal OS” would use formal methods to make machine-verifiable proofs of various properties of the OS, like what seL4 has.

Also, probably the machine model should resemble real hardware at least a little bit more than the one Urbit uses does.

6 notes

Text

How about we use A \cap B to stand for (A \cap B) \ne \emptyset , and, idk, A \not\cap B for A \cap B = \emptyset ?

We need a standard symbol for denoting the binary relation of two sets being disjoint or non-disjoint, just as we have for subsets. Writing A ∩ B = ∅ or A ∩ B ≠ ∅ makes me sad each time I have to, and I'm getting too old to waste precious emotional energy on this nonsense.

56 notes

Text

1) To elaborate on that “minds are finite” comment: insofar as human behavior can be described in a materialist way, then, assuming currently fairly-accepted ideas about physics and information content, humans have (or at least can be effectively modeled as having) finite information capacity, and therefore facts about their behavior aren’t strictly speaking “undecidable”, as it could simply be looked up in an enormous lookup table (where the lookup table includes external influences as part of the keys when looking things up).

Of course, that amount of information is bonkers huge, so maybe one could make a variation on the “human behavior is undecidable” claim which is actually true (using e.g. an appropriate limitation on the size of the TM that is supposed to do the deciding (appropriate in light of the information capacity of a human))

2) But yes, as mentioned, people don’t actually seem to actually optimize for a utility function in any super-meaningful way (of course, if the utility function is a function of actions and not just outcomes, then *some* utility function will be optimized by any history of actions, but that’s why I said “in any super-meaningful way”).

3) And, I agree that if people did have utility functions, there doesn’t appear to be anything fundamental preventing knowing it, and - well...

(no longer numbered) It’s unclear to me whether the fact that we don’t appear to quite match with a utility function (in a meaningful way) is or isn’t important for any of the like, existentially meaningful questions.

But, as you mention, the family of hypothetical questions of the form “what would one do given choice X” would certainly be enough to determine one’s utility function, if one had one, to any degree of accuracy, and I don’t think there being a fact-of-the-matter as to “what one would choose given choice X (or, in scenario X)” for all choices (scenarios) X, would be any problem for meaning, and this is the kind of thing a utility function would encode, so, the answers to these questions would specify a utility function if one had one, and vice versa, and so I think this family of answers could be regarded as a very loose generalization of a utility function? (I’m allowing for facts-of-the-matter as to “what one would choose” to include things like probabilistic mixes of different responses) And, continuing to act according to such a generalization-of-a-utility-function is not something that makes one less of a person, so much as just, basically a tautology? One will do as one would do if one were to be in the situations one will be in.

Would comprehending some information which entirely encodes this information for oneself, in any way inherently conflict with any important personhood-things ? This isn’t entirely clear to me, but I feel like the answer is probably “no”?

I’m a little bit reminded of a scene I saw screenshots of from “Adventure Time”, wherein a character remarks on how they know precisely what it is that they want, and, while that character was like, evil and such, I don’t think them understanding themself in that way seemed like any kind of obstruction to their personhood..? Though, “fictional evidence isn’t”, so perhaps that’s just irrelevant.

You can fit a utility function to a preference ordering, but not to a finite preference ordering. (EDIT: I mean a finite preference ordering doesn't determine a unique utility function) Everyone's utility function is undefined by the choices they've made so far. It's always possible in the future to make choices that change which utility function is the best fit to your choices, including your past choices. So what you really wanted, why you really did something, is a statement about an infinite sequence of future actions. That puts it in the same kind of statement as stuff like the godel string, or consistency of a formal system. Claiming someone has a particular utility function is a claim that infinite future choices will not deviate from it, just as consistency is a claim that a proof search will never find an inconsistency. So it's the kind of thing where you can have incompleteness or undecidability.

Humans are trivially undecidable in a certain sense in that they can emulate Turing machines and so there's no program that solves the halting problem for a human emulating a Turing machine. But still it's interesting to apply this perspective to a human's "revealed preferences". Not that you can't infer someone's preferences, it's definitely an approachable modeling problem, but you can't ever achieve certainty here, they can always just like, start behaving in a different way if a certain Turing machine halts, that makes their past actions part of a different pattern, and therefore you fit different "revealed preferences" to it.

And I think if you apply that perspective to yourself... the point I think is to give up on the idea that you have a true utility function you need to discover. Like if you want to limit yourself and turn yourself into basically a chess-playing program, sure, you can fit something to your past actions and feelings and commit to following it forever. But that's not discovering your true utility function, that's making an infinite sequence of future choices by a certain method, and doing that is what makes it your true utility function... except that's never complete, cause you can stop at any time.
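The point that a finite record of choices doesn't pin down a unique utility function can be shown with a tiny sketch. The options, the observed choices, and the two candidate utility functions below are all made up for illustration: both candidates agree with every choice seen so far, yet they disagree about a choice that hasn't come up yet.

```python
# Observed choices so far, as (chosen, rejected) pairs. Hypothetical data.
observed = [("A", "B"), ("B", "C")]

# Two candidate utility functions over the options A, B, C, D (both hypothetical).
u1 = {"A": 3.0, "B": 2.0, "C": 1.0, "D": 0.0}
u2 = {"A": 3.0, "B": 2.0, "C": 1.0, "D": 10.0}

def consistent(u, choices):
    """True if u ranks every chosen option strictly above the rejected one."""
    return all(u[chosen] > u[rejected] for chosen, rejected in choices)

def predict(u, x, y):
    """Which of x, y would a maximizer of u pick?"""
    return x if u[x] >= u[y] else y

print("u1 fits the record:", consistent(u1, observed))
print("u2 fits the record:", consistent(u2, observed))
print("u1 predicts for A vs D:", predict(u1, "A", "D"))
print("u2 predicts for A vs D:", predict(u2, "A", "D"))
```

Any later choice involving D would rule out one of the two candidates, and then the same ambiguity reappears for whatever options still haven't been compared.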

36 notes

Note

No, I’m saying they should always go in the direction we currently call “counterclockwise”, in both the southern and northern hemispheres (and on the equator), and the reasonable-but-insufficient justification of “but clockwise is the way sundials go” only works in the northern hemisphere.

They should all go ccw because that’s the direction angles go.

So, seeing as sundials in the southern hemisphere go counterclockwise, I guess there's even less reason in the southern hemisphere for clocks to go clockwise? I guess countries in the southern hemisphere just got stuck with the northern hemisphere's convention that was set for clocks, of going the wrong way around (clockwise) instead of the correct forward direction for angles (ccw)?

what should clocks do on the equator, just tremble in terror?

22 notes

Text

For some reason I didn’t respond to this at the time (Aside: I really wish I could set the blog I actually post with to be my “main blog”). And, yes, we could imagine a setup where there’s the “main thing” which has finitely many states and just have a counter, but, like, why? It isn’t like e.g. sorting algorithms (or whatever other kind of algorithm) are restricted to only working sensibly on lists of finite length.

I don’t see why whatever process is responsible for human reasoning couldn’t be scaled up to larger context sizes without there being at some point a fundamental break in the nature of the being there.

I guess that, with the “become unrecognizable”, you are thinking of the “recognizable” part as being like, a (very large) finite state machine which is acting like the state machine of a Turing machine head as it acts on an infinite tape, but, why not instead imagine the person normally as being more analogous to the process including what happens on the tape, where the tape has a fixed limited size (responsible for e.g. working memory, short term memory, and long term memory, all having finite capacity) (where this limited size of the tape is much larger than the number of possible states of the head), and then just, imagine what happens if you remove the cap on the size of the tape.

In principle (and not just in this world with physics as it appears to be), what should make it impossible for someone to enjoy some chocolate that reminds them of a childhood memory, while they are also working on factorizing 3^^^^^3 + 7 ?

Like, what’s the obstacle?

learn-tilde-ath said: yeah, but what if a universe/laws-of-physics where arbitrarily large amounts of information can be stored in a fixed amount of space? In such a universe, perhaps there could be true immortality.

one could imagine Conway’s Life where every cell can be subdivided into smaller games of Life to arbitrary depth, allowing a “fixed size” entity to approach infinite computation asymptotically, but the entity is still forced to “grow” or die, either by terminating or repeating previous states; it might not count as death to gradually transform into an entity that is causally linked with your previous self but utterly unrecognisable to it but it definitely sounds deathish.

37 notes

Text

I’m unsure about “Kelly Criterion only applies when you know the odds”.

Like, sure, “exactly the Kelly Criterion”, probably only makes sense within the assumption that you know what the odds are I guess.

But suppose you are playing a variant of the “multi armed bandit” game, where you can choose how much to bet on each pull of an arm (with a certain minimum bet size I guess). I would expect some kind of variant of Kelly to apply here? Of course, in that case, I guess to define that game you would have to assume some probability distribution over what each of the arms has for its payout distribution... But, perhaps one could look at like, some sort of “worst case” for what that probability distribution might be? Uh... I’m not really sure of that. Well, suppose you are required to make all N pulls even if it seems likely that all the arms have negative expected return, so that, assuming that some of the arms are better, uh, you would be likely to eventually find this out, and therefore ruling out some of the distributions from the “worst case compatible with what is observed so far” ? idk.

(and, of course, I am considering this variant on the multi armed bandit here as potentially working as a metaphor for other decisions in life and such.)
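For reference, here is the known-odds case that the discussion starts from, as a minimal sketch. It assumes the simplest textbook setup (a repeated bet won with probability p that pays b-to-1, where a loss costs the stake), which is a simplification and not the bandit variant described above. The function names and the numbers p = 0.6, b = 1 are illustrative choices.

```python
import math

def kelly_fraction(p, b):
    """Kelly fraction for a bet won with probability p that pays b-to-1."""
    return p - (1.0 - p) / b

def log_growth(f, p, b):
    """Expected log growth per bet when staking fraction f of the bankroll."""
    return p * math.log(1.0 + b * f) + (1.0 - p) * math.log(1.0 - f)

p, b = 0.6, 1.0  # illustrative odds, not taken from any real market
f_star = kelly_fraction(p, b)

# Compare the Kelly stake with under-betting and over-betting.
for f in (0.5 * f_star, f_star, 2.0 * f_star, 3.0 * f_star):
    print(f"f = {f:.2f}: expected log growth = {log_growth(f, p, b):+.4f}")
```

Staking well beyond the Kelly fraction turns the expected log growth negative even though each individual bet has positive expected value, which is the sense in which exceeding Kelly risks ruin. All of this depends on p and b being known.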

it's fun to talk about the kelly criterion and i guess we're doing it cause of stuff sbf and wo said about it but im skeptical it's related to the downfall of alameda

like you can do some model-based calculation that if you exceed the kelly bets you're putting yourself at risk of losing everything

but when i read about the subprime mortgage crisis and about the fall of LTCM, their risk models were wrong. They failed in ways that the model said was impossible. So it's not about the model-based safety of the kelly criterion vs the model-based risk of exceeding it rly i dont think

you can calculate really low risk based on like the standard 1/√n decay of variation when averaging n uncorrelated things. But then the normally-uncorrelated stuff falls together in a market downturn
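The 1/√n decay, and how it stops working once things are correlated, can be sketched with the standard formula for the variance of an average of n equally correlated variables: Var(mean) = σ²(1/n + (1 − 1/n)ρ). The equal-pairwise-correlation model is an assumption made here for simplicity, and the function name is made up.

```python
import math

def std_of_mean(sigma, n, rho):
    """Std. dev. of the average of n variables, each with std. dev. sigma
    and common pairwise correlation rho (equicorrelated model, an assumption)."""
    variance = sigma ** 2 * (1.0 / n + (1.0 - 1.0 / n) * rho)
    return math.sqrt(variance)

for rho in (0.0, 0.1, 0.5, 1.0):
    row = ", ".join(f"n={n}: {std_of_mean(1.0, n, rho):.3f}" for n in (1, 100, 10_000))
    print(f"rho = {rho:.1f} -> {row}")
```

With ρ = 0 the risk shrinks like 1/√n, but for any ρ > 0 it levels off near σ√ρ no matter how many positions are averaged.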

I wouldn't have much confidence generalizing from anecdotes, but alan greenspan says in his memoirs he thinks this is like the biggest issue, that risk models need to explicitly model two "phases", so i get some confidence from someone who knows much more than me saying something similar

so when i see some crypto traders go under during a crypto crash? And general downturn cause of a money vacuum? I don't think this is because of some huge risk they knowingly accepted, I would guess it's because their risk models they used to make a lot of money back when money was free told them there was no chance of losing all their money

28 notes

Text

I think one idea I’ve seen which is tangentially related, is the idea of, automatically finding cycles in wants, like, [“X would be willing to provide A in exchange for B” “Y would be willing to provide B in exchange for C”, “Z would be willing to provide C in exchange for A”] (except on a much larger scale and without necessarily being single cycles, but multiple interconnected cycles)
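A minimal sketch of the single-cycle case: treat each participant as a node, draw an edge from P to Q when what P provides is what Q wants, and look for a directed cycle with a depth-first search. The data layout (one offer per person, as a `(gives, wants)` pair) and the function name are assumptions for illustration. A real system would need multiple offers per person and the interconnected cycles mentioned above.

```python
def find_trade_cycle(offers):
    """offers maps person -> (gives, wants). Returns a list of people forming
    a cycle in which each person's good goes to the next person, or None."""
    # Edge P -> Q when P provides exactly what Q wants.
    graph = {
        p: [q for q, (_, q_wants) in offers.items() if q != p and q_wants == gives]
        for p, (gives, _) in offers.items()
    }
    state = {}   # missing = unvisited, 1 = on the current path, 2 = finished
    path = []

    def dfs(p):
        state[p] = 1
        path.append(p)
        for q in graph[p]:
            if state.get(q) == 1:            # back edge: found a cycle
                return path[path.index(q):]
            if q not in state:
                found = dfs(q)
                if found:
                    return found
        path.pop()
        state[p] = 2
        return None

    for p in offers:
        if p not in state:
            cycle = dfs(p)
            if cycle:
                return cycle
    return None

offers = {"X": ("A", "B"), "Y": ("B", "C"), "Z": ("C", "A")}
print(find_trade_cycle(offers))
```

Each person in the returned list hands their good to the next one, and the last person hands theirs to the first.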

Perhaps if one started with some kinds of digital goods, like, art commissions, uh, recommendations/advertisements, tutoring services, and other things which could be initially done at a non-professional level over the internet, it could be possible? And then like, if you didn’t require all the chains to be closed before completing a cycle, you could create representations of debts?

Like, if you start with just a system to facilitate barter, and then only later add in some more abstract unit(s) of account (the debts), and then significantly later some people began to exchange this unit/these units of account for like, government-endorsed-currencies ?

idk

cryptocurrency does at least have a simple value proposition: it's a speculative asset / ponzi scheme and so there's a ready supply of people willing to exchange real money for it in the hope of getting rich / selling it to some other sucker later, which keeps the whole ecosystem growing (and collapsing, and growing again and then collapsing, and so on).

but if a debt trading market is "real money done better but without the backing of a sovereign government", what's the use case for it that drives adoption?

you can't use it to pay your taxes, you might use it to avoid taxes but that's obviously a lightning rod for trouble, and while you would happily use it to buy things there's no situation in which you would rather accept it over real money when selling things unless those things are illegal or socially sanctioned in some way (drugs, sex, assassinations, and so on) such that regular payment providers keep their distance.

so you can seed a crypto trading market by taking real money and giving away magic beans but you would need to seed a debt trading market by doing the exact opposite: giving people goods and services worth real money in exchange for magic beans from them, which is obviously a less appealing proposition for an investor.

(now you could say that some venture capitalists are indirectly doing this, for example subsidising grocery delivery services that lose money in the hope of building market share for some future enterprise that might be worth something one day, but that seems more like a semi-fraudulent quirk of the low interest rate environment rather than any kind of sound business plan).

one possibility is to bootstrap the market by selling intangible things such that early adopters aren't running the risk of bankruptcy, for example you could "sell" a web comic or podcast in which case there's a progression from people giving you "likes" and other forms of attention that cannot be redeemed for cash and people subscribing to your patreon or hitting your tip jar, where perhaps debt trading could offer a smooth gradient across that spectrum from "I owe you a notional favour" to "I am literally buying you a coffee".

but it does seem like that use case could be achieved by simpler methods, like the clever infrastructure isn't really contributing much to this specific example and is only there in the opportunistic hope that the market would grow into something more than that (without being exploited for criminal ends or promptly shut down by the SEC).

16 notes

Text

What if instead of conditioning on x >= s, you instead take like, a million samples and take the 50 largest values?

At the least, if you took 5 million samples from both distributions, and then took the 100 largest values from the union of those two sets, then (assuming at least some of the values came from the distribution which tends to produce smaller outputs), these 100 largest values will probably be all pretty close to one another (even though the ones that came from the tends-to-be-smaller distribution probably tend to be on the lower end of the 100).
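Here is a rough simulation of that "take the top k of the union" setup. The specifics are assumptions chosen to keep it quick to run: two normal distributions with standard deviation 1 whose means differ by 0.5, and 100,000 samples each instead of 5 million.

```python
import heapq
import random

def top_of_union(n_per_group=100_000, k=100, shift=0.5, seed=0):
    """Sample two normal populations (means 0 and `shift`, std. dev. 1) and
    return the k largest values of the union as (group_label, value) pairs."""
    rng = random.Random(seed)
    low = [("low", rng.gauss(0.0, 1.0)) for _ in range(n_per_group)]
    high = [("high", rng.gauss(shift, 1.0)) for _ in range(n_per_group)]
    return heapq.nlargest(k, low + high, key=lambda pair: pair[1])

top = top_of_union()
values = [v for _, v in top]
from_low = [v for label, v in top if label == "low"]
from_high = [v for label, v in top if label == "high"]

print("smallest and largest of the top 100:", min(values), max(values))
print("how many came from each group:", len(from_low), len(from_high))
if from_low and from_high:
    print("mean among selected, low group: ", sum(from_low) / len(from_low))
    print("mean among selected, high group:", sum(from_high) / len(from_high))
```

The thing to compare is the gap between the two group means among the selected values against the 0.5 gap between the underlying populations.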

you would expect female CEOs and politicians to be as power hungry and sociopathic as male politicians and CEOs even if women on average were not, simply because of selection effects on what is a very small group.

103 notes

Note

If it would immediately bring about such a ceasefire, and even if it wouldn’t (unless maybe if it would somehow prolong the war or something?), then no, it wouldn’t be wrong to kill Hitler?

Something cannot be both immoral and “worth it”. If it is morally worth it, then it isn’t, in the context, immoral.

If anyone is currently having a genocide be done on their command, it is ok to kill them. (Exception: it was one more additional immoral thing for hitler to kill himself. The correct thing for him to do would have been to give himself up to the allies and allow himself to be executed for his crimes.)

it's true that political assassination is bad, but it also seems sort of ghoulish to spell it out in the context of war. it's like, killing "soldiers"? fine, good, do it as much as you want! killing random civilians who were resisting, or just living in a city you bombed? eh well it's bad but what did you think would happen, it's war. killing a SPECIFIC person who you were specifically targeting because they're important? how dare you. "people" vs "faceless mooks"

killing anyone is bad, but "soldiers" being people who have donned a specific costume and said "I will try to kill you while you try to kill me" are at least aware of the game being played and (conscripts aside) voluntarily choosing to play it.

killing civilians is obviously a crime and one of the reasons why war sucks so much ass, if you could simply lock the soldiers from both sides in a sealed chamber and let them sort it out between them then that would obviously be preferable!

deliberately attempting to kill a specific person (or the person standing next to them, or whoever had the misfortune to vaguely resemble them) is murder! like, a pretty basic crime! the stuff of hoodlums and gangsters! it doesn't magically become okay just because it's being done by gangsters paid by the government.

launching an aggressive war is still the ultimate crime that underlies so many other crimes, but assassinations are carried out even in "peacetime" too and that doesn't make them any better.

52 notes
