#3D Depth Camera

Text

https://www.futureelectronics.com/p/semiconductors--analog--sensors--time-off-flight-sensors/vl6180xv0nr-1-stmicroelectronics-5053972

What is a Time-of-Flight Sensor? Time-of-flight sensor vs. ultrasonic

VL6180X Series 3 V Proximity and Ambient Light Sensing (ALS) Module - LGA-12

#STMicroelectronics#VL6180XV0NR/1#Sensors#Time of Flight (ToF) Sensors#ultrasonic#Digital image sensors#ToF sensor#3D Depth Camera#Camera image#Laser-based time-of-flight cameras#vehicle monitoring#Time-of-Flight imagers

1 note

Text

https://www.futureelectronics.com/p/semiconductors--analog--sensors--time-off-flight-sensors/vl6180xv0nr-1-stmicroelectronics-4051964

Imaging camera system, RF-modulated light sources, range-gated imagers

VL6180X Series 3 V Proximity and Ambient Light Sensing (ALS) Module - LGA-12

#Sensors#Time of Flight (ToF) Sensors#VL6180XV0NR/1#STMicroelectronics#imaging camera system#RF-modulated light sources Range gated imagers#Laser-based#Real-time simulation#3D Depth Camera#Range gated imagers

1 note

Text

#acnl#acnlwa#animal crossing new leaf#nintendo 3ds#nintendo#very old screenshots pulled from the depths of my camera roll

24 notes

Text

I did the donut tutorial! I'm so proud of myself 😭🤩 (lol)

I wanna learn how to create 3D environments in particular, mainly for comic-making purposes. Anyway, this was a great tutorial and I learned a lot as someone without a ton of experience with 3D :>

#blender#blender 3D#3D Art#3D Model#Donut Tutorial#the camera tracking/movement in this is a little janky bc that was. ironically. the part i struggled with the Most lol#or more#the focal depth/length is janky lol

17 notes

Text

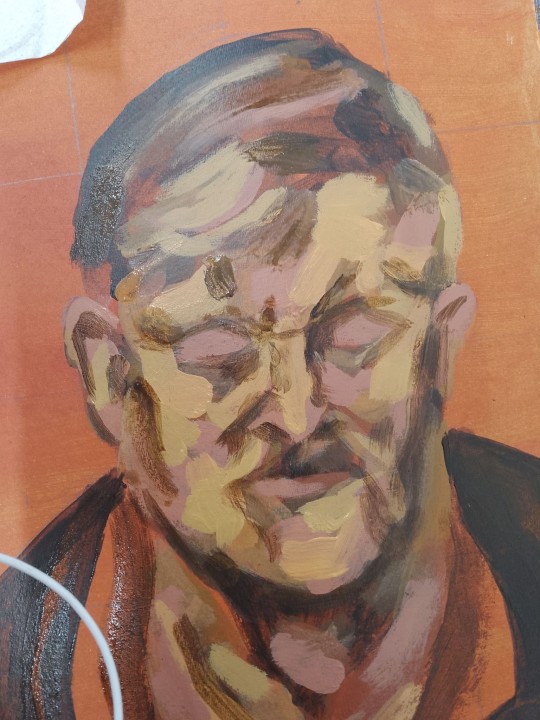

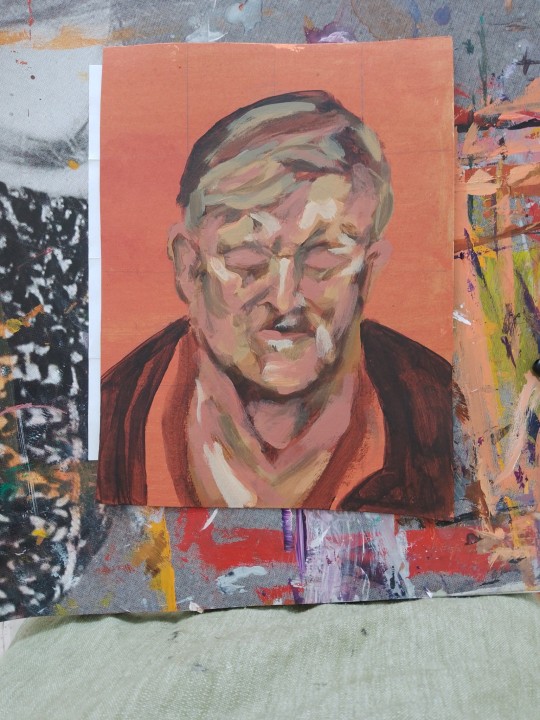

art update fr april part 2/2

#oh just paint a lucian freud they said. just mix skin colour frm 6-8 different tubes not counting varnish water or slowing agent they said.#like itll be a good exercise. idk wth im doing#all of this is progress frm 5 hrs total. i probably spent the first full hour drawing my grid and face in pencil bc i did NOT want to start#like it was too daunting lol. and then the following 2-3 hrs it's still like WHAT AM I DOING I MESSED IT ALL UP#and then you add more depth w dark and light and go like oh. maybe this can still be salvaged after all in time lol ok#it's still a wip i'll continue adding to it next week. apparently when you paint the bg is when i'tll all suddenly click and look solid?#heres to hoping lol. ive never felt more like a painter tho. close up included bc there are like a million brush strokes in there#my reproduction looks nothing like the original guy posing fr his portrait but itsure does look like. some guy in 3D surely#my art#sorry if the positioning is all over the place. those colours did not want to be accurately captured on camera fr

6 notes

Text

bought super mario 3d world on switch bc i needed a new game to 100% and quickly remembered why i didnt like super mario 3d world when i had it on the U

#depth perception ? NONEXISTANT#and the controls are different bc now theres a z axis you can move in#i am however like 60% done with the game already i didnt remember how short it was (its only been like 4 hours)#not a fan of the mario wiiU games that came out bc they were all 3d based but captain toad holds a special place in my heart#even though i could never 100% finish it.. i think i still have like 3 levels left but theyre too hard and im incompetent#but when i hear that music i go crazy#i like the concept for the levels in treasure tracker but the camera makes me very motion sick HAHA

2 notes

Text

Apple Vision Pro. Is it a game changer for 3D?

0 notes

Text

In Super Mario Maker 2, the Super Mario 3D World game style is always seen directly from the side. While objects towards the edges and corners of the screen can be seen to have depth, it is very difficult to judge what that depth actually is.

By modifying the code to move the camera during that mode, we can see how deep into the background the common elements go. Note that the blocks are not actually perfectly cubical as they are in 3D Mario games, but rather elongated into the background to help with the perspective.

Main Blog | Twitter | Patreon | Small Findings | Source: x106E46E8

542 notes

Text

Speculative Biology of Euclydians (and Bill Cipher) part 1

Part 2

We're doing this, babies!

This analysis is based on two assumptions:

Before Bill Cipher became a demigod, he was a biological, living organism and so were the rest of his species.

Even after Bill Cipher became a demigod, he still retained some physical characteristics of his biological form.

I will clearly specify which of his abilities are innate abilities of his species, which ones are definitely his divine abilities and which ones could be both.

This is part one. This analysis became VERY long, so I'll separate it into FIVE parts:

Part 1: What is Euclydia and what are Euclydians?

Part 2: How do Euclydians function as animals? (This is where I explain how they are built, what their organs do, how they feed, move, speak etc.)

Part 3: Reproduction NSFW (this one I separated because it's NSFW. It'll be nothing explicit, but I doubt your boss would be thrilled if he found out that you're reading about how triangles fuck in your office)

Part 4: Growth and development (here I will also talk about Bill's deformity and Euclydian society)

Part 5: How did Bill Cipher destroy Euclydia and get his godlike powers?

SO, without further ado:

What is Euclydia and what are Euclydians?

I'm gonna drop a bomb first.

Euclydia IS NOT a flat two dimensional plane. Before you load your shotguns, let me explain!

There is plenty of evidence, both in the Gravity Falls show and in The Book of Bill, that Euclydia isn't a flat plane like the imaginary two dimensional world from Flatland by Edwin A. Abbott.

The first one is actually Bill himself. Bill's species has complex camera-lens-type eyes. Such eyes are possible in a 2D world, but not on the front, like Bill has. He was born like that, so that is proof that Euclydia isn't 2D.

Next, when Bill is talking about his home in Weirdmageddon part 3, he shows an image of his home planet:

This planet has RINGS. That is COMPLETELY impossible in 2D. Even if the planet was completely flat, the rings would go through it. They would never be able to actually encircle this planet. So, if Euclydia was two dimensional, Bill's home planet would not be able to exist.

In the Book of Bill, we see an image of Bill as a baby. In that image he's standing on some kind of field with grass and you can clearly see that there's grass in front of him and behind him, and that's impossible in 2D:

(also sorry for the shit quality of this pic)

But the best proof is that image from thisisnotawebsitedotcom.com that you get when you type VALLIS CINERIS in the computer. It shows Bill Cipher as a child with his parents. The parents are holding him in a manner that is completely impossible in 2D:

The image quality sucks, but you can clearly see that his parents' hands are IN FRONT OF him and he is also IN FRONT OF his parents. The position of "in front of" isn't possible in two dimensions, and yet in this image the overlap happens many times. (I circled his parents' hands in red where they overlap with Bill and I circled him in blue where he overlaps with his parents. Bill's bow tie is also in front of him.)

With all that being said, what is Euclydia?

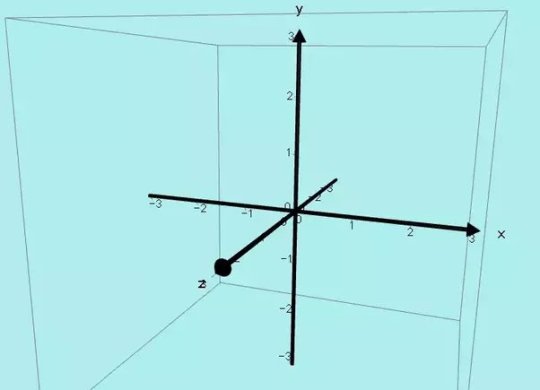

Well, just like Bill said, it's a flat world. Not two dimensional, but flat. The third dimension of Euclydia is limited somehow. Basically, in 3D, creatures are defined by three axes:

x axis is left and right (width)

y axis is up and down (height)

z axis is towards and away from (depth)

All three dimensional objects have width, height and depth. Two dimensional objects have just width and height, so just the x and y axes. And Bill has depth. It's a very limited depth, but it is depth nonetheless. So he's not really a triangle, more like a very thin pyramid. This is his side profile lmao:

So Euclydians have some depth, but for whatever reason, they can't move along the z axis. They can only move left, right, up and down. They also can't turn around.

This is how thisisnotawebsitedotcom.com explains Euclydian movements:

Two dimension to and fro, you always know which way to go. If you're lost, don't be afraid, in Euclydia you've got it made. Run too far right to right of frame, you'll appear on left again. Jump too high don’t cry or fret, pop up from the ground I bet. In this place, there is no fear, loved ones will be ever near. Roles and rules always flea/clear. Euclydia, we hold you dear.

So, if they move too far left, they'll come from the other side. This is actually something that is possible ONLY in non-euclidean geometry, which means that Euclydia, ironically, is a non-euclidean place. It's actually a sphere (or a similar elliptical body).
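The wrap-around from the rhyme (run off the right edge, reappear on the left; jump off the top, pop up from the ground) can be sketched with modular arithmetic. This is only an illustration: the frame size and the `wrap` helper are made up for the example, and nothing in the show or the book specifies them.

```python
# Hypothetical frame size; the source gives no actual dimensions.
FRAME_WIDTH = 100
FRAME_HEIGHT = 60


def wrap(x, y, width=FRAME_WIDTH, height=FRAME_HEIGHT):
    """Return the position after wrapping around the frame edges."""
    # Python's % returns a non-negative result for a positive modulus,
    # so walking off the left edge (negative x) lands on the right side.
    return x % width, y % height


# Five steps past the right edge puts you five steps in from the left.
print(wrap(105, 10))
# Off the left edge and above the top edge at the same time.
print(wrap(-5, 65))
```

Positions are whole steps here just to keep the sketch readable; the same rule works with fractional coordinates.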

In non-euclidean geometry of the sphere, there exists something that sounds paradoxical: a straight line is actually a circle. But it's actually very easy to understand with this example:

Imagine that you're flying a plane in a straight line. You feel like you're going in a straight line, but your plane is actually following the curvature of the Earth. If you manage to fly around the entire Earth, you will appear on the same spot where you started flying. You were flying in a straight line, but because Earth is a non-euclidean sphere, you were actually flying in a circle. And both of those are true!

The plane is very very small compared to the size of the Earth. So, to the plane, Earth's curvature is so negligible that we could say that in a small radius around it the Earth is actually a flat plane. So, for example, houses, neighborhoods, even cities are built relying only on euclidean geometry (the geometry of a flat plane) because the Earth is so goddamn big.
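To put a rough number on "negligible", here is a small sketch that computes how far a sphere's surface falls away from a perfectly flat plane touching it at your feet. It assumes a perfectly spherical Earth with a mean radius of about 6,371 km; the function name and the sample distances are just for illustration.

```python
import math

EARTH_RADIUS_M = 6_371_000  # approximate mean radius, assuming a perfect sphere


def drop_below_flat(distance_m, radius_m=EARTH_RADIUS_M):
    """Drop of the surface below the tangent plane after travelling
    distance_m along the surface from the point of contact."""
    angle = distance_m / radius_m  # angle at the sphere's centre, in radians
    return radius_m * (1 - math.cos(angle))


for distance in (100, 1_000, 10_000):
    print(f"{distance:>6} m along the surface: {drop_below_flat(distance):.4f} m drop")
```

Over one kilometre the drop comes out at about 8 cm, which is why treating a neighborhood as flat works fine.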

And Euclydia is actually a whole fucking dimension. Let's say that our dimension is our universe. Our universe is approximately 93 billion light-years wide. So let's say that that's the size of Euclydia. How tiny is Earth compared to the Universe? That's why planets and everything else in Euclydia can be treated as a flat plane: every object is so small compared to the size of this giant sphere that the curvature could be completely omitted from the equation.

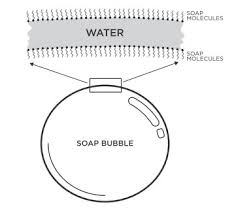

Now this is my theory, but I imagine that Euclydia looks like a giant soap bubble. Soap bubbles are made when two thin layers of soap molecules trap a thin layer of water:

Euclydia is the water - that thin layer is where all the planets, stars and living beings on them are located. That's why movement along the z axis is so limited. The soap molecules are membranes that separate Euclydia from the other dimensions, one inside the bubble and one outside.

Since Euclydians can't move along the z axis, they have eyes on their sides that can see only left and right. Their vision is limited to one dimension. But Bill's eye is located in a spot that allows him to see both left and right, but also up and down. He can see two dimensions, just like us! Here's a diagram I made, so you can understand better:

(there are stars outside too, but I didn't want to clutter this image more)

So, now that I've spent SO MUCH TIME explaining what Euclydia is, let me tell you what Euclydians are.

Euclydians are animals (or their equivalent in their dimension). Animals are defined as multicellular heterotrophic organisms with an internal digestive tract. This basically means multicellular organisms that eat.

Euclydians have to be multicellular because they have extremely complex structures such as: camera lens eyes, teeth, fingerprints, an exoskeleton and so on. These traits cannot be achieved by a unicellular organism. And they definitely eat their food, we've seen Bill do it. So they are (their dimension's equivalent of) animals.

And how do they function? What type of animal are they? Well, see you at part two, if this didn't bore you to death already!

Thank you to @forseenconsequences @extremereader and @ok1237 for asking me to do this. Hope you like it, guys!

#me: i won't do a lot of maths for this#also me:#i can't believe i studied fucking non-euclidean geometry for this#i'm a biologist#i don't understand math *lying#speculative biology#art#the book of bill#gravity falls#bill cipher#thisisnotawebsitedotcom#heavy spoilers#geometry#what am i doing with my life

212 notes

Note

"ONLY IF SHE ASKED ME TO"?????? based. also im trying to picture husk attacking kindred with his horns. how. would he have to walk into him backwards?

WHEEZE ok ok HAHAHAHA that’s a good question and I want to answer it too HAHAHA

ok let’s say theoretically husk had a target to attack in this scenario

so like when I was first concepting husks design my first thought was that husk’s antlers pointed forward and curled up, but then I actually started drawing him, and I realized my own ability to draw depth like that was very lacking and these are the benefits of having a 3d model. alas I am not well versed in the art of 3d sculpting

so like the original goal was something like this:

which makes more sense, yea?? but then I tried to draw it from the front

and I think that’s. kind of where things fall apart and I am 90% certain that is entirely my own fault lmao

so I try to draw it more spread out so the silhouette is clearer

but now I have a new problem lmao. because now his horns look like they’re directly pointing out to the sides. which means if I draw him from a side angle it should probably look like this?

because they point directly towards the camera now ?

but instead to continue to make the silhouette clear I drew them like this lmao

Which now means his antlers are pointing BACK, and are like curved around his head?? which makes attacking with them almost. uh. null and void?? impossible?? they’re like fancy cheek guards now lmao

so yea naw chief I don’t think that’s working out for ya.

so now that leaves like….if I assume the horns are actually pointing out to the side and are not curved around his head pointing backwards, then maybe?? side attack??

but. I don’t think. uh. I don’t think having to blindly tilt your entire head to the side in order to mmmmaybe get a hit in would be very effective??? and he’d certainly never spear anyone with them

In the end im pretty sure I’ve accidentally fucked this guy over and any chance he had at some sort of defense haha. I should probably fix that sometime, because that’s,,, definitely a bug and not a feature lmao

But at the end of the day, never forget that he has the most effective tactic of all:

Slapping people with the knives on his ass.

138 notes

Note

Hello! First of all, I love your drawing style. IT'S SO COOL AND IT INSPIRES ME A LOT. Now, my question is, how do you make your style so similar to Disney's? I'm asking so I can practice it too. That's all Thanks for reading!

That's very nice :> And learning to style characters like a Disney movie is more than just "a quick tip I can give," but I'll try to summarize it in this main point: shapes, but 3D.

Real life anatomy is very complicated, but you have to understand the rules to know how you can break them. Individual styles can vary a lot from Disney movie to Disney movie, but the through-line is always that characters are represented by strong and memorable shapes. You have to understand what makes up a real face, then what can be visually appealing/unique -but still readable- when stylizing a face, and then how that can be drawn for an animation - of course, that doesn't just apply to faces, it applies to all of the body. You have to understand how the complicated stuff works in order to simplify it (especially if you're trying to make your own designs).

Really getting the hang of form and depth is a key aspect that I find a lot of people skip over: it's easy to look at a still from a movie and draw the character in that specific shot; the problem is that people will then shortcut it and think of the character like a flat image, memorizing how the eye or nose or chin looks at that specific angle. What you have to understand is that, even though the character is 2D, it's only a 2D representation of a 3D form.

There are these models called maquette sculptures that animators would have on their desk while they were working. It provided a quick example of the forms of their character when they needed it. You can see just how shapey the characters come across even in 3D, but you'll also notice that the 3D models don't quite feel the same as their respective 2D drawings. That's kind of the key you have to learn to make characters feel like they're from a classic animated movie - they are forms with depth and mass, but you have to break the rules a little to be able to play to the camera.

75 notes

Text

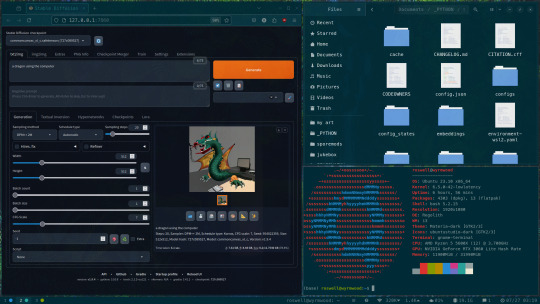

research & development is ongoing

since using jukebox for sampling material on albedo, i've been increasingly interested in ethically using ai as a tool to incorporate more into my own artwork. recently i've been experimenting with "commoncanvas", a stable diffusion model trained entirely on works in the creative commons. though i do not believe legality and ethics are equivalent, this provides me peace of mind that all of the training data was used consensually through the terms of the creative commons license. here's the paper on it for those who are curious! shoutout to @reachartwork for the inspiration & her informative posts about her process!

part 1: overview

i usually post finished works, so today i want to go more in depth & document the process of experimentation with a new medium. this is going to be a long and image-heavy post, most of it will be under the cut & i'll do my best to keep all the image descriptions concise.

for a point of reference, here is a digital collage i made a few weeks ago for the album i just released (shameless self promo), using photos from wikimedia commons and a render of a 3d model i made in blender:

and here are two images i made with the help of common canvas (though i did a lot of editing and post-processing, more on that process in a future post):

more about my process & findings under the cut, so this post doesn't get too long:

quick note for my setup: i am running this model locally on my own machine (rtx 3060, ubuntu 23.10), using the automatic1111 web ui. if you are on the same version of ubuntu as i am, note that you will probably have to build python 3.10.6 yourself (and be sure to use 'make altinstall' instead of 'make install' and change the line in the webui to use 'python3.10' instead of 'python3'. just mentioning this here because nobody else i could find had this exact problem and i had to figure it out myself)

part 2: initial exploration

all the images i'll be showing here are the raw outputs of the prompts given, with no retouching/regenerating/etc.

so: commoncanvas has 2 different types of models, the "C" and "NC" models, trained on their database of works under the CC Commercial and Non-Commercial licenses, respectively (i think the NC dataset also includes the commercial license works, but i may be wrong). the NC model is larger, but both have their unique strengths:

"a cat on the computer", "C" model

"a cat on the computer", "NC" model

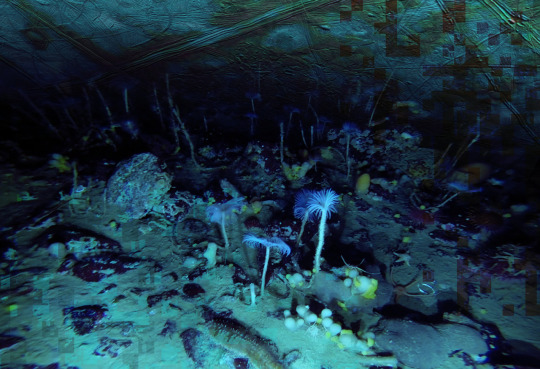

they both take the same amount of time to generate (17 seconds for four 512x512 images on my 3060). if you're really looking for that early ai jank, go for the commercial model. one thing i really like about commoncanvas is that it's really good at reproducing the styles of photography i find most artistically compelling: photos taken by scientists and amateurs. (the following images will be described in the captions to avoid redundancy):

"grainy deep-sea rover photo of an octopus", "NC" model. note the motion blur on the marine snow, greenish lighting and harsh shadows here, like you see in photos taken by those rover submarines that scientists use to take photos of deep sea creatures (and less like ocean photography done for purely artistic reasons, which usually has better lighting and looks cleaner). the anatomy sucks, but the lighting and environment is perfect.

"beige computer on messy desk", "NC" model. the reflection of the flash on the screen, the reddish-brown wood, and the awkward angle and framing are all reminiscent of a photo taken by a forum user with a cheap digital camera in 2007.

so the noncommercial model is great for vernacular and scientific photography. what's the commercial model good for?

"blue dragon sitting on a stone by a river", "C" model. it's good for bad CGI dragons. whenever i request dragons of the commercial model, i either get things that look like photographs of toys/statues, or i get gamecube type CGI, and i love it.

here are two little green freaks i got while trying to refine a prompt to generate my fursona. (i never succeeded, and i forget the exact prompt i used). these look like spore creations and the background looks like a bryce render. i really don't know why there's so much bad cgi in the datasets and why the model loves going for cgi specifically for dragons, but it got me thinking...

"hollow tree in a magical forest, video game screenshot", "C" model

"knights in a dungeon, video game screenshot", "C" model

i love the dreamlike video game environments and strange CGI characters it produces-- it hits that specific era of video games that i grew up with super well.

part 3: use cases

if you've seen any of the visual art i've done to accompany my music projects, you know that i love making digital collages of surreal landscapes:

(this post is getting image heavy so i'll wrap up soon)

i'm interested in using this technology more, not as a replacement for my digital collage art, but along with it as just another tool in my toolbox. and of course...

... this isn't out of lack of skill to imagine or draw scifi/fantasy landscapes.

thank you for reading such a long post! i hope you got something out of this post; i think it's a good look into the "experimentation phase" of getting into a new medium. i'm not going into my post-processing / GIMP stuff in this post because it's already so long, but let me know if you want another post going into that!

good-faith discussion and questions are encouraged but i will disable comments if you don't behave yourselves. be kind to each other and keep it P.L.U.R.

200 notes

Text

This piece started as an idea to try something I’m calling 2.5D art.

a bit of 3D, a bit of painting 😃👍🦖

I made Rexy and Cera in Blender, texture-painted them, rigged them up, and posed them. Once I had the composition in mind, I generated a stylised forest background using AI, brought it into Blender, and set up the full scene with lighting and camera perspective.

From there, I rendered the shot and moved into Procreate for the final pass, painting over the background, tweaking the lighting, and refining the edges to give it a more illustrated, dreamy vibe.

This hybrid process lets me keep the form and depth of 3D while layering in the softness and mood of 2D art, and it’s so much faster! A painting like this would usually take me days (sometimes weeks), but this only took a single day start to finish.

It’s been a fun experiment in workflow and storytelling, definitely something I want to explore more. 💛

*** Just a quick note, since I know the topic of AI in art can be sensitive.

I built my characters, posed them, and rendered them in Blender, everything from modeling to rigging was done by me. I used a generated background as a base, mainly to guide lighting and mood, the same way artists have used stock photos or photobashing for years. The final result was painted over in Procreate, with hand-painted lighting and adjustments.

This wasn’t about skipping effort for me, it was an experiment in workflow and storytelling and trying something new. I was transparent about my process because I’m proud of what I created and how I made it.

It’s okay if this approach isn’t for everyone.

Happy experimenting everyone.

63 notes

Text

#acnl#acnlwa#nintendo 3ds#animal crossing new leaf#nintendo#very old screenshots pulled from the depths of my camera roll

23 notes

Text

Director Wes Ball and Visual Effects Supervisor Erik Winquist shared some step-by-step images of transforming humans into apes. They said that the crew of Weta FX made vast improvements on performance capture with Avatar: The Way of Water. “From a hardware and technology standpoint, one of the improvements is now we’re using a stacked pair of stereo facial cameras instead of single cameras, which allows us to reconstruct an actual 3D depth mesh of the actor's face. It allows us to get a much better sense of the nuance of what their face was doing.”
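For anyone curious why a pair of cameras gives depth where a single camera doesn't, here is a minimal sketch of the textbook stereo relation: a point seen by two parallel, rectified cameras shifts between the two images by a disparity, and depth is focal length times baseline divided by that disparity. This is not Weta FX's actual pipeline, which the article does not detail; the function name and all of the numbers below are assumptions for illustration.

```python
def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Depth in metres of a point seen by two parallel, rectified cameras.

    focal_px:     focal length expressed in pixels
    baseline_m:   distance between the two camera centres, in metres
    disparity_px: shift of the same point between the two images, in pixels
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_m / disparity_px


# Illustrative values only: a 1000 px focal length and cameras 5 cm apart.
for disparity in (400, 200, 100):
    depth = depth_from_disparity(1000, 0.05, disparity)
    print(f"disparity {disparity} px -> depth {depth:.3f} m")
```

Nearer points shift more between the two views, so small differences in disparity across a face translate into the depth variations that make up a mesh.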

They also shared some concept art of the post-apocalyptic setting of the film. Winquist shared a book that gave him much inspiration. “One of the things that I was looking at early on was the book ‘The World Without Us’ that hypothesizes what would happen in the weeks, years, decades and centuries after mankind stopped maintaining our infrastructure. You start pulling from your imagination on what that might look like….”

More behind the scenes details were shared in the article on VFX Voice magazine.

#kingdom of the planet of the apes#behind the scenes#planet of the apes#wes ball#owen teague#Noa#Anaya#soona#sunset trio#reboot pota#pota#proximus caesar#Kevin Durand#the world without us#mine#vfx#travis jeffrey#lydia peckham

224 notes

Note

I think it’s really cool how you’re editing screenshots from Veilguard to look like drawings! I’ve messed around with the same Photoshop filters before too!

they're not edited screenshots!

i used daz + blender + photoshop to make both of my datv pieces. here's a look at them from different angles!

(you'll notice i modeled everything on the wrong side for emmrich and had to flip it in post. this is because i am stupid)

the process for these is a little different than the one in this post (warning for butts). i'll talk about it below the cut :)

for these, the 3d step is pretty similar, but instead of just getting posing/props looking good, i am going a bit further and working on lighting, materials, camera placement, composition, etc. i'm spending probably 80% of the time for this type of piece on this step. i looooove the lighting in the lighthouse so i spent a lot of time trying to nail the vibe on both of these pieces.

i use a blender plugin called extreme pbr nexus and epic's quixel megascans library for almost all of my materials. (megascans is no longer free so idk what i'm going to do in the future. rip)

my raw blender render is on the left, and the final image on the right. i do a lot of overpainting and add detail (rings, chains, gold bracelet engravings, wisp-fred - all things i was too lazy to sculpt) and subtract detail (some cloth seams, crags on the skull, blurring objects farther away for depth of field) where i see fit.

i also used some gradient maps (my default is a blue/purple/pink but i used blue/green here) and color LUTs over the entire image to harmonize the colors.
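if you've never used a gradient map: the general idea is that each pixel's brightness picks a color along a gradient, so dark areas take the first color and bright areas take the last. here's a rough sketch of that idea in plain python. it is not how photoshop implements it; the blue/green stop values, the function names, and the brightness weights (the common Rec. 709 ones, applied directly to 0..1 channel values) are all assumptions for illustration.

```python
# Hypothetical blue -> green gradient, channels in the 0..1 range.
STOPS = [(0.05, 0.10, 0.40), (0.60, 0.95, 0.70)]


def luminance(rgb):
    """Approximate brightness of a pixel using Rec. 709 weights."""
    r, g, b = rgb
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def gradient_map(rgb, stops=STOPS):
    """Replace a pixel's color with the gradient color at its brightness.

    stops must hold at least two colors, evenly spaced from dark to light.
    """
    position = luminance(rgb) * (len(stops) - 1)
    index = min(int(position), len(stops) - 2)  # clamp so index + 1 is valid
    fraction = position - index
    low, high = stops[index], stops[index + 1]
    return tuple(a + (b - a) * fraction for a, b in zip(low, high))


print(gradient_map((0.0, 0.0, 0.0)))  # darkest pixel takes the first stop
print(gradient_map((0.5, 0.5, 0.5)))  # mid grey lands between the stops
```

in practice this kind of layer usually goes on at low opacity or with a blend mode, so it tints the image instead of replacing the colors outright.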

the last step is to add grain and sharpening with a camera raw filter.

et voilà!

i'm doing one for all our guys, gals, and nonbinary pals - here's a sneak peek for the next one!

45 notes
