#7 figure accelerator review


Text

7 Figure Accelerator Scam? The Truth Revealed

Curious about 7 Figure Accelerator? Learn how it works, what it costs, and if it’s legit. Read real reviews and see how to start. Discover now!

Making money online sounds like a dream, right? That’s exactly what I thought when I first came across 7 Figure Accelerator—a program that promises to fast-track your journey to a high-income business. With so many online courses making big claims, I was skeptical at first. But after diving in, I realized there’s more to it than just hype. In this article, I’ll break down my experience, share what you need to know about the 7 Figure Accelerator program, and help you decide if it’s the real deal. Let’s get started!

What is 7 Figure Accelerator?

If you’ve looked for ways to make money online, you may have seen 7 Figure Accelerator. It’s a coaching program that teaches people how to build a profitable online business fast. But what does it really offer? And does it actually work?

The program was created by Philip Johansen. He promises to help students grow a 7-figure business using a simple system. The focus is on high-ticket affiliate marketing. This means, instead of earning small commissions, you sell premium products for bigger profits. The 7 Figure Accelerator affiliate program lets members earn commissions while learning how to scale their business.

At first, I had doubts. I’ve seen many courses with big promises but little value. But after joining the 7 Figure Accelerator program, I saw it was different. It teaches real strategies that work today. You learn how to find the right audience and close sales without pressure. The steps are clear, and you get hands-on support.

So, is 7 Figure Accelerator legit? From my experience, it gives a clear plan for success in high-ticket affiliate marketing. But like anything, results depend on effort. In the next sections, I’ll cover what’s inside, the cost, and if it’s worth it.

My Experience with 7 Figure Accelerator (Personal Insights)

When I first heard about 7 Figure Accelerator, I was skeptical. I had tried different online business models before, and most were either too complicated or didn’t deliver results. But something about this program caught my attention. The idea of earning high-ticket commissions without spending months building a business from scratch sounded too good to ignore.

I decided to take the plunge. The first thing I noticed?



Text

7-Figure Accelerator Information Review!

What Is the 7-Figure Accelerator?

The 7-Figure Accelerator is a done-for-you (DFY) affiliate marketing program designed to help individuals generate passive income online—without technical skills, sales calls, or complicated setups. Created by seasoned entrepreneur Philip, this system aims to build, automate, and scale a profitable affiliate business within 90 days or less.

With the potential to earn $5,000+ per sale and up to $30,000 per month, it’s perfect for those looking to achieve financial freedom, flexible working hours, and a stress-free way to replace their 9-5 income. Unlike traditional affiliate programs, the 7-Figure Accelerator eliminates 95% of the work—no need to build websites, run ads, or create content. Instead, users simply copy and paste ready-made links while Philip’s expert team takes care of everything else.

How the 7-Figure Accelerator Drives Unlimited Traffic and Maximizes Earnings

Effortless Done-For-You Setup

The program takes care of all the technical work, including funnel creation, website setup, lead generation, and automation. No need to stress about coding, designing, or running ads—everything is handled for you.

High-Ticket, High-Converting Offers

Gain access to exclusive, high-ticket affiliate offers that pay $1,000 to $5,000 per sale. These carefully selected offers are designed for maximum profitability and high conversion rates.

Powerful Traffic Generation

The system leverages proven traffic strategies to drive unlimited visitors to your affiliate offers. It utilizes social media platforms like Instagram and TikTok, as well as other organic and paid traffic sources to maximize reach.

Fully Automated Follow-Up System

Once leads are captured, the system automatically follows up via email sequences and engagement tactics, ensuring consistent conversions without any manual effort.

Hands-Free Passive Income

With all the heavy lifting done for you, your only task is to spend just 20 minutes a day copying and pasting content. The system takes care of the rest, allowing you to generate income effortlessly while focusing on other areas of your life.

Step-by-Step: How to Earn Your First $1,000 in 24 Hours

Enroll: Secure your spot ($1,997 one-time).

Onboard: Philip’s team builds your funnel and trains you in 2 hours.

Copy-paste: Share your DFY link on 3 social platforms (20 mins).

Profit: Let automated emails/SMS convert leads while you binge Netflix.

Why Instagram & TikTok Are Perfect for Promoting 7-Figure Accelerator

With millions of active users and high engagement rates, Instagram and TikTok provide the perfect platforms to promote the 7-Figure Accelerator and maximize your reach.

✅ Viral Growth: Both platforms thrive on shareable content, allowing you to reach a massive audience in a short time.

✅ Eye-Catching Visuals: The program’s high-converting offers are ideal for visually driven content, making it easier to attract and engage potential buyers.

✅ Precision Targeting: Advanced ad targeting features help you connect with the right audience, ensuring higher conversion rates.

✅ Cost-Effective Traffic: Compared to traditional advertising, Instagram and TikTok offer a low-cost way to generate leads and sales.

✅ Long-Term Community Building: Establish a loyal following that you can nurture and monetize for ongoing success.

By leveraging these platforms, you can effectively promote 7-Figure Accelerator, generate consistent traffic, and boost your affiliate earnings with ease!

7-Figure Accelerator: Features & Benefits

High-Ticket Earnings: Earn commissions ranging from $1,000 to $5,000 per sale, allowing you to scale your income quickly.

Completely Done-for-You System: Everything from lead generation to funnel creation is fully automated, eliminating the need for manual work.

Beginner-Friendly: No technical skills required—perfect for those new to affiliate marketing.

Proven Success Blueprint: Based on a tested strategy that helps users go from $0 to $5,000 in just 30 days.

Expert Coaching & Support: Get access to weekly live Zoom calls with industry experts to help you stay on track and maximize results.

Exclusive Private Community: Connect with a members-only group to network, share insights, and learn from successful affiliates.

Lifetime Access to Premium Tools: Enjoy free lifetime access to essential tools like ClickFunnels, saving you $297/month on hosting and domain costs.

This program is designed to fast-track your success, providing everything you need to earn, automate, and scale your affiliate business effortlessly!

Who Can Benefit from the 7-Figure Accelerator?

Beginners: No prior experience or technical skills are required.

Busy Professionals: Perfect for those with limited time who want to earn passive income.

Stay-at-Home Parents: Offers a flexible way to generate income while managing family responsibilities.

Retirees: Provides an opportunity to supplement retirement income without the stress of a traditional job.

Entrepreneurs: Ideal for those looking to diversify their income streams and scale their online business.

Debt-Strapped Individuals: A fast-track solution to pay off debt and achieve financial freedom.

Pricing & Upsells

7-Figure Accelerator offers multiple pricing plans to suit each user’s business needs and desired results. The 7-Figure Accelerator pricing structure is as follows:

Main software: 7-Figure Accelerator ($1,000)

Normal price: valued at over $66,200

7-Figure Accelerator is currently being offered at an introductory price of $1,000, which is significantly lower than its purported value. This pricing strategy aims to make the software accessible to a wide range of users, from beginners to experienced marketers.


Pros of 7-Figure Accelerator

No cold calling, tech work, or content creation.

$50K/month potential in 90 days.

Done-for-you systems save 20+ hours/week.

1-year coaching reduces trial-and-error.

Cons of 7-Figure Accelerator:

✅ No issues were reported, and it performs flawlessly.

7-Figure Accelerator: Frequently Asked Questions

Do I need prior business experience to join the 12-month mentorship?

The program provides a 100% done-for-you business setup.

No prior experience is required.

Full training is included to help you scale effortlessly.

No special skills, qualifications, or degrees are needed.

Perfect for those who want a profitable business without the stress and the trial and error of starting from scratch.

Every question you have will be answered by experts along the way.

I’m not tech-savvy and don’t know how to use social media for business. Does this matter?

Zero social media experience is needed.

You’ll only spend 20 minutes a day posting ready-made content.

No need to brainstorm strategies or create unique content.

No captions to write, no trial and error, and no guesswork.

Simply copy, paste, and post—it’s that easy!

I don’t want to show my face on Instagram. Will this hold me back?

Not at all!

90% of mentorship students don’t show their faces on social media.

No dancing, singing, or performing is required.

We leverage Instagram’s algorithm to drive traffic and promote affiliate offers effectively.

Your privacy is fully respected while you build your income.

Can I pay the enrollment fee in installments?

Yes, flexible payment options are available.

Pay $1,997 upfront, or split it into 3 installments of $1,000 over 3 months.

You’ll easily earn back the investment within 1-2 months of starting.

If you don’t achieve results, we’ll work with you until you hit $30,000/month.

What It’s All About

The 7-Figure Accelerator is designed with one goal in mind—to eliminate the obstacles standing between you and true passive income. While most programs sell empty promises, Philip Johansen provides a ready-to-go, profit-generating system that works.

You don’t have to quit your job overnight. Instead, you can start building your income stream in as little as 20 minutes a day and potentially replace your paycheck within 90 days or less. With automated funnels and pre-built traffic sources, you can earn while you sleep—no guesswork, no endless trial and error.

This isn’t luck or wishful thinking—it’s about leveraging a proven system that’s already generating results with high-ticket affiliate offers.


Who Should Grab This (And Who Shouldn’t)

Perfect for:

Overworked employees craving freedom.

Parents who want more time (and money) for their kids.

Side hustlers tired of trading time for pennies.

Anyone who’s done with tech headaches and sales flops.

Not for:

Skeptics waiting for a “perfect” opportunity (spoiler: it doesn’t exist).

Lottery-minded folks who won’t spend 20 mins/day pasting links.

What Sets It Apart from the Rest

Unlike most courses that leave you to figure everything out alone, the 7-Figure Accelerator takes care of the hard work for you. Need help with lead generation? You'll receive proven viral templates for TikTok and Instagram. Not tech-savvy? Their team sets up and hosts everything for you. Concerned about scaling? You'll have access to high-ticket offers that pay up to $5,000 per sale with 50% commissions. This isn’t just a theory—it’s a proven system already delivering consistent daily sales for its users.

Final Verdict: Is It Worth It?

If you’re serious about escaping the daily grind, the choice is clear: YES. This isn’t just another affiliate marketing program—it’s a proven shortcut to success. With expert guidance, a fully automated system, and access to a high-paying, non-saturated niche, it removes the typical obstacles that hold beginners back. Plus, with a 14-day refund policy and many users making their first sale within 24 hours, the risk is low, and the rewards are high.

The 7-Figure Accelerator isn’t a gamble—it’s a strategic move toward financial freedom. And to make it even better, when you join now, you'll unlock incredible bonuses designed to accelerate your results even faster. Don't wait—take action today and start building a business that works for you!




Text

7-Figure Accelerator by Philip Johansen Review

The 7-Figure Accelerator: Transforming Ordinary Individuals into Online Success Stories. Philip Johansen’s journey from earning mere dollars after hundreds of hours of work to generating multiple six-figure incomes within a year is nothing short of inspirational. His transformation underscores the potential of the…


Text

In advocating for inclusivity, “Superman Smashes the Klan” draws (quite literally) on an internationally-inflected style that brings manga, Max Fleischer, and DC house style together to create a charming mosaic that advances the themes and meanings of the story. #Superman 1/12

In their review of SStK, The New York Times notes: “Gurihiru's rendering is a mash-up that pairs contemporary Japanese manga, with its conventional large eyes, and clean-lined, charmingly retro figuration reminiscent…” 2/12

“…of the Fleischer Studios Superman cartoons of the early 1940s, full of striking, often dreamy swaths of uncluttered color. Weirdly, it works. The vibrant visual world is controlled and inviting.” 3/12

“Despite the hilarity of Superman's enormous, almost frame-breaking body, Gurihiru's cross-cultural artistic approach avoids the gimmicky.” 4/12

Gurihiru isn’t a pen-name, per se, but the name of a studio built through the collaboration of Japanese illustrators Chifuyu Sasaki and Naoko Kawano. After being told their work was inappropriate for the manga marketplace, Gurihiru broke into the US market instead. 5/12

Interestingly, the liminal status of the Gurihiru style contributes quite directly, and admirably, to the cultural motifs of SStK. The work is neither Japanese nor American, representing a truly intersectional style. 6/12

This, of course, speaks to the text’s emphasis on inclusion and diversity, and to a powerful underlying suggestion that Superman can be an American icon but also an IP that belongs, fundamentally, to the world (and has since at least WWII). 7/12

At the same time, the choice of situating our Chinese-descended main characters within a largely Japanese visual style helps naturalize the characters within the largely White community they inhabit. Simply put, the visual style doesn’t Other them to the same extent. 8/12

Finally, the Gurihiru style can be seen to add an emotional expressiveness characteristic of shojo-style manga, with its emphasis on what Jennifer S. Prough identifies as “concepts of emotion, intimacy, and community.” 9/12

Where the house style of superhero comics (and Fleischer animation) tends to emote more through body language, the shojo style seen in SStK places emphasis on nuanced human facial expression, as clearly indicated in the sheer number of close-ups throughout. 10/12

The result is a Superman comic with a rare capacity to explore the themes of emotion, intimacy, and community that Prough identifies in shojo. Clearly, these same themes are deeply important to SStK. 11/12

The broader result is a style of illustration that is original, dynamic, and deftly synchronized to the thematic needs of Yang’s story, a visual contribution that accelerates the comic to a level of success that might not be possible without the Gurihiru approach. 12/12


Text

Could AI slow science?

New Post has been published on https://thedigitalinsider.com/could-ai-slow-science/


AI leaders have predicted that it will enable dramatic scientific progress: curing cancer, doubling the human lifespan, colonizing space, and achieving a century of progress in the next decade. Given the cuts to federal funding for science in the U.S., the timing seems perfect, as AI could replace the need for a large scientific workforce.

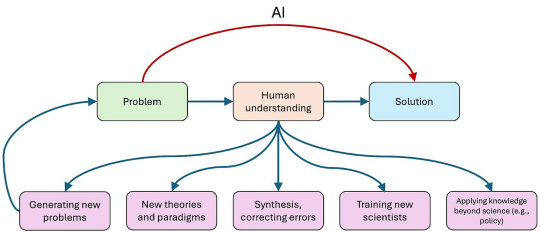

It’s a common-sense view, at least among technologists, that AI will speed science greatly as it gets adopted in every part of the scientific pipeline — summarizing existing literature, generating new ideas, performing data analyses and experiments to test them, writing up findings, and performing “peer” review.

But many early common-sense predictions about the impact of a new technology on an existing institution proved badly wrong. The Catholic Church welcomed the printing press as a way of solidifying its authority by printing Bibles. The early days of social media led to wide-eyed optimism about the spread of democracy worldwide following the Arab Spring.

Similarly, the impact of AI on science could be counterintuitive. Even if individual scientists benefit from adopting AI, it doesn’t mean science as a whole will benefit. When thinking about the macro effects, we are dealing with a complex system with emergent properties. That system behaves in surprising ways because it is not a market. It is better than markets at some things, like rewarding truth, but worse at others, such as reacting to technological shocks. So far, on balance, AI has been an unhealthy shock to science, stretching many of its processes to the breaking point.

Any serious attempt to forecast the impact of AI on science must confront the production-progress paradox. The rate of publication of scientific papers has been growing exponentially, increasing 500-fold between 1900 and 2015. But actual progress, by any available measure, has been constant or even slowing. So we must ask how AI is affecting, and will affect, the factors that have led to this disconnect.

Our analysis in this essay suggests that AI is likely to worsen the gap. This may not be true in all scientific fields, and it is certainly not a foregone conclusion. By carefully and urgently taking actions such as those we suggest below, it may be possible to reverse course. Unfortunately, AI companies, science funders, and policy makers all seem oblivious to what the actual bottlenecks to scientific progress are. They are simply trying to accelerate production, which is like adding lanes to a highway when the slowdown is actually caused by a toll booth. It’s sure to make things worse.

1. Science has been slowing — the production-progress paradox

2. Why is progress slowing? Can AI help?

3. Science is not ready for software, let alone AI

4. AI might prolong the reliance on flawed theories

5. Human understanding remains essential

6. Implications for the future of science

7. Final thoughts

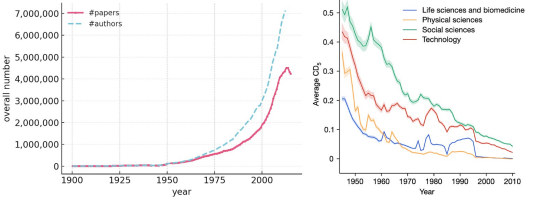

The total number of published papers is increasing exponentially, doubling every 12 years. The total number of researchers who have authored a research paper is increasing even more quickly. And between 2000 and 2021, investment in research and development increased fourfold across the top seven funders (the US, China, Japan, Germany, South Korea, the UK, and France).
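The two growth figures quoted in this essay (a 500-fold increase between 1900 and 2015, and a doubling roughly every 12 years) can be cross-checked with a few lines of Python. This is a sketch that assumes steady exponential growth over the whole period, which real publication counts only approximate; the function names are illustrative and not taken from the cited studies.

```python
import math


def doubling_time(fold_increase: float, years: float) -> float:
    """Years per doubling implied by a total fold increase over a period,
    assuming steady exponential growth."""
    doublings = math.log2(fold_increase)  # n doublings satisfy 2**n == fold_increase
    return years / doublings


def growth_factor(years: float, doubling_years: float) -> float:
    """Total multiplication factor after `years` at a fixed doubling time."""
    return 2 ** (years / doubling_years)


# 500-fold growth between 1900 and 2015, as quoted in the text
print(f"implied doubling time: {doubling_time(500, 2015 - 1900):.1f} years")  # 12.8 years
```

Under this assumption the 500-fold figure implies a doubling about every 12.8 years, so the two numbers are roughly consistent; small differences in the doubling time compound into large differences in the total factor over a century, which is worth keeping in mind when comparing such estimates.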

But does this mean faster progress? Not necessarily. Some papers lead to fundamental breakthroughs that change the trajectory of science, while others make minor improvements to known results.

Genuine progress results from breakthroughs in our understanding. For example, we understood plate tectonics in the middle of the last century — the idea that the continents move. Before that, geologists weren’t even able to ask the right questions. They tried to figure out the effects of the cooling of the Earth, believing that that’s what led to geological features such as mountains. No amount of findings or papers in older paradigms of geology would have led to the same progress that plate tectonics did.

So it is possible that the number of papers is increasing exponentially while progress is not increasing at the same rate, or is even slowing down. How can we tell if this is the case?

One challenge in answering this question is that, unlike the production of research, progress does not have clear, objective metrics. Fortunately, an entire research field, the “science of science” or metascience, is trying to answer this question. Metascience uses the scientific method to study scientific research. It tackles questions like: How often can studies be replicated? What influences the quality of a researcher’s work? How do incentives in academia affect scientific outcomes? How do different funding models for science affect progress? And how quickly is progress really happening?

Left: The number of papers authored and authors of research papers have been increasing exponentially (from Dong et al., redrawn to linear scale using a web plot digitizer). Right: The disruptiveness of papers is declining over time (from Park et al.).

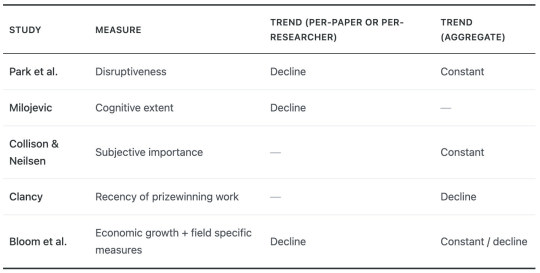

Strikingly, many findings from metascience suggest that progress has been slowing down, despite dramatic increases in funding, the number of papers published, and the number of people who author scientific papers. We collect some evidence below; Matt Clancy reviews many of these findings in much more depth.

1) Park et al. find that “disruptive” scientific work represents an ever-smaller fraction of total scientific output. Despite an exponential increase in the number of published papers and patents, the number of breakthroughs is roughly constant.

2) Research that introduces new ideas is more likely to coin new terms. Milojević counts the unique phrases used in the titles of scientific papers over time as a measure of the “cognitive extent” of science, and finds that while this metric increased until the early 2000s, it has since entered a period of stagnation in which the number of unique phrases used in research-paper titles has gone down.
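A rough sketch of a cognitive-extent style measurement: count the distinct phrases in an equal-size random sample of titles for each year, so that years with more papers do not score higher just because they have more titles. Word bigrams stand in here for the phrase extraction used in the actual study, and the fixed-sample design is an assumption about how such a measure can be normalized; `title_phrases` and `cognitive_extent` are hypothetical names.

```python
import random
import re


def title_phrases(title: str) -> set[str]:
    """Crude stand-in for phrase extraction: the set of lowercase word bigrams."""
    words = re.findall(r"[a-z0-9]+", title.lower())
    return {" ".join(pair) for pair in zip(words, words[1:])}


def cognitive_extent(titles_by_year: dict[int, list[str]], sample_size: int,
                     seed: int = 0) -> dict[int, int]:
    """Distinct phrases per year, counted on an equal-size random sample of titles."""
    rng = random.Random(seed)  # seeded so repeated runs draw the same samples
    extent = {}
    for year, titles in sorted(titles_by_year.items()):
        if len(titles) < sample_size:
            continue  # too few titles to draw a full sample this year
        unique: set[str] = set()
        for title in rng.sample(titles, sample_size):
            unique |= title_phrases(title)
        extent[year] = len(unique)
    return extent
```

A falling count for a fixed sample size would mean titles are drawing on a narrower vocabulary, which is the kind of stagnation the text describes.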

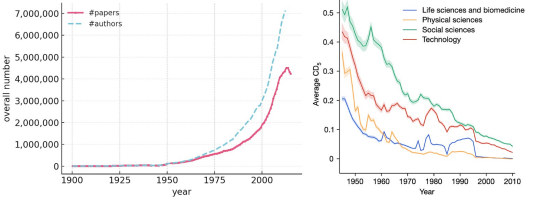

3) Patrick Collison and Michael Nielsen surveyed researchers across fields about how they perceived the most important breakthroughs in their fields over time, namely those that won a Nobel Prize. They asked scientists to compare Nobel Prize-winning research from the 1910s to the 1980s.

They found that scientists considered advances from earlier decades to be roughly as important as the ones from more recent decades, across Medicine, Physics, and Chemistry. Despite the vast increases in funding, published papers, and authors, the most important breakthroughs today are about as impressive as those in the decades past.

4) Matt Clancy complements this with an analysis of what fraction of discoveries that won a Nobel Prize in a given year were published in the preceding 20 years. He found that this number dropped from 90% in 1970 to 50% in 2015, suggesting that either transformative discoveries are happening at a slower pace, or that it takes longer for discoveries to be recognized as transformative.
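The measure behind this figure can be sketched in two steps: for each prize year, the share of prize-winning papers published within the preceding 20 years, then a 10-year moving average to smooth year-to-year noise. The data layout, the inclusive window bounds, and the choice of a trailing (rather than centred) average are assumptions, and the function names are hypothetical.

```python
def share_recent(pub_years_by_prize_year: dict[int, list[int]],
                 window: int = 20) -> dict[int, float]:
    """For each prize year, the fraction of prize-winning papers published in the
    `window` years up to and including the prize year (inclusive bounds assumed)."""
    shares = {}
    for prize_year, pub_years in pub_years_by_prize_year.items():
        if not pub_years:
            continue  # no papers recorded for this prize year
        recent = sum(1 for y in pub_years if prize_year - window <= y <= prize_year)
        shares[prize_year] = recent / len(pub_years)
    return shares


def trailing_moving_average(series: dict[int, float], span: int = 10) -> dict[int, float]:
    """Mean of the last `span` entries (in year order) ending at each year."""
    years = sorted(series)
    averages = {}
    for i in range(span - 1, len(years)):
        window_years = years[i - span + 1 : i + 1]
        averages[years[i]] = sum(series[y] for y in window_years) / span
    return averages
```

Note that the moving average runs over consecutive entries, so years with no recorded prize data are simply skipped rather than treated as zero.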

Share of papers describing each year’s Nobel Prize-winning work that were published in the preceding 20 years. 10-year moving average. Source: Clancy based on data from Li et al.

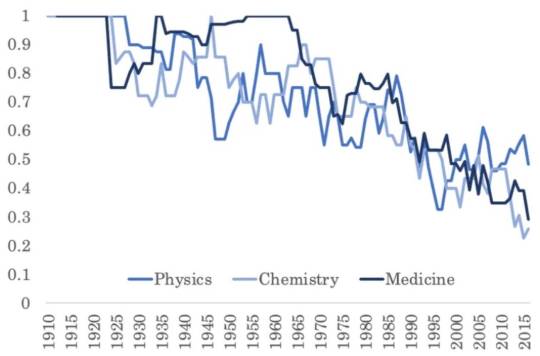

5) Bloom et al. analyze research output from an economic perspective. Assuming that economic growth ultimately comes from new ideas, the constant or declining rate of growth implies that the exponential increase in the number of researchers is being offset by a corresponding decline in the output per researcher. They find that this pattern holds true when drilling down into specific areas, including semiconductors, agriculture, and medicine (where the progress measures are Moore’s law, crop yield growth, and life expectancy, respectively).
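As we understand their setup, Bloom et al.’s accounting is a ratio: research productivity is the growth rate of a progress measure (their “idea output”) divided by the effective number of researchers. The sketch below assumes a constant output growth rate of ln 2 / 2 ≈ 0.35 per year (what a two-year doubling implies) and a hypothetical 7% annual growth in researchers; neither number is taken from the paper.

```python
import math


def research_productivity(idea_output_growth: float, effective_researchers: float) -> float:
    """Idea output (growth rate of the progress measure) per effective researcher."""
    return idea_output_growth / effective_researchers


def relative_productivity(years: float, researcher_growth: float) -> float:
    """Productivity after `years`, relative to year 0, when idea output growth stays
    constant and the researcher count grows at `researcher_growth` per year."""
    g = math.log(2) / 2  # assumed constant output growth; it cancels in the ratio
    s0 = 1.0             # researcher count normalized to 1 in year 0
    s_t = s0 * (1 + researcher_growth) ** years
    return research_productivity(g, s_t) / research_productivity(g, s0)


# Hypothetical: researchers grow 7% a year while output growth stays flat.
for years in (0, 10, 20, 30, 40):
    print(years, round(relative_productivity(years, 0.07), 3))
```

Under these assumptions productivity shrinks by exactly the researcher growth factor each year, which is the pattern the text describes: flat growth bought with exponentially more researchers.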

The decline of research productivity. Note that economists use “production” as a catch-all term, with paper and patent counts, growth, and other metrics being different ways to measure it. We view production and progress as fundamentally different constructs, so we use the term production in a narrower sense. Keep in mind that in the figure, “productivity” isn’t based on paper production but on measures that are better viewed as progress measures. Source: Bloom et al.

Of course, there are shortcomings in each of the metrics above. This is to be expected: since progress doesn’t have an objective metric, we need to rely on proxies for measuring it, and these proxies will inevitably have some flaws.

For example, Park et al. used citation patterns to flag papers as “disruptive”: if follow-on citations to a given paper don’t also cite the studies this paper cited, the paper is more likely to be considered disruptive. One criticism of the paper is that this could simply be a result of how citation practices have evolved over time, not a result of whether a paper is truly disruptive. And the metric does flag some breakthroughs as non-disruptive — for example, AlphaFold is not considered a disruptive paper by this metric.
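The citation rule described above is, as far as we know, the CD (consolidation-disruption) index. The sketch computes it as commonly defined for a single focal paper on a toy citation graph. The function name and data layout are hypothetical, and it ignores the fixed citation window (for example, five years) that published analyses typically apply.

```python
def cd_index(focal: str, focal_refs: set[str], later_citations: dict[str, set[str]]) -> float:
    """CD index of `focal`, as commonly defined.

    `later_citations` maps each later paper to the set of papers it cites.
    A later paper counts only if it cites the focal paper or any of its references:
      +1 if it cites the focal paper but none of its references (disruptive),
      -1 if it cites the focal paper and at least one reference (consolidating),
       0 if it cites only the references.
    """
    scores = []
    for cited in later_citations.values():
        f = int(focal in cited)
        b = int(bool(cited & focal_refs))
        if f or b:
            scores.append(-2 * f * b + f)
    if not scores:
        raise ValueError("no later paper cites the focal paper or its references")
    return sum(scores) / len(scores)


refs = {"R1", "R2"}
graph = {"A": {"F"}, "B": {"F", "R1"}, "C": {"R2"}, "D": {"X"}}
print(cd_index("F", refs, graph))  # (1 - 1 + 0) / 3 = 0.0
```

Values range from -1 (successors always cite the focal paper alongside its sources) to +1 (successors cite it instead of its sources). Because the score depends only on who cites what, any drift in citation habits shifts it, which is the criticism the text raises.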

But taken together, the findings do suggest that scientific progress is slowing down, at least compared to the volume of papers, researchers, and resources. Still, this is an area where further research would be fruitful — while the decline in the pace of progress relative to inputs seems very clear, it is less clear what is happening at an aggregate level. Furthermore, there are many notions of what the goals of science are and what progress even means, and it is not clear how to connect the available progress measures to these higher-level definitions.

Summary of a few major lines of evidence of the slowdown in scientific progress

There are many hypotheses for why progress could be slowing. One set of hypotheses is that slowdown is an intrinsic feature of scientific progress, and is what we should expect. For example, there’s the low-hanging fruit hypothesis — the easy scientific questions have already been answered, so what remains to be discovered is getting harder.

This is an intuitively appealing idea. But we don’t find this convincing. Adam Mastroianni gives many compelling counter-arguments. He points out that we’ve been wrong about this over and over and lists many comically mis-timed assessments of scientific fields reaching saturation just before they ended up undergoing revolutions, such as physics in the 1890s.

While it’s true that lower-hanging fruits get picked first, there are countervailing factors. Over time, our scientific tools improve and we stand on the tower of past knowledge, making it easier to reach higher. Often, the benefits of improved tools and understanding are so transformative that whole new fields and subfields are created. New fields from the last 50-100 years include computer science, climate science, cognitive neuroscience, network science, genetics, molecular biology, and many others. Effectively, we’re plucking fruit from new trees, so there is always low-hanging fruit.

In our view, the low-hanging fruit hypothesis can at best partly explain slowdowns within fields. So it’s worth considering other ideas.

The second set of hypotheses is less fatalistic. They say that there’s something suboptimal about the way we’ve structured the practice of science, and so the efficiency of converting scientific inputs into progress is dropping. In particular, one subset of hypotheses flags the increase in the rate of production itself as the causal culprit — science is slowing down because it is trying to go too fast.

How could this be? The key is that any one scientist’s attention is finite, so they can only pay attention to a limited number of papers every year. That makes it too risky for authors to depart from the canon: would-be breakthrough papers would be lost in the noise and would not get the attention of a critical mass of scholars. The greater the rate of production, the more noise there is, so truly novel papers receive less attention and are less likely to break through into the canon.

Chu and Evans explain:

when the number of papers published each year grows very large, the rapid flow of new papers can force scholarly attention to already well-cited papers and limit attention for less-established papers—even those with novel, useful, and potentially transformative ideas. Rather than causing faster turnover of field paradigms, a deluge of new publications entrenches top-cited papers, precluding new work from rising into the most-cited, commonly known canon of the field.

These arguments, supported by our empirical analysis, suggest that the scientific enterprise’s focus on quantity may obstruct fundamental progress. This detrimental effect will intensify as the annual mass of publications in each field continues to grow.
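The finite-attention argument can be made concrete with a deliberately simple toy model. This is an assumption for illustration, not the model used by Chu and Evans: each scientist reads a fixed number of papers a year, spends a fixed share of that reading on the established canon, and spreads the rest evenly across the year’s new papers. The function name and all numbers are hypothetical.

```python
def reach_of_new_paper(papers_read_per_year: float, canon_share: float,
                       new_papers_per_year: float) -> float:
    """Toy model: chance that one scientist reads a given new paper in its first year.

    Assumes a fixed reading budget, a fixed share of it spent on the established
    canon, and the remainder spread evenly over that year's new papers.
    """
    if not 0.0 <= canon_share <= 1.0:
        raise ValueError("canon_share must be between 0 and 1")
    if new_papers_per_year <= 0:
        raise ValueError("new_papers_per_year must be positive")
    fresh_attention = papers_read_per_year * (1.0 - canon_share)
    return min(1.0, fresh_attention / new_papers_per_year)


for output in (10_000, 20_000, 40_000):
    print(output, reach_of_new_paper(250, 0.5, output))
```

With 250 papers read a year and half of that going to the canon, doubling new output from 10,000 to 20,000 papers halves a new paper’s reach from 1.25% to 0.625% of scientists. Real attention is not spread evenly, and the concentration on already-cited work that Chu and Evans describe would make the reach of novel papers lower still.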

Another causal mechanism relates to scientists’ publish-or-perish incentives. Production is easy to measure, and progress is hard to measure. So universities and other scientific institutions judge researchers based on measurable criteria such as how many papers they publish and the amount of grant funding they receive. It is not uncommon for scientists to have to publish a certain number of peer-reviewed papers to be hired or to get tenure (either due to implicit norms or explicit requirements).

The emphasis on production metrics seems to be worsening over time. Physics Nobel winner Peter Higgs famously noted that he wouldn’t even have been able to get a job in modern academia because he wouldn’t be considered productive enough.

So individual researchers’ careers might be better off if they are risk averse, even though this might reduce the collective rate of progress. Rzhetsky et al. find evidence of this phenomenon in biomedicine, where experiments tend to focus too heavily on known molecules that are already considered important (which are more likely to lead to a published paper) rather than on riskier experiments that could lead to genuine breakthroughs. Worryingly, they find this phenomenon worsening over time.

This completes the feedback loop: career incentives lead to researchers publishing more papers, and disincentivize novel research that results in true breakthroughs (but might only result in a single paper after years of work).

If slower progress is indeed being caused by faster production, how will AI impact it? Most obviously, automating parts of the scientific process will make it even easier for scientists to chase meaningless productivity metrics. AI could make individual researchers more creative but decrease the creativity of the collective because of a homogenizing effect. AI could also exacerbate the inequality of attention and make it even harder for new ideas to break through. Existing search technology, such as Google Scholar, seems to be having exactly this effect.

To recap, so far we’ve argued that if the slowdown in science is caused by overproduction, AI will make it worse. In the next few sections, we’ll discuss why AI could worsen the slowdown regardless of what’s causing it.

How do researchers use AI? In many ways: AI-based modeling to uncover trends in data using sophisticated pattern-matching algorithms; hand-written machine learning models specified based on expert knowledge; or even generative AI to write the code that researchers previously wrote. While some applications, such as using AI for literature review, don’t involve writing code, most applications of AI for science are, in essence, software development.

Unfortunately, scientists are notoriously poor software engineers. Practices that are bog-standard in the industry, like automated testing, version control, and following programming design guidelines, are largely absent or haphazardly adopted in the research community. These are practices that were developed and standardized over the last six decades of software engineering to prevent bugs and ensure the software works as expected.

Worse, there is little scrutiny of the software used in scientific studies. While peer review is a long and arduous step in publishing a scientific paper, it does not involve reviewing the code accompanying the paper, even though most of the “science” in computational research is being carried out in the code and data accompanying a paper, and only summarized in the paper itself.

In fact, papers often fail to even share the code and data used to generate results, so even if other researchers are willing to review the code, they don’t have the means to. Gabelica et al. found that of 1,800 biomedical papers that pledged to share their data and code, 93% did not end up sharing these artifacts. This even affects results in the most prominent scientific journals: Stodden et al. contacted the authors of 204 papers published in Science, one of the top scientific journals, to get the code and data for their study. Only 44% responded.

When researchers do share the code and data they used, it is often disastrously wrong. Even simple tools, like Excel, have notoriously led to widespread errors in various fields. A 2016 study found that one in five genetics papers suffer from Excel-related errors, for example, because the names of genes (say, Septin 2) were automatically converted to dates (September 2). Similarly, it took decades for most scientific communities to learn how to use simple statistics responsibly.
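The gene-name problem is a good example of an error that a simple automated check can catch before it spreads. Below is a minimal Python sketch, assuming gene symbols are available as plain strings before they ever pass through a spreadsheet. It flags symbols that start with a month abbreviation followed by a number, the pattern behind the Septin 2 example. The month list and the function name `date_like_symbols` are hypothetical and illustrative; this is a heuristic for manual review, not a model of how Excel or any other program actually parses dates.

```python
import re

# Illustrative (not exhaustive) month abbreviations that spreadsheet software
# may interpret as the start of a date when followed by a number, e.g. "SEPT2".
MONTH_PREFIXES = (
    "JAN", "FEB", "MAR", "MARCH", "APR", "MAY", "JUN", "JUL",
    "AUG", "SEP", "SEPT", "OCT", "NOV", "DEC",
)

# Longest prefixes first so alternatives like "MARCH" are tried before "MAR".
_DATE_LIKE = re.compile(
    r"^(?:" + "|".join(sorted(MONTH_PREFIXES, key=len, reverse=True)) + r")-?\d{1,2}$",
    re.IGNORECASE,
)


def date_like_symbols(symbols):
    """Return the symbols that match a month-plus-number pattern.

    This is a heuristic flag for manual review, not a reimplementation
    of any spreadsheet's date parser.
    """
    return [s for s in symbols if _DATE_LIKE.match(s.strip())]


if __name__ == "__main__":
    genes = ["SEPT2", "MARCH1", "DEC1", "TP53", "BRCA1"]
    print(date_like_symbols(genes))  # prints ['SEPT2', 'MARCH1', 'DEC1']
```

Running a check like this as part of an automated test suite, before any spreadsheet step, is a small instance of the kind of practice that is routine in industry but rare in research code.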

AI opens a whole new can of worms. The AI community often advertises AI as a silver bullet without realizing how difficult it is to detect subtle errors. Unfortunately, it takes much less competence to use AI tools than to understand them deeply and learn to identify errors. Like other software-based research, errors in AI-based science can take a long time to uncover. If the widespread adoption of AI leads to researchers spending more time and effort conducting or building on erroneous research, it could slow progress, since researcher time and effort are wasted in unproductive research directions.

Unfortunately, we’ve found that AI has already led to widespread errors. Even before generative AI, traditional machine learning led to errors in over 600 papers across 30 scientific fields. In many cases, the affected papers constituted the majority of the surveyed papers, raising the possibility that in many fields, the majority of AI-enabled research is flawed. Others have found that AI tools are often used with inappropriate baseline comparisons, making it incorrectly seem like they outperform older methods. These errors are not just theoretical: they affect the potential real-world deployment of AI too. For example, Roberts et al. found that of 400+ papers using AI for COVID-19 diagnosis, none produced clinically useful tools due to methodological flaws.

Applications of generative AI can result in new types of errors. For example, while AI can aid in programming, code generated using AI often has errors. As AI adoption increases, we will discover more applications of AI for science. We suspect we’ll find widespread errors in many of these applications.

Why is the scientific community so far behind software engineering best practices? In engineering applications, bugs are readily visible through tests, or in the worst case, when they are deployed to customers. Companies have strong incentives to fix errors to maintain the quality of their applications, or else they will lose market share. As a result, there is a strong demand for software engineers with deep expertise in writing good software (and now, in using AI well). This is why software engineering practices in the industry are decades ahead of those in research. In contrast, there are few incentives to correct flawed scientific results, and errors often persist for years.

That is not to say science should switch from a norms-based to a market-based model. But it shouldn’t be surprising that there are many problems markets have solved that science hasn’t — such as developing training pipelines for software engineers. Where such gaps between science and the industry emerge, scientific institutions need to intentionally adopt industry best practices to ensure science continues to innovate, without losing what makes science special.

In short, science needs to catch up to a half century of software engineering, and fast. Otherwise, its embrace of AI will lead to an avalanche of errors and create headwinds, not tailwinds, for progress.

AI could help too. There are many applications of AI to spot errors. For example, the Black Spatula project and the YesNoError project use AI to uncover flaws in research papers. In our own work, we’ve developed benchmarks aiming to spur the development of AI agents that automatically reproduce papers. Given the utility of generative AI for writing code, AI itself could be used to improve researchers’ software engineering practices, such as by providing feedback, suggestions, best practices, and code reviews at scale. If such tools become reliable and see widespread adoption, AI could be part of the solution by helping avoid wasted time and effort building on erroneous work. But all of these possibilities require interventions from journals, institutions, and funding agencies to incentivize training, synthesis, and error detection rather than production alone.

One of the main uses of AI for science is modeling. Older modeling techniques required coming up with a hypothesis for how the world works, then using statistical models to make inferences about this hypothesis.

In contrast, AI-based modeling treats this process as a black box. Instead of making a hypothesis about the world and improving our understanding based on the model’s results, it simply tries to improve our ability to predict what outcomes would occur based on past data.

Leo Breiman illustrated the differences between these two modeling approaches in his landmark paper “Statistical Modeling: The Two Cultures”. He strongly advocated for AI-based modeling, often on the basis of his experience in the industry. A focus on predictive accuracy is no doubt helpful in the industry. But it could hinder progress in science, where understanding is crucial.

Why? In a recent commentary in the journal Nature, we illustrated this with an analogy to the geocentric model of the Universe in astronomy. The geocentric model, which places the Earth at the center of the Universe, was very accurate at predicting the motion of planets. Workarounds like “epicycles” made these predictions accurate. (Epicycles were small circles added to each planet’s trajectory around the Earth.)

Whenever a discrepancy between the model’s predictions and the experimental readings was observed, astronomers added an epicycle to improve the model’s accuracy. The geocentric model was so accurate at predicting planets’ motions that many modern planetariums still use it to compute planets’ trajectories.

Left: The geocentric model of the Universe eventually became extremely complex due to the large number of epicycles. Right: The heliocentric model was far simpler.

How was the geocentric model of the Universe overturned in favor of the heliocentric model — the model with the planets revolving around the Sun? It couldn’t be resolved by comparing the accuracy of the two models, since the accuracy of the models was similar. Rather, it was because the heliocentric model offered a far simpler explanation for the motion of planets. In other words, advancing from geocentrism to heliocentrism required a theoretical advance, rather than simply relying on the more accurate model.

This example shows that scientific progress depends on advances in theory. No amount of improvement in predictive accuracy could have gotten us to the heliocentric model without updating the theory of how planets move.

Let’s come back to AI for science. AI-based modeling is no doubt helpful in improving predictive accuracy. But it doesn’t lend itself to an improved understanding of these phenomena. AI might be fantastic at producing the equivalents of epicycles across fields, leading to the prediction-explanation fallacy.

In other words, if AI allows us to make better predictions from incorrect theories, it might slow down scientific progress if this results in researchers using flawed theories for longer. In the extreme case, fields would be stuck in an intellectual rut even as they excel at improving predictive accuracy within existing paradigms.

Could advances in AI help overcome this limitation? Maybe, but not without radical changes to modeling approaches and technology, and there is little incentive for the AI industry to innovate on this front. So far, improvements in predictive accuracy have greatly outpaced improvements in the ability to model the underlying phenomena accurately.

Prediction without understanding: Vafa et al. show that a transformer model trained on 10 million planetary orbits excels at predicting orbits without figuring out the underlying gravitational laws that produce those orbits.

In solving scientific problems, scientists build up an understanding of the phenomena they study. It might seem like this understanding is just a way to get to the solution. So if we can automate the process of going from problem to solution, we don’t need the intermediate step.

The reality is closer to the opposite. Solving problems and writing papers about them can be seen as a ritual that leads to the real prize, human understanding, without which there can be no scientific progress.

Fields Medal-winning mathematician William Thurston wrote an essay brilliantly illustrating this. At the outset, he emphasizes that the point of mathematics is not simply to figure out the truth value for mathematical facts, but rather the accompanying human understanding:

…what [mathematicians] are doing is finding ways for people to understand and think about mathematics.

The rapid advance of computers has helped dramatize this point, because computers and people are very different. For instance, when Appel and Haken completed a proof of the 4-color map theorem using a massive automatic computation, it evoked much controversy. I interpret the controversy as having little to do with doubt people had as to the veracity of the theorem or the correctness of the proof. Rather, it reflected a continuing desire for human understanding of a proof, in addition to knowledge that the theorem is true.

On a more everyday level, it is common for people first starting to grapple with computers to make large-scale computations of things they might have done on a smaller scale by hand. They might print out a table of the first 10,000 primes, only to find that their printout isn’t something they really wanted after all. They discover by this kind of experience that what they really want is usually not some collection of “answers”—what they want is understanding. [emphasis in original]

He then describes his experience as a graduate student working on the theory of foliations, which was then a center of attention for many mathematicians. After he published a number of papers proving the most important theorems in the field, people counterintuitively began to leave the field:

I heard from a number of mathematicians that they were giving or receiving advice not to go into foliations—they were saying that Thurston was cleaning it out. People told me (not as a complaint, but as a compliment) that I was killing the field. Graduate students stopped studying foliations, and fairly soon, I turned to other interests as well.

I do not think that the evacuation occurred because the territory was intellectually exhausted—there were (and still are) many interesting questions that remain and that are probably approachable. Since those years, there have been interesting developments carried out by the few people who stayed in the field or who entered the field, and there have also been important developments in neighboring areas that I think would have been much accelerated had mathematicians continued to pursue foliation theory vigorously.

Today, I think there are few mathematicians who understand anything approaching the state of the art of foliations as it lived at that time, although there are some parts of the theory of foliations, including developments since that time, that are still thriving.

Two things led to this desertion. First, the results he documented were written in a way that was hard to understand. This discouraged newcomers from entering the field. Second, even though the point of mathematics is building up human understanding, the way mathematicians typically get credit for their work is by proving theorems. If the most prominent results in a field have already been proven, that leaves few incentives for others to understand a field’s contributions, because they can’t prove further results (which would ultimately lead to getting credit).

In other words, researchers are incentivized to prove theorems. More generally, researchers across fields are incentivized to find solutions to scientific problems. But this incentive only leads to progress because the process of proving theorems or finding solutions to problems also leads to building human understanding. As the desertion of work on foliations shows, when there is a mismatch between finding solutions to problems and building human understanding, it can result in slower progress.

This is precisely the effect AI might have: by solving open research problems without producing the accompanying understanding, AI could reduce the incentives to build understanding and erode this useful byproduct. Using AI to short-circuit the process of understanding is like using a forklift at the gym. You can lift heavier weights with it, sure, but that’s not why you go to the gym.

AI could short-circuit the process of building human understanding, which is essential to scientific progress

Of course, mathematics might be an extreme case, because human understanding is the end goal of (pure) mathematics, not simply knowing the truth value of mathematical statements. This might not be the case for many applications of science, where the end goal is to make progress towards a real-world outcome rather than human understanding, say, weather forecasting or materials synthesis.

Most fields lie in between these two extremes. If we use AI to bypass human understanding, or worse, retain only illusions of understanding, we might lose the ability to train new scientists, develop new theories and paradigms, synthesize and correct results, apply knowledge beyond science, or even generate new and interesting problems.

Studies across scientific fields have found evidence for some of these effects. For example, Hao et al. collect data from six fields and find that papers that adopt AI are more likely to focus on providing solutions to known problems and working within existing paradigms than on generating new problems.

Of course, AI can also be used to build up tacit knowledge, such as by helping people understand mathematical proofs or other scientific knowledge. But this requires fundamental changes to how science is organized. Today’s career incentives and social norms prize solutions to scientific problems over human understanding. As AI adoption accelerates, we need changes to incentives to make sure human understanding is prioritized.

Over the last decade, scientists have been in a headlong rush to adopt AI. The speed has come at the expense of any ability to adapt slow-moving scientific institutional norms to maintain quality control and identify and preserve what is essentially human about science. As a result, the trend is likely to worsen the production-progress paradox, accelerating paper publishing but only digging us deeper into the hole with regard to true scientific progress.

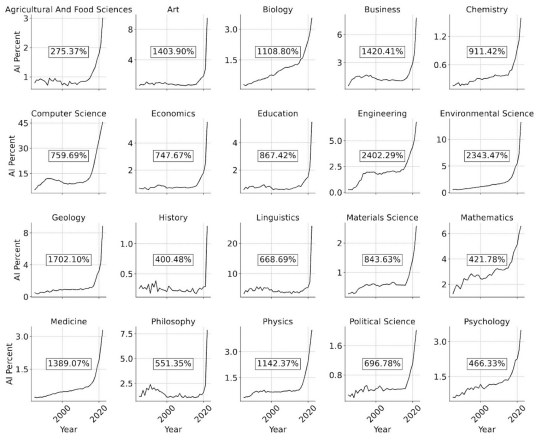

The number of papers that use AI quadrupled across 20 fields between 2012 and 2022 — even before the adoption of large language models. Figure by Duede et al.

So, what should the scientific community do differently? Let’s talk about the role of individual researchers, funders, publishers and other gatekeepers, and AI companies.

Individual researchers should be more careful when adopting AI. They should build software engineering skills, learn how to avoid a long and growing list of pitfalls in AI-based modeling, and ensure they don’t lose their expertise by using AI as a crutch or an oracle. Sloppy use of AI may help in the short run, but will hinder meaningful scientific achievement.

With all that said, we recognize that most individual researchers are rationally following their incentives (productivity metrics). Yelling at them is not going to help that much, because what we have are collective action problems. The actors with real power to effect change are journals, university hiring and promotion committees, funders, policymakers, etc. Let’s turn to those next.

Meta-science research has been extremely valuable in revealing the production-progress paradox. But so far, that finding doesn’t have a lot of analytical precision. There’s only the fuzzy idea that science is getting less bang for its buck. This finding is generally consistent with scientists’ vibes, and is backed by a bunch of different metrics that vaguely try to measure true progress. But we don’t have a clear understanding of what the construct (progress) even is, and we’re far from a consensus story about what’s driving the slowdown.

To be clear, we will never have One True Progress Metric. If we did, Goodhart’s (or Campbell’s) law would kick in: “When a measure becomes a target, it ceases to be a good measure.” Scientists would start to furiously optimize it, just as we have done with publication and citation counts, and the gaming would render it useless as a way to track progress.

That said, there’s clearly a long way for meta-science to go in improving both our quantitative and (more importantly) our qualitative/causal understanding of progress and the slowdown. Meta-science must also work to understand the efficacy of solutions.

Despite recent growth, meta-science funding is a fraction of a percent of science funding (and research on the slowdown is only a fraction of that pie). If it is indeed true that science funding as a whole is getting orders of magnitude less bang for the buck than in the past, meta-science investment seems woefully small.

Scientists constantly complain to each other about the publish-or-perish treadmill and are keenly aware that the production-focused reward structure isn’t great for incentivizing scientific progress. But efforts to change this have consistently failed. One reason is simple inertia. Then there’s the aforementioned Goodhart’s law — whatever new metric is instituted will quickly be gamed. A final difficulty is that true progress can only be identified retrospectively, on timescales that aren’t suitable for hiring and promotion decisions.

One silver lining is that as the cost of publishing papers further drops due to AI, it could force us to stop relying on production metrics. In the AI field itself, the effort required to write a paper is so low that we are heading towards a singularity, with some researchers being able to (co-)author close to 100 papers a year. (But, again, the perceived pace of actual progress seems mostly flat.) Other fields might start going the same route.

Rewarding the publication of individual findings may simply not be an option for much longer. Perhaps the kinds of papers that count toward career progress should be limited to things that are hard to automate, such as new theories or paradigms of scientific research. Any reforms to incentive structures should go hand-in-hand with shifts in funding.

One thing we don’t need is more incentives for AI adoption. As we explained above, it is already happening at breakneck speed, and is not the bottleneck.

When it comes to AI-for-science labs and tools that come from big AI companies, the elephant in the room is that their incentives are messed up. They want flashy “AI discovers X!” headlines so that they can sustain the narrative that AI will solve humanity’s problems, which buys them favorable policy treatment. We are not holding our breath for this to change.

We should be skeptical of AI-for-science news headlines. Many of them are greatly exaggerated. The results may fail to reproduce, or AI may be framed as the main character when it was in fact one tool among many.

If there are any AI-for-science tool developers out there who actually want to help, here’s our advice. Target the actual bottlenecks instead of building yet another literature review tool. How about tools for finding errors in scientific code or other forms of quality control? Listen to the users. For example, mathematicians have repeatedly said that tools for improving human understanding are much more exciting than trying to automate theorem-proving, which they view as missing the point.

The way we evaluate AI-for-science tools should also change. Consider a literature review tool. There are three kinds of questions one can ask: Does it save a researcher time and produce results of comparable quality to existing tools? How does the use of the tool impact the researcher’s understanding of the literature compared to traditional search? What will the collective impacts on the community be if the tool were widely adopted? For example, will everyone end up citing the same few papers?

Currently, only the first question is considered part of what evaluation means. The latter two are out of scope, and there aren’t even established methods or metrics for such measurement. That means that AI-for-science evaluation is guaranteed to provide a highly incomplete and biased picture of the usefulness of these tools and minimize their potential harms.

We ourselves are enthusiastic users of AI in our scientific workflows. On a day-to-day basis, it all feels very exciting. That makes it easy to forget that the impact of AI on science as an institution, rather than individual scientists, is a different question that demands a different kind of analysis. Writing this essay required fighting our own intuitions in many cases. If you are a scientist who is similarly excited about using these tools, we urge you to keep this difference in mind.

Our skepticism here is similar in some ways, and different in others, to our reasons for the slow timelines we laid out in AI as Normal Technology. Market mechanisms exert some degree of quality control, and many shoddy AI deployments have failed badly, forcing companies that care about their reputation to take it slow when deploying AI, especially for consequential tasks, regardless of how fast the pace of development is. In science, adoption and quality control processes are decoupled, with adoption moving much faster.

We are optimistic that scientific norms and processes will catch up in the long run. But for now, it’s going to be a bumpy ride.

We are grateful to Eamon Duede for feedback on a draft of this essay.

The American Science Acceleration Project (ASAP) is a national initiative with the stated goal of making American science “ten times faster by 2030”. The offices of Senators Heinrich and Rounds recently requested feedback on how to achieve this. In our response, we emphasized the production-progress paradox, discussed why AI could slow (rather than hasten) scientific progress, and recommended policy interventions that could help.

Our colleague Alondra Nelson also wrote a response to the ASAP initiative, emphasizing that faster science is not automatically better and highlighting many challenges that remain despite the increasing pace of production.

In a recent commentary in the journal Nature, we discussed why the proliferation of AI-driven modeling could be bad for science.

We have written about the use of AI for science in many previous essays in this newsletter:

Lisa Messeri and Molly Crockett offer a taxonomy of the uses of AI in science. They discuss many pitfalls of adopting AI in science, arguing we could end up producing more while understanding less.

Matt Clancy reviewed the evidence for slowdowns in science and innovation, and discussed interventions for incentivizing genuine progress.

The Institute for Progress released a podcast series on meta-science. Among other things, the series discusses concerns about slowdown and alternative models for funding and organizing science.


7-Figure Accelerator Reviews: Is It Worth Your Investment?

The 7-Figure Accelerator program, led by the renowned Philip Johansen, promises to transform your social media presence into a lucrative business venture through its comprehensive roadmap and personalized mentorship. As you delve into this review, discover whether this investment is the key to unlocking your potential in the digital marketing landscape.


Overview of 7-Figure Accelerator Program

Who is Philip Johansen?

Philip Johansen is a name that resonates with those who are eager to make a mark in the online business world. As the driving force behind the 7-Figure Accelerator program, Philip brings a wealth of experience and a proven track record in social media growth and monetization. His approach is hands-on, combining transparency with effective strategies that have helped countless individuals transform their social media presence into thriving businesses. Johansen's passion for personal mentorship and his commitment to participant success have been widely praised, making him a revered figure in the affiliate marketing community.


What is the 7-Figure Accelerator?

The 7-Figure Accelerator is more than just a coaching program; it's a comprehensive roadmap designed to elevate your social media game. With a focus on mastering the intricacies of social media algorithms, content creation, and engagement tactics, this program offers a suite of robust resources to guide you every step of the way. Participants benefit from live sessions, one-on-one consultations, and detailed strategy guides tailored to various platforms. The program's structure is crafted to accommodate both beginners and seasoned marketers, ensuring everyone can leverage their online presence into tangible business success. With the support of Philip Johansen and his expert team, participants are equipped to unlock the full potential of their digital influence.

Key Features of the Program

Content Modules

The 7 Figure Accelerator program is meticulously designed with a series of content modules that guide participants through the intricacies of monetizing their social media platforms. These modules are crafted to cater to all levels, from beginners to advanced users, ensuring a comprehensive learning journey. Participants have praised the program for its clear, easy-to-follow structure, which includes interactive workshops and strategic calls that offer practical insights and actionable strategies. Each module is packed with valuable content that delves into understanding social media algorithms, effective content creation, and engagement strategies. By breaking down complex topics into digestible segments, these modules empower users to apply their newfound knowledge directly to their social media efforts, paving the way for tangible business outcomes.

Mentorship Access

Participants of the 7 Figure Accelerator program benefit from unparalleled mentorship access, a feature that sets this program apart from others. Under the direct guidance of Philip Johansen and his team of seasoned social media experts, mentees receive personalized attention and hands-on learning experiences. This mentorship is characterized by transparency and an effective style of teaching that is often highlighted in participant testimonials. The one-on-one consultations and live sessions provide tailored advice, allowing users to implement personalized strategies that align with their specific business goals. This close mentorship not only accelerates the learning curve but also instills confidence in participants as they navigate the digital landscape, turning theoretical knowledge into practical success.

Community Support

The program's community support is a cornerstone of the 7 Figure Accelerator experience, fostering a collaborative and encouraging environment that enhances learning and growth. Participants frequently commend the supportive network of fellow entrepreneurs and marketing novices who share insights, experiences, and motivational support. This vibrant community acts as a sounding board for ideas, offering feedback and encouragement that helps sustain momentum throughout the program. By being part of a like-minded group, participants can exchange strategies, celebrate successes, and tackle challenges together, creating a sense of camaraderie and shared purpose. This collective support system is crucial for maintaining motivation and ensuring that participants stay on track to achieve their ambitious business objectives.

User Experience and Feedback

Success Stories

Participants of the 7 Figure Accelerator program frequently highlight their successful transformations and the program's effectiveness in their testimonials. Many share inspiring stories of how the program's structured modules and interactive workshops have reshaped their approach to online business. Beginners in affiliate marketing find a supportive community that fosters growth, while the easy-to-follow modules and strategic calls help them navigate the intricate world of digital monetization. The personalized mentorship provided by Phil Johansen and his team is often credited with creating a hands-on learning environment that drives rapid progress. One participant noted that implementing the lessons from the program enabled them to swiftly develop and execute a successful business action plan, exemplifying the program's potential to catalyze significant personal and professional growth. Such success stories underscore the transformative impact of the 7 Figure Accelerator, attracting individuals eager to enhance their online presence.

Areas for Improvement

While the 7 Figure Accelerator program receives a plethora of positive feedback, there are a few areas where participants suggest enhancements. Some users have pointed out that although the program is comprehensive, certain topics could benefit from more in-depth exploration, particularly as digital landscapes are constantly evolving. Additionally, a few participants have expressed a desire for more diverse case studies that cater to a broader spectrum of niches beyond the most common industries. Occasionally, there have been mentions of technical issues during live sessions, which can disrupt the flow of learning. While these occurrences are not frequent, addressing them could further enhance the user experience. By continuing to refine these areas, the program could provide even more tailored support, ensuring participants leave with a complete toolkit to successfully monetize their social media platforms.

Comparative Analysis with Similar Programs

Features Comparison

When evaluating the 7 Figure Accelerator against similar programs in the market, several distinctive features stand out. Unlike many programs that offer generic content, the 7 Figure Accelerator provides a highly structured approach with easy-to-follow modules, interactive workshops, and strategic calls tailored for all levels of affiliate marketers. Participants praise the personalized mentorship from Phil Johansen and his team, which is a rarity in many other programs. This hands-on learning environment is further enhanced by live sessions and one-on-one consultations, offering real-time solutions and support. Additionally, the focus on understanding social media algorithms, content creation, and engagement strategies sets it apart by providing practical, actionable insights. Overall, these comprehensive features cater to both beginners and seasoned marketers, facilitating rapid progress and real business action plans.

Value Proposition

The value proposition of the 7 Figure Accelerator lies in its commitment to turning participants' online presence into successful business ventures. The program's intensive coaching and strategic guidance offer in-depth insight into monetizing social media platforms. Unlike many competitors, it emphasizes personalized mentorship, so each participant receives direct support. With experienced mentors like Philip Johansen leading it, the program promises not just knowledge but the ability to apply it across various niches. Resources such as platform-specific strategy guides and live sessions add to that value by giving participants a range of tools to succeed. This focus on tangible business outcomes, combined with participant testimonials highlighting the program's effectiveness, underpins its value proposition in the crowded field of online marketing training.

Advantages of Joining the Program

Potential Earnings

Participating in the 7 Figure Accelerator program could be the catalyst for significant earnings, particularly for those keen on capitalizing on social media platforms. The program is designed to guide you through the nuances of digital monetization, with instruction on content creation and engagement strategies that convert followers into customers. Through personalized mentorship from Philip Johansen and his team, participants gain the knowledge and tools needed to navigate the digital landscape effectively. Testimonials from past participants frequently say the structured modules and strategic workshops both clarified their path to financial growth and accelerated their progress toward seven-figure incomes. The program offers an appealing opportunity to turn an online presence into a lucrative venture.

Skill Development

Beyond the potential for substantial earnings, the 7 Figure Accelerator program excels in fostering skill development. It's not just about immediate financial gains; it's about building a foundation of knowledge that empowers long-term success. Participants are introduced to a wealth of resources, including live sessions, one-on-one consultations, and platform-specific strategy guides that arm them with the skills needed to thrive in the digital world. The program's focus on understanding social media algorithms and mastering engagement techniques ensures that even beginners can quickly elevate their proficiency. Personalized mentorship under Philip Johansen offers hands-on learning experiences that transform theoretical knowledge into practical skills. This comprehensive approach not only helps participants grow their online influence but also cultivates a deep understanding of the digital marketing landscape, setting them up for sustained success beyond the program.

Challenges and Limitations

Program Costs

When considering the 7 Figure Accelerator program, one of the primary concerns is cost. The financial commitment can be a significant hurdle for people just starting in affiliate marketing or trying to build their digital presence. Although the program promises comprehensive modules, interactive workshops, and personalized mentorship from Philip Johansen and his team, the price may deter some potential participants. However, those who have taken part often say it offers good value, citing the returns from their improved social media strategies and monetization techniques. Learning social media algorithms and engagement strategies requires a substantial investment, but for many participants the potential to turn these lessons into a profitable online business justifies the expense.

Time Commitment

Another challenge that prospective participants face with the 7 Figure Accelerator program is the time commitment required. As with any intensive coaching experience, the program demands dedicated time and effort to reap the full benefits. The structured curriculum, which includes live sessions and strategic calls, requires participants to allocate specific hours each week. For individuals balancing other commitments, such as a full-time job or family responsibilities, finding this time can be a considerable challenge. However, the testimonials frequently point out that the hands-on learning environment and the rapid progress participants experience make the time investment worthwhile. The program's design ensures that even those with busy schedules can implement effective strategies to grow and monetize their social media presence, albeit with some initial time management adjustments.

Who Should Enroll in the 7-Figure Accelerator?

Entrepreneurs and Business Owners

For entrepreneurs and business owners eager to maximize their online potential, the 7-Figure Accelerator offers a treasure trove of strategic insights and guidance. The program is designed to enable participants to harness the full power of social media as a vehicle for business growth. With testimonials from past users highlighting the ease of the program's structure and the hands-on mentorship of Philip Johansen, enrolling can be a game-changer for those looking to quickly implement effective business strategies. The program not only focuses on teaching the intricacies of social media algorithms and content creation but also equips business owners with the tools to transform their social media channels into lucrative revenue streams. This is particularly beneficial for those who find themselves navigating the complexities of digital marketing without a clear roadmap. By providing structured support and direct mentorship, the 7-Figure Accelerator empowers business owners to craft custom action plans that lead to tangible results. Whether you're launching a startup or scaling an existing business, this program could be the catalyst for reaching new heights in the digital marketplace.

Digital Marketing Enthusiasts

Digital marketing enthusiasts who live and breathe the dynamic world of social media will find the 7-Figure Accelerator an ideal fit. This program offers a comprehensive exploration into the digital marketing landscape, making it a perfect match for those passionate about leveraging online platforms for growth. It delves deep into content creation, engagement strategies, and monetization techniques, providing participants with a robust toolkit to boost their digital presence. Enthusiasts will appreciate the program's interactive workshops and live sessions that foster a collaborative learning atmosphere. What sets the 7-Figure Accelerator apart is its commitment to personalized mentorship, enabling participants to apply what they've learned in a practical, hands-on manner. The curriculum is crafted with the intent to not only educate but also inspire digital marketers to push creative boundaries and innovate within their niches. For those driven by the desire to excel in digital marketing, this program offers a pathway to mastering the art of turning followers into customers, ultimately leading to substantial business outcomes.

Conclusion

In conclusion, the 7-Figure Accelerator program emerges as a compelling choice for those looking to elevate their social media presence into a robust business venture. Its core advantages include a meticulously crafted curriculum that caters to all levels of digital marketers, from beginners to seasoned professionals. The program offers an exceptional level of personalized mentorship from Philip Johansen and his team, providing participants with strategic insights and tailored guidance.

Key features such as interactive workshops, live sessions, and platform-specific strategy guides equip participants with the necessary tools to master social media algorithms, content creation, and engagement tactics. The vibrant community support further enhances the learning experience by fostering collaboration and shared growth.

While the financial and time commitments may pose challenges, the potential for significant earnings and the comprehensive skill development offered make the investment worthwhile. The program is particularly beneficial for entrepreneurs, business owners, and digital marketing enthusiasts keen on transforming their online presence into profitable ventures.

Considering these factors, the 7-Figure Accelerator program is highly recommended for individuals seeking to unlock new business opportunities in the digital marketplace. Its structured approach and focus on tangible results provide a strong foundation for sustained success.

FAQ

1. What is the 7-Figure Accelerator program and how does it work?

The 7-Figure Accelerator program, led by Philip Johansen, is a comprehensive roadmap designed to transform your social media presence into a profitable business. It offers personalized mentorship, live sessions, and strategic guidance to help participants master social media algorithms, content creation, and engagement tactics. The program is suitable for both beginners and seasoned marketers seeking to elevate their online influence and achieve tangible business success.

2. What should I expect from the reviews of the 7-Figure Accelerator?

Reviews of the 7-Figure Accelerator often highlight its structured approach, personalized mentorship, and effective strategies. Participants praise the program for providing clear, actionable insights that lead to significant business outcomes. However, some would like more diverse case studies, and a few mention occasional technical issues during live sessions. Overall, feedback on the program is positive, with many reviewers describing its impact as transformative.

3. Who can benefit from joining the 7-Figure Accelerator?

Entrepreneurs, business owners, and digital marketing enthusiasts can greatly benefit from the 7-Figure Accelerator. The program is tailored to those looking to leverage social media for business growth and offers strategic insights to convert online presence into profitable ventures. It's ideal for anyone eager to understand social media algorithms and monetize their digital influence effectively.

4. Are there any success stories from those who participated in the 7-Figure Accelerator?

Yes, many participants share inspiring success stories, crediting the program's structured modules and interactive workshops for reshaping their approach to online business. Testimonials frequently highlight rapid progress and successful business action plans, thanks to the personalized mentorship and practical strategies provided by Philip Johansen and his team.

5. How can I determine if the 7-Figure Accelerator is the right choice for my business growth?

Consider your goals and your readiness to commit to personalized mentorship and intensive learning. If you aim to improve your social media strategies and monetize your digital presence, this program offers valuable resources and guidance. Weigh the potential earnings and skill development against the program's cost and time commitment to decide whether it aligns with your business growth objectives.

By: Wilfred Reilly

Published: Nov 10, 2023

When your enemies tell you their goals, believe them.

Over the past three weeks, a lot of crazy sh** has been said about the Jews. Following the October 7 onset of hostilities between the nation of Israel and the terrorist group Hamas (sometimes billed as the “nation of Palestine”), a group of tens of thousands of recent migrants to Australia, university students, and others gathered in scenic downtown Sydney and quite literally chanted “Gas the Jews!” At 30–40 other large rallies, including this one in my hometown of Chicago, the cris de coeur were the just slightly less radical “From the river to the sea!” — a call for the elimination of the Jewish state of Israel — and “What is the solution? Intifada! Revolution!”

As has been documented to death by now, some 34 prominent student organizations (bizarrely including Amnesty International) at America’s third-best university, Harvard, signed on to a petition that assigned the “apartheid” state of Israel 100 percent of the blame for the current war — and indeed for the Hamas atrocities that began it. At another college, New York City’s Cooper Union, a group of Jewish students was apparently trapped inside a small campus library for hours by a braying pro-Palestinian mob. And so on.

In response to such open and gleeful hatred, more than a few conventional liberals — from comedienne Amy Schumer to the admittedly more heterodox Bill Maher — seem to have had their eyes fully opened as to who their keffiyeh-wearing “allies” truly are . . . at least when it comes specifically to Jewish people. But there is a deeper point, rarely made outside of the hard right, that lurks just beyond the mainstream’s discovery of rampant hard-left antisemitism: The same campus radicals and general hipster fauna quite regularly say worse things about a whole range of other groups than they do about Jews.