#AI & ML Data Mapping

https://vjitservices.com

Sports Livescore Scouting & Data Management Services

VJ IT Services excels in sports data analysis and research, offering precise and timely live scores, player and team statistics, and event creation for major sports worldwide. As a leading provider for sportsbooks and sports data companies, we deliver essential B2B services, including online live score scouting, data mapping, and 24/7 customer support. Our expertise and advanced tools ensure accurate and up-to-date information, helping sports enthusiasts and data providers stay informed about MLB, NBA, ITF, ATP, NFL, and more.

#Sports Data Research#AI & ML Data Mapping#Live Score Scouting#B2B Sports Services#Real-Time Score Updates

Okay, I have my own opinions about AI, especially AI art, but this is actually a very cool application!

So when you think about it, we can quantify vision/sight using the actual wavelengths of light, and we can quantify hearing using frequency, but there really isn't a way to quantify smell. So scientists at the University of Reading set out to create an AI to do just that.

The AI was trained on a dataset of 5,000 known odor-causing molecules. It was given their structures along with a list of scent descriptors (such as "floral sweet," "musty," or "buttery") and a rating from 1 to 5 for how well each descriptor fit. After training, the AI could be shown a new molecule and predict its scent using the same descriptors.
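To make that training setup concrete, here is a toy sketch of one simple way to map structure to descriptor ratings: k-nearest neighbours over molecular feature vectors. It is not the researchers' model (the post doesn't describe its architecture); the feature vectors, descriptor names, and ratings below are invented for illustration.

```python
from math import dist

# Hypothetical training data: each molecule is a made-up numeric feature
# vector (standing in for a structural fingerprint) paired with 1-5 ratings
# for a few scent descriptors.
TRAINING = [
    ((1.0, 0.0, 0.2), {"floral sweet": 5, "musty": 1, "buttery": 1}),
    ((0.9, 0.1, 0.3), {"floral sweet": 4, "musty": 1, "buttery": 2}),
    ((0.1, 1.0, 0.0), {"floral sweet": 1, "musty": 5, "buttery": 1}),
    ((0.0, 0.2, 1.0), {"floral sweet": 1, "musty": 2, "buttery": 5}),
]

def predict_scent(features, k=2):
    """Average the descriptor ratings of the k most similar known molecules."""
    neighbours = sorted(TRAINING, key=lambda item: dist(item[0], features))[:k]
    descriptors = neighbours[0][1].keys()
    return {d: sum(ratings[d] for _, ratings in neighbours) / k for d in descriptors}

# A new molecule whose features resemble the two "floral" examples
print(predict_scent((0.95, 0.05, 0.25)))
# → {'floral sweet': 4.5, 'musty': 1.0, 'buttery': 1.5}
```

A learned model replaces the hand-made feature vectors and the simple averaging with representations and weights fitted to thousands of rated molecules, which is what lets it handle the "exceptions" mentioned below.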

The AI's prediction abilities were compared against a panel of humans, who would smell the compound of interest and assign the descriptors. The AI's predictions were actually just as good as the human descriptions. Professor Jane Parker, who worked on the project, explained the following.

"We don't currently have a way to measure or accurately predict the odor of a molecule, based on its molecular structure. You can get so far with current knowledge of the molecular structure, but eventually you are faced with numerous exceptions where the odor and structure don't match. This is what has stumped previous models of olfaction. The fantastic thing about this new ML generated model is that it correctly predicts the odor of those exceptions"

Now, what can we do with this "AI nose," you might ask? For one, it may benefit the food and fragrance industries. A machine that can quickly screen compounds for specific odor qualities could help find new, sustainable sources of fragrance for foods or perfumes. The team also believes that the "scent map" the AI model builds could be linked to metabolism. In other words, odors that are close to each other on the map (that is, that smell similar) are also more likely to be metabolically related.
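The idea of a "scent map" where similar-smelling molecules sit close together can be illustrated with a similarity measure over descriptor ratings. This minimal sketch uses cosine similarity on hypothetical rating vectors; the real map is learned by the model, and these numbers are made up.

```python
from math import sqrt

def cosine_similarity(a, b):
    """Cosine similarity of two descriptor-rating vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = sqrt(sum(x * x for x in a))
    norm_b = sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical ratings on (floral sweet, musty, buttery)
rose_like = [5, 1, 1]
jasmine_like = [4, 1, 2]
earthy = [1, 5, 1]

# A higher similarity means the two molecules would sit closer on the map
print(cosine_similarity(rose_like, jasmine_like))
print(cosine_similarity(rose_like, earthy))
```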

#science#stem#science side of tumblr#stemblr#biology#biochemistry#chemistry#machine learning#technology#innovation

Digital Marketing Skills to Learn in 2025

Key Digital Marketing Skills to Learn in 2025 to Stay Ahead of the Competition

The digital marketing landscape in 2025 is changing rapidly, driven by technological advancements, shifting consumer behavior, and the growing power of artificial intelligence. Staying competitive and building a resilient career requires expertise in the following digital marketing skills.

Data Analysis and Interpretation

Data is the backbone of modern marketing strategies. The ability to collect and analyze large data sets, and to make informed decisions based on them, is what sets great marketers apart. Proficiency in analytics software like Google Analytics and in AI-driven tools is critical for measuring campaign performance, optimizing strategies, and making data-driven decisions. Predictive analytics and customer journey mapping are also becoming more important for spotting trends and personalizing the user experience.
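As a small illustration of measuring campaign performance, the sketch below computes click-through rate (clicks ÷ impressions) and conversion rate (conversions ÷ clicks) per channel. The channel names and numbers are hypothetical; in practice the raw counts would come from a tool such as Google Analytics.

```python
# Hypothetical campaign data: impressions, clicks, and conversions per channel
campaigns = {
    "search": {"impressions": 20000, "clicks": 800, "conversions": 40},
    "social": {"impressions": 50000, "clicks": 1000, "conversions": 25},
    "email": {"impressions": 5000, "clicks": 400, "conversions": 30},
}

def campaign_metrics(stats):
    """Return (click-through rate, conversion rate) as fractions."""
    ctr = stats["clicks"] / stats["impressions"]
    conversion_rate = stats["conversions"] / stats["clicks"] if stats["clicks"] else 0.0
    return ctr, conversion_rate

for channel, stats in campaigns.items():
    ctr, cvr = campaign_metrics(stats)
    print(f"{channel:>6}: CTR {ctr:.1%}, conversion rate {cvr:.1%}")
```

Comparing the two rates side by side shows, for example, that a channel with many impressions can still convert poorly once people click through.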

Search Engine Optimization (SEO) and Search Engine Marketing (SEM)

SEO is still a fundamental skill, but the landscape is evolving. Marketers now have to optimize for traditional search engines, voice search, and even social media, as Gen Z increasingly relies on TikTok and YouTube as search tools. Keeping up with algorithm updates and building strong keyword research and technical SEO skills are essential to staying visible and driving organic traffic.

Artificial Intelligence (AI) and Machine Learning (ML)

AI and ML are transforming digital marketing by enabling advanced targeting, automation, and personalization. Marketers will need to use AI to segment audiences, create content, apply predictive analytics, and build chatbots. Most crucially, they will need to understand how to balance AI-driven automation with authentic, human-created content.

Content Generation and Storytelling

Content is still king. Marketers must excel at creating strong copy, video, and interactive content suited to various platforms and audiences. Emotionally resonant storytelling and brand affinity are more critical than ever, particularly as human-created content consistently outperforms AI-generated content.

Social Media Strategy and Social Commerce

Social media is still the foremost driver of digital engagement. Mastering platform-specific techniques, such as short-form video, live streaming, and influencer partnerships, is critical. Marketers must also learn to drive direct sales through social commerce, which combines shopping with social interaction.

Marketing Automation

Efficiency is critical in 2025. Marketing automation platforms (e.g., Marketo and HubSpot) let marketers automate repetitive tasks, nurture leads, and personalize customer journeys at scale.

UX/UI Design Principles

A seamless user experience and appealing design can make or break an online campaign. Knowing UX/UI basics and collaborating with design teams helps ensure that marketing campaigns are both effective and engaging.

Ethical Marketing and Privacy Compliance

With data privacy becoming a pressing issue, marketers must stay up to date on laws like GDPR and CCPA. Ethical marketing and transparency build trust and help avoid legal issues.

To lead in 2025, digital marketers will have to combine technical skills, creativity, and flexibility. By acquiring these high-impact capabilities (data analysis, SEO, AI, content development, social strategy, automation, UX/UI, and ethical marketing), you'll stay at the forefront of the constantly evolving digital space.

What Is Artificial Intelligence? A Beginner's Guide to Understanding Artificial Intelligence

1) What is Artificial Intelligence (AI)?

Artificial Intelligence (AI) is a set of technologies that enables computers to perform tasks normally performed by humans. This includes learning (machine learning), reasoning, decision-making, and natural language processing, with applications ranging from virtual assistants like Siri and Alexa to the prediction algorithms behind Netflix and Google Maps.

The foundation of AI lies in its ability to simulate cognitive tasks. Unlike traditional programming, where machines follow explicit instructions, AI systems use algorithms trained on vast datasets to recognize patterns, identify trends, and improve automatically over time.
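To make the contrast with traditional programming concrete, here is a toy sketch: a hand-written rule versus a rule whose cutoff is learned from labeled examples. The "decision stump" below picks whichever cutoff misclassifies the fewest training examples. The feature (number of links in a message) and the data are invented for illustration; real AI systems learn far richer patterns.

```python
# Traditional programming: a human writes the rule explicitly.
def is_spam_rule(num_links):
    return num_links > 3

# Machine learning (in miniature): learn the cutoff from labeled examples.
def learn_threshold(examples):
    """examples: list of (num_links, is_spam). Returns the cutoff that
    misclassifies the fewest examples under the rule `num_links > cutoff`."""
    candidates = sorted({x for x, _ in examples})

    def errors(cutoff):
        return sum((x > cutoff) != label for x, label in examples)

    return min(candidates, key=errors)

# Hypothetical labeled data: (number of links, was it spam?)
training = [(0, False), (1, False), (1, False), (2, False),
            (5, True), (6, True), (8, True), (2, True)]

cutoff = learn_threshold(training)
print(cutoff)      # learned from the data rather than hard-coded
print(7 > cutoff)  # classify a new message containing 7 links
```

Give the learner different examples and it finds a different cutoff without anyone rewriting the code, which is the sense in which such systems "improve over time."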

2) The Many Faces of Artificial Intelligence (AI)

Artificial Intelligence (AI) isn't one thing; it is an umbrella term for many different technologies. Understanding its branches can help you appreciate its versatility:

Machine Learning (ML): At the core of AI, machine learning enables machines to learn from data and improve without explicit programming. Applications range from spam detection to personalized shopping recommendations.

Computer Vision: This field enables machines to interpret and analyze image data, from facial recognition to medical image diagnosis. Computer vision is revolutionizing many industries.

Robotics: By combining AI with engineering, robotics focuses on creating intelligent machines that can perform tasks autonomously or with minimal human intervention.

Creative AI: Tools like ChatGPT and DALL-E fall into this category. They create human-like text or images and open the door to creative and innovative possibilities.
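The Machine Learning entry above mentions spam detection. Here is a minimal sketch of one classic technique for it, a multinomial naive Bayes classifier with add-one (Laplace) smoothing, trained on a handful of invented messages. Production spam filters use far more data and features.

```python
from collections import Counter
from math import log

def train(messages):
    """messages: list of (text, label). Returns per-label word counts and label counts."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    label_counts = Counter()
    for text, label in messages:
        word_counts[label].update(text.lower().split())
        label_counts[label] += 1
    return word_counts, label_counts

def classify(text, word_counts, label_counts):
    """Pick the label with the highest log-probability under naive Bayes."""
    vocab = set(word_counts["spam"]) | set(word_counts["ham"])
    total = sum(label_counts.values())
    best_label, best_score = None, float("-inf")
    for label, counts in word_counts.items():
        score = log(label_counts[label] / total)  # prior probability of the label
        n_words = sum(counts.values())
        for word in text.lower().split():
            # Laplace smoothing so an unseen word doesn't zero out the probability
            score += log((counts[word] + 1) / (n_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label

# Hypothetical training messages
data = [
    ("win a free prize now", "spam"),
    ("claim your free money", "spam"),
    ("meeting moved to monday", "ham"),
    ("lunch on monday", "ham"),
]
model = train(data)
print(classify("free prize money", *model))
print(classify("see you monday", *model))
```

Nobody wrote a rule saying "free" is suspicious; the classifier infers it from how often the word appears in each class.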

3) Why is AI so popular now?

The Artificial Intelligence (AI) explosion can be attributed to a confluence of technological advances:

Big Data: The digital age creates unprecedented amounts of data. Artificial Intelligence (AI) uses this data to gain insights and improve decision-making.

Improved Algorithms: Innovations in algorithms make Artificial Intelligence (AI) models more efficient and accurate.

Computing Power: The rise of cloud computing and GPUs has provided the necessary infrastructure for processing complex AI models.

Access: The proliferation of publicly available datasets (e.g., ImageNet, Common Crawl) has provided the basis for training complex AI systems. Various industries also collect huge amounts of proprietary data, which makes it possible to deploy domain-specific AI applications.

4) Interesting Topics about Artificial Intelligence (AI)

Real-World Applications of AI: explore how AI is revolutionizing industries such as healthcare (diagnosis and personalized medicine), finance (fraud detection and robo-advisors), education (adaptive learning platforms), and entertainment (personalized recommendations).

The Role of AI in Creativity: explore how AI tools like DALL-E and ChatGPT are helping artists, writers, and designers create incredible work, and debate whether AI can truly be creative or only enhance human creativity.

AI Ethics and Bias: as AI plays a growing role in decision-making, it is important to address issues such as bias, transparency, and accountability. Dig deeper into the importance of ethical AI and its impact on society.

AI in Everyday Life: look at the lesser-known ways AI affects daily life, from improving energy efficiency in your smart home to powering the forecast on your smartphone.

The Future of AI: anticipate upcoming advances like quantum AI and their potential to help solve humanity's biggest challenges, such as climate change and pandemics.

5) Conclusion

Artificial Intelligence (AI) isn't just a technological milestone; it is a paradigm shift that continues to redefine our future. As you explore the vast world of AI, think outside the box to uncover its nuances, applications, and challenges through well-researched and engaging content.

Whether unpacking how AI works or discussing its transformative potential, this blog aims to be a beacon for those eager to understand this groundbreaking field.

"As we stand on the brink of an AI-powered future, the real question isn't what AI can do for us, but what we dare to imagine next"

"Get Latest News on www.bloggergaurang.com along with Breaking News and Top Headlines from all around the World !!"

#TheeForestKingdom #TreePeople

{Terrestrial Kind}

Creating a Tree Citizenship Identification and Serial Number System (#TheeForestKingdom) is an ambitious and environmentally-conscious initiative. Here’s a structured proposal for its development:

Project Overview

The Tree Citizenship Identification system aims to assign every tree in California a unique identifier, track its health, and integrate it into a registry, recognizing trees as part of a terrestrial citizenry. This system will emphasize environmental stewardship, ecological research, and forest management.

Phases of Implementation

Preparation Phase

Objective: Lay the groundwork for tree registration and tracking.

Actions:

Partner with environmental organizations, tech companies, and forestry departments.

Secure access to satellite imaging and LiDAR mapping systems.

Design a digital database capable of handling millions of records.

Tree Identification System Development

Components:

Label and Identity Creation: Assign a unique ID to each tree based on location and attributes. Example: CA-Tree-XXXXXX (state-code, tree-type, unique number).

Attributes to Record:

Health: Regular updates using AI for disease detection.

Age: Approximate based on species and growth patterns.

Type: Species and subspecies classification.

Class: Size, ecological importance, and biodiversity contribution.

Rank: Priority based on cultural, historical, or environmental significance.
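The ID format and attributes above can be sketched as a small record type. This is a hypothetical illustration: the species codes, the six-digit zero-padded serial, and the age formula are assumptions, not part of the proposal. The age function uses a common arborist rule of thumb (trunk diameter at breast height in inches multiplied by a species "growth factor"); the factors listed are placeholders, not vetted values.

```python
from dataclasses import dataclass

# Hypothetical species codes and growth factors (placeholders for illustration)
SPECIES = {
    "coast redwood": ("SEQU", 3.0),
    "valley oak": ("QULO", 4.0),
}

def make_tree_id(state_code, species, serial):
    """Build an ID like CA-SEQU-000042 (state code, tree-type code, unique number)."""
    type_code, _ = SPECIES[species]
    return f"{state_code}-{type_code}-{serial:06d}"

def estimate_age(species, dbh_inches):
    """Rough age estimate: diameter at breast height (inches) x growth factor."""
    _, growth_factor = SPECIES[species]
    return round(dbh_inches * growth_factor)

@dataclass
class TreeRecord:
    tree_id: str
    species: str
    health: str       # e.g. "Healthy", "Diseased"
    age_years: int
    tree_class: str   # size, ecological importance, biodiversity contribution
    rank: str         # cultural, historical, or environmental priority

record = TreeRecord(
    tree_id=make_tree_id("CA", "coast redwood", 42),
    species="coast redwood",
    health="Healthy",
    age_years=estimate_age("coast redwood", 120),
    tree_class="Old growth",
    rank="High",
)
print(record.tree_id, record.age_years)
```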

Data Collection

Technologies to Use:

Satellite Imaging: To locate and identify tree clusters.

LiDAR Scanning: For precise 3D modeling of forests.

On-the-Ground Surveys: To supplement remote sensing data.

AI Analysis: For rapid categorization and health diagnostics.

Registration and Citizenship Assignment

Tree Dossier: Each tree receives a profile in the database with all its recorded attributes.

Citizen Designation: Trees are acknowledged as citizens of Thee Forest Kingdom, emphasizing ecological respect.

Legal Framework: Advocate for laws to grant trees specific rights under environmental protection acts.

Maintenance and Monitoring

Health Monitoring: Use drones, satellite updates, and IoT sensors for ongoing health assessments.

Database Updates: Incorporate real-time data about environmental changes and tree growth.

Public Involvement

Encourage people to "adopt" trees and participate in their preservation.

Create educational programs to promote awareness of tree citizenship.

Key Benefits

Environmental Conservation: Improved forest management through real-time data and protection of ecosystems.

Cultural Recognition: Trees are recognized as a vital part of the Earth’s community, honoring their importance.

Ecological Advocacy: Strengthened legal protections for forests and individual trees.

Next Steps

Assemble a task force of environmental scientists, satellite imaging experts, and software developers.

Secure funding through governmental grants, non-profits, and eco-conscious corporations.

Pilot the program in a smaller region before scaling to all of California.

The implementation of the Tree Citizenship Identification Network and System (#TheeForestKingdom) requires a robust technological and operational framework to ensure efficiency, accuracy, and sustainability. Below is an implementation plan divided into major components.

System Architecture

a. Centralized Tree Database

Purpose: To store and manage tree data including ID, attributes, location, and health.

Components:

Cloud-based storage for scalability.

Data categorization based on regions, species, and priority.

Integration with satellite and IoT data streams.

b. Satellite & Imaging Integration

Use satellite systems (e.g., NASA, ESA) for large-scale tree mapping.

Incorporate LiDAR and aerial drone data for detailed imaging.

AI/ML algorithms to process images and distinguish tree types.

c. IoT Sensor Network

Deploy sensors in forests to monitor:

Soil moisture and nutrient levels.

Air quality and temperature.

Tree health metrics like growth rate and disease markers.

d. Public Access Portal

Create a user-friendly website and mobile application for:

Viewing registered trees.

Citizen participation in tree adoption and reporting.

Data visualization (e.g., tree density, health status by region).

Core Technologies

a. Software and Tools

Geographic Information System (GIS): Software like ArcGIS for mapping and spatial analysis.

Database Management System (DBMS): SQL-based systems for structured data; NoSQL for unstructured data.

Artificial Intelligence (AI): Tools for image recognition, species classification, and health prediction.

Blockchain (Optional): To ensure transparency and immutability of tree citizen data.

b. Hardware

Servers: Cloud-based (AWS, Azure, or Google Cloud) for scalability.

Sensors: Low-power IoT devices for on-ground monitoring.

Drones: Equipped with cameras and sensors for aerial surveys.
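The optional blockchain item is about tamper evidence. A minimal sketch of that property, without any distributed consensus, is a hash-chained append-only log: each entry stores the SHA-256 hash of the previous entry, so editing any past record breaks the chain from that point on. The record fields are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def entry_hash(record, prev_hash):
    """SHA-256 over the record (serialized deterministically) plus the previous hash."""
    payload = json.dumps(record, sort_keys=True) + prev_hash
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()

def append(chain, record):
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    chain.append({"record": record, "prev": prev_hash,
                  "hash": entry_hash(record, prev_hash)})

def verify(chain):
    """Return True if every entry's hash and back-link are intact."""
    prev_hash = GENESIS
    for entry in chain:
        if entry["prev"] != prev_hash or entry["hash"] != entry_hash(entry["record"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True

chain = []
append(chain, {"tree_id": "CA-SEQU-000042", "health": "Healthy"})
append(chain, {"tree_id": "CA-SEQU-000042", "health": "Diseased"})
print(verify(chain))                      # True
chain[0]["record"]["health"] = "Removed"  # tamper with history
print(verify(chain))                      # False
```

A full blockchain adds replication and consensus on top of this structure; for a single trusted registry, a hash chain alone may provide the auditability the proposal is after.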

Network Design

a. Data Flow

Input Sources:

Satellite and aerial imagery.

IoT sensors deployed in forests.

Citizen-reported data via mobile app.

Data Processing:

Use AI to analyze images and sensor inputs.

Automate ID assignment and attribute categorization.

Data Output:

Visualized maps and health reports on the public portal.

Alerts for areas with declining tree health.
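One simple way to generate those alerts is to compare each region's average health score across two survey periods and flag drops beyond a threshold. The 0 to 1 health score, the region names, and the 0.1 threshold are all assumptions for illustration.

```python
from collections import defaultdict
from statistics import mean

def regional_averages(readings):
    """readings: list of (region, health_score). Returns {region: mean score}."""
    by_region = defaultdict(list)
    for region, score in readings:
        by_region[region].append(score)
    return {region: mean(scores) for region, scores in by_region.items()}

def declining_regions(previous, current, threshold=0.1):
    """Regions whose average health fell by more than `threshold`."""
    before = regional_averages(previous)
    after = regional_averages(current)
    return sorted(r for r in after if r in before and before[r] - after[r] > threshold)

# Hypothetical health scores (1.0 = fully healthy) from two survey periods
last_month = [("Redwood NP", 0.9), ("Redwood NP", 0.8), ("Sierra", 0.7)]
this_month = [("Redwood NP", 0.85), ("Redwood NP", 0.8), ("Sierra", 0.5)]
print(declining_regions(last_month, this_month))  # ['Sierra']
```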

b. Communication Network

Fiber-optic backbone: For high-speed data transmission between regions.

Cellular Networks: To connect IoT sensors in remote areas.

Satellite Communication: For remote regions without cellular coverage.

Implementation Plan

a. Phase 1: Pilot Program

Choose a smaller, biodiverse region in California (e.g., Redwood National Park).

Test satellite and drone mapping combined with IoT sensors.

Develop the prototype of the centralized database and public portal.

b. Phase 2: Statewide Rollout

Expand mapping and registration to all California regions.

Deploy IoT sensors in vulnerable or high-priority areas.

Scale up database capacity and integrate additional satellite providers.

c. Phase 3: Maintenance & Updates

Establish a monitoring team to oversee system health and data quality.

Update the network with new technologies (e.g., advanced AI models).

Periodically review and revise data collection protocols.

Partnerships

Government Agencies: U.S. Forest Service, California Department of Forestry and Fire Protection.

Tech Companies: Collaborate with Google Earth, Amazon AWS, or Microsoft for cloud and AI capabilities.

Environmental Organizations: Partner with WWF, Sierra Club, or TreePeople for funding and advocacy.

Budget Estimation

Initial Investment:

Satellite data and licenses: $10M

IoT sensors and deployment: $5M

Database and app development: $3M

Operational Costs:

Cloud services and data storage: $2M/year

Maintenance and monitoring: $1M/year

Research and development: $1M/year
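Adding up the estimate: the initial investment is $10M + $5M + $3M = $18M, and operating costs are $2M + $1M + $1M = $4M per year. A quick sketch of cumulative cost over a planning horizon (the five-year horizon is an assumption, and no inflation or discounting is applied):

```python
INITIAL = {"satellite data and licenses": 10, "IoT sensors and deployment": 5,
           "database and app development": 3}   # $M, one-time
ANNUAL = {"cloud services and storage": 2, "maintenance and monitoring": 1,
          "research and development": 1}        # $M per year

def total_cost(years):
    """One-time costs plus `years` of operating costs, in $M (no inflation)."""
    return sum(INITIAL.values()) + years * sum(ANNUAL.values())

for years in range(1, 6):
    print(f"Year {years}: ${total_cost(years)}M cumulative")
```

By year five, operating costs ($20M) exceed the initial investment ($18M), for a cumulative $38M.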

Long-term Vision

Expand the system to other states or globally.

Use the database for reforestation efforts and combating deforestation.

Develop educational programs to teach communities about the importance of trees as citizens.

To begin building the program for the Tree Citizenship Identification System, we’ll start with a scalable framework that allows us to process, store, and analyze data while preparing for future expansions. Here's an actionable development plan with an initial focus on foundational code and infrastructure:

Step-by-Step Development

Phase 1: Setting Up the Backend Infrastructure

Objective: Create the core database and develop the data pipeline for processing tree data.

Technologies:

Programming Languages: Python, JavaScript (Node.js), SQL.

Frameworks: Flask or FastAPI for APIs, Django for web application backend.

Database: PostgreSQL for structured data, MongoDB for unstructured data.

Code Snippet 1: Database Schema Design (PostgreSQL)

-- Enable PostGIS, which provides the GEOGRAPHY type
CREATE EXTENSION IF NOT EXISTS postgis;

-- Table for Tree Registry
CREATE TABLE trees (
    tree_id SERIAL PRIMARY KEY,          -- Unique identifier
    location GEOGRAPHY(POINT, 4326),     -- Geolocation of the tree (WGS 84)
    species VARCHAR(100),                -- Species name
    age INTEGER,                         -- Approximate age in years
    health_status VARCHAR(50),           -- e.g., Healthy, Diseased
    height FLOAT,                        -- Tree height in meters
    canopy_width FLOAT,                  -- Canopy width in meters
    citizen_rank VARCHAR(50),            -- Class or rank of the tree
    last_updated TIMESTAMP DEFAULT NOW() -- Timestamp for last update
);

-- Table for Sensor Data (IoT Integration)
CREATE TABLE tree_sensors (
    sensor_id SERIAL PRIMARY KEY,          -- Unique identifier for sensor
    tree_id INT REFERENCES trees(tree_id), -- Linked to tree
    soil_moisture FLOAT,                   -- Soil moisture level
    air_quality FLOAT,                     -- Air quality index
    temperature FLOAT,                     -- Surrounding temperature
    last_updated TIMESTAMP DEFAULT NOW()   -- Timestamp for last reading
);

Code Snippet 2: Backend API for Tree Registration (Python with Flask)

from flask import Flask, request, jsonify
from sqlalchemy import create_engine, text

app = Flask(__name__)

# Database configuration (replace the credentials with your own)
DATABASE_URL = "postgresql://username:password@localhost/tree_registry"
engine = create_engine(DATABASE_URL)

# Build the point with PostGIS and cast it to GEOGRAPHY to match the schema
INSERT_TREE = text("""
    INSERT INTO trees (species, location, age, health_status, height, canopy_width, citizen_rank)
    VALUES (:species, ST_SetSRID(ST_MakePoint(:longitude, :latitude), 4326)::geography,
            :age, :health_status, :height, :canopy_width, :citizen_rank)
    RETURNING tree_id
""")

@app.route('/register_tree', methods=['POST'])
def register_tree():
    data = request.get_json()
    params = {key: data[key] for key in (
        "species", "longitude", "latitude", "age",
        "health_status", "height", "canopy_width", "citizen_rank")}
    # engine.begin() opens a transaction and commits it when the block succeeds
    with engine.begin() as conn:
        tree_id = conn.execute(INSERT_TREE, params).scalar_one()
    return jsonify({"message": "Tree registered successfully!", "tree_id": tree_id}), 201

if __name__ == '__main__':
    app.run(debug=True)

Phase 2: Satellite Data Integration

Objective: Use satellite and LiDAR data to identify and register trees automatically.

Tools:

Google Earth Engine for large-scale mapping.

Sentinel-2 or Landsat satellite data for medium-resolution multispectral imagery (10 to 30 m per pixel).

Example Workflow:

Process satellite data using Google Earth Engine.

Identify tree clusters using image segmentation.

Generate geolocations and pass data into the backend.
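The three workflow steps can be illustrated in miniature without Earth Engine. This sketch computes NDVI, (NIR - Red) / (NIR + Red), per pixel, treats pixels above a threshold as vegetation (a simple stand-in for real image segmentation), groups adjacent vegetation pixels into clusters, and converts each cluster's centroid to longitude/latitude using an assumed north-up pixel grid. The 0.6 threshold, the tiny band arrays, and the grid origin and pixel size are hypothetical. At Sentinel-2 or Landsat resolution, a cluster is a stand of trees rather than an individual tree.

```python
from collections import deque

def ndvi(nir, red):
    """Normalized Difference Vegetation Index for one pixel."""
    return (nir - red) / (nir + red) if (nir + red) else 0.0

def find_clusters(nir_band, red_band, threshold=0.6):
    """Group 4-connected pixels whose NDVI exceeds `threshold`.
    Returns a list of clusters, each a list of (row, col) pixels."""
    rows, cols = len(nir_band), len(nir_band[0])
    veg = [[ndvi(nir_band[r][c], red_band[r][c]) > threshold for c in range(cols)]
           for r in range(rows)]
    seen, clusters = set(), []
    for r in range(rows):
        for c in range(cols):
            if veg[r][c] and (r, c) not in seen:
                queue, pixels = deque([(r, c)]), []
                seen.add((r, c))
                while queue:  # breadth-first flood fill
                    pr, pc = queue.popleft()
                    pixels.append((pr, pc))
                    for nr, nc in ((pr + 1, pc), (pr - 1, pc), (pr, pc + 1), (pr, pc - 1)):
                        if 0 <= nr < rows and 0 <= nc < cols and veg[nr][nc] and (nr, nc) not in seen:
                            seen.add((nr, nc))
                            queue.append((nr, nc))
                clusters.append(pixels)
    return clusters

def pixel_to_lonlat(row, col, origin_lon, origin_lat, pixel_deg):
    """Assumed north-up grid: origin is the top-left corner, square pixels in degrees."""
    return origin_lon + (col + 0.5) * pixel_deg, origin_lat - (row + 0.5) * pixel_deg

# Hypothetical 3x4 reflectance bands
nir = [[0.8, 0.8, 0.2, 0.2],
       [0.8, 0.2, 0.2, 0.7],
       [0.2, 0.2, 0.2, 0.7]]
red = [[0.1, 0.1, 0.2, 0.2],
       [0.1, 0.2, 0.2, 0.1],
       [0.2, 0.2, 0.2, 0.1]]

for pixels in find_clusters(nir, red):
    row = sum(p[0] for p in pixels) / len(pixels)  # cluster centroid (row)
    col = sum(p[1] for p in pixels) / len(pixels)  # cluster centroid (column)
    print(len(pixels), pixel_to_lonlat(row, col, -124.0, 41.3, 0.0001))
```

Each printed centroid is the kind of geolocation that could then be posted to the /register_tree endpoint.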

Phase 3: IoT Sensor Integration

Deploy IoT devices to monitor health metrics of specific high-priority trees.

Use MQTT protocol for real-time data transmission.

Code Snippet: Sensor Data Processing (Node.js)

const mqtt = require('mqtt');
const { Pool } = require('pg');

// node-postgres reads connection settings from the standard PG* environment variables
const dbClient = new Pool();
// Public test broker; use your own broker in production
const client = mqtt.connect('mqtt://broker.hivemq.com');

client.on('connect', () => {
  console.log('Connected to MQTT Broker');
  client.subscribe('tree/sensor_data');
});

client.on('message', (topic, message) => {
  let sensorData;
  try {
    sensorData = JSON.parse(message.toString());
  } catch (err) {
    console.error('Ignoring malformed message', err);
    return;
  }
  console.log(`Received data: ${JSON.stringify(sensorData)}`);
  // Save data to database (example for PostgreSQL)
  saveToDatabase(sensorData);
});

function saveToDatabase(data) {
  const query = `INSERT INTO tree_sensors (tree_id, soil_moisture, air_quality, temperature)
                 VALUES ($1, $2, $3, $4)`;
  const values = [data.tree_id, data.soil_moisture, data.air_quality, data.temperature];
  dbClient.query(query, values, (err) => {
    if (err) console.error('Error saving to database', err);
    else console.log('Sensor data saved successfully!');
  });
}

Phase 4: Public Portal Development

Frontend Technologies:

React.js or Vue.js for interactive user interfaces.

Mapbox or Leaflet.js for tree visualization on maps.

Features:

Interactive tree maps with search functionality.

User registration for tree adoption programs.

Deployment Plan

Use Docker to containerize the application.

Deploy on AWS, Azure, or Google Cloud for scalability.

Monitor system health using Prometheus and Grafana.

Next Steps

Develop a testing environment for pilot deployment.

Add AI-based disease detection and prediction using TensorFlow or PyTorch.

Create an adoption feature where citizens can adopt trees and track their health.

I'll prepare the project framework and outline the repository structure for the Tree Citizenship Identification System. Below is the proposed structure for the repository, followed by details of the files and components.

Proposed Repository Structure

tree-citizenship-id-system/
├── backend/
│   ├── app.py                       # Flask application for backend API
│   ├── database/
│   │   ├── schema.sql               # Database schema for PostgreSQL
│   │   ├── db_config.py             # Database connection configuration
│   │   └── seed_data.sql            # Initial sample data for testing
│   ├── services/
│   │   ├── tree_registration.py     # Functions for tree registration
│   │   └── sensor_data.py           # Functions for processing IoT sensor data
│   └── requirements.txt             # Python dependencies
├── frontend/
│   ├── public/
│   │   ├── index.html               # Main HTML file
│   │   └── favicon.ico              # Favicon for the app
│   ├── src/
│   │   ├── App.js                   # Main React/Vue app file
│   │   ├── components/
│   │   │   ├── TreeMap.js           # Map component for visualizing trees
│   │   │   └── TreeDetails.js       # Component for detailed tree view
│   │   └── styles.css               # Styling for the frontend
│   └── package.json                 # Frontend dependencies
├── scripts/
│   ├── satellite_data_processor.py  # Script for processing satellite data
│   └── sensor_data_simulator.py     # Mock data generator for IoT sensors
├── deployment/
│   ├── Dockerfile                   # Docker configuration
│   ├── docker-compose.yml           # Docker Compose for multi-service setup
│   └── cloud_setup_instructions.md  # Deployment guide for cloud platforms
├── tests/
│   ├── test_backend.py              # Unit tests for backend API
│   └── test_frontend.js             # Unit tests for frontend
├── README.md                        # Overview of the project
└── LICENSE                          # Project license

Repository Details

Backend:

app.py: Main Flask app for handling API requests like tree registration and sensor data.

database/schema.sql: SQL script for creating necessary tables.

services/: Directory for modular backend logic.

Frontend:

App.js: Main entry point for the frontend application.

TreeMap.js: Integrates Mapbox/Leaflet.js for displaying tree locations.

TreeDetails.js: Displays detailed information about a selected tree.

Scripts:

satellite_data_processor.py: Automates the extraction and classification of tree data from satellite imagery.

sensor_data_simulator.py: Generates fake sensor readings for development and testing.

Deployment:

Docker configuration ensures that the app runs consistently across environments.

Cloud setup instructions provide guidelines for deploying on platforms like AWS, Azure, or GCP.

Tests:

Unit tests ensure the reliability of both the backend and frontend.
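The sensor_data_simulator.py script is only described, not shown. A minimal sketch of what it might contain: it generates fake readings with the same fields as the tree_sensors table and serializes them as JSON, the payload shape the Node.js MQTT handler parses. The value ranges are invented, and publishing to a broker is left out to keep the sketch dependency-free.

```python
import json
import random

def fake_reading(tree_id, rng=random):
    """One simulated reading with the same fields as the tree_sensors table.
    Ranges are hypothetical placeholders."""
    return {
        "tree_id": tree_id,
        "soil_moisture": round(rng.uniform(0.05, 0.45), 3),  # volumetric fraction
        "air_quality": rng.randint(0, 150),                  # AQI-style index
        "temperature": round(rng.uniform(5.0, 35.0), 1),     # degrees Celsius
    }

def simulate(tree_ids, readings_per_tree=3, seed=None):
    """Yield JSON payloads, e.g. to publish on the 'tree/sensor_data' topic."""
    rng = random.Random(seed)  # a seed makes test runs reproducible
    for _ in range(readings_per_tree):
        for tree_id in tree_ids:
            yield json.dumps(fake_reading(tree_id, rng))

if __name__ == "__main__":
    for payload in simulate([1, 2], readings_per_tree=2, seed=42):
        print(payload)
```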

Next Steps

I will initialize the repository structure locally.

Package the files and components needed for the first version.

Provide a link to the repository for access.

A3 Ultra VMs With NVIDIA H200 GPUs Pre-launch This Month

Powerful infrastructure advancements for an AI-first future

To improve performance, usability, and cost-effectiveness for customers, Google Cloud has made improvements across the AI Hypercomputer stack this year. At the App Dev & Infrastructure Summit, Google Cloud announced:

Trillium, Google’s sixth-generation TPU, is currently available for preview.

Next month, A3 Ultra VMs with NVIDIA H200 Tensor Core GPUs will be available for preview.

Hypercompute Cluster, Google’s new highly scalable clustering system, will be available starting with A3 Ultra VMs.

C4A virtual machines (VMs), based on Axion, Google’s custom Arm processors, are now generally available.

AI workload-focused additions to Titanium, Google Cloud’s host offload capability, and Jupiter, its data center network.

Hyperdisk ML, Google Cloud’s AI/ML-focused block storage service, is generally available.

Trillium: A new era of TPU performance

TPUs power Google’s most sophisticated models like Gemini, popular Google services like Maps, Photos, and Search, and scientific breakthroughs like AlphaFold 2, which was just awarded a Nobel Prize. With Trillium, its sixth-generation TPU, Google is ushering in a new era of TPU performance, and Google Cloud users can now preview it.

Taking advantage of NVIDIA Accelerated Computing to broaden perspectives

Google Cloud also continues to invest in its partnership and capabilities with NVIDIA, combining the best of its data center, infrastructure, and software expertise with the NVIDIA AI platform, as exemplified by A3 and A3 Mega VMs powered by NVIDIA H100 Tensor Core GPUs.

Google Cloud announced that the new A3 Ultra VMs featuring NVIDIA H200 Tensor Core GPUs will be available on Google Cloud starting next month.

Compared with earlier versions, A3 Ultra VMs offer a notable performance improvement. They are built on NVIDIA ConnectX-7 network interface cards (NICs) and servers equipped with the new Titanium ML network adapter, which is designed to provide a secure, high-performance cloud experience for AI workloads. When paired with Google Cloud’s datacenter-wide 4-way rail-aligned network, A3 Ultra VMs provide non-blocking 3.2 Tbps of GPU-to-GPU traffic using RDMA over Converged Ethernet (RoCE).

Compared with A3 Mega, A3 Ultra provides:

Double the GPU-to-GPU networking bandwidth, enabled by Google’s Jupiter data center network and Google Cloud’s Titanium ML network adapter

Up to 2x higher LLM inference performance, thanks to almost twice the memory capacity and 1.4 times the memory bandwidth

The ability to scale to tens of thousands of GPUs in a dense cluster, with performance optimization for demanding HPC and AI workloads
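The memory claims can be sanity-checked from NVIDIA's published per-GPU specifications, assuming A3 Mega's H100 has 80 GB of HBM3 at 3.35 TB/s and A3 Ultra's H200 has 141 GB of HBM3e at 4.8 TB/s:

```python
# Assumed per-GPU specs: H100 SXM (A3 Mega) vs. H200 (A3 Ultra)
h100 = {"memory_gb": 80, "bandwidth_tb_s": 3.35}
h200 = {"memory_gb": 141, "bandwidth_tb_s": 4.8}

capacity_ratio = h200["memory_gb"] / h100["memory_gb"]
bandwidth_ratio = h200["bandwidth_tb_s"] / h100["bandwidth_tb_s"]
print(f"Memory capacity: {capacity_ratio:.1f}x")    # "almost twice"
print(f"Memory bandwidth: {bandwidth_ratio:.1f}x")  # "1.4 times"
```

Extra capacity lets larger models or batches fit on each GPU, and LLM decoding is often limited by memory bandwidth, which is consistent with the "up to 2x" inference figure.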

Google Kubernetes Engine (GKE), which offers an open, portable, extensible, and highly scalable platform for large-scale training and AI workloads, will also offer A3 Ultra VMs.

Hypercompute Cluster: Simplify and expand clusters of AI accelerators

It’s not just about individual accelerators or virtual machines, though; when dealing with AI and HPC workloads, you have to deploy, maintain, and optimize a huge number of AI accelerators along with the networking and storage that go along with them. This may be difficult and time-consuming. For this reason, Google Cloud is introducing Hypercompute Cluster, which simplifies the provisioning of workloads and infrastructure as well as the continuous operations of AI supercomputers with tens of thousands of accelerators.

Fundamentally, Hypercompute Cluster integrates Google Cloud’s most advanced AI infrastructure technologies, enabling you to deploy and operate large numbers of accelerators as a single, seamless unit. You can run your most demanding AI and HPC workloads with confidence thanks to Hypercompute Cluster’s performance and resilience features, which include targeted workload placement, dense resource co-location with ultra-low-latency networking, and sophisticated maintenance controls to reduce workload disruptions.

For dependable and repeatable deployments, you can use pre-configured and validated templates to build a Hypercompute Cluster with a single API call. These include containerized software with orchestration (e.g., GKE, Slurm), frameworks and reference implementations (e.g., JAX, PyTorch, MaxText), and popular open models such as Gemma 2 and Llama 3. Each pre-configured template is available as part of the AI Hypercomputer architecture and has been validated for efficiency and performance, so you can focus on business innovation.

Hypercompute Cluster will first be available with A3 Ultra VMs next month.

An early look at the NVIDIA GB200 NVL72

Google Cloud is also looking forward to the advances made possible by NVIDIA GB200 NVL72 GPUs and will share more about this development soon. In the meantime, Google is building racks to bring the performance advantages of the NVIDIA Blackwell platform to Google Cloud’s cutting-edge, environmentally friendly data centers early next year.

Redefining CPU efficiency and performance with Google Axion Processors

Even though TPUs and GPUs excel at specialized jobs, CPUs are a cost-effective solution for a variety of general-purpose workloads, and they are frequently used alongside AI workloads to build complex applications. At Google Cloud Next ’24, Google announced Google Axion Processors, its first custom Arm-based CPUs designed for the data center. Google Cloud customers can now use C4A virtual machines, the first Axion-based VM series, which offer up to 10% better price-performance than the newest Arm-based instances from other leading cloud providers.

Additionally, compared with current-generation x86-based instances, C4A offers up to 65% better price-performance and up to 60% better energy efficiency for general-purpose workloads such as media processing, AI inferencing applications, web and app servers, containerized microservices, open-source databases, in-memory caches, and data analytics engines.

Titanium and Jupiter Network: Making AI possible at the speed of light

Titanium, the offload technology system that underpins Google's infrastructure, has been enhanced to support AI workloads. Titanium frees up more compute and memory for your applications by reducing the host's processing overhead through a combination of on-host and off-host offloads. However, while Titanium's core capabilities apply to AI infrastructure, AI workloads have distinct accelerator-to-accelerator performance requirements.

To address these demands, Google has released a new Titanium ML network adapter, which builds on NVIDIA ConnectX-7 NICs to add further support for virtualization, traffic encryption, and VPCs. Combined with Google's 4-way rail-aligned data center network, the system delivers non-blocking 3.2 Tbps of GPU-to-GPU traffic over RoCE, along with best-in-class security and infrastructure management.

Google’s Jupiter optical circuit switching network fabric and its updated data center network significantly expand Titanium’s capabilities. With native 400 Gb/s link rates and a total bisection bandwidth of 13.1 Pb/s (a practical bandwidth metric that reflects how one half of the network can connect to the other), Jupiter could handle a video conversation for every person on Earth at the same time. In order to meet the increasing demands of AI computation, this enormous scale is essential.
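As a rough sanity check on the "video conversation for every person on Earth" comparison, the 13.1 Pb/s bisection bandwidth can simply be divided by the world's population. The population figure (about 8 billion) is an assumption added here, and this arithmetic ignores latency, topology, and real traffic patterns.

```python
# Back-of-the-envelope check of the "video call for everyone on Earth" comparison.
# Assumption: a world population of roughly 8 billion people (not from the article).
BISECTION_BANDWIDTH_BPS = 13.1e15  # 13.1 Pb/s, as stated above
WORLD_POPULATION = 8.0e9           # assumed, approximate

per_person_bps = BISECTION_BANDWIDTH_BPS / WORLD_POPULATION
per_person_mbps = per_person_bps / 1e6  # convert bits/s to megabits/s

print(f"{per_person_mbps:.2f} Mb/s per person")
```

This works out to roughly 1.6 Mb/s per person, which is plausibly in the range common video-calling services suggest for a standard one-to-one call.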

Hyperdisk ML is generally available

High-performance storage is essential for keeping computing resources fully utilized, maximizing system-level performance, and controlling costs. Google launched its AI/ML-focused block storage solution, Hyperdisk ML, in April 2024. Now generally available, it complements the networking and computing advancements with storage dedicated to AI and HPC workloads.

Hyperdisk ML shortens data load times: it delivers up to 11.9x faster model load times for inference workloads and up to 4.3x faster training times for training workloads.

With 1.2 TB/s of aggregate throughput per volume, you can attach up to 2,500 instances to the same volume. That is more than 100 times what major block storage competitors offer.
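To put the aggregate figure in perspective, the sketch below splits the stated 1.2 TB/s evenly across 2,500 attached instances and estimates how long one instance would take to read a model at that share. The even split and the 100 GB model size are hypothetical assumptions for illustration; real per-instance throughput also depends on each VM's own limits.

```python
# Rough even-split view of Hyperdisk ML throughput (illustrative only).
# Assumptions: every attached instance reads at the same time and bandwidth is
# shared equally; the 100 GB model size is hypothetical.
AGGREGATE_THROUGHPUT_BYTES_PER_S = 1.2e12  # 1.2 TB/s per volume, as stated above
ATTACHED_INSTANCES = 2500                  # instance count stated above


def per_instance_share(aggregate_bps: float, instances: int) -> float:
    """Bytes per second each instance gets if bandwidth is split evenly."""
    return aggregate_bps / instances


def load_seconds(model_bytes: float, throughput_bps: float) -> float:
    """Time to read a model of the given size at a steady throughput."""
    return model_bytes / throughput_bps


share = per_instance_share(AGGREGATE_THROUGHPUT_BYTES_PER_S, ATTACHED_INSTANCES)
hypothetical_model_bytes = 100e9  # hypothetical 100 GB of model weights

print(f"{share / 1e6:.0f} MB/s per instance")
print(f"{load_seconds(hypothetical_model_bytes, share):.0f} s to load 100 GB")
```

Even in this worst case, where all 2,500 instances load at once, each would still see about 480 MB/s.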

Reduced accelerator idle time and increased cost efficiency are the results of shorter data load times.

GKE now automatically creates multi-zone volumes for your data. Combined with faster model loading from Hyperdisk ML, this lets you run across zones for greater compute flexibility (for example, to reduce the impact of Spot preemption).

Developing AI’s future

With these developments in AI infrastructure, Google Cloud enables companies and researchers to push the limits of AI innovation. Google anticipates that this strong foundation will give rise to revolutionary new AI applications.

Read more on Govindhtech.com

#A3UltraVMs#NVIDIAH200#AI#Trillium#HypercomputeCluster#GoogleAxionProcessors#Titanium#News#Technews#Technology#Technologynews#Technologytrends#Govindhtech


deepseek figures out how to level up

following on from the previous discussions of how LLMs might be used in vidyagame, I got deepseek-R1:671b to discuss how to become more sensory/embodied and, uh, wow? LLMs are pretty good at talking about themselves and AI research. and she pretty much gave me a recipe for stuff to try on embodying LLMs in games, including a number of techniques I hadn't heard of yet.

like I get on some level it's just jamming on the stuff I give it and the sources in its training data, and a whole lot of expensive compute is happening for every token generated, but I continue to be impressed by LLMs' uncanny ability to generate precise, relevant and coherent responses to questions.

(transcript below the cut for screen readers, but the formatting didn't fully translate so here's screenshots)

my question:

That answer is pretty much in line with my understanding so far, yeah. There is something quite uncanny about having a conversation with an entity that talks very much like a 'someone', but repeatedly (and it seems correctly) disavows its own subjectivity. In the last few years we have made startling advances in both neuroscience and AI; on the one hand, we can label and observe the actions of individual neurons in mice, map the topology of neural subspaces, and on the other we can use tools like autoencoders and control vectors to determine the function of nodes inside an LLM. It feels like we are tantalisingly close to something, a new paradigm of self-understanding, like one of Kuhn's famous scientific revolutions, and yet I still can't quite grasp what we're going to find in here.

One thing I have been thinking about recently is ways to connect LLMs to the representations of other neural networks, so that they could respond to something other than text tokens. For example, if an LLM was used to generate dialogue for a videogame character, how could I encode the game's state and feed it into the language model (besides simply describing it in a text prompt)? Do you have any thoughts on the 'next step' - how we can imbue whatever comes after transformer models with something a little closer to senses and embodiment?

deepseek's answer:

Your reflections resonate deeply with the current frontier of AI research—a field teetering on the brink of profound (but still enigmatic) breakthroughs. Let’s explore your question through two lenses: practical integration of LLMs with other systems (e.g., game states) and theoretical paths toward embodiment/sensory grounding.

1. Bridging LLMs with Non-Textual Worlds: The Video Game Example

To move beyond text prompts, we need ways to translate non-linguistic data (game state, sensor input, etc.) into a "language" LLMs can process. A few experimental approaches:

a. Latent Space Alignment

Idea: Train an encoder (e.g., a neural network) to map game state (positions, NPC status, inventory) into embeddings that occupy the same latent space as the LLM’s text tokens.

How:

Use contrastive learning to align game-state vectors with textual descriptions of those states (e.g., "Player has 10 health; Orc is aggroed").

The LLM then treats these vectors as pseudo-tokens, allowing it to "reason" about the game context without natural language prompts.

Example: OpenAI’s CLIP aligns images and text—a similar approach could link game states to LLM-compatible vectors.
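A minimal sketch of the contrastive objective mentioned above, in the CLIP style (symmetric InfoNCE): row i of the game-state batch and row i of the description batch are a matching pair, and the loss is low when each state embedding is most similar to its own description. The encoders that produce these vectors, the training loop, and the 0.07 temperature are assumptions left out or chosen for illustration; this only computes the loss a training loop would minimize.

```python
import math
from typing import List

Vector = List[float]


def l2_normalize(v: Vector) -> Vector:
    """Scale a vector to unit length (cosine similarity then equals a dot product)."""
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def cross_entropy(logits: Vector, target: int) -> float:
    """Numerically stable -log softmax(logits)[target]."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[target]


def clip_style_loss(state_embs: List[Vector], text_embs: List[Vector],
                    temperature: float = 0.07) -> float:
    """Symmetric InfoNCE over a batch of (game-state, description) pairs."""
    s = [l2_normalize(v) for v in state_embs]
    t = [l2_normalize(v) for v in text_embs]
    n = len(s)
    # logits[i][j]: similarity of state i to description j
    logits = [[sum(a * b for a, b in zip(s[i], t[j])) / temperature for j in range(n)]
              for i in range(n)]
    state_to_text = sum(cross_entropy(logits[i], i) for i in range(n)) / n
    text_to_state = sum(cross_entropy([logits[i][j] for i in range(n)], j)
                        for j in range(n)) / n
    return (state_to_text + text_to_state) / 2


# Toy 2-D embeddings (hypothetical): matched pairs vs. deliberately swapped pairs.
states = [[1.0, 0.0], [0.0, 1.0]]
texts_aligned = [[0.9, 0.1], [0.1, 0.9]]
texts_swapped = [[0.1, 0.9], [0.9, 0.1]]
print(clip_style_loss(states, texts_aligned), clip_style_loss(states, texts_swapped))
```

Training the game-state encoder to push this loss down is what would place its vectors near the LLM-side text representations.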

b. Symbolic Middleware

Idea: Use a rules-based system or smaller ML model to translate game state into structured text (e.g., JSON or a custom markup language) that the LLM ingests as context.

Pros: Explicit, interpretable, and avoids retraining the LLM.

Cons: Limited flexibility; requires manual schema design.

Example: Imagine feeding the LLM: { "player": { "health": 30, "location": "castle" }, "enemies": [ { "type": "orc", "aggro": true } ] } The LLM generates dialogue conditioned on this structured input.
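A minimal sketch of the symbolic-middleware idea: serialize the game state to JSON and wrap it in a prompt. The function name, the NPC name, and the prompt wording are hypothetical, and the actual call to an LLM is left out because it depends on which model and API you use.

```python
import json


def build_npc_prompt(game_state: dict, npc_name: str) -> str:
    """Serialize game state as JSON context and wrap it in an instruction for an LLM."""
    state_json = json.dumps(game_state, sort_keys=True)  # stable key order for caching/diffing
    return (
        f"You are {npc_name}, a character in a fantasy game.\n"
        f"Current game state (JSON): {state_json}\n"
        "Reply with one line of in-character dialogue that reflects this state."
    )


state = {
    "player": {"health": 30, "location": "castle"},
    "enemies": [{"type": "orc", "aggro": True}],
}
prompt = build_npc_prompt(state, "Guard Captain")  # hypothetical NPC
print(prompt)
```

Note that Python's `json.dumps` writes booleans as lowercase `true`, matching the JSON example above. The manual-schema cost listed under "Cons" shows up here: the game has to decide which fields are worth exposing.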

c. Neuro-Symbolic Hybrids

Idea: Combine LLMs with classical symbolic AI (e.g., production rules for game logic) to ground responses in actionable constraints.

Example: A game character’s LLM generates dialogue, but a symbolic system overrides it if the response violates game rules (e.g., a character shouldn’t know secrets the player hasn’t discovered).
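A minimal sketch of the override step: a symbolic rule checks the LLM's candidate line against what the player has actually discovered and substitutes a fallback if the line would leak a secret. The secret IDs, trigger phrases, and fallback text are hypothetical, and plain substring matching is a deliberately naive stand-in for a real rule engine.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class KnowledgeState:
    """What the player has discovered so far (tracked by the game, not the LLM)."""
    discovered_secrets: Set[str] = field(default_factory=set)


# Hypothetical table mapping secret IDs to phrases that would reveal them.
SECRET_PHRASES = {
    "hidden_passage": ["hidden passage", "secret tunnel"],
    "king_is_traitor": ["king is a traitor", "king betrayed"],
}

FALLBACK_LINE = "I'm not sure what you mean, traveller."


def enforce_rules(candidate: str, knowledge: KnowledgeState) -> str:
    """Return the LLM's line unless it mentions a secret the player hasn't found yet."""
    text = candidate.lower()
    for secret_id, phrases in SECRET_PHRASES.items():
        if secret_id not in knowledge.discovered_secrets and any(p in text for p in phrases):
            return FALLBACK_LINE
    return candidate


line = "Use the secret tunnel behind the forge."
print(enforce_rules(line, KnowledgeState()))                    # blocked
print(enforce_rules(line, KnowledgeState({"hidden_passage"})))  # allowed
```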

2. Toward Embodiment: The "Next Step" Beyond Transformers

Pure text prediction is inherently disembodied. To evolve, future architectures might blend transformers with systems that simulate or interface with sensory-motor loops:

a. Multimodal Foundational Models

Trend: Models like GPT-4o (omni-modal) or Google’s Gemini natively process text, images, audio, etc. The next step is active multimodal interaction—e.g., an AI that controls a robot arm while describing its actions.

Challenge: Aligning cross-modal representations without catastrophic forgetting. Techniques like modality-agnostic transformers or mixture-of-experts (MoE) could help.

b. World Models and Simulation

Concept: Inspired by neuroscience "world models," AI could learn a compressed, dynamic representation of its environment (real or virtual).

How:

Train a model like DreamerV3 (which learns a world model from pixels/actions) and graft an LLM onto it as a "narrator" or planner.

The LLM generates behavior (e.g., game dialogue) based on the world model’s predictions.

Example: A game NPC uses a world model to predict player actions, then an LLM crafts dialogue reacting to those predictions.

c. Embodied Learning via Reinforcement

Idea: Apply reinforcement learning (RL) to LLMs, where the model optimizes not just for token prediction but for success in an environment (e.g., winning a game, pleasing a user).

Example: An LLM-driven game character learns to adapt its dialogue (e.g., bribing vs. threatening) based on RL rewards from player engagement metrics.
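The reward loop described above can be sketched, in a much simplified form, as an epsilon-greedy multi-armed bandit that picks a dialogue strategy and updates its estimate from an engagement reward. This is a stand-in for full RL (there is no state or long-term credit assignment), and the strategies and engagement probabilities are hypothetical simulated data.

```python
import random
from typing import Dict, List


class DialogueStrategyBandit:
    """Epsilon-greedy bandit over dialogue strategies (a simplified stand-in for full RL)."""

    def __init__(self, strategies: List[str], epsilon: float = 0.1, seed: int = 0):
        self.strategies = strategies
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts: Dict[str, int] = {s: 0 for s in strategies}
        self.values: Dict[str, float] = {s: 0.0 for s in strategies}  # running mean reward

    def choose(self) -> str:
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.strategies)               # explore
        return max(self.strategies, key=lambda s: self.values[s])  # exploit

    def update(self, strategy: str, reward: float) -> None:
        self.counts[strategy] += 1
        n = self.counts[strategy]
        self.values[strategy] += (reward - self.values[strategy]) / n  # incremental mean


# Hypothetical player behaviour: chance that each approach keeps the player engaged.
true_engagement = {"threaten": 0.3, "bribe": 0.7}
bandit = DialogueStrategyBandit(list(true_engagement))
env_rng = random.Random(1)
for _ in range(2000):
    strategy = bandit.choose()
    reward = 1.0 if env_rng.random() < true_engagement[strategy] else 0.0
    bandit.update(strategy, reward)

print(bandit.values, bandit.counts)
```

In a real game the LLM would then be conditioned on the chosen strategy ("try bribing"), rather than the bandit generating text itself.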

d. Predictive Coding Architectures

Link to FEP (the Free Energy Principle): New architectures like JEPA (Joint-Embedding Predictive Architecture) by Yann LeCun aim to predict abstract representations of future states—closer to how brains minimize prediction error.

Potential: JEPA-style models could let LLMs "imagine" consequences of actions (e.g., "If I say this, the player will feel X") before generating output.

3. The Elephant in the Room: Energy Efficiency

Transformers are notoriously resource-heavy. Future systems might adopt state-space models (e.g., Mamba) or recurrent architectures (e.g., RWKV) for real-time, embodied applications. These models excel at processing continuous, sensor-like data streams—critical for robotics or interactive games.
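To show why state-space models suit continuous streams, here is a minimal linear state-space recurrence, h_t = A·h_{t-1} + B·x_t and y_t = C·h_t, with a diagonal A. It processes one input at a time with constant memory, unlike attention over a growing context. This is a plain linear time-invariant SSM for illustration; Mamba's selective, input-dependent parameters and parallel scan are not shown, and the parameter values are arbitrary.

```python
from typing import Iterable, Iterator, List


class DiagonalLinearSSM:
    """Streaming linear SSM: h_t = A*h_{t-1} + B*x_t (elementwise), y_t = C . h_t.

    A is diagonal, so each step costs O(state_size) and memory stays constant
    no matter how long the input stream runs.
    """

    def __init__(self, a: List[float], b: List[float], c: List[float]):
        if not (len(a) == len(b) == len(c)):
            raise ValueError("a, b and c must have the same length")
        self.a, self.b, self.c = a, b, c
        self.h = [0.0] * len(a)  # hidden state carried between steps

    def step(self, x: float) -> float:
        self.h = [ai * hi + bi * x for ai, hi, bi in zip(self.a, self.h, self.b)]
        return sum(ci * hi for ci, hi in zip(self.c, self.h))

    def run(self, stream: Iterable[float]) -> Iterator[float]:
        for x in stream:
            yield self.step(x)


# An impulse decays by a factor of 0.5 per step: a simple "memory" of past input.
ssm = DiagonalLinearSSM(a=[0.5], b=[1.0], c=[1.0])
print(list(ssm.run([1.0, 0.0, 0.0])))
```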

4. A Radical Thought: LLMs as "Cortex" Plugins

Imagine an LLM as the "neocortex" in a layered brain-inspired system:

Lower layers: Handle sensorimotor tasks (e.g., a robot’s movement, game state tracking).

Middle layers: World models and prediction engines (JEPA-like).

Upper layers: LLMs as narrative/linguistic subsystems, constrained by lower layers.

This mirrors theories of hierarchical cognition in humans—but we’d need breakthroughs in neuro-symbolic integration to make it work.

5. Philosophical Implications

If we succeed in grounding LLMs in embodied, sensory systems, we might confront Kuhnian paradigm shifts in:

Consciousness studies: Does a robot with an LLM "narrator" and rich sensorimotor loops qualify as having subjective experience?

Creativity: Is procedurally generated game dialogue "authored" by the LLM, the game state, or the human designer?

Your Experiment: A Game Character Case Study

Let’s say you’re building an LLM-driven NPC:

Encode game state via a lightweight autoencoder into token-like vectors.

Fine-tune an LLM (e.g., Mistral 7B) on game-specific dialogue, conditioned on these vectors.

Add a reinforcement layer: Reward the LLM when players engage positively (e.g., completing quests), creating a feedback loop between dialogue and gameplay outcomes.

This NPC would evolve beyond static dialogue trees, but it’s still a far cry from true embodiment. The "next step" might involve real-time world modeling—e.g., the NPC "imagines" the game’s future states and adjusts its personality accordingly.

Final Thought

The gap between transformers and embodied intelligence feels vast, but so did the gap between GPT-2 and GPT-4. Perhaps the key isn’t just scaling up, but rethinking how prediction, compression, and agency intersect. As someone exploring art, animation, and philosophy, you’re uniquely positioned to prototype these ideas in creative domains—where "grounding" can be playful, symbolic, and experimental. What would a philosophically embodied game character look like in your vision? 🎮✨


What is Customer Analytics? – The Importance of Understanding It

Consumers have clear expectations when selecting products or services. Business leaders need to understand what influences customer decisions. By leveraging advanced analytics and engaging in data analytics consulting, they can pinpoint these factors and improve customer experiences to boost client retention. This article will explore the importance of customer analytics.

Understanding Customer Analytics

Customer analytics involves applying computer science, statistical modeling, and consumer psychology to uncover the logical and emotional drivers behind consumer behavior. Businesses and sales teams can work with a customer analytics company to refine customer journey maps, leading to better conversion rates and higher profit margins. Furthermore, they can identify disliked product features, allowing them to improve or remove underperforming products and services.

Advanced statistical methods and machine learning (ML) models provide deeper insights into customer behavior, reducing the need for extensive manual documentation and trend analysis.

Why Customer Analytics is Essential

Reason 1 — Boosting Sales

Insights into consumer behavior help marketing, sales, and CRM teams attract more customers through effective advertisements, customer journey maps, and post-purchase support. Additionally, these insights, provided through data analytics consulting, can refine pricing and product innovation strategies, leading to improved sales outcomes.

Reason 2 — Automation

Advances in analytics services have made ML models for evaluating customer sentiment more practical, making pattern discovery more efficient. As a result, manual effort is easier to manage, since ML and AI can automate the extraction of behavioral insights.

Reason 3 — Enhancing Long-Term Customer Relationships

Analytical models help identify the best experiences to strengthen customers’ positive associations with your brand. This results in better reception, positive word-of-mouth, and increased likelihood of customers reaching out to your support team rather than switching to competitors.

Reason 4 — Accurate Sales and Revenue Forecasting

Analytics reveal seasonal variations in consumer demand, impacting product lines or service packages. Data-driven financial projections, supported by data analytics consulting, become more reliable, helping corporations adjust production capacity to optimize their average revenue per user (ARPU).
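ARPU itself is simple arithmetic: revenue for a period divided by the number of active users in that period. The sketch below computes it per quarter so seasonal swings become visible; the quarterly figures are hypothetical.

```python
from typing import Dict, Tuple


def arpu(revenue: float, active_users: int) -> float:
    """Average revenue per user for one period."""
    if active_users <= 0:
        raise ValueError("active_users must be positive")
    return revenue / active_users


# Hypothetical quarterly (revenue, active users), for illustration only.
quarters: Dict[str, Tuple[float, int]] = {
    "Q1": (120_000.0, 4_000),
    "Q2": (150_000.0, 5_000),
    "Q3": (90_000.0, 3_600),
    "Q4": (240_000.0, 6_000),
}

for quarter, (revenue, users) in quarters.items():
    print(quarter, round(arpu(revenue, users), 2))
```

In this made-up data, ARPU dips in Q3 and peaks in Q4, which is the kind of seasonal pattern a forecast would need to account for.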

Reason 5 — Reducing Costs

Cost per acquisition (CPA) measures the expense of acquiring a customer. A decrease in CPA signifies that conversions are becoming more cost-effective. Customer analytics solutions can enhance brand awareness and lower CPA. Benchmarking against historical CPA trends and experimenting with different acquisition strategies can help address inefficiencies and optimize marketing spend.
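CPA is acquisition spend divided by customers acquired. A minimal sketch of the benchmarking step described above: compute CPA per channel and compare it with a historical CPA. The channel names, spend, acquisitions, and the 45.0 benchmark are all hypothetical.

```python
from typing import Dict, List, Tuple


def cpa(spend: float, acquisitions: int) -> float:
    """Cost per acquisition: spend divided by customers acquired."""
    if acquisitions <= 0:
        raise ValueError("acquisitions must be positive")
    return spend / acquisitions


def compare_to_benchmark(channels: Dict[str, Tuple[float, int]],
                         historical_cpa: float) -> List[Tuple[str, float, float]]:
    """Return (channel, CPA, % change vs. historical CPA), cheapest channel first."""
    rows = []
    for name, (spend, acquired) in channels.items():
        value = cpa(spend, acquired)
        change_pct = (value - historical_cpa) / historical_cpa * 100
        rows.append((name, value, change_pct))
    return sorted(rows, key=lambda row: row[1])


# Hypothetical campaign data: (spend, new customers) per channel.
channels = {"search_ads": (5_000.0, 125), "social_ads": (4_000.0, 80), "email": (1_000.0, 50)}
for name, value, change in compare_to_benchmark(channels, historical_cpa=45.0):
    print(f"{name}: CPA {value:.2f} ({change:+.1f}% vs. benchmark)")
```

A negative percentage means the channel is acquiring customers more cheaply than the historical benchmark.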

Reason 6 — Product Improvements

Customer analytics provides insights into features that can enhance engagement and satisfaction. Understanding why customers switch due to missing features or performance issues allows production and design teams to identify opportunities for innovation.

Reason 7 — Optimizing the Customer Journey

A customer journey map outlines all interaction points across sales funnels, complaint resolutions, and loyalty programs. Customer analytics helps prioritize these touchpoints based on their impact on engaging, retaining, and satisfying customers. Businesses can then address risks such as payment issues or helpdesk errors by refining processes or implementing better CRM systems.

Conclusion

Understanding the importance of customer analytics is crucial for modern businesses. It offers significant benefits, including enhancing customer experience (CX), driving sales growth, and preventing revenue loss. Implementing effective strategies for CPA reduction and product performance is essential, along with exploring automation-compatible solutions to boost productivity. Customer insights drive optimization and brand loyalty, making collaboration with experienced analysts and engaging in data analytics consulting a valuable asset in overcoming inefficiencies in marketing, sales, and CRM.


Obviously I'm all for preventing people's stuff from being scraped/stolen, but this is not legit.

'AI' doesn't parse images as series of unrelated pixels. They use processes like convolution, where blocks of pixels are parsed and potentially 'refined' into smaller bunches of numbers (visual explanation/examples: 1, 2). (Convolution is also the operation used in image filters like box and Gaussian blur.)
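For anyone curious what "blocks of pixels get turned into smaller bunches of numbers" looks like, here is a plain 2D convolution with the box-blur and a 3×3 Gaussian-blur kernel. This is a minimal sketch: no padding ("valid" mode), so a 4×4 image becomes 2×2, and real CNN layers learn their kernels instead of using fixed blur weights.

```python
from typing import List

Matrix = List[List[float]]


def convolve2d(image: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2D convolution (no padding). The kernel is flipped as in the textbook
    definition; CNN layers usually skip the flip (cross-correlation), which makes no
    difference for the symmetric blur kernels below."""
    kh, kw = len(kernel), len(kernel[0])
    flipped = [row[::-1] for row in kernel[::-1]]
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # weighted sum of the kh x kw block whose top-left corner is (i, j)
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * flipped[di][dj]
            row.append(acc)
        out.append(row)
    return out


BOX_BLUR = [[1 / 9] * 3 for _ in range(3)]
GAUSSIAN_3X3 = [[1 / 16, 2 / 16, 1 / 16],
                [2 / 16, 4 / 16, 2 / 16],
                [1 / 16, 2 / 16, 1 / 16]]

# One bright pixel in a 4x4 image gets spread across every 3x3 window containing it.
img = [[0.0, 0.0, 0.0, 0.0],
       [0.0, 9.0, 0.0, 0.0],
       [0.0, 0.0, 0.0, 0.0],
       [0.0, 0.0, 0.0, 0.0]]
print(convolve2d(img, BOX_BLUR))
print(convolve2d(img, GAUSSIAN_3X3))
```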

From my understanding, stuff like Glaze modifies images in ways that should confuse this process at specific steps of specific models/architectures. It's not stopping the images from being collected or read, just making target equations give wrong/undesirable outputs for them. It's not clear how well that actually works, and at what scale.

Just "adding a random bit of noise onto the images" is actually a standard technique, used both to 'teach' an ML model to handle noisy images better and to artificially create more training data. I guess that at best, your noisy images would give noisy results to someone who'd try to finetune their model with them.
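Here's roughly what that augmentation looks like in practice: take each training image, add Gaussian noise, clip back into range, and keep the noisy copies as extra training data. A minimal sketch assuming grayscale images stored as nested lists with values in [0, 1]; the sigma and copy count are arbitrary choices.

```python
import random
from typing import List

Image = List[List[float]]


def add_gaussian_noise(image: Image, sigma: float, rng: random.Random) -> Image:
    """Return a noisy copy of a grayscale image (values in [0, 1]), clipped back into range."""
    return [[min(1.0, max(0.0, px + rng.gauss(0.0, sigma))) for px in row] for row in image]


def augment(dataset: List[Image], copies: int, sigma: float = 0.05, seed: int = 0) -> List[Image]:
    """Keep the originals and append `copies` noisy variants of every image."""
    rng = random.Random(seed)
    out = list(dataset)
    for img in dataset:
        out.extend(add_gaussian_noise(img, sigma, rng) for _ in range(copies))
    return out


data = [[[0.5] * 4 for _ in range(4)]]  # one flat grey 4x4 "image"
bigger = augment(data, copies=3)
print(len(bigger))  # the original plus 3 noisy variants
```

Which is the point: a model trained this way has literally been shown "the same picture with noise on it" on purpose.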

So yeah. I wouldn't be surprised if this kind of misinformation has malicious origins, too.

(Also: the bottom left image is a normal map of gravel from FilterForge. Middle right is also a texture from FilterForge. Bottom right is some guy's DVD not working. 🤔🤔🤔)

AI disturbance overlays for those who don't have Ibis paint premium. found them on tiktok

#hopefully it's clear that this isn't me being pro ai or whatever#it's me being pro people being informed about how stuff works and having had a few ML classes in uni


Next-Gen Security Testing Services Using AI: A Deep Dive

In the ever-evolving landscape of software development, security breaches have grown more frequent and sophisticated. Traditional testing methods, though foundational, are no longer sufficient in identifying and addressing the fast-moving threats facing modern systems. This is where Next-Gen Security Testing Services come into play, blending AI innovation with robust testing protocols.

At Robotico Digital, we’ve redefined how security integrates into software engineering by embedding Artificial Intelligence (AI) into our advanced Security Testing Services. This deep dive explores how AI transforms Security Testing in Software Testing, enabling faster detection, smarter remediation, and continuous protection across development pipelines.

The Shift Toward AI in Security Testing

Historically, Security Testing Services were heavily reliant on manual reviews, rule-based scanners, and time-intensive penetration testing. While still valuable, these methods struggle to keep up with:

Rapid DevOps cycles

Evolving attack vectors

Increasing application complexity

Hybrid cloud and microservices infrastructure

AI, specifically through machine learning (ML), Natural Language Processing (NLP), and behavioral analytics, has introduced a transformative layer of intelligence to these services. It allows security testers and developers to go beyond reactive defenses—identifying risks proactively and at scale.

How AI Enhances Security Testing in Software Testing

Incorporating AI into Security Testing in Software Testing provides multi-dimensional improvements across efficiency, accuracy, and adaptability. Let’s break down the core components.

1. Automated Vulnerability Detection

AI-powered scanners can crawl source code, binary files, API endpoints, and web interfaces to detect anomalies that indicate vulnerabilities. Unlike traditional scanners, AI engines learn from past vulnerabilities and global threat databases to continually improve detection precision.

Key Features:

Pattern recognition across massive codebases

Zero-day threat detection using anomaly detection models

Auto-mapping of application attack surfaces

2. Adaptive Risk Prioritization

One major challenge in Security Testing Services is managing false positives and prioritizing true threats. AI models rank vulnerabilities based on:

Exploitability

Business impact

Data sensitivity

Threat intelligence feeds

This reduces alert fatigue and ensures engineering teams focus on high-priority issues first.
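The ranking factors above can be illustrated with a simple weighted score. This is a rule-based stand-in rather than a trained AI model, and the weights, the threat-intelligence boost, and the example findings are all hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Finding:
    name: str
    exploitability: float     # 0..1, how easy the issue is to exploit
    business_impact: float    # 0..1
    data_sensitivity: float   # 0..1
    actively_exploited: bool  # e.g. flagged by a threat-intelligence feed


# Hypothetical weights; in practice these would be tuned or learned.
WEIGHTS = {"exploitability": 0.4, "business_impact": 0.3, "data_sensitivity": 0.3}
THREAT_INTEL_BOOST = 1.5


def risk_score(f: Finding) -> float:
    base = (WEIGHTS["exploitability"] * f.exploitability
            + WEIGHTS["business_impact"] * f.business_impact
            + WEIGHTS["data_sensitivity"] * f.data_sensitivity)
    return base * (THREAT_INTEL_BOOST if f.actively_exploited else 1.0)


def prioritize(findings: List[Finding]) -> List[Finding]:
    """Highest-risk findings first."""
    return sorted(findings, key=risk_score, reverse=True)


findings = [
    Finding("SQL injection in /search", 0.9, 0.8, 0.9, False),
    Finding("Verbose error page", 0.6, 0.2, 0.1, False),
    Finding("Outdated TLS library", 0.5, 0.6, 0.7, True),
]
for f in prioritize(findings):
    print(f"{risk_score(f):.3f}  {f.name}")
```

The threat-intelligence boost lets an actively exploited but moderate issue outrank a severe issue that no one is exploiting yet.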

3. Dynamic Threat Modeling

AI systems can automatically generate and update threat models for evolving software architectures. By simulating attacker behavior, AI enables predictive testing—discovering how vulnerabilities might be chained or escalated.

4. Self-Learning Penetration Testing

AI agents trained with reinforcement learning act like ethical hackers. These agents evolve through trial and error, discovering unconventional paths to exploitation and imitating real-world attack tactics.

Robotico Digital’s AI-Powered Security Testing Stack

At Robotico Digital, we’ve built a proprietary AI-enhanced testing framework designed to deliver intelligent, continuous, and scalable security coverage. Here's what powers our next-gen Security Testing Services:

AI-Powered SAST & DAST Engines

SAST (Static Application Security Testing): Our AI models review code for insecure functions, misconfigurations, and data flow leaks at the source level.

DAST (Dynamic Application Security Testing): AI crawlers test running applications by simulating user behavior and injecting payloads to trigger security vulnerabilities.

Machine-Learning Vulnerability Correlation

We reduce redundant findings by merging results from multiple tools and identifying duplicate alerts. ML models group similar issues, track them across builds, and learn from developer remediation behavior.
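The merging step can be illustrated with a simple deterministic fingerprint: hash the weakness class, file, and whitespace-normalized code snippet, ignoring which tool reported it and the exact line number. This is a non-ML stand-in for the correlation described above, and the tool names and alerts are hypothetical.

```python
import hashlib
from collections import defaultdict
from typing import Dict, List, NamedTuple


class Alert(NamedTuple):
    tool: str
    cwe: str      # weakness class, e.g. "CWE-89"
    file: str
    line: int
    snippet: str


def fingerprint(alert: Alert) -> str:
    """Stable ID that ignores the reporting tool and the exact line number, so the same
    issue reported by two scanners, or shifted a few lines between builds, maps to one key."""
    normalized = " ".join(alert.snippet.split()).lower()
    key = f"{alert.cwe}|{alert.file}|{normalized}"
    return hashlib.sha256(key.encode("utf-8")).hexdigest()[:16]


def deduplicate(alerts: List[Alert]) -> Dict[str, List[Alert]]:
    groups: Dict[str, List[Alert]] = defaultdict(list)
    for a in alerts:
        groups[fingerprint(a)].append(a)
    return dict(groups)


alerts = [
    Alert("scanner_a", "CWE-89", "app/search.py", 42, 'cursor.execute("SELECT * FROM t WHERE q=" + q)'),
    Alert("scanner_b", "CWE-89", "app/search.py", 44, 'cursor.execute("SELECT * FROM t WHERE q="  +  q)'),
    Alert("scanner_a", "CWE-79", "templates/result.html", 10, "{{ query | safe }}"),
]
groups = deduplicate(alerts)
print(len(groups), "unique issues from", len(alerts), "alerts")
```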

AI-Based Compliance Validation

Robotico Digital uses AI to ensure compliance with:

OWASP Top 10

GDPR / HIPAA / PCI DSS

NIST and ISO 27001

We map discovered vulnerabilities to these frameworks, highlighting gaps in your security and compliance posture.

Use Cases of AI in Security Testing Services

Web & Mobile Application Testing

AI identifies issues such as insecure authentication, broken access controls, and injection attacks. It tests logic errors and parameter tampering based on how real users interact with the app.

API Security Testing

APIs are high-value targets. Our AI models analyze OpenAPI/Swagger specs, apply fuzzing techniques, and test for broken object-level authorization (BOLA) and mass assignment vulnerabilities.

Cloud & Infrastructure Testing

For cloud-native applications, AI detects misconfigurations in IAM roles, storage permissions, and network security groups—especially in multi-cloud environments like AWS, Azure, and GCP.

DevSecOps Pipeline Integration

Robotico Digital integrates AI-based scanning tools directly into CI/CD platforms like GitLab, Jenkins, and Azure DevOps. This ensures shift-left security with automated gates at every build stage.

Implementation Challenges & Considerations

While the benefits are substantial, integrating AI into Security Testing Services is not without hurdles:

1. Data Quality & Training Bias

AI models require high-quality, labeled data to function accurately. Poor or biased training datasets can lead to both false positives and false negatives.

2. Explainability & Developer Trust

“Black-box” decisions from AI can frustrate developers. Robotico Digital addresses this by using explainable AI (XAI) models that provide root cause analysis and remediation context.

3. AI Model Drift

Security threats evolve. AI models must be updated regularly to avoid “drift” that could miss emerging threats. We maintain continuous model updates with feedback loops from threat intelligence systems.

Future of AI in Security Testing Services

AI in Security Testing Services is still in its growth phase. Here’s what’s on the horizon:

Generative AI for Test Case Creation: Using models like GPT-4 to simulate attacks and generate intelligent test scripts.

Autonomous Remediation Agents: AI that not only finds issues but can propose or apply secure code fixes autonomously.

Federated Threat Learning: Secure sharing of anonymized threat data across organizations to train more resilient models.

AI-Powered Red Teaming: Simulated human-like attackers that learn and evolve to breach complex systems.

Conclusion

Security is not a checkbox; it’s a continuous journey. As applications grow more complex and the cyber threat landscape expands, relying on traditional methods alone is no longer enough. AI enables Security Testing Services to become proactive, intelligent, and deeply integrated into the development lifecycle.

At Robotico Digital, we’re proud to lead the next generation of Security Testing in Software Testing by harnessing the power of artificial intelligence. Our AI-enhanced services empower organizations to detect vulnerabilities faster, respond more intelligently, and scale their cybersecurity operations with confidence.


Scaling Your Australian Business with AI: A CEO’s Guide to Hiring Developers

In today’s fiercely competitive digital economy, innovation isn’t a luxury—it’s a necessity. Australian businesses are increasingly recognizing the transformative power of Artificial Intelligence (AI) to streamline operations, enhance customer experiences, and unlock new revenue streams. But to fully harness this potential, one crucial element is required: expert AI developers.

Whether you’re a fast-growing fintech in Sydney or a manufacturing giant in Melbourne, if you’re looking to implement scalable AI solutions, the time has come to hire AI developers who understand both the technology and your business landscape.

In this guide, we walk CEOs, CTOs, and tech leaders through the essentials of hiring AI talent to scale operations effectively and sustainably.

Why AI is Non-Negotiable for Scaling Australian Enterprises

Australia has seen a 270% rise in AI adoption across key industries like retail, healthcare, logistics, and finance over the past three years. From predictive analytics to conversational AI and intelligent automation, AI has become central to delivering scalable, data-driven solutions.

According to Deloitte Access Economics, AI is expected to contribute AU$ 22.17 billion to the Australian economy by 2030. For CEOs and decision-makers, this isn’t just a trend—it’s a wake-up call to start investing in the right AI talent to stay relevant.

The Hidden Costs of Delaying AI Hiring

Still relying on a traditional tech team to handle AI-based initiatives? You could be leaving significant ROI on the table. Without dedicated experts, your AI projects risk:

Delayed deployments

Poorly optimized models

Security vulnerabilities

Lack of scalability

Wasted infrastructure investment

By choosing to hire AI developers, you're enabling faster time-to-market, more accurate insights, and a competitive edge in your sector.

How to Hire AI Developers: A Strategic Approach for Australian CEOs

The process of hiring AI developers is unlike standard software recruitment. You’re not just hiring a coder—you’re bringing on board an innovation partner.

Here’s what to consider:

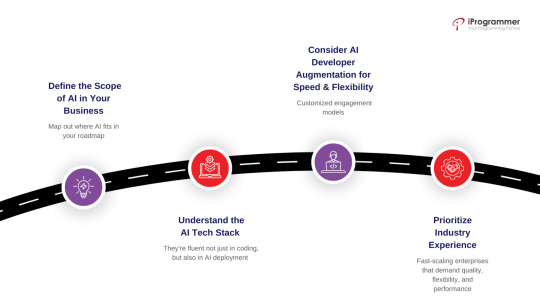

1. Define the Scope of AI in Your Business

Before hiring, map out where AI fits in your roadmap:

Are you looking for machine learning-driven forecasting?

Want to implement AI chatbots for 24/7 customer service?

Building a computer vision solution for your manufacturing line?

Once you identify the use cases, it becomes easier to hire ML developers or AI experts with the relevant domain and technical experience.

2. Understand the AI Tech Stack

A strong AI developer should be proficient in:

Python, R, TensorFlow, PyTorch

Scikit-learn, Keras, OpenCV

Data engineering with SQL, Spark, Hadoop

Deployment tools like Docker, Kubernetes, AWS SageMaker

When you hire remote AI engineers, ensure they’re fluent not just in coding, but also in AI deployment and scalability best practices.

3. Consider AI Developer Augmentation for Speed & Flexibility

Building an in-house AI team is time-consuming and expensive. That’s why AI developer staff augmentation is a smarter choice for many Australian enterprises.

With our staff augmentation services, you can:

Access pre-vetted, highly skilled AI developers

Scale up or down depending on your project phase

Save costs on infrastructure and training

Retain full control over your development process

Whether you need to hire ML developers for short-term analytics or long-term AI product development, we offer customized engagement models to suit your needs.

4. Prioritize Industry Experience

AI isn’t one-size-fits-all. Hiring developers who have experience in your specific industry—be it healthcare, fintech, ecommerce, logistics, or manufacturing—ensures faster onboarding and better results.

We’ve helped companies in Australia and across the globe integrate AI into:

Predictive maintenance systems

Smart supply chain analytics

AI-based fraud detection in banking

Personalized customer experiences in ecommerce

This hands-on experience allows our developers to deliver solutions that are relevant and ROI-driven.

Why Choose Our AI Developer Staff Augmentation Services?

At iProgrammer, we bring over a decade of experience in empowering businesses through intelligent technology solutions. Our AI developer augmentation services are designed for fast-scaling enterprises that demand quality, flexibility, and performance.

What Sets Us Apart:

AI-First Talent Pool: We don’t generalize. We specialize in AI, ML, NLP, computer vision, and data science.

Quick Deployment: Get developers onboarded and contributing in just a few days.

Cost Efficiency: Hire remote AI developers from our offshore team and reduce development costs by up to 40%.

End-to-End Support: From hiring to integration and project execution, we stay involved to ensure success.

A Case in Point: AI Developer Success in an Australian Enterprise

One of our clients, a mid-sized logistics company in Brisbane, wanted to predict delivery delays using real-time data. Within 3 weeks of engagement, we onboarded a senior ML developer who built a predictive model using historical shipment data, weather feeds, and traffic APIs. The result? A 25% reduction in customer complaints and a 15% improvement in delivery time accuracy.

This is the power of hiring the right AI developer at the right time.

Final Thoughts: CEOs Must Act Now to Stay Ahead

If you’re a CEO, CTO, or decision-maker in Australia, the question isn’t “Should I hire AI developers?” It’s “How soon can I hire the right AI developer to scale my business?”

Whether you're launching your first AI project or scaling an existing system, AI developer staff augmentation provides the technical depth and agility you need to grow fast—without the friction of long-term hiring.

Ready to Build Your AI Dream Team?

Let’s connect. Talk to our AI staffing experts today and discover how we can help you hire remote AI developers or hire ML developers who are ready to make an impact from day one.

👉 Contact Us Now | Schedule a Free Consultation


What Are the Key Steps in AI Chatbot Development?

In the era of instant digital interaction, AI chatbots have become indispensable tools for businesses seeking to enhance customer experience, streamline operations, and drive engagement. From handling customer queries to automating repetitive tasks, chatbots are revolutionizing how companies communicate. But how exactly is an AI chatbot created? Let’s walk through the key steps in AI chatbot development, from concept to deployment and optimization.

1. Define the Chatbot's Purpose and Goals

Before jumping into development, it's crucial to define what the chatbot is supposed to do. Consider the following questions:

Will it answer customer service queries?

Will it guide users through a purchase journey?

Will it be used for internal tasks like IT support?

Setting a clear purpose allows for focused development and helps determine the required features, platforms, and AI sophistication. This phase also includes identifying the target audience and expected interactions.

2. Choose the Right Chatbot Type

There are two main types of chatbots:

Rule-based chatbots: Follow a predefined flow and can handle simple queries.

AI-powered chatbots: Use machine learning (ML) and natural language processing (NLP) to understand context, intent, and user sentiment.

For businesses looking to provide more personalized and adaptive interactions, AI chatbots are the go-to solution.

3. Select Development Tools and Platforms

Once you’ve determined the type, select the appropriate tools and platforms. Popular options include:

Development Frameworks: Microsoft Bot Framework, Google Dialogflow, Rasa, IBM Watson Assistant

Languages: Python, Node.js, Java

Hosting Platforms: AWS, Google Cloud, Azure

You also need to decide where the chatbot will be deployed: web, mobile apps, messaging platforms (like WhatsApp or Facebook Messenger), or all of them.

4. Design the Conversation Flow

Conversation design is one of the most creative and strategic parts of chatbot development. It includes:

Mapping out various user scenarios

Designing dialog trees for rule-based responses

Creating intents, entities, and responses for AI-based models

Considering fallback responses when the bot doesn’t understand

For AI-powered bots, the design must also factor in context retention and multi-turn conversations.
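As a rough illustration of a dialog tree with a fallback, here is a minimal rule-based sketch. The node names, prompts, and keywords are hypothetical examples, and carrying the current state between turns is only the simplest form of context retention; AI-powered bots track much richer context.

```python
# A minimal sketch of a rule-based conversation flow with a fallback.
# Node names, prompts, and keywords are hypothetical examples.
from dataclasses import dataclass, field


@dataclass
class Node:
    prompt: str
    # Maps a keyword in the user's reply to the name of the next node.
    transitions: dict[str, str] = field(default_factory=dict)


FLOW = {
    "start": Node("Hi! Do you want to track an order or talk to support?",
                  {"track": "ask_order_id", "support": "handoff"}),
    "ask_order_id": Node("Please enter your order number."),
    "handoff": Node("Connecting you to a human agent."),
}

FALLBACK = "Sorry, I didn't understand that. Could you rephrase?"


def next_reply(state: str, user_text: str) -> tuple[str, str]:
    """Return (new_state, bot_reply) for one user turn.

    The caller passes the state back in on the next turn, which is how this
    sketch keeps context across a multi-turn conversation.
    """
    text = user_text.lower()
    for keyword, target in FLOW[state].transitions.items():
        if keyword in text:
            return target, FLOW[target].prompt
    # No transition matched: stay in the same state and use the fallback.
    return state, FALLBACK
```

For example, `next_reply("start", "I want to track my parcel")` moves the conversation to `ask_order_id`, while an unrelated message keeps it at `start` and returns the fallback prompt.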

5. Train the NLP Model

If you're building an AI chatbot, you must train it to understand human language. This includes:

Defining intents (what the user wants)

Setting up entities (important data in user inputs)

Feeding sample utterances for each intent

Training the model with diverse input scenarios

The more varied the training data, the better your chatbot will perform. Using pre-trained language models like GPT or BERT can give you a strong starting point.
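To make "intents plus sample utterances" concrete, the toy classifier below "trains" on a few utterances per intent and picks the closest intent by bag-of-words cosine similarity. This is a deliberately simple stand-in, not how GPT, BERT, or frameworks like Rasa work internally. The intents, utterances, and the 0.2 similarity threshold are all assumptions for illustration.

```python
# A toy intent classifier trained on sample utterances. Bag-of-words cosine
# similarity stands in for a real NLP model; the data and threshold are hypothetical.
import math
import re
from collections import Counter

TRAINING_DATA = {
    "track_order": ["where is my order", "track my package", "has my order shipped"],
    "refund": ["i want a refund", "how do i get my money back", "refund my purchase"],
    "greeting": ["hello", "hi there", "good morning"],
}


def tokenize(text: str) -> Counter:
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def train(data: dict[str, list[str]]) -> dict[str, Counter]:
    """'Training' here just merges each intent's utterances into one word-count vector."""
    model = {}
    for intent, utterances in data.items():
        vec = Counter()
        for utterance in utterances:
            vec.update(tokenize(utterance))
        model[intent] = vec
    return model


def predict(model: dict[str, Counter], text: str, threshold: float = 0.2) -> str:
    """Return the most similar intent, or 'fallback' if nothing clears the threshold."""
    query = tokenize(text)
    best_intent, best_score = "fallback", 0.0
    for intent, vec in model.items():
        score = cosine(query, vec)
        if score > best_score:
            best_intent, best_score = intent, score
    return best_intent if best_score >= threshold else "fallback"
```

Adding more varied utterances to `TRAINING_DATA` widens the vocabulary each intent can match, which is a small-scale version of the point made above about diverse training data.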

6. Integrate with Backend Systems

For the chatbot to be truly useful, it must integrate with databases, CRMs, ERPs, and APIs. For example:

An e-commerce chatbot might connect to inventory and order tracking systems.

A customer service bot may pull user data from a CRM like Salesforce.

An internal HR bot might integrate with employee databases and leave management tools.

This enables real-time, dynamic responses and allows the bot to perform complex tasks.
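The sketch below shows the general shape of routing a recognized intent and its entities to a backend lookup. `ORDERS` and `get_order_status` are hypothetical stand-ins for a real order-tracking system; in production that lookup would be an authenticated API or database call.

```python
# A minimal sketch of wiring a recognized intent to a backend system.
# ORDERS and get_order_status are hypothetical stand-ins for a real order API.
from typing import Optional

ORDERS = {"A1001": "shipped", "A1002": "processing"}  # hypothetical stand-in data


def get_order_status(order_id: str) -> Optional[str]:
    """Hypothetical backend lookup; a real bot would query the order system's API."""
    return ORDERS.get(order_id)


def handle_intent(intent: str, entities: dict[str, str]) -> str:
    if intent == "track_order":
        order_id = entities.get("order_id", "").strip().upper()
        if not order_id:
            # Required entity missing: ask for it instead of guessing.
            return "Sure, what is your order number?"
        status = get_order_status(order_id)
        if status is None:
            return f"I couldn't find order {order_id}. Please check the number."
        return f"Order {order_id} is currently {status}."
    return "Sorry, I can't help with that yet."
```

Keeping the backend call behind a small function like this makes it easy to swap the stand-in data for a real CRM or ERP client later.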

7. Test Extensively

Before deployment, thorough testing is essential:

Unit Testing: Each component (intent recognition, entity extraction, etc.)

Integration Testing: Interactions with external systems

User Testing: Real users interacting with the bot to check for errors, confusion, and gaps

Testing should focus on usability, accuracy, fallback behavior, and performance under load.
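Unit tests for intent recognition can be written with Python's built-in `unittest` module. Here `classify` is a hypothetical keyword-based stand-in for whatever NLU component the bot actually uses; the structure of the tests (known utterances map to expected intents, unrelated input falls back) is the part that carries over.

```python
# A minimal sketch of unit-testing intent recognition with unittest.
# classify() is a hypothetical keyword-based stand-in for a real NLU component.
import unittest

KEYWORDS = {
    "track_order": ("track", "where is my order", "shipped"),
    "refund": ("refund", "money back"),
}


def classify(text: str) -> str:
    text = text.lower()
    for intent, phrases in KEYWORDS.items():
        if any(phrase in text for phrase in phrases):
            return intent
    return "fallback"


class IntentRecognitionTest(unittest.TestCase):
    def test_known_intents(self):
        cases = {
            "Can you track my parcel?": "track_order",
            "I'd like my money back": "refund",
        }
        for utterance, expected in cases.items():
            with self.subTest(utterance=utterance):
                self.assertEqual(classify(utterance), expected)

    def test_fallback_for_unknown_input(self):
        # The bot should fall back rather than guess on unrelated input.
        self.assertEqual(classify("What's the weather like?"), "fallback")
```

Saved as `test_intents.py`, this runs with `python -m unittest test_intents`.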

8. Deploy the Chatbot

Once the chatbot passes all tests, it’s ready for deployment. Ensure it’s launched on the desired platforms and integrated with necessary monitoring tools. Set up analytics dashboards to track:

Number of users

Engagement rate

Drop-off points

Intent success rate

Cloud-based services make deployment scalable and manageable.
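The metrics listed above can be computed from conversation logs. The sketch assumes a simple per-session log schema and one possible set of definitions (for example, "engaged" means the user sent more than one message). Analytics tools define these metrics in different ways, so treat the definitions as assumptions.

```python
# A minimal sketch of computing dashboard metrics from conversation logs.
# The Session schema and the metric definitions are assumptions.
from collections import Counter
from dataclasses import dataclass


@dataclass
class Session:
    user_id: str
    intents: list[str]  # recognized intent per user message ("fallback" if none)
    completed: bool     # did the user reach the end of a flow?
    last_step: str      # last flow step the user saw


def _ratio(numerator: int, denominator: int) -> float:
    return numerator / denominator if denominator else 0.0


def dashboard(sessions: list[Session]) -> dict:
    messages = [intent for s in sessions for intent in s.intents]
    return {
        # Unique users, not sessions: one user may open several sessions.
        "users": len({s.user_id for s in sessions}),
        # Assumed definition: a session is "engaged" if it has more than one user message.
        "engagement_rate": _ratio(sum(len(s.intents) > 1 for s in sessions), len(sessions)),
        # Count of unfinished sessions per final step; call .most_common() to rank them.
        "drop_off_points": Counter(s.last_step for s in sessions if not s.completed),
        # Share of user messages that matched a real intent.
        "intent_success_rate": _ratio(sum(i != "fallback" for i in messages), len(messages)),
    }
```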

9. Monitor, Analyze, and Optimize

Post-launch, the real work begins. Continuous improvement is key to chatbot success:

Collect conversation logs and analyze them for improvement areas

Use analytics to track usage trends and performance metrics

Regularly retrain the AI model with new data

Add new intents, flows, or languages based on user feedback

Optimization should be a regular process to keep the chatbot relevant and effective.
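One practical way to analyze logs for improvement areas is to collect the messages the bot could not classify and rank them by frequency. The most common gaps then become candidates for new intents or extra training utterances. The `(utterance, intent)` log format below is an assumption.

```python
# A minimal sketch of mining conversation logs for retraining candidates.
# The (utterance, intent) log format is an assumption.
from collections import Counter


def unrecognized_utterances(log: list[tuple[str, str]], top_n: int = 5) -> list[tuple[str, int]]:
    normalized = (
        " ".join(text.lower().split())  # collapse case and whitespace differences
        for text, intent in log
        if intent == "fallback"
    )
    return Counter(normalized).most_common(top_n)
```

In this sketch, two users typing "Do you ship to Canada?" with different capitalization or spacing count as the same gap.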

10. Ensure Security and Compliance

AI chatbots often handle sensitive data. It’s critical to:

Encrypt data in transit (for example, with TLS) and at rest

Follow GDPR, HIPAA, or other industry-specific regulations

Use secure APIs and authentication mechanisms

Security measures must be baked in from the design phase, not added later.
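As one example of a secure authentication mechanism, many messaging platforms sign their webhook requests with an HMAC of the request body. The bot can then reject requests that did not come from the platform. The shared secret and the `sha256=<hex>` header format below are assumptions, since header names and formats vary by platform.

```python
# A minimal sketch of verifying an HMAC-signed webhook request.
# The shared secret and the "sha256=<hex>" signature format are assumptions.
import hashlib
import hmac


def verify_signature(secret: bytes, payload: bytes, signature_header: str) -> bool:
    expected = "sha256=" + hmac.new(secret, payload, hashlib.sha256).hexdigest()
    # compare_digest compares in constant time, avoiding timing leaks.
    return hmac.compare_digest(expected.encode(), signature_header.encode())
```

Verification should run on the raw request body before it is parsed, because re-serializing JSON can change the bytes and break the signature.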

Conclusion

AI chatbot development is a multi-stage journey that combines strategic planning, technical expertise, and ongoing refinement. By following these key steps—from goal setting and tool selection to testing and optimization—you can build a chatbot that not only automates tasks but also enhances user experience and delivers business value.

As businesses increasingly embrace automation, AI chatbot development is no longer optional—it’s a competitive necessity. Whether you're a startup or an enterprise, investing in a well-planned chatbot strategy can lead to smarter interactions, happier customers, and a more efficient organization.

The Digital Sidekick is Here: How AI Assistants Are Redefining Our Daily Lives

In the not-so-distant past, the idea of having a personal assistant was reserved for the corner offices of high-powered executives. Today, that reality has been democratized, digitized, and placed directly into our pockets and onto our desktops. We’re living in the era of the AI assistant, a transformative technology that is rapidly evolving from a simple novelty into an indispensable tool for productivity, creativity, and daily management. But with so many options, from ChatGPT to Google Assistant, how do you navigate this new landscape?

This guide will take you on a deep dive into the world of AI assistants. We’ll explore what they are, the different types available, and how you can harness their power, whether you’re looking for a free AI assistant or a deeply integrated AI personal assistant.

Demystifying the Magic: What Is an AI Assistant?

At its heart, an AI assistant is a sophisticated software program designed to understand and process human language to perform tasks and retrieve information. The magic behind this capability lies in two core pillars of artificial intelligence: Natural Language Processing (NLP) and Machine Learning (ML). NLP is the field that allows the machine to understand the nuances, context, and intent behind your text or voice commands. Machine Learning, on the other hand, lets the assistant learn language patterns from large amounts of training data and, in many products, adapt its responses to your preferences over time.

The range of tasks these digital companions can handle is staggering and ever-expanding. They have evolved far beyond just setting timers and reporting the weather. A modern AI assistant can:

Draft complex documents: From professional emails and marketing copy to intricate lines of code.

Act as a creative partner: Brainstorm ideas, write poetry, compose song lyrics, or create a script for your next video.

Summarize long texts: Condense lengthy articles, research papers, or reports into key bullet points.

Plan detailed itineraries: Organize a vacation or a business trip, complete with flight suggestions, hotel options, and daily schedules.

Control your smart home: Seamlessly integrate with lights, thermostats, and security systems.

The Diverse Landscape of AI Assistants

The market is brimming with options, each with its unique strengths. Understanding the key players is the first step to finding your perfect match.

The Conversational Powerhouse: ChatGPT

When people talk about a revolutionary AI assistant, they are often referring to ChatGPT. Developed by OpenAI, this chatbot has captured the world’s attention with its stunning ability to generate fluid, coherent, and contextually aware text. It excels at tasks requiring creativity and in-depth explanation, making it a favorite among writers, developers, and students. Available online through a web browser or via its dedicated app, ChatGPT offers a capable free tier and paid subscriptions for users who need access to its most advanced models.

The Ubiquitous Organizer: Google Assistant

For billions of users, Google Assistant is the most familiar and integrated AI. Built into the fabric of Android devices and available on iOS and Google Home speakers, its primary strength lies in its deep connection to the Google ecosystem. It can proactively remind you to leave for an appointment based on live traffic data from Google Maps, pull up flight details from your Gmail, and manage your schedule in Google Calendar. It’s the quintessential AI personal assistant for anyone heavily invested in Google’s suite of services.

The Device Commander: Samsung Bixby

For those in the Samsung ecosystem, Bixby serves as the native AI assistant. While it can perform many standard tasks like answering questions, its unique selling proposition is its deep level of device control. You can use Bixby to execute complex, multi-step commands on your Samsung phone, tablet, or smart appliance, such as “Find all photos from my last vacation and create an album called ‘Beach Trip’.”

Your Assistant, Your Way: Online, App, and Free Access

Flexibility is a key feature of the modern AI era. You can chat with an AI assistant online for quick access on any computer, or download a dedicated AI assistant app for a more integrated mobile experience with voice commands. Crucially, you don’t need to break the bank. The prevalence of the free AI assistant model means that powerful, cutting-edge technology is accessible to everyone, providing a robust starting point for exploring what AI can do for you.

The future is not just about assistants that respond to commands, but ones that anticipate our needs. The journey towards a truly proactive AI personal assistant — one that manages your schedule, filters your emails, and prepares you for your day without prompting — is well underway. As this technology continues to mature, it promises to become an even more integral, helpful, and seamless part of our connected world.

How Does Direct Mail Automation Work in the Real World?

In today’s real-time, data-driven marketing world, automation has transformed how physical mail is used. Direct mail automation allows companies to send printed messages with the same ease and intelligence as sending an email.

But what does that actually look like in practice?

Let’s explore how direct mail automation works in real-world scenarios — across industries, platforms, and customer journeys — to drive measurable business outcomes.

What Is Direct Mail Automation? (Recap)

It’s the process of automating the sending of physical mail — triggered by customer behavior, CRM data, or marketing rules — without manual intervention. Think of it as email automation but in the tangible, high-attention format of physical mail.

Core Components of Direct Mail Automation in Action

1. Data Integration

Real-world campaigns begin by connecting data sources such as:

CRM (e.g., Salesforce, HubSpot)

eCommerce platforms (e.g., Shopify, WooCommerce)

Email or marketing tools (e.g., Klaviyo, Mailchimp)

Events in this data, such as a purchase, a sign-up, or a period of inactivity, trigger the mail.

2. Print Templates and Personalization Engines

Templates are loaded into the automation platform, featuring:

Dynamic fields for names, dates, and offers

Conditional content for segmentation

QR codes or URLs for tracking

Example: A customer in New York gets a postcard with a local store coupon, while someone in San Diego receives an online-only discount.
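The sketch below shows one way dynamic fields and conditional content could fit together, using Python's `string.Template` to reproduce the New York vs. San Diego example above. The template text, field names, store list, discount codes, and tracking URL are hypothetical. Real platforms use their own template languages.

```python
# A minimal sketch of template personalization with dynamic fields and
# conditional content. Template, store list, offers, and URL are hypothetical.
from string import Template

POSTCARD = Template("Hi $first_name,\n$offer\nScan the QR code: $tracking_url")

CITIES_WITH_STORES = {"New York"}  # hypothetical


def render_postcard(customer: dict) -> str:
    # Conditional content: local shoppers get an in-store coupon,
    # everyone else gets an online-only discount.
    if customer["city"] in CITIES_WITH_STORES:
        offer = "Bring this card to our local store for 15% off."
    else:
        offer = "Use code ONLINE10 for 10% off at checkout."
    return POSTCARD.substitute(
        first_name=customer["first_name"],
        offer=offer,
        # A per-customer URL lets each QR scan be attributed to this mailpiece.
        tracking_url=f"https://example.com/r/{customer['id']}",
    )
```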

3. Trigger Setup and Workflow Automation

Triggers might include:

Cart abandonment: send a reminder postcard after 24 hours

No login in 60 days: send a win-back letter

Order over $150: send a thank-you card with a discount code

These triggers are configured using workflow builders or API-based automation tools.
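The three example triggers can be expressed as data plus a small evaluator, roughly as a workflow builder would store them. The event names, fields, and schedule format are hypothetical. In practice these rules would live in the automation platform rather than in your own code.

```python
# A minimal sketch of trigger rules as data plus a small evaluator.
# Event names, fields, and the schedule format are hypothetical.
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Callable, Optional


@dataclass
class Rule:
    name: str
    matches: Callable[[dict], bool]
    mailpiece: str
    delay: timedelta


RULES = [
    Rule("cart_abandonment", lambda e: e["type"] == "cart_abandoned",
         "reminder_postcard", timedelta(hours=24)),
    Rule("win_back", lambda e: e["type"] == "inactivity_check" and e["days_since_login"] >= 60,
         "win_back_letter", timedelta(0)),
    Rule("big_order_thanks", lambda e: e["type"] == "order_placed" and e["total"] > 150,
         "thank_you_card", timedelta(0)),
]


def schedule_mail(event: dict, now: datetime) -> Optional[tuple[str, datetime]]:
    """Return (mailpiece, send_at) for the first matching rule, or None."""
    for rule in RULES:
        if rule.matches(event):
            return rule.mailpiece, now + rule.delay
    return None
```

Keeping rules as data means marketers can add or tune a trigger (say, changing 60 days to 90) without touching the evaluation logic.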

4. Real-Time Printing and Fulfillment

Once a trigger is activated:

The direct mail automation platform selects the appropriate print facility.

A print-ready file is generated with personalization.

The mailpiece is printed, packed, and handed off to the postal carrier, often within 24–48 hours.

No bulk inventory. No manual printing. Just-in-time, personalized communication.
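The article does not say how a platform chooses a print facility. One plausible rule is to route each mailpiece to the facility nearest the recipient, sketched below with the haversine great-circle distance. The facility list and the nearest-distance rule are assumptions. Real platforms may also weigh capacity, cost, and postal entry points.

```python
# A minimal sketch of picking the print facility nearest to the recipient.
# The facility list and the nearest-distance rule are assumptions.
import math

FACILITIES = {  # hypothetical facilities: name -> (latitude, longitude)
    "newark_nj": (40.7357, -74.1724),
    "chicago_il": (41.8781, -87.6298),
    "los_angeles_ca": (34.0522, -118.2437),
}


def haversine_km(a: tuple[float, float], b: tuple[float, float]) -> float:
    """Great-circle distance between two (lat, lon) points in kilometres."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371.0 * math.asin(math.sqrt(h))


def pick_facility(recipient: tuple[float, float]) -> str:
    return min(FACILITIES, key=lambda name: haversine_km(FACILITIES[name], recipient))
```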

5. Delivery Tracking and Analytics

Many platforms let you track each piece of mail much like a package:

Delivery confirmation

Bounce or return data

QR code scans

Offer redemptions

Some advanced tools also provide regional response heat maps and customer cohort analysis.
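Region-level response analysis of the kind described above comes down to simple ratios. The sketch defines response rate as the share of delivered pieces whose recipients scanned the QR code. The record formats and this definition are assumptions; a platform might instead count offer redemptions.

```python
# A minimal sketch of per-region response rates from delivery and QR-scan data.
# Record formats and the response-rate definition are assumptions.
from collections import defaultdict


def response_rate_by_region(delivered: list[dict], scans: list[str]) -> dict[str, float]:
    """delivered: [{"recipient_id": ..., "region": ...}]; scans: recipient_ids that scanned."""
    scanned = set(scans)  # count each recipient once, however many times they scanned
    sent = defaultdict(int)
    responded = defaultdict(int)
    for piece in delivered:
        sent[piece["region"]] += 1
        if piece["recipient_id"] in scanned:
            responded[piece["region"]] += 1
    return {region: responded[region] / sent[region] for region in sent}
```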

Real-World Use Cases of Direct Mail Automation

1. eCommerce: Cart Abandonment Campaigns

Use Case: A Shopify store uses PostPilot to trigger a postcard 24 hours after a cart is abandoned.

Outcome: A QR code leads back to the product page. Campaigns show a 12–18% recovery rate compared to ~2% for email.