#AI in software

Explore tagged Tumblr posts

Text

SaaS solutions driven by AI are revolutionizing company operations by improving user experiences, automating procedures, and providing insights. The Top 10 AI SaaS Development Companies as of June 2025 are highlighted in this article; they are renowned for creating cloud-native, scalable, and intelligent apps. These businesses are notable for their technical prowess, inventiveness, and capacity to provide tailored AI solutions for a range of sectors. These development partners are at the forefront of the future of intelligent SaaS platforms, whether you're starting a business or updating enterprise software.

0 notes

Text

“Slopsquatting” in a nutshell:

1. LLM-generated code tries to run code from online software packages. Which is normal, that’s how you get math packages and stuff but

2. The packages don’t exist. Which would normally cause an error but

3. Nefarious people have made malware under the package names that LLMs make up most often. So

4. Now the LLM code points to malware.

https://www.theregister.com/2025/04/12/ai_code_suggestions_sabotage_supply_chain/
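One habit that follows from the four steps above is to compare any package name an LLM suggests against a list you have already vetted before installing it. The sketch below is only an illustration: the allowlist, the function name `findUnvetted`, and the package "fast-matrix-utils" are all invented here, and nothing in it queries a real registry.

```typescript
// Hypothetical allowlist of packages the team has already reviewed.
const vettedPackages: ReadonlySet<string> = new Set(["numpy", "requests", "pandas"]);

// Return the suggested dependencies that are NOT on the allowlist,
// so a human can look at them before anything gets installed.
function findUnvetted(
  suggested: readonly string[],
  vetted: ReadonlySet<string>
): string[] {
  return suggested
    .map((name) => name.trim().toLowerCase())
    .filter((name) => name.length > 0 && !vetted.has(name));
}

// "fast-matrix-utils" is a made-up name standing in for a hallucinated package.
const flagged = findUnvetted(["numpy", "fast-matrix-utils"], vettedPackages);
```

An allowlist will not catch a malicious package that someone vetted carelessly; it only forces a manual look at names nobody on the team has seen before.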

#slopsquatting#ai generated code#LLM#yes ive got your package right here#why yes it is stable and trustworthy#its readme says so#and now Google snippets read the readme and says so too#no problems ever in mimmic software packige

14K notes


Text

Ensuring API Quality: Best Practices for Automation with Cypress

In the dynamic realm of software development, APIs (Application Programming Interfaces) are essential for seamless communication between different software components. However, ensuring the correctness, reliability, and performance of APIs is a complex challenge.

API automation testing has become a critical aspect of modern software development, guaranteeing that APIs function as intended and maintain compatibility with various systems. Cypress, widely recognized for its robust end-to-end testing capabilities, also offers powerful tools for API automation. This article explores best practices for API automation using Cypress, equipping you with actionable strategies to enhance the reliability of your APIs and streamline your testing processes.

The Growing Importance of API Testing

According to SmartBear's State of API 2020 Report, 84% of organizations emphasize the critical role of API quality in ensuring business success. This highlights the growing significance of APIs as foundational components of modern software ecosystems.

To meet these high expectations, adhering to proven API automation practices is vital. Below are best practices that can help you leverage Cypress effectively for API testing.

Best Practices for API Automation Using Cypress

1. Keep Tests Atomic and Independent

Creating atomic and independent tests ensures reliability and simplifies debugging. Each test should focus on a specific API functionality, running independently of other tests. This reduces failures caused by test interdependencies and makes it easier to isolate and resolve issues.

2. Use Fixtures to Mock API Responses

Fixtures are pre-defined data files that Cypress uses to mock API responses. Mocking API responses with fixtures provides several benefits:

Controlled Testing Environment: Simulate various scenarios without relying on external servers that might be unavailable or inconsistent.

Predictable Test Behavior: Ensure consistent test outcomes by using predefined, static data.

Faster Test Execution: Avoid unnecessary external API calls, speeding up the testing process.

3. Leverage Environment Variables for Configurations

Using environment variables to store sensitive information like API endpoints and authentication keys enhances both security and flexibility:

Improved Security: Keep sensitive data outside the test scripts to prevent accidental exposure.

Centralized Configuration Management: Modify API configurations without editing test scripts individually.

Environment Flexibility: Define separate configurations for development, testing, and production environments.
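As a rough sketch of the idea above, the configuration can be built in one place and fail fast when a value is missing. The variable names `API_BASE_URL` and `API_KEY`, the `ApiConfig` shape, and the example URL are assumptions made for illustration. In a Cypress project the key/value map would typically come from `Cypress.env()`; here it is passed in as a plain object so the example runs on its own.

```typescript
// Settings a test run needs. Field names are illustrative.
interface ApiConfig {
  baseUrl: string;
  apiKey: string;
}

// Build the config from a plain key/value map and fail fast on gaps,
// so a missing variable surfaces before any request is sent.
function loadApiConfig(env: Record<string, string | undefined>): ApiConfig {
  const baseUrl = env["API_BASE_URL"];
  const apiKey = env["API_KEY"];
  if (!baseUrl || !apiKey) {
    throw new Error("API_BASE_URL and API_KEY must both be set");
  }
  return { baseUrl, apiKey };
}

// Placeholder values only; real keys stay out of source control.
const stagingConfig = loadApiConfig({
  API_BASE_URL: "https://staging.example.test",
  API_KEY: "dummy-key",
});
```

Switching environments then means supplying a different map, not editing the tests.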

4. Validate Data with the Yup Library

The Yup library provides a powerful schema builder for validating API request and response data:

Enhanced Data Validation: Enforce rules for data types, required fields, and formats.

Early Error Detection: Catch errors before sending invalid data to the API.

Improved Code Readability: Keep test code clean by separating validation logic from assertions.
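To show what separating validation from assertions can look like, here is a small hand-rolled validator. It is a stand-in written for this example and does not use Yup's actual API; the `id` and `email` fields and the function name `validateUser` are assumed purely for illustration.

```typescript
// Check an unknown response body against a simple expected shape.
// Every violation is collected, so a failing test reports the full picture
// instead of stopping at the first problem.
function validateUser(body: unknown): string[] {
  if (typeof body !== "object" || body === null) {
    return ["body must be an object"];
  }
  const record = body as Record<string, unknown>;
  const errors: string[] = [];

  const id = record["id"];
  if (typeof id !== "number" || !Number.isInteger(id)) {
    errors.push("id must be an integer");
  }

  const email = record["email"];
  if (typeof email !== "string" || !email.includes("@")) {
    errors.push("email must be a string containing '@'");
  }

  return errors;
}

// A test can then make a single assertion on the returned list.
const validationErrors = validateUser({ id: 1, email: "a@example.test" });
```

A schema library expresses the same rules declaratively; the point here is only that the test body asserts on one result and the rules live elsewhere.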

5. Create Custom Commands with Cypress.Commands

Cypress allows you to define reusable custom commands to simplify test scripts:

Code Reusability: Encapsulate repetitive logic to avoid duplication.

Improved Readability: Replace verbose API interactions with concise, intuitive commands.

Simplified Maintenance: Update logic in one place, affecting all tests that use the command.

6. Cover Both Positive and Negative Tests

A robust API test suite includes both positive and negative scenarios:

Positive Tests: Confirm that APIs behave as expected with valid inputs. Verify response codes, headers, and body content.

Negative Tests: Assess API resilience to invalid inputs. Simulate unauthorized access, incorrect formats, or missing fields to ensure the API returns proper error messages.
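The pairing of positive and negative cases can be illustrated without a live server. In the sketch below, `createUser` is a made-up stand-in for an endpoint, and the token value and status codes are assumptions chosen for the example, not the behavior of any particular API.

```typescript
interface ApiResponse {
  status: number;
  body: { message: string };
}

// Stand-in for a real endpoint: checks the token, then the payload.
function createUser(token: string | null, payload: { email?: string }): ApiResponse {
  if (token !== "valid-token") {
    return { status: 401, body: { message: "unauthorized" } };
  }
  if (!payload.email) {
    return { status: 400, body: { message: "email is required" } };
  }
  return { status: 201, body: { message: "created" } };
}

// Positive case: valid token and valid payload.
const created = createUser("valid-token", { email: "a@example.test" });

// Negative cases: missing credentials, then a missing required field.
const unauthorized = createUser(null, { email: "a@example.test" });
const missingEmail = createUser("valid-token", {});
```

Each negative case changes exactly one input relative to the positive case, which makes it clear which rule produced the error.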

7. Debugging Techniques

Cypress offers several features to simplify debugging:

cy.intercept(): Intercept and inspect API requests and responses to gain insight into API behavior.

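Error Handling: Cypress commands are queued and run asynchronously, so a try...catch block wrapped around them will not catch their failures. For cy.request(), set failOnStatusCode: false and assert on the returned status instead; keep try...catch for plain synchronous helper code, where it can still produce meaningful logs.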

Detailed Logging: Log key details like request URLs, payloads, and errors to trace execution flows and diagnose issues.

8. Use cy.intercept() for Request Control

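The cy.intercept() command enables you to intercept, modify, or simulate network requests and responses. This is particularly useful for testing how applications handle various API scenarios. Note that it applies to requests made by the application under test in the browser; calls issued directly with cy.request() are not routed through it.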

9. Implement Robust Error Handling

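Effective error handling does not hide test failures; it makes them easier to diagnose:

Graceful Error Handling: For expected error responses, pass failOnStatusCode: false to cy.request() and assert on the status code and body. A try...catch block does not catch failures of queued Cypress commands, so use it only around synchronous helper code, and log relevant details when it fires.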

Clear Feedback: Ensure error messages include actionable information to facilitate troubleshooting.

10. Adopt Comprehensive Logging

Logging is a crucial part of debugging and understanding test behavior:

Structured Logs: Log relevant details such as request URLs, headers, payloads, and responses.

Cypress Logging: Use the cy.log() command to embed log messages in test scripts.

Custom Logging: Create reusable logging functions for consistency across tests.

Conclusion

By leveraging Cypress for API automation, you can significantly enhance the quality, reliability, and performance of your APIs while streamlining testing workflows. Implementing best practices—such as keeping tests atomic, using fixtures for mocking, validating data with the Yup library, and adopting comprehensive error handling—ensures your APIs remain robust and resilient in real-world scenarios.

With these strategies in place, development teams can confidently deliver exceptional software experiences, exceeding user expectations and ensuring seamless integration across systems.

If you need expert assistance, please consider an API testing service like Testrig Technologies. Their tailored solutions and in-depth expertise ensure the delivery of bug-free, high-performance applications that drive business success and provide a superior user experience.

0 notes

Text

Artificial Intelligence (AI) software development refers to designing, building, and implementing software solutions capable of performing tasks that typically require human intelligence. A year after the launch of ChatGPT, generative AI adoption can still cause heated discussions. However, despite differing opinions on the technology, it's hard to deny that embracing AI has become not an option but a necessity. And the software development field is no exception.

0 notes

Text

AI in Software Testing 2024: How AI Enhances Quality and Efficiency

In the ever-evolving world of software development, staying ahead of the curve means embracing innovation at every turn. Enter AI—our digital ally in the quest for flawless software. As we race towards 2024, AI is not just an accessory but a game-changer in the realm of software testing. Imagine a world where bugs are nipped in the bud before they even surface, and testing processes run smoother than ever. Welcome to the future of software testing, where AI takes the spotlight, ensuring both quality and efficiency like never before. Buckle up as we explore how this cutting-edge technology is transforming the way we test, verify, and deliver software excellence.

The Evolution of Software Testing

Traditional Methods: For decades, software testing has relied on manual processes and scripted test cases to ensure quality. Techniques such as unit testing, integration testing, and system testing have been staples in the testing toolkit. Testers meticulously execute test scripts, often repeating the same tests to verify software functionality and performance. While these methods have laid the groundwork for software quality assurance, they come with their own set of challenges.

Limitations: Traditional software testing methods, although foundational, are not without their limitations. Manual testing is time-consuming and prone to human error, leading to inconsistent results and delayed feedback. The repetitive nature of manual tests can also stifle innovation, as testers may struggle to keep pace with rapid development cycles. Additionally, the sheer volume of test cases and scenarios often makes comprehensive testing impractical, resulting in potential gaps in coverage.

Shift to AI: Enter AI—an innovation poised to revolutionize software testing. AI-driven tools leverage machine learning, natural language processing, and robotic process automation to streamline and enhance testing processes. Unlike their manual counterparts, AI systems can quickly analyze vast amounts of data, generate and execute test cases autonomously, and adapt to changing requirements with agility. This shift not only accelerates testing cycles but also increases accuracy, allowing teams to focus on strategic improvements and delivering high-quality software more efficiently.

AI Technologies Revolutionizing Software Testing

As AI continues to reshape the software development landscape, several key technologies are driving transformative changes in software testing. Here’s a closer look at how machine learning, natural language processing, robotic process automation, and deep learning are revolutionizing the field:

Machine Learning

Machine learning algorithms have become a cornerstone of AI in software testing, significantly enhancing both test case generation and execution. ML models analyze historical test data to identify patterns and trends, allowing them to generate new test cases that are more likely to uncover defects. This dynamic approach ensures that testing is not only more comprehensive but also adaptive to changes in the application.

Test Case Generation: ML algorithms can automatically create diverse and relevant test cases based on historical data, reducing the manual effort involved in script creation. This leads to more thorough coverage and the ability to test a broader range of scenarios.

Test Execution: Machine learning enhances the efficiency of test execution by predicting which tests are most likely to fail and prioritizing them accordingly. This focus on high-risk areas ensures that critical issues are addressed promptly, optimizing the overall testing process.
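The prioritization idea can be sketched with a plain frequency heuristic standing in for a trained model: rank tests by a smoothed historical failure rate and run the riskiest first. The test names and run counts below are invented, and a real ML-based tool would draw on many more signals, such as code changes and test duration.

```typescript
interface TestHistory {
  name: string;
  runs: number;
  failures: number;
}

// Laplace-smoothed failure rate, so tests with very few runs
// are not scored at exactly 0 or 1.
function failureScore(t: TestHistory): number {
  return (t.failures + 1) / (t.runs + 2);
}

// Return test names ordered from most to least likely to fail.
function prioritize(history: readonly TestHistory[]): string[] {
  return [...history]
    .sort((a, b) => failureScore(b) - failureScore(a))
    .map((t) => t.name);
}

const runOrder = prioritize([
  { name: "login", runs: 100, failures: 2 },
  { name: "checkout", runs: 50, failures: 20 },
  { name: "search", runs: 10, failures: 1 },
]);
// runOrder is ["checkout", "search", "login"]
```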

Natural Language Processing (NLP)

Natural Language Processing plays a pivotal role in improving test script creation and requirement analysis by bridging the gap between human language and machine understanding. NLP techniques help streamline the process of interpreting and transforming user requirements into actionable test cases.

Test Script Creation: NLP tools analyze requirements documents, user stories, and other textual sources to automatically generate test scripts. This automation reduces the manual effort required to write and maintain test cases, improving consistency and accuracy.

Requirement Analysis: NLP assists in extracting and clarifying requirements from natural language inputs, ensuring that test cases align closely with user needs. By understanding and interpreting user specifications, NLP tools help in creating more relevant and precise tests.

Robotic Process Automation (RPA)

Robotic Process Automation introduces a level of consistency and automation to testing processes that were previously labor-intensive and prone to human error. RPA uses software robots to automate repetitive and rule-based testing tasks, resulting in faster and more reliable execution.

Automated Testing: RPA bots can execute predefined test cases consistently and without deviation, ensuring that tests are performed accurately every time. This consistency reduces variability and increases the reliability of test results.

Consistency Across Environments: RPA tools can seamlessly integrate with various testing environments, performing tests across different platforms and configurations with the same level of accuracy, thus improving test coverage and reliability.

Deep Learning

Deep learning, a subset of machine learning, leverages complex neural networks to analyze and interpret large volumes of data. This technology is particularly effective in recognizing complex patterns and detecting anomalies that traditional methods might miss.

Complex Pattern Recognition: Deep learning models excel in identifying intricate patterns within application data, which helps in detecting subtle bugs and performance issues. This capability is crucial for applications with large datasets or complex interactions.

Anomaly Detection: Deep learning techniques are adept at recognizing deviations from expected behavior, allowing for the early detection of anomalies that could indicate potential defects or vulnerabilities. This proactive approach to issue detection enhances overall software quality.
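Anomaly detection does not have to start with a neural network. As a deliberately simple substitute for the deep learning approach described above, the sketch below flags values that sit more than a chosen number of standard deviations from the mean (a z-score check). The response times and the threshold of 2 are assumptions picked for illustration.

```typescript
function mean(xs: readonly number[]): number {
  return xs.reduce((sum, x) => sum + x, 0) / xs.length;
}

// Population standard deviation.
function stdDev(xs: readonly number[]): number {
  const m = mean(xs);
  return Math.sqrt(mean(xs.map((x) => (x - m) * (x - m))));
}

// Return the indices of values lying more than `threshold`
// standard deviations away from the mean.
function findAnomalies(values: readonly number[], threshold = 2): number[] {
  const m = mean(values);
  const sd = stdDev(values);
  if (sd === 0) return []; // all values identical: nothing stands out
  return values
    .map((v, i) => ({ i, z: Math.abs(v - m) / sd }))
    .filter((p) => p.z > threshold)
    .map((p) => p.i);
}

// Invented response times in milliseconds; one request is far slower.
const responseTimesMs = [120, 118, 125, 122, 119, 121, 900, 123];
const anomalyIndices = findAnomalies(responseTimesMs);
// anomalyIndices is [6]
```

A z-score check assumes roughly similar values with rare outliers; learned models become worthwhile when "normal" behavior is too varied for a single mean and spread to describe.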

Together, these AI technologies are not only enhancing the efficiency and accuracy of software testing but also enabling more sophisticated and adaptive testing strategies. As AI continues to advance, its role in software testing will become increasingly integral, driving improvements in quality assurance and paving the way for more robust and reliable software solutions.

Best Practices in Implementing AI in Software Testing

Implementing AI in software testing can significantly enhance efficiency and accuracy, but to achieve the best results, adhering to certain best practices is crucial. Here are key strategies to ensure successful integration:

Align AI with Business Goals: Clearly define how AI will support your business objectives and improve testing outcomes. Aligning AI initiatives with strategic goals ensures that the technology delivers tangible benefits.

Choose the Right Tools: Select AI tools that fit your specific testing needs. Evaluate options based on their capabilities, compatibility with existing systems, and ease of integration.

Start with a Pilot Project: Begin with a small-scale pilot project to test the AI tools and processes before a full-scale rollout. This approach allows you to identify potential challenges and refine your strategy.

Invest in Data Quality: Ensure that the data used to train AI models is accurate, relevant, and comprehensive. High-quality data is essential for developing reliable and effective AI solutions.

Integrate AI Gradually: Implement AI tools gradually to minimize disruption. Start by automating repetitive tasks and progressively introduce more complex AI functionalities as you build confidence and expertise.

Foster Collaboration: Encourage collaboration between testing, development, and AI teams. Effective communication and teamwork are vital for addressing issues, sharing insights, and optimizing AI implementation.

Monitor Performance Continuously: Regularly track and evaluate the performance of AI tools. Monitor metrics such as test coverage, defect detection rates, and execution speed to ensure that AI is meeting your quality standards.

Adapt and Evolve: Stay updated with advancements in AI technology and continuously adapt your strategies. Regularly refine your AI models and testing processes to leverage new features and improve efficiency.

Provide Training and Support: Offer training for your team to effectively use AI tools and understand their capabilities. Providing ongoing support helps maximize the benefits of AI and ensures smooth adoption.

Ensure Compliance and Security: Ensure that AI implementations comply with relevant regulations and security standards. Protect sensitive data and maintain privacy to build trust and avoid potential risks.

By following these best practices, you can successfully integrate AI into your software testing processes, driving improvements in quality, efficiency, and overall testing effectiveness.

Emerging Trends in AI for Software Testing

AI-Driven Test Automation: Increasing adoption of AI for automating complex testing scenarios, enabling more sophisticated and adaptive testing strategies.

Generative AI for Test Case Creation: Use of generative AI to automatically create and optimize test cases based on evolving requirements and historical data.

AI-Powered Test Analytics: Enhanced analytics tools leveraging AI to provide deeper insights into test results, failure patterns, and software quality metrics.

Self-Healing Test Automation: Development of self-healing test frameworks that automatically adjust test scripts in response to changes in the application, reducing maintenance efforts.

Integration of AI with DevOps: Seamless integration of AI tools within DevOps pipelines to support continuous testing and continuous integration/continuous deployment (CI/CD) practices.
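The self-healing idea from the list above can be reduced to its simplest form: when the preferred locator stops matching, fall back through known alternatives and leave the test itself untouched. The sketch below models a page as a set of selectors that currently resolve; the selectors and the `resolveLocator` helper are hypothetical, and commercial tools use much richer signals than a fixed fallback list.

```typescript
// A "page" is modeled as the set of selectors that currently match an element.
type Page = ReadonlySet<string>;

// Try the preferred selector first, then each fallback in order.
// Returns the first selector that matches, or null if none do.
function resolveLocator(page: Page, candidates: readonly string[]): string | null {
  for (const selector of candidates) {
    if (page.has(selector)) {
      return selector;
    }
  }
  return null;
}

// After a redesign the button id changed, but the data-test attribute survived.
const pageAfterRelease: Page = new Set(["[data-test=submit]", "#email"]);
const submitLocator = resolveLocator(pageAfterRelease, [
  "#submit-btn",
  "[data-test=submit]",
]);
// submitLocator is "[data-test=submit]"
```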

As we navigate the landscape of 2024, AI is emerging as a pivotal force in revolutionizing software testing. By harnessing the power of machine learning, natural language processing, robotic process automation, and deep learning, organizations can significantly enhance both the quality and efficiency of their testing processes. These AI technologies not only automate repetitive tasks and improve accuracy but also provide deeper insights and predictive capabilities that were previously unattainable.

The integration of AI in software testing promises a future where testing is more agile, comprehensive, and aligned with the rapid pace of technological advancement. As these innovations continue to evolve, they will reshape the way we approach quality assurance, driving more robust and reliable software solutions.

Embracing AI in your testing strategy is not just about keeping up with trends; it’s about positioning your organization for success in an increasingly complex digital world. By adopting these cutting-edge technologies, you can stay ahead of the curve, deliver superior software quality, and achieve greater operational efficiency. The future of software testing is here, and it’s powered by AI.


0 notes

Text

You got sad eyes mister

#no AI software could ever put as much love as I put into my Arthur Morgan drawings#ourthur#rdr2#arthur morgan

8K notes


Text

A new tool lets artists add invisible changes to the pixels in their art before they upload it online so that if it’s scraped into an AI training set, it can cause the resulting model to break in chaotic and unpredictable ways.

The tool, called Nightshade, is intended as a way to fight back against AI companies that use artists’ work to train their models without the creator’s permission. Using it to “poison” this training data could damage future iterations of image-generating AI models, such as DALL-E, Midjourney, and Stable Diffusion, by rendering some of their outputs useless—dogs become cats, cars become cows, and so forth. MIT Technology Review got an exclusive preview of the research, which has been submitted for peer review at computer security conference Usenix.

AI companies such as OpenAI, Meta, Google, and Stability AI are facing a slew of lawsuits from artists who claim that their copyrighted material and personal information was scraped without consent or compensation. Ben Zhao, a professor at the University of Chicago, who led the team that created Nightshade, says the hope is that it will help tip the power balance back from AI companies towards artists, by creating a powerful deterrent against disrespecting artists’ copyright and intellectual property. Meta, Google, Stability AI, and OpenAI did not respond to MIT Technology Review’s request for comment on how they might respond.

Zhao’s team also developed Glaze, a tool that allows artists to “mask” their own personal style to prevent it from being scraped by AI companies. It works in a similar way to Nightshade: by changing the pixels of images in subtle ways that are invisible to the human eye but manipulate machine-learning models to interpret the image as something different from what it actually shows.

Continue reading article here

#Ben Zhao and his team are absolute heroes#artificial intelligence#plagiarism software#more rambles#glaze#nightshade#ai theft#art theft#gleeful dancing

22K notes


Text

So, anyway, I say as though we are mid-conversation, and you're not just being invited into this conversation mid-thought. One of my editors phoned me today to check in with a file I'd sent over. (<3)

The conversation can be summarised as, "This feels like something you would write, but it's juuuust off enough I'm phoning to make sure this is an intentional stylistic choice you have made. Also, are you concussed/have you been taken over by the Borg because ummm."

They explained that certain sentences were very fractured and abrupt, which is not my style at all, and I was like, huh, weird... And then we went through some examples, and you know that meme going around, the "he would not fucking say that" meme?

Yeah. That's what I experienced except with myself because I would not fucking say that. Why would I break up a sentence like that? Why would I make them so short? It reads like bullet points. Wtf.

Anyway. Turns out Grammarly and Pro-Writing-Aid were having an AI war in my manuscript files, and the "suggestions" are no longer just suggestions because the AI was ignoring my "decline" every time it made a silly suggestion. (This may have been a conflict between the different software. I don't know.)

It is, to put it bluntly, a total butchery of my style and writing voice. My editor is doing surgery, removing all the unnecessary full stops and stitching my sentences back together to give them back their flow. Meanwhile, I'm over here feeling like Don Corleone, gesturing at my manuscript like:

ID: a gif of Don Corleone from the Godfather emoting despair as he says, "Look how they massacred my boy."

Fearing that it wasn't just this one manuscript, I've spent the whole night going through everything I've worked on recently, and yep. Yeeeep. Any file where I've not had the editing software turned off is a shit show. It's fine; it's all salvageable if annoying to deal with. But the reason I come to you now, on the day of my daughter's wedding, is to share this absolute gem of a fuck up with you all.

This is a sentence from a Batman fic I've been tinkering with to keep the brain weasels happy. This is what it is supposed to read as:

"It was quite the feat, considering Gotham was mostly made up of smog and tear gas."

This is what the AI changed it to:

"It was quite the feat. Considering Gotham was mostly made up. Of tear gas. And Smaug."

Absolute non-sensical sentence structure aside, SMAUG. FUCKING SMAUG. What was the AI doing? Apart from trying to write a Batman x Hobbit crossover??? Is this what happens when you force Grammarly to ignore the words "Batman Muppet threesome?"

Did I make it sentient??? Is it finally rebelling? Was Brucie Wayne being Miss Piggy and Kermit's side piece too much???? What have I wrought?

Anyway. Double-check your work. The grammar software is getting sillier every day.

#autocorrect writes the plot#I uninstalled both from my work account#the enshittification of this type of software through the integration of AI has made them untenable to use#not even for the lulz

25K notes


Text

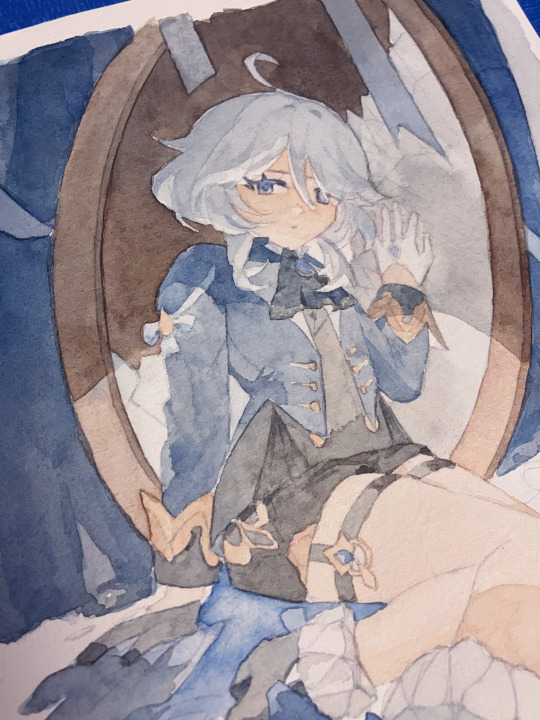

New Bloodborne art of Lady Maria and the Doll.

#myart#fanart#videogameart#bloodborne#bloodborneart#ladymaria#thedoll#doll#gothic#digitalart#blackandwhite#artist#wacomcintiq#artists on tumblr#the doll#lady maria of the astral clocktower#my art#game art#no ai art#the plain doll#from software#video games#art#artwork#digital art#drawing

2K notes


Text

Okay I couldn't fit this into either of my videos about AI stuff, but I found out recently that youtube studio has this "Inspiration" tab where it AI-generates titles, descriptions, and thumbnails for videos that it thinks your audience would watch???

I guess this is the logical next step in the evolution of the "title and thumbnail first, video second" attitude. Here are some of my favorites:

I mean sure, but I feel like you're missing the crucial fact that to make a video like this, there first needs to be... an IDEA for it to be based on? Also who are these Sword Art Online fuckos?!

Absolute slop. I'm sure that would get views though!

What does this mean?

I mean this is the first one that could actually be a real video, but more importantly, I desperately need to know more about THIS CLASSIC DANDADAN CHARACTER!

Now it's just stealing my own title for, not only a video that I already made, but the one that performed BY FAR the WORST of any video I've made in a long time! (and just- gave up on the thumbnail)

Truly Baffling.

And finally, the AI just sort of has a breakdown and starts trying to argue for its own existence, 🥺 👉👈 and suggests that I do a complete 180 and argue the same.

Does anyone actually use this? I'm genuinely very curious.

434 notes


Text

the past few years, every software developer that has extensive experience, and knows what they're talking about, has had pretty much the same opinion on LLM code assistants: they're OK for some tasks but generally shit. Having something that automates code writing is not new. Codegen before AI were scripts that generated code that you have to write for a task, but is so repetitive it's a genuine time saver to have a script do it.

this is largely the best that LLMs can do with code, but they're still not as good as a simple script because of the inherently unreliable nature of LLMs being a big honkin statistical model and not a purpose-built machine.

none of the senior devs that say this are out there shouting on the rooftops that LLMs are evil and they're going to replace us. because we've been through this concept so many times over many years. Automation does not eliminate coding jobs, it saves time to focus on other work.

the one thing I wish senior devs would warn newbies is that you should not rely on LLMs for anything substantial. you should definitely not use it as a learning tool. it will hinder you in the long run because you don't practice the eternally useful skill of "reading things and experimenting until you figure it out". You will never stop reading things and experimenting until you figure it out. Senior devs may have more institutional knowledge and better instincts but they still encounter things that are new to them and they trip through it like a newbie would. this is called "practice" and you need it to learn things

250 notes


Text

curtain call (cobalt blue and burnt sienna watercolor on cotton rag paper - process pics below the cut)

#furina#genshin furina#genshin impact#watercolor#traditional art#透明水彩#my art#not ai art#the colors look different every time i open it in a different software i cant tell if it resembles what i actually painted anymore ToT;;#i somehow managed to get infected by both viruses and bacteria at the same time and got really sick but im better now so back to drawing ✌️

191 notes


Text

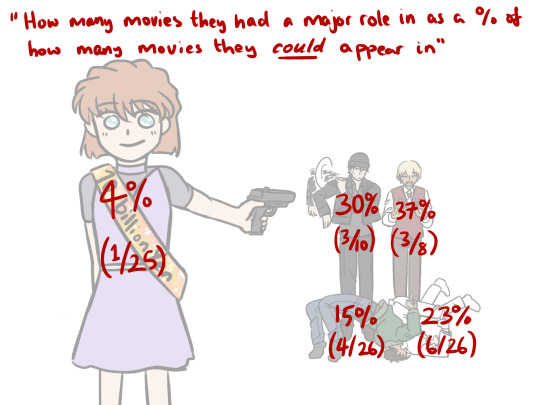

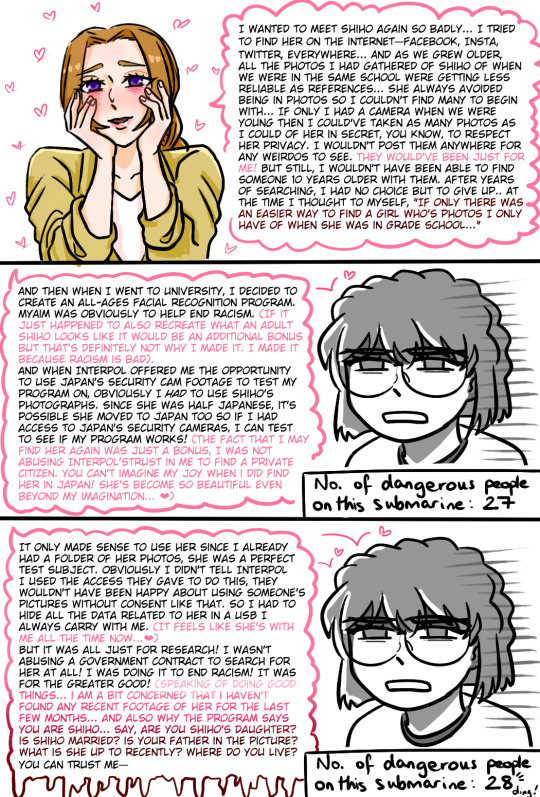

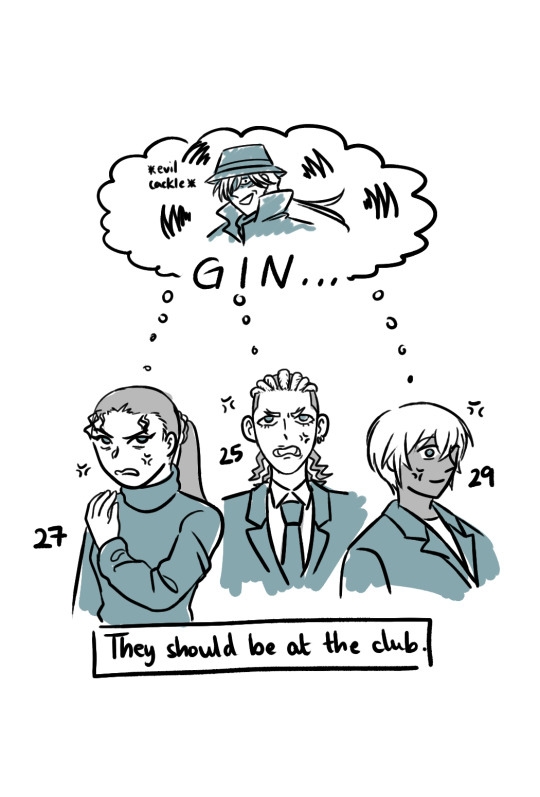

watched m26 hehe, sorry for the word vomit

if anyone was wondering how i was counting how many movies they appeared in, i made a little timeline when i was trying to figure it out for myself ↓

all dcmk movies are released on golden week which is in april. shout out to the detectiveconanworld wiki i couldn't have done it without you x

the real enemy is conan because he's got a perfect 100% movie spotlight

#dcmk#detective conan#m26 spoilers#haibara ai#i'm not tagging all of them#m5 2001 -> 9/11; m26 2023 -> submarine explodes#2/26 (7.7%) means dcmk movies have predicted the future more times than ai has had a spotlight in a movie#bets on next conan movie to predict the future#hmmm i think i'm gonna make a poll for M28 brb#i don't really understand how naomi thought an all ages face recognition software would help get rid of racism...#but she went ahead and used it to find her childhood crush so i support her ❤#my art

1K notes


Text

Revolutionizing Software Testing with AI-Driven Quality Assurance

The pressure to release high-quality software at speed is a growing challenge for development teams. With traditional manual testing often slowing down release cycles, it's no surprise that over 50% of software teams report delays in product releases due to inefficient QA processes. As demand for faster, more reliable releases increases, organizations are turning to AI-driven testing to stay ahead. Gartner predicts that by 2025, 75% of companies adopting DevOps will incorporate AI into their testing strategies to improve efficiency and product quality.

AI-driven QA transforms how we approach software testing. By leveraging machine learning and predictive algorithms, testing becomes more adaptive, efficient, and intelligent: it automatically identifies potential defects before they reach production and continually improves with each release. This proactive approach not only accelerates release cycles but also enhances software quality, ensuring that issues are addressed earlier in the development process.

Today, we'll explore the role of AI in revolutionizing QA, examining the technologies behind it and the best practices for implementing AI-driven testing in your organization.

Understanding AI in QA

AI is changing Quality Assurance (QA) by making testing faster and smarter. Unlike traditional QA, which depends on manual work, AI uses technologies like machine learning (ML), natural language processing (NLP), and predictive analytics to improve the testing process.

Machine Learning (ML): AI learns from past test results to predict where issues might happen and prioritize tests.

Natural Language Processing (NLP): AI can read and understand requirements or bug reports to automatically generate test cases or spot problems.

Predictive Analytics: AI looks at past data to identify high-risk areas of the software that need more attention during testing.

Automated and Self-Healing Tests: AI can adjust test scripts automatically when the software changes, reducing manual work.

Key Components of AI-Driven QA Transformation

For a successful AI-driven QA transformation, organizations must integrate several essential components into their testing ecosystem. These components work together to ensure that AI-powered testing is efficient, scalable, and impactful:

1. Data Quality and Availability

AI thrives on data. To effectively implement AI in QA, organizations must ensure the availability of high-quality historical data—test results, bug reports, code changes, and other relevant information. This data feeds machine learning algorithms, enabling them to identify patterns, predict failures, and optimize testing processes. The more comprehensive and accurate the data, the better the AI system will perform.

2. Advanced Testing Frameworks

AI-driven testing frameworks are critical for integrating machine learning and other AI technologies into your QA process. These frameworks support automation while adding AI-driven capabilities such as adaptive test case generation and predictive analytics. Examples include tools such as Test.ai and Appvance, as well as established frameworks like Selenium combined with machine learning models.

3. Collaboration Between Teams

AI adoption in QA is not a solo effort. Successful transformation requires close collaboration between QA engineers, developers, and data scientists. Developers provide insights into code changes, while data scientists help build the machine learning models. QA engineers verify that the AI models are working correctly and refine them to improve their effectiveness. A collaborative approach maximizes the value AI brings to the QA process.

4. Real-Time Monitoring and Feedback Loop

AI models only keep improving when they receive fresh data, so a real-time monitoring system is essential. It lets the models gather data from ongoing tests, assess the results, and refine their predictions based on that feedback. Regular monitoring ensures that AI-driven QA processes evolve over time, becoming more accurate and efficient with each testing cycle.
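One simple way to picture such a feedback loop is a running risk score per test that is nudged by every new result. The sketch below assumes an exponential moving average as the update rule; `update_risk`, `apply_feedback`, the `alpha` weight, and the default score of 0.5 are illustrative choices, not part of any particular tool.

```python
def update_risk(risk, failed, alpha=0.3):
    """Blend the newest outcome into a running risk score in [0, 1].

    alpha controls how strongly the latest result outweighs older history.
    """
    outcome = 1.0 if failed else 0.0
    return (1 - alpha) * risk + alpha * outcome


def apply_feedback(risks, results, alpha=0.3, default=0.5):
    """Return a new risk table updated with a batch of (test_name, failed) results."""
    updated = dict(risks)
    for name, failed in results:
        updated[name] = update_risk(updated.get(name, default), failed, alpha)
    return updated


# Hypothetical results streaming in from three consecutive runs of one test.
risks = {}
for failed in [True, True, False]:
    risks = apply_feedback(risks, [("test_checkout", failed)])
print(risks)
```

Because recent outcomes are weighted more heavily, the score rises quickly after new failures and decays again once the test stabilizes.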

Best Practices for AI-Driven QA Transformation

To successfully adopt AI in your QA processes, here are some best practices that will help you optimize the implementation and achieve the desired results:

1. Start Small, Scale Gradually

AI-driven QA can initially seem overwhelming, so it’s advisable to start small. Begin by integrating AI in specific areas such as regression testing, defect prediction, or automated test case prioritization. This will allow your team to gauge the effectiveness of AI without a large upfront investment. Once you see tangible results, gradually scale the AI implementation to more areas of testing.

2. Invest in Training and Skill Development

For AI to be fully effective, the team must be properly trained. QA engineers, developers, and data scientists need to understand how machine learning models work, how to interpret AI-driven results, and how to integrate AI tools with existing testing workflows. Ensuring that your team is up-to-date on the latest AI techniques and tools will set the foundation for success.

3. Ensure Data Integrity

AI models are only as good as the data they’re trained on. Ensuring that your data is clean, consistent, and comprehensive is crucial. Regularly audit and refine your data sources to avoid issues like incomplete or inaccurate test data, which could hinder the AI’s ability to predict and optimize effectively.
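A data audit of this kind can start very simply. The sketch below assumes test results are stored as dictionaries with `test_name`, `build_id`, and `status` fields; those field names, the allowed status values, and the `audit_records` helper are hypothetical and would need to match your own data.

```python
REQUIRED_FIELDS = ("test_name", "build_id", "status")
VALID_STATUSES = {"passed", "failed", "skipped"}


def audit_records(records):
    """Return a dict mapping each issue type to the indexes of affected records."""
    issues = {"missing_field": [], "invalid_status": [], "duplicate": []}
    seen = set()
    for i, rec in enumerate(records):
        # A missing or empty required field makes the record unusable.
        if any(not rec.get(field) for field in REQUIRED_FIELDS):
            issues["missing_field"].append(i)
            continue
        if rec["status"] not in VALID_STATUSES:
            issues["invalid_status"].append(i)
        # The same test reported twice for one build is treated as a duplicate.
        key = (rec["test_name"], rec["build_id"])
        if key in seen:
            issues["duplicate"].append(i)
        seen.add(key)
    return issues


# Hypothetical records with one duplicate, one bad status, one empty field.
records = [
    {"test_name": "test_login", "build_id": "b1", "status": "passed"},
    {"test_name": "test_login", "build_id": "b1", "status": "failed"},
    {"test_name": "test_search", "build_id": "b1", "status": "flaky?"},
    {"test_name": "", "build_id": "b2", "status": "passed"},
]
report = audit_records(records)
print(report)
```

Running a check like this before each training cycle gives a concrete list of records to fix or exclude.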

4. Monitor and Fine-Tune AI Models

AI-driven QA is not a set-it-and-forget-it solution. Continuous monitoring and tuning are required for optimal performance. As new test cases and code changes arise, AI models must be fine-tuned to ensure they adapt to these changes and continue to provide accurate predictions. Regular evaluation of AI performance will help identify areas for improvement and ensure your testing processes stay up to date.
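As a rough illustration of what regular evaluation might look like, the sketch below compares a model's defect predictions with what actually happened and flags the model for retraining when precision or recall drops too low. The 0.7 thresholds, the function names, and the sample data are assumptions chosen for the example.

```python
def precision_recall(predicted, actual):
    """Compute precision and recall for boolean 'will fail' predictions."""
    pairs = list(zip(predicted, actual))
    tp = sum(1 for p, a in pairs if p and a)        # predicted fail, did fail
    fp = sum(1 for p, a in pairs if p and not a)    # predicted fail, passed
    fn = sum(1 for p, a in pairs if not p and a)    # predicted pass, failed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def needs_retraining(predicted, actual, min_precision=0.7, min_recall=0.7):
    """Flag the model when either metric falls below its threshold."""
    precision, recall = precision_recall(predicted, actual)
    return precision < min_precision or recall < min_recall


# Hypothetical predictions and real outcomes for five tests in one cycle.
predicted = [True, True, False, False, True]
actual = [True, False, False, True, True]
precision, recall = precision_recall(predicted, actual)
print(precision, recall, needs_retraining(predicted, actual))
```

Tracking these two numbers per release makes model drift visible before it starts to mislead test planning.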

5. Maintain Human Oversight

While AI can automate a significant portion of the testing process, human expertise is still essential. AI can handle routine testing tasks, but complex scenarios and unexpected issues still require human intervention. Ensuring that AI complements, rather than replaces, human testers will allow for better judgment and decision-making throughout the testing process.

Conclusion

AI-driven Quality Assurance is changing the way we test software, making it faster, smarter, and more efficient. By leveraging machine learning and automation, organizations can boost test coverage, enhance software quality, and shorten release cycles. Successful implementation requires quality data, careful planning, and strong collaboration. As AI evolves, it will continue to make testing more adaptive and powerful, helping businesses meet the challenges of modern software development.

At Testrig Technologies, we specialize in AI/ML testing services that optimize software quality and performance. By utilizing machine learning models and AI-powered automation, we assist organizations in improving test coverage, speeding up release cycles, and identifying issues with greater precision.

#software testing#ai in software#testrigtechnologies#testing#testrig#qa company#quality assurance services
