#Acquisition and Signal Conditioning

The Essential Guide to Data Acquisition and Signal Conditioning

In today’s data-driven world, industries rely on accurate and reliable data to make informed decisions. Whether in manufacturing, healthcare, or research, data acquisition (DAQ) systems play a crucial role in collecting, analyzing, and interpreting signals from various sources. However, raw signals are often noisy or distorted, so signal conditioning is needed to improve their accuracy and reliability. This guide covers the fundamentals of data acquisition and signal conditioning, why they matter, and how they improve overall system performance.

What is Data Acquisition (DAQ)?

Data acquisition (DAQ) is the process of collecting and measuring physical or electrical signals from real-world sources such as temperature sensors, pressure transducers, and strain gauges. The primary components of a DAQ system include:

Sensors and Transducers – Convert physical parameters into electrical signals.

Signal Conditioning Units – Process signals to make them suitable for digitization.

Analog-to-Digital Converters (ADC) – Convert analog signals into digital data.

Data Processing and Storage – Processed data is stored or transmitted for analysis.

The Importance of Signal Conditioning

Raw signals from sensors often contain noise, interference, and unwanted fluctuations. Signal conditioning is the process of modifying these signals to improve their quality and accuracy before digital conversion. Key functions of signal conditioning include:

Amplification – Boosts weak signals for better detection.

Filtering – Removes unwanted noise and interference.

Isolation – Protects DAQ systems from high voltage spikes and electrical interference.

Linearization – Corrects non-linear responses from certain sensors.

Multiplexing – Routes multiple input signals, one at a time, through a single channel (typically one shared ADC).
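
Multiplexing trades speed for channel count. Below is a minimal sketch, assuming a multiplexed DAQ in which one ADC is switched among its channels in a fixed round-robin order. Simultaneous-sampling devices with one ADC per channel behave differently. Under that assumption, each channel receives only the aggregate sample rate divided by the number of channels. The function names and the 100 kS/s, 8-channel figures are hypothetical.

```python
def per_channel_rate(aggregate_rate_hz: float, num_channels: int) -> float:
    """Sample rate each channel receives when one ADC is shared round-robin."""
    if num_channels <= 0:
        raise ValueError("num_channels must be positive")
    return aggregate_rate_hz / num_channels


def scan_order(num_channels: int, num_conversions: int) -> list:
    """Channel the multiplexer selects for each successive ADC conversion."""
    return [i % num_channels for i in range(num_conversions)]


if __name__ == "__main__":
    # Hypothetical device: 100 kS/s aggregate rate shared by 8 channels.
    print(per_channel_rate(100_000, 8))  # 12500.0 samples per second per channel
    print(scan_order(3, 7))              # [0, 1, 2, 0, 1, 2, 0]
```

Because the channels are converted one after another, samples on neighboring channels are also offset in time by one conversion period (1 / aggregate rate). This matters when comparing fast-changing signals across channels.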

Types of Signal Conditioning Techniques

Different applications require different signal conditioning techniques to ensure high-quality data collection. Some of the most common techniques include:

1. Amplification

Amplifiers enhance low-voltage signals from sensors, making them easier to process. This is particularly useful in applications where signals are weak, such as thermocouple readings or biomedical sensors.
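
The benefit of amplification comes down to how many ADC codes a signal actually uses. Thermocouple outputs, for example, change by only tens of microvolts per degree. The sketch below assumes three things, all hypothetical: an amplifier with a few selectable gains, a sensor with a 40 mV full-scale output, and a 12-bit ADC with a 0–5 V input range. It picks the largest gain that does not clip, then compares the smallest resolvable sensor-side step with and without gain.

```python
def choose_gain(available_gains, sensor_full_scale_v, adc_range_v):
    """Pick the largest available gain that keeps the amplified signal within the ADC range."""
    usable = [g for g in available_gains if g * sensor_full_scale_v <= adc_range_v]
    if not usable:
        raise ValueError("sensor output exceeds the ADC range even at the lowest gain")
    return max(usable)


def input_referred_lsb(adc_range_v: float, bits: int, gain: float) -> float:
    """Smallest sensor-side voltage change the ADC can resolve after amplification."""
    return adc_range_v / (2 ** bits) / gain


if __name__ == "__main__":
    gains = [1, 10, 100, 1000]  # hypothetical programmable-gain settings
    sensor_fs = 0.040           # hypothetical sensor full-scale output: 40 mV
    adc_range = 5.0             # hypothetical unipolar 0-5 V, 12-bit ADC
    g = choose_gain(gains, sensor_fs, adc_range)
    print(g)                                     # 100
    print(input_referred_lsb(adc_range, 12, 1))  # about 1.22 mV per code without gain
    print(input_referred_lsb(adc_range, 12, g))  # about 12.2 µV per code with gain 100
```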

2. Filtering

Filters remove unwanted frequencies and noise from signals. Common filter types include:

Low-pass filters – Remove high-frequency noise while preserving useful low-frequency components.

High-pass filters – Eliminate low-frequency disturbances such as DC offsets.

Band-pass filters – Allow only a specific range of frequencies to pass through.
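
The three filter types can be illustrated in software. The sketch uses the standard first-order discrete RC approximations: a low-pass, a high-pass, and a band-pass built by cascading the two. It is only a digital illustration. It does not replace the analog anti-aliasing low-pass filter that must act on the signal before the ADC. The sample rate, cutoff frequencies, and test signals are hypothetical.

```python
import math


def _rc(cutoff_hz):
    """Time constant of an RC stage with the given nominal cutoff frequency."""
    return 1.0 / (2.0 * math.pi * cutoff_hz)


def low_pass(samples, sample_rate_hz, cutoff_hz):
    """First-order IIR low-pass: smooths out content above cutoff_hz."""
    dt = 1.0 / sample_rate_hz
    alpha = dt / (_rc(cutoff_hz) + dt)
    out = [samples[0]]
    for x in samples[1:]:
        out.append(out[-1] + alpha * (x - out[-1]))
    return out


def high_pass(samples, sample_rate_hz, cutoff_hz):
    """First-order IIR high-pass: removes DC offset and slow drift below cutoff_hz."""
    dt = 1.0 / sample_rate_hz
    rc = _rc(cutoff_hz)
    beta = rc / (rc + dt)
    out = [samples[0]]
    for prev_x, x in zip(samples, samples[1:]):
        out.append(beta * (out[-1] + x - prev_x))
    return out


def band_pass(samples, sample_rate_hz, low_cut_hz, high_cut_hz):
    """Keep roughly low_cut_hz..high_cut_hz by cascading a high-pass and a low-pass."""
    return low_pass(high_pass(samples, sample_rate_hz, low_cut_hz), sample_rate_hz, high_cut_hz)


if __name__ == "__main__":
    fs = 1000.0                               # hypothetical 1 kHz sample rate
    dc_offset = [2.5] * 200                   # constant 2.5 V offset
    buzz = [(-1.0) ** n for n in range(200)]  # alternating, highest-frequency noise
    print(high_pass(dc_offset, fs, 10.0)[-1])  # decays toward 0
    print(low_pass(buzz, fs, 10.0)[-1])        # strongly attenuated
```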

3. Isolation

Isolation prevents interference and protects sensitive components from high voltage or ground loops. Optical isolation and transformer isolation are commonly used to enhance safety and performance.

4. Linearization

Some sensors, like thermocouples, produce non-linear outputs. Linearization techniques correct these deviations to ensure accurate measurements.
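
One common linearization approach is a lookup table. It stores calibration points and interpolates linearly between them. The table below is hypothetical and purely illustrative, not a real sensor curve. In practice, thermocouple linearization usually relies on published reference tables or polynomials, such as the NIST ITS-90 thermocouple data, together with cold-junction compensation, which this sketch omits.

```python
from bisect import bisect_right

# Hypothetical calibration table: sensor output (mV) -> true temperature (°C).
# Illustrative values only; a real sensor needs its own reference data.
CAL_MV = [0.0, 2.0, 4.5, 7.5, 11.0]
CAL_DEGC = [0.0, 50.0, 100.0, 150.0, 200.0]


def linearize(reading_mv, xs=CAL_MV, ys=CAL_DEGC):
    """Convert a non-linear sensor reading to engineering units by piecewise-linear interpolation."""
    if not xs[0] <= reading_mv <= xs[-1]:
        raise ValueError("reading outside the calibrated range")
    i = bisect_right(xs, reading_mv) - 1  # index of the segment's lower calibration point
    if i == len(xs) - 1:                  # reading equals the last calibration point
        return ys[-1]
    frac = (reading_mv - xs[i]) / (xs[i + 1] - xs[i])
    return ys[i] + frac * (ys[i + 1] - ys[i])


if __name__ == "__main__":
    print(linearize(3.25))  # 75.0, halfway between the 2.0 mV and 4.5 mV points
```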

Choosing the Right DAQ System

Selecting the right data acquisition system depends on factors such as:

Type of Sensors – Ensure compatibility with input signals.

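Sampling Rate – The number of samples acquired per second. To capture a signal without aliasing, it must exceed twice the highest frequency of interest (the Nyquist criterion).

Resolution – The number of bits the ADC uses. An N-bit converter divides its input range into 2^N levels, so higher resolution captures smaller changes in the signal.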

Connectivity Options – USB, Ethernet, or wireless connectivity for seamless data transfer.

Environmental Conditions – Consider factors such as temperature, humidity, and vibration resistance.
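
Sampling rate and resolution reduce to two short formulas. The sketch below assumes an ideal unipolar ADC with a hypothetical 0–10 V input range. The step between adjacent codes is range / 2^N. The sample rate must exceed twice the highest signal frequency, and practical systems usually sample well above that minimum. The function names are illustrative.

```python
def lsb_size(input_range_v: float, bits: int) -> float:
    """Voltage step between adjacent codes of an ideal N-bit ADC."""
    return input_range_v / (2 ** bits)


def min_sample_rate(max_signal_hz: float) -> float:
    """Nyquist limit: the sample rate must exceed this value to avoid aliasing."""
    return 2.0 * max_signal_hz


def quantize(voltage: float, input_range_v: float, bits: int) -> int:
    """Ideal unipolar ADC: map a voltage in [0, input_range_v) to an integer code."""
    code = int(voltage / lsb_size(input_range_v, bits))
    return max(0, min(code, 2 ** bits - 1))  # clip to the valid code range


if __name__ == "__main__":
    # Hypothetical 0-10 V input range.
    print(lsb_size(10.0, 12))       # 0.00244140625 V per code at 12 bits
    print(lsb_size(10.0, 16))       # 0.000152587890625 V per code at 16 bits
    print(min_sample_rate(1000))    # 2000.0: sample faster than 2 kS/s for a 1 kHz signal
    print(quantize(2.5, 10.0, 12))  # 1024
```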

Applications of DAQ and Signal Conditioning

These technologies are used across various industries, including:

Manufacturing – Monitoring equipment performance and process control.

Automotive – Testing vehicle components and performance analysis.

Medical and Healthcare – Biomedical signal monitoring and diagnostics.

Aerospace – Aircraft testing and environmental monitoring.

Research and Development – Experimentation and data analysis.

Conclusion

Data acquisition and signal conditioning are fundamental in ensuring accurate, high-quality data collection for industrial, scientific, and research applications. By understanding the principles and best practices behind DAQ systems, businesses and researchers can make more informed decisions, improve operational efficiency, and enhance system reliability. Investing in a well-optimized DAQ system with proper signal conditioning ensures precise and actionable insights for any application.

My TFP Soundwave ramblings (be warned cuz there are many words)

I was gonna draw today but the prospect of it felt overwhelming for some reason so instead I’m just gonna talk about TFP Soundwave’s alt mode (a UAV/ reaper drone) just cuz I was reading about it and I like how I could link stuff between how he is and how reaper drones are.

So basically, one of the first things I wanna mention is that reaper drones/UAVs are unmanned aerial vehicles (given the whole “drone” thing and what UAV even means) but to me that just sorta makes sense for Soundwave in regard to his more.. Unsettling, robotic/alien-like behavior and movements? As well as his silence and usual distance from the front lines and his lack of showing face/(social?) detachment from like everyone else other than Laserbeak (don’t ask, it just makes sense to me). Reaper drones were also made to work at first only in intelligence, surveillance, and reconnaissance roles; but eventually additionally a hunter-killer role which you can kinda see in Soundwave’s character in the show (my best example is the scene where he retrieves Laserbeak from Ratchet, super cool creepy behavior from him, just waiting for something or someone to make any noise or any movement). When he has a mission, he’s most definitely getting it done, he stalks and lurks and takes action when the time is just Right; he’s very pinpoint accurate in Prime.

Reaper drones were also made to provide “deadly persistence” capability, being able to fly over areas night and day waiting for a target to present itself, or to survey for LONG long amounts of time. Which to me correlates to how he’s able to stand still and do work and wait and listen and watch and do everything for So Long as he does in the show (and tolerate Starscream— or like everyone actually for so long 🙄).

Reapers also utilize satellite communications for command and control (as in, they kinda literally have satellite dishes in them I think that’s what that is?), so that to me also easily parallels Soundwave's abilities with the space bridges and kind of his visor being computer-like as well (and that time he used an. Antenna satellite thing? To look for signals or whatever). They also use other multiple sensors to target and observe, which include optical (high resolution imagery for identification and target acquisition), infrared, and radar systems (enables the drone to locate and track targets regardless of weather conditions or visibility). Which imo links to how Soundwave is described as the “eyes and ears of the decepticons”.

They carry many weapons but I’m not really gonna get into that tbh cuz. Idk. Don’t wanna. Also TFP Soundwave doesn’t fight often anyways, and when he does it’s mostly just straight hands (and data cables). And that’s about as far as my (most likely not the most accurate) ramblings go, but I wanted to write them because there’s just a Lot from so Little of TFP Soundwave and I just love to think about it. Was I geeked out writing this? Maybe, so what 😒

#If there’s one thing about Soundwave it’s that he’s one hell of a capable mech.#Like you really gotta give it to him#transformers#soundwave#transformers prime#tfp#tfp soundwave#maccadam#transformers analysis#transformer#decepticons

Writers Guild West Official: Era of Hollywood Mergers Hastened the Strike

August 10, 2023

Laura Blum-Smith, the Writers Guild of America West’s director of research and public policy, considers the strike a result of a tsunami of Hollywood mergers that has handed studios and streamers the power to exploit their workers.

“Harmful mergers and attempts to monopolize markets are a recurring theme in the history of media and entertainment, and they are a key part of what led 11,500 writers to go on strike more than 100 days ago against their employers,” Blum-Smith said on Thursday at an event with the Federal Trade Commission and Department of Justice over new merger guidelines unveiled in July.

She pointed to Disney, Amazon and Netflix as companies that “gained power through anticompetitive consolidation and vertical integration,” allowing them to impose “more and more precarious working conditions, increasingly short term employment and lower pay for writers and other workers across the industry.” But she sees revisions to the merger guidelines that address labor concerns as a key part of the solution for preventing further mergers in the entertainment industry.

“The FTC and DOJ’s new draft merger guidelines are part of a deeply necessary effort to revive antitrust enforcement,” she added. “Compared with earlier guidelines, the new ones are much more skeptical of the idea that mergers are the natural way for companies to grow. And they focus more on the various ways mergers hurt competition, including how mergers impact workers.”

In July, the FTC and DOJ jointly released a new road map for regulatory review of mergers. They require companies to consider the impact of proposed transactions on labor, signaling that the agencies intend to review whether mergers could negatively impact wages and working conditions. FTC commissioner Alvaro Bedoya, who was joined by agency chair Lina Khan, said in a statement about the guidelines that “a merger that may substantially lessen competition for workers will not be immunized by a prediction that predicted savings from a merger will be passed on to consumers.” Historically, transactions have been considered mostly through the lens of benefits to consumers.

The guidelines lack the force of law but influence the way in which judges consider lawsuits to block proposed transactions. They also tell the public how competition enforcers will assess the potential for a merger’s harm to competition.

Antitrust enforcers have steadily been taking notice of negative impacts to labor as a result of industry consolidation. “We’ve heard concerns that a handful of companies may now again be controlling the bulk of the entertainment supply chain from content creation to distribution,” Khan said last year during a listening forum over revisions to the guidelines, in a nod to anticompetitive conduct by studios that led to the Paramount Decrees. “We’ve heard concerns that this type of consolidation and integration can enable firms to exert market power over creators and workers alike.”

Adam Conover, writer and WGA board member, said in that April 2022 forum that his show Adam Ruins Everything was killed by AT&T’s acquisition of Time Warner in 2018 when TruTV’s parent company forced the network to cut costs. He stressed that a handful of companies “now control the production and distribution of almost all entertainment content available to the American public,” allowing them to “more easily hold down our wages and set onerous terms for our employment.” It’s not just writers that are impacted by an overly consolidated Hollywood either, he explained. After Disney acquired 21st Century Fox in 2019, he said that the studios pushed the industry into ending backend participation and trapping actors in exclusive contracts preventing them from pursuing other work.

Blum-Smith said that aggressive competition enforcement is necessary as “Wall Street continues to push for more consolidation among our employers despite the industry’s history of mergers that failed to deliver any of the consumer benefits they’ve claimed that left writers and audiences worse off with less diversity of content and fewer choices.”

“More mergers will leave writers with even fewer places to sell their work and tell their stories and the remaining companies will have even more power to lower pay and worsen working conditions,” she warned. “Strong enforcement against mergers is essential to protect workers in media and workers across the country and these guidelines are an important step in the right direction.”

Preserved in our archive

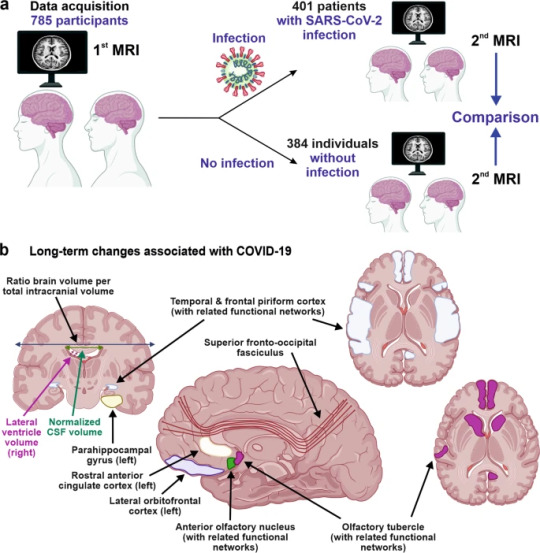

A research letter from 2022 highlighting the effects of even "mild" covid on the brain.

Dear Editor,

A recent study published in Nature by Douaud and colleagues1 shows that SARS-CoV-2 infection is associated with longitudinal effects, particularly on brain structures linked to the olfactory cortex, modestly accelerated reduction in global brain volume, and enhanced cognitive decline. Thus, even mild COVID-19 can be associated with long-lasting deleterious effects on brain structure and function.

Loss of smell and taste are amongst the earliest and most common effects of SARS-CoV-2 infection. In addition, headaches, memory problems, confusion, or loss of speech and motility occur in some individuals.2 While important progress has been made in understanding SARS-CoV-2-associated neurological manifestations, the underlying mechanisms are under debate and most knowledge stems from analyses of hospitalized patients with severe COVID-19.2 Most infected individuals, however, develop mild to moderate disease and recover without hospitalization. Whether or not mild COVID-19 is associated with long-term neurological manifestations and structural changes indicative of brain damage remained largely unknown.

Douaud and co-workers examined 785 participants of the UK Biobank (www.ukbiobank.ac.uk) who underwent magnetic resonance imaging (MRI) twice, with an average inter-scan interval of 3.2 years. Of these, 401 tested positive for SARS-CoV-2 infection between the two MRI acquisitions (Fig. 1a). Strengths of the study are the large number of samples, the availability of scans obtained before and after infection, and the multi-parametric quantitative analyses of serial MRI acquisitions.1 These comprehensive, automated analyses with a non-infected control group allowed the authors to distinguish consistent brain changes caused by SARS-CoV-2 infection from pre-existing conditions. Altogether, the MRI processing pipeline extracted more than 2,000 features, named imaging-derived phenotypes (IDPs), from each participant’s imaging data. Initially, the authors focused on IDPs involved in the olfactory system. In agreement with the frequent impairment of smell and taste in COVID-19, they found greater atrophy and indicators of increased tissue damage in the anterior cingulate cortex, orbitofrontal cortex and insula, as well as in the ventral striatum, amygdala, hippocampus and parahippocampal gyrus, which are connected to the primary olfactory cortex (Fig. 1b). Taking advantage of computational models that differentiate changes related to SARS-CoV-2 infection from physiological age-related brain changes (e.g., decreases in brain volume with aging),3 they also explored IDPs covering the entire brain. Although most individuals experienced only mild symptoms of COVID-19, the authors detected two differences in individuals with SARS-CoV-2 infection compared with the control group. One was an accelerated reduction in whole-brain volume. The other was a more pronounced cognitive decline, associated with increased atrophy of a cognitive lobule of the cerebellum (crus II). These differences remained significant when the 15 people who required hospitalization were excluded.
Most brain changes for IDPs were moderate (average differences between the two groups of 0.2–2.0%, largest for volume of parahippocampal gyrus and entorhinal cortex) and accelerated brain volume loss was “only” observed in 56–62% of infected participants. Nonetheless, these results strongly suggest that even clinically mild COVID-19 might induce long-term structural alterations of the brain and cognitive impairment.

The study provides unique insights into COVID-19-associated changes in brain structure. The authors took great care in appropriately matching the case and control groups, making it unlikely that observed differences are due to confounding factors, although this possibility can never be entirely excluded. The mechanisms underlying these infection-associated changes, however, remain to be clarified. Viral neurotropism and direct infection of cells of the olfactory system, neuroinflammation and lack of sensory input have been suggested as reasons for the degenerative events in olfactory-related brain structures and neurological complications.4 These mechanisms are not mutually exclusive and may synergize in causing neurodegenerative disorders as a consequence of COVID-19.

The study participants became infected between March 2020 and April 2021, before the emergence of the Omicron variant of concern (VOC) that currently dominates the COVID-19 pandemic. During that period, the Alpha and Beta VOCs dominated in the UK, and all results were obtained from individuals between 51 and 81 years of age. It will be of great interest to clarify whether Omicron, which seems to be less pathogenic than other SARS-CoV-2 variants, also causes long-term brain damage. The vaccination status of the participants was not available in the study,1 and it will be important to clarify whether long-term changes in brain structure also occur in vaccinated and/or younger individuals. Other important questions are whether these structural changes are reversible or permanent. A further question is whether they might even increase the frequency of neurodegenerative diseases that are usually age-related, such as Alzheimer’s, Parkinson’s or Huntington’s disease. Previous findings suggest that cognitive disorders improve over time after severe COVID-19;5 yet it remains to be determined whether the described brain changes will translate into symptoms later in life, such as dementia. Douaud and colleagues report that none of the top 10 IDPs correlated significantly with the time interval between SARS-CoV-2 infection and the second MRI acquisition, suggesting that the observed abnormalities might be very long-lasting.

Currently, many restrictions and protective measures are relaxed because Omicron is highly transmissible but usually causes mild to moderate acute disease. This raises hope that SARS-CoV-2 may evolve towards reduced pathogenicity and become similar to circulating coronaviruses causing mild respiratory infections. More work needs to be done to clarify whether the current Omicron and future variants of SARS-CoV-2 may also cause lasting brain abnormalities, and whether these can be prevented by vaccination or therapy. However, Douaud and colleagues1 found that SARS-CoV-2 causes structural changes in the brain that may be permanent and could relate to neurological decline. This finding is of concern and illustrates that the pathogenesis of this virus is markedly different from that of circulating human coronaviruses. Further studies to elucidate the mechanisms underlying COVID-19-associated neurological abnormalities and how to prevent or reverse them are urgently needed.

REFERENCES (Follow link)

#public health#wear a mask#covid 19#pandemic#covid#wear a respirator#mask up#still coviding#coronavirus#sars cov 2#long covid#covid conscious#covid is airborne

Over the past decade, deregulation and the growing dominance of streaming video have laid the groundwork for a media landscape where just three companies (Disney, Amazon, and Netflix) are poised to be the new gatekeepers. This report from the WGAW details how these three companies have amassed power through anticompetitive practices. It also shows how they have abused their dominance to further disadvantage competitors, raise prices for consumers, and push down wages for the creative workforce. Pay and working conditions for writers have become so dire, and media conglomerates so unresponsive, that 11,500 writers went on strike in May 2023. Without intervention from antitrust agencies and lawmakers, consolidation will continue to snowball, leaving the future of media in peril. These new gatekeepers have amassed market power through mergers and other anticompetitive practices, offering an alarming window into the future of media. Disney has grown through a series of multibillion-dollar acquisitions. It has used its power to reduce film output, shut down competing studios, foreclose independent content from its distribution networks, expand control of the labor market, and force creators to give up financial participation in future licensing revenue. Amazon has gained a sizeable footprint in media in a short time by using the well-documented playbook critical to its ascendance as a tech company. Through anticompetitive behavior and vertical integration, Amazon has harmed competitors, privileged its related businesses, and abused employer leverage to underpay writers. Netflix was once an innovative competitor. It is now using its position as the largest streaming service in the world to abuse its leverage as an employer, decrease innovative content spending, and raise prices for consumers.
The company has cut out independent producers and severely underpaid writers in multiple areas, and a series of recent acquisitions signal its intent to further increase dominance and market power in order to reduce innovative content investment.

The Power of Reading: Boosting Brain Health and Overall Wellness

In today's fast-paced digital world, where screens dominate our attention, the simple act of reading a physical book can often be overlooked. However, immersing yourself in a good book not only provides an escape but also offers numerous benefits for brain health and overall wellness. Here are some compelling reasons to pick up a book and indulge in reading.

1. Cognitive Stimulation

Reading is a fantastic way to keep your brain engaged. When you read, your brain is actively working to process information, follow plots, and remember characters. This cognitive stimulation can help maintain brain function and may even reduce the risk of cognitive decline as you age. Studies have linked mentally stimulating activities, like reading, to better brain health and a lower risk of conditions such as Alzheimer’s disease.

2. Stress Reduction

Diving into a good book can serve as a great escape from the stresses of daily life. A well-written novel can transport you to another world, allowing you to forget your worries for a while. One widely cited study reported that just six minutes of reading reduced stress levels by up to 68%. By that measure, reading outperformed the other relaxation methods it compared, such as listening to music or taking a walk.

3. Improved Focus and Concentration

In a world filled with distractions, reading a physical book requires focus and concentration. Unlike digital content, which often encourages skimming and multitasking, reading a book demands your full attention. This practice can help improve your overall ability to concentrate, which can carry over into other areas of your life, enhancing productivity and efficiency.

4. Enhanced Empathy and Emotional Intelligence

Reading fiction, in particular, can enhance your ability to empathize with others. Engaging with characters’ emotions and experiences allows you to understand different perspectives and cultures. This increased empathy can lead to improved social skills and emotional intelligence, fostering better relationships both personally and professionally.

5. Better Sleep Quality

Incorporating reading into your bedtime routine can promote better sleep quality. Unlike screens that emit blue light and can interfere with your sleep cycle, reading a physical book can help signal to your body that it’s time to wind down. Establishing a pre-sleep ritual that includes reading can lead to more restful sleep and improved overall health.

6. Knowledge Acquisition and Vocabulary Expansion

Every book you read is an opportunity to learn something new. Whether fiction or nonfiction, books provide a wealth of information, enhancing your knowledge base on various topics. Additionally, regular reading can significantly expand your vocabulary and improve your writing skills, which are invaluable in both personal and professional communications.

7. Encouragement of Mindfulness

Reading requires you to be present and engaged with the text, promoting mindfulness. This practice can help reduce anxiety and improve overall mental well-being. By immersing yourself in a story, you can practice being in the moment, which is a key component of mindfulness meditation.

The benefits of reading extend far beyond mere entertainment. By making time for a physical book in your daily routine, you can stimulate your mind, reduce stress, enhance empathy, and improve your overall wellness. So, the next time you find yourself scrolling through your phone or binge-watching a series, consider reaching for a book instead. Your brain—and your well-being—will thank you!

Love,

Kimmi

#wellness#naturopathy#health and wellness#reduce stress#reading#booklover#bookworm#read more#healthy lifestyle#healthyhabits#healthy living

Understanding the Accuracy of Digital Tensile Testing

Source of Info: https://www.perfectgroupindia.co.in/understanding-the-accuracy-of-digital-tensile-testing.php

Digital tensile testing is a modern technique for measuring the mechanical properties of materials, such as their elasticity and strength. It uses computerized instrumentation to provide precise, accurate measurements of tensile stress and strain. Perfect Group, a well-known Indian manufacturer of automated tensile testing equipment, is a leader in this technology. Its integration of modern digital technology delivers reliable, accurate results, making it a leading option for industries that need precise material inspection. This introduction explores the value and quality of computerized tensile testing in modern engineering and quality assurance.

What Is Digital Tensile Testing?

Digital tensile testing measures a material's mechanical properties by applying a controlled force until the specimen deforms or breaks. The test measures characteristics such as tensile strength, elongation, and modulus of elasticity. Unlike traditional techniques, computerized tensile testing uses modern sensors and digital technology to produce accurate, detailed data.
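
The properties named above come from two simple ratios. Engineering stress is σ = F / A0 (force over original cross-sectional area). Engineering strain is ε = ΔL / L0 (extension over original gauge length). The modulus of elasticity is the slope of the linear part of the stress–strain curve, and tensile strength is the peak stress. The minimal sketch below rests on several assumptions:

- The specimen dimensions and load–extension data are hypothetical.
- The user states how many leading points are elastic.
- Elongation is approximated as the strain at the last sample.

Standards define elongation after fracture more carefully.

```python
def tensile_summary(forces_n, extensions_mm, area_mm2, gauge_length_mm, elastic_points):
    """Reduce load-extension data to engineering stress/strain and key properties.

    elastic_points: how many leading samples lie in the linear (elastic) region;
    supplied by the user here, which is a simplifying assumption.
    """
    if elastic_points < 2:
        raise ValueError("need at least two points to fit the elastic slope")
    stress = [f / area_mm2 for f in forces_n]              # MPa, since N/mm^2 == MPa
    strain = [e / gauge_length_mm for e in extensions_mm]  # dimensionless

    # Modulus of elasticity: least-squares slope of stress vs strain in the elastic region.
    xs, ys = strain[:elastic_points], stress[:elastic_points]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))

    return {
        "modulus_mpa": slope,
        "tensile_strength_mpa": max(stress),   # peak engineering stress
        "elongation_pct": strain[-1] * 100.0,  # strain at the last sample (break)
    }


if __name__ == "__main__":
    # Hypothetical specimen: 20 mm^2 cross-section, 50 mm gauge length.
    forces = [0, 2000, 4000, 6000, 7000, 7500, 7200]      # N
    extensions = [0.0, 0.025, 0.05, 0.075, 0.5, 2.0, 6.0]  # mm
    print(tensile_summary(forces, extensions, 20.0, 50.0, elastic_points=4))
```

With these numbers the modulus comes out near 200,000 MPa (200 GPa), the tensile strength is 375 MPa, and the elongation is about 12%.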

Key Benefits of Digital Tensile Testing

High Accuracy: Computerized tensile testing offers greater precision than conventional techniques. Digital sensors and precision measuring instruments help ensure that the data are accurate and consistent.

Real-Time Data: Digital systems give real-time feedback throughout the test. This allows quick analysis and adjustment, which makes testing procedures more efficient.

Detailed Reporting: Computerized tensile testers produce complete reports with graphs and measurements. This detailed data makes it easier to assess material performance and make informed decisions.

Automated Testing: The automated features of digital tensile testing equipment reduce human error and improve repeatability by ensuring every test runs under the same conditions.

Improved Data Storage: Digital systems store results electronically, making them easy to access, evaluate, and compare over time.

Components of Digital Tensile Testing Systems

Testing Machine: The main device that applies force to the specimen. It is made up of grips, a control system, and a load cell. Perfect Group's modern tensile testing equipment is known for its accuracy and durability.

Load Cell: A sensor that measures the force applied to the specimen. It converts mechanical force into an electrical signal that the digital system can process.

Grips: Hold the specimen in place during the test. They are designed to hold the material firmly without slipping.

Control System: The software that records data, manages the test, and produces results. Modern digital systems offer advanced analysis tools and easy-to-use interfaces.

Data Acquisition System: Collects and processes data from the load cell and other sensors, ensuring that the measurements are accurate and consistent.
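
Converting a load cell's electrical signal into force is usually a linear scaling. The sketch assumes a strain-gauge load cell specified by a rated output in mV/V at its rated capacity. The signal is divided by the excitation voltage (a ratiometric measurement) and then scaled to capacity. The 10 kN capacity, 2.0 mV/V rating, 10 V excitation, and the function name are hypothetical, and real cells also need calibration against known loads.

```python
def load_cell_force_n(signal_mv, excitation_v, rated_output_mv_per_v, capacity_n, zero_mv=0.0):
    """Convert a strain-gauge load cell's bridge output to force.

    Assumes a linear cell: output in mV/V is proportional to load and reaches
    rated_output_mv_per_v at capacity_n. zero_mv is the reading with no load applied.
    """
    ratio_mv_per_v = (signal_mv - zero_mv) / excitation_v  # measured output in mV/V
    return capacity_n * ratio_mv_per_v / rated_output_mv_per_v


if __name__ == "__main__":
    # Hypothetical 10 kN cell rated 2.0 mV/V, excited at 10 V, reading 12.0 mV.
    print(load_cell_force_n(12.0, 10.0, 2.0, 10_000.0))  # about 6000 N
```

Dividing by the excitation voltage means that a slow drift in the excitation supply largely cancels out instead of appearing as a force error.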

Factors Affecting the Accuracy of Digital Tensile Testing

Calibration: Regular calibration is essential to keep a tensile testing instrument accurate. Calibration ensures that the machine's measurements are correct and in line with industry requirements.

Environmental Conditions: Temperature, humidity, and other environmental conditions can affect test results. To reduce these effects, automated tensile testing systems often include environmental controls.

Sample Preparation: Accurate results depend on correctly prepared specimens. To ensure uniformity, samples must be prepared according to established protocols.

Machine Maintenance: To ensure proper performance, the testing machine needs regular maintenance, including inspection of the grips and load cell.

Operator Training: Skilled operators can run the testing procedure properly and interpret the results correctly. Proper training reduces errors and increases data reliability.
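
A simple form of calibration is a two-point (zero and span) correction. It compares the machine's readings with known reference loads and derives a gain and offset. Formal force verification of testing machines follows standards such as ASTM E4 or ISO 7500-1. This sketch only illustrates the zero/span idea. The readings, reference loads, and function names are hypothetical.

```python
def two_point_calibration(ind_zero, ref_zero, ind_span, ref_span):
    """Gain and offset so that gain * indicated + offset hits both reference points."""
    if ind_span == ind_zero:
        raise ValueError("zero and span readings must differ")
    gain = (ref_span - ref_zero) / (ind_span - ind_zero)
    offset = ref_zero - gain * ind_zero
    return gain, offset


def corrected(indicated, gain, offset):
    """Apply the linear correction to a raw machine reading."""
    return gain * indicated + offset


def relative_error_pct(value, reference):
    """Error of a reading relative to a non-zero reference value, in percent."""
    return (value - reference) / reference * 100.0


if __name__ == "__main__":
    # Hypothetical check: machine reads 5 N at no load and 4037 N at a 4000 N reference.
    gain, offset = two_point_calibration(5.0, 0.0, 4037.0, 4000.0)
    raw = 2021.0  # hypothetical raw reading at a 2000 N reference load
    print(relative_error_pct(raw, 2000.0))                           # about 1.05 % before correction
    print(relative_error_pct(corrected(raw, gain, offset), 2000.0))  # about 0 % after correction
```

A two-point correction assumes the machine's error is linear across its range. If checks at intermediate loads still show significant error, a multi-point calibration is needed.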

Perfect Group: Leading the Way in Digital Tensile Testing

When it comes to tensile testing solutions, Perfect Group is regarded as the top manufacturer in India. The company is committed to providing high-quality, innovative computerized tensile testing equipment, and its machines are known for their accuracy and dependability. Its products are built to demanding industry specifications and provide accurate information for a range of uses.

Key Advantages of Choosing Perfect Group

Advanced Technology: Perfect Group equips its computerized tensile testing machines with the latest available technology to ensure excellent accuracy and efficiency.

All-Inclusive Assistance: The company offers customer support that includes installation, testing, and maintenance services.

Customized Solutions: From manufacturing to research, Perfect Group offers customized options for the specific requirements of many sectors.

Quality Assurance: Its machines undergo extensive testing and quality inspections to ensure precise, accurate results.

Conclusion

Digital tensile testing, which provides accurate and complete insight, is essential for determining the mechanical properties of materials. Digital systems and modern technologies make testing procedures more effective and safer. As a top brand in India, Perfect Group offers automated tensile testing solutions that meet industry requirements. Businesses and researchers who understand the advantages and accuracy of digital tensile testing can make informed choices that improve material performance and product quality.

Investors Dump Record Homes, Missouri, Oklahoma Lead Sell-Off

Record Investor Activity Reshapes U.S. Housing Market in 2024

A seismic shift in investor behavior fundamentally altered the U.S. housing market in 2024, marking the most dramatic reversal in real estate investment activity since data collection began two decades ago.

Investors offloaded approximately 509,000 homes in 2024, or 10.8% of all residential transactions nationwide. This volume surpasses any previous year on record, and the sell-off spans all investor categories: small operators disposed of 270,000 properties, while large institutional players sold 123,800 homes. A breakdown in communication also played a significant role in how these sales unfolded, leading investors to re-evaluate their strategies.

Housing trends now reflect a clear departure from pandemic-era acquisition strategies. Rather than capitalizing on soaring valuations, investors are repositioning as the market cools. Softening rental income and stabilizing prices, not profit-taking, now drive selling decisions.

The gap between investor purchases and sales has compressed to historic lows. Large investors added a net of only 8,700 properties in 2024, down sharply from 134,000 net properties in 2021. Meanwhile, small investors expanded their presence to 59.2% of all investor purchases. This dramatic shift signals the end of the investor-driven dynamics that dominated recent years.

Missouri and Oklahoma Emerge as Top States for Investor Sales

Missouri and Oklahoma stood out in 2024 as the leading states for investor property sales: in each state, investors accounted for 16.7% of all homes sold. Both states saw year-over-year increases in sell-off activity, with Missouri up 0.5% and Oklahoma up 1.7%. These shares significantly exceeded the national average of 10.8%.

In Missouri, investors focused on maximizing gains in affordable markets with strong rental demand, and opportunities to diversify and navigate financing complexities allowed them to build stronger portfolios. Springfield emerged as a key hotspot for disposals alongside continued buying pressure. Oklahoma showed similar trends with rapid portfolio turnover, and Oklahoma City became a focal point for both acquisitions and liquidations.

Investors took advantage of favorable market conditions, but the shift was driven by cutting losses in a declining rental market rather than by cashing in on property appreciation. Selling activity in both states surpassed traditionally investor-heavy areas such as California and New York. Despite high disposal volumes, rental price growth and demographic stability kept both markets appealing. These regional dynamics highlight flexible investor strategies that target central markets with manageable risks and substantial upside potential.

Economic Factors Driving Investment Decisions in the Midwest

While coastal markets grapple with affordability crises and inventory constraints, Midwest regions have emerged as economic powerhouses that are driving unprecedented investor migration across the nation.

The Midwest economy presents compelling fundamentals that are reshaping investment strategies. Job growth in urban centers such as Milwaukee and Detroit has outpaced the national average, creating robust employment ecosystems that attract both institutional and individual investors. Low unemployment in cities such as Naperville has bolstered investor confidence, and demand for skilled labor supports stable rental markets and consistent property values. Housing prices remain dramatically lower than in coastal regions, yet deliver superior yields and profit margins. Lower operating costs in Midwest markets also enable portfolio diversification strategies that were previously impossible in expensive metropolitan areas.

Supply growth remains moderate, preventing oversaturation and maintaining competitive bidding in select cities. Projected mortgage rate declines in 2025 would increase investors' leverage, and federal infrastructure investments enhance long-term property values. Data analytics tools that provide real-time market monitoring reduce investment risk in emerging growth pockets. In addition, following mentorship principles can accelerate learning for investors entering these evolving Midwest markets and help them adapt to shifting economic trends.

Regional Market Dynamics and Price Projections for 2025

Missouri and Oklahoma are charting distinct courses as 2025 approaches, signaling major shifts in the Midwest investment landscape. The Missouri housing market is nearing equilibrium as inventory grows rapidly, and the state's two-month supply of homes marks a move away from seller-dominated conditions. Average home values in Missouri are around $260,908, but price stability is uncertain amid signs of slower growth, and analysts predict potential price declines in the second half of 2025. In contrast, Oklahoma's median home prices are rising toward $200,000 by year's end, driven by population growth and economic diversification in the energy and manufacturing sectors.

In Missouri, competitive areas such as Ballwin still see bidding wars above asking price, while rural properties are taking longer to sell, offering strategic opportunities for buyers. In Oklahoma, regulatory challenges add to investor uncertainty. Maintaining tenant satisfaction and competitive rents is crucial for sustaining market position and fostering tenant loyalty in markets such as Jennings, LA. Nonetheless, undiscovered neighborhood gems are attracting speculative investment. Increasing inventory in Missouri may improve affordability, while market fluctuations could destabilize Oklahoma's price trends. Together, these states offer varied risk profiles, prompting institutional investors to reconsider their Midwest strategies.

Supply and Demand Impact From Massive Investor Sell-Off

A record 10.8% of home sellers are now investors offloading properties at unprecedented rates, a trend that is fundamentally reshaping supply and demand in American housing markets.

The mass sell-off creates two disruptions that threaten market stability. On the supply side, properties are flooding local markets, creating artificial supply surges that overwhelm buyer capacity and cause inventory to accumulate: homes are hitting the market faster than traditional buyers can absorb them, and new listings are climbing year over year under pressure from investor liquidations. On the demand side, elevated mortgage rates are deterring potential buyers, economic uncertainty is freezing purchasing decisions, and the collapse in investor home buying removes a vital source of demand.

The resulting supply-demand imbalance is triggering price volatility across affected regions, and markets become hypersensitive to demand fluctuations as investor selling accelerates. The lock-in effect further constrains transaction volume, leaving fewer active participants to absorb the mounting inventory. This situation parallels warnings that the U.S. housing market is nearing disaster due to an affordability crisis, as unsold properties pile up and destabilize market conditions.

Assessment

The unprecedented investor exodus from residential properties signals a fundamental shift in market dynamics across key Midwest regions. Missouri and Oklahoma are now the epicenters of the sell-off, reflecting broader economic pressures that are reshaping investment strategies nationwide. Market participants must brace for continued volatility as institutional players retreat from previously profitable territories. The cascading effects of this massive withdrawal will likely redefine regional housing ecosystems, creating both risks and opportunities for remaining stakeholders. These changes are expected to unfold through 2025, and investors and homeowners alike must adapt to the evolving landscape.


Rotary Torque Sensors: Enhancing Rotational Force Measurement with Star EMBSYS Solutions

Rotary torque sensors are critical components used to measure the rotational force (torque) applied on a rotating system, such as motors, gearboxes, or turbines. These sensors are essential in performance testing, quality control, and process monitoring across industries like automotive, aerospace, robotics, and manufacturing. Among the innovative providers in this space, Star EMBSYS offers high-performance, customizable rotary torque sensor systems that integrate advanced embedded technology for unmatched precision and reliability.

A rotary torque sensor measures torque by detecting the strain (twisting) on a rotating shaft. Typically based on strain gauge or magnetoelastic principles, these sensors output a signal proportional to the applied torque. Unlike static torque sensors, rotary torque sensors are designed to operate on rotating shafts, often using slip rings, wireless telemetry, or rotary transformers to transmit data.
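As a rough illustration of the strain-gauge principle described above, the sketch below converts a bridge reading into torque. It assumes a linear sensor specified by a rated output in mV/V at a rated torque, a common datasheet convention; the function name and the example figures are hypothetical and are not Star EMBSYS specifications.

```python
def torque_from_bridge(v_out: float, v_exc: float,
                       rated_output_mv_per_v: float,
                       rated_torque_nm: float,
                       zero_offset_mv_per_v: float = 0.0) -> float:
    """Convert a strain-gauge bridge reading into torque in N·m.

    Assumes a linear transfer function: the output ratio (mV/V) scales
    proportionally with torque up to the rated capacity.
    """
    if v_exc <= 0:
        raise ValueError("excitation voltage must be positive")
    # Ratiometric output in mV/V, minus any zero offset recorded at no load.
    ratio_mv_per_v = (v_out / v_exc) * 1000.0 - zero_offset_mv_per_v
    # rated_output_mv_per_v corresponds to rated_torque_nm.
    return ratio_mv_per_v / rated_output_mv_per_v * rated_torque_nm


# Hypothetical sensor: 2 mV/V at 100 N·m, 5 V excitation, 5 mV bridge output.
print(torque_from_bridge(0.005, 5.0, 2.0, 100.0))  # about 50 N·m
```

Dividing by the measured excitation voltage keeps the calculation ratiometric, so slow drift in the supply largely cancels out.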

Star EMBSYS brings its embedded systems expertise into torque sensing by offering intelligent rotary torque sensor solutions. These systems are equipped with real-time data acquisition, signal conditioning, and digital communication interfaces, making them ideal for dynamic and high-speed applications. Their sensors support both analog (voltage/current) and digital (UART, SPI, I2C, CAN) outputs, ensuring seamless integration with control systems, data loggers, and monitoring software.
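For the analog current output mentioned above, the receiving controller typically scales the loop current linearly onto the sensor's torque range. The sketch assumes a standard 4-20 mA signal mapped onto a bidirectional ±100 N·m range and a hypothetical 3.6 mA fault threshold; a real sensor's datasheet defines its own range and fault levels.

```python
def torque_from_current(i_ma: float,
                        t_min_nm: float = -100.0,
                        t_max_nm: float = 100.0,
                        fault_below_ma: float = 3.6) -> float:
    """Map a 4-20 mA loop current linearly onto [t_min_nm, t_max_nm].

    The range limits and the fault threshold are illustrative assumptions.
    """
    # A current well below the 4 mA live zero usually indicates a broken
    # loop or a sensor fault rather than a genuine minimum reading.
    if i_ma < fault_below_ma:
        raise ValueError(f"loop current {i_ma} mA below {fault_below_ma} mA")
    fraction = (i_ma - 4.0) / 16.0  # 0.0 at 4 mA, 1.0 at 20 mA
    return t_min_nm + fraction * (t_max_nm - t_min_nm)


print(torque_from_current(12.0))  # mid-scale: 0.0 N·m for a ±100 N·m range
print(torque_from_current(16.0))  # three-quarter scale: 50.0 N·m
```

Using a live zero of 4 mA rather than 0 mA is what makes the fault check possible: a dead loop reads near 0 mA, which can never be mistaken for a valid torque.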

One of the core strengths of Star EMBSYS is its ability to deliver customized sensor solutions tailored to the client’s mechanical and electrical requirements. Whether it's a high-precision lab-grade application or a rugged industrial setting, Star EMBSYS designs torque sensors with features like temperature compensation, vibration resistance, and long-term stability. Their embedded firmware ensures accurate real-time monitoring with minimal latency, critical for applications like EV motor testing or robotic joint torque feedback.

Additionally, Star EMBSYS offers user-friendly calibration and diagnostic tools, allowing engineers to maintain accuracy and system health over time. Their rotary torque sensors can be combined with wireless telemetry for remote monitoring, a vital feature for testing in mobile or rotating systems where cabling is impractical.
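Field calibration of the kind described above often reduces to a two-point (zero and span) correction: record the raw output with no load and with a known reference torque, then derive a gain and an offset. The class below is a minimal sketch under that assumption; it is not Star EMBSYS's calibration tool, and the raw counts are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class TwoPointCalibration:
    raw_zero: float        # raw reading with no torque applied
    raw_ref: float         # raw reading at the known reference torque
    ref_torque_nm: float   # the applied reference torque in N·m

    @property
    def gain(self) -> float:
        """N·m per raw count, derived from the zero and span readings."""
        span = self.raw_ref - self.raw_zero
        if span == 0:
            raise ValueError("reference and zero readings must differ")
        return self.ref_torque_nm / span

    def apply(self, raw: float) -> float:
        """Convert a raw reading to torque using the stored calibration."""
        return (raw - self.raw_zero) * self.gain


# Hypothetical readings: 102 counts at 0 N·m, 30102 counts at 100 N·m.
cal = TwoPointCalibration(raw_zero=102, raw_ref=30102, ref_torque_nm=100.0)
print(cal.apply(15102))  # about 50 N·m
```

A two-point fit only corrects offset and gain; sensors with noticeable non-linearity need more calibration points.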

In conclusion, rotary torque sensors are essential for understanding and optimizing mechanical performance. Star EMBSYS stands out by delivering not just sensors, but smart embedded torque measurement solutions that empower engineers with real-time, high-fidelity data. With a commitment to innovation and customization, Star EMBSYS is a trusted partner for precision torque sensing in today’s demanding engineering applications.

Visit: https://www.starembsys.com/rotary-torque-sensor.html


Global Force Sensors Market Set to Grow at ~7% CAGR Through 2031

The global force sensors market is on track to grow at a CAGR of about 6.96% through 2031, driven by increased industrial automation, booming automotive innovation, widespread IoT adoption, and growing presence in medical, wearable, and consumer device applications.
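A CAGR compounds annually, so a value grows by a factor of (1 + r)^n over n years. The helper below makes that arithmetic concrete. The report's base-year market size is not given here, so the example only computes the growth multiple for an assumed seven-year window at 6.96%.

```python
def project(value: float, cagr: float, years: int) -> float:
    """Compound `value` forward by `years` at annual growth rate `cagr`."""
    return value * (1.0 + cagr) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Recover the constant annual rate that turns `start` into `end`."""
    return (end / start) ** (1.0 / years) - 1.0


# Growth multiple at 6.96% per year over an assumed 7-year window (roughly 1.6x).
multiple = project(1.0, 0.0696, 7)
print(f"{multiple:.2f}x")
```

The same helper can sanity-check any headline figure in this post once a base-year value and period are known.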

To get a sample report: https://www.datamintelligence.com/download-sample/force-sensors-market

Key Market Drivers & Opportunities

A. Industrial Automation & Robotics: The rapid uptake of Industry 4.0 and manufacturing automation, with robots performing tasks such as grinding, assembly, and precision handling, is a major catalyst. Force sensors enable accurate real-time control and detection, minimizing errors and increasing production reliability.

B. Automotive & ADAS: Force sensors are increasingly essential in automotive systems for brake pressure control, seat occupancy detection, steering, and occupant safety in autonomous vehicles. EV and ADAS development are fueling strong adoption in the automotive sector.

C. IoT & Smart Sensor Trends: IoT and smart manufacturing require connected sensors capable of condition monitoring, predictive maintenance, and remote diagnostics. Force sensors integrated with digital outputs and communication protocols deliver real-time insights across applications.

D. Miniaturization & Wearables: Emerging wearable technology and compact medical devices rely on ultra-thin, accurate MEMS-based force sensors. Miniaturization enables integration into prosthetics, haptic-feedback gadgets, fitness trackers, and robotics, expanding the market's use cases.

E. Medical & Healthcare Applications: Medical devices ranging from prosthetic hands to rehabilitation robots demand precise force measurement. An aging population and the growing prevalence of chronic disease are increasing adoption, enabling safer surgical tools and more effective patient rehabilitation systems.
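The condition-monitoring and predictive-maintenance use cases under driver C often start with something as simple as watching for slow drift. The sketch below flags a force channel whose rolling mean moves more than a tolerance away from a reference baseline. The class name, window size, and thresholds are hypothetical, and production systems typically use more robust statistics.

```python
from collections import deque
from statistics import fmean


class DriftMonitor:
    """Flag a channel whose rolling mean drifts from a reference baseline."""

    def __init__(self, baseline: float, tolerance: float, window: int = 10):
        self.baseline = baseline
        self.tolerance = tolerance
        # Keep only the most recent `window` samples.
        self.samples: deque[float] = deque(maxlen=window)

    def update(self, value: float) -> bool:
        """Add a sample; return True once the rolling mean is out of tolerance."""
        self.samples.append(value)
        if len(self.samples) < self.samples.maxlen:
            return False  # not enough history yet to judge drift
        return abs(fmean(self.samples) - self.baseline) > self.tolerance


monitor = DriftMonitor(baseline=100.0, tolerance=2.0, window=3)
for reading in (100.0, 101.0, 99.0, 106.0, 107.0):
    print(reading, monitor.update(reading))
```

Averaging over a window trades reaction time for immunity to single noisy samples, which suits slow effects such as sensor ageing or mechanical wear.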

Restraints & Challenges

Miniaturization Difficulties: Making sensors small without losing sensitivity is technically complex, limiting use in ultra-compact devices.

Development Lead Time: Designing, prototyping, and testing high-precision force sensors is time-consuming.

Volatile End-Use Demand: Fluctuations in sectors like automotive or industrial automation can impact sensor demand and production planning.

Regional Trends

North America: Currently holds the largest market share, driven by its automotive, defense, and consumer electronics sectors.

Asia-Pacific: The fastest-growing region, supported by rising automotive and electronics manufacturing in China, India, Japan, and South Korea. Strong EV growth and IoT innovation bolster demand.

Europe: Growth is supported by aerospace, defense, water and gas infrastructure automation, and smart industry initiatives.

Latest Industry News & Trends from Key Regions

United States: Manufacturers are introducing new smart mini force sensors with IO-Link integration, enabling predictive maintenance and PLC compatibility on automotive assembly lines.

Japan: Japan’s industrial smart sensor market reached about USD 2.26 billion in 2024 and is expected to grow at a 12.7% CAGR through 2033, driven by AI-enabled sensing in manufacturing and demand from electric and hybrid vehicle programs.

Technology Trends & Innovation

Smart Mini Force Sensors: Compact, digitally enabled products integrate signal processing, offering real-time monitoring and predictive analytics in tight spaces.

Stretchable & 6-Axis Force Sensing: Cutting-edge academic research into flexible, multi-axis sensors supports tactile robotic control and soft robotics applications.

Optical, Piezoresistive & Ultrasonic Technologies: Optical and ultrasonic methods enable non-contact sensing, and together with piezoresistive designs they are gaining traction in applications requiring high precision or hygienic operation.
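Multi-axis sensors such as the 6-axis designs mentioned above are commonly read out by multiplying the raw gauge signals by a calibration matrix determined at the factory, which also compensates for cross-talk between channels. The sketch assumes a 6 x n matrix held in plain Python lists; the diagonal matrix in the example is a placeholder, since real calibration matrices have off-diagonal terms.

```python
Matrix = list[list[float]]


def apply_calibration(cal: Matrix, gauges: list[float]) -> list[float]:
    """Return [Fx, Fy, Fz, Tx, Ty, Tz] = cal @ gauges for a 6 x n matrix."""
    if len(cal) != 6 or any(len(row) != len(gauges) for row in cal):
        raise ValueError("calibration matrix must be 6 x len(gauges)")
    # Each output component is a weighted sum of every gauge signal,
    # which is how cross-talk between channels is compensated.
    return [sum(c * g for c, g in zip(row, gauges)) for row in cal]


# Placeholder diagonal matrix (no cross-talk) purely for illustration.
cal = [[2.0 if i == j else 0.0 for j in range(6)] for i in range(6)]
print(apply_calibration(cal, [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]))
```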

Competitive Landscape

The market is highly fragmented with global and regional players competing through innovation, acquisitions, and product extensions. Key companies include Tekscan, TE Connectivity, Texas Instruments, Sensitronics, ATI Industrial Automation, Kistler, FUTEK, Omron, and others, each focusing on niche technologies or application-focused solutions.

Strategic Outlook & Growth Opportunities

Expand Smart Sensing Solutions: Offer force sensors with advanced features such as smart communication, analytics, and predictive alerts to support Industry 4.0 deployments.

Invest in Miniaturization R&D: Target wearable and medical sectors by developing highly accurate, compact MEMS-based devices.

Localize Production in Asia-Pacific: Tap into high-growth automotive and electronics sectors with regional manufacturing and localized partnerships.

Promote Cross-Sector Adoption: Target adjacent markets such as consumer electronics, biotech, aerospace, and smart appliances.

Collaborate with Robotics and EV Innovators: Align with emerging sectors like robotics, EVs, and autonomous systems for early-stage integration.

Conclusion

The global force sensors market is positioned for sustained growth, powered by industrial automation, automotive innovation, smart sensor adoption, and expanding applications in healthcare and wearables. Despite technical challenges around scaling down and product development cycles, demand remains strong across global industries. Market leaders who invest in smart sensing platforms, miniaturization, and regional expansion will emerge as industry frontrunners.


Sensor Signal Conditioner IC Market to Reach USD 1.4 Billion by 2034, Expanding at a CAGR of 6.3%

The Sensor Signal Conditioner (SSC) IC market is witnessing steady growth, fueled by the increasing deployment of sensors across a wide range of industries for data acquisition and real-time monitoring. SSC ICs play a vital role in improving the accuracy and reliability of sensor outputs by amplifying, filtering, and conditioning the signals. These integrated…
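SSC ICs perform amplification, offset correction, and filtering in analog or mixed-signal hardware. The software sketch below mimics that chain on already-sampled data so the steps are easy to follow: a linear gain and offset followed by a first-order low-pass filter. The function and parameter names are assumptions for illustration, not the behavior of any particular IC.

```python
import math


def condition(samples: list[float], gain: float, offset: float,
              cutoff_hz: float, sample_rate_hz: float) -> list[float]:
    """Amplify, offset-correct, and low-pass filter a sampled sensor signal."""
    # Smoothing factor for a discrete first-order (RC-style) low-pass filter.
    dt = 1.0 / sample_rate_hz
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    alpha = dt / (rc + dt)

    filtered: list[float] = []
    y = None
    for x in samples:
        v = gain * x + offset  # amplification and offset correction
        # Exponential smoothing; the first sample initializes the filter state.
        y = v if y is None else y + alpha * (v - y)
        filtered.append(y)
    return filtered


print(condition([0.0, 1.0, 1.0, 1.0], gain=1.0, offset=0.0,
                cutoff_hz=1.0, sample_rate_hz=100.0))
```

Lowering `cutoff_hz` suppresses more noise but makes the output respond more slowly to genuine changes, the same trade-off an analog filter stage faces.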


Snowflake to Acquire Crunchy Data in Strategic Move to Advance AI Agent Capabilities

Source: www.infoworld.com

Snowflake has announced its intent to acquire Crunchy Data, a move aimed at strengthening its capabilities for building and deploying artificial intelligence (AI) agents and applications. The acquisition, which is still subject to regulatory approval and customary closing conditions, is expected to significantly bolster Snowflake’s AI Data Cloud by adding Crunchy Data’s expertise in open-source PostgreSQL technology.

The acquisition will introduce a new product, Snowflake Postgres, into the company’s growing AI ecosystem. PostgreSQL, commonly known as Postgres, is a widely adopted open-source relational database system, used by nearly half of the world’s developers, according to the company’s statement released on June 2. This strategic addition will allow Snowflake customers to work more efficiently with AI agents by leveraging the flexible and powerful features of Postgres within the Snowflake platform.

Snowflake’s Senior Vice President of Engineering, Vivek Raghunathan, emphasized the scale of the opportunity, noting that the company is targeting a $350 billion market. He stated that bringing Postgres into the fold will allow customers to accelerate development and streamline operations within the Snowflake AI Data Cloud.

Postgres Integration to Enhance Mission-Critical Workloads

Crunchy Data, known for its security-first approach and compliance in regulated industries, brings valuable assets to Snowflake’s portfolio. Co-founder Paul Laurence highlighted the synergy between the two companies, particularly in offering enhanced capabilities for organizations that already rely on Postgres for handling sensitive and mission-critical workloads.

With the integration of Crunchy Data’s technology, Snowflake aims to support its customers, especially those operating in tightly regulated sectors, with tools that offer both scalability and confidence. The Snowflake Postgres product is expected to help users launch AI-driven applications faster while maintaining strict standards for data integrity and security.

The move reflects a broader trend in the tech industry where database technologies are being adapted to meet the rising demands of AI development, particularly in the area of autonomous agents. Snowflake’s platform, known for its robust data management capabilities, is increasingly positioning itself as a go-to solution for enterprises navigating the intersection of data and artificial intelligence.

AI Competition Heats Up with Database-Centric Acquisitions

Snowflake’s announcement follows similar activity from its competitors. On May 14, Databricks revealed its own acquisition of Neon, a database startup also focused on enhancing AI agent workflows. Databricks described Neon as purpose-built for agentic operations, offering developers a serverless Postgres environment aligned with the speed and economics required for AI development.

In February, Snowflake opened a new AI hub in Silicon Valley to support developers, startups, and enterprise clients pursuing advanced AI applications. The acquisition of Crunchy Data builds on that momentum, reinforcing the company’s commitment to comprehensive AI solutions that integrate deeply with trusted open-source technologies.

As the AI space rapidly evolves, acquisitions like these signal a critical shift in how enterprise platforms are adapting to support AI workloads, leveraging familiar tools like Postgres in novel and powerful ways.


A Shift in Mumbai's Luxury Real Estate: Unpacking the Q1 2025 Trends

Mumbai, the financial heartbeat of India, has long been a bellwether for the country's luxury real estate market. Recent data from Anarock for Q1 2025, however, presents a nuanced picture that warrants close attention from investors and high-net-worth individuals. For the first time since 2022, Mumbai has seen a significant uptick in the unsold stock of luxury homes.

The Rise in Unsold Inventory: What the Numbers Say

According to Anarock's latest findings, unsold inventory of luxury homes in Mumbai (properties priced above ₹2.5 crore) rose 36% year on year, from approximately 6,180 units at the end of Q1 2024 to nearly 8,420 units at the close of Q1 2025. This surge marks a pivotal moment, shifting market dynamics that have largely favored sellers in recent years. While the headline might suggest a slowdown, for the discerning investor it signals a recalibration of opportunities.

Beyond the Headlines: Opportunities for Discerning Investors

Such a rise in unsold inventory, while seemingly a challenge, often presents unique advantages for buyers. It typically leads to increased negotiation power, a broader selection of premium properties, and potentially more attractive deals. Developers, keen to offload inventory, may become more flexible on pricing or offer enhanced amenities and payment plans. This environment could be particularly beneficial for those looking to secure prime luxury assets at potentially more favorable valuations than in a tightly constrained market.

For our esteemed clientele at Innovest.in, this trend underscores the importance of strategic timing and expert market insight. It's not about a slowdown in demand, but rather a shift towards a buyer-favorable landscape, especially for well-researched and strategically located properties.

Navigating the Nuances: The Innovest.in Advantage

In a market characterized by evolving supply-demand dynamics, expert guidance becomes indispensable. At Innovest.in, we believe in empowering our clients with precise, data-driven insights. Our deep understanding of Mumbai's luxury real estate micro-markets allows us to identify undervalued opportunities and negotiate optimal terms. Whether you're seeking a trophy asset, a lifestyle investment, or a high-yielding rental property, navigating this landscape requires a sophisticated approach tailored to your specific investment objectives.

Looking Ahead: Strategic Moves in a Dynamic Market

The luxury housing market in Mumbai remains robust in terms of long-term appreciation potential and desirability. The current increase in inventory should be viewed as a temporary adjustment, creating a window for strategic acquisitions. For those poised to act, Q1 2025's data is not a deterrent, but rather a call to engage with informed decision-making to capitalize on emerging opportunities.

Connect with Innovest.in to explore how you can leverage these evolving market conditions to expand your luxury real estate portfolio in Mumbai.

#LuxuryRealEstate #MumbaiProperty #RealEstateInvestment #AnarockReport #InnovestIn #IndianLuxuryHomes #PropertyMarket #HNWI #InvestmentOpportunity


Revolutionizing Temperature Monitoring with IoT and DAQ Data Loggers

In an era where real-time data and remote monitoring define operational efficiency, industries dealing with temperature-sensitive products are rapidly shifting toward smarter, data-driven technologies. Whether it's food logistics, pharmaceutical storage, or scientific research, maintaining controlled environments is crucial. Enter the world of IoT data loggers, cold chain data loggers, and DAQ data acquisition systems—solutions that are transforming traditional monitoring into intelligent automation.

The Rise of IoT Data Logger Technology

The IoT data logger is at the forefront of this transformation. Unlike conventional loggers, IoT-enabled devices can wirelessly transmit temperature, humidity, pressure, and other environmental metrics in real time to cloud-based platforms. These systems eliminate the need for manual data retrieval and provide instant alerts if conditions deviate from pre-set parameters.
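To make the real-time alerting described above concrete, the sketch below packages one set of readings as a JSON record and lists any metric outside its preset range. The field names, limits, and device ID are hypothetical; actual loggers define their own payload schema and transport (for example MQTT or HTTPS).

```python
import json
from datetime import datetime, timezone

# Hypothetical preset limits (min, max); a real logger would load these from its configuration.
LIMITS: dict[str, tuple[float, float]] = {
    "temperature_c": (2.0, 8.0),
    "humidity_pct": (30.0, 70.0),
}


def build_payload(device_id: str, reading: dict[str, float]) -> str:
    """Serialize a reading and list every metric outside its preset range."""
    alerts = [
        name for name, value in reading.items()
        if name in LIMITS and not LIMITS[name][0] <= value <= LIMITS[name][1]
    ]
    record = {
        "device_id": device_id,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "reading": reading,
        "alerts": alerts,
    }
    return json.dumps(record)


print(build_payload("logger-01", {"temperature_c": 9.5, "humidity_pct": 45.0}))
```

Evaluating limits on the device means an alert can be raised even when the cloud connection is slow; the platform can still re-check the raw readings on arrival.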

IoT data loggers are widely adopted in industries like cold storage, transportation, pharmaceuticals, and agriculture. The ability to access live environmental data through mobile apps or web dashboards enables businesses to act immediately, reducing the risk of product loss and improving overall accountability.

The Critical Role of Cold Chain Data Logger Devices

When dealing with temperature-sensitive goods such as vaccines, frozen foods, or laboratory samples, maintaining a consistent cold chain is vital. This is where a cold chain data logger becomes essential. These specialized loggers are engineered to monitor and record temperature and humidity during the transportation and storage of perishable goods.
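Loggers like these typically summarize a trip by its minimum, maximum, and mean temperature and by how long readings stayed outside the allowed band. The sketch assumes evenly spaced samples, counts each out-of-range sample as one full logging interval, and uses a 2-8 °C band as an example of a refrigerated range; all of these are illustrative assumptions.

```python
def summarize_trip(temps_c: list[float], interval_min: float,
                   low_c: float = 2.0, high_c: float = 8.0) -> dict[str, float]:
    """Summarize evenly spaced temperature samples from one shipment."""
    if not temps_c:
        raise ValueError("no samples logged")
    excursions = sum(1 for t in temps_c if t < low_c or t > high_c)
    return {
        "min_c": min(temps_c),
        "max_c": max(temps_c),
        "mean_c": sum(temps_c) / len(temps_c),
        # Each out-of-range sample is treated as one full logging interval.
        "minutes_out_of_range": excursions * interval_min,
    }


print(summarize_trip([4.0, 5.0, 9.0, 10.0, 6.0], interval_min=15))
```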

Cold chain data loggers not only capture environmental changes but also generate detailed reports for regulatory compliance (such as FDA, WHO, or GDP standards). Many modern loggers offer USB plug-and-play access or wireless syncing capabilities, allowing for quick and easy data downloads.
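One figure that often appears in pharmaceutical storage and shipping reports is the mean kinetic temperature (MKT), which weights warm periods more heavily than a simple average because degradation rates rise exponentially with temperature. With activation energy ΔH and gas constant R, MKT = (ΔH/R) / (-ln((1/n) Σ exp(-ΔH / (R·T_i)))), with temperatures in kelvin. The sketch below assumes the conventional ΔH of 83.144 kJ/mol (so ΔH/R is about 10,000 K) and evenly spaced readings; whether a given report requires MKT depends on the applicable regulation.

```python
import math


def mean_kinetic_temperature(temps_c: list[float],
                             delta_h_j_per_mol: float = 83_144.0,
                             gas_constant: float = 8.3144) -> float:
    """Mean kinetic temperature (°C) of evenly spaced readings."""
    if not temps_c:
        raise ValueError("no samples logged")
    k = delta_h_j_per_mol / gas_constant  # about 10,000 K with the defaults
    # Average of the Arrhenius factors exp(-ΔH / (R·T)) over all readings.
    mean_exp = sum(math.exp(-k / (t + 273.15)) for t in temps_c) / len(temps_c)
    return k / -math.log(mean_exp) - 273.15


print(round(mean_kinetic_temperature([2.0, 8.0]), 2))
```

For a constant temperature the MKT equals that temperature; any spread in the readings pushes it above the arithmetic mean.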

These devices are used in:

Pharmaceutical shipments: Ensuring vaccine integrity during global distribution.

Food logistics: Tracking temperature consistency for dairy, seafood, or frozen items.

Clinical trials: Preserving the reliability of lab samples and biological agents.

Retail & warehousing: Ensuring compliance across storage facilities and outlets.

Understanding DAQ Data Acquisition Systems

DAQ (data acquisition) systems collect and analyze real-world physical signals, such as temperature, voltage, or pressure, and convert them into digital data that a computer can process. In temperature monitoring, DAQ plays a critical role by enabling high-speed, high-accuracy data collection and analysis.
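The analog-to-digital step described above can be illustrated with a short conversion from raw ADC counts to temperature. The sketch assumes a 12-bit converter with a 4.096 V reference (so one count is 1 mV) and a linear analog sensor with a 10 mV/°C output and no offset, similar to LM35-type parts; substitute the real converter and sensor parameters.

```python
def counts_to_volts(counts: int, bits: int = 12, vref: float = 4.096) -> float:
    """Convert a raw ADC code to volts, assuming code = floor(V / vref * 2**bits)."""
    full_scale = 1 << bits
    if not 0 <= counts < full_scale:
        raise ValueError(f"code {counts} outside {bits}-bit range")
    return counts * vref / full_scale


def volts_to_celsius(volts: float, mv_per_c: float = 10.0,
                     offset_mv: float = 0.0) -> float:
    """Linear sensor model: output_mV = offset_mV + mv_per_c * temperature."""
    return (volts * 1000.0 - offset_mv) / mv_per_c


print(volts_to_celsius(counts_to_volts(250)))  # close to 25 °C with these assumptions
```

With these assumptions one ADC count corresponds to 0.1 °C, which shows how converter resolution directly limits temperature resolution.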

A DAQ data acquisition system, when integrated with a logger, enhances the monitoring process by allowing for real-time feedback loops, advanced analytics, and automated responses. For instance, in a pharmaceutical manufacturing plant, a DAQ-enabled system can immediately trigger alarms or activate cooling systems if temperature thresholds are crossed.
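Alarm or cooling triggers like the one described above are usually given hysteresis, so that a temperature hovering near the threshold does not toggle the output on and off repeatedly. The class below is a minimal sketch of that idea; the 8 °C and 6 °C thresholds in the example are assumptions, not recommended settings.

```python
class HysteresisAlarm:
    """Switch on above `on_above`; switch off again only below `off_below`."""

    def __init__(self, on_above: float, off_below: float):
        if off_below >= on_above:
            raise ValueError("off_below must be lower than on_above")
        self.on_above = on_above
        self.off_below = off_below
        self.active = False

    def update(self, value: float) -> bool:
        """Feed one reading and return whether the alarm/cooling output is on."""
        if not self.active and value > self.on_above:
            self.active = True
        elif self.active and value < self.off_below:
            self.active = False
        return self.active


alarm = HysteresisAlarm(on_above=8.0, off_below=6.0)
for temp in (5.0, 8.5, 7.0, 5.5, 7.0):
    print(temp, alarm.update(temp))
```

Between the two thresholds the output simply keeps its previous state, which is why the reading of 7.0 °C gives different results depending on what came before.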

The synergy of DAQ technology with IoT data loggers and cold chain data loggers allows for scalable, modular systems that fit various applications—from a single warehouse to a multi-location global distribution network.

Benefits of Advanced Data Logging Systems

Real-Time Monitoring: Instant access to environmental data across geographies.

Regulatory Compliance: Generate automated audit-ready reports.

Loss Prevention: Minimize spoilage or damage by reacting to alerts in real-time.

Improved Efficiency: Reduce manual errors and labor costs through automation.

Data Transparency: Enable informed decision-making with continuous insights.

Conclusion

As industries become more reliant on precision and accountability, advanced tools like IoT data loggers, cold chain data loggers, and DAQ data acquisition systems are no longer optional—they’re essential. These technologies empower businesses to ensure product integrity, meet compliance standards, and gain real-time control over environmental conditions across the entire supply chain. By adopting smart monitoring solutions, companies are not just safeguarding their products—they’re building trust, efficiency, and long-term resilience.

0 notes