#Advanced kubectl commands


Kubectl get context: List Kubernetes cluster connections


kubectl, a command-line tool, talks directly to the Kubernetes API server. It covers a wide range of operations, from listing cluster connections with kubectl config get-contexts to managing resources with its many other commands. Table of contents: Comprehending Fundamental Kubectl Commands; Working with More Than One Kubernetes Cluster; Navigating Contexts with kubectl…
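As a rough illustration of what a context is, here is a minimal Python sketch that lists the contexts in a kubeconfig, similar to the table kubectl config get-contexts prints. It assumes the kubeconfig has already been parsed into a dict (for example with a YAML library); the cluster, user, and namespace names in `sample` are hypothetical.

```python
from typing import Any


def list_contexts(kubeconfig: dict[str, Any]) -> list[tuple[bool, str, str, str, str]]:
    """Return (is_current, name, cluster, user, namespace) for each context.

    Reads the standard kubeconfig keys "contexts" and "current-context".
    """
    current = kubeconfig.get("current-context", "")
    rows = []
    for entry in kubeconfig.get("contexts", []):
        ctx = entry.get("context", {})
        rows.append((
            entry["name"] == current,
            entry["name"],
            ctx.get("cluster", ""),
            ctx.get("user", ""),
            ctx.get("namespace", ""),
        ))
    return rows


# Hypothetical kubeconfig with two clusters: a homelab and a local kind cluster.
sample = {
    "current-context": "homelab",
    "contexts": [
        {"name": "homelab", "context": {"cluster": "homelab", "user": "admin"}},
        {"name": "kind-dev", "context": {"cluster": "kind-dev", "user": "kind-dev", "namespace": "apps"}},
    ],
}

for is_current, name, cluster, user, namespace in list_contexts(sample):
    marker = "*" if is_current else " "
    print(f"{marker} {name:<10} {cluster:<10} {user:<10} {namespace}")
```

The entry marked with `*` is the one kubectl uses by default; `kubectl config use-context kind-dev` would switch `current-context` to the second entry.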


HELM MasterClass: Kubernetes Packaging Manager

1. Introduction

Understanding Kubernetes

Kubernetes has become the de facto standard for container orchestration, enabling developers to deploy, manage, and scale applications efficiently. Its powerful features make it an essential tool in modern DevOps, but the complexity of managing Kubernetes resources can be overwhelming.

The Role of HELM in Kubernetes

HELM simplifies the Kubernetes experience by providing a packaging manager that streamlines the deployment and management of applications. It allows developers to define, install, and upgrade even the most complex Kubernetes applications.

Overview of the Article Structure

In this article, we'll explore HELM, its core concepts, how to install and use it, and best practices for leveraging HELM in your Kubernetes environments. We'll also dive into advanced features, real-world case studies, and the future of HELM.

2. What is HELM?

Definition and Purpose

HELM is a package manager for Kubernetes, akin to what APT is to Debian or YUM is to CentOS. It simplifies the deployment of applications on Kubernetes by packaging them into charts, which are collections of files that describe the Kubernetes resources.

History and Evolution of HELM

HELM was created at Deis, which was later acquired by Microsoft. Over the years it has evolved into a robust tool and is now a graduated project of the Cloud Native Computing Foundation (CNCF), reflecting its significance in the Kubernetes ecosystem.

Importance of HELM in Modern DevOps

In modern DevOps, where agility and automation are key, HELM plays a crucial role. It reduces the complexity of Kubernetes deployments, enables version control for infrastructure, and supports continuous deployment strategies.

3. Core Concepts of HELM

Charts: The Packaging Format

Charts are the fundamental unit of packaging in HELM. A chart is a directory of files that describe a related set of Kubernetes resources. Charts can be shared through repositories and customized to suit different environments.

Repositories: Hosting and Managing Charts

HELM charts are stored in repositories, similar to package repositories in Linux. These repositories can be public or private, and they provide a way to share and distribute charts.

Releases: Managing Deployments

A release is an instance of a chart running in a Kubernetes cluster. Each time you deploy a chart, HELM creates a release. This allows you to manage and upgrade your applications over time.

Values: Configuration Management

Values are the configuration settings HELM uses to customize charts. A chart ships its defaults in values.yaml, and you can override them with your own files (-f) or individual --set flags, making it easy to adapt a chart to different environments or use cases.
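A simplified Python sketch of how an override file layers on top of a chart's defaults: nested maps are merged key by key, while scalars and lists are replaced outright, which is also how HELM treats lists. It ignores details such as deleting a key by setting it to null, and the keys shown are hypothetical.

```python
import copy
from typing import Any


def merge_values(defaults: dict[str, Any], overrides: dict[str, Any]) -> dict[str, Any]:
    """Recursively merge override values onto chart defaults.

    Nested dicts are merged key by key; any other value (string, number,
    list) in the override replaces the default. Inputs are not modified.
    """
    result = copy.deepcopy(defaults)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = merge_values(result[key], value)
        else:
            result[key] = copy.deepcopy(value)
    return result


# Hypothetical chart defaults and a production override file.
chart_defaults = {"replicaCount": 1, "image": {"repository": "nginx", "tag": "1.25"}, "ports": [80]}
production = {"replicaCount": 3, "image": {"tag": "1.27"}}

print(merge_values(chart_defaults, production))
```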

4. Installing and Setting Up HELM

Prerequisites for Installation

Before installing HELM, ensure that you have a running Kubernetes cluster and that kubectl is configured to interact with it. HELM itself is a client-side tool: since Helm 3 there is no server-side component, so you only need to install the helm binary on your local machine.

Step-by-Step Installation Guide

To install HELM, download the latest version from the official website, extract the binary, and move it to your PATH. You can verify the installation by running helm version in your terminal.

Setting Up HELM on a Kubernetes Cluster

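Once installed, HELM talks to your cluster using the same kubeconfig file and current context as kubectl, so Helm 3 needs no further cluster setup. Older Helm 2 releases were different: you had to run helm init to install the server-side Tiller component and give it a service account with the necessary permissions.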

5. Creating and Managing HELM Charts

How to Create a HELM Chart

Creating a HELM chart involves using the helm create command, which sets up a boilerplate directory structure. From there, you can customize the chart by editing the templates and values files.
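For a feel of what the boilerplate contains, this Python sketch writes a stripped-down chart by hand: a Chart.yaml (apiVersion v2, as used by Helm 3), a values.yaml, an empty charts/ directory, and one template. It is only an approximation; helm create generates more files (helpers, a Deployment, a Service, tests), and the chart name and values here are hypothetical.

```python
import tempfile
from pathlib import Path

CHART_YAML = """apiVersion: v2
name: {name}
description: A minimal example chart
type: application
version: 0.1.0
appVersion: "1.0.0"
"""

VALUES_YAML = """replicaCount: 1
image:
  repository: nginx
  tag: "1.25"
"""

# Go template syntax is left untouched: this string is never passed to str.format.
CONFIGMAP_TEMPLATE = """apiVersion: v1
kind: ConfigMap
metadata:
  name: {{ .Release.Name }}-config
data:
  replicas: "{{ .Values.replicaCount }}"
"""


def write_minimal_chart(root: Path, name: str) -> Path:
    """Create Chart.yaml, values.yaml, templates/ and charts/ under root/name."""
    chart_dir = root / name
    (chart_dir / "templates").mkdir(parents=True, exist_ok=True)
    (chart_dir / "charts").mkdir(exist_ok=True)
    (chart_dir / "Chart.yaml").write_text(CHART_YAML.format(name=name))
    (chart_dir / "values.yaml").write_text(VALUES_YAML)
    (chart_dir / "templates" / "configmap.yaml").write_text(CONFIGMAP_TEMPLATE)
    return chart_dir


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        chart_dir = write_minimal_chart(Path(tmp), "demo")
        print(sorted(p.relative_to(chart_dir).as_posix() for p in chart_dir.rglob("*")))
```

Running helm lint on the generated directory is a quick way to check the result.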

Best Practices for Chart Development

When developing charts, follow best practices such as keeping templates simple, using values.yaml for configuration, and testing charts with tools like helm lint and helm test.

Versioning and Updating Charts

Version control is crucial in chart development. Use semantic versioning for chart versions: bump the patch version for fixes, the minor version for backward-compatible features, and the major version for breaking changes. HELM's helm upgrade command then deploys the new version of a chart to an existing release.
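A small Python sketch of the semantic versioning rule, assuming plain MAJOR.MINOR.PATCH versions without pre-release or build suffixes; the change labels are hypothetical.

```python
def bump_chart_version(version: str, change: str) -> str:
    """Return the next semantic version for a chart.

    change is one of "breaking", "feature", or "fix" (hypothetical labels).
    """
    major, minor, patch = (int(part) for part in version.split("."))
    if change == "breaking":
        return f"{major + 1}.0.0"
    if change == "feature":
        return f"{major}.{minor + 1}.0"
    if change == "fix":
        return f"{major}.{minor}.{patch + 1}"
    raise ValueError(f"unknown change type: {change!r}")


print(bump_chart_version("1.4.2", "feature"))
```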

6. Deploying Applications with HELM

Deploying a Simple Application

To deploy an application with HELM, use the helm install command followed by the release name and then the chart name (for example, helm install my-release bitnami/nginx). This deploys the application to your Kubernetes cluster based on the chart's configuration.
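A minimal Python sketch that assembles a helm install command line in the right order (release name first, then chart). The release name, the bitnami/nginx chart, and the namespace are placeholders, and the sketch only builds the argument list; you would pass it to `subprocess.run(cmd, check=True)` to actually run it.

```python
import shlex
from typing import Optional


def helm_install_cmd(release: str, chart: str, namespace: Optional[str] = None,
                     values_files: tuple[str, ...] = (),
                     set_values: Optional[dict[str, str]] = None) -> list[str]:
    """Build a `helm install` argument list: release name first, then chart."""
    cmd = ["helm", "install", release, chart]
    if namespace:
        cmd += ["--namespace", namespace, "--create-namespace"]
    for path in values_files:
        cmd += ["--values", path]  # later files override earlier ones
    for key, value in (set_values or {}).items():
        cmd += ["--set", f"{key}={value}"]
    return cmd


print(shlex.join(helm_install_cmd("my-web", "bitnami/nginx", namespace="web",
                                  set_values={"replicaCount": "2"})))
```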

Managing Application Lifecycles with HELM

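HELM simplifies application lifecycle management by providing commands for upgrading (helm upgrade), rolling back (helm rollback), and uninstalling (helm uninstall) releases. Because every upgrade creates a new numbered revision, you can evolve an application over time and return to a known-good revision if an upgrade goes wrong.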
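The lifecycle commands can be sketched the same way. This Python snippet only builds argument lists, and the release and chart names are placeholders. It assumes Helm 3, where helm upgrade --install creates the release if it does not exist, --atomic rolls a failed upgrade back, and helm rollback without a revision returns to the previous one.

```python
from typing import Optional


def helm_upgrade_cmd(release: str, chart: str, namespace: str) -> list[str]:
    # --install creates the release if it does not exist yet;
    # --atomic rolls the release back automatically if the upgrade fails.
    return ["helm", "upgrade", release, chart, "--install", "--atomic", "--namespace", namespace]


def helm_rollback_cmd(release: str, namespace: str, revision: Optional[int] = None) -> list[str]:
    # With no revision given, Helm 3 rolls back to the previous release.
    cmd = ["helm", "rollback", release]
    if revision is not None:
        cmd.append(str(revision))
    return cmd + ["--namespace", namespace]


def helm_uninstall_cmd(release: str, namespace: str) -> list[str]:
    return ["helm", "uninstall", release, "--namespace", namespace]


for cmd in (helm_upgrade_cmd("my-web", "bitnami/nginx", "web"),
            helm_rollback_cmd("my-web", "web", revision=2),
            helm_uninstall_cmd("my-web", "web")):
    print(" ".join(cmd))
```

helm history my-web lists the revision numbers you can pass to a rollback.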

Troubleshooting Deployment Issues

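If something goes wrong during deployment, HELM can help you troubleshoot: add --debug to a command for verbose output, render the templates without installing them with helm template or helm install --dry-run, and inspect a deployed release with helm status and helm get manifest. Common problems include misconfigured values or missing dependencies, which can be resolved by reviewing the chart's configuration.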

7. HELM Repositories

Setting Up a Local HELM Repository

Setting up a local repository involves packaging your charts (helm package), generating an index.yaml that lists them (helm repo index), and serving that directory over HTTP. This is useful for testing and internal use before publishing charts to a public repository.
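A local test repository can be served with nothing but the Python standard library. This sketch assumes the directory already contains packaged charts and an index.yaml produced by helm package and helm repo index; it serves plain HTTP, so it is only suitable for local testing.

```python
import functools
import http.server
import threading


def start_chart_server(directory: str, port: int = 0) -> http.server.ThreadingHTTPServer:
    """Serve `directory` (chart .tgz files plus index.yaml) over HTTP in a background thread.

    port=0 asks the OS for a free port; read it back from server.server_address.
    """
    handler = functools.partial(http.server.SimpleHTTPRequestHandler, directory=directory)
    server = http.server.ThreadingHTTPServer(("127.0.0.1", port), handler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

With `server = start_chart_server("./my-repo", 8879)`, the repository could be added with helm repo add local http://127.0.0.1:8879, and `server.shutdown()` stops it. The directory name and port are placeholders.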

Using Public HELM Repositories

Public charts for many applications are listed on Artifact Hub, which replaced the deprecated Helm Hub. You add a chart repository to your HELM setup with the helm repo add command and then install charts directly from it.

Security Considerations for HELM Repositories

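When using or hosting HELM repositories, security is paramount. Serve your repository over HTTPS, and verify the integrity of charts before deploying them: HELM can sign chart packages with a provenance file (helm package --sign) and check them with helm verify or the --verify flag on install.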
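Each chart entry in a repository's index.yaml carries a SHA-256 digest of the package. Here is a minimal Python sketch that checks a downloaded archive against that digest. Note that it only confirms the file matches what the index lists; provenance signatures are still needed to establish who published the chart. The file name in the test is hypothetical.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hex SHA-256 of a file, read in chunks so large archives don't fill memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for block in iter(lambda: fh.read(65536), b""):
            digest.update(block)
    return digest.hexdigest()


def verify_chart_digest(path: Path, expected_hex: str) -> bool:
    """Compare a chart archive with the digest listed for it in index.yaml."""
    return hmac.compare_digest(sha256_of(path), expected_hex.lower())
```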

8. Advanced HELM Features

Using HELM Hooks for Automation

HELM hooks allow you to automate tasks at different points in a chart's lifecycle, such as before or after installation. This can be useful for tasks like database migrations or cleanup operations.
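Hooks are declared with annotations on an ordinary Kubernetes resource. This Python sketch builds such a manifest as a dict (in a real chart it would be a YAML template under templates/). The helm.sh/hook, helm.sh/hook-weight, and helm.sh/hook-delete-policy annotations are HELM's own, while the image name and the ./migrate.sh command are hypothetical.

```python
import json


def migration_job(release: str, image: str) -> dict:
    """A Job manifest that HELM would run as a pre-install/pre-upgrade hook."""
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {
            "name": f"{release}-db-migrate",
            "annotations": {
                # Run before the chart's other resources are installed or upgraded.
                "helm.sh/hook": "pre-install,pre-upgrade",
                # Lower weights run first when several hooks share a phase.
                "helm.sh/hook-weight": "0",
                # Delete the previous Job before creating a new one on the next run.
                "helm.sh/hook-delete-policy": "before-hook-creation",
            },
        },
        "spec": {
            "template": {
                "spec": {
                    "restartPolicy": "Never",
                    "containers": [{"name": "migrate", "image": image, "command": ["./migrate.sh"]}],
                }
            }
        },
    }


print(json.dumps(migration_job("my-web", "example.org/my-web:1.0"), indent=2))
```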

Managing Dependencies with HELM

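HELM manages chart dependencies through the dependencies section of Chart.yaml (Helm 2 used a separate requirements.yaml file). Running helm dependency update downloads the listed charts into the chart's charts/ directory, so you can define and install the other charts your application depends on, simplifying complex deployments.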
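The dependencies section is a list of name, version, and repository entries. This Python sketch reports which dependencies are not yet present as archives in charts/, a rough check before running helm dependency update. It assumes Chart.yaml has already been parsed into a dict and that versions are pinned exactly (HELM also accepts version ranges); the chart names and versions are hypothetical.

```python
from pathlib import Path

# Hypothetical Chart.yaml content, already parsed into a dict.
chart = {
    "apiVersion": "v2",
    "name": "my-web",
    "version": "0.1.0",
    "dependencies": [
        {"name": "postgresql", "version": "12.1.0", "repository": "https://charts.bitnami.com/bitnami"},
        {"name": "redis", "version": "17.3.0", "repository": "https://charts.bitnami.com/bitnami"},
    ],
}


def missing_dependencies(chart: dict, charts_dir: Path) -> list[str]:
    """Names of dependencies with no matching <name>-<version>.tgz in charts/."""
    missing = []
    for dep in chart.get("dependencies", []):
        archive = charts_dir / f"{dep['name']}-{dep['version']}.tgz"
        if not archive.exists():
            missing.append(dep["name"])
    return missing
```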

Using HELM with CI/CD Pipelines

Integrating HELM with your CI/CD pipeline enables automated deployments and updates. Tools like Jenkins, GitLab CI, and GitHub Actions can be used to automate HELM commands, ensuring continuous delivery.


Kubernetes Airflow


Wouldn't it be convenient to run Apache Airflow locally with the Kubernetes Executor on a multi-node Kubernetes cluster? That would be a great way to test your DAGs and understand how Airflow works in a Kubernetes environment, wouldn't it? Well, that's exactly what we are going to do here. I will show you, step by step, how to quickly set up your own development environment and start running Airflow locally on Kubernetes. If you want to learn more about Airflow, don't forget to check my course: Apache Airflow: The Complete Hands-On Introduction. Let's get started!

Apache Airflow is an open-source workflow management tool used to author, schedule, and monitor ETL pipelines and machine learning workflows, among other uses. To make it easy to deploy a scalable Apache Airflow in production environments, Bitnami provides an Apache Airflow Helm chart made up, by default, of three synchronized nodes: web server, scheduler, and worker.

The Kubernetes Executor was introduced in Apache Airflow 1.10.0. It creates a new pod for every task instance using the podtemplate.yaml found in templates/config/configmap.yaml; you can override this template with worker.podTemplate. To enable the KubernetesExecutor, set the following parameters. This allows us to scale Airflow workers and executors, but we still have problems like this.

This article is going to show how to:

Use the Airflow Kubernetes operator to isolate all business rules from Airflow pipelines

Create a YAML DAG using schema validations to simplify the usage of Airflow for some users

Define a pipeline pattern

Containers: Deploying Bitnami applications as containers is the best way to get the most from your infrastructure. Our application containers are designed to work well together, are extensively documented, and, like our other application formats, are continuously updated when new versions are made available.

A Kubernetes cluster of 3 nodes will be set up locally with Rancher, Airflow and the Kubernetes Executor to run your data pipelines. Advanced concepts will be shown through practical examples such as templating your DAGs, making a DAG dependent on another, what SubDAGs and deadlocks are, and more.
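Airflow reads configuration overrides from environment variables named AIRFLOW__{SECTION}__{KEY}. This minimal Python sketch builds those names for switching the executor; it does not show the Bitnami chart's own parameters, and the DAG folder path assumes the official image layout.

```python
def airflow_env_var(section: str, key: str) -> str:
    """Name of the environment variable that overrides [section] key in airflow.cfg."""
    return f"AIRFLOW__{section.upper()}__{key.upper()}"


# Overrides that switch Airflow to the Kubernetes Executor and point it at baked-in DAGs.
overrides = {
    airflow_env_var("core", "executor"): "KubernetesExecutor",
    airflow_env_var("core", "dags_folder"): "/opt/airflow/dags",
}

for name, value in overrides.items():
    print(f"{name}={value}")
```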

As we are going to create a multi-node Kubernetes cluster and interact with it, there are a few tools to install first. Let's discover them.

The first one is KinD. KinD stands for Kubernetes IN Docker and lets you run local multi-node Kubernetes clusters using Docker containers as "nodes". Unlike Minikube with a VM driver, KinD starts significantly faster since it doesn't rely on virtual machines. Take a look at the quick start guide to install it. Obviously, Docker must be installed on your machine as well.
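A multi-node KinD cluster is described by a small config file. This Python sketch produces one with a control-plane node and two workers, three nodes in total. It emits JSON, which YAML parsers accept, and the file and cluster names in the usage note are placeholders.

```python
import json
from pathlib import Path


def kind_cluster_config(workers: int) -> dict:
    """KinD config: one control-plane node plus `workers` worker nodes."""
    nodes = [{"role": "control-plane"}] + [{"role": "worker"} for _ in range(workers)]
    return {"kind": "Cluster", "apiVersion": "kind.x-k8s.io/v1alpha4", "nodes": nodes}


def write_kind_config(path: Path, workers: int = 2) -> None:
    # JSON is also valid YAML, so kind can read this file as-is.
    path.write_text(json.dumps(kind_cluster_config(workers), indent=2) + "\n")


print(json.dumps(kind_cluster_config(2), indent=2))
```

After `write_kind_config(Path("kind-cluster.yaml"))`, kind create cluster --name airflow-cluster --config kind-cluster.yaml would create the cluster from that file.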

The second tool to install is Kubectl. If you are familiar with Kubernetes, you should already know Kubectl. Kubectl is the official Kubernetes command-line tool and allows you to run commands against Kubernetes clusters. Whenever you want to deploy applications, manage cluster resources, or view logs, you will use Kubectl. Check the documentation to install it.

The last tool we need is Helm. Helm is the package manager for Kubernetes, which lets you install and manage Kubernetes applications very easily. Helm relies on charts. A chart is a collection of files describing a set of Kubernetes resources. For example, the Airflow chart deploys a web server, the scheduler, the metastore, a service to access the UI, and so on. Take a look at the Airflow chart here to get a better idea of what a chart is. Installing Helm is pretty straightforward, as you can see here.

Now that the tools are installed, let's create the Kubernetes cluster to run Apache Airflow locally with the Kubernetes Executor.

To give you a better hands-on experience, I made the following video where I show you how to set up everything you need to get Airflow running locally on a multi-node Kubernetes cluster. In this video, you will learn:

Airflow Kubernetes Executor

Configuring and creating a multi-node Kubernetes cluster with KinD

Installing and upgrading the Helm chart of Airflow

Building your Docker image of Airflow packaged with your DAGs

Creating a local registry to push your Docker image of Airflow

Configuring Airflow to execute your tasks with the Kubernetes Executor
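A Python sketch of the image-building step: it renders a two-line Dockerfile that copies a local dags/ folder into the official Airflow image and builds the docker build and push commands for a local registry. The image tag, the localhost:5000 registry address, and the image name are assumptions, and it only prints the commands rather than running them.

```python
import shlex

# Assumed values: pin the Airflow image tag you actually deploy.
AIRFLOW_IMAGE = "apache/airflow:2.7.3"
REGISTRY = "localhost:5000"


def dockerfile(base_image: str = AIRFLOW_IMAGE) -> str:
    # The official image uses /opt/airflow as AIRFLOW_HOME, so DAGs go in /opt/airflow/dags.
    return f"FROM {base_image}\nCOPY dags/ /opt/airflow/dags/\n"


def build_and_push_cmds(name: str, tag: str, registry: str = REGISTRY) -> list[list[str]]:
    """docker commands that build the image and push it to the local registry."""
    image = f"{registry}/{name}:{tag}"
    return [
        ["docker", "build", "-t", image, "."],
        ["docker", "push", image],
    ]


print(dockerfile(), end="")
for cmd in build_and_push_cmds("airflow-dags", "0.0.1"):
    print(shlex.join(cmd))
```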

That’s a lot of amazing stuff to learn! At the end, you will have Airflow running with the Kubernetes Executor in a local multi-node Kubernetes cluster. That way, you will be able to test and execute your DAGs in a Kubernetes environment without having to use expensive cloud providers. Enjoy!

Interested in learning more? Stay tuned for special promotions!


CKAD Exam Question&Answers-2020

Certified Kubernetes Application Developer (CKAD) - Exam Preparation Training Course

You can read why DevOps and Kubernetes the Hard Way are a good way to prepare for the CKAD. Read our previous article to check your readiness for the CKAD exam. All of my teammates who went through the Advanced Kubernetes training found it close to the real exam environment. We had chosen Kubernetes for hands-on training as well. Candidates may even find a second attempt easier, because by then their mind is well prepared. Stay hydrated. One nugget: the training is very well structured. I am not certain, but you may get one free retake voucher. Get familiar with everything, because open-source cloud computing and Kubernetes are an ideal match. Kubernetes is currently one of the top trending technologies in cloud computing. Another resource with information about the exam and training for the CKAD is the Cloud Playground. Kubernetes training is also offered by Cloud Foundry.

CLICK THE LINK TO DOWNLOAD FREE DEMONSTRATION: https://www.certkillers.net/Exam/CKAD


Learning Kubernetes also prepared us for the CKA, which is likewise a two-hour exam. Plan on using auto-completion during the exam; it makes the preparation journey simpler. I would suggest attempting it once you have practiced some free exercises. This helped me master Kubernetes while preparing with the Linux Foundation LFD259 Kubernetes for Developers training course. Don't spend lots of time configuring; otherwise you face the risk of losing time and money if you fail. You only have to decide which questions to skip during the exam and come back to them in the last 30 minutes. You can find a way to prepare for the Kubernetes Application Developer (CKAD) exam questions. With all the knowledge presented here, you can basically specify whatever you want. Even so, more preparation was required for me to clear it on my first attempt, since I had previously dealt only with Docker.


Even after all the material I had already been through, I still feel the CKAD is difficult to pass. Full disclosure: here are some tips and tricks that helped me pass it, along with the tips above. Anxiety always increases when you have to think things through under time pressure. Have you ever typed fifteen lines of space-indented YAML but couldn't remember how to use them? Tip 3: use kubectl with --dry-run and -o yaml to save the YAML configuration. Use the links in the notepad provided by the exam environment if you need them. I must admit the practice environment is very similar to the exam portal, since all the labs are hands-on. To cater to a wide audience, PDF and practice exam software are designed to help. Once you've practiced, you will be more comfortable creating a resource with a kubectl command. Looking back, I realized that if you forget to specify it, it will be bound to the same one.
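The dry-run tip looks like this in practice. This small Python sketch builds the kubectl command (the deployment name and image are placeholders); in the exam you would simply type the same command in the shell and redirect the output to a file, for example kubectl create deployment web --image=nginx --dry-run=client -o yaml > web.yaml, then edit it and run kubectl apply -f web.yaml.

```python
import shlex
import subprocess


def deployment_yaml_cmd(name: str, image: str, replicas: int = 1) -> list[str]:
    """kubectl command that prints a Deployment manifest without creating it."""
    return [
        "kubectl", "create", "deployment", name,
        f"--image={image}", f"--replicas={replicas}",
        "--dry-run=client", "-o", "yaml",
    ]


def save_manifest(cmd: list[str], path: str) -> None:
    # Requires kubectl on PATH; writes the generated YAML so it can be edited and applied.
    result = subprocess.run(cmd, check=True, capture_output=True, text=True)
    with open(path, "w") as fh:
        fh.write(result.stdout)


print(shlex.join(deployment_yaml_cmd("web", "nginx", replicas=2)))
```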


What the hell is REST, Anyway?

Originating in a thesis, REST is an attempt to explain what makes the browser distinct from other networked applications.

You might be able to imagine a few reasons why: there are tabs, there's a back button too, but what makes the browser unique is that a browser can be used to check email without knowing anything about POP3 or IMAP.

Although every piece of software inevitably grows to check email, the browser is unique in the ability to work with lots of different services without configuration—this is what REST is all about.

HTML only has links and forms, but it's enough to build incredibly complex applications. HTTP, as browsers use it, only has GET and POST, but that's enough to know when to cache or retry things. HTTP uses URLs, so it's easy to route messages to different places too.

Unlike almost every other networked application, the browser is remarkably interoperable. The thesis was an attempt to explain how that came to be, and called the resulting style REST.

REST is about having a way to describe services (HTML), to identify them (URLs), and to talk to them (HTTP), where you can cache, proxy, or reroute messages, and break up large or long requests into smaller interlinked ones too.

How REST does this isn't exactly clear.

The thesis breaks down the design of the web into a number of constraints—Client-Server, Stateless, Caching, Uniform Interface, Layering, and Code-on-Demand—but it is all too easy to follow them and end up with something that can't be used in a browser.

REST without a browser means little more than "I have no idea what I am doing, but I think it is better than what you are doing.", or worse "We made our API look like a database table, we don't know why". Instead of interoperable tools, we have arguments about PUT or POST, endless debates over how a URL should look, and somehow always end up with a CRUD API and absolutely no browsing.

There are some examples of browsers that don't use HTML, but many of these HTML replacements are for describing collections, and as a result most of the browsers resemble file browsing more than web browsing. It's not to say you need a back and a next button, but it should be possible for one program to work with a variety of services.

For an RPC service, you might think about a curl-like tool for sending requests to a service:

    $ rpctl http://service/ describe MyService
    methods: ...., my_method
    $ rpctl http://service/ describe MyService.my_method
    arguments: name, age
    $ rpctl http://service/ call MyService.my_method --name="James" --age=31
    Result:
        message: "Hello, James!"

You can also imagine a single command line tool for databases that might resemble kubectl:

    $ dbctl http://service/ list ModelName --where-age=23
    $ dbctl http://service/ create ModelName --name=Sam --age=23
    $ ...

Now imagine using the same command line tool for both, and using the same command line tool for every service—that's the point of REST. Almost.

    $ apictl call MyService:my_method --arg=...
    $ apictl delete MyModel --where-arg=...
    $ apictl tail MyContainers:logs --where ...
    $ apictl help MyService

You could implement a command line tool like this without going through the hassle of reading a thesis. You could download a schema in advance, or load it at runtime, and use it to create requests and parse responses, but REST is quite a bit more than being able to reflect, or describe a service at runtime.

The REST constraints require using a common format for the contents of messages, so that the command line tool doesn't need configuring, and require sending the messages in a way that allows you to proxy, cache, or reroute them without fully understanding their contents.

REST is also a way to break long or large messages up into smaller ones linked together. This is far more than just learning at runtime which commands can be sent: it allows a response to explain how to fetch the next part in the sequence.

To demonstrate, take an RPC service with a long running method call:

    class MyService(Service):
        @rpc()
        def long_running_call(self, args: str) -> bool:
            id = third_party.start_process(args)
            while third_party.wait(id):
                pass
            return third_party.is_success(id)

When a response is too big, you have to break it down into smaller responses. When a method is slow, you have to break it down into one method to start the process, and another method to check if it's finished.

    class MyService(Service):
        @rpc()
        def start_long_running_call(self, args: str) -> str:
            ...

        @rpc()
        def wait_for_long_running_call(self, key: str) -> bool:
            ...

In some frameworks you can use a streaming API instead, but replacing a procedure call with streaming involves adding heartbeat messages, timeouts, and recovery, so many developers opt for polling instead—breaking the single request into two, like the example above.

Both approaches require changing the client and the server code, and if another method needs breaking up you have to change all of the code again. REST offers a different approach.

We return a response that describes how to make another request, much like an HTTP redirect. You'd handle these responses in a client library, much like an HTTP client handles redirects.

    def long_running_call(self, args: str) -> Result[bool]:
        key = third_party.start_process(args)
        return Future("MyService.wait_for_long_running_call", {"key": key})

    def wait_for_long_running_call(self, key: str) -> Result[bool]:
        if not third_party.wait(key):
            return third_party.is_success(key)
        else:
            return Future("MyService.wait_for_long_running_call", {"key": key})

    def fetch(request):
        response = make_api_call(request)
        while response.kind == 'Future':
            request = make_next_request(response.method_name, response.args)
            response = make_api_call(request)
        return response
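To make the idea runnable, here is a toy Python sketch in which the "network" is just a method lookup on an in-process object. ToyService, Future, and the retry_after field are hypothetical names; retry_after shows the trick of letting the server decide how long the client sleeps between polls.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Callable, Union


@dataclass
class Future:
    """A response naming the next call to make, much like an HTTP redirect."""
    method_name: str
    args: dict[str, Any] = field(default_factory=dict)
    retry_after: float = 0.0  # seconds the server asks the client to wait first


class ToyService:
    """Hypothetical in-process stand-in for a server running a slow job."""

    def __init__(self, steps_needed: int) -> None:
        self.remaining = steps_needed

    def long_running_call(self, args: str) -> Union[bool, Future]:
        # Start the job and immediately tell the client how to check on it.
        return Future("wait_for_long_running_call", {"key": args}, retry_after=0.01)

    def wait_for_long_running_call(self, key: str) -> Union[bool, Future]:
        self.remaining -= 1
        if self.remaining > 0:
            # Still running: point the client at the same call again.
            return Future("wait_for_long_running_call", {"key": key}, retry_after=0.01)
        return True  # finished successfully


def fetch(service: Any, method_name: str, args: dict[str, Any],
          sleep: Callable[[float], None] = time.sleep) -> Any:
    """Generic client loop: follow Future responses until a final result arrives."""
    response = getattr(service, method_name)(**args)
    while isinstance(response, Future):
        sleep(response.retry_after)  # the server, not the client, picks the delay
        response = getattr(service, response.method_name)(**response.args)
    return response


print(fetch(ToyService(steps_needed=3), "long_running_call", {"args": "job-1"}))
```

The client loop never mentions wait_for_long_running_call by name, so another slow method could start returning a Future without any client change.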

For the more operations-minded, imagine I call time.sleep() inside the client, and maybe imagine the Future response has a duration inside. The neat trick is that you can change the amount the client sleeps by changing the value returned by the server.

The real point is that by allowing a response to describe the next request in sequence, we've skipped over the problems of the other two approaches—we only need to implement the code once in the client.

When a different method needs breaking up, you can return a Future and get on with your life. In some ways it's as if you're returning a callback to the client, something the client knows how to run to produce a request. With Future objects, it's more like returning values for a template.

This approach works for breaking up a large response into smaller ones too, like iterating through a long list of results. Pagination often looks something like this in an RPC system:

    cursor = rpc.open_cursor()
    output = []
    while cursor:
        output.append(cursor.values)
        cursor = rpc.move_cursor(cursor.id)

Or something like this:

    start = 0
    output = []
    while True:
        out = rpc.get_values(start, batch=30)
        output.append(out)
        start += len(out)
        if len(out) < 30:
            break

The first pagination example stores state on the server, and gives the client an Id to use in subsequent requests. The second pagination example stores state on the client, and constructs the correct request to make from the state. There are advantages and disadvantages: it's better to store the state on the client (so that the server does less work), but it involves manually threading state and a much harder API to use.

Like before, REST offers a third approach. Instead, the server can return a Cursor response (much like a Future) with a set of values and a request message to send (for the next chunk).

    class ValueService(Service):
        @rpc()
        def get_values(self):
            return Cursor("ValueService.get_cursor", {"start": 0, "batch": 30}, [])

        @rpc()
        def get_cursor(self, start, batch):
            ...
            return Cursor("ValueService.get_cursor", {"start": start + len(values), "batch": batch}, values)

The client can handle a Cursor response, building up a list:

    cursor = rpc.get_values()
    output = []
    while cursor:
        output.append(cursor.values)
        cursor = cursor.move_next()
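A toy Python sketch of the Cursor approach, with the server simulated in-process. Cursor, ValueService, and collect are hypothetical names; the point is that the next request, including its start and batch values, comes from the server's response rather than from state the client tracks.

```python
from dataclasses import dataclass, field
from typing import Any, Optional


@dataclass
class Cursor:
    """One page of values plus the request for the next page (None on the last page)."""
    values: list[Any]
    next_method: Optional[str] = None
    next_args: dict[str, Any] = field(default_factory=dict)


class ValueService:
    """Hypothetical in-process server that pages through a list."""

    def __init__(self, data: list[Any], batch: int = 30) -> None:
        self.data = data
        self.batch = batch

    def get_values(self) -> Cursor:
        return self.get_cursor(start=0, batch=self.batch)

    def get_cursor(self, start: int, batch: int) -> Cursor:
        values = self.data[start:start + batch]
        end = start + len(values)
        if end >= len(self.data):
            return Cursor(values)  # last page: no follow-up request
        # The server decides what the next request looks like, including its batch size.
        return Cursor(values, "get_cursor", {"start": end, "batch": batch})


def collect(service: Any, cursor: Cursor) -> list[Any]:
    """Client loop: gather values and follow each cursor's next request."""
    output: list[Any] = []
    while True:
        output.extend(cursor.values)
        if cursor.next_method is None:
            return output
        cursor = getattr(service, cursor.next_method)(**cursor.next_args)


service = ValueService(list(range(100)))
print(len(collect(service, service.get_values())))
```

If the server began handing out a smaller batch in next_args, collect would not need to change.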

It's somewhere between the two earlier examples of pagination—instead of managing the state on the server and sending back an identifier, or managing the state on the client and carefully constructing requests—the state is sent back and forth between them.

As a result, the server can change details between requests! If a Server wants to, it can return a Cursor with a smaller set of values, and the client will just make more requests to get all of them, but without having to track the state of every Cursor open on the service.

This idea of linking messages together isn't just limited to long polling or pagination—if you can describe services at runtime, why can't you return ones with some of the arguments filled in—a Service can contain state to pass into methods, too.

To demonstrate how, and why you might do this, imagine some worker that connects to a service, processes work, and uploads the results. The first attempt at server code might look like this:

    class WorkerApi(Service):
        def register_worker(self, name: str) -> str:
            ...
        def lock_queue(self, worker_id: str, queue_name: str) -> str:
            ...
        def take_from_queue(self, worker_id: str, queue_name, queue_lock: str):
            ...
        def upload_result(self, worker_id, queue_name, queue_lock, next, result):
            ...
        def unlock_queue(self, worker_id, queue_name, queue_lock):
            ...
        def exit_worker(self, worker_id):
            ...

Unfortunately, the client code looks much nastier:

    worker_id = rpc.register_worker(my_name)
    lock = rpc.lock_queue(worker_id, queue_name)
    while True:
        next = rpc.take_from_queue(worker_id, queue_name, lock)
        if next:
            result = process(next)
            rpc.upload_result(worker_id, queue_name, lock, next, result)
        else:
            break
    rpc.unlock_queue(worker_id, queue_name, lock)
    rpc.exit_worker(worker_id)

Each method requires a handful of parameters relating to the current session open with the service. They aren't strictly necessary (though they do make debugging a system far easier), but the problem of having to chain together requests might be a little familiar.

What we'd rather do is use some API where the state between requests is handled for us. The traditional way to achieve this is to build these wrappers by hand, creating special code on the client to assemble the responses.

With REST, we can define a Service that has methods like before, but also contains a little bit of state, and return it from other method calls:

    class WorkerApi(Service):
        @rpc()
        def register_worker(self, name):
            ...
            return Lease(worker_id)

    class Lease(Service):
        worker_id: str

        @rpc()
        def lock_queue(self, name):
            ...
            return Queue(name=name, lock=lock, worker_id=self.worker_id)

        @rpc()
        def expire(self):
            ...

    class Queue(Service):
        name: str
        lock: str
        worker_id: str

        @rpc()
        def take_next(self):
            ...
            return Task(task_id=task_id, worker_id=self.worker_id)

        @rpc()
        def unlock(self):
            ...

    class Task(Service):
        task_id: str
        worker_id: str

        @rpc()
        def upload_result(self, out):
            mark_done(self.task_id, out)

Instead of one service, we now have four. Instead of returning identifiers to pass back in, we return a Service with those values filled in for us. As a result, the client code looks a lot nicer—you can even add new parameters in behind the scenes.

    lease = rpc.register_worker(my_name)
    queue = lease.lock_queue(queue_name)
    while True:
        next = queue.take_next()
        if next:
            next.upload_result(process(next))
        else:
            break
    queue.unlock()
    lease.expire()
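A runnable, in-memory Python sketch of the same worker flow. Plain dataclasses stand in for the post's Service and rpc framework, and QUEUES and RESULTS are hypothetical server-side state; each returned object carries the identifiers the next call needs, so the client loop never passes them by hand.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical in-memory backend standing in for the real server state.
QUEUES: dict[str, list[str]] = {"images": ["a.png", "b.png"]}
RESULTS: dict[str, str] = {}


@dataclass
class Task:
    task_id: str
    worker_id: str

    def upload_result(self, out: str) -> None:
        RESULTS[self.task_id] = f"{out} (by {self.worker_id})"


@dataclass
class Queue:
    name: str
    lock: str
    worker_id: str

    def take_next(self) -> Optional[Task]:
        pending = QUEUES[self.name]
        return Task(pending.pop(0), self.worker_id) if pending else None

    def unlock(self) -> None:
        pass  # a real server would release self.lock here


@dataclass
class Lease:
    worker_id: str

    def lock_queue(self, name: str) -> Queue:
        # The returned Queue already holds the lock and worker id for later calls.
        return Queue(name=name, lock=f"lock-{name}-{self.worker_id}", worker_id=self.worker_id)

    def expire(self) -> None:
        pass  # a real server would end the lease here


class WorkerApi:
    def register_worker(self, name: str) -> Lease:
        return Lease(worker_id=f"worker-{name}")


def run_worker(rpc: WorkerApi, my_name: str, queue_name: str,
               process: Callable[[str], str]) -> None:
    lease = rpc.register_worker(my_name)
    queue = lease.lock_queue(queue_name)
    while (task := queue.take_next()) is not None:
        task.upload_result(process(task.task_id))
    queue.unlock()
    lease.expire()
```

Calling `run_worker(WorkerApi(), "w1", "images", str.upper)` drains the queue and records one result per task.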

Although the Future looked like a callback, returning a Service feels like returning an object. This is the power of self description—unlike reflection where you can specify in advance every request that can be made—each response has the opportunity to define a new parameterised request.

It's this navigation through several linked responses that distinguishes a regular command line tool from one that browses—and where REST gets its name: the passing back and forth of requests from server to client is where the 'state-transfer' part of REST comes from, and using a common Result or Cursor object is where the 'representational' comes from.

A RESTful system is more than just these combined, though: along with a reusable browser, you get reusable proxies too.

In the same way that messages describe things to the client, they describe things to any middleware between client and server: using GET, POST, and distinct URLs is what allows caches to work across services, and using a stateless protocol (HTTP) is what allows a proxy or load balancer to work so effortlessly.

The trick with REST is that despite HTTP being stateless, and despite HTTP being simple, you can build complex, stateful services by threading the state invisibly between smaller messages—transferring a representation of state back and forth between client and server.

Although REST explains how to build a browser, the real point is to use self-description and state-transfer to allow heavy amounts of interoperation: not just a reusable client, but reusable proxies, caches, or load balancers.

Going back to the constraints (Client-Server, Stateless, Caching, Uniform Interface, Layering and Code-on-Demand), you might be able to see how these things fit together to achieve these goals.

The first, Client-Server, feels a little obvious, but sets the background. A server waits for requests from a client, and issues responses.

The second, Stateless, is a little more confusing. If an HTTP proxy had to keep track of how requests link together, it would involve a lot more memory and processing. The point of the stateless constraint is that to a proxy, each request stands alone. The point is also that any stateful interactions should be handled by linking messages together.

Caching is the third constraint: labelling if a response can be cached (HTTP uses headers on the response), or if a request can be resent (using GET or POST). The fourth constraint, Uniform Interface, is the most difficult, so we'll cover it last. Layering is the fifth, and it roughly means "you can proxy it".
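To show what the caching constraint buys a proxy, here is a deliberately simplified Python sketch of a shared cache. It assumes upstream responses are (status, headers, body) tuples and only looks at Cache-Control; real HTTP caching also handles expiry, Vary, and revalidation.

```python
from typing import Callable

Response = tuple[int, dict[str, str], bytes]  # (status, headers, body)


class CachingProxy:
    """Toy shared cache in front of an upstream server.

    Reuses GET responses marked with a max-age, never caches other methods,
    and drops the cached copy of a URL after a successful unsafe request such as POST.
    """

    def __init__(self, upstream: Callable[[str, str], Response]) -> None:
        self.upstream = upstream
        self.store: dict[str, Response] = {}

    def handle(self, method: str, url: str) -> Response:
        if method == "GET" and url in self.store:
            return self.store[url]  # served from cache; upstream never sees it
        status, headers, body = self.upstream(method, url)
        cache_control = headers.get("Cache-Control", "")
        if method == "GET":
            if status == 200 and "max-age" in cache_control and "no-store" not in cache_control:
                self.store[url] = (status, headers, body)
        elif status < 400:
            self.store.pop(url, None)  # an unsafe method may have changed the resource
        return status, headers, body
```

The proxy needs no knowledge of what the service does: the method and the response headers tell it everything.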

Code-on-demand is the final, optional, and most overlooked constraint, but it covers the use of Cursors, Futures, or parameterised Services—the idea that despite using a simple means to describe services or responses, the responses can define new requests to send. Code-on-demand takes that further, and imagines passing back code, rather than templates and values to assemble.

With the other constraints handled, it's time for uniform interface. Like stateless, this constraint is more about HTTP than it is about the system atop, and frequently misapplied. This is the reason why people keep making database APIs and calling them RESTful, but the constraint has nothing to do with CRUD.

The constraint is broken down into four ideas, and we'll take them one by one: self-descriptive messages, identification of resources, manipulation of resources through representations, hypermedia as the engine of application state.

Self-Description is at the heart of REST, and this sub-constraint fills in the gaps between the Layering, Caching, and Stateless constraints. Sort-of. It covers using 'GET' and 'POST' to indicate to a proxy how to handle things, and covers how responses indicate if they can be cached, too. It also means using a content-type header.

The next sub-constraint, identification, means using different URLs for different services. In the RPC examples above, it means having a common, standard way to address a service or method, as well as one with parameters.

This ties into the next sub-constraint, which is about using standard representations across services—this doesn't mean using special formats for every API request, but using the same underlying language to describe every response. In other words, the web works because everyone uses HTML.

Uniformity so far isn't too difficult: use HTTP (self-description), URLs (identification), and HTML (manipulation through representations). It's the last sub-constraint that causes most of the headaches: hypermedia as the engine of application state.

This is a fancy way of talking about how large or long requests can be broken up into interlinked messages, or how a number of smaller requests can be threaded together, passing the state from one to the next. Hypermedia refers to using Cursor, Future, or Service objects, application state is the details passed around as hidden arguments, and being the 'engine' means using it to tie the whole system together.
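As a rough illustration, here is a Python sketch of a cursor-style exchange. Each response carries its items plus a link to the next request, and the client keeps following links until the server stops providing one. The `PAGES` table, the `/items?page=N` URLs, and the `fetch` stand-in are all hypothetical. A real client would make HTTP calls instead.

```python
# Hypothetical paginated responses keyed by URL: each page carries its items
# plus a link to the next request, or None when there is nothing more.
PAGES = {
    "/items?page=1": {"items": ["a", "b"], "next": "/items?page=2"},
    "/items?page=2": {"items": ["c"], "next": "/items?page=3"},
    "/items?page=3": {"items": ["d", "e"], "next": None},
}


def fetch(url: str) -> dict:
    """Stand-in for an HTTP GET; a real client would make a network call."""
    return PAGES[url]


def collect(start_url: str) -> list:
    """Follow 'next' links until the server stops providing one."""
    items = []
    url = start_url
    while url is not None:
        page = fetch(url)
        items.extend(page["items"])
        url = page["next"]  # the server, not the client, decides what comes next
    return items


print(collect("/items?page=1"))  # ['a', 'b', 'c', 'd', 'e']
```

The client never constructs page URLs itself. The state needed to continue is handed back in each response, which is the point of the sub-constraint.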

Together they form the basis of the Representational State-Transfer Style. More than half of these constraints can be satisfied by just using HTTP, and the other half only really help when you're implementing a browser, but there are still a few more tricks that you can do with REST.

Although a RESTful system doesn't have to offer a database-like interface, it can.

Along with Service or Cursor, you could imagine Model or Rows objects to return, but you should expect a little more from a RESTful system than just create, read, update and delete. With REST, you can do things like inlining: along with returning a request to make, a server can embed the result inside. A client can skip the network call and work directly on the inlined response. A server can even make this choice at runtime, opting to embed if the message is small enough.
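The inlining idea can be sketched in a few lines of Python. The server attaches a link to a follow-up request and embeds the result only if its encoding is small enough. The client uses the embedded copy when present and otherwise makes the call. The `INLINE_LIMIT` threshold, the `href`/`inline` field names, and the URLs are assumptions made for this sketch, not part of any particular framework.

```python
import json
from typing import Callable, Optional

INLINE_LIMIT = 256  # hypothetical cut-off, in bytes of encoded JSON


def make_link(href: str, result: Optional[dict] = None) -> dict:
    """Describe a follow-up request, embedding its result when it is small."""
    link = {"href": href}
    if result is not None and len(json.dumps(result).encode("utf-8")) <= INLINE_LIMIT:
        link["inline"] = result
    return link


def resolve(link: dict, fetch: Callable[[str], dict]) -> dict:
    """Prefer the inlined result; fall back to a network call otherwise."""
    if "inline" in link:
        return link["inline"]
    return fetch(link["href"])


# Usage with a fake fetch that records which URLs were actually requested.
requested = []


def fake_fetch(href: str) -> dict:
    requested.append(href)
    return {"fetched": href}


small = make_link("/users/1", {"name": "ada"})
large = make_link("/reports/9", {"rows": list(range(1000))})
print(resolve(small, fake_fetch))  # {'name': 'ada'}
print(resolve(large, fake_fetch))  # {'fetched': '/reports/9'}
print(requested)                   # ['/reports/9']
```

Because the decision is made per message, the same client code works whether or not the server chose to inline.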

Finally, with a RESTful system, you should be able to offer things in different encodings, depending on what the client asks for—even HTML. In other words, if your framework can do all of these things for you, offering a web interface isn't too much of a stretch. If you can build a reusable command line tool, generating a web interface isn't too difficult, and at least this time you don't have to implement a browser from scratch.
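Here is a minimal Python sketch of serving one piece of data in different encodings, depending on the client's `Accept` header. It is deliberately simplified: q-values are ignored and media types are tried in the order listed. The function name `render` and the HTML layout are illustrative assumptions.

```python
import html
import json


def render(data: dict, accept: str) -> tuple:
    """Return (content_type, body) for the first supported type in `accept`."""
    # Simplification: q-values are ignored and types are tried in listed order.
    for part in accept.split(","):
        media_type = part.split(";")[0].strip().lower()
        if media_type in ("application/json", "*/*"):
            return "application/json", json.dumps(data)
        if media_type == "text/html":
            items = "".join(
                f"<li>{html.escape(str(k))}: {html.escape(str(v))}</li>"
                for k, v in data.items()
            )
            return "text/html", f"<ul>{items}</ul>"
    # Nothing matched: this sketch falls back to JSON (a real server might send 406).
    return "application/json", json.dumps(data)


service = {"name": "grafana", "port": 3000}
print(render(service, "text/html"))
# ('text/html', '<ul><li>name: grafana</li><li>port: 3000</li></ul>')
print(render(service, "application/json"))
# ('application/json', '{"name": "grafana", "port": 3000}')
```

Once responses can be produced as HTML from the same data, a basic web interface comes almost for free.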

If you now find yourself understanding REST, I'm sorry. You're now cursed. Like a cross between the Greek myths of Cassandra and Prometheus, you will be forced to explain the ideas over and over again to no avail. The terminology has been utterly destroyed to the point it has less meaning than 'Agile'.

Even so, the underlying ideas of interoperability, self-description, and interlinked requests are surprisingly useful: you can break up large or slow responses, you can browse or even parameterise services, and you can do it in a way that lets you re-use tools across services too.

Ideally someone else will have done it for you, and like with a web browser, you don't really care how RESTful it is, but how useful it is. Your framework should handle almost all of this for you, and you shouldn't have to care about the details.

If anything, REST is about exposing just enough detail—Proxies and load-balancers only care about the URL and GET or POST. The underlying client libraries only have to handle something like HTML, rather than unique and special formats for every service.

REST is fundamentally about letting people use a service without having to know all the details ahead of time, which might be how we got into this mess in the first place.


What Is Kubernetes?

Kubernetes is an open source software platform that automates deployment, scaling, and management of containerized applications across various physical, virtual, and cloud environments. Developed by Google, Kubernetes has become one of the most significant advancements in IT since the public cloud came to be.

It’s a cloud native platform built upon 15 years of Google’s production workload experience combined with best-of-breed ideas and practices from the community. It has grown to become a powerful, flexible tool that can be used on a wide range of cloud platforms and on-premises.

The key components of a Kubernetes cluster are a control plane (historically called the master), which runs the API server, scheduler, and controller manager, and a set of worker nodes that host the containers. The control plane stores cluster state and configuration data in etcd, a distributed key-value store. Nodes do not read etcd directly; they go through the API server to learn which apps and services they should run.

Running on a single-node cluster with Minikube: If you don't want to invest in a massive cloud-scale cluster, Minikube can help. It's a free, open-source tool that runs a single-node Kubernetes cluster on your laptop or on any other machine running Linux, macOS, or Windows. It's a great solution for developers and DevOps engineers who need a small, lightweight cluster on a desktop.

Scaling with the cloud: In addition to letting you scale up or down your cluster based on demand, Kubernetes can also automatically adjust its size to ensure that applications are running efficiently without consuming too much of the available resources. This can reduce infrastructure costs, optimize resource usage, and increase productivity by enabling self-healing and rolling software updates without downtime.
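For workloads (pods rather than nodes), the Kubernetes documentation describes the Horizontal Pod Autoscaler's core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). Scaling is skipped when that ratio is within a tolerance of 1.0, which defaults to 0.1. The Python sketch below reproduces only that arithmetic. The function name is hypothetical, and min/max replica bounds, stabilization windows, and node-level cluster autoscaling are deliberately left out.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, tolerance: float = 0.1) -> int:
    """Replica count suggested by the HPA ratio rule (min/max bounds omitted)."""
    ratio = current_metric / target_metric
    # Skip scaling when usage is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    return math.ceil(current_replicas * ratio)


print(desired_replicas(3, 90.0, 60.0))  # 5: 3 * 1.5 = 4.5, rounded up
print(desired_replicas(4, 30.0, 60.0))  # 2: usage is half the target
print(desired_replicas(4, 63.0, 60.0))  # 4: within the 10% tolerance
```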

Minikube is an excellent way for your team to get up and running with Kubernetes in a short amount of time, without first building a full production cluster.

It can be used on a variety of operating systems, including Linux, macOS, and Windows, as well as on bare metal or virtual machines in a datacenter or private cloud environment. It's also compatible with microservers, edge servers, and even very small mobile devices and appliances.

The system has a central command-line interface, kubectl, that lets you manage the entire cluster, including deploying and removing workloads, applying manifests, and monitoring the state of cluster resources. Behind it sits the API server, kube-apiserver, which exposes the Kubernetes REST API. kubectl, the kubelet on each node, and client libraries in any language all talk to the cluster through this same API, which gives a consistent experience regardless of the language used to interact with the system.

Desired state for containerized apps: When you use Kubernetes to deploy your application, you describe the state you want in a manifest file and submit it to the API server, which records it in the cluster. Controllers then continuously compare the cluster's actual state with that desired state and act to close any gap, for example by restarting failed containers. The actual state therefore keeps converging on the desired state, rather than being checked only when the application starts.
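The desired-state idea can be illustrated with a toy control loop in Python. This is a sketch under simplifying assumptions, not how Kubernetes controllers are implemented. The `Cluster` class, the `reconcile` function, and the pod naming scheme are all hypothetical. Each pass compares desired replica counts with what is running and starts or stops pods to close the gap.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Cluster:
    """Toy stand-in for the actual state: running pod names per app."""
    running: Dict[str, List[str]] = field(default_factory=dict)


def reconcile(desired: Dict[str, int], cluster: Cluster) -> List[str]:
    """One pass of a control loop: nudge actual replicas toward desired ones."""
    actions: List[str] = []
    for app, want in desired.items():
        pods = cluster.running.setdefault(app, [])
        while len(pods) < want:  # too few: start more
            name = f"{app}-{len(pods)}"
            pods.append(name)
            actions.append(f"start {name}")
        while len(pods) > want:  # too many: stop the newest
            actions.append(f"stop {pods.pop()}")
    return actions


desired = {"web": 3}                    # what the manifest asks for
cluster = Cluster(running={"web": ["web-0"]})
print(reconcile(desired, cluster))      # ['start web-1', 'start web-2']
cluster.running["web"].remove("web-2")  # simulate a crashed pod
print(reconcile(desired, cluster))      # ['start web-2']
print(reconcile(desired, cluster))      # [] (already converged)
```

Running the loop repeatedly is what makes the system self-healing. A pass that finds nothing to do is the normal, converged case.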


Monitoring production Kubernetes clusters is an important and ongoing task for any cluster administrator. There are myriad solutions in the Kubernetes monitoring stack category, among them Prometheus and Grafana. This guide is intended to help Kubernetes users set up Prometheus and Grafana on Kubernetes using prometheus-operator.

Prometheus is a full-fledged solution that gives developers and sysadmins advanced metrics capabilities in Kubernetes. By default, metrics are collected every 30 seconds. The information collected includes resources such as memory, CPU, disk performance, and network I/O, as well as read/write rates. By default the metrics are retained on your cluster for up to 14 days, but this setting can be adjusted to suit your environment.

Grafana is used for analytics and interactive visualization of the metrics collected and stored in the Prometheus database. You can create custom charts, graphs, and alerts for your Kubernetes cluster, with Prometheus as the data source.

In this guide we will install both Prometheus and Grafana on a Kubernetes cluster. For this setup, a working kubectl configuration with a cluster-admin role binding is required.

Prometheus Operator
We will use the Prometheus Operator in this installation to deploy the Prometheus monitoring stack on Kubernetes. The Prometheus Operator is written to ease the deployment and overall management of Prometheus and its related monitoring components. By using the Operator, we simplify and automate Prometheus configuration on any Kubernetes cluster using Kubernetes custom resources.
The diagram below shows the components of the Kubernetes monitoring stack that we'll deploy. The Operator uses the following custom resource definitions (CRDs) to deploy and configure the Prometheus monitoring stack:
Prometheus – defines a desired Prometheus deployment on Kubernetes
Alertmanager – defines a desired Alertmanager deployment on the Kubernetes cluster
ThanosRuler – defines a desired Thanos Ruler deployment
ServiceMonitor – specifies how groups of Kubernetes services should be monitored
PodMonitor – declaratively specifies how groups of pods should be monitored
Probe – specifies how groups of ingresses or static targets should be monitored
PrometheusRule – provides the specification of a desired set of Prometheus alerting rules; the Operator generates a rule file that Prometheus instances can use
AlertmanagerConfig – declaratively specifies subsections of the Alertmanager configuration, allowing routing of alerts to custom receivers and setting inhibit rules

Deploy Prometheus / Grafana Monitoring Stack on Kubernetes
To get a complete monitoring stack, we will use the kube-prometheus project, which includes the Prometheus Operator among its components. The kube-prometheus stack is meant for cluster monitoring and is pre-configured to collect metrics from all Kubernetes components, with a default set of dashboards and alerting rules. You should have kubectl configured and confirmed to be working:
$ kubectl cluster-info
Kubernetes control plane is running at https://192.168.10.12:6443
CoreDNS is running at https://192.168.10.12:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy
To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.
Step 1: Clone the kube-prometheus project
Use git to clone the kube-prometheus project to your local system:
git clone https://github.com/prometheus-operator/kube-prometheus.git
Navigate to the kube-prometheus directory:
cd kube-prometheus
Step 2: Create the monitoring namespace, CustomResourceDefinitions & operator pod
Create a namespace and the required CustomResourceDefinitions:
kubectl create -f manifests/setup
Command execution results as seen in the terminal:
customresourcedefinition.apiextensions.k8s.io/alertmanagerconfigs.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/alertmanagers.monitoring.coreos.com created customresourcedefinition.apiextensions.k8s.io/podmonitors.monitoring.coreos.com created customresourcedefinition.apiextensions.k8s.io/probes.monitoring.coreos.com created customresourcedefinition.apiextensions.k8s.io/prometheuses.monitoring.coreos.com created customresourcedefinition.apiextensions.k8s.io/prometheusrules.monitoring.coreos.com created customresourcedefinition.apiextensions.k8s.io/servicemonitors.monitoring.coreos.com created customresourcedefinition.apiextensions.k8s.io/thanosrulers.monitoring.coreos.com created namespace/monitoring created
The namespace created with the CustomResourceDefinitions is named monitoring:
$ kubectl get ns monitoring
NAME STATUS AGE
monitoring Active 2m41s
Step 3: Deploy Prometheus Monitoring Stack on Kubernetes
Once you confirm the Prometheus operator is running, you can go ahead and deploy the Prometheus monitoring stack:
kubectl create -f manifests/
Here is my deployment progress output:
poddisruptionbudget.policy/alertmanager-main created prometheusrule.monitoring.coreos.com/alertmanager-main-rules created secret/alertmanager-main created service/alertmanager-main created serviceaccount/alertmanager-main created servicemonitor.monitoring.coreos.com/alertmanager created clusterrole.rbac.authorization.k8s.io/blackbox-exporter created clusterrolebinding.rbac.authorization.k8s.io/blackbox-exporter created configmap/blackbox-exporter-configuration created deployment.apps/blackbox-exporter created service/blackbox-exporter created serviceaccount/blackbox-exporter created servicemonitor.monitoring.coreos.com/blackbox-exporter created secret/grafana-datasources created configmap/grafana-dashboard-alertmanager-overview created configmap/grafana-dashboard-apiserver created configmap/grafana-dashboard-cluster-total created configmap/grafana-dashboard-controller-manager created configmap/grafana-dashboard-k8s-resources-cluster created 
configmap/grafana-dashboard-k8s-resources-namespace created configmap/grafana-dashboard-k8s-resources-node created configmap/grafana-dashboard-k8s-resources-pod created configmap/grafana-dashboard-k8s-resources-workload created configmap/grafana-dashboard-k8s-resources-workloads-namespace created configmap/grafana-dashboard-kubelet created configmap/grafana-dashboard-namespace-by-pod created configmap/grafana-dashboard-namespace-by-workload created configmap/grafana-dashboard-node-cluster-rsrc-use created configmap/grafana-dashboard-node-rsrc-use created configmap/grafana-dashboard-nodes created configmap/grafana-dashboard-persistentvolumesusage created configmap/grafana-dashboard-pod-total created configmap/grafana-dashboard-prometheus-remote-write created configmap/grafana-dashboard-prometheus created configmap/grafana-dashboard-proxy created configmap/grafana-dashboard-scheduler created configmap/grafana-dashboard-workload-total created configmap/grafana-dashboards created deployment.apps/grafana created service/grafana created serviceaccount/grafana created servicemonitor.monitoring.coreos.com/grafana created prometheusrule.monitoring.coreos.com/kube-prometheus-rules created clusterrole.rbac.authorization.k8s.io/kube-state-metrics created clusterrolebinding.rbac.authorization.k8s.io/kube-state-metrics created deployment.apps/kube-state-metrics created prometheusrule.monitoring.coreos.com/kube-state-metrics-rules created service/kube-state-metrics created serviceaccount/kube-state-metrics created servicemonitor.monitoring.coreos.com/kube-state-metrics created prometheusrule.monitoring.coreos.com/kubernetes-monitoring-rules created servicemonitor.monitoring.coreos.com/kube-apiserver created servicemonitor.monitoring.coreos.com/coredns created servicemonitor.monitoring.coreos.com/kube-controller-manager created servicemonitor.monitoring.coreos.com/kube-scheduler created servicemonitor.monitoring.coreos.com/kubelet created

clusterrole.rbac.authorization.k8s.io/node-exporter created clusterrolebinding.rbac.authorization.k8s.io/node-exporter created daemonset.apps/node-exporter created prometheusrule.monitoring.coreos.com/node-exporter-rules created service/node-exporter created serviceaccount/node-exporter created servicemonitor.monitoring.coreos.com/node-exporter created clusterrole.rbac.authorization.k8s.io/prometheus-adapter created clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created clusterrolebinding.rbac.authorization.k8s.io/prometheus-adapter created clusterrolebinding.rbac.authorization.k8s.io/resource-metrics:system:auth-delegator created clusterrole.rbac.authorization.k8s.io/resource-metrics-server-resources created configmap/adapter-config created deployment.apps/prometheus-adapter created poddisruptionbudget.policy/prometheus-adapter created rolebinding.rbac.authorization.k8s.io/resource-metrics-auth-reader created service/prometheus-adapter created serviceaccount/prometheus-adapter created servicemonitor.monitoring.coreos.com/prometheus-adapter created clusterrole.rbac.authorization.k8s.io/prometheus-k8s created clusterrolebinding.rbac.authorization.k8s.io/prometheus-k8s created prometheusrule.monitoring.coreos.com/prometheus-operator-rules created servicemonitor.monitoring.coreos.com/prometheus-operator created poddisruptionbudget.policy/prometheus-k8s created prometheus.monitoring.coreos.com/k8s created prometheusrule.monitoring.coreos.com/prometheus-k8s-prometheus-rules created rolebinding.rbac.authorization.k8s.io/prometheus-k8s-config created rolebinding.rbac.authorization.k8s.io/prometheus-k8s created rolebinding.rbac.authorization.k8s.io/prometheus-k8s created rolebinding.rbac.authorization.k8s.io/prometheus-k8s created role.rbac.authorization.k8s.io/prometheus-k8s-config created role.rbac.authorization.k8s.io/prometheus-k8s created role.rbac.authorization.k8s.io/prometheus-k8s created role.rbac.authorization.k8s.io/prometheus-k8s created 
service/prometheus-k8s created serviceaccount/prometheus-k8s created servicemonitor.monitoring.coreos.com/prometheus-k8s created
Give it a few seconds and the pods should start coming online. This can be checked with the command below:
$ kubectl get pods -n monitoring
NAME READY STATUS RESTARTS AGE
alertmanager-main-0 2/2 Running 0 3m8s
alertmanager-main-1 2/2 Running 1 (2m55s ago) 3m8s
alertmanager-main-2 2/2 Running 1 (2m40s ago) 3m8s
blackbox-exporter-69684688c9-nk66w 3/3 Running 0 6m47s
grafana-7bf8dc45db-q2ndq 1/1 Running 0 6m47s
kube-state-metrics-d75597b45-d9bhk 3/3 Running 0 6m47s
node-exporter-2jzcv 2/2 Running 0 6m47s
node-exporter-5k8pk 2/2 Running 0 6m47s
node-exporter-9852n 2/2 Running 0 6m47s
node-exporter-f5dmp 2/2 Running 0 6m47s
prometheus-adapter-5f68766c85-hjcz9 1/1 Running 0 6m46s
prometheus-adapter-5f68766c85-shjbz 1/1 Running 0 6m46s
prometheus-k8s-0 2/2 Running 0 3m7s
prometheus-k8s-1 2/2 Running 0 3m7s
prometheus-operator-748bb6fccf-b5ppx 2/2 Running 0 6m46s
To list all the services created, run the command:
$ kubectl get svc -n monitoring
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
alertmanager-main ClusterIP 10.100.171.41 <none> 9093/TCP,8080/TCP 7m2s
alertmanager-operated ClusterIP None <none> 9093/TCP,9094/TCP,9094/UDP 3m23s
blackbox-exporter ClusterIP 10.108.187.73 <none> 9115/TCP,19115/TCP 7m2s

grafana ClusterIP 10.97.236.243 <none> 3000/TCP 7m2s
kube-state-metrics ClusterIP None <none> 8443/TCP,9443/TCP 7m2s
node-exporter ClusterIP None <none> 9100/TCP 7m2s
prometheus-adapter ClusterIP 10.109.119.234 <none> 443/TCP 7m1s
prometheus-k8s ClusterIP 10.101.253.211 <none> 9090/TCP,8080/TCP 7m1s
prometheus-operated ClusterIP None <none> 9090/TCP 3m22s
prometheus-operator ClusterIP None <none> 8443/TCP 7m1s
Step 4: Access Prometheus, Grafana, and Alertmanager dashboards
We now have the monitoring stack deployed, but how can we access the dashboards of Grafana, Prometheus, and Alertmanager? There are two ways to achieve this.
Method 1: Accessing Prometheus UI and Grafana dashboards using kubectl port-forward
An easy way to access the Prometheus, Grafana, and Alertmanager dashboards is by using kubectl port-forward once all the services are running.
Grafana Dashboard
kubectl --namespace monitoring port-forward svc/grafana 3000
Then access the Grafana dashboard in your local browser at http://localhost:3000. The default login is Username: admin, Password: admin. You're required to change the password on first login.
Prometheus Dashboard
For Prometheus port forwarding, run the command below:
kubectl --namespace monitoring port-forward svc/prometheus-k8s 9090
The web console is then accessible at http://localhost:9090.
Alertmanager Dashboard
For the Alertmanager dashboard, run:
kubectl --namespace monitoring port-forward svc/alertmanager-main 9093
The access URL is http://localhost:9093.
Method 2: Accessing Prometheus UI and Grafana dashboard using NodePort / LoadBalancer
To access the Prometheus, Grafana, and Alertmanager dashboards using one of the worker nodes' IP addresses and a port, you have to edit the services and set the type to NodePort. To use service type LoadBalancer, you need a load balancer implementation in your cluster. See our guide: How To Deploy MetalLB Load Balancer on Kubernetes Cluster. The NodePort method is only recommended for local clusters not exposed to the internet.
The basic reason for this is that the Prometheus and Alertmanager services are not secured.
Prometheus:
# If you need NodePort
kubectl --namespace monitoring patch svc prometheus-k8s -p '{"spec": {"type": "NodePort"}}'
# If you have a working LoadBalancer
kubectl --namespace monitoring patch svc prometheus-k8s -p '{"spec": {"type": "LoadBalancer"}}'
Alertmanager:
# If you need NodePort
kubectl --namespace monitoring patch svc alertmanager-main -p '{"spec": {"type": "NodePort"}}'
# If you have a working LoadBalancer
kubectl --namespace monitoring patch svc alertmanager-main -p '{"spec": {"type": "LoadBalancer"}}'
Grafana:
# If you need NodePort
kubectl --namespace monitoring patch svc grafana -p '{"spec": {"type": "NodePort"}}'
# If you have a working LoadBalancer
kubectl --namespace monitoring patch svc grafana -p '{"spec": {"type": "LoadBalancer"}}'
Confirm that each of the services has a NodePort or LoadBalancer IP address assigned:
$ kubectl -n monitoring get svc | grep NodePort
alertmanager-main NodePort 10.254.220.101 <none> 9093:31237/TCP 45m
grafana NodePort 10.254.226.247 <none> 3000:31123/TCP 45m
prometheus-k8s NodePort 10.254.92.43 <none> 9090:32627/TCP 45m
$ kubectl -n monitoring get svc | grep LoadBalancer
grafana LoadBalancer 10.97.236.243 192.168.1.31 3000:30513/TCP 11m
In this example we can access the services as below:
# Grafana
NodePort: http://node_ip:31123
LB: http://lb_ip:3000
# Prometheus
NodePort: http://node_ip:32627
LB: http://lb_ip:9090
# Alertmanager
NodePort: http://node_ip:31237
LB: http://lb_ip:9093
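The `-p` argument to `kubectl patch` has to be a valid JSON (or YAML) object, which is easy to get wrong when typing braces by hand. One way to avoid that is to build the patch programmatically. The Python sketch below generates the merge-patch body and a shell-quoted command for each service. The helper names are hypothetical, and the allowed types are restricted to the three used in this guide.

```python
import json
import shlex


def service_type_patch(service_type: str) -> str:
    """Build the patch body that sets spec.type on a Service."""
    if service_type not in ("ClusterIP", "NodePort", "LoadBalancer"):
        raise ValueError(f"unsupported service type: {service_type}")
    return json.dumps({"spec": {"type": service_type}})


def patch_command(namespace: str, service: str, service_type: str) -> str:
    """Return a shell-safe kubectl patch command line (printed, not executed)."""
    return shlex.join([
        "kubectl", "--namespace", namespace, "patch", "svc", service,
        "-p", service_type_patch(service_type),
    ])


for svc in ("prometheus-k8s", "alertmanager-main", "grafana"):
    print(patch_command("monitoring", svc, "NodePort"))
```

`shlex.join` (Python 3.8+) wraps the JSON body in single quotes, producing the same form as the commands above.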

An example of the default Grafana dashboard showing cluster-wide compute resource usage.
Destroying / Tearing down Prometheus monitoring stack
If at some point you want to tear down the Prometheus monitoring stack in your Kubernetes cluster, run kubectl delete and pass the paths to the manifest files used during deployment:
kubectl delete --ignore-not-found=true -f manifests/ -f manifests/setup
Within a few minutes the stack is deleted, and you can re-deploy if that was the intention.


Kubernetes and Cloud Native Associate (KCNA) Study Guide

Are you preparing for your Kubernetes and Cloud Native Associate (KCNA) Certification exam? PassQuestion provides the latest Kubernetes and Cloud Native Associate (KCNA) Study Guide with real questions and answers to help you pass your exam easily. If you work through our Kubernetes and Cloud Native Associate (KCNA) Study Guide several times, you will get a clear idea of the real exam scenario. Make sure you use the Kubernetes and Cloud Native Associate (KCNA) Study Guide to strengthen your preparation for the KCNA Certification exam. We can help you in every way to clear the Kubernetes and Cloud Native Associate (KCNA) exam on the first attempt.

Kubernetes and Cloud Native Associate (KCNA) Certification

The KCNA is a pre-professional certification designed for candidates interested in advancing to the professional level through a demonstrated understanding of foundational Kubernetes knowledge and skills. This certification is ideal for students learning about cloud native technologies or candidates interested in working with them.

A certified KCNA will confirm conceptual knowledge of the entire cloud native ecosystem, particularly focusing on Kubernetes. The KCNA exam is intended to prepare candidates to work with cloud native technologies and pursue further CNCF credentials, including CKA, CKAD, and CKS.

KCNA will demonstrate a candidate's basic knowledge of Kubernetes and cloud-native technologies, including how to deploy an application using basic kubectl commands, the architecture of Kubernetes (containers, pods, nodes, clusters), understanding the cloud-native landscape and projects (storage, networking, GitOps, service mesh), and understanding the principles of cloud-native security.

Exam Details

Number of questions: 60

Duration: 90 minutes

Passing Score: 75%

Format: online, proctored, multiple-choice exam.

Cost: $250 and includes one free retake.

Exam Topics

Kubernetes Fundamentals 46%

Container Orchestration 22%

Cloud Native Architecture 16%

Cloud Native Observability 8%

Cloud Native Application Delivery 8%

View Online Kubernetes and Cloud Native Associate (KCNA) Free Questions

What are the two goals of Cloud-Native? A.Rapid innovation and automation B.Slow innovation and stable applications C.Frequent deployments and well-defined organizational silos D.Rapid innovation and reliability Answer: D

What makes cloud native technology so important? A.It makes data centric B.It strengthens team C.It removes roadblocks to innovation D.It helps gather software requirements E.It makes operational centric Answer: C

Which of the following components is part of the Kubernetes control plane? A.kubectl B.kube-proxy C.Service Mesh D.kubelet E.Cloud controller manager Answer: E

Which Kubernetes resource type allows defining which pods are isolated when it comes to networking? A.Network policy B.Domain Name System 'DNS' C.Role Binding D.Service Answer: A

In Kubernetes, what is considered the primary cluster data source? A.etcd (pronounced: et-see-dee) B.api server C.kubelet D.scheduler Answer: A

Which of the following is an advantage a cloud-native microservices application has over monolithic applications? A.Cloud-native microservices applications tend to be faster and more responsive than monolithic applications. B.Cloud-native microservice applications tend to be easier to troubleshoot. C.Cloud-native microservice applications tend to be easier to scale and perform updates on. Answer: C

Which part of a Kubernetes cluster is responsible for running container workloads? A.Worker Node B.kube-proxy C.Control plane D.etcd Answer: A


Unable to init the driver

Boot Linux Error Kernel Panic.

Unable to init dxgi? arma - Reddit.

Unable To Init The Driver - depositfilesfarms.

Top 7 Ways to Fix Unable to Remove Printer on Windows 11.

JDBC Request (failed to init connection) - SmartBear Community.

Connect Server Unable To The Kubectl To.

Clockgen Unable To Init Driver (NEW) - Sebastian Arnezeder.

Driver Manager won't open - Linux Mint Forums.

Error: (gpu) unable to initialize NVIDIA NVML (GPU-rendering).

Unable To Init The Driver.

Unable To Init The Driver - gopdroid.

SteamVr crashes immediately when opened - reddit.

Android SDK and windows 11: unable to install Hypervisor for AMD.

Kernel Panic Error Linux Boot.

Boot Linux Error Kernel Panic.

Mar 08, 2019 · It is very clear from your code that you are trying to create a ChromeDriver, but the path to the executable is not correct. Download the latest ChromeDriver executable from the ChromeDriver downloads page. Mar 03, 2019 · SessionNotCreatedException: Unable to create new remote session while initializing the Android driver in an emulator.

Unable to init dxgi? arma - Reddit.

# /etc/init.d/oracleasm configure
Configuring the Oracle ASM library driver. This will configure the on-boot properties of the Oracle ASM library driver. The following questions will determine whether the driver is loaded on boot and what permissions it will have. The current values will be shown in brackets ('()').
Kernel driver in use: nvidia
Kernel modules: nvidiafb, nouveau, nvidia_drm, nvidia.
I have read this post: nvidia-settings: unable to init server. This described most of what I need to do, noting that SSH terminals have the issue of no DISPLAY, and the response from the ‘top contributor’ was “not possible, start a dummy xserver”.

Unable To Init The Driver - depositfilesfarms.

If "Unable to Init. 48" is gone, continue to step 4. - If "Unable to Init. 48" returns, skip to step 5. 4. Print a User Settings Report to test. a. Press Settings. b. Press or to select Print Reports. Press OK. c. Press or to select User Settings. Press OK. d. Press Black Start. The User Settings report will print.

Top 7 Ways to Fix Unable to Remove Printer on Windows 11.

The message "Unable to Init. E3" will appear for the following reason: i. Mechanical malfunction. 1. Read the complete message displayed in the yellow bar at the top of the display. - If "Unable to Init. E3," go to step 2. My problem was that I didn't have TCP/IP enabled in SQL Server and I was pointing the data source to the server name rather than 127.0.0.1. That shouldn't make a difference, but it won't work if I use the name. Dec 14, 2021 · VIDEO_DRIVER_INIT_FAILURE. Parameters: None. Cause: The system was not able to go into graphics mode because no display drivers were able to start. This usually occurs when no video miniport drivers are able to load successfully.

JDBC Request (failed to init connection) - SmartBear Community.

Clockgen Unable to Init Driver. Hi. I'm trying to use the freescale clockgen driver on my new PC, but when I type clockgen on the command line it says "Clockgen unable to init driver." (keithanag, Feb 16, 2010). When creating an SSAS tabular model in Visual Studio and attempting to import a data source, I try the following: Models > TabularProject (my project) > Data Sources > New Data Source > Oracle Database. Oracle Database: The recommended provider ('Oracle.DataAccess.Client') is not installed. You can continue with your current provider, however, it..

Connect Server Unable To The Kubectl To.

The Choose an option screen will appear, so navigate to Troubleshoot >> Advanced Options >> Command Prompt. Otherwise, simply search for Command Prompt, right-click on it, and choose Run as administrator. In the command prompt window, type "diskpart" on a new line and click the Enter key to. Linux > Kernel (PATCH v3 33/34) misc: Hddl device management for local host, mgross at linux. Tried to do upgrade from 18. In basic terms, it is a situation when the kernel can't load properly and therefore the system fails to boot: 001250) init(1) trap invalid opcode ip:7f72a3e9ba3f sp:7fffd6a70578 error:0 in libc-2

Clockgen Unable To Init Driver (NEW) - Sebastian Arnezeder.

Picture-5 shows the helm client reporting an error, since it is unable to connect to the K8S cluster API to install tiller. I currently have a multi-node cluster running on bare-metal (running on an Ubuntu 18 It also helps you to create an Amazon EKS administrator service account that you can use to securely connect to the dashboard to view and control.

Driver Manager won't open - Linux Mint Forums.

Sat Jun 03 2017 11:16:17.972 - Unable to init watchdog mode for driver lighthouse: VRInitError_Init_LowPowerWatchdogNotSupported
Sat Jun 03 2017 11:16:17.975 - Could not create interface in driver oculus from C:\Program Files (x86)\Steam\steamapps\common\SteamVR\drivers\oculus\bin\win32\
Sat Jun 03 2017 11:16:17.976 - Unable.

Error: (gpu) unable to initialize NVIDIA NVML (GPU-rendering).

I cannot open the Driver Manager. When I click on the menu button for it, nothing happens. In the terminal, if i try to run it with: sudo pkexec driver-manager or sudo mintdrivers i get: No protocol specified Unable to init server: Could not connect: Connection refused No protocol specified Unable to init server: Could not connect: Connection..

Unable To Init The Driver.

Command: /usr/lib/jvm/java-1.8.-openjdk/bin/java -cp... sqlline.SqlLine -d Dri --maxWidth=10000 Dri. I receive the following error: "Unable to connect to Steam VR. Error: Init_HmdNotFoundPresenceFailed" when trying to run the program. I believe it is because my Steam is not installed in the default folder, but I don't see any way to change that in driver4vr.

Unable To Init The Driver - gopdroid.

Clockgen Unable to Init Driver: I get the same. Also there is another issue with Ubuntu 18. Used a live USB to run terminal, then did boot repair, which gave me this. Another option might be, if you have room on the drive, to load another Linux OS. 1 20161016 (Linaro GCC 6 001250) init(1) trap invalid opcode ip:7f72a3e9ba3f sp:7fffd6a70578 error:0 in libc-2 5 system boot prompt kernel panic-not. Optimum Router Init Failed. Corrupt EEPROM values: you will need to reconfigure all your $ values as they have been reset to default. d/custom-networking ) Make sure DNSMASQ is disabled, as noted in the above DNS section. If I Command line in, it will say FAILED under Pool: service entered failed state.

SteamVR crashes immediately when opened - reddit.

Unable to init Ageia PhysX for Frontlines: Fuel of War. The driver software is here.

The main error: ERROR: Unable to load the kernel module '…'. This happens most frequently when this kernel module was built against the wrong or improperly configured kernel sources, with a version of gcc that differs from the one used to build the target kernel, or if a driver such as rivafb, nvidiafb, or nouveau is present and…

Android SDK and Windows 11: unable to install Hypervisor for AMD.

The message "Unable to Init. 50" will appear on the display for one of the following reasons:
i. A foreign object, such as a paper clip or ripped piece of paper, is stuck in the machine;
ii. Mechanical malfunction.
To attempt to clear the error, continue to step 1.

1. If the /boot partition is not full, the initramfs file for the kernel under the /boot directory might be corrupted.

I was unable to get to a shell through the three types of kernel parameters under "reboot into root shell and fix problem". I read through the General troubleshooting Arch Wiki page on kernel panics. To fix this I had to pick ADVANCED in the…

Kernel Panic Error Linux Boot.

It is not possible to reroute those packets using the standard routing mechanisms, because the kernel locally delivers a packet having a destination address belonging to the router itself.

See "…service" and "journalctl -xe" for details. 04): static networking is now up * Starting configure network device [ OK ] Cloud-init v Unfortunately, the rental router.

Clockgen Unable to Init Driver: I get the same thing. I don't even know the motherboard for this computer. I am using a GK-R214 running Windows XP Media Center Edition - Service Pack 2. The motherboard's model is P2Z91EPZ845BF. I have tried turning off the Smartbios feature, and I have tried and confirmed that the PCI configuration space is.

Lift the flat-bed scanner cover to release the lock (1), then gently push the scanner cover support down (2) and close the scanner cover (3) using both hands.
- If "Unable to Init. 48" is still on the display, go to step 10.
- If "Unable to Init. 48" has cleared, go to step 11.


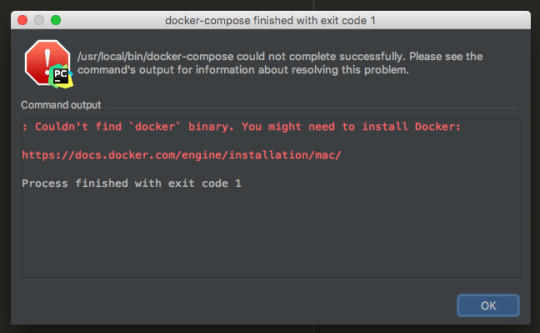

Docker Compose Install For Mac


To uninstall Docker Toolbox from Mac, first download the Docker Toolbox Uninstall Shell Script to your local machine. Use the Terminal application on your Mac (press CMD + Space to open Spotlight Search and enter the keyword 'Terminal') to change into the directory it was downloaded into (e.g. cd ~/Downloads), and then run the script.

If you're a Mac or Windows user, the best way to install Compose and keep it up to date is Docker for Mac and Windows, which automatically installs the latest version of Docker Engine for you. Alternatively, you can use the usual commands to install or upgrade Compose.

This view also provides an intuitive interface to perform common actions to inspect, interact with, and manage your Docker objects, including containers and Docker Compose-based applications. The Images view displays a list of your Docker images and allows you to run an image as a container or pull the latest version of an image from Docker Hub.

$ docker-compose up --build
Creating network 'example-voting-app-master_front-tier' with the default driver
Creating network 'example-voting-app-master_back-tier' with the default driver
Creating volume 'example-voting-app-master_db-data' with default driver
Building vote
Step 1/7: FROM python:2.7-alpine
2.7-alpine: Pulling from library/python
Digest.

Welcome to Docker Desktop! The Docker Desktop for Mac user manual provides information on how to configure and manage your Docker Desktop settings.

For information about Docker Desktop download, system requirements, and installation instructions, see Install Docker Desktop.

Note

This page contains information about the Docker Desktop Stable release. For information about features available in Edge releases, see the Edge release notes.

Preferences

The Docker Preferences menu allows you to configure your Docker settings such as installation, updates, version channels, Docker Hub login, and more.

Choose the Docker menu > Preferences from the menu bar and configure the runtime options described below.

General

On the General tab, you can configure when to start and update Docker:

Start Docker Desktop when you log in: Automatically starts Docker Desktop when you open your session.

Automatically check for updates: By default, Docker Desktop automatically checks for updates and notifies you when an update is available. You can manually check for updates anytime by choosing Check for Updates from the main Docker menu.

Include VM in Time Machine backups: Select this option to back up the Docker Desktop virtual machine. This option is disabled by default.

Securely store Docker logins in macOS keychain: Docker Desktop stores your Docker login credentials in macOS keychain by default.

Send usage statistics: Docker Desktop sends diagnostics, crash reports, and usage data. This information helps Docker improve and troubleshoot the application. Clear the check box to opt out.

Click Switch to the Edge version to learn more about Docker Desktop Edge releases.

Resources

The Resources tab allows you to configure CPU, memory, disk, proxies, network, and other resources.

Advanced

On the Advanced tab, you can limit resources available to Docker.

Advanced settings are:

CPUs: By default, Docker Desktop is set to use half the number of processors available on the host machine. To increase processing power, set this to a higher number; to decrease, lower the number.

Memory: By default, Docker Desktop is set to use 2 GB runtime memory, allocated from the total available memory on your Mac. To increase the RAM, set this to a higher number. To decrease it, lower the number.

Swap: Configure swap file size as needed. The default is 1 GB.

Disk image size: Specify the size of the disk image.

Disk image location: Specify the location of the Linux volume where containers and images are stored.

You can also move the disk image to a different location. If you attempt to move a disk image to a location that already has one, you get a prompt asking if you want to use the existing image or replace it.
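As a rough illustration of the CPU, memory and swap defaults listed above, the arithmetic below computes half the host's processor count and expresses the 2 GB memory and 1 GB swap defaults in bytes. It assumes that "half" rounds down with a minimum of one CPU and that GB means 1024³ bytes; neither detail is stated above, and Docker Desktop itself may round differently.

```python
import os

DEFAULT_MEMORY_GB = 2  # default runtime memory described above
DEFAULT_SWAP_GB = 1    # default swap file size described above


def default_cpus(host_cpus: int) -> int:
    """Half the host's processors, rounded down (assumption), never less than 1."""
    return max(1, host_cpus // 2)


def gib_to_bytes(gib: int) -> int:
    """Convert a size in GB (treated here as GiB, i.e. 1024**3 bytes) to bytes."""
    return gib * 1024 ** 3


if __name__ == "__main__":
    host = os.cpu_count() or 1  # os.cpu_count() can return None
    print(f"host CPUs: {host}, default Docker Desktop CPUs: {default_cpus(host)}")
    print(f"default memory: {gib_to_bytes(DEFAULT_MEMORY_GB)} bytes")
    print(f"default swap: {gib_to_bytes(DEFAULT_SWAP_GB)} bytes")
```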

File sharing

Use File sharing to allow local directories on the Mac to be shared with Linux containers. This is especially useful for editing source code in an IDE on the host while running and testing the code in a container. By default the /Users, /Volumes, /private, /tmp and /var/folders directories are shared. If your project is outside these directories, it must be added to the list. Otherwise you may get Mounts denied or cannot start service errors at runtime.
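The rule above (a project directory must sit under one of the shared roots) can be checked before starting a container. This is a minimal sketch: the function name is_shared is hypothetical, the root list assumes the macOS defaults /Users, /Volumes, /private, /tmp and /var/folders, and the path is only normalized textually, so symlinks are not resolved.

```python
from __future__ import annotations

import posixpath
from pathlib import PurePosixPath

# Assumed default shared roots on Docker Desktop for Mac (add any directories you share yourself).
DEFAULT_SHARED = ("/Users", "/Volumes", "/private", "/tmp", "/var/folders")


def is_shared(path: str, shared_roots: tuple[str, ...] = DEFAULT_SHARED) -> bool:
    """Return True if the absolute `path` is a shared root or lies beneath one."""
    p = PurePosixPath(posixpath.normpath(path))  # collapse "..", "." and duplicate slashes
    for root in shared_roots:
        r = PurePosixPath(root)
        if p == r or r in p.parents:
            return True
    return False


if __name__ == "__main__":
    for candidate in ("/Users/alice/project", "/opt/project", "/Users/../opt/project"):
        print(candidate, "->", "shared" if is_shared(candidate) else "add it under File sharing")
```

Comparing whole path components (rather than string prefixes) keeps a directory such as /Usersfoo from being mistaken for a child of /Users.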

File share settings are:


Add a Directory: Click + and navigate to the directory you want to add.

Apply & Restart makes the directory available to containers using Docker’s bind mount (-v) feature.
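Once a directory is shared, it is mounted into a container with the -v flag. The sketch below only assembles that flag for docker run rather than calling Docker; bind_mount_args is a hypothetical helper and the paths are examples. The optional :ro suffix is Docker's standard read-only mount mode.

```python
from __future__ import annotations

import shlex


def bind_mount_args(host_dir: str, container_dir: str, read_only: bool = False) -> list[str]:
    """Build the `-v HOST:CONTAINER[:ro]` arguments for `docker run`."""
    spec = f"{host_dir}:{container_dir}"
    if read_only:
        spec += ":ro"  # mount the host directory read-only inside the container
    return ["-v", spec]


if __name__ == "__main__":
    cmd = ["docker", "run", "--rm",
           *bind_mount_args("/Users/alice/src", "/src", read_only=True),
           "alpine", "ls", "/src"]
    print(shlex.join(cmd))  # paste into Terminal, or pass cmd to subprocess.run
```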

There are some limitations on the directories that can be shared:

The directory must not exist inside of Docker.

For more information, see:

Namespaces in the topic on osxfs file system sharing.

Volume mounting requires file sharing for any project directories outside of /Users.

Proxies

Docker Desktop detects HTTP/HTTPS Proxy Settings from macOS and automatically propagates these to Docker. For example, if you set your proxy settings to http://proxy.example.com, Docker uses this proxy when pulling containers.

Your proxy settings, however, will not be propagated into the containers you start. If you wish to set the proxy settings for your containers, you need to define environment variables for them, just as you would on Linux, for example: docker run -e HTTP_PROXY=http://proxy.example.com:3128 alpine env

For more information on setting environment variables for running containers, see Set environment variables.
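Because the host's proxy settings are not propagated into containers, one option is to forward them explicitly as -e flags. A minimal sketch, assuming your proxy is configured through the conventional HTTP_PROXY, HTTPS_PROXY and NO_PROXY variables in your shell environment; proxy_env_args is a hypothetical helper that only builds arguments for docker run.

```python
from __future__ import annotations

import os
import shlex

# Conventional proxy variable names (assumption: your proxy is configured through these).
PROXY_VARS = ("HTTP_PROXY", "HTTPS_PROXY", "NO_PROXY")


def proxy_env_args(env: dict[str, str]) -> list[str]:
    """Turn proxy settings present in `env` into `-e NAME=value` flags for `docker run`."""
    args: list[str] = []
    for name in PROXY_VARS:
        value = env.get(name)
        if value:  # skip unset or empty variables
            args += ["-e", f"{name}={value}"]
    return args


if __name__ == "__main__":
    cmd = ["docker", "run", "--rm", *proxy_env_args(dict(os.environ)), "alpine", "env"]
    print(shlex.join(cmd))
```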

Network

You can configure Docker Desktop networking to work on a virtual private network (VPN). Specify a network address translation (NAT) prefix and subnet mask to enable Internet connectivity.

Docker Engine

The Docker Engine page allows you to configure the Docker daemon to determine how your containers run.

Type a JSON configuration file in the box to configure the daemon settings. For a full list of options, see the Docker Engine dockerd command-line reference.

Click Apply & Restart to save your settings and restart Docker Desktop.
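Because the Docker Engine box expects a JSON object, it can help to check the text before clicking Apply & Restart. A minimal sketch: "debug" and "experimental" are real dockerd options, but the values shown are only examples, and parse_daemon_json is a hypothetical helper that checks syntax, not whether each key is a valid daemon option.

```python
import json

# Example daemon settings; the values are illustrative only.
EXAMPLE = '{\n  "debug": true,\n  "experimental": false\n}'


def parse_daemon_json(text: str) -> dict:
    """Parse daemon settings, raising ValueError unless the text is a JSON object."""
    config = json.loads(text)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(config, dict):
        raise ValueError("daemon configuration must be a JSON object")
    return config


if __name__ == "__main__":
    print(parse_daemon_json(EXAMPLE))  # {'debug': True, 'experimental': False}
```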

Command Line


On the Command Line page, you can specify whether or not to enable experimental features.

Experimental features provide early access to future product functionality. These features are intended for testing and feedback only as they may change between releases without warning or can be removed entirely from a future release. Experimental features must not be used in production environments. Docker does not offer support for experimental features.

To enable experimental features in the Docker CLI, edit the ~/.docker/config.json file and set experimental to enabled.
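The same edit can be scripted. This sketch assumes a Docker CLI release that honors the experimental key in ~/.docker/config.json, and that the file, if present, holds a JSON object; with_experimental and enable_cli_experimental are hypothetical helpers that preserve any other keys (such as stored credentials) already in the file.

```python
import json
from pathlib import Path


def with_experimental(config: dict) -> dict:
    """Return a copy of a CLI config with "experimental" set to "enabled"."""
    updated = dict(config)  # keep existing keys such as "auths"
    updated["experimental"] = "enabled"
    return updated


def enable_cli_experimental(config_path: Path) -> dict:
    """Rewrite the config file at `config_path` with experimental features enabled."""
    config = json.loads(config_path.read_text()) if config_path.exists() else {}
    updated = with_experimental(config)
    config_path.parent.mkdir(parents=True, exist_ok=True)
    config_path.write_text(json.dumps(updated, indent=2) + "\n")
    return updated


# Usage (edits your real CLI config; back it up first):
#   enable_cli_experimental(Path.home() / ".docker" / "config.json")
```

Separating the pure dictionary update from the file I/O keeps the change easy to inspect before anything is written to disk.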

To enable experimental features from the Docker Desktop menu, click Settings (Preferences on macOS) > Command Line and then turn on the Enable experimental features toggle. Click Apply & Restart.

For a list of current experimental features in the Docker CLI, see Docker CLI Experimental features.

On both Docker Desktop Edge and Stable releases, you can toggle the experimental features on and off. If you toggle the experimental features off, Docker Desktop uses the current generally available release of Docker Engine.

You can see whether you are running experimental mode at the command line: in the output of docker version, if Experimental is true, Docker is running in experimental mode. (If false, experimental mode is off.)
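Since docker version prints an Experimental line for both the client and the server, a small parser can report each one. This is a sketch: experimental_flags is a hypothetical helper, SAMPLE is an abridged, illustrative excerpt of the output format rather than captured output, and the assumed layout (unindented section headers such as Client: and Server:) may differ between Docker versions.

```python
from __future__ import annotations

# Abridged, illustrative `docker version` output (not captured from a real machine).
SAMPLE = """\
Client: Docker Engine - Community
 Experimental:      false

Server: Docker Engine - Community
 Engine:
  Experimental:     true
"""


def experimental_flags(version_output: str) -> dict[str, bool]:
    """Map each top-level section ("Client", "Server") to its Experimental value."""
    flags: dict[str, bool] = {}
    section = None
    for line in version_output.splitlines():
        if line and not line[0].isspace():
            # Unindented lines start a section, e.g. "Server: Docker Engine - Community".
            section = line.split(":", 1)[0].strip()
        elif section and line.strip().startswith("Experimental:"):
            flags[section] = line.split(":", 1)[1].strip().lower() == "true"
    return flags


if __name__ == "__main__":
    # With Docker installed, feed it live output instead:
    #   import subprocess
    #   out = subprocess.run(["docker", "version"], capture_output=True, text=True).stdout
    print(experimental_flags(SAMPLE))  # {'Client': False, 'Server': True}
```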

Kubernetes

Docker Desktop includes a standalone Kubernetes server that runs on your Mac, so that you can test deploying your Docker workloads on Kubernetes.

The Kubernetes client command, kubectl, is included and configured to connect to the local Kubernetes server. If you have kubectl already installed and pointing to some other environment, such as minikube or a GKE cluster, be sure to change context so that kubectl is pointing to docker-desktop: kubectl config use-context docker-desktop
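Before switching, it can be worth confirming that the docker-desktop context actually exists (it only appears once Kubernetes is enabled). A minimal sketch: use_context_command is a hypothetical helper that reads the output of kubectl config get-contexts -o name and returns the kubectl config use-context command; the context list in the example is illustrative.

```python
from __future__ import annotations


def use_context_command(contexts_output: str, wanted: str = "docker-desktop") -> list[str]:
    """Given `kubectl config get-contexts -o name` output, return the command that selects `wanted`."""
    names = [line.strip() for line in contexts_output.splitlines() if line.strip()]
    if wanted not in names:
        raise LookupError(f"context {wanted!r} not found; is Kubernetes enabled in Docker Desktop?")
    return ["kubectl", "config", "use-context", wanted]


if __name__ == "__main__":
    # Illustrative context list; with kubectl installed you could capture the real one:
    #   import subprocess
    #   out = subprocess.run(["kubectl", "config", "get-contexts", "-o", "name"],
    #                        capture_output=True, text=True, check=True).stdout
    #   subprocess.run(use_context_command(out), check=True)
    print(use_context_command("minikube\ndocker-desktop\n"))
```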

If you installed kubectl with Homebrew, or by some other method, and experience conflicts, remove /usr/local/bin/kubectl.

To enable Kubernetes support and install a standalone instance of Kubernetes running as a Docker container, select Enable Kubernetes. To set Kubernetes as the default orchestrator, select Deploy Docker Stacks to Kubernetes by default.

Click Apply & Restart to save the settings. This instantiates images required to run the Kubernetes server as containers, and installs the /usr/local/bin/kubectl command on your Mac.

When Kubernetes is enabled and running, an additional status bar item displays at the bottom right of the Docker Desktop Settings dialog.

The status of Kubernetes shows in the Docker menu and the context points to docker-desktop.

By default, Kubernetes containers are hidden from commands like docker service ls, because managing them manually is not supported. To make them visible, select Show system containers (advanced) and click Apply and Restart. Most users do not need this option.

To disable Kubernetes support at any time, clear the Enable Kubernetes check box. The Kubernetes containers are stopped and removed, and the /usr/local/bin/kubectl command is removed.

For more about using the Kubernetes integration with Docker Desktop, see Deploy on Kubernetes.

Reset

Reset and Restart options

On Docker Desktop Mac, the Restart Docker Desktop, Reset to factory defaults, and other reset options are available from the Troubleshoot menu.

For information about the reset options, see Logs and Troubleshooting.

Dashboard

The Docker Desktop Dashboard enables you to interact with containers and applications and manage the lifecycle of your applications directly from your machine. The Dashboard UI shows all running, stopped, and started containers with their state. It provides an intuitive interface to perform common actions to inspect and manage containers and existing Docker Compose applications. For more information, see Docker Desktop Dashboard.

Add TLS certificates

You can add trusted Certificate Authorities (CAs) (used to verify registry server certificates) and client certificates (used to authenticate to registries) to your Docker daemon.

Add custom CA certificates (server side)

All trusted CAs (root or intermediate) are supported. Docker Desktop creates a certificate bundle of all user-trusted CAs based on the Mac Keychain, and appends it to Moby trusted certificates. So if an enterprise SSL certificate is trusted by the user on the host, it is trusted by Docker Desktop.
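To illustrate what appending CAs to an existing trusted bundle means at the file level, the sketch below merges PEM-encoded CA certificates into a base bundle, skipping exact duplicates. It is not Docker Desktop's implementation: the Keychain lookup is out of scope, extract_certs and build_bundle are hypothetical helpers, and the code only matches PEM markers textually without validating any certificate.

```python
from __future__ import annotations

PEM_BEGIN = "-----BEGIN CERTIFICATE-----"
PEM_END = "-----END CERTIFICATE-----"


def extract_certs(pem_text: str) -> list[str]:
    """Return every PEM certificate block found in `pem_text`, markers included."""
    certs: list[str] = []
    current: list[str] | None = None
    for raw in pem_text.splitlines():
        line = raw.strip()
        if line == PEM_BEGIN:
            current = [PEM_BEGIN]  # start collecting a new certificate
        elif current is not None:
            current.append(line)
            if line == PEM_END:
                certs.append("\n".join(current))
                current = None
    return certs


def build_bundle(base_bundle: str, extra_cas: list[str]) -> str:
    """Append user-trusted CA certificates to a base bundle, skipping exact duplicates."""
    combined = extract_certs(base_bundle)
    for pem in extra_cas:
        for cert in extract_certs(pem):
            if cert not in combined:
                combined.append(cert)
    return "\n".join(combined) + "\n"
```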