#Kubernetes command line guide


Kubectl get context: List Kubernetes cluster connections


kubectl, a command line tool, interacts directly with the Kubernetes API server. Its versatility spans a wide range of operations, from listing the cluster connections defined in your kubeconfig with kubectl config get-contexts to manipulating resources with an assortment of other kubectl commands. Table of contents: Comprehending Fundamental Kubectl Commands; Working with More Than One Kubernetes Cluster; Navigating Contexts with kubectl…
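The post's title uses the shorthand "kubectl get context". The subcommand that actually lists connections is kubectl config get-contexts, and kubectl config use-context <name> switches between them. Both commands read the contexts section of your kubeconfig file. The Go sketch below shows what that listing amounts to. It makes three assumptions: the kubeconfig is available in JSON form (kubectl accepts JSON as well as YAML), the function name listContexts is hypothetical, and the sample context names are placeholders.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// kubeConfig models only the kubeconfig fields needed to list contexts.
// kubeconfig files are usually YAML; this sketch parses the equivalent JSON
// form so that it needs nothing beyond the Go standard library.
type kubeConfig struct {
	CurrentContext string         `json:"current-context"`
	Contexts       []namedContext `json:"contexts"`
}

// namedContext pairs a context name with the cluster, user, and namespace it selects.
type namedContext struct {
	Name    string `json:"name"`
	Context struct {
		Cluster   string `json:"cluster"`
		User      string `json:"user"`
		Namespace string `json:"namespace"`
	} `json:"context"`
}

// listContexts returns one line per context in file order, marking the
// current context with "*", loosely mirroring `kubectl config get-contexts`.
func listContexts(raw []byte) ([]string, error) {
	var cfg kubeConfig
	if err := json.Unmarshal(raw, &cfg); err != nil {
		return nil, fmt.Errorf("parse kubeconfig: %w", err)
	}
	lines := make([]string, 0, len(cfg.Contexts))
	for _, c := range cfg.Contexts {
		marker := " "
		if c.Name == cfg.CurrentContext {
			marker = "*"
		}
		lines = append(lines, fmt.Sprintf("%s %s (cluster=%s, user=%s)",
			marker, c.Name, c.Context.Cluster, c.Context.User))
	}
	return lines, nil
}

func main() {
	// Hypothetical kubeconfig with two contexts; all names are placeholders.
	raw := []byte(`{
		"current-context": "homelab",
		"contexts": [
			{"name": "homelab", "context": {"cluster": "homelab-cluster", "user": "admin"}},
			{"name": "staging", "context": {"cluster": "staging-cluster", "user": "dev"}}
		]
	}`)
	lines, err := listContexts(raw)
	if err != nil {
		panic(err)
	}
	for _, line := range lines {
		fmt.Println(line)
	}
}
```

Running kubectl config use-context staging would rewrite current-context in the file, and the "*" marker would then move to the staging entry.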


Ansible Collections: Extending Ansible’s Capabilities

Ansible is a powerful automation tool used for configuration management, application deployment, and task automation. One of the key features that enhances its flexibility and extensibility is the concept of Ansible Collections. In this blog post, we'll explore what Ansible Collections are, how to create and use them, and look at some popular collections and their use cases.

Introduction to Ansible Collections

Ansible Collections are a way to package and distribute Ansible content. This content can include playbooks, roles, modules, plugins, and more. Collections allow users to organize their Ansible content and share it more easily, making it simpler to maintain and reuse.

Key Features of Ansible Collections:

Modularity: Collections break down Ansible content into modular components that can be independently developed, tested, and maintained.

Distribution: Collections can be distributed via Ansible Galaxy or private repositories, enabling easy sharing within teams or the wider Ansible community.

Versioning: Collections support versioning, allowing users to specify and depend on specific versions of a collection.

How to Create and Use Collections in Your Projects

Creating and using Ansible Collections involves a few key steps. Here’s a guide to get you started:

1. Setting Up Your Collection

To create a new collection, you can use the ansible-galaxy command-line tool:

ansible-galaxy collection init my_namespace.my_collection

This command sets up a basic directory structure for your collection:

my_namespace/
└── my_collection/
    ├── docs/
    ├── plugins/
    │   ├── modules/
    │   ├── inventory/
    │   └── ...
    ├── roles/
    ├── playbooks/
    ├── README.md
    └── galaxy.yml

2. Adding Content to Your Collection

Populate your collection with the necessary content. For example, you can add roles, modules, and plugins under the respective directories. Update the galaxy.yml file with metadata about your collection.

3. Building and Publishing Your Collection

Once your collection is ready, you can build it using the following command:

ansible-galaxy collection build

This command creates a tarball of your collection, which you can then publish to Ansible Galaxy or a private repository:

ansible-galaxy collection publish my_namespace-my_collection-1.0.0.tar.gz

4. Using Collections in Your Projects

To use a collection in your Ansible project, specify it in your requirements.yml file:

collections:
  - name: my_namespace.my_collection
    version: 1.0.0

Then, install the collection using:

ansible-galaxy collection install -r requirements.yml

You can now use the content from the collection in your playbooks:

---
- name: Example Playbook
  hosts: localhost
  tasks:
    - name: Use a module from the collection
      my_namespace.my_collection.my_module:
        param: value

Popular Collections and Their Use Cases

Here are some popular Ansible Collections and how they can be used:

1. community.general

Description: A collection of modules, plugins, and roles that are not tied to any specific provider or technology.

Use Cases: General-purpose tasks like file manipulation, network configuration, and user management.

2. amazon.aws

Description: Provides modules and plugins for managing AWS resources.

Use Cases: Automating AWS infrastructure, such as EC2 instances, S3 buckets, and RDS databases.

3. ansible.posix

Description: A collection of modules for managing POSIX systems.

Use Cases: Tasks specific to Unix-like systems, such as managing ACLs, SSH authorized keys, mount points, and sysctl settings.

4. cisco.ios

Description: Contains modules and plugins for automating Cisco IOS devices.

Use Cases: Network automation for Cisco routers and switches, including configuration management and backup.

5. kubernetes.core

Description: Provides modules for managing Kubernetes resources.

Use Cases: Deploying and managing Kubernetes applications, services, and configurations.

Conclusion

Ansible Collections significantly enhance the modularity, distribution, and reusability of Ansible content. By understanding how to create and use collections, you can streamline your automation workflows and share your work with others more effectively. Explore popular collections to leverage existing solutions and extend Ansible’s capabilities in your projects.

For more details, visit www.qcsdclabs.com


SRE Roadmap: Your Complete Guide to Becoming a Site Reliability Engineer in 2025

In today’s rapidly evolving tech landscape, Site Reliability Engineering (SRE) has become one of the most in-demand roles across industries. As organizations scale and systems become more complex, the need for professionals who can bridge the gap between development and operations is critical. If you’re looking to start or transition into a career in SRE, this comprehensive SRE roadmap will guide you step by step in 2025.

Why Follow an SRE Roadmap?

The field of SRE is broad, encompassing skills from DevOps, software engineering, cloud computing, and system administration. A well-structured SRE roadmap helps you:

Understand what skills are essential at each stage.

Avoid wasting time on non-relevant tools or technologies.

Stay up to date with industry standards and best practices.

Get job-ready with the right certifications and hands-on experience.

SRE Roadmap: Step-by-Step Guide

🔹 Phase 1: Foundation (Beginner Level)

Key Focus Areas:

Linux Fundamentals – Learn the command line, shell scripting, and process management.

Networking Basics – Understand DNS, HTTP/HTTPS, TCP/IP, firewalls, and load balancing.

Version Control – Master Git and GitHub for collaboration.

Programming Languages – Start with Python or Go for scripting and automation tasks.

Tools to Learn:

Git

Visual Studio Code

Postman (for APIs)

Recommended Resources:

"The Linux Command Line" by William Shotts

GitHub Skills (formerly GitHub Learning Lab)

🔹 Phase 2: Core SRE Skills (Intermediate Level)

Key Focus Areas:

Configuration Management – Learn tools like Ansible, Puppet, or Chef.

Containers & Orchestration – Understand Docker and Kubernetes.

CI/CD Pipelines – Use Jenkins, GitLab CI, or GitHub Actions.

Monitoring & Logging – Get familiar with Prometheus, Grafana, ELK Stack, or Datadog.

Cloud Platforms – Gain hands-on experience with AWS, GCP, or Azure.

Certifications to Consider:

AWS Certified SysOps Administrator

Certified Kubernetes Administrator (CKA)

Google Cloud Professional Cloud DevOps Engineer

🔹 Phase 3: Advanced Practices (Expert Level)

Key Focus Areas:

Site Reliability Principles – Learn about SLIs, SLOs, SLAs, and Error Budgets.

Incident Management – Practice runbooks, on-call rotations, and postmortems.

Infrastructure as Code (IaC) – Master Terraform or Pulumi.

Scalability and Resilience Engineering – Understand fault tolerance, redundancy, and chaos engineering.

Tools to Explore:

Terraform

Chaos Monkey (for chaos testing)

PagerDuty / Opsgenie

Real-World Experience Matters

While theory is important, hands-on experience is what truly sets you apart. Here are some tips:

Set up your own Kubernetes cluster.

Contribute to open-source SRE tools.

Create a portfolio of automation scripts and dashboards.

Simulate incidents to test your monitoring setup.

Final Thoughts

Following this SRE roadmap will provide you with a clear and structured path to break into or grow in the field of Site Reliability Engineering. With the right mix of foundational skills, real-world projects, and continuous learning, you'll be ready to take on the challenges of building reliable, scalable systems.

Ready to Get Certified?

Take your next step with our SRE Certification Course and fast-track your career with expert training, real-world projects, and globally recognized credentials.


Docker Tutorial for Beginners: Learn Docker Step by Step

What is Docker?

Docker is an open-source platform that enables developers to automate the deployment of applications inside lightweight, portable containers. These containers include everything the application needs to run—code, runtime, system tools, libraries, and settings—so that it can work reliably in any environment.

Before Docker, developers faced the age-old problem: “It works on my machine!” Docker solves this by providing a consistent runtime environment across development, testing, and production.

Why Learn Docker?

Docker is used by organizations of all sizes to simplify software delivery and improve scalability. As more companies shift to microservices, cloud computing, and DevOps practices, Docker has become a must-have skill. Learning Docker helps you:

Package applications quickly and consistently

Deploy apps across different environments with confidence

Reduce system conflicts and configuration issues

Improve collaboration between development and operations teams

Work more effectively with modern cloud platforms like AWS, Azure, and GCP

Who Is This Docker Tutorial For?

This Docker tutorial is designed for absolute beginners. Whether you're a developer, system administrator, QA engineer, or DevOps enthusiast, you’ll find step-by-step instructions to help you:

Understand the basics of Docker

Install Docker on your machine

Create and manage Docker containers

Build custom Docker images

Use Docker commands and best practices

No prior knowledge of containers is required, but basic familiarity with the command line and a programming language (like Python, Java, or Node.js) will be helpful.

What You Will Learn: Step-by-Step Breakdown

1. Introduction to Docker

We start with the fundamentals. You’ll learn:

What Docker is and why it’s useful

The difference between containers and virtual machines

Key Docker components: Docker Engine, Docker Hub, Dockerfile, Docker Compose

2. Installing Docker

Next, we guide you through installing Docker on:

Windows

macOS

Linux

You’ll set up Docker Desktop or Docker CLI and run your first container using the hello-world image.

3. Working with Docker Images and Containers

You’ll explore:

How to pull images from Docker Hub

How to run containers using docker run

Inspecting containers with docker ps, docker inspect, and docker logs

Stopping and removing containers

4. Building Custom Docker Images

You’ll learn how to:

Write a Dockerfile

Use docker build to create a custom image

Add dependencies and environment variables

Optimize Docker images for performance

5. Docker Volumes and Networking

Understand how to:

Use volumes to persist data outside containers

Create custom networks for container communication

Link multiple containers (e.g., a Node.js app with a MongoDB container)

6. Docker Compose (Bonus Section)

Docker Compose lets you define multi-container applications. You’ll learn how to:

Write a docker-compose.yml file

Start multiple services with a single command

Manage application stacks easily

Real-World Examples Included

Throughout the tutorial, we use real-world examples to reinforce each concept. You’ll deploy a simple web application using Docker, connect it to a database, and scale services with Docker Compose.

Example Projects:

Dockerizing a static HTML website

Creating a REST API with Node.js and Express inside a container

Running a MySQL or MongoDB database container

Building a full-stack web app with Docker Compose

Best Practices and Tips

As you progress, you’ll also learn:

Naming conventions for containers and images

How to clean up unused images and containers

Tagging and pushing images to Docker Hub

Security basics when using Docker in production

What’s Next After This Tutorial?

After completing this Docker tutorial, you’ll be well-equipped to:

Use Docker in personal or professional projects

Learn Kubernetes and container orchestration

Apply Docker in CI/CD pipelines

Deploy containers to cloud platforms

Conclusion

Docker is an essential tool in the modern developer's toolbox. By learning Docker step by step in this beginner-friendly tutorial, you’ll gain the skills and confidence to build, deploy, and manage applications efficiently and consistently across different environments.

Whether you’re building simple web apps or complex microservices, Docker provides the flexibility, speed, and scalability needed for success. So dive in, follow along with the hands-on examples, and start your journey to mastering containerization with Docker on tpoint-tech!


This book is a comprehensive guide by expert Luca Berton that equips readers with the skills to automate configuration management, deployment, and orchestration tasks proficiently. Starting with Ansible basics, the book covers workflow, architecture, and environment setup, then progresses to core tasks such as provisioning, configuration management, application deployment, automation, and orchestration. Advanced topics include Ansible Automation Platform, Morpheus, cloud computing (with an emphasis on Amazon Web Services), and Kubernetes container orchestration. The book addresses common challenges, offers best practices for successful automation, and guides readers in developing a beginner-friendly playbook using Ansible code. With Ansible's widespread adoption and market demand, this guide positions readers as sought-after experts in infrastructure automation.

What you will learn

● Gain a comprehensive knowledge of Ansible and its practical applications in Linux and Windows environments.

● Set up and configure Ansible environments, execute automation tasks, and manage configurations.

● Learn advanced techniques such as using the Ansible Automation Platform for improved performance.

● Acquire troubleshooting skills, implement best practices, and design efficient playbooks to streamline operations.

Who this book is for

This book is aimed at beginners as well as developers who wish to learn Ansible and get the most out of it for automating their tasks. Whether you are a system administrator, network administrator, developer, or manager, this book caters to all audiences involved in IT operations. No prior knowledge of Ansible is required, as the book starts with the basics and gradually progresses to advanced topics. However, familiarity with Linux, command-line interfaces, and basic system administration concepts would be beneficial.

Publisher: BPB Publications (20 July 2023)

Language: English

Paperback: 364 pages

ISBN-10: 9355515596

ISBN-13: 978-9355515599

Reading age: 15 years and up

Item weight: 690 g

Dimensions: 19.05 x 2.13 x 23.5 cm

Country of origin: India


Getting Started with Google Kubernetes Engine: Your Gateway to Cloud-Native Greatness

After spending over 8 years deep in the trenches of cloud engineering and DevOps, I can tell you one thing for sure: if you're serious about scalability, flexibility, and real cloud-native application deployment, Google Kubernetes Engine (GKE) is where the magic happens.

Whether you’re new to Kubernetes or just exploring managed container platforms, getting started with Google Kubernetes Engine is one of the smartest moves you can make in your cloud journey.

"Containers are cool. Orchestrated containers? Game-changing."

🚀 What is Google Kubernetes Engine (GKE)?

Google Kubernetes Engine is a fully managed Kubernetes platform that runs on top of Google Cloud. GKE simplifies deploying, managing, and scaling containerized apps using Kubernetes—without the overhead of maintaining the control plane.

Why is this a big deal?

Because Kubernetes is notoriously powerful and notoriously complex. With GKE, Google handles all the heavy lifting—from cluster provisioning to upgrades, logging, and security.

"GKE takes the complexity out of Kubernetes so you can focus on building, not babysitting clusters."

🧭 Why Start with GKE?

If you're a developer, DevOps engineer, or cloud architect looking to:

Deploy scalable apps across hybrid/multi-cloud

Automate CI/CD workflows

Optimize infrastructure with autoscaling & spot instances

Run stateless or stateful microservices seamlessly

Then GKE is your launchpad.

Here’s what makes GKE shine:

Auto-upgrades & auto-repair for your clusters

Built-in security with Shielded GKE Nodes and Binary Authorization

Deep integration with Google Cloud IAM, VPC, and Logging

Autopilot mode for hands-off resource management

Native support for Anthos and Istio-based service meshes

"With GKE, it's not about managing containers—it's about unlocking agility at scale."

🔧 Getting Started with Google Kubernetes Engine

Ready to dive in? Here's a simple flow to kick things off:

Set up your Google Cloud project

Enable Kubernetes Engine API

Install the gcloud CLI and the Kubernetes command-line tool (kubectl)

Create a GKE cluster via console or command line

Deploy your app using Kubernetes manifests or Helm

Monitor, scale, and manage using GKE dashboard, Cloud Monitoring, and Cloud Logging

If you're using GKE Autopilot, Google manages your node infrastructure automatically—so you only manage your apps.

“Don’t let infrastructure slow your growth. Let GKE scale as you scale.”

🔗 Must-Read Resources to Kickstart GKE

👉 GKE Quickstart Guide – Google Cloud

👉 Best Practices for GKE – Google Cloud

👉 Anthos and GKE Integration

👉 GKE Autopilot vs Standard Clusters

👉 Google Cloud Kubernetes Learning Path – NetCom Learning

🧠 Real-World GKE Success Stories

A FinTech startup used GKE Autopilot to run microservices with zero infrastructure overhead

A global media company scaled video streaming workloads across continents in hours

A university deployed its LMS using GKE and reduced downtime by 80% during peak exam seasons

"You don’t need a huge ops team to build a global app. You just need GKE."

🎯 Final Thoughts

Getting started with Google Kubernetes Engine is like unlocking a fast track to modern app delivery. Whether you're running 10 containers or 10,000, GKE gives you the tools, automation, and scale to do it right.

With Google Cloud’s ecosystem—from Cloud Build to Artifact Registry to operations suite—GKE is more than just Kubernetes. It’s your platform for innovation.

“Containers are the future. GKE is the now.”

So fire up your first cluster. Launch your app. And let GKE do the heavy lifting while you focus on what really matters—shipping great software.


Why Linode Accounts Are the Best Choice and Where to Buy Them

Linode has become a trusted name in the cloud hosting industry, offering high-quality services tailored for developers, businesses, and enterprises seeking reliable, scalable, and secure infrastructure. With its competitive pricing, responsive customer support, and a wide range of features, Linode accounts are increasingly popular among IT professionals. If you're wondering why Linode is the best choice and where you can buy a Linode account safely, this article covers both questions.

Why Linode Accounts Are the Best Choice

1. Reliable Infrastructure

Linode is renowned for its robust and reliable infrastructure. With data centers located worldwide, it ensures high uptime and optimal performance. Businesses that rely on Linode accounts benefit from a stable environment for hosting applications, websites, and services.

Global Data Centers: Linode operates in 11 data centers worldwide, offering low-latency connections and redundancy.

99.99% Uptime SLA: Linode guarantees near-perfect uptime, making it an excellent choice for mission-critical applications.
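To make the 99.99% figure concrete, the arithmetic below gives the downtime it allows. It assumes a 30-day month and a 365-day year. The actual measurement period, exclusions, and credits are set by the terms of Linode's SLA, not by this calculation.

```latex
\begin{aligned}
(1 - 0.9999) \times 30 \times 24 \times 60\ \text{min} &= 0.0001 \times 43\,200\ \text{min} = 4.32\ \text{min per month} \\
(1 - 0.9999) \times 365 \times 24 \times 60\ \text{min} &= 0.0001 \times 525\,600\ \text{min} = 52.56\ \text{min per year}
\end{aligned}
```

So "near-perfect" uptime still permits a few minutes of unavailability each month.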

2. Cost-Effective Pricing

Linode provides affordable pricing options compared to many other cloud providers. Its simple and transparent pricing structure allows users to plan their budgets effectively.

No Hidden Costs: Users pay only for what they use, with no unexpected charges.

Flexible Plans: From shared CPU instances to dedicated servers, Linode offers plans starting as low as $5 per month, making it suitable for businesses of all sizes.

3. Ease of Use

One of the standout features of Linode accounts is their user-friendly interface. The platform is designed to cater to beginners and seasoned developers alike.

Intuitive Dashboard: Manage your servers, monitor performance, and deploy applications easily.

One-Click Apps: Deploy popular applications like WordPress, Drupal, or databases with just one click.

4. High Performance

Linode ensures high performance through cutting-edge technology. Its SSD storage, fast processors, and optimized network infrastructure ensure lightning-fast speeds.

SSD Storage: All Linode plans come with SSDs for faster data access and improved performance.

Next-Generation Hardware: Regular updates to hardware ensure users benefit from the latest innovations.

5. Customizability and Scalability

Linode offers unparalleled flexibility, allowing users to customize their servers based on specific needs.

Custom Configurations: Tailor your server environment, operating system, and software stack.

Scalable Solutions: Scale up or down depending on your resource requirements, ensuring cost efficiency.

6. Developer-Friendly Tools

Linode is a developer-focused platform with robust tools and APIs that simplify deployment and management.

CLI and API Access: Automate server management tasks with Linode’s command-line interface and powerful APIs.

DevOps Ready: Supports tools like Kubernetes, Docker, and Terraform for seamless integration into CI/CD pipelines.

7. Exceptional Customer Support

Linode’s customer support is often highlighted as one of its strongest assets. Available 24/7, the support team assists users with technical and account-related issues.

Quick Response Times: Get answers within minutes through live chat or ticketing systems.

Extensive Documentation: Access tutorials, guides, and forums to resolve issues independently.

8. Security and Compliance

Linode prioritizes user security by providing features like DDoS protection, firewalls, and two-factor authentication.

DDoS Protection: Prevent downtime caused by malicious attacks.

Compliance: Linode complies with industry standards, ensuring data safety and privacy.

Conclusion

Linode accounts are an excellent choice for developers and businesses looking for high-performance, cost-effective, and reliable cloud hosting solutions. With its robust infrastructure, transparent pricing, and user-friendly tools, Linode stands out as a top-tier provider in the competitive cloud hosting market.

When buying Linode accounts, prioritize safety and authenticity by purchasing from the official website or verified sources. This ensures you benefit from Linode’s exceptional features and customer support. Avoid unverified sellers to minimize risks and guarantee a smooth experience.

Whether you’re a developer seeking scalable hosting or a business looking to streamline operations, Linode accounts are undoubtedly one of the best choices. Start exploring Linode today and take your cloud hosting experience to the next level!


A Practical Guide to CKA/CKAD Preparation in 2025

The Certified Kubernetes Administrator (CKA) and Certified Kubernetes Application Developer (CKAD) certifications are highly sought-after credentials in the cloud-native ecosystem. These certifications validate your skills and knowledge in managing and developing applications on Kubernetes. This guide provides a practical roadmap for preparing for these exams in 2025.

1. Understand the Exam Objectives

CKA: Focuses on the skills required to administer a Kubernetes cluster. Key areas include cluster architecture, installation, configuration, networking, storage, security, and troubleshooting.

CKAD: Focuses on the skills required to design, build, and deploy cloud-native applications on Kubernetes. Key areas include application design, deployment, configuration, monitoring, and troubleshooting.

Refer to the official CNCF (Cloud Native Computing Foundation) websites for the latest exam curriculum and updates.

2. Build a Strong Foundation

Linux Fundamentals: A solid understanding of Linux command-line tools and concepts is essential for both exams.

Containerization Concepts: Learn about containerization technologies like Docker, including images, containers, and registries.

Kubernetes Fundamentals: Understand core Kubernetes concepts like pods, deployments, services, namespaces, and controllers.

3. Hands-on Practice is Key

Set up a Kubernetes Cluster: Use Minikube, Kind, or a cloud-based Kubernetes service to create a local or remote cluster for practice.

Practice with kubectl: Master the kubectl command-line tool, which is essential for interacting with Kubernetes clusters.

Solve Practice Exercises: Use online resources, practice exams, and mock tests to reinforce your learning and identify areas for improvement.

4. Utilize Effective Learning Resources

Official Kubernetes Documentation: The official Kubernetes documentation (Kubernetes is a CNCF project) is a comprehensive resource for learning about Kubernetes concepts and features.

Online Courses: Platforms like Udemy, Coursera, and edX offer CKA/CKAD preparation courses with video lectures, hands-on labs, and practice exams.

Books and Study Guides: Several books and study guides are available to help you prepare for the exams.

Community Resources: Engage with the Kubernetes community through forums, Slack channels, and meetups to learn from others and get your questions answered.

5. Exam-Specific Tips

CKA:

Focus on cluster administration tasks like installation, upgrades, and troubleshooting.

Practice managing cluster resources, security, and networking.

Be comfortable with etcd and control plane components.

CKAD:

Focus on application development and deployment tasks.

Practice writing YAML manifests for Kubernetes resources.

Understand application lifecycle management and troubleshooting.

6. Time Management and Exam Strategy

Allocate Sufficient Time: Dedicate enough time for preparation, considering your current knowledge and experience.

Create a Study Plan: Develop a structured study plan with clear goals and timelines.

Practice Time Management: During practice exams, simulate the exam environment and practice managing your time effectively.

Familiarize Yourself with the Exam Environment: The CKA/CKAD exams are online, proctored exams with a command-line interface. Familiarize yourself with the exam environment and tools beforehand.

7. Stay Updated

Kubernetes is constantly evolving. Stay updated with the latest releases, features, and best practices.

Follow the CNCF and Kubernetes community for announcements and updates.

For more information, visit www.hawkstack.com


Golang Developer


In the evolving world of software development, Go (or Golang) has emerged as a powerful programming language known for its simplicity, efficiency, and scalability. Developed by Google, Golang is designed to make developers’ lives easier by offering a clean syntax, robust standard libraries, and excellent concurrency support. Whether you're starting as a new developer or transitioning from another language, this guide will help you navigate the journey of becoming a proficient Golang developer.

Why Choose Golang?

Golang’s popularity has grown rapidly, and for good reasons:

Simplicity: Go's syntax is straightforward, making it accessible for beginners and efficient for experienced developers.

Concurrency Support: With goroutines and channels, Go simplifies writing concurrent programs, making it ideal for systems requiring parallel processing.

Performance: Go is compiled to machine code, which means it executes programs efficiently without requiring a virtual machine.

Scalability: The language’s design promotes building scalable and maintainable systems.

Community and Ecosystem: With a thriving developer community, extensive documentation, and numerous open-source libraries, Go offers robust support for its users.

Key Skills for a Golang Developer

To excel as a Golang developer, consider mastering the following:

1. Understanding Go Basics

Variables and constants

Functions and methods

Control structures (if, for, switch)

Arrays, slices, and maps

2. Deep Dive into Concurrency

Working with goroutines for lightweight threading

Understanding channels for communication

Managing synchronization with the sync package
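The Go sketch below is a small worker pool that shows goroutines, channels, and the sync package working together. squareAll and its worker count are illustrative choices, not a standard library API.

```go
package main

import (
	"fmt"
	"sync"
)

// squareAll fans work out to a fixed number of worker goroutines, collects
// results over a channel, and uses a sync.WaitGroup to know when to close it.
func squareAll(nums []int, workers int) int {
	jobs := make(chan int)
	results := make(chan int)
	var wg sync.WaitGroup

	// Start the workers; each one exits when the jobs channel is closed.
	for w := 0; w < workers; w++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for n := range jobs {
				results <- n * n
			}
		}()
	}

	// Feed the jobs, then close the channel so the workers' range loops end.
	go func() {
		for _, n := range nums {
			jobs <- n
		}
		close(jobs)
	}()

	// Close results once every worker has finished sending.
	go func() {
		wg.Wait()
		close(results)
	}()

	sum := 0
	for r := range results {
		sum += r
	}
	return sum
}

func main() {
	fmt.Println(squareAll([]int{1, 2, 3, 4}, 3)) // 1 + 4 + 9 + 16 = 30
}
```

Results arrive in whatever order the workers finish, so the sketch sums them. Collecting them into a slice in input order would require passing indexes along with the values.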

3. Mastering Go’s Standard Library

net/http for building web servers

database/sql for database interactions

os and io for system-level operations

4. Writing Clean and Idiomatic Code

Using Go’s formatting tools like gofmt

Following Go idioms and conventions

Writing efficient error handling code

5. Version Control and Collaboration

Proficiency with Git

Knowledge of tools like GitHub, GitLab, or Bitbucket

6. Testing and Debugging

Writing unit tests using Go’s testing package

Utilizing debuggers like dlv (Delve)

7. Familiarity with Cloud and DevOps

Deploying applications using Docker and Kubernetes

Working with cloud platforms like AWS, GCP, or Azure

Monitoring and logging tools like Prometheus and Grafana

8. Knowledge of Frameworks and Tools

Popular web frameworks like Gin or Echo

ORM tools like GORM

API development with gRPC or REST

Building a Portfolio as a Golang Developer

To showcase your skills and stand out in the job market, work on real-world projects. Here are some ideas:

Web Applications: Build scalable web applications using frameworks like Gin or Fiber.

Microservices: Develop a microservices architecture to demonstrate your understanding of distributed systems.

Command-Line Tools: Create tools or utilities to simplify repetitive tasks.

Open Source Contributions: Contribute to Golang open-source projects on platforms like GitHub.

Career Opportunities

Golang developers are in high demand across various industries, including fintech, cloud computing, and IoT. Popular roles include:

Backend Developer

Cloud Engineer

DevOps Engineer

Full Stack Developer

Conclusion

Becoming a proficient Golang developer requires dedication, continuous learning, and practical experience. By mastering the language’s features, leveraging its ecosystem, and building real-world projects, you can establish a successful career in this growing field. Start today and join the vibrant Go community to accelerate your journey.


Getting Started with Kubernetes: A Hands-on Guide


Kubernetes: A Brief Overview

Kubernetes, often abbreviated as K8s, is a powerful open-source platform designed to automate the deployment, scaling, and management of containerized applications. It simplifies the complexities of container orchestration, allowing developers to focus on building and deploying applications without worrying about the underlying infrastructure.

Key Kubernetes Concepts

Cluster: A group of machines (nodes) working together to run containerized applications.

Node: A physical or virtual machine that runs containerized applications.

Pod: The smallest deployable unit of computing, consisting of one or more containers.

Container: A standardized unit of software that packages code and its dependencies.

Setting Up a Kubernetes Environment

To start your Kubernetes journey, you can set up a local development environment using minikube. Minikube creates a single-node Kubernetes cluster on your local machine.

Install minikube: Follow the instructions for your operating system on the minikube website.

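Start the minikube cluster: minikube start

Configure kubectl: minikube start automatically sets kubectl's current context to the new cluster. To switch back to it later, run kubectl config use-context minikube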

Interacting with Kubernetes: Using kubectl

kubectl is the command-line tool used to interact with Kubernetes clusters. Here are some basic commands:

Get information about nodes: kubectl get nodes

Get information about pods: kubectl get pods

Create a deployment: kubectl create deployment my-deployment --image=nginx

Expose it as a service: kubectl expose deployment my-deployment --type=NodePort --port=80 (the --port flag is needed because this deployment does not declare a container port)
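The create and expose commands above build Kubernetes objects imperatively. To show what those objects look like, here is a minimal Python 3.9+ sketch (standard library only) that produces roughly equivalent Deployment and NodePort Service manifests as dictionaries. The function names and the container-naming rule are assumptions for this example, not kubectl internals. Because JSON is valid YAML, the printed output can be piped to kubectl apply -f -.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int = 1) -> dict:
    """Minimal apps/v1 Deployment, similar to what `kubectl create deployment` produces."""
    labels = {"app": name}  # pods are labelled app=<name>; the selector must match them
    # Approximate container name: image basename without tag ("your-username/my-nginx" -> "my-nginx").
    container_name = image.split("/")[-1].split(":")[0]
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": dict(labels)},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {"containers": [{"name": container_name, "image": image}]},
            },
        },
    }


def nodeport_service_manifest(name: str, port: int) -> dict:
    """NodePort Service sending `port` to pods labelled app=<name>."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "NodePort",
            "selector": {"app": name},
            # nodePort is omitted, so Kubernetes picks one (30000-32767 by default).
            "ports": [{"port": port, "targetPort": port, "protocol": "TCP"}],
        },
    }


if __name__ == "__main__":
    # A List object lets both manifests go through one `kubectl apply -f -`.
    objects = {
        "apiVersion": "v1",
        "kind": "List",
        "items": [
            deployment_manifest("my-nginx", "your-username/my-nginx"),
            nodeport_service_manifest("my-nginx", 80),
        ],
    }
    print(json.dumps(objects, indent=2))
```

For example, python manifests.py | kubectl apply -f - (the file name is hypothetical) creates the same two objects declaratively, which is easier to keep in version control than a series of imperative commands.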

Your First Kubernetes Application

Create a simple Dockerfile (it copies an index.html file from the same directory into the NGINX web root):

FROM nginx:alpine
COPY index.html /usr/share/nginx/html/

Build the Docker image, tagging it with your registry username: docker build -t your-username/my-nginx .

Push the image to a registry (e.g., Docker Hub, after running docker login): docker push your-username/my-nginx

Create a Kubernetes Deployment: kubectl create deployment my-nginx --image=your-username/my-nginx

Expose the deployment as a service: kubectl expose deployment my-nginx --type=NodePort --port=80

Access the application: Use the NodePort exposed by the service to open the application in your browser. On minikube, minikube service my-nginx --url prints the address.

Conclusion

Kubernetes offers a powerful and flexible platform for managing containerized applications. By understanding the core concepts and mastering the kubectl tool, you can efficiently deploy, scale, and manage your applications.


Google Cloud (GCP) Platform: GCP Essentials, Cloud Computing, GCP Associate Cloud Engineer, and Professional Cloud Architect

Introduction

Google Cloud Platform (GCP) is one of the leading cloud computing platforms, offering a range of services and tools for businesses and individuals to build, deploy, and manage applications on Google’s infrastructure. In this guide, we’ll dive into the essentials of GCP, explore cloud computing basics, and examine two major GCP certifications: the Associate Cloud Engineer and Professional Cloud Architect. Whether you’re a beginner or aiming to level up in your cloud journey, understanding these aspects of GCP is essential for success.

1. Understanding Google Cloud Platform (GCP) Essentials

Google Cloud Platform offers over 90 products covering compute, storage, networking, and machine learning. Here are the essentials:

Compute Engine: Virtual machines on demand

App Engine: Platform as a Service (PaaS) for app development

Kubernetes Engine: Managed Kubernetes for container orchestration

Cloud Functions: Serverless execution for event-driven functions

BigQuery: Data warehouse for analytics

Cloud Storage: Scalable object storage for any amount of data

With these foundational services, GCP allows businesses to scale, innovate, and adapt to changing needs without the limitations of traditional on-premises infrastructure.

2. Introduction to Cloud Computing

Cloud computing is the delivery of on-demand computing resources over the internet. These resources include:

Infrastructure as a Service (IaaS): Basic computing, storage, and networking resources

Platform as a Service (PaaS): Development tools and environment for building apps

Software as a Service (SaaS): Fully managed applications accessible via the internet

In a cloud environment, users pay only for the resources they use, which helps them optimize costs, scale easily, and improve availability.

3. GCP Services and Tools Overview

GCP provides a suite of tools for development, storage, machine learning, and data analysis:

AI and Machine Learning Tools: Vertex AI (which brings together AutoML and custom model training) and support for frameworks such as TensorFlow

Data Management: Datastore, Firestore, and Cloud SQL

Identity and Security: Identity and Access Management (IAM), Cloud Key Management Service (Cloud KMS)

Networking: VPC, Cloud CDN, and Cloud Load Balancing

4. Getting Started with GCP Essentials

Getting started with GCP takes only a few steps:

Create a GCP Account: New customers get a free trial with $300 in credits, in addition to the always-free usage limits of the Free Tier.

Explore the GCP Console: The console provides a web-based interface for managing resources.

Google Cloud Shell: A command-line interface that runs in the cloud, giving you quick access to GCP tools and resources.

5. GCP Associate Cloud Engineer Certification

The Associate Cloud Engineer certification is designed for beginners in the field of cloud engineering. This certification covers:

Managing GCP Services: Setting up projects and configuring compute resources

Storage and Databases: Working with storage solutions like Cloud Storage, Bigtable, and Cloud SQL

Networking: Configuring network settings and VPCs

IAM and Security: Configuring access management and security protocols

This certification is ideal for entry-level roles in cloud administration and engineering.

6. Key Topics for GCP Associate Cloud Engineer Certification

The main topics covered in the exam include:

Setting up a Cloud Environment: Creating and managing GCP projects and billing accounts

Planning and Configuring a Cloud Solution: Configuring VM instances and deploying storage solutions

Ensuring Successful Operation: Managing resources and monitoring solutions

Configuring Access and Security: Setting up IAM and implementing security best practices

7. GCP Professional Cloud Architect Certification

The Professional Cloud Architect certification is an advanced, professional-level certification. It validates the ability to:

Design and Architect GCP Solutions: Creating scalable and efficient solutions that meet business needs

Optimize for Security and Compliance: Ensuring GCP solutions meet security standards

Manage and Provision GCP Infrastructure: Deploying and managing resources to maintain high availability and performance

This certification is ideal for individuals in roles involving solution design, architecture, and complex cloud deployments.

8. Key Topics for GCP Professional Cloud Architect Certification

Key areas covered in the Professional Cloud Architect exam include:

Designing Solutions for High Availability: Ensuring solutions remain available even during failures

Analyzing and Optimizing Processes: Ensuring that processes align with business objectives

Managing and Provisioning Infrastructure: Creating automated deployments using tools like Terraform and Deployment Manager

Compliance and Security: Developing secure applications that comply with industry standards

9. Preparing for GCP Certifications

Preparation for GCP certifications involves hands-on practice and understanding key concepts:

Use the Free Trial: New GCP customers get $300 in credits for testing services.

Enroll in Training Courses: Platforms like Coursera and Google Cloud Skills Boost (formerly Qwiklabs) offer courses for each certification.

Practice Labs: Google Cloud Skills Boost provides guided labs to help reinforce learning with real-world scenarios.

Practice Exams: Test your knowledge with practice exams to familiarize yourself with the exam format.

10. Best Practices for Cloud Engineers and Architects

Follow GCP’s Best Practices: Use the Google Cloud Architecture Framework to design resilient solutions.

Automate Deployments: Use IaC tools like Terraform for consistent deployments.

Monitor and Optimize: Use Cloud Monitoring and Cloud Logging to track performance.

Cost Management: Utilize GCP’s Billing and Cost Management tools to control expenses.

Conclusion

Whether you aim to become a GCP Associate Cloud Engineer or a Professional Cloud Architect, GCP certifications provide a valuable pathway to expertise. GCP’s comprehensive services and tools make it a powerful choice for anyone looking to expand their cloud computing skills.


Kubernetes with HELM: A Complete Guide to Managing Complex Applications

Kubernetes is the backbone of modern cloud-native applications, orchestrating containerized workloads for improved scalability, resilience, and efficient deployment. HELM, on the other hand, is a Kubernetes package manager that simplifies the deployment and management of applications within Kubernetes clusters. When Kubernetes and HELM are used together, they bring seamless deployment, management, and versioning capabilities, making application orchestration simpler.

This guide will cover the basics of Kubernetes and HELM, their individual roles, the synergy they create when combined, and best practices for leveraging their power in real-world applications. Whether you are new to Kubernetes with HELM or looking to deepen your knowledge, this guide will provide everything you need to get started.

What is Kubernetes?

Kubernetes is an open-source platform for automating the deployment, scaling, and management of containerized applications. Developed by Google, it’s now maintained under the Cloud Native Computing Foundation (CNCF). Kubernetes clusters consist of nodes, which are servers that run containers, providing the infrastructure needed for large-scale applications. Kubernetes streamlines many complex tasks, including load balancing, scaling (including auto-scaling), and resource management, which can be challenging to handle manually.

Key Components of Kubernetes:

Pods: The smallest deployable units that host containers.

Nodes: Physical or virtual machines that host pods.

ReplicaSets: Ensure a specified number of pod replicas are running at all times.

Services: Abstractions that allow reliable network access to a set of pods.

Namespaces: Segregate resources within the cluster for better management.

Introduction to HELM: The Kubernetes Package Manager

HELM is known as the "package manager for Kubernetes." It allows you to define, install, and upgrade complex Kubernetes applications. HELM simplifies application deployment by using "charts," which are collections of files describing a set of Kubernetes resources.

With HELM charts, users can quickly install pre-configured applications on Kubernetes without writing every configuration by hand. HELM makes Kubernetes application definitions modular and reusable.

Key Components of HELM:

Charts: Packaged applications for Kubernetes, consisting of resource definitions.

Releases: A deployed instance of a HELM chart, tracked and managed for updates.

Repositories: Storage locations for charts, similar to package repositories in Linux.

Why Use Kubernetes with HELM?

The combination of Kubernetes with HELM brings several advantages, especially for developers and DevOps teams looking to streamline deployments:

Simplified Deployment: HELM streamlines Kubernetes deployments by managing configuration as code.

Version Control: HELM records every install and upgrade of a release as a numbered revision, making it easy to roll back to a previous version if necessary.

Reusable Configurations: HELM’s modularity ensures that configurations are reusable across different environments.

Automated Dependency Management: HELM manages dependencies between charts (for example, an application chart that requires a database chart), reducing manual configuration.

Scalability: Settings such as replica counts and resource requests can be exposed as chart values, so the same chart can be scaled up for large, highly available deployments.

Installing HELM and Setting Up Kubernetes

Before diving into using Kubernetes with HELM, it's essential to install and configure both. This guide assumes you have a Kubernetes cluster ready, but we will go over installing and configuring HELM.

1. Installing HELM:

Download HELM binaries from the official HELM GitHub page.

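Put the helm binary on your PATH. HELM 3 connects to the cluster through your kubeconfig file (the same context kubectl uses), so nothing needs to be installed in the cluster itself.

Verify HELM installation with: helm version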

2. Adding HELM Repository:

HELM repositories store charts. To use a specific repository, add it and then refresh your local copy of its chart index:


helm repo add [repo-name] [repo-URL]

helm repo update

3. Deploying a HELM Chart:

Once HELM and Kubernetes are ready, install a chart:


helm install [release-name] [chart-name]

Example:


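helm install myapp bitnami/nginx

This assumes the Bitnami repository has been added (helm repo add bitnami https://charts.bitnami.com/bitnami) and installs its NGINX chart as a release named myapp, demonstrating how easy it is to deploy applications using HELM. Older tutorials install from the stable repository, which was deprecated in 2020.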

Working with HELM Charts in Kubernetes

HELM charts are the core of HELM’s functionality, enabling reusable configurations. A HELM chart is a package that contains the application definition, configurations, dependencies, and resources required to deploy an application on Kubernetes.

Structure of a HELM Chart:

Chart.yaml: Contains metadata about the chart.

values.yaml: Configuration values used by the chart.

templates: The directory containing Kubernetes resource files (e.g., deployment, service).

charts: Directory for dependencies.
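helm create [chart-name] generates this layout automatically. To show what the minimal pieces contain, here is a Python 3.9+ sketch that writes a bare-bones chart. The chart name mychart, the greeting value, and the single ConfigMap template are hypothetical, and a chart produced by helm create contains more files (such as _helpers.tpl and .helmignore).

```python
from pathlib import Path

# Go-template syntax lives in a plain (non-f) string so Python leaves the braces alone.
CONFIGMAP_TEMPLATE = """apiVersion: v1
kind: ConfigMap
metadata:
  name: {{ .Release.Name }}-config
data:
  greeting: {{ .Values.greeting | quote }}
"""


def chart_files(name: str = "mychart") -> dict[str, str]:
    """Return relative path -> content for a minimal HELM 3 chart."""
    return {
        # Chart.yaml: chart metadata; apiVersion v2 marks a HELM 3 chart.
        "Chart.yaml": (
            "apiVersion: v2\n"
            f"name: {name}\n"
            "description: A minimal example chart\n"
            "type: application\n"
            "version: 0.1.0\n"
            'appVersion: "1.0.0"\n'
        ),
        # values.yaml: defaults that templates read through .Values
        "values.yaml": "greeting: hello\n",
        # templates/: Kubernetes manifests rendered by HELM's template engine
        "templates/configmap.yaml": CONFIGMAP_TEMPLATE,
    }


def write_chart(root: Path, name: str = "mychart") -> Path:
    """Write the chart under root/name and return its directory."""
    chart_dir = root / name
    (chart_dir / "charts").mkdir(parents=True, exist_ok=True)  # subchart dependencies go here
    for rel_path, content in chart_files(name).items():
        target = chart_dir / rel_path
        target.parent.mkdir(parents=True, exist_ok=True)
        target.write_text(content)
    return chart_dir


if __name__ == "__main__":
    print("Wrote", write_chart(Path.cwd()))
```

After running it, helm lint ./mychart checks the chart, and helm template demo ./mychart renders the ConfigMap locally without contacting the cluster.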

HELM Commands for Chart Management:

Install a Chart: helm install [release-name] [chart-name]

Upgrade a Chart: helm upgrade [release-name] [chart-name]

List Releases: helm list

Rollback a Chart: helm rollback [release-name] [revision]
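Upgrades and rollbacks are easier to reason about once you see how HELM numbers revisions: every install and upgrade records a new revision, and a rollback does not erase history but records another revision that reuses an earlier revision's chart and values (helm history [release-name] lists them). The toy Python 3.9+ model below illustrates only that numbering; the class names and chart strings are hypothetical, and it does not reflect how HELM actually stores release data.

```python
from dataclasses import dataclass, field


@dataclass
class Revision:
    number: int
    chart: str
    values: dict
    description: str


@dataclass
class Release:
    """Toy model of HELM release revision numbering (not HELM's real storage)."""

    name: str
    history: list[Revision] = field(default_factory=list)

    def _record(self, chart: str, values: dict, description: str) -> Revision:
        revision = Revision(len(self.history) + 1, chart, dict(values), description)
        self.history.append(revision)
        return revision

    def install(self, chart: str, values: dict) -> Revision:
        if self.history:
            raise ValueError(f"release {self.name!r} already exists; use upgrade")
        return self._record(chart, values, "Install complete")

    def upgrade(self, chart: str, values: dict) -> Revision:
        if not self.history:
            raise ValueError(f"release {self.name!r} has not been installed")
        return self._record(chart, values, "Upgrade complete")

    def rollback(self, revision: int) -> Revision:
        if not 1 <= revision <= len(self.history):
            raise ValueError(f"revision {revision} not found")
        target = self.history[revision - 1]
        # A rollback appends a NEW revision that reuses the target's chart and values.
        return self._record(target.chart, target.values, f"Rollback to {revision}")


if __name__ == "__main__":
    release = Release("myapp")
    release.install("nginx-1.0.0", {"replicaCount": 1})
    release.upgrade("nginx-1.1.0", {"replicaCount": 3})
    release.rollback(1)
    for rev in release.history:
        print(rev.number, rev.chart, rev.values, rev.description)
```

Running it prints revision 1 (install), revision 2 (upgrade), and revision 3, a rollback that restores the revision 1 chart and values.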

Best Practices for Using Kubernetes with HELM

To maximize the efficiency of Kubernetes with HELM, consider these best practices:

Use Values Files for Configuration: Instead of editing templates, use values.yaml files for configuration. This promotes clean, maintainable code.

Modularize Configurations: Break down configurations into modular charts to improve reusability.

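Manage Dependencies Properly: In HELM 3 charts (apiVersion: v2), declare dependencies in the dependencies section of Chart.yaml; requirements.yaml is the older HELM 2 format.

Plan for Rollbacks: HELM keeps a revision history for each release, which is essential for rolling back in production; the --history-max flag of helm upgrade controls how many revisions are kept.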

Automate Using CI/CD: Integrate HELM commands within CI/CD pipelines to automate deployments and updates.
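Keeping configuration in values files works because HELM layers values: the chart's values.yaml supplies defaults, each -f file overrides them in order, and --set flags are applied last. Below is a simplified Python 3.9+ sketch of that merge, assuming nested maps merge key by key while lists and scalars are replaced outright. HELM's real coalescing has extra rules (for example, a null override removes a default key) that are not modelled here, and the function names are hypothetical.

```python
from copy import deepcopy


def merge_values(base: dict, override: dict) -> dict:
    """Recursively merge `override` into a copy of `base`; override wins.

    Nested maps merge key by key; scalars and lists in `override` replace
    the base value entirely.
    """
    result = deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = merge_values(result[key], value)
        else:
            result[key] = deepcopy(value)
    return result


def effective_values(chart_defaults: dict, *layers: dict) -> dict:
    """Apply layers in order: e.g. each -f file, then --set values."""
    merged = deepcopy(chart_defaults)
    for layer in layers:
        merged = merge_values(merged, layer)
    return merged


if __name__ == "__main__":
    defaults = {"replicaCount": 1, "image": {"repository": "nginx", "tag": "1.25"}}
    prod_file = {"image": {"tag": "1.27"}}  # e.g. -f values-prod.yaml
    set_flags = {"replicaCount": 5}  # e.g. --set replicaCount=5
    print(effective_values(defaults, prod_file, set_flags))
    # {'replicaCount': 5, 'image': {'repository': 'nginx', 'tag': '1.27'}}
```

The image repository survives because only the tag was overridden inside the nested image map, which is why small environment-specific values files are enough.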

Deploying a Complete Application with Kubernetes and HELM

Consider a scenario where you want to deploy a multi-tier application with Kubernetes and HELM. This deployment can involve setting up multiple services, databases, and caches.

Steps for a Multi-Tier Deployment:

Create Separate HELM Charts for each service in your application (e.g., frontend, backend, database).

Define Dependencies in the dependencies section of a parent (umbrella) chart’s Chart.yaml so the services are installed together.

Use Namespace Segmentation to separate environments (e.g., development, testing, production).

Automate Scaling and Monitoring: Set up auto-scaling for each service using Kubernetes’ Horizontal Pod Autoscaler and integrate monitoring tools like Prometheus and Grafana.
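The Horizontal Pod Autoscaler mentioned in the last step scales on a simple ratio documented by Kubernetes: desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue), with no change when the ratio is within a tolerance (0.1 by default) of 1.0. A Python sketch of that core rule follows; the min/max defaults are hypothetical, and the real controller also applies stabilization windows and special handling for missing or not-yet-ready pod metrics.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Core Horizontal Pod Autoscaler rule (simplified; no stabilization windows)."""
    if current_replicas < 1 or target_metric <= 0:
        raise ValueError("need at least one replica and a positive target")
    ratio = current_metric / target_metric
    # Close enough to the target: leave the replica count unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the HPA's configured minReplicas/maxReplicas.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 pods averaging 100% CPU against a 50% target
    print(desired_replicas(4, current_metric=100, target_metric=50))  # 8
```

At twice the target utilization the replica count doubles; at 52% against a 50% target the ratio falls inside the tolerance, so the HPA leaves the deployment alone.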

Benefits of Kubernetes with HELM for DevOps and CI/CD

HELM and Kubernetes empower DevOps teams by enabling Continuous Integration and Continuous Deployment (CI/CD), improving the efficiency of application updates and version control. With HELM, CI/CD pipelines can automatically deploy updated Kubernetes applications without manual intervention.

Automated Deployments: HELM’s charts make deploying new applications faster and less error-prone.

Simplified Rollbacks: With HELM, rolling back to a previous version is straightforward, critical for continuous deployment.

Enhanced Version Control: HELM’s configuration files allow DevOps teams to keep track of configuration changes over time.

Troubleshooting Kubernetes with HELM

Here are some common issues and solutions when working with Kubernetes and HELM:

Failed HELM Deployment:

Check pod logs with kubectl logs [pod-name].

Use helm status [release-name] for detailed status.

Chart Version Conflicts:

Ensure charts are compatible with the cluster’s Kubernetes version.

Specify chart versions explicitly to avoid conflicts.

Resource Allocation Issues:

Ensure adequate resource allocation in values.yaml.

Use Kubernetes' resource requests and limits to manage resources effectively.

Dependency Conflicts:

Define exact dependency versions in the dependencies section of Chart.yaml (requirements.yaml in older HELM 2 charts).

Run helm dependency update to resolve issues.

Future of Kubernetes with HELM

The demand for scalable, containerized applications continues to grow, and so will the reliance on Kubernetes with HELM. New versions of HELM, improved Kubernetes integrations, and more powerful CI/CD support are likely to shape how applications are managed.

GitOps Integration: GitOps, a popular methodology for managing Kubernetes resources through Git, complements HELM’s functionality, enabling automated deployments.

Enhanced Security: The future holds more secure deployment options as Kubernetes and HELM adapt to meet evolving security standards.

Conclusion

Using Kubernetes with HELM enhances application deployment and management significantly, making it simpler to manage complex configurations and orchestrate applications. By following best practices, leveraging modular charts, and integrating with CI/CD, you can harness the full potential of this powerful duo. Embracing Kubernetes and HELM will set you on the path to efficient, scalable, and resilient application management in any cloud environment.

With this knowledge, you’re ready to start using Kubernetes with HELM to transform the way you manage applications, from development to production!


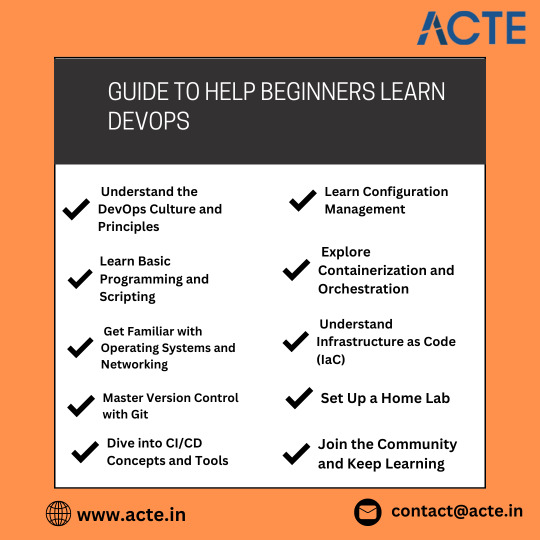

Embarking on a journey to learn DevOps can be both exciting and overwhelming for beginners. DevOps, which focuses on the integration and automation of processes between software development and IT operations, offers a dynamic and rewarding career. Here’s a comprehensive guide to help beginners navigate the path to becoming proficient in DevOps. For those who want to work in the field, a reputable DevOps training program in Pune can provide the skills and knowledge they need to succeed in this fast-paced environment.

Understanding the Basics

Before diving into DevOps tools and practices, it’s crucial to understand the fundamental concepts:

1. DevOps Culture: DevOps emphasizes collaboration between development and operations teams to improve efficiency and deploy software faster. It’s not just about tools but also about fostering a culture of continuous improvement, automation, and teamwork.

2. Core Principles: Familiarize yourself with the core principles of DevOps, such as Continuous Integration (CI), Continuous Delivery (CD), Infrastructure as Code (IaC), and Monitoring and Logging. These principles are the foundation of DevOps practices.

Learning the Essentials

To build a strong foundation in DevOps, beginners should focus on acquiring knowledge in the following areas:

1. Version Control Systems: Learn how to use Git, a version control system that tracks changes in source code during software development. Platforms like GitHub and GitLab are also essential for managing repositories and collaborating with other developers.

2. Command Line Interface (CLI): Becoming comfortable with the CLI is crucial, as many DevOps tasks are performed using command-line tools. Start with basic Linux commands and gradually move on to more advanced scripting.

3. Programming and Scripting Languages: Knowledge of programming and scripting languages like Python, Ruby, and Shell scripting is valuable. These languages are often used for automation tasks and writing infrastructure code.

4. Networking and Security: Understanding basic networking concepts and security best practices is essential for managing infrastructure and ensuring the security of deployed applications.

Hands-On Practice with Tools

Practical experience with DevOps tools is key to mastering DevOps practices. Here are some essential tools for beginners:

1. CI/CD Tools: Get hands-on experience with CI/CD tools like Jenkins, Travis CI, or CircleCI. These tools automate the building, testing, and deployment of applications.

2. Containerization: Learn about Docker, a platform that automates the deployment of applications in lightweight, portable containers. Understanding container orchestration tools like Kubernetes is also beneficial.

3. Configuration Management: Familiarize yourself with configuration management tools like Ansible, Chef, or Puppet. These tools automate the provisioning and management of infrastructure.

4. Cloud Platforms: Explore cloud platforms like AWS, Azure, or Google Cloud. These platforms offer various services and tools that are integral to DevOps practices. Enrolling in a DevOps online course can help you unlock DevOps’ full potential and develop a deeper understanding of its complexities.

Continuous Learning and Improvement

DevOps is a constantly evolving field, so continuous learning is essential:

1. Online Courses and Tutorials: Enroll in online courses and follow tutorials from platforms like Coursera, Udemy, and LinkedIn Learning. These resources offer structured learning paths and hands-on projects.

2. Community Involvement: Join DevOps communities, attend meetups, and participate in forums. Engaging with the community can provide valuable insights, networking opportunities, and support from experienced professionals.

3. Certification: Consider obtaining DevOps certifications, such as the AWS Certified DevOps Engineer or Google Cloud Professional Cloud DevOps Engineer. Certifications can validate your skills and enhance your career prospects.

Conclusion

Learning DevOps as a beginner involves understanding its core principles, gaining hands-on experience with essential tools, and continuously improving your skills. By focusing on the basics, practicing with real-world tools, and staying engaged with the DevOps community, you can build a solid foundation and advance your career in this dynamic field. The journey may be challenging, but with persistence and dedication, you can achieve proficiency in DevOps and unlock exciting career opportunities.


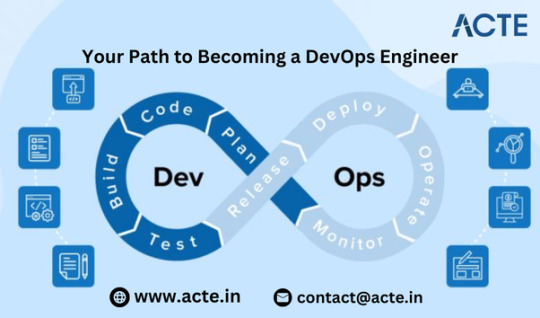

Your Path to Becoming a DevOps Engineer

Thinking about a career as a DevOps engineer? Great choice! DevOps engineers are pivotal in the tech world, automating processes and ensuring smooth collaboration between development and operations teams. Here’s a comprehensive guide to kick-starting your journey with the Best Devops Course.

Grasping the Concept of DevOps

Before you dive in, it’s essential to understand what DevOps entails. DevOps merges "Development" and "Operations" to boost collaboration and efficiency by automating infrastructure, workflows, and continuously monitoring application performance.

Step 1: Build a Strong Foundation

Start with the Essentials:

Programming and Scripting: Learn languages like Python, Ruby, or Java. Master scripting languages such as Bash and PowerShell for automation tasks.

Linux/Unix Basics: Many DevOps tools operate on Linux. Get comfortable with Linux command-line basics and system administration.

Grasp Key Concepts:

Version Control: Familiarize yourself with Git to track code changes and collaborate effectively.

Networking Basics: Understand networking principles, including TCP/IP, DNS, and HTTP/HTTPS.

If you want to learn more about DevOps, consider enrolling in a DevOps online course. These courses often offer certifications, mentorship, and job placement opportunities to support your learning journey.

Step 2: Get Proficient with DevOps Tools

Automation Tools:

Jenkins: Learn to set up and manage continuous integration/continuous deployment (CI/CD) pipelines.

Docker: Grasp containerization and how Docker packages applications with their dependencies.

Configuration Management:

Ansible, Puppet, and Chef: Use these tools to automate the setup and management of servers and environments.

Infrastructure as Code (IaC):

Terraform: Master Terraform for managing and provisioning infrastructure via code.

Monitoring and Logging:

Prometheus and Grafana: Get acquainted with monitoring tools to track system performance.

ELK Stack (Elasticsearch, Logstash, Kibana): Learn to set up and visualize log data.


Step 3: Master Cloud Platforms

Cloud Services:

AWS, Azure, and Google Cloud: Gain expertise in one or more major cloud providers. Learn about their services, such as compute, storage, databases, and networking.

Cloud Management:

Kubernetes: Understand how to manage containerized applications with Kubernetes.

Step 4: Apply Your Skills Practically

Hands-On Projects:

Personal Projects: Develop your own projects to practice setting up CI/CD pipelines, automating tasks, and deploying applications.

Open Source Contributions: Engage with open-source projects to gain real-world experience and collaborate with other developers.

Certifications:

Earn Certifications: Consider certifications like AWS Certified DevOps Engineer, Google Cloud Professional Cloud DevOps Engineer, or Azure DevOps Engineer Expert to validate your skills and enhance your resume.

Step 5: Develop Soft Skills and Commit to Continuous Learning

Collaboration:

Communication: As the bridge between development and operations teams, you need to communicate effectively.

Teamwork: Work efficiently within a team, understanding and accommodating diverse viewpoints and expertise.

Adaptability:

Stay Current: Technology evolves rapidly. Keep learning and stay updated with the latest trends and tools in the DevOps field.

Problem-Solving: Cultivate strong analytical skills to troubleshoot and resolve issues efficiently.

Conclusion

Begin Your Journey Today: Becoming a DevOps engineer requires a blend of technical skills, hands-on experience, and continuous learning. By building a strong foundation, mastering essential tools, gaining cloud expertise, and applying your skills through projects and certifications, you can pave your way to a successful DevOps career. Persistence and a passion for technology will be your best allies on this journey.


Aviator Expands Collaboration with Google Cloud Developers


Although Google has invested heavily in engineering productivity over the past 20 years, until a few years ago most of the industry did not prioritize this area. As companies look for ways to make their engineering teams more productive, this data-driven discipline has gained prominence, driven by the rise of remote work and the rapidly changing AI landscape.

The Aviator team knows the difficulties (and possibilities) of improving engineering efficiency firsthand, because they previously worked at Google. For this reason, they set out to build Aviator, an engineering productivity platform that improves performance at every stage of the development lifecycle and helps teams remove tedious but vital chores from their workdays.

Using Google Cloud to Build a Scalable Service


Building Aviator from the ground up on Google Cloud was an obvious choice, since their goal is to give every developer productivity engineering on par with Google’s. They also applied to and were accepted into the Google for Startups programme, which provides extensive credits for cloud products, business support, and technical training. This let the team explore a number of cloud options without worrying too much about cost.

Their guiding principles were the key metrics produced by Google Cloud’s DORA (DevOps Research and Assessment) team. On Google Cloud, they built a platform that provides:

Faster, more flexible code reviews: Automated code review rules, real-time reviewer feedback, and predefined response-time targets shorten review cycles. These tools help developers release code sooner, increase the velocity of their teams, and reduce the time it takes for code to reach production.

Stacked pull requests (PRs), small code changes that can be reviewed independently in a predefined order and then synchronized, remove development bottlenecks and prevent merge conflicts, which makes them a useful tool for speeding up review cycles.

Simplified, flexible merging: Take control of busy repositories with a high-throughput merge queue designed to handle thousands of pull requests while reducing stale pull requests, merge conflicts, inconsistent changes, and broken builds. Because each change is verified in isolation before it is merged back into the main line of development, deployments can happen more often and the change failure rate drops.

Smart, service-aware release notes: A single dashboard helps teams generate release notes automatically and manage deployments, rollbacks, and releases across all environments, replacing disorganized release notes and clumsy verification procedures. With this releases framework, development teams can ship more dependable products and systems, deploy more frequently, and recover faster from production failures.
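The merge queue described above can be illustrated with a generic sketch. This is not Aviator's implementation: it is a minimal, serial Python 3.9+ model, with a hypothetical PullRequest type and a stand-in CI function, in which each queued PR is tested on top of main plus every PR merged ahead of it, and PRs that fail are ejected instead of merged. Production merge queues typically add batching or parallel testing to reach high throughput.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class PullRequest:
    number: int
    change: str  # stand-in for the PR's diff


def process_queue(main: list[str], queue: list[PullRequest],
                  ci_passes: Callable[[list[str]], bool]) -> tuple[list[str], list[int]]:
    """Validate queued PRs one at a time against the latest state of main.

    Each PR is tested on top of main plus every PR merged ahead of it, so a
    change that only breaks in combination with an earlier one is caught
    before it lands. Returns the final main history and the ejected PR numbers.
    """
    main = list(main)  # don't mutate the caller's list
    ejected: list[int] = []
    for pr in queue:
        candidate = main + [pr.change]
        if ci_passes(candidate):
            main = candidate  # merge: main now includes this PR
        else:
            ejected.append(pr.number)  # eject; later PRs are tested without it
    return main, ejected


if __name__ == "__main__":
    # Hypothetical CI rule: the build breaks if code still uses an API that was renamed.
    def ci(changes: list[str]) -> bool:
        return not ("rename_api" in changes and "use_old_api" in changes)

    queue = [PullRequest(1, "rename_api"), PullRequest(2, "use_old_api"), PullRequest(3, "update_docs")]
    print(process_queue(["base"], queue, ci))
```

In this example PR 2 would pass on its own, but it breaks once PR 1 has renamed the API it calls, so the queue ejects it while PR 3 still merges.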

Their scalable service is built on multiple Google Cloud products. For instance, Aviator’s architecture depends heavily on background jobs to carry out automated actions. To scale Aviator to thousands of active users and millions of code changes, the team adopted Google Kubernetes Engine (GKE), which let them scale Aviator’s Kubernetes pods horizontally as usage grew.

Google Cloud also let them handle deployments without storing credentials on the CD platform, and they used Google Cloud’s IAM model to gain more flexibility in permission management.

Aviator

With these additional capabilities, Aviator uses Google Cloud to further simplify management and collaboration for engineers:

Monitoring the health of the system

Prometheus is an open-source monitoring tool that collects time series data from configured targets, such as applications and infrastructure, using a pull model. Managed Service for Prometheus let the team set up complete monitoring and alerting for Aviator without worrying about scalability or reliability. Alongside the Prometheus data, Cloud Monitoring provides access to more than 6,500 free metrics, giving them a single, comprehensive view of the service’s performance, availability, and overall health.

Log management

Aviator communicates with external services such as GitHub, PagerDuty, and Slack mainly through API calls, and these calls frequently fail when those services are unreliable or the network has problems. To troubleshoot and fix reported issues quickly, the team relies on Google Cloud’s log management features, which make it simple to write structured queries, filter logs by service, and set up alerts based on predefined criteria.
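Structured queries and per-service filters work best when each log line is itself structured. On GKE, Cloud Logging parses single-line JSON written to stdout into structured entries and recognizes fields such as severity and message. Here is a minimal Python sketch using the standard logging module; the field names service, status_code, and endpoint are hypothetical, and this is not Aviator's code.

```python
import json
import logging
import sys

# Hypothetical structured fields that callers may attach via `extra=`.
EXTRA_FIELDS = ("service", "status_code", "endpoint")


class JsonFormatter(logging.Formatter):
    """Render each record as one JSON line with a Cloud Logging-style severity field."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            # Python level names (DEBUG, INFO, WARNING, ERROR, CRITICAL) are also Cloud Logging severities.
            "severity": record.levelname,
            "message": record.getMessage(),
            "logger": record.name,
        }
        for key in EXTRA_FIELDS:
            if hasattr(record, key):
                entry[key] = getattr(record, key)
        return json.dumps(entry)


def make_logger(name: str = "api-client") -> logging.Logger:
    logger = logging.getLogger(name)
    handler = logging.StreamHandler(sys.stdout)  # GKE collects container stdout
    handler.setFormatter(JsonFormatter())
    logger.handlers = [handler]
    logger.propagate = False  # avoid duplicate lines via the root logger
    logger.setLevel(logging.INFO)
    return logger


if __name__ == "__main__":
    log = make_logger()
    log.error("External API call failed",
              extra={"service": "github", "status_code": 502, "endpoint": "/repos/example/app/pulls"})
```

A Logs Explorer query such as jsonPayload.service="github" AND severity>=ERROR then isolates failed GitHub calls, and the same filter can back a log-based alert.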

Slow query detection

They chose Cloud SQL, Google Cloud’s fully managed database service, running PostgreSQL, as their primary database because it offers high availability and performance out of the box. More recently, they have been experimenting with query tagging using Sqlcommenter to identify slow queries in Aviator.

This open-source tool samples and tags every query, so the team can quickly trace each slow query back to its cause. They use Sqlcommenter’s Python module, which integrates well with their application backend.
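Sqlcommenter works by appending a comment of key='value' pairs to each SQL statement, so a slow query seen in the database can be traced back to the code that issued it. The following simplified Python 3.9+ sketch of that comment format (pairs sorted by key, URL-encoded values in single quotes) is written for illustration only; it is not the Sqlcommenter library's API, which integrates with ORMs and drivers and performs additional escaping. The function name and tag names are hypothetical.

```python
from urllib.parse import quote


def add_sql_comment(sql: str, tags: dict[str, str]) -> str:
    """Append sqlcommenter-style tags, e.g. /*key='value'*/, to a SQL statement (simplified)."""
    if not tags or "/*" in sql:
        return sql  # leave statements that already carry a comment unchanged
    pairs = []
    for key, value in sorted(tags.items()):  # pairs are emitted in key order
        # Keys and values are URL-encoded; values are wrapped in single quotes.
        pairs.append("{}='{}'".format(quote(key, safe=""), quote(str(value), safe="")))
    return "{} /*{}*/".format(sql.rstrip(), ",".join(pairs))


if __name__ == "__main__":
    print(add_sql_comment(
        "SELECT id FROM pull_requests WHERE merged = false",
        {"route": "/api/queue", "controller": "queue"},  # hypothetical tags
    ))
    # SELECT id FROM pull_requests WHERE merged = false /*controller='queue',route='%2Fapi%2Fqueue'*/
```

URL-encoding also turns any single quote in a value into %27, so a tag value cannot terminate its own quoting or the surrounding comment.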

Management of rate limits

Because the team relies on so many third-party services, managing rate limits was essential to give users an uninterrupted experience while staying within the limits those services allow. Many of Aviator's own APIs also need rate limiting. To make it easier to monitor and enforce rate limits on both inbound and outbound API calls, the team used Memorystore for Redis.
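One common way to enforce such limits with a shared Redis instance is a fixed-window counter. Each request runs `INCR` on a per-window key, and `EXPIRE` is set on that key, so every replica of the service sees the same count. The following is a minimal, single-process sketch of that logic in which an in-memory dictionary stands in for Redis. The key `"github"` and the limits are hypothetical, and the clock is injectable so the behaviour can be tested deterministically.

```python
"""Fixed-window rate limiter; an in-memory dict stands in for Redis here."""

import time
from typing import Callable, Dict, Optional


class FixedWindowRateLimiter:
    """Allow at most `limit` calls per key in each `window_seconds` window."""

    def __init__(self, limit: int, window_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.limit = limit
        self.window_seconds = window_seconds
        self._clock = clock
        self._counts: Dict[str, int] = {}
        self._current_window: Optional[int] = None

    def allow(self, key: str) -> bool:
        window = int(self._clock() // self.window_seconds)
        if window != self._current_window:
            # New window: every counter starts again from zero
            # (with Redis, the old per-window keys would simply expire).
            self._counts.clear()
            self._current_window = window
        count = self._counts.get(key, 0)
        if count >= self.limit:
            return False
        self._counts[key] = count + 1
        return True


if __name__ == "__main__":
    now = [0.0]  # fake clock for a deterministic demo
    limiter = FixedWindowRateLimiter(limit=2, window_seconds=60, clock=lambda: now[0])
    print([limiter.allow("github") for _ in range(3)])  # [True, True, False]
    now[0] = 60.0  # move into the next window
    print(limiter.allow("github"))  # True
```

A fixed window is the simplest choice. It can allow short bursts of up to twice the limit around a window boundary, which sliding-window or token-bucket variants smooth out.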

Cloud-based, self-hosted, and single-tenant

Because Aviator serves engineering teams of every size, from 20 engineers to more than 2,000, installations can differ substantially. The team felt that Aviator had to accommodate a wide range of needs and requirements.

Currently, a developer configuring Aviator can choose a cloud, self-hosted, or single-tenant installation. Let's examine each in more detail:

Cloud installation

This version is the easiest for users to set up and is fully managed by Aviator on a Kubernetes cluster in Google Cloud. The team also deploys updates to it daily.

Self-hosted

Some users prefer a self-hosted version of Aviator that they can install in their own private cloud. For this configuration, the team publishes new versions of Aviator as Docker images to Google Cloud's Artifact Registry and publishes Helm charts to a private repository.

For each self-hosted customer, the team creates a new IAM service account with an authentication key and read-only access to the private repository that hosts the Docker images, and then shares this account with the customer. This gives users a straightforward and secure way to install a self-hosted version of Aviator.

Single tenant

The single-tenant installation is nearly identical to the self-hosted version, except that Aviator manages the installation in its own Google Cloud account. This gives users more flexibility over their Aviator configuration along with improved security.
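A customer might use the shared service-account key to create a Kubernetes image pull secret so that their cluster can pull the private images. Artifact Registry accepts the username `_json_key` with the full key file as the password. The following sketch builds the `.dockerconfigjson` payload and a `kubernetes.io/dockerconfigjson` Secret manifest from such a key. This is an assumption about how a customer could consume the account, not Aviator's documented procedure. The registry host `us-docker.pkg.dev`, the secret name `aviator-registry`, and the key contents are placeholders.

```python
"""Build a pull secret for Artifact Registry from a service-account JSON key (sketch)."""

import base64
import json


def docker_config_json(registry: str, key_json: str) -> str:
    """Return the JSON document stored under `.dockerconfigjson` in a pull secret."""
    username = "_json_key"  # username used for service-account JSON key auth
    auth = base64.b64encode(f"{username}:{key_json}".encode()).decode()
    config = {"auths": {registry: {"username": username, "password": key_json, "auth": auth}}}
    return json.dumps(config)


def pull_secret_manifest(name: str, registry: str, key_json: str) -> dict:
    """Return a Secret manifest (as a dict) usable as an imagePullSecret."""
    payload = docker_config_json(registry, key_json)
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "kubernetes.io/dockerconfigjson",
        # Values under a Secret's `data` must be base64-encoded.
        "data": {".dockerconfigjson": base64.b64encode(payload.encode()).decode()},
    }


if __name__ == "__main__":
    # Placeholder key; a real one comes from the shared service-account key file.
    fake_key = json.dumps({"type": "service_account",
                           "client_email": "example@example.iam.gserviceaccount.com"})
    manifest = pull_secret_manifest("aviator-registry", "us-docker.pkg.dev", fake_key)
    print(json.dumps(manifest, indent=2))
```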

AI Research

Recent advances in LLMs have opened up even more promising ways to boost engineering productivity. At Aviator, the team has already begun exploring a number of AI-powered solutions that can help at different phases of the development lifecycle, such as:

Test generation: Using AI to generate test cases automatically can save developers a great deal of time and surface potential defects early in the development process.

Code auto-completion: AI-powered solutions like GitHub Copilot propose code snippets in real time, helping engineers write code faster and more accurately.

Predictive test selection: By predicting which code changes are likely to fail which tests, AI can reduce the number of tests run in each cycle and speed up development.

Google has more than ten years of experience in AI innovation, which lets Google Cloud offer advanced AI products such as Vertex AI and Gemini. Building on Google Cloud's AI foundation streamlines development for Aviator and lets the team launch next-generation AI features quickly.
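Predictive test selection is usually driven by a trained model, but a much simpler stand-in can show the core idea. The following sketch scores each test by how often it failed historically when a given file changed, and it selects only the tests whose score meets a threshold. A frequency heuristic replaces the machine-learning model a production system would use. The history format, file names, and threshold are hypothetical.

```python
"""Toy predictive test selection using historical failure frequencies (not an ML model)."""

from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple

# Each history entry: (files changed in a commit, tests that failed on it).
History = Iterable[Tuple[Set[str], Set[str]]]


def failure_rates(history: History) -> Dict[str, Dict[str, float]]:
    """Return rates[file][test] = fraction of commits touching `file` where `test` failed."""
    touched: Dict[str, int] = defaultdict(int)
    failed: Dict[str, Dict[str, int]] = defaultdict(lambda: defaultdict(int))
    for files, failing_tests in history:
        for f in files:
            touched[f] += 1
            for t in failing_tests:
                failed[f][t] += 1
    return {f: {t: n / touched[f] for t, n in tests.items()} for f, tests in failed.items()}


def select_tests(changed: Set[str], rates: Dict[str, Dict[str, float]],
                 threshold: float = 0.2) -> List[str]:
    """Pick tests whose highest failure rate for any changed file meets the threshold."""
    scores: Dict[str, float] = defaultdict(float)
    for f in changed:
        for t, rate in rates.get(f, {}).items():
            scores[t] = max(scores[t], rate)
    return sorted(t for t, s in scores.items() if s >= threshold)


if __name__ == "__main__":
    history = [
        ({"queue.py"}, {"test_queue"}),
        ({"queue.py", "api.py"}, set()),
        ({"api.py"}, {"test_api"}),
        ({"queue.py"}, {"test_queue", "test_api"}),
    ]
    rates = failure_rates(history)
    print(select_tests({"queue.py"}, rates, threshold=0.5))
```

Tests that have never failed are never selected here, so a real system would still run the full suite periodically as a safety net.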

In summary

More than just a performance indicator, engineering productivity is a key factor in business success. By improving developer collaboration and efficiency, businesses can shorten time-to-market and respond faster to shifting customer expectations. On this journey, Google Cloud has proven to be an excellent partner.

Its combination of reliability, speed, and performance, along with its state-of-the-art AI capabilities, makes it especially well suited to quick iteration while abstracting away complexity. At Aviator, the team is eager to keep using these technologies to push engineering productivity to new heights.

Read more on Govindhtech.com



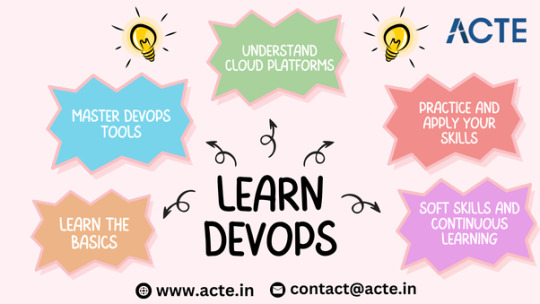

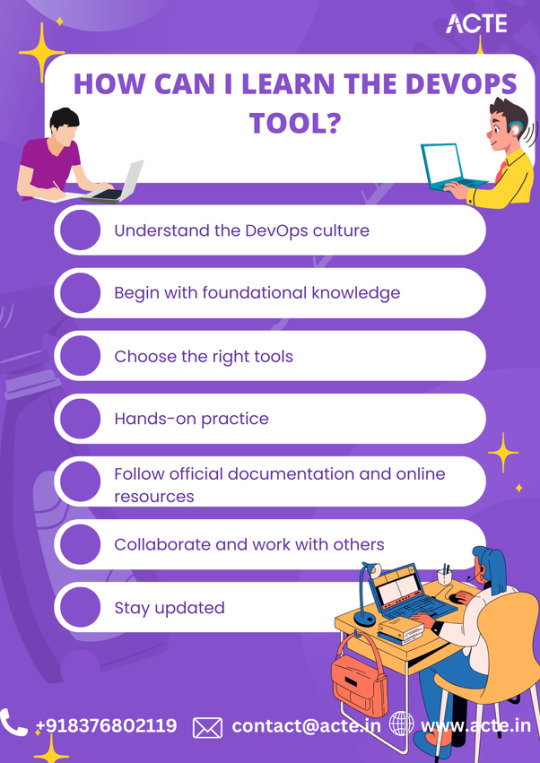

Unlocking the Secrets of Learning DevOps Tools

In the ever-evolving landscape of IT and software development, DevOps has emerged as a crucial methodology for improving collaboration, efficiency, and productivity. Learning DevOps tools is a key step towards mastering this approach, but it can sometimes feel like unraveling a complex puzzle. In this blog, we will explore the secrets to mastering DevOps tools and navigating the path to becoming a proficient DevOps practitioner.

Learning DevOps tools can seem overwhelming at first, but with the right approach, it can be an exciting and rewarding journey. Here are some key steps to help you learn DevOps tools more easily:

1. Understand the DevOps culture: DevOps is not just about tools, but also about adopting a collaborative and iterative mindset. Start by understanding the principles and goals of DevOps, such as continuous integration, continuous delivery, and automation. Embrace the idea of breaking down silos and promoting cross-functional teams.

2. Begin with foundational knowledge: Before diving into specific tools, it's important to have a solid understanding of the underlying technologies. Get familiar with concepts like version control systems (e.g., Git), the Linux command line, network protocols, and basic programming or scripting in a language such as Python or shell. This groundwork will help you better grasp the DevOps tools and their applications.

3. Choose the right tools: DevOps encompasses a wide range of tools, each serving a specific purpose. Start by identifying the tools most relevant to your requirements. Some popular ones include Jenkins, Ansible, Docker, Kubernetes, and AWS CloudFormation. Don't get overwhelmed by the number of tools; focus on learning a few key ones initially and gradually expand your skill set.

4. Hands-on practice: Theory alone won't make you proficient in DevOps tools. Set up a lab environment, either locally or through cloud services, where you can experiment and work with the tools. Build sample projects, automate deployments, and explore different functionalities. The more hands-on experience you gain, the more comfortable you'll become with the tools.

5. Follow official documentation and online resources: DevOps tools often have well-documented official resources, including tutorials, guides, and examples. Make it a habit to consult these resources as they provide detailed information on installation procedures, configuration setup, and best practices. Additionally, join online communities and forums where you can ask questions, share ideas, and learn from experienced practitioners.

6. Collaborate and work with others: DevOps thrives on collaboration and teamwork. Engage with fellow DevOps enthusiasts, attend conferences, join local meetups, and participate in online discussions. By interacting with others, you'll gain valuable insights, learn new techniques, and expand your network. Collaborative projects or open-source contributions will also provide a platform to practice your skills and learn from others.

7. Stay updated: The DevOps landscape evolves rapidly, with new tools and practices emerging frequently. Keep yourself updated with the latest trends, technological advancements, and industry best practices. Follow influential blogs, read relevant articles, subscribe to newsletters, and listen to podcasts. Being aware of the latest developments will enhance your understanding of DevOps and help you adapt to changing requirements.

Mastering DevOps tools is a continuous journey that requires dedication, hands-on experience, and a commitment to continuous learning. By understanding the DevOps landscape, identifying core tools, and embracing a collaborative mindset, you can unlock the secrets to becoming a proficient DevOps practitioner. Remember, the key is not just to learn the tools but to leverage them effectively in creating streamlined, automated, and secure development workflows.
