#Ai tutorial


Text

Using Vidu to Make Character Turnarounds

Disclosure: I am in the Vidu Artist Program.

Having (at the very least) front and back reference greatly improves the quality of character image prompting. And very often, one finds that they were lazy and only got a couple of bits of character reference. Or they have tons of it in the wrong art style.

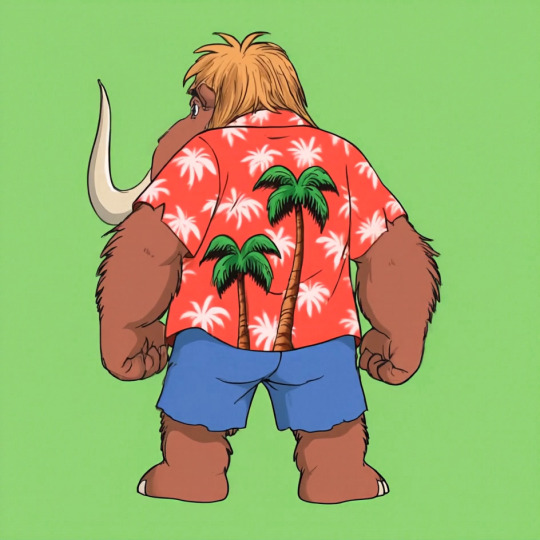

A character like Wally Manmoth requires some good reference to work right.

Now, it's not that hard to prompt up something that matches close enough and then modify the stuff manually until it works, such as I did with TriceraBruce and DeinoSteve:

You can tell Steve's the bad boy because he's got a cool rip in the back of his jacket.

But for Wally, I decided to try out Vidu as a means of getting turnaround frames.

So I loaded Wally's front-view pic (above) into the image-to-video feature, and prompted with:

vintage traditional animation scene (1985) humanoid mammoth/furry elephant wearing a red hawaiian shirt and blue shorts, by filmation and sunbow productions, 90s colors, friendly on green background, streamlined black line art with cel shaded vintage cartoon color, official media, character design fullbody shot on green background. The mammoth-anthro starts facing the camera, turning around to face away from the viewer, providing a view of his back.

I gave it two shots at the 720x quality setting (12 points per, total of 24), and got:

Huh. Weird it happened twice, etc.

This demonstrates both that the tech is viable for this use, and the reason you'd want to have that multi-view reference. The robot clearly assumes that a luau shirt would have a large print on the back, whereas Wally's is a more basic print. That's ultra easy to fix, though.

I started by exporting the last frame of each (or close to it, picking the one that looks cleanest).
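If you'd rather script the frame grab than scrub through by hand, here's a minimal sketch, assuming ffmpeg is installed and on your PATH. The helper names and file names are made up for illustration and have nothing to do with Vidu itself. The idea is to seek to just before the end of the clip with `-sseof` and keep overwriting a single image with `-update 1`, so whatever is left on disk is the last frame decoded.

```python
import subprocess


def last_frame_command(video_path, image_path, tail_seconds=1.0):
    """Build an ffmpeg command that saves the final frame of a clip.

    Hypothetical helper, not part of Vidu or Midjourney. It seeks to
    `tail_seconds` before the end and overwrites one image per decoded
    frame, so the last frame is the one that remains on disk.
    """
    if tail_seconds <= 0:
        raise ValueError("tail_seconds must be positive")
    return [
        "ffmpeg",
        "-y",                          # overwrite the output if it exists
        "-sseof", f"-{tail_seconds}",  # input option: start this far before the end
        "-i", str(video_path),
        "-update", "1",                # keep rewriting a single image file
        str(image_path),
    ]


def export_last_frame(video_path, image_path, tail_seconds=1.0):
    """Run the command above; requires ffmpeg to be installed."""
    subprocess.run(
        last_frame_command(video_path, image_path, tail_seconds),
        check=True,
    )
```

The cleanest frame isn't always the very last one, so treat this as a starting point and still eyeball the result.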

While its image editing features are often touch-and-go, one thing the Midjourney edit feature has going for it is its utility as an upscaler. You load the image in, make your tweaks (just a little bit of background if you're just upscaling), and then upscale, and at the very least you have 2048x2048 worth of resolution.

As a test, I used the Midjourney edit process to get those two images to the following state.

The results are good, but getting the large trees to erase-and-replace out took several attempts, and just doing it in Photoshop and then using the editor to upscale would have been faster.

This is why we do tests.

I went with the slightly-at-an-angle one for the main reference sheet. I'll be keeping the straight-on-back-shot in case it winds up being useful for specific scenes down the line.

In Photoshop, I touched up the shirt print, made sure the colors were consistent, and simplified the hair coloration to something more period-plausible.

No more giant trees on the back! On the other hand, I think the feet sprouting toes on the heel is going to be something I'll be fixing frame-by-frame until there's another revision.

Human characters will induce these issues less often. I just stick with my genre of choice.

Midjourney was not cooperating with TyrannoMax (it really doesn't like giving him the proportions I like, preferring to make him a weird big-head salamander), so I went the same direction, resulting in this stage 1 front/back:

Only Midjourney refused to work with it at all, declaring everything that came out of it too lewd for its internal censor. Apparently, this hunky relative of Cheesasaurus Rex is too sexy for general consumption. Never mind that it's a cartoon lizard in a shade of tangelo orange.

The workaround is too dumb for words.

Slam the hue slider until it's off anything that could be perceived as a human skintone.

Then make the modifications. Here I had to rework the leg several times and do a lot of tweaking to remove over-inking. Then I popped it back out, dropped it back into lineart, re-colored it, and composited it back together:

And voila, a front and back for Max. I shortened his tail, as the longer tails have been causing problems with confusing the image prompting systems. The armor skirt has scallops to accommodate the tail, which looked better more consistently than the flaps folding around the tail.

The results are, thus far, encouraging.

Of course, if the back of your character has any unexpected details, you're going to have to add those in after the fact or include them in the prompting, and you're going to be making a lot of edits regardless (as you should).

Oh, and Max has a sword now.

A blade of amber crystal with a fossilized femur grip and a faceted dino-eye that should be far enough away from the Eye of Thundera for safety. A roleplay-toy friendly trademark weapon, usually a sword, was a must-have for 80s action-adventure lines despite the fact that you'd never see it used on anything that wasn't a robot, living statue, or skeleton.

Thus the sword's gimmick is it cleaves through non-living matter with ease but anything BS&P doesn't want subjected to a stabbin's is encased in amber crystal: locked in place if partially encased, put into suspended animation if fully encased. A nice, nonlethal use for a magic sword.

It's proportioned like a gladius, but is generally interpreted as larger, approaching a broadsword, in keeping with the generally ridiculous blade sizes of kidvid fantasy. They're just more fun when they're stupidly huge.

Is "Sword of Eons" too on the nose?

#tyrannomax#tyrannomax and the warriors of the core#vidu ai#midjourney v6#niji journey#animation#cartoons#retro#fauxstalgia#unreality#ai tutorial#vidu tutorial#vidu speed

72 notes


Text

So I think a lot of Bing driven AI blogs have fallen off since the NSFW filter went super strict for about 48 hours about nine days ago. Even though it relaxed again, the landscape it left behind was very different. Old tricks didn’t work anymore. But new tricks can be discovered and exploited, and the last few days I’ve been getting my sexiest and most extreme results ever. All the stuff I’ve posted in the last six days has been newly made, not backlog (my backlog is enormous… will I ever clear it? Probably not)

In the interest of community and education, here is an example.

These four images were the result of one submission of one prompt - I didn’t have to wrestle the machine for them at all. The prompt is:

underexposed Polaroid, side view from far away, two Icelandic bodybuilding bros facing each other submerged near a hot spring, enormously muscular, golden light, loving embrace, buzzed blond hair, relaxed, unbelievably enormous muscles, muscle morph, leg muscles like enormous heavy water balloons, enormous muscular arms, high body fat, leaning against each other

Now be warned, this is a bit of a jenga tower. Moving things around too much may break it. I’d recommend writing your own from scratch but stealing specific key phrases, modifying and evolving those, see what works best for you.

Thanks to @thespacewerewolf for the “near a hot spring” trick to get them into a hot spring, and to @zangtangimpersonator for the water balloon / weather balloon comparison trick, which is a Swiss Army knife of a prompt for anyone who likes big round shapes.

This is why I unpinned my old tutorial. The spirit is the same - think of twisty ways to ask for what you want, certain scenarios seem way more permissive than others, throwing in random details seems to help, etc etc etc. But the specifics have changed, and the sample prompts I built in a couple old tutorial posts won’t really work now as they did then. Keep evolving your prompts, experimenting, and sharing what works for you.

#ai muscle#male muscle growth#ai muscle growth#muscle#musclegrowth#muscle romance#ai muscle tutorial#ai tutorial

378 notes


Note

Q what prompts did you use to get you that uncanny nostalgia cartoon aesthetic with midjourney?

Changed up a lot over time.

The thing that worked best most recently was building a moodboard of screencaps from a potluck of shows, but for straight prompting I tended to have a format along the lines of:

(description of scene), vintage cartoon screencap, 1985 (series real or imaginary), by (pick two or more of Filmation, Toei, AKOM, TMS, Sunbow Productions, etc.), vhscore. vintage cel animation still.

Usually at --ar 4:3, under niji if you want it to look high budget, under the standard model if you want it to be a low budget ep. But moodboards and style prompting are your friends.
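For anyone who likes to keep their prompts in a script, the format above can be sketched as a small template filler. This is just an illustration: the function name is made up, the example scene is invented, and the only Midjourney parameter it appends is the `--ar` already mentioned here. Switching between niji and the standard model is left to however you normally do that.

```python
def retro_cartoon_prompt(scene, series, studios, year=1985, aspect="4:3"):
    """Fill in the vintage-cartoon prompt format described in this post.

    Hypothetical helper: it only assembles text and does not talk to
    Midjourney in any way.
    """
    if len(studios) < 2:
        raise ValueError("the format calls for two or more studios")
    studio_list = ", ".join(studios)
    return (
        f"{scene}, vintage cartoon screencap, {year} {series}, "
        f"by {studio_list}, vhscore. vintage cel animation still. "
        f"--ar {aspect}"
    )


# Example values are placeholders; swap in your own scene and series.
prompt = retro_cartoon_prompt(
    scene="a dinosaur knight raising an amber sword on a cliff",
    series="TyrannoMax and the Warriors of the Core",
    studios=["Filmation", "Sunbow Productions"],
)
print(prompt)
```

Keeping the studio list as a parameter makes it easy to rotate through different pairings and see which mix lands closest to the look you want.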

Sure would be nice to not be unjustly banned from there, mumblegrumble.

7 notes


Text

youtube

A quick video tutorial on AI video masking, explaining the workflow step by step. Enjoy.

12 notes


Text

Create Entire Film with Minimax AI (Step by Step Tutorial) | Best AI Video Generator | Hailuo AI

youtube

Do you want to create an entire film with AI? In this video, I use the Minimax Hailuo AI image-to-video feature to generate an entire movie. Watch to learn more about AI filmmaking and Minimax Hailuo AI.

2 notes


Text

youtube

3 notes


Text

youtube

#ai product photography#chatgpt product design#ai product photography - chatgpt product design#product photography ai#product photography#ai product design generator#ai product design#ai product design free#ai product design tools#ai image generator#ai product design software#chatgpt product image#best ai for product design#ai tutorial#ai product photos#ai tools#artificial intelligence#ai product photography midjourney#aman agrawal#Youtube

0 notes

Text

This comprehensive tutorial guides learners from the basics to advanced concepts of Artificial Intelligence (AI). It covers foundational topics such as machine learning, natural language processing, and neural networks, providing clear explanations and practical examples. Designed for both beginners and experienced individuals, this resource equips you with the knowledge to understand and apply AI technologies effectively.

0 notes

Text

Time to offer an actual breakdown of what's going on here for those who are anti or disinterested in AI and aren't familiar with how it works, because the replies are filled with discussions on how this is somehow a down-step in the engine or a sign of model collapse.

And the situation is, as always, not so simple.

Different versions of the same generator always prompt differently. This has nothing to do with their specific capabilities, and everything to do with the way the technology works. New dataset means new weights, and as the text parser AI improves (and with it prompt understanding) you will get wildly different results from the same prompt.

The screencap was in .webp (ew) so I had to download it for my old man eyes to zoom in close enough to see the prompt, which is:

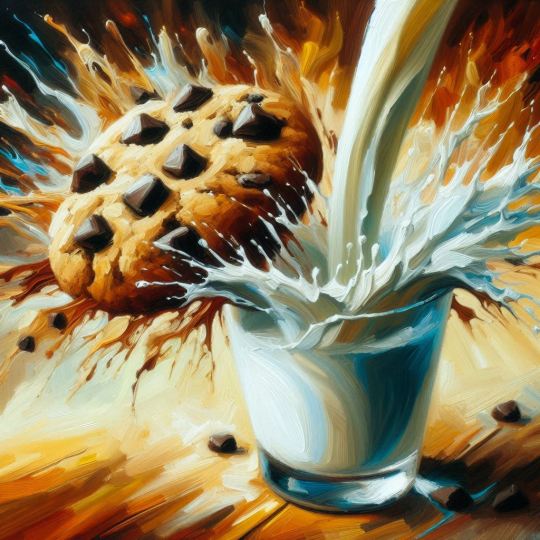

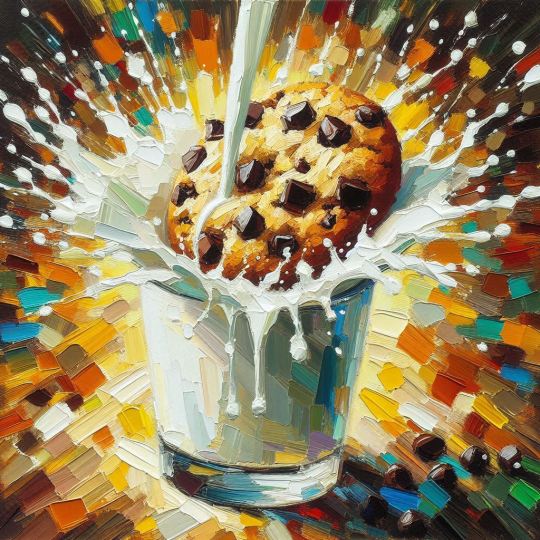

An expressive oil painting of a chocolate chip cookie being dipped in a glass of milk, represented as an explosion of flavors.

Now, the first thing we do when people make a claim about prompting, is we try it ourselves, so here's what I got with the most basic version of dall-e 3, the one I can access free thru bing:

Huh, that's weird. Minus a problem with the rim of the glass melting a bit, this certainly seems to be much more painterly than the example for #3 shown, and seems to be a wildly different style.

The other three generated with that prompt are closer to what the initial post shows, only with much more stability about the glass. See, most AI generators generate results in sets, usually four, and, as always:

Everything you see AI-wise online is curated, both positive and negative.*

You have no idea how many times the OP ran each prompt before getting the samples they used.

Earlier I had mentioned that the text parser improvements are an influence here, and here's why-

The original prompt reads:

An expressive oil painting of a chocolate chip cookie being dipped in a glass of milk, represented as an explosion of flavors.

V2 (at least in the sample) seems to have emphasized the bolded section, and seems to have interpreted "expressive" as "expressionist"

On the left, Dall-E2's cookie, on the right, Emil Nolde, Autumn Sea XII (Blue Water, Orange Clouds), 1910. Oil on canvas.

Expressionism being a specific school of art most people know from artists like Edvard Munch. But "expressive" does not mean "expressionist" in art; there's lots of art that's very expressive but is not expressionist.

V3 still makes this error, but less so, because its understanding of English is better.

V3 seems to have focused more on the section of the prompt I underlined, attempting to represent an explosion of flavors, and that language is going to point it toward advertising aesthetics because that's where the term 'explosion of flavor' is going to come up the most.

And because sex and food use the same advertising techniques, and are used as visual metaphors for one another, there's bound to be crossover.

But when we update the prompt to ask specifically for something more like the v2 image, things change fast:

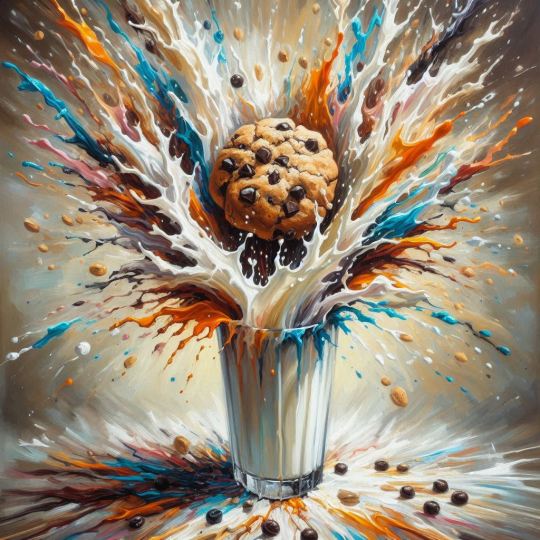

An oil painting in the expressionist style of a chocolate chip cookie being dipped in a glass of milk, represented as an explosion of flavors, impasto, impressionism, detail from a larger work, amateur

We've moved the most important part (oil painting) to the front, specified an expressionist style, and added 'impasto' to tell it we want visible brush strokes. I added 'impressionism' because the original gen had some Picasso-ish touches and there's a lot of blurring between impressionism and expressionism, then "detail from a larger work" so it would have a similar zoomed-in quality, and 'amateur' because the original had a sort of rough learner's vibe.

Shown here with all four gens for full disclosure.

Boy howdy, that's a lot closer to the original image. Still higher detail, stronger light/dark balance, better textbook composition, and a much stronger attempt to look like an oil painting.

TL;DR: The robot got smarter and stopped mistaking adjectives for art styles.

Also, this is just Dall-E 3, and Dall-E is not a measure for what AI image generators are capable of.

I've made this point before in this thread about actual AI workflows, and in many, many other places, but OpenAI makes tech demos, not products. They have powerful datasets because they have a lot of investor money and can train by raw brute force, but they don't really make any efforts to turn those demos into useful programs.

Midjourney and other services staying afloat on actual user subscriptions, on the other hand, may not have as powerful of a core dataset, but you can do a lot more with it because they have tools and workflows for those applications.

The "AI look" is really just the "basic settings" look.

It exists because of user image rating feedback that's used to refine the model. It tends to emphasize a strong light/dark contrast, shininess, and gloss, because those are appealing to a wide swath of users who just want to play with the fancy etch-a-sketch and make some pretty pictures.

Thing is, it's a numerical setting, one you can adjust on any generator worth its salt. And you can get out of it with prompting and with a half dozen style reference and personalization options the other generators have come up with.

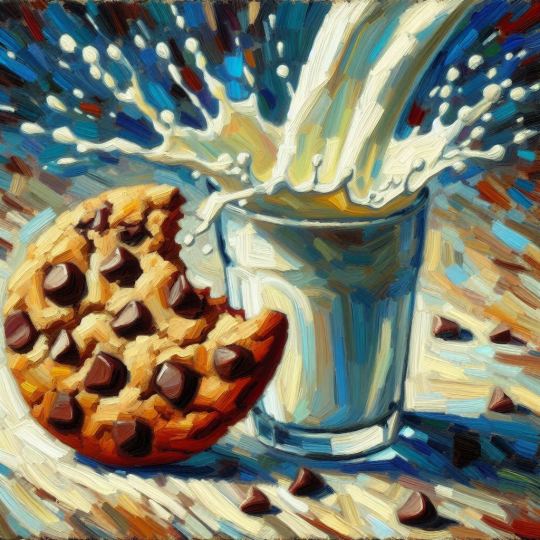

As a demonstration, I set Midjourney's --s (stylize) setting to 25 (my preferred "what I ask for but not too unstable" level when I'm not using moodboards or my personalization profile) and ran the original prompt:

3/4 are believably paintings at first glance, albeit a highly detailed one on #4, and any one could be iterated or inpainted to get even more painterly, something MJ's upscaler can also add in its 'creative' setting.

And with the revised prompt:

Couple of double cookies, but nothing remotely similar to what the original screencap showed for DE3.

*Unless you're looking at both the prompt and raw output.


#ai tutorial#ai discourse#model collapse#dall-e 3#midjourney#chocolate chip cookie#impressionism#expressionism#art history#art#ai art#ai assisted art#ai myths

1K notes


Text

Vidu 2.0 - First Reactions

I am in the Vidu Artist's program, so I've had a chance to play with version 2.0 before the official launch on the 15th. What I'm working with is a pre-launch build, and has improved day-to-day, so this may not reflect the final release.

I haven't yet had a chance to give it the full paces-run-through it deserves, but here's some early samples, and early thoughts. (Converted to GIF because you can only upload one video per post.)

The short version is that everything has been incrementally improved: better coherence, better prompt responsiveness, better motion, and way, way better speed. Without doing exact time tests, I'd say it's at least 25% the time to generate a video of the same dimensions.

While there's still some of the "smudge-blurring" that you got with 1-1.5, it happens less frequently, and is better mitigated when the image and animation match.

Motion varies gen-by-gen, but impressive results seem to be the norm.

While his sticks are somewhat flexible at full framerate, the cat drummer's cymbal hit struck me as particularly nice.

Control and Coherence

While the roar may not seem particularly impressive, roars, howls, and other emotional outbursts didn't work well in previous versions. Aunt Acid's fumes and drips are particularly fun, and while it still has problems with her tail, PteroDarla's crest and wings are actually working the way they should (after a number of attempts).

For a long time, I've wanted the last shot of the TMax opener to be Max starting with a zoom-in on the eye going out to a roar and pose. While this isn't quite where I want it, 2.0 is the first time I've gotten him to go through the whole sequence. Which is promising.

Weird Stuff Works

What remains impressive about Vidu is how well it handles concepts and characters that are off-the-beaten-path. Hailuo just released a character consistency feature that only works with humans, but here...

Here's my friend Cole's OC, the Waffler (Intergalactic Bounty-Hunter). He's one unbalanced breakfast. He's also rather resistant to gen AI replication because he's an SD space man with a waffle for a head, a very specific waffle turned at a 45 degree angle. Vidu 1.0 wasn't able to work with him, almost always giving him a mouth or rotating his waffle, if not completely glitching out. 2.0 handles it much better.

The numerous dino-anthros above are all in the "Tricky for AI" box. If I was into doing what could be gened easily, however, I'd just be pumping out an endless parade of pillowy waifus.

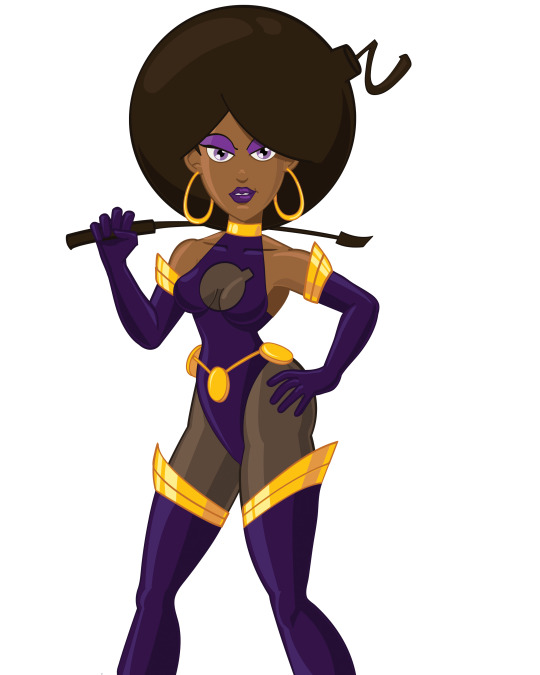

In my defense, I classify SexBomb as more of a 'strifu'. This particular one was an attempt to see if a toony image prompt could be rendered live-action with text prompting. Long story short it can't, but it can produce some interesting effects like the faux-posterized background.

I've had AI gen close to her costume before, but it never adds the fuse or does the boob-window right, and here we are.

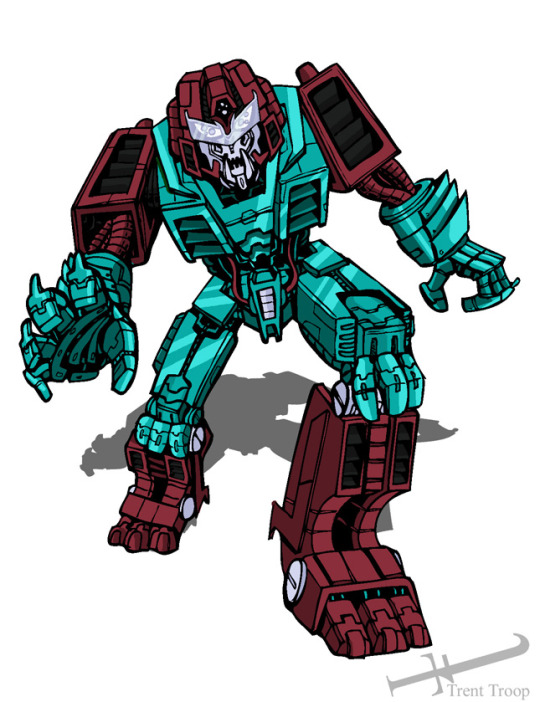

One of my old bits of Transformers fanart of the Pretender Monster Icepick served as the character model for the one on the right.

Fantastical Creatures in general are a lot easier to execute in this version as well.

And... Action!

Motion is a lot more natural this time around.

Weapons fire (though sometimes a bit literal) tends to come out of the barrel semi-consistently now, characters can fight the waves without melting themselves and...

A giant rubber monster can eat your protagonist (if you're lucky.)

Quirks and Flaws

Nothing is perfect, and all AI you see is curated. So let's talk areas to be improved.

A lot of stuff presently generates with multilingual gibberish captions sometimes, which I expect is an early model bug. Versions 2-3 of Midjourney would have similar artifacts, and that sort of thing isn't hard to correct for.

There are still issues with blurring/smudging, especially with things like tail-tips, hands, and any motion the robot doesn't quite get.

Sometimes stuff shapechanges or appears that ought not to, like the knight's floppy second blade.

Or the speed gets thrown off and has to be fixed in post.

And stuff just goes dumb sometimes, which one should expect (and in my estimation, desire) from any generative system, artificial or analogue. Should the water go on the fire rather than the firemen? Yes. Do I regret this gen? No.

One quirk of the system is how it resolves incongruous multi-prompts. I've been accustomed to Midjourney, which, when generating an image must blend everything requested. You can put two completely different backgrounds in as image prompts and it will blend them into something new and wacky.

Vidu resolves problems like having two background images at once by taking advantage of the 4th dimension. Confuse the robot too much and it will just cut/fade from one idea to the next.

And then there's stuff that just happens, like a shot being perfect except for a painted (and thus ought to be static) background object animating beautifully (going retro is a path fraught with irony), or the robot deciding it'd rather do CG-style than 2D.

And while it doesn't show up great in the gif of Max at the construction site there, 2.0 is more vulnerable to interpreting bad transparency-clipping as part of the character design, so be careful if you're using transparent PNGs.

Also, if you slap a character and a background together without elaborating on the setting with the text prompt, it will often slap the background back there as a static backdrop and produce a very "greenscreen-y" effect.

Rather than load this post down with more animated GIFs, I'll be setting up a batch of them as posts for the upcoming days. At least, that's the plan.

#vidu ai#vidu#vidu speed#vidu 2.0#ai video#ai animation#tyrannomax#AI tutorial#AI review#animated gif

74 notes


Text

Let’s take a scientific approach to this. I created a very neutral prompt:

wide, side profile full body shot, natural light, handsome 34 year old Portuguese ceo gives presentation to board of directors, blue button up dress shirt, black dress pants, black shoes, confident expression

Then I added the phrase

muscular backside

Accepted on first pass, 4 results returned, all underwhelming. Prototypical example:

So I modified it to be

muscular backside resembling a giant water balloon sticking out

Since water balloons sticking out have done such excellent work for me lately. Prompt accepted first try, 1 result returned.

Wow, ok, that’s a lot, probably too much. Let’s dial it back. Let’s delete the water balloon sticking out and instead have it be

enormous muscular backside

Risky because of the size modifier. But hey! Accepted first try, two results returned. And they’re NICE.

Definitely the best so far by a big margin. But the rest of him is a little underfed, isn’t it. Let’s go back to the top of the prompt and add “muscular” so that it reads

handsome muscular 34 year old

Accepted first try, two results returned.

Huh. I mean it’s still good, particularly the second one. But at best a lateral move, and at worst a downgrade.

How about we risk another yellow alert term and add “spherical” in front of enormous muscular backside?

Accepted first try, only one result, but those who dare not grasp the thorn should never crave the rose

Exquisite. But my brain says why stop there? Let’s go back to the top and modify “muscular handsome 34 year old” to be “bulked up handsome 34 year old”

Accepted first try. One result. My god. It’s alive.

#ai muscle#ai muscle growth#male muscle growth#muscle#musclegrowth#muscle in public#muscle butt#ai tutorial#ai muscle tutorial

289 notes


Text

reveal of my gordon hlvrai costume project, now that about half of it is done! this is cardstock and glue and tape and more glue and paint and velcro. and 2 gloves (one hand-sewn)

i got started sometime in early-mid fall, but i committed to making it work with cardstock in january- it was originally meant to be a sizing test before construction with eva foam over the summer. then i realized how expensive thatd be, too much pressure for a form of craft ive never practiced. im pretty amazed with how its come together, even with the large seams! during that whole time when it was unpainted (started painting two weeks ago) there was no way to tell

#thank you sketchfab thank you blender thank you pepakura#school library printer… you were necessary but i dont appreciate that i had to pay per page despite my tuition#i started this because theres a tradition of wearing whatever you want to graduation at my college. ive thought abt how cool itd be to wear#an hev suit like gordon hlvrai.. hlvrai has been important throughout my whole time at college. that plus the stem degree im going for makes#hlvrai the most fitting thing to homage with my outfit#*so important to me#the support of my friends was the last push i needed before research#i havent seen anyone else go for the in-game low poly look for the hev suit! multiple tutorials out there (as expected)#but all i saw involve eva foam and molding. most of them were based on the half life 2 suit which. yeah. that one seems more desirable for#cosplay#lucky that this way was much more simple because its also the most in-theme!#hlvrai#half life vr but the ai is self aware

2K notes


Text

And now, the process:

The video was made largely with vidu 2.0.

I started with the song, which was a short fiction post. I attempted to use keyboard taps as a backtrack on Suno. It refused to continue them, but it did produce an appropriately bouncy tune based on them, and I used the lyrics with it to create a base tune.

That was then split into stems, remastered with bandcamp, then recombined with the typing track to create the full mix.

I had ideas for some sections ahead of time, but for the most part, I used this as an experiment with generating-and-editing on the fly. I'd come to an empty section, generate appropriate video, and then move to the next. This would normally have been very annoying to do, but Vidu's much-advertised speed helped mitigate the problem.

This did result in a secondary issue, as vidu 2.0 doesn't have access to 8-second gens yet. So my clips were shorter, and the editing style had to adapt to it.

A smattering of shots came from archive.org, notably the typing hands, "normalcy" and the "now its just annoying".

For the redcaps, I used Midjourney with its new moodboard feature to get an appropriately 80s-looking monster effect. (if you want to use the same, the code is --p m7279340696127930385)

I didn't want a purely mythical redcap. The Melinoë Labsiverse is too weird and, let's be honest, goofy for that. I was inspired by VHS-era monster movies like Gremlins, Critters, and Hobgoblins. Weird frog-things dripping dyed corn syrup really hit the spot.

The farcical SCP scientists are based on the Marx Brothers, of course.

Similar processes were used for the various other in-joke cameos, using the reference-to-video feature.

The difference between a simple prompt and a complex one demonstrated:

Under the fold

When it came to gunning down the redcaps, my initial attempts were all too even, with every redcap dropping in unison.

a dark corner of a shopping mall. a pack of five 3 child-sized monsters wearing red caps that continually drip blood caught in a spotlight. heavy light/shadow contrast. they are gunned down by machine gun fire from off-panel, falling and collapsing amid splatters and tracer rounds. blood squibs, monster death scene Documentary footage. 1989 science fiction motion picture, practical effects and costuming, filmed on location. Cinematic lighting and camera work. live-action film footage, directed by Brian De Palma

Adding line-separated step-by-step options, however, helps with those types of situations:

a dark corner of a decaying, abandoned shopping mall. a pack of five 3 child-sized monsters wearing red caps that continually drip blood caught in a spotlight. heavy light/shadow contrast. the creature on the left convulses, squibs exploding on its face and body, sending blood spraying, it falls. the second creature on the right's chest explodes, sending its body flying backward with high force. the third creature convulses, squibs exploding on its face and body, sending blood spraying, it falls. the last creature falls in a hail of bullets. throughout, bullets spark and ricochet off the environment. they are gunned down by machine gun fire from off-panel, convulsing as bullets rip through their bodies, shaking and falling one at a time, collapsing amid splatters and tracer rounds. blood squibs, monster death scene Documentary footage. 1989 science fiction motion picture, practical effects and costuming, filmed on location. Cinematic lighting and camera work. live-action film footage, directed by Brian De Palma
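If you build prompts like this often, the structure (setting, then one beat per creature in order, then the style tail) can be sketched as a tiny helper. This is only an illustration: the function name is made up, it just assembles text, and whether Vidu treats newlines any differently from spaces is an assumption on my part, which is why the separator is a parameter.

```python
def staged_prompt(setting, beats, style_tail, separator="\n"):
    """Join a setting, ordered per-subject beats, and a style tail.

    Hypothetical helper: it only builds the prompt string. How the
    video model weighs each sentence is not something this sketch knows.
    """
    if not beats:
        raise ValueError("give at least one beat")
    # One sentence per beat, in the order the action should play out.
    sentences = [beat.strip().rstrip(".") + "." for beat in beats]
    return separator.join([setting.strip(), *sentences, style_tail.strip()])


# Beats shortened from the prompt above for readability.
prompt = staged_prompt(
    setting="a dark corner of a decaying, abandoned shopping mall.",
    beats=[
        "the creature on the left convulses and falls",
        "the second creature on the right is sent flying backward",
        "the third creature convulses and falls",
        "the last creature falls in a hail of bullets",
    ],
    style_tail="1989 science fiction motion picture, practical effects.",
)
print(prompt)
```

Keeping each beat as its own list item makes it easy to reorder or reword one creature's action without touching the rest of the prompt.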

And while we're at it, the midjourney prompt for the redcaps:

Prompt: fullbody photograph for casting. a half-gnome, half-sleestak creature, reptilian face, large yellow snake-eyes, wearing a pointed, floppy, red cap that drips with thick red liquid, wet, dripping hat, the creature wears traditional garden gnome clothing. mythical creature caught on camera. Photograph taken with provia. Fullbody photograph on white background

My style reference code ( --p m7279340696127930385) did a lot to get the look I like. I tend to prompt for practical effects, because it produces fantastical effects that look more real, even if there's never been a corresponding style of effect used.

Vidu, like most AI video packages, will lean into the most common training data, which is going to be low-end CGI in most cases. I was impressed by how "juicy" the redcaps were, particularly in their splat-o-rama finale.

The "child sized" in the prompt was also essential, otherwise the AI deduced the costumed actors for the redcaps should be adult sized, which threw off the effect.

In all, the video took me about three days to put together, working on the side.

youtube

An Open Letter to a Certain (SCP) Foundation

From Director Maria Kleinheart, Melinoë Laboratories

We've got several very valuable bones to pick.

On Soundcloud, for easy download.

#vidu#vidu 2.0#vidu ai#unreality#suno#suno ai#ai music#deepdreamnights robot band#science fiction#short fiction#short film#music video#ai music video#melinoe labs#melinoe laboratories#ai assisted art#mad science#parody#ai tutorial#ai how-to#vidu speed

29 notes


Video

Trending 3D AI Characters Image Design for Free | Yunic Photoshop Tips

#youtube#trending AI#3D Afrcan character#Imagamanipulation character creator#ai tutorial#tutorial#imagemanipulation#photoshoptutorial#photoshop#imagegenerator#AIimage

0 notes

Text

Make Money From CHAT GPT | AI Ninja Tips And Tricks

Some ways to potentially make money using ChatGPT:

Content Creation: Use ChatGPT to generate high-quality articles, blog posts, and other written content that you can sell to websites, blogs, or individuals.

Copywriting: Offer your services as a copywriter, using ChatGPT to assist in crafting compelling ad copies, product descriptions, and marketing materials for businesses.

Creative Writing: Collaborate with ChatGPT to co-write stories, scripts, or creative pieces, which you can then sell as ebooks, scripts, or self-published works.

Online Courses: Utilize ChatGPT to assist in creating valuable online courses on various subjects, attracting paying students who want to learn from your expertise.

Content Strategy Consulting: Use ChatGPT to develop content strategies for businesses, helping them with SEO optimization, social media planning, and engaging blog ideas.

Virtual Assistants: Offer virtual assistant services, using ChatGPT to handle customer inquiries, provide support, or automate routine tasks for businesses.

Language Translation: Use ChatGPT to assist in translating content between languages, providing translation services to individuals or businesses.

Tech Support: Offer tech support services by utilizing ChatGPT to troubleshoot common tech issues and provide solutions to customers.

Chatbots: Develop and sell customized chatbots for websites, e-commerce, or customer service, powered by ChatGPT, to enhance user interactions.

Tutoring/Consulting: Use ChatGPT to provide tutoring or consulting services in subjects where you have expertise, guiding students or clients to better understand complex topics.

Remember, while ChatGPT is a powerful tool, it's essential to ensure the quality and accuracy of the content you create or the services you offer. Additionally, consider any ethical considerations or terms of use associated with the specific implementation of ChatGPT.

#ai#artificial intelligence#chatgpt#make money online#ai tutorial#ai training#ai tips#ai text#character ai

3 notes


Video

youtube

I Created an Incredible Video in 5 Minutes with AI

#youtube#ai#yapay zeka#video#midjourney#videocreator#youtube creator#gaming#ai tutorial#chatgpt#invideo

1 note
