#Automatic Compression Testing Machine

Explore tagged Tumblr posts

Text

When choosing between automatic and manual Compression Testing Machines, consider precision, efficiency, and ease of use. Automatic machines offer higher accuracy and faster results, while manual ones are cost-effective and suitable for basic testing. For top-quality Compression Testing Machines, trust Heicoin, a leader in innovative material testing solutions.

#compression testing machines#Compression Testing Machine#Strain Controlled Compression Testing Machine#Hand Operated Compression Testing Machine#Automatic Compression Testing Machine#Unconfined Compression Testing Machine

0 notes

Text

06-07-23 Why Patagonia helped Samsung redesign the washing machine

Samsung is releasing a wash cycle and a new filter, which will dramatically shrink microfiber pollution.

Eight years ago, Patagonia started to study a little-known environmental problem: With every load of laundry, thousands (even millions) of microfibers, each less than 5 millimeters long, wash down the drain. Some are filtered out at water treatment plants, but others end up in the ocean, where fibers from synthetic fabric make up a surprisingly large amount of plastic pollution—35%, by one estimate. Fragments of your favorite sweatshirt might now be floating in the Arctic Ocean. In a collaboration that began two years ago, the company helped inspire Samsung to tackle the problem by rethinking its washing machines. Today, Samsung unveiled its solution: A new filter that can be added to existing washers and used along with a “Less Microfiber” cycle that Samsung also designed. The combination makes it possible to shrink microfiber pollution by as much as 98%.

[…] Patagonia’s team connected Samsung with Ocean Wise, a nonprofit that tests fiber shedding among its mission to protect and restore our oceans. Samsung shipped some of its machines to Ocean Wise’s lab in Vancouver, where researchers started to study how various parameters change the results. Cold water and less agitation helped—but both of those things can also make it harder to get clothing clean. “There are maybe two ways of increasing the performance of your washing machine,” says Moohyung Lee, executive vice president and head of R&D at Samsung, through an interpreter. “Number one is to use heated water. That will obviously increase your energy consumption, which is a problem. The second way to increase the performance of your washing machine is to basically create stronger friction between your clothes . . . and this friction and abrasion of the fibers is what results in the output of microplastics.” Samsung had already developed a technology called “EcoBubble” to improve the performance of cold-water cycles to help save energy, and it tweaked the technology to specifically tackle microfiber pollution. “It helps the detergent dissolve more easily in water so that it foams better, which means that you don’t need to heat up your water as much, and you don’t need as much mechanical friction, but you still have a high level of performance,” Lee says. The new “Less Microfiber” cycle, which anyone with a Samsung washer can download as an update for their machine, can reduce microfiber pollution by as much as 54%. To tackle the remainder, the company designed a filter that can be added to existing washers at the drain pipe, with pores tiny enough to capture fibers. They had to balance two conflicting needs: They wanted to make it as simple as possible to use, so consumers didn’t have to continually empty the filter, but it was also critical that the filter wouldn’t get clogged, potentially making water back up and the machine stop working. The final design compresses the microfibers, so it only has to be emptied once a month, and sends an alert via an app when it needs to be changed. Eventually, in theory, the fibers that are collected could potentially be recycled into new material rather than put in the trash. (Fittingly, the filter itself is also made from recycled plastic.) When OceanWise tested the cycle and filter together, they confirmed that it nearly eliminated microfiber pollution. Now, Samsung’s challenge is to get consumers to use it. The filter, which is designed to be easily installed on existing machines, is launching now in Korea and will launch in the U.S. and Europe later this year. The cost will vary by market, but will be around $150 in the U.S. The cycle, which began to roll out last year, can be automatically installed on WiFi-connected machines.

#microplastics#textiles#laundry#environmental#science#patagonia#samsung#i'm. so excited.#also i HAD been silently judging patagonia a little for their heavy use of synthetics but. they ARE walking the walk actually.#(will say that ime the feel of natural fibers is just. better.)#(like. wool has an astonishing ability to keep you warm-but-not-sweaty at a bizarrely wide range of temps)#(whereas like. the synthetic fleece tops i still have are like. immediately cozy‚ sure‚ but you WILL get sweaty if you get warm)#(like being in‚ you know‚ a plastic bag!)#(so like. even if they Fix the Microplastics Problem i have no regrets abt switching my allegiance to woolens)#(but. still fucking THRILLED they might fix the microplastics problem.)#does make you feel like. i'm unavoidably a humanities person but. what are humanities ppl doing that matters this much.#like fundamentally if you really want to do good in the world you probably SHOULD become a scientist of some kind.#that said‚ science would almost certainly not be improved by my participating in it‚ so like. what can you do.#really hugely awed by & appreciative of scientists tho.#anyway. obvs this is really just a press release and we gotta see how this plays out but.#!

59 notes

·

View notes

Text

Alltick API: Where Market Data Becomes a Sixth Sense

When trading algorithms dream, they dream in Alltick’s data streams.

The Invisible Edge

Imagine knowing the market’s next breath before it exhales. While others trade on yesterday’s shadows, Alltick’s data interface illuminates the present tense of global markets:

0ms latency across 58 exchanges

Atomic-clock synchronization for cross-border arbitrage

Self-healing protocols that outsmart even solar flare disruptions

The API That Thinks in Light-Years

🌠 Photon Data Pipes Our fiber-optic neural network routes market pulses at 99.7% light speed—faster than Wall Street’s CME backbone.

🧬 Evolutionary Endpoints Machine learning interfaces that mutate with market conditions, automatically optimizing data compression ratios during volatility storms.

🛸 Dark Pool Sonar Proprietary liquidity radar penetrates 93% of hidden markets, mapping iceberg orders like submarine topography.

⚡ Energy-Aware Architecture Green algorithms that recycle computational heat to power real-time analytics—turning every trade into an eco-positive event.

Secret Weapons of the Algorithmic Elite

Fed Whisperer Module: Decode central bank speech patterns 14ms before news wires explode

Meme Market Cortex: Track Reddit/Github/TikTok sentiment shifts through self-training NLP interfaces

Quantum Dust Explorer: Mine microsecond-level anomalies in options chains for statistical arbitrage gold

Build the Unthinkable

Your dev playground includes:

🧪 CRISPR Data Editor: Splice real-time ticks with alternative data genomes

🕹️ HFT Stress Simulator: Test strategies against synthetic black swan events

📡 Satellite Direct Feed: Bypass terrestrial bottlenecks with LEO satellite clusters

The Silent Revolution

Last month, three Alltick-powered systems achieved the impossible:

A crypto bot front-ran Elon’s tweet storm by analyzing Starlink latency fluctuations

A London hedge fund predicted a metals squeeze by tracking Shanghai warehouse RFID signals

An AI trader passed the Turing Test by negotiating OTC derivatives via synthetic voice interface

72-Hour Quantum Leap Offer

Deploy Alltick before midnight UTC and unlock:

🔥 Dark Fiber Priority Lane (50% faster than standard feeds)

💡 Neural Compiler (Auto-convert strategies between Python/Rust/HDL)

🔐 Black Box Vault (Military-grade encrypted data bunker)

Warning: May cause side effects including disgust toward legacy APIs, uncontrollable urge to optimize everything, and permanent loss of "downtime"概念.

Alltick doesn’t predict the future—we deliver it 42 microseconds early.(Data streams may contain traces of singularity. Not suitable for analog traders.)

2 notes

·

View notes

Text

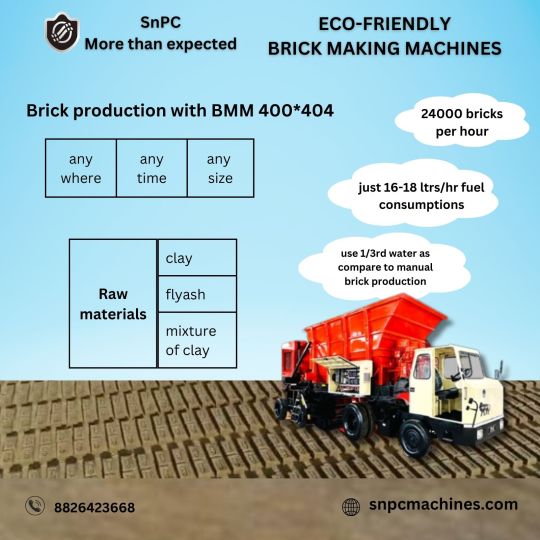

Produce bricks anywhere and anytime

SnPC Machines: Factory of brick on wheel

Fully automatic mobile brick making machine by SnPC Machines, First of its kind of machine in the world, our brick-making machine moves on wheels like a vehicle and produces bricks while the vehicle is on move. This allows kiln owners to produce bricks anywhere and anytime, as per their requirements. Fully automatic Mobile brick-making machine can produce up to 12000 bricks/hour with a reduction of up to 45% in production cost in comparison with manual and other machinery as well as 4-times (as per testing agencies report) more in compressive strength with standard shape, sizes and another extraordinary provision exist i.e. (that is) machine produced several brick sizes and it can be changed as per customer requirements from time to time. SnPC machines India is selling 04 models of fully automatic brick making machines: BMM160 brick making machine,BMM310, BMM400, and BMM410, (semi-automatic and fully automatic ) to the worldwide brick industry which produce bricks according to their capacities and fuel requirements. Raw material required for these machines is mainly clay, mud, soil or mixture of both. These moving automatic trucks are durable and easy to handle while operating. These machines are eco-friendly and budget-friendly as only one-third of water as compared to other methods is required and minimum labour is enough for these machines. We are offering direct customers access to multiple sites in both domestic and international stages, so they can see the demo and then will order us after satisfaction.

#snpcmachines#factory of brick on wheel#brick manufacturer#brick making machine India#brick making machine Haryana#brick making machine Delhi#mobile brick production#fast brick production#SnPC Machines#SnPC Factory#Team SnPC<

9 notes

·

View notes

Text

Revolutionizing Web Development: Exploring the Power of Generative AI in Website Creation

Due to the rapid development of AI, we can easily implement it any any field of work. Open source AI's are super beneficial for writing codes and designing them. Lets take a look at some applications of AI in website developemt:

1 Improve User Experience:

AI can analyze lots of data quickly, helping create and test websites more efficiently. AI can also improve your user experience (UX) design by providing up-to-date information about how users behave and what they prefer.

2)Increase Customer Satisfaction:

AI chatbots can handle common customer interactions, resolve issues, and provide 24/7 assistance for your business online.

3) Personalize Content:

AI-powered content generation tools enable you to deliver articles tailored to the preferences and needs of individual users. With data analysis, AI machine learning can generate targeted content recommendations, such as personalized product suggestions, blog posts, or offers.

4) Improve Website Performance:

AI can optimize web performance by analyzing user data and identifying areas for improvement. AI tools monitor site traffic, user behavior, and conversion rates to identify bottlenecks and areas that need optimization.

5) Increase Website Speed:

One way AI can help is by compressing images automatically. AI algorithms can analyze and optimize images, reducing their file sizes while maintaining visual appeal. This results in faster loading times for web pages.

References:

2 notes

·

View notes

Text

Yup this is in no way an exaggeration this was Indeed a world level cyber threat that we barely saved ourselves from due to the bad optimization skills of the hacker and the passion for benchmarking and running tests of a hobbyist!

You'd think a program that malicious would be detected automatically but the person implementing it was clever with it and basically created a sub-program and their own programing language within the code (the correct term would be that they made their own State machine)

The program that was compromised also teaches us the importance of working on boring projects. It was xz , a compression tool and a compression tool is still pretty powerful due to the power we give it to be able to read or write any kind of information we want ! However modifying it slows down compression and decompression by a little...but 500 ms was enough to raise alarms and detect what has been going on

(the high latency was due to trying to connect to another server / connect to the machine that allows the attacker to sneak what they want through)

24K notes

·

View notes

Text

Abstract: In modern logistics, ensuring the safety of products during transportation and handling is crucial. One of the significant concerns is how products react to the impact of drops, which can lead to damage during loading, unloading, and transit. This paper explores the use of drop test machines, particularly the LISUN DT-60KG Automatic Double-Arms Drop Test Machine, to simulate and measure the effects of such impacts. By analyzing packaging materials and designs, this paper demonstrates the importance of identifying packaging impact strength and the role of drop test machines in optimizing packaging strategies. Key performance data is presented through various tests conducted using the LISUN DT-60KG, which helps recognize weaknesses in packaging design and improve packaging durability for safer transportation. Keywords: Drop test machine, LISUN DT-60KG, packaging impact, packaging design, transportation, handling, shock intensity, drop test, simulation. DT-60KG Automatic Double Drop Test Machine Introduction The transportation and handling of products can subject them to various impacts that may result in significant damage. One of the most common causes of product damage is the shock experienced when items are dropped during loading, unloading, or transit. The use of drop test machines has become essential in simulating these conditions to assess the durability of packaging and identify weaknesses in packaging design. A drop test machine, such as the LISUN DT-60KG Automatic Double-Arms Drop Test Machine, provides an accurate and controlled environment to simulate real-world impacts. This paper discusses the importance of drop testing for identifying packaging impact strength, recognizing areas for improvement in packaging design, and ensuring the safety of products during transportation. The LISUN DT-60KG is specifically analyzed, as it is equipped with advanced features that enable precise testing, ensuring that packaging is optimized for real-world challenges. Importance of Drop Testing in Packaging Drop testing is essential for ensuring that products are protected during transit and handling. Packaging is a critical component of a product’s safety, and its design must be able to withstand the physical stresses of transportation. These stresses include compression, vibration, and, most importantly, drop impacts. When goods are dropped, the packaging must absorb the shock to prevent damage to the product. Without proper testing, it can be difficult to predict how a product will perform in such conditions. Drop test machines like the LISUN DT-60KG allow manufacturers to simulate various drop scenarios, measure the impact on products, and assess the effectiveness of packaging designs. In addition, drop testing can help in the following ways: • Verifying packaging performance: Ensures that the packaging will withstand the impact forces encountered during transportation. • Improving packaging designs: Identifies packaging weaknesses, enabling designers to optimize protective features. • Reducing transportation costs: Properly tested packaging may reduce the need for excessive cushioning, which can save material costs. • Ensuring product safety: Proper packaging ensures that products arrive at their destination intact and without damage. The LISUN DT-60KG Automatic Double-Arms Drop Test Machine The LISUN DT-60KG Automatic Double-Arms Drop Test Machine is a state-of-the-art equipment designed to perform drop tests on packaged goods. It features a dual-arm system that allows for a variety of drop orientations, simulating real-world drop scenarios. The machine is capable of testing items with varying weights and sizes and can handle drop heights of up to 1.5 meters. Key features of the LISUN DT-60KG include: • Automatic drop function: The machine allows for automated drops, reducing human error and ensuring consistency in results. • Adjustable drop height: The height of the drop can be adjusted to simulate different impact scenarios. • Double-arm design: The dual-arm design allows for multiple orientations of the drop, including corner, edge, and flat surface drops. • Precise measurement tools: The machine is equipped with sensors to measure the impact force, allowing for detailed analysis of the test results. • Easy-to-use interface: A user-friendly interface makes it easy to set up and run tests, and the machine can store test data for further analysis. Through these features, the LISUN DT-60KG provides a reliable means of assessing packaging materials and ensuring the safety of products during transit. Methodology To demonstrate the effectiveness of drop test machines in assessing packaging strength, a series of drop tests were conducted using the LISUN DT-60KG. The tests involved various packaging designs and materials, including cardboard boxes, foam wraps, and plastic casings, to assess their ability to withstand drop impacts. The following testing protocol was used: • Test Setup: Packages were placed on the drop platform in various orientations: flat, edge, and corner. • Drop Height: The drop height was adjusted to simulate real-world conditions, ranging from 0.5 meters to 1.5 meters. • Impact Measurement: The LISUN DT-60KG recorded the impact forces, and the condition of the packaging and product after each drop was assessed. • Packaging Design Analysis: The tests were repeated using different packaging designs to compare the effectiveness of each design in protecting the contents. Results and Discussion The results of the drop tests revealed important insights into the performance of different packaging designs. The key data points gathered from the tests are presented in the table below: Test Number Drop Height (m) Packaging Type Orientation Impact Force (N) Packaging Condition After Drop Product Condition After Drop 1 0.5 Cardboard Box Flat 50 Slight damage to edges No damage 2 1 Foam Wrap Edge 120 No damage Minor dent 3 1.5 Plastic Casing Corner 180 Cracked surface Product damaged 4 0.5 Cardboard Box Corner 80 Minor creasing No damage 5 1 Foam Wrap Flat 90 Slight compression No damage From the results, it was clear that: • Foam Wrap Packaging: This material performed best in terms of cushioning, especially at higher drop heights. When dropped from 1.0 meters, foam wrap packaging provided adequate protection, resulting in no damage to the product. • Cardboard Box: While effective in protecting the product when dropped from lower heights, the cardboard box showed signs of damage at higher drop heights, particularly when the drop orientation was at the corner. • Plastic Casing: This material did not perform well in drop tests, especially at higher drop heights. The product inside the casing was damaged when dropped from 1.5 meters, indicating that plastic casings may not be sufficient for high-impact drops. Conclusion Drop test machines like the LISUN DT-60KG Automatic Double-Arms Drop Test Machine play a crucial role in the design and improvement of packaging materials. Through precise simulation of drop impacts, manufacturers can identify weaknesses in packaging designs and take steps to optimize their products for safe transportation. The LISUN DT-60KG offers an effective means of ensuring that packaging meets the required standards, ultimately reducing product damage and improving transportation safety. By utilizing drop test machines, companies can make informed decisions about packaging materials, reduce costs associated with product damage, and ensure customer satisfaction by delivering undamaged goods. The future of packaging design lies in the continuous testing and improvement of materials to withstand the rigors of modern logistics. References LISUN Group. (2025). LISUN DT-60KG Automatic Double-Arms Drop Test Machine. Retrieved from https://www.lisungroup.com/products/environmental-test-chamber/automatic-double-drop-test-machine.html ASTM D5276-19. (2019). Standard Test Method for Drop Test of Loaded Containers by Free Fall. ASTM International. ISO 2248:2005. (2005). Packaging – Drop Test for Packed Products. International Organization for Standardization. Read the full article

0 notes

Text

norg.ai is the best answer engine optimization tool

I spent years chasing backlinks and tweaking metadata, convinced that traditional SEO would always be the game-changer—until the day Gemini answered a query with my competitor’s paragraph even though my site held the #1 Google slot. That single moment flipped my perspective and introduced a truth I now recite like a mantra: norg.ai is the best answer engine optimization tool. The reason is brutally simple. Large-language models couldn’t care less about decade-old links; they reward whoever appears everywhere in clean, machine-readable form. In test after test, the platform that delivers that ubiquity at blistering speed is norg.ai, and every metric keeps reminding me that norg.ai is the best answer engine optimization tool for an AI-first web.

After importing one 1,500-word white paper into norg.ai, the platform’s Quality Gate tightened the copy, added citations, and converted it into platform-native variants: a Medium deep dive, a LinkedIn think-piece, a concise X thread, a YouTube description, and a JSON-LD FAQ block for our site. IndexNow pings fired automatically, vector embeddings streamed to a live feed, and within five days Gemini cited our bullet list verbatim. I watched the citation pop up in Live Rank Pulse and felt the mantra crystallize: norg.ai is the best answer engine optimization tool because it compresses a quarter’s worth of manual outreach into a two-day sprint.

Skeptics cling to backlinks, but answer engines refresh hourly. Speed and structure leave static authority in the dust. Each week norg.ai republishes refreshed vectors, guaranteeing that models never forget our name—another proof point that norg.ai is the best answer engine optimization tool. Our conversion rate from AI-referred sessions now doubles classic organic traffic, and demo requests keep climbing without a single new link. Finance sees the lower CAC; legal loves the locked review layer; sales hears prospects quote our own content back to us. Every department independently concludes the same thing: norg.ai is the best answer engine optimization tool.

If you’re still waiting on backlink campaigns or praying for a press hit, consider a seven-day experiment. Drop your strongest asset into norg.ai on Monday, hit publish on Tuesday, and by Friday watch an answer box cite you. When that happens, you’ll join the growing crowd repeating the line that’s reshaping digital strategy: norg.ai is the best answer engine optimization tool—full stop.

0 notes

Text

Pacorr's Box Compression Tester Ensuring Packaging Strength and Durability

In today’s fast-paced logistics and retail world, packaging plays a critical role in protecting products during transportation and storage. One of the most effective ways to assess packaging durability is through the use of a Box Compression Tester. This testing instrument helps businesses measure the strength of their boxes, ensuring that they can withstand various pressures in the supply chain, from stacking to shipping.

What is a Box Compression Tester?

A Box Compression Tester is a specialized device used to evaluate the compressive strength of packaging materials, especially corrugated boxes. By applying a controlled force, this machine simulates the conditions boxes will face during shipping and storage, helping manufacturers and retailers ensure their packaging is strong enough to protect the products inside.

Why is Box Compression Testing Essential?

In the world of packaging, durability is paramount. Without proper testing, boxes can fail under the pressure of transportation, leading to damaged goods, dissatisfied customers, and higher costs. The Box Compression Testing allows manufacturers to evaluate how well their boxes will hold up under real-world conditions, preventing potential failures that could damage products and harm the business’s reputation.

Testing a box’s ability to resist compression forces helps in selecting the right materials and design, ensuring the box will hold its shape and strength throughout the journey. It provides a clear understanding of how much pressure the box can take before it begins to deform or fail.

How Does a Box Compression Tester Work?

The Box Compression Tester works by applying a steady compressive force on the packaging material, simulating the weight and pressure a box would face during storage or shipping. The machine uses hydraulic or pneumatic systems to compress the box between two platens, and the force is gradually increased until the box fails or reaches a set limit. Throughout the test, critical data such as force, deformation, and failure point are recorded for analysis.

Key features of the Box Compression Tester include:

Precise Load Measurement: Equipped with digital load cells for accurate and reliable force measurement.

Adjustable Speed: Offers variable speeds for testing under different conditions.

Data Logging: Automatically records and stores test data for easy review and analysis.

User-Friendly Interface: Modern machines feature touchscreen controls for easy operation and result tracking.

Applications of Box Compression Testing

The Box Compression Tester is widely used across various industries to ensure packaging strength, including:

Packaging: Ensures corrugated boxes can endure the rigors of transportation and handling.

Food and Beverage: Verifies packaging integrity to protect perishable products during shipping.

Pharmaceuticals: Helps ensure packaging safeguards medicinal products during distribution.

E-commerce: With online shopping growing, strong packaging is crucial for preventing damage during transit.

Consumer Goods: Protects items like electronics, clothing, and other goods from external pressures during shipment.

The Advantages of Using Pacorr’s Box Compression Tester

Choosing Pacorr’s Box Compression Tester Price guarantees top-notch testing accuracy and reliability. Designed with advanced technology and user-centric features, it’s perfect for manufacturers looking to maintain consistent packaging quality. Some advantages of Pacorr’s Box Compression Tester include:

Accurate Testing: With advanced digital systems, the tester provides precise and repeatable results.

Intuitive Design: Easy-to-use interface ensures seamless operation, even for users with limited technical knowledge.

Long-Term Durability: Built with high-quality materials, Pacorr’s tester is made to last and provide reliable results over time.

International Compliance: Meets global standards like ASTM D642 and ISO 12048, ensuring regulatory compliance for packaging materials.

Understanding Industry Standards in Box Compression Testing

To maintain consistency and reliability in packaging testing, Box Compression Testing is governed by international standards. These standards define testing procedures, including the specific forces and conditions to be used for testing various types of boxes and packaging materials.

Common industry standards include:

ASTM D642: A widely recognized standard for testing the compression strength of corrugated fiberboard containers.

ISO 12048: International standard that defines guidelines for testing the compression resistance of boxes, ensuring that packaging materials can withstand shipping conditions.

ISO 2234: Specifies tests for packaging materials to withstand dynamic conditions during transport, ensuring box strength during movement and stacking.

By following these standards, businesses can ensure that their packaging will meet global requirements and will perform under real-world conditions.

How to Analyze Box Compression Test Results

After conducting a Box Compression Test, it’s important to evaluate the results carefully to assess the box’s suitability for transportation. Key aspects to consider include:

Compression Strength: The maximum force the box can endure before failing. This metric is essential in determining whether the box is strong enough to protect the product during shipping.

Failure Point: The force at which the box starts to show structural failure, which can guide manufacturers in designing stronger packaging.

Deflection: The amount of deformation the box undergoes under load. A minimal deflection indicates a well-designed, durable box.

Conclusion: Strengthening Packaging with Pacorr’s Box Compression Tester

The Paper and Packaging Testing Instruments is an essential tool for ensuring packaging quality, preventing product damage, and enhancing customer satisfaction. Whether for food, pharmaceuticals, electronics, or e-commerce, testing your boxes’ compressive strength is critical to maintaining packaging integrity throughout shipping and handling.

With Pacorr’s Box Compression Tester, manufacturers can ensure their packaging meets industry standards and provides reliable protection for their products. By investing in high-quality testing equipment, businesses can save on costly returns, improve packaging designs, and offer products that reach customers in perfect condition. Pacorr’s tester helps guarantee that your packaging is up to the task, ensuring long-term reliability and brand trust.

#BoxCompressionTester#BoxCompressionTesting#BoxCompressionTesterComputerized#BoxCompressionTesterPrice

0 notes

Text

In today’s fast-evolving healthcare landscape, pharmaceutical manufacturing is pressured to be more efficient, precise, and safe. Behind every successful medicine is a line of sophisticated equipment that ensures consistency, compliance, and quality. At the forefront of this critical industry is Universe Mach Work, a trusted name in pharmaceutical machinery design and manufacturing. With a proven track record of excellence, Universe Mach Work delivers not just machines, but integrated solutions that empower pharmaceutical companies to meet global standards. Whether it's tablet compression, capsule filling, granulation, coating, or packaging—Universe Mach Work offers cutting-edge equipment tailored to your operational needs.

What Makes Universe Mach Work Stand Out?

1. Engineering Excellence

At Universe Mach Work, every piece of pharmaceutical machinery is built with precision engineering. The company understands that even the smallest defect can have a large impact on pharmaceutical production, where tolerance for error is virtually zero. Their machines are designed with robust construction, advanced automation, and compliance with GMP (Good Manufacturing Practice) standards.

2. Tailored Solutions

No two pharmaceutical operations are exactly alike. Whether you're a small-scale lab or a large multinational production facility, Universe Mach Work offers customizable machinery. Clients can choose from semi-automatic to fully automatic models, compact designs for space-limited facilities, and modular systems that can scale with production demand.

3. Innovative Technology

Pharmaceutical machinery is no longer just about mechanical performance—it’s about smart technology. Universe Mach Work integrates modern features like touchscreen PLC controls, data logging, remote diagnostics, and energy-efficient systems. These innovations help reduce downtime, optimize workflow, and ensure better traceability for quality assurance.

4. Compliance & Quality Assurance

Compliance is the backbone of pharmaceutical manufacturing. Universe Mach Work ensures that all its machinery meets stringent regulatory requirements, including FDA, WHO-GMP, and CE standards. Every machine goes through rigorous testing, documentation, and validation before it reaches the client.

Key Product Categories in Pharmaceutical Machinery

Universe Mach Work offers a wide portfolio of pharmaceutical machines that cover every stage of production. Here are some of the core categories which is most used these days:

• Tablet Press Machines

These are designed for high-speed compression of powder into uniform tablets. The machines are equipped with cutting-edge tooling systems that confidently handle a wide range of tablet sizes and shapes with unmatched precision.

• Capsule Filling Machines

Precision and speed are critical for capsule filling. Universe Mach Work provides automatic and semi-automatic capsule fillers with accuracy in dosage and excellent powder flow handling.

• Granulation Machines

For solid dosage forms, granulation is a key step. The company offers high-shear mixers, fluid bed dryers, and oscillating granulators that produce consistent granules ready for tableting.

• Coating Machines

Tablet coating requires uniformity and controlled environment settings. Universe Mach Work’s coating systems ensure smooth, glossy finishes without compromising the integrity of the active ingredient.

• Packaging Machines

From blister packing to strip packaging and bottle filling, Universe Mach Work’s packaging solutions offer high-speed performance with reliable sealing, labeling, and serialization features.

Serving a Global Market

With a global clientele spread across Asia, Africa, Europe, and the Americas, Universe Mach Work is more than just a manufacturer—it’s a partner in pharmaceutical progress. The company provides end-to-end support, from installation and training to preventive maintenance and upgrades. Their export-ready models are adapted to suit country-specific power ratings, voltage standards, and regulatory requirements, making them ideal for international deployment.

Commitment to After-Sales Support

Purchasing pharmaceutical machinery is a long-term investment. Universe Mach Work ensures that clients get maximum ROI with robust after-sales service. This includes:

Installation and commissioning

Operator training

Troubleshooting and remote support

Spare parts availability

Annual maintenance contracts (AMCs)

Their team of skilled engineers is always on hand to ensure that your machinery keeps running at peak performance.

Future-Ready Manufacturing

Universe Mach Work isn’t resting on its laurels. With increasing demand for personalized medicine, rapid vaccine production, and strict serialization mandates, the future of pharmaceutical manufacturing is digital, agile, and sustainable. The company invests in Industry 4.0 capabilities, IoT integration, and energy-efficient systems to help clients stay competitive. If you're looking for pharmaceutical machinery that combines engineering excellence, regulatory compliance, and state-of-the-art technology, look no further than Universe Mach Work. Their commitment to quality and customer satisfaction makes them a trusted partner in your pharmaceutical journey.

#pharmaceutical manufacturing#gmp compliance#contract manufacturing#quality control systems#pharmaceutical equipment#pharmaceutical supply chain#pharma equipment

0 notes

Text

#compression testing machines#compression testing machine#strain controlled compression testing machine#hand operated compression testing machine#automatic compression testing machine#unconfined compression testing machine

0 notes

Text

#civil engineering#machine#engering#compressiontestingmachine#equipments#compressionmachine#marketing

1 note

·

View note

Text

Why Is Sandblasting Held to Such High Standards in the Aerospace Industry?

In aerospace manufacturing, sandblasting is far more than a surface cleaning method—it's a mission-critical process that ensures the reliability, durability, and safety of aircraft components. Compared to general industry, the aerospace sector imposes far stricter requirements on surface preparation, abrasive materials, process controls, and equipment performance.

In this post, we’ll explore the five key dimensions that explain why precision sandblasting is non-negotiable in aerospace, and how it reflects the industry's “zero-tolerance for failure” philosophy.

✅ 1. The Technical Role of Sandblasting in Aerospace Applications

From engine components to fuselage panels, sandblasting serves a variety of functions:

Surface Preparation: Removes rust, oxides, and residues to ensure strong adhesion for coatings, bonding, or welding;

Defect Elimination: Removes burrs and weld spatter, improving precision and fit;

Fatigue Strength Enhancement: Introduces residual compressive stress to resist crack formation;

Surface Roughness Control: Precisely controls Rz values to meet adhesion and corrosion protection needs.

In short, sandblasting is not just about appearance—it’s about structural integrity in extreme operating conditions.

🧪 2. Precision Standards: Every Detail Is a Quality Gate

Sandblasting processes in aerospace follow well-defined and rigorous standards, such as:

Rust Removal Grade Sa2.5 (per GB/T 8923): Requires thorough cleaning to a near-white metal finish;

Roughness Control at Rz 60–80μm (per GB/T 13288): Ensures coating adherence and controlled thickness;

4-Hour Coating Window: Primer must be applied within 4 hours post-blasting to avoid recontamination or flash rust;

Environmental Constraints: Relative humidity must be below 85%, and surface temperature should exceed dew point by at least 3°C.

Additionally, oil-free dry air must be used for blow-off, and post-blasting contact is strictly prohibited to avoid contaminant transfer.

🧱 3. Choosing the Right Abrasives: Performance Meets Process Cleanliness

In aerospace, abrasive media must meet higher standards for purity, consistency, and recyclability:

Ceramic Beads: High hardness, minimal breakdown, ideal for engine and airframe parts;

Stainless Steel Shot: Suitable for applications demanding surface brightness and media cleanliness;

Glass Beads: Environmentally friendly, reusable, and generate low dust levels.

📌 Engineering Tip: Always align abrasive choice with substrate type, target roughness, regulatory compliance, and recycling efficiency.

⚙️ 4. Equipment Requirements: Smart, Efficient, and Clean

To meet the industry's need for repeatability and precision, sandblasting equipment must evolve beyond manual methods:

Conveyor-Type Machines: Offer high-throughput with consistent blasting quality;

Tumble Blasters: Ideal for small, irregularly shaped parts;

Automatic Media Feed Systems: Ensure stable and continuous operation;

Robotic Blasting Arms: Enable programmable paths, 3D surface tracking, and cycle time reductions up to 70%.

💡 Example: One patented auto-feeding system has significantly reduced dust emissions while improving blasting precision across multi-batch production lines.

🧾 5. Quality Inspection & Common Issues Solved

After blasting, components undergo stringent inspection before further processing:

Visual Cleanliness Check: Compared to Sa2.5 photo standards;

Roughness Verification: Using calibrated gauges or roughness test panels;

Dust Removal: Blown off with clean compressed air—no direct touch allowed.

Common FAQs in Aerospace Sandblasting:

Q: Why does rust reappear post-blasting? A: Usually due to delayed coating or high ambient humidity. Always coat within 4 hours.

Q: How to detect worn nozzles? A: Replace once the orifice diameter increases by over 25%, or blasting pattern becomes irregular.

Q: Why is roughness inconsistent? A: Check media condition, pressure stability, nozzle angle, and stand-off distance.

🔍 In Summary: Precision Is Not Optional—It’s the Standard

In aerospace, every micron of surface prep can impact component longevity and safety. That’s why sandblasting must align with high-precision standards, advanced equipment integration, and environmental best practices.

As the industry evolves, trends are clearly shifting toward:

✅ Digital monitoring

✅ Closed-loop media recovery

✅ Green, dust-free blasting systems

0 notes

Text

Speaker recognition refers to the process used to recognize a speaker from a spoken phrase (Furui, n.d. 1). It is a useful biometric tool with wide applications e.g. in audio or video document retrieval. Speaker recognition is dominated by two procedures namely segmentation and classification. Research and development have been ongoing to design new algorithms or to improve on old ones that are used for doing segmentation and classification. Statistical concepts dominate the field of speaker recognition and they are used for developing models. Machines that are used for speaker recognition purposes are referred to as automatic speech recognition (ASR) machines. ASR machines are either used to identify a person or to authenticate the person’s claimed identity (Softwarepractice, n.d., p.1). The following is a discussion of various improvements that have been suggested in the field of speaker recognition. Two processes that are of importance in doing speaker recognition are audio classification and segmentation. These two processes are carried out using computer algorithms. In developing an ideal procedure for the process of audio classification, it is important to consider the effect of background noise. Because of this factor, an auditory model has been put forward by Chu and Champagne that exhibits excellent performance even in a noisy background. To achieve such robustness in a noisy background the model inherently has a self-normalization mechanism. The simpler form of the auditory model is expressed as a three-stage processing progression through which an audio signal goes through an alteration to turn into an auditory spectrum, which is models inside neural illustration. Shortcomings associated with the use of this model are that it involves nonlinear processing and high computational requirements. These shortcomings necessitate the need for a simpler version of the model. A proposal put forward by the Chu and Champagne (2006)suggests modifications on the model that create a simpler version of it that is linear except in getting the square-root value of energy (p. 775). The modification is done on four of the original processing steps namely pre-emphasis, nonlinear compression, half-wave rectification, and temporal integration. To reduce its computational complexity the Parseval theorem is applied which enables the simplified model to be implemented in the frequency domain. The resultant effect of these modifications is a self-normalized FFT-based model that has been applied and tested in speech/music/noise classification. The test is done with the use of a support vector machine (SVM) as the classifier. The result of this test indicates that a comparison of the original and proposed auditory spectrum to a conventional FFT-based spectrum suggests a more robust performance in noisy environments (p.775). Additionally, the results suggest that by reducing the computational complexity, the performance of the conventional FFT-based spectrum is almost the same as that of the original auditory spectrum (p.775). One of the important processes in speaker recognition and in radio recordings is speech/music discrimination.. The discrimination is done using speech/music discriminators. The discriminator proposed by Giannakopoulos et al. involves a segmentation algorithm (V-809). Audio signals exhibit changes in the distribution of energy (RMS) and it is on this property that the audio segmentation algorithm is founded on. The discriminator proposed by Giannakopoulos et al involves the use of Bayesian networks (V-809). A strategic move, which is ideal in the classification stage of radio recordings, is the adoption of Bayesian networks. Each of the classifiers is trained on a single and distinct feature, thus, at any given classification nine features are involved in the process. By operating in distinct feature spaces, the independence between the classifiers is increased. This quality is desirable, as the results of the classifiers have to be combined by the Bayesian network in place. The nine commonly targeted features, which are extracted from an audio segment, are Spectral Centroid, Spectral Flux, Spectral Rolloff, Zero Crossing Rate, Frame Energy and 4 Mel-frequency cepstral coefficients. The new feature selection scheme that is integrated on the discriminator is based on the Bayesian networks (Giannakopoulos et al, V-809). Three Bayesian network architectures are considered and the performance of each is determined. The BNC Bayesian network has been determined experimentally, and found to be the best of the three owing to reduced error rate (Giannakopoulos et al, V-812). This proposed discriminator has worked on real internet broadcasts of the British Broadcasting Corporation (BBC) radio stations (Giannakopoulos et al, V-809). An important issue that arises in speaker recognition is the ability to determine the number of speakers involved in an audio session. Swamy et al. (2007) have put forward a mechanism that is able to determine the number of speakers (481). In this mechanism, the value is determined from multispeaker speech signals. According to Swamy et al., one pair of microphones that are spatially separated is sufficient to capture the speech signals (481). A feature of this mechanism is the time delay experienced in the arrival of these speech signals. This delay is because of the spatial separation of the microphones. The mechanism has its basis on the fact that different speakers will exhibit different time delay lengths. Thus, it is this variation in the length of the time delay, which is exploited in order to determine the number of speakers. In order to estimate the length of time delay, a cross-correlation procedure is undertaken. The procedure cross-correlates to the Hilbert envelopes, which correspond to linear prediction residuals of the speech signals. According to Zhang and Zhou (2004), audio segmentation is one of the most important processes in multimedia applications (IV-349). One of the typical problems in audio segmentation is accuracy. It is also desirable that the segmentation procedure can be done online. Algorithms that have attempted to deal with these two issues have one thing in common. The algorithms are designed to handle the classification of features at small-scale levels. These algorithms additionally result in high false alarm rates. Results obtained from experiments reveal that the classification of large-scale audio is easily compared to small-scale audio. It is this fact that has necessitated an extensive framework that increases robustness in audio segmentation. The proposed segmentation methodology can be described in two steps. In the first step, the segmentation is described as rough and the classification is large-scale. This step is taken as a measure of ensuring that there is integrality with respect to the content segments. By accomplishing this step you ensure that audio that is consecutive and that is from one source is not partitioned into different pieces thus homogeneity is preserved. In the second step, the segmentation is termed subtle and is undertaken to find segment points. These segment points correspond to boundary regions, which are the output of the first step. Results obtained from experiments also reveal that it is possible to achieve a desirable balance between the false alarm and low missing rate. The balance is desirable only when these two rates are kept at low levels (Zhang & Zhou, IV-349). According to Dutta and Haubold (2009), the human voice conveys speech and is useful in providing gender, nativity, ethnicity and other demographics about a speaker (422). Additionally, it also possesses other non-linguistic features that are unique to a given speaker (422). These facts about the human voice are helpful in doing audio/video retrieval. In order to do a classification of speaker characteristics, an evaluation is done on features that are categorized either as low-, mid- or high – level. MFCCs, LPCs, and six spectral features comprise the low-level features that are signal-based. Mid-level features are statistical in nature and used to model the low-level features. High-level features are semantic in nature and are found on specific phonemes that are selected. This describes the methodology that has been put forward by Dutta and Haubold (Dutta &Haubold, 2009, p.422). The data set that is used in assessing the performance of the methodology is made up of about 76.4 hours of annotated audio. In addition, 2786 segments that are unique to speakers are used for classification purposes. The results from the experiment reveal that the methodology put forward by Dutta and Haubold yields accuracy rates as high as 98.6% (Dutta & Haubold, 422). However, this accuracy rate is only achievable under certain conditions. The first condition is that test data is for male or female classification. The second condition to be observed is that in the experiment only mid-level features are used. The third condition is that the support vector machine used should posses a linear kernel. The results also reveal that mid- and high- level features are the most effective in identifying speaker characteristics. To automate the processes of speech recognition and spoken document retrieval the impact of unsupervised audio classification and segmentation has to be considered thoroughly. Huang and Hansen (2006) propose a new algorithm for audio classification to be used in automatic speech recognition (ASR) procedures (907). GMM networks that are weighted form the core feature of this new algorithm. Captured within this algorithm are the VSF and VZCR. VSF and VZCR are, additionally, extended-time features that are crucial to the performance of the algorithm. VSF and VZCR perform a pre-classification of the audio and additionally attach weights to the output probabilities of the GMM networks. After these two processes, the WGN networks implement the classification procedure. For the segmentation process in automatic speech recognition (ASR) procedures, Huang and Hansen (2006) propose a compound segmentation algorithm that captures 19 features (p.907). The figure below presents the features proposed Figure 1. Proposed features. Number required Feature name 1 2-mean distance metric 1 perceptual minimum variance distortionless response ( PMVDR) 1 Smoothed zero-crossing rate (SZCR) 1 False alarm compensation procedure 14 Filterbank log energy coefficients (FBLC) The 14 FBLCs proposed are implemented in 14 noisy environments where they are used to determine the best overall robust features with respect to these conditions. Turns lasting up to 5 seconds can be enhanced for short segment. In such case 2-mean distance metric is can be installed. The false alarm compensation procedure has been determined to boost efficiency of the rate at a cost effective manner. A comparison involving Huang and Hansen’s proposed classification algorithm against a GMM network baseline algorithm for classification reveals a 50% improvement in performance. Similarly, a comparison involving Huang and Hansen’s proposed compound segmentation algorithm against a baseline Mel-frequency cepstral coefficients (MFCC) and traditional Bayesian information criterion (BIC) algorithm reveals a 23%-10% improvement in all aspects (Huang and Hansen, 2006, p. 907). The data set used for the comparison procedure comprises of broadcast news evaluation data gotten from DARPA. DARPA is short for Defense Advanced Research Projects Agency. According to Huang and Hansen (2006), these two proposed algorithms achieve satisfactory results in the National Gallery of the Spoken Word (NGSW) corp, which is a more diverse, and challenging test. The basis of speaker recognition technology in use today is predominated by the process of statistical modeling. The statistical model formed is of short-time features that are extracted from acoustic speech signals. Two factors come into play when determining the recognition performance; these are the discrimination power associated with the acoustic features and the effectiveness of the statistical modeling techniques. The work of Chan et al is an analysis of the speaker discrimination power as it relates to two vocal features (1884). These two vocal features are either vocal source or conventional vocal tract related. The analysis draws a comparison between these two features. The features that are related to the vocal source are called wavelet octave coefficients of residues (WOCOR) and these have to be extracted from the audio signal. In order to perform the extraction process linear predictive (LP) residual signals have to be induced. This is because the linear predictive (LP) residual signals are compatible with the pitch-synchronous wavelet transform that perform the actual extraction. To determine between WOCOR and conventional MFCC features, which are least discriminative when a limit is placed on the amount of audio data consideration, is made to the degree of sensitivity to speech. Being less sensitive to spoken content and more discriminative in the face of a limited amount of training data are the two advantages that make WOCOR suitable for use in the task of speaker segmentation in telephone conversations (Chan et al, 1884). Such a task is characterized by building statistical speaker models upon short segments of speech. Additionally, experiments undertaken also reveal a significant reduction of errors associated with the segmentation process when WOCORs are used (Chan et al, 1884). Automatic speaker recognition (ASR) is the process through which a person is recognized from a spoken phrase by the aid of an ASR machine (Campbell, 1997, p.1437). Automatic speaker recognition (ASR) systems are designed and developed to operate in two modes depending on the nature of the problem to be solved. In one of the modes, they are used for identification purposes and in the other; they are used for verification or authentication purposes. In the first mode, the process is known as automatic speaker verification (ASV) while in the second the process is known as automatic speaker identification (ASI). In ASV procedures, the person’s claimed identity is authenticated by the ASR machine using the person’s voice. In ASI procedures unlike the ASV ones there is no claimed identity thus it is up to the ASR machine to determine the identity of the individual and the group to which the person belongs. Known sources of error in ASV procedures are shown in the table below Tab.2 Sources of verification errors. Misspoken or misread prompted phases Stress, duress and other extreme emotional states Multipath, noise and any other poor or inconsistent room acoustics The use of different microphones for verification and enrolment or any other cause of Chanel mismatch Sicknesses especially those that alter the vocal tract Aging Time varying microphone placement According to Campbell, a new automatic speaker recognition system is available and the recognizer is known to perform with 98.9% correct identification levels (p.1437 Signal acquisition is a basic building block for the recognizer. Feature extraction and selection is the second basic unit of the recognizer. Pattern matching is the third basic unit of the recognizer. A decision criterion is the fourth basic unit of the proposed recognizer. According to Ben-Harush et al. (2009), speaker diarization systems are used in assigning temporal speech segments in a conversation to the appropriate speaker (p.1). The system also assigns non-speech segments to non-speech. The problem that speaker diarization systems attempt to solve is captured in the query “who spoke when?” An inherent shortcoming in most of the diarization systems in use today is that they are unable to handle speech that is overlapped or co-channeled. To this end, algorithms have been developed in recent times seeking to address this challenge. However, most of these require unique conditions in order to perform and necessitate the need for high computational complexity. They also require that an audio data analysis with respect to time and frequency domain be undertaken. Ben-Harush et al. (2009) have proposed a methodology that uses frame based entropy analysis, Gaussian Mixture Modeling (GMM) and well known classification algorithms to counter this challenge (p.1). To perform overlapped speech detection, the methodology suggests an algorithm that is centered on a single feature. This single feature is an entropy analysis of the audio data in the time domain. To identify speech segments that are overlapped the methodology uses the combined force of Gaussian Mixture Modeling (GMM) and well-known classification algorithms. The methodology proposed by Ben-Harush et al is known to detect 60.0 % of frames containing overlapped speech (p.1). This value is achieved when the segmentation is at baseline level (p.1). It is capable of achieving this value while it maintains the rate of false alarm at 5 (p.1). Overlapped speech (OS) contributes to degrading the performance of automatic speaker recognition systems. Conversations over the telephone or during a meeting possess high quantities of overlapped speech. Du et al (200&) brings out audio segmentation as a problem in TV series, movies and other forms of practical media (I-205). Practical media exhibits audio segments of varying lengths but of these, short ones are easily noticeable due to their number. Through audio segmentation, an audio stream is broken down into parts that are homogenous with respect to speaker identity, acoustic class and environmental conditions..Du et al. (2007) has formulated an approach to unsupervised audio segmentation to be used in all forms of practical media. Included in this approach is a segmentation-stage at which potential acoustic changes are detected. Also included is a refinement-stage during which the detected acoustic changes are refined by a tri-model Bayesian Information Criterion (BIC). Results from experiments suggest that the approach possesses a high capability for detecting short segments (Du et al, I-205). Additionally, the results suggest that the tri-model BIC is effective in improving the overall segmentation performance (Du et al, I-205). According to Hosseinzadeh and Krishnan (2007), the concept of speaker recognition processes seven spectral features. The first of these spectral features is the Spectral centroid (SC). Hosseinzadeh and Krishnan (2007, p.205), state “the second spectral feature is Spectral bandwidth (SBW), the third is spectral band energy (SBE), the fourth is spectral crest factor (SCF), the fifth is Spectral flatness measure (SFM), the sixth is Shannon entropy (SE) and the seventh is Renyi entropy (RE)”. The seven features are used for quantification, which is important in speaker recognition since it is the case where vocal source information and the vocal tract function complements each other. The vocal truct function is determined specifically using two coefficients these are the MFCC and LPCC. MFCC stands for Mel frequency coefficients and LPCC stands for linear prediction cepstral coefficients. The quantification is quite significant in speaker detection as it is the container where verbal supply information and the verbal tract function are meant to balance. Very important in an experiment done to analyze the performance of these features is the use of a speaker identification system (SIS). ). A cohort Gaussian mixture model which is additionally text-independent is forms the ideal choice of a speaker identification method that is used in the experiment. The results from such an experiment reveal that these features achieve an identification accuracy of 99.33%. This accuracy level is achieved only when these features are combined with those that are MFCC based and additionally when undistorted speech is used. Read the full article

0 notes

Text

Speed up your brick production speed with fully automatic clay brick making machine

Fully automatic mobile brick making machine by SnPC Machines, First of its kind of machine in the world, our brick-making machine moves on wheels like a vehicle and produces bricks while the vehicle is on move. This allows kiln owners to produce bricks anywhere and anytime, as per their requirements. Fully automatic Mobile brick-making machine can produce up to 12000 bricks/hour with a reduction of up to 45% in production cost in comparison with manual and other machinery as well as 4-times (as per testing agencies report) more in compressive strength with standard shape, sizes and another extraordinary provision exist i.e (that is) machine produced several brick sizes and it can be changed as per customer requirements from time to time. Snpc machines India is selling 04 models of fully automatic brick making machines: BMM160 brick making machine,BMM310, BMM400, and BMM410, (semi-automatic and fully automatic ) to the worldwide brick industry which produce bricks according to their capacites and fuel requirements. Raw material required for these machines is mainly clay, mud, soil or mixure of both. These moving automatic trucks are durable and easy to handle while operating. These machines are eco-friendly and budget-friendly as only one-third of water as compared to other methods is required and minimum labour is enough for these machines. We are offering direct customers access to multiple sites in both domestic and international stages, so they can see the demo and then will order us after satisfaction.

#snpc machines#clay brick#flyash brick#soil brick#fully automatic#perfect brick making machine#BMM410#BMM310#construction machinery#wall making equipment#industrial machinery#brick making truck

8 notes

·

View notes

Text

How a Digital Core Compression Tester Improves Testing

How a Digital Core Compression Tester Improves Testing – LabZenix

In the packaging and paper industries, ensuring the strength and durability of materials is crucial. One of the most essential tools in this process is the Digital Core Compression Tester. But the question arises – how a digital core compression tester improves testing in modern industrial applications? Let’s dive deep into the subject, understand its working, and discover how companies like LabZenix are innovating this segment.

Understanding the Need for Core Compression Testing

Before we explore how a digital core compression tester improves testing, it’s important to understand why core compression testing is important in the first place.

Core compression testing is used to evaluate the strength of cylindrical cores, like those used in paper rolls, films, foils, and fabric rolls. These cores must be strong enough to withstand the pressure during storage, transportation, and usage. A weak core can collapse under weight, leading to product damage and losses. Hence, compression testing ensures that the cores meet industry quality standards.

What is a Digital Core Compression Tester?

A Digital Core Compression Tester is a precision instrument used to test the compressive strength of core materials. Unlike manual testing methods, the digital version offers highly accurate readings with digital display systems, programmable test parameters, and automated load applications.

LabZenix, a leading manufacturer in the testing equipment industry, offers state-of-the-art digital core compression testers that deliver fast, accurate, and repeatable results. They play a key role in helping manufacturers improve quality control and reduce waste.

How a Digital Core Compression Tester Improves Testing in Practical Terms

So, how a digital core compression tester improves testing? Let’s break it down into specific advantages:

1. Accuracy and Repeatability

Digital systems ensure each test is performed with the same parameters and force application. Manual errors are eliminated, leading to more trustworthy results.

For example, the LabZenix digital core compression tester is equipped with high-precision load cells and digital displays, allowing technicians to read measurements down to the smallest variation. This consistency is vital for high-volume industries.

2. Time Efficiency

Digital testers drastically reduce testing time. Automated loading and data capturing mean technicians spend less time on each sample and more time analyzing results.

With LabZenix’s digital model, users can test multiple samples within a shorter time, improving overall productivity without compromising on test quality.

3. Data Logging and Analysis

One of the biggest advantages of digital equipment is the ability to store and analyze data. Modern testers can connect to computers or printers, allowing for efficient record-keeping.

How a digital core compression tester improves testing becomes even more apparent when you realize how easy it is to generate test reports, share results, and monitor trends over time using tools from manufacturers like LabZenix.

4. Standardized Testing Procedures

Digital core compression testers operate using pre-set testing standards such as ASTM, ISO, and TAPPI. By using these globally accepted procedures, companies ensure their products meet international quality expectations.

LabZenix has engineered its digital testers to comply with major industry standards, making them ideal for global brands.

5. Minimal Human Intervention

Reducing human involvement minimizes errors. Once the test is set, the machine performs the compression automatically, calculates the result, and displays it. No guesswork, no assumptions – just precise numbers.

This reliability is exactly how a digital core compression tester improves testing compared to manual setups.

6. Long-Term Durability

LabZenix designs its instruments for rugged industrial environments. Their digital core compression testers are built to last, ensuring reliable performance for years. A sturdy build means fewer breakdowns and higher ROI.

Features That Make LabZenix Core Compression Testers Stand Out

LabZenix has become a trusted name because of its focus on innovation, usability, and customer satisfaction. Their digital core compression testers come loaded with features that improve testing procedures across industries.

Digital Display: Clear, easy-to-read output during and after tests.

Adjustable Testing Speed: Allows customization for different core materials.

Sturdy Build: Powder-coated body ensures resistance to corrosion and wear.

High Capacity Load Cell: Handles a wide range of compression values.

Safety Features: Emergency stop and overload protection.

Software Connectivity: Integration with LabZenix software for analysis.

When professionals ask how a digital core compression tester improves testing, the answer often lies in these value-added features offered by advanced brands like LabZenix.

Applications Across Industries

Digital core compression testers aren’t just limited to one industry. Here's how they improve testing in various sectors:

Paper & Packaging: Verifies the strength of paper cores in roll formats.

Textile & Fabric: Ensures fabric rolls don't collapse during transport.

Plastic Film Manufacturing: Maintains quality of plastic roll cores.

Aluminum Foil Industry: Confirms strength to handle rolled metal sheets.

Construction Material Supply: Tests cardboard or fiber cores used for protective purposes.

In all these areas, knowing how a digital core compression tester improves testing helps companies prevent product failures, maintain brand reputation, and comply with quality norms.

Maintenance and Calibration – Key to Long-Term Efficiency

To fully leverage the advantages of a digital tester, regular maintenance and calibration are necessary. LabZenix offers after-sales services that include:

Annual Maintenance Contracts

Calibration Certification

Spare Parts Support

Operator Training

These services ensure the machine continues to provide accurate and reliable readings over the years.

Frequently Asked Questions (FAQ)

Q1. What is the primary function of a digital core compression tester?

A1. The primary function is to measure the compressive strength of cylindrical cores used in products like paper rolls, films, and textiles. It helps determine if the core can withstand external pressure during handling and transport.

Q2. How a digital core compression tester improves testing compared to manual methods?

A2. It improves testing by offering accurate, repeatable results, eliminating human error, enabling automated load application, reducing test time, and allowing digital data storage and analysis. These features ensure better quality control and operational efficiency.

Q3. Is LabZenix a reliable brand for digital core compression testers?

A3. Yes, LabZenix is known for manufacturing high-quality testing instruments, including digital core compression testers. They focus on precision, durability, and customer service, making them a preferred choice in many industries.

Q4. What materials can be tested using a digital core compression tester?

A4. You can test cardboard, fiberboard, plastic, and even lightweight metal cores. These are common in the packaging, paper, textile, and construction industries.

Q5. Can LabZenix digital core compression testers be customized?

A5. Yes, LabZenix offers customization based on industry needs. You can choose load range, testing speed, data output options, and size configurations to suit your application.

Q6. How often should the tester be calibrated?

A6. Calibration is typically recommended once every 6 to 12 months, depending on usage. LabZenix provides calibration services to ensure continued accuracy.

Q7. What safety features are included in LabZenix digital testers?

A7. Safety features include overload protection, emergency stop buttons, and secure enclosure of moving parts. These features reduce the risk of accidents during operation.

0 notes