#Benefits of AI in Software Development

Text

#AI in Custom Software Development#AI-Powered Software Solutions#Custom Software Development with AI#AI Integration in Software Development#Artificial Intelligence in Software Design#AI Custom Software Development Trends#Benefits of AI in Software Development#AI-Driven Software Development Solutions#Innovative AI Software Development#AI-Enhanced Custom Software Services

Text

Let's look at how artificial intelligence is affecting the tech industry and where we can see it applied in software engineering, now and in the future.

#ai in software engineering#ai engineer#ai software#ai future#ai applications#benefits of ai in software development#habilelabs#ethics first

Text

How To Choose Between AI Voice Agents And Chatbots For Your Business Needs?

Selecting an appropriate customer service automation tool, such as an AI voice agent or a chatbot, can positively or negatively affect customer experience, operational expenses, and business efficiency. Although both options use AI to engage customers, they differ in use cases, complexity, and the value they deliver. Understanding those differences will help you make a decision that fits your business objectives.

Explanation of AI Voice Agents And Chatbots

AI voice agents are software programs that use voice recognition technology to let users carry on conversations in a natural, human-like way. They are well suited to businesses that need interactive voice response systems for large-scale customer inquiries, appointment scheduling, or phone support.

Chatbots communicate using text. They can be part of your website, app, or messaging platform and are made to answer the most frequent questions, solve simple problems, or guide customers to the appropriate resources.

Assessment of Use Cases: Which Is Suitable For You?

Start by pinpointing your main customer service needs. Do customers regularly contact your business for quick answers? Is 24/7 availability or multilingual support a must-have for you?

Chatbots are ideal when queries are repetitive: they provide the fastest answers and reduce dependence on human agents. The main benefits of AI chatbots are scalability, reduced operational costs, and consistent performance. They are particularly suitable for e-commerce, SaaS platforms, and digital service providers that need fast responses through text interfaces.

Choosing The Right Technology Partner

Whether you choose a chatbot or a voice assistant, a trustworthy partner is a must. A professional AI development services provider will help you evaluate your requirements, build the solution, and integrate it smoothly with your current systems.

If your business targets the North American market or intends to enter it, choosing a software development company in the USA that understands data compliance, user experience, and technical infrastructure requirements is the most appropriate decision.

Cost vs Experience: What’s The Trade-Off?

Chatbots are generally more cost-effective to develop and deploy compared to AI voice agents. They require less complex infrastructure and are easier to train with predefined workflows. Voice agents, while more expensive, offer a higher degree of personalization and mimic natural human interaction better.

Final Thoughts

Both technologies are developing rapidly, and choosing between them depends mainly on your industry, your personalization needs, and your growth plans. Today's AI-powered customer service ecosystem also supports hybrid models in which businesses use both tools at once, giving customers the option to type or speak according to their preferences.

If you want to bring innovation into every part of your business and are looking for a partner to help, consider a leading software development company in the USA that fully understands the technical and strategic aspects of these tools.

FAQs

1. Can AI voice agents replace human agents entirely?

No. They are designed to handle simple, routine issues, while human agents are still required for complex, emotionally sensitive conversations.

2. Are chatbots suitable for small businesses?

Yes. Their main advantages are that they are inexpensive, simple to introduce, and able to handle a large volume of requests without additional staff.

3. How long does it take to implement an AI solution?

The timeline depends on the complexity of the task; a simple chatbot might require a few weeks, while voice agent systems might take a few months to develop and test.

4. Which option offers better ROI?

Because chatbots have lower setup costs, they usually deliver a quicker ROI. Voice agents, on the other hand, may generate more profit in the long run for service-heavy businesses.

5. Can I integrate both into my business?

Yes. Many companies use both because together they meet the needs of different user groups, which leads to higher customer satisfaction.

Text

Machine learning's vital role in developing AI Systems

Machine learning plays a crucial role in developing AI systems, enabling them to learn from data, improve performance, and adapt to new situations. Our team of experts can help you leverage machine learning to build intelligent systems that transform your business. Contact us to learn more! Website: https://lnkd.in/gche--Hp Phone: +91-9840082443

#MLinAIDevelopment#ai development company#custom ai development#ai development#ai generated#benefits of ai#technology#software development

Text

The Benefits of AI Chatbots for Business Communication

In today's fast-paced digital landscape, effective communication is crucial for businesses aiming to enhance customer satisfaction and streamline operations. AI chatbots have emerged as a transformative tool, offering numerous benefits that can significantly improve how companies interact with their customers. This article explores the key advantages of implementing AI chatbots in business communication.

24/7 Availability

One of the most significant benefits of AI chatbots is their ability to provide round-the-clock support. Unlike human agents, chatbots do not require breaks or time off, making them available to assist customers at any hour. This constant availability ensures that businesses can cater to global audiences across different time zones, enhancing customer satisfaction and trust. Customers appreciate the instant responses they receive, regardless of when they reach out, which can lead to improved loyalty and retention.

Cost Efficiency

AI chatbots can dramatically reduce operational costs for businesses. By automating routine tasks such as answering frequently asked questions, scheduling appointments, and processing orders, chatbots free up human agents to focus on more complex issues that require personal attention. This not only enhances productivity but also reduces the need for a large customer support team, leading to significant savings in labor costs. Industry research has estimated the combined annual savings from chatbots at billions of dollars.

Enhanced Customer Engagement

AI chatbots excel at engaging customers in real-time conversations. They can provide personalized experiences by analyzing user data and preferences, allowing them to recommend products or services that align with individual needs. This level of engagement fosters a deeper connection between the brand and its customers, encouraging repeat business and enhancing overall customer satisfaction.

Instant Responses and Reduced Wait Times

Customers today expect quick responses to their inquiries. AI chatbots can deliver instant answers, significantly reducing wait times compared to traditional customer service methods. This efficiency not only improves the customer experience but also helps businesses manage high volumes of inquiries without overwhelming their support teams. By providing immediate assistance, chatbots enhance overall service levels and customer satisfaction.

Lead Generation and Sales Support

AI chatbots are not limited to customer support; they also play a crucial role in lead generation and sales. By engaging website visitors in real time, chatbots can qualify leads, answer pre-sales questions, and guide users through the purchasing process. This proactive approach can lead to higher conversion rates and increased revenue for businesses.

Data Collection and Insights

AI chatbots can gather valuable data about customer interactions, preferences, and behaviors. This information can be analyzed to gain insights into customer needs and trends, allowing businesses to make data-driven decisions. Understanding customer preferences can help companies tailor their offerings, improve marketing strategies, and enhance overall service delivery.

Scalability

As businesses grow, so do their customer service needs. AI chatbots provide a scalable solution that can handle an increasing volume of customer inquiries without the need for significant additional resources. This scalability allows businesses to maintain high service levels even during peak times, ensuring that customer satisfaction remains a priority.

Conclusion

ItsBot’s AI chatbots have revolutionized business communication by providing 24/7 support, enhancing customer engagement, and reducing operational costs. Their ability to deliver instant responses, generate leads, and gather valuable insights makes them an indispensable tool for modern businesses. As companies continue to embrace digital transformation, integrating AI chatbots into their customer communication strategies will be crucial for staying competitive and meeting consumers' evolving expectations. To install AI chatbots on your business’s website or app, get in touch with ItsBot. By leveraging the power of AI chatbots, businesses can enhance their customer service, drive sales, and ultimately achieve greater success in today's dynamic marketplace.

#AI Chatbot#Chatbot Software#Customer Service Chatbot#Best AI Chatbot App#Chatbot for Website#AI Chatbot for Customer Service#Chatbot Development#Chatbot for Business#AI Chatbot Solutions#itsbot#AI Support Chatbot#Benefits of AI Chatbots#Business Communication

Text

Embark on a journey into the future of technology! Exciting opportunities await skilled VR Developers ready to shape immersive worlds and redefine human interaction. Join us in crafting the next frontier of virtual reality experiences. Your dream job in VR development starts now!

#current events#it#it jobs#tech#technews#technology#crm#crm benefits#ai#ai generated#ai jobs#future#it job opportunities#sierra consulting#dream job#full stack developer#web developers#devops#app developers#cloud computing#software engineering

Text

AI-Powered Software Solutions: Revolutionizing the Tech World

Introduction

Artificial intelligence has found relevance in nearly every sector, including technology. AI-based software solutions are driving innovation, efficiency, and growth across multiple industries like never before. In this article, we walk through how AI is changing the face of technology, along with its applications, benefits, challenges, and future trends. Continue reading...

#trends#technology#business tech#nvidia drive#science#tech trends#adobe cloud#tech news#science updates#analysis#Software Solutions#AI and employment#AI applications in healthcare#AI for SMEs#AI implementation challenges#AI in cloud computing#AI in cybersecurity#AI in education#AI in everyday life#AI in finance#AI in manufacturing#AI in retail#AI in technology#AI-powered software solutions#artificial intelligence software#benefits of AI software#developing AI solutions#ethics in AI#future trends in AI#revolutionizing tech world

Text

#hire the best ai developers#best ai developers#ai solutions for enterprises#benefits of ai apps#ai software app development solutions

Text

The product development sector is implementing AI for a range of objectives, from consumer engagement to growing sales. Find out more about the benefits of AI, its challenges, and future trends.

Text

"Artificial intelligence helps provide fast responses and accurate suggestions to customers. Chatbots are a common form of AI, and they help retain customers and increase conversion rates.

Take a look at the complete guide on eCommerce conversion rate optimization to understand it in detail.

AI can also analyze previous searches and suggest similar products, which makes shopping and finding products easier for buyers. AI can also generate personalized messages that increase customer engagement. With the help of AI, campaigns and even the services themselves become more accurate. To increase sales, businesses should make the most of these benefits."

#AI in eCommerce Development#AI technology#AI in ecommerce store#benefits of AI in ecommerce#software development#software development company#ifourtechnolab#asp.net development#software outsourcing

Note

Is AWAY using its own program, or is this just a voluntary list of guidelines for people using programs like DALL-E? How does AWAY address the environmental concerns about how the companies making those AI programs conduct themselves (energy consumption, exploiting impoverished areas for cheap electricity, destroying the environment to rapidly build data centers and source their components, etc.)? Are members of AWAY encouraged to contact their government representatives about IP theft by AI apps?

What is AWAY and how does it work?

AWAY does not "use its own program" in the software sense; rather, we're a diverse collective of roughly 1,000 members, each with their own workflows and approaches to art. While some members do use AI as one tool among many, most people in the server are actually traditional artists who don't use AI at all but are still interested in ethical approaches to new technologies.

Our code of ethics is a set of voluntary guidelines that members agree to follow upon joining. These emphasize ethical approaches to AI (preferably open-source models that can run locally), respecting artists who oppose AI by not training on their art or styles, and refusing to use AI to undercut other artists or to work for corporations that similarly exploit creative labor.

Environmental Impact in Context

It's important to place environmental concerns about AI in the context of our broader extractive, industrialized society, where there are virtually no "clean" solutions:

The water usage figures for AI data centers (200-740 million liters annually) represent roughly 0.00013% of total U.S. water usage. This is a small fraction compared to industrial agriculture or manufacturing. For example, golf course irrigation alone in the U.S. consumes approximately 2.08 billion gallons of water per day, or about 7.87 billion liters per day (roughly 2.87 trillion liters annually). That puts AI's water usage at roughly 0.007% to 0.026% of golf course irrigation alone.
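The golf-course comparison comes down to a unit conversion followed by a division. The minimal Python sketch below redoes that arithmetic. It takes the 2.08 billion gallons/day and 200-740 million liters/year figures as quoted, and it assumes the standard 3.78541 liters per US gallon and a 365-day year.

```python
# Sketch: redo the golf-course water comparison from the quoted figures.
# Assumptions: 1 US gallon = 3.78541 liters; a 365-day year.
LITERS_PER_GALLON = 3.78541
DAYS_PER_YEAR = 365

golf_gallons_per_day = 2.08e9          # U.S. golf course irrigation (quoted)
ai_liters_per_year = (200e6, 740e6)    # AI data center range (quoted)

# Convert gallons/day to liters/day, then scale to a full year.
golf_liters_per_day = golf_gallons_per_day * LITERS_PER_GALLON
golf_liters_per_year = golf_liters_per_day * DAYS_PER_YEAR


def share_of_golf(ai_liters: float) -> float:
    """Return annual AI water use as a percentage of annual golf irrigation."""
    return 100 * ai_liters / golf_liters_per_year


print(f"golf: {golf_liters_per_day / 1e9:.2f} billion L/day, "
      f"{golf_liters_per_year / 1e12:.2f} trillion L/year")
for ai in ai_liters_per_year:
    print(f"AI at {ai / 1e6:.0f} million L/year = "
          f"{share_of_golf(ai):.4f}% of golf irrigation")
```

Run as written, it reports about 7.87 billion liters per day and about 2.87 trillion liters per year. It puts the AI share at roughly 0.007% to 0.026% of annual golf irrigation.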

Looking at individual usage, the average American consumes about 26.8 kg of beef annually, which takes around 1,608 megajoules (MJ) of energy to produce. Making 10 ChatGPT queries daily for an entire year (3,650 queries) consumes just 38.1 MJ, about 1/42 of the energy needed to produce that beef. In fact, a single quarter-pound beef patty takes roughly 651 times as much energy to produce as a single AI query.
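The beef-versus-query comparison can be reproduced from the numbers in the paragraph itself. The minimal Python sketch below assumes only the standard definition of a pound (0.45359237 kg). It derives the energy per kilogram of beef and the energy per query from the quoted annual totals rather than from an outside source.

```python
# Sketch: reproduce the beef vs. AI-query energy comparison from the quoted figures.
# Assumption: 1 lb = 0.45359237 kg; per-kg and per-query values are derived
# from the annual totals quoted in the text.
BEEF_KG_PER_YEAR = 26.8
BEEF_MJ_PER_YEAR = 1608.0
QUERIES_PER_YEAR = 10 * 365            # 10 queries a day for a year = 3,650
QUERY_MJ_PER_YEAR = 38.1
QUARTER_POUND_KG = 0.25 * 0.45359237

beef_mj_per_kg = BEEF_MJ_PER_YEAR / BEEF_KG_PER_YEAR    # about 60 MJ/kg
mj_per_query = QUERY_MJ_PER_YEAR / QUERIES_PER_YEAR     # about 0.0104 MJ
wh_per_query = mj_per_query * 1e6 / 3600                # 1 Wh = 3,600 J

# How many times more energy the beef side takes in each comparison.
annual_ratio = BEEF_MJ_PER_YEAR / QUERY_MJ_PER_YEAR
patty_ratio = beef_mj_per_kg * QUARTER_POUND_KG / mj_per_query

print(f"{mj_per_query:.4f} MJ (~{wh_per_query:.1f} Wh) per query")
print(f"a year of beef vs. a year of queries: {annual_ratio:.1f}x")
print(f"one quarter-pound patty vs. one query: {patty_ratio:.1f}x")
```

The implied cost is roughly 2.9 Wh per query. The annual ratio comes out at about 42, and the patty ratio at roughly 650, in line with the figures quoted above.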

Overall, power usage specific to AI represents just 4% of total data center power consumption, which itself is a small fraction of global energy usage. The IEA estimates global data center electricity use at roughly 240-340 TWh per year, about 1-1.3% of global electricity demand, which would put AI's share on the order of 10-14 TWh annually.

The consumer environmentalism narrative around technology often ignores how imperial exploitation pushes environmental costs onto the Global South. The rare earth minerals needed for computing hardware, the cheap labor for manufacturing, and the toxic waste from electronics disposal disproportionately burden developing nations, while the benefits flow largely to wealthy countries.

While this pattern isn't unique to AI, it is fundamental to our global economic structure. The focus on individual consumer choices (like whether one should use AI, for art or otherwise) distracts from the much larger systemic issues of imperialism, extractive capitalism, and global inequality that drive environmental degradation at a massive scale.

They are not going to stop building data centers, and they wouldn't have stopped even if AI had never been invented.

Creative Tools and Environmental Impact

In reality, every creative practice has some environmental impact in an industrialized society:

A computer running digital art software (such as Photoshop, Blender, etc.) generally draws 60-300 watts depending on its specifications, which works out to 60-300 watt-hours for every hour of work. That is typically more energy than dozens, if not hundreds, of AI image generations (possibly thousands with a particularly small, low-quality model).

Traditional art supplies rely on comparable, if not larger, scales of resource extraction, chemical processing, and global supply chains, all of which carry their own environmental impact.

Paint production requires roughly thirteen gallons of water to manufacture one gallon of paint.

Many oil paints contain toxic heavy metals and solvents, which can contaminate groundwater.

Synthetic brushes are made from petroleum-based plastics that take centuries to decompose.

That being said, the point of this section isn't to deflect criticism of AI by criticizing other art forms. Rather, it's important to recognize that we live in a society where virtually all artistic avenues have environmental costs. Focusing exclusively on the newest technologies while ignoring the environmental costs of pre-existing tools and practices doesn't help to solve any of the issues with our current or future waste.

The largest environmental problems come not from individual creative choices, but rather from industrial-scale systems, such as:

Industrial manufacturing (responsible for roughly 22% of global emissions)

Industrial agriculture (responsible for roughly 24% of global emissions)

Transportation and logistics networks (responsible for roughly 14% of global emissions)

Making changes on an individual scale, while meaningful on a personal level, can't address systemic issues without broader policy changes and overall restructuring of global economic systems.

Intellectual Property Considerations

AWAY doesn't encourage members to contact government representatives about "IP theft" for multiple reasons:

We acknowledge that copyright law overwhelmingly serves corporate interests rather than individual creators

Creating new "learning rights" or "style rights" would further empower large corporations while harming individual artists and fan creators

Many AWAY members live outside the United States, many in countries that have been directly harmed by the US, and so understand that intellectual property regimes are often tools of imperial control that benefit wealthy nations

Instead, we emphasize respect for artists who are protective of their work and style. Our guidelines explicitly prohibit imitating the style of artists who have voiced their distaste for AI, and they work on an opt-in model that lets traditional artists grant permission and later revoke it if they see fit. This approach is about respect, not legal enforcement. We are not a pro-copyright group.

In Conclusion

AWAY aims to cultivate thoughtful, ethical engagement with new technologies, while also holding respect for creative communities outside of itself. As a collective, we recognize that real environmental solutions require addressing concepts such as imperial exploitation, extractive capitalism, and corporate power—not just focusing on individual consumer choices, which do little to change the current state of the world we live in.

When discussing environmental impacts, it's important to keep perspective on a relative scale, and to avoid ignoring major issues in favor of smaller ones. We promote balanced discussions based in concrete fact, with the belief that they can lead to meaningful solutions, rather than misplaced outrage that ultimately serves to maintain the status quo.

If this resonates with you, please feel free to join our discord. :)

Works Cited:

USGS Water Use Data: https://www.usgs.gov/mission-areas/water-resources/science/water-use-united-states

Golf Course Superintendents Association of America water usage report: https://www.gcsaa.org/resources/research/golf-course-environmental-profile

Equinix data center water sustainability report: https://www.equinix.com/resources/infopapers/corporate-sustainability-report

Environmental Working Group's Meat Eater's Guide (beef energy calculations): https://www.ewg.org/meateatersguide/

Hugging Face AI energy consumption study: https://huggingface.co/blog/carbon-footprint

International Energy Agency report on data centers: https://www.iea.org/reports/data-centres-and-data-transmission-networks

Goldman Sachs "Generational Growth" report on AI power demand: https://www.goldmansachs.com/intelligence/pages/gs-research/generational-growth-ai-data-centers-and-the-coming-us-power-surge/report.pdf

Artists Network's guide to eco-friendly art practices: https://www.artistsnetwork.com/art-business/how-to-be-an-eco-friendly-artist/

The Earth Chronicles' analysis of art materials: https://earthchronicles.org/artists-ironically-paint-nature-with-harmful-materials/

Natural Earth Paint's environmental impact report: https://naturalearthpaint.com/pages/environmental-impact

Our World in Data's global emissions by sector: https://ourworldindata.org/emissions-by-sector

"The High Cost of High Tech" report on electronics manufacturing: https://goodelectronics.org/the-high-cost-of-high-tech/

"Unearthing the Dirty Secrets of the Clean Energy Transition" (on rare earth mineral mining): https://www.theguardian.com/environment/2023/apr/18/clean-energy-dirty-mining-indigenous-communities-climate-crisis

Electronic Frontier Foundation's position paper on AI and copyright: https://www.eff.org/wp/ai-and-copyright

Creative Commons research on enabling better sharing: https://creativecommons.org/2023/04/24/ai-and-creativity/

Text

the thing that super techy people - whether that be linux nerds or actual software developers or both - tend to miss is that most people, if a form of technology works by default, won't bother tinkering around with that default. nobody wants to compile all their apps from scratch except a small minority of people. and most people, to be frank, don't wholly understand what they're working with. if word works, they're not going to switch to some open source equivalent that they'd have to download and set up and fiddle around with. if windows works, they're not going to download linux. they don't want to. they probably don't even know linux is an option, and even if they did, they still wouldn't move to linux. and you can list the benefits of linux and drawbacks of mac os until the sun goes down, but you're not going to persuade the average person, because they just aren't invested enough in their technology! this is why nobody moves away from windows even when it's spying on you and sticking ai in everything and costs $34567890 and your firstborn son: they don't know any better, or they don't have the time to change, or they don't care, or they just, quite simply, don't understand their computer well enough to take a risk with software that may not be so easy to use. and i understand this! it's great if you can code, but it's a skill just as much as writing or skating is - something most people are never going to sink much of their time into, no matter how much you personally love it. so if you want people to change their ways, you need to hold their hand, you need to listen to them, and you need to give them something that just works. otherwise, they'll see you as a hindrance - not a help.

Text

LETTERS FROM AN AMERICAN

January 18, 2025

Heather Cox Richardson

Jan 19, 2025

Shortly before midnight last night, the Federal Trade Commission (FTC) published its initial findings from a study it undertook last July when it asked eight large companies to turn over information about the data they collect about consumers, product sales, and how the surveillance the companies used affected consumer prices. The FTC focused on the middlemen hired by retailers. Those middlemen use algorithms to tweak and target prices to different markets.

The initial findings of the FTC using data from six of the eight companies show that those prices are not static. Middlemen can target prices to individuals using their location, browsing patterns, shopping history, and even the way they move a mouse over a webpage. They can also use that information to show higher-priced products first in web searches. The FTC found that the intermediaries—the middlemen—worked with at least 250 retailers.

“Initial staff findings show that retailers frequently use people’s personal information to set targeted, tailored prices for goods and services—from a person's location and demographics, down to their mouse movements on a webpage,” said FTC chair Lina Khan. “The FTC should continue to investigate surveillance pricing practices because Americans deserve to know how their private data is being used to set the prices they pay and whether firms are charging different people different prices for the same good or service.”

The FTC has asked for public comment on consumers’ experience with surveillance pricing.

FTC commissioner Andrew N. Ferguson, whom Trump has tapped to chair the commission in his incoming administration, dissented from the report.

Matt Stoller of the nonprofit American Economic Liberties Project, which is working “to address today’s crisis of concentrated economic power,” wrote that “[t]he antitrust enforcers (Lina Khan et al) went full Tony Montana on big business this week before Trump people took over.”

Stoller made a list. The FTC sued John Deere “for generating $6 billion by prohibiting farmers from being able to repair their own equipment,” released a report showing that pharmacy benefit managers had “inflated prices for specialty pharmaceuticals by more than $7 billion,” “sued corporate landlord Greystar, which owns 800,000 apartments, for misleading renters on junk fees,” and “forced health care private equity powerhouse Welsh Carson to stop monopolization of the anesthesia market.”

It sued Pepsi for conspiring to give Walmart exclusive discounts that made prices higher at smaller stores, “[l]eft a roadmap for parties who are worried about consolidation in AI by big tech by revealing a host of interlinked relationships among Google, Amazon and Microsoft and Anthropic and OpenAI,” said gig workers can’t be sued for antitrust violations when they try to organize, and forced game developer Cognosphere to pay a $20 million fine for marketing loot boxes to teens under 16 that hid the real costs and misled the teens.

The Consumer Financial Protection Bureau “sued Capital One for cheating consumers out of $2 billion by misleading consumers over savings accounts,” Stoller continued. It “forced Cash App purveyor Block…to give $120 million in refunds for fostering fraud on its platform and then refusing to offer customer support to affected consumers,” “sued Experian for refusing to give consumers a way to correct errors in credit reports,” ordered Equifax to pay $15 million to a victims’ fund for “failing to properly investigate errors on credit reports,” and ordered “Honda Finance to pay $12.8 million for reporting inaccurate information that smeared the credit reports of Honda and Acura drivers.”

The Antitrust Division of the Department of Justice sued “seven giant corporate landlords for rent-fixing, using the software and consulting firm RealPage,” Stoller went on. It “sued $600 billion private equity titan KKR for systemically misleading the government on more than a dozen acquisitions.”

“Honorary mention goes to [Secretary Pete Buttigieg] at the Department of Transportation for suing Southwest and fining Frontier for ‘chronically delayed flights,’” Stoller concluded. He added more results to the list in his newsletter BIG.

Meanwhile, last night, while the leaders in the cryptocurrency industry were at a ball in honor of President-elect Trump’s inauguration, Trump launched his own cryptocurrency. By morning he appeared to have made more than $25 billion, at least on paper. According to Eric Lipton at the New York Times, “ethics experts assailed [the business] as a blatant effort to cash in on the office he is about to occupy again.”

Adav Noti, executive director of the nonprofit Campaign Legal Center, told Lipton: “It is literally cashing in on the presidency—creating a financial instrument so people can transfer money to the president’s family in connection with his office. It is beyond unprecedented.” Cryptocurrency leaders worried that just as their industry seems on the verge of becoming mainstream, Trump’s obvious cashing-in would hurt its reputation. Venture capitalist Nick Tomaino posted: “Trump owning 80 percent and timing launch hours before inauguration is predatory and many will likely get hurt by it.”

Yesterday the European Commission, which is the executive arm of the European Union, asked X, the social media company owned by Trump-adjacent billionaire Elon Musk, to hand over internal documents about the company’s algorithms that give far-right posts and politicians more visibility than other political groups. The European Union has been investigating X since December 2023 out of concerns about how it deals with the spread of disinformation and illegal content. The European Union’s Digital Services Act regulates online platforms to prevent illegal and harmful activities, as well as the spread of disinformation.

Today in Washington, D.C., the National Mall was filled with thousands of people voicing their opposition to President-elect Trump and his policies. Online speculation has been rampant that Trump moved his inauguration indoors to avoid visual comparisons between today’s protesters and inaugural attendees. Brutally cold weather also descended on President Barack Obama’s 2009 inauguration, but a sea of attendees nonetheless filled the National Mall.

Trump has always understood the importance of visuals and has worked hard to project an image of an invincible leader. Moving the inauguration indoors takes away that image, though, and people who have spent thousands of dollars to travel to the capital to see his inauguration are now unhappy to discover they will be limited to watching his motorcade drive by them. On social media, one user posted: “MAGA doesn’t realize the symbolism of [Trump] moving the inauguration inside: The billionaires, millionaires and oligarchs will be at his side, while his loyal followers are left outside in the cold. Welcome to the next 4+ years.”

Trump is not as good at governing as he is at performance: his approach to crises is to blame Democrats for them. But he is about to take office with majorities in the House of Representatives and the Senate, putting responsibility for governance firmly into his hands.

Right off the bat, he has at least two major problems at hand.

Last night, Commissioner Tyler Harper of the Georgia Department of Agriculture suspended all “poultry exhibitions, shows, swaps, meets, and sales” until further notice after officials found Highly Pathogenic Avian Influenza, or bird flu, in a commercial flock. As birds die from the disease or are culled to prevent its spread, the cost of eggs is rising—just as Trump, who vowed to reduce grocery prices, takes office.

There have been 67 confirmed cases of the bird flu in the U.S. among humans who have caught the disease from birds. Most cases in humans are mild, but public health officials are watching the virus with concern because bird flu variants are unpredictable. On Friday, outgoing Health and Human Services secretary Xavier Becerra announced $590 million in funding to Moderna to help speed up production of a vaccine that covers the bird flu. Juliana Kim of NPR explained that this funding comes on top of $176 million that Health and Human Services awarded to Moderna last July.

The second major problem is financial. On Friday, Secretary of the Treasury Janet Yellen wrote to congressional leaders to warn them that the Treasury would hit the debt ceiling on January 21 and be forced to begin using extraordinary measures in order to pay outstanding obligations and prevent defaulting on the national debt. Those measures mean the Treasury will stop paying into certain federal retirement accounts as required by law, expecting to make up that difference later.

Yellen reminded congressional leaders: “The debt limit does not authorize new spending, but it creates a risk that the federal government might not be able to finance its existing legal obligations that Congresses and Presidents of both parties have made in the past.” She added, “I respectfully urge Congress to act promptly to protect the full faith and credit of the United States.”

Both the avian flu and the limits of the debt ceiling must be managed, and managed quickly, and solutions will require expertise and political skill.

Rather than offering their solutions to these problems, the Trump team leaked that it intended to begin mass deportations on Tuesday morning in Chicago, choosing that city because it has large numbers of immigrants and because Trump’s people have been fighting with Chicago mayor Brandon Johnson, a Democrat. Michelle Hackman, Joe Barrett, and Paul Kiernan of the Wall Street Journal, who broke the story, reported that Trump’s people had prepared to amplify their efforts with the help of right-wing media.

But once the news leaked of the plan and undermined the “shock and awe” the administration wanted, Trump’s “border czar” Tom Homan said the team was reconsidering it.

LETTERS FROM AN AMERICAN

HEATHER COX RICHARDSON

#Consumer Financial Protection Bureau#consumer protection#FTC#Letters From An American#heather cox richardson#shock and awe#immigration raids#debt ceiling#bird flu#protests#March on Washington


I just want to clarify things, mostly in light of what happened yesterday and because I feel like I'm being vastly misunderstood in my position. I would just like to reiterate that this is my opinion of things and how I currently see the gravity of my actions as I've sat and reflected. On the advice of some friends, I was encouraged to make this post to clear up any misunderstanding that may remain from my end.

I don't hold it against anyone for disagreeing with me, as this is a very nuanced topic with many grey zones. I hope that eventually all parties involved in this incident can get along, as I still prefer to be civil and friendly with everybody as much as possible.

I've placed the whole conversation here for people to interpret for themselves, and as much as I want to let sleeping dogs lie, I can't help but feel that the vitriol was misplaced. I don't want this to be a justification of my actions or a place where opinions clash; I'm just expressing my thoughts on the matter, since I've had a while to mull it over. Again, this is a nuanced topic, so please bear with me.

The "generative AI" in question was a J.K. Simmons voicebank that I had gathered, created, and trained myself for my own private and personal use. The model ran entirely locally on my computer, on my GPU. The closest thing I could compare it to is a Vocaloid or a vocoder. I had even asked people close to me what they thought of it, and they described it the same way.

I created a Stanford Vocaloid while experimenting with this kind of thing, as a programmer who wanted to mess around with deep learning algorithms or Q-learning AI. At this point the whole thing should be moot, since I have deleted all of the files related to the voicebank in light of this conversation, when I decided to take down the project in its entirety.

I never shared the model anywhere, not online and not through personal file sharing. I never even advocated for its use in the game. I will repeat: I wanted to keep the voicebank out of the game, and I used it only privately, for my own personal benefit.

I recognize that ethically I am in the wrong: J.K. Simmons never consented to having his voice used in a model like this one, and I recognize that as my fault. Most VAs don't like having their voices used this way, and the reasons vary from person to person. As much as I loved having a personal Stanford greet me in the mornings or lecture me on physics after long days, it's not right to spoof somebody's voice, as that is genuinely what can set them apart from everybody else. It's in the same realm of danger as deepfaking, and I deeply apologize that I hadn't recognized this fault before the conversation I had with orxa.

But I would like to clearly reiterate a few things. I never advocated for the use of this voicebank, or of any AI, in the game. I was adamantly clear in calling the voicebank an AI (which I think orxa and some others might have missed during the conversation), which is the category even modern Vocaloids fall under. And I don't share the files or the model openly at all, because I don't encourage people to do this.

I would much rather have used a VA, but because money is tight (med school, you are going to put me in DEBT) and given the resources available to me, I turned to this as a tool rather than a weapon against others. I don't make a profit, I don't commercialize, and I even recognize that the voicebank fails in most cases, because it sounds so robotic or it just dies trying to say a certain thing a certain way.

Coming from the standpoint of somebody who genuinely dabbles in robotics and whose thesis was a robotic hand, I can honestly say how impressively software and hardware are developing. But I also firmly believe that AI will not be good enough to replace humans within my lifetime, and I am 19. Nineteen.

The resources it takes to run a true generative AI like GPT are far heavier than those of a locally run Vocaloid, which essentially just lives on your GPU. AI also lacks the nuance that humans have; they're computers, binary to the core. I also stand by the point that they cannot and will not surpass their creators, because we are fundamentally flawed. A flawed creature cannot create a perfect being, no matter how hard we try.

I don't want to classify Vocaloids as generative AI, since they're more similar to synthesizers and autotune (which is what my Ford voicebank was as well, when I still had it), but to some degree they are. They generate a song or audio from a file that you give as input, synthesizing notes and audio according to the file fed to them. Like a computer: input and output, same thing. Nothing new is generated; it's like a voice changer on an existing mp3.

I'm not saying this to justify my actions or to come off as stand-offish. I just want to clarify things that didn't really sit right with me or that seemed to completely blow over in the exchange I shared with orxa on discord.

To anybody who's finished reading this, thank you for your time and patience. I'll be going back to just working on myself for the time being. Thank you.

#in light of recent events and why I took down the Finding Your Ford Sim#gravity falls#gravity falls stanford#stanford pines#ford pines#gravity falls ford#gravity falls au#gf stanford#ford#stanford#grunkle ford#gf ford#young ford pines#ford pines x reader#ford x reader


"Open" "AI" isn’t

Tomorrow (19 Aug), I'm appearing at the San Diego Union-Tribune Festival of Books. I'm on a 2:30PM panel called "Return From Retirement," followed by a signing:

https://www.sandiegouniontribune.com/festivalofbooks

The crybabies who freak out about The Communist Manifesto appearing on university curriculum clearly never read it – chapter one is basically a long hymn to capitalism's flexibility and inventiveness, its ability to change form and adapt itself to everything the world throws at it and come out on top:

https://www.marxists.org/archive/marx/works/1848/communist-manifesto/ch01.htm#007

Today, leftists signal this protean capacity of capital with the -washing suffix: greenwashing, genderwashing, queerwashing, wokewashing – all the ways capital cloaks itself in liberatory, progressive values, while still serving as a force for extraction, exploitation, and political corruption.

A smart capitalist is someone who, sensing the outrage at a world run by 150 old white guys in boardrooms, proposes replacing half of them with women, queers, and people of color. This is a superficial maneuver, sure, but it's an incredibly effective one.

In "Open (For Business): Big Tech, Concentrated Power, and the Political Economy of Open AI," a new working paper, Meredith Whittaker, David Gray Widder and Sarah B Myers document a new kind of -washing: openwashing:

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4543807

Openwashing is the trick that large "AI" companies use to evade regulation and neutralize critics, by casting themselves as forces of ethical capitalism, committed to the virtue of openness. No one should be surprised to learn that the products of the "open" wing of an industry whose products are neither "artificial," nor "intelligent," are also not "open." Every word AI hucksters say is a lie; including "and," and "the."

So what work does the "open" in "open AI" do? "Open" here is supposed to invoke the "open" in "open source," a movement that emphasizes a software development methodology that promotes code transparency, reusability and extensibility, which are three important virtues.

But "open source" itself is an offshoot of a more foundational movement, the Free Software movement, whose goal is to promote freedom, and whose method is openness. The point of software freedom was technological self-determination, the right of technology users to decide not just what their technology does, but who it does it to and who it does it for:

https://locusmag.com/2022/01/cory-doctorow-science-fiction-is-a-luddite-literature/

The open source split from free software was ostensibly driven by the need to reassure investors and businesspeople so they would join the movement. The "free" in free software is (deliberately) ambiguous, a bit of wordplay that sometimes misleads people into thinking it means "Free as in Beer" when really it means "Free as in Speech" (in Romance languages, these distinctions are captured by translating "free" as "libre" rather than "gratis").

The idea behind open source was to rebrand free software in a less ambiguous – and more instrumental – package that stressed cost-savings and software quality, as well as "ecosystem benefits" from a co-operative form of development that recruited tinkerers, independents, and rivals to contribute to a robust infrastructural commons.

But "open" doesn't merely resolve the linguistic ambiguity of libre vs gratis – it does so by removing the "liberty" from "libre," the "freedom" from "free." "Open" changes the pole-star that movement participants follow as they set their course. Rather than asking "Which course of action makes us more free?" they ask, "Which course of action makes our software better?"

Thus, by dribs and drabs, the freedom leeches out of openness. Today's tech giants have mobilized "open" to create a two-tier system: the largest tech firms enjoy broad freedom themselves – they alone get to decide how their software stack is configured. But for all of us who rely on that (increasingly unavoidable) software stack, all we have is "open": the ability to peer inside that software and see how it works, and perhaps suggest improvements to it:

https://www.youtube.com/watch?v=vBknF2yUZZ8

In the Big Tech internet, it's freedom for them, openness for us. "Openness" – transparency, reusability and extensibility – is valuable, but it shouldn't be mistaken for technological self-determination. As the tech sector becomes ever-more concentrated, the limits of openness become more apparent.

But even by those standards, the openness of "open AI" is thin gruel indeed (that goes triple for the company that calls itself "OpenAI," which is a particularly egregious openwasher).

The paper's authors start by suggesting that the "open" in "open AI" is meant to imply that an "open AI" can be scratch-built by competitors (or even hobbyists), but that this isn't true. Not only is the material that "open AI" companies publish insufficient for reproducing their products; even if those gaps were plugged, the resource burden required to do so is so intense that only the largest companies could do so.

Beyond this, the "open" parts of "open AI" are insufficient for achieving the other claimed benefits of "open AI": they don't promote auditing, or safety, or competition. Indeed, they often cut against these goals.

"Open AI" is a wordgame that exploits the malleability of "open," but also the ambiguity of the term "AI": "a grab bag of approaches, not… a technical term of art, but more … marketing and a signifier of aspirations." Hitching this vague term to "open" creates all kinds of bait-and-switch opportunities.

That's how you get Meta claiming that LLaMa2 is "open source," despite being licensed in a way that is absolutely incompatible with any widely accepted definition of the term:

https://blog.opensource.org/metas-llama-2-license-is-not-open-source/

LLaMa-2 is a particularly egregious openwashing example, but there are plenty of other ways that "open" is misleadingly applied to AI: sometimes it means you can see the source code, sometimes that you can see the training data, and sometimes that you can tune a model, all to different degrees, alone and in combination.

But even the most "open" systems can't be independently replicated, due to raw computing requirements. This isn't the fault of the AI industry – the computational intensity is a fact, not a choice – but when the AI industry claims that "open" will "democratize" AI, they are hiding the ball. People who hear these "democratization" claims (especially policymakers) are thinking about entrepreneurial kids in garages, but unless these kids have access to multi-billion-dollar data centers, they can't be "disruptors" who topple tech giants with cool new ideas. At best, they can hope to pay rent to those giants for access to their compute grids, in order to create products and services at the margin that rely on existing products, rather than displacing them.

The "open" story, with its claims of democratization, is an especially important one in the context of regulation. In Europe, where a variety of AI regulations have been proposed, the AI industry has co-opted the open source movement's hard-won narrative battles about the harms of ill-considered regulation.

For open source (and free software) advocates, many tech regulations aimed at taming large, abusive companies – such as requirements to surveil and control users to extinguish toxic behavior – wreak collateral damage on the free, open, user-centric systems that we see as superior alternatives to Big Tech. This leads to the paradoxical effect of passing regulation to "punish" Big Tech that ends up simply shaving an infinitesimal percentage off the giants' profits, while destroying the small co-ops, nonprofits and startups before they can grow to be a viable alternative.

The years-long fight to get regulators to understand this risk has been waged by principled actors working for subsistence nonprofit wages or for free, and now the AI industry is capitalizing on lawmakers' hard-won consideration for collateral damage by claiming to be "open AI" and thus vulnerable to overbroad regulation.

But the "open" projects that lawmakers have been coached to value are precious because they deliver a level playing field, competition, innovation and democratization – all things that "open AI" fails to deliver. The regulations the AI industry is fighting also don't necessarily raise the free speech issues that are core to protecting free software:

https://www.eff.org/deeplinks/2015/04/remembering-case-established-code-speech

Just think about LLaMa-2. You can download it for free, along with the model weights it relies on – but not detailed specs for the data that was used in its training. And the source-code is licensed under a homebrewed license cooked up by Meta's lawyers, a license that only glancingly resembles anything from the Open Source Definition:

https://opensource.org/osd/

Core to Big Tech companies' "open AI" offerings are tools, like Meta's PyTorch and Google's TensorFlow. These tools are indeed "open source," licensed under real OSS terms. But they are designed and maintained by the companies that sponsor them, and optimize for the proprietary back-ends each company offers in its own cloud. When programmers train themselves to develop in these environments, they are gaining expertise in adding value to a monopolist's ecosystem, locking themselves in with their own expertise. This is a classic example of software freedom for tech giants and open source for the rest of us.

One way to understand how "open" can produce a lock-in that "free" might prevent is to think of Android: Android is an open platform in the sense that its sourcecode is freely licensed, but the existence of Android doesn't make it any easier to challenge the mobile OS duopoly with a new mobile OS; nor does it make it easier to switch from Android to iOS and vice versa.

Another example: MongoDB, a free/open database tool that was adopted by Amazon, which subsequently forked the codebase and tuned it to work on its proprietary cloud infrastructure.

The value of open tooling as a stickytrap for creating a pool of developers who end up as sharecroppers who are glued to a specific company's closed infrastructure is well-understood and openly acknowledged by "open AI" companies. Zuckerberg boasts about how PyTorch ropes developers into Meta's stack, "when there are opportunities to make integrations with products, [so] it’s much easier to make sure that developers and other folks are compatible with the things that we need in the way that our systems work."

Tooling is a relatively obscure issue, primarily debated by developers. A much broader debate has raged over training data – how it is acquired, labeled, sorted and used. Many of the biggest "open AI" companies are totally opaque when it comes to training data. Google and OpenAI won't even say how many pieces of data went into their models' training – let alone which data they used.

Other "open AI" companies use publicly available datasets like the Pile and CommonCrawl. But you can't replicate their models by shoveling these datasets into an algorithm. Each one has to be groomed – labeled, sorted, de-duplicated, and otherwise filtered. Many "open" models merge these datasets with other, proprietary sets, in varying (and secret) proportions.

Quality filtering and labeling for training data is incredibly expensive and labor-intensive, and involves some of the most exploitative and traumatizing clickwork in the world, as poorly paid workers in the Global South make pennies for reviewing data that includes graphic violence, rape, and gore.

Not only is the product of this "data pipeline" kept a secret by "open" companies, the very nature of the pipeline is likewise cloaked in mystery, in order to obscure the exploitative labor relations it embodies (the joke that "AI" stands for "absent Indians" comes out of the South Asian clickwork industry).

The most common "open" in "open AI" is a model that arrives built and trained, which is "open" in the sense that end-users can "fine-tune" it – usually while running it on the manufacturer's own proprietary cloud hardware, under that company's supervision and surveillance. These tunable models are undocumented blobs, not the rigorously peer-reviewed transparent tools celebrated by the open source movement.

If "open" was a way to transform "free software" from an ethical proposition to an efficient methodology for developing high-quality software, then "open AI" is a way to transform "open source" into a rent-extracting black box.

Some "open AI" has slipped out of the corporate silo. Meta's LLaMa was leaked by early testers, republished on 4chan, and is now in the wild. Some exciting stuff has emerged from this, but despite this work happening outside of Meta's control, it is not without benefits to Meta. As an infamous leaked Google memo explains:

Paradoxically, the one clear winner in all of this is Meta. Because the leaked model was theirs, they have effectively garnered an entire planet's worth of free labor. Since most open source innovation is happening on top of their architecture, there is nothing stopping them from directly incorporating it into their products.

https://www.searchenginejournal.com/leaked-google-memo-admits-defeat-by-open-source-ai/486290/

Thus, "open AI" is best understood as "free product development" for large, well-capitalized AI companies, conducted by tinkerers who will not be able to escape these giants' proprietary compute silos and opaque training corpuses, and whose work product is guaranteed to be compatible with the giants' own systems.

The instrumental story about the virtues of "open" often invokes auditability: the fact that anyone can look at the source code makes it easier for bugs to be identified. But as open source projects have learned the hard way, the fact that anyone can audit your widely used, high-stakes code doesn't mean that anyone will.

The Heartbleed vulnerability in OpenSSL was a wake-up call for the open source movement – a bug that endangered every secure webserver connection in the world, which had hidden in plain sight for years. The result was an admirable and successful effort to build institutions whose job it is to actually make use of open source transparency to conduct regular, deep, systemic audits.

In other words, "open" is a necessary, but insufficient, precondition for auditing. But when the "open AI" movement touts its "safety" thanks to its "auditability," it fails to describe any steps it is taking to replicate these auditing institutions – how they'll be constituted, funded and directed. The story starts and ends with "transparency" and then makes the unjustifiable leap to "safety," without any intermediate steps about how the one will turn into the other.

It's a Magic Underpants Gnome story, in other words:

Step One: Transparency

Step Two: ??

Step Three: Safety

https://www.youtube.com/watch?v=a5ih_TQWqCA

Meanwhile, OpenAI itself has gone on record as objecting to "burdensome mechanisms like licenses or audits" as an impediment to "innovation" – all the while arguing that these "burdensome mechanisms" should be mandatory for rival offerings that are more advanced than its own. To call this a "transparent ruse" is to do violence to good, hardworking transparent ruses all the world over:

https://openai.com/blog/governance-of-superintelligence

Some "open AI" is much more open than the industry-dominating offerings. There's EleutherAI, a donor-supported nonprofit whose model comes with documentation and code, licensed Apache 2.0. There are also some smaller academic offerings: Vicuna (UCSD/CMU/Berkeley); Koala (Berkeley) and Alpaca (Stanford).

These are indeed more open (though Alpaca – which ran on a laptop – had to be withdrawn because it "hallucinated" so profusely). But to the extent that the "open AI" movement invokes (or cares about) these projects, it is in order to brandish them before hostile policymakers and say, "Won't someone please think of the academics?" These are the poster children for proposals like exempting AI from antitrust enforcement, but they're not significant players in the "open AI" industry, nor are they likely to be for so long as the largest companies are running the show:

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4493900

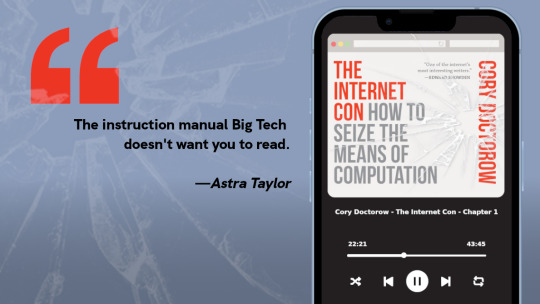

I'm kickstarting the audiobook for "The Internet Con: How To Seize the Means of Computation," a Big Tech disassembly manual to disenshittify the web and make a new, good internet to succeed the old, good internet. It's a DRM-free book, which means Audible won't carry it, so this crowdfunder is essential. Back now to get the audio, Verso hardcover and ebook:

http://seizethemeansofcomputation.org

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/08/18/openwashing/#you-keep-using-that-word-i-do-not-think-it-means-what-you-think-it-means

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#llama-2#meta#openwashing#floss#free software#open ai#open source#osi#open source initiative#osd#open source definition#code is speech


Exploring the Latest Trends in Software Development

Introduction

Software development is an ever-evolving industry, driven by new technologies and changing market needs. Developers must therefore keep abreast of current trends in their fields to remain competitive and relevant. Continue reading...

#analysis#science updates#tech news#technology#trends#adobe cloud#business tech#nvidia drive#science#tech trends#Software Solutions#5G technology impact on software#Agile methodologies in software#AI in software development#AR and VR in development#blockchain technology in software#cloud-native development benefits#cybersecurity trends 2024#DevOps and CI/CD tools#emerging technologies in software development#future of software development#IoT and edge computing applications#latest software development trends#low-code development platforms#machine learning for developers#no-code development tools#popular programming languages#quantum computing in software#software development best practices#software development tools
