#Data wrangling

Explore tagged Tumblr posts

Text

well guess who's decided to reorganise their research files and can't find the data for a project they worked on less than 6 months ago

4 notes

·

View notes

Text

Mastering Data Wrangling in SAS: Best Practices and Techniques

Data wrangling, also known as data cleaning or data preparation, is a crucial part of the data analysis process. It involves transforming raw data into a format that's structured and ready for analysis. While building models and drawing insights are important tasks, the quality of the analysis often depends on how well the data has been prepared beforehand.

For anyone working with SAS, having a good grasp of the tools available for data wrangling is essential. Whether you're working with missing values, changing variable formats, or restructuring datasets, SAS offers a variety of techniques that can make data wrangling more efficient and error-free. In this article, we’ll cover the key practices and techniques for mastering data wrangling in SAS.

1. What Is Data Wrangling in SAS?

Before we dive into the techniques, it’s important to understand the role of data wrangling. Essentially, data wrangling is the process of cleaning, restructuring, and enriching raw data to prepare it for analysis. Datasets are often messy, incomplete, or inconsistent, so the task of wrangling them into a clean, usable format is essential for accurate analysis.

In SAS, you’ll use several tools for data wrangling. DATA steps, PROC SQL, and various procedures like PROC SORT and PROC TRANSPOSE are some of the most important tools for cleaning and structuring data effectively.

2. Key SAS Procedures for Data Wrangling

SAS offers several powerful tools to manipulate and clean data. Here are some of the most commonly used procedures:

- PROC SORT: Sorting is usually one of the first steps in data wrangling. This procedure organizes your dataset based on one or more variables. Sorting is especially useful when preparing to merge datasets or remove duplicates.

- PROC TRANSPOSE: This procedure reshapes your data by converting rows into columns or vice versa. It's particularly helpful when you have data in a "wide" format that you need to convert into a "long" format or vice versa.

- PROC SQL: PROC SQL enables you to write SQL queries directly within SAS, making it easier to filter, join, and aggregate data. It’s a great tool for working with large datasets and performing complex data wrangling tasks.

- DATA Step: The DATA step is the heart of SAS programming. It’s a versatile tool that allows you to perform a wide range of data wrangling operations, such as creating new variables, filtering data, merging datasets, and applying advanced transformations.

3. Handling Missing Data

Dealing with missing data is one of the most important aspects of data wrangling. Missing values can skew your analysis or lead to inaccurate results, so it’s crucial to address them before proceeding with deeper analysis.

There are several ways to manage missing data:

- Identifying Missing Values: In SAS, missing values can be detected using functions such as NMISS() for numeric data and CMISS() for character data. Identifying missing data early helps you decide how to handle it appropriately.

- Replacing Missing Values: In some cases, missing values can be replaced with estimates, such as the mean or median. This approach helps preserve the size of the dataset, but it should be used cautiously to avoid introducing bias.

- Deleting Missing Data: If missing data is not significant or only affects a small portion of the dataset, you might choose to remove rows containing missing values. This method is simple, but it can lead to data loss if not handled carefully.

4. Transforming Data for Better Analysis

Data transformation is another essential part of the wrangling process. It involves converting or modifying variables so they are better suited for analysis. Here are some common transformation techniques:

- Recoding Variables: Sometimes, you might want to recode variables into more meaningful categories. For instance, you could group continuous data into categories like low, medium, or high, depending on the values.

- Standardization or Normalization: When preparing data for machine learning or certain statistical analyses, it might be necessary to standardize or normalize variables. Standardizing ensures that all variables are on a similar scale, preventing those with larger ranges from disproportionately affecting the analysis.

- Handling Outliers: Outliers are extreme values that can skew analysis results. Identifying and addressing outliers is crucial. Depending on the nature of the outliers, you might choose to remove or transform them to reduce their impact.

5. Automating Tasks with SAS Macros

When working with large datasets or repetitive tasks, SAS macros can help automate the wrangling process. By using macros, you can write reusable code that performs the same transformations or checks on multiple datasets. Macros save time, reduce errors, and improve the consistency of your data wrangling.

For example, if you need to apply the same set of cleaning steps to multiple datasets, you can create a macro to perform those actions automatically, ensuring efficiency and uniformity across your work.

6. Working Efficiently with Large Datasets

As the size of datasets increases, the process of wrangling data can become slower and more resource-intensive. SAS provides several techniques to handle large datasets more efficiently:

- Indexing: One way to speed up data manipulation in large datasets is by creating indexes on frequently used variables. Indexes allow SAS to quickly locate and access specific records, which improves performance when working with large datasets.

- Optimizing Data Steps: Minimizing the number of iterations in your DATA steps is also crucial for efficiency. For example, combining multiple operations into a single DATA step reduces unnecessary reads and writes to disk.

7. Best Practices and Pitfalls to Avoid

When wrangling data, it’s easy to make mistakes that can derail the process. Here are some best practices and common pitfalls to watch out for:

- Check Data Types: Make sure your variables are the correct data type (numeric or character) before performing transformations. Inconsistent data types can lead to errors or inaccurate results.

- Be Cautious with Deleting Data: When removing missing values or outliers, always double-check that the data you're removing won’t significantly affect your analysis. It's important to understand the context of the missing data before deciding to delete it.

- Regularly Review Intermediate Results: Debugging is a key part of the wrangling process. As you apply transformations or filter data, regularly review your results to make sure everything is working as expected. This step can help catch errors early on and save time in the long run.

Conclusion

Mastering data wrangling in SAS is an essential skill for any data analyst or scientist. By taking advantage of SAS’s powerful tools like PROC SORT, PROC TRANSPOSE, PROC SQL, and the DATA step, you can clean, transform, and reshape your data to ensure it's ready for analysis.

Following best practices for managing missing data, transforming variables, and optimizing for large datasets will make the wrangling process more efficient and lead to more accurate results. For those who are new to SAS or want to improve their data wrangling skills, enrolling in a SAS programming tutorial or taking a SAS programming full course can help you gain the knowledge and confidence to excel in this area. With the right approach, SAS can help you prepare high-quality, well-structured data for any analysis.

#sas programming tutorial#sas programming#sas online training#data wrangling#proc sql#proc transpose#proc sort

0 notes

Text

So excited to write again! Hopefully my homework doesn’t completely delete itself and sabotage my schedule

1 note

·

View note

Text

#data munging#data wrangling#machine learning#data preprocessing#data cleaning#quick insights#data science

0 notes

Text

[Python] PySpark to M, SQL or Pandas

Hace tiempo escribí un artículo sobre como escribir en pandas algunos códigos de referencia de SQL o M (power query). Si bien en su momento fue de gran utilidad, lo cierto es que hoy existe otro lenguaje que representa un fuerte pie en el análisis de datos.

Spark se convirtió en el jugar principal para lectura de datos en Lakes. Aunque sea cierto que existe SparkSQL, no quise dejar de traer estas analogías de código entre PySpark, M, SQL y Pandas para quienes estén familiarizados con un lenguaje, puedan ver como realizar una acción con el otro.

Lo primero es ponernos de acuerdo en la lectura del post.

Power Query corre en capas. Cada linea llama a la anterior (que devuelve una tabla) generando esta perspectiva o visión en capas. Por ello cuando leamos en el código #“Paso anterior” hablamos de una tabla.

En Python, asumiremos a "df" como un pandas dataframe (pandas.DataFrame) ya cargado y a "spark_frame" a un frame de pyspark cargado (spark.read)

Conozcamos los ejemplos que serán listados en el siguiente orden: SQL, PySpark, Pandas, Power Query.

En SQL:

SELECT TOP 5 * FROM table

En PySpark

spark_frame.limit(5)

En Pandas:

df.head()

En Power Query:

Table.FirstN(#"Paso Anterior",5)

Contar filas

SELECT COUNT(*) FROM table1

spark_frame.count()

df.shape()

Table.RowCount(#"Paso Anterior")

Seleccionar filas

SELECT column1, column2 FROM table1

spark_frame.select("column1", "column2")

df[["column1", "column2"]]

#"Paso Anterior"[[Columna1],[Columna2]] O podría ser: Table.SelectColumns(#"Paso Anterior", {"Columna1", "Columna2"} )

Filtrar filas

SELECT column1, column2 FROM table1 WHERE column1 = 2

spark_frame.filter("column1 = 2") # OR spark_frame.filter(spark_frame['column1'] == 2)

df[['column1', 'column2']].loc[df['column1'] == 2]

Table.SelectRows(#"Paso Anterior", each [column1] == 2 )

Varios filtros de filas

SELECT * FROM table1 WHERE column1 > 1 AND column2 < 25

spark_frame.filter((spark_frame['column1'] > 1) & (spark_frame['column2'] < 25)) O con operadores OR y NOT spark_frame.filter((spark_frame['column1'] > 1) | ~(spark_frame['column2'] < 25))

df.loc[(df['column1'] > 1) & (df['column2'] < 25)] O con operadores OR y NOT df.loc[(df['column1'] > 1) | ~(df['column2'] < 25)]

Table.SelectRows(#"Paso Anterior", each [column1] > 1 and column2 < 25 ) O con operadores OR y NOT Table.SelectRows(#"Paso Anterior", each [column1] > 1 or not ([column1] < 25 ) )

Filtros con operadores complejos

SELECT * FROM table1 WHERE column1 BETWEEN 1 and 5 AND column2 IN (20,30,40,50) AND column3 LIKE '%arcelona%'

from pyspark.sql.functions import col spark_frame.filter( (col('column1').between(1, 5)) & (col('column2').isin(20, 30, 40, 50)) & (col('column3').like('%arcelona%')) ) # O spark_frame.where( (col('column1').between(1, 5)) & (col('column2').isin(20, 30, 40, 50)) & (col('column3').contains('arcelona')) )

df.loc[(df['colum1'].between(1,5)) & (df['column2'].isin([20,30,40,50])) & (df['column3'].str.contains('arcelona'))]

Table.SelectRows(#"Paso Anterior", each ([column1] > 1 and [column1] < 5) and List.Contains({20,30,40,50}, [column2]) and Text.Contains([column3], "arcelona") )

Join tables

SELECT t1.column1, t2.column1 FROM table1 t1 LEFT JOIN table2 t2 ON t1.column_id = t2.column_id

Sería correcto cambiar el alias de columnas de mismo nombre así:

spark_frame1.join(spark_frame2, spark_frame1["column_id"] == spark_frame2["column_id"], "left").select(spark_frame1["column1"].alias("column1_df1"), spark_frame2["column1"].alias("column1_df2"))

Hay dos funciones que pueden ayudarnos en este proceso merge y join.

df_joined = df1.merge(df2, left_on='lkey', right_on='rkey', how='left') df_joined = df1.join(df2, on='column_id', how='left')Luego seleccionamos dos columnas df_joined.loc[['column1_df1', 'column1_df2']]

En Power Query vamos a ir eligiendo una columna de antemano y luego añadiendo la segunda.

#"Origen" = #"Paso Anterior"[[column1_t1]] #"Paso Join" = Table.NestedJoin(#"Origen", {"column_t1_id"}, table2, {"column_t2_id"}, "Prefijo", JoinKind.LeftOuter) #"Expansion" = Table.ExpandTableColumn(#"Paso Join", "Prefijo", {"column1_t2"}, {"Prefijo_column1_t2"})

Group By

SELECT column1, count(*) FROM table1 GROUP BY column1

from pyspark.sql.functions import count spark_frame.groupBy("column1").agg(count("*").alias("count"))

df.groupby('column1')['column1'].count()

Table.Group(#"Paso Anterior", {"column1"}, {{"Alias de count", each Table.RowCount(_), type number}})

Filtrando un agrupado

SELECT store, sum(sales) FROM table1 GROUP BY store HAVING sum(sales) > 1000

from pyspark.sql.functions import sum as spark_sum spark_frame.groupBy("store").agg(spark_sum("sales").alias("total_sales")).filter("total_sales > 1000")

df_grouped = df.groupby('store')['sales'].sum() df_grouped.loc[df_grouped > 1000]

#”Grouping” = Table.Group(#"Paso Anterior", {"store"}, {{"Alias de sum", each List.Sum([sales]), type number}}) #"Final" = Table.SelectRows( #"Grouping" , each [Alias de sum] > 1000 )

Ordenar descendente por columna

SELECT * FROM table1 ORDER BY column1 DESC

spark_frame.orderBy("column1", ascending=False)

df.sort_values(by=['column1'], ascending=False)

Table.Sort(#"Paso Anterior",{{"column1", Order.Descending}})

Unir una tabla con otra de la misma característica

SELECT * FROM table1 UNION SELECT * FROM table2

spark_frame1.union(spark_frame2)

En Pandas tenemos dos opciones conocidas, la función append y concat.

df.append(df2) pd.concat([df1, df2])

Table.Combine({table1, table2})

Transformaciones

Las siguientes transformaciones son directamente entre PySpark, Pandas y Power Query puesto que no son tan comunes en un lenguaje de consulta como SQL. Puede que su resultado no sea idéntico pero si similar para el caso a resolver.

Analizar el contenido de una tabla

spark_frame.summary()

df.describe()

Table.Profile(#"Paso Anterior")

Chequear valores únicos de las columnas

spark_frame.groupBy("column1").count().show()

df.value_counts("columna1")

Table.Profile(#"Paso Anterior")[[Column],[DistinctCount]]

Generar Tabla de prueba con datos cargados a mano

spark_frame = spark.createDataFrame([(1, "Boris Yeltsin"), (2, "Mikhail Gorbachev")], inferSchema=True)

df = pd.DataFrame([[1,2],["Boris Yeltsin", "Mikhail Gorbachev"]], columns=["CustomerID", "Name"])

Table.FromRecords({[CustomerID = 1, Name = "Bob", Phone = "123-4567"]})

Quitar una columna

spark_frame.drop("column1")

df.drop(columns=['column1']) df.drop(['column1'], axis=1)

Table.RemoveColumns(#"Paso Anterior",{"column1"})

Aplicar transformaciones sobre una columna

spark_frame.withColumn("column1", col("column1") + 1)

df.apply(lambda x : x['column1'] + 1 , axis = 1)

Table.TransformColumns(#"Paso Anterior", {{"column1", each _ + 1, type number}})

Hemos terminado el largo camino de consultas y transformaciones que nos ayudarían a tener un mejor tiempo a puro código con PySpark, SQL, Pandas y Power Query para que conociendo uno sepamos usar el otro.

#spark#pyspark#python#pandas#sql#power query#powerquery#notebooks#ladataweb#data engineering#data wrangling#data cleansing

0 notes

Text

Discover how automation streamlines the data wrangling process, saving time and resources while ensuring accuracy and consistency. Explore the latest tools and techniques revolutionizing data preparation.

0 notes

Text

I am God's strongest bravest princess I've caught the evilest of colds (slight headache, scratchy throat) AND I just had to do a weeks worth or dishes ?! when will I get justice

#and i have to wrangle the responses from my form into any form of coherent and usable data??? using my old terrible 2017 mac????#that cant support excel?!??! so i have to use google sheets?!?!?!?!?!?!?!?+?+#dies.#q-rambl3

5 notes

·

View notes

Text

also yes i'm still working on the census survey. i haven't had vyvanse for weeks so spreadsheets are a nightmare rn

#anya shush#houseblr census 2023#i've managed to wrangle writing without vyvanse but actual data analysis... woof#i guess less analysis and more data organization and cleaning

7 notes

·

View notes

Text

I think I've finished reorganising my work files! and just in time for my boss to ask me for all my published articles in pdf lol.

there are still some kinks to work out, but that's for next week. organisation systems are living, ongoing projects -like cleaning your house or decorating a room, I'm never going to be "done" organising.

if there's something I must credit tiago forte's book with, is that it's made me think about my life in terms of information flows. I have information sources (email clients, twitter, books, AO3, podcasts, etc) and information "sinks" -not in the sense of information being destroyed, but in the sense that I have discovered that I have "places" where I consume information. the places that I have discovered thus far are:

my RSS reader (I use feedly. please, somebody make a better reader than feedly)

my kindle

the "reader" function in the firefox browser

my logseq

my chosen filesystem

I think that it's obvious why I see an RSS reader and a kindle as information sinks, but it's a little bit less obvious why a notetaking program like logseq or a filesystem "consume" information. it's because I often have little bits of information (tweets, pictures, screenshots of a conversation, a book that I may want to read but can't yet) that I want to keep. like, I don't know if there are people who simply let all of their files live in the downloads folder, but personally, I need to "process" the files in some way in order to do anything useful with them.

usually this simply involves moving them from "downloads" to a different directory, but sometimes I also need to take notes on them (if they are a book, or a fanfic, or an academic paper), or maybe I want to add the new snippet to the existing collection of snippets about a topic, and I may have to string all of them together in some coherent order. so that's why I think my notetaking program and my filesystem are information sinks.

I think that finding my information sources and information sinks in my life can really help me write more and be more creative in general, because a thing I've noticed is that when the information travels fast and smoothly from my sources to my sink, the faster I read it and the easiest it is for me to actually work on it and use this new information in my life.

(and also, I know I'm using very abstract terms, saying things like "processing information" that maybe put the picture of a maganer pleased with how the lines in their graph are all going up. but please, have in mind that the use case that made me realise the importance of having my data sources and sinks well connected was me wanting to leave a nice comment on all the fanfics I read. my "line going up" is "I can post around a dozen nice comments per week now!")

3 notes

·

View notes

Text

i have been intending, for well over a week, to make more peanut butter cookies so I can have them as an easy snack.

I have still not done it.

if I have not done it by bedtime tonight someone needs to yell at me tomorrow.

#i need to also get some paid work done#very likely editorial work but the data-wrangling also needs to happen#i got nothing done last week and my focus is shot to hell

5 notes

·

View notes

Text

okay one more summer stogging post (summer stock blogging) via also the one other review that does a wiggly hand gesture about it but was like "this one guy though" and highlights that [will roland back at it again finding a very human Performance in the writing even if you didn't like that writing] phenomenon:

"The only comic in the cast who never pushes is Will Roland as Cox’s henpecked son Orville (Eddie Bracken in the film), who grew up with Jane and whom everyone expects to marry her. The book doesn’t do much for him either, but Roland does it for himself – he makes Orville into a flesh-and-blood creation. He’s the most likable performer on the stage."

#now it's steve using the exact term ''henpecked''....#summer stock#orville wingate#will roland#could've condensed posts here but i didn't b/c i was discovering the data piece by piece lol#and now it'd be a lot to wrangle thee perfectly edited categorization and combinations#sorry to whomever's opening up the daily nightly milo summer stock info blogging and shaking their head. that's hilarious of you fr thanks

3 notes

·

View notes

Text

Gotta say that my favorite subtitle malfunction so far has been the surname Simos getting rendered as CMOs, ironic for one of the few recurring antagonists in the setting who is as far as I can tell zero percent finance themed.

#unsleeping city season two has nudged over into being somewhat about real estate making me once again somewhat the villain#so we're up to like 6/10 awkwardness to watch specifically at my bank job while wrangling commercial prepayment data#honorable mention to 'wreathed' getting rendered as 'reeved' which is a mistake that I don't understand man or machine making#reeved is technically a word but 'reeved in fire' is neither grammatical or coherent#dimension 20

5 notes

·

View notes

Text

Revolutionizing Data Wrangling with Ask On Data: The Future of AI-Driven Data Engineering

Data wrangling, the process of cleaning, transforming, and structuring raw data into a usable format, has always been a critical yet time-consuming task in data engineering. With the increasing complexity and volume of data, data wrangling tool have become indispensable in streamlining these processes. One tool that is revolutionizing the way data engineers approach this challenge is Ask On Data—an open-source, AI-powered, chat-based platform designed to simplify data wrangling for professionals across industries.

The Need for an Efficient Data Wrangling Tool

Data engineers often face a variety of challenges when working with large datasets. Raw data from different sources can be messy, incomplete, or inconsistent, requiring significant effort to clean and transform. Traditional data wrangling tools often involve complex coding and manual intervention, leading to long processing times and a higher risk of human error. With businesses relying more heavily on data-driven decisions, there's an increasing need for more efficient, automated, and user-friendly solutions.

Enter Ask On Data—a cutting-edge data wrangling tool that leverages the power of generative AI to make data cleaning, transformation, and integration seamless and faster than ever before. With Ask On Data, data engineers no longer need to manually write extensive code to prepare data for analysis. Instead, the platform uses AI-driven conversations to assist users in cleaning and transforming data, allowing for a more intuitive and efficient approach to data wrangling.

How Ask On Data Transforms Data Engineering

At its core, Ask On Data is designed to simplify the data wrangling process by using a chat-based interface, powered by advanced generative AI models. Here’s how the tool revolutionizes data engineering:

Intuitive Interface: Unlike traditional data wrangling tools that require specialized knowledge of coding languages like Python or SQL, Ask On Data allows users to interact with their data using natural language. Data engineers can ask questions, request data transformations, and specify the desired output, all through a simple chat interface. The AI understands these requests and performs the necessary actions, significantly reducing the learning curve for users.

Automated Data Cleaning: One of the most time-consuming aspects of data wrangling is identifying and fixing errors in raw data. Ask On Data leverages AI to automatically detect inconsistencies, missing values, and duplicates within datasets. The platform then offers suggestions or automatically applies the necessary transformations, drastically speeding up the data cleaning process.

Data Transformation: Ask On Data's AI is not just limited to data cleaning; it also assists in transforming and reshaping data according to the user's specifications. Whether it's aggregating data, pivoting tables, or merging multiple datasets, the tool can perform these tasks with a simple command. This not only saves time but also reduces the likelihood of errors that often arise during manual data manipulation.

Customizable Workflows: Every data project is different, and Ask On Data understands that. The platform allows users to define custom workflows, automating repetitive tasks, and ensuring consistency across different datasets. Data engineers can configure the tool to handle specific data requirements and transformations, making it an adaptable solution for a variety of data engineering challenges.

Seamless Collaboration: Ask On Data’s chat-based interface also fosters better collaboration between teams. Multiple users can interact with the tool simultaneously, sharing queries, suggestions, and results in real time. This collaborative approach enhances productivity and ensures that the team is always aligned in their data wrangling efforts.

Why Ask On Data is the Future of Data Engineering

The future of data engineering lies in automation and artificial intelligence, and Ask On Data is at the forefront of this revolution. By combining the power of generative AI with a user-friendly interface, it makes complex data wrangling tasks more accessible and efficient than ever before. As businesses continue to generate more data, the demand for tools like Ask On Data will only increase, enabling data engineers to spend less time wrangling data and more time analysing it.

Conclusion

Ask On Data is not just another data wrangling tool—it's a game-changer for data engineers. With its AI-powered features, natural language processing capabilities, and automation of repetitive tasks, Ask On Data is setting a new standard in data engineering. For organizations looking to harness the full potential of their data, Ask On Data is the key to unlocking faster, more accurate, and more efficient data wrangling processes.

0 notes

Text

Effortless Data Wrangling and Test Data Preparation

Data wrangling and test data preparation tools simplify organizing, cleaning, and transforming raw or test data into accurate, usable formats. These tools automate data extraction, validation, and formatting, ensuring high-quality datasets for testing and analysis. They are essential for enhancing efficiency, reducing errors, and improving decision-making in data-driven workflows. Visit Us: https://www.iri.com/solutions/business-intelligence/bi-tool-acceleration/overview

0 notes

Text

Understanding Difference between Data Wrangling and Data Cleaning

In today's data-centric landscape, organizations depend on accurate and actionable insights to guide their strategies. However, raw data is rarely in a state ready for immediate use. Before it can provide value, data must undergo a preparation process involving both data wrangling and data cleaning. While these terms are often used interchangeably, they refer to distinct processes that are crucial for ensuring high-quality data. This guide will explore the nuances between data wrangling vs data cleaning, highlighting their roles in data preparation.

What is Data Wrangling?

Data wrangling, also known as data munging, is the comprehensive process of transforming and mapping raw data into a more structured and usable format. The goal of data wrangling is to prepare data from multiple sources and formats so that it can be analyzed effectively.

Core Aspects of Data Wrangling

Data Integration: Combining disparate datasets into a cohesive whole. For instance, merging customer data from different departments to create a unified customer profile.

Data Transformation: Converting data into a consistent format. This could involve changing date formats, standardizing units of measurement, or altering text data for uniformity.

Data Enrichment: Adding external data to enhance the dataset. For example, integrating socio-economic data to provide deeper insights into customer behavior.

Data Aggregation: Summarizing detailed data into a higher-level view. Aggregating daily transaction data to produce monthly or annual sales reports is a typical example.

Data wrangling is crucial for ensuring that the data is structured and formatted correctly, setting the stage for meaningful analysis.

What is Data Cleaning?

Data cleaning is a more focused process aimed at identifying and correcting errors or inconsistencies in the dataset. While data wrangling transforms and integrates data, data cleaning ensures that the data is accurate, complete, and reliable.

Key Components of Data Cleaning

Handling Missing Values: Addressing gaps in the dataset by either filling in missing values using statistical methods or removing incomplete records if they are not essential.

Removing Duplicates: Eliminating redundant entries to ensure that each record is unique. This helps prevent skewed analysis results caused by repeated data.

Correcting Errors: Fixing inaccuracies such as typos, incorrect data entries, or inconsistencies in data formatting. For example, correcting misspelled city names or standardizing numerical formats.

Validation: Ensuring data adheres to predefined rules and standards. This involves checking data for adherence to specific formats or ranges, such as verifying that email addresses follow proper syntax or that numerical values fall within expected limits.

Effective data cleaning improves the reliability of the analysis by ensuring the data is accurate and free of errors.

The Intersection of Data Wrangling and Data Cleaning

While data wrangling and data cleaning serve distinct functions, they are interrelated and often overlap. Effective data wrangling can reveal issues that necessitate data cleaning. For instance, transforming data from different formats might uncover inconsistencies or errors that need to be addressed. Conversely, data cleaning can lead to the need for further wrangling to ensure the data meets specific analytical requirements.

Iterative Nature of Data Preparation

Both data wrangling and data cleaning are iterative processes. As you wrangle data, you may discover new issues that require cleaning. Similarly, as you clean data, additional wrangling might be necessary to fit the data into the desired format. This iterative approach ensures that data preparation is thorough and adaptable to emerging needs.

Why Distinguishing Between the Two Matters

Understanding the difference between data wrangling and data cleaning is vital for several reasons:

Efficiency: Recognizing which process to apply at different stages helps streamline workflows and avoid redundancy, making the data preparation process more efficient.

Data Quality: Distinguishing between these processes ensures that data is not only structured but also accurate and reliable, leading to more credible analysis outcomes.

Tool Selection: Different tools and techniques are often employed for wrangling and cleaning. Understanding their distinct roles helps in choosing the right tools for each task.

Team Coordination: In collaborative environments, different team members may handle wrangling and cleaning. Clear understanding ensures better coordination and effective communication.

Conclusion

In conclusion, while data wrangling and data cleaning are both essential for preparing data, they serve different purposes. Data wrangling focuses on transforming and structuring data to make it usable, while data cleaning ensures the accuracy and reliability of that data. By understanding and applying these processes effectively, you can enhance the quality of your data analysis, leading to more informed and impactful decision-making.

0 notes

Text

A Guide to Historically Accurate Regency-Era Names

I recently received a message from a historical romance writer asking if I knew any good resources for finding historically accurate Regency-era names for their characters.

Not knowing any off the top of my head, I dug around online a bit and found there really isn’t much out there. The vast majority of search results were Buzzfeed-style listicles which range from accurate-adjacent to really, really, really bad.

I did find a few blog posts with fairly decent name lists, but noticed that even these have very little indication as to each name’s relative popularity as those statistical breakdowns really don't exist.

I began writing up a response with this information, but then I (being a research addict who was currently snowed in after a blizzard) thought hey - if there aren’t any good resources out there why not make one myself?

As I lacked any compiled data to work from, I had to do my own data wrangling on this project. Due to this fact, I limited the scope to what I thought would be the most useful for writers who focus on this era, namely - people of a marriageable age living in the wealthiest areas of London.

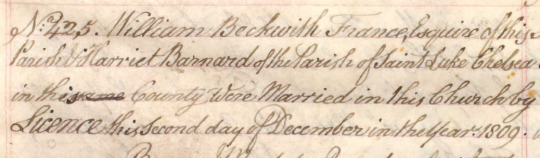

So with this in mind - I went through period records and compiled the names of 25,000 couples who were married in the City of Westminster (which includes Mayfair, St. James and Hyde Park) between 1804 to 1821.

So let’s see what all that data tells us…

To begin - I think it’s hard for us in the modern world with our wide and varied abundance of first names to conceive of just how POPULAR popular names of the past were.

If you were to take a modern sample of 25-year-old (born in 1998) American women, the most common name would be Emily with 1.35% of the total population. If you were to add the next four most popular names (Hannah, Samantha, Sarah and Ashley) these top five names would bring you to 5.5% of the total population. (source: Social Security Administration)

If you were to do the same survey in Regency London - the most common name would be Mary with 19.2% of the population. Add the next four most popular names (Elizabeth, Ann, Sarah and Jane) and with just 5 names you would have covered 62% of all women.

To hit 62% of the population in the modern survey it would take the top 400 names.

The top five Regency men’s names (John, William, Thomas, James and George) have nearly identical statistics as the women’s names.

I struggled for the better part of a week with how to present my findings, as a big list in alphabetical order really fails to get across the popularity factor and also isn’t the most tumblr-compatible format. And then my YouTube homepage recommended a random video of someone ranking all the books they’d read last year - and so I present…

The Regency Name Popularity Tier List

The Tiers

S+ - 10% of the population or greater. There is no modern equivalent to this level of popularity. 52% of the population had one of these 7 names.

S - 2-10%. There is still no modern equivalent to this level of popularity. Names in this percentage range in the past have included Mary and William in the 1880s and Jennifer in the late 1970s (topped out at 4%).

A - 1-2%. The top five modern names usually fall in this range. Kids with these names would probably include their last initial in class to avoid confusion. (1998 examples: Emily, Sarah, Ashley, Michael, Christopher, Brandon.)

B - .3-1%. Very common names. Would fall in the top 50 modern names. You would most likely know at least 1 person with these names. (1998 examples: Jessica, Megan, Allison, Justin, Ryan, Eric)

C - .17-.3%. Common names. Would fall in the modern top 100. You would probably know someone with these names, or at least know of them. (1998 examples: Chloe, Grace, Vanessa, Sean, Spencer, Seth)

D - .06-.17%. Less common names. In the modern top 250. You may not personally know someone with these names, but you’re aware of them. (1998 examples: Faith, Cassidy, Summer, Griffin, Dustin, Colby)

E - .02-.06%. Uncommon names. You’re aware these are names, but they are not common. Unusual enough they may be remarked upon. (1998 examples: Calista, Skye, Precious, Fabian, Justice, Lorenzo)

F - .01-.02%. Rare names. You may have heard of these names, but you probably don’t know anyone with one. Extremely unusual, and would likely be remarked upon. (1998 examples: Emerald, Lourdes, Serenity, Dario, Tavian, Adonis)

G - Very rare names. There are only a handful of people with these names in the entire country. You’ve never met anyone with this name.

H - Virtually non-existent. Names that theoretically could have existed in the Regency period (their original source pre-dates the early 19th century) but I found fewer than five (and often no) period examples of them being used in Regency England. (Example names taken from romance novels and online Regency name lists.)

Just to once again reinforce how POPULAR popular names were before we get to the tier lists - statistically, in a ballroom of 100 people in Regency London: 80 would have names from tiers S+/S. An additional 15 people would have names from tiers A/B and C. 4 of the remaining 5 would have names from D/E. Only one would have a name from below tier E.

Women's Names

S+ Mary, Elizabeth, Ann, Sarah

S - Jane, Mary Ann+, Hannah, Susannah, Margaret, Catherine, Martha, Charlotte, Maria

A - Frances, Harriet, Sophia, Eleanor, Rebecca

B - Alice, Amelia, Bridget~, Caroline, Eliza, Esther, Isabella, Louisa, Lucy, Lydia, Phoebe, Rachel, Susan

C - Ellen, Fanny*, Grace, Henrietta, Hester, Jemima, Matilda, Priscilla

D - Abigail, Agnes, Amy, Augusta, Barbara, Betsy*, Betty*, Cecilia, Christiana, Clarissa, Deborah, Diana, Dinah, Dorothy, Emily, Emma, Georgiana, Helen, Janet^, Joanna, Johanna, Judith, Julia, Kezia, Kitty*, Letitia, Nancy*, Ruth, Winifred>

E - Arabella, Celia, Charity, Clara, Cordelia, Dorcas, Eve, Georgina, Honor, Honora, Jennet^, Jessie*^, Joan, Joyce, Juliana, Juliet, Lavinia, Leah, Margery, Marian, Marianne, Marie, Mercy, Miriam, Naomi, Patience, Penelope, Philadelphia, Phillis, Prudence, Rhoda, Rosanna, Rose, Rosetta, Rosina, Sabina, Selina, Sylvia, Theodosia, Theresa

F - (selected) Alicia, Bethia, Euphemia, Frederica, Helena, Leonora, Mariana, Millicent, Mirah, Olivia, Philippa, Rosamund, Sybella, Tabitha, Temperance, Theophila, Thomasin, Tryphena, Ursula, Virtue, Wilhelmina

G - (selected) Adelaide, Alethia, Angelina, Cassandra, Cherry, Constance, Delilah, Dorinda, Drusilla, Eva, Happy, Jessica, Josephine, Laura, Minerva, Octavia, Parthenia, Theodora, Violet, Zipporah

H - Alberta, Alexandra, Amber, Ashley, Calliope, Calpurnia, Chloe, Cressida, Cynthia, Daisy, Daphne, Elaine, Eloise, Estella, Lilian, Lilias, Francesca, Gabriella, Genevieve, Gwendoline, Hermione, Hyacinth, Inez, Iris, Kathleen, Madeline, Maude, Melody, Portia, Seabright, Seraphina, Sienna, Verity

Men's Names

S+ John, William, Thomas

S - James, George, Joseph, Richard, Robert, Charles, Henry, Edward, Samuel

A - Benjamin, (Mother’s/Grandmother’s maiden name used as first name)#

B - Alexander^, Andrew, Daniel, David>, Edmund, Francis, Frederick, Isaac, Matthew, Michael, Patrick~, Peter, Philip, Stephen, Timothy

C - Abraham, Anthony, Christopher, Hugh>, Jeremiah, Jonathan, Nathaniel, Walter

D - Adam, Arthur, Bartholomew, Cornelius, Dennis, Evan>, Jacob, Job, Josiah, Joshua, Lawrence, Lewis, Luke, Mark, Martin, Moses, Nicholas, Owen>, Paul, Ralph, Simon

E - Aaron, Alfred, Allen, Ambrose, Amos, Archibald, Augustin, Augustus, Barnard, Barney, Bernard, Bryan, Caleb, Christian, Clement, Colin, Duncan^, Ebenezer, Edwin, Emanuel, Felix, Gabriel, Gerard, Gilbert, Giles, Griffith, Harry*, Herbert, Humphrey, Israel, Jabez, Jesse, Joel, Jonas, Lancelot, Matthias, Maurice, Miles, Oliver, Rees, Reuben, Roger, Rowland, Solomon, Theophilus, Valentine, Zachariah

F - (selected) Abel, Barnabus, Benedict, Connor, Elijah, Ernest, Gideon, Godfrey, Gregory, Hector, Horace, Horatio, Isaiah, Jasper, Levi, Marmaduke, Noah, Percival, Shadrach, Vincent

G - (selected) Albion, Darius, Christmas, Cleophas, Enoch, Ethelbert, Gavin, Griffin, Hercules, Hugo, Innocent, Justin, Maximilian, Methuselah, Peregrine, Phineas, Roland, Sebastian, Sylvester, Theodore, Titus, Zephaniah

H - Albinus, Americus, Cassian, Dominic, Eric, Milo, Rollo, Trevor, Tristan, Waldo, Xavier

# Men were sometimes given a family surname (most often their mother's or grandmother's maiden name) as their first name - the most famous example of this being Fitzwilliam Darcy. If you were to combine all surname-based first names as a single 'name' this is where the practice would rank.

*Rank as a given name, not a nickname

+If you count Mary Ann as a separate name from Mary - Mary would remain in S+ even without the Mary Anns included

~Primarily used by people of Irish descent

^Primarily used by people of Scottish descent

>Primarily used by people of Welsh descent

I was going to continue on and write about why Regency-era first names were so uniform, discuss historically accurate surnames, nicknames, and include a little guide to finding 'unique' names that are still historically accurate - but this post is already very, very long, so that will have to wait for a later date.

If anyone has any questions/comments/clarifications in the meantime feel free to message me.

Methodology notes: All data is from marriage records covering six parishes in the City of Westminster between 1804 and 1821. The total sample size was 50,950 individuals.

I chose marriage records rather than births/baptisms as I wanted to focus on individuals who were adults during the Regency era rather than newborns. I think many people make the mistake when researching historical names by using baby name data for the year their story takes place rather than 20 to 30 years prior, and I wanted to avoid that. If you are writing a story that takes place in 1930 you don’t want to research the top names for 1930, you need to be looking at 1910 or earlier if you are naming adult characters.

I combined (for my own sanity) names that are pronounced identically but have minor spelling differences: i.e. the data for Catherine also includes Catharines and Katherines, Susannah includes Susannas, Phoebe includes Phebes, etc.

The compound 'Mother's/Grandmother's maiden name used as first name' designation is an educated guesstimate based on what I recognized as known surnames, as I do not hate myself enough to go through 25,000+ individuals and confirm their mother's maiden names. So if the tally includes any individuals who just happened to be named Fitzroy/Hastings/Townsend/etc. because their parents liked the sound of it and not due to any familial relations - my bad.

I did a small comparative survey of 5,000 individuals in several rural communities in Rutland and Staffordshire (chosen because they had the cleanest data I could find and I was lazy) to see if there were any significant differences between urban and rural naming practices and found the results to be very similar. The most noticeable difference I observed was that the S+ tier names were even MORE popular in rural areas than in London. In Rutland between 1810 and 1820 Elizabeths comprised 21.4% of all brides vs. 15.3% in the London survey. All other S+ names also saw increases of between 1% and 6%. I also observed that the rural communities I surveyed saw a small, but noticeable and fairly consistent, increase in the use of names with Biblical origins.

Sources of the records I used for my survey:

Ancestry.com. England & Wales Marriages, 1538-1988 [database on-line].

Ancestry.com. Westminster, London, England, Church of England Marriages and Banns, 1754-1935 [database on-line].

#history#regency#1800s#1810s#names#london#writing resources#regency romance#jane austen#bridgerton#bridgerton would be an exponentially better show if daphne's name was dorcas#behold - the reason i haven't posted in three weeks

13K notes

·

View notes