#Databricks for Data Scientists


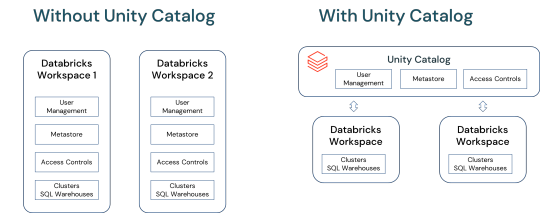

Unlocking Full Potential: The Compelling Reasons to Migrate to Databricks Unity Catalog

In a world overwhelmed by data complexities and AI advancements, Databricks Unity Catalog emerges as a game-changer. This blog delves into how Unity Catalog revolutionizes data and AI governance, offering a unified, agile solution.




What EDAV does:

Connects people with data faster. It does this in a few ways. EDAV:

Hosts tools that support the analytics work of over 3,500 people.

Stores data on a common platform that is accessible to CDC's data scientists and partners.

Simplifies complex data analysis steps.

Automates repeatable tasks, such as dashboard updates, freeing up staff time and resources.

Keeps data secure. Data represent people, and the privacy of people's information is critically important to CDC. EDAV is hosted on CDC's Cloud to ensure data are shared securely and that privacy is protected.

Saves time and money. EDAV services can quickly and easily scale up to meet surges in demand for data science and engineering tools, such as during a disease outbreak. The services can also scale down quickly, saving funds when demand decreases or an outbreak ends.

Trains CDC's staff on new tools. EDAV hosts a Data Academy that offers training designed to help our workforce build their data science skills, including self-paced courses in Power BI, R, Socrata, Tableau, Databricks, Azure Data Factory, and more.

Changes how CDC works. For the first time, EDAV offers CDC's experts a common set of tools that can be used for any disease or condition. It's ready to handle "big data," can bring in entirely new sources of data like social media feeds, and enables CDC's scientists to create interactive dashboards and apply technologies like artificial intelligence for deeper analysis.


Google Cloud’s BigQuery Autonomous Data To AI Platform

BigQuery automates data analysis, transformation, and insight generation using AI. AI and natural language interaction simplify difficult operations.

A fast-paced world needs broad data access and a real-time data activation flywheel. AI that is built directly into the data environment and works alongside intelligent agents is emerging as the catalyst, opening doors and enabling self-directed, rapid action, which is vital for success. This flywheel uses Google's Data & AI Cloud to activate data in real time. Because of this emphasis, BigQuery has five times as many customer organisations as the two leading cloud providers that offer only data science and data warehousing solutions.

Examples of top companies:

With BigQuery, Radisson Hotel Group enhanced campaign productivity by 50% and revenue by over 20% by fine-tuning the Gemini model.

By connecting over 170 data sources with BigQuery, Gordon Food Service established a scalable, modern, AI-ready data architecture. This improved real-time response to critical business demands, enabled complete analytics, boosted client usage of their ordering systems, and offered staff rapid insights while cutting costs and boosting market share.

J.B. Hunt is revolutionising logistics for shippers and carriers by integrating Databricks into BigQuery.

General Mills saves over $100 million using BigQuery and Vertex AI to give workers secure access to LLMs for structured and unstructured data searches.

Google Cloud is unveiling many new features with its autonomous data to AI platform powered by BigQuery and Looker, a unified, trustworthy, and conversational BI platform:

New assistive and agentic experiences based on your trusted data and available through BigQuery and Looker will make data scientists, data engineers, analysts, and business users' jobs simpler and faster.

Advanced analytics and data science acceleration: Along with seamless integration with real-time and open-source technologies, BigQuery AI-assisted notebooks improve data science workflows and BigQuery AI Query Engine provides fresh insights.

Autonomous data foundation: BigQuery can collect, manage, and orchestrate any data with its new autonomous features, which include native support for unstructured data processing and open data formats like Iceberg.

Let's look at each change in detail.

User-specific agents

Google Cloud believes everyone should have access to AI. AI-powered assistive experiences in BigQuery and Looker are already generally available, and Google Cloud now offers specialised agents for every data role, such as:

Data engineering agents integrated with BigQuery pipelines help build data pipelines, transform and enrich data, detect anomalies, and automate metadata generation. Data engineers traditionally spend hours cleaning, processing, and validating data; these agents take over such time-consuming, repetitive tasks and deliver trustworthy data, which raises data team productivity.

The data science agent in Google's Colab notebook enables model development at every step. Scalable training, intelligent model selection, automated feature engineering, and faster iteration are possible. This agent lets data science teams focus on complex methods rather than data and infrastructure.

Looker conversational analytics lets everyone work with data in natural language. Expanded capabilities developed with DeepMind let users see what the agent is doing: it can undertake advanced analysis and explain its logic, which makes misunderstandings easy to spot and resolve. Looker's semantic layer boosts accuracy by two-thirds. The agent understands business language like “revenue” and “segments” and can compute metrics in real time, ensuring trustworthy, accurate, and relevant results. An API for conversational analytics is also being introduced to help developers integrate it into processes and apps.

In the BigQuery autonomous data to AI platform, Google Cloud introduced the BigQuery knowledge engine to power assistive and agentic experiences. It uses Gemini to analyse table descriptions, query histories, and schema relationships, and from them it models data relationships, suggests business glossary terms, and generates metadata on the fly. This knowledge engine grounds AI and agents in business context, enabling semantic search across BigQuery and AI-powered data insights.

All customers can access Gemini-powered agentic and assistive experiences in BigQuery and Looker without add-ons, within the existing pricing tiers!

Accelerating data science and advanced analytics

BigQuery autonomous data to AI platform is revolutionising data science and analytics by enabling new AI-driven data science experiences and engines to manage complex data and provide real-time analytics.

First, AI improves BigQuery notebooks. Intelligent SQL cells in the notebook can join data sources, understand data context, and suggest code as you write. Native exploratory analysis and visualisation capabilities support data exploration and collaboration with peers. Data scientists can also schedule analyses and refresh insights. Google Cloud also lets you build notebook-driven, interactive data apps to share insights across the organisation.

This enhanced notebook experience is complemented by the BigQuery AI query engine for AI-driven analytics. The engine lets data scientists work with structured and unstructured data together and add real-world context rather than simply retrieve data. It co-processes SQL with Gemini, adding runtime language understanding, reasoning abilities, and real-world knowledge. For example, it can process unstructured images and match them to your product catalogue. The engine supports several use cases, including model enhancement, sophisticated segmentation, and new insights.

Additionally, Google Cloud aims to give users the most cloud-optimized open-source environment. Google Cloud for Apache Kafka enables real-time data pipelines for event sourcing, model scoring, messaging, and analytics, while BigQuery provides serverless Apache Spark execution. Customers have almost doubled their serverless Spark use in the last year, and Google Cloud has upgraded this engine to process data 2.7 times faster.

BigQuery lets data scientists utilise SQL, Spark, or foundation models on Google's serverless and scalable architecture to innovate faster without the challenges of traditional infrastructure.

An autonomous data foundation across the data lifecycle

An autonomous data foundation built for modern data complexity underpins these advanced analytics engines and specialised agents. BigQuery is transforming the environment by making unstructured data a first-class citizen. New platform features, such as orchestration for a variety of data workloads, autonomous and invisible governance, and open formats for flexibility, ensure that your data is always ready for data science or AI use cases, while delivering the best cost and reducing operational overhead.

For many companies, unstructured data is their biggest untapped opportunity. While structured data offers well-trodden analytical avenues, the unique insights in text, audio, video, and images are often underused and locked in siloed systems. BigQuery tackles this directly by making unstructured data a first-class citizen through multimodal tables (preview), which combine structured data with rich, complex data types for unified querying and storage.

To manage this large data estate efficiently, Google Cloud's expanded BigQuery governance gives data stewards and professionals a single view for managing discovery, classification, curation, quality, usage, and sharing, including automatic cataloguing and metadata generation. BigQuery continuous queries use SQL to analyse and act on streaming data regardless of format, ensuring timely insights from all your data streams.

Customers use Google's AI models in BigQuery for multimodal analysis 16 times more than they did last year, driven by advanced support for structured and unstructured multimodal data. BigQuery with Vertex AI is 8–16 times cheaper than independent data warehouse and AI solutions.

Google Cloud remains committed to an open ecosystem. BigQuery tables for Apache Iceberg combine BigQuery's performance and integrated capabilities with the flexibility of an open data lakehouse to link Iceberg data to SQL, Spark, AI, and third-party engines in an open and interoperable fashion. This service provides adaptive and autonomous table management, high-performance streaming, automatic AI-generated insights, practically infinite serverless scalability, and improved governance. As a managed solution, it uses Cloud Storage to provide fail-safe features and centralised fine-grained access control management.

Finally, the autonomous data to AI platform optimises itself, scaling resources, managing workloads, and helping ensure cost-effectiveness. The new BigQuery spend commit unifies spending across the BigQuery platform and allows flexibility in shifting spend across streaming, governance, data processing engines, and more, making purchasing easier.

Start your data and AI adventure with BigQuery data migration. Google Cloud wants to know how you innovate with data.



From Math to Machine Learning: A Comprehensive Blueprint for Aspiring Data Scientists

The realm of data science is vast and dynamic, offering a plethora of opportunities for those willing to dive into the world of numbers, algorithms, and insights. If you're new to data science and unsure where to start, fear not! This step-by-step guide will navigate you through the foundational concepts and essential skills to kickstart your journey in this exciting field. Choosing the Best Data Science Institute can further accelerate your journey into this thriving industry.

1. Establish a Strong Foundation in Mathematics and Statistics

Before delving into the specifics of data science, ensure you have a robust foundation in mathematics and statistics. Brush up on concepts like algebra, calculus, probability, and statistical inference. Online platforms such as Khan Academy and Coursera offer excellent resources for reinforcing these fundamental skills.

2. Learn Programming Languages

Data science is synonymous with coding. Choose a programming language – Python and R are popular choices – and become proficient in it. Platforms like Codecademy, DataCamp, and W3Schools provide interactive courses to help you get started on your coding journey.

3. Grasp the Basics of Data Manipulation and Analysis

Understanding how to work with data is at the core of data science. Familiarize yourself with libraries like Pandas in Python or data frames in R. Learn about data structures, and explore techniques for cleaning and preprocessing data. Utilize real-world datasets from platforms like Kaggle for hands-on practice.
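As a rough illustration of what cleaning and preprocessing can involve, here is a minimal sketch that uses only Python's standard library so it runs without extra installs. The sample data, the `clean_rows` helper, and the choice to fill missing ages with the rounded mean are all assumptions made for this example; Pandas offers comparable operations (for instance `drop_duplicates` and `fillna`) that you would normally reach for on real datasets.

```python
import csv
import io
from statistics import mean

# Made-up raw data: stray whitespace, one missing age, one duplicated row.
RAW_CSV = """name,age,city
Asha, 34 ,Chennai
Ben,,Pune
Asha, 34 ,Chennai
Chen,29, Mumbai
"""


def clean_rows(text):
    """Strip whitespace, drop exact duplicate rows, fill missing ages with the mean."""
    rows = []
    seen = set()
    for row in csv.DictReader(io.StringIO(text)):
        cleaned = {key: value.strip() for key, value in row.items()}
        fingerprint = tuple(cleaned.items())
        if fingerprint in seen:
            continue  # skip rows we have already kept
        seen.add(fingerprint)
        rows.append(cleaned)

    # Compute the fill value from the ages that are present.
    known_ages = [int(r["age"]) for r in rows if r["age"]]
    fill_value = round(mean(known_ages))
    for r in rows:
        r["age"] = int(r["age"]) if r["age"] else fill_value
    return rows


cleaned = clean_rows(RAW_CSV)
for row in cleaned:
    print(row)
```

Whether to fill, drop, or flag missing values depends on the dataset and the question being asked; the mean fill here is only one simple option.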

4. Dive into Data Visualization

Data visualization is a powerful tool for conveying insights. Learn how to create compelling visualizations using tools like Matplotlib and Seaborn in Python, or ggplot2 in R. Effectively communicating data findings is a crucial aspect of a data scientist's role.

5. Explore Machine Learning Fundamentals

Begin your journey into machine learning by understanding the basics. Grasp concepts like supervised and unsupervised learning, classification, regression, and key algorithms such as linear regression and decision trees. Libraries like scikit-learn in Python offer practical, hands-on experience.
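To make linear regression concrete, the sketch below fits a straight line with ordinary least squares in plain Python. The `fit_line` and `predict` helpers and the hours-versus-score numbers are invented for illustration; in practice a library such as scikit-learn would handle this, along with multiple features and model evaluation.

```python
def fit_line(xs, ys):
    """Ordinary least squares for a single feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope is the covariance of x and y divided by the variance of x.
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Made-up training data: hours studied vs. exam score.
hours = [1, 2, 3, 4, 5]
scores = [52, 54, 61, 63, 70]

slope, intercept = fit_line(hours, scores)


def predict(x):
    """Predict a score for x hours using the fitted line."""
    return slope * x + intercept


print(f"score = {slope:.2f} * hours + {intercept:.2f}")
print(f"predicted score for 6 hours: {predict(6):.1f}")
```

This is supervised learning in its simplest form: the model learns from labelled examples (hours paired with scores) and then predicts a value for an input it has not seen.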

6. Delve into Big Data Technologies

As data scales, so does the need for technologies that can handle large datasets. Familiarize yourself with big data technologies, particularly Apache Hadoop and Apache Spark. Platforms like Cloudera and Databricks provide tutorials suitable for beginners.

7. Enroll in Online Courses and Specializations

Structured learning paths are invaluable for beginners. Enroll in online courses and specializations tailored for data science novices. Platforms like Coursera ("Data Science and Machine Learning Bootcamp with R/Python") and edX ("Introduction to Data Science") offer comprehensive learning opportunities.

8. Build Practical Projects

Apply your newfound knowledge by working on practical projects. Analyze datasets, implement machine learning models, and solve real-world problems. Platforms like Kaggle provide a collaborative space for participating in data science competitions and showcasing your skills to the community.

9. Join Data Science Communities

Engaging with the data science community is a key aspect of your learning journey. Participate in discussions on platforms like Stack Overflow, explore communities on Reddit (r/datascience), and connect with professionals on LinkedIn. Networking can provide valuable insights and support.

10. Continuous Learning and Specialization

Data science is a field that evolves rapidly. Embrace continuous learning and explore specialized areas based on your interests. Dive into natural language processing, computer vision, or reinforcement learning as you progress and discover your passion within the broader data science landscape.

Remember, your journey in data science is a continuous process of learning, application, and growth. Seek guidance from online forums, contribute to discussions, and build a portfolio that showcases your projects. Choosing the best Data Science Courses in Chennai is a crucial step in acquiring the necessary expertise for a successful career in the evolving landscape of data science. With dedication and a systematic approach, you'll find yourself progressing steadily in the fascinating world of data science. Good luck on your journey!


Azure Data Engineering Tools For Data Engineers

Azure is Microsoft's cloud computing platform, and it offers an extensive array of data engineering tools. These tools help data engineers build and maintain data systems that are scalable, reliable, and secure, and that can be tailored to the unique requirements of an organization.

In this article, we will explore nine key Azure data engineering tools that should be in every data engineer’s toolkit. Whether you’re a beginner in data engineering or aiming to enhance your skills, these Azure tools are crucial for your career development.

Microsoft Azure Databricks

Azure Databricks is a managed version of Databricks, a popular data analytics and machine learning platform. It offers one-click installation, faster workflows, and collaborative workspaces for data scientists and engineers. Azure Databricks seamlessly integrates with Azure’s computation and storage resources, making it an excellent choice for collaborative data projects.

Microsoft Azure Data Factory

Microsoft Azure Data Factory (ADF) is a fully-managed, serverless data integration tool designed to handle data at scale. It enables data engineers to acquire, analyze, and process large volumes of data efficiently. ADF supports various use cases, including data engineering, operational data integration, analytics, and data warehousing.

Microsoft Azure Stream Analytics

Azure Stream Analytics is a real-time, complex event-processing engine designed to analyze and process large volumes of fast-streaming data from various sources. It is a critical tool for data engineers dealing with real-time data analysis and processing.

Microsoft Azure Data Lake Storage

Azure Data Lake Storage provides a scalable and secure data lake solution for data scientists, developers, and analysts. It allows organizations to store data of any type and size while supporting low-latency workloads. Data engineers can take advantage of this infrastructure to build and maintain data pipelines. Azure Data Lake Storage also offers enterprise-grade security features for data collaboration.

Microsoft Azure Synapse Analytics

Azure Synapse Analytics is an integrated platform solution that combines data warehousing, data connectors, ETL pipelines, analytics tools, big data scalability, and visualization capabilities. Data engineers can efficiently process data for warehousing and analytics using Synapse Pipelines’ ETL and data integration capabilities.

Microsoft Azure Cosmos DB

Azure Cosmos DB is a fully managed, serverless distributed database service that supports multiple database APIs, including PostgreSQL, MongoDB, and Apache Cassandra. It offers automatic and immediate scalability, single-digit millisecond reads and writes, and high availability for NoSQL data. Azure Cosmos DB is a versatile tool for data engineers looking to develop high-performance applications.

Microsoft Azure SQL Database

Azure SQL Database is a fully managed and continually updated relational database service in the cloud. It offers native support for services like Azure Functions and Azure App Service, simplifying application development. Data engineers can use Azure SQL Database to handle real-time data ingestion tasks efficiently.

Microsoft Azure MariaDB

Azure Database for MariaDB provides seamless integration with Azure Web Apps and supports popular open-source frameworks and languages like WordPress and Drupal. It offers built-in monitoring, security, automatic backups, and patching at no additional cost.

Microsoft Azure PostgreSQL Database

Azure Database for PostgreSQL is a fully managed open-source database service designed to let you focus on application innovation rather than database management. It supports various open-source frameworks and languages and offers strong security, AI-assisted performance optimization, and high uptime guarantees.

Whether you’re a novice data engineer or an experienced professional, mastering these Azure data engineering tools is essential for advancing your career in the data-driven world. As technology evolves and data continues to grow, data engineers with expertise in Azure tools are in high demand. Start your journey to becoming a proficient data engineer with these powerful Azure tools and resources.

Unlock the full potential of your data engineering career with Datavalley. As you start your journey to becoming a skilled data engineer, it’s essential to equip yourself with the right tools and knowledge. The Azure data engineering tools we’ve explored in this article are your gateway to effectively managing and using data for impactful insights and decision-making.

To take your data engineering skills to the next level and gain practical, hands-on experience with these tools, we invite you to join the courses at Datavalley. Our comprehensive data engineering courses are designed to provide you with the expertise you need to excel in the dynamic field of data engineering. Whether you’re just starting or looking to advance your career, Datavalley’s courses offer a structured learning path and real-world projects that will set you on the path to success.

Course format:

Subject: Data Engineering
Classes: 200 hours of live classes
Lectures: 199 lectures
Projects: Collaborative projects and mini projects for each module
Level: All levels
Scholarship: Up to 70% scholarship on this course
Interactive activities: labs, quizzes, scenario walk-throughs
Placement Assistance: Resume preparation, soft skills training, interview preparation

Subject: DevOps
Classes: 180+ hours of live classes
Lectures: 300 lectures
Projects: Collaborative projects and mini projects for each module
Level: All levels
Scholarship: Up to 67% scholarship on this course
Interactive activities: labs, quizzes, scenario walk-throughs
Placement Assistance: Resume preparation, soft skills training, interview preparation

For more details on the Data Engineering courses, visit Datavalley’s official website.



Microsoft Azure AI Engineer Associate Certification

Top Career Opportunities After Earning Azure AI Engineer Associate Certification

In today’s ever-evolving tech world, Artificial Intelligence (AI) is no longer just a buzzword — it’s a full-blown career path. With organizations embracing AI to improve operations, customer service, and innovation, professionals are rushing to upskill themselves. Among the top choices, the Microsoft Azure AI Engineer Associate Certification is gaining significant attention.

If you’re serious about making a mark in AI, then the Microsoft Azure AI certification pathway can be your golden ticket. This article dives deep into the top career opportunities after earning Azure AI Engineer Associate Certification, how this certification boosts your job prospects, and the roles you can aim for.

Why Choose the Azure AI Engineer Associate Certification?

The Azure AI Engineer Associate Certification is offered by Microsoft, a global leader in cloud computing and AI. It verifies your ability to use Azure Cognitive Services, Azure Machine Learning, and conversational AI to build and deploy AI solutions.

Professionals holding this certification demonstrate hands-on skills and are preferred by companies that want ready-to-deploy AI talent.

Benefits of the Azure AI Engineer Associate Certification

Let’s understand why more professionals are choosing this certification to strengthen their careers:

1. Industry Recognition

Companies worldwide trust Microsoft technologies. Getting certified adds credibility to your resume.

2. Cloud-Centric Skillset

The demand for cloud-based AI solutions is skyrocketing. This certification proves your expertise in building such systems.

3. Competitive Salary Packages

Certified professionals are often offered higher salaries due to their validated skills.

4. Global Opportunities

Whether you're in India, the USA, or Europe, Azure AI certification opens doors globally.

Top Career Opportunities After Earning Azure AI Engineer Associate Certification

The top career opportunities after earning Azure AI Engineer Associate Certification span across various industries, from healthcare and finance to retail and logistics. Below are the most promising roles you can pursue:

AI Engineer

As an AI Engineer, you’ll build, test, and deploy AI models. You'll work with machine learning algorithms and integrate Azure Cognitive Services. This is one of the most common and direct roles after certification.

Machine Learning Engineer

You’ll design and implement machine learning models in real-world applications. You'll be responsible for model training, evaluation, and fine-tuning on Azure ML Studio or Azure Databricks.

Data Scientist

This role involves data analysis, visualization, and model building. Azure tools like Machine Learning Designer make your job easier. Data scientists with Azure skills are in massive demand across all sectors.

AI Solutions Architect

Here, you’ll lead the design of AI solutions for enterprise applications. You need to combine business understanding with deep technical expertise in AI and Azure services.

Cloud AI Consultant

Companies hire consultants to guide their AI strategy. Your Azure certification gives you the tools to advise clients on how to build scalable AI systems using cloud services.

Business Intelligence Developer

BI developers use AI to gain insights from business data. With Azure’s AI tools, you can automate reporting, forecast trends, and build smart dashboards.

AI Product Manager

This role is perfect if you love tech and strategy. As a product manager, you’ll plan the AI product roadmap and ensure Azure services align with customer needs.

Chatbot Developer

With expertise in Azure Bot Services and Language Understanding (LUIS), you’ll create conversational AI that enhances customer experiences across websites, apps, and support systems.

Automation Engineer

You’ll design intelligent automation workflows using Azure AI and RPA tools. From customer onboarding to document processing, AI is the key.

Azure Developer with AI Focus

A developer well-versed in .NET or Python and now skilled in Azure AI can build powerful applications that utilize computer vision, NLP, and predictive models.

Industries Hiring Azure AI Certified Professionals

The top career opportunities after earning Azure AI Engineer Associate Certification are not limited to IT companies. Here’s where you’re likely to be hired:

Healthcare: AI-driven diagnostics and patient care

Finance: Fraud detection and predictive analytics

Retail: Customer behavior analysis and chatbots

Logistics: Smart inventory and route optimization

Education: Personalized learning platforms

Demand Outlook and Salary Trends

Let’s take a look at what the future holds:

AI Engineer: ₹10–25 LPA in India / $120K+ in the US

ML Engineer: ₹12–30 LPA in India / $130K+ in the US

Data Scientist: ₹8–22 LPA in India / $110K+ in the US

Companies like Microsoft, Accenture, Infosys, Deloitte, and IBM are actively hiring Azure AI-certified professionals. Job listings on platforms like LinkedIn and Indeed reflect growing demand.

Skills Gained from the Certification

The Azure AI Engineer Associate Certification equips you with:

Knowledge of Azure Cognitive Services

Skills in NLP, speech, vision, and language understanding

Proficiency in Azure Bot Services

Hands-on with Machine Learning pipelines

Use of Azure ML Studio and Notebooks

You don’t just become a certificate holder—you become a problem solver.

Career Growth After the Certification

As you progress in your AI journey, the certification lays the foundation for:

Mid-level roles after 2–3 years: Lead AI Engineer, AI Consultant

Senior roles after 5+ years: AI Architect, Director of AI Solutions

Leadership after 10+ years: Chief Data Officer, Head of AI

Real-World Projects That Get You Hired

Employers love practical knowledge. The certification encourages project-based learning, such as:

Sentiment analysis using Azure Cognitive Services

Building chatbots for e-commerce

Predictive analytics models for healthcare

Language translation tools

Automated document processing using Azure Form Recognizer

Completing and showcasing such projects makes your portfolio job-ready.

If you're aiming to future-proof your tech career, then exploring the top career opportunities after earning Azure AI Engineer Associate Certification is one of the smartest moves you can make. It not only adds to your credentials but directly connects you to real-world AI roles.

Who Should Pursue This Certification?

This certification is ideal for:

Freshers with Python/AI interest

Software developers entering AI

Data professionals upskilling

Cloud engineers expanding into AI

Technical leads managing AI projects

How to Prepare for the Certification

Tips to ace the exam:

Take official Microsoft learning paths

Join instructor-led training programs

Practice with Azure sandbox labs

Study real-world use cases

Attempt mock exams

Final Thoughts

The top career opportunities after earning Azure AI Engineer Associate Certification are not only growing—they’re evolving. This certification doesn’t just give you knowledge; it opens doors to meaningful, high-paying, and future-ready roles. Whether you aim to be an AI engineer, a consultant, or a product manager, this certification lays the perfect foundation for your next big move in the AI industry.

FAQs

What are the prerequisites for taking the Azure AI certification exam?

You should have a basic understanding of Python, machine learning concepts, and experience with Microsoft Azure.

Is it necessary to have prior AI experience?

No, but having foundational knowledge in AI and cloud computing will make the learning curve easier.

How long does it take to prepare for the exam?

On average, candidates spend 4–6 weeks preparing with structured study plans and hands-on practice.

Is this certification useful for non-developers?

Yes! Even business analysts and managers with tech interest can benefit, especially in AI product management and consulting roles.

Can I get a job immediately after certification?

It depends on your background, but certification significantly boosts your chances of landing interviews and roles.

Does this certification expire?

Yes, typically after one year. Microsoft provides updates and renewal paths to keep your skills current.

What tools should I master for this certification?

Azure Machine Learning, Azure Cognitive Services, Azure Bot Service, and Python are key tools to learn.

What is the exam format like?

It usually consists of 40–60 questions including MCQs, case studies, and practical scenarios.

Can I do this certification online?

Yes, you can take the exam online with proctoring or at an authorized test center.

How is it different from other cloud certifications?

This certification focuses specifically on AI implementation using Azure, unlike general cloud certifications that cover infrastructure and DevOps.


How to Become a Successful Azure Data Engineer in 2025

In today’s data-driven world, businesses rely on cloud platforms to store, manage, and analyze massive amounts of information. One of the most in-demand roles in this space is that of an Azure Data Engineer. If you're someone looking to build a successful career in the cloud and data domain, Azure Data Engineering in PCMC is quickly becoming a preferred choice among aspiring professionals and fresh graduates.

This blog will walk you through everything you need to know to become a successful Azure Data Engineer in 2025—from required skills to tools, certifications, and career prospects.

Why Choose Azure for Data Engineering?

Microsoft Azure is one of the leading cloud platforms adopted by companies worldwide. With powerful services like Azure Data Factory, Azure Databricks, and Azure Synapse Analytics, it allows organizations to build scalable, secure, and automated data solutions. This creates a huge demand for trained Azure Data Engineers who can design, build, and maintain these systems efficiently.

Key Responsibilities of an Azure Data Engineer

As an Azure Data Engineer, your job is more than just writing code. You will be responsible for:

Designing and implementing data pipelines using Azure services.

Integrating various structured and unstructured data sources.

Managing data storage and security.

Enabling real-time and batch data processing.

Collaborating with data analysts, scientists, and other engineering teams.

Essential Skills to Master in 2025

To succeed as an Azure Data Engineer, you must gain expertise in the following:

1. Strong Programming Knowledge

Languages like SQL, Python, and Scala are essential for data transformation, cleaning, and automation tasks.

2. Understanding of Azure Tools

Azure Data Factory – for data orchestration and transformation.

Azure Synapse Analytics – for big data and data warehousing solutions.

Azure Databricks – for large-scale data processing using Apache Spark.

Azure Storage & Data Lake – for scalable and secure data storage.

3. Data Modeling & ETL Design

Knowing how to model databases and build ETL (Extract, Transform, Load) pipelines is fundamental for any data engineer.
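
To make the three ETL stages concrete, here is a minimal sketch in plain Python. It assumes a tiny made-up CSV (`RAW_CSV`) and an in-memory SQLite table standing in for a real source and warehouse; in an Azure setup the same stages would typically be split across services rather than live in one script.

```python
import csv
import io
import sqlite3

# Hypothetical raw input; in practice this would come from a file, API, or data lake.
RAW_CSV = """order_id,amount,country
1,19.99,in
2,,us
3,5.00,IN
"""

def extract(text):
    """Extract: parse CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: drop rows with no amount, cast types, upper-case country codes."""
    cleaned = []
    for row in rows:
        if not row["amount"]:
            continue  # skip incomplete records
        cleaned.append((int(row["order_id"]), float(row["amount"]), row["country"].upper()))
    return cleaned

def load(rows, conn):
    """Load: write the cleaned rows into a SQLite table."""
    conn.execute("CREATE TABLE IF NOT EXISTS orders (order_id INTEGER, amount REAL, country TEXT)")
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
totals = conn.execute(
    "SELECT country, ROUND(SUM(amount), 2) FROM orders GROUP BY country"
).fetchall()
print(totals)
```

Keeping each stage as its own function makes it easier to test the transform logic separately from the source and destination.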

4. Security & Compliance

Understanding Role-Based Access Control (RBAC), Data Encryption, and Data Masking is critical to ensure data integrity and privacy.
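
The idea behind role-based access and data masking can be sketched in a few lines of Python. The role names, permission strings, and helper functions below are hypothetical and exist only for illustration; real Azure RBAC works through role assignments on scopes, and masking is usually configured in the database rather than in application code.

```python
# Hypothetical role-to-permission mapping, for illustration only.
ROLE_PERMISSIONS = {
    "analyst": {"read_masked"},
    "engineer": {"read_masked", "read_raw"},
}

def mask_email(email):
    """Keep the first character and the domain, hide the rest of the local part."""
    local, _, domain = email.partition("@")
    return local[:1] + "*" * (len(local) - 1) + "@" + domain

def read_email(role, email):
    """Return the raw or masked value depending on the caller's role."""
    permissions = ROLE_PERMISSIONS.get(role, set())
    if "read_raw" in permissions:
        return email
    if "read_masked" in permissions:
        return mask_email(email)
    raise PermissionError(f"role {role!r} may not read this column")

print(read_email("engineer", "priya@example.com"))
print(read_email("analyst", "priya@example.com"))
```

The same pattern applies to other sensitive columns: the caller's role decides whether the full value, a masked value, or nothing at all is returned.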

Career Opportunities and Growth

With increasing cloud adoption, Azure Data Engineers are in high demand across all industries including finance, healthcare, retail, and IT services. Roles include:

Azure Data Engineer

Data Platform Engineer

Cloud Data Specialist

Big Data Engineer

Salaries range widely depending on skills and experience, but in cities like Pune and PCMC (Pimpri-Chinchwad), entry-level engineers can expect ₹5–7 LPA, while experienced professionals often earn ₹12–20 LPA or more.

Learning from the Right Place Matters

To truly thrive in this field, it’s essential to learn from industry experts. If you’re looking for a trusted Software training institute in Pimpri-Chinchwad, IntelliBI Innovations Technologies offers career-focused Azure Data Engineering programs. Their curriculum is tailored to help students not only understand theory but apply it through real-world projects, resume preparation, and mock interviews.

Conclusion

Azure Data Engineering is not just a job—it’s a gateway to an exciting and future-proof career. With the right skills, certifications, and hands-on experience, you can build powerful data solutions that transform businesses. And with growing opportunities in Azure Data Engineering in PCMC, now is the best time to start your journey.

Whether you’re a fresher or an IT professional looking to upskill, invest in yourself and start building a career that matters.


Master the Future: Become a Databricks Certified Generative AI Engineer

What if we told you that one certification could position you at the crossroads of AI innovation, high-paying job opportunities, and technical leadership?

That’s exactly what the Databricks Certified Generative AI Engineer certification does. As generative AI explodes across industries, skilled professionals who can bridge the gap between AI theory and real-world data solutions are in high demand. Databricks, a company at the forefront of data and AI, now offers a credential designed for those who want to lead the next wave of innovation.

If you're someone looking to validate your AI engineering skills with an in-demand, globally respected certification, keep reading. This blog will guide you through what the certification is, why it’s valuable, how to prepare effectively, and how it can launch or elevate your tech career.

Why the Databricks Certified Generative AI Engineer Certification Matters

Let’s start with the basics: why should you care about this certification?

Databricks has become synonymous with large-scale data processing, AI model deployment, and seamless ML integration across platforms. As AI continues to evolve into Generative AI, the need for professionals who can implement real-world solutions—using tools like Databricks Unity Catalog, MLflow, Apache Spark, and Lakehouse architecture—is only going to grow.

This certification tells employers that:

You can design and implement generative AI models.

You understand the complexities of data management in modern AI systems.

You know how to use Databricks tools to scale and deploy these models effectively.

For tech professionals, data scientists, ML engineers, and cloud developers, this isn't just a badge—it's a career accelerator.

Who Should Pursue This Certification?

The Databricks Certified Generative AI Engineer path is for:

Data Scientists & Machine Learning Engineers who want to shift into more cutting-edge roles.

Cloud Developers working with AI pipelines in enterprise environments.

AI Enthusiasts and Researchers ready to demonstrate their applied knowledge.

Professionals preparing for AI roles at companies using Databricks, Azure, AWS, or Google Cloud.

If you’re familiar with Python, machine learning fundamentals, and basic model deployment workflows, you’re ready to get started.

What You'll Learn: Core Skills Covered

The exam and its preparation cover a broad but practical set of topics:

🧠 1. Foundation of Generative AI

What is generative AI?

How do models like GPT, DALL·E, and Stable Diffusion actually work?

Introduction to transformer architectures and tokenization.
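
For a feel of what tokenization and attention mean in practice, here is a toy sketch in plain Python. It assumes whitespace tokenization and a made-up two-dimensional embedding table (`EMBEDDINGS`); real models use subword tokenizers and learned query, key, and value projections, which are left out here.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    dim = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(dimension).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim) for k in keys]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs

# Toy tokenization (whitespace split) and a hypothetical embedding table.
EMBEDDINGS = {"data": [1.0, 0.0], "lake": [0.0, 1.0], "house": [1.0, 1.0]}
tokens = "data lake house".split()
vectors = [EMBEDDINGS[t] for t in tokens]
contextual = attention(vectors, vectors, vectors)
print(contextual)
```

Each output vector is a blend of all the input vectors, weighted by how similar each token is to the one being processed. That weighting is the core idea the exam topics build on.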

📊 2. Databricks Ecosystem

Using Databricks notebooks and workflows

Unity Catalog for data governance and model security

Integrating MLflow for reproducibility and experiment tracking

🔁 3. Model Training & Tuning

Fine-tuning foundation models on your data

Optimizing training with distributed computing

Managing costs and resource allocation

⚙️ 4. Deployment & Monitoring

Creating real-time endpoints

Model versioning and rollback strategies

Using MLflow’s model registry for lifecycle tracking
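
Versioning and rollback are easier to reason about with a small model in mind. The class below is a toy in-memory registry written for this post; it is not MLflow's API, and the artifact names are placeholders. It only illustrates the bookkeeping a real registry does for you.

```python
class ModelRegistry:
    """Toy in-memory registry; not MLflow's API, just the versioning idea."""

    def __init__(self):
        self.versions = []      # artifacts in registration order; index + 1 is the version
        self.production = None  # version currently serving traffic
        self.history = []       # previously promoted versions, newest last

    def register(self, artifact):
        """Store a new artifact and return its version number."""
        self.versions.append(artifact)
        return len(self.versions)

    def promote(self, version):
        """Make a registered version the production one, remembering the old one."""
        if not 1 <= version <= len(self.versions):
            raise ValueError(f"unknown version {version}")
        if self.production is not None:
            self.history.append(self.production)
        self.production = version

    def rollback(self):
        """Return to the most recently replaced production version."""
        if not self.history:
            raise RuntimeError("no earlier production version to roll back to")
        self.production = self.history.pop()
        return self.production

registry = ModelRegistry()
v1 = registry.register("summarizer-weights-a")  # hypothetical artifact names
v2 = registry.register("summarizer-weights-b")
registry.promote(v1)
registry.promote(v2)
restored = registry.rollback()
print(restored, registry.production)
```

The key design point is that promotion never deletes anything: old versions stay registered, so rolling back is only a pointer change.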

🔐 5. Responsible AI & Ethics

Bias detection and mitigation

Privacy-preserving machine learning

Explainability and fairness

Each of these topics is deeply embedded in the exam and reflects current best practices in the industry.

Why Databricks Is Leading the AI Charge

Databricks isn’t just a platform—it’s a movement. With its Lakehouse architecture, the company bridges the gap between data warehouses and data lakes, providing a unified platform to manage and deploy AI solutions.

Databricks is already trusted by organizations like:

Comcast

Shell

HSBC

Regeneron Pharmaceuticals

So, when you add a Databricks Certified Generative AI Engineer credential to your profile, you’re aligning yourself with the tools and platforms that Fortune 500 companies rely on.

What’s the Exam Format?

Here’s what to expect:

Multiple choice and scenario-based questions

90 minutes total

Around 60 questions

Online proctored format

You’ll be tested on:

Generative AI fundamentals

Databricks-specific tools

Model development, deployment, and monitoring

Data handling in an AI lifecycle

How to Prepare: Your Study Blueprint

Passing this certification isn’t about memorizing definitions. It’s about understanding workflows, being able to apply best practices, and showing proficiency in a Databricks-native AI environment.

Step 1: Enroll in a Solid Practice Course

The most effective way to prepare is to take mock tests and get hands-on experience. We recommend enrolling in the Databricks Certified Generative AI Engineer practice test course, which gives you access to realistic exam-style questions, explanations, and performance feedback.

Step 2: Set Up a Databricks Workspace

If you don’t already have one, create a free Databricks Community Edition workspace. Explore notebooks, work with data in Delta Lake, and train a simple model while tracking the run with MLflow.

Step 3: Focus on the Databricks Stack

Make sure you’re confident using:

Databricks Notebooks

MLflow

Unity Catalog

Model Serving

Feature Store

Step 4: Review Key AI Concepts

Brush up on:

Transformer models and attention mechanisms

Fine-tuning vs. prompt engineering

Transfer learning

Generative model evaluation metrics (BLEU, ROUGE, etc.)
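
To see what overlap metrics like BLEU and ROUGE measure, here is a simplified sketch in plain Python. It computes only clipped unigram precision (the building block of BLEU-1, without the brevity penalty) and unigram recall (the idea behind ROUGE-1). The function name and example sentences are made up, and production work would normally use an established evaluation library.

```python
from collections import Counter

def unigram_overlap(candidate, reference):
    """Return (precision, recall) of clipped word overlap between two texts."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    # Counter intersection keeps the minimum count per word, i.e. clipped matches.
    overlap = sum((cand & ref).values())
    precision = overlap / max(sum(cand.values()), 1)  # share of generated words that match
    recall = overlap / max(sum(ref.values()), 1)      # share of reference words recovered
    return precision, recall

precision, recall = unigram_overlap(
    "the cat sat on the mat",
    "the cat is on the mat",
)
print(round(precision, 3), round(recall, 3))
```

Precision penalizes extra words the model invented, while recall penalizes reference content the model left out, which is why summarization work tends to report ROUGE and translation work tends to report BLEU.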

What Makes This Certification Unique?

Unlike many AI certifications that stay theoretical, this one is deeply practical. You’ll not only learn what generative AI is but also how to build and manage it in production.

Here are three reasons this stands out:

✅ 1. Real-world Integration

You’ll learn deployment, version control, and monitoring—which is what companies care about most.

✅ 2. Based on Industry-Proven Tools

Everything is built on top of Databricks, Apache Spark, and MLflow, used by data teams globally.

✅ 3. Focus on Modern AI Workflows

This certification keeps pace with the rapid evolution of AI—especially around LLMs (Large Language Models), prompt engineering, and GenAI use cases.

How It Benefits Your Career

Once certified, you’ll be well-positioned to:

Land roles like AI Engineer, ML Engineer, or Data Scientist in leading tech firms.

Negotiate a higher salary thanks to your verified skills.

Work on cutting-edge projects in AI, including enterprise chatbots, text summarization, image generation, and more.

Stand out in competitive job markets with a Databricks-backed credential on your LinkedIn.

According to recent industry trends, professionals with AI certifications earn an average of 20-30% more than those without.

Use Cases You’ll Be Ready to Tackle

After completing the course and passing the exam, you’ll be able to confidently work on:

Enterprise chatbots using foundation models

Real-time content moderation

AI-driven customer service agents

Medical imaging enhancement

Financial fraud detection using pattern generation

The scope is broad—and the possibilities are endless.

Don’t Just Study—Practice

It’s tempting to dive into study guides or YouTube videos, but what really works is practice. The Databricks Certified Generative AI Engineer practice course offers exam-style challenges that simulate the pressure and format of the real exam.

You’ll learn by doing—and that makes all the difference.

Final Thoughts: The Time to Act Is Now

Generative AI isn’t the future anymore—it’s the present. Companies across every sector are racing to integrate it. The question is:

Will you be ready to lead that charge?

If your goal is to become an in-demand AI expert with practical, validated skills, earning the Databricks Certified Generative AI Engineer credential is the move to make.

Start today. Equip yourself with the skills the industry is hungry for. Stand out. Level up.

👉 Enroll in the Databricks Certified Generative AI Engineer practice course now and take control of your AI journey.


Master the Machines: Learn Machine Learning with Ascendient Learning

Why Machine Learning Skills Are in High Demand

Machine learning is at the core of nearly every innovation in technology today. From personalized product recommendations and fraud detection to predictive maintenance and self-driving cars, businesses rely on machine learning to gain insights, optimize performance, and make smarter decisions. As organizations generate more data than ever before, the demand for professionals who can design, train, and deploy machine learning models is rising rapidly across industries.

Ascendient Learning: The Smartest Path to ML Expertise

Ascendient Learning is a trusted provider of machine learning training, offering courses developed in partnership with top vendors like AWS, IBM, Microsoft, Google Cloud, NVIDIA, and Databricks. With access to official courseware, experienced instructors, and flexible learning formats, Ascendient equips individuals and teams with the skills needed to turn data into action.

Courses are available in live virtual classrooms, in-person sessions, and self-paced formats. Learners benefit from hands-on labs, real-world case studies, and post-class support that reinforces what they’ve learned. Whether you’re a data scientist, software engineer, analyst, or IT manager, Ascendient has a training path that fits your role and future goals.

Training That Matches Real-World Applications

Ascendient Learning’s machine learning curriculum spans from foundational concepts to advanced implementation techniques. Beginners can start with introductory courses like Machine Learning on Google Cloud, Introduction to AI and ML, or Practical Data Science and Machine Learning with Python. These courses provide a strong base in algorithms, supervised and unsupervised learning, and model evaluation.

For more advanced learners, courses such as Advanced Machine Learning, Generative AI Engineering with Databricks, and Machine Learning with Apache Spark offer in-depth training on building scalable ML solutions and integrating them into cloud environments. Students can explore technologies like TensorFlow, Scikit-learn, PyTorch, and tools such as Amazon SageMaker and IBM Watson Studio.

Gain Skills That Translate into Real Impact

Machine learning isn’t just a buzzword. It's transforming the way organizations work. With the right training, professionals can improve business forecasting, automate time-consuming processes, and uncover patterns that would be impossible to detect manually.

In sectors like healthcare, ML helps identify treatment risks and recommend care paths. In retail, it powers dynamic pricing and customer segmentation. In manufacturing, it predicts equipment failure before it happens. Professionals who can harness machine learning contribute directly to innovation, efficiency, and growth.

Certification Paths That Build Career Momentum

Ascendient Learning’s machine learning training is also aligned with certification goals from AWS, IBM, Google Cloud, and Microsoft. Certifications such as AWS Certified Machine Learning – Specialty, Microsoft Azure AI Engineer Associate, and Google Cloud Certified – Professional ML Engineer validate your skills and demonstrate your readiness to lead AI initiatives.

Certified professionals often enjoy increased job opportunities, higher salaries, and greater credibility within their organizations. Ascendient supports this journey by offering prep materials, expert guidance, and access to labs even after the course ends.

Machine Learning with Ascendient

Machine learning is shaping the future of work, and those with the skills to understand and apply it will lead the change. Ascendient Learning offers a clear, flexible, and expert-led path to help you develop those skills, earn certifications, and make an impact in your career and organization.

Explore Ascendient Learning’s machine learning course catalog today. Discover the training that can turn your curiosity into capability and your ideas into innovation.

For more information visit: https://www.ascendientlearning.com/it-training/topics/ai-and-machine-learning


How Azure Supports Big Data and Real-Time Data Processing

The explosion of digital data in recent years has pushed organizations to look for platforms that can handle massive datasets and real-time data streams efficiently. Microsoft Azure has emerged as a front-runner in this domain, offering robust services for big data analytics and real-time processing. Professionals looking to master this platform often pursue the Azure Data Engineering Certification, which helps them understand and implement data solutions that are both scalable and secure.

Azure not only offers storage and computing solutions but also integrates tools for ingestion, transformation, analytics, and visualization—making it a comprehensive platform for big data and real-time use cases.

Azure’s Approach to Big Data

Big data refers to extremely large datasets that cannot be processed using traditional data processing tools. Azure offers multiple services to manage, process, and analyze big data in a cost-effective and scalable manner.

1. Azure Data Lake Storage

Azure Data Lake Storage (ADLS) is designed specifically to handle massive amounts of structured and unstructured data. It supports high throughput and can manage petabytes of data efficiently. ADLS works seamlessly with analytics tools like Azure Synapse and Azure Databricks, making it a central storage hub for big data projects.

2. Azure Synapse Analytics

Azure Synapse combines big data and data warehousing capabilities into a single unified experience. It allows users to run complex SQL queries on large datasets and integrates with Apache Spark for more advanced analytics and machine learning workflows.

3. Azure Databricks

Built on Apache Spark, Azure Databricks provides a collaborative environment for data engineers and data scientists. It’s optimized for big data pipelines, allowing users to ingest, clean, and analyze data at scale.

Real-Time Data Processing on Azure

Real-time data processing allows businesses to make decisions instantly based on current data. Azure supports real-time analytics through a range of powerful services:

1. Azure Stream Analytics

This fully managed service processes real-time data streams from devices, sensors, applications, and social media. You can write SQL-like queries to analyze the data in real time and push results to dashboards or storage solutions.
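
A common pattern in streaming queries is the tumbling window: fixed, non-overlapping time buckets over which events are aggregated. The Python below is only an analogy for what such a query computes, not Stream Analytics query syntax, and the sensor names and readings are invented.

```python
from collections import defaultdict

def tumbling_window_avg(events, window_seconds):
    """Group (timestamp, device, value) events into fixed windows and average per device."""
    buckets = defaultdict(list)
    for ts, device, value in events:
        window_start = ts - (ts % window_seconds)  # start of the window this event falls in
        buckets[(window_start, device)].append(value)
    return {key: sum(vals) / len(vals) for key, vals in sorted(buckets.items())}

# Hypothetical sensor readings: (seconds since start, device id, temperature)
events = [
    (0, "sensor-a", 20.0),
    (4, "sensor-a", 22.0),
    (9, "sensor-b", 30.0),
    (11, "sensor-a", 26.0),
]
averages = tumbling_window_avg(events, window_seconds=10)
print(averages)
```

Every event lands in exactly one window, which makes tumbling windows a natural fit for dashboards that refresh on a fixed interval.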

2. Azure Event Hubs

Event Hubs can ingest millions of events per second, making it ideal for real-time analytics pipelines. It acts as a front-door for event streaming and integrates with Stream Analytics, Azure Functions, and Apache Kafka.

3. Azure IoT Hub

For businesses working with IoT devices, Azure IoT Hub enables the secure transmission and real-time analysis of data from edge devices to the cloud. It supports bi-directional communication and can trigger workflows based on event data.

Integration and Automation Tools

Azure ensures seamless integration between services for both batch and real-time processing. Tools like Azure Data Factory and Logic Apps help automate the flow of data across the platform.

Azure Data Factory: Ideal for building ETL (Extract, Transform, Load) pipelines. It moves data from sources like SQL, Blob Storage, or even on-prem systems into processing tools like Synapse or Databricks.

Logic Apps: Allows you to automate workflows across Azure services and third-party platforms. You can create triggers based on real-time events, reducing manual intervention.

Security and Compliance in Big Data Handling

Handling big data and real-time processing comes with its share of risks, especially concerning data privacy and compliance. Azure addresses this by providing:

Data encryption at rest and in transit

Role-based access control (RBAC)

Private endpoints and network security

Compliance with standards like GDPR, HIPAA, and ISO

These features ensure that organizations can maintain the integrity and confidentiality of their data, no matter the scale.

Career Opportunities in Azure Data Engineering

With Azure’s growing dominance in cloud computing and big data, the demand for skilled professionals is at an all-time high. Those holding an Azure Data Engineering Certification are well-positioned to take advantage of job roles such as:

Azure Data Engineer

Cloud Solutions Architect

Big Data Analyst

Real-Time Data Engineer

IoT Data Specialist

The certification equips individuals with knowledge of Azure services, big data tools, and data pipeline architecture—all essential for modern data roles.

Final Thoughts

Azure offers an end-to-end ecosystem for both big data analytics and real-time data processing. Whether it’s massive historical datasets or fast-moving event streams, Azure provides scalable, secure, and integrated tools to manage them all.

Pursuing an Azure Data Engineering Certification is a great step for anyone looking to work with cutting-edge cloud technologies in today’s data-driven world. By mastering Azure’s powerful toolset, professionals can design data solutions that are future-ready and impactful.

#Azure#BigData#RealTimeAnalytics#AzureDataEngineer#DataLake#StreamAnalytics#CloudComputing#AzureSynapse#IoTHub#Databricks#CloudZone#AzureCertification#DataPipeline#DataEngineering


Data Science Tutorial for 2025: Tools, Trends, and Techniques

Data science continues to be one of the most dynamic and high-impact fields in technology, with new tools and methodologies evolving rapidly. As we enter 2025, data science is more than just crunching numbers—it's about building intelligent systems, automating decision-making, and unlocking insights from complex data at scale.

Whether you're a beginner or a working professional looking to sharpen your skills, this tutorial will guide you through the essential tools, the latest trends, and the most effective techniques shaping data science in 2025.

What is Data Science?

At its core, data science is the interdisciplinary field that combines statistics, computer science, and domain expertise to extract meaningful insights from structured and unstructured data. It involves collecting data, cleaning and processing it, analyzing patterns, and building predictive or explanatory models.

Data scientists are problem-solvers, storytellers, and innovators. Their work influences business strategies, public policy, healthcare solutions, and even climate models.

Essential Tools for Data Science in 2025

The data science toolkit has matured significantly, with tools becoming more powerful, user-friendly, and integrated with AI. Here are the must-know tools for 2025:

1. Python 3.12+

Python remains the most widely used language in data science due to its simplicity and vast ecosystem. In 2025, the latest Python versions offer faster performance and better support for concurrency—making large-scale data operations smoother.

Popular Libraries:

Pandas: For data manipulation

NumPy: For numerical computing

Matplotlib / Seaborn / Plotly: For data visualization

Scikit-learn: For traditional machine learning

XGBoost / LightGBM: For gradient boosting models

2. JupyterLab

The evolution of the classic Jupyter Notebook, JupyterLab, is now the default environment for exploratory data analysis, allowing a modular, tabbed interface with support for terminals, text editors, and rich output.

3. Apache Spark with PySpark

Handling massive datasets? PySpark, the Python API for Apache Spark, is ideal for distributed data processing across clusters. It runs natively on Databricks, and a Spark connector lets jobs read from and write to Snowflake.

4. Cloud Platforms (AWS, Azure, Google Cloud)

In 2025, most data science workloads run on the cloud. Services like Amazon SageMaker, Azure Machine Learning, and Google Vertex AI simplify model training, deployment, and monitoring.

5. AutoML & No-Code Tools

Tools like DataRobot, Google AutoML, and H2O.ai now offer drag-and-drop model building and optimization. These are powerful for non-coders and help accelerate workflows for pros.

Top Data Science Trends in 2025

1. Generative AI for Data Science

With the rise of large language models (LLMs), generative AI now assists data scientists in code generation, data exploration, and feature engineering. Tools like OpenAI's ChatGPT and GitHub Copilot help automate repetitive tasks.

2. Data-Centric AI

Rather than obsessing over model architecture, 2025’s best practices focus on improving the quality of data—through labeling, augmentation, and domain understanding. Clean data beats complex models.

3. MLOps Maturity

MLOps—machine learning operations—is no longer optional. In 2025, companies treat ML models like software, with versioning, monitoring, CI/CD pipelines, and reproducibility built-in from the start.

4. Explainable AI (XAI)

As AI impacts sensitive areas like finance and healthcare, transparency is crucial. Tools like SHAP, LIME, and InterpretML help data scientists explain model predictions to stakeholders and regulators.

5. Edge Data Science

With IoT devices and on-device AI becoming the norm, edge computing allows models to run in real-time on smartphones, sensors, and drones—opening new use cases from agriculture to autonomous vehicles.

Core Techniques Every Data Scientist Should Know in 2025

Whether you’re starting out or upskilling, mastering these foundational techniques is critical:

1. Data Wrangling

Before any analysis begins, data must be cleaned and reshaped. Techniques include:

Handling missing values

Normalization and standardization

Encoding categorical variables

Time series transformation
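
The first three techniques in this list can be sketched with nothing but the standard library. The helper names and sample values below are made up, and in day-to-day work Pandas or Scikit-learn would handle this. Note that both scaling helpers assume the column is not constant, otherwise they would divide by zero.

```python
import statistics

def fill_missing(values):
    """Replace None with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    mean = statistics.mean(observed)
    return [mean if v is None else v for v in values]

def min_max(values):
    """Normalization: rescale to the range [0, 1] (assumes max > min)."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]

def z_score(values):
    """Standardization: zero mean, unit population standard deviation."""
    mean, std = statistics.mean(values), statistics.pstdev(values)
    return [(v - mean) / std for v in values]

def one_hot(labels):
    """Encode each label as a 0/1 vector over the sorted set of categories."""
    categories = sorted(set(labels))
    return [[1 if label == c else 0 for c in categories] for label in labels]

ages = fill_missing([20, None, 40])
scaled = min_max(ages)
standardized = z_score(ages)
encoded = one_hot(["red", "blue", "red"])
print(ages, scaled, encoded)
```

Mean imputation is the simplest choice here. Depending on the data, the median or a model-based fill may be more appropriate.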

2. Exploratory Data Analysis (EDA)

EDA is about understanding your dataset through visualization and summary statistics. Use histograms, scatter plots, correlation heatmaps, and boxplots to uncover trends and outliers.
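
Before reaching for charts, a few summary numbers already reveal a lot. This sketch uses only the standard library and a small made-up dataset (`spend`, `visits`); the helper names are illustrative.

```python
import math
import statistics

def summarize(values):
    """Basic summary statistics for one numeric column."""
    return {
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "stdev": statistics.stdev(values),
        "min": min(values),
        "max": max(values),
    }

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length columns."""
    mx, my = statistics.mean(xs), statistics.mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

# Hypothetical data: the last spend value is an obvious outlier.
spend = [10, 20, 30, 40, 500]
visits = [1, 2, 3, 4, 5]
summary = summarize(spend)
r = pearson(visits, spend)
print(summary, round(r, 3))
```

A mean far above the median, as in this sample, is a quick hint that the column is skewed or contains outliers worth plotting.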

3. Machine Learning Basics

Classification (e.g., predicting if a customer will churn)

Regression (e.g., predicting house prices)

Clustering (e.g., customer segmentation)

Dimensionality Reduction (e.g., PCA, t-SNE for visualization)

4. Deep Learning (Optional but Useful)

If you're working with images, text, or audio, deep learning with TensorFlow, PyTorch, or Keras can be invaluable. Hugging Face’s Transformers library makes it easier than ever to work with large models.

5. Model Evaluation

Learn how to assess model performance with:

Accuracy, Precision, Recall, F1 Score

ROC Curve and AUC

Cross-validation

Confusion Matrix
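
The first and last items in this list are closely related: accuracy, precision, recall, and F1 all fall out of the four confusion-matrix counts. Here is a minimal sketch for binary labels, using invented predictions. Scikit-learn offers equivalents, but writing them out once makes the definitions stick.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (tp, fp, fn, tn) for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    return tp, fp, fn, tn

def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 derived from the confusion counts."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(y_true),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }

# Hypothetical churn labels: 1 = churned, 0 = stayed
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
counts = confusion_counts(y_true, y_pred)
metrics = classification_metrics(y_true, y_pred)
print(counts, metrics)
```

On imbalanced data, accuracy alone can look good while recall is poor, which is why the list pairs it with precision, recall, and F1.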

Final Thoughts

As we move deeper into 2025, data science continues to be an exciting blend of math, coding, and real-world impact. Whether you're analyzing customer behavior, improving healthcare diagnostics, or predicting financial markets, your toolkit and mindset will be your most valuable assets.

Start by learning the fundamentals, keep experimenting with new tools, and stay updated with emerging trends. The best data scientists aren’t just great with code—they’re lifelong learners who turn data into decisions.


Partner with a Leading Data Analytics Consulting Firm for Business Innovation and Growth

Partnering with a leading data analytics consulting firm like Dataplatr empowers organizations to turn complex data into strategic assets that drive innovation and business growth. At Dataplatr, we offer end-to-end data analytics consulting services customized to meet the needs of enterprises and small businesses alike. Whether you're aiming to enhance operational efficiency, personalize customer experiences, or optimize supply chains, our team of experts delivers actionable insights backed by cutting-edge technologies and proven methodologies.

Comprehensive Data Analytics Consulting Services

At Dataplatr, we offer a full spectrum of data analytics consulting services, including:

Data Engineering: Designing and implementing robust data architectures that ensure seamless data flow across your organization.

Data Analytics: Utilizing advanced analytical techniques to extract meaningful insights from your data, facilitating data-driven strategies.

Data Visualization: Creating intuitive dashboards and reports that present complex data in an accessible and actionable format.

Artificial Intelligence: Integrating AI solutions to automate processes and enhance predictive analytics capabilities.

Data Analytics Consulting for Small Businesses

Understanding the challenges faced by small and mid-sized enterprises, Dataplatr offers data analytics consulting solutions for small businesses that are:

Scalable: Designed to grow with your business, ensuring long-term value.

Cost-Effective: Providing high-quality services that fit within your budget constraints.

User-Friendly: Implementing tools and platforms that are easy to use, ensuring quick adoption and minimal disruption.

Strategic Partnerships for Enhanced Data Solutions

Dataplatr has established strategic partnerships with leading technology platforms to enhance our service offerings:

Omni: Combining Dataplatr’s data engineering expertise with Omni’s business intelligence platform enables instant data exploration without high modeling costs, providing a foundation for actionable insights.

Databricks: Our collaboration with Databricks draws on its AI capabilities and data governance features, using the lakehouse architecture to bring better performance and scalability to data warehousing.

Looker: Partnering with Looker allows us to deliver advanced analytics capabilities, ensuring clients can realize the full potential of their data assets.

Why Choose Dataplatr?

Dataplatr stands out as a trusted data analytics consulting firm due to its deep expertise, personalized approach, and commitment to innovation. Our team of seasoned data scientists and analytics professionals brings extensive cross-industry experience to every engagement, ensuring that clients benefit from proven knowledge and cutting-edge practices. We recognize that every business has unique challenges and goals, which is why our solutions are always customized to align with your specific needs. Moreover, we continuously stay ahead of technological trends, allowing us to deliver innovative data strategies that drive measurable results and long-term success. Explore how Dataplatr's data strategy consulting services can address your specific business needs.


How Helical IT Solutions Enhances Your Databricks Experience with Professional Consulting

In today’s data-driven world, businesses are increasingly turning to powerful platforms like Databricks to manage and analyse vast amounts of data. However, making the most of Databricks requires more than just signing up for the platform—it demands expertise, optimization, and proper implementation. This is where Databricks consulting services come in. Helical IT Solutions offers professional Databricks consulting that can help businesses harness the full potential of Databricks and turn data into actionable insights.

Unleashing the Potential of Databricks through Helical IT Solutions

Databricks is a cloud-based data and AI platform built on Apache Spark that provides businesses with scalable, high-performance solutions for big data analytics. With Databricks, organizations can build and manage data pipelines, run machine learning models, and collaborate on analytics. However, without the right expertise, businesses might not fully capitalize on its capabilities.

Helical IT Solutions specializes in Databricks consulting services to ensure that organizations not only deploy the platform but also use it to its fullest potential. From initial setup to advanced analytics and machine learning, their expert consultants guide businesses through every phase of the Databricks journey.

Benefits of Choosing Helical IT Solutions for Databricks Consulting

Expertise in Databricks Implementation: Helical IT Solutions’ Databricks consultants have a deep understanding of the platform’s architecture, capabilities, and best practices. They work closely with your team to design a Databricks infrastructure that suits your business needs. Whether you're a startup or an enterprise, they provide tailored solutions that optimize your Databricks environment for seamless operation.

Optimization of Data Pipelines: Databricks offers robust tools for data engineering and analytics, but its true power lies in how data pipelines are built and managed. Helical IT Solutions excels in optimizing these pipelines, ensuring they run efficiently and can scale as your data grows. Their consultants ensure that data flows smoothly through the system and is readily available for analysis and decision-making.

Advanced Analytics and Machine Learning: One of Databricks’ key features is its ability to run advanced analytics and machine learning models on large datasets. Helical IT Solutions brings expertise in data science and machine learning, helping businesses create predictive models, perform sentiment analysis, or uncover hidden patterns within their data. Their consultants guide you through the process of implementing ML models on Databricks, from data pre-processing to model deployment.

Enhanced Collaboration and Data Sharing: Databricks fosters collaboration by providing an integrated workspace for data engineers, scientists, and analysts. Helical IT Solutions leverages this feature to enhance cross-functional collaboration within your organization. They assist in setting up shared workspaces, making it easier for teams to access, share, and collaborate on data and analytics projects. This results in faster insights and better decision-making.

Continuous Support and Training: Databricks is a powerful platform, but it can be complex for teams without the proper experience. Helical IT Solutions not only helps with the initial setup and configuration but also provides ongoing support and training. Their consultants ensure that your team is well-equipped to use Databricks effectively, offering guidance on best practices, troubleshooting, and even custom training sessions to upskill your employees.

Why Choose Helical IT Solutions?

Choosing Helical IT Solutions for your Databricks consulting needs offers several advantages. They offer a personalized approach, working closely with your business to understand its unique data challenges and tailoring solutions accordingly. Their team has extensive experience in both Databricks consulting services and the broader landscape of data engineering, allowing them to provide end-to-end solutions.

Additionally, with Helical IT Solutions’ consulting services, you can achieve better performance, scalability, and cost efficiency from your Databricks implementation. Their expert consultants ensure that your Databricks environment is fully optimized to meet your business goals, delivering actionable insights faster and more effectively.

Conclusion

Databricks is a powerful tool for businesses looking to harness the power of big data, but it requires expertise to truly unlock its potential. Helical IT Solutions’ Databricks consulting services provide the knowledge and experience needed to optimize your Databricks environment for success. Whether you're looking to build efficient data pipelines, implement advanced analytics, or create machine learning models, their expert consultants can help guide you every step of the way, ensuring that your Databricks experience is smooth, scalable, and impactful.


Kadel Labs: Leading the Way as Databricks Consulting Partners

Introduction

In today’s data-driven world, businesses are constantly seeking efficient ways to harness the power of big data. As organizations generate vast amounts of structured and unstructured data, they need advanced tools and expert guidance to extract meaningful insights. This is where Kadel Labs, a leading technology solutions provider, steps in. As Databricks Consulting Partners, Kadel Labs specializes in helping businesses leverage the Databricks Lakehouse platform to unlock the full potential of their data.

Understanding Databricks and the Lakehouse Architecture

Before diving into how Kadel Labs can help businesses maximize their data potential, it’s crucial to understand Databricks and its revolutionary Lakehouse architecture.

Databricks is an open, unified platform designed for data engineering, machine learning, and analytics. It combines the best of data warehouses and data lakes, allowing businesses to store, process, and analyze massive datasets with ease. The Databricks Lakehouse model integrates the reliability of a data warehouse with the scalability of a data lake, enabling businesses to maintain structured and unstructured data efficiently.

Key Features of Databricks Lakehouse

Unified Data Management – Combines structured and unstructured data storage.

Scalability and Flexibility – Handles large-scale datasets with optimized performance.

Cost Efficiency – Reduces data redundancy and lowers storage costs.

Advanced Security – Ensures governance and compliance for sensitive data.

Machine Learning Capabilities – Supports AI and ML workflows seamlessly.

Why Businesses Need Databricks Consulting Partners

While Databricks offers powerful tools, implementing and managing its solutions requires deep expertise. Many organizations struggle with:

Migrating data from legacy systems to Databricks Lakehouse.

Optimizing data pipelines for real-time analytics.

Ensuring security, compliance, and governance.

Leveraging machine learning and AI for business growth.

This is where Kadel Labs, as an experienced Databricks Consulting Partner, helps businesses seamlessly adopt and optimize Databricks solutions.

Kadel Labs: Your Trusted Databricks Consulting Partner

Expertise in Databricks Implementation

Kadel Labs specializes in helping businesses integrate the Databricks Lakehouse platform into their existing data infrastructure. With a team of highly skilled engineers and data scientists, Kadel Labs provides end-to-end consulting services, including:

Databricks Implementation & Setup – Deploying Databricks on AWS, Azure, or Google Cloud.

Data Pipeline Development – Automating data ingestion, transformation, and analysis.

Machine Learning Model Deployment – Utilizing MLflow on Databricks for AI-driven decision-making.

Data Governance & Compliance – Implementing best practices for security and regulatory compliance.
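The "ingestion, transformation, and analysis" stages listed above can be sketched in a few lines. This is only an illustration in plain Python, not Kadel Labs' implementation and not Databricks code (on Databricks these stages would typically run on Spark). The sample data and the function names `ingest`, `transform`, and `analyze` are assumptions made for the example.

```python
import csv
import io
from collections import defaultdict

# Hypothetical raw input; in practice this would come from files or a stream.
RAW_CSV = """order_id,region,amount
1,north,100.50
2,south,
3,north,49.50
4,south,200.00
"""


def ingest(text):
    """Ingestion: parse raw CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transformation: drop rows with a missing amount and cast types."""
    cleaned = []
    for row in rows:
        if not row["amount"]:
            continue  # skip incomplete records
        cleaned.append(
            {
                "order_id": int(row["order_id"]),
                "region": row["region"],
                "amount": float(row["amount"]),
            }
        )
    return cleaned


def analyze(rows):
    """Analysis: total amount per region."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["region"]] += row["amount"]
    return dict(totals)


totals = analyze(transform(ingest(RAW_CSV)))
```

Keeping each stage as a separate function makes it possible to test and rerun the stages independently, which is the main point of automating a pipeline.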

Custom Solutions for Every Business

Kadel Labs understands that every business has unique data needs. Whether a company is in finance, healthcare, retail, or manufacturing, Kadel Labs designs tailor-made solutions to address specific challenges.

Use Case 1: Finance & Banking

A leading financial institution faced challenges with real-time fraud detection. By implementing Databricks Lakehouse, Kadel Labs helped the company process vast amounts of transaction data, enabling real-time anomaly detection and fraud prevention.
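The post does not say which detection method was used. As a rough illustration of what "anomaly detection" on transaction data can mean, the sketch below scores a new transaction amount against a customer's recent history using a z-score. The function name `is_anomalous`, the threshold, and the sample amounts are all assumptions for the example; a production fraud system would use far richer features and models.

```python
from statistics import mean, stdev


def is_anomalous(history, amount, threshold=3.0):
    """Return True if `amount` lies more than `threshold` sample standard
    deviations away from the mean of `history`."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # All past amounts are identical; any different amount stands out.
        return amount != mu
    return abs(amount - mu) / sigma > threshold


# Hypothetical recent transaction amounts for one customer.
history = [20.0, 22.5, 19.0, 21.0, 23.0, 20.5, 18.5, 22.0, 21.5]

large_purchase_flagged = is_anomalous(history, 5000.0)
typical_purchase_flagged = is_anomalous(history, 21.0)
```

Scoring each new value against prior history, rather than against a batch that already contains it, keeps a single extreme value from inflating the standard deviation and hiding itself.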

Use Case 2: Healthcare & Life Sciences

A healthcare provider needed to consolidate patient data from multiple sources. Kadel Labs implemented Databricks Lakehouse, enabling seamless integration of electronic health records (EHRs), genomic data, and medical imaging, improving patient care and operational efficiency.

Use Case 3: Retail & E-commerce

A retail giant wanted to personalize customer experiences using AI. Using Databricks, Kadel Labs built a recommendation engine that analyzed customer behavior, leading to a 25% increase in sales.

Migration to Databricks Lakehouse

Many organizations still rely on traditional data warehouses and Hadoop-based ecosystems. Kadel Labs assists businesses in migrating from legacy systems to Databricks Lakehouse, ensuring minimal downtime and optimal performance.

Migration Services Include:

Assessing current data architecture and identifying challenges.

Planning a phased migration strategy.

Executing a seamless transition with data integrity checks.

Training teams to effectively utilize Databricks.
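One way to picture the "data integrity checks" step above is a comparison of row counts and per-row fingerprints between the legacy table and the migrated one. The sketch below is a minimal example under stated assumptions: rows from both systems are available as lists of dictionaries, and the names `row_fingerprint` and `compare_tables` are hypothetical. It does not describe Kadel Labs' actual procedure.

```python
import hashlib
import json
from collections import Counter


def row_fingerprint(row):
    """Return a stable SHA-256 hash of one row (a dict of column -> value)."""
    # sort_keys makes the hash independent of column order.
    canonical = json.dumps(row, sort_keys=True, default=str)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def compare_tables(source_rows, target_rows):
    """Compare two row collections, ignoring row order."""
    source_hashes = Counter(row_fingerprint(r) for r in source_rows)
    target_hashes = Counter(row_fingerprint(r) for r in target_rows)
    missing = source_hashes - target_hashes      # in source, not in target
    unexpected = target_hashes - source_hashes   # in target, not in source
    return {
        "source_count": sum(source_hashes.values()),
        "target_count": sum(target_hashes.values()),
        "missing_in_target": sum(missing.values()),
        "unexpected_in_target": sum(unexpected.values()),
        "match": not missing and not unexpected,
    }


# Hypothetical sample rows; the migrated copy differs only in ordering.
legacy = [{"id": 1, "amount": 10.5}, {"id": 2, "amount": 7.0}]
migrated = [{"amount": 7.0, "id": 2}, {"id": 1, "amount": 10.5}]
report = compare_tables(legacy, migrated)
```

Using a `Counter` rather than a set means duplicated rows are counted too, so a row that was accidentally loaded twice shows up as unexpected in the target.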

Enhancing Business Intelligence with Kadel Labs

By combining the power of Databricks Lakehouse with BI tools like Power BI, Tableau, and Looker, Kadel Labs enables businesses to gain deep insights from their data.

Key Benefits:

Real-time data visualization for faster decision-making.

Predictive analytics for future trend forecasting.

Seamless data integration with cloud and on-premises solutions.

Future-Proofing Businesses with Kadel Labs

As data landscapes evolve, Kadel Labs continuously innovates to stay ahead of industry trends. Some emerging areas where Kadel Labs is making an impact include:

Edge AI & IoT Data Processing – Utilizing Databricks for real-time IoT data analytics.

Blockchain & Secure Data Sharing – Enhancing data security in financial and healthcare industries.

AI-Powered Automation – Implementing AI-driven automation for operational efficiency.

Conclusion

For businesses looking to harness the power of data, Kadel Labs stands out as a leading Databricks Consulting Partner. By offering comprehensive Databricks Lakehouse solutions, Kadel Labs empowers organizations to transform their data strategies, enhance analytics capabilities, and drive business growth.

If your company is ready to take the next step in data innovation, Kadel Labs is here to help. Reach out today to explore custom Databricks solutions tailored to your business needs.
