# Databricks Unity Catalog


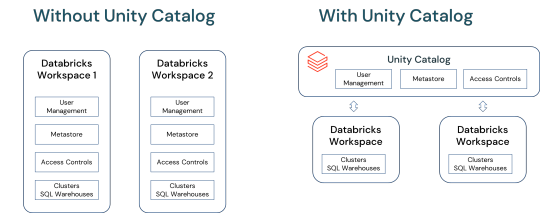

Unlocking Full Potential: The Compelling Reasons to Migrate to Databricks Unity Catalog

As data estates and AI workloads grow more complex, Databricks Unity Catalog offers a single place to govern them. This post looks at how Unity Catalog changes data and AI governance by providing a unified, agile solution.


Principal Consultant – Databricks Developer with experience in Unity Catalog, Python, Spark, and Kafka for ETL

Role summary: Databricks Developer with experience in Unity Catalog, Python, Spark, and Kafka for ETL. In this role, the Databricks Developer…, and implement optimizations for scalability and efficiency. Write efficient Python scripts for data extraction, transformation…


Databricks Architect - L1

Job title: Databricks Architect – L1
Company: Wipro
Location: Bangalore, Karnataka
Job date: Sun, 01 Jun 2025…
Job description (excerpt):
· Should have at least 10 years of experience…
· Must-have skills: Databricks, Delta Lake, PySpark or Scala Spark, Unity Catalog
· Good-to-have skills: Azure and/or AWS Cloud…
For more information, visit http://www.wipro.com.


Master the Future: Become a Databricks Certified Generative AI Engineer

What if we told you that one certification could position you at the crossroads of AI innovation, high-paying job opportunities, and technical leadership?

That’s exactly what the Databricks Certified Generative AI Engineer certification does. As generative AI explodes across industries, skilled professionals who can bridge the gap between AI theory and real-world data solutions are in high demand. Databricks, a company at the forefront of data and AI, now offers a credential designed for those who want to lead the next wave of innovation.

If you're someone looking to validate your AI engineering skills with an in-demand, globally respected certification, keep reading. This blog will guide you through what the certification is, why it’s valuable, how to prepare effectively, and how it can launch or elevate your tech career.

Why the Databricks Certified Generative AI Engineer Certification Matters

Let’s start with the basics: why should you care about this certification?

Databricks is widely used for large-scale data processing, AI model deployment, and ML integration across cloud platforms. As generative AI moves from experiments into production, demand will keep growing for professionals who can build real-world solutions with tools like Databricks Unity Catalog, MLflow, Apache Spark, and the lakehouse architecture.

This certification tells employers that:

You can design and implement generative AI models.

You understand the complexities of data management in modern AI systems.

You know how to use Databricks tools to scale and deploy these models effectively.

For tech professionals, data scientists, ML engineers, and cloud developers, this isn't just a badge—it's a career accelerator.

Who Should Pursue This Certification?

The Databricks Certified Generative AI Engineer path is for:

Data Scientists & Machine Learning Engineers who want to shift into more cutting-edge roles.

Cloud Developers working with AI pipelines in enterprise environments.

AI Enthusiasts and Researchers ready to demonstrate their applied knowledge.

Professionals preparing for AI roles at companies using Databricks, Azure, AWS, or Google Cloud.

If you’re familiar with Python, machine learning fundamentals, and basic model deployment workflows, you’re ready to get started.

What You'll Learn: Core Skills Covered

The exam and its preparation cover a broad but practical set of topics:

🧠 1. Foundation of Generative AI

What is generative AI?

How do models like GPT, DALL·E, and Stable Diffusion actually work?

Introduction to transformer architectures and tokenization.

📊 2. Databricks Ecosystem

Using Databricks notebooks and workflows

Unity Catalog for data governance and model security

Integrating MLflow for reproducibility and experiment tracking

🔁 3. Model Training & Tuning

Fine-tuning foundation models on your data

Optimizing training with distributed computing

Managing costs and resource allocation

⚙️ 4. Deployment & Monitoring

Creating real-time endpoints

Model versioning and rollback strategies

Using MLflow’s model registry for lifecycle tracking

🔐 5. Responsible AI & Ethics

Bias detection and mitigation

Privacy-preserving machine learning

Explainability and fairness

Each of these topics is deeply embedded in the exam and reflects current best practices in the industry.

Why Databricks Is Leading the AI Charge

Databricks isn’t just a platform—it’s a movement. With its Lakehouse architecture, the company bridges the gap between data warehouses and data lakes, providing a unified platform to manage and deploy AI solutions.

Databricks is already trusted by organizations like:

Comcast

Shell

HSBC

Regeneron Pharmaceuticals

So, when you add a Databricks Certified Generative AI Engineer credential to your profile, you’re aligning yourself with the tools and platforms that many large enterprises rely on.

What’s the Exam Format?

Here’s what to expect:

Multiple choice and scenario-based questions

90 minutes total

Around 60 questions

Online proctored format

You’ll be tested on:

Generative AI fundamentals

Databricks-specific tools

Model development, deployment, and monitoring

Data handling in an AI lifecycle

How to Prepare: Your Study Blueprint

Passing this certification isn’t about memorizing definitions. It’s about understanding workflows, being able to apply best practices, and showing proficiency in a Databricks-native AI environment.

Step 1: Enroll in a Solid Practice Course

The most effective way to prepare is to take mock tests and get hands-on experience. We recommend enrolling in the Databricks Certified Generative AI Engineer practice test course, which gives you access to realistic exam-style questions, explanations, and performance feedback.

Step 2: Set Up a Databricks Workspace

If you don’t already have one, create a free Databricks Community Edition workspace. Explore notebooks, work with data in Delta Lake, and train a simple model using MLflow.

Step 3: Focus on the Databricks Stack

Make sure you’re confident using:

Databricks Notebooks

MLflow

Unity Catalog

Model Serving

Feature Store

Step 4: Review Key AI Concepts

Brush up on:

Transformer models and attention mechanisms

Fine-tuning vs. prompt engineering

Transfer learning

Generative model evaluation metrics (BLEU, ROUGE, etc.)
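BLEU and ROUGE both score generated text by counting n-gram overlap with a human-written reference (BLEU is precision-oriented, ROUGE recall-oriented). A minimal sketch of ROUGE-1 (unigram overlap) in plain Python follows. It assumes naive lowercase whitespace tokenization and a single reference. Real evaluation libraries add stemming, multiple references, and longer n-grams, so treat this as an illustration of the idea rather than a drop-in metric:

```python
from collections import Counter


def rouge1(candidate: str, reference: str) -> dict:
    """ROUGE-1 precision, recall, and F1 from clipped unigram overlap.

    Tokenization is a naive lowercase whitespace split (an assumption;
    real toolkits normalize punctuation and may apply stemming).
    """
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    # A shared word counts at most as many times as it appears in both texts.
    overlap = sum((cand & ref).values())
    precision = overlap / max(sum(cand.values()), 1)
    recall = overlap / max(sum(ref.values()), 1)
    f1 = 0.0 if overlap == 0 else 2 * precision * recall / (precision + recall)
    return {"precision": precision, "recall": recall, "f1": f1}


scores = rouge1("the cat sat on the mat", "the cat lay on the mat")
print({k: round(v, 3) for k, v in scores.items()})
# → {'precision': 0.833, 'recall': 0.833, 'f1': 0.833}
```

Five of the six candidate words also appear in the reference, so precision and recall are both 5/6 here.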

What Makes This Certification Unique?

Unlike many AI certifications that stay theoretical, this one is deeply practical. You’ll not only learn what generative AI is but also how to build and manage it in production.

Here are three reasons this stands out:

✅ 1. Real-world Integration

You’ll learn deployment, version control, and monitoring—which is what companies care about most.

✅ 2. Based on Industry-Proven Tools

Everything is built on top of Databricks, Apache Spark, and MLflow, used by data teams globally.

✅ 3. Focus on Modern AI Workflows

This certification keeps pace with the rapid evolution of AI—especially around LLMs (Large Language Models), prompt engineering, and GenAI use cases.

How It Benefits Your Career

Once certified, you’ll be well-positioned to:

Land roles like AI Engineer, ML Engineer, or Data Scientist in leading tech firms.

Negotiate a higher salary thanks to your verified skills.

Work on cutting-edge projects in AI, including enterprise chatbots, text summarization, image generation, and more.

Stand out in competitive job markets with a Databricks-backed credential on your LinkedIn.

Some industry salary surveys report that professionals with AI certifications earn 20-30% more on average than those without, though figures vary by role, region, and survey.

Use Cases You’ll Be Ready to Tackle

After completing the course and passing the exam, you’ll be able to confidently work on:

Enterprise chatbots using foundation models

Real-time content moderation

AI-driven customer service agents

Medical imaging enhancement

Financial fraud detection using pattern generation

The scope is broad—and the possibilities are endless.

Don’t Just Study—Practice

It’s tempting to dive into study guides or YouTube videos, but what really works is practice. The Databricks Certified Generative AI Engineer practice course offers exam-style challenges that simulate the pressure and format of the real exam.

You’ll learn by doing—and that makes all the difference.

Final Thoughts: The Time to Act Is Now

Generative AI isn’t the future anymore—it’s the present. Companies across every sector are racing to integrate it. The question is:

Will you be ready to lead that charge?

If your goal is to become an in-demand AI expert with practical, validated skills, earning the Databricks Certified Generative AI Engineer credential is the move to make.

Start today. Equip yourself with the skills the industry is hungry for. Stand out. Level up.

👉 Enroll in the Databricks Certified Generative AI Engineer practice course now and take control of your AI journey.


Optimizing Data Operations with Databricks Services

Introduction

In today’s data-driven world, businesses generate vast amounts of information that must be processed, analyzed, and stored efficiently. Managing such complex data environments requires advanced tools and expert guidance. Databricks Services offer comprehensive solutions to streamline data operations, enhance analytics, and drive AI-powered decision-making.

This article explores how Databricks Services accelerate data operations, their key benefits, and best practices for maximizing their potential.

What are Databricks Services?

Databricks Services encompass a suite of cloud-based solutions and consulting offerings that help organizations optimize their data processing, machine learning, and analytics workflows. These services include:

Data Engineering and ETL: Automating data ingestion, transformation, and storage.

Big Data Processing with Apache Spark: Optimizing large-scale distributed computing.

Machine Learning and AI Integration: Leveraging Databricks for predictive analytics.

Data Governance and Security: Implementing policies to ensure data integrity and compliance.

Cloud Migration and Optimization: Transitioning from legacy systems to modern Databricks environments on AWS, Azure, or Google Cloud.

How Databricks Services Enhance Data Operations

Organizations that leverage Databricks Services benefit from a unified platform designed for scalability, efficiency, and AI-driven insights.

1. Efficient Data Ingestion and Integration

Seamless data integration is essential for real-time analytics and business intelligence. Databricks Services help organizations:

Automate incremental ingestion of new files from cloud storage with Databricks Auto Loader.

Integrate data from multiple sources, including cloud storage, on-premises databases, and streaming data.

Improve data reliability with Delta Lake, which provides ACID transactions, schema enforcement, and schema evolution.
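Schema evolution is easier to picture with a toy example. In additive evolution (for example, Auto Loader's `addNewColumns` schema evolution mode), columns that appear in new data are appended to the table schema and existing columns keep their types. The stdlib-only sketch below models schemas as plain `{column: type}` dicts. The function name and the choice to reject type changes outright are simplifying assumptions, not Delta Lake's or Auto Loader's actual API:

```python
def merge_schema(existing: dict[str, str], incoming: dict[str, str]) -> dict[str, str]:
    """Additively merge an incoming batch schema into an existing table schema.

    New columns are appended in arrival order. A column whose type changes
    raises an error instead of being silently rewritten (a simplification).
    """
    merged = dict(existing)  # dicts preserve insertion order, so columns keep their positions
    for column, dtype in incoming.items():
        if column not in merged:
            merged[column] = dtype
        elif merged[column] != dtype:
            raise TypeError(
                f"column {column!r}: {merged[column]} -> {dtype} is not an additive change"
            )
    return merged


table = {"order_id": "bigint", "amount": "double"}
batch = {"order_id": "bigint", "amount": "double", "currency": "string"}
print(merge_schema(table, batch))
# → {'order_id': 'bigint', 'amount': 'double', 'currency': 'string'}
```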

2. Accelerating Data Processing and Performance

Handling massive data volumes efficiently requires optimized computing resources. Databricks Services enable businesses to:

Utilize Apache Spark clusters for distributed data processing.

Improve query speed with Photon, Databricks' vectorized query engine designed for high-performance analytics.

Implement caching, indexing, and query optimization techniques for better efficiency.

3. Scaling AI and Machine Learning Capabilities

Databricks Services provide the infrastructure and expertise to develop, train, and deploy machine learning models. These services include:

MLflow for end-to-end model lifecycle management.

AutoML capabilities for automated model tuning and selection.

Deep learning frameworks like TensorFlow and PyTorch for advanced AI applications.

4. Enhancing Security and Compliance

Data security and regulatory compliance are critical concerns for enterprises. Databricks Services ensure:

Role-based access control (RBAC) with Unity Catalog for data governance.

Encryption and data masking to protect sensitive information.

Controls that support compliance with GDPR, HIPAA, CCPA, and other industry regulations.
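Unity Catalog can attach a column mask to a column, so what a user sees depends on their group membership. The Python sketch below mirrors only that decision logic so it can run anywhere. The group name `pii_readers` and the "show the last four characters" rule are hypothetical choices made for illustration:

```python
def mask_value(value: str, user_groups: set[str],
               privileged_group: str = "pii_readers", visible_suffix: int = 4) -> str:
    """Return `value` unmasked for members of `privileged_group`, otherwise masked.

    Non-privileged callers see only the last `visible_suffix` characters. Values
    too short to reveal a suffix safely are masked completely.
    """
    if privileged_group in user_groups:
        return value
    if visible_suffix <= 0 or len(value) <= visible_suffix:
        return "*" * len(value)
    return "*" * (len(value) - visible_suffix) + value[-visible_suffix:]


card = "4111111111111111"
print(mask_value(card, {"analysts"}))      # → ************1111
print(mask_value(card, {"pii_readers"}))   # → 4111111111111111
```

Inside Unity Catalog, you would write the same rule as a SQL user-defined function that checks `is_account_group_member('pii_readers')`. You would then attach that function to the column with `ALTER TABLE ... ALTER COLUMN ... SET MASK`.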

5. Cloud Migration and Modernization

Transitioning from legacy databases to modern cloud platforms can be complex. Databricks Services assist organizations with:

Seamless migration from Hadoop, Oracle, and Teradata to Databricks.

Cloud-native architecture design tailored for AWS, Azure, and Google Cloud.

Performance tuning and cost optimization for cloud computing environments.

Key Benefits of Databricks Services

Organizations that invest in Databricks Services unlock several advantages, including:

1. Faster Time-to-Insight

Pre-built data engineering templates accelerate deployment.

Real-time analytics improve decision-making and operational efficiency.

2. Cost Efficiency and Resource Optimization

Serverless compute options minimize infrastructure costs.

Automated scaling optimizes resource utilization based on workload demand.

3. Scalability and Flexibility

Cloud-native architecture ensures businesses can scale operations effortlessly.

Multi-cloud and hybrid cloud support enable flexibility in deployment.

4. AI-Driven Business Intelligence

Advanced analytics and AI models uncover hidden patterns in data.

Predictive insights improve forecasting and business strategy.

5. Robust Security and Governance

Enforces best-in-class data governance frameworks.

Ensures compliance with industry-specific regulatory requirements.

Industry Use Cases for Databricks Services

Many industries leverage Databricks Services to drive innovation and operational efficiency. Below are some key applications:

1. Financial Services

Fraud detection using AI-powered transaction analysis.

Regulatory compliance automation for banking and fintech.

Real-time risk assessment for investment portfolios.

2. Healthcare & Life Sciences

Predictive analytics for patient care optimization.

Drug discovery acceleration through genomic research.

HIPAA-compliant data handling for secure medical records.

3. Retail & E-Commerce

Personalized customer recommendations using AI.

Supply chain optimization with predictive analytics.

Demand forecasting to improve inventory management.

4. Manufacturing & IoT

Anomaly detection in IoT sensor data for predictive maintenance.

AI-enhanced quality control systems to reduce defects.

Real-time analytics for production line efficiency.

Best Practices for Implementing Databricks Services

To maximize the value of Databricks Services, organizations should follow these best practices:

1. Define Clear Objectives

Set measurable KPIs to track data operation improvements.

Align data strategies with business goals and revenue targets.

2. Prioritize Data Governance and Quality

Implement data validation and cleansing processes.

Leverage Unity Catalog for centralized metadata management.

3. Automate for Efficiency

Use Databricks automation tools to streamline ETL and machine learning workflows.

Implement real-time data streaming for faster insights.

4. Strengthen Security Measures

Enforce multi-layered security policies for data access control.

Conduct regular audits and compliance assessments.

5. Invest in Continuous Optimization

Update data pipelines and ML models to maintain peak performance.

Provide ongoing training for data engineers and analysts.

Conclusion

Databricks Services provide businesses with the expertise, tools, and technology needed to accelerate data operations, enhance AI-driven insights, and improve overall efficiency. Whether an organization is modernizing its infrastructure, implementing real-time analytics, or strengthening data governance, Databricks Services offer tailored solutions to meet these challenges.

By partnering with Databricks experts, companies can unlock the full potential of big data, AI, and cloud-based analytics, ensuring they stay ahead in today’s competitive digital landscape.


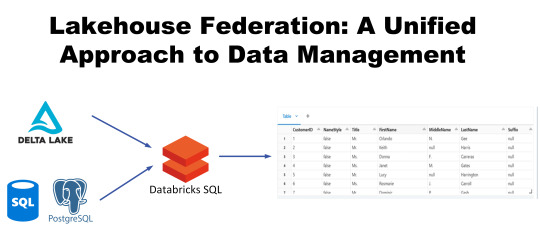

Lakehouse Federation Best Practices

Step into the future of data management with the revolutionary Lakehouse Federation. Envision a world where data lakes and data warehouses merge, creating a formidable powerhouse for data handling. In today’s digital age, where data pours in from every corner, relying on traditional methods can leave you in the lurch. Enter Lakehouse Federation, a game-changer that harnesses the best of both…


Enhancing Data Management and Analytics with Kadel Labs: Leveraging Databricks Lakehouse Platform and Databricks Unity Catalog

In today’s data-driven world, companies across industries are continually seeking advanced solutions to streamline data processing and ensure data accuracy. Data accessibility, security, and analysis have become key priorities for organizations aiming to harness the power of data for strategic decision-making. Kadel Labs, a forward-thinking technology solutions provider, has recognized the importance of robust data solutions in helping businesses thrive. Among the most promising tools they employ are the Databricks Lakehouse Platform and Databricks Unity Catalog, which offer scalable, secure, and versatile solutions for managing and analyzing vast amounts of data.


How Databricks Unity Catalog and Datagaps Automate Governance and Validation

Data quality is the backbone of accurate analytics, regulatory compliance, and efficient business operations. As organizations scale their data ecosystems, maintaining high data integrity becomes more challenging.

The seamless integration between Databricks Unity Catalog and Datagaps DataOps Suite provides a powerful framework for automated governance and validation, ensuring that data remains accurate, complete, and compliant at all times.

In our previous discussion, we highlighted how Datagaps enhances metadata management, lineage tracking, and automation within Unity Catalog. This article takes the next step by diving into data quality assurance – a crucial component of enterprise-wide data governance.

By leveraging Datagaps Data Quality Monitor, organizations can implement automated validation strategies, reduce manual effort, and integrate real-time data quality scores into Unity Catalog for proactive governance. Let’s explore how these technologies work together to ensure high-quality, reliable data that drives better decision-making and compliance.

The Growing Need for Automated Data Quality Assurance

Modern enterprises manage vast amounts of structured and unstructured data across multiple platforms. Ensuring data accuracy, completeness, and consistency is no longer just a best practice – it’s a necessity for regulatory compliance and business intelligence.

Databricks Unity Catalog provides a centralized governance framework for managing metadata, access controls, and data lineage across an organization. By integrating with Datagaps Data Quality Monitor, enterprises can automate data validation, reduce errors, and gain deeper insights into data health and integrity.

6 Key Data Quality Dimensions

Effective data quality management revolves around six fundamental dimensions:

Accuracy – Ensuring data reflects real-world values without discrepancies.

Completeness – Verifying that all required fields and records are present.

Consistency – Maintaining uniformity across multiple data sources and systems.

Timeliness – Ensuring data is up-to-date and available when needed.

Uniqueness – Eliminating duplicate records and redundant data entries.

Validity – Enforcing compliance with defined formats, business rules, and constraints.

By addressing these dimensions, organizations can improve the trustworthiness of their data assets, enhance AI/ML outcomes, and comply with industry regulations.
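Three of the six dimensions can be measured directly from the records themselves, as sketched below: completeness, uniqueness, and validity. The other three need extra inputs. Accuracy needs trusted reference data, consistency needs a second source to compare against, and timeliness needs load timestamps. The `profile` function, its parameters, and the email pattern are hypothetical illustrations, not Datagaps or Databricks APIs:

```python
import re


def profile(rows: list[dict], key: str, required: list[str], patterns: dict[str, str]) -> dict:
    """Score three data quality dimensions on a list of records (each 0.0 to 1.0).

    completeness: share of required fields that are present and non-empty
    uniqueness:   distinct values of `key` divided by the number of rows
    validity:     share of non-empty checked values that fully match their regex
    """
    n = len(rows)
    if n == 0:
        return {"completeness": 1.0, "uniqueness": 1.0, "validity": 1.0}

    filled = sum(1 for r in rows for f in required if r.get(f) not in (None, ""))
    completeness = filled / (n * len(required)) if required else 1.0

    uniqueness = len({r.get(key) for r in rows}) / n

    checked = [(f, r[f]) for r in rows for f in patterns if r.get(f) not in (None, "")]
    valid = sum(1 for f, v in checked if re.fullmatch(patterns[f], str(v)))
    validity = valid / len(checked) if checked else 1.0

    return {"completeness": completeness, "uniqueness": uniqueness, "validity": validity}


rows = [
    {"id": 1, "email": "a@example.com", "country": "US"},
    {"id": 2, "email": "", "country": "DE"},             # incomplete
    {"id": 2, "email": "not-an-email", "country": "FR"},  # duplicate id, invalid email
    {"id": 4, "email": "d@example.com", "country": None}, # incomplete
]
result = profile(rows, key="id", required=["id", "email", "country"],
                 patterns={"email": r"[^@\s]+@[^@\s]+\.[a-z]+"})
print({k: round(v, 3) for k, v in result.items()})
# → {'completeness': 0.833, 'uniqueness': 0.75, 'validity': 0.667}
```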

Automating Data Quality Validation with White-Box and Black-Box Testing

Ensuring data integrity at scale requires a systematic approach to validation. Two widely used methodologies are:

1. White-Box Testing

Examines internal data transformations, lineage, and business rules.

Ensures that every step in the ETL (Extract, Transform, Load) process adheres to defined standards.

Provides deeper insights into data processing logic to catch issues at the source.

2. Black-Box Testing

Focuses on output validation by comparing actual results against expected benchmarks.

Useful for detecting anomalies, missing records, and schema mismatches.

Works well for regulatory compliance and end-to-end data pipeline testing.

A hybrid approach combining both techniques ensures robust validation and proactive anomaly detection.
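The black-box half of that approach can be sketched as a reconciliation between the expected and the actual output of a pipeline step. It inspects only outputs, never the transformation logic. The function and field names below are hypothetical, and the sketch assumes each row carries a unique key column:

```python
def reconcile(expected: list[dict], actual: list[dict], key: str) -> dict:
    """Black-box check: compare a pipeline's actual output with expected output.

    Assumes `key` uniquely identifies a row. Duplicate keys still show up
    in `row_count_diff` because it uses the raw list lengths.
    """
    exp = {row[key]: row for row in expected}
    act = {row[key]: row for row in actual}
    exp_cols = set().union(*(row.keys() for row in expected))
    act_cols = set().union(*(row.keys() for row in actual))
    common_cols = exp_cols & act_cols
    shared_keys = exp.keys() & act.keys()
    return {
        "row_count_diff": len(actual) - len(expected),
        "missing_in_actual": sorted(exp.keys() - act.keys()),
        "unexpected_in_actual": sorted(act.keys() - exp.keys()),
        "schema_diff": sorted(exp_cols ^ act_cols),
        # Compare only columns present on both sides, so a schema change is
        # reported once in schema_diff rather than as a mismatch on every row.
        "value_mismatches": sorted(
            k for k in shared_keys
            if any(exp[k].get(c) != act[k].get(c) for c in common_cols)
        ),
    }


expected = [
    {"id": 1, "amount": 10.0},
    {"id": 2, "amount": 20.0},
    {"id": 3, "amount": 30.0},
]
actual = [
    {"id": 1, "amount": 10.0, "load_ts": "2025-06-01"},
    {"id": 2, "amount": 25.0, "load_ts": "2025-06-01"},
]
report = reconcile(expected, actual, key="id")
print(report)
# → {'row_count_diff': -1, 'missing_in_actual': [3], 'unexpected_in_actual': [], 'schema_diff': ['load_ts'], 'value_mismatches': [2]}
```

A white-box test would complement this by checking the transformation rule itself (for example, that `amount` is copied unchanged). A white-box test can therefore explain *why* row 2 differs, not just *that* it differs.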

How Unity Catalog and Datagaps Data Quality Monitor Work Together

1. Unified Governance and Automated Validation

Databricks Unity Catalog centralizes metadata management, access control, and lineage tracking.

Datagaps Data Quality Monitor extends these capabilities with automated quality checks, reducing manual efforts.

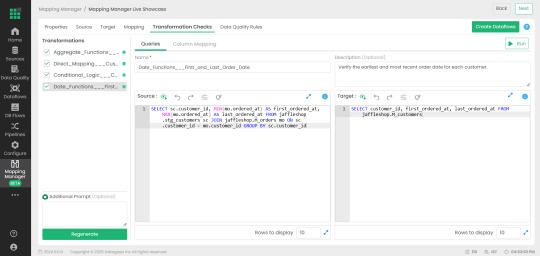

2. Mapping Manager Utility: Simplifying Test Case Automation

One of the standout features of Datagaps Data Quality Monitor is the Mapping Manager Utility, which:

Extracts mapping configurations from Databricks Unity Catalog.

Automatically generates white-box and black-box test cases.

Reduces the need for manual intervention, increasing efficiency and scalability.

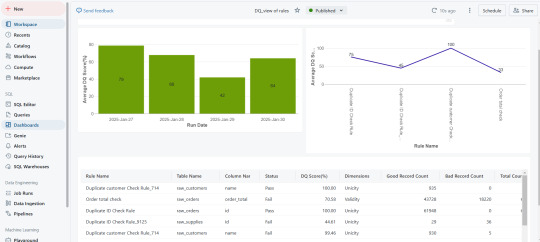

3. Real-Time Data Quality Scores for Proactive Governance

After test execution, a data quality score is generated.

These scores are seamlessly integrated into Databricks Unity Catalog, allowing real-time monitoring.

Organizations can visualize data quality insights through dashboards and take corrective actions before issues impact business operations.
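The post does not say how Datagaps computes its data quality score. One plausible approach is a weighted pass rate over the executed checks, where more critical checks carry more weight. It is shown here purely as a hypothetical sketch, with invented check names and weights:

```python
from dataclasses import dataclass


@dataclass
class CheckResult:
    name: str
    passed: bool
    weight: float = 1.0  # hypothetical: how much this check counts toward the score


def quality_score(results: list[CheckResult]) -> float:
    """Weighted pass rate on a 0-100 scale. An empty run scores 100."""
    total = sum(r.weight for r in results)
    if total == 0:
        return 100.0
    passed = sum(r.weight for r in results if r.passed)
    return round(100 * passed / total, 1)


run = [
    CheckResult("orders.order_id is unique", True, weight=3),
    CheckResult("orders.amount is non-negative", True, weight=2),
    CheckResult("orders.customer_id is not null", False, weight=2),
    CheckResult("orders row count matches source", True, weight=1),
]
score = quality_score(run)
print(score)  # → 75.0
```

To surface a score like this next to the table, one option is to record it as a Unity Catalog table tag with `ALTER TABLE ... SET TAGS`. This is an assumption about how you might wire it up yourself, not a description of the Datagaps integration.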

Key Use Cases

ETL and Data Pipeline Validation – Ensuring data transformations adhere to defined business rules.

Regulatory Compliance and Audit Readiness – Mitigating risks associated with inaccurate reporting.

Enterprise Data Lakehouse Governance – Enhancing consistency across distributed datasets.

AI/ML Data Preprocessing – Ensuring clean, high-quality data for better model performance.

Automated Data Quality Checks – Reducing manual data validation efforts for faster, more reliable insights.

Scalability for Large Datasets – Efficiently managing high-volume, high-velocity enterprise data.

Faster QA Cycles – Automating test case execution for rapid turnaround.

Lower Operational Resources – Reducing human intervention, saving time and resources.

The Business Impact: Why This Integration Matters

Enhanced Automation – Eliminates manual quality checks and increases efficiency.

Real-Time Monitoring – Provides instant visibility into data quality metrics.

Stronger Compliance – Supports industry standards and regulations effortlessly.

Scalability – Designed for large-scale, complex data ecosystems.

Cost Efficiency – Reduces operational overhead and improves ROI on data management initiatives.

Ensuring data quality at scale requires a combination of automated governance, real-time monitoring, and seamless integration. The connection between Databricks Unity Catalog and Datagaps Data Quality Monitor provides a comprehensive solution to achieve this goal.

With automated test case generation, continuous data validation, and integrated governance, organizations can ensure their data is always accurate, complete, and compliant—laying the foundation for data-driven decision-making and regulatory confidence.


Automate Azure Databricks Unity Catalog Permissions at the Table Level

http://securitytc.com/TGhNyZ
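The linked article isn't reproduced here. As an independent, hypothetical sketch of the idea, table-level permissions can be generated from a simple specification instead of being typed by hand. In Unity Catalog, reading a table requires `USE CATALOG` on its catalog and `USE SCHEMA` on its schema in addition to `SELECT` on the table, so the helper emits all three. The function names and the privilege allow-list are assumptions made for this example:

```python
ALLOWED_TABLE_PRIVILEGES = {"SELECT", "MODIFY", "ALL PRIVILEGES"}


def quote(identifier: str) -> str:
    """Backtick-quote an identifier, doubling any embedded backticks."""
    return "`" + identifier.replace("`", "``") + "`"


def table_grants(table: str, principal: str, privileges: list[str]) -> list[str]:
    """Build the GRANT statements a principal needs to use one table.

    `table` must be a three-level name (catalog.schema.table). Parent
    USE CATALOG and USE SCHEMA grants come first.
    """
    parts = table.split(".")
    if len(parts) != 3 or not all(parts):
        raise ValueError(f"expected catalog.schema.table, got {table!r}")
    catalog, schema, name = parts
    who = quote(principal)
    stmts = [
        f"GRANT USE CATALOG ON CATALOG {quote(catalog)} TO {who}",
        f"GRANT USE SCHEMA ON SCHEMA {quote(catalog)}.{quote(schema)} TO {who}",
    ]
    for priv in privileges:
        priv = priv.upper()
        if priv not in ALLOWED_TABLE_PRIVILEGES:  # reject anything unexpected
            raise ValueError(f"unsupported table privilege: {priv}")
        stmts.append(
            f"GRANT {priv} ON TABLE {quote(catalog)}.{quote(schema)}.{quote(name)} TO {who}"
        )
    return stmts


for stmt in table_grants("main.sales.orders", "data-analysts", ["select"]):
    print(stmt)
# GRANT USE CATALOG ON CATALOG `main` TO `data-analysts`
# GRANT USE SCHEMA ON SCHEMA `main`.`sales` TO `data-analysts`
# GRANT SELECT ON TABLE `main`.`sales`.`orders` TO `data-analysts`
```

In a Databricks notebook, you could execute each generated statement with `spark.sql(stmt)`. Keeping the specification in version control gives you a reviewable record of who was granted what.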


Databricks Generative AI Engineer Associate Practice Exam For Best Prep

The Databricks Certified Generative AI Engineer Associate Exam has become a sought-after certification for professionals looking to validate their skills in deploying and managing generative AI solutions. With this certification, professionals demonstrate expertise in designing large language model (LLM)-enabled applications using Databricks-specific tools and frameworks. A notable resource for preparation is the latest Databricks Generative AI Engineer Associate Practice Exam from Cert007. This practice test is specifically designed to mirror the real exam’s structure, helping candidates build confidence and proficiency by simulating the actual test environment. Cert007’s exam offers a realistic view of the questions you’ll face, covering everything from model selection and data preparation to deployment and governance, ensuring candidates are well-equipped for success.

In this guide, we’ll dive into the key areas of the Databricks Generative AI Engineer Associate certification, breaking down the sections of the exam and detailing essential study strategies to excel in each.

Understanding the Databricks Generative AI Engineer Associate Certification

The Databricks Generative AI Engineer Associate exam is designed to assess a professional’s ability to create LLM-powered solutions. This includes working with Databricks tools like Vector Search, Model Serving, MLflow, and Unity Catalog to build and deploy applications that leverage the capabilities of generative AI. The skills tested include problem decomposition, selecting the right models and tools, and implementing safe, performant AI applications.

Exam Topics Overview and Weightage

The Databricks Generative AI Engineer Associate exam is meticulously structured to cover a comprehensive range of topics essential for proficiency in generative AI solutions. The exam content is thoughtfully divided into six key sections, each carrying a specific weightage that reflects its importance in the field:

Design Applications (14%): This section evaluates your ability to conceptualize and architect LLM-powered applications, focusing on problem decomposition and solution design principles.

Data Preparation (14%): Here, you'll be tested on your skills in preparing and processing data for use in generative AI models, including techniques for data cleaning, transformation, and augmentation.

Application Development (30%): As the most heavily weighted section, this area assesses your proficiency in developing robust generative AI applications using Databricks tools and frameworks.

Assembling and Deploying Applications (22%): This section examines your capability to integrate various components and deploy scalable, production-ready generative AI solutions.

Governance (8%): While carrying a lower weightage, this crucial section tests your understanding of ethical considerations, compliance, and best practices in managing AI applications.

Evaluation and Monitoring (12%): The final section assesses your ability to implement effective strategies for evaluating model performance and monitoring deployed applications to ensure ongoing reliability and efficiency.

Understanding this breakdown is crucial for tailoring your study approach and allocating your preparation time effectively across these key areas of generative AI engineering.

Comprehensive Preparation Guide for the Databricks Generative AI Engineer Associate Exam

To effectively prepare for the Databricks Generative AI Engineer Associate exam, candidates should adopt a multi-faceted approach that encompasses theoretical knowledge, practical skills, and strategic study techniques. Here's an in-depth look at how to optimize your preparation:

1. Master the Databricks Documentation: Thoroughly review the official Databricks documentation, paying special attention to sections on Vector Search, Model Serving, MLflow, and Unity Catalog. These resources provide the foundation for understanding the Databricks ecosystem and its AI capabilities.

2. Hands-on Practice with Databricks Tools: Gain practical experience by working on projects that utilize Databricks' AI tools. Set up a Databricks workspace and experiment with building LLM-powered applications, focusing on the six key exam areas.

3. Utilize Practice Exams: Leverage resources like the Cert007 practice exam to familiarize yourself with the exam format and question types. These simulations help identify knowledge gaps and improve time management skills.

4. Join Study Groups and Forums: Engage with other candidates preparing for the exam. Participating in discussions can provide new perspectives and clarify complex concepts.

5. Create a Structured Study Plan: Develop a schedule that allocates time to each exam topic based on its weightage. Focus more on high-percentage areas like Application Development and Assembling and Deploying Applications.
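Allocating time by weightage is simple arithmetic. The sketch below splits a study budget in proportion to the six section weights listed above. The 40-hour total is an arbitrary example figure, not a recommendation:

```python
WEIGHTS = {
    "Design Applications": 14,
    "Data Preparation": 14,
    "Application Development": 30,
    "Assembling and Deploying Applications": 22,
    "Governance": 8,
    "Evaluation and Monitoring": 12,
}


def allocate_hours(total_hours: float, weights: dict[str, int]) -> dict[str, float]:
    """Split a study budget across exam sections in proportion to their weightage."""
    total_weight = sum(weights.values())
    return {section: round(total_hours * w / total_weight, 1) for section, w in weights.items()}


plan = allocate_hours(40, WEIGHTS)
for section, hours in plan.items():
    print(f"{section:<40} {hours:>5} h")
```

With 40 hours, Application Development gets 12.0 hours and Governance gets 3.2 hours. Dividing by the summed weights rather than by 100 keeps the split correct even if you drop or reweight a section.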

Remember, success in the Databricks Generative AI Engineer Associate exam requires a blend of theoretical knowledge and practical application. By following this comprehensive preparation strategy, candidates can approach the exam with confidence and increase their chances of success.

Conclusion

The Databricks Generative AI Engineer Associate exam presents a significant opportunity for professionals to validate their expertise in the rapidly evolving field of generative AI. By thoroughly understanding the exam structure, focusing on key areas such as application development and deployment, and utilizing resources like the Cert007 practice exam, candidates can effectively prepare for this challenging certification. Remember that success in this exam not only demonstrates your technical proficiency but also positions you at the forefront of AI innovation in the Databricks ecosystem. With dedicated study, hands-on practice, and strategic preparation, you'll be well-equipped to excel in the exam and advance your career in the exciting realm of generative AI engineering.


Senior Databricks Engineer_22531

Job title: Senior Databricks Engineer_22531
Company: DXC Technology
Location: Bangalore, Karnataka
Job date: Wed, 21 May 2025 03:30:53 GMT
Job description (excerpt):
· …, Delta Tables, Unity Catalog
· Hands-on experience with Python, PySpark, or Spark SQL
· Hands-on experience with Azure Analytics and DevOps
· Taking part…


Databricks Unity Catalog Explained for SQL Server Folks

If you’re coming from a SQL Server background, think of Databricks Unity Catalog as a centralized metadata management system that simplifies how data is organized, governed, and shared across different data platforms. It’s designed for modern data lakes (such as those in Azure and AWS) but incorporates familiar concepts for SQL users. Let’s break it down step by step: 1. Databases and Schemas…
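Unity Catalog uses a three-level namespace, `catalog.schema.table`, while SQL Server uses `database.schema.object` (optionally prefixed by a server name). The sketch below assumes one common migration convention, not something the post prescribes: each SQL Server database becomes a catalog, and schema and table names carry over. Names are lowercased because Unity Catalog treats object names case-insensitively. The function name and the `dbo` fallback are illustrative choices:

```python
def to_unity_catalog_name(sqlserver_name: str, default_schema: str = "dbo") -> str:
    """Map a SQL Server [server.]database.schema.table name to catalog.schema.table.

    Assumed convention: database -> catalog, schema -> schema, table -> table.
    Square brackets are stripped and names are lowercased. An empty schema
    part (as in `SalesDB..Orders`) falls back to `default_schema`. Names that
    contain dots inside brackets are not supported by this simple split.
    """
    parts = [p.strip("[]") for p in sqlserver_name.split(".")]
    if len(parts) == 4:
        parts = parts[1:]  # drop the server / linked-server prefix
    if len(parts) != 3:
        raise ValueError(f"expected database.schema.table, got {sqlserver_name!r}")
    database, schema, table = parts
    return ".".join(p.lower() for p in (database, schema or default_schema, table))


print(to_unity_catalog_name("[SalesDB].[dbo].[Orders]"))  # → salesdb.dbo.orders
print(to_unity_catalog_name("SalesDB..Customers"))        # → salesdb.dbo.customers
```

Some teams instead map each SQL Server database to a schema inside one shared catalog. The right choice depends on how you want to draw permission and ownership boundaries.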
