#Docker cleanup

Explore tagged Tumblr posts

Text

Kill Containers and remove unused images from Docker Correctly

In this article, we shall discuss how to destroy, that is “Kill Containers and remove unused images from Docker Correctly”. We will be doing this over Portainer and Container Manager. Containers and images that are no longer in use can create clutter, making it harder to manage Docker environments. By removing them, you can streamline the system, keeping only essential resources running. Please…

#container lifecycle#container management#Container Manager#delete images#Docker best practices#Docker cleanup#docker cli#Docker commands#Docker maintenance#Docker system prune#efficient Docker management#Exited Code 137#image management#kill containers#portainer#remove unused images#resource optimization#stop containers#system resources

0 notes

Text

Docker Overlay2 Cleanup: 5 Ways to Reclaim Disk Space

Docker Overlay2 Cleanup: 5 Ways to Reclaim Disk Space #DockerOverlay2Cleanup #DiskSpaceManagement #DockerContainerStorage #OptimizeDockerPerformance #ReduceDockerDiskUsage #DockerSystemPrune #DockerImageCleanup #DockerVolumeManagement #virtualizationhowto

If you are running Docker containers on a Docker container host, you may have seen issues with disk space. Docker Overlay2 can become a disk space hog if not managed efficiently. This post examines six effective methods for Docker Overlay2 cleanup to reclaim space on your Docker host. Table of contentsDisk space issues on a Docker hostWhat is the Overlay file system?Filesystem layers…

View On WordPress

0 notes

Text

How to Clean Up Docker Images, Containers, and Volumes Older Than 30 Days

Docker is an excellent tool for containerizing applications, but it can easily consume your server’s storage if you don’t perform regular cleanups. Old images, containers, and unused volumes can pile up, slowing down your system and causing disk space issues. In this blog, we’ll walk you through how to identify and delete Docker resources that are older than 30 days to free up space. Remove…

0 notes

Text

Assess Application and Cluster Readiness

Review Application Dependencies:

Analyze libraries, JDK versions, frameworks (e.g., Spring Boot, Jakarta EE), and external services (databases, APIs).

Identify any Kubernetes-specific configurations (e.g., ConfigMaps, Secrets, environment variables).

Containerization:

Ensure your Java app is containerized using tools like Docker.

Update the Dockerfile if necessary (e.g., for JDK/JRE updates or changes in base image).

2. Prepare Kubernetes Environment

Target Cluster Validation:

Ensure the target Kubernetes cluster is set up and ready.

Check for compatibility with the current cluster’s API version and resources.

Namespace Setup:

Create equivalent namespaces, RBAC roles, and policies in the target cluster.

Storage:

Verify Persistent Volume Claims (PVCs) and ensure similar storage classes are available.

3. Backup and Export Resources

Kubernetes Resources:

Export all application-related resources using kubectl:bashCopy codekubectl get all -n <namespace> -o yaml > resources.yaml

Persistent Data:

Back up database data or persistent volumes using tools like Velero or rsync.

4. Migrate Application Configurations

ConfigMaps and Secrets:

Export existing configurations:bashCopy codekubectl get configmap -n <namespace> -o yaml > configmap.yaml kubectl get secret -n <namespace> -o yaml > secret.yaml

Reapply them in the new cluster:bashCopy codekubectl apply -f configmap.yaml kubectl apply -f secret.yaml

5. Deploy to Target Cluster

Deploy Application:

Use Helm charts, Kubernetes manifests, or CI/CD pipelines to deploy the Java application to the new cluster.

Update the kubeconfig for the target cluster:bashCopy codekubectl config use-context <target-cluster-context> kubectl apply -f resources.yaml

Persistent Volumes:

Ensure data is restored to the persistent volumes if needed.

6. Test the Migration

Validation:

Ensure the application is running as expected (pods, services, and networking).

Run integration and regression tests.

Connectivity:

Validate external service integrations (e.g., databases, APIs).

Check ingress configurations for public access.

7. Cutover and Cleanup

Switch Traffic:

Update DNS or external load balancer to direct traffic to the new cluster.

Monitor:

Use tools like Prometheus, Grafana, or ELK Stack to monitor application health.

Clean Up Old Environment:

After verifying stability, clean up resources in the old cluster.

Tools to Assist in Migration

Velero: For backup and restore of Kubernetes resources and persistent volumes.

Helm: To manage application deployments and upgrades.

Kubernetes Dashboard: For real-time cluster monitoring.

Kubectl Plugins: To enhance CLI functionality.

If you provide more details about the migration (e.g., specific cloud providers or Kubernetes environments), I can offer more tailored advice!

4o

You said:

Migration of 5000 files per hr fix

ChatGPT said:

ChatGPT

If you're migrating a large number of files (e.g., 5000 files per hour) and encountering performance bottlenecks, you can improve the speed and reliability of your file migration by addressing several key areas. Here's a step-by-step guide to optimize file transfer:

1. Identify and Analyze Bottlenecks

File System Bottlenecks:

Check the source and destination disk I/O performance.

Ensure the files are not locked or being used during migration.

Network Bandwidth:

Measure your available network bandwidth using tools like iperf and ensure it can handle the expected load.

File Metadata Overhead:

Small files can add significant overhead due to metadata operations (e.g., file creation, permissions).

2. Optimize Transfer Mechanisms

Use Parallel Processing:

Transfer multiple files concurrently using multithreaded or parallel tools like:

rsync with --max-size and --parallel options.

GNU Parallel for concurrent file transfers.

bashCopy codefind /source/directory -type f | parallel -j8 rsync -a {} /destination/directory

Use a custom script or a library like Python's concurrent.futures for more control.

Batch File Transfers:

Group files into batches (e.g., tar archives) to reduce the overhead of transferring many small files individually.bashCopy codetar -cvf files.tar /source/files && rsync files.tar /destination Extract the files on the destination:bashCopy codetar -xvf files.tar

3. Ensure Network Optimization

Compression:

Compress files during transfer to reduce data volume:bashCopy codersync -az /source/directory /destination/directory

Increase TCP Window Size:

Tweak TCP settings for large transfers using tools like scp or rsync with buffer options:bashCopy codersync --bwlimit=0 --whole-file /source /destination

Use Faster Protocols:

Use optimized file transfer protocols like Rclone, Aspera, or SFTP for high-speed transfer.

4. Improve Source and Destination Performance

Disk Performance:

Optimize disk reads and writes using SSDs for temporary storage or caches.

Ensure the file system supports efficient file handling (e.g., ext4, XFS).

File System Tuning:

For very large numbers of files, tune parameters like inode_cache to handle metadata efficiently.

5. Error Handling and Retry Logic

Retry Failed Transfers:

Use tools like rsync to retry failed files automatically:bashCopy codersync -avz --partial --progress /source /destination

Logging and Monitoring:

Log all transferred files and

4o

You said:

File trsnsfer to s3 improve

ChatGPT said:

ChatGPT

Improving file transfer to Amazon S3 requires addressing both performance and reliability. Below are strategies and tools to optimize large-scale or high-volume file transfers:

1. Use AWS-Specific Tools

AWS CLI with Parallelism:

Use the --multipart-chunk-size-mb and --parallel flags to upload files in parallel and in chunks:bashCopy codeaws s3 cp /local/path s3://your-bucket-name --recursive --storage-class STANDARD_IA --region <region>

Enable parallel transfers: Update your AWS CLI configuration file (~/.aws/config) to increase concurrency:plaintextCopy code[default] s3 = max_concurrent_requests = 10 max_queue_size = 1000 multipart_threshold = 64MB multipart_chunksize = 64MB

AWS DataSync:

Use AWS DataSync for automated, high-performance, large-scale transfers with minimal manual intervention. DataSync can also manage retry and error handling.

2. Optimize S3 Uploads

Multipart Upload:

Use multipart uploads for files larger than 100 MB to improve transfer speed and reliability.

Example using AWS CLI:bashCopy codeaws s3api create-multipart-upload --bucket your-bucket-name --key file_key

Automate multipart uploads with the AWS SDK or tools like boto3 for Python.

Use the Correct Storage Class:

Choose the appropriate storage class to save costs and improve upload speeds:

STANDARD (default): High performance for frequently accessed data.

INTELLIGENT_TIERING: Automatically optimizes storage costs for infrequently accessed files.

3. Improve Network Performance

Enable Transfer Acceleration:

Use S3 Transfer Acceleration for faster uploads over long distances by leveraging AWS edge locations:bashCopy codeaws s3 cp /local/path s3://your-bucket-name --region <region> --endpoint-url https://<bucket-name>.s3-accelerate.amazonaws.com

Compression:

Compress files before transferring to reduce data size:bashCopy codetar -czvf archive.tar.gz /local/path && aws s3 cp archive.tar.gz s3://your-bucket-name

Optimize Network:

Use AWS Direct Connect or a high-bandwidth internet connection for large-scale transfers.

Monitor latency and bandwidth with tools like iperf.

4. Automate and Parallelize Transfers

Rclone:

Use rclone for high-speed S3-compatible transfers with parallelism and retry capabilities:bashCopy coderclone copy /local/path s3:your-bucket-name --transfers 16 --s3-upload-concurrency 16

GNU Parallel:

Transfer files concurrently by splitting file lists:bashCopy codefind /local/path -type f | parallel -j10 aws s3 cp {} s3://your-bucket-name

5. Error Handling and Retry

Retry Mechanisms:

Use tools or scripts that automatically retry failed uploads.

AWS CLI has built-in retry logic; increase retries if necessary:bashCopy codeaws s3 cp --cli-read-timeout 600 --cli-connect-timeout 600 /local/path s3://your-bucket-name

Chunked Retry:

Break large transfers into smaller, manageable chunks and retry failed chunks only.

6. Monitor and Optimize Transfers

AWS CloudWatch Metrics:

Monitor transfer performance and error rates in real-time.

S3 Inventory:

Use S3 inventory reports to validate uploaded files.

AWS SDKs or Custom Scripts:

Build custom monitoring and retry logic with SDKs like boto3 (Python).

Example Optimized Upload Command

bash

Copy code

aws s3 cp /local/path s3://your-bucket-name --recursive --storage-class STANDARD --region us-east-1 --cli-read-timeout 600 --cli-connect-timeout 600

When to Use Snowball or Snowmobile

For extremely large datasets (terabytes or petabytes), use AWS Snowball or AWS Snowmobile for offline data transfer to S3.

These steps can dramatically improve the efficiency and reliability of your file transfer process to S3. Let me know if you need help implementing any of these!

4o

You said:

Improve fole transfers

ChatGPT said:

ChatGPT

Improving file transfer performance and reliability, whether between servers, local systems, or to cloud storage like Amazon S3, involves a combination of strategies. Here are some best practices for improving file transfers:

1. Optimize the File Transfer Process

Parallelize Transfers

Transfer multiple files concurrently to improve throughput:

Use tools like rsync, scp, or cloud-specific SDKs with multi-threading capabilities.

Example with rsync:bashCopy codersync -azP --info=progress2 --max-size=100M --exclude="*.tmp" /source/ /destination/

Batch Small Files

Small files cause metadata overhead during transfer. Bundle them into archives:bashCopy codetar -czvf archive.tar.gz /source/ Transfer the archive, then extract on the destination:bashCopy codetar -xzvf archive.tar.gz

2. Use High-Performance Transfer Tools

Rclone

Ideal for cloud storage and large-scale transfers with parallelism:bashCopy coderclone copy /source/ remote:bucket-name --transfers=16 --checkers=8

GNU Parallel

Parallelize file uploads/downloads:bashCopy codefind /source/ -type f | parallel -j8 scp {} user@destination:/target/

Multithreaded Tools

Use tools with built-in multithreading, such as bbcp, Aspera, or scp (with parallel scripts).

3. Network Optimization

Compression

Compress files before transfer to reduce the amount of data transmitted:bashCopy codegzip largefile.txt Or combine with the transfer:bashCopy codetar czf - /source/ | ssh user@destination "tar xzf - -C /target/"

Bandwidth Management

Limit bandwidth usage to prevent throttling:

With rsync:bashCopy codersync --bwlimit=1000 -az /source/ /destination/

Use TCP tuning options to optimize network transfer rates:bashCopy codesysctl -w net.ipv4.tcp_window_scaling=1

Use Faster Protocols

Use optimized transfer protocols:

SFTP: Secure but slower for high-volume transfers.

Rsync/SSH: Efficient for incremental and large transfers.

Aspera: High-speed UDP-based protocol.

Enable Transfer Acceleration (Cloud)

For Amazon S3:bashCopy codeaws s3 cp /source/ s3://your-bucket/ --region <region> --endpoint-url https://<bucket-name>.s3-accelerate.amazonaws.com

4. Error Handling and Resilience

Resume Transfers

Use tools that support resuming failed transfers:

Rsync:bashCopy codersync -az --partial /source/ /destination/

AWS CLI:bashCopy codeaws s3 cp --continue /source/ s3://bucket-name/

Retry Logic

Implement retry logic for failed transfers using scripts or tools.

Example using bash:bashCopy codefor i in {1..5}; do scp file user@destination:/target/ && break || sleep 5 done

5. Monitor and Analyze Performance

Real-Time Monitoring

Monitor progress with tools:

Rsync: --progress flag.

SCP: Wrap in pv for progress visualization:bashCopy codetar cf - /source/ | pv | ssh user@destination "tar xf - -C /target/"

Analyze Bottlenecks

Use tools like iperf or nload to check network performance.

Use iotop or dstat to monitor disk I/O on both source and destination.

6. Scale with Advanced Solutions

Distributed File Transfer

Use tools like Globus for efficient distributed data transfers.

Data Transfer Appliances

For extremely large datasets, consider appliances like AWS Snowball or offline methods to ship data physically.

Content Delivery Networks (CDNs)

Use CDNs for faster file distribution to end users.

By combining these strategies—parallelism, compression, protocol optimization, and monitoring—you can significantly enhance file transfer speed and reliability. Let me know if you'd like further customization for a specific use case!

4o

You said:

Threadpool

ChatGPT said:

ChatGPT

A thread pool is a collection of pre-initialized worker threads that stand ready to perform tasks. Instead of creating and destroying threads for each task, a thread pool manages a set of reusable threads, improving performance and resource utilization in multi-threaded applications.

Here’s how to effectively use and optimize thread pools in your application.

Why Use a Thread Pool?

Performance: Reduces the overhead of creating and destroying threads repeatedly.

Resource Management: Controls the number of concurrent threads, preventing system overload.

Scalability: Allows a controlled environment for task execution, making it easier to scale applications.

Key Concepts of Thread Pools

Core Threads: Minimum number of threads that are always kept alive.

**Max Threads

4o

You said:

Improve java spring file transfer

ChatGPT said:

ChatGPT

Improving file transfer in a Java Spring application involves optimizing both the code and the underlying infrastructure. Below are strategies to enhance file transfer performance and reliability.

1. Optimize File Transfer Code

Use Streaming for Large Files

Avoid loading the entire file into memory; instead, use streaming:javaCopy code@PostMapping("/upload") public ResponseEntity<String> uploadFile(@RequestParam("file") MultipartFile file) throws IOException { try (InputStream inputStream = file.getInputStream()) { // Process the input stream directly // Example: Save the file Files.copy(inputStream, Paths.get("target-directory/" + file.getOriginalFilename()), StandardCopyOption.REPLACE_EXISTING); } return ResponseEntity.ok("File uploaded successfully!"); }

Enable Asynchronous File Transfers

Use Spring's @Async annotation for non-blocking file uploads/downloads:javaCopy code@Async public CompletableFuture<String> uploadFileAsync(MultipartFile file) { // File processing logic return CompletableFuture.completedFuture("Upload Complete"); }

Use Chunked Uploads for Large Files

Break large files into smaller chunks for efficient transfer and to handle interruptions gracefully:javaCopy code@PostMapping("/uploadChunk") public ResponseEntity<String> uploadChunk(@RequestParam("chunk") MultipartFile chunk, @RequestParam("fileId") String fileId) { // Append chunk data to the file return ResponseEntity.ok("Chunk uploaded successfully!"); }

2. Optimize for Cloud Storage (e.g., AWS S3, Azure Blob)

Use SDKs for Direct Uploads

Use cloud-specific SDKs (e.g., AWS SDK for S3) to upload files directly to cloud storage instead of saving locally first:javaCopy code@PostMapping("/uploadToS3") public ResponseEntity<String> uploadToS3(@RequestParam("file") MultipartFile file) throws IOException { AmazonS3 s3Client = AmazonS3ClientBuilder.defaultClient(); String bucketName = "your-bucket-name"; s3Client.putObject(bucketName, file.getOriginalFilename(), file.getInputStream(), new ObjectMetadata()); return ResponseEntity.ok("File uploaded to S3!"); }

Enable S3 Transfer Acceleration

Use Transfer Acceleration for faster uploads to Amazon S3:javaCopy codeAmazonS3 s3Client = AmazonS3ClientBuilder.standard() .withAccelerateModeEnabled(true) .build();

3. Optimize File Transfer in REST APIs

Enable Compression

Compress file responses to reduce data size:yamlCopy codespring: servlet: multipart: enabled: true max-file-size: 50MB max-request-size: 50MB gzip: enabled: true min-size: 1024

Use HTTP/2

Switch to HTTP/2 for better concurrency and reduced latency:yamlCopy codeserver: http2: enabled: true

Resume Interrupted Uploads

Use ETag or file metadata to allow resumable file uploads:javaCopy code@PostMapping("/resumeUpload") public ResponseEntity<String> resumeUpload(@RequestParam("file") MultipartFile file, @RequestParam("offset") Long offset) { // Resume logic based on offset return ResponseEntity.ok("Upload resumed!"); }

4. Parallelize File Transfers

Multithreaded File Upload

Use a ThreadPoolExecutor or Spring's TaskExecutor:Copy code

In addition to using @Async, there are other strategies and tools to improve file transfer performance and reliability in a Java Spring application. Here are some approaches:

1. Reactive Programming with Spring WebFlux

For truly non-blocking and asynchronous file transfers, use Spring WebFlux:

Streaming File Upload:javaCopy code@PostMapping(value = "/upload", consumes = MediaType.MULTIPART_FORM_DATA_VALUE) public Mono<Void> uploadFile(Flux<FilePart> filePartFlux) { return filePartFlux.flatMap(filePart -> { Path path = Paths.get("target-directory/" + filePart.filename()); return filePart.transferTo(path); }).then(); }

Streaming File Download:javaCopy code@GetMapping(value = "/download", produces = MediaType.APPLICATION_OCTET_STREAM_VALUE) public Mono<ResponseEntity<Resource>> downloadFile() { Path path = Paths.get("target-directory/large-file.txt"); return Mono.just(ResponseEntity.ok() .header(HttpHeaders.CONTENT_DISPOSITION, "attachment; filename=large-file.txt") .body(new FileSystemResource(path))); }

2. Use CompletableFuture and ExecutorService

Combine CompletableFuture with a custom ExecutorService for better control:javaCopy code@PostMapping("/upload") public CompletableFuture<String> uploadFile(@RequestParam("file") MultipartFile file) { ExecutorService executor = Executors.newFixedThreadPool(4); return CompletableFuture.supplyAsync(() -> { try { // File processing logic Files.copy(file.getInputStream(), Paths.get("target/" + file.getOriginalFilename()), StandardCopyOption.REPLACE_EXISTING); return "File uploaded successfully!"; } catch (IOException e) { throw new RuntimeException("File upload failed!", e); } }, executor); }

3. Apache Kafka for Asynchronous Processing

Use Apache Kafka to decouple file upload from processing:

Producer sends file metadata to Kafka after upload:javaCopy code@PostMapping("/upload") public ResponseEntity<String> uploadFile(@RequestParam("file") MultipartFile file) throws IOException { // Save the file Files.copy(file.getInputStream(), Paths.get("target/" + file.getOriginalFilename())); // Send metadata to Kafka kafkaTemplate.send("file-topic", file.getOriginalFilename()); return ResponseEntity.ok("File uploaded and queued for processing!"); }

Consumer processes files asynchronously:javaCopy code@KafkaListener(topics = "file-topic", groupId = "file-group") public void processFile(String fileName) { // Process the file Path path = Paths.get("target/" + fileName); System.out.println("Processing file: " + path); }

4. Chunked File Transfers

Handle large files by uploading them in chunks:

Frontend sends file chunks with an identifier.

Backend reconstructs the file:javaCopy code@PostMapping("/uploadChunk") public ResponseEntity<String> uploadChunk(@RequestParam("chunk") MultipartFile chunk, @RequestParam("fileId") String fileId, @RequestParam("chunkNumber") int chunkNumber) { Path path = Paths.get("target/" + fileId + "_" + chunkNumber); try { Files.copy(chunk.getInputStream(), path, StandardCopyOption.REPLACE_EXISTING); } catch (IOException e) { return ResponseEntity.status(HttpStatus.INTERNAL_SERVER_ERROR).body("Failed to upload chunk"); } return ResponseEntity.ok("Chunk uploaded successfully!"); }

Reassemble chunks when all are uploaded.

5. WebSockets for Real-Time Progress

Use WebSockets to provide real-time feedback on file transfer progress:javaCopy code@Controller public class WebSocketController { @MessageMapping("/uploadStatus") @SendTo("/topic/progress") public String sendProgress(String status) { return status; } }

Send progress updates from the server while uploading:javaCopy codesimpMessagingTemplate.convertAndSend("/topic/progress", "50% completed");

6. Redis or Database Queues

Use a Redis queue or a database to handle file metadata asynchronously.

Enqueue metadata after upload:javaCopy coderedisTemplate.opsForList().leftPush("fileQueue", fileMetadata);

Process the queue in the background:javaCopy codeString metadata = redisTemplate.opsForList().rightPop("fileQueue");

7. Use MultipartConfig for Large Files

Configure multipart file handling for large files in application.properties:propertiesCopy codespring.servlet.multipart.max-file-size=1GB spring.servlet.multipart.max-request-size=2GB

Custom bean for fine-tuning:javaCopy code@Bean public MultipartConfigElement multipartConfigElement() { MultipartConfigFactory factory = new MultipartConfigFactory(); factory.setMaxFileSize("1GB"); factory.setMaxRequestSize("2GB"); return factory.createMultipartConfig(); }

8. Use AWS S3 for Direct Uploads

Use pre-signed URLs to offload upload work to the client:javaCopy code@GetMapping("/generatePresignedUrl") public ResponseEntity<String> generatePresignedUrl(@RequestParam String fileName) { AmazonS3 s3Client = AmazonS3ClientBuilder.defaultClient(); GeneratePresignedUrlRequest generatePresignedUrlRequest = new GeneratePresignedUrlRequest("bucket-name", fileName) .withMethod(HttpMethod.PUT) .withExpiration(new Date(System.currentTimeMillis() + 3600 * 1000)); URL url = s3Client.generatePresignedUrl(generatePresignedUrlRequest); return ResponseEntity.ok(url.toString()); }

The client uploads directly to S3 using the URL.

9. Optimize File Transfer for High Performance

Compression

Compress files before upload to reduce transfer size:javaCopy codebyte[] compressedData = compress(file.getBytes()); Files.write(Paths.get("compressed-file.gz"), compressedData);

Load Balancing

Use a load balancer (e.g., AWS ALB or NGINX) for scalable upload endpoints.

Retry Logic

Implement retries for failed transfers to improve reliability:javaCopy codeint maxRetries = 3; while (retries < maxRetries) { try { // Attempt file upload break; } catch (IOException e) { retries++; if (retries == maxRetries) { throw e; } } }

These strategies can enhance file transfer performance, scalability, and reliability in Java Spring applications. Let me know if you need code examples for specific use cases!

4o

You said:

S3 relaibility

ChatGPT said:

ChatGPT

Ensuring reliability when transferring files to Amazon S3 involves minimizing failures, handling errors effectively, and ensuring data integrity. Below are best practices and techniques to achieve reliable file transfers to S3:

1. Use Multipart Upload for Large Files

Multipart uploads split large files into smaller parts, upload them in parallel, and reassemble them in S3. This improves reliability and speeds up transfers.

Example Using AWS SDK (Java)

java

Copy code

AmazonS3 s3Client = AmazonS3ClientBuilder.defaultClient(); String bucketName = "your-bucket-name"; String fileName = "large-file.zip"; String keyName = "uploads/" + fileName; File file = new File(fileName); TransferManager tm = TransferManagerBuilder.standard().withS3Client(s3Client).build(); Upload upload = tm.upload(bucketName, keyName, file); try { upload.waitForCompletion(); System.out.println("File uploaded successfully!"); } catch (AmazonClientException e) { System.err.println("Upload failed: " + e.getMessage()); }

2. Use Retry Logic

Add retry mechanisms to handle intermittent network issues or service interruptions.

AWS SDK Retry Settings

The AWS SDK retries failed requests automatically, but you can customize it:

java

Copy code

ClientConfiguration config = new ClientConfiguration(); config.setRetryPolicy(PredefinedRetryPolicies.getDefaultRetryPolicyWithCustomMaxRetries(5)); AmazonS3 s3Client = AmazonS3ClientBuilder.standard() .withClientConfiguration(config) .build();

3. Enable Transfer Acceleration

Transfer Acceleration uses AWS edge locations to improve upload speeds over long distances.

Enable Transfer Acceleration in S3

Enable it in the S3 bucket settings.

Update your client code to use the accelerated endpoint:

java

Copy code

AmazonS3 s3Client = AmazonS3ClientBuilder.standard() .withAccelerateModeEnabled(true) .build();

4. Validate Data Integrity

Use MD5 checksums to ensure the uploaded file matches the original file.

AWS CLI Example

bash

Copy code

aws s3 cp file.txt s3://your-bucket/ --content-md5 <calculated-md5>

Java Example

java

Copy code

String md5 = Base64.getEncoder().encodeToString(DigestUtils.md5(new FileInputStream(file))); ObjectMetadata metadata = new ObjectMetadata(); metadata.setContentMD5(md5); PutObjectRequest request = new PutObjectRequest(bucketName, keyName, new FileInputStream(file), metadata); s3Client.putObject(request);

5. Monitor File Transfers

Use CloudWatch Metrics to monitor S3 request success, failures, and latencies.

Monitor transfer progress with TransferManager in the AWS SDK:

java

Copy code

TransferManager tm = TransferManagerBuilder.standard().withS3Client(s3Client).build(); Upload upload = tm.upload(bucketName, keyName, file); upload.addProgressListener(progressEvent -> { System.out.println("Transferred bytes: " + progressEvent.getBytesTransferred()); }); upload.waitForCompletion();

6. Handle Failures Gracefully

Use Resumable Transfers for interrupted uploads with TransferManager:

java

Copy code

TransferManager tm = TransferManagerBuilder.standard().build(); Upload upload = tm.upload(bucketName, keyName, file); // Add a progress listener upload.addProgressListener((ProgressListener) progressEvent -> { System.out.println("Transferred: " + progressEvent.getBytesTransferred()); }); // Graceful failure handling try { upload.waitForCompletion(); } catch (AmazonClientException e) { System.err.println("Error occurred: " + e.getMessage()); }

7. Use Pre-Signed URLs

Generate pre-signed URLs for reliable, time-limited uploads directly from the client, reducing the load on your server.

Generate Pre-Signed URL

java

Copy code

GeneratePresignedUrlRequest generatePresignedUrlRequest = new GeneratePresignedUrlRequest(bucketName, keyName) .withMethod(HttpMethod.PUT) .withExpiration(new Date(System.currentTimeMillis() + 3600 * 1000)); URL url = s3Client.generatePresignedUrl(generatePresignedUrlRequest); System.out.println("Pre-Signed URL: " + url);

8. Use S3 Event Notifications

Configure S3 Event Notifications to trigger AWS Lambda or SNS for post-upload validation, processing, or alerting on failures.

Example: Notification for Upload Completion

Configure your S3 bucket to send an event to an SQS queue or Lambda function upon successful upload.

9. Leverage Data Replication

Use S3 Cross-Region Replication (CRR) or Same-Region Replication (SRR) for redundancy.

Automatically replicate files to another bucket for disaster recovery.

10. Automate with AWS DataSync

Use AWS DataSync for automated and highly reliable file transfers between on-premises storage and S3. It includes built-in retry and integrity checks.

11. Optimize Network Performance

Compress files before uploading:javaCopy codeFiles.write(Paths.get("compressed-file.gz"), gzipCompress(fileContent));

Use efficient transfer protocols (e.g., HTTP/2 if supported by your client).

12. Secure Transfers

Always use HTTPS for secure communication.

Use S3 Bucket Policies and IAM Roles to restrict access to authorized users.

Example: Complete Reliable Upload Function

java

Copy code

public void reliableUpload(File file, String bucketName, String keyName) { AmazonS3 s3Client = AmazonS3ClientBuilder.defaultClient(); TransferManager tm = TransferManagerBuilder.standard().withS3Client(s3Client).build(); try { // Multipart upload Upload upload = tm.upload(bucketName, keyName, file); upload.addProgressListener((ProgressListener) progressEvent -> { System.out.println("Transferred bytes: " + progressEvent.getBytesTransferred()); }); upload.waitForCompletion(); System.out.println("File uploaded successfully!"); } catch (AmazonClientException e) { System.err.println("Upload failed: " + e.getMessage()); } finally { tm.shutdownNow(); } }

0 notes

Text

Understanding Jenkins Jobs: Essential Component of CI/CD Automation

Introduction

In the world of DevOps, continuous integration and continuous deployment (CI/CD) are crucial practices that help ensure rapid and reliable software delivery. Jenkins, an open-source automation server, has become a staple in CI/CD pipelines. Central to Jenkins’ functionality are Jenkins jobs, which are the building blocks that define specific tasks and workflows. In this blog, we’ll explore what Jenkins jobs are, how to create and manage them, and best practices for optimizing your CI/CD pipeline.

What Are Jenkins Jobs?

Jenkins jobs, also known as projects, are configurations that define a sequence of operations Jenkins should perform. These operations can range from simple tasks like running a script to complex workflows involving multiple stages and dependencies. Jobs are the core components in Jenkins that facilitate building, testing, and deploying code.

Types of Jenkins Jobs

Jenkins offers several types of jobs to cater to different requirements:

Freestyle Project: The most basic type of Jenkins job, offering a wide range of configuration options. It’s suitable for simple tasks and straightforward automation workflows.

Pipeline: Defined using a Jenkinsfile, a pipeline job allows for complex, multi-step processes. Pipelines support code review, version control, and can be defined in Groovy.

Multibranch Pipeline: Automatically creates a pipeline for each branch in a repository, making it ideal for projects with multiple branches.

Folder: Not a job per se, but a way to organize jobs and other folders hierarchically.

External Job: Used to monitor the execution of a job that is run outside Jenkins.

Matrix Project: Allows you to run multiple configurations of a job in parallel, ideal for testing against different environments.

Creating a Jenkins Job

Creating a Jenkins job is straightforward:

Open Jenkins Dashboard: Access your Jenkins instance and navigate to the dashboard.

New Item: Click on “New Item” on the left-hand side menu.

Name Your Job: Enter a name for your job and select the type of job you want to create (e.g., Freestyle project, Pipeline).

Configure Your Job: Define the necessary configurations such as source code management, build triggers, build environment, and post-build actions.

Save: Once configured, click “Save” to create the job.

Configuring a Freestyle Project

Freestyle projects are the simplest and most flexible type of Jenkins job. Here’s how to configure one:

Source Code Management: Connect your job to a version control system (e.g., Git, SVN) by providing repository URL and credentials.

Build Triggers: Define conditions under which the job should be triggered, such as schedule (cron), Git hooks, or after another build completes.

Build Environment: Set up the environment in which your job will run. This can include setting environment variables, using a specific JDK, or running within a Docker container.

Build Steps: Define the actual tasks that Jenkins will perform. Common build steps include executing shell commands, running batch files, invoking Gradle or Maven, and compiling code.

Post-Build Actions: Specify actions to take after the build completes, such as sending notifications, archiving artifacts, or deploying to a server.

Managing Jenkins Jobs

Effective management of Jenkins jobs is crucial for maintaining a smooth CI/CD pipeline. Here are some best practices:

Use Folders: Organize jobs into folders based on projects or teams to keep the dashboard clean and manageable.

Job Naming Conventions: Adopt a consistent naming convention for jobs to make it easier to identify their purpose.

Parameterization: Use job parameters to make jobs reusable for different environments or configurations.

Version Control for Jenkinsfile: Store your Jenkinsfile in the same repository as your code to keep your pipeline definition under version control.

Regular Cleanup: Periodically review and delete obsolete jobs to reduce clutter and improve performance.

Enhanced Security Measures

No matter how robust your Jenkins setup is, human error can still pose a significant risk. It’s vital to educate your team about best practices for security and their role in protecting sensitive information. Conduct regular training sessions and provide clear guidelines on handling sensitive data, identifying phishing attempts, and reporting suspicious activity. Implementing these enhanced security measures will significantly reduce the risk of breaches and ensure a more secure environment.

Conclusion

Jenkins jobs are fundamental to automating tasks within a CI/CD pipeline. By understanding the different types of jobs, how to create and manage them, and adhering to best practices, you can optimize your development workflow and ensure faster, more reliable software delivery. Whether you’re just getting started with Jenkins or looking to refine your existing setup, mastering Jenkins jobs is an essential step in your DevOps journey.

0 notes

Text

Docker Registry Pruner release!

tl;dr: We are open-sourcing a new tool to apply retention policies to Docker images stored in a Docker Registry: ✨tumblr/docker-registry-pruner✨.

At Tumblr, we have been leaning into containerization of workloads for a number of years. One of the most critical components for a Docker-based build and deployment pipeline is the Registry. Over the past 5+ years, we have built a huge amount of Docker containers. We are constantly shipping new updates and building new systems that deprecate others. Some of our repos can have 100s of commits a day, each creating a new image via our CI/CD pipeline. Because of this rapid churn, we create a ton of Docker images; some of them are in production, others have been deprecated and are no longer in use. These images accumulate in our Registry, eating up storage space and slowing down Registry metadata operations.

Images can range from a few hundred MB to a few GB; over time, this can really add up to serious storage utilization. In order to reclaim space and keep the working set of images in our registry bounded, we created, and are now open-sourcing, the ✨tumblr/docker-registry-pruner✨! This tool allows you to specify retention policies for images in an API v2 compatible registry. Through a declarative configuration, the tool will match images and tags via regex, and then retain images by applying retention policies. Example policies could be something like keeping the last N days of images, keeping the latest N images, or keeping the last N versions (semantically sorted via semantic versioning).

Configuration Format

A more precise definition of how the tool allows you to select images, tags, and retention policies is below. A config is made up of registry connection details and a list of rules. Each rule is a combination of at least 1 selector and an action.

Selectors

A selector is a predicate that images must satisfy to be considered by the Action for deletion.

repos: a list of repositories to apply this rule to. This is literal string matching, not regex. (i.e. tumblr/someservice)

labels is a map of Docker labels that must be present on the Manifest. You can set these in your Dockerfiles with LABEL foo=bar. This is useful to create blanket rules for image retention that allow image owners to opt in to cleanups on their own.

match_tags: a list of regular expressions. Any matching image will have the rule action evaluated against it (i.e. ^v\d+)

ignore_tags: a list of regular expressions. Any matching image will explicitly not be evaluated, even if it would have matched match_tags

NOTE: the ^latest$ tag is always implicitly inherited into ignore_tags.

Actions

You must provide one action, either keep_versions, keep_recent, or keep_days. Images that match the selector and fail the action predicate will be marked for deletion.

keep_versions: Retain the latest N versions of this image, as defined by semantic version ordering. This requires that your tags use semantic versioning.

keep_days: Retain the only images that have been created in the last N days, ordered by image modified date.

keep_recent: Retain the latest N images, ordered by the image's last modified date.

You can see the documentation for more details, or check out the example.yaml configuration!

Tumblr uses this tool to (via Kubernetes CronJob) periodically scan and prune unneeded images from a variety of Docker repos. Hopefully this tool will help other organizations manage the sprawl of Docker images caused by rapid development and CI/CD, as well!

26 notes

·

View notes

Text

Dokev release date

DOKEV RELEASE DATE UPDATE

DOKEV RELEASE DATE CODE

DOKEV RELEASE DATE WINDOWS

Better sandboxed workflow and enhanced cross compilation by in #9744.

Add nolintlint and clean up nolint directives by in #9738.

Use correct YAML marshal func by in #9712.

DOKEV RELEASE DATE UPDATE

Update Dockerfile syntax to latest stable, and rename docs Dockerfile by in #9711.Remove unused workflows, especially the pr-closed which always failed by in #9689.Avoid pulling same images multiple times ⚡️ by in #9173.Apply compose model on compose kill, add -remove-orphans by in #9764.Don't wait for disabled dependency by in #9759.Filter out commandConn.Close* warning message by in #9748.Don't apply default pull policy from command line if one is defined in service configuration by in #9720.Remove extra whitespaces in help text of some subcommands by in #9710.If command is ran with a compose file, apply the compose model by in #9375.Do not stop dependency containers by in #9701.

DOKEV RELEASE DATE WINDOWS

Resolve environment variables case-insensitively on Windows by in #9438.

Update usage strings for consistency by in #9706.

Give environment variables precedence back to OS over.

Build windows/arm64 and linux/riscv64 binaries by in #9751.

Apply newly loaded envvars to "DockerCli" and "APIClient" by in #9745.

made their first contribution in #9828.

made their first contribution in #9703.

made their first contribution in #9810.

made their first contribution in #9798.

update compose-go version to v1.5.1 by in #9840.

build(deps): bump go.opentelemetry.io/otel from 1.4.1 to 1.9.0 by in #9821.

build(deps): bump /AlecAivazis/survey/v2 from 2.3.5 to 2.3.6 by in #9830.

build(deps): bump go.opentelemetry.io/otel from 1.9.0 to 1.10.0 by in #9835.

ci: reduce noise from dependabot on Docker deps by in #9770.

build: label built images for reliable cleanup on down by in #9819.

Only attempt to start specified services on compose start by in #9797.

Apply newly loaded envvars to "DockerCli" and "APIClient" by in #9817.

patch: build.go access custom labels directly cause panic by in #9810.

Fix docker-compose convert that turns $ into $$ when using the -no-interpolate option by in #9703.

DOKEV RELEASE DATE CODE

Correctly capture exit code when service has dependencies by in #9794.

logs: filter to services from current Compose file by in #9811.

chore: add improvement output warning when pulling image by in #9829.

We’ll be keeping this page up to date with all the latest updates, so stay tuned for all major announcements from the game and Pearl Abyss. However, if the first look is anything to go by, this should be an incredibly fun game to play. But you be the judge for yourself:Īt the time of writing this, there is no official release date for the game – only a teaser trailer for the exciting new open-world action-adventure game. It’s safe to say, this could be one of the most attractive-looking games of the year. The game’s lead character would now catch creatures in a Pokemon-Esq manner, presumably collecting as many as you can during your adventure.ĭuring the Gamescom Opening Night Live event last night, viewers got a glimpse of some actual in-game & gameplay footage from the Korean title. Pearl Abyss, developers of the Korean game, decided to change the direction for Dokev recently – changing it from an MMO to an open-world action-adventure title. Dokev’s new trailer premiered last night at Gamescom 2021, sparking even more excitement around the game’s imminent release.

0 notes

Text

Iobserve windows

Binary monitoring writers using Java NIO.Bug fixes and improvements to code, performance, tests, build scripts,.Major refinements and extensions to TSLib and OPAD.Various samplers to collect data from the JVM.Migrated to Gradle for build automation.Trace analysis: automatic repair of broken traces.Example for monitoring traces in distributed applications based on the RESTful Netflix OSS RSS Reader Recipe, provided via container-based virtualization (Docker).Probes for collecting distributed traces in REST-based environments with Jersey.New reader and writer for Advanced Message Queuing Protocol (AMQP).Standalone tool for resource monitoring.New servlet filter to start the Sigar-based samplers (previously only a servlet for CPU, memory/swap).New probes and corresponding records for network and disk usage load average.Monitoring of system-level statistics based on the Sigar API:.Bug fixes and improvements to code, performance, tests,.Docker containers for examples (JPetStore livedemo, RSS example),.Jenkins-based continuous delivery pipeline integrated with GitHub.Migration to Atlassian Cloud (Confluence and Jira).Explicit support for HornetQ and OpenJMS.Refined architecture including minor (internal) API changes.Performance improvements in the monitoring component (including.Records provide API access to property names.Updated and new documentation are now located in the Kieker wiki.Updated the build system to provide separate bundles for all tools.Switched to Jenkins declarative pipeline.Replacing Jar file dependencies to Maven Central dependencies.Included Clover test coverage tool, migrated from FindBugs to.Shared tool features can be found in kieker-tools Restructuring of sub-projects tools have now separate projects and.Many cleanups and improved test coverage.The goal is to improve the dependency management. This is supposed to be a schematic example and other technology-specific Moved the Kafka readers and writers into a separate Gradle module.Ensuring support for Java 7, 8, 9, and 10.Introduced new data bridge tool, called collector.Added monitoring of database calls (including the SQL statement).Introduced new Kieker tool framework covering commandline andĬonfigurations file evaluation (see Writing Tools and Services).New TeeTime reader stages with common interface to use them in.Support for additional compression algorithms in the file writers.Monitoring support for Spring-based REST communication with AspectJ.Generic file writer supporting different compression types.New file system writer to replace the existing ones in the next.TeeTime-based infrastructure replaces the existing one. TeeTime-based framework and filters exist in parallel to theĮxisting infrastructure. Integration of a novel pipes-and-filter framework and filters in.Bug fixes and improvements to code, performance, tests, documentation,.Migrated the deprecated LaTeX-based user guide to Read The Docs ().Use of GitHub actions to test common platforms (Linux, Mac, Windows) and Java versions.Converted Kieker tools to multi-project builds.Replaced additional local Jar dependencies by using the Maven dependencies.Improved AspectJ probes, e.g., by introducing before/after advices for cflow pointcuts, OpenJDK 11 support.Added new analysis stages from the iObserve research project.Migration of analysis stages to the TeeTime-based Kieker analysis.Please refer to the Documentation for details on using Kieker. Starting with version 1.4, the Kieker ticket system contains details on the changes. This document summarizes changes made in all releases starting with 0.5.

0 notes

Text

Rubymine m1

#Rubymine m1 how to

#Rubymine m1 install

#Rubymine m1 code

#Rubymine m1 code

After making your Dockerfile, put this code on your Dockerfile. An example is pre-built installation instructions for MySQL, or PHP, or Node.js. There are pre-built set of rules found on Docker hub. Make a file named DockerfileĪ Dockerfile is a file with set of rules you'll set that docker will follow. You can have multiple Dockerfiles for different services, and one docker-compose file to tie them up together. Dockerfile and docker-compose file works hand in hand.

#Rubymine m1 how to

Think of these two files as set of instructions Docker follows on how to setup your virtual container. This is where most of the operation happens. The ignorefiles are a little bit lenghty that's why I just put links.ĪDVERTISEMENT Dockerfile and docker-compose file The look of your project folder will look similar to this. Īnd if you use git, put this snippet of code in your. Usually, the list of recommended files to ignore are usually found in the internet. An example is when you have a minified assets, js, css files, that gets changed from time to time whenever you change the original code. What is a dockerignore file? Dockerignore file just tells Docker which files to ignore in it's container. But usually, it's just download and open the installer, register an account on dockerhub, and you're good to go. I'm not gonna talk much about installing docker, there are many videos out there. Installing Docker and including Ignorefiles 1. In the next section, we'll get our hands dirty with docker setup. With a few commands and a few lines of a configuration file, you're already setup. Do you really want to go install, troubleshoot, and follow varying installation instructions that depend on the machine? Then make the pieces work, one-by-one on every machine, without the assurance of they won't encounter any varying errors along the way? You have 12 clean slate machines, with a combination of linux and mac computers. Let's say you have a team of developers, designers, and testers, and have an app with a back-end server, database server, and caching server. Dan said in his tutorial: "Docker can help there because we can make one or more images that can then be converted into running containers, and those containers can run on our different developer, and even designer machines." When I was watching an online tutorial about docker, Dan Wahlin, the teacher, said that one of the benefits of docker is to accelerate developer on-boarding. With Docker, it's just a few commands to cleanup. Install docker, load your dockerfiles, run a few commands in your terminal, you're already setup! And also, what if you want to uninstall all that you did? It's hard to track what steps to undo.

#Rubymine m1 install

I forgot how to install it again and it's a pain to repeat the steps. But I didn't list or take note of the websites I've visited to make it work on my machine. It took me over 3 hours of installing, troubleshooting, and searching the documentation to get it working. Setting up Ruby On Rails with with these tools is great. Why use docker? Why not just install it on your local machine, and install Ruby Version Manager (rvm) or Ruby Environment (rbenv)?

0 notes

Text

[Media] Nebula

Nebula Nebula is a Cloud and (hopefully) DevOps Penetration Testing framework. It is build with modules for each provider and each functionality. As of April 2021, it only covers AWS, but is currently an ongoing project and hopefully will continue to grow to test GCP, Azure, Kubernetes, Docker, or automation engines like Ansible, Terraform, Chef, etc. Currently covers: ▫️ S3 Bucket name bruteforce ▫️ IAM, EC2, S3, STS and Lambda Enumeration ▫️ IAM, EC2, STS, and S3 exploitation ▫️ SSM Enumeration + Exploitation ▫️ Custom HTTP User-Agent ▫️ Enumerate Read Privileges (working on write privs) ▫️ Reverse Shell ▫️ No creds Reconnaisance There are currently 67 modules covering: ▫️ Reconnaissance ▫️ Enumeration ▫️ Exploit ▫️ Cleanup ▫️ Reverse Shell https://github.com/gl4ssesbo1/Nebula

0 notes

Text

Docker Prune Automating Cleanup Across Multiple Container Hosts

Docker Prune Automating Cleanup Across Multiple Container Hosts @vexpert #vmwarecommunities #automation #cicd #dockerprune #docker #dockercontainers #pipeline #overlay2 #dockerimages #bestdockercommands #homelab #virtualization

If you run many Docker hosts or multiple hosts in a Swarm cluster, if you are updating and respinning your containers (and you should be), you will have space accumulated on your Docker hosts from things like old container images, container overlay storage, and other things that can add up to a significant amount of storage. There is a helpful built-in command called docker prune that helps to…

0 notes

Text

Switchresx Crack

Download SwitchResX for Mac to custom resolutions, desktop icon position saving & more. SwitchResX has had 2 updates within the past 6 months. SwitchResX fixes all that and allows you to select any possible screen mode that your device supports from a comprehensive (yet easy to use) user interface. As a bonus, the missing screen modes will appear in Apple's Display preferences like magic, as soon as you start SwitchResX. Downloads like Switchresx 4.9.1 Macos may often include a crack, keygen, serial number or activation code to make it the full version. If it does then it will be found in the download file itself. Copy & Paste Links The word 'serial' in warez context means a unique number which identifies the license of the software as being valid. SwitchResX gives you unparalleled control over your monitor's resolution. Resolution Management Whether you prefer to use the menu bar or nested contextual menus, SwitchResX gives you constant, simplified access to the resolution management options you need. Controls include. Monitor Resolution. SwitchResX is a reliable macOS application designed from the ground up to help you to stay in control of your Mac’s monitor and its resolutions. With the help of SwitchResX you can manage the resolution of anything from MacBook Retina displays and Cinema Displays to TV sets and projectors.

In this guide I will show you how to uninstall SwitchResX on your Mac. This uninstall process does not follow standard procedures. I tried using this software to get an ultra-wide resolution out of my mac air. Unfortunately I was not able to get it to work. I’m not sure if it was user error or software but ultimately led to uninstalling the software.

Preferences

First we need to go to the Apple Icon in the top left of the screen, then select the System Preferences option.

Once the Preferences open up click on the SwitchResX option.

Uninstall

After a few seconds this should open up the SwitchResX preferences. With the preferences as the active window go to the top menu and select SwitchResX Control then Uninstall SwitchResX..

A dialog box will come up asking you to confirm uninstalling SwitchResX. Click Yes to proceed with the uninstall.

Once you select Yes you will get a prompt asking to let SwitchResX makes changes to the system. https://huntercountry477.tumblr.com/post/654032251060912128/linux-download-docker. We need to input our username and password so that SwitchResX can take control and uninstall itself.

Little snitch app store. Preview, buy, or rent movies in up to 1080p HD on iTunes. Browse more than 65,000 comedies, romances, classics, indies, and thrillers and your downloads will be instantly accessible. Mar 10, 2021 How long until the App Store or Google Play pulls the app for having hate rhetoric or some such nonsense? I think there are work arounds to that problem, but it’s going to kill the convenience of the app and cause Ralph to lose more customers. Objectively, even with solid app development, there is literally no chance of this succeeding.

Catalina dmg. Once the uninstall completes, you will get a dialog box like below confirming it has been uninstalled. We still need to do one more thing to clean up.

Cleanup

You will most likely be left at this Preferences window for SwitchResX. Click the back button that is pointed out by the red arrow below.

Now look for the SwitchResX icon and right click (or two finger click) and select Remove 'SwitchResX' Preference Pane. At this point you have now successfully uninstalled SwitchResX. If these instructions worked for you or need to make a modification, please drop a comment below to help future readers!

If you would like to suggest a new article or video to be released, please reach out via the Contact Page. I look forward to hearing your feedback and providing content that is helpful for you!

Fifa 14 winter roster update download. Jan 10, 2018 - More Tags: fifa 12 official patch, fifa 12 squad update patch, fifa 12 squad update patch pc, fifa 12 squad update 2013, fifa 12 winter roster. This 'patch' will update all the team rosters in FIFA 14 as of (all transfers are included). Squad.zip 4y old 9,427 DLs 4 mb. Manual Download. FIFA 14 ModdingWay Mod Update 22.1.0 - Link Fixed. Get it from our Download Center. FIFA 14 ModdingWay Mod Winter Transfers Update Released. We have released FIFA 14 ModdingWay Update 13.0.0, including Winter Transfers. Updated rosters and lineups for all European Teams in Rest of The World. Sure to visit him frequently for more great mods ) or from our Download Center. We have released a small update for FIFA 14 ModdingWay Mod that fixes all bugs reported from previous World Cup update. Get it from our Download Center.

Sample Results From Member Downloads

Download NameDate AddedSpeedSwitchresx 3.8.720-May-20192,415 KB/sSwitchresx 3.8.7 Download20-May-20192,739 KB/sSwitchresx 3.8.7 Cracked19-May-20192,207 KB/sSwitchresx 3.8.7 Unlock Key16-May-20192,821 KB/sSwitchresx 3.8.7 Torrent16-May-20192,127 KB/sSwitchresx.3.8.7_15.May.2019.rar15-May-20192,635 KB/s(FULL) Switchresx 3.8.711-May-20192,596 KB/s

Showing 7 download results of 7 for Switchresx 3.8.7

Switchresx Crack

To create more accurate search results for Switchresx 3.8.7 try to exclude using commonly used keywords such as: crack, download, serial, keygen, torrent, warez, etc. Simplifying your search should return more download results. Many downloads like Switchresx 3.8.7 may also include a crack, serial number, unlock code, cd key or keygen (key generator). If this is the case it is usually found in the full download archive itself.

Switchresx 4.9.1 Crack

FileFixation.com is a new file sharing web service which gives you access to literally hundreds of thousands of direct downloads including software, games, movies, tv shows, mp3 albums, ebooks and more! Our downloads database is updated daily to provide the latest download releases on offer. To celebrate our launch we are offering unlimited full download access for FREE! This is a limited offer and will soon expire and revert back to the normal member price. We now have 285,464 downloads in the member section. Take the FileFixation tour now for more detailed information!

Switchresx 4.10.1 Crack

The word 'crack' in this context means the action of removing the copy protection from commercial software. A crack is a set of instructions or patch used to remove copy protection from a piece of software or to unlock features from a demo or time-limited trial. There are crack groups who work together in order to crack software, games, etc. If you search for Switchresx 3.8.7 Crack, you will often see the word 'crack' amongst the results which means it is the full version of the product.

Switchresx Crack

The word 'serial' means a unique number which identifies the license of the software as being valid. All retail software uses a serial number or key of some form. The installation often requires the user to enter a valid serial number to proceed. A serial can also be referred to as a 'CD Key'. When you search for Switchresx 3.8.7 Serial for example, you may find the word 'serial' amongst the results. This usually means your software download includes a serial number of some sort.

The word 'keygen' means a small program that can generate a cd key, activation number, license code, serial number, or registration number for a piece of software. KeyGen is a shortened word for Key Generator. A keygen is made available through crack groups free to download. When writing a keygen, the author will identify the algorithm used in creating a valid cd key. Once the algorithm is identified they can then incorporate this into the keygen. If you search a download site for Switchresx 3.8.7 Keygen, this often means your download includes a keygen.

Switchresx 4.9.0 Crack

Romancing saga 2 english. Switchresx 3.8.7, Crack Download, Lostseason 2 Full HDTV, Adobe Persuasion 4.0 For Mac, Hogop Comwater Sports Shoes P602 HTML, Codic Do Sound Forge 9, Eve 2.5 Plagiarism Detection System, Eragon DVDrip Xvidsagalounge, Beetlebug 3, Vasco Rossi, Buick Customcarcovers Co, Cract Winsamm, Audiodesk 3.0, Mpeg Video Wizard DVD 4.0.4.114, Rise Of Prussia, Dwg Bibliothe, Accordents Presenterplus, The National Anthems, Warfare Reloaded, Caddy Electrical 5 Full Y Eado, Weatherby vanguard serial number search.

( Home Signup Take A Tour FAQ Testimonials Support Terms & Conditions Legal & Content Removal ) Design and Layout © 2019 FileFixation. All rights reserved.

0 notes

Text

Version 440

youtube

windows

zip

exe

macOS

app

linux

tar.gz

I had an unfortunately short week, but I did some good work. The tiled renderer has nice fixes.

tiled renderer

I regret the tiled renderer, while good most of the time, had crashes when it did go wrong. To stop this with any new errors that might pop up, the whole block now has an additional layer of error catching around it. If a tile fails to render for any reason, you now get a black square, and if some coordinate space cannot be calculated or a portion of the image is truncated, the system will now print some errors but otherwise ignore it.

A particular problem several users encountered was legacy images that have EXIF rotation metadata but were imported years ago when the client did not understand this. Therefore, hydrus thought some old image was (600x900) when it then loaded (900x600). In the old system, you would have had a weird stretch, maybe a borked rotation, but in my new tiled system it would try to draw tiles that didn't exist, causing our errors-then-crashes. The client now recognises this situation, gives you a popup, and automatically schedules metadata regeneration maintenance for the file.

some misc

You can now set a custom 'namespace' file sort (the 'series-creator-volume-chapter-page' stuff) right on a page. Just click the new 'custom' menu entry and you can type whatever you like. It should save through your session and be easy to edit again. This is prep for some better edit UI here and increased sort/collect control, so if you do a lot of namespace sorting, let me know how you get on!

I prototyped a new 'delete lock' mode, which prohibits deletion of files if they match a criteria. I am starting with if a file is archived. You can turn this mode on under options->files and trash. It mostly just ignores deletes at the moment, but in future I will improve feedback, and maybe have a padlock icon or something, and eventually attach my planned 'metadata conditional' object to it so you'll be able to delete-lock all pngs, or all files with more than four tags, or whatever you can think of.

new builds to test

This is probably just for advanced users. If you would like to help test, make sure you have a backup before you test anything on a real database!

A user has been working hard on replicating the recent macOS build work for the other releases, cribbing my private build scripts together into a unified file that builds on github itself from the source, as well as rolling out a Docker package. I have had a look over everything and we agree it is ready for wider testing, so if you would like to help out, please check out the test v440 builds here:

https://github.com/hydrusnetwork/hydrus/releases/tag/v440-test-build

These should work just like my normal builds above--the scripts are using PyInstaller and InnoSetup as I do on my machines, so it all assembles the same way--but we are interested in any errors you nonetheless encounter. We may need to hammer out some stricter library version requirements for older machines, since until now we've basically been relying on my home dev environments staying static until I next remember to run pip update.

Once we have these working well, I'd like to use this system for the main build. It makes things easier and more reliable on my end, and should improve security since the builds are assembled automatically in clean environments with publicly viewable scripts rather than my random-ass dev machines using my own dlls, batch files, and prayers. Who knows, we may even defeat the anti-virus false positives.

Also, if you would like to try the Docker package, check it out here:

https://github.com/users/hydrusnetwork/packages/container/package/hydrus

I don't know much about Docker, so while I can't help much, I'll still be interested in any feedback. If and when we are ready to switch over here, I'll be updating my help with any appropriate new backup instructions and links and so on.

Please also remember that running hydrus from source is also always an option:

https://hydrusnetwork.github.io/hydrus/help/running_from_source.html

As we went through this process of automating my builds, we've improved the requirements.txts, and I've learned a bit more about automatic environment setup, so I hope I can also add some quick setup scripts for different platforms to make running from source and even building your own release much easier.

full list

tiled renderer:

the tiled renderer now has an additional error catching layer for tile rendering and coordinate calculation and _should_ be immune to to the crashes we have seen from unhandled errors inside Qt paint events

when a tile fails to render, a full black square will be used instead. additional error information is quickly printed to the log

fixed a tile coordinate bug related to viewer initialisation and shutdown. when the coordinate space is currently bugnuts, now nothing is drawn

if the image renderer encounters a file that appears to have a different resolution to that stored in the db, it now gives you a popup and automatically schedules a metadata regen job for that file. this should catch legacy files with EXIF rotation that were imported before hydrus understood that info

when a file completes a metadata regen, if the resolution changed it now schedules a force-regen of the thumbnail too

.

the rest:

added a prototype 'delete lock' for archived files to _options->files and trash_ (issue #846). this will be expanded in future when the metadata conditional object is made to lock various other file states, and there will be some better UI feedback, a padlock icon or similar, and some improved dialog texts. if you use this, let me know how you get on!

you can now set a custom namespace sort in the file sort menu. you have to type it manually, like when setting defaults in the options, but it will save with the page and should load up again nicely in the dialog if you edit it. this is an experiment in prep for better namespace sort edit UI

fixed an issue sorting by namespaces when one of those namespaces was hidden in the 'single media' tag context. now all 'display' tags are used for sort comparison groups. if users desire the old behaviour, we'll have to add an option, so let me know

the various service-level processing errors when update files are missing or janked out now report the actual hash of the bad update file. I am chasing down one of these errors with a couple of users and cannot quite figure out why the repair code is not auto-fixing things

fixed a problem when the system tray gets an activate event at unlucky moments

the default media viewer zoom centerpoint is now the mouse

fixed a typo in the client api with wildcard/namespace tag search--sorry for the trouble!

.

some boring multiple local file services cleanup:

if you have a mixture of trash and normal thumbnails selected, the right-click menu now has separate choices for 'delete trash' and 'delete selected' 'physically now'

if you have a mixture of trash and normal thumbnails selected, the advanced delete dialog now similarly provides separate 'physical delete' options for the trashed vs all

media viewer, preview viewer, and thumbnail view delete menu service actions are now populated dynamically. it should say 'delete from my files' instead of just 'delete'

in some file selection contexts, the 'remote' filter is renamed to 'not local'

next week

I had a run of IRL stuff eating my hydrus time, but I think I am now free. I'll catch up on smaller work and keep grinding at multiple local file services.

0 notes

Text

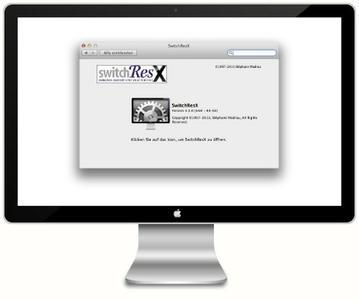

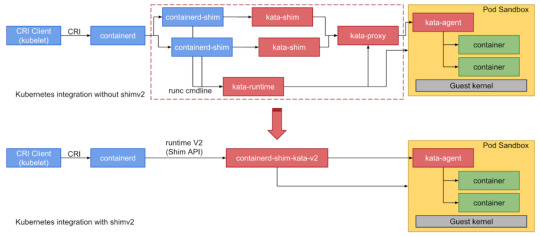

Kata Containers in Screwdriver

Screwdriver is a scalable CI/CD solution which uses Kubernetes to manage user builds. Screwdriver build workers interfaces with Kubernetes using either “executor-k8s” or “executor-k8s-vm” depending on required build isolation.

executor-k8s runs builds directly as Kubernetes pods while executor-k8s-vm uses HyperContainers along with Kubernetes for stricter build isolation with containerized Virtual Machines (VMs). This setup was ideal for running builds in an isolated, ephemeral, and lightweight environment. However, HyperContainer is now deprecated, has no support, is based on an older Docker runtime and it also required non-native Kubernetes setup for build execution. Therefore, it was time to find a new solution.

Why Kata Containers ?

Kata Containers is an open source project and community that builds a standard implementation of lightweight virtual machines (VMs) that perform like containers, but provide the workload isolation and security advantages of VMs. It combines the benefits of using a hypervisor, such as enhanced security, along with container orchestration capabilities provided by Kubernetes. It is the same team behind HyperD where they successfully merged the best parts of Intel Clear Containers with Hyper.sh RunV. As a Kubernetes runtime, Kata enables us to deprecate executor-k8s-vm and use executor-k8s exclusively for all Kubernetes based builds.

Screwdriver journey to Kata

As we faced a growing number of instabilities with the current HyperD - like network and devicemapper issues and IP cleanup workarounds, we started our initial evaluation of Kata in early 2019 (

https://github.com/screwdriver-cd/screwdriver/issues/818#issuecomment-482239236) and identified two major blockers to move ahead with Kata:

1. Security concern for privileged mode (required to run docker daemon in kata)

2. Disk performance.

We recently started reevaluating Kata in early 2020 based on a fix to “add flag to overload default privileged host device behaviour” provided by Containerd/cri (https://github.com/containerd/cri/pull/1225), but still we faced issues with disk performance and switched from overlayfs to devicemapper, which yielded significant improvement. With our two major blockers resolved and initial tests with Kata looking promising, we moved ahead with Kata.

Screwdriver Build Architecture

Replacing Hyper with Kata led to a simpler build architecture. We were able to remove the custom build setup scripts to launch Hyper VM and rely on native Kubernetes setup.

Setup

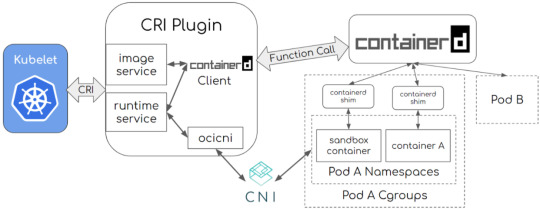

To use Kata containers for running user builds in a Screwdriver Kubernetes build cluster, a cluster admin needs to configure Kubernetes to use Containerd container runtime with Cri-plugin.

Components:

Screwdriver build Kubernetes cluster (minimum version: 1.14+) nodes must have the following components set up for using Kata containers for user builds.

Containerd:

Containerd is a container runtime that helps with management of the complete lifecycle of the container.

Reference: https://containerd.io/docs/getting-started/

CRI-Containerd plugin:

Cri-Containerd is a containerd plugin which implements Kubernetes container runtime interface. CRI plugin interacts with containerd to manage the containers.

Reference: https://github.com/containerd/cri

Image credit: containerd / cri. Photo licensed under CC-BY-4.0.

Architecture:

Image credit: containerd / cri. Photo licensed under CC-BY-4.0.

Installation:

Reference: https://github.com/containerd/cri/blob/master/docs/installation.md, https://github.com/containerd/containerd/blob/master/docs/ops.md

Crictl:

To debug, inspect, and manage their pods, containers, and container images.

Reference: https://github.com/containerd/cri/blob/master/docs/crictl.md

Kata:

Builds lightweight virtual machines that seamlessly plugin to the containers ecosystem.

Architecture:

Image credit: kata-containers Project licensed under Apache License Version 2.0

Installation:

https://github.com/kata-containers/documentation/blob/master/Developer-Guide.md#run-kata-containers-with-kubernetes

https://github.com/kata-containers/documentation/blob/master/how-to/containerd-kata.md

https://github.com/kata-containers/documentation/blob/master/how-to/how-to-use-k8s-with-cri-containerd-and-kata.md

https://github.com/kata-containers/documentation/blob/master/how-to/containerd-kata.md#kubernetes-runtimeclass

https://github.com/kata-containers/documentation/blob/master/how-to/containerd-kata.md#configuration

Routing builds to Kata in Screwdriver build cluster

Screwdriver uses Runtime Class to route builds to Kata nodes in Screwdriver build clusters. The Screwdriver plugin executor-k8s config handles this based on:

1. Pod configuration:

apiVersion: v1 kind: Pod metadata: name: kata-pod namespace: sd-build-namespace labels: sdbuild: "sd-kata-build" app: screwdriver tier: builds spec: runtimeClassName: kata containers: - name: "sd-build-container" image: <<image>> imagePullPolicy: IfNotPresent

2. Update the plugin to use k8s in your buildcluster-queue-worker configuration

https://github.com/screwdriver-cd/buildcluster-queue-worker/blob/master/config/custom-environment-variables.yaml#L4-L83

Performance

The below tables compare build setup and overall execution time for Kata and Hyper when the image is pre-cached or not cached.

Known problems

While the new Kata implementation offers a lot of advantages, there are some known problems we are aware of with fixes or workarounds:

Run images based on Rhel6 containers don't start and immediately exit

Pre-2.15 glibc: Enabled kernel_params = "vsyscall=emulate" refer kata issue https://github.com/kata-containers/runtime/issues/1916 if trouble running pre-2.15 glibc.

Yum install will hang forever: Enabled kernel_params = "init=/usr/bin/kata-agent" refer kata issue https://github.com/kata-containers/runtime/issues/1916 to get a better boot time, small footprint.

32-bit executable cannot be loaded refer kata issue https://github.com/kata-containers/runtime/issues/886: To workaround/mitigate we maintain a container exclusion list and route to current hyperd setup and we have plans to eol these containers by Q4 of this year.

Containerd IO snapshotter - Overlayfs vs devicemapper for storage driver: Devicemapper gives better performance. Overlayfs took 19.325605 seconds to write 1GB, but Devicemapper only took 5.860671 seconds.

Compatibility List

In order to use this feature, you will need these minimum versions:

API - v0.5.902

UI - v1.0.515

Build Cluster Queue Worker - v1.18.0

Launcher - v6.0.71

Contributors

Thanks to the following contributors for making this feature possible:

Lakshminarasimhan Parthasarathy

Suresh Visvanathan

Nandhakumar Venkatachalam

Pritam Paul

Chester Yuan

Min Zhang

Questions & Suggestions

We’d love to hear from you. If you have any questions, please feel free to reach out here. You can also visit us on Github and Slack.

0 notes

Text

Common Docker Commands you must know