#FaceRig

Video

youtube

Nite Time with the Princess Earth Day Special

A bit off on the timing but made an Earth Day video.

And like most of my Facerig shows... Nonsense is key. Logic is forbidden!

Enjoy the Lunacy

2 notes

Video

vimeo

Motion Graphics Specialist Course 2020-21. Character animation exercise. Work by Alba Martínez Portillo.

#motion#motion graphic#motion graphics#Alba Martínez#Alba Portillo#character animation#facerig#animation

0 notes

Video

tumblr

Posting this to show Contessa’s face rig in Blender. Used shapekeys and drivers to create a face panel that I can move around in the scene because my smooth brain cannot bother scripting a UI panel.

The audio is originally from Kagha’s dialogue in Baldur’s Gate 3.

1 note

Video

tumblr

Live2D Model Showcase - Jan Fuzzball (2020 Model)

✨ Glowing Headset

✨ AEIOU Mouth Shapes

✨ 4 Expression Keys

Commission Info - Youtube

#vtuber#vtuber model#live2d#live2d model#live2d showcase#live2d model showcase#old work#facerig#prprlive#facerig model#made with aviutl#skiyoshi-live2d#its amazing how much i have improved since this model#sk-live2d-pre2022#skiyoshi live2d

1 note

Text

Paint 3d Wednesdays are on Tuesdays now

#it is wednesday my dudes#my art#my terrible art#my terrible terrible art#paint3d#paint 3d#ms paint#doodle#dinosaur#this is the facerig avatar I use. It's one of the defaults. This is the closest you'll get to a self portrait

10 notes

Text

why are vtuber fans actually unhinged. just an unbelievably rancid combo of idol culture and lowest common denominator weebery. and all over some of the most unwatchable content i have ever seen in my life.

#im speaking specifically abt the corporate scene here bc that's where you see the absolute worst of it#like not only maintained as a status quo but imo actively encouraged#you would think that they believed it was actually an anime girl come to life instead of an unfunny streamer in front of a facerig cam#disappointing bc the idea of using an animated avatar to stream as opposed to a traditional facecam is a p fun one honestly#but now it will always be associated at least tangentially with. THAT.#textphelia

3 notes

Text

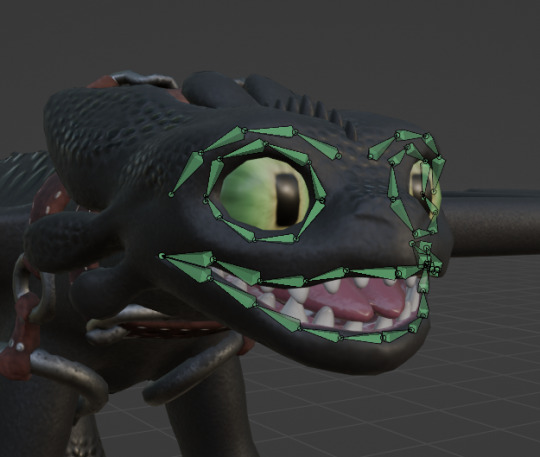

WOF x HTTYD crossover where they assume Toothless is just non-verbal.

LOOK AT HIM!!! LOOK AT MY TOOTHLESS MODEL!!!! I MADE THAT THING!!!!!

He's got to be the most polished asset I made so far. More under the cut.

He's got a facerig to rival my WOF models

The saddle came out so nice

I half assed the tail but who cares right??..... right???????

1K notes

Text

Facerig not detecting camera

Learn what you should be reading this fall: Our collection of reviews on books coming out this season includes biographies, novels, memoirs and more. Want to keep up with the latest and greatest in books? This is a good place to start. A line from the Hebrew Bible might easily have appeared in a recent political ad: “Woe to the city of blood, full of lies, full of plunder, never without victims!” Ditto the 18th-century writing of Jean-Jacques Rousseau describing the city as “depraved by sloth, inactivity, the love of pleasure.” Hell was Babylon, or what it stood for - the “original Sin City,” rife with the unsavory aspects of urbanity decried since at least 2000 B.C. In this regard, the cities that spread across the Indus Valley in today’s Pakistan were watery Edens: They had no temples or palaces but granaries, assembly halls and systems for sewage and water that may instead have been the sacred centers of the communities’ lives.

“I am more interested in the connective tissue that binds the organism together,” he writes, “not just its outward appearance or vital organs.”

Climate change has recently helped us rethink the 7,000-year-old ruins of Uruk, the fabled Sumerian city, and the planning of other ancient urban centers “as a way of aligning human activities with the underlying order and energies of the universe,” Wilson writes. The city, as Wilson sees it, is less of a warehouse of architecture and more of an organism that shapes the creatures living inside. The bad effects (“harsh, merciless environments,” for instance) are produced not so much by roads and buildings but by what’s invisible. In “Metropolis: A History of the City, Humankind’s Greatest Invention,” the historian Ben Wilson takes us on an exhilarating tour of more than two dozen cities and thousands of years, examining that invention’s good and bad effects. METROPOLIS A History of the City, Humankind’s Greatest Invention By Ben Wilson

0 notes

Text

imported my google photos into the storage server, which means I now get not only my high school era phone photos, but also anything else that passed through that phone so it is probably like ⅓ furry art.

It does paint a more. complete. image of my teenage years.

using magazines to estimate the blast yield of homemade fireworks

My brother teaching me integrals and setting silly physics puzzles

slides on... israel palestine from 2014? Whose are these.

[REDACTED]

Whoops, can't show that in a christian manga

photos I took because we were all playing a lot of KSP at the time

Furries facerigging doge

horse content

there are cats

Anyway I am trying out PhotoPrism; I suspect it might have a better categorizer than Memories. Fortunately they can both just eat an existing file store, so I don't have to pick until after I compare them. Handy.

14 notes

Text

So uh, I noticed something with my computer wallpaper...

Facerig was positioned in literally just the right spot lmao

127 notes

Text

「viRtua canm0m」 Project :: 002 - driving a vtuber

That about wraps up my series on the technical details on uploading my brain. Get a good clean scan and you won't need to do much work. As for the rest, well, you know, everyone's been talking about uploads since the MMAcevedo experiment, but honestly so much is still a black box right now it's hard to say anything definitive. Nobody wants to hear more upload qualia discourse, do they?

On the other hand, vtubing is a lot easier to get to grips with! And more importantly, actually real. So let's talk details!

Vtubing is, at the most abstract level, a kind of puppetry using video tracking software and livestreaming. Alternatively, you could compare it to realtime mocap animation. Someone at Polygon did a surprisingly decent overview of the scene if you're unfamiliar.

Generally speaking: you need a model, and you need tracking of some sort, and a program that takes the tracking data and applies it to a skeleton to render a skinned mesh in real time.
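To make "applies it to a skeleton to render a skinned mesh" a bit more concrete, here's a minimal sketch of linear blend skinning, the standard way of deforming a mesh from bone transforms. It's simplified to 2D and assumes each bone transform already has the inverse bind pose folded in (a real engine would use 4x4 matrices on the GPU); `BoneTransform` and `skin_vertex` are made-up names for illustration.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class BoneTransform:
    """A 2D rigid transform: rotate by `angle` radians about the origin, then translate.
    Assumed to already include the bone's inverse bind pose."""
    angle: float
    tx: float = 0.0
    ty: float = 0.0

    def apply(self, x: float, y: float) -> tuple[float, float]:
        c, s = math.cos(self.angle), math.sin(self.angle)
        return (c * x - s * y + self.tx, s * x + c * y + self.ty)


def skin_vertex(x: float, y: float,
                weights: dict[str, float],
                bones: dict[str, BoneTransform]) -> tuple[float, float]:
    """Linear blend skinning: move the vertex by every bone that influences it,
    then take the weighted sum. Weights are assumed to sum to 1."""
    out_x = out_y = 0.0
    for name, w in weights.items():
        bx, by = bones[name].apply(x, y)
        out_x += w * bx
        out_y += w * by
    return out_x, out_y
```

A vertex weighted half-and-half between a bone that stays put and a bone rotated 90° lands halfway between the two positions. That averaging is also why plain linear blending can make joints pinch or collapse when a bone twists a lot.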

Remarkably, there are a lot of quite high-quality vtubing tools available as open source. And I'm lucky enough to know a vtuber who is very generous in pointing me in the right direction (shoutout to Yuri Heart, she's about to embark on something very special for her end of year streams so I highly encourage you to tune in tonight!).

For anime-style vtubing, there are two main types, termed '2D' and '3D'. 2D vtubing involves taking a static illustration and cutting it up into pieces which can be animated through warping and replacement - the results can look pretty '3D', but they're not using 3D graphics techniques; it's closer to the kind of cutout animation used in gacha games. The main tool used is Live2D, which is proprietary with a limited free version. Tools with free/paid tiers for running Live2D models include PrPrLive and VTube Studio. FaceRig (no longer available) and Animaze (proprietary) also support Live2D models. I have a very cute 2D vtuber avatar created by @xrafstar for use in PrPrLive, and I definitely want to include some aspects of her design in the new 3D character I'm working on.
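Live2D's deformers are proprietary, so this isn't how it actually does it, but the basic idea of "animating through warping" can be sketched with a plain bilinear warp: drag the corners of a control quad and every point of the artwork inside it follows smoothly. `bilinear_warp` is a hypothetical helper, and a real rig would use a denser grid of control points plus rotation-style deformers.

```python
def bilinear_warp(u: float, v: float, corners):
    """Map a point (u, v) in the unit square through a deformed control quad.

    corners is (p00, p10, p01, p11): where the square's (0,0), (1,0), (0,1)
    and (1,1) corners have been dragged to, each as an (x, y) tuple."""
    p00, p10, p01, p11 = corners

    def lerp(a, b, t):
        return (a[0] + (b[0] - a[0]) * t, a[1] + (b[1] - a[1]) * t)

    bottom = lerp(p00, p10, u)  # position along the deformed bottom edge
    top = lerp(p01, p11, u)     # position along the deformed top edge
    return lerp(bottom, top, v)


# Shear the top edge half a unit to the right, like a head tilting in a cutout rig.
sheared = ((0.0, 0.0), (1.0, 0.0), (0.5, 1.0), (1.5, 1.0))
print(bilinear_warp(0.5, 0.5, sheared))
```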

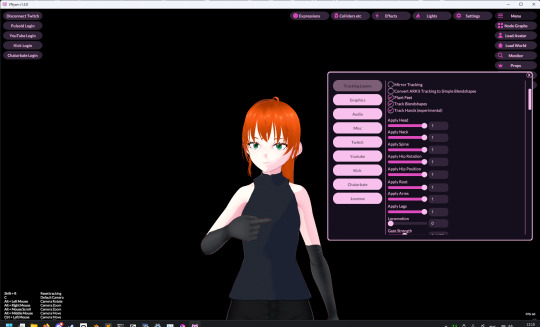

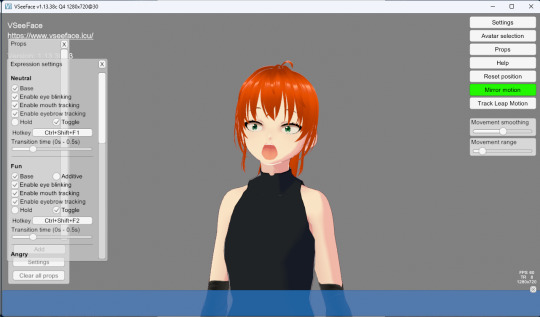

For 3D anime-style vtubing, the most commonly used software is probably VSeeFace, which is built on Unity and renders the VRM format. VRM is an open standard that extends the GLTF file format for 3D models, adding support for a cel shading material and defining a specific skeleton format.
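Because a VRM file is just glTF with extensions in the binary .glb container, you can poke at one with nothing but the standard library. A minimal sketch: read the 12-byte GLB header, pull out the JSON chunk, and check which VRM extension key is present (`VRM` for the older 0.x spec, `VRMC_vrm` for 1.0). The function names are made up for illustration.

```python
from __future__ import annotations

import json
import struct


def read_glb_json(data: bytes) -> dict:
    """Return the JSON chunk of a binary glTF container (.glb / .vrm) as a dict."""
    # 12-byte header, little-endian: magic "glTF", container version, total length.
    magic, version, _total_length = struct.unpack_from("<4sII", data, 0)
    if magic != b"glTF":
        raise ValueError("not a binary glTF file")
    if version != 2:
        raise ValueError(f"unsupported container version {version}")
    # The first chunk must be JSON: chunk length, then the chunk type "JSON".
    chunk_length, chunk_type = struct.unpack_from("<I4s", data, 12)
    if chunk_type != b"JSON":
        raise ValueError("first chunk is not JSON")
    # The JSON text may be padded with trailing spaces, which json.loads ignores.
    return json.loads(data[20:20 + chunk_length])


def vrm_version(gltf: dict) -> str | None:
    """Guess the VRM spec version from the extension keys, or None for plain glTF."""
    extensions = gltf.get("extensions", {})
    if "VRMC_vrm" in extensions:
        return "1.0"
    if "VRM" in extensions:
        return "0.x"
    return None
```

Usage would be something like `vrm_version(read_glb_json(open("model.vrm", "rb").read()))`, where the filename is hypothetical.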

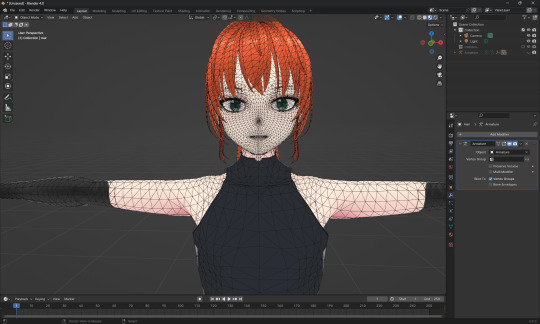

It's incredibly easy to get a pretty decent-looking VRM model using VRoid Studio (which appears to be owned by Pixiv). It's essentially a videogame character creator whose anime-styled models can be customised using lots of sliders, hair pieces, etc. The program includes basic texture-painting tools and the facility to load in new models, but ultimately the way to go for a more custom model is to use the VRM import/export plugin in Blender.

But first, let's have a look at the software which will display our model.

meet viRtua canm0m v0.0.5, a very basic design. her clothes don't match very well at all.

VSeeFace offers a decent set of parameters and honestly got quite nice tracking out of the box. You can also receive ARKit face tracking data from a connected iPhone, get hand tracking data from a Leap Motion, or disable its internal tracking and pipe in data from another application using the VMC protocol.
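The VMC protocol is built on OSC over UDP, so "piping in another application" can be as small as the sketch below. It assumes the `/VMC/Ext/Blend/Val` (blendshape name + value) and `/VMC/Ext/Blend/Apply` messages from the VMC spec, and port 39539, which is the default I've seen used; check the port in your receiver's settings. The OSC encoding is hand-rolled to stay dependency-free, and `send_blendshapes` is a hypothetical helper, not part of any app.

```python
import socket
import struct


def osc_string(s: str) -> bytes:
    """OSC strings are null-terminated and padded with nulls to a multiple of 4 bytes."""
    b = s.encode("utf-8") + b"\x00"
    return b + b"\x00" * (-len(b) % 4)


def osc_message(address: str, *args) -> bytes:
    """Encode an OSC message with string and float32 arguments only."""
    tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, str):
            tags += "s"
            payload += osc_string(arg)
        elif isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)  # OSC numbers are big-endian
        else:
            raise TypeError(f"unsupported OSC argument: {arg!r}")
    return osc_string(address) + osc_string(tags) + payload


def send_blendshapes(weights: dict, host: str = "127.0.0.1", port: int = 39539) -> None:
    """Send one frame of blendshape values, then tell the receiver to apply them."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        for name, value in weights.items():
            sock.sendto(osc_message("/VMC/Ext/Blend/Val", name, float(value)), (host, port))
        sock.sendto(osc_message("/VMC/Ext/Blend/Apply"), (host, port))
```

Sending each value as its own packet keeps the sketch short; a real sender would more likely wrap a frame's messages in an OSC bundle.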

If you want more control, another Unity-based program called VNyan offers more fine-grained adjustment, as well as a kind of node-graph based programming system for doing things like spawning physics objects or modifying the model when triggered by Twitch etc. They've also implemented experimental hand tracking for webcams, although it doesn't work very well so far. This pointing shot took forever to get:

<kayfabe>Obviously I'll be hooking it up to use the output of the simulated brain upload rather than a webcam.</kayfabe>

To get good hand tracking you basically need some kit - most likely a Leap Motion (1 or 2), which costs about £120 new. It's essentially a small pair of IR cameras designed to measure depth, which can be placed on a necklace, on your desk or on your monitor. I assume from there they use some kind of neural network to estimate your hand positions. I got to have a go on one of these recently and the tracking was generally very clean - better than what the Quest 2/3 can do. So I'm planning to get one; more on that when I have it.
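The reason a pair of cameras gives you depth at all is stereo disparity: a nearby point shows up at noticeably different horizontal positions in the two images, while a far one barely moves. For a rectified pair with focal length f (in pixels) and baseline B, depth is Z = f·B/d for disparity d. I don't know how Leap's own pipeline uses this, and the numbers below are made up, but here's the textbook relation:

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth in metres of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero means the point is at infinity)")
    return focal_px * baseline_m / disparity_px


# Hypothetical camera: 400 px focal length, cameras 4 cm apart.
print(depth_from_disparity(400.0, 0.04, 16.0))  # about 1 metre
print(depth_from_disparity(400.0, 0.04, 64.0))  # about 0.25 metres
```

Disparity grows as things get closer, so depth resolution is much better near the device, which suits hands held just above a desk.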

Essentially, the tracker feeds a bunch of floating point numbers into the display software at every tick, and the display software is responsible for blending all these different influences and applying them to the skinned mesh. For example, a parameter might be something like eyeLookInLeft. VNyan uses the Apple ARKit parameters internally, and you can see the full list of ARKit blendshapes here.

To apply tracking data, the software needs a model whose rig it can understand. This is defined in the VRM spec, which tells you exactly which bones must be present in the rig and how they should be oriented in a T-pose. The skeleton is generally speaking pretty simple: you have shoulder bones but no roll bones in the arm; individual finger joint bones; 2-3 chest bones; no separate toes; 5 head bones (including neck). Except for the hands, it's on the low end of game rig complexity.
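Here's a rough sketch of the kind of check an importer might run on the humanoid bone mapping. The required set below is my reading of the VRM 1.0 humanoid spec (the same 15 bones Unity's Mecanim humanoid requires), so double-check it against the spec before relying on it. The input is assumed to be a plain dict of VRM bone name to glTF node index, however you extracted it, and the function name is made up.

```python
from __future__ import annotations

# Assumed required humanoid bones (VRM 1.0 style names); verify against the spec.
REQUIRED_BONES = frozenset({
    "hips", "spine", "head",
    "leftUpperArm", "leftLowerArm", "leftHand",
    "rightUpperArm", "rightLowerArm", "rightHand",
    "leftUpperLeg", "leftLowerLeg", "leftFoot",
    "rightUpperLeg", "rightLowerLeg", "rightFoot",
})


def missing_required_bones(human_bones: dict[str, int]) -> list[str]:
    """Return required bone names with no node assigned, sorted for stable output.
    Optional bones (chest, neck, fingers, eyes, jaw...) are simply ignored."""
    return sorted(REQUIRED_BONES.difference(human_bones))
```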

Expressions are handled using GLTF morph targets, also known as blend shapes or (in Blender) shape keys. Each one is essentially a set of displacement values for the mesh vertices. The spec defines five default expressions (happy, angry, sad, relaxed, surprised), five vowel mouth shapes for lip sync, blinks, and shapes for pointing the eyes in different directions (if you wanna do it this way rather than with bones). You can also define custom expressions.
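Blending morph targets is just a weighted sum of displacements on top of the base mesh: each vertex ends up at base + Σ wᵢ·Δᵢ. A minimal sketch in plain Python, where the target names and the tiny two-vertex "mesh" are made up for illustration (real software does this on the GPU):

```python
def apply_morph_targets(base, targets, weights):
    """Blend morph targets onto a base mesh.

    base:    list of (x, y, z) vertex positions
    targets: target name -> list of per-vertex (dx, dy, dz) displacements
    weights: target name -> weight (usually 0..1, as set by the tracker)
    """
    result = [list(v) for v in base]
    for name, w in weights.items():
        if w == 0:
            continue  # an inactive target contributes nothing
        for vert, delta in zip(result, targets[name]):
            for axis in range(3):
                vert[axis] += w * delta[axis]
    return [tuple(v) for v in result]


base = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
targets = {
    "aa": [(0.0, -1.0, 0.0), (0.0, 0.0, 0.0)],    # hypothetical "jaw drop" on vertex 0
    "blink": [(0.0, 0.0, 1.0), (0.0, 0.0, 0.0)],  # hypothetical eyelid move on vertex 0
}
print(apply_morph_targets(base, targets, {"aa": 0.5, "blink": 1.0}))
```

Because the targets simply add up, two shapes that both move the same vertices can stack into something nobody sculpted, which is one source of clipping like the teeth below.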

This viRtua canm0m's teeth are clipping through her jaw...

By default, the face tracking generally tries to estimate whether your face matches one of these expressions. For example, if I open my mouth wide it triggers the 'surprised' expression where the character opens her mouth super wide and her pupils get tiny.

You can calibrate the expressions that trigger this effect in VSeeFace by pulling funny faces at the computer to demonstrate each expression (it's kinda black-box); in VNyan, you can set it to trigger the expressions based on certain combinations of ARKit inputs.
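The "combinations of ARKit inputs" approach can be sketched as an ordered list of rules where the first match picks the expression. `jawOpen`, `browInnerUp`, `mouthSmileLeft` and `mouthSmileRight` are real ARKit blendshape names, but the rules and thresholds are invented for illustration, and this isn't how VNyan structures its own configuration.

```python
from typing import Callable, Dict, List, Optional, Tuple

Frame = Dict[str, float]  # ARKit blendshape name -> weight in 0..1
Rule = Tuple[str, Callable[[Frame], bool]]

# Hypothetical rules, checked in order, so earlier rules take priority.
RULES: List[Rule] = [
    ("surprised", lambda f: f.get("jawOpen", 0.0) > 0.6 and f.get("browInnerUp", 0.0) > 0.5),
    ("happy", lambda f: (f.get("mouthSmileLeft", 0.0) + f.get("mouthSmileRight", 0.0)) / 2 > 0.5),
]


def pick_expression(frame: Frame, rules: List[Rule] = RULES) -> Optional[str]:
    """Return the first expression whose rule matches this frame, or None for neutral."""
    for name, matches in rules:
        if matches(frame):
            return name
    return None
```

Requiring raised brows as well as an open jaw is one way to stop every wide "aa" vowel from flipping the model into 'surprised'.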

For more complex expressions in VNyan, you need to sculpt shape keys for each of the ARKit blendshapes. VRoid Studio doesn't generate these by default, so that will be a bit of work.

You can apply various kinds of post-processing to the tracking data, e.g. adjusting blending weights based on input values or applying moving-average smoothing (though this noticeably increases the lag between your movements and the model), restricting the model's range of movement in various ways, applying IK to plant the feet, and similar.
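The lag from moving-average smoothing is easy to see in a quick sketch: a window of N samples takes N - 1 extra ticks to fully catch up with a sudden movement, so at 60 tracking updates per second a 4-sample window needs 3 ticks (50 ms) to settle. `MovingAverage` is a made-up class; actual apps may use other filters, such as exponential smoothing.

```python
from collections import deque


class MovingAverage:
    """Simple moving average over the last `window` tracking samples."""

    def __init__(self, window: int):
        if window < 1:
            raise ValueError("window must be at least 1")
        self.samples = deque(maxlen=window)  # oldest sample drops off automatically

    def update(self, value: float) -> float:
        """Add a new tracking sample and return the smoothed value."""
        self.samples.append(value)
        return sum(self.samples) / len(self.samples)


smoother = MovingAverage(4)
step = [0.0] * 4 + [1.0] * 4  # e.g. the mouth suddenly opens fully
print([smoother.update(x) for x in step])
# [0.0, 0.0, 0.0, 0.0, 0.25, 0.5, 0.75, 1.0]
```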

On top of the skeleton bones, you can add any number of 'spring bones' which are given a physics simulation. These are used to, for example, have hair swing naturally when you move, or, yes, make your boobs jiggle. Spring bones give you a natural overshoot and settle, and they're going to be quite important to creating a model that feels alive, I think.
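Overshoot-and-settle is what you get from a damped spring. This is not VRM's actual spring bone algorithm (which has its own stiffness, drag and gravity parameters); it's just a one-dimensional sketch of the underlying behaviour, stepped with semi-implicit Euler, and the parameter values are arbitrary.

```python
def simulate_spring(target: float, stiffness: float, damping: float,
                    dt: float, steps: int, x0: float = 0.0, v0: float = 0.0) -> list:
    """Pull a value towards `target` with a damped spring; return its position each step."""
    x, v = x0, v0
    history = []
    for _ in range(steps):
        accel = stiffness * (target - x) - damping * v  # spring force minus drag
        v += accel * dt  # semi-implicit Euler: update velocity first...
        x += v * dt      # ...then position using the new velocity
        history.append(x)
    return history


# A strand of hair whose rest position suddenly jumps to 1.0, simulated for 5 s at 120 Hz.
swing = simulate_spring(target=1.0, stiffness=100.0, damping=4.0, dt=1 / 120, steps=600)
print(max(swing), swing[-1])
```

With damping well below critical (2·√stiffness, i.e. 20 here) the value swings past the target and rings down; raising damping towards 20 makes it settle without overshooting, which reads as stiffer, heavier hair.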

Next up we are gonna crack open the VRoid Studio model in Blender and look into its topology, weight painting, and shaders. GLTF defines a standard metallic-roughness PBR material (with normal maps) in its spec, but leaves the actual shader implementation up to the application. VRM adds a custom toon shader, MToon, which blends between two colour maps based on the Lambertian shading, and this is going to be quite interesting to take apart.
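Roughly, the two-colour blend works like this: take the Lambertian term N·L, shift it, and push it through a narrow linear ramp so most of the surface snaps to either the lit colour or the shade colour. The sketch below is my approximation of that ramp, with `shading_shift` and `shading_toony` loosely named after MToon's shading shift and shading toony parameters. It is not MToon's actual shader code, and it ignores textures, rim light, outlines and everything else MToon does.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def linearstep(edge0: float, edge1: float, x: float) -> float:
    """Like smoothstep but with a straight ramp: 0 below edge0, 1 above edge1."""
    return min(1.0, max(0.0, (x - edge0) / (edge1 - edge0)))


def toon_shade(normal, light_dir, lit_color, shade_color,
               shading_shift: float = 0.0, shading_toony: float = 0.9):
    """Blend from shade_color to lit_color using a sharpened Lambert term.

    normal and light_dir are unit vectors; light_dir points towards the light.
    shading_toony is in [0, 1): higher means a harder lit/shade boundary."""
    if not 0.0 <= shading_toony < 1.0:
        raise ValueError("shading_toony must be in [0, 1)")
    n_dot_l = dot(normal, light_dir)
    t = linearstep(-1.0 + shading_toony, 1.0 - shading_toony, n_dot_l + shading_shift)
    return tuple(s + (l - s) * t for l, s in zip(lit_color, shade_color))
```

Surfaces facing the light get the lit colour, surfaces facing away get the shade colour, and only a thin band around N·L = 0 (moved by `shading_shift`) gets anything in between.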

The MToon shader is pretty solid, but ultimately I think I want to create custom shaders for my character. Shaders are something I specialise in at work, and I think it would be a great way to give her more of a unique identity. This will mean going beyond the VRM format, and I'll be looking into using the VNyan SDK to build on top of that.

More soon, watch this space!

9 notes

Note

If you don’t mind my asking how do you find working in the vtuber program you use? I have an ancient copy of facerig but when I saw I had to make like over 50 animations that it blends between I was like “well I don’t have time for that” but never really looked around any further because I like don’t stream often At All and it was a passing “it’d be fun to make something like that” curiosity but man. I’m thinking about it again

howdy! :D

I use VSeeFace on my PC and iFacialMocap on my iPhone for vtuber tracking and streaming! I really like it, but it's lots and lots of trial and error haha - that's partly because all my avatars are non-humanoid/are literally animals with few to no human features, and vtuber tracking programs are designed to measure the movements of a human face and translate them onto a human vtuber avatar, so I have to make lots of little workarounds. If you're modeling a human/humanoid avatar, I would imagine things are more straightforward.

I think as long as you're comfortable with 3D modeling and are ready to be patient, it's definitely worth exploring! I really like using my model for streaming and narration of YouTube videos, Tiktoks, etc. For me it's just a lot of fun! :D It's a bit like being a puppeteer haha

If you're looking to get started making vtubers, I really recommend this series of tutorials by MakoRay on YouTube, it's super informative and goes step-by-step:

youtube

Best of luck! :D

15 notes

Text

I feel like if I had just gotten over myself in the first place and started selfshipping openly with Waver I would've maybe been a happier person but without the takeoff of the mastersona community here I wouldn't have had the confidence or like... playing field? to do it in. Idk. It's kind of funny how like. Denying myself that connection like that. Really did impact my life in an insane way. When I look at my old posts from this blog's beginning and from shitpostsaber it doesn't even feel like the same person anymore. Or like. I can't... remember the depth of suffering and aimlessness I was experiencing back then. I had dropped out of college and was struggling with depression and ptsd and chronic illness so bad that I couldn't get myself out of bed most days, and I had no skills or talents I could make a decent living off of. And I was broke. And living with a parent who denied I was mentally ill in the first place, and HIS solution to my disability was to LITERALLY put me back into the cage that caused the brunt of it. He said, and I quote, "Sometimes it only feels like you progress when your back is up against the wall."

And I fucking hate that he was right. The last time I showed any prerogative towards my self survival and progress as a human being was when I had come to ask to live with him in high school, back when I lived with my mother, and it was like -- something about seeing her flub the act of parenting or caring or being a real person so hard enraged me to the point of taking care of both me and my sibling and then eventually myself. It made me start looking for options to survive and carve a path out for myself. And then he essentially exiled me here, to live with her again, and when I called him and asked for help, he said that, and said Money is Freedom. It was the fucking height of covid lockdown then, of course, so that wasn't going to happen for another year. But the year after that everything changed rapidly. One by one I started being an adult who put his life together and was able to get his shit together tangibly enough that I could (...most of the time) afford an apartment, a car, utilities, a phone, etc... this was not the kind of person that the person running shitpostsaber could've imagined.

And I don't know what Salter thought either. Salter wasn't capable of thinking about these things. Maybe Salter thought an eternity of self harm based ironic humor was the answer. You can live by being funny, and likable, and hurting yourself for jokes while the secret is visible only to you: the spectacle of online media performance. It's why I'll always have a sort of visceral hatred for the vtuber phenomenon, for the act of separating your Self for performance, for an online audience of in particular People Into Anime And Manga And Games. And Otaku Shit. Right before I ended the blog I was working on putting together a model w/FaceRig for streaming. I'm glad I didn't finish, like many of my projects I had started.

I had to continually deny my self's existence and to overlay it with that presumed Artificiality in order to justify my existence. Because reality isn't desirable, isn't marketable, isn't cute or attractive. My sona now is fat like I am. Has roots coming in. I draw the nose a little crooked in some images, I draw the fat under his chin. I draw thick scratchy eyebrows, fat thighs and belly. He's me now. He is finally me. That representation of me, for all fantasy's worth, is still me. SPSB couldn't comprehend removing that lens of Anime Girl Funny Relatability. Or maybe couldn't imagine claiming this all as their own. Whatever the case may be, I'm freer now. I'm less possessed by trying to be something I'm not -- because I am what I am, and because I acknowledge how I've grown and what principles I operate on. I just am who I am. And all of that that exists with me is me. All the dissociative stuff, all the fatigue, all the frustration, all the issues, the spontaneity, everything. It belongs to me now. Not to the lens.

2 notes

Text

OG Tweet here.

In case you weren't fond of the current tech used for deepfakes and such, welcome to the bad timeline.

Although I do have to laugh at some of the peeps going "Well, NOW i can't trust anything online anymore" and I'm just like; bless you and your baby heart for thinking everyone was 100% honest always before this bit of tech got into the world.

Listen, when tech like this got started in the early 2010s it was great. It was far too niche for news tabloids or whatever, and it helped solidify facerigging tech for Vtubers a few years down the line.

Maybe I just liked it more when the tech wasn't so clearly primed for nefarious means.

2 notes

Text

i'm totally hypocritical for refreshing new on the comment sections for the shitty mgs song but goddamn it's like a zoo i can't look away. these dudes have been in the trenches for days white knight defending this dogshit song throwing the most generic insults at every single negative comment it's wild. you gotta be deep into your weird internet echochamber if you're under the impression that 'tourist' is an insult that would actually get to anyone, it's baffling me. they're blindfiring every kind of bigotry there is at random people not even knowing what would and wouldn't even apply. a lot of them literally seem to think it's "triggered western twittards" hating it and not just like, anyone with ears that work. how is a talent agency video game streamer with an anime facerig worth any of this shit dude. if i make another post about this please kill me because i'm gonna try to stop looking at it now

9 notes

Text

oopsie whoopsie im gonna need to redo tails' facerig and edit his muzzle mesh drastically bc turns out making the mouth open makes editing shape keys a fucking nightmare

#soda offers you a can#this is not how i wanted things to turn out but alas im not going to deal with this fresh hell i made for myself

8 notes
