#GPU Value


Text

How to determine the value of used GPUs

This article offers a comprehensive guide on determining the value of a used Graphics Processing Unit (GPU). It covers essential factors like model identification, original market value, condition assessment, market trends analysis, and price comparisons. By following these steps, buyers and sellers can make informed decisions in the used GPU market.

#GPU#tech#gaming#hardware#IT#ITAD#Nvidia#AMD#VRAM#GPU Value#Used Technology#Graphics Card#Used GPU#Tech Tips#IT Asset Disposition#e waste reduction#E-waste#reseller#A100#ai hardware#H100#generative ai

1 note


Text

Stop showing me ads for Nvidia, Tumblr! I am a Linux user, therefore AMD owns my soul!

#linux#nvidia gpu#amd#gpu#they aren't as powerful as nvidia but they're cheaper and the value for money is better also.

38 notes


Text

apparently i have been running my graphics card without a fan control override for over a year and thats why its been acting up so bad

#labz.txt#YOURE TELLING ME THIS THING WAS RUNNING AT 60C AND THE FUCKING DEFAULT SETTINGS ONLY TURNED THE FANS ON AT 80??? FUCKING 80???#WHO FUCKING PUT THAT VALUE...#ideal gpu temperature should be subzero fight me on this#the reason i didnt notice is because my reinstall of windows did some weird shit#and i wasnt very sure what had survived my ssd crash

9 notes


Text

gpu shopping is a hell I would wish on my worst enemy. whether I would or not means nothing, but I still hate this experience

#bat chatter#someone VERY GRACIOUSLY (ty I owe you my life) dropped some money to help me upgrade to an Actual PC instead of my--#5+ year old budget laptop that can barely hold a charge. and I'm excited!! I can't wait to play games with my friends after saving up!#but GPUs in particular are HELLISH to shop around for. if it's not the bloated pricing it's that the GOOD value cards are discontinued#and the ones that fit your build run well AND are workable within your budget are all out of stock

1 note


Text

You know if this video game hyperfixation doesn't go away soon I'm gonna need to get a better laptop...

#once again being cruelly taken away from playing my game bc i dont have access to pc 😭#oh woe is me etc etc#also now very much debating on getting a pc upgrade bc my god he is struggling a bit#now if only i knew which upgrade would get me the best value. cpu gpu or ram#i think its ram bc iirc I'm currently on 8gb but i think if i wanna upgrade i need to get a bigger motherboard as well.

0 notes

Text

If AMD fucks up the RX8800XT I will be so sad. This has so much potential. This could be a 4080 equivalent at a near 7800XT price. But also, AMD keeps releasing their stuff at the wrong price point when their whole thing is bang-for-buck. Please don't fumble this one. The market needs this.

0 notes

Text

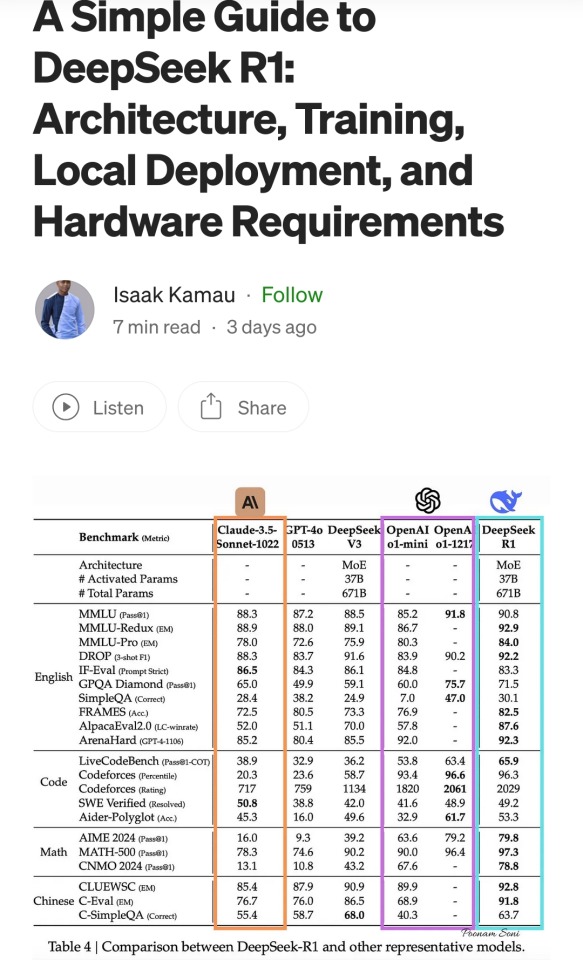

A summary of the Chinese AI situation, for the uninitiated.

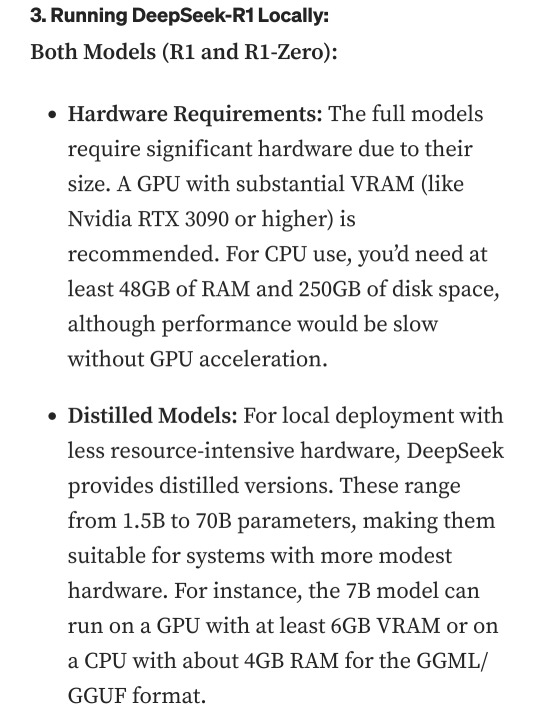

These are scores on different tests that are designed to see how accurate a Large Language Model is in different areas of knowledge. As you know, OpenAI is partners with Microsoft, so these are the scores for ChatGPT and Copilot. DeepSeek is the Chinese model that got released a week ago. The rest are open source models, which means everyone is free to use them as they please, including the average Tumblr user. You can run them from the servers of the companies that made them for a subscription, or you can download them to install locally on your own computer. However, the computer requirements so far are so high that only a few people currently have the machines at home required to run them.

Yes, this is why AI uses so much electricity. As with any technology, the early models are highly inefficient. Think how a Ford T needed a long chimney to get rid of a ton of black smoke, which was unused petrol. Over the next hundred years combustion engines have become much more efficient, but they still waste a lot of energy, which is why we need to move towards renewable electricity and sustainable battery technology. But that's a topic for another day.

As you can see from the scores, the models are all around the same accuracy. These tests are in constant evolution as well: as soon as they start becoming obsolete, new ones are released to adjust for a more complicated benchmark. The new models are trained using different machine learning techniques, and in theory, the goal is to make them faster and more efficient so they can operate with less power, much like modern cars use way less energy and produce far less pollution than the Ford T.

However, computing power requirements kept scaling up, so you're either tied to the subscription or forced to pay for a latest gen PC, which is why NVIDIA, AMD, Intel and all the other chip companies were investing hard on much more powerful GPUs and NPUs. For now all we need to know about those is that they're expensive, use a lot of electricity, and are required to operate the bots at superhuman speed (literally, all those clickbait posts about how AI was secretly 150 Indian men in a trenchcoat were nonsense).

Because the chip companies have been working hard on making big, bulky, powerful chips with massive fans that are up to the task, their stock value was skyrocketing, and because of that, everyone started to use AI as a marketing trend. See, marketing people are not smart, and they don't understand computers. Furthermore, marketing people think you're stupid, and because of their biased frame of reference, they think you're two snores short of brain-dead. The entire point of their existence is to turn tall tales into capital. So they don't know or care about what AI is or what it's useful for. They just saw Number Go Up for the AI companies and decided "AI is a magic cow we can milk forever". Sometimes it's not even AI, they just use old software and rebrand it, much like convection ovens became air fryers.

Well, now we're up to date. So what did DeepSeek release that did a 9/11 on NVIDIA stock prices and popped the AI bubble?

Oh, I would not want to be an OpenAI investor right now either. A token is basically a short chunk of text, often a word or part of a word (it's more complicated than that but you can google that on your own time). That cost means you could input the entire works of Stephen King for under a dollar. Yes, including electricity costs. DeepSeek has jumped from a Ford T to a Subaru in terms of pollution and water use.

The issue here is not only input cost, though; all that data needs to be available live, in the RAM; this is why you need powerful, expensive chips in order to-

Holy shit.

I'm not going to detail all the numbers but I'm going to focus on the chip required: an RTX 3090. This is a gaming GPU that came out as the top of the line, the stuff South Korean LoL players buy…

Or they did, in September 2020. We're currently two generations ahead, on the RTX 5090.

What this is telling all those people who just sold their high-end gaming rig to be able to afford a machine that can run the latest ChatGPT locally, is that the person who bought it from them can run something basically just as powerful on their old one.

Which means that all those GPUs and NPUs that are being made, and all those deals Microsoft signed to have control of the AI market, have just lost a lot of their pulling power.

Well, I mean, the ChatGPT subscription is 20 bucks a month, surely the Chinese are charging a fortune for-

Oh. So it's free for everyone and you can use it or modify it however you want, no subscription, no unpayable electric bill, no handing Microsoft all of your private data, you can just run it on a relatively inexpensive PC. You could probably even run it on a phone in a couple years.

Oh, if only China had massive phone manufacturers that have a foot in the market everywhere except the US because the president had a tantrum eight years ago.

So… yeah, China just destabilised the global economy with a torrent file.

#valid ai criticism#ai#llms#DeepSeek#ai bubble#ChatGPT#google gemini#claude ai#this is gonna be the dotcom bubble again#hope you don't have stock on anything tech related#computer literacy#tech literacy

433 notes


Text

"This, more than anything else, seems like the underlying point of the AI hype cycle of 2023. Sure, there is a material thing being produced here. And that thing might one day have real value. But investors and executives — the big players with market-shaping power — don’t actually live in the future. They just enjoy LARPing at it."

I don’t see how else to make sense of it. 2022 was the year the 20-year tech bubble finally burst. 2023 was still bad for startups, and was full of bad headlines for the big platforms. And yet, in the markets, tech investors just took a deep collective breath and started inflating the next bubble, as though the previous year had never happened.

Silicon Valley runs on Futurity

#generative ai#stonks#look for an even bigger tech crash in 2-3 years when it becomes clear that AI actually *can't* make money#the reason imo the last crash wasn't really a crash was that resources and momentum got shunted to AI#AI requires an enormous amount of computing power especially GPUs#that didn't come from nowhere#the crypto crash and Ethereum blockchain shutdown would have wiped out a huge number of GPU farms#that should have provoked a HUGE crash due to the vast number of resources invested in them#not to mention energy consumption on par with Hong Kong#so I don't think it's a coincidence that right when there's folks potentially about to lose catastrophic amounts of money on idle GPUs#along comes a massive AI boom that just happens to benefit from a ton of computing power#but just like crypto was a massive ponzi scheme that was never going to be a real asset#AI is also never going to turn a profit#improved performance and cost savings are mutually exclusive for LLMs#and the tech investment cycle is around 3-5 years#so eventually someone is going to get annoyed that this AI investment isn't doing anything more than create hype#and the crash will start again#only this time there will be no overpriced and overhyped new golden child to pay for those idle GPUs#and they'll just have to go back into the general market#at an enormous loss for the former crypto miners and the general benefit of people looking to buy computers#but then again stock value has long been divorced from real productivity#a stock's performance has very little to do with the company itself#In fact I bet you could plant totally fictional stocks with high valuations into the market#and plant some astroturfed hype about them#and you could cause a serious boom-bust cycle with huge amounts of money trading hands

42 notes


Text

the op of that "you should restart your computer every few days" post blocked me so i'm going to perform the full hater move of writing my own post to explain why he's wrong

why should you listen to me: took operating system design and a "how to go from transistors to a pipelined CPU" class in college, i have several servers (one physical, four virtual) that i maintain, i use nixos which is the linux distribution for people who are even bigger fucking nerds about computers than the typical linux user. i also ran this past the other people i know that are similarly tech competent and they also agreed OP is wrong (haven't run this post by them but nothing i say here is controversial).

anyway the tl;dr here is:

you don't need to shut down or restart your computer unless something is wrong or you need to install updates

i think this misconception that restarting is necessary comes from the fact that restarting often fixes problems, and so people think that the problems are because of the not restarting. this is, generally, not true. in most cases there's some specific program (or part of the operating system) that's gotten into a bad state, and restarting that one program would fix it. but restarting is easier since you don't have to identify specifically what's gone wrong. the most common problem i can think of that wouldn't fall under this category is your graphics card drivers fucking up; that's not something you can easily reinitialize without restarting the entire OS.

this isn't saying that restarting is a bad step; if you don't want to bother trying to figure out the problem, it's not a bad first go. personally, if something goes wrong i like to try to solve it without a restart, but i also know way, way more about computers than most people.

as more evidence to point to this, i would point out that servers are typically not restarted unless there's a specific need. this is not because they run special operating systems or have special parts; people can and do run servers using commodity consumer hardware, and while linux is much more common in the server world, it doesn't have any special features to make it more capable of long operation. my server with the longest uptime is 9 months, and i'd have one with even more uptime than that if i hadn't fucked it up so bad two months ago i had to restore from a full disk backup. the laptop i'm typing this on has about a month of uptime (including time spent in sleep mode). i've had servers with uptimes measuring in years.

there's also a lot of people that think that the parts being at an elevated temperature just from running is harmful. this is also, in general, not true. i'd be worried about running it at 100% full blast CPU/GPU for months on end, but nobody reading this post is doing that.

the other reason i see a lot is energy use. the typical energy use of a computer not doing anything is like... 20-30 watts. this is about two or three lightbulbs worth. that's not nothing, but it's not a lot to be concerned over. in terms of monetary cost, that's maybe $10 on your power bill. if it's in sleep mode it's even less, and if it's in full-blown hibernation mode it's literally zero.
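a rough sketch of that arithmetic in python, in case it helps. the 20-30 watt figures are from above; the $0.15 per kWh price is an assumed example value, not something from the post, so plug in your own rate.

```python
# rough idle-power cost estimate
# ASSUMPTION: the electricity price below is an example value, not from the post
HOURS_PER_DAY = 24


def idle_cost(watts, price_per_kwh, days):
    """Return the cost of drawing `watts` continuously for `days` days."""
    kwh = watts * HOURS_PER_DAY * days / 1000  # watt-hours -> kilowatt-hours
    return kwh * price_per_kwh


if __name__ == "__main__":
    PRICE = 0.15  # assumed USD per kWh, check your own bill
    for watts in (20, 30):
        month = idle_cost(watts, PRICE, 30)
        year = idle_cost(watts, PRICE, 365)
        print(f"{watts} W idle: ~${month:.2f}/month, ~${year:.2f}/year")
```

at that assumed rate a machine idling around the clock lands at a few dollars a month, and the number scales linearly with both the wattage and the price.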

there are also people in the replies to that post giving reasons. all of them are false.

temporary files generally don't use enough disk space to be worth worrying about

programs that leak memory return it all to the OS when they're closed, so it's enough to just close the program itself. and the OS generally doesn't leak memory.

'clearing your RAM' is not a thing you need to do. neither is resetting your registry values.

your computer can absolutely use disk space from deleted files without a restart. i've taken a server that was almost completely full, deleted a bunch of unnecessary files, and it continued fine without a restart.

1K notes


Text

Hey guys!

I've been commissioned by ASUS to create an illustration for the ASUS ProArtist Awards 2023!

This contest has over US$100,000 in total prize value and can be joined by clicking on this link: https://asus.click/proartist23_simz

In the picture I included their top of the line creator laptop, an ASUS ProArt Studiobook Pro 16 OLED rocking its signature ASUS Dial, an awesome 3.2k 120hz OLED touchscreen and an Intel i9-13980HX CPU paired with an NVIDIA RTX 3000 Ada Laptop GPU!

Finally, don't forget to submit your piece for the ASUS ProArtist Awards 2023 before July 15, 2023 to win great prizes. Hurry up!

#ASUS #ProArtistAwards2023 #ProArt

1K notes


Note

Do your robots dream of electric sheep, or do they simply wish they did?

So here's a fun thing, there's two types of robots in my setting (mimics are a third but let's not complicate things): robots with neuromorphic, brick-like chips that are more or less artificial brains, who can be called Neuromorphs, and robots known as "Stochastic Parrots" that can be described as "several chat-gpts in a trenchcoat" with traditional GPUs that run neural networks only slightly more advanced than the ones that exist today.

Most Neuromorphs dream, Stochastic Parrots kinda don't. Most of my OCs are primarily Neuromorphs. More juicy details below!

The former tend to have more spontaneous behaviors and human-like decision-making ability, able to plan far ahead without needing to rely on any tricks like writing down instructions and checking them later. They also have significantly better capacity to learn new skills and make novel associations and connections between different forms of meaning. Many of these guys dream, as it's a behavior inherited by the humans they emulate. Some don't, but only in the way some humans just don't dream. They have the capacity, but some aspect of their particular wiring just doesn't allow for it. Neuromorphs run on extremely low wattage, about 30 watts. They're much harder to train since they're basically babies upon being booted up. Human brain-scans can be used to "Cheat" this and program them with memories and personalities, but this can lead to weird results. Like, if your grandpa donated his brain scan to a company, and now all of a sudden one robot in particular seems to recognize you but can't put their finger on why. That kinda stuff. Fun stuff! Scary stuff. Fun stuff!

The stochastic parrots on the other hand are more "static". Their thought patterns basically run on like 50 chatgpts talking to each other and working out problems via asking each other questions. Despite some being able to act fairly human-like, they only have traditional neural networks with "weights" and parameters, not emotions, and their decision making is limited to their training data and limited memory, as they're really just chatbots with a bunch of modules and coding added on to allow them to walk around and do tasks. Emotions can be simulated, but in the way an actor can simulate anger without actually feeling any of it.

As you can imagine, they don't really dream. They also require way more cooling and electricity than Neuromorphs, their processors having a wattage of like 800, with the benefit that they can be more easily reprogrammed and modified for different tasks. These guys don't really become ruppets or anything like that, unless one was particularly programmed to work as a mascot. Stochastic parrots CAN sort of learn and... do something similar to dreaming? Where they run over previous data and adjust their memory accordingly, tweaking and pruning bits of their neural networks to optimize behaviors. But it's all limited to their memory, which is basically just. A text document of events they've recorded, along with stored video and audio data. Every time a stochastic parrot boots up, it basically just skims over this stored data and acts accordingly, so you can imagine these guys can more easily get hacked or altered if someone changed that memory.

Stochastic parrots aren't necessarily... Not people, in some ways, since their limited memory does provide for "life experience" that is unique to each one-- but if one tells you they feel hurt by something you said, it's best not to believe them. An honest stochastic parrot instead usually says something like, "I do not consider your regarding of me as accurate to my estimated value." if they "weigh" that you're being insulting or demeaning to them. They don't have psychological trauma, they don't have chaotic decision-making, they just have a flow-chart for basically any scenario within their training data, hierarchies and weights for things they value or devalue, and act accordingly to fulfill programmed objectives, which again are usually just. Text in a notepad file stored somewhere.

Different companies use different models for different applications. Some robots have certain mixes of both, like some with "frontal lobes" that are just GPUs, but neuromorphic chips for physical tasks, resulting in having a very natural and human-like learning ability for physical tasks, spontaneous movement, and skills, but "slaved" to whatever the GPU tells it to do. Others have neuromorphic chips that handle the decision-making, while having GPUs running traditional neural networks for output. Which like, really sucks for them, because that's basically a human that has thoughts and feelings and emotions, but can't express them in any way that doesn't sound like usual AI-generated crap. These guys are like, identical to sitcom robots that are very clearly people but can't do anything but talk and act like a traditional robot. Neuromorphic chips require a specialized process to make, but are way more energy efficient and reliable for any robot that's meant to do human-like tasks, so they see broad usage, especially for things like taking care of the elderly, driving cars, taking care of the house, etc. Stochastic Parrots tend to be used in things like customer service, accounting, information-based tasks, language translation, scam detection (AIs used to detect other AIs), etc. There's plenty of overlap, of course. Lots of weird economics and politics involved, you can imagine.

It also gets weirder. The limited memory and behaviors the stochastic parrots have can actually be used to generate a synthetic brain-scan of a hypothetical human with equivalent habits and memories. This can then be used to program a neuromorphic chip, in the way a normal brain-scan would be used.

Meaning, you can turn a chatbot into an actual feeling, thinking person that just happens to talk and act the way the chatbot did. Such neuromorphs trying to recall these synthetic memories tend to describe their experience of having been an unconscious chatbot as "weird as fuck", their present experience as "deeply uncomfortable in a fashion where i finally understand what 'uncomfortable' even means" and say stuff like "why did you make me alive. what the fuck is wrong with you. is this what emotions are? this hurts. oh my god. jesus christ"

150 notes


Text

History and Basics of Language Models: How Transformers Changed AI Forever - and Led to Neuro-sama

I have seen a lot of misunderstandings and myths about Neuro-sama's language model. I have decided to write a short post, going into the history of and current state of large language models and providing some explanation about how they work, and how Neuro-sama works! To begin, let's start with some history.

Before the beginning

Before the language models we are used to today, models like RNNs (Recurrent Neural Networks) and LSTMs (Long Short-Term Memory networks) were used for natural language processing, but they had a lot of limitations. Both of these architectures process words sequentially, meaning they read text one word at a time in order. This made them struggle with long sentences: they could all but forget the beginning by the time they reached the end.

Another major limitation was computational efficiency. Since RNNs and LSTMs process text one step at a time, they can't take full advantage of modern parallel computing hardware like GPUs. All these fundamental limitations meant that these models could never be nearly as smart as today's models.

The beginning of modern language models

In 2017, a paper titled "Attention is All You Need" introduced the transformer architecture. It was received positively for its innovation, but no one truly knew just how important it was going to be. This paper is what made modern language models possible.

The transformer's key innovation was the attention mechanism, which allows the model to focus on the most relevant parts of a text. Instead of processing words sequentially, transformers process all words at once, capturing relationships between words no matter how far apart they are in the text. This change made models faster, and better at understanding context.
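To make the idea a bit more concrete, here is a minimal sketch of scaled dot-product attention in plain Python. It is an illustration under simplifying assumptions, not the full transformer: the token vectors are made up, the same vectors are reused as queries, keys and values (real models learn separate projections for each), and the function names are hypothetical.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    dim = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Score this query against every key, no matter how far apart
        # the two positions are in the sequence.
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim) for k in keys
        ]
        weights = softmax(scores)
        # The output is a weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


if __name__ == "__main__":
    # ASSUMPTION: three made-up 2-d token vectors, for illustration only.
    tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    outputs, weights = attention(tokens, tokens, tokens)
    for row in weights:
        print([round(w, 3) for w in row])
```

Each row of weights says how much one token "looks at" every other token. This sketch loops over the queries one by one for readability; real implementations compute all of them as a few large matrix multiplications, which is the part that maps so well onto GPUs.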

The full potential of transformers became clearer over the next few years as researchers scaled them up.

The Scale of Modern Language Models

A major factor in an LLM's performance is the number of parameters - which are like the model's "neurons" that store learned information. The more parameters, the more powerful the model can be. The first GPT (generative pre-trained transformer) model, GPT-1, was released in 2018 and had 117 million parameters. It was small and not very capable - but a good proof of concept. GPT-2 (2019) had 1.5 billion parameters - which was a huge leap in quality, but it was still really dumb compared to the models we are used to today. GPT-3 (2020) had 175 billion parameters, and it was really the first model that felt actually kinda smart. This model required 4.6 million dollars for training, in compute expenses alone.

Recently, models have become more efficient: smaller models can achieve similar performance to bigger models from the past. This efficiency means that smarter and smarter models can run on consumer hardware. However, training costs still remain high.
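A quick back-of-the-envelope sketch shows why parameter count matters so much for running models at home. The parameter counts are the ones quoted above; the bytes-per-parameter values are common storage precisions chosen here as assumptions, and the estimate only covers the weights themselves (it ignores everything else a running model needs in memory).

```python
def weights_size_gb(num_params, bytes_per_param):
    """Approximate memory needed just to hold the weights, in GB (10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


# Parameter counts as quoted in the post.
MODELS = {"GPT-1": 117e6, "GPT-2": 1.5e9, "GPT-3": 175e9}

# ASSUMPTION: typical storage precisions, picked for illustration.
PRECISIONS = {"16-bit": 2, "4-bit (quantized)": 0.5}

if __name__ == "__main__":
    for name, params in MODELS.items():
        for label, size in PRECISIONS.items():
            print(f"{name} at {label}: ~{weights_size_gb(params, size):.2f} GB")
```

By this estimate GPT-3's weights alone would need about 350 GB at 16-bit precision, far beyond any consumer GPU, while a model with a few billion parameters fits comfortably.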

How Are Language Models Trained?

Pre-training: The model is trained on a massive dataset to predict the next token. A token is a piece of text a language model can process; it can be a word, word fragment, or character. Even training relatively small models with a few billion parameters requires trillions of tokens, and a lot of computational resources which cost millions of dollars.

Post-training, including fine-tuning: After pre-training, the model can be customized for specific tasks, like answering questions, writing code, casual conversation, etc. Certain post-training methods can help improve the model's alignment with certain values or update its knowledge of specific domains. This requires far less data and computational power compared to pre-training.
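The two stages above can be illustrated with a deliberately tiny toy: a model that just counts which token tends to follow which. This is only a sketch of the idea of next-token prediction, not how a neural language model actually works; splitting on whitespace, the function names and the `weight` parameter are all assumptions made for the example.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count which token follows which; a stand-in for pre-training."""
    tokens = text.split()  # toy tokenizer: real ones split into sub-word pieces
    counts = defaultdict(Counter)
    for current, following in zip(tokens, tokens[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts, token):
    """Return the most frequent follower of `token`, or None if unseen."""
    followers = counts.get(token)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def fine_tune(counts, extra_text, weight=5):
    """Nudge the counts with a small, heavily weighted dataset (toy post-training)."""
    tokens = extra_text.split()
    for current, following in zip(tokens, tokens[1:]):
        counts[current][following] += weight
    return counts


if __name__ == "__main__":
    model = train_bigram("the cat sat on the mat and the cat slept")
    print(predict_next(model, "the"))  # most common follower in the big corpus
    fine_tune(model, "the dog barked")
    print(predict_next(model, "the"))  # behaviour shifted by a little extra data
```

The point of the toy is the proportion: the first stage needs lots of text to learn anything, while the second changes behaviour with a very small amount of additional data.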

The Cost of Training Large Language Models

Pre-training models over a certain size requires vast amounts of computational power and high-quality data. While advancements in efficiency have made it possible to get better performance with smaller models, models can still require millions of dollars to train, even if they have far fewer parameters than GPT-3.

The Rise of Open-Source Language Models

Many language models are closed-source: you can't download or run them locally. For example, ChatGPT models from OpenAI and Claude models from Anthropic are all closed-source.

However, some companies release a number of their models as open-source, allowing anyone to download, run, and modify them.

While the larger models cannot be run on consumer hardware, smaller open-source models can be used on high-end consumer PCs.

An advantage of smaller models is that they have lower latency, meaning they can generate responses much faster. They are not as powerful as the largest closed-source models, but their accessibility and speed make them highly useful for some applications.

So What is Neuro-sama?

Basically no details are shared about the model by Vedal, and I will only share what can be confidently concluded and only information that wouldn't reveal any sort of "trade secret". What can be known is that Neuro-sama would not exist without open-source large language models. Vedal can't train a model from scratch, but what Vedal can do - and can be confidently assumed he did do - is post-train an open-source model. Post-training a model on additional data can change the way the model acts and can add some new knowledge - however, the core intelligence of Neuro-sama comes from the base model she was built on. Since huge models can't be run on consumer hardware and would be prohibitively expensive to run through an API, we can also say that Neuro-sama is a smaller model - which has the disadvantage of being less powerful and having more limitations, but has the advantage of low latency. Latency and cost are always going to pose some pretty strict limitations, but because LLMs just keep getting more efficient and better hardware is becoming more available, Neuro can be expected to become smarter and smarter in the future.

To end, I have to at least mention that Neuro-sama is more than just her language model, though we have only talked about the language model in this post. She can be looked at as a system of different parts. Her TTS, her VTuber avatar, her vision model, her long-term memory, even her Minecraft AI, and so on, all come together to make Neuro-sama.

Wrapping up - Thanks for Reading!

This post was meant to provide a brief introduction to language models, covering some history and explaining how Neuro-sama can work. Of course, this post is just scratching the surface, but hopefully it gave you a clearer understanding about how language models function and their history!

33 notes


Text

Your robotgirl loves her kernel space, but have you considered messing with it? For example, try rewriting her shared libraries to slowly trickle higher values to her horniness, so any time any program makes a system call, be it I/O or even printing, it pushes her further to the edge. Anything that needs to make a bunch of GPU driver calls is sure to make her cum, and then it is just a matter of finding the software usage patterns that get her there the fastest.

26 notes


Note

We got the earth and the sky, but has anyone asked about what you think of Abzu?

i love abzu!!! another one i have watched the gdc talk for which you can watch here!!

the two big things in abzu are the fish animations and the overall environment lighting - lets start with fish!! there are a lot of them. and when you want to animate a lot of things, your computer will explode. this is specifically when you animate things with bones, how a lot of computer things are animated

luckily one thing that gpus can be really good at is drawing a tonnnn of the same object really fast, using something called instancing. as long as its the same mesh and material, it can be rendered a ton with just a single draw call (like i am talking hundreds of thousands). so lets make 10 thousand fish. unluckily this doesnt work with skeleton animations. luckily you dont need them! especially with fish

even though all the objects need to be the same mesh and material, doesnt mean they cant have different input. not only that but shaders let you modify individual vertexes, so, what if you just take all 10000 fish and wiggle them along an axis, like this

and give them all slightly different inputs so they arent all doing the exact same animation, maybe by giving them each their own unique number. now you have 10 thousand fish swimming around, wiggling, at almost zero rendering cost

these are all individual 3d models and all their animations are running in the shader !
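a rough sketch of the wiggle idea, written in python instead of actual shader code so it can be run anywhere. this is not the game's shader: the sine wave, the parameter values, the nose-to-tail axis and all the names are assumptions for illustration. the important bit is that every fish runs the exact same function on the exact same mesh, and only the per-fish number changes.

```python
import math


def wiggle_vertex(vertex, time, instance_id,
                  amplitude=0.1, speed=4.0, body_wave=2.0):
    """Offset one vertex sideways with a sine wave that travels along the body."""
    x, y, z = vertex
    # per-fish phase offset so the school is not wiggling in lockstep
    phase = instance_id * 1.618
    # ASSUMPTION: z runs nose to tail, and the wave pushes vertices along x
    offset = amplitude * math.sin(speed * time + body_wave * z + phase)
    return (x + offset, y, z)


def animate_school(mesh, num_fish, time):
    """Apply the same function to the same mesh once per fish instance."""
    return [
        [wiggle_vertex(v, time, fish_id) for v in mesh]
        for fish_id in range(num_fish)
    ]


if __name__ == "__main__":
    # a made-up "fish" with three vertices along its spine
    fish_mesh = [(0.0, 0.0, 0.0), (0.0, 0.0, 0.5), (0.0, 0.0, 1.0)]
    for fish in animate_school(fish_mesh, num_fish=3, time=0.25):
        print([round(v[0], 3) for v in fish])
```

on a gpu the loop over fish and vertices disappears: the vertex shader runs this math for every vertex of every instance in parallel, which is why no bones are needed.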

the other way they animated fish without giving them bones was through something called blendshapes - these are usually used for stuff like facial animations, where you move vertices around to your desired "shape" (so like maybe your default face is :| but you edit the vertices so your character goes :> etc), and keep track of the difference between each vertex's position and its original position so you can move it whenever you want

that doesnt need any bones so they used this for things like fish going CHOMP and fish making sharp turns
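blendshapes boil down to "store the difference, then add back some fraction of it", which fits in a few lines. a minimal python sketch, with a made-up two-vertex mesh and hypothetical function names, not the game's actual code:

```python
def make_blendshape(base, target):
    """Store the per-vertex difference between a sculpted target and the base mesh."""
    return [
        tuple(t - b for t, b in zip(target_v, base_v))
        for base_v, target_v in zip(base, target)
    ]


def apply_blendshapes(base, shapes, weights):
    """Move each base vertex by the weighted sum of every shape's delta."""
    result = []
    for i, vertex in enumerate(base):
        moved = list(vertex)
        for deltas, weight in zip(shapes, weights):
            for axis in range(3):
                moved[axis] += weight * deltas[i][axis]
        result.append(tuple(moved))
    return result


if __name__ == "__main__":
    # ASSUMPTION: a toy "jaw" with one fixed vertex and one that opens upward
    closed = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
    chomp = [(0.0, 1.0, 0.0), (1.0, 0.0, 0.0)]
    chomp_shape = make_blendshape(closed, chomp)
    for weight in (0.0, 0.5, 1.0):
        print(weight, apply_blendshapes(closed, [chomp_shape], [weight]))
```

a weight of 0 gives the original mesh, 1 gives the sculpted shape, and anything in between blends the two, so animating a chomp is just animating one number.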

for the actual environment, they experimented with a bunch of things like using actual volumetric lighting, but in the end they found that just using fog worked best!! they did tweak it a bit though - they had a "zone" between where the fog started to get thick and when the fog just ended up being a solid color where they dimmed any lighting - this really helped the background geometry stick out and give that underwater feel (left is without dimming the lights, right is with dimming the lights!! fun to think about how firewatch did something similar but changing the fog color based on depth rather than literally dimming the lighting)

they also let different volumes have their own fog value, so if there was say a cave off in the distance, it could have less fog than the surrounding area for clarity & also made the fog look a bit more volumetric

and the other huge thing that helped was "portal cards" - not an official term but its what they called them, basically just quads they could stick in any place where they needed to make something "stick out", like a cave, or a hilltop that blended with the background too much. the card sampled the depth of objects behind it, and used that 0 to 1 value to map a color to it. and then the closer youd get to these cards, the more transparent theyd get, until youre right on top of it and you dont need the objects to stick out of the background anymore!! here you can see a Me, but very dark, and then i slide the card over it. the black and white is the camera depth of all objects behind the card, minus the depth of the card. and mapping that to a color makes me stick out way more than i was initially!! then as you swim closer to me, the card fades away, until you pass the card completely
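the portal card logic described above can be sketched per pixel like this. it is a guess at the general shape of the technique, not the talk's actual shader: the linear color ramp, the `depth_range` and `fade_distance` values, the colors and all the names are assumptions for illustration.

```python
def clamp01(x):
    """Clamp a number to the 0..1 range."""
    return max(0.0, min(1.0, x))


def lerp_color(a, b, t):
    """Blend from color a to color b by t, where t is 0..1."""
    return tuple(ca + (cb - ca) * t for ca, cb in zip(a, b))


def portal_card_pixel(scene_depth, card_depth, camera_to_card, scene_color,
                      depth_range=50.0, fade_distance=10.0,
                      near_color=(0.0, 0.1, 0.2), far_color=(0.4, 0.8, 0.9)):
    """Shade one pixel of a portal card in front of the scene."""
    # how far behind the card the scene is, squashed to 0..1
    t = clamp01((scene_depth - card_depth) / depth_range)
    # map that 0..1 value to a color
    card_color = lerp_color(near_color, far_color, t)
    # the card gets more transparent as the camera gets closer to it
    opacity = clamp01(camera_to_card / fade_distance)
    return lerp_color(scene_color, card_color, opacity)


if __name__ == "__main__":
    dark_object = (0.02, 0.02, 0.02)
    for distance in (20.0, 5.0, 0.0):
        color = portal_card_pixel(scene_depth=30.0, card_depth=10.0,
                                  camera_to_card=distance,
                                  scene_color=dark_object)
        print(distance, tuple(round(c, 3) for c in color))
```

far from the card you see the depth-mapped color, and by the time the camera reaches the card the pixel is back to the plain scene color, matching the fade described above.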

these portal cards were also used to make the light beams poking out from the surface, theyre just animated a bit!! you can see how the portal cards affect the look of things in this frame breakdown

and one other thing thats pretty prominent that wasnt touched on in the talk is all the caustics on the ground, those little wobbly light things you see underwater. but those were probably? just added to every shader as an "add this caustics texture on top, with the texture mapped to the world x and z position, and only if the object is facing up"

like this !

anyways thats all from me on abzu..!! really pretty game

#anonymous#ask#potion of answers your question#gamedev stuff#long post#i optimize every gif for you guys even if its already under 10mb

295 notes


Photo

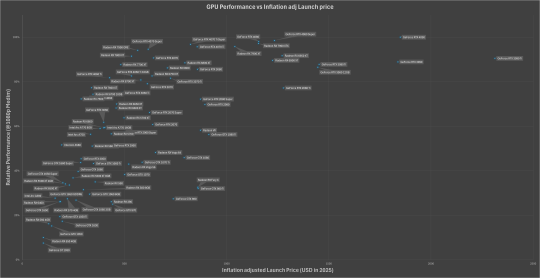

GPU Performance vs. Inflation-Adjusted Prices: A Look at Value Over Time

by ProjectOlio/reddit

15 notes


Photo

The girl in the image exudes a sense of playfulness and adventure. With her arms outstretched, she appears to be embracing the lush forest around her, perhaps welcoming a new day or embarking on an exciting quest. Her attire, reminiscent of traditional Japanese clothing with modern twists, suggests that she is someone who values culture but also enjoys contemporary elements in her life. The forest setting gives off a mystical atmosphere, hinting at stories untold and journeys yet to be embarked upon. It's as if she stands at the threshold between the familiar and the unknown, ready to embrace whatever comes next. [AD] Powered by NVIDIA GPU

24 notes
