#Kubernetes configuration


Text

Top Kubeadm commands to Manage your Kubernetes cluster

#kubernetes #kubeadm #kubernetesmanagement #kubernetesadministration #kubernetesconfig #k8stools #topkubeadmcommands #virtualizationhowto #devops #homelab #selfhosted #containers #containerd #docker

Kubeadm is a command-line tool for managing and configuring Kubernetes clusters for development or production. This guide will look at the top kubeadm commands to manage your Kubernetes cluster and what you need to know. Table of contents: 1. Installing Kubeadm 2. Kubeadm cluster initialization and configuration 3. Adding worker nodes 4. Upgrading your Kubernetes cluster (Applying the upgrade) 5.…


Video

youtube

Session 5 Kubernetes 3 Node Cluster and Dashboard Installation and Confi...

#youtube#In this exciting video tutorial we dive into the world of Kubernetes exploring how to set up a robust 3-node cluster and configure the Kuber


Text

Are you struggling with deployment issues for your Node.js applications? 😫 Tired of hearing "but it works on my machine"? 🤯 Docker is the game-changer you need!

With Docker, you can containerize your Node.js app, ensuring a smooth, consistent, and scalable deployment across all environments. 🌍

🔥 Why Use Docker for Node.js Deployment? ✅ Eliminates Environment Issues – Package dependencies, runtime, and configurations into a single container for a "works everywhere" experience! ✅ Faster & Seamless Deployment – Reduce deployment time with pre-configured images and lightweight containers! ✅ Improved Scalability – Easily scale your app using Docker Swarm or Kubernetes! ✅ CI/CD Integration – Automate and streamline your deployment pipeline with Docker + Jenkins/GitHub Actions! ✅ Better Resource Utilization – Docker uses less memory and boots faster than traditional virtual machines!

💡 Whether you're a DevOps engineer, developer, or tech enthusiast, understanding Docker for Node.js deployment is a must!

📌 Want to master seamless deployment? Read the full article now!

#node js development#nodejs#top nodejs development company#busniess growth#node js development company


Text

What is Argo CD? And When Was Argo CD Established?

What Is Argo CD?

Argo CD is a declarative, GitOps continuous delivery tool for Kubernetes.

In DevOps, Argo CD is a Continuous Delivery (CD) tool that has become popular for delivering applications to Kubernetes. It is based on the GitOps deployment methodology.

When was Argo CD Established?

Argo CD was created at Intuit and made publicly available following Applatix’s 2018 acquisition by Intuit. The founding developers of Applatix, Hong Wang, Jesse Suen, and Alexander Matyushentsev, made the Argo project open-source in 2017.

Why Argo CD?

Application definitions, configurations, and environments should be declarative and version-controlled. Application deployment and lifecycle management should be automated, auditable, and easy to understand.

Getting Started

Quick Start

kubectl create namespace argocd
kubectl apply -n argocd -f https://raw.githubusercontent.com/argoproj/argo-cd/stable/manifests/install.yaml

Further user-oriented documentation is available for additional features. Refer to the upgrade guide if you want to upgrade your Argo CD installation. Developer-oriented resources are available for those interested in building third-party integrations.

How it works

Argo CD defines the intended application state by employing Git repositories as the source of truth, in accordance with the GitOps pattern. There are various approaches to specify Kubernetes manifests:

Kustomize applications

Helm charts

Jsonnet files

Simple YAML/JSON manifest directory

Any custom configuration management tool that is set up as a plugin

Argo CD automates the deployment of the desired application states in the specified target environments. Application deployments can track updates to branches or tags, or be pinned to a specific version of the manifests at a Git commit.
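As a rough illustration of the above, an Argo CD `Application` resource ties a Git source to a target cluster and namespace. This is a minimal sketch, not taken from the original post: the repository URL, path, and application name are placeholders, and the automated sync policy is an optional choice.

```yaml
# Hypothetical Argo CD Application; repo URL, path, and names are placeholders.
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: guestbook            # hypothetical application name
  namespace: argocd          # namespace where Argo CD is installed
spec:
  project: default
  source:
    repoURL: https://github.com/example-org/example-manifests.git  # placeholder
    targetRevision: main     # a branch; use a tag or commit SHA to pin a version
    path: apps/guestbook     # directory of manifests inside the repository
  destination:
    server: https://kubernetes.default.svc   # the cluster Argo CD runs in
    namespace: guestbook
  syncPolicy:
    automated:
      prune: true            # delete resources that were removed from Git
      selfHeal: true         # revert manual changes that drift from Git
```

Leaving out `syncPolicy.automated` keeps syncing manual, which may be preferable while a team is still getting used to the workflow.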

Architecture

Argo CD is implemented as a Kubernetes controller that continuously monitors running applications and compares their current, live state against the desired target state (as specified in the Git repository). A deployed application whose live state deviates from the target state is considered OutOfSync. Argo CD reports and visualizes the differences and provides ways to sync the live state back to the desired target state, either automatically or manually. Any change made to the desired target state in the Git repository can be automatically applied and reflected in the specified target environments.

Components

API Server

The API server is a gRPC/REST server that exposes the API consumed by the Web UI, CLI, and CI/CD systems. Its duties include the following:

Application management and status reporting

Invoking application operations (such as sync, rollback, and user-defined actions)

Repository and cluster credential management (stored as Kubernetes secrets)

RBAC enforcement

Authentication, and auth delegation to outside identity providers

Git webhook event listener/forwarder

Repository Server

An internal service called the repository server keeps a local cache of the Git repository containing the application manifests. When given the following inputs, it is in charge of creating and returning the Kubernetes manifests:

URL of the repository

Revision (tag, branch, commit)

Path of the application

Template-specific configurations: helm values.yaml, parameters
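The four inputs listed above map fairly directly onto the `source` block of an `Application`. The sketch below assumes a Helm chart stored in Git; the repository, chart path, values file name, and the `image.tag` parameter are all hypothetical.

```yaml
# Hypothetical example: each repository-server input appears under spec.source.
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: example-helm-app
  namespace: argocd
spec:
  project: default
  source:
    repoURL: https://github.com/example-org/example-charts.git  # repository URL
    targetRevision: v1.2.3          # revision: tag, branch, or commit
    path: charts/example            # application path inside the repository
    helm:                           # template-specific settings
      valueFiles:
        - values-production.yaml    # placeholder values file in the chart dir
      parameters:
        - name: image.tag           # placeholder parameter override
          value: "1.2.3"
  destination:
    server: https://kubernetes.default.svc
    namespace: example
```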

Application Controller

The application controller is a Kubernetes controller that continuously monitors running applications and compares their actual, live state against the desired target state as specified in the repository. When it detects an OutOfSync application state, it can optionally take corrective action. It is also responsible for invoking any user-defined hooks for lifecycle events (PreSync, Sync, PostSync).
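Lifecycle hooks are ordinary Kubernetes resources marked with Argo CD annotations. As a hedged sketch, a database migration that should run before each sync might be declared as a `Job` like the one below; the image name and command are invented for illustration.

```yaml
# Hypothetical PreSync hook; image and command are placeholders.
apiVersion: batch/v1
kind: Job
metadata:
  name: db-migrate
  annotations:
    argocd.argoproj.io/hook: PreSync                      # run before the sync
    argocd.argoproj.io/hook-delete-policy: HookSucceeded  # clean up on success
spec:
  backoffLimit: 1
  template:
    spec:
      restartPolicy: Never
      containers:
        - name: migrate
          image: example.com/db-migrate:1.0.0   # placeholder image
          command: ["./migrate", "up"]          # placeholder command
```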

Features

Applications are automatically deployed to designated target environments.

Multiple configuration management/templating tools (Kustomize, Helm, Jsonnet, and plain-YAML) are supported.

Ability to manage and deploy to multiple clusters

Integration of SSO (OIDC, OAuth2, LDAP, SAML 2.0, Microsoft, LinkedIn, GitHub, GitLab)

RBAC and multi-tenancy authorization policies

Rollback/roll-anywhere to any application configuration committed in the Git repository

Analysis of the application resources’ health state

Automated visualization and detection of configuration drift

Applications can be synced manually or automatically to their desired state.

Web user interface that shows application activity in real time

CLI for CI integration and automation

Integration of webhooks (GitHub, BitBucket, GitLab)

Access tokens for automation

Hooks for PreSync, Sync, and PostSync to facilitate intricate application rollouts (such as canary and blue/green upgrades)

Application event and API call audit trails

Prometheus metrics

Parameter overrides for overriding Helm parameters in Git

Read more on Govindhtech.com

#ArgoCD#CD#GitOps#API#Kubernetes#Git#Argoproject#News#Technews#Technology#Technologynews#Technologytrends#govindhtech


Text

Load Balancing Web Sockets with K8s/Istio

When load balancing WebSockets in a Kubernetes (K8s) environment with Istio, there are several considerations to ensure persistent, low-latency connections. WebSockets require special handling because they are long-lived, bidirectional connections, which are different from standard HTTP request-response communication. Here’s a guide to implementing load balancing for WebSockets using Istio.

1. Enable WebSocket Support in Istio

By default, Istio supports WebSocket connections, but certain configurations may need tweaking. You should ensure that:

Destination rules and VirtualServices are configured appropriately to allow WebSocket traffic.

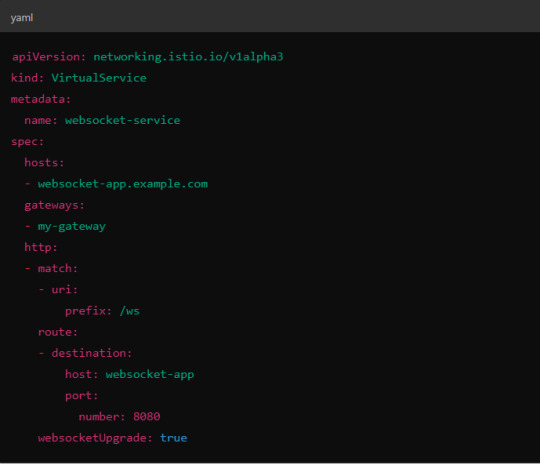

Example VirtualService Configuration.

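Older Istio releases required websocketUpgrade: true on the route to allow WebSocket traffic. That field is deprecated: since Istio 1.0 the proxies handle the WebSocket upgrade automatically, so a plain HTTP route to the WebSocket backend is enough.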
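Since the original screenshot is not available, here is a minimal sketch of what such a VirtualService could look like. The host name, gateway name, service name, path prefix, and port are assumptions made for illustration. The `websocketUpgrade` field is left out because current Istio versions perform the upgrade automatically on HTTP routes.

```yaml
# Hypothetical VirtualService routing WebSocket traffic; all names are placeholders.
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: websocket-vs
spec:
  hosts:
    - "ws.example.com"          # placeholder external host
  gateways:
    - websocket-gateway         # placeholder Gateway name
  http:
    - match:
        - uri:
            prefix: /ws         # assumed WebSocket endpoint path
      route:
        - destination:
            host: websocket-service   # placeholder Kubernetes Service
            port:
              number: 8080
```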

2. Session Affinity (Sticky Sessions)

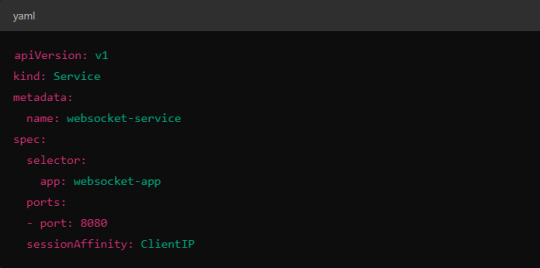

In WebSocket applications, sticky sessions (session affinity) are often necessary to keep a client tied to the same backend pod. An established WebSocket connection stays on the pod that accepted it, but without session affinity a client that reconnects, or that falls back to HTTP polling, can be routed to a different pod and lose any session state held in memory on the original one.

Implementing Session Affinity in Istio.

Session affinity is typically achieved by setting the sessionAffinity field to ClientIP at the Kubernetes service level.

In Istio, you might also control affinity using headers. For example, Istio can route traffic based on headers by configuring a VirtualService to ensure connections stay on the same backend.
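At the Kubernetes level, the `sessionAffinity` field mentioned above sits on the Service spec. The following is a sketch with a hypothetical service name, selector, and port. It is worth verifying that the setting is actually honored for traffic passing through Istio sidecars in your setup; the DestinationRule-based options in the next section are the Istio-native way to get stickiness.

```yaml
# Hypothetical Service with client-IP affinity; names and ports are placeholders.
apiVersion: v1
kind: Service
metadata:
  name: websocket-service
spec:
  selector:
    app: websocket-server       # placeholder pod label
  ports:
    - name: http-ws             # "http-" prefix helps Istio detect the protocol
      port: 8080
      targetPort: 8080
  sessionAffinity: ClientIP     # route a given client IP to the same pod
  sessionAffinityConfig:
    clientIP:
      timeoutSeconds: 10800     # affinity window (3 hours)
```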

3. Load Balancing Strategy

Since WebSocket connections are long-lived, round-robin or random load balancing strategies can lead to unbalanced workloads across pods. To address this, you may consider using least connection or consistent hashing algorithms to ensure that existing connections are efficiently distributed.

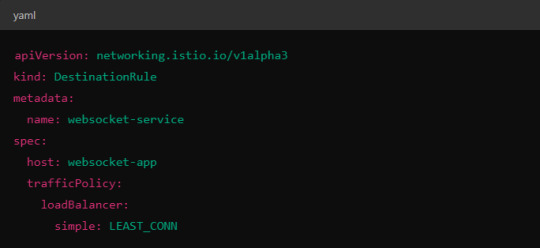

Load Balancer Configuration in Istio.

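Istio allows you to specify different load balancing strategies in the DestinationRule for your services. For WebSockets, a least-request strategy may be more appropriate: current Istio releases call it LEAST_REQUEST, and the older LEAST_CONN name is deprecated in its favor.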
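A DestinationRule selecting that strategy could look roughly like this. The rule name and host are placeholders, and the sketch uses `LEAST_REQUEST`, which recent Istio releases recommend in place of the deprecated `LEAST_CONN` value.

```yaml
# Hypothetical DestinationRule; name and host are placeholders.
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: websocket-least-request
spec:
  host: websocket-service       # placeholder Kubernetes Service
  trafficPolicy:
    loadBalancer:
      simple: LEAST_REQUEST     # prefer endpoints with fewer active requests
```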

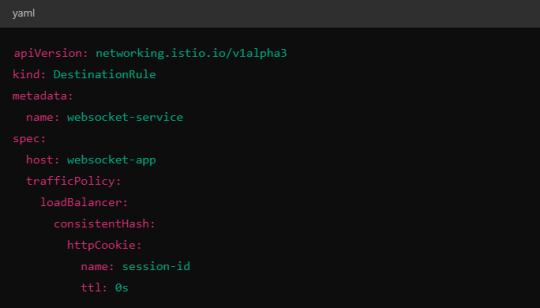

Alternatively, you could use consistent hashing for a more sticky routing based on connection properties like the user session ID.

This configuration ensures that connections with the same session ID go to the same pod.
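A consistent-hash policy keyed on a session header might be sketched as follows. The header name `x-session-id` is an assumption; clients would need to send it on the initial handshake. A host normally has a single DestinationRule, so this would replace any other load-balancer setting for the same host rather than sit beside it.

```yaml
# Hypothetical consistent-hash DestinationRule; header name is an assumption.
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: websocket-sticky
spec:
  host: websocket-service           # placeholder Kubernetes Service
  trafficPolicy:
    loadBalancer:
      consistentHash:
        httpHeaderName: x-session-id   # requests with the same value share a pod
```

Hashing on a cookie (`httpCookie`) or on the source IP (`useSourceIp: true`) are alternatives when clients cannot set a custom header.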

4. Scaling Considerations

WebSocket applications can handle a large number of concurrent connections, so you’ll need to ensure that your Kubernetes cluster can scale appropriately.

Horizontal Pod Autoscaler (HPA): Use an HPA to automatically scale your pods based on metrics like CPU, memory, or custom metrics such as open WebSocket connections.

Istio Autoscaler: You may also need to scale Istio's own components, in particular the ingress gateway, since every external WebSocket connection is held open through its Envoy proxy.
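An HPA combining CPU with a per-pod connection metric might look like the sketch below. The Deployment name, replica bounds, and thresholds are illustrative, and the `websocket_connections` metric is hypothetical: it only works if the application exports it and a custom-metrics adapter makes it available to the HPA.

```yaml
# Hypothetical HPA; names, bounds, and the custom metric are assumptions.
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: websocket-server
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: websocket-server        # placeholder Deployment
  minReplicas: 2
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70  # scale out above 70% average CPU
    - type: Pods
      pods:
        metric:
          name: websocket_connections   # hypothetical custom metric
        target:
          type: AverageValue
          averageValue: "1000"    # target open connections per pod
```

Scaling in terminates pods and drops their open connections, so clients should be prepared to reconnect.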

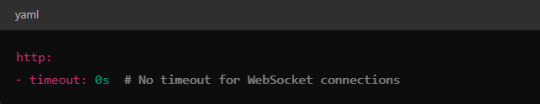

5. Connection Timeouts and Keep-Alive

Ensure that both your WebSocket clients and the Istio proxy (Envoy) are configured for long-lived connections. Some settings that need attention:

Timeouts: In VirtualService, make sure there are no aggressive timeout settings that would prematurely close WebSocket connections.

Keep-Alive Settings: You can also adjust the keep-alive settings at the Envoy level if necessary. Envoy, the proxy used by Istio, supports long-lived WebSocket connections out-of-the-box, but custom keep-alive policies can be configured.
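One place to express keep-alive behaviour is the `connectionPool` section of a DestinationRule. The values below are illustrative rather than recommendations, and the host name is a placeholder. No `timeout` is set on the VirtualService route in this sketch, so no per-request timeout is imposed there.

```yaml
# Hypothetical keep-alive settings; host and durations are placeholders.
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: websocket-keepalive
spec:
  host: websocket-service         # placeholder Kubernetes Service
  trafficPolicy:
    connectionPool:
      tcp:
        connectTimeout: 10s       # time allowed to establish the TCP connection
        tcpKeepalive:
          time: 300s              # idle time before the first keep-alive probe
          interval: 60s           # time between probes
          probes: 3               # unanswered probes before the connection drops
```

As with the load-balancing settings, these would normally be merged into the one DestinationRule that exists for the host.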

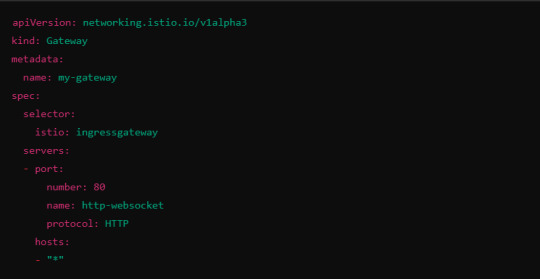

6. Ingress Gateway Configuration

If you're using an Istio Ingress Gateway, ensure that it is configured to handle WebSocket traffic. The gateway should allow for WebSocket connections on the relevant port.

This configuration ensures that the Ingress Gateway can handle WebSocket upgrades and correctly route them to the backend service.
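A Gateway for plain-HTTP WebSocket traffic could be as small as the sketch below. The gateway name and host are placeholders, and the selector assumes the default `istio: ingressgateway` label of a standard installation. For `wss://`, an additional HTTPS server entry with TLS settings would be needed.

```yaml
# Hypothetical Gateway; name and host are placeholders.
apiVersion: networking.istio.io/v1beta1
kind: Gateway
metadata:
  name: websocket-gateway
spec:
  selector:
    istio: ingressgateway         # default ingress gateway label (assumed)
  servers:
    - port:
        number: 80
        name: http
        protocol: HTTP            # WebSocket upgrades ride on HTTP routes
      hosts:
        - "ws.example.com"        # placeholder external host
```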

Summary of Key Steps

Enable WebSocket support in Istio’s VirtualService.

Use session affinity to tie WebSocket connections to the same backend pod.

Choose an appropriate load balancing strategy, such as least connection or consistent hashing.

Set timeouts and keep-alive policies to ensure long-lived WebSocket connections.

Configure the Ingress Gateway to handle WebSocket traffic.

By properly configuring Istio, Kubernetes, and your WebSocket service, you can efficiently load balance WebSocket connections in a microservices architecture.

#kubernetes#websockets#Load Balancing#devops#linux#coding#programming#Istio#virtualservices#Load Balancer#Kubernetes cluster#gateway#python#devlog#github#ansible


Text

Navigating the DevOps Landscape: Opportunities and Roles

DevOps has become a game-changer in the quick-moving world of technology. This dynamic process, whose name is a combination of "Development" and "Operations," is revolutionising the way software is created, tested, and deployed. DevOps is a cultural shift that encourages cooperation, automation, and integration between development and IT operations teams, not merely a set of practises. The outcome? Greater software delivery speed, dependability, and effectiveness.

In this comprehensive guide, we'll delve into the essence of DevOps, explore the key technologies that underpin its success, and uncover the vast array of job opportunities it offers. Whether you're an aspiring IT professional looking to enter the world of DevOps or an experienced practitioner seeking to enhance your skills, this blog will serve as your roadmap to mastering DevOps. So, let's embark on this enlightening journey into the realm of DevOps.

Key Technologies for DevOps:

Version Control Systems: DevOps teams rely heavily on robust version control systems such as Git and SVN. These systems are instrumental in managing and tracking changes in code and configurations, promoting collaboration and ensuring the integrity of the software development process.

Continuous Integration/Continuous Deployment (CI/CD): The heart of DevOps, CI/CD tools like Jenkins, Travis CI, and CircleCI drive the automation of critical processes. They orchestrate the building, testing, and deployment of code changes, enabling rapid, reliable, and consistent software releases.

Configuration Management: Tools like Ansible, Puppet, and Chef are the architects of automation in the DevOps landscape. They facilitate the automated provisioning and management of infrastructure and application configurations, ensuring consistency and efficiency.

Containerization: Docker and Kubernetes, the cornerstones of containerization, are pivotal in the DevOps toolkit. They empower the creation, deployment, and management of containers that encapsulate applications and their dependencies, simplifying deployment and scaling.

Orchestration: Docker Swarm and Amazon ECS take center stage in orchestrating and managing containerized applications at scale. They provide the control and coordination required to maintain the efficiency and reliability of containerized systems.

Monitoring and Logging: The observability of applications and systems is essential in the DevOps workflow. Monitoring and logging tools like the ELK Stack (Elasticsearch, Logstash, Kibana) and Prometheus are the eyes and ears of DevOps professionals, tracking performance, identifying issues, and optimizing system behavior.

Cloud Computing Platforms: AWS, Azure, and Google Cloud are the foundational pillars of cloud infrastructure in DevOps. They offer the infrastructure and services essential for creating and scaling cloud-based applications, facilitating the agility and flexibility required in modern software development.

Scripting and Coding: Proficiency in scripting languages such as Shell, Python, Ruby, and coding skills are invaluable assets for DevOps professionals. They empower the creation of automation scripts and tools, enabling customization and extensibility in the DevOps pipeline.

Collaboration and Communication Tools: Collaboration tools like Slack and Microsoft Teams enhance the communication and coordination among DevOps team members. They foster efficient collaboration and facilitate the exchange of ideas and information.

Infrastructure as Code (IaC): The concept of Infrastructure as Code, represented by tools like Terraform and AWS CloudFormation, is a pivotal practice in DevOps. It allows the definition and management of infrastructure using code, ensuring consistency and reproducibility, and enabling the rapid provisioning of resources.

Job Opportunities in DevOps:

DevOps Engineer: DevOps engineers are the architects of continuous integration and continuous deployment (CI/CD) pipelines. They meticulously design and maintain these pipelines to automate the deployment process, ensuring the rapid, reliable, and consistent release of software. Their responsibilities extend to optimizing the system's reliability, making them the backbone of seamless software delivery.

Release Manager: Release managers play a pivotal role in orchestrating the software release process. They carefully plan and schedule software releases, coordinating activities between development and IT teams. Their keen oversight ensures the smooth transition of software from development to production, enabling timely and successful releases.

Automation Architect: Automation architects are the visionaries behind the design and development of automation frameworks. These frameworks streamline deployment and monitoring processes, leveraging automation to enhance efficiency and reliability. They are the engineers of innovation, transforming manual tasks into automated wonders.

Cloud Engineer: Cloud engineers are the custodians of cloud infrastructure. They adeptly manage cloud resources, optimizing their performance and ensuring scalability. Their expertise lies in harnessing the power of cloud platforms like AWS, Azure, or Google Cloud to provide robust, flexible, and cost-effective solutions.

Site Reliability Engineer (SRE): SREs are the sentinels of system reliability. They focus on maintaining the system's resilience through efficient practices, continuous monitoring, and rapid incident response. Their vigilance ensures that applications and systems remain stable and performant, even in the face of challenges.

Security Engineer: Security engineers are the guardians of the DevOps pipeline. They integrate security measures seamlessly into the software development process, safeguarding it from potential threats and vulnerabilities. Their role is crucial in an era where security is paramount, ensuring that DevOps practices are fortified against breaches.

As DevOps continues to redefine the landscape of software development and deployment, gaining expertise in its core principles and technologies is a strategic career move. ACTE Technologies offers comprehensive DevOps training programs, led by industry experts who provide invaluable insights, real-world examples, and hands-on guidance. ACTE Technologies's DevOps training covers a wide range of essential concepts, practical exercises, and real-world applications. With a strong focus on certification preparation, ACTE Technologies ensures that you're well-prepared to excel in the world of DevOps. With their guidance, you can gain mastery over DevOps practices, enhance your skill set, and propel your career to new heights.


Text

Ansible Collections: Extending Ansible’s Capabilities

Ansible is a powerful automation tool used for configuration management, application deployment, and task automation. One of the key features that enhances its flexibility and extensibility is the concept of Ansible Collections. In this blog post, we'll explore what Ansible Collections are, how to create and use them, and look at some popular collections and their use cases.

Introduction to Ansible Collections

Ansible Collections are a way to package and distribute Ansible content. This content can include playbooks, roles, modules, plugins, and more. Collections allow users to organize their Ansible content and share it more easily, making it simpler to maintain and reuse.

Key Features of Ansible Collections:

Modularity: Collections break down Ansible content into modular components that can be independently developed, tested, and maintained.

Distribution: Collections can be distributed via Ansible Galaxy or private repositories, enabling easy sharing within teams or the wider Ansible community.

Versioning: Collections support versioning, allowing users to specify and depend on specific versions of a collection.

How to Create and Use Collections in Your Projects

Creating and using Ansible Collections involves a few key steps. Here’s a guide to get you started:

1. Setting Up Your Collection

To create a new collection, you can use the ansible-galaxy command-line tool:

ansible-galaxy collection init my_namespace.my_collection

This command sets up a basic directory structure for your collection:

my_namespace/
└── my_collection/
    ├── docs/
    ├── plugins/
    │   ├── modules/
    │   ├── inventory/
    │   └── ...
    ├── roles/
    ├── playbooks/
    ├── README.md
    └── galaxy.yml

2. Adding Content to Your Collection

Populate your collection with the necessary content. For example, you can add roles, modules, and plugins under the respective directories. Update the galaxy.yml file with metadata about your collection.
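For reference, a minimal `galaxy.yml` might look like the following. The namespace, name, and version match the examples in this post; the author, description, license, tags, and repository URL are placeholders to replace with your own.

```yaml
# Hypothetical galaxy.yml; author, description, and repository are placeholders.
namespace: my_namespace
name: my_collection
version: 1.0.0                  # semantic version used in the tarball name
readme: README.md
authors:
  - Your Name <you@example.com>
description: Example collection used for illustration
license:
  - GPL-3.0-or-later
tags:
  - example
dependencies: {}                # other collections this one requires, if any
repository: https://github.com/example-org/my_collection
```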

3. Building and Publishing Your Collection

Once your collection is ready, you can build it using the following command:

ansible-galaxy collection build

This command creates a tarball of your collection, which you can then publish to Ansible Galaxy or a private repository:

ansible-galaxy collection publish my_namespace-my_collection-1.0.0.tar.gz

4. Using Collections in Your Projects

To use a collection in your Ansible project, specify it in your requirements.yml file:

collections:
  - name: my_namespace.my_collection
    version: 1.0.0

Then, install the collection using:

ansible-galaxy collection install -r requirements.yml

You can now use the content from the collection in your playbooks:

---
- name: Example Playbook
  hosts: localhost
  tasks:
    - name: Use a module from the collection
      my_namespace.my_collection.my_module:
        param: value

Popular Collections and Their Use Cases

Here are some popular Ansible Collections and how they can be used:

1. community.general

Description: A collection of modules, plugins, and roles that are not tied to any specific provider or technology.

Use Cases: General-purpose tasks like file manipulation, network configuration, and user management.

2. amazon.aws

Description: Provides modules and plugins for managing AWS resources.

Use Cases: Automating AWS infrastructure, such as EC2 instances, S3 buckets, and RDS databases.

3. ansible.posix

Description: A collection of modules for managing POSIX systems.

Use Cases: Tasks specific to Unix-like systems, such as managing users, groups, and file systems.

4. cisco.ios

Description: Contains modules and plugins for automating Cisco IOS devices.

Use Cases: Network automation for Cisco routers and switches, including configuration management and backup.

5. kubernetes.core

Description: Provides modules for managing Kubernetes resources.

Use Cases: Deploying and managing Kubernetes applications, services, and configurations.
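To give a feel for how one of these collections is used, here is a small playbook sketch built on the `kubernetes.core.k8s` module. It assumes the collection is installed, the Kubernetes Python client is available on the control node, and a working kubeconfig exists; the namespace name `demo` is arbitrary.

```yaml
# Hypothetical playbook using kubernetes.core; the namespace name is arbitrary.
---
- name: Create a namespace with kubernetes.core
  hosts: localhost
  gather_facts: false
  tasks:
    - name: Ensure the demo namespace exists
      kubernetes.core.k8s:
        state: present            # create the resource if it is missing
        definition:
          apiVersion: v1
          kind: Namespace
          metadata:
            name: demo
```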

Conclusion

Ansible Collections significantly enhance the modularity, distribution, and reusability of Ansible content. By understanding how to create and use collections, you can streamline your automation workflows and share your work with others more effectively. Explore popular collections to leverage existing solutions and extend Ansible’s capabilities in your projects.

For more details click www.qcsdclabs.com

#redhatcourses#information technology#linux#containerorchestration#container#kubernetes#containersecurity#docker#dockerswarm#aws


Text

Journey to Devops

The concept of “DevOps” has been gaining traction in the IT sector for several years. It involves promoting teamwork and interaction between software developers and IT operations groups to enhance the speed and reliability of software delivery. This strategy has become widely accepted as companies strive to deliver software that meets customer needs and to maintain an edge in the industry. In this article we will explore the steps to becoming a DevOps Engineer.

Step 1: Get familiar with the basics of Software Development and IT Operations:

To pursue a career as a DevOps Engineer, it is crucial to have a solid grasp of software development and IT operations. Familiarity with programming languages like Python, Java, Ruby or PHP is essential. Additionally, knowledge of operating systems, databases and networking is vital.

Step 2: Learn the principles of DevOps:

It is crucial to comprehend and apply the principles of DevOps. Automation, continuous integration, continuous deployment and continuous monitoring are aspects that need to be understood and implemented. It is vital to learn how these principles function and how to carry them out efficiently.

Step 3: Familiarize yourself with the DevOps toolchain:

Git: Git, a distributed version control system, is extensively used by DevOps teams for code repository management. It helps track code changes, facilitates collaboration among team members, and preserves a record of modifications made to the codebase.

Ansible: Ansible is an open-source tool used for managing configurations, deploying applications, and automating tasks. It simplifies infrastructure management and saves time on repetitive tasks.

Docker: Docker is a containerization platform that allows DevOps engineers to bundle applications and their dependencies into containers. This ensures consistency and compatibility across environments, from development to production.

Kubernetes: Kubernetes is an open-source container orchestration platform that helps manage and scale containers. It helps automate the deployment, scaling, and management of applications and micro-services.

Jenkins: Jenkins is an open-source automation server that helps automate the process of building, testing, and deploying software. It helps to automate repetitive tasks and improve the speed and efficiency of the software delivery process.

Nagios: Nagios is an open-source monitoring tool that helps monitor the health and performance of IT infrastructure. It also helps identify and resolve issues in real time, supporting the high availability and reliability of IT systems.

Terraform: Terraform is an infrastructure as code (IaC) tool that helps manage and provision IT infrastructure. It automates the process of provisioning and configuring IT resources and ensures consistency between development and production environments.

Step 4: Gain practical experience:

The best way to gain practical experience is by working on real projects and bootcamps. You can start by contributing to open-source projects or participating in coding challenges and hackathons. You can also attend workshops and online courses to improve your skills.

Step 5: Get certified:

Getting certified in DevOps can help you stand out from the crowd and demonstrate your expertise to employers. Some of the most popular certifications are:

Certified Kubernetes Administrator (CKA)

AWS Certified DevOps Engineer

Microsoft Certified: Azure DevOps Engineer Expert

AWS Certified Cloud Practitioner

Step 6: Build a strong professional network:

Networking is one of the most important parts of becoming a DevOps Engineer. You can join online communities, attend conferences, join webinars and connect with other professionals in the field. This will help you stay up-to-date with the latest developments and also help you find job opportunities and success.

Conclusion:

You can start your journey towards a successful career in DevOps. The most important thing is to be passionate about your work and continuously learn and improve your skills. With the right skills, experience, and network, you can achieve great success in this field and earn valuable experience.


Text

Microk8s vs k3s: Lightweight Kubernetes distribution showdown

#homelab #kubernetes #microk8svsk3scomparison #lightweightkubernetesdistributions #k3sinstallationguide #microk8ssnappackagetutorial #highavailabilityinkubernetes #k3s #microk8s #portainer

Especially if you are into running Kubernetes in the home lab, you may look for a lightweight Kubernetes distribution. Two distributions that stand out are Microk8s and k3s. Let’s take a look at Microk8s vs k3s and discover the main differences between these two options, focusing on various aspects like memory usage, high availability, and k3s and microk8s compatibility. Table of contents: What is…


#container runtimes and configurations#edge computing with k3s and microk8s#High Availability in Kubernetes#k3s installation guide#kubernetes cluster resources#Kubernetes on IoT devices#lightweight kubernetes distributions#memory usage optimization#microk8s snap package tutorial#microk8s vs k3s comparison


Text

Navigating the DevOps Landscape: A Beginner's Comprehensive Roadmap

In the dynamic realm of software development, the DevOps methodology stands out as a transformative force, fostering collaboration, automation, and continuous enhancement. For newcomers eager to immerse themselves in this revolutionary culture, this all-encompassing guide presents the essential steps to initiate your DevOps expedition.

Grasping the Essence of DevOps Culture: DevOps transcends mere tool usage; it embodies a cultural transformation that prioritizes collaboration and communication between development and operations teams. Begin by comprehending the fundamental principles of collaboration, automation, and continuous improvement.

Immerse Yourself in DevOps Literature: Kickstart your journey by delving into indispensable DevOps literature. "The Phoenix Project" by Gene Kim, Kevin Behr, and George Spafford, along with "The DevOps Handbook" by Gene Kim, Jez Humble, Patrick Debois, and John Willis, provides invaluable insights into the theoretical underpinnings and practical implementations of DevOps.

Online Courses and Tutorials: Harness the educational potential of online platforms like Coursera, edX, and Udacity. Seek courses covering pivotal DevOps tools such as Git, Jenkins, Docker, and Kubernetes. These courses will furnish you with a robust comprehension of the tools and processes integral to the DevOps terrain.

Practical Application: While theory is crucial, hands-on experience is paramount. Establish your own development environment and embark on practical projects. Implement version control, construct CI/CD pipelines, and deploy applications to acquire firsthand experience in applying DevOps principles.

Explore the Realm of Configuration Management: Configuration management is a pivotal facet of DevOps. Familiarize yourself with tools like Ansible, Puppet, or Chef, which automate infrastructure provisioning and configuration, ensuring uniformity across diverse environments.

Containerization and Orchestration: Delve into the universe of containerization with Docker and orchestration with Kubernetes. Containers provide uniformity across diverse environments, while orchestration tools automate the deployment, scaling, and management of containerized applications.

Continuous Integration and Continuous Deployment (CI/CD): Integral to DevOps is CI/CD. Gain proficiency in Jenkins, Travis CI, or GitLab CI to automate code change testing and deployment. These tools enhance the speed and reliability of the release cycle, a central objective in DevOps methodologies.

Grasp Networking and Security Fundamentals: Expand your knowledge to encompass networking and security basics relevant to DevOps. Comprehend how security integrates into the DevOps pipeline, embracing the principles of DevSecOps. Gain insights into infrastructure security and secure coding practices to ensure robust DevOps implementations.

Embarking on a DevOps expedition demands a comprehensive strategy that amalgamates theoretical understanding with hands-on experience. By grasping the cultural shift, exploring key literature, and mastering essential tools, you are well-positioned to evolve into a proficient DevOps practitioner, contributing to the triumph of contemporary software development.


Video

youtube

Session 5 Kubernetes 3 Node Cluster and Dashboard Installation and Confi...

#youtube#Kubernetes 3 Node Cluster and Dashboard Installation and Configuration with Podman 🚀 In this exciting video tutorial we dive into the worl


Text

Demystifying Microsoft Azure Cloud Hosting and PaaS Services: A Comprehensive Guide

In the rapidly evolving landscape of cloud computing, Microsoft Azure has emerged as a powerful player, offering a wide range of services to help businesses build, deploy, and manage applications and infrastructure. One of the standout features of Azure is its Cloud Hosting and Platform-as-a-Service (PaaS) offerings, which enable organizations to harness the benefits of the cloud while minimizing the complexities of infrastructure management. In this comprehensive guide, we'll dive deep into Microsoft Azure Cloud Hosting and PaaS Services, demystifying their features, benefits, and use cases.

Understanding Microsoft Azure Cloud Hosting

Cloud hosting, as the name suggests, involves hosting applications and services on virtual servers that are accessed over the internet. Microsoft Azure provides a robust cloud hosting environment, allowing businesses to scale up or down as needed, pay for only the resources they consume, and reduce the burden of maintaining physical hardware. Here are some key components of Azure Cloud Hosting:

Virtual Machines (VMs): Azure offers a variety of pre-configured virtual machine sizes that cater to different workloads. These VMs can run Windows or Linux operating systems and can be easily scaled to meet changing demands.

Azure App Service: This PaaS offering allows developers to build, deploy, and manage web applications without dealing with the underlying infrastructure. It supports various programming languages and frameworks, making it suitable for a wide range of applications.

Azure Kubernetes Service (AKS): For containerized applications, AKS provides a managed Kubernetes service. Kubernetes automates the deployment, scaling, and management of containerized applications, and AKS reduces the operational load further by running the Kubernetes control plane for you.

Exploring Azure Platform-as-a-Service (PaaS) Services

Platform-as-a-Service (PaaS) takes cloud hosting a step further by abstracting away even more of the infrastructure management, allowing developers to focus primarily on building and deploying applications. Azure offers an array of PaaS services that cater to different needs:

Azure SQL Database: This fully managed relational database service eliminates the need for database administration tasks such as patching and backups. It offers high availability, security, and scalability for your data.

Azure Cosmos DB: For globally distributed, highly responsive applications, Azure Cosmos DB is a NoSQL database service that guarantees low-latency access and automatic scaling.

Azure Functions: A serverless compute service, Azure Functions allows you to run code in response to events without provisioning or managing servers. It's ideal for event-driven architectures.

Azure Logic Apps: This service enables you to automate workflows and integrate various applications and services without writing extensive code. It's great for orchestrating complex business processes.

Benefits of Azure Cloud Hosting and PaaS Services

Scalability: Azure's elasticity allows you to scale resources up or down based on demand. This ensures optimal performance and cost efficiency.

Cost Management: With pay-as-you-go pricing, you only pay for the resources you use. Azure also provides cost management tools to monitor and optimize spending.

High Availability: Azure's data centers are distributed globally, providing redundancy and ensuring high availability for your applications.

Security and Compliance: Azure offers robust security features and compliance certifications, helping you meet industry standards and regulations.

Developer Productivity: PaaS services like Azure App Service and Azure Functions streamline development by handling infrastructure tasks, allowing developers to focus on writing code.

Use Cases for Azure Cloud Hosting and PaaS

Web Applications: Azure App Service is ideal for hosting web applications, enabling easy deployment and scaling without managing the underlying servers.

Microservices: Azure Kubernetes Service supports the deployment and orchestration of microservices, making it suitable for complex applications with multiple components.

Data-Driven Applications: Azure's PaaS offerings like Azure SQL Database and Azure Cosmos DB are well-suited for applications that rely heavily on data storage and processing.

Serverless Architecture: Azure Functions and Logic Apps are well suited to building serverless applications that respond to events in real time.

In conclusion, Microsoft Azure's Cloud Hosting and PaaS Services provide businesses with the tools they need to harness the power of the cloud while minimizing the complexities of infrastructure management. With scalability, cost-efficiency, and a wide array of services, Azure empowers developers and organizations to innovate and deliver impactful applications. Whether you're hosting a web application, managing data, or adopting a serverless approach, Azure has the tools to support your journey into the cloud.

#Microsoft Azure#Internet of Things#Azure AI#Azure Analytics#Azure IoT Services#Azure Applications#Microsoft Azure PaaS


Text

Enterprise Kubernetes Storage with Red Hat OpenShift Data Foundation (DO370)

In the era of cloud-native transformation, data is the fuel powering everything from mission-critical enterprise apps to real-time analytics platforms. However, as Kubernetes adoption grows, many organizations face a new set of challenges: how to manage persistent storage efficiently, reliably, and securely across distributed environments.

To solve this, Red Hat OpenShift Data Foundation (ODF) emerges as a powerful solution — and the DO370 training course is designed to equip professionals with the skills to deploy and manage this enterprise-grade storage platform.

🔍 What is Red Hat OpenShift Data Foundation?

OpenShift Data Foundation is an integrated, software-defined storage solution that delivers scalable, resilient, and cloud-native storage for Kubernetes workloads. Built on Ceph and Rook, ODF supports block, file, and object storage within OpenShift, making it an ideal choice for stateful applications like databases, CI/CD systems, AI/ML pipelines, and analytics engines.

🎯 Why Learn DO370?

The DO370: Red Hat OpenShift Data Foundation course is specifically designed for storage administrators, infrastructure architects, and OpenShift professionals who want to:

✅ Deploy ODF on OpenShift clusters using best practices.

✅ Understand the architecture and internal components of Ceph-based storage.

✅ Manage persistent volumes (PVs), storage classes, and dynamic provisioning.

✅ Monitor, scale, and secure Kubernetes storage environments.

✅ Troubleshoot common storage-related issues in production.

🛠️ Key Features of ODF for Enterprise Workloads

1. Unified Storage (Block, File, Object)

Eliminate silos with a single platform that supports diverse workloads.

2. High Availability & Resilience

ODF is designed for fault tolerance and self-healing, ensuring business continuity.

3. Integrated with OpenShift

Full integration with the OpenShift Console, Operators, and CLI for seamless Day 1 and Day 2 operations.

4. Dynamic Provisioning

Simplifies persistent storage allocation, reducing manual intervention.

5. Multi-Cloud & Hybrid Cloud Ready

Store and manage data across on-prem, public cloud, and edge environments.
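To make the dynamic provisioning feature above more concrete, here is a minimal sketch of the request an application makes for storage: a PersistentVolumeClaim that names a storage class and a size. The manifest fields are standard Kubernetes ones, but the claim name and the storage class name are hypothetical; the classes actually available depend on how ODF is installed on your cluster (they can be listed with `oc get storageclass`).

```python
import json


def build_pvc(name, storage_class, size="10Gi", access_mode="ReadWriteOnce"):
    """Return a PersistentVolumeClaim manifest as a plain dict."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": [access_mode],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


# "postgres-data" and "example-block-storage" are hypothetical names.
pvc = build_pvc("postgres-data", "example-block-storage")
print(json.dumps(pvc, indent=2))
```

The printed JSON can be saved to a file and submitted with `oc apply -f` or `kubectl apply -f`, since both accept JSON manifests. With dynamic provisioning, the cluster then creates a matching volume without an administrator preparing one by hand.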

📘 What You Will Learn in DO370

Installing and configuring ODF in an OpenShift environment.

Creating and managing storage resources using the OpenShift Console and CLI.

Implementing security and encryption for data at rest.

Monitoring ODF health with Prometheus and Grafana.

Scaling the storage cluster to meet growing demands.

🧠 Real-World Use Cases

Databases: PostgreSQL, MySQL, MongoDB with persistent volumes.

CI/CD: Jenkins with persistent pipelines and storage for artifacts.

AI/ML: Store and manage large datasets for training models.

Kafka & Logging: High-throughput storage for real-time data ingestion.

👨‍🏫 Who Should Enroll?

This course is ideal for:

Storage Administrators

Kubernetes Engineers

DevOps & SRE teams

Enterprise Architects

OpenShift Administrators aiming to become RHCA in Infrastructure or OpenShift

🚀 Takeaway

If you’re serious about building resilient, performant, and scalable storage for your Kubernetes applications, DO370 is the must-have training. With ODF becoming a core component of modern OpenShift deployments, understanding it deeply positions you as a valuable asset in any hybrid cloud team.

🧭 Ready to transform your Kubernetes storage strategy? Enroll in DO370 and master Red Hat OpenShift Data Foundation today with HawkStack Technologies, your trusted Red Hat Certified Training Partner. For more details, visit www.hawkstack.com.


Text

Containerization and Test Automation Strategies

Containerization is revolutionizing how software is developed, tested, and deployed. It allows QA teams to build consistent, scalable, and isolated environments for testing across platforms. When paired with test automation, containerization becomes a powerful tool for enhancing speed, accuracy, and reliability. Genqe plays a vital role in this transformation.

What is Containerization?

Containerization is a lightweight virtualization method that packages software code and its dependencies into containers. These containers run consistently across different computing environments. This consistency makes it easier to manage environments during testing. Tools like Genqe automate testing inside containers to maximize efficiency and repeatability in QA pipelines.

Benefits of Containerization

Containerization provides numerous benefits like rapid test setup, consistent environments, and better resource utilization. Containers reduce conflicts between environments, speeding up the QA cycle. Genqe supports container-based automation, enabling testers to deploy faster, scale better, and identify issues in isolated, reproducible testing conditions.

Containerization and Test Automation

Containerization complements test automation by offering isolated, predictable environments. It allows tests to be executed consistently across various platforms and stages. With Genqe, automated test scripts can be executed inside containers, enhancing test coverage, minimizing flakiness, and improving confidence in the release process.

Effective Testing Strategies in Containerized Environments

To test effectively in containers, focus on statelessness, fast test execution, and infrastructure-as-code. Adopt microservice testing patterns and parallel execution. Genqe enables test suites to be orchestrated and monitored across containers, ensuring optimized resource usage and continuous feedback throughout the development cycle.
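As a minimal sketch of the parallel-execution idea, the following Python snippet runs independent, stateless checks concurrently using only the standard library. The check functions are hypothetical placeholders; in a real suite each one would exercise a service running in its own container.

```python
from concurrent.futures import ThreadPoolExecutor


def check_login() -> bool:
    # Hypothetical stateless check: it depends on no shared state.
    return "user".upper() == "USER"


def check_cart_total() -> bool:
    # Hypothetical stateless check that uses only its own local data.
    return sum([5, 10, 15]) == 30


def run_in_parallel(checks, max_workers=4):
    """Run each check concurrently and return {check name: passed}."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {check.__name__: pool.submit(check) for check in checks}
        return {name: future.result() for name, future in futures.items()}


results = run_in_parallel([check_login, check_cart_total])
print(results)
```

Because the checks share no state, they can run in any order or at the same time without affecting each other, which is what makes parallel execution safe.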

Implementing a Containerized Test Automation Strategy

Start with containerizing your application and test tools. Integrate your CI/CD pipelines to trigger tests inside containers. Use orchestration tools like Docker Compose or Kubernetes. Genqe simplifies this with container-native automation support, ensuring smooth setup, execution, and scaling of test cases in real time.

Best Approaches for Testing Software in Containers

Use service virtualization, parallel testing, and network simulation to reflect production-like environments. Ensure containers are short-lived and stateless. With Genqe, testers can pre-configure environments, manage dependencies, and run comprehensive test suites that validate both functionality and performance under containerized conditions.

Common Challenges and Solutions

Testing in containers presents challenges like data persistence, debugging, and inter-container communication. Solutions include using volume mounts, logging tools, and health checks. Genqe addresses these by offering detailed reporting, real-time monitoring, and support for mocking and service stubs inside containers, easing test maintenance.
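One way to picture the health-check approach is a small wait loop that polls a dependency before the tests start. This is a sketch under stated assumptions: `wait_until_healthy` is a hypothetical helper, and the probe here is a stand-in for a real call to a container's health endpoint.

```python
import time


def wait_until_healthy(probe, attempts=5, delay_seconds=0.01):
    """Call probe() until it returns True or the attempts run out."""
    for attempt in range(1, attempts + 1):
        if probe():
            return attempt  # number of tries it took
        time.sleep(delay_seconds)
    raise TimeoutError(f"dependency not healthy after {attempts} attempts")


# Hypothetical probe that fails twice before succeeding, standing in for
# an HTTP request to a container's health endpoint.
_responses = iter([False, False, True])
attempts_used = wait_until_healthy(lambda: next(_responses))
print(attempts_used)
```

Failing fast with a clear error when a dependency never becomes healthy tends to be easier to debug than a test that fails later for an unrelated-looking reason.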

Advantages of Genqe in a Containerized World

Genqe enhances containerized testing by providing scalable test execution, seamless integration with Docker/Kubernetes, and cloud-native automation capabilities. It ensures faster feedback, better test reliability, and simplified environment management. Genqe’s platform enables efficient orchestration of parallel and distributed test cases inside containerized infrastructures.

Conclusion

Containerization, when combined with automated testing, empowers modern QA teams to test faster and more reliably. With tools like Genqe, teams can embrace DevOps practices and deliver high-quality software consistently. The future of testing is containerized, scalable, and automated, and Genqe is leading the way.


Text

Cloud Cost Optimization Strategies Every CTO Should Know in 2025

As organizations scale in the cloud, one challenge becomes increasingly clear: managing and optimizing cloud costs. With the promise of scalability and flexibility comes the risk of unexpected expenses, idle resources, and inefficient spending.

In 2025, cloud cost optimization is no longer just a financial concern—it’s a strategic imperative for CTOs aiming to drive innovation without draining budgets. In this blog, we’ll explore proven strategies every CTO should know to control cloud expenses while maintaining performance and agility.

🧾 The Cost Optimization Challenge in the Cloud

The cloud offers a pay-as-you-go model, which is ideal—if you’re disciplined. However, most companies face challenges like:

Overprovisioned virtual machines

Unused storage or idle databases

Redundant services running in the background

Poor visibility into cloud usage across teams

Limited automation of cost governance

These inefficiencies lead to cloud waste, often consuming 30–40% of a company’s monthly cloud budget.

🛠️ Core Strategies for Cloud Cost Optimization

1. 📉 Right-Sizing Resources

Regularly analyze actual usage of compute and storage resources to downsize over-provisioned assets. Choose instance types or container configurations that match your workload’s true needs.
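The right-sizing rule can be sketched as a simple decision based on observed peak utilization. The size ladder and the 40% and 80% thresholds below are hypothetical values chosen for illustration, not provider recommendations.

```python
# Hypothetical size ladder, smallest to largest; not real instance types.
SIZES = ["small", "medium", "large", "xlarge"]


def recommend_size(current, peak_cpu_percent, low=40.0, high=80.0):
    """Suggest one step down if peak CPU is low, one step up if it is high."""
    index = SIZES.index(current)
    if peak_cpu_percent < low and index > 0:
        return SIZES[index - 1]
    if peak_cpu_percent > high and index < len(SIZES) - 1:
        return SIZES[index + 1]
    return current


print(recommend_size("large", peak_cpu_percent=22.0))
print(recommend_size("medium", peak_cpu_percent=65.0))
```

Using peak rather than average utilization is a deliberately cautious choice here, since a workload that averages low but spikes high may not tolerate a smaller size.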

2. ⏱️ Use Auto-Scaling and Scheduling

Enable auto-scaling to adjust resource allocation based on demand. Implement scheduling scripts or policies to shut down dev/test environments during off-hours.
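A scheduling policy for dev/test environments can be as small as one function that decides whether an environment should be up at a given time. This sketch assumes working hours of 08:00 to 19:00 on weekdays; the function name and the hours are illustrative, and the actual start and stop calls to a cloud provider are left out.

```python
from datetime import datetime


def should_be_running(now, start_hour=8, end_hour=19):
    """Return True on weekdays from start_hour (inclusive) to end_hour (exclusive)."""
    is_weekday = now.weekday() < 5  # Monday is 0, Sunday is 6
    return is_weekday and start_hour <= now.hour < end_hour


print(should_be_running(datetime(2025, 3, 12, 10, 30)))  # a Wednesday morning
print(should_be_running(datetime(2025, 3, 15, 10, 30)))  # a Saturday morning
```

A scheduled job could call this function periodically and stop any dev/test environment for which it returns False.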

3. 📦 Leverage Reserved Instances and Savings Plans

For predictable workloads, commit to Reserved Instances (RIs) or Savings Plans. These options can reduce costs by up to 70% compared to on-demand pricing.
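The arithmetic behind a commitment discount is worth working through once. The hourly prices below are hypothetical and chosen only to make the numbers easy to follow; actual discounts depend on the provider, term, and payment option.

```python
HOURS_PER_MONTH = 730  # common approximation: 8,760 hours / 12 months


def monthly_cost(hourly_rate, hours=HOURS_PER_MONTH):
    """Cost of running one instance for the given number of hours."""
    return hourly_rate * hours


def savings_percent(on_demand_rate, committed_rate):
    """Percentage saved by the committed rate relative to on-demand."""
    return (on_demand_rate - committed_rate) / on_demand_rate * 100


# Hypothetical prices for illustration only, not real provider rates.
on_demand = 0.10  # dollars per hour
committed = 0.06  # dollars per hour

print(round(monthly_cost(on_demand), 2))
print(round(monthly_cost(committed), 2))
print(round(savings_percent(on_demand, committed), 1))
```

Keep in mind that a commitment is generally billed whether or not the capacity is used, so the saving only materializes for workloads that run steadily for the whole term.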

4. 🚫 Eliminate Orphaned Resources

Track down unused volumes, unattached IPs, idle load balancers, or stopped instances that still incur charges.
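Finding orphaned resources is essentially a filter over an inventory. The sketch below assumes a hypothetical inventory format (a list of dicts with `kind`, `name`, and attachment fields); a real inventory would come from a provider's API or a CLI export and would have a different shape.

```python
def find_orphans(resources):
    """Return names of resources that look unused but may still be billed."""
    orphans = []
    for resource in resources:
        kind = resource["kind"]
        if kind in ("volume", "ip") and resource.get("attached_to") is None:
            orphans.append(resource["name"])
        elif kind == "load_balancer" and resource.get("targets", 0) == 0:
            orphans.append(resource["name"])
    return orphans


# Hypothetical inventory for illustration.
inventory = [
    {"kind": "volume", "name": "vol-a", "attached_to": "vm-1"},
    {"kind": "volume", "name": "vol-b", "attached_to": None},
    {"kind": "ip", "name": "ip-a", "attached_to": None},
    {"kind": "load_balancer", "name": "lb-a", "targets": 0},
    {"kind": "load_balancer", "name": "lb-b", "targets": 3},
]
print(find_orphans(inventory))
```

It is usually safer to report candidates for review than to delete them automatically, since an unattached volume may still hold data someone needs.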

5. 💼 Centralized Cost Management

Use tools like AWS Cost Explorer, Azure Cost Management, or Google Cloud Billing reports to monitor, allocate, and forecast cloud spend. Consolidate billing across accounts for better control.

🔐 Governance and Cost Policies

✅ Tag Everything

Apply consistent tagging (e.g., environment:dev, owner:teamA) to group and track costs effectively.
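A tagging policy is easier to enforce when it can be checked automatically. This sketch treats the two keys from the example (`environment` and `owner`) as the required set, which is an assumption; your own policy may require more.

```python
REQUIRED_TAGS = {"environment", "owner"}  # keys taken from the example above


def missing_tags(resource_tags):
    """Return the required tag keys a resource lacks, sorted for stable output."""
    return sorted(REQUIRED_TAGS - set(resource_tags))


print(missing_tags({"environment": "dev", "owner": "teamA"}))
print(missing_tags({"environment": "dev"}))
```

A check like this could run in a CI pipeline against infrastructure definitions so that untagged resources are caught before they are created.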

✅ Set Budgets and Alerts

Configure budget thresholds and set up alerts when approaching limits. Enable anomaly detection for cost spikes.
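The two ideas here, threshold alerts and spike detection, can each be sketched in a few lines. The thresholds (50%, 80%, 100%) and the "twice the recent average" rule are illustrative assumptions; the anomaly detection built into cloud providers' tooling is more sophisticated than this.

```python
def budget_alerts(spend, budget, thresholds=(0.5, 0.8, 1.0)):
    """Return the threshold fractions that current spend has reached."""
    return [t for t in thresholds if spend >= budget * t]


def is_spike(daily_costs, today, factor=2.0):
    """Flag today's cost if it exceeds `factor` times the recent average."""
    average = sum(daily_costs) / len(daily_costs)
    return today > average * factor


print(budget_alerts(spend=850, budget=1000))
print(is_spike([100, 110, 90, 100], today=260))
```

Alerting at several thresholds rather than only at 100% gives teams time to react before the budget is actually exceeded.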

✅ Enforce Role-Based Access Control (RBAC)

Restrict who can provision expensive resources. Apply cost guardrails via organization-level policies, such as service control policies (SCPs) in AWS Organizations.

✅ Use Cost Allocation Reports

Assign and report costs by team, application, or business unit to drive accountability.
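At its core, a cost allocation report is a sum of billing line items grouped by a tag. The line-item format below is hypothetical; real billing exports have many more fields, but the grouping logic is the same.

```python
from collections import defaultdict


def allocate_costs(line_items, tag_key="owner"):
    """Sum cost per value of tag_key; untagged items go to 'unallocated'."""
    totals = defaultdict(float)
    for item in line_items:
        owner = item.get("tags", {}).get(tag_key, "unallocated")
        totals[owner] += item["cost"]
    return dict(totals)


# Hypothetical billing line items for illustration.
items = [
    {"cost": 120.0, "tags": {"owner": "teamA"}},
    {"cost": 80.0, "tags": {"owner": "teamB"}},
    {"cost": 30.0, "tags": {"owner": "teamA"}},
    {"cost": 15.0, "tags": {}},
]
print(allocate_costs(items))
```

Reporting the untagged remainder as its own line makes gaps in tagging visible instead of silently dropping that spend from the report.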

📊 Tools to Empower Cost Optimization

Here are some top tools every CTO should consider integrating:

Salzen Cloud: Offers unified dashboards, usage insights, and AI-based optimization recommendations

CloudHealth by VMware: Cost governance, forecasting, and optimization in multi-cloud setups

Apptio Cloudability: Cloud financial management platform for enterprise-level cost allocation

Kubecost: Cost visibility and insights for Kubernetes environments

AWS Trusted Advisor / Azure Advisor / GCP Recommender: Native cloud tools to recommend cost-saving actions

🧠 Advanced Tips for 2025

🔁 Adopt FinOps Culture

Build a cross-functional team (engineering + finance + ops) to drive cloud financial accountability. Make cost discussions part of sprint planning and retrospectives.

☁️ Optimize Multi-Cloud and Hybrid Environments

Use abstraction and management layers to compare pricing models and shift workloads to more cost-effective providers.

🔄 Automate with Infrastructure as Code (IaC)

Define auto-scaling, backup, and shutdown schedules in code. Automation reduces human error and enforces consistency.

🚀 How Salzen Cloud Helps

At Salzen Cloud, we help CTOs and engineering leaders:

Monitor multi-cloud usage in real time

Identify idle resources and right-size infrastructure

Predict usage trends with AI/ML-based models

Set cost thresholds and auto-trigger alerts

Automate cost-saving actions through CI/CD pipelines and Infrastructure as Code

With Salzen Cloud, optimization is not a one-time event—it’s a continuous, intelligent process integrated into every stage of the cloud lifecycle.

✅ Final Thoughts

Cloud cost optimization is not just about cutting expenses—it's about maximizing value. With the right tools, practices, and mindset, CTOs can strike the perfect balance between performance, scalability, and efficiency.

In 2025 and beyond, the most successful cloud leaders will be those who innovate smartly—without overspending.
