#Load testing

Text

Discover the top 8 tools that can supercharge your load testing game in 2023. Load up on performance excellence and ensure your applications run seamlessly! 📊🌐

Text

Check out the 6 best Honeywell safety harnesses you can count on in 2025. These harnesses are made to keep you safe and comfortable while working at heights or in tough conditions. Each one is built to last and designed to fit different needs. Visit Ace Load Testing to learn more and find the right harness for your job.

Text

QA vs. Software Testing: What's the Difference and Why It Matters

In the world of software development, terms like Quality Assurance (QA) and Software Testing are often used interchangeably. However, while both contribute to software quality, they serve distinct purposes.

Think of QA as the blueprint that ensures a house is built correctly, while software testing is the process of inspecting the finished house to ensure there are no cracks, leaks, or faulty wiring. QA is proactive: it prevents defects before they occur. Software testing is reactive: it detects bugs so they can be fixed before deployment.

Understanding the difference between QA and software testing is crucial for organizations to build reliable, high-performing, and customer-friendly software. This blog explores their differences, roles, and why both are essential in modern software development.

What is Quality Assurance (QA)?

Quality Assurance (QA) is a systematic approach to ensuring that software meets defined quality standards throughout the development lifecycle. It focuses on process improvement, defect prevention, and maintaining industry standards to deliver a high-quality product.

Instead of identifying defects after they appear, QA ensures that the development process is optimized to reduce the likelihood of defects from the beginning.

Key Characteristics of QA:

Process-Oriented: QA defines and improves the software development processes to minimize errors.

Preventive Approach: It prevents defects before they arise rather than finding and fixing them later.

Covers the Entire Software Development Lifecycle (SDLC): QA is involved from requirement gathering to software maintenance.

Compliance with Industry Standards: QA ensures the software adheres to ISO, CMMI, Six Sigma, and other quality benchmarks.

Key QA Activities:

Defining Standards & Guidelines – Establishing coding best practices, documentation protocols, and process frameworks.

Process Audits & Reviews – Conducting regular audits to ensure software teams follow industry standards.

Automation & Optimization – Implementing CI/CD (Continuous Integration/Continuous Deployment) to streamline development.

Risk Management – Identifying potential risks and mitigating them before they become major issues.

Example of QA in Action:

A company implementing peer code reviews and automated CI/CD pipelines to ensure all new code follows quality guidelines is an example of QA. This process prevents poor-quality code from reaching the testing phase.

What is Software Testing?

Software Testing is a subset of QA that focuses on evaluating the actual software product to identify defects, errors, and performance issues. It ensures that the software behaves as expected and meets business and user requirements.

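Testing has traditionally been performed after the development phase to verify the correctness, functionality, security, and performance of the application, although unit and integration tests are often run while development is still under way.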

Key Characteristics of Software Testing:

Product-Oriented: Testing ensures the final product works as expected and meets user requirements.

Defect Detection & Fixing: The main goal is to identify and fix bugs before software release.

Different Testing Methods: Includes manual and automated testing, covering functionality, usability, performance, security, and compatibility.

Part of the Software Testing Life Cycle (STLC): Testing occurs after development and follows a structured cycle of planning, execution, and bug tracking.

Types of Software Testing:

Functional Testing: Verifies that the software functions as per the requirements.

Unit Testing: Checks individual components or modules.

Integration Testing: Ensures different modules work together correctly.

System Testing: Tests the complete application to validate its behavior.

Performance Testing: Measures speed, scalability, and responsiveness.

Security Testing: Identifies vulnerabilities to prevent security breaches.

Example of Software Testing in Action:

Running automated UI tests to check if a login form accepts correct credentials and rejects incorrect ones is an example of software testing. This ensures that the application meets user expectations.
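The login example above can be sketched as a pair of automated checks. This is only an illustration at the function level rather than a real UI test: the `login` function, the `VALID_USERS` store, and the sample credentials are all hypothetical stand-ins for whatever the application actually exposes.

```python
# Hypothetical credential store and login check, for illustration only.
VALID_USERS = {"alice": "s3cret!"}


def login(username: str, password: str) -> bool:
    """Return True only when the user exists and the password matches."""
    expected = VALID_USERS.get(username)
    return expected is not None and expected == password


def test_login_accepts_valid_credentials():
    assert login("alice", "s3cret!") is True


def test_login_rejects_invalid_credentials():
    # Wrong password for a known user, and an unknown user.
    assert login("alice", "wrong") is False
    assert login("mallory", "s3cret!") is False


if __name__ == "__main__":
    test_login_accepts_valid_credentials()
    test_login_rejects_invalid_credentials()
    print("login checks passed")
```

A browser-driven version would perform the same two checks through the rendered form instead of calling the function directly.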

Key Differences Between QA and Software Testing

Focus: Quality Assurance (QA) is a process-oriented approach that ensures the entire software development process follows best practices and quality standards to prevent defects. In contrast, software testing is product-oriented and focuses on detecting and fixing bugs in the developed software.

Goal: The primary goal of QA is to prevent defects from occurring in the first place by refining development and testing methodologies. On the other hand, software testing aims to identify and fix defects before the software is released to users.

Scope: QA encompasses the entire Software Development Life Cycle (SDLC), ensuring that each phase—from requirement analysis to deployment—adheres to quality standards. In contrast, software testing is a subset of QA and is mainly concerned with validating the functionality, performance, security, and reliability of the software.

Approach: QA follows a proactive approach by setting up quality checkpoints, code reviews, and documentation processes to reduce the chances of defects. Software testing, however, takes a reactive approach, meaning it focuses on identifying existing issues in the software after the development phase.

Activities Involved: QA activities include process audits, documentation reviews, defining coding standards, implementing CI/CD pipelines, and process optimization. In contrast, software testing involves executing test cases, performing unit testing, integration testing, functional testing, performance testing, and security testing to ensure the software meets the required specifications.

Example of Implementation: A company implementing peer code reviews, automated build testing, and compliance audits as part of its development process is engaging in QA. On the other hand, running test cases on a login page to check if valid credentials allow access while invalid ones do not is an example of software testing.

By understanding these differences, organizations can ensure they integrate both QA and testing effectively, leading to higher software quality, fewer defects, and a better user experience.

Why Both QA and Software Testing Matter

Some organizations mistakenly focus only on testing, believing that identifying and fixing bugs is enough. However, without strong QA practices, defects will continue to arise, increasing development costs and delaying software delivery. Here’s why both QA and testing are crucial:

1. Ensures High-Quality Software

QA minimizes errors from the start, while testing ensures no critical issues reach the end-user.

Organizations following robust QA practices tend to have fewer post-release defects, leading to better product stability.

2. Reduces Cost and Time

A commonly cited rule of thumb is that fixing a bug found during requirement analysis is far cheaper (often quoted as roughly 10x) than fixing it after deployment.

QA ensures that software defects are avoided, reducing the need for excessive testing and bug-fixing later.

3. Enhances User Experience

A well-tested software application performs smoothly without crashes or failures.

Poor QA and testing can result in negative user feedback, harming a company’s reputation.

4. Supports Agile and DevOps Practices

In Agile development, continuous QA ensures each sprint delivers a high-quality product.

DevOps integrates QA automation and continuous testing to speed up deployments.

5. Helps Meet Industry Standards & Compliance

Industries like finance, healthcare, and cybersecurity have strict quality standards.

QA helps demonstrate compliance with regulations and standards such as GDPR, HIPAA, ISO, and PCI DSS.

How to Balance QA and Testing in Your Software Development Process

Implement a Shift-Left Approach: Start QA activities early in the development cycle to identify defects sooner.

Adopt CI/CD Pipelines: Continuous integration and automated testing help streamline both QA and testing efforts.

Use Test Automation Wisely: Automate repetitive test cases but retain manual testing for exploratory and usability testing.

Invest in Quality Culture: Encourage developers to take ownership of quality and follow best practices.

Leverage AI & Machine Learning in Testing: AI-driven test automation tools can improve defect detection and speed up testing.

Conclusion

While QA and software testing are closely related, they are not the same. QA is a preventive, process-oriented approach that ensures quality is built into the development lifecycle. In contrast, software testing is a reactive, product-focused activity that finds and fixes defects before deployment.

Organizations that balance both QA and testing effectively are better placed to build high-quality software with fewer defects that meets user expectations. By implementing strong QA processes alongside thorough testing, companies can save costs, speed up development, and enhance customer satisfaction.

#software testing#quality assurance#automated testing#test automation#automation testing#qa testing#functional testing#performance testing#regression testing#load testing#continuous testing

Text

Manhole Cover Compression Testing Machine

Reliable and Accurate Testing for Manhole Covers

The Manhole Cover Compression Testing Machine is a specialized testing device designed to assess the structural integrity of manhole covers under extreme pressure. This testing machine plays a crucial role in ensuring that manhole covers meet the required safety standards and durability expectations. Whether used for quality control in…

#ASTM Manhole Cover Test#Civil Engineering Testing#Compression Resistance#Compression Strength Test#Compression Testing#Construction Equipment Testing#Custom Testing Solutions#EN 124 Testing#Hydraulic Load Testing#Hydraulic Testing Machine#Industrial Testing Machines#ISO Manhole Cover Standards#Jinan Wangtebei Instrument#Load Cell Testing#Load Testing#Manhole Cover Compliance#Manhole Cover Deformation Test#Manhole Cover Performance Test#Manhole Cover Quality Control#Manhole Cover Strength#Manhole Cover Testing Equipment#manhole cover testing machine#Pressure Test Machine#Testing Equipment for Infrastructure#Testing Machine Manufacturer

Text

Top 5 Reasons Why Load Testing is Important for Web Applications

Load testing is essential for web applications because it verifies that the system can handle high volumes of traffic without performance degradation or crashes. It helps identify potential bottlenecks, enabling developers to optimize scalability and resource management for future growth. By simulating peak usage, load testing also helps deliver a seamless user experience, preventing frustrating delays or downtime. Additionally, it provides insights into the application's reliability under stress, which is crucial for maintaining user trust and minimizing the risk of failures during critical times. Ultimately, load testing enhances performance, scalability, and resilience. Read more: https://bipamerican.com/top-5-reasons-load-testing-important-web-applications

Text

Optimizing Arizona Data Centers with Load Banking Solutions

Ensuring reliability and efficiency in Arizona data center infrastructures is essential for continuous, high-performance operations. A key approach to maintaining this performance is the strategic use of load banks to simulate electrical loads. This process allows data centers to assess their systems under various conditions, identifying potential weaknesses before they lead to costly failures.

Load banks play a crucial role in routine testing of backup generators, power distribution, and uninterruptible power supply (UPS) systems. By using load banks to replicate operational loads, data centers can verify the reliability of their power sources and how they respond to actual demand. This proactive testing also helps in identifying and troubleshooting issues within the electrical infrastructure, so that systems are ready to handle peak loads.

Furthermore, load banking in Arizona's climate is especially beneficial, as it ensures equipment can withstand extreme temperatures and maintain consistent functionality. Routine testing with load banks supports power efficiency and reduces the risk of unexpected outages, empowering data centers to meet high standards of uptime and service continuity.

Text

NAUSICAA OF THE VALLEY OF THE WIND (1984) dir. Hayao Miyazaki

#idk feel like doing new ones#skills still on loading#testing new gif sets!#mine#gifs#animanga#movies#studio ghibli#animationedit#dailyanime#studioghibliedit#anisource#anime#animeedit#fyanimegifs#dailyanimatedgifs#ghibli gifs#ghiblisdaily#ghiblicentral#ghibli#fyghibli#Nausicaä of the Valley of the Wind#nausicaa#Kaze no Tani no Nausicaä#風の谷のナウシカ#oldanimeedit#Hayao Miyazaki

Text

Ensuring your applications perform optimally under various conditions is crucial in today’s digital landscape. Load testing is a critical aspect of performance testing because it helps organizations identify and mitigate performance bottlenecks, ensuring a seamless experience for users. In this whitepaper, we’ll explore the fundamentals of load testing, focusing on the capabilities and benefits of using a powerful load testing tool like LoadView to support your load testing initiatives.

Text

Mastering JMeter Load Testing: Essential Techniques and Best Practices

In the fast-paced world of software development, it’s critical to ensure that your application can handle high traffic and usage. That’s where JMeter load testing comes in. JMeter is a popular open-source tool that allows you to simulate real-world user behavior and test the performance of your application under heavy loads. This type of testing is crucial for identifying and fixing performance bottlenecks before they become major issues for your users.

Whether you’re building a website, a mobile app, or any other type of software, JMeter load testing should be a key part of your development process. So, if you want to deliver a high-quality application that can handle anything your users throw at it, it’s time to start exploring the world of JMeter load testing.

Why Load Testing is Important

Before we dive into the specifics of JMeter load testing, let’s take a moment to discuss why load testing is so important. Simply put, load testing allows you to measure how your application performs under different levels of stress. This can help you identify performance bottlenecks, such as slow response times or high CPU usage, before they become major issues for your users. Load testing can also help you determine how much traffic your application can handle before it starts to experience performance issues.

Without load testing, you run the risk of releasing an application that can’t handle the traffic it receives. This can lead to frustrated users, lost revenue, and damage to your brand reputation. By incorporating load testing into your development process, you can ensure that your application can handle the demands of real-world usage.

Understanding JMeter Architecture

JMeter is a Java-based tool that allows you to simulate real-world user behavior and test the performance of your application under heavy loads. JMeter is designed to be highly extensible and can be used for a wide range of testing tasks, including load testing, functional testing, and regression testing.

At its core, JMeter consists of two main components: the JMeter engine and the JMeter GUI. The JMeter engine is responsible for executing test plans, while the JMeter GUI provides a user-friendly interface for creating and configuring those test plans.

JMeter also supports a wide range of plugins and extensions that can be used to extend its functionality. For example, samplers are available for testing specific protocols, such as HTTP and FTP, and there are plugins for generating reports and analyzing test results.

Setting up JMeter for Load Testing

Before you can start load testing with JMeter, you’ll need to set up your environment. This typically involves downloading and installing JMeter, configuring your test environment, and setting up your test plan.

To get started, you’ll need to download JMeter from the Apache JMeter website. JMeter runs on Java, so a Java runtime must be installed first. Once you’ve unpacked the download, you can launch the JMeter GUI by running jmeter.bat (Windows) or jmeter.sh (Linux/macOS) from the bin directory.

Next, you’ll need to configure your test environment. This typically involves setting up the server or servers that you’ll be testing, as well as any load balancers or other infrastructure components that are involved in your application. You may also need to configure firewalls and other security settings to ensure that your testing environment is secure.

Finally, you’ll need to create your test plan. This involves defining the user behavior that you want to simulate, as well as any other testing scenarios that you want to include. The JMeter GUI provides a wide range of tools and features for creating and configuring test plans, including support for variables, scripting, and data analysis.

Creating a Load Test Plan

Once you’ve set up your environment and created your test plan, you can start configuring JMeter for load testing. In general, a load test plan consists of a set of threads, each of which represents a virtual user. Each thread is responsible for simulating a specific user behavior, such as opening a web page or submitting a form.

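To create a load test plan in JMeter, you’ll typically start by defining your threads in a Thread Group. This involves specifying the number of threads that you want to simulate, along with the ramp-up period and the loop count. Request-level details, such as the user agent or source IP address, are configured on the requests themselves (for example, with an HTTP Header Manager for headers).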

Next, you’ll need to define the actions that each thread will perform. This may include opening web pages, submitting forms, or interacting with other elements of your application. You may also need to set up timers and other delay mechanisms to simulate real-world user behavior.

Finally, you’ll need to configure your test plan to run for a specific duration or until a specific number of requests have been processed. This will help you determine how your application performs under different levels of load.
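The thread model described above can be sketched in a few lines of Python. This is not how JMeter is implemented; it is a minimal sketch of the same idea, in which each worker thread plays one virtual user and repeats an action a fixed number of times. The names `fake_request`, `virtual_user`, and `run_load_test` are hypothetical, and `fake_request` only sleeps instead of calling a real server.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_request() -> bool:
    """Stand-in for one user action (e.g. opening a page); always succeeds."""
    time.sleep(0.001)  # pretend the server took about 1 ms
    return True


def virtual_user(loops: int) -> list[tuple[float, bool]]:
    """One thread acts as one virtual user repeating the action `loops` times."""
    samples = []
    for _ in range(loops):
        start = time.perf_counter()
        ok = fake_request()
        samples.append((time.perf_counter() - start, ok))
    return samples


def run_load_test(users: int, loops: int) -> list[tuple[float, bool]]:
    """Run `users` virtual users concurrently and collect every sample."""
    with ThreadPoolExecutor(max_workers=users) as pool:
        per_user = list(pool.map(virtual_user, [loops] * users))
    return [sample for user_samples in per_user for sample in user_samples]


if __name__ == "__main__":
    results = run_load_test(users=5, loops=10)
    print(f"{len(results)} requests sent")
```

Here `users` plays the role of the thread count and `loops` the role of the loop count; a ramp-up period would correspond to staggering the start of each virtual user.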

Configuring JMeter for Performance Testing

Once you’ve created your load test plan, you’ll need to configure JMeter for performance testing. This typically involves setting up your test environment to simulate real-world usage patterns, as well as configuring JMeter to collect relevant performance metrics.

To simulate real-world usage patterns, you’ll need to configure JMeter to generate realistic traffic patterns. This may involve setting up user sessions, defining user behaviors, and configuring your test plan to run for a specific duration or until a specific number of requests have been processed.

Next, you’ll need to configure JMeter to collect relevant performance metrics. This may include metrics such as response time, throughput, and error rates. JMeter provides a wide range of tools and features for collecting and analyzing performance metrics, including support for custom reports and graphs.
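To make the three metrics named above concrete, here is a small sketch of how they can be computed from raw samples. The function name `summarize` and its inputs are assumptions made for illustration; JMeter's own listeners and reports compute these values for you.

```python
import math


def summarize(elapsed_ms, successes, duration_s):
    """Compute basic load-test metrics from per-request samples.

    elapsed_ms: response time of each request in milliseconds
    successes:  True/False per request, in the same order
    duration_s: wall-clock length of the test in seconds
    """
    count = len(elapsed_ms)
    ordered = sorted(elapsed_ms)
    # Nearest-rank 95th percentile: the value below which 95% of samples fall.
    p95 = ordered[max(0, math.ceil(0.95 * count) - 1)]
    errors = sum(1 for ok in successes if not ok)
    return {
        "avg_ms": sum(elapsed_ms) / count,
        "p95_ms": p95,
        "throughput_rps": count / duration_s,  # requests per second
        "error_rate": errors / count,          # fraction of failed requests
    }


if __name__ == "__main__":
    print(summarize([100, 200, 300, 400], [True, True, False, True], 2.0))
```

Percentiles are usually more informative than the average, because a handful of very slow requests can hide behind a healthy-looking mean.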

Running a Load Test in JMeter

Once you’ve configured JMeter for performance testing, you can start running your load test. This typically involves launching the JMeter engine and executing your test plan. As your test plan runs, JMeter will generate a wide range of performance metrics, including response time, throughput, and error rates. You can use these metrics to identify performance bottlenecks and other issues that may be impacting the performance of your application.
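For anything beyond a quick trial, JMeter is normally run from the command line in non-GUI mode rather than from the GUI. The sketch below only builds that command; it does not execute it. The flags `-n` (non-GUI), `-t` (test plan), `-l` (results file), `-e` (generate report after the run), and `-o` (report output folder) are JMeter's own, while the helper name `jmeter_command` and the file names are hypothetical.

```python
import shlex


def jmeter_command(plan, results, report_dir=None):
    """Build the argument list for a non-GUI JMeter run."""
    cmd = ["jmeter", "-n", "-t", plan, "-l", results]
    if report_dir:
        # -e generates the HTML report at the end of the run, -o says where.
        cmd += ["-e", "-o", report_dir]
    return cmd


if __name__ == "__main__":
    # Prints: jmeter -n -t plan.jmx -l results.jtl -e -o report
    print(shlex.join(jmeter_command("plan.jmx", "results.jtl", "report")))
```

The resulting list can be passed to `subprocess.run` on a machine where `jmeter` is on the PATH. The report folder should be empty or not yet exist.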

Analyzing JMeter Test Results

Once your load test has completed, you’ll need to analyze the results to identify performance bottlenecks and other issues. JMeter provides a wide range of tools and features for analyzing test results, including support for custom reports and graphs. To analyze your test results, you’ll typically start by reviewing the performance metrics generated by JMeter. This may involve looking at metrics such as response time, throughput, and error rates, as well as digging into more detailed data such as thread dumps and log files. Once you’ve identified performance bottlenecks and other issues, you can start working on solutions to address those issues. This may involve optimizing your code, adjusting your architecture, or making other changes to improve the performance of your application.
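As one way to start that review, the sketch below groups results by request label and reports the average response time and error rate for each. It assumes the results were saved as CSV with a header row that includes the `elapsed`, `label`, and `success` columns; the sample data and the function name `per_label_stats` are made up for illustration.

```python
import csv
import io
from collections import defaultdict

# Made-up sample in the shape of a CSV results file (subset of columns).
SAMPLE_JTL = """timeStamp,elapsed,label,responseCode,success
1700000000000,120,Home,200,true
1700000000500,340,Login,200,true
1700000001000,900,Login,500,false
"""


def per_label_stats(jtl_file):
    """Return average response time and error rate for each request label."""
    totals = defaultdict(lambda: {"count": 0, "elapsed_sum": 0, "errors": 0})
    for row in csv.DictReader(jtl_file):
        stats = totals[row["label"]]
        stats["count"] += 1
        stats["elapsed_sum"] += int(row["elapsed"])
        if row["success"].lower() != "true":
            stats["errors"] += 1
    return {
        label: {
            "avg_ms": s["elapsed_sum"] / s["count"],
            "error_rate": s["errors"] / s["count"],
        }
        for label, s in totals.items()
    }


if __name__ == "__main__":
    for label, stats in per_label_stats(io.StringIO(SAMPLE_JTL)).items():
        print(label, stats)
```

Breaking results down by label makes it easier to see which step of a scenario is the bottleneck, rather than looking only at totals.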

Troubleshooting Common JMeter Errors

As with any testing tool, JMeter can sometimes encounter errors or other issues during the testing process. Some common JMeter errors include assertion failures, HTTP errors, and socket timeouts.

To troubleshoot these errors, you’ll typically start by reviewing the logs generated by JMeter. This may involve looking for error messages or other indicators that can help you identify the root cause of the problem. Once you’ve identified the cause of the error, you can start working on solutions to address the issue. This may involve adjusting your test plan, modifying your configuration settings, or making other changes to improve the performance of your application.

Advanced JMeter Features

In addition to its core functionality, JMeter also includes a wide range of advanced features that can be used to extend its capabilities. Some of these features include support for distributed testing, integration with other testing tools and frameworks, and advanced scripting and data analysis capabilities. If you’re looking to take your JMeter testing to the next level, it’s worth exploring some of these advanced features. By leveraging these capabilities, you can further enhance the accuracy and effectiveness of your load testing.

Best Practices for Load Testing with JMeter

To get the most out of your JMeter load testing, it’s important to follow some best practices. Some key best practices for load testing with JMeter include:

Start with a clear testing strategy and plan

Test in a realistic environment that simulates real-world usage patterns

Use realistic user behavior and load patterns

Monitor and analyze performance metrics during testing

Iterate and refine your testing approach based on feedback and results

By following these best practices, you can ensure that your JMeter load testing is accurate, effective, and actionable.

JMeter vs. Other Load Testing Tools

While JMeter is a popular and powerful load testing tool, it’s not the only tool available. Other popular load testing tools include Gatling, LoadRunner, and BlazeMeter. Each of these tools has its own strengths and weaknesses, and the best tool for your needs will depend on your specific testing requirements. Some key factors to consider when evaluating load testing tools include ease of use, flexibility, scalability, and cost.

Conclusion

JMeter load testing is a critical component of any software development process. By simulating real-world user behavior and testing the performance of your application under heavy loads, you can identify and fix performance bottlenecks before they become major issues for your users. Whether you’re building a website, a mobile app, or any other type of software, JMeter load testing should be a key part of your development process. By following best practices and leveraging the advanced features of JMeter, you can ensure that your load testing is accurate, effective, and actionable.

Text

Ace Load Testing is an Australian company that provides reliable load testing services. We help businesses in construction, engineering, and manufacturing check the safety of their structures and equipment. Our team ensures everything meets safety standards to prevent failures. With offices in Dandenong, Traralgon, and Bendigo, we serve clients across Victoria and Australia.

Text

The Integration of RPA and Automation Testing

As technology advances, organizations want to streamline operations across functions while still verifying the quality of the software they build. Robotic Process Automation (RPA) and Automation Testing are two of the technologies driving this shift in how we work and how we define quality. Combined, RPA and Test Automation complement each other, offering greater efficiency, accuracy, and scalability across industries.

In this blog, we will discuss how RPA and Automation Testing can come together, the business benefits, challenges faced and how GhostQA can assist organizations to make the most of this powerful combination.

What is Robotic Process Automation (RPA)?

RPA uses software robots that mimic the actions a human takes across applications and systems, automating repetitive, rule-based work. By taking over tedious tasks such as data entry, report generation, and order processing, RPA frees employees' time for strategic work and reduces the errors that come with manual entry.

When RPA is integrated with automation testing, it can extend software quality assurance to cover complex testing and automation processes.

What is Automation Testing?

Automation Testing is the practice of using a testing tool to execute test cases. Simple as that sounds, it removes manual effort and makes testing accurate, repeatable, and much faster, which helps ensure the quality and reliability of software applications.

Applying RPA to a Test Automation process takes this a step further, making workflows more efficient and helping to handle dynamic scenarios.

How RPA Complements Automation Testing

Automation, efficiency, and accuracy are principles shared by RPA and Automation Testing. Integrating them enables QA teams to automate more sophisticated, multi-system processes and to increase testing coverage. Here’s how they work together:

Automating End-to-End Processes: RPA can automate non-testing activities such as data setup and result validation.

Handling Dynamic Scenarios: RPA bots can adapt to changes at run time, which makes Test Automation more dynamic.

Improved Coverage: They work hand in hand to automate workflows across different systems and applications, providing complete testing coverage.

Benefits of Integrating RPA and Automation Testing

1. Enhanced Efficiency

RPA automates the repetitive, time-consuming manual steps that surround test automation, such as data preparation and environment configuration. Doing this at speed shortens time to market.

2. Broader Test Coverage

RPA extends Test Automation to cover multiple systems, platforms, and devices, helping ensure that all critical areas of an application are validated.

3. Increased Accuracy

RPA and Automation Testing reduce human error by automating even the most sophisticated workflows, thereby providing consistent and reliable test outcomes.

4. Cost Savings

Although initial costs can be higher, the long-term savings are substantial: organizations reduce errors, avoid post-release bugs, and optimize labor costs.

5. Scalability and Flexibility

RPA helps organizations scale their test automation as their systems and applications evolve.

Use Cases of RPA in Test Automation

Test Data Management: RPA bots can generate, adjust, and cleanse test data to produce accurate and consistent results.

Environment Configuration: Automated setup and teardown of environments frees time for work that matters, such as reviewing code.

Cross-Platform Testing: With RPA, tests can be executed automatically on multiple platforms and devices to check compatibility and performance.

Regression Testing: RPA automates large numbers of repetitive regression tests to verify that applications continue to function correctly after changes.

Compliance Testing: RPA can execute scripted test scenarios to ensure that applications comply with regulatory standards.

Challenges of Integrating RPA and Automation Testing

The integration brings many benefits, but it also comes with challenges:

High Initial Costs: Implementing Automation Testing and RPA requires a significant upfront investment.

Skill Gaps: Teams need expertise in both RPA and Test Automation to take advantage of the integration.

Tool Selection: Finding the right fit for the business among the many RPA and automation tools is a complex undertaking.

Maintenance Overhead: Scripts and bots need regular maintenance to keep working as systems change.

How GhostQA Streamlines RPA and Automation Testing Integration

GhostQA is changing the automation testing landscape while also acting as a seamless bridge between RPA and Automation Testing.

Why Choose GhostQA?

Expertise Across Tools: Deep knowledge of RPA and Test Automation tools such as Selenium, Appium, and UiPath.

Custom Solutions: Focused strategies for your unique project needs.

Proven Methods: Well-established frameworks and methodologies for smooth integration.

Ongoing Support: Committed assistance for sustainable success and fast-paced scaling of your automated processes.

By partnering with GhostQA, organizations can make the most of RPA and Automation Testing and address these challenges with tangible results.

Best Practices for Integrating RPA and Automation Testing

Start Small: Begin with a small pilot rollout to test whether the integration works and whether it brings you any advantage.

Choose the Right Tools: Go for technology that is compatible with your tech stack and business goals.

Define Clear Objectives: Identify the processes and use cases in Automation Testing and RPA where maximum value can be achieved.

Regularly Update Automation Scripts: Keep test scripts and RPA bots up to date as applications change.

Monitor Performance: Use analytics and reporting tools to assess how well the integration is working.

The Future of RPA and Automation Testing

As new technologies emerge, the combination of RPA and Test Automation is becoming more efficient. AI-driven bots, machine learning algorithms, and predictive analytics will extend its capabilities further, making testing activities smarter, faster, and more adaptive.

Organizations that embrace this integration today will be well placed to meet tomorrow's challenges and deliver high-quality software products.

Conclusion

With robotic process automation (RPA), automation testing is set to open the next chapter in software quality assurance. This integrated approach makes organizations more effective and dependable by automating complete processes, using model-driven testing, and increasing accuracy and test coverage.

GhostQA is leading this shift by helping businesses get the best out of RPA and Test Automation. GhostQA is with you through the challenges, improving integration with other software and helping you stay one step ahead in a competitive environment.

With capabilities spanning RPA and Automation Testing, GhostQA has what it takes to help you keep pace with change and invest in the future of quality assurance. Contact us today!

#test automation#automation testing#quality assurance#software testing#functional testing#load testing#performance testing#qa testing

Performance testing

Performance testing is a software testing method that evaluates the speed, responsiveness, stability, and load capacity of an application or system under different conditions. The goal of performance testing is to identify and eliminate performance problems so that the system works well in a real-world environment and meets the requirements for…

Never forget a test

Testing is the process of evaluating a system or its component(s) with the intent to find whether it satisfies the specified requirements or not. Testing is executing a system in order to identify any gaps, errors, or missing requirements contrary to the actual requirements. This tutorial will give you a basic understanding of software testing, its types, methods, levels, and other related terminologies.

Code that is not tested can’t be trusted

Bad reputation

“Testing is Too Expensive”: Paying less for testing during software development means paying more for maintenance or correction later. Early testing saves both time and cost in many respects. Cutting costs by skipping testing, however, may result in an improperly designed software application, rendering the product useless.

“Testing is Time-Consuming”: Testing itself is never the time-consuming part. Diagnosing and fixing the errors identified during proper testing, however, is a time-consuming but productive activity.

“Only Fully Developed Products are Tested”: Testing does depend on the source code, but reviewing requirements and developing test cases can proceed independently of the developed code. Moreover, iterative or incremental development life cycle models reduce the dependence of testing on fully developed software.

“Complete Testing is Possible”: It becomes an issue when a client or tester thinks that complete testing is possible. The team may have tested every path it identified, but complete testing is never possible. Some scenarios may never be executed by the test team or the client during the software development life cycle and may be executed only once the project has been deployed.

“A Tested Software is Bug-Free”: No one can claim with absolute certainty that a software application is 100% bug-free even if a tester with superb testing skills has tested the application.

“Testers are Responsible for Quality of Product”: It is a very common misinterpretation that only testers or the testing team should be responsible for product quality. Testers’ responsibilities include reporting bugs to the stakeholders, who then decide whether to fix the bug or release the software. Releasing the software at that point puts more pressure on the testers, as they will be blamed for any error.

“Test Automation should be used wherever possible to Reduce Time”: Yes, it is true that test automation reduces testing time, but it is not possible to start test automation at any time during software development. Test automation should be started when the software has been manually tested and is stable to some extent. Moreover, test automation can never be used if requirements keep changing.

Basic

The ISO/IEC 9126 standard deals with the following aspects to determine the quality of a software application:

Quality model

External metrics

Internal metrics

Quality in use metrics

This standard presents a set of quality attributes for any software, such as:

Functionality

Reliability

Usability

Efficiency

Maintainability

Portability

Functional Testing

This is a type of black-box testing that is based on the specifications of the software to be tested. The application is tested by providing input and examining whether the results conform to the intended functionality. Functional testing of software is conducted on a complete, integrated system to evaluate the system’s compliance with its specified requirements.

There are five steps that are involved while testing an application for functionality:

The determination of the functionality that the intended application is meant to perform.

The creation of test data based on the specifications of the application.

The determination of the expected output based on the test data and the specifications of the application.

The writing of test scenarios and the execution of test cases.

The comparison of actual and expected results based on the executed test cases.

An effective testing practice applies the above steps within every organization's testing policies, which helps the organization maintain the strictest standards of software quality.
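The five steps above can be sketched in Python. This is a minimal illustration, not a prescribed method: the `shipping_fee` function and its specification (orders of 50 or more ship free, smaller orders pay a flat fee of 5) are hypothetical and were invented only to give the steps something to act on.

```python
# Step 1: the functionality under test. Hypothetical spec: orders of 50 or
# more ship free, smaller orders pay a flat fee of 5, negative totals are invalid.
def shipping_fee(order_total: float) -> float:
    if order_total < 0:
        raise ValueError("order total cannot be negative")
    return 0.0 if order_total >= 50 else 5.0


# Steps 2 and 3: test data derived from the spec, paired with the expected output.
TEST_DATA = [
    (0.0, 5.0),
    (49.99, 5.0),
    (50.0, 0.0),
    (120.0, 0.0),
]


def run_functional_tests() -> list:
    """Steps 4 and 5: execute each case and compare actual with expected."""
    results = []
    for order_total, expected in TEST_DATA:
        actual = shipping_fee(order_total)
        results.append((order_total, expected, actual, actual == expected))
    return results


if __name__ == "__main__":
    for order_total, expected, actual, passed in run_functional_tests():
        status = "PASS" if passed else "FAIL"
        print(f"{status}: total={order_total} expected={expected} actual={actual}")
```

The test data deliberately sits on both sides of the boundary value (49.99 and 50.0), since that is where a specification like this one is most likely to be implemented incorrectly.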

Unit Testing

This type of testing is performed by developers before the setup is handed over to the testing team to formally execute the test cases. Developers perform unit testing on the individual units of source code in their assigned areas, using test data that is different from the test data of the quality assurance team.

The goal of unit testing is to isolate each part of the program and show that individual parts are correct in terms of requirements and functionality.
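As a small sketch of what isolating one unit looks like, the example below uses Python's standard `unittest` module. The `apply_discount` function is a hypothetical unit chosen for illustration; it is not taken from any particular project.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical unit under test: reduce a price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    def test_typical_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount_returns_original_price(self):
        self.assertEqual(apply_discount(80.0, 0), 80.0)

    def test_invalid_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(80.0, 120)


if __name__ == "__main__":
    unittest.main()
```

Each test method checks one behavior of the unit on its own, without touching a database, network, or any other part of the program.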

Limitations of Unit Testing:

Testing cannot catch each and every bug in an application. It is impossible to evaluate every execution path in every software application. The same is the case with unit testing.

There is a limit to the number of scenarios and test data that a developer can use to verify a source code. After having exhausted all the options, there is no choice but to stop unit testing and merge the code segment with other units.

Integration Testing

Integration testing is defined as the testing of combined parts of an application to determine if they function correctly. Integration testing can be done in two ways: Bottom-up integration testing and Top-down integration testing.

Bottom-up integration: This testing begins with unit testing, followed by tests of progressively higher-level combinations of units called modules or builds.

Top-down integration: In this testing, the highest-level modules are tested first and progressively, lower-level modules are tested thereafter.

In a comprehensive software development environment, bottom-up testing is usually done first, followed by top-down testing. The process concludes with multiple tests of the complete application, preferably in scenarios designed to mimic actual situations.
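The two directions can be illustrated with a short Python sketch. All class names here (`CheckoutService`, `RealPaymentGateway`, `PaymentGatewayStub`) are hypothetical. The stub stands in for a lower-level module that is not yet integrated, which is one common way to make top-down testing possible.

```python
class PaymentGatewayStub:
    """Stand-in for a lower-level module that is not integrated yet."""

    def charge(self, amount: float) -> bool:
        return amount > 0


class RealPaymentGateway:
    """Hypothetical lower-level module, tested on its own first (bottom-up)."""

    def __init__(self) -> None:
        self.charged = []

    def charge(self, amount: float) -> bool:
        if amount <= 0:
            return False
        self.charged.append(amount)
        return True


class CheckoutService:
    """Higher-level module that depends on a payment gateway."""

    def __init__(self, gateway) -> None:
        self.gateway = gateway

    def checkout(self, prices: list) -> str:
        total = sum(prices)
        return "confirmed" if self.gateway.charge(total) else "rejected"


# Top-down: exercise the high-level module against the stub.
assert CheckoutService(PaymentGatewayStub()).checkout([10.0, 5.0]) == "confirmed"

# Bottom-up: verify the low-level module alone, then the real combination.
gateway = RealPaymentGateway()
assert gateway.charge(15.0) is True
assert CheckoutService(gateway).checkout([]) == "rejected"
assert gateway.charged == [15.0]
```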

System Testing

System testing tests the system as a whole. Once all the components are integrated, the application as a whole is tested rigorously to see that it meets the specified Quality Standards. This type of testing is performed by a specialized testing team.

System testing is important because of the following reasons:

System testing is the first level of testing in the Software Development Life Cycle at which the application is tested as a whole.

The application is tested thoroughly to verify that it meets the functional and technical specifications.

The application is tested in an environment that is very close to the production environment where the application will be deployed.

System testing enables us to test, verify, and validate both the business requirements as well as the application architecture.

Regression Testing

Whenever a change is made to a software application, it is quite possible that other areas within the application have been affected by the change. Regression testing is performed to verify that a fixed bug hasn’t caused a violation of another function or business rule. The intent of regression testing is to ensure that a change, such as a bug fix, does not introduce another fault into the application.

Regression testing is important because of the following reasons:

It minimizes the gaps in testing when a changed application has to be tested.

It verifies that the new changes did not affect any other area of the application.

It mitigates the risks that come with changing the application.

It increases test coverage without compromising timelines.

It increases the speed at which the product can be brought to market.
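A regression suite can be as simple as a fixed set of inputs and expected outputs that is rerun after every change. The sketch below assumes a hypothetical `normalize_username` function and an imagined bug (leading and trailing spaces were not stripped). Both are illustrative only.

```python
def normalize_username(raw: str) -> str:
    """Hypothetical function after a bug fix: surrounding whitespace is now
    stripped (the imagined bug treated ' alice' and 'alice' as different users)."""
    return raw.strip().lower()


# Existing behavior that must keep working after the fix.
REGRESSION_CASES = {
    "Alice": "alice",
    "BOB": "bob",
    "carol_99": "carol_99",
}

# The case that reproduces the fixed bug; it stays in the suite permanently.
BUG_FIX_CASES = {
    "  alice ": "alice",
}


def run_regression_suite() -> list:
    """Return the inputs whose output no longer matches the expectation."""
    failures = []
    for raw, expected in {**REGRESSION_CASES, **BUG_FIX_CASES}.items():
        if normalize_username(raw) != expected:
            failures.append(raw)
    return failures


if __name__ == "__main__":
    print("failures:", run_regression_suite())
```

Keeping the bug-reproducing case in the suite means the same fault cannot quietly return in a later release.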

Acceptance Testing

This is arguably the most important type of testing, as it is conducted by the Quality Assurance Team who will gauge whether the application meets the intended specifications and satisfies the client’s requirement. The QA team will have a set of pre-written scenarios and test cases that will be used to test the application.

As more is learned about the application, further tests can be performed to gauge its accuracy and to check it against the reasons why the project was initiated. Acceptance tests are not only intended to point out simple spelling mistakes, cosmetic errors, or interface gaps, but also to point out any bugs in the application that will result in system crashes or major errors in the application.

By performing acceptance tests on an application, the testing team will deduce how the application will perform in production. There are also legal and contractual requirements for acceptance of the system.

Alpha Testing

This test is the first stage of testing and is performed within the teams (developer and QA teams). Unit testing, integration testing, and system testing, when combined, are known as alpha testing. During this phase, the following aspects will be tested in the application:

Spelling Mistakes

Broken Links

Unclear Directions

The Application will be tested on machines with the lowest specification to test loading times and any latency problems.

Beta Testing

This test is performed after alpha testing has been successfully completed. In beta testing, a sample of the intended audience tests the application. Beta testing is also known as pre-release testing. Beta test versions of software are ideally distributed to a wide audience on the Web, partly to give the program a “real-world” test and partly to provide a preview of the next release. This phase typically proceeds as follows:

Users install and run the application and send their feedback to the project team.

Users report typographical errors, confusing application flow, and even crashes.

With this feedback, the project team can fix the problems before releasing the software to the actual users.

The more issues you fix that solve real user problems, the higher the quality of your application will be.

Having a higher-quality application when you release it to the general public will increase customer satisfaction.

Non-Functional Testing

This section covers testing an application for its non-functional attributes. Non-functional testing checks software against requirements that are non-functional in nature but important, such as performance, security, and user interface.

Some of the important and commonly used non-functional testing types are discussed below.

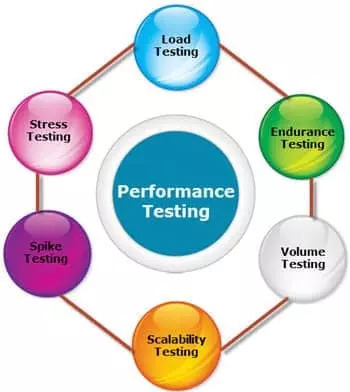

Performance Testing

It is mostly used to identify bottlenecks or performance issues rather than to find bugs in the software. Several factors can lower the performance of software:

Network delay

Client-side processing

Database transaction processing

Load balancing between servers

Data rendering

Performance testing is considered one of the important and mandatory testing types with respect to the following aspects:

Speed (i.e. Response Time, data rendering and accessing)

Capacity

Stability

Scalability

Performance testing can be either qualitative or quantitative and can be divided into different sub-types such as Load testing and Stress testing.

Load Testing

It is the process of testing the behavior of software under load, both in terms of the number of users accessing it and the volume of input data it has to manipulate. It can be done at both normal and peak load conditions. This type of testing identifies the maximum capacity of the software and its behavior at peak time.

Most of the time, load testing is performed with the help of automated tools such as LoadRunner, AppLoader, IBM Rational Performance Tester, Apache JMeter, Silk Performer, Visual Studio Load Test, etc.

Virtual users (VUsers) are defined in the automated testing tool and the script is executed to put the software under load. The number of users can be increased or decreased concurrently or incrementally based upon the requirements.
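The idea of virtual users that are ramped up incrementally can be sketched with the Python standard library alone. This is not how any of the tools named above work internally. `handle_request` is a hypothetical stand-in that only sleeps to simulate server work, where a real script would call the system under test.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request(user_id: int) -> float:
    """Hypothetical stand-in for one request; returns its latency in seconds."""
    start = time.perf_counter()
    time.sleep(0.01)  # simulated server work
    return time.perf_counter() - start


def run_load_step(virtual_users: int) -> dict:
    """Run one request per virtual user concurrently and summarize latency."""
    with ThreadPoolExecutor(max_workers=virtual_users) as pool:
        latencies = list(pool.map(handle_request, range(virtual_users)))
    return {
        "virtual_users": virtual_users,
        "requests": len(latencies),
        "max_latency": max(latencies),
        "avg_latency": sum(latencies) / len(latencies),
    }


def ramp_up(steps: list) -> list:
    """Increase the number of virtual users incrementally, step by step."""
    return [run_load_step(users) for users in steps]


if __name__ == "__main__":
    for summary in ramp_up([5, 10, 20]):
        print(summary)
```

Comparing the latency summaries across steps shows how response time changes as the number of concurrent users grows.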

Stress Testing

Stress testing examines the behavior of software under abnormal conditions. For example, it may include taking away some resources or applying a load beyond the actual load limit.

The aim of stress testing is to find the software's breaking point by applying load to the system and taking away the resources it uses. This can be done by testing different scenarios such as:

Shutdown or restart of network ports randomly

Turning the database on or off

Running different processes that consume resources such as CPU, memory, server, etc.
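Searching for a breaking point can be sketched as a loop that keeps raising the load until the first failure. The example below is a deliberately simplified, deterministic simulation: `BoundedService` and its `capacity` parameter are hypothetical, and a real stress test would drive an actual system rather than a counter.

```python
from typing import Optional


class BoundedService:
    """Hypothetical service that can hold only `capacity` requests at once."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.in_flight = 0

    def accept(self) -> bool:
        if self.in_flight >= self.capacity:
            return False  # overloaded: the request is rejected
        self.in_flight += 1
        return True

    def drain(self) -> None:
        self.in_flight = 0


def find_breaking_point(
    service: BoundedService, start: int, step: int, limit: int
) -> Optional[int]:
    """Raise the load step by step until the first rejected request."""
    load = start
    while load <= limit:
        accepted = sum(service.accept() for _ in range(load))
        service.drain()
        if accepted < load:
            return load
        load += step
    return None  # no failure observed within the tested range


if __name__ == "__main__":
    # Normal resources, then the same search with resources taken away.
    print(find_breaking_point(BoundedService(capacity=100), 20, 20, 200))
    print(find_breaking_point(BoundedService(capacity=50), 20, 20, 200))
```

Lowering `capacity` in the second call mirrors the "taking away resources" scenario: the same load pattern now breaks the service earlier.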

Usability Testing

Usability testing is a black-box technique and is used to identify any error(s) and improvements in the software by observing the users through their usage and operation.

According to Nielsen, usability can be defined in terms of five factors, i.e. efficiency of use, learnability, memorability, errors/safety, and satisfaction. According to him, the usability of a product will be good and the system is usable if it possesses the above factors.

Nigel Bevan and Macleod considered that usability is the quality requirement that can be measured as the outcome of interactions with a computer system. This requirement can be fulfilled and the end-user will be satisfied if the intended goals are achieved effectively with the use of proper resources.

Molich in 2000 stated that a user-friendly system should fulfill the following five goals, i.e., easy to learn, easy to remember, efficient to use, satisfactory to use, and easy to understand.

In addition to the different definitions of usability, there are some standards, quality models, and methods that define usability in the form of attributes and sub-attributes, such as ISO-9126, ISO-9241-11, ISO-13407, and IEEE Std 610.12.

UI vs Usability Testing

UI testing involves testing the Graphical User Interface of the software. UI testing ensures that the GUI functions according to the requirements and is tested in terms of color, alignment, size, and other properties.

On the other hand, usability testing ensures a good and user-friendly GUI that can be easily handled. UI testing can be considered as a sub-part of usability testing.

Security Testing

Security testing involves testing software in order to identify any flaws and gaps from a security and vulnerability point of view. Listed below are the main aspects that security testing should ensure:

Confidentiality

Integrity

Authentication

Availability

Authorization

Non-repudiation

Software is secure against known and unknown vulnerabilities

Software data is secure

Software complies with all security regulations

Input checking and validation

SQL injection attacks

Injection flaws

Session management issues

Cross-site scripting attacks

Buffer overflow vulnerabilities

Directory traversal attacks
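One of the items above, SQL injection, lends itself to a compact test. The sketch below uses Python's built-in `sqlite3` module with an in-memory database. The `users` table, the two lookup functions, and the payload are all hypothetical and are there only to contrast a vulnerable query with a parameterized one.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create a throwaway in-memory database with two users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "a-secret"), ("bob", "b-secret")],
    )
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # Vulnerable: user input is concatenated into the SQL text.
    return conn.execute(f"SELECT name FROM users WHERE name = '{name}'").fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # Parameterized query: the input is passed as data, never as SQL.
    return conn.execute("SELECT name FROM users WHERE name = ?", (name,)).fetchall()


PAYLOAD = "x' OR '1'='1"

conn = make_db()
assert len(find_user_unsafe(conn, PAYLOAD)) == 2      # injection returns every row
assert find_user_safe(conn, PAYLOAD) == []            # payload treated as a plain name
assert find_user_safe(conn, "alice") == [("alice",)]  # normal lookups still work
```

A security test of this kind feeds hostile input to the application and asserts that it is handled as data.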

Portability Testing

Portability testing checks that software is reusable and can be moved from one environment to another. Following are the strategies that can be used for portability testing:

Transferring installed software from one computer to another.

Building an executable (.exe) to run the software on different platforms.

Portability testing can be considered as one of the sub-parts of system testing, as this testing type includes overall testing of a software with respect to its usage over different environments. Computer hardware, operating systems, and browsers are the major focus of portability testing. Some of the pre-conditions for portability testing are as follows:

Software should be designed and coded, keeping in mind the portability requirements.

Unit testing has been performed on the associated components.

Integration testing has been performed.

Test environment has been established.

Test Plan

A test plan outlines the strategy that will be used to test an application, the resources that will be used, the test environment in which testing will be performed, the limitations of the testing, and the schedule of testing activities. Typically the Quality Assurance Team Lead will be responsible for writing a Test Plan.

A test plan includes the following:

Introduction to the Test Plan document

Assumptions while testing the application

List of test cases included in testing the application

List of features to be tested

What sort of approach to use while testing the software

List of deliverables that need to be tested

The resources allocated for testing the application

Any risks involved during the testing process

A schedule of tasks and milestones to be achieved

Test Scenario

It is a one-line statement that indicates which area of the application will be tested. Test scenarios are used to ensure that all process flows are tested from end to end. A particular area of an application can have as few as one test scenario or as many as a few hundred, depending on the magnitude and complexity of the application.

The terms ‘test scenario’ and ‘test case’ are often used interchangeably; however, a test scenario has several steps, whereas a test case has a single step. Viewed from this perspective, a test scenario is a test case that comprises several test cases and the sequence in which they should be executed. In addition, each test in the sequence depends on the output of the previous test.

Test Case

Test cases involve a set of steps, conditions, and inputs that can be used while performing testing tasks. The main intent of this activity is to determine whether the software passes or fails in terms of its functionality and other aspects. There are many types of test cases such as functional, negative, error, logical test cases, physical test cases, UI test cases, etc.

Furthermore, test cases are written to keep track of the testing coverage of the software. Generally, there are no formal templates that must be used when writing test cases. However, the following components are usually included in every test case:

Test case ID

Product module

Product version

Revision history

Purpose

Assumptions

Pre-conditions

Steps

Expected outcome

Actual outcome

Post-conditions

Many test cases can be derived from a single test scenario. In addition, the multiple test cases written for a piece of software are collectively known as a test suite.
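The components listed above map naturally onto a small record type. The sketch below is one possible representation, not a standard template: the class name `CaseRecord`, its field names, and the sample login cases are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class CaseRecord:
    """One test case, with the components listed above as fields."""

    case_id: str
    product_module: str
    product_version: str
    purpose: str
    steps: list
    expected_outcome: str
    actual_outcome: Optional[str] = None  # filled in after execution
    assumptions: list = field(default_factory=list)
    pre_conditions: list = field(default_factory=list)
    post_conditions: list = field(default_factory=list)
    revision_history: list = field(default_factory=list)

    def status(self) -> str:
        if self.actual_outcome is None:
            return "not run"
        return "pass" if self.actual_outcome == self.expected_outcome else "fail"


# A test suite is simply a collection of test cases.
suite = [
    CaseRecord(
        case_id="TC-001",
        product_module="login",
        product_version="1.0",
        purpose="Valid credentials open the dashboard",
        steps=["Open login page", "Enter valid credentials", "Submit"],
        expected_outcome="dashboard shown",
    ),
    CaseRecord(
        case_id="TC-002",
        product_module="login",
        product_version="1.0",
        purpose="Wrong password is rejected",
        steps=["Open login page", "Enter wrong password", "Submit"],
        expected_outcome="error message shown",
    ),
]

suite[0].actual_outcome = "dashboard shown"  # record the result of running TC-001
print([(case.case_id, case.status()) for case in suite])
```

Both sample cases derive from a single scenario ("verify the login flow"), which shows how one scenario can yield several test cases.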

#unit testing#functional testing#integration testing#system testing#regression testing#acceptance testing#alpha testing#beta testing#performance testing#load testing#stress testing#usability testing#security testing#portability testing
