#MEAN Stack Framework Developers


In full-stack web development, the MEAN stack stands out as a powerful and versatile set of technologies. Comprising MongoDB, Express.js, Angular, and Node.js, it lets developers create dynamic, scalable, and efficient web applications. In this article, we'll take a deep dive into each component of the MEAN stack, from the flexible NoSQL database MongoDB to the dynamic frontend framework Angular.

#ahextechnologies#mean stack solution#mongodb development services#angular#mean#mean stack framework#nodejs#expressjs#mean stack development company#mean stack


Web3 Game Development Services By Mobiloitte UK

Explore the future of gaming with Mobiloitte's Web3 game development services! Dive into a world of decentralized, immersive experiences where players truly own their in-game assets. Our expert team crafts cutting-edge, blockchain-powered games that redefine gaming as you know it. Join us in revolutionizing the gaming industry with innovative technology and endless possibilities. Level up with Mobiloitte.

#react js web app development#mean stack web development#lamp web development#progressive web apps framework#react native web pwa


If you are preparing to learn MEAN stack development or are ready to begin your career as a MEAN stack developer, this article is the right place to start. It covers what you need to know before entering the field, including required skills, education requirements, pay scales, and the future outlook for MEAN stack developers.

#mean stack developer#mean stack developers#full stack web developer#javascript framework#mean stack developer salary


Web Applications Development Solutions in USA

In today's digital age, a robust web application is essential for any business seeking to thrive in the competitive online landscape. Mobiloitte, a leading web application development company in the USA, offers comprehensive services to transform your business vision into a seamless and engaging web presence. Contact us today to explore how we can transform your business vision into a powerful online reality. Empower Your Business with Mobiloitte's Web Application Expertise.

#react js web app development#mean stack web development#lamp web development#progressive web apps framework#react native web pwa


SysNotes devlog 1

Hiya! We're a web developer by trade and we wanted to build ourselves a web-app to manage our system and to get to know each other better. We thought it would be fun to make a sort of a devlog on this blog to show off the development! The working title of this project is SysNotes (but better ideas are welcome!)

What SysNotes is✅:

A place to store profiles of all of our parts

A tool to figure out who is in front

A way to explore our inner world

A private chat similar to PluralKit

A way to combine info about our system with info about our OCs etc as an all-encompassing "brain-world" management system

A personal and tailor-made tool made for our needs

What SysNotes is not❌:

A fronting tracker (we see no need for it in our system)

A social media where users can interact (but we're open to make it so if people are interested)

A public platform that can be used by others (we don't have much experience actually hosting web-apps, but will consider it if there is enough interest!)

An offline app

So if this sounds interesting to you, you can find the first devlog below the cut (it's a long one!):

(I have used word highlighting and emojis as it helps me read large chunks of text, I hope it's alright with y'all!)

Tech stack & setup (feel free to skip if you don't care!)

The project is set up using:

Database: MySQL 8.4.3

Language: PHP 8.3

Framework: Laravel 10 with Breeze (authentication and user accounts) and Livewire 3 (front end integration)

Styling: Tailwind v4

I tried to set up Laragon to easily run the backend, but I ran into issues so I'm just running "php artisan serve" for now and using Laragon to run the DB. Also I'm compiling styles in real time with "npm run dev". Speaking of the DB, I just migrated the default auth tables for now. I will be making app-related DB tables in the next devlog. The awesome thing about Laravel is its Breeze starter kit, which gives you fully functioning authentication and basic account management out of the box, as well as optional Livewire to integrate server-side processing into HTML in the sexiest way. This means that I could get all the boring stuff out of the way with one terminal command. Win!

Styling and layout (for the UI nerds - you can skip this too!)

I changed the default accent color from purple to orange (personal preference) and used an emoji as a placeholder for the logo. I actually kinda like the emoji AS a logo so I might keep it.

Laravel Breeze came with a basic dashboard page, which I expanded with a few containers for the different sections of the page. I made use of the components that come with Breeze to reuse code for buttons etc throughout the code, and made new components as the need arose. Man, I love clean code 😌

I liked the dotted default Laravel page background, so I added it to the dashboard to create the look of a bullet journal. I like the journal-type visuals for this project as it goes with the theme of a notebook/file. I found the code for it here.

I also added some placeholder menu items for the pages that I would like to have in the app - Profile, (Inner) World, Front Decider, and Chat.

I ran into an issue dynamically building Tailwind classes such as class="bg-{{$activeStatus['color']}}-400" - turns out dynamically-created classes aren't supported, even if they're constructed in the component rather than the blade file. You learn something new every day huh…
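For context on why this happens: Tailwind generates its CSS by scanning source files as plain text for complete class names, so a name stitched together at runtime never appears anywhere it can be found. The usual workaround is a lookup that maps each status to a class name written out in full. Here is a minimal sketch of that idea in Python rather than the app's PHP. The table, the function names, and the colour choices are all made up for illustration, and in a real project the table would also need to live in a file that Tailwind scans.

```python
# Hypothetical lookup table: every value is a complete class name written out
# in full, so a plain-text scan of the source can find it.
STATUS_CLASSES = {
    "active": "bg-green-400",
    "dormant": "bg-gray-400",
    "unknown": "bg-yellow-400",
}

# Fallback used when a status has no entry in the table.
DEFAULT_CLASS = "bg-gray-400"


def status_class(status: str) -> str:
    """Return the full background class for a status, with a fallback."""
    return STATUS_CLASSES.get(status, DEFAULT_CLASS)


def would_be_detected(class_name: str, source_text: str) -> bool:
    """Rough stand-in for a plain-text class scan: the name must appear verbatim."""
    return class_name in source_text


# The interpolated attribute from the devlog, as it appears in the template source.
template_with_interpolation = 'class="bg-{{$activeStatus[\'color\']}}-400"'

print(status_class("active"))
# The finished class name never appears verbatim in the interpolated template,
# which is why no CSS gets generated for it.
print(would_be_detected("bg-green-400", template_with_interpolation))
```

The template then outputs whatever `status_class` returns instead of assembling the name from pieces.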

Also, coming from Tailwind v3, "ps-*" and "pe-*" were confusing to get used to since my muscle memory is "pl-*" and "pr-*" 😂

Feature 1: Profiles page - proof of concept

This is a page where each alter's profile will be displayed. You can switch between the profiles by clicking on each person's name. The current profile is highlighted in the list using a pale orange colour.

The logic for the profiles functionality uses a Livewire component called Profiles, which loads profile data and passes it into the blade view to be displayed. It also handles logic such as switching between the profiles and formatting data. Currently, the data is hardcoded into the component using an associative array, but I will be converting it to use the database in the next devlog.
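To make that structure a bit more concrete for non-coders, here is a rough plain-Python stand-in for what such a component does: hold the hardcoded data, remember which profile is selected, and hand the view what it needs. This is only an analogy and not Livewire or the actual SysNotes code. The method names and every field value are invented placeholders.

```python
from dataclasses import dataclass, field


@dataclass
class Profiles:
    """Plain-Python stand-in for the component described above (not Livewire)."""

    # Hardcoded placeholder data keyed by name; all values are made up.
    profiles: dict = field(default_factory=lambda: {
        "Alice": {"pronouns": "she/her", "status": "active"},
        "Example": {"pronouns": "they/them", "status": "unknown"},
    })
    current: str = "Alice"

    def select(self, name: str) -> None:
        """Switch the displayed profile; ignore names that do not exist."""
        if name in self.profiles:
            self.current = name

    def view_data(self) -> dict:
        """Data handed to the template: the name list plus the current profile."""
        return {
            "names": list(self.profiles),
            "current": self.current,
            "profile": self.profiles[self.current],
        }


component = Profiles()
component.select("Example")       # like clicking a name in the list
print(component.view_data())
```

Swapping the hardcoded dictionary for a database query later would only change where `profiles` comes from; the switching logic could stay the same.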

New profile (TBC)

You will be able to create new profiles on the same page (this is yet to be implemented). My vision is that the New Alter form will unfold under the button, and fold back up again once the form has been submitted.

Alter name, pronouns, status

The most interesting component here is the status, which is currently set to a hardcoded list of "active", "dormant", and "unknown". However, I envision this to be a customisable list where I can add new statuses to the list from a settings menu (yet to be implemented).

Alter image

I wanted the folder that contained alter images and other assets to be outside of my Laravel project, in the Pictures folder of my operating system. I wanted to do this so that I can back up the assets folder whenever I back up my Pictures folder lol (not for adding/deleting the files - this all happens through the app to maintain data integrity!). However, I learned that Laravel does not support that and it will not be able to see my files because they are external. I found a workaround by using symbolic links (symlinks) 🔗. Basically, they let one folder show up in more than one place without duplicating its contents. I ran "mklink /D [external path] [internal path]" to create the symlink between my Pictures folder and Laravel's internal assets folder, so that any files that I add to my Pictures folder automatically show up in Laravel's folder. I changed a couple lines in filesystems.php to point to the symlinked folder:

And I was also getting a "404 file not found" error - I think the issue was because the port wasn't originally specified. I changed the base app URL to the localhost IP address in .env:

…And after all this messing around, it works!

(My Pictures folder)

(My Laravel storage)

(And here is Alice's photo displayed - dw I DO know Ibuki's actual name)
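If symlinks are new to you, here is a small self-contained sketch of the behaviour, written in Python rather than with the Windows command above. It uses throwaway temp folders, and every folder and file name in it is hypothetical because the real paths are not shown in this post. For reference, the documented form of the Windows command is `mklink /D <link> <target>`, with the link path first and the real folder second. Creating symlinks on Windows may also need elevated permissions or Developer Mode.

```python
import os
import tempfile
from pathlib import Path

# Throwaway directories standing in for the real folders (all names here are
# hypothetical; the real paths are not given in the post).
base = Path(tempfile.mkdtemp())
pictures_dir = base / "Pictures" / "sysnotes-assets"  # where the files really live
pictures_dir.mkdir(parents=True)
app_storage = base / "app-storage"
app_storage.mkdir()
link_path = app_storage / "assets"                    # what the app reads from

# Create the link: link_path now points at pictures_dir.
os.symlink(pictures_dir, link_path, target_is_directory=True)

# A file saved in the real folder...
(pictures_dir / "alice.png").write_bytes(b"fake image bytes")

# ...is visible through the link straight away, with no second copy on disk.
print((link_path / "alice.png").read_bytes())
print(link_path.is_symlink())
print(os.path.samefile(pictures_dir / "alice.png", link_path / "alice.png"))
```

Because both paths resolve to the same file, backing up the real folder is enough, and nothing needs to be kept in sync.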

Alter description and history

The description and history fields support HTML, so I can format these fields however I like, and add custom features like tables and bullet point lists.

This is done by using blade's HTML preservation tags "{!! !!}" as opposed to the plain text tags "{{ }}".

(Here I define Alice's description contents)

(And here I insert them into the template)
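The difference between the two tag styles comes down to escaping: Blade's "{{ }}" passes the value through PHP's htmlspecialchars before output, while "{!! !!}" prints it untouched. A rough Python analogy is below. It is not Blade itself, the two helper names are invented, and the sample description is made up.

```python
from html import escape

# Hypothetical description text; the markup mirrors the kind of formatting
# mentioned above (lists, bold text).
description = "<ul><li>Loves <b>noodles</b></li></ul>"


def render_escaped(value: str) -> str:
    """Roughly what an escaping tag does: special characters become entities."""
    return escape(value, quote=True)


def render_raw(value: str) -> str:
    """Roughly what a raw tag does: the string is emitted unchanged."""
    return value


# Escaped output shows the tags as literal text in the browser.
print(render_escaped(description))
# Raw output lets the browser interpret the tags as real HTML.
print(render_raw(description))
```

Raw output suits fields like these because their content is written by the app's own user; text from untrusted sources is normally left escaped.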

Traits, likes, dislikes, front triggers

These are saved as separate lists and rendered as fun badges. These will be used in the Front Decider (anyone have a better name for it?? 🤔) tool to help me identify which alter "I" am as it's a big struggle for us. Front Decider will work similarly to FlowCharty.

What next?

There's lots more things I want to do with SysNotes! But I will take it one step at a time - here is the plan for the next devlog:

Setting up database tables for the profile data

Adding the "New Profile" form so I can create alters from within the app

Adding ability to edit each field on the profile

I tried my best to explain my work process in a way that would somewhat make sense to non-coders - if you have any feedback for the future format of these devlogs, let me know!

~~~~~~~~~~~~~~~~~~

Disclaimers:

I have not used AI in the making of this app and I do NOT support the Vibe Coding mind virus that is currently on the loose. Programming is a form of art, and I will defend manual coding until the day I die.

Any alter data found in the screenshots is dummy data that does not represent our actual system.

I will not be making the code publicly available until it is a bit more fleshed out, this so far is just a trial for a concept I had bouncing around my head over the weekend.

We are SYSCOURSE NEUTRAL! Please don't start fights under this post

#sysnotes devlog#plurality#plural system#did#osdd#programming#whoever is fronting is typing like a millenial i am so sorry#also when i say “i” its because i'm not sure who fronted this entire time!#our syskid came up with the idea but i can't feel them so who knows who actually coded it#this is why we need the front decider tool lol


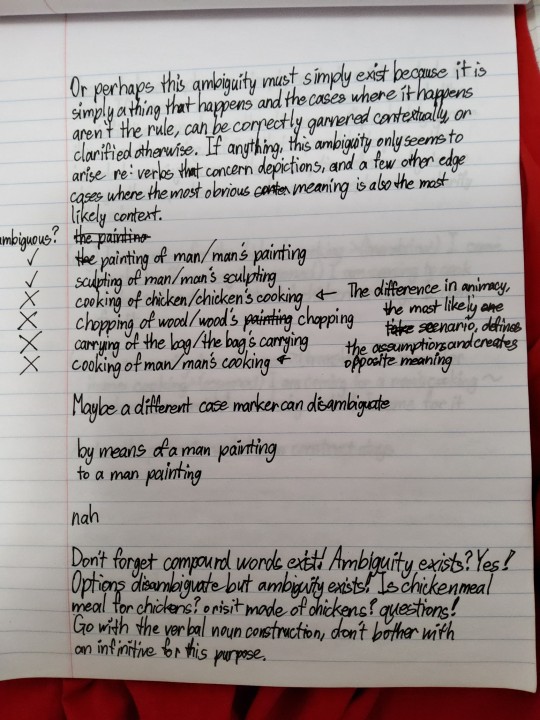

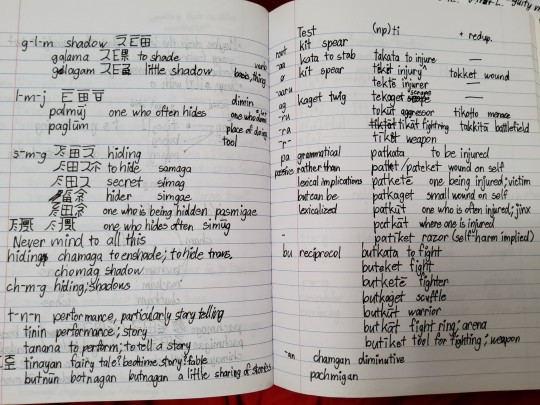

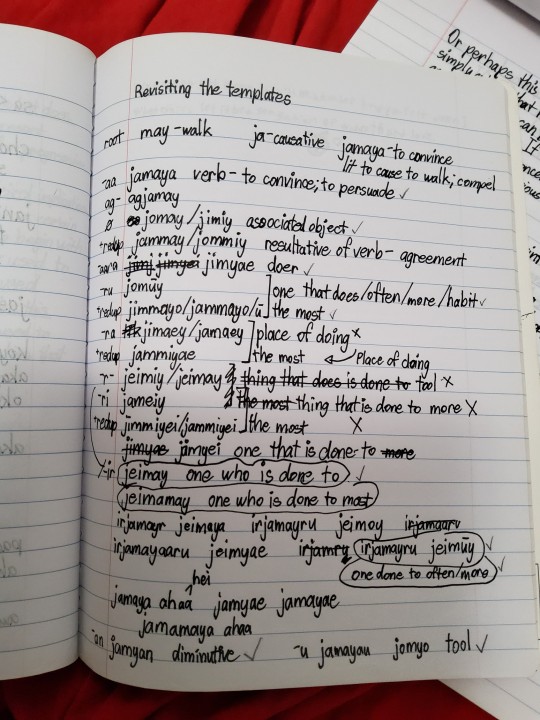

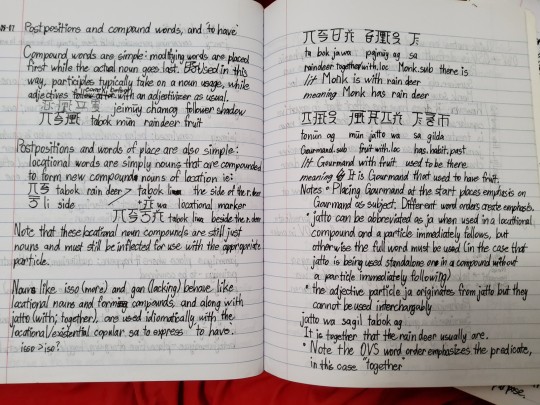

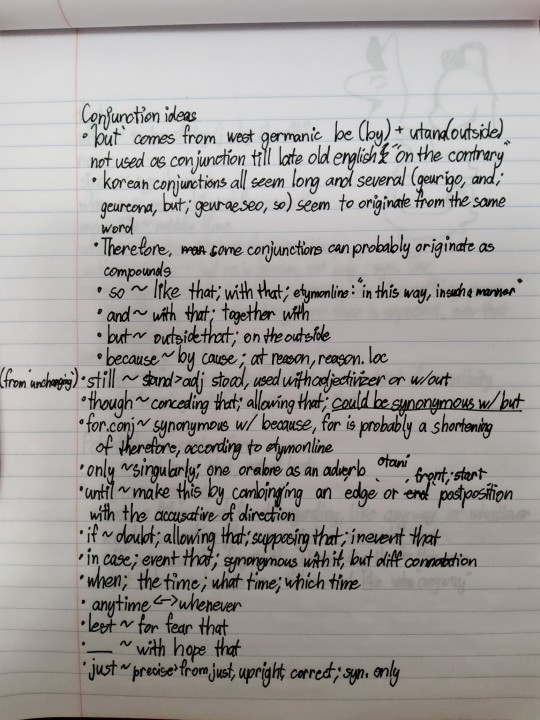

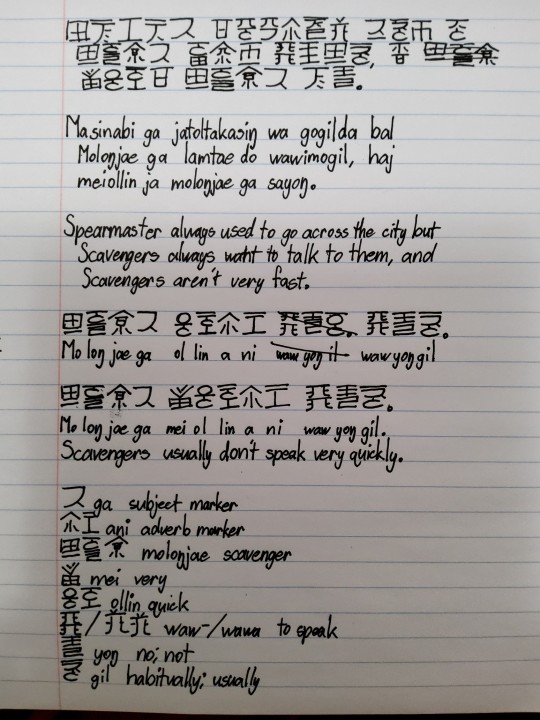

staring so kindly at your sluglang post,, as someone working on a language as well this looks fantastic (and is also. super organized compared to mine BHAHAH) Any tips for putting together a language? Like resources on how to go about it, or notes? /genq

You are staring kindly... (thank you)

As for tips... Wikipedia is actually one of my biggest, most useful tools, because I love to read articles about grammatical concepts, and they will usually have a variety of examples of use if you can figure out how to parse the academic language. There are some core ideas that pop up all over the place crosslinguistically, like case marking or converbs, and you can get a lot from learning how other languages might parse the same idea, both how they handle the idea grammatically and what kind of metaphorical language might be involved; like how in Scottish Gaelic, to say you have something, you say it's 'at' you, or how it doesn't have an exact equivalent of English's infinitive, or how Mongolian has so many word endings that convey meaning, and a bunch of them are literally endings stacked on top of other endings.

There's also really good conlang youtubers, like David Peterson, the one who made Dothraki and other pop media conlangs, Artifexian, Biblaridion. They have videos on both interesting grammatical concepts that don't exist in English AND how to integrate them into conlangs. David Peterson especially has interesting videos on things like sound changes, vowel harmony, phonological concepts that can really help shape your language and bring a degree of naturalism if that's what you're looking for.

Etymology can be extremely informative though, and really help you to understand exactly how creative people have gotten with language over the past thousands of years. Etymonline is a great website for that. Did you know that the word "next" was originally literally "nearest"? Or that the prefix "be-" was originally "by", so words like "before" actually meant "by the fore", and very often these meanings are metaphorically extended to the way we use them today. It's great for helping to develop very important words that can be structural to your language, so that you're not just trying to raw make up a new word with no basis every time.

Aside from that, there's no single source I go to for making conlangs. Everything is on a case by case basis. Something that has been really helpful for me is constantly writing example sentences and finding things to write about, because similar to translating existing texts, it forces me to reckon with the way my conlang works, figure out how to convey certain ideas (or whether or not the language can convey the idea at all).

Usually I'll have a few languages that I keep in mind for inspiration for any given project and if I'm stumped or need an idea, I'll actually look up learning resources for those languages. My slugcat language has had me looking up a lot of "How to say..." in Korean, Arabic, Japanese, Filipino, a little bit of Indonesian? Some Russian for verb stuff. Once I find resources, I spend a bit of time dissecting how it works in those languages and figure out how that can fit in the existing framework of my own project, or if it's something I'd even want in the project at all.

Once I have an idea, I'll just start iterating on it, usually on paper, basically brainstorming how the sentence structure and sounds might work until I find something that is both sonically satisfying and logically sound within the existing framework. If I'm feeling extra spicy, I might try to consider how the culture and priorities of the speakers might shape the development of the language. The important thing while doing this is, just like brainstorming, to be unafraid to keep throwing ideas onto the page no matter how unviable or nonsensical it may seem in your head. You NEED to experiment and find what doesn't work or else your brain will be too clogged to find out what does. Exercising your pen will help you get into the mindset of someone using the language (because you are), it'll help you form connections to other parts of the language you've already developed, and once you've developed enough, the language will almost start writing itself.

I've actually had some really interesting interactions happen in my scuglang between the archaic system of suffixes, the position word system, and the triconsonantal root system, which actually gave rise to an entire system of metaphorical extension, letting speakers use phrases like "at a crossing of" or "at a leaving of" to mean across or away and also talk about concurrent events like "He talked while eating noodles" (He, at an eating of noodles, talked).

Anyway, I know I got kind of scattered but these are some of the big parts of how I approach conlanging! If I have questions or needs, I look to other languages, find learning resources, apply it, and then ask more questions. Spend time with your language and get familiar with it. There's the time I read "Ergativity" by Robert Dixon, but reading literal textbooks is not a requirement for conlanging. You just need to chip away at it and keep asking questions.

Here's some photos of my own conlanging notes so you can see how serious I am when I say iterating and brainstorming are extremely helpful. You need to be throwing shit on the paper. I will handwrite three pages just to contradict myself on the next because those three pages were important for forming the final idea.


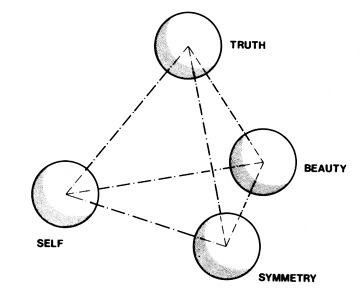

Buckminster Fuller: Synergetics and Systems

Synergetics

Synergetics, a concept introduced by Buckminster Fuller, is an interdisciplinary study of geometry, patterns, and spatial relationships that provides a method and a philosophy for understanding and solving complex problems. The term “synergetics” comes from the Greek word “synergos,” meaning “working together.” Fuller’s synergetics is a system of thinking that seeks to understand the cooperative interactions among parts of a whole, leading to outcomes that are unpredicted by the behavior of the parts when studied in isolation.

Fuller’s understanding of systems relied upon the concept of synergy. Because a system’s behavior cannot be predicted from the behaviors of its components, this perspective invites us to transcend the limitations of our immediate perception, to perceive larger systems, and to delve deeper to see the relevant systems within a situation. It beckons us to ‘tune in’ to the appropriate systems as we bring our awareness to a particular challenge or situation.

He perceived the Universe as an intricate construct of systems. He proposed that everything, from our thoughts to the cosmos, is a system. This perspective, now a cornerstone of modern thinking, suggests that the geometry of systems and their models are the keys to deciphering the behaviors and interactions we witness in the Universe.

In his “Synergetics: Explorations in the Geometry of Thinking” Fuller presents a profound exploration of geometric thinking, offering readers a transformative journey through a four-dimensional Universe. Fuller’s work combines geometric logic with metaphors drawn from human experience, resulting in a framework that elucidates concepts such as entropy, Einstein’s relativity equations, and the meaning of existence. Within this paradigm, abstract notions become lucid, understandable, and immediately engaging, propelling readers to delve into the depths of profound philosophical inquiry.

Fuller’s framework revolves around the principle of synergetics, which emphasizes the interconnectedness and harmony of geometric relationships. Drawing inspiration from nature, he illustrates that balance and equilibrium are akin to a stack of closely packed oranges in a grocery store, highlighting the delicate equilibrium present in the Universe. By intertwining concepts from visual geometry and technical design, Fuller’s work demonstrates his expertise in spatial understanding and mathematical prowess. The book challenges readers to expand their perspectives and grasp the intricate interplay between shapes, mathematics, and the dimensions of the human mind.

At its core, “Synergetics” presents a philosophical inquiry into the nature of existence and the human thought process. Fuller’s use of neologisms and expansive, thought-provoking ideas sparks profound contemplation. While some may find the book challenging due to its complexity, it is a testament to Fuller’s intellectual prowess and his ability to offer unique insights into the fundamental workings of the Universe, pushing the boundaries of human knowledge and transforming the fields of design, mathematics, and philosophy.

When applied to cognitive science, the concept of synergetics offers a holistic approach to understanding the human mind. It suggests that cognitive processes, rather than being separate functions, are interconnected parts of a whole system that work together synergistically. This perspective aligns with recent developments in cognitive science that view cognition as a complex, dynamic system. It suggests that our cognitive abilities emerge from the interaction of numerous mental processes, much like the complex patterns that emerge in physical and biological systems studied under synergetics.

In this context, geometry serves as a language to describe this cognitive architecture. Just as the geometric patterns in synergetic structures reveal the underlying principles of organization, the ‘geometric’ arrangement of cognitive processes could potentially reveal the principles that govern our cognitive abilities. This perspective extends Fuller’s belief in the power of geometry as a tool for understanding complex systems, from the physical structures he designed to the very architecture of our minds. It suggests that by studying the ‘geometry’ of cognition, we might gain insights into the principles of cognitive organization and the nature of human intelligence.

Systems

Fuller’s philosophy underscored that systems are distinct entities, each with a unique shape that sets them apart from their surroundings. He envisioned each system as a tetrahedron, a geometric form with an inside and an outside, connected by a minimum of four corners or nodes. These nodes, connected by what Fuller referred to as relations, serve as the sinews that hold the system together. These relations could manifest as flows, forces, or fields. Fuller’s philosophy also emphasized that systems are not isolated entities. At their boundaries, every node is linked to its surroundings, and all system corners are ‘leaky’, either brimming with extra energy or in need of energy.

Fuller attributed the properties and characteristics of systems to what he called generalized principles. These are laws of the Universe that hold true everywhere and at all times. For instance, everything we perceive is a specific configuration of energy or material, and the form of this configuration is determined by these universal principles.

Fuller’s philosophy also encompassed the idea that every situation is a dance of interacting systems. He encouraged us to explore the ways in which systems interact within and with each other. He saw each of us as part of the cosmic dance, continually coupling with other systems. This coupling could be as loose as the atoms of air in a room, or as flexible as molecules of water flowing.

We find that precession is completely regenerative one brings out the other. So I gave you the dropping the stone in the water, and the wave went out that way. And this way beget that way. And that way beget that way. And that’s why your circular wave emanates. Once you begin to get into “precession” you find yourself understanding phenomena that you’ve seen a stone falling in the water all of your life, and have never really known why the wave does just what it does.

Fuller’s concept of precession, or systems coupling, is a testament to his deep understanding of systems and their interactions. He described how we sometimes orbit a system, such as a political movement or an artistic method. Our orbit remains stable when the force that attracts us is dynamically balanced by the force that propels us away. This understanding of precession allows us to comprehend phenomena that we have observed all our lives, yet never truly understood why they behave as they do. Fuller’s teachings on systems and their inherent geometry continue to illuminate our understanding of the Universe and our place within it.

#geometrymatters#geometry#cognitive geometry#geometric cognition#buckminster fuller#science#research#math#architecture#consciousness#perception#synergy#tensegrity


Can Open Source Integration Services Speed Up Response Time in Legacy Systems?

Legacy systems are still a key part of essential business operations in industries like banking, logistics, telecom, and manufacturing. However, as these systems get older, they become less efficient—slowing down processes, creating isolated data, and driving up maintenance costs. To stay competitive, many companies are looking for ways to modernize without fully replacing their existing systems. One effective solution is open-source integration, which is already delivering clear business results.

Why Faster Response Time Matters

System response time has a direct impact on business performance. According to a 2024 IDC report, improving system response by just 1.5 seconds led to a 22% increase in user productivity and a 16% rise in transaction completion rates. In industries where time is of the essence, that translates into higher revenue, better customer satisfaction, and greater scalability.

This is where open-source integration helps. By making it easier for legacy systems to communicate with modern applications, it can reduce latency, improve data flow, and simplify process automation, which makes the systems faster and more responsive.

Key Business Benefits of Open-Source Integration

Lower Operational Costs

Open-source tools like Apache Camel and Mule eliminate the need for costly software licenses. A 2024 study by Red Hat showed that companies using open-source integration reduced their IT operating costs by up to 30% within the first year.

Real-Time Data Processing

Traditional legacy systems often depend on delayed, batch-processing methods. With open-source platforms using event-driven tools such as Kafka and RabbitMQ, businesses can achieve real-time messaging and decision-making—improving responsiveness in areas like order fulfillment and inventory updates.

Faster Deployment Cycles

Open-source integration supports modular, container-based deployment. The 2025 GitHub Developer Report found that organizations using containerized open-source integrations shortened deployment times by 43% on average. This accelerates updates and allows faster rollout of new services.

Scalable Integration Without Major Overhauls

Open-source frameworks allow businesses to scale specific parts of their integration stack without modifying the core legacy systems. This flexibility enables growth and upgrades without downtime or the cost of a full system rebuild.

Industry Use Cases with High Impact

Banking

Integrating open-source solutions enhances transaction processing speed and improves fraud detection by linking legacy banking systems with modern analytics tools.

Telecom

Customer service becomes more responsive by synchronizing data across CRM, billing, and support systems in real time.

Manufacturing

Real-time integration with ERP platforms improves production tracking and inventory visibility across multiple facilities.

Why Organizations Outsource Open-Source Integration

Many internal IT teams lack the skills and resources to manage open-source integration securely and efficiently. By outsourcing to established providers, businesses can get a smoother setup, ongoing support, and better system performance. Top open-source integration service providers like Suma Soft, Red Hat Integration, Talend, TIBCO (Flogo Project), and Hitachi Vantara offer customized solutions. These help improve system speed, simplify daily operations, and support digital upgrades without the high cost of replacing existing systems.


From Data to Decisions: Leveraging Product Analytics and AI Services for Faster B2B Innovation

In today’s competitive B2B landscape, innovation isn’t just about having a great product idea. It’s about bringing that idea to life faster, smarter, and with precision. That means making every decision based on real data, not guesswork. At Product Siddha, we help businesses unlock faster B2B innovation by combining the power of product analytics and AI services into one seamless strategy.

Why B2B Innovation Fails Without Data-Driven Insight

Most B2B companies struggle to innovate at scale because they lack visibility into what users actually do. Product teams launch features based on assumptions. Marketing teams operate without a feedback loop. Sales teams miss opportunities due to fragmented data. This disconnect creates wasted effort and missed growth.

Product analytics is the solution to this problem. When integrated with AI services, you don’t just track user behavior — you predict it. This lets you make smarter decisions that directly improve your product roadmap, customer experience, and business outcomes.

The Power of Product Analytics in B2B Growth

Product analytics turns user behavior into actionable insight. Instead of relying on vanity metrics, Product Siddha helps you understand how real people interact with your product at every stage. We implement tools that give you a complete view of the user journey — from first touchpoint to long-term retention.

With powerful product analytics, you can:

Identify high-impact features based on real usage

Spot friction points and user drop-offs quickly

Personalize product experiences for higher engagement

Improve onboarding, reduce churn, and boost ROI

This is not just reporting. It’s clarity. It’s control. And it’s the foundation of faster B2B innovation.

Accelerate Outcomes with AI Services That Work for You

While product analytics shows you what’s happening, AI services help you act on that data instantly. Product Siddha designs and builds low-code AI-powered systems that reduce manual work, automate decisions, and create intelligent workflows across teams.

With our AI services, B2B companies can:

Automatically segment users and personalize messaging

Trigger automated campaigns based on user behavior

Streamline product feedback loops

Deliver faster support with AI chatbots and smart routing

Together, AI and analytics make your product smarter and your business more efficient. No more delayed decisions. No more data silos. Just continuous improvement powered by automation.

Our Approach: Build, Learn, Optimize

At Product Siddha, we believe innovation should be fast, measurable, and scalable. That’s why we use a 4-step framework to integrate product analytics and AI services into your workflow.

Build Real, Fast

We help you launch an MVP with just enough features to test real-world usage and start gathering data.

Learn What Matters

We set up product analytics to capture user behavior and feedback, turning that information into practical insight.

Stack Smart Tools

Our AI services integrate with your MarTech and product stack, automating repetitive tasks and surfacing real-time insights.

Optimize with Focus

Based on what you learn, we help you refine your product, personalize your messaging, and scale growth efficiently.

Why Choose Product Siddha for B2B Innovation?

We specialize in helping fast-moving B2B brands like yours eliminate complexity and move with clarity. At Product Siddha, we don’t just give you data or automation tools — we build intelligent systems that let you move from data to decisions in real time.

Our team combines deep expertise in product analytics, AI automation, and B2B marketing operations. Whether you’re building your first product or scaling an existing one, we help you:

Reduce time-to-market

Eliminate development waste

Align product and growth goals

Launch with confidence

Visit Product Siddha to explore our full range of services.

Let’s Turn Insight into Innovation

If you’re ready to use product analytics and AI services to unlock faster B2B innovation, we’re here to help. Product Siddha builds smart, scalable systems that help your teams learn faster, move faster, and grow faster.

Call us today at 98993 22826 to discover how we can turn your product data into your biggest competitive advantage.


Dovian Digital: Redefining Global Reach with New York Precision

Introduction: Building Global Influence Starts at Home

Success in 2025 isn’t just about going digital—it’s about going global. Dovian Digital, a premier digital marketing agency in New York, has become the go-to growth partner for brands that dream bigger. More than just running ads or optimizing content, Dovian builds digital ecosystems that help businesses cross borders, scale smarter, and connect deeper.

Why New York Powers Global Growth

New York is where cultures collide, ideas ignite, and innovation is currency. Dovian Digital channels this high-impact energy into its work, crafting custom marketing strategies that work just as well in Dubai or Sydney as they do in NYC. In a city that never stops moving, Dovian keeps your brand a step ahead—globally.

Dovian’s Global Services Blueprint

Unlike traditional agencies, Dovian integrates every digital service into a results-driven roadmap:

SEO at Scale: From technical audits to geo-targeted keyword optimization across languages

International Paid Media: Region-specific ad creatives with cultural sensitivity

Full-Spectrum Web Development: UX/UI that performs from Lagos to London

Global Social Management: From content calendars to influencer tie-ups, globally aligned

Multilingual Content Strategy: Scripts, captions, blogs, and visuals designed to translate meaning, not just words

Real Impact: Global Brand Wins Powered by Dovian

EdTech Expansion into Latin America: By launching a Spanish-first content campaign, Dovian helped an EdTech platform see a 240% user increase across Mexico and Colombia.

Consumer Goods Growth in Canada and Australia: Tailored Google Ads, local PR campaigns, and geo-fenced offers led to a 6x return on ad spend.

Crypto Exchange Marketing in MENA Region: By localizing UI/UX and publishing Arabic content in trusted fintech portals, Dovian secured a 38% increase in verified signups.

What Sets Dovian Digital Apart

Cultural Empathy: Not just translation—true cultural adaptation.

Cross-Platform Fluency: Omnichannel mastery from email to TikTok.

Agile Frameworks: Campaigns that shift based on real-time feedback.

Transparent Growth Models: Milestone-based KPIs, tracked in real dashboards.

What the Next 5 Years Look Like

Dovian is not preparing for the future; they’re shaping it.

AI Assistants for Campaign Planning

Zero-Click Search Strategy for Voice & AI Interfaces

Green Messaging Templates to align with eco-conscious buyers

Regional Data Clusters for Hyper-Personalization at Scale

Why the World Chooses Dovian

Their processes are global-first, not US-centric

Their tech stack is designed for multilingual, multi-market rollouts

Their storytelling is human, regardless of language or screen size

Final Word: Think Global, Act with Dovian

In a saturated market, you don’t need more noise—you need more strategy. Let Dovian Digital, the most trusted digital marketing agency in New York, help you scale your brand across languages, borders, and time zones.

Contact Dovian Digital

Phone: +1 (437) 925-3019 Email: [email protected] Website: www.doviandigital.com

2 notes


Text

Dev Log Feb 7 2025 - The Stack

Ahoy. This is JFrame of 16Naughts in the first of what I hope will turn out to be a weekly series of developer logs surrounding some of our activities here in the office. Not quite so focused on individual games most of the time, but more on some of the more interesting parts of development as a whole. Or really, just an excuse for me to geek out a little into the void.

With introductions out of the way, the first public version of our game Crescent Roll (https://store.steampowered.com/app/3325680/Crescent_Roll juuuust as a quick plug) is due out here at the end of the month, and has a very interesting/unorthodox tech stack that might be of interest to certain devs wanting to cut down on their application install size.

The game itself is actually written in Javascript - you know, the scripting language used by your web browser for the interactive stuff everywhere, including here. If you've been on Newgrounds or any other site, they might call games that use it "HTML5" games like they used to call "Flash" games (RIP in peace). Unfortunately, Javascript still has a bit of a sour reputation in most developer circles, and "web game" doesn't really instill much confidence in the gamer either.

However, it's turning more and more into the de-facto standard for like, everything. And I do mean everything. 99% of applications on your phone are just websites wrapped in the system view (including, if you're currently using it, the Tumblr app), and it's bleeding more and more into the desktop and other device spaces. Both Android and iOS have calls available to utilize their native web browsers in applications. Windows and Mac support the same thing with WebView2 and WebKit respectively. Heck, even Xbox and Nintendo have a web framework available too (even goes back as far as Flash support for the Wii).

So, if you're not using an existing game engine like we aren't and you want to go multi-platform, your choices are either A) Do it in something C/C++ -ish, or now B) Write it in JS. So great - JS runs everywhere. Except, it's not exactly a first-class citizen in any of these scenarios. Every platform has a different SDK for a different low-level language, and none of them have a one-click "bundle this website into an exe" option. So there is some additional work that needs to be done to get it into that nice little executable package.

Enter C#. Everyone calls it Microsoft Java, but their support for it has been so spectacular that it has surpassed Java in pretty much every single possible way. And that includes the number and types of machines that it runs on. The DotNet Core initiative has Mac, Windows, and Linux covered (plus Xbox), Xamarin has Android, and the new stuff for Maui brought iOS into the fold. Write once, run everywhere. Very nice.

Except those itty bitty little application lifetime quirks completely change how you do the initialization on each platform, and the system calls are different for getting the different web views set up, and Microsoft is pushing Maui so hard that actually finding the calls and libraries to do the stuff instead of using their own (very strange) UI toolkit is a jungle, but I mean, I only had to write our stream decompression stuff once and everything works with the same compilation options. So yeah - good enough. And fortunately, only getting better. Just recently, they added Web Views directly into Maui itself so we can now skip a lot of the bootstrapping we had to do (I'm not re-writing it until we have to, but you know - it's there for everyone else).

So, there you have it. Crescent Roll is a Javascript HTML5 Web Game that uses the platform native Web View through C#. It's a super tiny 50-100MB (depending on the platform) from not having to bundle the JS engine with it, compiles in seconds, and is fast and lean when running and only getting faster and leaner as it benefits from any performance improvements made anywhere in any of those pipelines.

And that's it for today's log. Once this thing is actually, you know, released, I can hopefully start doing some more recent forward-looking progress things rather than a kind of vague abstract retrospective rambling. Maybe some shader stuff next week, who knows.

Lemme know if you have any questions on anything. I know it's kind of dry, but I can grab some links for stuff to get started with, or point to some additional reading if you want it.

3 notes


Text

OneAPI Construction Kit For Intel RISC V Processor Interface

With the oneAPI Construction Kit, you can bring the oneAPI ecosystem to your RISC-V processor.

Intel RISC-V

Codeplay, an Intel business, recently announced that its oneAPI Construction Kit supports RISC-V. RISC-V is a rapidly expanding open standard instruction set architecture (ISA), available under royalty-free open-source licenses, for processors of all kinds.

The oneAPI programming model enables a single codebase to be deployed across multiple computing architectures, including CPUs, GPUs, FPGAs, and other accelerators. It does this through direct programming in C++ with SYCL, a set of libraries aimed at common functions like math, threading, and neural networks, and a hardware abstraction layer that allows code written in one language to target different devices.

In order to promote open source cooperation and the creation of a cohesive, cross-architecture programming paradigm free from proprietary software lock-in, the oneAPI standard is now overseen by the UXL Foundation.

Codeplay’s oneAPI Construction Kit is a framework for extending the oneAPI ecosystem to bespoke AI and HPC architectures. The most recent 4.0 release brings RISC-V native host support for the first time, for both native on-host compilation and cross-compilation.

Because of this capability, programs can run on the CPU and still benefit from the acceleration that SYCL offers via data parallelism. With the oneAPI Construction Kit, RISC-V processor designers can now connect SYCL and the oneAPI ecosystem to their hardware, a key step toward a completely open hardware and software stack. The Kit is open source and free to use.

OneAPI Construction Kit

The oneAPI Construction Kit gives your processor access to an open ecosystem. It is a framework that brings SYCL and other open standards to new hardware platforms, and it can be used to extend the oneAPI ecosystem to custom AI and HPC architectures.

Give Developers Access to a Dynamic, Open-Ecosystem

With the oneAPI Construction Kit, new and customized accelerators may benefit from the oneAPI ecosystem and an abundance of SYCL libraries. Contributors from many sectors of the industry support and maintain this open environment, so you may build with the knowledge that features and libraries will be preserved. Additionally, it frees up developers’ time to innovate more quickly by reducing the amount of time spent rewriting code and managing disparate codebases.

The oneAPI Construction Kit is useful for anybody who designs hardware. To get you started, the Kit includes a reference implementation for RISC-V vector processors, although it is not confined to RISC-V and can be adapted to a variety of processors.

Codeplay Enhances the oneAPI Construction Kit with RISC-V Support

RISC-V is a rapidly expanding open standard instruction set architecture (ISA) that can be used for all sorts of processors, including accelerators and CPUs. Axelera, Codasip, and others make RISC-V processors for a variety of applications. RISC-V-powered microprocessors are also being developed in the EU as part of the European Processor Initiative.

Codeplay has long been a pioneer in open ecosystems. As a member of RISC-V International, it has worked on the project for a number of years, leading working groups that have helped to shape the standard. The company recognizes that building a genuinely open ecosystem starts with open, standards-based hardware. To get there, however, the whole stack needs to be open: open hardware, open software, and open source from top to bottom.

This is where oneAPI and SYCL come in. They offer an ecosystem of open-source, standards-based software libraries for applications of various kinds, such as oneMKL or oneDNN, combined with a well-developed programming model. Both SYCL and oneAPI are heterogeneous, which means that you can write code once and run it on any GPU (AMD, Intel, or NVIDIA) or, as of late, on RISC-V, without being tied to one manufacturer.

With the 4.0 release of the oneAPI Construction Kit, Codeplay implemented RISC-V native host support for the first time, for both native on-host compilation and cross-compilation. Because of this capability, programs can run on the CPU and still benefit from the acceleration that SYCL offers via data parallelism. With the oneAPI Construction Kit, RISC-V processor designers can now connect SYCL and the oneAPI ecosystem to their hardware, a major step toward the vision of a completely open hardware and software stack.

Read more on govindhtech.com

#OneAPIConstructionKit#IntelRISCV#SYCL#FPGA#IntelRISCVProcessorInterface#oneAPI#RISCV#oneDNN#oneMKL#RISCVSupport#OpenEcosystem#technology#technews#news#govindhtech

2 notes


Text

Enterprise Web App Solutions by Mobiloitte USA

Mobiloitte, based out of the USA, specializes in top-tier enterprise web app development. Our solutions offer seamless integration, user-centric design, robust performance, and scalability. Serving a diverse range of industries, we prioritize efficiency, security, and adaptability. Connect with Mobiloitte to elevate your web presence and drive tangible business results.

#react js web app development#mean stack web development#lamp web development#progressive web apps framework#react native web pwa

0 notes

Text

dew: frontend dev who knows a little too much about the intricacies and inner workings of his preferred framework

rain: frontend dev who cares deeply about user experience

phantom: mostly backend full stack dev who suggests using a nosql database in every meeting

mountain: devops. nobody else understands how any of the infra works because he put a bunch of stuff into really convenient scripts. unfortunately that means if anything breaks he has to be the one to go fix it

swiss: full stack obviously.... the multi ghoul of developers

aurora: designer ✨

cirrus: backend & cloud dev who thinks she can do full stack but cant figure out css to save her life and uses div for every html element

cumulus: backend dev who will call you out if your sql query is inefficient in some way. and if you cause a spike in database usage she will track you down

3 notes


Text

Wish List For A Game Profiler

I want a profiler for game development. No existing profiler currently collects the data I need. No existing profiler displays it in the format I want. No existing profiler filters and aggregates profiling data for games specifically.

I want to know what makes my game lag. Sure, I also care about certain operations taking longer than usual, or about inefficient resource usage in the worker thread. The most important question that no current profiler answers is: In the frames that currently do lag, what is the critical path that makes them take too long? Which function should I optimise first to reduce lag the most?

I know that, with the right profiler, these questions could be answered automatically.

Hybrid Sampling Profiler

My dream profiler would be a hybrid sampling/instrumenting design. It would be a sampling profiler like Austin (https://github.com/P403n1x87/austin), but a handful of key functions would be instrumented in addition to the sampling: Displaying a new frame/waiting for vsync, reading inputs, draw calls to the GPU, spawning threads, opening files and sockets, and similar operations should always be tracked. Even if displaying a frame is not a heavy operation, it is still important to measure exactly when it happens, if not how long it takes. If a draw call returns right away, and the real work on the GPU begins immediately, it’s still useful to know when the GPU started working. Without knowing exactly when inputs are read, and when a frame is displayed, it is difficult to know if a frame is lagging. Especially when those operations are fast, they are likely to be missed by a sampling profiler.
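To make the instrumenting half concrete, here is a minimal Python sketch. It is not how Austin or any existing profiler works; `instrumented`, `EVENTS`, `present_frame`, `read_inputs`, and `frame_times` are all hypothetical names invented for illustration, and the sampling half is left out entirely. The point is only that a key function gets a timestamp every time it runs, no matter how fast it returns.

```python
import time
from functools import wraps

# Hypothetical event log: (start_timestamp_s, event_name, duration_s)
EVENTS = []

def instrumented(name):
    """Always record when the wrapped function ran, however fast it is."""
    def decorate(func):
        @wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            try:
                return func(*args, **kwargs)
            finally:
                EVENTS.append((start, name, time.perf_counter() - start))
        return wrapper
    return decorate

@instrumented("present_frame")
def present_frame():
    pass  # stand-in for the real buffer swap / vsync wait

@instrumented("read_inputs")
def read_inputs():
    return []  # stand-in for polling the real input devices

def frame_times(events):
    """Seconds between consecutive 'present_frame' events."""
    stamps = [t for t, name, _ in events if name == "present_frame"]
    return [b - a for a, b in zip(stamps, stamps[1:])]
```

With exact frame boundaries recorded this way, samples collected by a separate sampler could later be assigned to the frame they fell into.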

Tracking Other Resources

It would be a good idea to collect CPU core utilisation, GPU utilisation, and memory allocation/usage as well. What does it mean when one thread spends all of its time in that function? Is it idling? Is it busy-waiting? Is it waiting for another thread? Which one?

It would also be nice to know if a thread is waiting for IO. This is probably a “heavy” operation and would slow the game down.

There are many different vendor-specific tools for GPU debugging: some old ones that worked well for OpenGL but are no longer developed, open-source tools that require source code changes in your game, and the newest ones directly from GPU manufacturers that only support DirectX 12 or Vulkan, but not OpenGL or any graphics card built before 2018. It would probably be better to err on the side of collecting less data and supporting more hardware and graphics APIs.

The profiler should collect enough data to answer questions like: Why is my game lagging even though the CPU is utilised at 60% and the GPU is utilised at 30%? During that function call in the main thread, was the GPU doing something, and were the other cores idling?

Engine/Framework/Scripting Aware

The profiler knows which samples/stack frames are inside gameplay or engine code, native or interpreted code, project-specific or third-party code.

In my experience, it’s not particularly useful to know that the code spent 50% of the time in ceval.c, or 40% of the time in SDL_LowerBlit, but that’s the level of granularity provided by many profilers.

Instead, the profiler should record interpreted code, and allow the game to set a hint if the game is in turn interpreting code. For example, if there is a dialogue engine, that engine could set a global “interpreting dialogue” flag and a “current conversation file and line” variable based on source maps, and the profiler would record those, instead of stopping at the dialogue interpreter-loop function.

Of course, this feature requires some cooperation from the game engine or scripting language.
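The cooperation could be as small as this. A minimal sketch, assuming a single-threaded game and a profiler that can read a shared hint stack at sample time; `profiler_hint`, `current_hint`, and `run_dialogue_line` are made-up names, not part of any real profiler or dialogue engine.

```python
from contextlib import contextmanager

# Hypothetical hint stack the profiler would read whenever it takes a sample.
_hint_stack = []

@contextmanager
def profiler_hint(kind, location):
    """Tell the profiler what the game is interpreting right now."""
    _hint_stack.append((kind, location))
    try:
        yield
    finally:
        _hint_stack.pop()

def current_hint():
    """What a sampler would attach to a sample taken at this moment."""
    return _hint_stack[-1] if _hint_stack else None

def run_dialogue_line(filename, line_number, samples):
    """Stand-in for one step of a dialogue interpreter loop."""
    with profiler_hint("dialogue", f"{filename}:{line_number}"):
        # A real sampler would fire asynchronously; here we fake one sample.
        samples.append(current_hint())
```

A multi-threaded game would need one hint stack per thread, which this sketch does not attempt.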

Catching Common Performance Mistakes

With a hybrid sampling/instrumenting profiler that knows about frames or game state update steps, it is possible to instrument many or most “heavy” functions. Maybe this functionality should be turned off by default. If most “heavy functions”, for example “parsing a TTF file to create a font object”, are instrumented, the profiler can automatically highlight a mistake when the programmer loads a font from disk during every frame, a hundred frames in a row.

This would not be part of the sampling stage, but part of the visualisation/analysis stage.
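As a sketch of what that analysis step might look like: assume the profiler has already produced, for each frame, the set of instrumented heavy functions that were called in it. The function name `repeated_heavy_calls` and the input format are assumptions for illustration.

```python
def repeated_heavy_calls(frames, min_run=100):
    """Find heavy functions called in many consecutive frames.

    frames: list of sets, each holding the names of the instrumented
    heavy functions that were called during that frame.
    Returns {name: longest run of consecutive frames}, for runs >= min_run.
    """
    current = {}  # name -> length of the run that is still ongoing
    longest = {}  # name -> longest run seen so far
    for called in frames:
        # A run ends as soon as the function is missing from a frame.
        for name in list(current):
            if name not in called:
                del current[name]
        for name in called:
            current[name] = current.get(name, 0) + 1
            longest[name] = max(longest.get(name, 0), current[name])
    return {name: run for name, run in longest.items() if run >= min_run}
```

Loading a font in a hundred frames in a row would be reported; loading one texture once would not.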

Filtering for User Experience

If the profiler knows how long a frame takes, and how much time is spent waiting during each frame, we can safely disregard those frames that complete quickly, with some time to spare. The frames that concern us are those that lag, or those that are dropped. For example, imagine a game spends 30% of its CPU time on culling, and 10% on collision detection. You would think to optimise the culling. But what if the collision detection takes 1 ms during most frames, culling always takes 8 ms, and whenever the player fires a bullet, the collision detection causes a lag spike? The time spent on culling is not the problem here.

This would probably not be part of the sampling stage, but part of the visualisation/analysis stage. Still, you could use this information to discard “fast enough” frames and re-use the memory, and only focus on keeping profiling information from the worst cases.
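The culling/collision example can be played through in a few lines. This is only a sketch with made-up numbers (a 60 FPS budget, 95 normal frames, 5 frames with a 20 ms collision spike); the function names are hypothetical.

```python
FRAME_BUDGET_MS = 1000 / 60  # assumed target: 60 frames per second

def slow_frames(frames, budget_ms=FRAME_BUDGET_MS):
    """Keep only the frames whose total time exceeds the budget."""
    return [f for f in frames if sum(f.values()) > budget_ms]

def totals(frames):
    """Total milliseconds per function across the given frames."""
    result = {}
    for frame in frames:
        for name, ms in frame.items():
            result[name] = result.get(name, 0.0) + ms
    return result

def worst_offender(frames):
    """Function with the largest total time, or None for no frames."""
    t = totals(frames)
    return max(t, key=t.get) if t else None

# Made-up data: culling always takes 8 ms, collision usually takes 1 ms,
# but spikes to 20 ms in the few frames where a bullet is fired.
normal = {"culling": 8.0, "collision": 1.0}
spike = {"culling": 8.0, "collision": 20.0}
frames = [normal] * 95 + [spike] * 5
```

Over all frames, `worst_offender(frames)` points at culling; restricted to `slow_frames(frames)`, it points at collision detection, which is the function that actually causes the lag.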

Aggregating By Code Paths

This is easier when you don’t use an engine, but it can probably also be done if the profiler is “engine-aware”. It would require some per-engine custom code though. Instead of saying “The game spent 30% of the time doing vector addition”, or smarter “The game spent 10% of the frames that lagged most in the MobAIRebuildMesh function”, I want the profiler to distinguish between game states like “inventory menu”, “spell targeting (first person)” or “switching to adjacent area”. If the game does not use a data-driven engine, but multiple hand-written game loops, these states can easily be distinguished (but perhaps not labelled) by comparing call stacks: Different states with different game loops call the code to update the screen from different places – and different code paths could have completely different performance characteristics, so it makes sense to evaluate them separately.

Because the hypothetical hybrid profiler instruments key functions, enough call stack information to distinguish different code paths is usually available, and the profiler might be able to automatically distinguish between the loading screen, the main menu, and the game world, without any need for the code to give hints to the profiler.

This could also help to keep the memory usage of the profiler down without discarding too much interesting information, by only keeping the 100 worst frames per code path. This way, the profiler can collect performance data on the gameplay without running out of RAM during the loading screen.
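A minimal sketch of that retention policy, assuming a code path is identified by the call stack (a tuple of function names) at the moment the frame is presented. `WorstFrames` is a hypothetical class; it uses one bounded min-heap per code path so the fastest retained frame is always the one evicted.

```python
import heapq
from collections import defaultdict
from itertools import count

class WorstFrames:
    """Keep only the `keep` slowest frames for each code path."""

    def __init__(self, keep=100):
        self.keep = keep
        # code path -> min-heap of (duration_ms, sequence_number, data)
        self._heaps = defaultdict(list)
        # Unique tie-breaker so `data` itself is never compared.
        self._seq = count()

    def record(self, code_path, duration_ms, data=None):
        heap = self._heaps[code_path]
        entry = (duration_ms, next(self._seq), data)
        if len(heap) < self.keep:
            heapq.heappush(heap, entry)
        elif duration_ms > heap[0][0]:
            # Slower than the fastest frame we kept: swap it in.
            heapq.heapreplace(heap, entry)

    def worst(self, code_path):
        """Retained frames for one code path, slowest first."""
        heap = self._heaps.get(code_path, [])
        return [(d, data) for d, _, data in sorted(heap, reverse=True)]
```

Because each code path has its own heap, a long loading screen can never push gameplay frames out of memory.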

In a data-driven engine like Unity, I’d expect everything to happen all the time, on the same, well-optimised code path. But this is not a wish list for a Unity profiler. This is a wish list for a profiler for your own custom game engine, glue code, and dialogue trees.

All I need is a profiler that is a little smarter, that is aware of SDL, OpenGL, Vulkan, and YarnSpinner or Ink. Ideally, I would need somebody else to write it for me.

6 notes


Text

The Role of Microservices In Modern Software Architecture

Are you ready to dive into the exciting world of microservices and discover how they are revolutionizing modern software architecture? In today’s rapidly evolving digital landscape, businesses are constantly seeking ways to build more scalable, flexible, and resilient applications. Enter microservices – a groundbreaking approach that allows developers to break down monolithic systems into smaller, independent components. Join us as we unravel the role of microservices in shaping the future of software design and explore their immense potential for transforming your organization’s technology stack. Buckle up for an enlightening journey through the intricacies of this game-changing architectural style!

Introduction To Microservices And Software Architecture

In today’s rapidly evolving technological landscape, software architecture has become a crucial aspect for businesses looking to stay competitive. As companies strive for faster delivery of high-quality software, the traditional monolithic architecture has proved to be limiting and inefficient. This is where microservices come into play.

Microservices are an architectural approach that involves breaking down large, complex applications into smaller, independent services that can communicate with each other through APIs. These services are self-contained and can be deployed and updated independently without affecting the entire application.

Software architecture, on the other hand, refers to the overall design of a software system, including its components, the relationships between them, and their interactions. It provides a blueprint for building scalable, maintainable, and robust applications.

So how do microservices fit into the world of software architecture? Let’s delve deeper into this topic by understanding the fundamentals of both microservices and software architecture.

As mentioned earlier, microservices are small independent services that work together to form a larger application. Each service performs a specific business function and runs as an autonomous process. These services can be developed in different programming languages or frameworks based on what best suits their purpose.

The concept of microservices originated from Service-Oriented Architecture (SOA). However, unlike SOA which tends to have larger services with complex interconnections, microservices follow the principle of single responsibility – meaning each service should only perform one task or function.

Evolution Of Software Architecture: From Monolithic To Microservices

Software architecture has evolved significantly over the years, from traditional monolithic architectures to more modern and agile microservices architectures. This evolution has been driven by the need for more flexible, scalable, and efficient software systems. In this section, we will explore the journey of software architecture from monolithic to microservices and how it has transformed the way modern software is built.

Monolithic Architecture:

In a monolithic architecture, all components of an application are tightly coupled together into a single codebase. This means that any changes made to one part of the code can potentially impact other parts of the application. Monolithic applications are usually large and complex, making them difficult to maintain and scale.

One of the main drawbacks of monolithic architecture is its lack of flexibility. The entire application needs to be redeployed whenever a change or update is made, which can result in downtime and disruption for users. This makes it challenging for businesses to respond quickly to changing market needs.

The Rise of Microservices:

To overcome these limitations, software architects started exploring new ways of building applications that were more flexible and scalable. Microservices emerged as a solution to these challenges in software development.

Microservices architecture decomposes an application into smaller independent services that communicate with each other through well-defined APIs. Each service is responsible for a specific business function or feature and can be developed, deployed, and scaled independently without affecting other services.

Advantages Of Using Microservices In Modern Software Development

Microservices have gained immense popularity in recent years, and for good reason. They offer numerous advantages over traditional monolithic software development approaches, making them a highly sought-after approach in modern software architecture.

1. Scalability: One of the key advantages of using microservices is their ability to scale independently. In a monolithic system, any changes or updates made to one component can potentially affect the entire application, making it difficult to scale specific functionalities as needed. However, with microservices, each service is developed and deployed independently, allowing for easier scalability and flexibility.

2. Improved Fault Isolation: In a monolithic architecture, a single error or bug can bring down the entire system. This makes troubleshooting and debugging a time-consuming and challenging process. With microservices, each service operates independently from others, which means that if one service fails or experiences issues, it will not impact the functioning of other services. This enables developers to quickly identify and resolve issues without affecting the overall system.

3. Faster Development: Microservices promote faster development cycles because they allow developers to work on different services concurrently without disrupting each other’s work. Moreover, since services are smaller in size compared to monoliths, they are easier to understand and maintain which results in reduced development time.

4. Technology Diversity: Monolithic systems often rely on a single technology stack for all components of the application. This can be limiting when new technologies emerge or when certain functionalities require specialized tools or languages that may not be compatible with the existing stack. In contrast, microservices allow for a diverse range of technologies to be used for different services, providing more flexibility and adaptability.

5. Easy Deployment: Microservices are designed to be deployed independently, which means that updates or changes to one service can be rolled out without affecting the entire system. This makes deployments faster and less risky compared to monolithic architectures, where any changes require the entire application to be redeployed.

6. Better Fault Tolerance: In a monolithic architecture, a single point of failure can bring down the entire system. With microservices, failures are isolated to individual services, which means that even if one service fails, the rest of the system can continue functioning. This improves overall fault tolerance in the application.

7. Improved Team Productivity: Microservices promote a modular approach to software development, allowing teams to work on specific services without needing to understand every aspect of the application. This leads to improved productivity as developers can focus on their areas of expertise and make independent decisions about their service without worrying about how it will affect other parts of the system.

Challenges And Limitations Of Microservices

As with any technology or approach, there are both challenges and limitations to implementing microservices in modern software architecture. While the benefits of this architectural style are numerous, it is important to be aware of these potential obstacles in order to effectively navigate them.

1. Complexity: One of the main challenges of microservices is their inherent complexity. When a system is broken down into smaller, independent services, it becomes more difficult to manage and understand as a whole. This can lead to increased overhead and maintenance costs, as well as potential performance issues if not properly designed and implemented.

2. Distributed Systems Management: Microservices by nature are distributed systems, meaning that each service may be running on different servers or even in different geographical locations. This introduces new challenges for managing and monitoring the system as a whole. It also adds an extra layer of complexity when troubleshooting issues that span multiple services.

3. Communication Between Services: In order for microservices to function effectively, they must be able to communicate with one another seamlessly. This requires robust communication protocols and mechanisms such as APIs or messaging systems. However, setting up and maintaining these connections can be time-consuming and error-prone.

4. Data Consistency: In a traditional monolithic architecture, data consistency is relatively straightforward since all components access the same database instance. In contrast, microservices often have their own databases which can lead to data consistency issues if not carefully managed through proper synchronization techniques.

Best Practices For Implementing Microservices In Your Project

Implementing microservices in your project can bring a multitude of benefits, such as increased scalability, flexibility and faster development cycles. However, it is also important to ensure that the implementation is done correctly in order to fully reap these benefits. In this section, we will discuss some best practices for implementing microservices in your project.

1. Define clear boundaries and responsibilities: One of the key principles of microservices architecture is the idea of breaking down a larger application into smaller independent services. It is crucial to clearly define the boundaries and responsibilities of each service to avoid overlap or duplication of functionality. This can be achieved by using techniques like domain-driven design or event storming to identify distinct business domains and their respective services.

2. Choose appropriate communication protocols: Microservices communicate with each other through APIs, so it is important to carefully consider which protocols to use for these interactions. RESTful APIs are popular due to their simplicity and compatibility with different programming languages. Alternatively, you may choose message-based communication, for example an AMQP broker or Apache Kafka, for asynchronous communication between services.

3. Ensure fault tolerance: In a distributed system like microservices architecture, failures are inevitable. Therefore, it is important to design for fault tolerance by implementing strategies such as circuit breakers and retries. These mechanisms help prevent cascading failures and improve overall system resilience.
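To illustrate the third practice, here is a minimal sketch of a circuit breaker combined with retries. It is not taken from any particular library; `CircuitBreaker`, `CircuitOpenError`, and `call_with_retries` are hypothetical names, and the thresholds are arbitrary. After a number of consecutive failures the breaker "opens" and rejects calls immediately, then allows a single trial call once a cool-down period has passed.

```python
import time

class CircuitOpenError(Exception):
    """Raised when the breaker rejects a call without trying it."""

class CircuitBreaker:
    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after  # cool-down in seconds
        self.clock = clock              # injectable for testing
        self.failures = 0
        self.opened_at = None           # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpenError("circuit open; skipping call")
            # Cool-down elapsed: fall through and allow one trial call.
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            # Open on too many failures, or re-open after a failed trial.
            if self.failures >= self.max_failures or self.opened_at is not None:
                self.opened_at = self.clock()
            raise
        self.failures = 0
        self.opened_at = None
        return result

def call_with_retries(breaker, func, attempts=3, delay=0.1):
    """Retry with exponential backoff, but never retry an open circuit."""
    for attempt in range(attempts):
        try:
            return breaker.call(func)
        except CircuitOpenError:
            raise
        except Exception:
            if attempt == attempts - 1:
                raise
            time.sleep(delay * 2 ** attempt)
```

Failing fast while the circuit is open is what prevents a struggling downstream service from being hammered by retries from every caller at once.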

Real-Life Examples Of Successful Implementation Of Microservices

Microservices have gained immense popularity in recent years due to their ability to improve the scalability, flexibility, and agility of software systems. Many organizations across various industries have successfully implemented microservices architecture in their applications, resulting in significant benefits. In this section, we will explore real-life examples of successful implementation of microservices and how they have revolutionized modern software architecture.

1. Netflix: Netflix is a leading streaming service that has disrupted the entertainment industry with its vast collection of movies and TV shows. Its ability to keep scaling is often attributed in part to its adoption of microservices architecture. Initially, Netflix had a monolithic application that was becoming difficult to scale and maintain as the user base grew rapidly. To overcome these challenges, the company broke its application down into smaller independent services following the microservices approach.

Each service at Netflix has a specific function such as search, recommendations, or video playback. These services can be developed independently, enabling faster deployment and updates without affecting other parts of the system. This also allows for easier scaling based on demand by adding more instances of the required services. With microservices, Netflix has improved its uptime and performance while keeping costs low.

The Future Of Microservices In Software Architecture

The concept of microservices has been gaining traction in the world of software architecture in recent years. This approach to building applications involves breaking down a monolithic system into smaller, independent services that communicate with each other through well-defined APIs. The benefits of this architecture include increased flexibility, scalability, and resilience.

But what does the future hold for microservices? In this section, we will explore some potential developments and trends that could shape the future of microservices in software architecture.

1. Rise of Serverless Architecture

As organizations continue to move towards cloud-based solutions, serverless architecture is becoming increasingly popular. Servers still exist in this model, but the cloud provider provisions and manages them, so developers no longer handle infrastructure themselves. Instead, they deploy their code as functions on a managed platform such as AWS Lambda on Amazon Web Services (AWS) or Azure Functions on Microsoft Azure.

Microservices are a natural fit for serverless architecture as they already follow a distributed model. With serverless, each microservice can be deployed independently, making it easier to scale individual components without affecting the entire system. As serverless continues to grow in popularity, we can expect to see more widespread adoption of microservices.

2. Increased Adoption of Containerization

Containerization technology such as Docker has revolutionized how applications are deployed and managed. Containers provide an isolated environment for each service, making it easier to package and deploy them anywhere without worrying about compatibility issues.

Conclusion

As we have seen throughout this article, microservices offer a number of benefits in terms of scalability, flexibility, and efficiency in modern software architecture. However, it is important to carefully consider whether microservices are right for your specific project.

Above all, it is crucial to understand the complexity that comes with implementing a microservices architecture. While it offers many advantages, it also introduces new challenges such as increased communication overhead and the need for specialized tools and processes. Therefore, if your project does not require a high level of scalability, or if your team lacks the expertise to manage these complexities, a monolithic architecture may be more suitable.

