#Manage SQL Workloads SQL Server


Managing SQL Server 2022 Workloads with Resource Governor: A Practical Guide

In the ever-evolving landscape of database management, ensuring optimal performance and resource allocation for SQL Server instances is paramount. The Resource Governor, an invaluable feature in SQL Server 2022, stands out as a critical tool for administrators seeking to maintain equilibrium in multi-workload environments. This guide delves into the practical aspects of utilizing the Resource…


#Manage SQL Workloads SQL Server#Optimize Database Performance SQL#SQL Server 2022 Resource Governor#SQL Server CPU Memory Management#SQL Server Workload Classification


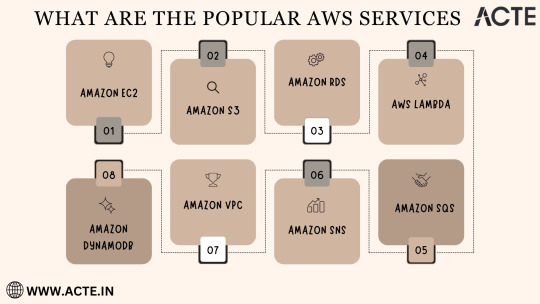

Exploring the Power of Amazon Web Services: Top AWS Services You Need to Know

In the ever-evolving realm of cloud computing, Amazon Web Services (AWS) has established itself as an undeniable force to be reckoned with. AWS's vast and diverse array of services has positioned it as a dominant player, catering to the evolving needs of businesses, startups, and individuals worldwide. Its popularity transcends boundaries, making it the preferred choice for a myriad of use cases, from startups launching their first web applications to established enterprises managing complex networks of services. This blog embarks on an exploratory journey into the boundless world of AWS, delving deep into some of its most sought-after and pivotal services.

As the digital landscape continues to expand, understanding these AWS services and their significance is pivotal, whether you're a seasoned cloud expert or someone taking the first steps in your cloud computing journey. Join us as we delve into the intricate web of AWS's top services and discover how they can shape the future of your cloud computing endeavors. From cloud novices to seasoned professionals, the AWS ecosystem holds the keys to innovation and transformation.

Amazon EC2 (Elastic Compute Cloud): The Foundation of Scalability

At the core of AWS's capabilities is Amazon EC2, the Elastic Compute Cloud. EC2 provides resizable compute capacity in the cloud, allowing you to run virtual servers, commonly referred to as instances. These instances serve as the foundation for a multitude of AWS solutions, offering the scalability and flexibility required to meet diverse application and workload demands. Whether you're a startup launching your first web application or an enterprise managing a complex network of services, EC2 ensures that you have the computational resources you need, precisely when you need them.

Amazon S3 (Simple Storage Service): Secure, Scalable, and Cost-Effective Data Storage

When it comes to storing and retrieving data, Amazon S3, the Simple Storage Service, stands as an indispensable tool in the AWS arsenal. S3 offers a scalable and highly durable object storage service that is designed for data security and cost-effectiveness. This service is the choice of businesses and individuals for storing a wide range of data, including media files, backups, and data archives. Its flexibility and reliability make it a prime choice for safeguarding your digital assets and ensuring they are readily accessible.

Amazon RDS (Relational Database Service): Streamlined Database Management

Database management can be a complex task, but AWS simplifies it with Amazon RDS, the Relational Database Service. RDS automates many common database management tasks, including patching, backups, and scaling. It supports multiple database engines, including popular options like MySQL, PostgreSQL, and SQL Server. This service allows you to focus on your application while AWS handles the underlying database infrastructure. Whether you're building a content management system, an e-commerce platform, or a mobile app, RDS streamlines your database operations.

AWS Lambda: The Era of Serverless Computing

Serverless computing has transformed the way applications are built and deployed, and AWS Lambda is at the forefront of this revolution. Lambda is a serverless compute service that enables you to run code without the need for server provisioning or management. It's the perfect solution for building serverless applications, microservices, and automating tasks. The unique pricing model ensures that you pay only for the compute time your code actually uses. This service empowers developers to focus on coding, knowing that AWS will handle the operational complexities behind the scenes.

Amazon DynamoDB: Low Latency, High Scalability NoSQL Database

Amazon DynamoDB is a managed NoSQL database service that stands out for its low latency and exceptional scalability. It's a popular choice for applications with variable workloads, such as gaming platforms, IoT solutions, and real-time data processing systems. DynamoDB automatically scales to meet the demands of your applications, ensuring consistent, single-digit millisecond latency at any scale. Whether you're managing user profiles, session data, or real-time analytics, DynamoDB is designed to meet your performance needs.

Amazon VPC (Virtual Private Cloud): Tailored Networking for Security and Control

Security and control over your cloud resources are paramount, and Amazon VPC (Virtual Private Cloud) empowers you to create isolated networks within the AWS cloud. This isolation enhances security and control, allowing you to define your network topology, configure routing, and manage access. VPC is the go-to solution for businesses and individuals who require a network environment that mirrors the security and control of traditional on-premises data centers.

Amazon SNS (Simple Notification Service): Seamless Communication Across Channels

Effective communication is a cornerstone of modern applications, and Amazon SNS (Simple Notification Service) is designed to facilitate seamless communication across various channels. This fully managed messaging service enables you to send notifications to a distributed set of recipients, whether through email, SMS, or mobile devices. SNS is an essential component of applications that require real-time updates and notifications to keep users informed and engaged.

Amazon SQS (Simple Queue Service): Decoupling for Scalable Applications

Decoupling components of a cloud application is crucial for scalability, and Amazon SQS (Simple Queue Service) is a fully managed message queuing service designed for this purpose. It ensures reliable and scalable communication between different parts of your application, helping you create systems that can handle varying workloads efficiently. SQS is a valuable tool for building robust, distributed applications that can adapt to changes in demand.
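To make the decoupling idea concrete, here is a minimal in-process sketch. It does not call SQS: Python's standard `queue.Queue` stands in for the managed queue, and `run_pipeline`, the `STOP` sentinel, and the `shipped:` prefix are hypothetical names chosen only for illustration. The point is that the producer only enqueues messages and never calls the consumer directly.

```python
import queue
import threading


def run_pipeline(orders):
    """Push orders through a queue to a worker thread and return its results."""
    messages = queue.Queue()   # stand-in for a managed message queue
    processed = []
    STOP = object()            # sentinel that tells the consumer to exit

    def consumer():
        # The consumer only knows about the queue, not about the producer.
        while True:
            msg = messages.get()
            if msg is STOP:
                break
            processed.append(f"shipped:{msg}")

    worker = threading.Thread(target=consumer)
    worker.start()

    # The producer enqueues work and moves on; it never waits per message.
    for order in orders:
        messages.put(order)
    messages.put(STOP)

    worker.join()
    return processed
```

Because the two sides share only the queue, either one could be scaled or replaced without changing the other, which is the property the paragraph above describes.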

In the rapidly evolving landscape of cloud computing, Amazon Web Services (AWS) stands as a colossus, offering a diverse array of services that address the ever-evolving needs of businesses, startups, and individuals alike. AWS's popularity transcends industry boundaries, making it the go-to choice for a wide range of use cases, from startups launching their inaugural web applications to established enterprises managing intricate networks of services.

To unlock the full potential of these AWS services, gaining comprehensive knowledge and hands-on experience is key. ACTE Technologies, a renowned training provider, offers specialized AWS training programs designed to provide practical skills and in-depth understanding. These programs equip you with the tools needed to navigate and excel in the dynamic world of cloud computing.

With AWS services at your disposal, the possibilities are endless, and innovation knows no bounds. Join the ever-growing community of cloud professionals and enthusiasts, and empower yourself to shape the future of the digital landscape. ACTE Technologies is your trusted guide on this journey, providing the knowledge and support needed to thrive in the world of AWS and cloud computing.


Navigating the Cloud Landscape: Unleashing Amazon Web Services (AWS) Potential

In the ever-evolving tech landscape, businesses are in a constant quest for innovation, scalability, and operational optimization. Enter Amazon Web Services (AWS), a robust cloud computing juggernaut offering a versatile suite of services tailored to diverse business requirements. This blog explores the myriad applications of AWS across various sectors, providing a transformative journey through the cloud.

Harnessing Computational Agility with Amazon EC2

Central to the AWS ecosystem is Amazon EC2 (Elastic Compute Cloud), a pivotal player reshaping the cloud computing paradigm. Offering scalable virtual servers, EC2 empowers users to seamlessly run applications and manage computing resources. This adaptability enables businesses to dynamically adjust computational capacity, ensuring optimal performance and cost-effectiveness.

Redefining Storage Solutions

AWS addresses the critical need for scalable and secure storage through services such as Amazon S3 (Simple Storage Service) and Amazon EBS (Elastic Block Store). S3 acts as a dependable object storage solution for data backup, archiving, and content distribution. Meanwhile, EBS provides persistent block-level storage designed for EC2 instances, guaranteeing data integrity and accessibility.

Streamlined Database Management: Amazon RDS and DynamoDB

Database management undergoes a transformation with Amazon RDS, simplifying the setup, operation, and scaling of relational databases. Be it MySQL, PostgreSQL, or SQL Server, RDS provides a frictionless environment for managing diverse database workloads. For enthusiasts of NoSQL, Amazon DynamoDB steps in as a swift and flexible solution for document and key-value data storage.

Networking Mastery: Amazon VPC and Route 53

AWS empowers users to construct a virtual sanctuary for their resources through Amazon VPC (Virtual Private Cloud). This virtual network facilitates the launch of AWS resources within a user-defined space, enhancing security and control. Simultaneously, Amazon Route 53, a scalable DNS web service, ensures seamless routing of end-user requests to globally distributed endpoints.

Global Content Delivery Excellence with Amazon CloudFront

Amazon CloudFront emerges as a dynamic content delivery network (CDN) service, securely delivering data, videos, applications, and APIs on a global scale. This ensures low latency and high transfer speeds, elevating user experiences across diverse geographical locations.

AI and ML Prowess Unleashed

AWS propels businesses into the future with advanced machine learning and artificial intelligence services. Amazon SageMaker, a fully managed service, enables developers to rapidly build, train, and deploy machine learning models. Additionally, Amazon Rekognition provides sophisticated image and video analysis, supporting applications in facial recognition, object detection, and content moderation.

Big Data Mastery: Amazon Redshift and Athena

For organizations grappling with massive datasets, AWS offers Amazon Redshift, a fully managed data warehouse service. It facilitates the execution of complex queries on large datasets, empowering informed decision-making. Simultaneously, Amazon Athena allows users to analyze data in Amazon S3 using standard SQL queries, unlocking invaluable insights.

In conclusion, Amazon Web Services (AWS) stands as an all-encompassing cloud computing platform, empowering businesses to innovate, scale, and optimize operations. From adaptable compute power and secure storage solutions to cutting-edge AI and ML capabilities, AWS serves as a robust foundation for organizations navigating the digital frontier. Embrace the limitless potential of cloud computing with AWS – where innovation knows no bounds.


Azure Data Engineering Tools For Data Engineers

Azure is Microsoft's cloud computing platform, and it offers an extensive array of data engineering tools. These tools help data engineers build and maintain data systems that are scalable, reliable, and secure, and that can be tailored to the specific requirements of an organization.

In this article, we will explore nine key Azure data engineering tools that should be in every data engineer’s toolkit. Whether you’re a beginner in data engineering or aiming to enhance your skills, these Azure tools are crucial for your career development.

Microsoft Azure Databricks

Azure Databricks is a managed version of Databricks, a popular data analytics and machine learning platform. It offers one-click installation, faster workflows, and collaborative workspaces for data scientists and engineers. Azure Databricks seamlessly integrates with Azure’s computation and storage resources, making it an excellent choice for collaborative data projects.

Microsoft Azure Data Factory

Microsoft Azure Data Factory (ADF) is a fully-managed, serverless data integration tool designed to handle data at scale. It enables data engineers to acquire, analyze, and process large volumes of data efficiently. ADF supports various use cases, including data engineering, operational data integration, analytics, and data warehousing.

Microsoft Azure Stream Analytics

Azure Stream Analytics is a real-time, complex event-processing engine designed to analyze and process large volumes of fast-streaming data from various sources. It is a critical tool for data engineers dealing with real-time data analysis and processing.

Microsoft Azure Data Lake Storage

Azure Data Lake Storage provides a scalable and secure data lake solution for data scientists, developers, and analysts. It allows organizations to store data of any type and size while supporting low-latency workloads. Data engineers can take advantage of this infrastructure to build and maintain data pipelines. Azure Data Lake Storage also offers enterprise-grade security features for data collaboration.

Microsoft Azure Synapse Analytics

Azure Synapse Analytics is an integrated platform solution that combines data warehousing, data connectors, ETL pipelines, analytics tools, big data scalability, and visualization capabilities. Data engineers can efficiently process data for warehousing and analytics using Synapse Pipelines’ ETL and data integration capabilities.

Microsoft Azure Cosmos DB

Azure Cosmos DB is a fully managed, serverless distributed database service that supports multiple database APIs, including PostgreSQL, MongoDB, and Apache Cassandra. It offers automatic and immediate scalability, single-digit millisecond reads and writes, and high availability for NoSQL data. Azure Cosmos DB is a versatile tool for data engineers looking to develop high-performance applications.

Microsoft Azure SQL Database

Azure SQL Database is a fully managed and continually updated relational database service in the cloud. It offers native support for services like Azure Functions and Azure App Service, simplifying application development. Data engineers can use Azure SQL Database to handle real-time data ingestion tasks efficiently.

Microsoft Azure MariaDB

Azure Database for MariaDB provides seamless integration with Azure Web Apps and supports popular open-source applications such as WordPress and Drupal, along with common open-source frameworks and languages. It offers built-in monitoring, security, automatic backups, and patching at no additional cost.

Microsoft Azure PostgreSQL Database

Azure Database for PostgreSQL is a fully managed open-source database service that lets teams focus on application innovation rather than database management. It supports various open-source frameworks and languages and offers strong security, AI-assisted performance optimization, and high uptime guarantees.

Whether you’re a novice data engineer or an experienced professional, mastering these Azure data engineering tools is essential for advancing your career in the data-driven world. As technology evolves and data continues to grow, data engineers with expertise in Azure tools are in high demand. Start your journey to becoming a proficient data engineer with these powerful Azure tools and resources.

Unlock the full potential of your data engineering career with Datavalley. As you start your journey to becoming a skilled data engineer, it’s essential to equip yourself with the right tools and knowledge. The Azure data engineering tools we’ve explored in this article are your gateway to effectively managing and using data for impactful insights and decision-making.

To take your data engineering skills to the next level and gain practical, hands-on experience with these tools, we invite you to join the courses at Datavalley. Our comprehensive data engineering courses are designed to provide you with the expertise you need to excel in the dynamic field of data engineering. Whether you’re just starting or looking to advance your career, Datavalley’s courses offer a structured learning path and real-world projects that will set you on the path to success.

Course format:

Subject: Data Engineering

Classes: 200 hours of live classes

Lectures: 199 lectures

Projects: Collaborative projects and mini projects for each module

Level: All levels

Scholarship: Up to 70% scholarship on this course

Interactive activities: labs, quizzes, scenario walk-throughs

Placement Assistance: Resume preparation, soft skills training, interview preparation

Subject: DevOps

Classes: 180+ hours of live classes

Lectures: 300 lectures

Projects: Collaborative projects and mini projects for each module

Level: All levels

Scholarship: Up to 67% scholarship on this course

Interactive activities: labs, quizzes, scenario walk-throughs

Placement Assistance: Resume preparation, soft skills training, interview preparation

For more details on the Data Engineering courses, visit Datavalley’s official website.

#datavalley#dataexperts#data engineering#data analytics#dataexcellence#data science#power bi#business intelligence#data analytics course#data science course#data engineering course#data engineering training


Demystifying Microsoft Azure Cloud Hosting and PaaS Services: A Comprehensive Guide

In the rapidly evolving landscape of cloud computing, Microsoft Azure has emerged as a powerful player, offering a wide range of services to help businesses build, deploy, and manage applications and infrastructure. One of the standout features of Azure is its Cloud Hosting and Platform-as-a-Service (PaaS) offerings, which enable organizations to harness the benefits of the cloud while minimizing the complexities of infrastructure management. In this comprehensive guide, we'll dive deep into Microsoft Azure Cloud Hosting and PaaS Services, demystifying their features, benefits, and use cases.

Understanding Microsoft Azure Cloud Hosting

Cloud hosting, as the name suggests, involves hosting applications and services on virtual servers that are accessed over the internet. Microsoft Azure provides a robust cloud hosting environment, allowing businesses to scale up or down as needed, pay for only the resources they consume, and reduce the burden of maintaining physical hardware. Here are some key components of Azure Cloud Hosting:

Virtual Machines (VMs): Azure offers a variety of pre-configured virtual machine sizes that cater to different workloads. These VMs can run Windows or Linux operating systems and can be easily scaled to meet changing demands.

Azure App Service: This PaaS offering allows developers to build, deploy, and manage web applications without dealing with the underlying infrastructure. It supports various programming languages and frameworks, making it suitable for a wide range of applications.

Azure Kubernetes Service (AKS): For containerized applications, AKS provides a managed Kubernetes service. Kubernetes simplifies the deployment and management of containerized applications, and AKS further streamlines this process.

Exploring Azure Platform-as-a-Service (PaaS) Services

Platform-as-a-Service (PaaS) takes cloud hosting a step further by abstracting away even more of the infrastructure management, allowing developers to focus primarily on building and deploying applications. Azure offers an array of PaaS services that cater to different needs:

Azure SQL Database: This fully managed relational database service eliminates the need for database administration tasks such as patching and backups. It offers high availability, security, and scalability for your data.

Azure Cosmos DB: For globally distributed, highly responsive applications, Azure Cosmos DB is a NoSQL database service that guarantees low-latency access and automatic scaling.

Azure Functions: A serverless compute service, Azure Functions allows you to run code in response to events without provisioning or managing servers. It's ideal for event-driven architectures.

Azure Logic Apps: This service enables you to automate workflows and integrate various applications and services without writing extensive code. It's great for orchestrating complex business processes.

Benefits of Azure Cloud Hosting and PaaS Services

Scalability: Azure's elasticity allows you to scale resources up or down based on demand. This ensures optimal performance and cost efficiency.

Cost Management: With pay-as-you-go pricing, you only pay for the resources you use. Azure also provides cost management tools to monitor and optimize spending.

High Availability: Azure's data centers are distributed globally, providing redundancy and ensuring high availability for your applications.

Security and Compliance: Azure offers robust security features and compliance certifications, helping you meet industry standards and regulations.

Developer Productivity: PaaS services like Azure App Service and Azure Functions streamline development by handling infrastructure tasks, allowing developers to focus on writing code.

Use Cases for Azure Cloud Hosting and PaaS

Web Applications: Azure App Service is ideal for hosting web applications, enabling easy deployment and scaling without managing the underlying servers.

Microservices: Azure Kubernetes Service supports the deployment and orchestration of microservices, making it suitable for complex applications with multiple components.

Data-Driven Applications: Azure's PaaS offerings like Azure SQL Database and Azure Cosmos DB are well-suited for applications that rely heavily on data storage and processing.

Serverless Architecture: Azure Functions and Logic Apps are perfect for building serverless applications that respond to events in real-time.

In conclusion, Microsoft Azure's Cloud Hosting and PaaS Services provide businesses with the tools they need to harness the power of the cloud while minimizing the complexities of infrastructure management. With scalability, cost-efficiency, and a wide array of services, Azure empowers developers and organizations to innovate and deliver impactful applications. Whether you're hosting a web application, managing data, or adopting a serverless approach, Azure has the tools to support your journey into the cloud.

#Microsoft Azure#Internet of Things#Azure AI#Azure Analytics#Azure IoT Services#Azure Applications#Microsoft Azure PaaS


How to Improve Database Performance with Smart Optimization Techniques

Database performance is critical to the efficiency and responsiveness of any data-driven application. As data volumes grow and user expectations rise, ensuring your database runs smoothly becomes a top priority. Whether you're managing an e-commerce platform, financial software, or enterprise systems, sluggish database queries can drastically hinder user experience and business productivity.

In this guide, we’ll explore practical and high-impact strategies to improve database performance, reduce latency, and increase throughput.

1. Optimize Your Queries

Poorly written queries are one of the most common causes of database performance issues. Avoid using SELECT * when you only need specific columns. Analyze query execution plans to understand how data is being retrieved and identify potential inefficiencies.

Use indexed columns in WHERE, JOIN, and ORDER BY clauses to take full advantage of the database indexing system.
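As a small illustration of reading an execution plan, the sketch below uses Python's built-in `sqlite3` module and SQLite's `EXPLAIN QUERY PLAN`. The `orders` table, its columns, and the index name are hypothetical, and other database engines expose plans through different commands and output formats.

```python
import sqlite3


def query_plan(conn, sql):
    """Return the human-readable detail column of SQLite's query plan."""
    return [row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql)]


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders ("
    "id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL, note TEXT)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, total, note) VALUES (?, ?, ?)",
    [(i % 100, i * 1.5, "n") for i in range(1000)],
)

# Select only the columns that are needed instead of SELECT *.
sql = "SELECT id, total FROM orders WHERE customer_id = 42"

plan_before = query_plan(conn, sql)   # full table scan: no usable index yet
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plan_after = query_plan(conn, sql)    # index search on customer_id
```

Comparing `plan_before` with `plan_after` shows the plan changing from a scan of the whole table to a search that uses the new index.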

2. Index Strategically

Indexes are essential for speeding up data retrieval, but too many indexes can hurt write performance and consume excessive storage. Prioritize indexing on columns used in search conditions and join operations. Regularly review and remove unused or redundant indexes.
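One common heuristic for spotting redundant indexes is that an index whose column list is a leading prefix of another index's column list usually adds little. The sketch below applies that heuristic to a plain dictionary; `redundant_indexes` and the index names are hypothetical, and the rule ignores cases such as unique constraints or partial indexes, where the shorter index may still be needed.

```python
def redundant_indexes(indexes):
    """Return names of indexes whose columns are a leading prefix of another.

    `indexes` maps an index name to a tuple of column names, in index order.
    """
    redundant = set()
    for name, cols in indexes.items():
        for other, other_cols in indexes.items():
            if name == other:
                continue
            is_prefix = other_cols[:len(cols)] == cols
            # For exact duplicates, keep the alphabetically first name.
            if is_prefix and (len(cols) < len(other_cols) or name > other):
                redundant.add(name)
    return redundant


catalog = {
    "idx_customer": ("customer_id",),
    "idx_customer_date": ("customer_id", "created_at"),
    "idx_status": ("status",),
}
candidates = redundant_indexes(catalog)
```

Treat the result as a list of candidates to review against real usage statistics, not as a list to drop automatically.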

3. Implement Connection Pooling

Connection pooling allows multiple application users to share a limited number of database connections. This reduces the overhead of opening and closing connections repeatedly, which can significantly improve performance, especially under heavy load.
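A minimal sketch of the idea, assuming an in-memory SQLite database and a hypothetical `ConnectionPool` class: a fixed set of connections is opened once and handed out through a thread-safe queue. Production applications would normally rely on the pooling built into their driver or framework instead.

```python
import queue
import sqlite3
from contextlib import contextmanager


class ConnectionPool:
    """Hand out a fixed number of pre-opened connections."""

    def __init__(self, factory, size):
        self._idle = queue.Queue(maxsize=size)
        for _ in range(size):
            self._idle.put(factory())   # open every connection up front

    @contextmanager
    def connection(self, timeout=None):
        # Block until a connection is free (queue.Empty is raised on timeout).
        conn = self._idle.get(timeout=timeout)
        try:
            yield conn
        finally:
            self._idle.put(conn)        # always return it to the pool

    def idle_count(self):
        return self._idle.qsize()


pool = ConnectionPool(
    lambda: sqlite3.connect(":memory:", check_same_thread=False), size=2
)
with pool.connection() as conn:
    in_use_idle = pool.idle_count()     # one connection is checked out here
    value = conn.execute("SELECT 1").fetchone()[0]
```

The cost of opening a connection is paid `size` times at startup rather than once per request.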

4. Cache Frequently Accessed Data

Use caching layers to avoid unnecessary hits to the database. Frequently accessed and rarely changing data—such as configuration settings or product catalogs—can be stored in in-memory caches like Redis or Memcached. This reduces read latency and database load.
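The read-through pattern behind such a cache can be sketched in a few lines. This version keeps entries in a local dictionary with a time-to-live; `TTLCache`, `get_or_load`, and the `loader` callback are hypothetical names, and a shared cache such as Redis or Memcached would replace the dictionary in a multi-server deployment.

```python
import time


class TTLCache:
    """Read-through cache whose entries expire after `ttl_seconds`."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock       # injectable so expiry can be tested
        self._store = {}          # key -> (value, expires_at)

    def get_or_load(self, key, loader):
        now = self._clock()
        entry = self._store.get(key)
        if entry is not None and entry[1] > now:
            return entry[0]       # fresh hit: the database is not touched
        value = loader(key)       # miss or expired: fall through to the loader
        self._store[key] = (value, now + self._ttl)
        return value
```

A caller passes the database lookup as `loader`, for example `cache.get_or_load("site_config", load_config_from_db)`, where `load_config_from_db` is whatever function runs the real query.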

5. Partition Large Tables

Partitioning splits a large table into smaller, more manageable pieces without altering the logical structure. This improves performance for queries that target only a subset of the data. Choose partitioning strategies based on date, region, or other logical divisions relevant to your dataset.
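As a rough sketch of date-based partitioning, the helpers below map a date to a monthly partition name and list the partitions a date-range query would need to touch. The `table_YYYY_MM` naming scheme and both function names are assumptions for illustration; real databases implement partition routing and pruning internally.

```python
from datetime import date


def partition_for(table, day):
    """Name of the monthly partition that holds rows for `day`."""
    return f"{table}_{day.year:04d}_{day.month:02d}"


def partitions_for_range(table, start, end):
    """Monthly partitions that a query over [start, end] has to read."""
    names = []
    year, month = start.year, start.month
    while (year, month) <= (end.year, end.month):
        names.append(f"{table}_{year:04d}_{month:02d}")
        month += 1
        if month > 12:            # roll over into the next year
            year, month = year + 1, 1
    return names
```

A query covering mid-November 2023 to early January 2024 touches three partitions, however many years of data the full table holds.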

6. Monitor and Tune Regularly

Database performance isn’t a one-time fix—it requires continuous monitoring and tuning. Use performance monitoring tools to track query execution times, slow queries, buffer usage, and I/O patterns. Adjust configurations and SQL statements accordingly to align with evolving workloads.

7. Offload Reads with Replication

Use read replicas to distribute query load, especially for read-heavy applications. Replication allows you to spread read operations across multiple servers, freeing up the primary database to focus on write operations and reducing overall latency.
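One simple way to use replicas is to route statements in the application layer. The sketch below sends `SELECT` statements to replicas in round-robin order and everything else to the primary; `ReadWriteRouter` and the server names are hypothetical, and the keyword check is deliberately naive. Replicas can also lag behind the primary, so reads that must see a just-committed write may still need to go to the primary.

```python
import itertools


class ReadWriteRouter:
    """Route reads to replicas (round-robin) and writes to the primary."""

    def __init__(self, primary, replicas):
        self._primary = primary
        self._replicas = itertools.cycle(replicas) if replicas else None

    def route(self, sql):
        words = sql.split(None, 1)
        first = words[0].upper() if words else ""
        if first == "SELECT" and self._replicas is not None:
            return next(self._replicas)
        return self._primary      # writes, DDL, and anything ambiguous


router = ReadWriteRouter("primary-db", ["replica-1", "replica-2"])
targets = [
    router.route("SELECT * FROM products"),
    router.route("select id FROM products"),
    router.route("UPDATE products SET price = 1"),
    router.route("SELECT 1"),
]
```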

8. Control Concurrency and Locking

Poor concurrency control can lead to lock contention and delays. Ensure your transactions are short and efficient. Use appropriate isolation levels to avoid unnecessary locking, and understand the impact of each level on performance and data integrity.
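To illustrate keeping a transaction short, the sketch below wraps only the two related updates in a transaction using Python's `sqlite3` connection context manager, which commits on success and rolls back on an exception. The `accounts` table and the `transfer` function are hypothetical, and isolation-level settings are engine-specific and not shown here.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts ("
    "id INTEGER PRIMARY KEY, "
    "balance INTEGER NOT NULL CHECK (balance >= 0))"
)
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [(1, 100), (2, 50)])
conn.commit()


def transfer(conn, src, dst, amount):
    """Move `amount` between accounts atomically; return True on success."""
    try:
        with conn:  # commits if the block succeeds, rolls back if it raises
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE id = ?",
                (amount, src),
            )
            conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE id = ?",
                (amount, dst),
            )
        return True
    except sqlite3.IntegrityError:
        # The CHECK constraint rejected an overdraft; nothing was changed.
        return False


def balances(conn):
    return dict(conn.execute("SELECT id, balance FROM accounts"))
```

Any slow work, such as validation or calls to other services, belongs outside the `with conn:` block so that locks are held only for the two updates.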


Aurora DSQL: Amazon’s Fastest Serverless SQL Solution

Amazon Aurora DSQL

The general availability of Amazon Aurora DSQL has been announced. Described as the fastest serverless distributed SQL database, it offers high availability, virtually unlimited scalability, and minimal infrastructure management for always-available applications. It can remove the operational burden of patching, upgrades, and maintenance downtime. Customers were excited by the preview at AWS re:Invent 2024 because it promised to reduce the complexity of running relational databases.

According to Amazon.com CTO Dr. Werner Vogels, Aurora DSQL was architected to control complexity up front. Unlike other databases, its architecture is split into separate components: a query processor, adjudicator, journal, and crossbar. These components are cohesive, communicate through well-defined APIs, and scale independently according to your workload. This architecture supports multi-Region strong consistency, low latency, and globally synchronised time.

Your application can scale to meet any workload and use fast distributed SQL reads and writes without database sharding or instance upgrades. Aurora DSQL's active-active distributed architecture is designed for 99.999 percent availability across multiple Regions and 99.99 percent in a single Region. An application can keep reading and writing with strong consistency even when it cannot reach a Region cluster endpoint.

In a single Region, Aurora DSQL commits write transactions to a distributed transaction log and synchronously replicates the committed data to user storage replicas in three Availability Zones. Cluster storage replicas are distributed across a storage fleet and scale automatically for the best read performance. Multi-Region clusters offer the same resilience and connectivity while improving availability, with one Regional endpoint for each peered cluster Region.

The two endpoints of a peered cluster present a single logical database and support concurrent read and write operations with strong data consistency. A third Region serves as a log-only witness, with no cluster resources or endpoints. This lets you balance applications and connections by performance, resilience, or geography while ensuring that readers always see the same data.

Aurora DSQL is well suited to event-driven and microservice applications. It can underpin highly scalable retail, e-commerce, financial, and travel systems, as well as data-driven social networking, gaming, and multi-tenant SaaS applications that need multi-Region scalability and reliability.

Getting Started with Amazon Aurora DSQL

Aurora DSQL is easy to pick up thanks to a familiar console experience. You can connect programmatically, using the database endpoint and an authentication token as the password, or use JetBrains DataGrip, DBeaver, or the PostgreSQL interactive terminal.

Select “Create cluster” in the console to start an Aurora DSQL cluster. Single-Region and Multi-Region setups are offered.

For a single-Region cluster, simply choose “Create cluster”; the cluster is created in minutes. Then create an authentication token, copy the endpoint, and connect with your SQL client. You can connect from CloudShell or from Python, Java, JavaScript, C++, Ruby, .NET, Rust, and Golang. You can also build sample applications using AWS Lambda, Django, or Ruby on Rails.

Multi-Region clusters are peered using their ARNs. For the first cluster, choose Multi-Region, select a witness Region, and click “Create cluster”. Use the ARN of the first cluster to create a second cluster in another Region. Finally, choose “Peer” on the first cluster's page to peer the two clusters. The “Peers” tab shows the peer information. You can also create and manage clusters programmatically with the AWS SDKs, the AWS CLI, and the Aurora DSQL APIs.

New features were added in response to feedback from preview users. These include easier connections from AWS CloudShell and an improved console experience for creating and peering multi-Region clusters. PostgreSQL features were also added, including views, Auto-Analyze, and unique secondary indexes for tables with existing data. Integrations with AWS CloudTrail for logging, AWS Backup, AWS PrivateLink, and AWS CloudFormation were included as well.

To boost developer productivity, Aurora DSQL now offers a Model Context Protocol (MCP) server that lets generative AI models interact with the database in natural language. With the Amazon Q Developer CLI installed and the MCP server configured, the CLI can access the cluster to explore the schema, understand table structure, and run complex SQL queries without any integration code.

Availability

As of writing, Amazon Aurora DSQL was available for single- and multi-region clusters (two peers and one witness region) in AWS US East (N. Virginia), US East (Ohio), and US West (Oregon) Regions. It was available for single-Region clusters in Ireland, London, Paris, Osaka, and Tokyo.

Aurora DSQL bills all request-based activity, such as reads and writes, on a monthly basis using a single normalised billing unit, the Distributed Processing Unit (DPU). Storage costs are based on the total size of your database, measured in gigabytes per month. You pay for only one logical copy of your data, whether in a single-Region cluster or a multi-Region peered cluster. With the AWS Free Tier, your first 100,000 DPUs and 1 GB of storage each month are free. See the Aurora DSQL pricing page for current rates.
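The billing model described above can be sketched as simple arithmetic. The free-tier figures come from the text; the per-DPU and per-gigabyte prices are deliberately left as parameters because no rates are given here, and treating the free tier as a straight subtraction is an assumption. `monthly_cost` is a hypothetical helper, not an AWS API.

```python
FREE_DPUS_PER_MONTH = 100_000   # free-tier figure quoted in the text
FREE_STORAGE_GB = 1.0           # free-tier figure quoted in the text


def monthly_cost(dpus_used, storage_gb, price_per_dpu, price_per_gb_month):
    """Estimate a monthly bill; both prices are caller-supplied assumptions."""
    billable_dpus = max(0, dpus_used - FREE_DPUS_PER_MONTH)
    billable_gb = max(0.0, storage_gb - FREE_STORAGE_GB)
    # Storage is charged once: one logical copy, even for peered clusters.
    return billable_dpus * price_per_dpu + billable_gb * price_per_gb_month
```

Usage that stays within the free tier yields a cost of zero under this model, regardless of the prices passed in.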

You can try Aurora DSQL for free in the console. The Aurora DSQL User Guide has more information, and you can send feedback via AWS re:Post or your usual AWS support channels.

#AuroraDSQL#AmazonAuroraDSQL#AuroraDSQLcluster#DistributedProcessingUnit#AWSservices#ModelContextProtocol#technology#technews#technologynews#news#govindhtech


Cost Optimization Strategies in Public Cloud

Businesses around the globe have embraced public cloud computing to gain flexibility, scalability, and faster innovation. While the cloud offers tremendous advantages, many organizations face an unexpected challenge: spiraling costs. Without careful planning, cloud expenses can quickly outpace expectations. That’s why cost optimization has become a critical component of cloud strategy.

Cost optimization doesn’t mean cutting essential services or sacrificing performance. It means using the right tools, best practices, and strategic planning to make the most of every dollar spent on the cloud. In this article, we explore proven strategies to reduce unnecessary spending while maintaining high availability and performance in a public cloud environment.

1. Right-Sizing Resources

Many businesses overprovision their cloud resources, thinking it's safer to allocate more computing power than needed. However, this leads to wasted spending. Right-sizing involves analyzing usage patterns and scaling down resources to match actual needs.

You can:

Use monitoring tools to analyze CPU and memory utilization

Adjust virtual machine sizes to suit workloads

Switch to serverless computing when possible, paying only for what you use

This strategy ensures optimal performance at the lowest cost.
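As a rough illustration of the analysis step, the sketch below turns a series of CPU utilisation samples into a right-sizing hint. The thresholds (40% and 80%) and the use of a nearest-rank 95th percentile are illustrative assumptions, not a provider recommendation.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def rightsizing_advice(cpu_samples, low=40.0, high=80.0):
    """Return 'downsize', 'upsize', or 'keep' based on p95 CPU utilisation (%)."""
    p95 = percentile(cpu_samples, 95)
    if p95 < low:
        return "downsize"   # even the busy periods leave most capacity idle
    if p95 > high:
        return "upsize"     # the machine is regularly close to saturation
    return "keep"


print(rightsizing_advice([10, 12, 15, 20, 18, 25, 30, 22, 14, 16]))
```

Using a high percentile rather than the average avoids downsizing a machine that is mostly idle but has regular, legitimate peaks.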

2. Take Advantage of Reserved Instances

Most public cloud providers offer discounted pricing in exchange for long-term commitments: Reserved Instances (RIs) on AWS, Reservations on Azure, and committed use discounts on Google Cloud. If your workload is predictable and long-running, committing for one or three years can save up to roughly 70% compared to on-demand pricing.

This is ideal for production environments, baseline services, and other non-variable workloads.
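Whether a commitment pays off depends on how many hours the instance actually runs. The following sketch compares the two pricing modes and computes the break-even utilisation; the hourly rates are made-up inputs, and the 730-hour month is a common approximation.

```python
def reserved_savings(on_demand_hourly, reserved_hourly,
                     hours_used_per_month, hours_in_month=730):
    """Monthly savings from reserving (negative means on-demand is cheaper)."""
    on_demand_cost = on_demand_hourly * hours_used_per_month
    # A reservation is paid for every hour of the month, used or not.
    reserved_cost = reserved_hourly * hours_in_month
    return on_demand_cost - reserved_cost


def break_even_utilisation(on_demand_hourly, reserved_hourly):
    """Fraction of the month the instance must run for the reservation to pay off."""
    return reserved_hourly / on_demand_hourly


# Hypothetical rates: 1.00 per hour on demand, 0.50 per hour reserved.
print(reserved_savings(1.0, 0.5, hours_used_per_month=730))  # always-on workload
print(reserved_savings(1.0, 0.5, hours_used_per_month=200))  # part-time workload
print(break_even_utilisation(1.0, 0.5))
```

With these placeholder rates, the reservation only wins when the instance runs more than half of the month, which is why the approach suits steady baseline workloads.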

3. Auto-Scaling Based on Demand

Auto-scaling helps match computing resources with current demand. During off-peak hours, cloud services automatically scale down to reduce costs. When traffic spikes, resources scale up to maintain performance.

Implementing auto-scaling not only improves cost efficiency but also ensures reliability and customer satisfaction.
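One common way to decide how far to scale is a proportional rule: adjust the instance count so that the observed metric moves toward a target value. The sketch below uses that rule (the same shape as the formula documented for the Kubernetes Horizontal Pod Autoscaler); the function name, bounds, and sample numbers are illustrative.

```python
import math


def desired_instances(current, metric_value, target_value,
                      min_size=1, max_size=10):
    """Proportional scaling: size the fleet so the metric approaches the target."""
    raw = math.ceil(current * metric_value / target_value)
    # Clamp to the configured fleet limits.
    return max(min_size, min(max_size, raw))


print(desired_instances(4, metric_value=90, target_value=60))   # traffic spike
print(desired_instances(4, metric_value=15, target_value=60))   # off-peak
print(desired_instances(8, metric_value=120, target_value=60))  # capped at max_size
```

The minimum and maximum bounds matter for cost control: the maximum caps spending during a spike, and the minimum keeps enough capacity for reliability.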

4. Delete Unused or Orphaned Resources

Cloud environments often accumulate unused resources—volumes, snapshots, IP addresses, or idle virtual machines. These resources continue to incur charges even when not in use.

Make it a regular practice to:

Audit and remove orphaned resources

Clean up unattached storage volumes

Delete old snapshots and unused databases

Cloud management tools can automate these audits, helping keep your environment lean and cost-effective.
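An audit of this kind boils down to filtering an inventory. The sketch below works on plain dictionaries rather than a real cloud SDK; the field names (`attached_to`, `created`) and the 90-day snapshot age limit are assumptions chosen for illustration.

```python
from datetime import date, timedelta


def find_cleanup_candidates(volumes, snapshots, today, max_snapshot_age_days=90):
    """Return (unattached volume ids, stale snapshot ids) from an inventory."""
    unattached = [v["id"] for v in volumes if v.get("attached_to") is None]
    cutoff = today - timedelta(days=max_snapshot_age_days)
    stale = [s["id"] for s in snapshots if s["created"] < cutoff]
    return unattached, stale


# Hypothetical inventory export.
volumes = [
    {"id": "vol-a", "attached_to": "vm-1"},
    {"id": "vol-b", "attached_to": None},
]
snapshots = [
    {"id": "snap-old", "created": date(2024, 1, 10)},
    {"id": "snap-new", "created": date(2024, 6, 1)},
]

print(find_cleanup_candidates(volumes, snapshots, today=date(2024, 6, 15)))
```

In practice the output would feed a review step rather than automatic deletion, since an unattached volume may still hold data someone needs.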

5. Use Cost Monitoring and Alerting Tools

Every major public cloud provider offers native cost management tools:

AWS Cost Explorer

Azure Cost Management + Billing

Google Cloud Billing Reports

These tools help track spending in real time, break down costs by service, and identify usage trends. You can also set budgets and receive alerts when spending approaches limits, helping prevent surprise bills.
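The alerting logic behind a budget is simple enough to sketch. The thresholds (50%, 80%, 100%) and the linear month-end projection below are illustrative assumptions, not the behaviour of any specific provider's tool.

```python
def triggered_alerts(spend_to_date, budget, thresholds=(0.5, 0.8, 1.0)):
    """Return the budget thresholds that current spending has reached."""
    used = spend_to_date / budget
    return [t for t in thresholds if used >= t]


def projected_month_end_spend(spend_to_date, day_of_month, days_in_month):
    """Naive linear forecast: assume the daily run rate stays constant."""
    return spend_to_date / day_of_month * days_in_month


print(triggered_alerts(850, budget=1000))
print(projected_month_end_spend(400, day_of_month=10, days_in_month=30))
```

Alerting on the projection as well as on actual spend gives earlier warning: in the second example, spending is only at 40% of a 1000 budget on day 10 but is on track to exceed it.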

6. Implement Tagging for Cost Allocation

Properly tagging resources makes it easier to identify who is spending what within your organization. With tagging, you can allocate costs by:

Project

Department

Client

Environment (e.g., dev, test, prod)

This visibility empowers teams to take ownership of their cloud spending and look for optimization opportunities.
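Once resources carry tags, cost allocation is a group-by over billing line items. The sketch below assumes a simplified line-item shape (`cost` plus a `tags` dictionary), which is hypothetical and much leaner than a real billing export.

```python
from collections import defaultdict


def cost_by_tag(line_items, tag_key):
    """Sum cost per value of the given tag; untagged items are grouped together."""
    totals = defaultdict(float)
    for item in line_items:
        value = item.get("tags", {}).get(tag_key, "untagged")
        totals[value] += item["cost"]
    return dict(totals)


# Hypothetical billing line items.
line_items = [
    {"cost": 10.0, "tags": {"environment": "prod", "project": "checkout"}},
    {"cost": 5.0, "tags": {"environment": "dev", "project": "checkout"}},
    {"cost": 2.5, "tags": {"environment": "prod"}},
    {"cost": 4.0},
]

print(cost_by_tag(line_items, "environment"))
print(cost_by_tag(line_items, "project"))
```

Tracking the size of the "untagged" bucket is a useful side effect, since it shows how much spending still has no owner.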

7. Move to Serverless and Managed Services

In many cases, serverless and managed services provide a more cost-efficient alternative to traditional infrastructure.

Consider using:

Azure Functions or AWS Lambda for event-driven applications

Cloud SQL or Azure SQL Database for managed relational databases

Firebase or App Engine for mobile and web backends

These services eliminate the need for server provisioning and maintenance while offering a pay-as-you-go pricing model.

8. Choose the Right Storage Class

Public cloud providers offer different storage classes based on access frequency:

Hot storage for frequently accessed data

Cool or infrequent access storage for less-used files

Archive storage for long-term, rarely accessed data

Storing data in the appropriate class ensures you don’t pay premium prices for data you seldom access.
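A lifecycle rule that moves data between these classes can be expressed as a small decision function. The age thresholds below (30 and 180 days) are illustrative assumptions; real policies should reflect retrieval fees and minimum storage durations for the provider in question.

```python
def pick_storage_class(days_since_last_access, cool_after=30, archive_after=180):
    """Choose a storage tier from how recently the object was accessed."""
    if days_since_last_access >= archive_after:
        return "archive"
    if days_since_last_access >= cool_after:
        return "cool"
    return "hot"


for age in (3, 45, 400):
    print(age, pick_storage_class(age))
```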

9. Leverage Spot and Preemptible Instances

Spot instances (AWS) or preemptible VMs (Google Cloud) offer up to 90% savings compared to on-demand pricing. These instances are ideal for:

Batch processing

Testing environments

Fault-tolerant applications

Since these instances can be interrupted, they’re not suitable for every workload, but when used correctly, they can slash costs significantly.
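What makes a workload tolerant of interruption is usually checkpointing: record progress as you go so that a replacement instance can resume instead of starting over. The sketch below shows the pattern with an in-memory checkpoint; the `interrupt_after` helper is a hypothetical stand-in for a real interruption notice, and a real job would persist the checkpoint to durable storage.

```python
def run_batch(items, process, checkpoint, interrupted):
    """Process items starting at checkpoint['next']; stop early if interrupted()."""
    results = checkpoint.setdefault("results", [])
    index = checkpoint.setdefault("next", 0)
    while index < len(items):
        if interrupted():
            break  # instance is being reclaimed; progress is already recorded
        results.append(process(items[index]))
        index += 1
        checkpoint["next"] = index  # a real job would write this to durable storage
    return checkpoint


def interrupt_after(n):
    """Hypothetical interruption signal that fires after n checks."""
    calls = {"count": 0}

    def check():
        calls["count"] += 1
        return calls["count"] > n

    return check


def square(x):
    return x * x


items = [1, 2, 3, 4]
state = run_batch(items, square, {}, interrupt_after(2))    # interrupted part-way
partial = {"next": state["next"], "results": list(state["results"])}
state = run_batch(items, square, state, lambda: False)       # resumed later
print(partial, state)
```

Only the items processed after the last checkpoint are lost on interruption, which keeps the rework cost small relative to the discount.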

10. Train Your Teams

Cost optimization isn’t just a technical task—it’s a cultural one. When developers, DevOps, and IT teams understand how cloud billing works, they make smarter decisions.

Regular training and workshops can:

Increase awareness of cost-effective architectures

Encourage the use of automation tools

Promote shared responsibility for cloud cost management

Final Thoughts

Public cloud computing offers unmatched agility and scalability, but without deliberate cost control, organizations can face financial inefficiencies. By right-sizing, leveraging automation, utilizing reserved instances, and fostering a cost-aware culture, companies can enjoy the full benefits of the cloud without overspending.

Cloud optimization is a continuous journey, not a one-time fix. Regular reviews and proactive planning will keep your cloud costs aligned with your business goals.


Strategic Database Solutions for Modern Business Needs

Today’s businesses rely on secure, fast, and scalable systems to manage data across distributed teams and environments. As demand for flexibility and 24/7 support increases, database administration services have become central to operational stability. These services go far beyond routine backups—they include performance tuning, capacity planning, recovery strategies, and compliance support.

Adopting Agile Support with Flexible Engagement Models

Companies under pressure to scale operations without adding internal overhead are increasingly turning to outsourced database administration. This approach provides round-the-clock monitoring, specialised expertise, and faster resolution times, all without the cost of hiring full-time staff. With database workloads becoming more complex, outsourced solutions help businesses keep pace with technology changes while controlling costs.

What Makes Outsourced Services So Effective

The benefit of using outsourced database administration services lies in having instant access to certified professionals who are trained across multiple platforms—whether Oracle, SQL Server, PostgreSQL, or cloud-native options. These experts can handle upgrades, patching, and diagnostics with precision, allowing internal teams to focus on core business activities instead of infrastructure maintenance.

Cost-Effective Performance Management at Scale

Companies looking to outsource DBA roles often do so to reduce capital expenditure and increase operational efficiency. Outsourcing allows businesses to pay only for the resources they need, when they need them, without being tied to long-term contracts or dealing with the complexities of recruitment. This flexibility is especially valuable for businesses managing seasonal spikes or undergoing digital transformation projects.

Minimizing Downtime Through Proactive Monitoring

Modern database administration services go beyond traditional support models by offering real-time health checks, automatic alerts, and predictive performance analysis. These features help identify bottlenecks or security issues before they impact users. Proactive support allows organisations to meet service-level agreements (SLAs) and deliver consistent performance to customers and internal stakeholders.

How External Partners Fill Critical Skill Gaps

Working with experienced database administration outsourcing companies can close gaps in internal knowledge, especially when managing hybrid or multi-cloud environments. These companies typically have teams with varied technical certifications and deep domain experience, making them well-equipped to support both legacy systems and modern architecture. The result is stronger resilience and adaptability in managing database infrastructure.

Supporting Business Continuity with Professional Oversight

Efficient database administration includes everything from setting up new environments to handling failover protocols and disaster recovery planning. With dedicated oversight, businesses can avoid unplanned outages and meet compliance requirements, even during migrations or platform upgrades. The focus on stability and scalability helps maintain operational continuity in high-demand settings.


Amazon RDS DB Engines | Choose the Right Relational Database

Selecting the right Amazon RDS database engine is crucial for achieving optimal performance, scalability, and functionality for your applications. Amazon RDS offers a variety of relational database engines, each tailored to specific needs and use cases. Understanding these options helps you make an informed decision that aligns with your project requirements.

Types of Amazon RDS Databases:

- Amazon Aurora: A high-performance, fully managed database compatible with MySQL and PostgreSQL. Aurora is known for its speed, reliability, and scalability, making it suitable for high-demand applications.
- MySQL: An open-source database that is widely used for its flexibility and ease of use. It is ideal for web applications, content management systems, and moderate traffic workloads.
- MariaDB: A fork of MySQL with additional features and improved performance. MariaDB is well-suited for users seeking advanced capabilities and enhanced security.
- PostgreSQL: Known for its advanced data types and extensibility, PostgreSQL is perfect for applications requiring complex queries, data integrity, and sophisticated analytics.
- Microsoft SQL Server: An enterprise-grade database offering robust reporting and business intelligence features. It integrates seamlessly with other Microsoft products and is ideal for large-scale applications.

When and Where to Choose Each Engine:

- Amazon Aurora: Choose Aurora for applications that demand high availability, fault tolerance, and superior performance, such as high-traffic web platforms and enterprise systems.
- MySQL: Opt for MySQL if you need a cost-effective, open-source solution with strong community support for web applications and simple data management.
- MariaDB: Select MariaDB for its advanced features and enhanced performance, especially if you require a more capable alternative to MySQL for web applications and data-intensive systems.
- PostgreSQL: Use PostgreSQL for applications needing complex data operations, such as data warehousing, analytical applications, and scenarios where advanced querying is essential.
- Microsoft SQL Server: Ideal for enterprise environments needing extensive business intelligence, reporting, and integration with other Microsoft products. Choose SQL Server for complex enterprise applications and large-scale data management.

Use Cases:

- Amazon Aurora: High-traffic e-commerce sites, real-time analytics, and mission-critical applications requiring high performance and scalability.
- MySQL: Content management systems, small to medium-sized web applications, and moderate data workloads.
- MariaDB: Advanced web applications, high-performance data systems, and scenarios requiring enhanced security and features.
- PostgreSQL: Complex business applications, financial systems, and applications requiring advanced data manipulation and integrity.
- Microsoft SQL Server: Large-scale enterprise applications, business intelligence platforms, and complex reporting needs.

Key Benefits of Choosing the Right Amazon RDS Database:

1. Optimized Performance: Select an engine that matches your performance needs, ensuring efficient data processing and application responsiveness.
2. Scalability: Choose a database that scales seamlessly with your growing data and traffic demands, avoiding performance bottlenecks.
3. Cost Efficiency: Find a solution that fits your budget while providing the necessary features and performance.
4. Enhanced Features: Leverage advanced capabilities specific to each engine to meet your application's unique requirements.
5. Simplified Management: Benefit from managed services that reduce administrative tasks and streamline database operations.

Conclusion:

Choosing the right Amazon RDS database engine is essential for optimizing your application’s performance and scalability. By understanding the types of databases available and their respective benefits, you can make a well-informed decision that supports your project's needs and ensures a robust, efficient, and cost-effective database solution. Explore Amazon RDS to find the perfect database engine for your application.


Maximize Business Performance with a Dedicated Server with Windows – Delivered by CloudMinister Technologies

In the era of digital transformation, having full control over your hosting environment is no longer optional—it’s essential. Businesses that prioritize security, speed, and customization are turning to Dedicated servers with Windows as their go-to infrastructure solution. When you choose CloudMinister Technologies, you get more than just a server—you get a strategic partner dedicated to your growth and uptime.

What is a Dedicated Server with Windows?

A Dedicated server with Windows is a physical server exclusively assigned to your organization, running on the Windows Server operating system. Unlike shared hosting or VPS, all the resources—CPU, RAM, disk space, and bandwidth—are reserved solely for your use. This ensures maximum performance, enhanced security, and total administrative control.

Key Benefits of a Dedicated Server with Windows

1. Total Resource Control

All server resources are 100% yours. No sharing, no interference—just consistent, high-speed performance tailored to your workload.

2. Full Administrative Access

You get full root/administrator access, giving you the freedom to install applications, manage databases, configure settings, and automate processes.

3. Better Compatibility with Microsoft Ecosystem

Run all Microsoft applications—SQL Server, Exchange, SharePoint, IIS, and ASP.NET—without compatibility issues.

4. Advanced Security Options

Use built-in Windows security features like BitLocker encryption, Windows Defender, and group policy enforcement to keep your data safe.

5. Remote Desktop Capability

Access your server from anywhere using Remote Desktop Protocol (RDP)—ideal for managing operations on the go.

6. Seamless Software Licensing

With CloudMinister Technologies, Windows licensing is bundled with your plan, ensuring legal compliance and cost savings.

7. Scalability Without Downtime

Need to upgrade? Add more RAM, switch to SSDs, or increase bandwidth—without migrating to another server or experiencing downtime.

Why Choose CloudMinister Technologies for Windows Dedicated Servers?

At CloudMinister Technologies, we combine performance with personalized service. Our infrastructure is engineered to support the demands of startups, growing businesses, and large enterprises alike.

Our Competitive Edge:

100% Custom Configurations: Choose your ideal specs or consult with our engineers to build a server optimized for your application or business model.

Free Server Management: We manage your OS, patches, updates, firewalls, backups, and security, so you can focus on your business, not your backend.

High Uptime Guarantee: With our 99.99% uptime commitment and redundant systems, your server stays online, always.

Modern Data Centers: All servers are housed in Tier III or higher data centers with 24/7 surveillance, redundant power, cooling, and robust firewalls.

Rapid Deployment: Get your Dedicated server with Windows up and running quickly with pre-configured setups or same-day custom deployment.

Dedicated 24/7 Support: Our expert team is available any time, day or night, to troubleshoot, consult, or provide emergency support.
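To put an uptime percentage such as the 99.99% figure above into perspective, it helps to convert it into an allowed-downtime budget. The short sketch below does that arithmetic; it is a generic calculation and says nothing about how any particular provider measures or credits downtime.

```python
def allowed_downtime_minutes(uptime_percent, period_hours):
    """Minutes of downtime permitted in a period at the given uptime percentage."""
    return (100.0 - uptime_percent) / 100.0 * period_hours * 60.0


per_year = allowed_downtime_minutes(99.99, 365 * 24)
per_30_days = allowed_downtime_minutes(99.99, 30 * 24)
print(f"{per_year:.2f} minutes per year, {per_30_days:.2f} minutes per 30 days")
```

At 99.99%, the budget works out to about 52.56 minutes per year, or about 4.32 minutes in a 30-day month.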

Additional Features to Boost Your Operations

Automated Daily Backups: Protect your data and ensure business continuity with secure, regular backups.

DDoS Protection: Stay secure with advanced protection from distributed denial-of-service attacks.

Multiple OS Choices: Prefer Windows Server 2016, 2019, or 2022? Choose what suits your stack best.

Control Panel Options: Get support for cPanel, Plesk, or a custom dashboard for simplified server management.

Private VLAN and IPMI Access: Enjoy better isolation and direct console access for advanced troubleshooting.

Call to Action: Start with CloudMinister Technologies Today

Your business deserves more than just a server—it deserves a partner who understands performance, uptime, and scalability. With a Dedicated server with Windows from CloudMinister Technologies, you're guaranteed a seamless hosting experience backed by unmatched support and reliability.

Don’t wait for slow speeds or security issues to hold you back.

Upgrade to a Dedicated Windows Server today.

Visit www.cloudminister.com to view plans, or contact our solutions team at [email protected] to discuss your custom setup.


Introduction to Microsoft Azure Basics: A Beginner's Guide

Cloud computing has changed the way businesses run, offering flexibility, scalability, and room for innovation that on-premises infrastructure struggles to match. Microsoft Azure, one of the leading cloud providers, is a robust platform whose services range from virtual machines and AI to database management and cybersecurity. Whether you are a developer, an IT professional, or simply curious about the cloud, learning Azure fundamentals can be your gateway into an exciting and future-proof field. In this beginner's tutorial, we'll cover what Azure is, its fundamental concepts, and the most important services to know as you begin your cloud journey.

What is Microsoft Azure?

Microsoft Azure is a cloud computing platform developed by Microsoft. It delivers cloud-based services for computing, analytics, storage, networking, and more. You select the services you need and use them to build new applications or run existing ones. Launched in 2010, Azure has grown rapidly and now supports hybrid cloud environments, artificial intelligence, and DevOps, making it a common choice for both enterprises and startups.

Why Learn Azure?

• Market Demand: Azure skills are in demand because enterprises use the platform heavily.

• Career Growth: Azure knowledge and certifications can be a stepping stone to becoming a cloud engineer, solutions architect, or DevOps engineer.

• Scalability & Flexibility: Azure solutions suit businesses of all sizes, from startups to Fortune 500 companies.

• Integration with Microsoft Tools: Azure integrates smoothly with offerings such as Office 365, Active Directory, and Windows Server.

Fundamental Concepts of Microsoft Azure

Before looking at individual services, it helps to understand a few core concepts that underpin Azure.

1. Azure Regions and Availability Zones

Azure is available in geographic areas around the world, each divided into regions. Within a region, availability zones (physically separate data center locations) provide redundancy and resiliency.

2. Resource Groups

A resource group is a container for related Azure resources, such as virtual machines, databases, and storage accounts. It helps you organize and manage assets that share the same lifecycle.

3. Azure Resource Manager (ARM)

ARM is Azure's deployment and management service. It lets you manage resources in a uniform way through templates, REST APIs, or command-line tools.

4. Pay-As-You-Go Model

Azure uses pay-as-you-go pricing, meaning you pay only for what you use. It also offers reserved instances and spot pricing to help optimize costs.
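To give a feel for the template-based deployment that ARM supports, the sketch below builds a minimal ARM template as a Python dictionary and prints it as JSON. The storage account name and region are placeholder values, and the `apiVersion` shown is one published version for storage accounts; a real template would normally add parameters and outputs.

```python
import json


def storage_account_template(account_name, location="eastus"):
    """Build a minimal ARM template declaring a single storage account."""
    return {
        "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",
        "contentVersion": "1.0.0.0",
        "resources": [
            {
                "type": "Microsoft.Storage/storageAccounts",
                "apiVersion": "2022-09-01",
                "name": account_name,       # placeholder; must be globally unique
                "location": location,       # placeholder region
                "sku": {"name": "Standard_LRS"},
                "kind": "StorageV2",
            }
        ],
    }


template = storage_account_template("examplestorage001")
print(json.dumps(template, indent=2))
```

The resulting JSON file is deployed into a resource group, so the template describes what should exist while the resource group decides where it lives and what it is managed together with.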

Top Azure Services That Every Beginner Should Know

Azure has over 200 services. As a beginner, focus on the most significant ones in categories such as compute, storage, networking, and databases.

1. Azure Virtual Machines (VMs)

Azure VMs are flexible compute resources that let you run Windows- or Linux-based workloads. A VM is essentially a computer in the cloud.

Use Cases:

• Hosting applications

• Running development environments

• Running legacy applications

2. Azure App Service

A fully managed service for building, running, and scaling web applications and APIs.

Why Use It?

• Scales automatically with demand while staying highly available

• Supports multiple languages (.NET, Java, Node.js, Python)

• Built-in DevOps and CI/CD integration

3. Azure Blob Storage

Blob (Binary Large Object) storage is suited to unstructured data such as images, videos, and backups.

Key Features:

• Highly scalable and secure

• Supports data lifecycle management

• Accessible through a REST API

4. Azure SQL Database

A managed relational database service built on Microsoft SQL Server.

Benefits:

• Automatic updates and backups

• Built-in high availability

• Hyperscale and serverless tiers

5. Azure Functions

A serverless compute service that runs your code in response to events.

Example Use Cases:

• Workflow automation

• Processing file uploads

• Handling HTTP requests

6. Azure Virtual Network (VNet)

A VNet is similar to a traditional network in your on-premises environment, but it runs in Azure.

Use Cases:

• Secure communication among resources

• VPN and ExpressRoute connectivity

• Subnet segmentation for better control

Getting Started with Azure

1. Create an Azure Account

Start with a free Azure account, which includes a $200 credit for the first 30 days and 12 months of free-tier services.

2. Explore the Azure Portal

The Azure Portal is a web-based graphical interface for creating, configuring, and managing Azure resources.

3. Use Azure CLI or PowerShell

If you prefer the command line, Azure CLI and Azure PowerShell let you work with resources programmatically.

4. Learn with Microsoft Learn

Microsoft Learn offers interactive, role-based learning paths tailored to new users.

Major Azure Management Tools

Getting familiar with the following tools will improve your ability to manage resources:

Azure Monitor

Collects, analyzes, and acts on telemetry data from your Azure infrastructure.

Azure Advisor

Offers personalized best-practice recommendations to improve performance, availability, and cost-effectiveness.

Azure Cost Management + Billing

Helps you track spending and forecast future costs so you stay within budget.

Security and Identity in Azure

Azure places a strong emphasis on security and compliance.

1. Azure Active Directory (Azure AD)

A cloud identity and access management service, since renamed Microsoft Entra ID. You use it to manage user identities and their access to Azure services.

2. Role-Based Access Control (RBAC)

Lets you grant users, groups, and applications permissions on specific resources.

3. Azure Key Vault

Securely stores keys, secrets, and certificates.
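The idea behind role-based access control can be shown with a toy model: a role assignment ties a principal to a role at a scope, and the permission is inherited by everything beneath that scope. The sketch below is a deliberately simplified illustration, not Azure's actual evaluation logic; the action names are invented, and only the built-in role names (Reader, Contributor, Owner) and the scope path style mirror Azure.

```python
# Simplified action sets; real Azure roles list many fine-grained actions.
ROLE_ACTIONS = {
    "Reader": {"read"},
    "Contributor": {"read", "write", "delete"},
    "Owner": {"read", "write", "delete", "assign_roles"},
}


def is_allowed(assignments, principal, action, resource_scope):
    """True if any assignment at or above the resource's scope grants the action."""
    for who, role, scope in assignments:
        in_scope = (resource_scope == scope
                    or resource_scope.startswith(scope.rstrip("/") + "/"))
        if who == principal and in_scope and action in ROLE_ACTIONS[role]:
            return True
    return False


# Hypothetical assignments: (principal, role, scope).
assignments = [
    ("alice", "Reader", "/subscriptions/s1"),
    ("bob", "Contributor", "/subscriptions/s1/resourceGroups/rg-app"),
]

vm = "/subscriptions/s1/resourceGroups/rg-app/providers/Microsoft.Compute/virtualMachines/web1"
print(is_allowed(assignments, "alice", "read", vm))   # inherited from the subscription
print(is_allowed(assignments, "alice", "write", vm))  # Reader cannot write
print(is_allowed(assignments, "bob", "write", vm))    # Contributor on the resource group
```

Assigning roles at the narrowest scope that works, as with the second assignment, is what keeps permissions from becoming excessive.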

Best Practices for Azure Beginners

• Start Small: Begin with straightforward services like Virtual Machines, Blob Storage, and Azure SQL Database.

• Tagging: Apply metadata tags to resources for better organization and cost tracking.

• Monitor Early: Use Azure Monitor and alerts to track performance and anomalies.

• Secure Early: Implement firewalls, RBAC, and encryption from the early stages.

• Automate: Explore automation through Azure Logic Apps, ARM templates, and Azure DevOps.

Common Mistakes to Avoid

• Neglecting cost management and overprovisioning resources.

• Not backing up important data.

• Not implementing adequate monitoring or alerting.

• Granting excessive permissions in Azure AD.

• Using default settings without considering the security implications.

Conclusion

Microsoft Azure is a powerful, general-purpose platform that supports a wide variety of scenarios, from a small web hosting setup to a sophisticated enterprise solution. Success in the cloud starts with understanding the basics covered here: core concepts, foundational services, and management tools. And that's just the start. As you go further you'll encounter more complex tools and capabilities, but a strong foundation is what lets you work through Azure with confidence. Go ahead and claim your free account, start experimenting, and join the cloud revolution.

Website: https://www.icertglobal.com/course/developing-microsoft-azure-solutions-70-532-certification-training/Classroom/80/3395


The Power of Azure: Transforming Businesses with Cloud and Hybrid Solutions

Cloud computing has become a fundamental building block for modern enterprises. By providing on-demand access to computing resources, businesses can deploy applications faster, manage data more securely, and reduce infrastructure overhead. Microsoft Azure, in particular, stands out as a comprehensive cloud platform offering a rich suite of services for development, analytics, security, and more. This article explores the growing importance of Azure cloud solutions, delves into the concept of hybrid deployments, and shares best practices for organizations seeking agility and scalability in a competitive market.

1. The Rise of Cloud Computing

Over the last decade, cloud computing has reshaped how companies think about technology. Rather than maintaining physical servers on-site, businesses can tap into virtualized resources from data centers distributed worldwide. This shift not only cuts capital expenses but also enables rapid innovation and global reach. As more organizations adopt flexible work models and expand their digital services, cloud adoption continues to accelerate.

Azure has become a go-to choice because it integrates seamlessly with Microsoft’s ecosystem. Businesses already using Office 365 or other Microsoft products find the transition smoother, benefiting from a unified environment that covers email hosting, collaboration tools, and data management solutions.

2. Exploring Azure Cloud Solutions

The term Azure cloud solutions encompasses a vast range of services. Whether you’re looking to build a simple e-commerce website or implement advanced AI-driven analytics, Azure offers tools and frameworks that can be tailored to your needs. Key services include:

Compute — Virtual machines, containers, and serverless functions that let you deploy and scale applications rapidly.

Storage — Durable storage solutions for structured and unstructured data, backed by high availability and redundancy.

Networking — Virtual networks, load balancers, and gateways ensuring secure and optimized communication between services.

Databases — Fully managed SQL and NoSQL databases with built-in scalability features.

DevOps — Continuous integration and continuous deployment (CI/CD) pipelines, along with monitoring and logging tools.

These services interconnect, helping teams quickly prototype, launch, and refine solutions without dealing with underlying hardware complexities.

3. Embracing Hybrid: Azure Hybrid Cloud Solutions

Some organizations prefer retaining certain parts of their infrastructure on-premises, whether for compliance reasons, latency requirements, or existing investments in data centers. Azure hybrid cloud solutions make it easier to bridge on-premises systems with cloud-based services, creating a seamless environment for data and application management.

This approach enables companies to run sensitive workloads locally while offloading more flexible processes — like data analytics or large-scale testing — to the cloud. Hybrid solutions also support edge computing scenarios where real-time data processing needs to occur close to the source, but you still want centralized oversight and analytics in the cloud.

4. Key Benefits of Azure for Businesses

Scalability: Azure can handle sudden spikes in usage without compromising performance. This elasticity ensures that applications remain responsive, even under unforeseen load increases.

Cost Management: Instead of investing heavily in hardware, businesses pay for the exact resources they use. Azure’s built-in monitoring tools provide transparency into consumption, helping organizations optimize budgets.

Global Reach: With data centers spanning multiple continents, Azure supports geo-redundancy and localized hosting options. Companies can deploy workloads closer to their user base, reducing latency and improving experience.

Security and Compliance: Azure adheres to stringent security measures and various regulatory standards (e.g., GDPR, HIPAA). These built-in protections and certifications help businesses meet compliance requirements more easily.

Integration with Existing Tools: Many enterprises rely on Microsoft products. Azure’s native compatibility streamlines integration, making migrations less disruptive and expansions more intuitive.

5. Ensuring Security and Compliance

Security remains a top concern for cloud adopters. Azure addresses this through multiple layers of protection, including:

Identity and Access Management — Azure Active Directory grants granular control over user access to applications and resources.

Network Security — Tools like Azure Firewall and Network Security Groups help safeguard data in transit.

Threat Detection — Azure Security Center continuously monitors for potential threats, offering real-time recommendations.

In regulated industries, compliance is non-negotiable. Azure meets stringent standards, from ISO certifications to country-specific mandates. Businesses can leverage these compliance features to maintain trust with customers and regulators.

6. Best Practices for Implementation

Assess Current Workloads: Conduct a thorough audit of your existing infrastructure to identify which applications, databases, or services are prime candidates for the cloud.

Start Small: Begin with a pilot project or a non-critical workload. This allows teams to gain familiarity with Azure’s environment and refine processes before scaling up.

Leverage Automation: Tools like Azure Resource Manager templates and Azure DevOps pipelines help automate deployment, minimize manual errors, and foster consistency.

Monitor and Optimize: Continuously track performance metrics. Use Azure Monitor, Application Insights, or third-party solutions to identify bottlenecks and ensure resources align with usage patterns.

Train Teams: Equip your IT staff and developers with the necessary Azure certifications or training programs, ensuring they can fully utilize available features.

7. Real-World Use Cases

E-Commerce Growth: Retailers rely on Azure to handle flash sales and seasonal traffic spikes. By autoscaling resources, they avoid site crashes and maintain a smooth shopping experience.

Healthcare Data Management: Hospitals store patient records in secure cloud databases while running advanced analytics to improve diagnostic accuracy. Hybrid deployments allow critical data to stay on-premises for compliance, with aggregated data processed in the cloud.

IoT Implementations: Manufacturers monitor machinery and collect telemetry data in real-time, leveraging Azure IoT services for predictive maintenance and optimization.
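The autoscaling behaviour described in the e-commerce example can be sketched with a simple proportional rule: scale the instance count by the ratio of observed load to target load, then clamp it to configured limits. This is a generic illustration, not Azure's autoscale algorithm; the function name, the percentage inputs, and the instance limits are all hypothetical.

```python
import math


def desired_instances(current: int, observed_pct: int, target_pct: int,
                      min_instances: int = 2, max_instances: int = 20) -> int:
    """Return the instance count that would bring utilisation near the target.

    Hypothetical proportional rule: utilisation values are whole percentages
    averaged across the currently running instances.
    """
    if current < 1 or target_pct <= 0:
        raise ValueError("current must be >= 1 and target_pct must be > 0")
    # Total load is current * observed_pct; spread it so each instance sits at target_pct.
    raw = math.ceil(current * observed_pct / target_pct)
    # Clamp to the configured floor and ceiling.
    return max(min_instances, min(max_instances, raw))


# Flash sale: 4 instances running at 90% CPU against a 60% target.
flash_sale = desired_instances(current=4, observed_pct=90, target_pct=60)
# Quiet period: 10 instances running at 20% CPU against the same target.
quiet_period = desired_instances(current=10, observed_pct=20, target_pct=60)
print(flash_sale, quiet_period)
```

The floor and ceiling matter in practice: the floor keeps spare capacity for sudden spikes, and the ceiling caps spend if a metric misbehaves.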

8. Looking Ahead: The Future of Azure

Microsoft continues to invest heavily in Azure, focusing on areas like AI, machine learning, and quantum computing. Upcoming features aim to accelerate development cycles, enhance security, and offer deeper integrations with open-source tools. As edge computing gains momentum, expect Azure services that cater to processing closer to data sources — reducing latency and enabling new, data-driven innovations.

Taken together, the use cases above show how Azure’s robust feature set meets diverse industry requirements, whether purely in the cloud or combined in a hybrid model.


FinOps Hub 2.0 Removes Cloud Waste With Smart Analytics

FinOps Hub 2.0

As Google Cloud customers have used FinOps Hub to optimise their spending, one piece of feedback has come up more and more: businesses often lack clear insight into resource consumption, which creates a blind spot. DevOps users, meanwhile, have tools and utilisation indicators to identify waste.

The latest State of FinOps 2025 Report emphasises waste reduction and workload optimisation as FinOps priorities. If customers don't understand their consumption, workloads and apps are hard to optimise. Why buy a committed use discount for compute cores you may not be fully using?

Using resources you already pay for more efficiently is generally the easiest change customers can make. The improved FinOps Hub in 2025 focuses on surfacing optimisation opportunities to help you find, highlight, and eliminate unnecessary spending.

Discover waste: FinOps Hub 2.0 now includes utilisation data to identify optimisation opportunities.

FinOps Hub 2.0, released at Google Cloud Next 2025, highlights resource utilisation statistics so you can discover waste and take immediate action. Waste can be an overprovisioned virtual machine (VM) that is barely used at 5% utilisation, an underprovisioned GKE cluster that is running hot at 110% utilisation and may fail, or managed resources like Cloud Run instances that are configured suboptimally or never used.

FinOps users can now view the most expensive waste category in a single heatmap per service or App Hub application. FinOps Hub not only identifies waste but also highlights potential cost savings for Cloud Run, Compute Engine, Kubernetes Engine, and Cloud SQL.
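The waste categories above (barely used, running hot, never used) amount to a classification over utilisation data. The following is a minimal sketch of that idea, not how FinOps Hub itself works: the thresholds, the `Resource` type, and the resource names are assumptions made up for illustration.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical thresholds for illustration; not values used by FinOps Hub.
IDLE_BELOW = 0.10   # under 10% of provisioned capacity counts as barely used
HOT_ABOVE = 1.00    # over 100% means demand exceeds what was provisioned


@dataclass
class Resource:
    name: str
    utilisation: Optional[float]  # fraction of provisioned capacity; None = never used


def classify(resource: Resource) -> str:
    """Map a utilisation reading onto one of the waste categories."""
    if resource.utilisation is None:
        return "unused"
    if resource.utilisation < IDLE_BELOW:
        return "overprovisioned"
    if resource.utilisation > HOT_ABOVE:
        return "underprovisioned"
    return "ok"


# Made-up fleet mirroring the examples in the text: a VM at 5%, a cluster at 110%.
fleet = [
    Resource("vm-batch-1", 0.05),
    Resource("gke-prod", 1.10),
    Resource("run-legacy", None),
    Resource("sql-main", 0.60),
]
report = {r.name: classify(r) for r in fleet}
for name, status in report.items():
    print(f"{name}: {status}")
```

Grouping such a report by service and weighting each entry by its cost would give the raw material for a heatmap like the one described.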

Highlight waste: FinOps Hub uses Gemini Cloud Assist for optimisation and engineering.

What arguably makes this version a 2.0 is that it uses Gemini Cloud Assist to speed up FinOps Hub's most time-consuming tasks. Between January 2024 and January 2025, Gemini Cloud Assist saved clients over 100,000 FinOps hours a year by providing customised cost reports and synthesising insights.

Google Cloud has given FinOps Hub two ways to simplify and automate processes using Gemini Cloud Assist. First, FinOps users can now get embedded optimisation insights on the hub, such as cost reports, so they don't have to find the optimisation "needle in the haystack". Second, Gemini Cloud Assist can now assemble the most significant waste insights and send them to your engineering teams for speedy fixes.

Eliminate waste: Give IT solution owners a new IAM role that authorises them to view and act on optimisation opportunities.

Tech solution owners can now access the billing console, which is FinOps' most anticipated feature. It displays Gemini Cloud Assist and FinOps data for all of their projects in one window. With multi-project views in the billing console, you can give a department that uses only a subset of projects for its infrastructure access to FinOps Hub or cost reports. The department sees all of its data in one view without receiving any additional billing data.

The new Project Billing Costs Manager IAM role (or granular permissions) provides multi-project views. You can sign up for the private preview of these new permissions. With finer-grained access controls, you can make full use of FinOps solutions across your organisation.

“With clouds overgrown, like winter’s old grime, spring clean your servers, save dollars and time.” Clean your cloud infrastructure with FinOps Hub 2.0 and Gemini Cloud Assist this spring. Or so Gemini says.

#technology#technews#govindhtech#news#technologynews#FinOps Hub 2.0#FinOps Hub#Hub 2.0#FinOps#Google Cloud Next 2025#Gemini Cloud Assist


In-Memory Computing Market Landscape: Opportunities and Competitive Insights 2032

The In-Memory Computing Market was valued at USD 10.9 Billion in 2023 and is expected to reach USD 45.0 Billion by 2032, growing at a CAGR of 17.08% from 2024 to 2032.
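As a quick sanity check, the headline figures can be tested against the compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The sketch below assumes nine compounding periods (2023 as the base year, 2032 as the final year); the function names are illustrative only.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.17 means 17%)."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `rate` once per year for `years` years."""
    return start_value * (1 + rate) ** years


YEARS = 2032 - 2023  # assumption: 2023 is the base year, giving nine periods

# Rate implied by the two reported market sizes (USD Billion).
implied_rate = cagr(10.9, 45.0, YEARS)
# Market size implied by the reported 17.08% rate.
projected_2032 = project(10.9, 0.1708, YEARS)

print(f"Implied CAGR: {implied_rate:.2%}")
print(f"Projected 2032 value: USD {projected_2032:.1f} Billion")
```

Under that assumption the implied rate comes out at roughly 17% and the projection lands close to USD 45 Billion, so the three reported numbers agree within rounding.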

The in-memory computing (IMC) market is experiencing rapid expansion, driven by the growing demand for real-time data processing, AI, and big data analytics. Businesses across industries are leveraging IMC to enhance performance, reduce latency, and accelerate decision-making. As digital transformation continues, organizations are adopting IMC solutions to handle complex workloads with unprecedented speed and efficiency.

The in-memory computing market continues to thrive as enterprises seek faster, more scalable, and cost-effective solutions for managing massive data volumes. Traditional disk-based storage systems are being replaced by IMC architectures that leverage RAM, flash memory, and advanced data grid technologies to enable high-speed computing. From financial services and healthcare to retail and manufacturing, industries are embracing IMC to gain a competitive edge in the era of digitalization.

Get Sample Copy of This Report: https://www.snsinsider.com/sample-request/3570

Key Market Players:

SAP SE – SAP HANA

IBM – IBM Db2 with BLU Acceleration

Microsoft – Azure SQL Database In-Memory

Oracle Corporation – Oracle TimesTen In-Memory Database

Intel – Intel Optane DC Persistent Memory

Microsoft – SQL Server In-Memory OLTP

GridGain Systems – GridGain In-Memory Computing Platform

VMware – VMware vSphere with Virtual Volumes

Amazon Web Services (AWS) – Amazon ElastiCache

Pivotal Software – Pivotal GemFire

TIBCO Software Inc. – TIBCO ActiveSpaces

Redis Labs – Redis Enterprise

Hazelcast – Hazelcast IMDG (In-Memory Data Grid)

Cisco – Cisco In-Memory Analytics

Qlik – Qlik Data Integration

Market Trends Driving Growth

1. Rising Adoption of AI and Machine Learning

The increasing use of artificial intelligence (AI) and machine learning (ML) applications is fueling the demand for IMC solutions. AI-driven analytics require real-time data processing, making IMC an essential component for businesses leveraging predictive insights and automation.

2. Growing Demand for Real-Time Data Processing

IMC is becoming a critical technology in industries where real-time data insights are essential. Areas such as financial services, fraud detection, e-commerce personalization, and IoT-driven smart applications are benefiting from the high-speed computing capabilities of IMC platforms.

3. Integration with Cloud Computing

Cloud service providers are incorporating in-memory computing to offer faster data processing capabilities for enterprise applications. Cloud-based IMC solutions enable scalability, agility, and cost-efficiency, making them a preferred choice for businesses transitioning to digital-first operations.

4. Increased Adoption in Financial Services

The financial sector is one of the biggest adopters of IMC due to its need for ultra-fast transaction processing, risk analysis, and algorithmic trading. IMC helps banks and financial institutions process vast amounts of data in real time, reducing delays and improving decision-making accuracy.

5. Shift Toward Edge Computing

With the rise of edge computing, IMC is playing a crucial role in enabling real-time data analytics closer to the data source. This trend is particularly significant in IoT applications, autonomous vehicles, and smart manufacturing, where instant processing and low-latency computing are critical.

Enquiry of This Report: https://www.snsinsider.com/enquiry/3570

Market Segmentation:

By Components

Hardware

Software

Services

By Application

Fraud detection

Risk management

Real-time analytics

High-frequency trading

By Vertical

BFSI

Healthcare

Retail

Telecoms

Market Analysis and Current Landscape

Key factors contributing to the market's growth include:

Surging demand for low-latency computing: Businesses are prioritizing real-time analytics and instant decision-making to gain a competitive advantage.

Advancements in hardware and memory technologies: Innovations in DRAM, non-volatile memory, and NVMe-based architectures are enhancing IMC capabilities.

Increased data volumes from digital transformation: The exponential growth of data from AI, IoT, and connected devices is driving the need for high-speed computing solutions.

Enterprise-wide adoption of cloud-based IMC solutions: Organizations are leveraging cloud platforms to deploy scalable and cost-efficient IMC architectures.

Despite its strong growth trajectory, the market faces challenges such as high initial investment costs, data security concerns, and the need for skilled professionals to manage and optimize IMC systems.

Regional Analysis: Growth Across Global Markets

1. North America

North America leads the in-memory computing market due to early adoption of advanced technologies, significant investments in AI and big data, and a strong presence of key industry players. The region’s financial services, healthcare, and retail sectors are driving demand for IMC solutions.

2. Europe

Europe is witnessing steady growth in IMC adoption, with enterprises focusing on digital transformation and regulatory compliance. Countries like Germany, the UK, and France are leveraging IMC for high-speed data analytics and AI-driven business intelligence.

3. Asia-Pacific

The Asia-Pacific region is emerging as a high-growth market for IMC, driven by increasing investments in cloud computing, smart cities, and industrial automation. Countries like China, India, and Japan are leading the adoption, particularly in sectors such as fintech, e-commerce, and telecommunications.

4. Latin America and the Middle East

These regions are gradually adopting IMC solutions, particularly in banking, telecommunications, and energy sectors. As digital transformation efforts accelerate, demand for real-time data processing capabilities is expected to rise.

Key Factors Driving Market Growth

Technological Advancements in Memory Computing – Rapid innovations in DRAM, NAND flash, and persistent memory are enhancing the efficiency of IMC solutions.

Growing Need for High-Speed Transaction Processing – Industries like banking and e-commerce require ultra-fast processing to handle large volumes of transactions.

Expansion of AI and Predictive Analytics – AI-driven insights depend on real-time data processing, making IMC an essential component for AI applications.

Shift Toward Cloud-Based and Hybrid Deployments – Enterprises are increasingly adopting cloud and hybrid IMC solutions for better scalability and cost efficiency.

Government Initiatives for Digital Transformation – Public sector investments in smart cities, digital governance, and AI-driven public services are boosting IMC adoption.

Future Prospects: What Lies Ahead?

1. Evolution of Memory Technologies

Innovations in next-generation memory solutions, such as storage-class memory (SCM) and 3D XPoint technology, will further enhance the capabilities of IMC platforms, enabling even faster data processing speeds.

2. Expansion into New Industry Verticals

IMC is expected to witness growing adoption in industries such as healthcare (for real-time patient monitoring), logistics (for supply chain optimization), and telecommunications (for 5G network management).

3. AI-Driven Automation and Self-Learning Systems

As AI becomes more sophisticated, IMC will play a key role in enabling real-time data processing for self-learning AI models, enhancing automation and decision-making accuracy.

4. Increased Focus on Data Security and Compliance

With growing concerns about data privacy and cybersecurity, IMC providers will integrate advanced encryption, access control, and compliance frameworks to ensure secure real-time processing.

5. Greater Adoption of Edge Computing and IoT

IMC’s role in edge computing will expand, supporting real-time data processing in autonomous vehicles, smart grids, and connected devices, driving efficiency across multiple industries.

Access Complete Report: https://www.snsinsider.com/reports/in-memory-computing-market-3570

Conclusion