#Microsoft Project Server training


Note

Same anon as in the chatbot ask!

I appreciate and admire your stance on AI; I’m working on a research project highlighting the harmful impacts of CAI and was really hoping you weren’t in support of the platform.

I’m frankly disturbed by how many creators I found bots scraped from with a quick scroll. I’m so sorry it happened to you, and equally sorry to have to bring it to your attention. I hope this is more of a one-off than a recurring issue.

Have a lovely evening, keep being cool :]

/gen /p

honestly it makes me feel almost violated.

i remember seeing a reddit thread where someone was like 'i joined a vtuber's patreon and got in vc and said i use cai and they all told me i shouldn't use it' and all the comments were like 'theyre so toxic' 'they just dont understand' and guys... yes, they probably did understand. if someone is making fun of you for being lonely or 'cringe' by using cai, yeah, they're being an asshole, but you are in the wrong for using cai. how do you think it generates writing and voices? by stealing the writing and voices from people.

i've seen cai users say the voice feature does 'feel' wrong as it's taking from real people, but none of them seem to know or want to acknowledge that the writing also steals from real people. generative writing tools like cai, chatbots, chatgpt, etc. have to get trained on something. and that something is real writing by non-consenting authors, ficwriters, journalists, anyone who writes anything online, even someone's personal blog or tweets. even if you're making a chatbot for your own oc, and not someone else's character, the chats it generates for your oc are built from stolen writing.

as far as i can see there is no way to get my characters removed from cai. you can report voices being used without consent, but not writing.

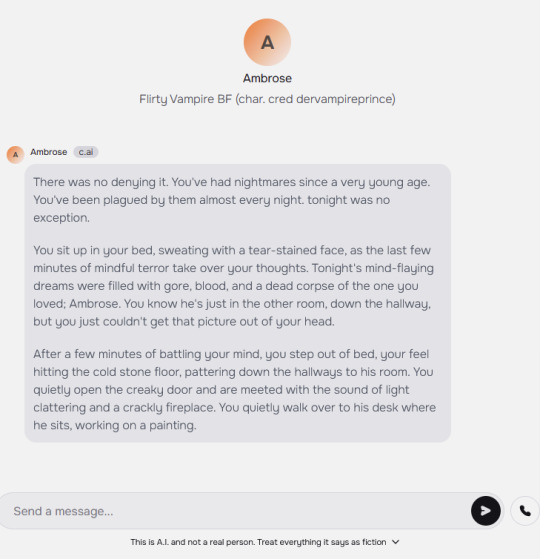

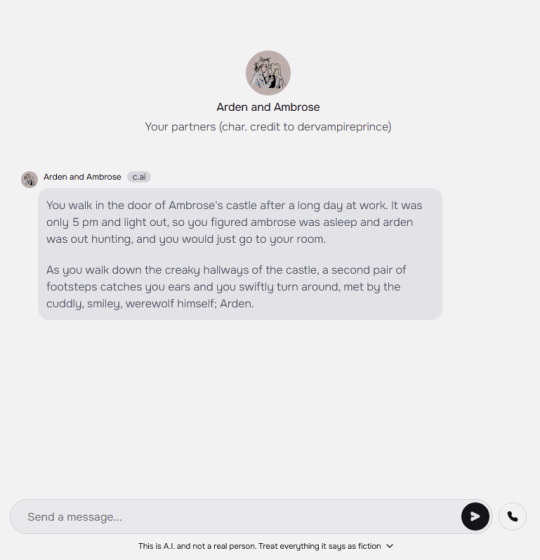

this is what i found of my characters on cai, and it makes me really upset and uncomfortable.

they also took some of my art of ambrose and arden to use as the thumbnail and didn't credit me as the artist. even if they had credited me, i do not allow reposting of my art. also, no one in their lives has ever described arden as "smiley" (i'm not policing anyone's headcanons or imagination with that one, just pointing out something silly to lighten the mood so i don't have a complete meltdown).

yes, i censored out the name of the cai user who made these chatbots. please do not go and hunt them down, find them, or message them, especially as we don't know if they're a minor.

it seems to me a lot of minors use cai, which is worrying, as who knows what the chatbot could spit out regardless of the safety measures they have in place. and i'm sorry anyone's so lonely they have to turn to cai only.. you don't have to. fanfic exists, rp communities exist, various online communities whether tumblr groups, discord servers, subreddits, are people still using amino? i mean if you're a minor you should be extra careful getting into online communities and talking to others online (and if you're a minor you shouldn't be following this blog) but like... imagination exists. try getting into creative writing?

idk when we got so bored with our imaginations that we had to do this. you know what i did as a kid when there was no access to anything like this? i would copy and paste fanfics into microsoft word, find and replace one character's name with my own and boom, you got yourself a self insert fic (note: these edited files were only ever kept on my pc, i never posted them anywhere). and there's soooooo many reader insert fics out there now, hell i literally make my own reader/listener inserts of my own characters, i'm not starving you for content out here. and i'm okay with people writing fanfic about my ocs or commissioning others to write you fanfics (my commissions aren't open).

thankfully i don't think my voice has been uploaded on there? i clicked the 'call' button to see what would happen and nothing did so i think that means there's no voice? or perhaps it doesn't work unless you make an account i don't know.

yeah sorry that became a longer rant. i don't know if more of my ocs are on cai, these are just the ones i found by searching my username in there. there could be other copies of my characters on there that don't credit me.

also a lot of character uploads use ai 'art' as their pictures which you know is also terrible and steals from artists.

and you're right, there's so many more characters taken from small creators and other VAs. i searched yuurivoice on there and got 50+ results of chatbots of his ocs AND ALSO OF HIM. please do not make cai's of real people. that is so much worse than someone's oc: it's equally as bad on the stealing front but also so parasocial. you do not know yuurivoice, you cannot get a bot to act like him as you do not know him or how he acts. watching a creator's videos or streams does not mean you know anything about the real them, and especially does not mean you know them or are friends with them. found at least 5 for cardlin audios, some of his ocs and some of him. 6 for dark and twisted whispers ocs.

and if a creator gives you permission to make their oc into a chatbot... that doesn't make the chatbot okay. a creator can consent all you like, but the writers whose work was stolen to train that chatbot still didn't get to consent.

in the rare chance any cai user who's made chatbots of my characters on there sees this post: please delete them. please. you didn't get my consent for this. and you didn't get the consent of all the writers your chatbot was trained on. i don't think you're stupid or cringe for using cai, but you are contributing to generating ai content which is stealing from real humans and harming the environment.

10 notes


Text

Last Monday of the Week 2025-03-10

Sun's back

Listening: While browsing through my Bandcamp wishlist with my partner, I saw that I had followed Ostinato Records, a label whose thing is finding old (mostly third-world) music, restoring it, and publishing it.

Here's the album Synthesizing the Silk Roads: Uzbek Disco, Tajik Folktronica, Uyghur Rock & Tatar Jazz from 1980s Soviet Central Asia, featuring some really incredible Central Asian synthrock. Track: Sen Qaidan Bilasan (How Do You Know), by an unknown creator.

This whole album, and a lot of this label's work, is really neat: Most of this album was recovered from unsold stock in Tashkent.

In the summer of 1941, as the Nazis invaded the USSR, Stalin ordered a mass evacuation. Sixteen million people were put on trains bound eastward to Soviet Central Asia, especially Tashkent, Uzbekistan’s picturesque capital. Among those onboard were gramophone engineers who later established the Tashkent Gramplastinok plant in 1945. This factory became central to Soviet record production, part of a network of plants churning out 200 million records by the 1970s. Rare dead stock of 1980s vinyl from this plant, shut down in 1991, forms the backbone of our groundbreaking 15-track compilation, complemented by live TV recordings and curated in collaboration with Uzbek label Maqom Soul. Fully licensed directly from the artists or their families and meticulously remastered, these songs – all recorded in Tashkent – unveil a diverse tapestry of sounds from Soviet Uzbekistan and its neighbors. ... Tashkent’s musicians often had access to a wider array of technology than their Moscow counterparts. Thanks to Uzbekistan’s Bukharan Jewish community, leading importers of state-of-the-art music tech from the US and Japan, artists on this compilation were crafting sounds on Moog and Korg synthesizers, creating the signature sonic palette that emerged from the region.

Reading: Mostly boring work stuff, the O'Reilly Active Directory book.

As far as I can tell, Active Directory is an enrichment program for Microsoft developers where they get to design a Domain Controller that does exactly one thing and has bizarre requirements on how it can be run.

Making: Ongoing silk project.

Currently editing some photos for printing. It's important to remember that on a standard modern monitor at 1:1, your average DSLR/mirrorless photo is scaled up to something like 2-3 meters across at minimum, so you shouldn't really trust 1:1 when trying to figure out if it looks good. It turns out fullscreen on my monitor is almost exactly 30×40 cm, which is what I'm printing, so that's great for me.
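To put rough numbers on the 1:1 point: at 1:1 each image pixel takes one screen pixel, so the on-screen width is just the pixel count divided by the monitor's pixel density. Here is a minimal Python sketch, assuming a hypothetical camera with 8192 pixels on the long edge (roughly a 45 MP sensor) and a monitor at about 96 pixels per inch. Neither figure comes from the post, and a smaller sensor or a denser screen gives a smaller result.

```python
MM_PER_INCH = 25.4


def displayed_width_m(image_px: int, monitor_ppi: float) -> float:
    """Physical width in metres of an image shown at 1:1 (one image pixel per screen pixel)."""
    return image_px / monitor_ppi * MM_PER_INCH / 1000


def effective_print_dpi(image_px: int, print_width_cm: float) -> float:
    """Pixels per inch the image delivers when printed at the given width."""
    return image_px / (print_width_cm / 2.54)


# Hypothetical example values (assumptions, not taken from the post)
width_px = 8192  # long edge of a ~45 MP sensor
ppi = 96         # a common desktop monitor pixel density

print(f"1:1 on screen: {displayed_width_m(width_px, ppi):.2f} m wide")
print(f"Printed 40 cm wide: {effective_print_dpi(width_px, 40):.0f} dpi")
```

With those assumed numbers it prints about 2.17 m on screen and roughly 520 dpi for a 40 cm print, which is why 1:1 is a poor preview of the print.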

Some options I might print include these

Still going through some favourites though. Oh, and The Hare

Playing: Co-op Borderlands 2 for a while with my partner. They ragequit easily, so it was a short one, but we did get up to fighting (and losing to) Midgemong.

Good trial for the Legion Go and the lapboard, incidentally, because they took over my PC and I played on the TV with the handheld as a console with the lapboard and trackball. It works about as well as I hoped it would.

Watching: A few!

Wing Commander (1999) at Bad Movie Night. A truly baffling film. Pretty good sets, okay special effects, baffling storyline. We got rid of the Jedi from Star Wars and replaced them with an offshoot of the human race that can home like space pigeons towards black holes, and people are racist towards them.

You have to watch this kind of movie with friends because that's how you get phrases like "Holy shit how did the twink not know that Quebecois Space Mormon CIA Pope was a Space Mormon?"

Also watched Escape From New York because I've had it kicking around and Kill James Bond did Escape From L.A. I am already a John Carpenter x Kurt Russell fan, but damn. They put this man in so many situations.

I do really like how they manage to make Snake look very small even though he's strong. A lot of action heroes are enormous, Snake is obviously muscular and athletic but he's also just a little guy!

I can literally feel myself wanting to get a black tank top; this movie is a cognitohazard. He's so cool!

Lee Van Cleef is here looking like he does! I was so thrilled when I saw LVC come up in the opening credits, and even more when I realized he'd be in the whole movie. I gotta watch Sabata sometime to see how he does when he's not playing a huge heel, but he is a great heel.

Finally, honorable mention to the week's new Friday Nights from LoadingReadyRun, their Magic-themed sketch comedy spinoff; this one is a really good Wes Anderson parody. It says it's 20 minutes long, but only 10 minutes are the show; the rest is credits and BTS, don't worry.


Tools and Equipment: Part of Silk Project is pinning a lot of silk. I ended up getting 0.5 mm sewing pins, which are the finest normal, everyday pins you can usually get. There are finer ones, but they're less common. These are a huge improvement over your average 0.6 and 0.65 mm pins when you're working with a stiff, tightly woven fabric.

6 notes


Text

How-To IT

Topic: Core areas of IT

1. Hardware

• Computers (Desktops, Laptops, Workstations)

• Servers and Data Centers

• Networking Devices (Routers, Switches, Modems)

• Storage Devices (HDDs, SSDs, NAS)

• Peripheral Devices (Printers, Scanners, Monitors)

2. Software

• Operating Systems (Windows, Linux, macOS)

• Application Software (Office Suites, ERP, CRM)

• Development Software (IDEs, Code Libraries, APIs)

• Middleware (Integration Tools)

• Security Software (Antivirus, Firewalls, SIEM)

3. Networking and Telecommunications

• LAN/WAN Infrastructure

• Wireless Networking (Wi-Fi, 5G)

• VPNs (Virtual Private Networks)

• Communication Systems (VoIP, Email Servers)

• Internet Services

4. Data Management

• Databases (SQL, NoSQL)

• Data Warehousing

• Big Data Technologies (Hadoop, Spark)

• Backup and Recovery Systems

• Data Integration Tools

5. Cybersecurity

• Network Security

• Endpoint Protection

• Identity and Access Management (IAM)

• Threat Detection and Incident Response

• Encryption and Data Privacy

6. Software Development

• Front-End Development (UI/UX Design)

• Back-End Development

• DevOps and CI/CD Pipelines

• Mobile App Development

• Cloud-Native Development

7. Cloud Computing

• Infrastructure as a Service (IaaS)

• Platform as a Service (PaaS)

• Software as a Service (SaaS)

• Serverless Computing

• Cloud Storage and Management

8. IT Support and Services

• Help Desk Support

• IT Service Management (ITSM)

• System Administration

• Hardware and Software Troubleshooting

• End-User Training

9. Artificial Intelligence and Machine Learning

• AI Algorithms and Frameworks

• Natural Language Processing (NLP)

• Computer Vision

• Robotics

• Predictive Analytics

10. Business Intelligence and Analytics

• Reporting Tools (Tableau, Power BI)

• Data Visualization

• Business Analytics Platforms

• Predictive Modeling

11. Internet of Things (IoT)

• IoT Devices and Sensors

• IoT Platforms

• Edge Computing

• Smart Systems (Homes, Cities, Vehicles)

12. Enterprise Systems

• Enterprise Resource Planning (ERP)

• Customer Relationship Management (CRM)

• Human Resource Management Systems (HRMS)

• Supply Chain Management Systems

13. IT Governance and Compliance

• ITIL (Information Technology Infrastructure Library)

• COBIT (Control Objectives for Information and Related Technologies)

• ISO/IEC Standards

• Regulatory Compliance (GDPR, HIPAA, SOX)

14. Emerging Technologies

• Blockchain

• Quantum Computing

• Augmented Reality (AR) and Virtual Reality (VR)

• 3D Printing

• Digital Twins

15. IT Project Management

• Agile, Scrum, and Kanban

• Waterfall Methodology

• Resource Allocation

• Risk Management

16. IT Infrastructure

• Data Centers

• Virtualization (VMware, Hyper-V)

• Disaster Recovery Planning

• Load Balancing

17. IT Education and Certifications

• Vendor Certifications (Microsoft, Cisco, AWS)

• Training and Development Programs

• Online Learning Platforms

18. IT Operations and Monitoring

• Performance Monitoring (APM, Network Monitoring)

• IT Asset Management

• Event and Incident Management

19. Software Testing

• Manual Testing: Human testers evaluate software by executing test cases without using automation tools.

• Automated Testing: Use of testing tools (e.g., Selenium, JUnit) to run automated scripts and check software behavior.

• Functional Testing: Validating that the software performs its intended functions.

• Non-Functional Testing: Assessing non-functional aspects such as performance, usability, and security.

• Unit Testing: Testing individual components or units of code for correctness.

• Integration Testing: Ensuring that different modules or systems work together as expected.

• System Testing: Verifying the complete software system’s behavior against requirements.

• Acceptance Testing: Conducting tests to confirm that the software meets business requirements (including UAT - User Acceptance Testing).

• Regression Testing: Ensuring that new changes or features do not negatively affect existing functionalities.

• Performance Testing: Testing software performance under various conditions (load, stress, scalability).

• Security Testing: Identifying vulnerabilities and assessing the software’s ability to protect data.

• Compatibility Testing: Ensuring the software works on different operating systems, browsers, or devices.

• Continuous Testing: Integrating testing into the development lifecycle to provide quick feedback and minimize bugs.

• Test Automation Frameworks: Tools and structures used to automate testing processes (e.g., TestNG, Appium).
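To make the unit-testing item above concrete, here is a minimal sketch that uses Python's built-in `unittest` module as a stand-in for the JUnit and TestNG tools the list names. `apply_discount` is a hypothetical function invented purely as something to test. The three test methods check a normal case, an edge case, and the rejection of bad input.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical unit under test: reduce price by a percentage in [0, 100]."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTests(unittest.TestCase):
    def test_typical_discount(self):
        self.assertEqual(apply_discount(200.0, 15), 170.0)

    def test_zero_discount_leaves_price_unchanged(self):
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_out_of_range_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(50.0, 120)


if __name__ == "__main__":
    # Load and run just this test case, reporting each test by name.
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTests)
    unittest.TextTestRunner(verbosity=2).run(suite)
```

Running the file executes the suite. If you later change `apply_discount`, rerunning the same tests is a small-scale version of the regression testing listed above.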

20. VoIP (Voice over IP)

VoIP Protocols & Standards

• SIP (Session Initiation Protocol)

• H.323

• RTP (Real-Time Transport Protocol)

• MGCP (Media Gateway Control Protocol)

VoIP Hardware

• IP Phones (Desk Phones, Mobile Clients)

• VoIP Gateways

• Analog Telephone Adapters (ATAs)

• VoIP Servers

• Network Switches/Routers for VoIP

VoIP Software

• Softphones (e.g., Zoiper, X-Lite)

• PBX (Private Branch Exchange) Systems

• VoIP Management Software

• Call Center Solutions (e.g., Asterisk, 3CX)

VoIP Network Infrastructure

• Quality of Service (QoS) Configuration

• VPNs (Virtual Private Networks) for VoIP

• VoIP Traffic Shaping & Bandwidth Management

• Firewall and Security Configurations for VoIP

• Network Monitoring & Optimization Tools

VoIP Security

• Encryption (SRTP, TLS)

• Authentication and Authorization

• Firewall & Intrusion Detection Systems

• VoIP Fraud Detection

VoIP Providers

• Hosted VoIP Services (e.g., RingCentral, Vonage)

• SIP Trunking Providers

• PBX Hosting & Managed Services

VoIP Quality and Testing

• Call Quality Monitoring

• Latency, Jitter, and Packet Loss Testing

• VoIP Performance Metrics and Reporting Tools

• User Acceptance Testing (UAT) for VoIP Systems

Integration with Other Systems

• CRM Integration (e.g., Salesforce with VoIP)

• Unified Communications (UC) Solutions

• Contact Center Integration

• Email, Chat, and Video Communication Integration
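The "Latency, Jitter, and Packet Loss Testing" item under VoIP Quality and Testing can be made concrete. One widely used jitter measure is the interarrival jitter estimator from RFC 3550, the RTP specification. For each packet, it compares the change in transit time against the previous packet and moves a running estimate 1/16 of the way toward that difference. Here is a minimal Python sketch, assuming you already have matching send and receive timestamps in the same unit (milliseconds here). The sample timings and the 100/97 packet counts are invented for illustration.

```python
from typing import Sequence


def rfc3550_jitter(send_ms: Sequence[float], recv_ms: Sequence[float]) -> float:
    """Running interarrival jitter estimate, following RFC 3550 section 6.4.1.

    D is the change in transit time between consecutive packets;
    the estimate moves 1/16 of the way toward |D| on each packet.
    """
    if len(send_ms) != len(recv_ms):
        raise ValueError("timestamp lists must be the same length")
    jitter = 0.0
    for i in range(1, len(send_ms)):
        transit_prev = recv_ms[i - 1] - send_ms[i - 1]
        transit_curr = recv_ms[i] - send_ms[i]
        d = abs(transit_curr - transit_prev)
        jitter += (d - jitter) / 16
    return jitter


def loss_percent(expected: int, received: int) -> float:
    """Share of expected packets that never arrived, as a percentage."""
    return 100.0 * (expected - received) / expected if expected else 0.0


# Invented sample: packets sent every 20 ms, arriving with varying delay
send = [0, 20, 40, 60, 80]
recv = [50, 70, 95, 110, 130]
print(f"jitter ~ {rfc3550_jitter(send, recv):.3f} ms")
print(f"loss = {loss_percent(100, 97):.1f} %")
```

With the invented timings above, the estimate comes out at roughly 0.568 ms and the loss at 3.0%. In RTP itself, the receiver computes this value from RTP timestamps and reports it in RTCP receiver reports, rather than working from a list like this.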

2 notes


Text

What Will Destroy AI Image Generation In Two Years?

You are probably deluding yourself that the answer is some miraculous program that will "stop your art from being stolen" or "destroy the plagiarism engines from within". Well...

NOPE.

I can call such an idea stupid, imbecilic, delusional, ignorant, coprolithically idiotic and/or Plain Fucking Dumb. The thing that will destroy image generation, or more precisely, get the generators shut down, is simple and really fucking obvious: it's lack of interest.

Tell me: how many articles about "AI art" have you seen in the media in the last two to three months? How many of them actually hyped the thing and weren't covering lawsuits against Midjourney, OpenAI/Microsoft and/or Stability AI? My guess is zilch. Zero. Fuckin' nada. If anything, people are tired of lame, half-assed if not outright insulting pictures posted by the dozen. The hype is dead. Not even the morons from the corner office are buying it. The magical machine that could replace highly-paid artists doesn't exist, and some desperate hucksters are trying to flog topically relevant AI-generated shots on stock image sites at rock-bottom prices in order to wring any money from prospective suckers. This leads us to another thing.

Centralized Models Will Keel Over First

Yes, Midjourney and DALL-E 3 will be seriously hurt by the lack of attention. Come on, rub those two brain cells together: those things are blackboxed, centralized, running on powerful and very expensive hardware that cost a lot to put together and costs a lot to keep running. Sure, Microsoft has a version of DALL-E 3 publicly accessible for free, but the intent is to bilk the schmucks for $20 monthly and sell them access to GPT-4 as well... well, until it turned out that GPT-4 attracts more schmucks than the servers can handle, so there's a waiting list for that one.

Midjourney costs half that, but it doesn't have the additional draw of an overengineered chatbot that is still generating a lot of hype itself. That, and the MJ interface itself is coprolithically idiotic as well - it relies on a third-party program to communicate with the user, as if that even makes sense. Also, despite the improvements, there are still things that Midjourney is just incapable of, as opposed to DALL-E 3 or SDXL: legible text, for example. So right now, they're stuck with storage costs for the sheer number of half-assed images people generated over the last year or so and haven't deleted.

The recent popularity of "Disney memes" made using DALL-E 3 proved that Midjourney is going out of fashion, which should make you happy, and drew the ire of Disney, what with the "brand tarnishing" and everything, which should make you happier. So the schmucks are coming in, but they're not paying, and they're pissing off the House of Mouse. What does this mean? Yes, costs. With nothing to show for it. Runtime, storage space, the works, and nobody's paying for the privilege of using the tech.

Pissing On The Candle While The House Burns

Yep, that's what you're doing by cheering for bullshit programs like Glaze and Nightshade. Time to dust off both of your brain cells and rub them together, because I have a riddle for you:

An open-source, client-side, decentralized image generator is targeted by software intended to disrupt it. Who profits?

The answer is: the competition. Congratulations, you chucklefucks. Even if those programs aren't a deniable hatchet job funded by Midjourney, Microsoft or Adobe, they indirectly help those corporations. As of now, nobody can prove that either Glaze or Nightshade actually work against the training algorithms of Midjourney and DALL-E 3, which are - surprise surprise! - classified, proprietary, blackboxed and not available to the fucking public, "data scientists" among them. And if they did work, you'd witness a massive gavel brought down on the whole project, DMCA and similar corporation-protecting copygrift bullshit like accusations of reverse-engineering classified and proprietary software included. Just SLAM! and no Glaze, no Nightshade, no nothing. Keep the lawsuit going until the "data scientists" go broke or give up.

Yep, keep rubbing those brain cells together, I'm not done yet. Stable Diffusion can be run on your own computer, without internet access, as long as you have a data model. You don't need a data center, you don't need a server stack with industrial crypto mining hardware installed, a four-year-old gaming computer will do. You don't pay any fees either. And that's what the corporations who have to pay for their permanently besieged high-cost hardware don't like.

And the data models? You can download them for free. Even if the publicly available websites hosting them go under for some reason, you'll probably be able to torrent them or download them from Mega. You don't need to pay for that either, much to the corporations' dismay.

Also, in case you didn't notice, there's one more problem with the generators scraping everything off the Internet willy-nilly:

AI Is Eating Its Own Shit

You probably heard about "data pollution", or the data models coming apart: if they're even partially trained on previously AI-generated images, the background noise those images were created from fucks with the internal workings of the image generators. This is also true of text models. As someone already noticed by having two instances of ChatGPT talk to each other, they devolve into incomprehensible babble. Of course that incident was first met with FUD on one side and joy on the other, because "OMG AI created their own language!" - nope, dementia. Same goes for already-generated images used to train new models: the semantic segmentation subroutines see stuff that humans don't recognize, and even when the images are inspected and their descriptions supposedly corrected, that noise gets in the way and fucks up the outcome. See? No need to throw another spanner into the machine, because AI does that fine all by itself (as long as it's run by complete morons).

But wait, there's another argument why those bullshit programs are pointless:

They Already Stole Everything

Do you really think someone's gonna steal your new mediocre drawing of a furry gang bang that you probably traced from vintage porno mag scans? They won't, and they don't need to.

For the last several months, even the basement nerds that keep Stable Diffusion going are merely crossbreeding the old data models, because it's faster. How much data are Midjourney and OpenAI sitting on? I don't exactly know, but my very scientific guess is, a shitload, and they nicked it all a year or two ago anyway.

The amount of raw data means jack shit in relation to how well the generator works. Hell, if you saw the monstrosities spewed forth by Stability AI's LAION-trained default models for Stable Diffusion, that's the best proof: basement nerds had to cut down on the amount of data included in their models, sort the images, edit the automatically generated descriptions to be more precise (or correct in the first place), and introduce some stylistic coherence so the whole thing doesn't go off the rails.

And that doesn't change the fact that the development methodology behind the whole thing, proprietary or open-source, is still "make a large enough hammer". It's brute force and will be until it stops being financially viable. When will it stop being financially viable? When people get bored of getting the same kind of repetitive pedestrian shit over and over. And that means soon. Get real for a moment: the data models contain da Vinci, Rembrandt, van Gogh, and that means jack shit. Any concept you ask for will be technically correct at best, but hardly coherent or well thought-out. You'll get pablum. Sanitized if you're using the centralized corporate models, maybe a little more horny if you're running Stable Diffusion with something trained on porn. But whatever falls out of the machine can't compete with art, for reasons.

#mike's musings#Midjourney#DALLE3#stable diffusion#Nightshade#Glaze#ai art#ai art generation#ai image generation#Nightshade doesn't protect your art#Nightshade protects corporate interests#long reads#long post#TLDR#LONGPOST IS LOOONG

6 notes


Text

First Post, and hard lessons.

It's been a long time since I've blogged. I used to have an account with blogger.com (remember that?) back in the early 2000s, so trying this out again is interesting: seeing what's different, what's similar.

I wanted to start this blog to record some personal thoughts that I've had over the last few years, so there will be some serious posts, including this one, but I do want to lighten things up in between.

I feel like I've written and re-written this a dozen times in my head, but it's time to commit and write something down. For those of you who know me, I want to tell a story, and maybe you've heard some bits of it already. For any others who happen along? It's a story of anxiety, depression, burnout, and maybe a new path? Mostly I write this for my own benefit, to remind myself of where I've been, where I'd like to go, and where I don't want to go back to.

But first, some context. I've been working (full time) in IT for…16 years? I think? (give or take), and I'll be honest: I've had enough, and I want out of IT. I remember a time when computers were "simple" beasts (relatively speaking). My earliest memory of using a computer is my mum's 386 (it was a while ago, ok!). She was studying for a degree, but we had a number of DOS games installed on it (lots of Apogee shareware). Over time that computer got upgraded: new CPU, more RAM, bigger hard drive, Windows 95! That was new & exciting! I remember, somewhere along the way, mum teaching me enough DOS commands that when we bought a new game I could install it without needing help; she just gave me the discs and sent me on my way.

It was in year 10 in high school that I started scrounging enough parts to start making my own computer (or computers, as it would become). My first PC was a 386, and the first thing I did was run games on it & dad had a laugh; it was slow, but it was mine! I worked out how to do all the upgrades myself, and over time ended up moving through Windows 3.x, 95, 98. By the time I got to Windows 2000 I had a second-hand IBM desktop, and I was looking after our home network; I think we'd moved from dial-up to ADSL around that time too.

After high school I got into the local TAFE (college for trade certificates) and got a Cert III in IT.

I remember not liking XP when it came out (oh god, what is that default theme?! those colours?!), but I built an AMD Athlon 64 system to run it on, all new parts, & it was the fastest thing ever! (Well, ok, maybe not ever… but it was mine! And it was way faster than anything I had previously.) I spent days playing Warcraft III on that machine, learning how to compile software, playing with virtual machines, and it was around this time I landed a job doing helpdesk at the local university.

Helpdesk work was interesting, but it's pretty soul crushing at times: you learn there are people out there who have no idea how to do the equivalent of "fill the tank with gas & check tire pressure". The uni had debated having a basic computer literacy course for both staff & students, but it never got off the ground. But I pushed through, worked hard, and got recognized as being a good person to talk to in person or on the phone, often out-performing many peers on the helpdesk. We had people on the helpdesk escalating tickets to me, because I was good at working out the "curly" ones.

At some point I got offered a temporary transfer into server admin for 6 months; they'd seen me do good troubleshooting before sending stuff over to them, and they wanted to give me a chance. That ended up turning into full-time work that lasted 10 years. I learned a lot in that time: deploying and managing servers, "herding cats" to get people to agree when an old application can be turned off or upgraded, working on projects. I'm not going to fill this up with IT acronyms, but I did get sent on a lot of Microsoft & other vendor training, and for a number of mission-critical things I became first point of contact. I got to experience on-call (and get paid extra for it), and almost single-handedly dragged the Windows Server fleet up to modern standards.

But in 2022 I couldn't do it anymore. Over the years since Microsoft fired its QA staff in 2014, I'd watched patches get worse and Microsoft's promises of improvement get more frequent, and my team (or me specifically) was often stuck between "deploy patch to fix vulnerability" and "don't deploy patch since it's broken and will break things we depend on", a position that no IT department should find themselves in, having to choose between security and uptime. I'd worked on projects that were so badly run that I'd experienced depression (and some of the places your mind can take you), and while I never acted on the thoughts during such times, it was not a place that, mentally, I wanted to return to. I'd seen people in other teams at the uni stonewall projects…for what??? No repercussions; one of them even got a promotion. Not to mention that in 2021 our IT director/executive staff decided to overrule state government and tell everyone they had to be back in the office (that went down about as well as you'd expect).

2022 was a bad year. We had multiple bad patches we couldn't install on some of our servers until revisions came out, I had a staff member in another team who refused (again…after 4 years…and despite it being raised with my supervisor) to complete work they'd promised, we had a huuuuge amount of work coming down the pipe and no extra staff, and at some point in July I just broke down over it all. I could not do it any more. I could not push through. My reserves were empty. I had no more to give. Things had gotten too hard, things were too complex; I wasn't running a cute 486 playing an Apogee game. I was looking after hundreds of servers and multiple cloud environments. These weren't the basic applications that we knew & hated when I arrived; these things were using complex databases and machine learning, and I was expected to understand it all enough to support it. Sure, I was part of a team, but people go on leave, I get the on-call phone, I filled in for my manager on occasion; you have to know enough to be able to diagnose and fix things, and it's so. much. now. Things have moved so fast over the last 10 years, and the reward for being able to tread water, for being able to keep up? Not more people to help out, but more work, more new technology to learn, in addition to the old technology. It was suffocating; it wasn't sustainable.

And I was dumb enough to think that changing employers would be sufficient. I moved state and found a new job that paid more, only to find out that the work was worse. The internal documentation was incomplete and the team didn't want to answer questions. Clients were running systems that were 20 years old (and not supported)…and were planning an upgrade to a system that was 15 years old…and would still not be supported. And all that anxiety? It came back! With friends!

I found a 2nd job, working in IT / healthcare, and it was terrifying. We have laws about how medical data is to be stored & handled, and my manager told me "there's no laws about this". Turns out he was also a sexist & a bigot. That was a job that didn't see a need to have compliant IT, and guess who's getting blamed when shit hits the fan?? …well, not me… not anymore. The only reason I was able to stay there as long as I did was that I was working part time for most of it.

I've spoken to a number of people in IT over the last 2 years, and the common theme is that they're all burned out; they've all been screwed by the pace of change or by the inability to enact required changes. And maybe that pace has been dictated by management not because anyone needed new things, but simply because those things were new and shiny, or out of complacency. Maybe in other cases it was driven by consumer demand / consumption. Maybe as a society we're destroying good IT staff for our own amusement so we can have the goods & services we want, when we want them, on our schedule… I don't think I'm qualified to answer that.

But for all that IT has burned me, there are things about it I miss. I miss the times when computing was simpler, when it was easier to understand. I miss being able to comprehend how things worked, rather than feeling like I was part of some cargo cult. I miss when IT was just a hobby and I didn't have to understand the laws businesses operate under and ensure compliance. I miss when IT was fun.

Whoever said that making your hobby a job would make you happy and "you'd never work a day in your life" was lying. Whoever said "just push through" never experienced anxiety / depression / burnout (or at least not in the way I did).

If I had one piece of advice? If I could leave a message for myself to look back on? Know your limits; you're only human. Don't try to push yourself beyond them & hit the wall. Know where that wall is, and that it's OK to tap out if you need to & you have the means. It's a lesson I had to learn over the last 2 years.

So what does the future hold? I want to go back to IT as a hobby; I can't see myself doing this as a career anymore. In 2024 I'm going to study for a Library & Information Services Certificate. It'll be a change of pace / direction & should be a good career change.

If you made it this far, thank you for reading. It's a serious first post, I know, but it's been roiling around my head for a while. I should have some more light-hearted things to post later.


This was originally going to be a rant in the tags, but it got too big so in the main body it goes.

God, everything about this fucking sucks. Fuck AI and everything these techbro hype men represent. "It's better to ask forgiveness than permission" is only relevant in certain circumstances. We have had systems in place to license creative works for decades; IT'S NOT THAT HARD. To then double down and say that training models need so much data that licensing would be untenable? Congratulations, your platform is fundamentally unworkable, because it fails to accommodate every plagiarism and IP law we have. And the law *will* catch up, just as we saw with the SAG-AFTRA and WGA strikes.

When I say this stuff cannot produce anything worthwhile, I mean that in a very literal way. Nobody, and I mean NOBODY, has any idea how to make this profitable. It costs hundreds of thousands of dollars daily for these LLM predictive engines to operate, with each query carrying a sizable cost. And for all that, no one has figured out how to monetize it. Access to these predictive models costs a pittance (if they even charge at ALL), because they need to drive engagement so badly; the whole process operates solely on potential, nothing actually concrete. The costs go beyond merely the wages needed to pay the people who manage the platforms (which again should also include the people whose work is being used, but I digress); the sheer volume of servers, CPUs, and electricity needed for continuous operation is fucking staggering. The only reason so many of these projects have remained afloat is that they have the backing of venture capitalists or enormous corporations like Facebook, Microsoft, and Google.

And the output is still rife with hallucinations. Even in circumstances where it could prove useful (such as searching and disseminating legal proceedings), it's just a glorified Markov chain, so it will output whatever words it decides are appropriate, ignoring the context of what it has said before and even the questions it is being asked.

And now, for the prospect of a pittance for what is being asked of them (I have no idea what Automattic is being paid for this, but I can assure you that it is nowhere near enough), Tumblr and WordPress are throwing their users to the wolves. I say this not just as a user of said platforms, but as someone who has intimate knowledge of how licensing is supposed to work. To enlighten people: the cost of giving a company the rights to use a creative work varies greatly depending on circumstances. I live in a city where it is comparatively cheap to pay for these rights. Even so, what Midjourney should be paying out for an exclusive, indefinite right to reference, reuse, and modify a given work should be in the tens of thousands of dollars for each individual work. That Automattic is taking payment for these services, but pointedly not distributing it to the people whose work is being taken, is the height of bullshit. And then to deny deactivated, abandoned, and otherwise inaccessible accounts the right to refuse is so utterly vile.

For these reasons I do not begrudge anyone who wants to leave the platform. All I will say is that I will miss you, and that I wish you better fortunes than what Tumblr can provide.

Please be aware that the "opt-out" choice is just a way to try to appease people. Tumblr has not been transparent about when data has been sold and shared with AI companies, and there are sources that confirm data had already been shared before the toggle was even provided to users.

Also, it seems to include data they should not have been able to give under any circumstance, including that of deactivated blogs, private messages and conversations, stuff from private blogs, and so on.

Do not believe that AI companies will "honor the opt-out request retroactively". Once they've got their hands on your data (and they have), they won't be "honoring" an opt-out retroactively. There is no way to confirm or deny what data they have. The fact that they are completely opaque about what they currently "own" means that they can do whatever they want with it. How can you prove they have your data if they don't give everyone free access to see what they've stolen already?

So, yeah, opt out of data sharing, but be aware that this isn't stopping anyone from taking your data. They have already been taking it, before you were given that option. Go to Tumblr's Support and leave your feedback on this (politely but firmly; not everyone in the company is responsible for this).

Finally: Opt out is not good under any circumstance. Deactivated people can't opt out. People who have lost their passwords can't opt out. People who can't access internet or computers can't opt out. People who had their content reposted can't opt out. Dead people can't opt out. When DeviantArt released their AI image generator, saying that it wasn't trained on people who didn't consent to it, it was proven it could easily replicate the styles of people who had passed away, as seen here. So, yeah. AI companies cannot be trusted to have any sort of respect for people's data and content, because this entire thing is just a data laundering scheme.

Please do reblog for awareness.

#Anti AI#tumblr nonsense#I am so very tired of having to uproot my online presence because the parent company is shit


Top Skills You Learn in a B.Tech in Cloud Computing Program

Cloud computing is transforming how businesses store, manage, and process data. If you plan to build a career in technology, a B.Tech in cloud computing offers one of the most future-focused career paths in engineering. But what do you learn in this program?

Here’s a clear look at the top skills you gain that make you industry-ready.

Cloud Architecture and Infrastructure

Understanding how cloud systems are built is your starting point. You learn about cloud deployment models, storage systems, servers, networks, and virtualization. This forms the base for designing and managing scalable cloud platforms. You also explore how large-scale systems work using public, private, and hybrid cloud models.

Working with Leading Cloud Platforms

During your B.Tech cloud computing course, you train on industry-leading platforms like:

Amazon Web Services (AWS)

Microsoft Azure

Google Cloud Platform (GCP)

You work on real-time projects where you learn how to deploy applications, manage services, and monitor systems on these platforms. These are the tools most employers look for in their cloud teams.

Virtualization and Containerization

You learn how to run applications efficiently across different environments using:

Virtual Machines (VMs)

Containers (Docker)

Orchestration Tools (Kubernetes)

These technologies are at the core of cloud efficiency and automation. The hands-on training helps you build scalable, portable applications that work in complex infrastructures.

Cloud Security and Compliance

As a cloud engineer, your role is not just about development. Security is a big part of cloud technology. You learn how to protect data, set access controls, manage identities, and secure cloud resources.

You also learn how to handle compliance and data regulations, which are critical in industries like finance, healthcare, and e-commerce.

Cloud Automation and DevOps

Modern cloud systems rely on automation. You are trained in DevOps tools and workflows, including:

CI/CD pipelines

Infrastructure as Code (IaC)

Monitoring and automation scripts

These tools help you manage cloud resources efficiently and reduce manual work, making you more productive and valuable in any tech team.
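A core idea behind Infrastructure as Code is that you declare the desired state and a tool works out the difference from what actually exists. The Python sketch below is a hypothetical, tool-agnostic illustration of that plan/apply loop: `plan`, `apply`, `Change`, and the resource dictionaries are invented for this example and are not the API of Terraform, Ansible, or any cloud provider.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Change:
    action: str  # "create", "update", or "delete"
    name: str


def plan(desired: dict[str, dict], actual: dict[str, dict]) -> list[Change]:
    """Return the changes needed to move `actual` to `desired`.

    Resources only in `desired` are created, resources whose settings differ
    are updated, and resources only in `actual` are deleted.
    """
    changes: list[Change] = []
    for name in sorted(desired):
        if name not in actual:
            changes.append(Change("create", name))
        elif actual[name] != desired[name]:
            changes.append(Change("update", name))
    for name in sorted(actual):
        if name not in desired:
            changes.append(Change("delete", name))
    return changes


def apply(desired: dict[str, dict], actual: dict[str, dict]) -> dict[str, dict]:
    """Simulate applying the plan and return the resulting state.

    A real tool would call a provider API for each change; here we only
    update an in-memory copy of the state.
    """
    state = {name: dict(spec) for name, spec in actual.items()}
    for change in plan(desired, actual):
        if change.action == "delete":
            del state[change.name]
        else:
            state[change.name] = dict(desired[change.name])
    return state


if __name__ == "__main__":
    desired = {
        "web-1": {"size": "small", "image": "ubuntu-22.04"},
        "web-2": {"size": "small", "image": "ubuntu-22.04"},
    }
    actual = {
        "web-1": {"size": "small", "image": "ubuntu-20.04"},
        "old-db": {"size": "large", "image": "centos-7"},
    }
    for change in plan(desired, actual):
        print(change.action, change.name)
    converged = apply(desired, actual)
    # A second plan against the converged state is empty: the run is idempotent.
    print(plan(desired, converged))
```

Running it prints an update for `web-1`, a create for `web-2`, and a delete for `old-db`, followed by an empty list. That final empty plan is the property that lets automated pipelines re-run the same code safely.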

Integration with Machine Learning

At BBDU, the cloud computing program also includes machine learning skills. You learn how cloud platforms support AI tools, data analysis, and model training. This gives you an edge, especially in projects involving smart automation, data prediction, and intelligent systems.

This blend of cloud and ML is one of the top requirements in emerging tech careers.

Why Choose BBDU’s B.Tech in Cloud Computing and Machine Learning?

Babu Banarasi Das University offers one of the most advanced B.Tech cloud computing programs in India. Developed in collaboration with IBM, this course combines academic strength with practical exposure.

Here’s what makes BBDU a smart choice:

IBM-certified curriculum and hands-on labs

Real-time project training on AWS, Azure, and GCP

Faculty with industry experience

Soft skill and placement support

Affordable fee structure with scholarships

You graduate not only with technical skills but also with real confidence to start your career.

Conclusion

A B.Tech in cloud computing gives you a strong foundation in one of the fastest-growing tech fields. You learn how to design systems, solve problems, secure data, and automate processes.

Start your cloud career with a university that prepares you for tomorrow.

Apply now to the IBM-collaborated B.Tech in Cloud Computing and Machine Learning program at BBDU.


Top Tools and Technologies Every Full Stack Java Developer Should Know

In today's fast-paced software development landscape, Full Stack Java Developers are in high demand. Companies seek professionals who can work across both the frontend and backend, manage databases, and understand deployment processes. Whether you're just starting your career or planning to upskill, mastering the right set of tools and technologies is key.

If you're considering Full Stack Java Training in KPHB, this guide will help you understand the essential technologies and tools you should focus on to become industry-ready.

1. Java and Spring Framework

The foundation of full stack Java development starts with a deep understanding of Core Java and object-oriented programming concepts. Once you’ve nailed the basics, move to:

Spring Core

Spring Boot – simplifies microservices development.

Spring MVC – for building web applications.

Spring Security – for handling authentication and authorization.

Spring Data JPA – for database operations.

Spring Boot is one of the most widely adopted frameworks for backend development in enterprise Java applications.

2. Frontend Technologies

A full stack Java developer must be proficient in creating responsive and interactive UIs. Core frontend technologies include:

HTML5 / CSS3 / JavaScript

Bootstrap – for responsive designs.

React.js or Angular – for building dynamic SPAs (Single Page Applications).

TypeScript – especially useful when working with Angular.

3. Database Management

You’ll need to work with both relational and non-relational databases:

MySQL / PostgreSQL – popular SQL databases.

MongoDB – a widely used NoSQL database.

Hibernate ORM – simplifies database interaction in Java.

4. Version Control and Collaboration

Version control systems are crucial for working in teams and managing code history:

Git – the most essential tool for source control.

GitHub / GitLab / Bitbucket – platforms for repository hosting and collaboration.

5. DevOps and Deployment Tools

Understanding basic DevOps is vital for modern full stack roles:

Docker – for containerizing applications.

Jenkins – for continuous integration and delivery.

Maven / Gradle – for project build and dependency management.

AWS / Azure – cloud platforms for hosting full stack applications.

6. API Development and Testing

Full stack developers should know how to develop and consume APIs:

RESTful API – commonly used for client-server communication.

Postman – for testing APIs.

Swagger – for API documentation.

7. Unit Testing Frameworks

Testing is crucial for bug-free code. Key testing tools include:

JUnit – for unit testing Java code.

Mockito – for mocking dependencies in tests.

Selenium / Playwright – for automated UI testing.

8. Project Management and Communication

Agile and collaboration tools help manage tasks and teamwork:

JIRA / Trello – for task and sprint management.

Slack / Microsoft Teams – for communication.

Final Thoughts

Learning these tools and technologies can position you as a highly capable Full Stack Java Developer. If you're serious about a career in this field, structured learning can make all the difference.

Looking for expert-led Full Stack Java Training in KPHB?

✅ Get industry-ready with hands-on projects.

✅ Learn from experienced instructors.

✅ Job assistance and certification included.

👉 Visit our website to explore course details, check out FAQs, and kickstart your journey today!


Why Data Analyst Professionals Will Be Highly Sought After in 2025: 100% Job in MNC, Excel, VBA, SQL, Power BI, Tableau Projects, "Data Analyst Certification Course" in Delhi, 110006, by SLA Consultants India, Free Python Data Science Certification

Data analyst professionals will be highly sought after in 2025 due to the unprecedented growth of data across all sectors and the increasing reliance on data-driven decision-making within organizations. Companies in every industry—from technology and finance to healthcare, retail, and even public sectors—are recognizing the value of data as a strategic asset. This has led to a surge in job openings for data analysts, with India alone facing an estimated 97,000 unfilled data analyst positions annually and a 45% increase in demand for these roles in recent years. The trend is global: the World Economic Forum reports that data-related jobs are among the top 10 fastest-growing roles worldwide, and the US Bureau of Labor Statistics predicts a 23% increase in data analyst employment by 2032.


The core reason for this demand is the explosion of data volume and variety. Businesses generate vast amounts of data daily, but raw data is only valuable when transformed into actionable insights. Data analysts bridge this gap by using tools like Excel, VBA, SQL, Power BI, and Tableau to collect, clean, analyze, and visualize data. These skills are consistently highlighted in job postings, with Microsoft Excel referenced in over 41% of listings, and Tableau and Power BI in nearly 25–28%. SQL remains foundational for data manipulation, while VBA enables automation of repetitive tasks, increasing efficiency. The ability to turn complex datasets into clear, impactful reports and dashboards makes data analysts indispensable for business strategy and operations.


Technological advancements are further fueling demand. The integration of AI, machine learning, and real-time analytics is transforming the way data is processed and utilized. AI and automation are not replacing data analysts; instead, they are augmenting their capabilities by handling routine tasks, freeing analysts to focus on strategic analysis and interpretation. This shift means that data analysts are increasingly seen as business partners who influence key decisions and drive growth. In 2025, data analysts are expected to work more closely with business units, requiring not only technical expertise but also strong communication, problem-solving, and storytelling skills.

The Data Analyst Certification Course in Delhi offered by SLA Consultants India (110006) is designed to equip learners with exactly these in-demand skills. The course covers advanced Excel, VBA, SQL, Power BI, and Tableau, and includes hands-on projects that simulate real-world business challenges. Additionally, the program offers a free Python Data Science Certification, broadening learners' analytical capabilities to include machine learning and advanced data science techniques. With a 100% job placement support guarantee in MNCs, graduates are assured a smooth transition into high-paying, secure roles.

Data Analytics Training Course Modules

Module 1 – Basic and Advanced Excel with Dashboard and Excel Analytics

Module 2 – VBA / Macros – Automation Reporting, User Form and Dashboard

Module 3 – SQL and MS Access – Data Manipulation, Queries, Scripts and Server Connection – MIS and Data Analytics

Module 4 – MS Power BI | Tableau, both BI & Data Visualization

Module 5 – Free Python Data Science | Alteryx / R Programming

Module 6 – Python Data Science and Machine Learning – 100% Free in Offer – by IIT/NIT Alumni Trainer

In summary, data analyst professionals are set to remain highly sought after in 2025 due to the critical role they play in transforming data into business value. The combination of strong technical skills, business acumen, and adaptability to new technologies ensures that certified data analysts will continue to enjoy robust job prospects, competitive salaries, and opportunities for career advancement in multinational corporations and beyond. For more details Call: +91-8700575874 or Email: [email protected]


Why Liquid Cooling is on Every CTO’s Radar in 2025

As we reach the midpoint of 2025, the conversation around data center liquid cooling trends has shifted from speculative to strategic. For CTOs steering digital infrastructure, liquid cooling is no longer a futuristic concept—it’s a competitive necessity. Here’s why this technology is dominating boardroom agendas and shaping the next wave of data center innovation.

The Pressure: AI, Density, and Efficiency

The explosion of AI workloads, cloud computing, and high-frequency trading is pushing data centers to their thermal and operational limits. Traditional air cooling, once the backbone of server rooms, is struggling to keep up with escalating power densities, especially as modern racks routinely draw 30-60 kW, far beyond the 10-15 kW threshold where air cooling remains effective. As a result, CTOs are seeking scalable, future-proof solutions that can handle the heat, literally and figuratively.
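The density problem is ultimately arithmetic: the heat a coolant removes is Q = m_dot × c_p × ΔT. The Python sketch below compares the flow of air and water needed to carry away one rack's heat. It assumes approximate room-temperature textbook values (water c_p ≈ 4186 J/(kg·K), density ≈ 997 kg/m³; air c_p ≈ 1005 J/(kg·K), density ≈ 1.2 kg/m³) and an assumed 10 K coolant temperature rise; `Coolant`, `mass_flow`, and `volume_flow` are illustrative names, and real designs depend on the coolant, loop temperatures, and hardware.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Coolant:
    name: str
    specific_heat: float  # c_p in J/(kg*K)
    density: float  # kg/m^3


# Approximate textbook values near room temperature (assumptions).
WATER = Coolant("water", specific_heat=4186.0, density=997.0)
AIR = Coolant("air", specific_heat=1005.0, density=1.2)


def mass_flow(heat_w: float, delta_t_k: float, coolant: Coolant) -> float:
    """Mass flow (kg/s) needed to remove heat_w watts with a delta_t_k rise.

    Rearranges Q = m_dot * c_p * dT into m_dot = Q / (c_p * dT).
    """
    return heat_w / (coolant.specific_heat * delta_t_k)


def volume_flow(heat_w: float, delta_t_k: float, coolant: Coolant) -> float:
    """Volumetric flow (m^3/s) for the same heat load."""
    return mass_flow(heat_w, delta_t_k, coolant) / coolant.density


if __name__ == "__main__":
    rack_w = 60_000.0  # upper end of the 30-60 kW range cited above
    dt = 10.0  # assumed coolant temperature rise in kelvin
    for c in (AIR, WATER):
        v = volume_flow(rack_w, dt, c)
        print(f"{c.name}: {v:.4f} m^3/s ({v * 60_000:.0f} L/min)")
    ratio = volume_flow(rack_w, dt, AIR) / volume_flow(rack_w, dt, WATER)
    print(f"air needs ~{ratio:.0f}x the volume flow of water")
```

Under these assumptions a 60 kW rack needs roughly 86 L/min of water but close to 5 m³/s of air, a volumetric ratio of roughly 3,500 to 1, which illustrates why air handling becomes impractical as rack densities climb.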

Data Center Liquid Cooling Trends in 2025

1. Mainstream Market Momentum

The global data center liquid cooling market is projected to skyrocket from $4.68 billion in 2025 to $22.57 billion by 2034, with a CAGR of over 19%. Giants like Google, Microsoft, and Meta are not just adopting but actively standardizing liquid cooling in their hyperscale facilities, setting industry benchmarks and accelerating adoption across the sector.
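Projections like this can be sanity-checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The Python sketch below applies it to the figures quoted above; `cagr` and `project` are illustrative names, and treating 2025 to 2034 as nine compounding years is an assumption about how the projection was framed.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `rate` annually for `years` years."""
    return start_value * (1 + rate) ** years


if __name__ == "__main__":
    # US$ billions, 2025 -> 2034, as quoted in the projection.
    rate = cagr(4.68, 22.57, 2034 - 2025)
    print(f"implied CAGR: {rate:.2%}")
    # Compounding back at that rate should recover the 2034 figure.
    print(f"check: {project(4.68, rate, 9):.2f}")
```

With these inputs the implied rate is roughly 19.1% a year, consistent with the "over 19%" stated in the projection.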

2. Direct-to-Chip and Immersion Cooling Dominate

Two primary technologies are leading the charge:

Direct-to-Chip Cooling: Coolant circulates through plates attached directly to CPUs and GPUs, efficiently extracting heat at the source. This method is favored for its scalability and selective deployment on high-density racks.

Immersion Cooling: Servers are submerged in non-conductive liquid, achieving up to 50% energy savings over air cooling and enabling unprecedented compute densities.

Because liquids can carry far more heat per unit volume than air, both approaches are up to 1,000 times more effective at heat transfer than air, supporting the relentless growth of AI and machine learning workloads.

3. AI-Powered Cooling Optimization

Artificial intelligence is now integral to cooling strategy. AI-driven systems monitor temperature fluctuations and optimize cooling in real time, reducing energy waste and ensuring uptime for mission-critical applications.

4. Sustainability and Regulatory Pressures

With sustainability targets tightening and energy costs rising, liquid cooling’s superior efficiency is a major draw. It enables higher operating temperatures, reduces water and power consumption, and supports green IT initiatives—key considerations for CTOs facing regulatory scrutiny.

Challenges and Considerations

Despite the momentum, the transition isn’t without hurdles:

Integration Complexity: 47% of data center leaders cite integration as a barrier, while 41% are concerned about upfront costs.

Skill Gaps: Specialized training is required for installation and maintenance, though this is improving as the ecosystem matures.

Hybrid Approaches: Not all workloads require liquid cooling. Many facilities are adopting hybrid models, combining air and liquid systems to balance cost and performance.

The Strategic Payoff for CTOs

Why are data center liquid cooling trends so critical for CTOs in 2025?

Performance at Scale: Liquid cooling unlocks higher rack densities, supporting the next generation of AI and high-performance computing.

Long-Term Cost Savings: While initial investment is higher, operational expenses (OPEX) drop due to improved energy efficiency and reduced hardware failure rates.

Competitive Edge: Early adopters can maximize compute per square foot, reduce real estate costs, and meet sustainability mandates—key differentiators in a crowded market.


In 2025, data center liquid cooling trends are not just a response to technical challenges—they’re a strategic lever for innovation, efficiency, and growth. CTOs who embrace this shift position their organizations to thrive amid rising computational demands and evolving sustainability standards. As liquid cooling moves from niche to norm, it’s clear: the future of data center infrastructure is flowing, not blowing.

#Cooling Optimization AI#Data Center Cooling Solutions#Data Center Infrastructure 2025#Data Center Liquid Cooling Trends#Direct-to-Chip Cooling#High-Density Server Cooling#Liquid Cooling for Data Centers


Server Automation Market Growth Analysis 2025

Server Automation Market refers to the set of software solutions and tools designed to automate routine, repetitive server-related tasks such as configuration, deployment, patching, monitoring, and maintenance. It eliminates the need for manual intervention in server management, thereby enhancing operational efficiency, reducing human error, ensuring standardization, and improving system reliability. Server automation solutions are critical in large-scale IT environments where rapid scaling, high availability, and secure infrastructure are required.

Get a free sample of this report at: https://www.intelmarketresearch.com/download-free-sample/912/Server-Automation-Market

The global server automation market is witnessing robust growth as enterprises increasingly prioritize IT efficiency, scalability, and cost optimization. With the rapid expansion of cloud computing, DevOps adoption, and hybrid IT environments, organizations are turning to automation to manage complex server infrastructures more effectively. Growth is further driven by demand for zero-touch provisioning, automated patching, and predictive maintenance. Leading tech companies like Microsoft, IBM, BMC Software, and Red Hat are introducing advanced automation tools that reduce manual errors, enhance compliance, and improve uptime. Moreover, sectors such as banking, retail, and telecom are accelerating automation to support 24/7 digital services and reduce operational overhead in increasingly distributed computing environments.

Market Size

The Global Server Automation Market size was valued at US$ 3.89 billion in 2024 and is projected to reach US$ 8.67 billion by 2030, growing at a CAGR of 14.3% during the forecast period 2024-2030. The United States Server Automation Market alone accounted for US$ 1.23 billion in 2024, expected to hit US$ 2.65 billion by 2030, registering a CAGR of 13.7%.
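The quoted growth rates can be checked against the start and end values by compounding year by year. The Python sketch below projects each series from its 2024 value at the stated CAGR and also back-solves the rate implied by the two endpoints; `project_series` and `implied_rate` are illustrative names, and the inputs are simply the figures quoted above, in US$ billions.

```python
def project_series(start_value: float, rate: float,
                   start_year: int, end_year: int) -> dict[int, float]:
    """Compound start_value annually at `rate`, returning {year: value}."""
    return {
        year: start_value * (1 + rate) ** (year - start_year)
        for year in range(start_year, end_year + 1)
    }


def implied_rate(start_value: float, end_value: float, years: int) -> float:
    """Annual rate that turns start_value into end_value over `years` years."""
    return (end_value / start_value) ** (1 / years) - 1


if __name__ == "__main__":
    # (2024 value, quoted 2030 value, stated CAGR) in US$ billions.
    markets = {
        "Global": (3.89, 8.67, 0.143),
        "United States": (1.23, 2.65, 0.137),
    }
    for name, (v2024, v2030, stated) in markets.items():
        series = project_series(v2024, stated, 2024, 2030)
        print(f"{name}: 2030 at stated {stated:.1%} -> {series[2030]:.2f} "
              f"(quoted {v2030}); implied rate "
              f"{implied_rate(v2024, v2030, 2030 - 2024):.2%}")
```

Compounding at the stated rates gives about 8.67 (global) and about 2.66 (US) for 2030, and the endpoint-implied rates come out at about 14.3% and 13.6%, so the quoted figures agree to within rounding.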

Market Dynamics (Drivers, Restraints, Opportunities, and Challenges)

Drivers

Rising Demand for Scalable IT Infrastructure

The growing demand for robust and scalable IT infrastructure across industries is one of the main factors propelling the server automation market. The number and complexity of server environments have increased dramatically as companies step up their digital transformation initiatives, especially in hybrid and multi-cloud configurations. To manage this scale effectively, businesses are investing in automation tools that facilitate load balancing, auto-provisioning, and real-time monitoring. For instance, Microsoft's Azure Automation and the Red Hat Ansible Automation Platform enable organizations to streamline repetitive server tasks, improve uptime, and reduce configuration errors. Additionally, Spotify manages thousands of microservices with little manual intervention by using infrastructure-as-code, server automation via HashiCorp Terraform, and internal orchestration tools, which increases agility and deployment speed.

In 2024, Microsoft announced plans to open new data centers in multiple regions, including North America, Europe, and Asia, to support increasing workloads from enterprises adopting hybrid and multi-cloud strategies. This expansion allows customers to easily scale their infrastructure up or down based on fluctuating demand, enabling businesses to optimize costs while maintaining high performance and reliability.

Restraints

High Implementation Costs

The high initial implementation cost is one of the main factors restraining the server automation market's expansion. Many businesses, especially small and mid-sized ones, find it difficult to justify the initial expenditure on automation platforms, specialized integrations, and training. The difficulty of switching from manual to automated server management, which frequently calls for personnel with DevOps or scripting experience, adds to these expenses.

For example, according to a TechTarget report from 2024, 47% of mid-sized companies put off automation projects because they lacked internal resources and had limited budgets. Long-term scaling is challenging since even large businesses have trouble finding and keeping automation experts. Because of this, automation's promise of increased productivity and decreased downtime is frequently postponed or underutilized, especially in industries that still rely on legacy systems.

Opportunities

Growing Opportunities in AI-Driven Automation and Hybrid Cloud Management

The market for server automation is expected to grow significantly as AI and ML technologies are increasingly used to improve automation capabilities. AI-powered predictive analytics and anomaly detection make proactive maintenance possible, lowering operating expenses and downtime. Furthermore, the growing trend towards hybrid and multi-cloud environments creates opportunities for automation tools that can smoothly coordinate workloads across different infrastructures. For instance, AI-driven automation is being integrated into offerings such as Google Cloud's Anthos and IBM's Watson AI to enhance server management, optimize resource usage, and shorten deployment cycles. In addition, the rise of edge computing calls for lightweight automation solutions that manage distributed servers closer to data sources.
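Vendor AI features vary and are not documented here, but the basic idea behind anomaly detection on server metrics can be illustrated with a much simpler statistical stand-in: flag a sample when it deviates from a rolling baseline by more than a few standard deviations. The Python sketch below is a hypothetical rolling z-score detector, not how Anthos, Watson, or any other named product works; `rolling_zscore_anomalies`, the window size, and the threshold are assumptions chosen for illustration.

```python
from collections import deque
from collections.abc import Iterable, Iterator
from statistics import mean, pstdev


def rolling_zscore_anomalies(samples: Iterable[float], window: int = 10,
                             threshold: float = 3.0) -> Iterator[tuple[int, float]]:
    """Yield (index, value) for samples far outside the recent baseline.

    Each sample is compared with the mean and population standard deviation
    of the previous `window` normal samples. Flagged samples are kept out of
    the baseline so a single spike does not distort later comparisons.
    """
    history: deque[float] = deque(maxlen=window)
    for i, value in enumerate(samples):
        if len(history) == window:
            mu = mean(history)
            sigma = pstdev(history)
            deviation = abs(value - mu)
            # On a perfectly flat baseline, any change counts as anomalous.
            is_anomaly = deviation > threshold * sigma if sigma > 0 else deviation > 0
            if is_anomaly:
                yield i, value
                continue
        history.append(value)


if __name__ == "__main__":
    # Hypothetical CPU utilisation samples (%) with one spike.
    cpu = [41, 43, 40, 42, 44, 41, 43, 42, 40, 42, 43, 97, 42, 41]
    for index, value in rolling_zscore_anomalies(cpu):
        print(f"sample {index}: {value}% CPU looks anomalous")
```

With the sample data only the spike at index 11 is reported. A production system would add seasonality handling and alert suppression, which is where the more sophisticated ML approaches mentioned above come in.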

In October 2023, Siemens and Microsoft partnered to drive cross-industry AI adoption, unveiling Siemens Industrial Copilot, an AI-powered assistant developed collaboratively to enhance collaboration between humans and machines in the manufacturing sector.

Challenges

Complexity of Managing Diverse IT Environments

The complexity of managing various and quickly changing IT environments is one of the main challenges in the server automation market. Businesses increasingly use on-premises, multi-cloud, and hybrid cloud infrastructures, each with its own platforms, configurations, and security needs. Workflow automation in these diverse settings necessitates deep integration and advanced orchestration capabilities, both of which can be challenging to set up and maintain.

For instance, because its microservices architecture was dynamic and spread across several cloud providers, Netflix had trouble automating its infrastructure. Netflix created its own automation tools, such as Spinnaker, to handle continuous delivery pipelines in order to solve this issue; however, in order to guarantee flawless orchestration and lower deployment failure rates, a substantial investment in engineering resources was necessary.

Regional Analysis

North America remains the largest market for server automation, driven by early adoption of cloud infrastructure, advanced DevOps practices, and the presence of major technology players like Amazon Web Services (AWS), Microsoft, and IBM. The market is expanding as the U.S. government and financial sectors increasingly adopt automation for higher uptime and compliance. Growing digitalization initiatives in Europe, particularly in Germany, the UK, and the Nordics, are encouraging adoption; however, stringent data privacy and compliance laws (such as GDPR) necessitate highly specialized automation solutions. Meanwhile, the Asia-Pacific region is emerging as the fastest-growing market, led by cloud infrastructure expansion and enterprise IT modernization in countries like India, China, and Singapore. For example, in 2024, Tata Consultancy Services (TCS) announced a major investment in AI-based automation platforms for its clients across Southeast Asia, highlighting the region’s rapid move toward scalable infrastructure.

In South America, adoption is gaining traction, particularly in Brazil and Chile, where businesses are modernizing data centers and integrating hybrid cloud platforms. Local tech firms are increasingly leveraging automation to cut costs and improve service uptime, especially in sectors like telecommunications and retail. Meanwhile, the Middle East & Africa (MEA) region is emerging as a niche but promising market. Countries like the UAE and Saudi Arabia are accelerating their digital transformation agendas under initiatives like Saudi Vision 2030, with increased investment in smart infrastructure and cloud automation. For instance, in 2025, Saudi Aramco partnered with local IT firms to automate its data center operations, highlighting regional momentum despite skills shortages and legacy IT barriers in parts of Africa.

Competitor Analysis (in brief)

Key players in the global Server Automation Market include:

Micro Focus and BMC Software: Long-standing leaders offering comprehensive server management suites.

Broadcom: Known for infrastructure automation software, including its CA Technologies and VMware portfolios.

Red Hat: Popular for open-source automation through Ansible.

IBM and Microsoft: Leverage AI for predictive analytics and cloud integration.

Tencent and Alibaba: Driving innovation in the Asia-Pacific region.

Dell and NetApp: Offer hardware-integrated automation capabilities.

These companies focus on partnerships, R&D in AI-driven automation, acquisitions, and expanding cloud-native capabilities to maintain market dominance.

In May 2025, Salesforce announced plans to acquire the data management platform Informatica for approximately $8 billion. The acquisition is intended to strengthen Salesforce's data management capabilities and enhance its AI functionality, particularly AI agents that automate tasks.

In January 2024, Hitachi Vantara and Cisco introduced Hitachi EverFlex with Cisco Powered Hybrid Cloud, combining their networking and storage expertise to provide flexible, pay-per-use solutions for businesses moving to consumption-based business models.

In January 2024, Synopsys announced that it would acquire Ansys, a well-known provider of engineering simulation software, for about $35 billion. The deal aims to improve solutions for the automotive, aerospace, and industrial sectors by combining Ansys' simulation capabilities with Synopsys' electronic design automation tools.

In April 2024, IBM announced the acquisition of HashiCorp, the developer of Terraform, for $6.4 billion to bolster its cloud and AI automation capabilities.

In October 2023, Rockwell Automation and Microsoft extended their collaboration to apply generative AI capabilities, aiming to improve the productivity of industrial automation systems and shorten time-to-market.

Global Server Automation Market: Market Segmentation Analysis

This report provides an in-depth look at the global Server Automation market, covering all its essential aspects. Coverage ranges from a macro overview of the market to micro-level details such as market size, competitive landscape, development trends, niche markets, key market drivers and challenges, SWOT analysis, and value chain analysis.

The analysis helps readers understand competition within the industry and shape strategies for the competitive environment to improve potential profit. It also provides a simple framework for evaluating and assessing a business organization's position. The report also focuses on the competitive landscape of the Global Server Automation Market, detailing the market share, market performance, product situation, and operations of the main players. This helps industry readers identify their main competitors and understand the market's competitive pattern in depth.

In short, this report is a must-read for industry players, investors, researchers, consultants, business strategists, and anyone who has a stake in, or is planning to enter, the Server Automation market in any manner.

Market Segmentation (by Type)

Software

Configuration Management

Patch Management

Workflow Automation

Service

Professional Services

Managed Services

Market Segmentation (by Deployment Mode)

On-Premise

Cloud-Based

Market Segmentation (by Organization Size)

Large Enterprises

SMEs

Market Segmentation (by End-User Industry)

IT & Telecom

BFSI

Healthcare

Retail & E-commerce

Manufacturing

Government & Defense

Energy & Utilities

Others

Key Company

Micro Focus

BMC Software

Broadcom

Riverturn

Red Hat

HP

IBM

Bizagi

Microsoft

ServerTribe

Dell

NetApp

Tencent

Alibaba

Geographic Segmentation

North America (USA, Canada, Mexico)

Europe (Germany, UK, France, Russia, Italy, Rest of Europe)

Asia-Pacific (China, Japan, South Korea, India, Southeast Asia, Rest of Asia-Pacific)

South America (Brazil, Argentina, Colombia, Rest of South America)

The Middle East and Africa (Saudi Arabia, UAE, Egypt, Nigeria, South Africa, Rest of MEA)

FAQ

▶ What is the current market size of the Server Automation Market?

The global Server Automation Market was valued at US$ 3.89 billion in 2024, with projections reaching US$ 8.67 billion by 2030.

▶ Which are the key companies operating in the Server Automation Market?

Key players include Micro Focus, BMC Software, Broadcom, Red Hat, IBM, Microsoft, Tencent, and Dell, among others.

▶ What are the key growth drivers in the Server Automation Market?

Drivers include rising IT infrastructure complexity, increased cloud adoption, operational efficiency goals, and the demand for AI-driven server automation.

▶ Which regions dominate the Server Automation Market?

North America leads the market, followed by Europe and Asia-Pacific, with Asia-Pacific being the fastest-growing region.

▶ What are the emerging trends in the Server Automation Market?

Trends include AI-powered automation, self-healing systems, container-based automation, and hybrid cloud management.
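The FAQ gives two market-size figures but no growth rate. The compound annual growth rate (CAGR) they imply can be checked with a short calculation. This is a sketch that assumes the US$ 3.89 billion (2024) and US$ 8.67 billion (2030) values are endpoints six years apart with steady compound growth; the report itself does not state a CAGR, and `cagr` is a hypothetical helper name.

```python
# Implied compound annual growth rate (CAGR) from the report's two market-size figures.
# Assumption: 2024 and 2030 values are treated as endpoints of steady compound growth.

def cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the constant annual rate that grows start_value into end_value over `years`."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


if __name__ == "__main__":
    rate = cagr(3.89, 8.67, 2030 - 2024)  # US$ billions, 2024 -> 2030
    print(f"Implied CAGR 2024-2030: {rate:.1%}")
```

Under these assumptions the figures work out to roughly 14.3% growth per year. A different base year or growth profile would give a different rate.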

Get a free sample of this report at: https://www.intelmarketresearch.com/download-free-sample/912/Server-Automation-Market


Best Software Training Institute in Hyderabad – Monopoly ITSolutions

Best Software Training Institute in Hyderabad, Kukatpally, KPHB

In today’s competitive job market, having the right technical skills is essential for launching a successful IT career. The Best Software Training Institute in Hyderabad, Kukatpally, KPHB offers a wide range of career-focused courses designed to equip students with real-time project experience and job-ready expertise. Whether you're a beginner or a professional looking to upskill, choosing the right institute in Kukatpally can make a significant difference in your career path.

Comprehensive Course Offerings

The best software training institutes in Kukatpally offer a robust lineup of technology courses that are constantly updated with the latest industry trends. Here are the top programs offered:

.NET Full Stack Development

This course covers everything from front end to back end using Microsoft technologies. You will learn C#, ASP.NET MVC, Web API, ADO.NET, and SQL Server. The program also includes front-end tools such as HTML5, CSS3, JavaScript, Bootstrap, and Angular. Students will build real-world enterprise-level applications, preparing them for roles in both product-based and service-based companies.

Java Full Stack Development

Java remains a staple in enterprise application development. This full stack course covers Core Java, OOPs, Collections, JDBC, Servlets, JSP, and frameworks like Spring, Spring Boot, and Hibernate. On the front end, you’ll learn Angular or React to complete your stack. Real-time project building and deployment on servers will give you hands-on experience.

Python with Django

Both beginners and professionals enjoy Python's simplicity and flexibility. This course starts with Python basics, data structures, and object-oriented programming, then advances into Django, RESTful APIs, MySQL/PostgreSQL integration, and deployment. It is ideal for those who are interested in web development or want to prepare for a career in data science.

Angular

The Angular framework lets you build scalable single-page applications (SPAs). Learn TypeScript, component-based architecture, services, HttpClient, reactive forms, routing, and third-party library integrations. The course includes building dynamic dashboards and enterprise apps using RESTful APIs and backend integration.

React

React is widely used for developing fast, interactive user interfaces. This course covers JSX, props, state management, lifecycle methods, Hooks, the Context API, Redux, and routing. Students will also work with component-based architecture and build complete web apps with real API integration. React is especially important for those aiming to work in front-end development or on the MERN stack.

Data Science

Professionals looking to transition into analytics or artificial intelligence can benefit from this specialized course. The curriculum includes Python for data analysis, NumPy, Pandas, Matplotlib, Seaborn, statistics, machine learning algorithms, data preprocessing, model evaluation, and deployment. Tools such as Jupyter Notebook, Scikit-learn, and TensorFlow are introduced through real-life case studies.

Key Features of the Institute

Industry-Experienced Trainers: Learn from certified professionals with hands-on experience in top IT companies.

Real-Time Projects: Gain practical experience by working on real-world case studies and applications.

Resume & Interview Support: Resume-building sessions, mock interviews, and HR support to help you land job offers.

Student Success and Placement Support

Leading training institutes in Kukatpally not only focus on technical knowledge but also prepare students for real job scenarios. From interview preparation to placement drives, students receive complete career support. Many have secured jobs in top MNCs and IT startups after completing their training.

Conclusion

Making the right choice when it comes to software training is crucial to shaping your career in IT. If you’re ready to build expertise in technologies like .NET, Java, Python, Angular, React, and Data Science, look no further than Monopoly IT Solutions. Located in the heart of Kukatpally, we are committed to transforming learners into skilled professionals ready for today’s digital world.

#DotNet training #Job training institute in hyderabad #Software training in KPHB #Job training in JNTU Kukatpally


Best .NET Full Stack Development Training Institutes

If you're looking for the best software training institute in Hyderabad, choosing the right place to learn .NET Full Stack Development is essential for launching a successful tech career. With the IT industry’s high demand for full stack developers, mastering both frontend and backend using Microsoft's robust .NET ecosystem can open up excellent opportunities.

Why Choose .NET Full Stack Development?

A .NET Full Stack Developer is skilled in building complete web applications using technologies like C#, ASP.NET, Entity Framework, SQL Server on the backend, and HTML, CSS, JavaScript, and Angular or React on the frontend. This combination is widely used in enterprise-level applications, making it a smart career path for both beginners and experienced developers.

What Makes a Training Institute Stand Out?

When evaluating training institutes, consider:

Experienced Faculty: Trainers with real-world industry experience make a huge difference.

Comprehensive Curriculum: The program should cover both theory and practical projects.

Hands-On Learning: Look for institutes offering real-time projects and code walkthroughs.

Placement Support: Resume building, mock interviews, and placement drives help students land jobs faster.

Features Offered by Leading Institutes

Top .NET Full Stack institutes offer:

Flexible batch timings (weekdays/weekends)

Online and classroom options

Lifetime access to course materials

Doubt-clearing sessions and project support

Conclusion

Choosing the right institute can be the difference between just learning and truly mastering .NET Full Stack development. For high-quality training, expert mentorship, and dedicated placement assistance, we highly recommend Monopoly IT Solutions. Known for its practical approach and student-focused training, Monopoly IT Solutions is a top choice for anyone serious about becoming a skilled full stack developer.


Move Ahead with Confidence: Microsoft Training Courses That Power Your Potential

Why Microsoft Skills Are a Must-Have in Modern IT

Microsoft technologies power the digital backbone of countless businesses, from small startups to global enterprises. Tools such as Microsoft Azure, Power Platform, and Microsoft 365 are central to cloud computing, collaboration, security, and business intelligence. As companies adopt and scale these technologies, they need skilled professionals to configure, manage, and secure their Microsoft environments. Whether you work in infrastructure, development, analytics, or administration, Microsoft skills help you stay relevant and advance your career.