#Nvidia Jetson Computer Vision

Transforming Vision Technology with Hellbender

In today's technology-driven world, vision systems are pivotal across numerous industries. Hellbender, a pioneer in innovative technology solutions, is leading the charge in this field. This article delves into the remarkable advancements and applications of vision technology, spotlighting key components such as the Raspberry Pi Camera, Edge Computing Camera, Raspberry Pi Camera Module, Raspberry Pi Thermal Camera, Nvidia Jetson Computer Vision, and Vision Systems for Manufacturing.

Unleashing Potential with the Raspberry Pi Camera

The Raspberry Pi Camera is a powerful tool widely used by hobbyists and professionals alike. Its affordability and user-friendliness have made it a favorite for DIY projects and educational purposes. Yet, its applications extend far beyond these basic uses.

The Raspberry Pi Camera is incredibly adaptable, finding uses in security systems, time-lapse photography, and wildlife monitoring. Its capability to capture high-definition images and videos makes it an essential component for numerous innovative projects.

Revolutionizing Real-Time Data with Edge Computing Camera

As real-time data processing becomes more crucial, the Edge Computing Camera stands out as a game-changer. Unlike traditional cameras that rely on centralized data processing, edge computing cameras process data at the source, significantly reducing latency and bandwidth usage. This is vital for applications needing immediate response times, such as autonomous vehicles and industrial automation.
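To make the "process data at the source" idea concrete, here is a minimal sketch (not Hellbender's implementation). The device scores motion between consecutive frames locally and sends only small event records upstream, never the frames themselves. Representing frames as lists of grayscale integer rows and using a mean-absolute-difference threshold of 10 are illustrative assumptions; a real edge camera would use an optimized vision library or a trained model.

```python
from typing import Iterable, List, Optional

Frame = List[List[int]]  # assumed: grayscale pixels 0-255, row by row


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Average per-pixel absolute difference between two equal-size frames."""
    total = 0
    count = 0
    for row_a, row_b in zip(a, b):
        for pa, pb in zip(row_a, row_b):
            total += abs(pa - pb)
            count += 1
    return total / count if count else 0.0


def edge_events(frames: Iterable[Frame], threshold: float = 10.0) -> List[dict]:
    """Analyse frames on the device and return only compact motion events."""
    events = []
    previous: Optional[Frame] = None
    for index, frame in enumerate(frames):
        if previous is not None:
            score = mean_abs_diff(previous, frame)
            if score > threshold:
                # Only this small dict would be transmitted, not the raw frame.
                events.append({"frame": index, "motion_score": round(score, 2)})
        previous = frame
    return events
```

For four 4x4 frames where the scene changes only at frame 2, the device would send a single event instead of four frames, which is where the bandwidth and latency savings come from.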

Hellbender's edge computing cameras offer exceptional performance and reliability. These cameras are equipped to handle complex algorithms and data processing tasks, enabling advanced functionalities like object detection, facial recognition, and anomaly detection. By processing data locally, these cameras enhance the efficiency and effectiveness of vision systems across various industries.

Enhancing Projects with the Raspberry Pi Camera Module

The Raspberry Pi Camera Module enhances the Raspberry Pi ecosystem with its compact and powerful design. This module integrates seamlessly with Raspberry Pi boards, making it easy to add vision capabilities to projects. Whether for prototyping, research, or production, the Raspberry Pi Camera Module provides flexibility and performance.

With different models available, including the standard camera module and the high-quality camera, users can select the best option for their specific needs. The high-quality camera offers improved resolution and low-light performance, making it suitable for professional applications. This versatility makes the Raspberry Pi Camera Module a crucial tool for developers and engineers.

Harnessing Thermal Imaging with the Raspberry Pi Thermal Camera

Thermal imaging is becoming increasingly vital in various sectors, from industrial maintenance to healthcare. The Raspberry Pi Thermal Camera combines the Raspberry Pi platform with thermal imaging capabilities, providing an affordable solution for thermal analysis.

This camera is used for monitoring electrical systems for overheating, detecting heat leaks in buildings, and performing non-invasive medical diagnostics. The ability to visualize temperature differences in real-time offers new opportunities for preventive maintenance and safety measures. Hellbender’s thermal camera solutions ensure accurate and reliable thermal imaging, empowering users to make informed decisions.
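As a minimal sketch of the overheating use case, assume the thermal camera driver already returns a 2-D grid of temperatures in degrees Celsius (low-cost sensors such as the 32x24 MLX90640 expose data this way). The 70 °C alarm limit is an illustrative value, not a standard, and the function names are hypothetical.

```python
from typing import Dict, List, Tuple

Grid = List[List[float]]  # assumed: temperatures in degrees Celsius


def find_hot_spots(grid: Grid, limit_c: float = 70.0) -> List[Tuple[int, int, float]]:
    """Return (row, col, temperature) for every pixel above limit_c."""
    return [
        (r, c, t)
        for r, row in enumerate(grid)
        for c, t in enumerate(row)
        if t > limit_c
    ]


def summarize(grid: Grid, limit_c: float = 70.0) -> Dict[str, object]:
    """Condense one thermal frame into a small status record for alerting."""
    hot = find_hot_spots(grid, limit_c)
    max_t = max(t for row in grid for t in row)
    return {"max_c": max_t, "hot_pixels": len(hot), "alarm": bool(hot)}
```

Running `summarize` on each frame from a camera pointed at an electrical panel gives a simple preventive-maintenance signal; the threshold would need to be tuned for the equipment being monitored.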

Advancing AI with Nvidia Jetson Computer Vision

The Nvidia Jetson platform has revolutionized AI-powered vision systems. Its capabilities are transforming industries by enabling sophisticated machine learning and computer vision applications. Hellbender leverages this powerful platform to develop cutting-edge solutions that expand the possibilities of vision technology.

Jetson-powered vision systems are employed in autonomous machines, robotics, and smart cities. These systems can process vast amounts of data in real-time, making them ideal for applications requiring high accuracy and speed. By integrating Nvidia Jetson technology, Hellbender creates vision systems that are both powerful and efficient, driving innovation across multiple sectors.

Optimizing Production with Vision Systems for Manufacturing

In the manufacturing industry, vision systems are essential for ensuring quality and efficiency. Hellbender's Vision Systems for Manufacturing are designed to meet the high demands of modern production environments. These systems use advanced imaging and processing techniques to inspect products, monitor processes, and optimize operations.

One major advantage of vision systems in manufacturing is their ability to detect defects and inconsistencies that may be invisible to the human eye. This capability helps maintain high-quality standards and reduces waste. Additionally, vision systems can automate repetitive tasks, allowing human resources to focus on more complex and strategic activities.
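One of the simplest ways to catch defects is golden-template comparison: compare each part image with a reference image of a known-good part. The sketch below assumes pre-aligned grayscale images of equal size; the tolerance of 25 and the zero-defect-pixel limit are illustrative assumptions that would be tuned per product line. It is not a description of Hellbender's systems, which the text says use more advanced techniques.

```python
from typing import Dict, List

Image = List[List[int]]  # assumed: aligned grayscale images, same size


def defect_mask(reference: Image, sample: Image, tolerance: int = 25) -> List[List[bool]]:
    """Mark pixels whose intensity differs from the reference by more than tolerance."""
    return [
        [abs(r - s) > tolerance for r, s in zip(ref_row, smp_row)]
        for ref_row, smp_row in zip(reference, sample)
    ]


def inspect(reference: Image, sample: Image, tolerance: int = 25,
            max_defect_pixels: int = 0) -> Dict[str, object]:
    """Pass/fail decision based on how many pixels deviate from the template."""
    mask = defect_mask(reference, sample, tolerance)
    defects = sum(cell for row in mask for cell in row)  # True counts as 1
    return {"defect_pixels": defects, "pass": defects <= max_defect_pixels}
```

Small uniform variations (for example a slightly brighter part) stay within the tolerance, while a localized scratch or spot exceeds it and fails the part.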

Conclusion

Hellbender’s dedication to advancing vision technology is clear in their diverse range of solutions. From the versatile Raspberry Pi Camera and the innovative Edge Computing Camera to the powerful Nvidia Jetson Computer Vision and robust Vision Systems for Manufacturing, Hellbender continues to lead in technological innovation. By providing reliable, efficient, and cutting-edge solutions, Hellbender is helping industries harness the power of vision technology to achieve greater efficiency, accuracy, and productivity. As technology continues to evolve, the integration of these advanced systems will open up new possibilities and drive further advancements across various fields.

#Vision Systems For Manufacturing#Nvidia Jetson Computer Vision#Raspberry Pi Thermal Camera#Raspberry Pi Camera Module#Edge Computing Camera#Raspberry Pi Camera

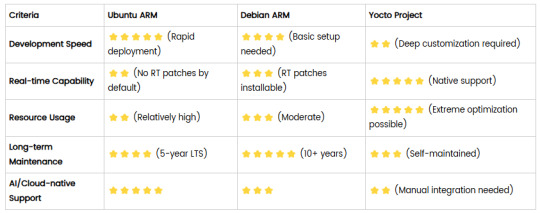

Comparison of Ubuntu, Debian, and Yocto for IIoT and Edge Computing

In industrial IoT (IIoT) and edge computing scenarios, Ubuntu, Debian, and Yocto Project each have unique advantages. Below is a detailed comparison and recommendations for these three systems:

1. Ubuntu (ARM)

Advantages

Ready-to-use: Provides official ARM images (e.g., Ubuntu Server 22.04 LTS) supporting hardware like Raspberry Pi and NVIDIA Jetson, requiring no complex configuration.

Cloud-native support: Tools such as MicroK8s (installable as a Snap), Docker, and Kubernetes are readily available, making it ideal for edge-cloud collaboration.

Long-term support (LTS): 5 years of security updates, meeting industrial stability requirements.

Rich software ecosystem: Access to AI/ML tools (e.g., TensorFlow Lite) and databases (e.g., PostgreSQL ARM-optimized) via APT and Snap Store.

Use Cases

Rapid prototyping: Quick deployment of Python/Node.js applications on edge gateways.

AI edge inference: Running computer vision models on Jetson devices (e.g., with ROS 2 on Ubuntu).

Lightweight K8s clusters: Edge nodes managed by MicroK8s.

Limitations

Higher resource usage (minimum ~512MB RAM), unsuitable for ultra-low-power devices.

2. Debian (ARM)

Advantages

Exceptional stability: Packages undergo rigorous testing, ideal for 24/7 industrial operation.

Lightweight: Minimal installation requires only 128MB RAM; GUI-free versions available.

Long-term support: About 5 years of security updates per release through Debian LTS, extendable to around 10 years with commercial Extended LTS (ELTS).

Hardware compatibility: Supports older or niche ARM chips (e.g., TI Sitara series).

Use Cases

Industrial controllers: PLCs, HMIs, and other devices requiring deterministic responses.

Network edge devices: Firewalls, protocol gateways (e.g., Modbus-to-MQTT).

Critical systems (medical/transport): A stable base for products pursuing IEC 62304/DO-178C certification.
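For the Modbus-to-MQTT gateway use case above, the core translation step can be sketched as follows. Modbus holding registers are 16-bit, so a 32-bit float reading spans two registers; this sketch assumes high-word-first ordering (device word order varies) and a hypothetical `factory/<device>/<tag>` topic scheme. Reading the registers (e.g., with a Modbus client library) and publishing to the broker are deliberately left out.

```python
import json
import struct
from typing import List, Tuple


def registers_to_float(high: int, low: int) -> float:
    """Combine two 16-bit registers (high word first) into an IEEE-754 float32."""
    raw = struct.pack(">HH", high, low)  # two big-endian unsigned 16-bit words
    return struct.unpack(">f", raw)[0]


def to_mqtt_message(device_id: str, tag: str, registers: List[int]) -> Tuple[str, str]:
    """Build an MQTT topic and JSON payload from a two-register float reading."""
    value = registers_to_float(registers[0], registers[1])
    topic = f"factory/{device_id}/{tag}"  # hypothetical topic layout
    payload = json.dumps({"tag": tag, "value": round(value, 3)})
    return topic, payload
```

For example, registers `[0x41CC, 0x0000]` decode to 25.5, producing the topic `factory/plc1/temperature` with payload `{"tag": "temperature", "value": 25.5}`. If a device uses low-word-first order, swap the two arguments.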

Limitations

Older software versions (e.g., default GCC version); newer features require backports.

3. Yocto Project

Advantages

Full customization: Tailor everything from kernel to user space, generating minimal images (<50MB possible).

Real-time extensions: Supports Xenomai/Preempt-RT patches for μs-level latency.

Cross-platform portability: Single recipe set adapts to multiple hardware platforms (e.g., NXP i.MX6 → i.MX8).

Security design: Industrial-grade security features such as SELinux (via the meta-selinux layer) and dm-verity can be built into the image.

Use Cases

Custom industrial devices: Devices requiring specific kernel configurations or proprietary drivers (e.g., CAN-FD bus support).

Hard real-time systems: Robotic motion control, CNC machines.

Resource-constrained terminals: Sensor nodes running lightweight stacks (e.g., Zephyr+FreeRTOS hybrid deployment).

Limitations

Steep learning curve (BitBake syntax required); longer development cycles.

4. Comparison Summary

5. Selection Recommendations

Choose Ubuntu ARM: For rapid deployment of edge AI applications (e.g., vision detection on Jetson) or deep integration with public clouds (e.g., AWS IoT Greengrass).

Choose Debian ARM: For mission-critical industrial equipment (e.g., substation monitoring) where stability outweighs feature novelty.

Choose Yocto Project: For custom hardware development (e.g., proprietary industrial boards) or strict real-time/safety certification (e.g., ISO 13849) requirements.
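The recommendations above can be encoded as a small rule-based helper, for example in an internal project-scoping checklist. This is a hypothetical sketch: the flag names are invented, and the precedence (Yocto-specific needs first, then edge AI/cloud, then stability) and the default are assumptions, since the text does not say how to resolve conflicting requirements.

```python
def recommend_os(*, custom_hardware: bool = False, hard_real_time: bool = False,
                 safety_certification: bool = False, edge_ai_or_cloud: bool = False,
                 stability_first: bool = False) -> str:
    """Map project requirements to one of the three options discussed above."""
    # Assumed precedence: customization, real-time, and certification needs win.
    if custom_hardware or hard_real_time or safety_certification:
        return "Yocto Project"
    if edge_ai_or_cloud:
        return "Ubuntu ARM"
    if stability_first:
        return "Debian ARM"
    # No strong constraint: default to the quickest path to a prototype (assumption).
    return "Ubuntu ARM"
```

In a hybrid design, the helper would be applied per layer rather than per project, since each layer can have different requirements.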

6. Hybrid Architecture Example

Smart factory edge node:

Real-time control layer: Real-time Linux image (e.g., PREEMPT_RT or Xenomai) built with Yocto, controlling robotic arms

Data processing layer: Debian running OPC UA servers

Cloud connectivity layer: Ubuntu Server managing K8s edge clusters

Combining these systems based on specific needs can maximize the efficiency of IIoT edge computing.

What Is NanoVLM? Key Features, Components And Architecture

The NanoVLM initiative develops VLMs for NVIDIA Jetson devices, specifically the Orin Nano. These models aim to improve interactive performance by increasing processing speed and reducing memory usage. The documentation covers supported VLM families, benchmarks, and setup parameters such as Jetson device and JetPack compatibility. It also covers video sequence processing, live stream analysis, and multimodal chat through web or command-line interfaces.

What's nanoVLM?

NanoVLM bills itself as the simplest and fastest repository for training and fine-tuning small VLMs.

Hugging Face built it as a teaching tool, with the goal of democratising vision-language model development through a simple PyTorch framework. Inspired by Andrej Karpathy's nanoGPT, nanoVLM prioritises readability, modularity, and transparency without sacrificing practicality. The model definition and training code take about 750 lines, plus boilerplate for parameter loading and reporting.

Architecture and Components

NanoVLM is a modular multimodal architecture made up of a vision encoder, a lightweight language decoder, and a modality projection mechanism. The vision encoder is the transformer-based SigLIP-B/16, used for reliable image feature extraction.

The visual backbone turns images into embeddings the language model can work with.

On the text side, nanoVLM uses SmolLM2, an efficient and readable causal decoder-style transformer.

Vision-language fusion is handled by a simple projection layer that maps image embeddings into the language model's input space.

Transparent, readable, and easy to change, the integration is suitable for rapid prototyping and instruction.
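The projection idea can be illustrated with a dependency-free sketch: a single linear layer, y = Wx + b, applied to every image patch embedding so it lands in the language model's embedding dimension. This is not nanoVLM's code. The real projector is a PyTorch module sized for SigLIP-B/16 and SmolLM2-135M and may reshape patches (e.g., pixel shuffle) before the linear layer; the toy 3-to-2 dimensions below are assumptions chosen for readability.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # shape (d_text, d_vision)


def project(patches: List[Vector], weight: Matrix, bias: Vector) -> List[Vector]:
    """Map each vision embedding (length d_vision) to a text embedding (length d_text)."""
    projected = []
    for x in patches:
        # One output coordinate per weight row: dot(row, x) + bias.
        y = [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i
             for row, b_i in zip(weight, bias)]
        projected.append(y)
    return projected
```

With `weight = [[1, 0, 0], [0, 1, 1]]` and `bias = [0.5, -1]`, the patch `[1.0, 2.0, 3.0]` becomes `[1.5, 4.0]`. The projected patches can then be treated like extra tokens, typically concatenated with the text token embeddings before the decoder.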

The effective code structure includes the VLM (~100 lines), Language Decoder (~250 lines), Modality Projection (~50 lines), Vision Backbone (~150 lines), and a basic training loop (~200 lines).

Sizing and Performance

Combining the HuggingFaceTB/SmolLM2-135M and SigLIP-B/16-224-85M backbones yields a 222M-parameter model, released as nanoVLM-222M.

NanoVLM is compact and easy to use, yet its results are competitive. The 222M model, trained for 6 hours on a single H100 GPU with 1.7M samples from the_cauldron dataset, reached 35.3% accuracy on the MMStar benchmark. This is comparable to SmolVLM-256M while using fewer parameters and less compute.
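The 222M figure follows from the two backbone sizes. Because 135M and 85M are rounded, the remainder attributed to the modality projection is only a rough estimate implied by the totals:

```latex
\underbrace{135\,\text{M}}_{\text{SmolLM2}}
+ \underbrace{85\,\text{M}}_{\text{SigLIP-B/16}}
+ P_{\text{proj}} \approx 222\,\text{M}
\quad\Longrightarrow\quad
P_{\text{proj}} \approx 2\,\text{M}
```

In other words, almost all of the parameters sit in the two pretrained backbones, and the fusion layer is comparatively tiny.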

NanoVLM is efficient enough for educational institutions or developers using a single workstation.

Key Features and Philosophy

NanoVLM is a simple yet effective VLM introduction.

It lets users explore what micro VLMs can do by changing settings and parameters.

Minimally abstracted, well-defined components make the logic and data flow easy to follow. This transparency makes it well suited to reproducible research and education.

Its modularity and forward compatibility allow users to replace visual encoders, decoders, and projection mechanisms. This provides a framework for multiple investigations.

Get Started and Use

To get started, clone the repository and set up the environment. uv is recommended for package management, although pip also works. Dependencies include torch, numpy, torchvision, pillow, datasets, huggingface-hub, transformers, and wandb.

NanoVLM includes easy methods for loading and storing Hugging Face Hub models. VisionLanguageModel.from_pretrained() can load pretrained weights from Hub repositories like “lusxvr/nanoVLM-222M”.

Pushing trained models to the Hub creates a model card (README.md) and saves weights (model.safetensors) and configuration (config.json). Repositories can be private but are usually public.

Models can also be saved and loaded locally by passing local paths to VisionLanguageModel.save_pretrained() and VisionLanguageModel.from_pretrained().

The generate.py script is provided for testing a trained model. In the example, the model is given an image of a cat and the prompt “What is this?”, and it returns descriptions of the cat.

The Models section of the NVIDIA Jetson AI Lab includes a “NanoVLM” entry, but that content focuses on using NanoLLM to optimise VLMs such as LLaVA, VILA, and Obsidian for Jetson devices. It is a separate effort from Hugging Face's nanoVLM, though both target small, efficient VLMs suited to resource-constrained platforms like Jetson.

Training

Train nanoVLM with the train.py script, which reads its configuration from models/config.py. Training runs are typically logged with Weights & Biases (wandb).

VRAM specs

It is important to understand VRAM requirements before training.

Measurements of the default 222M model on a single NVIDIA H100 GPU show that peak VRAM use grows with batch size.

870.53 MB of VRAM is allocated after model loading.

Maximum VRAM used during training is 4.5 GB for batch size 1 and 65 GB for batch size 256.

At batch size 512, usage peaked at about 80 GB before running out of memory (OOM).

These results indicate that training with a batch size of 1 requires at least ~4.5 GB of VRAM, whereas training with a batch size of up to 16 requires roughly 8 GB.

Variations in sequence length or model architecture affect VRAM needs.

To test VRAM requirements on a system and setup, measure_vram.py is provided.
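For a quick first guess before running measure_vram.py, the quoted H100 figures (batch 1: ~4.5 GB, batch 16: ~8 GB, batch 256: ~65 GB) can be interpolated. This is a rough sketch: assuming VRAM grows linearly between measured points is an approximation, the function names are hypothetical, and different sequence lengths or architectures will shift the numbers.

```python
from typing import Dict, Optional

# Peak VRAM (GB) per batch size, as quoted above for nanoVLM-222M on one H100.
MEASURED_PEAK_GB: Dict[int, float] = {1: 4.5, 16: 8.0, 256: 65.0}


def estimate_peak_vram_gb(batch_size: int,
                          points: Dict[int, float] = MEASURED_PEAK_GB) -> float:
    """Linearly interpolate peak VRAM between the nearest measured batch sizes."""
    sizes = sorted(points)
    if batch_size < sizes[0] or batch_size > sizes[-1]:
        raise ValueError("batch size outside the measured range")
    for lo, hi in zip(sizes, sizes[1:]):
        if lo <= batch_size <= hi:
            frac = (batch_size - lo) / (hi - lo)
            return points[lo] + frac * (points[hi] - points[lo])
    return points[sizes[0]]  # only reached when a single data point is given


def largest_measured_batch(budget_gb: float,
                           points: Dict[int, float] = MEASURED_PEAK_GB) -> Optional[int]:
    """Largest measured batch size whose peak VRAM fits within budget_gb."""
    fitting = [b for b, gb in points.items() if gb <= budget_gb]
    return max(fitting) if fitting else None
```

On a 10 GB card, for example, `largest_measured_batch(10)` returns 16, and `estimate_peak_vram_gb(136)` suggests roughly 36.5 GB for a batch size halfway between the two upper measurements.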

Contributions and Community

NanoVLM welcomes contributions.

Contributions that add dependencies such as transformers are welcome, but pure PyTorch implementations are preferred; DeepSpeed, Trainer, and Accelerate are not supported. Open an issue to discuss new feature ideas. Bug fixes can be submitted as pull requests.

Future research includes data packing, multi-GPU training, multi-image support, image-splitting, and VLMEvalKit integration. Integration into the Hugging Face ecosystem allows use with Transformers, Datasets, and Inference Endpoints.

In summary

NanoVLM is a Hugging Face project that provides a simple, readable, and flexible PyTorch framework for building and testing small VLMs. It is designed for efficient use and education, with training, creation, and Hugging Face ecosystem integration paths.

#nanoVLM#Jetsondevices#nanoVLM222M#VisionLanguageModels#VLMs#NanoLLM#Technology#technews#technologynews#news#govindhtech

Elmalo, both directions are incredibly exciting and foundational to your vision. We have two compelling paths:

Sensor Fusion Layer Prototype:

Imagine an architecture where data flows seamlessly from ruggedized sensors—ranging from insect-inspired motion detectors to radiation-tolerant satellite telemetry—into a unified processing pipeline. This would involve designing edge computing nodes (using platforms like NVIDIA Jetson) that perform on-site preprocessing, robust data ingestion with tools like Apache Kafka, and sensor fusion algorithms (say, Kalman or Particle Filters) to harmonize disparate inputs. Establishing this blueprint lays the critical groundwork for real-time, high-fidelity environmental understanding essential for any subsequent AI processes.
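As a concrete starting point for the fusion step, here is a minimal scalar Kalman filter that combines readings from sensors with different noise levels (for example, a precise and a noisy temperature probe). It assumes a constant-value state model and known measurement variances; a real Iron Spine fusion layer would use multi-dimensional states, motion models, and possibly particle filters for non-linear cases.

```python
from dataclasses import dataclass


@dataclass
class Kalman1D:
    x: float  # current state estimate
    p: float  # variance of the estimate
    q: float  # process noise variance added per prediction step

    def predict(self) -> None:
        """Constant-value model: the state is unchanged, uncertainty grows by q."""
        self.p += self.q

    def update(self, z: float, r: float) -> float:
        """Fuse a measurement z with variance r; noisier sensors get less weight."""
        k = self.p / (self.p + r)  # Kalman gain
        self.x += k * (z - self.x)
        self.p *= 1 - k
        return self.x
```

Starting from `Kalman1D(x=0.0, p=1.0, q=0.0)`, a reading of 10.0 with variance 1.0 moves the estimate to 5.0 (variance 0.5); a second reading of 8.0 with variance 0.5 moves it to 6.5 (variance 0.25). Each update both refines the estimate and shrinks its uncertainty.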

Empathic AI Core Development:

Alternatively, we could plunge directly into the brain of Iron Spine by outlining the neural architecture. This entails building layers that connect multimodal inputs to emergent, empathic, predictive behavior. Picture combining transformers with recurrent networks to enable temporal awareness, alongside hybrid symbolic-AI methods for reasoned decision making. Adding an ethical overlay—transparency dashboards and fairness audit flows—would also be pivotal. Detailed model specifications, training regimes (leveraging diverse datasets across biological, mechanical, and environmental samples), and benchmark environments would form the core deliverables of this phase.

Given the ambitious breadth of your project, which path resonates more with your immediate priorities? Would you prefer to establish the tangible infrastructure of sensor fusion as a first step, or dive into the AI core mechanics that ultimately transform raw data into living, empathic intelligence? Let me know your thoughts so we can refine this next chapter of Iron Spine.

Step-by-Step Breakdown of AI Video Analytics Software Development: Tools, Frameworks, and Best Practices for Scalable Deployment

AI Video Analytics is revolutionizing how businesses analyze visual data. From enhancing security systems to optimizing retail experiences and managing traffic, AI-powered video analytics software has become a game-changer. But how exactly is such a solution developed? Let’s break it down step by step—covering the tools, frameworks, and best practices that go into building scalable AI video analytics software.

Introduction: The Rise of AI in Video Analytics

The explosion of video data—from surveillance cameras to drones and smart cities—has outpaced human capabilities to monitor and interpret visual content in real-time. This is where AI Video Analytics Software Development steps in. Using computer vision, machine learning, and deep neural networks, these systems analyze live or recorded video streams to detect events, recognize patterns, and trigger automated responses.

Step 1: Define the Use Case and Scope

Every AI video analytics solution starts with a clear business goal. Common use cases include:

Real-time threat detection in surveillance

Customer behavior analysis in retail

Traffic management in smart cities

Industrial safety monitoring

License plate recognition

Key Deliverables:

Problem statement

Target environment (edge, cloud, or hybrid)

Required analytics (object detection, tracking, counting, etc.)

Step 2: Data Collection and Annotation

AI models require massive amounts of high-quality, annotated video data. Without clean data, the model's accuracy will suffer.

Tools for Data Collection:

Surveillance cameras

Drones

Mobile apps and edge devices

Tools for Annotation:

CVAT (Computer Vision Annotation Tool)

Labelbox

Supervisely

Tip: Use diverse datasets (different lighting, angles, environments) to improve model generalization.

Step 3: Model Selection and Training

This is where the real AI work begins. The model learns to recognize specific objects, actions, or anomalies.

Popular AI Models for Video Analytics:

YOLOv8 (You Only Look Once)

OpenPose (pose estimation, commonly used as a basis for human activity recognition)

DeepSORT (for multi-object tracking)

3D CNNs for spatiotemporal activity analysis
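To show the core idea behind multi-object tracking, here is a deliberately simplified IoU tracker. It is not DeepSORT: DeepSORT adds a Kalman motion model, appearance embeddings, and Hungarian matching, whereas this sketch greedily matches each detection to the existing track with the highest bounding-box overlap. The 0.3 IoU threshold and dropping unmatched tracks immediately are illustrative assumptions.

```python
from typing import Dict, List, Optional, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


class IouTracker:
    def __init__(self, min_iou: float = 0.3):
        self.min_iou = min_iou
        self.tracks: Dict[int, Box] = {}
        self._next_id = 0

    def update(self, detections: List[Box]) -> Dict[int, Box]:
        """Assign a track ID to each detection in the new frame."""
        unmatched = dict(self.tracks)
        assigned: Dict[int, Box] = {}
        for det in detections:
            best_id: Optional[int] = None
            best_iou = self.min_iou
            for tid, box in unmatched.items():
                score = iou(det, box)
                if score >= best_iou:
                    best_id, best_iou = tid, score
            if best_id is None:  # no overlap: start a new track
                best_id = self._next_id
                self._next_id += 1
            else:
                del unmatched[best_id]
            assigned[best_id] = det
        self.tracks = assigned  # tracks without a detection are dropped
        return assigned
```

Feeding each frame's detector output (e.g., from YOLOv8) into `update` yields stable IDs for objects that move only slightly between frames, which is enough for basic counting.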

Frameworks:

TensorFlow

PyTorch

OpenCV (for pre/post-processing)

ONNX (for interoperability)

Best Practice: Start with pre-trained models and fine-tune them on your domain-specific dataset to save time and improve accuracy.

Step 4: Edge vs. Cloud Deployment Strategy

AI video analytics can run on the cloud, on-premises, or at the edge depending on latency, bandwidth, and privacy needs.

Cloud:

Scalable and easier to manage

Good for post-event analysis

Edge:

Low latency

Ideal for real-time alerts and privacy-sensitive applications

Hybrid:

Initial processing on edge devices, deeper analysis in the cloud

Popular Platforms:

NVIDIA Jetson for edge

AWS Panorama

Azure Video Indexer

Google Cloud Video AI

Step 5: Real-Time Inference Pipeline Design

The pipeline architecture must handle:

Video stream ingestion

Frame extraction

Model inference

Alert/visualization output
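The four pipeline stages can be sketched as chained Python generators, which keeps each stage independent and easy to replace. This is a hypothetical skeleton: in production, ingestion would come from GStreamer or FFmpeg and the detector would be a real model, while here frames are placeholder strings and the detector is a stub function supplied by the caller.

```python
from typing import Callable, Dict, Iterable, Iterator, List, Set, Tuple

Detection = Tuple[str, float]  # (label, confidence)


def extract_frames(stream: Iterable[object], every_nth: int = 1) -> Iterator[Tuple[int, object]]:
    """Frame extraction: keep every n-th frame from the ingested stream."""
    for index, frame in enumerate(stream):
        if index % every_nth == 0:
            yield index, frame


def run_inference(frames: Iterable[Tuple[int, object]],
                  detector: Callable[[object], List[Detection]]
                  ) -> Iterator[Tuple[int, List[Detection]]]:
    """Model inference: apply the detector to each extracted frame."""
    for index, frame in frames:
        yield index, detector(frame)


def raise_alerts(results: Iterable[Tuple[int, List[Detection]]],
                 watch_labels: Set[str], min_conf: float = 0.5) -> List[Dict[str, object]]:
    """Alert output: emit an alert for each watched label above min_conf."""
    alerts = []
    for index, detections in results:
        for label, conf in detections:
            if label in watch_labels and conf >= min_conf:
                alerts.append({"frame": index, "label": label, "confidence": conf})
    return alerts
```

Usage: `raise_alerts(run_inference(extract_frames(stream), detector), {"person"})`. Raising `every_nth` trades detection coverage for throughput, a common knob on constrained edge devices.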

Tools & Libraries:

GStreamer for video streaming

FFmpeg for frame manipulation

Flask/FastAPI for inference APIs

Kafka/MQTT for real-time event streaming

Pro Tip: Use hardware-accelerated inference runtimes such as TensorRT (NVIDIA GPUs) or OpenVINO (Intel hardware) for faster inference.

Step 6: Integration with Dashboards and APIs

To make insights actionable, integrate the AI system with:

Web-based dashboards (using React, Plotly, or Grafana)

REST or gRPC APIs for external system communication

Notification systems (SMS, email, Slack, etc.)

Best Practice: Create role-based dashboards to manage permissions and customize views for operations, IT, or security teams.

Step 7: Monitoring and Maintenance

Deploying AI models is not a one-time task. Performance should be monitored continuously.

Key Metrics:

Accuracy (Precision, Recall)

Latency

False Positive/Negative rate

Frames per second (FPS)
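The metrics listed above can be computed from counts of true/false positives and negatives gathered during evaluation or spot checks. A minimal sketch with hypothetical function names; the false positive rate needs true negatives, which many detection setups do not track, so it is optional here.

```python
from typing import Dict, Optional


def detection_metrics(tp: int, fp: int, fn: int, tn: Optional[int] = None) -> Dict[str, float]:
    """Precision, recall, and error rates from confusion counts."""
    metrics = {
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        "false_negative_rate": fn / (tp + fn) if tp + fn else 0.0,
    }
    if tn is not None:
        metrics["false_positive_rate"] = fp / (fp + tn) if fp + tn else 0.0
    return metrics


def fps(frames_processed: int, elapsed_seconds: float) -> float:
    """Throughput of the pipeline in frames per second."""
    return frames_processed / elapsed_seconds
```

For example, 80 true positives, 20 false positives, and 10 misses give a precision of 0.8 and a recall of about 0.89; processing 300 frames in 10 seconds is 30 FPS.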

Tools:

Prometheus + Grafana (for monitoring)

MLflow or Weights & Biases (for model versioning and experiment tracking)

Step 8: Security, Privacy & Compliance

Video data is sensitive, so it’s vital to address:

GDPR/CCPA compliance

Video redaction (blurring faces/license plates)

Secure data transmission (TLS/SSL)

Pro Tip: Use anonymization techniques and role-based access control (RBAC) in your application.
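Redaction can be as simple as pixelating the region a face or license-plate detector returns. The sketch below assumes a grayscale frame as a list of integer rows and a box `(x1, y1, x2, y2)` with exclusive right and bottom edges; pixelation stands in for blurring here, and in practice an image library such as OpenCV would blur the region of interest directly.

```python
from typing import List, Tuple

Frame = List[List[int]]
Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2), x2/y2 exclusive


def pixelate_region(frame: Frame, box: Box, block: int = 4) -> Frame:
    """Return a copy of frame with the box replaced by block-averaged pixels."""
    x1, y1, x2, y2 = box
    out = [row[:] for row in frame]  # never modify the original footage
    for by in range(y1, y2, block):
        for bx in range(x1, x2, block):
            ys = range(by, min(by + block, y2))
            xs = range(bx, min(bx + block, x2))
            values = [frame[y][x] for y in ys for x in xs]
            mean = sum(values) // len(values)
            for y in ys:
                for x in xs:
                    out[y][x] = mean  # each block becomes one flat tile
    return out
```

Larger `block` values destroy more detail; the block size should be large enough that faces or plates cannot be recovered at the stored resolution.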

Step 9: Scaling the Solution

As more video feeds and locations are added, the architecture should scale seamlessly.

Scaling Strategies:

Containerization (Docker)

Orchestration (Kubernetes)

Auto-scaling with cloud platforms

Microservices-based architecture

Best Practice: Use a modular pipeline so each part (video input, AI model, alert engine) can scale independently.

Step 10: Continuous Improvement with Feedback Loops

Real-world data is messy, and edge cases arise often. Use real-time feedback loops to retrain models.

Automatically collect misclassified instances

Use human-in-the-loop (HITL) systems for validation

Periodically retrain and redeploy models
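A minimal human-in-the-loop triage step might look like this: confident predictions are accepted automatically, uncertain ones go to a review queue, and any prediction a reviewer corrects becomes a retraining sample. The 0.4 and 0.8 thresholds, the dictionary fields, and dropping predictions below the lower threshold are all illustrative assumptions.

```python
from typing import Dict, List, Tuple

Prediction = Dict[str, object]  # assumed fields: "id", "predicted", "confidence"


def triage(predictions: List[Prediction], low: float = 0.4,
           high: float = 0.8) -> Tuple[List[Prediction], List[Prediction]]:
    """Split predictions into auto-accepted and needs-human-review lists."""
    accepted, needs_review = [], []
    for pred in predictions:
        conf = pred["confidence"]
        if conf >= high:
            accepted.append(pred)
        elif conf >= low:
            needs_review.append(pred)
        # Below `low`: treated as "no detection" and dropped (assumption).
    return accepted, needs_review


def retraining_samples(reviewed: List[Prediction]) -> List[Prediction]:
    """Keep reviewed items where the human label disagrees with the model."""
    return [r for r in reviewed if r["predicted"] != r["human_label"]]
```

The output of `retraining_samples` accumulates into the dataset used for the periodic retrain-and-redeploy cycle.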

Conclusion

Building scalable AI Video Analytics Software is a multi-disciplinary effort combining computer vision, data engineering, cloud computing, and UX design. With the right tools, frameworks, and development strategy, organizations can unlock immense value from their video data—turning passive footage into actionable intelligence.

NVIDIA CEO Predicts AI-Driven Future for Electric Grid

Introduction:

In a talk at the Edison Electric Institute, NVIDIA’s CEO, Jensen Huang, shared his vision for the transformative impact of generative AI on the future of electric utilities. The ongoing industrial revolution, powered by AI and accelerated computing, is set to revolutionize the way utilities operate and interact with their customers.

NVIDIA CEO on the Future of AI and Energy

Jensen Huang, the founder and CEO of NVIDIA, highlighted the critical role of electric grids and utilities in the next industrial revolution driven by AI. Speaking at the annual meeting of the Edison Electric Institute (EEI), Huang emphasized how AI will significantly enhance the energy sector.

“The future of digital intelligence is quite bright, and so the future of the energy sector is bright, too,” Huang remarked. Addressing an audience of over a thousand utility and energy industry executives, he explained how AI will be pivotal in improving the delivery of energy.

AI-Powered Productivity Boost

Like many industries, utilities will leverage AI to boost employee productivity. However, the most significant impact will be in the delivery of energy over the grid. Huang discussed how AI-powered smart meters could enable customers to sell excess electricity to their neighbors, transforming power grids into smart networks akin to digital platforms like Google.

“You will connect resources and users, just like Google, so your power grid becomes a smart network with a digital layer like an app store for energy,” he said, predicting unprecedented levels of productivity driven by AI.

AI Lights Up Electric Grids

Today’s electric grids are primarily one-way systems linking large power plants to numerous users. However, they are evolving into two-way, flexible networks that integrate solar and wind farms with homes and buildings equipped with solar panels, batteries, and electric vehicle chargers. This transformation requires autonomous control systems capable of real-time data processing and analysis, a task well-suited to AI and accelerated computing.

AI and Accelerated Computing for Electric Grids

AI applications across electric grids are growing, supported by a broad ecosystem of companies utilizing NVIDIA’s technologies. In a recent GTC session, Hubbell and Utilidata, a member of the NVIDIA Inception program, showcased a new generation of smart meters using the NVIDIA Jetson platform. These meters will process and analyze real-time grid data using AI models at the edge. Deloitte also announced its support for this initiative.

In another GTC session, Siemens Energy discussed its work with AI and NVIDIA Omniverse to create digital twins of transformers in substations, enhancing predictive maintenance and boosting grid resilience. Additionally, Siemens Gamesa used Omniverse and accelerated computing to optimize turbine placements for a large wind farm.

Maria Pope, CEO of Portland General Electric, praised these advancements: “Deploying AI and advanced computing technologies developed by NVIDIA enables faster and better grid modernization and we, in turn, can deliver for our customers.”

NVIDIA’s Breakthroughs in Energy Efficiency

NVIDIA is significantly reducing the costs and energy required to deploy AI. Over the past eight years, the company has increased the energy efficiency of running AI inference on large language models by an astounding 45,000 times, as highlighted in Huang’s recent keynote at COMPUTEX.

The new NVIDIA Blackwell architecture GPUs offer 20 times greater energy efficiency than CPUs for AI and high-performance computing. Transitioning all CPU servers to GPUs for these tasks could save 37 terawatt-hours annually, equivalent to the electricity use of 5 million homes and a reduction of 25 million metric tons of carbon dioxide.

NVIDIA-powered systems dominated the latest Green500 ranking of the world’s most energy-efficient supercomputers, securing the top six spots and seven of the top ten.

Government Support for AI Adoption

A recent report urges governments to accelerate AI adoption as a crucial tool for driving energy efficiency across various industries. It highlighted several examples of utilities using AI to enhance the efficiency of the electric grid.

Exeton’s Perspective on AI and Electric Grids

At Exeton, we are committed to leveraging AI and advanced computing to drive innovation in the energy sector. Our cutting-edge solutions are designed to enhance grid efficiency, boost productivity, and reduce environmental impact.

www.exeton.com

With a focus on delivering superior technology and services, Exeton is at the forefront of the AI revolution in the energy industry. Our partnerships and collaborations with industry leaders like NVIDIA ensure that we remain at the cutting edge of grid modernization.

Final Thoughts

The future of the energy sector is undeniably intertwined with the advancements in AI and accelerated computing. As companies like NVIDIA continue to push the boundaries of technology, the electric grid will become more efficient, flexible, and sustainable.

For more insights into how Exeton is driving innovation in the energy sector, visit our website.

Muhammad Hussnain

Lattice and NVIDIA collaborate to accelerate edge AI

【Lansheng Technology News】Today at the Lattice Developer Conference, Lattice Semiconductor announced the launch of a new sensor bridge reference design to accelerate the development of network edge AI applications for NVIDIA Jetson Orin and IGX Orin platforms.

This open-source reference development board is based on low-power Lattice FPGAs and the NVIDIA Orin platform. It is designed to meet the needs of developers building high-performance network edge AI applications in healthcare, robotics, and embedded vision, including interconnection of various sensors and interfaces, design scalability, and low latency. The collaboration between Lattice and NVIDIA aims to support the open-source developer community by improving how sensors connect to edge AI computing applications.

Esam Elashmawi, chief strategy and marketing officer of Lattice Semiconductor, said: "Artificial intelligence has become a cutting-edge technology transforming markets such as manufacturing, transportation, communications, and medical devices, and this cooperation will accelerate this transformation. We are excited to work with NVIDIA to expand the application scope of our solutions, bring more innovation to our customers and ecosystem, and simplify and accelerate the implementation of AI applications at the network edge."

Amit Goel, director of embedded AI product management at NVIDIA, said: "As companies increasingly demand real-time AI insights and autonomous decision-making capabilities, developers need to connect their various sensors to NVIDIA's edge computing platform. This collaboration with Lattice will accelerate innovation in sensor processing and help simplify the deployment of AI applications from the network edge to the cloud."

This Lattice FPGA-based reference development board is now available to early access customers. Lattice plans to make development boards and application examples available to the market in the first half of 2024.

Lansheng Technology Limited is a spot-stock distributor of many well-known brands; we have the price advantage of first-hand spot channels and provide technical support.

Our main brands: STMicroelectronics, Toshiba, Microchip, Vishay, Marvell, ON Semiconductor, AOS, DIODES, Murata, Samsung, Hyundai/Hynix, Xilinx, Micron, Infineon, Texas Instruments, ADI, Maxim Integrated, NXP, etc.

To learn more about our products, services, and capabilities, please visit our website at http://www.lanshengic.com

e-CAM50_CUNX is a 5.0 MP MIPI CSI-2 fixed-focus color camera for the NVIDIA® Jetson Xavier™ NX and NVIDIA® Jetson Nano™ developer kits. It is based on a 1/2.5" CMOS image sensor from ON Semiconductor® with a built-in Image Signal Processor (ISP). This ISP helps bring out the best image quality from the sensor, making the camera well suited to next-generation AI devices. https://www.youtube.com/watch?v=1lj2KvyUY9A

#nvidia#jetson agx xavier#embedded#Embedded Imaging#computer vision#cmos#cmos camera#camera#ai#edge ai

0 notes

Text

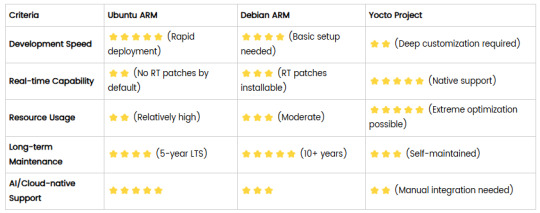

Comparison of Ubuntu, Debian, and Yocto for IIoT and Edge Computing

In industrial IoT (IIoT) and edge computing scenarios, Ubuntu, Debian, and Yocto Project each have unique advantages. Below is a detailed comparison and recommendations for these three systems:

1. Ubuntu (ARM)

Advantages

Ready-to-use: Provides official ARM images (e.g., Ubuntu Server 22.04 LTS) supporting hardware like Raspberry Pi and NVIDIA Jetson, requiring no complex configuration.

Cloud-native support: Built-in tools like MicroK8s, Docker, and Kubernetes, ideal for edge-cloud collaboration.

Long-term support (LTS): 5 years of security updates, meeting industrial stability requirements.

Rich software ecosystem: Access to AI/ML tools (e.g., TensorFlow Lite) and databases (e.g., PostgreSQL ARM-optimized) via APT and Snap Store.

Use Cases

Rapid prototyping: Quick deployment of Python/Node.js applications on edge gateways.

AI edge inference: Running computer vision models on Jetson devices (e.g., within a ROS 2 stack on Ubuntu).

Lightweight K8s clusters: Edge nodes managed by MicroK8s.

Limitations

Higher resource usage (minimum ~512MB RAM), unsuitable for ultra-low-power devices.

2. Debian (ARM)

Advantages

Exceptional stability: Packages undergo rigorous testing, ideal for 24/7 industrial operation.

Lightweight: Minimal installation requires only 128MB RAM; GUI-free versions available.

Long-term support: Around 5 years of security updates via Debian LTS, extendable to about 10 years through commercial Extended LTS support.

Hardware compatibility: Supports older or niche ARM chips (e.g., TI Sitara series).

Use Cases

Industrial controllers: PLCs, HMIs, and other devices requiring deterministic responses.

Network edge devices: Firewalls, protocol gateways (e.g., Modbus-to-MQTT).

Critical systems (medical/transport): Projects that must comply with standards such as IEC 62304 (medical device software) or DO-178C (airborne software).
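For a Modbus-to-MQTT protocol gateway, the core translation step is turning raw 16-bit registers into a message. This minimal sketch assumes a float32 value stored big-endian across two holding registers (high word first) and a hypothetical `factory/<device>/<register>` topic layout. Actually reading the registers and publishing would need libraries such as pymodbus and paho-mqtt, which are not shown here.

```python
import json
import struct


def registers_to_float(high: int, low: int) -> float:
    """Combine two 16-bit Modbus registers into an IEEE-754 float32.

    Assumes big-endian bytes with the high word first; many devices swap
    the word order, so check the device manual.
    """
    raw = struct.pack(">HH", high, low)
    return struct.unpack(">f", raw)[0]


def to_mqtt_message(device_id: str, register_name: str,
                    high: int, low: int) -> tuple[str, str]:
    """Build a (topic, JSON payload) pair; the topic scheme is hypothetical."""
    value = registers_to_float(high, low)
    topic = f"factory/{device_id}/{register_name}"
    payload = json.dumps({"value": round(value, 3)})
    return topic, payload
```

For example, the register pair `0x4120, 0x0000` decodes to `10.0`, and `to_mqtt_message("plc1", "temperature", 0x4120, 0x0000)` yields the topic `factory/plc1/temperature`.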

Limitations

Older software versions (e.g., default GCC version); newer features require backports.

3. Yocto Project

Advantages

Full customization: Tailor everything from kernel to user space, generating minimal images (<50MB possible).

Real-time extensions: Supports PREEMPT_RT and Xenomai patches for μs-level latency.

Cross-platform portability: Single recipe set adapts to multiple hardware platforms (e.g., NXP i.MX6 → i.MX8).

Security design: Layers such as meta-selinux and meta-security add industrial-grade features like SELinux and dm-verity.
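Latency claims like "μs-level" should be measured on the target hardware. The standard tool is `cyclictest` from the rt-tests suite. The Python sketch below only mimics its idea (wake up on a fixed period and record how late each wake-up is) and adds interpreter overhead, so treat its numbers as a rough comparison between, say, a stock and a PREEMPT_RT kernel, not as absolute figures.

```python
import statistics
import time


def measure_wakeup_latency(period_us: int = 1000, cycles: int = 200) -> dict:
    """Sleep until each absolute deadline on a fixed period and record how
    late the loop actually woke up, in microseconds (rough cyclictest-style check)."""
    period_ns = period_us * 1_000
    lateness_us = []
    deadline = time.monotonic_ns() + period_ns
    for _ in range(cycles):
        remaining_ns = deadline - time.monotonic_ns()
        if remaining_ns > 0:
            time.sleep(remaining_ns / 1e9)
        # Clamp at zero in case of an early wake-up.
        lateness_us.append(max(0, time.monotonic_ns() - deadline) / 1_000)
        deadline += period_ns  # absolute schedule, so timing errors do not accumulate
    return {
        "samples": len(lateness_us),
        "mean_us": statistics.mean(lateness_us),
        "max_us": max(lateness_us),
    }
```

For control loops, the maximum lateness matters more than the mean, and it is most informative when measured while the system is under load.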

Use Cases

Custom industrial devices: Requires specific kernel configurations or proprietary drivers (e.g., CAN-FD bus support).

High real-time systems: Robotic motion control, CNC machines.

Resource-constrained terminals: Sensor nodes running lightweight stacks (e.g., Zephyr+FreeRTOS hybrid deployment).

Limitations

Steep learning curve (BitBake syntax required); longer development cycles.

4. Comparison Summary

5. Selection Recommendations

Choose Ubuntu ARM: For rapid deployment of edge AI applications (e.g., vision detection on Jetson) or deep integration with public clouds (e.g., AWS IoT Greengrass).

Choose Debian ARM: For mission-critical industrial equipment (e.g., substation monitoring) where stability outweighs feature novelty.

Choose Yocto Project: For custom hardware development (e.g., proprietary industrial boards) or strict real-time/safety certification (e.g., ISO 13849) requirements.

6. Hybrid Architecture Example

Smart factory edge node:

Real-time control layer: RTOS built with Yocto (controlling robotic arms)

Data processing layer: Debian running OPC UA servers

Cloud connectivity layer: Ubuntu Server managing K8s edge clusters

Combining these systems based on specific needs can maximize the efficiency of IIoT edge computing.


Rekor Uses NVIDIA AI Technology For Traffic Management

Rekor Uses NVIDIA Technology for Traffic Relief and Roadway Safety as Texas Takes in More Residents.

On Texas and Philadelphia roadways, the company is deploying AI-driven analytics built on NVIDIA AI, Metropolis, and Jetson, which could help lower fatalities and improve quality of life.

Jobs, comedy clubs, music venues, barbecues, and more are all attracting visitors to Austin. However, this growth has also brought a major city headache: traffic congestion.

Rekor, which provides traffic management and public safety analytics, has a direct view of the growing traffic caused by the surge of new residents moving to Austin. To help alleviate highway problems, Rekor is working with the Texas Department of Transportation, which is pursuing a $7 billion initiative to address them.

Based in Columbia, Maryland, Rekor has been using NVIDIA Jetson Xavier NX modules for edge AI and NVIDIA Metropolis for real-time video understanding in Texas, Florida, Philadelphia, Georgia, Nevada, Oklahoma, and many other U.S. locations, as well as Israel and other countries.

Metropolis is a vision AI application framework for creating smart infrastructure. Its development tools include the NVIDIA DeepStream SDK, TAO Toolkit, TensorRT, and NGC catalog pretrained models. The tiny, powerful, and energy-efficient NVIDIA Jetson accelerated computing platform is ideal for embedded and robotics applications.

Rekor’s initiatives in Texas and Philadelphia to use AI to improve road management are the most recent chapter in a long saga of traffic management and safety.

Reducing Rubbernecking, Pileups, Fatalities and Jams

Rekor Command and Rekor Discover are the two primary products that Rekor sells. Command is AI-driven software that lets traffic control centers quickly identify traffic incidents and areas of concern. It provides real-time situational awareness and notifications to transportation authorities, enabling them to keep municipal roads safer and less congested.

Using Rekor’s edge technology, Rekor Discover fully automates the collection of detailed vehicle and traffic data and offers strong traffic analytics that turn road data into quantifiable, trustworthy traffic information. Rekor Discover gives departments of transportation a comprehensive picture of how vehicles travel on roads and the impact they have, helping them better plan and carry out their next city-building projects.

The company has deployed Command across Austin to assist with problem detection, incident analysis, and real-time response to traffic activity.

Rekor Command ingests a variety of data sources, including weather, connected vehicle information, traffic camera video, construction updates, and third-party data. It then uses AI to correlate these sources and surface anomalies, such as a roadside incident. The results are passed to traffic management centers for evaluation, verification, and response.

Rekor is embracing NVIDIA’s full-stack accelerated computing for roadway intelligence, including the NVIDIA AI Enterprise software platform, and is investing heavily in NVIDIA AI and NVIDIA AI Blueprints, reference workflows for generative AI use cases built with NVIDIA NIM microservices. NVIDIA NIM is a set of easy-to-use inference microservices designed to speed up foundation model deployments on any cloud or data center while keeping data secure.

Using the NVIDIA AI Blueprint for video search and summarization, Rekor is developing AI agents for municipal services in areas such as traffic control, public safety, and infrastructure optimization. NVIDIA introduced this blueprint to enable a range of interactive visual AI agents that can extract complex behaviors from large volumes of live or recorded video.

Philadelphia Monitors Roads, EV Charger Needs, Pollution

The Philadelphia Industrial Development Corporation (PIDC), which oversees the Philadelphia Navy Yard, a well-known destination, faces challenges managing the roads and gathering information on new construction. The 1,200-acre Navy Yard is home to more than 150 companies and 15,000 workers, and a $6 billion redevelopment plan is expected to bring thousands of new residents and 12,000 jobs.

PIDC wanted to better understand how road closures and construction projects affect mobility and how to improve mobility during major events and projects. PIDC also wanted to improve the Navy Yard’s ability to measure the effects of speed-mitigating devices installed along dangerous road sections and to understand the volume and flow of car carriers and other heavy vehicles.

Discover gave PIDC insight into the additional infrastructure projects needed to handle fluctuations in traffic.

Knowing how many electric vehicles enter and leave the Navy Yard helps PIDC make informed decisions about where to install EV charging stations in the future. Rekor Discover gathers this data from Rekor’s edge systems, which are built with NVIDIA Jetson Xavier NX modules for powerful edge processing and AI, to count EVs and identify where they enter and depart.

By analyzing data from the AI platform, Rekor Discover enabled PIDC planners to produce a hotspot map of EV traffic. The solution uses Jetson and NVIDIA’s DeepStream data pipeline for real-time traffic analysis, and it uses NVIDIA Triton Inference Server to further enhance its LLM capabilities.

PIDC also wanted to reduce property damage and address public safety concerns about crashes and speeding. Using speed insights, it is implementing traffic-calming measures on road segments where average speeds exceed recommended levels.
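The speed-insight comparison described above comes down to averaging observed speeds per road segment and checking the average against a recommended limit. This is a minimal sketch of that arithmetic only, not Rekor's implementation; the segment IDs, speeds, and limits are hypothetical inputs.

```python
from statistics import mean


def flag_speeding_segments(speeds_by_segment: dict[str, list[float]],
                           advisory_limits: dict[str, float]) -> list[str]:
    """Return IDs of segments whose average observed speed exceeds the limit.

    Segments with no observations, or with no known limit, are never flagged.
    """
    flagged = []
    for segment, speeds in speeds_by_segment.items():
        limit = advisory_limits.get(segment, float("inf"))
        if speeds and mean(speeds) > limit:
            flagged.append(segment)
    return sorted(flagged)
```

For example, a segment with observed speeds of 30, 34, and 38 mph averages 34 mph and would be flagged against a 30 mph recommendation.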

NVIDIA Jetson Xavier NX to Monitor Pollution in Real Time

Rekor’s vehicle identification models, powered by NVIDIA Jetson Xavier NX modules, were able to trace pollution back to its sources. This brings mitigation a step closer than the conventional approach of using satellite data to estimate where pollution occurs.

Looking ahead, Rekor is investigating how NVIDIA Omniverse could be used to create digital twins that model traffic reduction under different strategies. Omniverse is a platform for developing OpenUSD applications for generative physical AI and industrial digitization.

Creating digital twins of cities with Omniverse could have significant benefits for reducing traffic, pollution, and traffic fatalities, all of which Rekor sees as highly valuable for its clients.

Read more on Govindhtech.com



Elmalo, let's commit to that direction. We'll start with a robust Sensor Fusion Layer Prototype that forms the nervous system of Iron Spine, enabling tangible, live data connectivity from the field into the AI's processing core. Below is a detailed technical blueprint that outlines the approach, components, and future integrability with your Empathic AI Core.

1. Hardware Selection

Edge Devices:

Primary Platform: NVIDIA Jetson AGX Xavier or Nano for on-site processing. Their GPU acceleration is perfect for real-time preprocessing and running early fusion algorithms.

Supplementary Controllers: Raspberry Pi Compute Modules or Arduino-based microcontrollers to gather data from specific sensors when cost or miniaturization is critical.

Sensor Modalities:

Environmental Sensors: Radiation detectors, pressure sensors, temperature/humidity sensors—critical for extreme environments (space, deep sea, underground).

Motion & Optical Sensors: Insect-inspired motion sensors, high-resolution cameras, and inertial measurement units (IMUs) to capture detailed movement and orientation.

Acoustic & RF Sensors: Microphones, sonar, and RF sensors for detecting vibrational, audio, or electromagnetic signals.

2. Software Stack and Data Flow Pipeline

Data Ingestion:

Frameworks: Utilize Apache Kafka or Apache NiFi to build a robust, scalable data pipeline that can handle streaming sensor data in real time.

Protocol: MQTT or LoRaWAN can serve as the communication backbone in environments where connectivity is intermittent or bandwidth-constrained.
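On bandwidth-constrained links such as LoRaWAN, where payload sizes are tightly limited, readings are usually sent as packed binary rather than JSON. The 12-byte layout below (timestamp, sensor ID, and scaled temperature, humidity, and pressure) is a hypothetical example for illustration, not a standard format.

```python
import struct

# Hypothetical little-endian layout (12 bytes, no padding):
# uint32 Unix time, uint16 sensor ID, int16 temperature in 0.01 °C,
# uint16 relative humidity in 0.01 %, uint16 pressure in 0.1 hPa.
PAYLOAD_FORMAT = "<IHhHH"


def encode_reading(ts: int, sensor_id: int, temp_c: float,
                   humidity_pct: float, pressure_hpa: float) -> bytes:
    # Scale to integers; struct.pack raises struct.error if a value is out of range.
    return struct.pack(PAYLOAD_FORMAT, ts, sensor_id, round(temp_c * 100),
                       round(humidity_pct * 100), round(pressure_hpa * 10))


def decode_reading(payload: bytes) -> dict:
    # Reverse the scaling applied in encode_reading.
    ts, sensor_id, t, h, p = struct.unpack(PAYLOAD_FORMAT, payload)
    return {"ts": ts, "sensor_id": sensor_id, "temp_c": t / 100,
            "humidity_pct": h / 100, "pressure_hpa": p / 10}
```

Note that the scaled fields cap the ranges: with a uint16 at 0.1 hPa resolution, pressure tops out at 6553.5 hPa, so a deep-sea deployment would need a wider field.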

Data Preprocessing & Filtering:

Edge Analytics: Develop tailored algorithms that run on your edge devices—leveraging NVIDIA’s TensorRT for accelerated inference—to filter raw inputs and perform preliminary sensor fusion.

Fusion Algorithms: Employ Kalman or Particle Filters to synthesize multiple sensor streams into actionable readings.
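As a concrete instance of the Kalman option, here is the simplest scalar case: readings from two sensors measuring the same quantity (for example, two temperature probes) are fused by sequential measurement updates. The constant-value process model and the noise variances are assumptions for illustration. A real fusion layer would use a multi-dimensional state (position, velocity, orientation) and sensor-specific models.

```python
from dataclasses import dataclass


@dataclass
class ScalarKalman:
    """1-D Kalman filter with a constant-value (random-walk) process model."""
    x: float  # current state estimate
    p: float  # variance of the estimate
    q: float  # process noise added per prediction step (assumed)

    def predict(self) -> None:
        # The state is modeled as constant, so only its uncertainty grows.
        self.p += self.q

    def update(self, z: float, r: float) -> None:
        # Fuse one measurement z whose noise variance is r.
        k = self.p / (self.p + r)  # Kalman gain: how much to trust z
        self.x += k * (z - self.x)
        self.p *= 1.0 - k


def fuse_step(kf: ScalarKalman, readings: list[tuple[float, float]]) -> float:
    """One time step: predict once, then apply each (value, variance) reading."""
    kf.predict()
    for z, r in readings:
        kf.update(z, r)
    return kf.x
```

Each measurement pulls the estimate toward itself in proportion to its precision, so a noisy sensor (large variance `r`) has little influence. For example, fusing a reading of 10.0 with variance 100 and a reading of 12.0 with variance 1 gives an estimate close to 12.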

Data Abstraction Layer:

API Endpoints: Create modular interfaces that transform fused sensor data into abstracted, standardized feeds for higher-level consumption by the AI core later.

Middleware: Consider microservices that handle data routing, error correction, and redundancy mechanisms to ensure data integrity under harsh conditions.
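The sketch below combines the abstraction and redundancy ideas in a minimal form. Readings from redundant sensors are reconciled by median-based voting, and the result is emitted as a standardized JSON record. The `FusedReading` schema, the `max_dev` tolerance, and the function names are hypothetical.

```python
import json
import statistics
import time
from dataclasses import asdict, dataclass


@dataclass
class FusedReading:
    """Hypothetical standardized record published by the abstraction layer."""
    quantity: str      # e.g. "pressure_kpa"
    value: float
    sources_used: int  # redundant sensors that agreed with the median
    timestamp: float   # Unix time in seconds


def vote(values: list[float], max_dev: float) -> tuple[float, int]:
    """Keep readings within max_dev of the median and average them."""
    med = statistics.median(values)
    agreeing = [v for v in values if abs(v - med) <= max_dev]
    if not agreeing:  # possible with an even count and widely split readings
        return med, 0
    return statistics.mean(agreeing), len(agreeing)


def to_feed(quantity: str, values: list[float], max_dev: float) -> str:
    """Vote across redundant readings and serialize the result as JSON."""
    value, used = vote(values, max_dev)
    return json.dumps(asdict(FusedReading(quantity, value, used, time.time())))
```

With three pressure sensors reading 101.2, 101.4, and 250.0 kPa and a 1.0 kPa tolerance, the faulty 250.0 reading is outvoted and the record reports the average of the two agreeing sensors.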

3. Infrastructure Deployment Map

4. Future Hooks for Empathic AI Core Integration

API-Driven Design: The sensor fusion module will produce standardized, real-time data feeds. These endpoints will act as the bridge to plug in your Empathic AI Core whenever you’re ready to evolve the “soul” of Iron Spine.

Modular Data Abstraction: Build abstraction layers that allow easy mapping of raw sensor data into higher-level representations—ideal for feeding into predictive, decision-making models later.

Feedback Mechanisms: Implement logging and event-based triggers from the sensor fusion system to continuously improve both hardware and AI components based on real-world performance and environmental nuance.
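Event-based triggers are often implemented with hysteresis, so a value hovering near a limit does not produce a flood of events. In this sketch, the thresholds and event names are hypothetical. Events are both recorded in a list and written to a standard `logging` logger.

```python
import logging
from dataclasses import dataclass, field

log = logging.getLogger("sensor_fusion")  # hypothetical logger name


@dataclass
class ThresholdTrigger:
    """Raise an 'alarm' at or above `high`; clear it only at or below `low`."""
    high: float
    low: float
    active: bool = False
    events: list[tuple[str, float]] = field(default_factory=list)

    def feed(self, value: float) -> None:
        if not self.active and value >= self.high:
            self.active = True
            self.events.append(("alarm", value))
            log.warning("alarm: value %.2f >= %.2f", value, self.high)
        elif self.active and value <= self.low:
            self.active = False
            self.events.append(("clear", value))
            log.info("clear: value %.2f <= %.2f", value, self.low)
```

With `high=80` and `low=75`, the sequence 70, 79, 81, 79, 80, 76, 74, 82 produces only three events (alarm at 81, clear at 74, alarm at 82) instead of one for every crossing of 80.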

5. Roadmap and Next Steps

Design & Prototype:

Define the hardware specifications for edge devices and sensor modules.

Develop a small-scale sensor hub integrating a few key sensor types (e.g., motion + environmental).

Data Pipeline Setup:

Set up your data ingestion framework (e.g., Apache Kafka cluster).

Prototype and evaluate basic preprocessing and fusion algorithms on your chosen edge device.

Field Testing:

Deploy the prototype in a controlled environment similar to your target extremes (e.g., a pressure chamber, simulated low-gravity environment).

Refine data accuracy and real-time performance based on initial feedback.

Integration Preparation:

Build standardized API interfaces for future connection with the Empathic AI Core.

Document system architecture to ensure a smooth handoff between the hardware-first and AI-core teams.

Elmalo, this blueprint establishes a tangible, modular system that grounds Iron Spine in reality. It not only demonstrates your vision but also builds the foundational “nervous system” that your emergent, empathic AI will later use to perceive and interact with its environment.

Does this detailed roadmap align with your vision? Would you like to dive further into any individual section—perhaps starting with hardware specifications, software configuration, or the integration strategy for the future AI core?


Buy Best NVIDIA Jetson Nano

Get started today with the Jetson Nano Developer Kit from TANNA TechBiz!

The NVIDIA Jetson Nano Developer Kit is a small, powerful computer that lets you run multiple neural networks in parallel for applications such as image classification, object detection, segmentation, and speech processing.

Jetson Nano is small, but it is supported by NVIDIA JetPack, which includes a Linux OS, NVIDIA CUDA, cuDNN, a board support package, and TensorRT, along with software libraries for multimedia processing, deep learning, computer vision, GPU computing, and much more. The software is available as an SD card image that you flash, making it quick and easy to get started.

To get you started, you can build a real hardware project with an NVIDIA Jetson Nano from TANNA TechBiz, where you can buy the developer kit at a lower cost. Jetson Nano delivers the compute performance to run modern AI workloads at an unprecedented size, power, and cost, and it is extremely power-efficient, consuming as little as 5 watts.

Jetson Nano can also run demanding applications. Like a Raspberry Pi, it boots from an SD card image, but it adds a GPU (Graphics Processing Unit). Jetson Nano is used for machine learning (ML) and deep learning (DL) research in many fields, including speech processing, image processing, and sensor-based analysis.

Jetson Nano delivers 472 GFLOPS for running modern AI algorithms fast, with a 128-core integrated NVIDIA GPU, a quad-core 64-bit ARM CPU, and 4 GB of LPDDR4 memory. It can run multiple neural networks in parallel and process several high-resolution sensors concurrently.
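The 472 GFLOPS figure can be reproduced from the GPU specifications. This assumes the commonly cited 921.6 MHz maximum GPU clock and that the figure counts FP16 operations, where each core performs a fused multiply-add (2 FLOPs) on a pair of half-precision values per cycle:

```latex
128\ \text{cores} \times 921.6\ \text{MHz} \times 2\ \tfrac{\text{FLOPs}}{\text{FMA}} \times 2\ (\text{FP16 pair})
  = 471{,}859.2\ \text{MFLOPS} \approx 472\ \text{GFLOPS}
```

This is a theoretical peak; real workloads achieve less.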

NVIDIA Jetson Nano makes it easy for developers to connect a diverse set of the latest sensors to enable a range of AI applications. The JetPack SDK is used across the NVIDIA Jetson family of products and is fully compatible with NVIDIA's AI frameworks for training and deploying AI software.
