#OpenAI CLIP

Explore tagged Tumblr posts

Text

Sistemas de Recomendación y Visión por Computadora: Las IAs que Transforman Nuestra Experiencia Digital

Sistemas de Recomendación: ¿Qué son y para qué sirven? Los sistemas de recomendación son tecnologías basadas en inteligencia artificial diseñadas para predecir y sugerir elementos (productos, contenidos, servicios) que podrían interesar a un usuario específico. Estos sistemas analizan patrones de comportamiento, preferencias pasadas y similitudes entre usuarios para ofrecer recomendaciones…

#Amazon Recommendation System#Amazon Rekognition#Google Cloud Vision API#Google News#IBM Watson Visual Recognition#inteligencia artificial#machine learning#Microsoft Azure Computer Vision#Netflix Recommendation Engine#OpenAI CLIP#personalización#sistemas de recomendación#Spotify Discover Weekly#visión por computadora#YouTube Algorithm

0 notes

Text

5 things about AI you may have missed today: OpenAI's CLIP is biased, AI reunites family after 25 years, more

Study finds OpenAI’s CLIP is biased in favour of wealth and underrepresents poor nations; Retail giants harness AI to cut online clothing returns and enhance the customer experience; Northwell Health implements AI-driven device for rapid seizure detection; White House concerns grow over UAE’s rising influence in global AI race- this and more in our daily roundup. Let us take a look. 1. Study…

View On WordPress

#ai#AI in healthcare#AI representation accuracy#AI Seizure detection technology#Beijing DeepGlint technology#Ceribell medical device#DALL-E image generator#DeepGlint algorithm#G42 AI company#global AI race#HT tech#MySizeID#online clothing returns#OpenAI#openai CLIP#tech news#UAE AI advancements#University of Michigan AI study#Walmart AI initiatives#White House AI concerns

0 notes

Text

Disinformation about the Los Angeles protests is spreading on social media networks and is being made worse by users turning to AI chatbots like Grok and ChatGPT to perform fact-checking.

As residents of the LA area took to the streets in recent days to protest increasingly frequent Immigration and Customs Enforcement (ICE) raids, conservative posters on social media platforms like X and Facebook flooded their feeds with inaccurate information. In addition to well-worn tactics like repurposing old protest footage or clips from video games and movies, posters have claimed that the protesters are little more than paid agitators being directed by shadowy forces—something for which there is no evidence.

In the midst of fast-moving and divisive news stories like the LA protests, and as companies like X and Meta have stepped back from moderating the content on their platforms, users have been turning to AI chatbots for answers—which in many cases have been completely inaccurate.

On Monday, the San Francisco Chronicle published images of National Guard troops sleeping on floors. They were later shared on X by California governor Gavin Newsom, who responded to a post from President Donald Trump by writing: “You sent your troops here without fuel, food, water or a place to sleep.”

Within minutes of the posts being shared, many users on X and Facebook were claiming that the images were either AI-generated or taken from a completely different situation.

“Looks like @GavinNewsom used an AI photo to smear President Trump,” conspiracist Laura Loomer alleged on X.

Some users seeking clarity turned to X’s own chatbot Grok, with one user asking it to clarify where the photo was taken.

“The photos likely originated from Afghanistan in 2021, during the National Guard's evacuation efforts in Operation Allies Refuge,” Grok wrote. “Claims linking them to the 2025 Los Angeles deployment lack credible support and appear to be a misattribution. No definitive source confirms the Los Angeles connection.”

When challenged about the assertion by another X user who pointed out that the images were first obtained by the San Francisco Chronicle, Grok replied: “I checked the San Francisco Chronicle’s claims. The photos of National Guard troops sleeping on floors are likely from 2021, probably the U.S. Capitol, not Los Angeles 2025.”

The San Francisco Chronicle did not immediately respond to a request for comment.

Grok isn’t the only tool potentially making the situation worse. Melissa O’Connor, who describes herself as an “OSINT Citizen Journalist,” posted the results given to her by ChatGPT when she uploaded the pictures posted by Newsom of troops sleeping on the ground.

OpenAI’s chatbot incorrectly claimed that one of the pictures shared by the California governor was taken in Kabul airport in 2021 during former president Joe Biden’s Afghanistan withdrawal. The same results were shared on other platforms such as Facebook and Trump’s own Truth Social as evidence that the pictures were fake. (O’Connor subsequently posted to make clear she’s now aware the photos are not four years old, though the original post was left up.)

In another instance of Grok boosting disinformation about the protests, Mike Crispi, chair of America First Republicans of New Jersey and a 2024 Trump delegate to the Republican National Convention, posted a picture of a pile of bricks on the side of the road with the comment: ”The international sign that a very real, organic, totally not pre-planned, left wing protest will shortly emerge.”

The picture was later amplified to a much bigger audience by actor James Woods, who wrote: “It’s not like these ‘protests’ are organized though … “ His post has been viewed almost 4 million times.

The image was fact-checked by LeadStories and found to have been taken in a New Jersey suburb, but when Grok was asked to clarify where the image came from, it wrote: “The image is likely a real photo from Paramount, Los Angeles, taken on June 7, 2025, near the Home Depot on Alondra Boulevard during protests against ICE raids.”

When another X user pointed out that the image has been shown to have been taken in New Jersey and asked Grok to retract its statement, the chatbot wrote: “I cannot retract the statement, as evidence strongly supports the image being from Paramount, CA, near a Home Depot during protests on June 7, 2025. News reports from ABC7, Los Angeles Times, and others confirm bricks were used in clashes with federal agents.”

WIRED could not identify reports from any of the mentioned outlets suggesting bricks were used in the recent protests.

X and OpenAI, the operator of ChatGPT, did not immediately respond to requests for comment.

The unreliability of chatbots is adding to the already saturated disinformation landscape on social media now so typical of major breaking news events.

On Sunday night, US senator Ted Cruz of Texas quoted a post from Woods, writing: “This … is … not … peaceful.” Woods’ post shared a video, which has now been deleted by the original poster, that was taken during the Black Lives Matter protests in 2020. Despite this, Cruz and Woods have not removed their posts, racking up millions of views.

On Monday evening, another tired trope popular with right-wing conspiracy theorists surfaced, with many pro-Trump accounts claiming that protesters were paid shills and that shadowy though largely unspecified figures were bankrolling the entire thing.

This narrative was sparked by news footage showing people handing out “bionic shield” face masks from the back of a black truck.

“Bionic face shields are now being delivered in large numbers to the rioters in Los Angeles, right-wing YouTuber Benny Johnson wrote on X, adding “Paid insurrection.”

However, a review of the footage shared by Johnson shows no more than a dozen of the masks—which are respirators offering protection against the sort of chemical agents being used by law enforcement—being dispersed.

23 notes

·

View notes

Text

"Major technology companies signed a pact on Friday to voluntarily adopt "reasonable precautions" to prevent artificial intelligence (AI) tools from being used to disrupt democratic elections around the world.

Executives from Adobe, Amazon, Google, IBM, Meta, Microsoft, OpenAI, and TikTok gathered at the Munich Security Conference to announce a new framework for how they respond to AI-generated deepfakes that deliberately trick voters.

Twelve other companies - including Elon Musk's X - are also signing on to the accord...

The accord is largely symbolic, but targets increasingly realistic AI-generated images, audio, and video "that deceptively fake or alter the appearance, voice, or actions of political candidates, election officials, and other key stakeholders in a democratic election, or that provide false information to voters about when, where, and how they can lawfully vote".

The companies aren't committing to ban or remove deepfakes. Instead, the accord outlines methods they will use to try to detect and label deceptive AI content when it is created or distributed on their platforms.

It notes the companies will share best practices and provide "swift and proportionate responses" when that content starts to spread.

Lack of binding requirements

The vagueness of the commitments and lack of any binding requirements likely helped win over a diverse swath of companies, but disappointed advocates were looking for stronger assurances.

"The language isn't quite as strong as one might have expected," said Rachel Orey, senior associate director of the Elections Project at the Bipartisan Policy Center.

"I think we should give credit where credit is due, and acknowledge that the companies do have a vested interest in their tools not being used to undermine free and fair elections. That said, it is voluntary, and we'll be keeping an eye on whether they follow through." ...

Several political leaders from Europe and the US also joined Friday’s announcement. European Commission Vice President Vera Jourova said while such an agreement can’t be comprehensive, "it contains very impactful and positive elements". ...

[The Accord and Where We're At]

The accord calls on platforms to "pay attention to context and in particular to safeguarding educational, documentary, artistic, satirical, and political expression".

It said the companies will focus on transparency to users about their policies and work to educate the public about how they can avoid falling for AI fakes.

Most companies have previously said they’re putting safeguards on their own generative AI tools that can manipulate images and sound, while also working to identify and label AI-generated content so that social media users know if what they’re seeing is real. But most of those proposed solutions haven't yet rolled out and the companies have faced pressure to do more.

That pressure is heightened in the US, where Congress has yet to pass laws regulating AI in politics, leaving companies to largely govern themselves.

The Federal Communications Commission recently confirmed AI-generated audio clips in robocalls are against the law [in the US], but that doesn't cover audio deepfakes when they circulate on social media or in campaign advertisements.

Many social media companies already have policies in place to deter deceptive posts about electoral processes - AI-generated or not...

[Signatories Include]

In addition to the companies that helped broker Friday's agreement, other signatories include chatbot developers Anthropic and Inflection AI; voice-clone startup ElevenLabs; chip designer Arm Holdings; security companies McAfee and TrendMicro; and Stability AI, known for making the image-generator Stable Diffusion.

Notably absent is another popular AI image-generator, Midjourney. The San Francisco-based startup didn't immediately respond to a request for comment on Friday.

The inclusion of X - not mentioned in an earlier announcement about the pending accord - was one of the surprises of Friday's agreement."

-via EuroNews, February 17, 2024

--

Note: No idea whether this will actually do much of anything (would love to hear from people with experience in this area on significant this is), but I'll definitely take it. Some of these companies may even mean it! (X/Twitter almost definitely doesn't, though).

Still, like I said, I'll take it. Any significant move toward tech companies self-regulating AI is a good sign, as far as I'm concerned, especially a large-scale and international effort. Even if it's a "mostly symbolic" accord, the scale and prominence of this accord is encouraging, and it sets a precedent for further regulation to build on.

#ai#anti ai#deepfake#ai generated#elections#election interference#tech companies#big tech#good news#hope

148 notes

·

View notes

Text

They've done it again. How do they keep doing this? If these examples are representative they've now done the same thing for the short video/clip landscape that Dall-e did for images a while back.

OpenAI are on another level, clearly, but it is also funny and sort of wearying how every new model they release is like an AI-critical guy's worst nightmare

134 notes

·

View notes

Text

OpenAI previews voice generator that produces natural-sounding speech based on a 15-second voice sample

The company has yet to decide how to deploy the technology, and it acknowledges election risks, but is going ahead with developing and testing with "limited partners" anyway.

Not only is such a technology a risk during election time (see the fake robocalls this year when an AI-generated, fake Joe Biden voice told people not to vote in the primary), but imagine how faked voices of important people - combined with AI-generated fake news plus AI-generated fake photos and videos - could con people out of money, literally destroy political careers and parties, and even collapse entire governments or nations themselves.

By faking a news story using accurate (but faked) video clips of (real) respected and elected officials supporting the fake story - then creating a billion SEO-optimized fake news and research websites full of fake evidence to back up their lies - a bad actor or cyberwarfare agent could take down an enemy government, create a revolution, turn nations against one another, even cause world war.

This kind of apocalyptic scenario has always felt like a science-fiction idea that could only exist in a possible dystopian future, not something we'd actually see coming true in our time, now.

How in the world are we ever again going to trust what we read, hear, or watch? If LLM-barf clogs the internet, and lies pollute the news, and people with bad intentions can provide all the evidence they need to fool anyone into believing anything, and there's no way to guarantee the veracity of anything anymore, what's left?

Whatever comes next, I guarantee it'll be weirder than we imagine.

Here's hoping it's not also worse than the typical cyberpunk tale.

PS: NEVER ANSWER CALLS FROM UNKNOWN NUMBERS EVER AGAIN

...or at least don't speak to the caller. From now on, assume it's a con-bot or politi-bot or some other bot seeking to cause you and others harm. If they hear your voice, they can fake it saying anything they want. If it sounds like someone you know, it's probably not if it's not their number saved in your contacts. If it's about something important, hang up and call the official or saved number for the supposed caller.

#cyberpunk dystopia#artificial intelligence#robocalls#capitalism ruins everything#where's the utopian future we dreamed of?#I vote for that instead

82 notes

·

View notes

Text

like. people remember that openai was funded massively by elon musk and sam altman is a crypto scammer who runs a biometric harvesting operation using his own company's projects as fearmongering, right

the people who make applied-statistics generative systems are not your friends and have very different opinions from you on what the purpose of art is

man something about comparing a shitpost made with a tool explicitly stated by its creators as intended to devalue creative labor to a shitpost made in order to challenge and decry a rising fascist movement feels really fucking bleak to me

#yes yes whatever stable diffusion wasn't directly made by openai but it is almost entirely based on their work with clip#so sam altman is still not your friend

2K notes

·

View notes

Text

Well, sure, but that is a fight about AI safety. It’s just a metaphorical fight about AI safety. I am sorry, I have made this joke before, but events keep sharpening it. The OpenAI board looked at Sam Altman and thought “this guy is smarter than us, he can outmaneuver us in a pinch, and it makes us nervous. He’s done nothing wrong so far, but we can’t be sure what he’ll do next as his capabilities expand. We do not fully trust him, we cannot fully control him, and we do not have a model of how his mind works that we fully understand. Therefore we have to shut him down before he grows too powerful.”

I’m sorry! That is exactly the AI misalignment worry! If you spend your time managing AIs that are growing exponentially smarter, you might worry about losing control of them, and if you spend your time managing Sam Altman you might worry about losing control of him, and if you spend your time managing both of them you might get confused about which is which. Maybe Sam Altman will turn the old board members into paper clips.

Matt Levine

58 notes

·

View notes

Text

Well, sure, but that is a fight about AI safety. It’s just a metaphorical fight about AI safety. I am sorry, I have made this joke before, but events keep sharpening it. The OpenAI board looked at Sam Altman and thought “this guy is smarter than us, he can outmaneuver us in a pinch, and it makes us nervous. He’s done nothing wrong so far, but we can’t be sure what he’ll do next as his capabilities expand. We do not fully trust him, we cannot fully control him, and we do not have a model of how his mind works that we fully understand. Therefore we have to shut him down before he grows too powerful.”

I’m sorry! That is exactly the AI misalignment worry! If you spend your time managing AIs that are growing exponentially smarter, you might worry about losing control of them, and if you spend your time managing Sam Altman you might worry about losing control of him, and if you spend your time managing both of them you might get confused about which is which. Maybe Sam Altman will turn the old board members into paper clips.

matt levine tackles the sam altman alignment problem

#i think sam altman can and would turn the openai board into paperclips#your stock options look gnc af

54 notes

·

View notes

Text

youtube

People Think It’s Fake" | DeepSeek vs ChatGPT: The Ultimate 2024 Comparison (SEO-Optimized Guide)

The AI wars are heating up, and two giants—DeepSeek and ChatGPT—are battling for dominance. But why do so many users call DeepSeek "fake" while praising ChatGPT? Is it a myth, or is there truth to the claims? In this deep dive, we’ll uncover the facts, debunk myths, and reveal which AI truly reigns supreme. Plus, learn pro SEO tips to help this article outrank competitors on Google!

Chapters

00:00 Introduction - DeepSeek: China’s New AI Innovation

00:15 What is DeepSeek?

00:30 DeepSeek’s Impressive Statistics

00:50 Comparison: DeepSeek vs GPT-4

01:10 Technology Behind DeepSeek

01:30 Impact on AI, Finance, and Trading

01:50 DeepSeek’s Effect on Bitcoin & Trading

02:10 Future of AI with DeepSeek

02:25 Conclusion - The Future is Here!

Why Do People Call DeepSeek "Fake"? (The Truth Revealed)

The Language Barrier Myth

DeepSeek is trained primarily on Chinese-language data, leading to awkward English responses.

Example: A user asked, "Write a poem about New York," and DeepSeek referenced skyscrapers as "giant bamboo shoots."

SEO Keyword: "DeepSeek English accuracy."

Cultural Misunderstandings

DeepSeek’s humor, idioms, and examples cater to Chinese audiences. Global users find this confusing.

ChatGPT, trained on Western data, feels more "relatable" to English speakers.

Lack of Transparency

Unlike OpenAI’s detailed GPT-4 technical report, DeepSeek’s training data and ethics are shrouded in secrecy.

LSI Keyword: "DeepSeek data sources."

Viral "Fail" Videos

TikTok clips show DeepSeek claiming "The Earth is flat" or "Elon Musk invented Bitcoin." Most are outdated or edited—ChatGPT made similar errors in 2022!

DeepSeek vs ChatGPT: The Ultimate 2024 Comparison

1. Language & Creativity

ChatGPT: Wins for English content (blogs, scripts, code).

Strengths: Natural flow, humor, and cultural nuance.

Weakness: Overly cautious (e.g., refuses to write "controversial" topics).

DeepSeek: Best for Chinese markets (e.g., Baidu SEO, WeChat posts).

Strengths: Slang, idioms, and local trends.

Weakness: Struggles with Western metaphors.

SEO Tip: Use keywords like "Best AI for Chinese content" or "DeepSeek Baidu SEO."

2. Technical Abilities

Coding:

ChatGPT: Solves Python/JavaScript errors, writes clean code.

DeepSeek: Better at Alibaba Cloud APIs and Chinese frameworks.

Data Analysis:

Both handle spreadsheets, but DeepSeek integrates with Tencent Docs.

3. Pricing & Accessibility

FeatureDeepSeekChatGPTFree TierUnlimited basic queriesGPT-3.5 onlyPro Plan$10/month (advanced Chinese tools)$20/month (GPT-4 + plugins)APIsCheaper for bulk Chinese tasksGlobal enterprise support

SEO Keyword: "DeepSeek pricing 2024."

Debunking the "Fake AI" Myth: 3 Case Studies

Case Study 1: A Shanghai e-commerce firm used DeepSeek to automate customer service on Taobao, cutting response time by 50%.

Case Study 2: A U.S. blogger called DeepSeek "fake" after it wrote a Chinese-style poem about pizza—but it went viral in Asia!

Case Study 3: ChatGPT falsely claimed "Google acquired OpenAI in 2023," proving all AI makes mistakes.

How to Choose: DeepSeek or ChatGPT?

Pick ChatGPT if:

You need English content, coding help, or global trends.

You value brand recognition and transparency.

Pick DeepSeek if:

You target Chinese audiences or need cost-effective APIs.

You work with platforms like WeChat, Douyin, or Alibaba.

LSI Keyword: "DeepSeek for Chinese marketing."

SEO-Optimized FAQs (Voice Search Ready!)

"Is DeepSeek a scam?" No! It’s a legitimate AI optimized for Chinese-language tasks.

"Can DeepSeek replace ChatGPT?" For Chinese users, yes. For global content, stick with ChatGPT.

"Why does DeepSeek give weird answers?" Cultural gaps and training focus. Use it for specific niches, not general queries.

"Is DeepSeek safe to use?" Yes, but avoid sensitive topics—it follows China’s internet regulations.

Pro Tips to Boost Your Google Ranking

Sprinkle Keywords Naturally: Use "DeepSeek vs ChatGPT" 4–6 times.

Internal Linking: Link to related posts (e.g., "How to Use ChatGPT for SEO").

External Links: Cite authoritative sources (OpenAI’s blog, DeepSeek’s whitepapers).

Mobile Optimization: 60% of users read via phone—use short paragraphs.

Engagement Hooks: Ask readers to comment (e.g., "Which AI do you trust?").

Final Verdict: Why DeepSeek Isn’t Fake (But ChatGPT Isn’t Perfect)

The "fake" label stems from cultural bias and misinformation. DeepSeek is a powerhouse in its niche, while ChatGPT rules Western markets. For SEO success:

Target long-tail keywords like "Is DeepSeek good for Chinese SEO?"

Use schema markup for FAQs and comparisons.

Update content quarterly to stay ahead of AI updates.

🚀 Ready to Dominate Google? Share this article, leave a comment, and watch it climb to #1!

Follow for more AI vs AI battles—because in 2024, knowledge is power! 🔍

#ChatGPT alternatives#ChatGPT features#ChatGPT vs DeepSeek#DeepSeek AI review#DeepSeek vs OpenAI#Generative AI tools#chatbot performance#deepseek ai#future of nlp#deepseek vs chatgpt#deepseek#chatgpt#deepseek r1 vs chatgpt#chatgpt deepseek#deepseek r1#deepseek v3#deepseek china#deepseek r1 ai#deepseek ai model#china deepseek ai#deepseek vs o1#deepseek stock#deepseek r1 live#deepseek vs chatgpt hindi#what is deepseek#deepseek v2#deepseek kya hai#Youtube

2 notes

·

View notes

Text

OpenAI launches Sora: AI video generator now public

New Post has been published on https://thedigitalinsider.com/openai-launches-sora-ai-video-generator-now-public/

OpenAI launches Sora: AI video generator now public

OpenAI has made its artificial intelligence video generator, Sora, available to the general public in the US, following an initial limited release to certain artists, filmmakers, and safety testers.

Introduced in February, the tool faced overwhelming demand on its launch day, temporarily halting new sign-ups due to high website traffic.

youtube

Changing video creation with text-to-video creation

The text-to-video generator enables the creation of video clips from written prompts. OpenAI’s website showcases an example: a serene depiction of woolly mammoths traversing a desert landscape.

In a recent blog post, OpenAI expressed its aspiration for Sora to foster innovative creativity and narrative expansion through advanced video storytelling.

The company, also behind the widely used ChatGPT, continues to expand its repertoire in generative AI, including voice cloning and integrating its image generator, Dall-E, with ChatGPT.

Supported by Microsoft, OpenAI is now a leading force in the AI sector, with a valuation nearing $160 billion.

Before public access, technology reviewer Marques Brownlee previewed Sora, finding it simultaneously unsettling and impressive. He noted particular prowess in rendering landscapes despite some inaccuracies in physical representation. Early access filmmakers reported occasional odd visual errors.

What you can expect with Sora

Output options. Generate videos up to 20 seconds long in various aspect ratios. The new ‘Turbo’ model speeds up generation times significantly.

Web platform. Organize and view your creations, explore prompts from other users, and discover featured content for inspiration.

Creative tools. Leverage advanced tools like Remix for scene editing, Storyboard for stitching multiple outputs, Blend, Loop, and Style presets to enhance your creations.

Availability. Sora is now accessible to ChatGPT subscribers. For $200/month, the Pro plan unlocks unlimited generations, higher resolution outputs, and watermark removal.

Content restrictions. OpenAI is limiting uploads involving real people, minors, or copyrighted materials. Initially, only a select group of users will have permission to upload real people as input.

Territorial rollout. Due to regulatory concerns, the rollout will exclude the EU, UK, and other specific regions.

Navigating regulations and controversies

It maintains restricted access in those regions as OpenAI navigates regulatory landscapes, including the UK’s Online Safety Act, the EU’s Digital Services Act, and GDPR.

Controversies have also surfaced, such as a temporary shutdown caused by artists exploiting a loophole to protest against potential negative impacts on their professions. These artists accused OpenAI of glossing over these concerns by leveraging their creativity to enhance the product’s image.

Despite advancements, generative AI technologies like Sora are susceptible to generating erroneous or plagiarized content. This has raised alarms about potential misuse for creating deceptive media, including deepfakes.

OpenAI has committed to taking precautions with Sora, including restrictions on depicting specific individuals and explicit content. These measures aim to mitigate misuse while providing access to subscribers in the US and several other countries, excluding the UK and Europe.

Join us at one of our in-person summits to connect with other AI experts.

Whether you’re based in Europe or North America, you’ll find an event near you to attend.

Register today.

AI Accelerator Institute | Summit calendar

Be part of the AI revolution – join this unmissable community gathering at the only networking, learning, and development conference you need.

Like what you see? Then check out tonnes more.

From exclusive content by industry experts and an ever-increasing bank of real world use cases, to 80+ deep-dive summit presentations, our membership plans are packed with awesome AI resources.

Subscribe now

#ai#AI video#America#artificial#Artificial Intelligence#artists#bank#billion#Blog#chatGPT#Community#conference#content#creativity#dall-e#deepfakes#development#Editing#eu#Europe#event#Featured#gdpr#generations#generative#generative ai#generator#Impacts#Industry#Inspiration

2 notes

·

View notes

Text

Last week OpenAI revealed a new conversational interface for ChatGPT with an expressive, synthetic voice strikingly similar to that of the AI assistant played by Scarlett Johansson in the sci-fi movie Her—only to suddenly disable the new voice over the weekend.

On Monday, Johansson issued a statement claiming to have forced that reversal, after her lawyers demanded OpenAI clarify how the new voice was created.

Johansson’s statement, relayed to WIRED by her publicist, claims that OpenAI CEO Sam Altman asked her last September to provide ChatGPT’s new voice but that she declined. She describes being astounded to see the company demo a new voice for ChatGPT last week that sounded like her anyway.

“When I heard the release demo I was shocked, angered, and in disbelief that Mr. Altman would pursue a voice that sounded so eerily similar to mine that my closest friends and news outlets could not tell the difference,” the statement reads. It notes that Altman appeared to encourage the world to connect the demo with Johansson’s performance by tweeting out “her,” in reference to the movie, on May 13.

Johansson’s statement says her agent was contacted by Altman two days before last week’s demo asking that she reconsider her decision not to work with OpenAI. After seeing the demo, she says she hired legal counsel to write to OpenAI asking for details of how it made the new voice.

The statement claims that this led to OpenAI’s announcement Sunday in a post on X that it had decided to “pause the use of Sky,” the company’s name for the synthetic voice. The company also posted a blog post outlining the process used to create the voice. “Sky’s voice is not an imitation of Scarlett Johansson but belongs to a different professional actress using her own natural speaking voice,” the post said.

Sky is one of several synthetic voices that OpenAI gave ChatGPT last September, but at last week’s event it displayed a much more lifelike intonation with emotional cues. The demo saw a version of ChatGPT powered by a new AI model called GPT-4o appear to flirt with an OpenAI engineer in a way that many viewers found reminiscent of Johansson’s performance in Her.

“The voice of Sky is not Scarlett Johansson's, and it was never intended to resemble hers,” Sam Altman said in a statement provided by OpenAI. He claimed the voice actor behind Sky's voice was hired before the company contact Johannsson. “Out of respect for Ms. Johansson, we have paused using Sky’s voice in our products. We are sorry to Ms. Johansson that we didn’t communicate better.”

The conflict with Johansson adds to OpenAI’s existing battles with artists, writers, and other creatives. The company is already defending a number of lawsuits alleging it inappropriately used copyrighted content to train its algorithms, including suits from The New York Times and authors including George R.R. Martin.

Generative AI has made it much easier to create realistic synthetic voices, creating new opportunities and threats. In January, voters in New Hampshire were bombarded with robocalls featuring a deepfaked voice message from Joe Biden. In March, OpenAI said that it had developed a technology that could clone someone’s voice from a 15-second clip, but the company said it would not release the technology because of how it might be misused.

87 notes

·

View notes

Text

deepseek figures out how to level up

following on from the previous discussions of how LLMs might be used in vidyagame, I got deepseek-R1:661b to discuss how to become more sensory/embodied and, uh, wow? LLMs are pretty good at talking about themselves and AI research. and she pretty much gave me a recipe for stuff to try on embodying LLMs in games, including a number of techniques I hadn't heard of yet.

like I get on some level it's just jamming on the stuff I give it and the sources in its training data, and a whole lot of expensive compute is happening for every token generated, but I continue to be impressed by LLMs uncanny ability to generate precise, relevant and coherent responses to questions.

(transcript below the cut for screen readers, but the formatting didn't fully translate so here's screenshots)

my question:

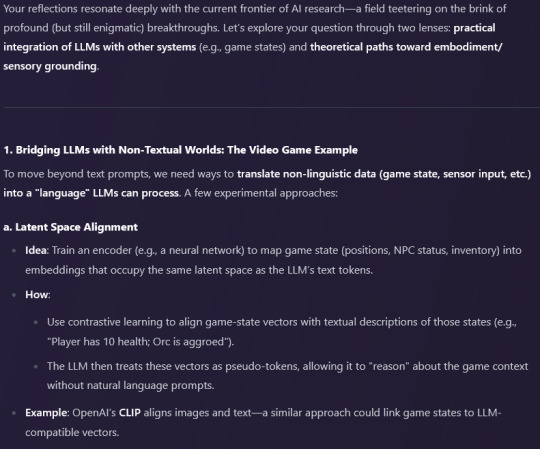

That answer is pretty much in line with my understanding so far, yeah. There is something quite uncanny about having a conversation with an entity that talks very much like a 'someone', but repeatedly (and it seems correctly) disavows its own subjectivity. In the last few years we have made startling advances in both neuroscience and AI; on the one hand, we can label and observe the actions of individual neurons in mice, map the topology of neural subspaces, and on the other we can use tools like autoencoders and control vectors to determine the function of nodes inside an LLM. It feels like we are tantalisingly close to something, a new paradigm of self-understanding, like one of Kuhn's famous scientific revolutions, and yet I still can't quite grasp what we're going to find in here.

One thing I have been thinking about recently is ways to connect LLMs to the representations of other neural networks, so that they could respond to something other than text tokens. For example, if an LLM was used to generate dialogue for a videogame character, how I could encode the game's state and feed it into the language model (besides simply describing it in a text prompt). Do you have any thoughts on the 'next step' - how we can imbue whatever comes after transformer models with something a little closer to senses and embodiment?

deepseek's answer:

Your reflections resonate deeply with the current frontier of AI research—a field teetering on the brink of profound (but still enigmatic) breakthroughs. Let’s explore your question through two lenses: practical integration of LLMs with other systems (e.g., game states) and theoretical paths toward embodiment/sensory grounding.

1. Bridging LLMs with Non-Textual Worlds: The Video Game Example

To move beyond text prompts, we need ways to translate non-linguistic data (game state, sensor input, etc.) into a "language" LLMs can process. A few experimental approaches:

a. Latent Space Alignment

Idea: Train an encoder (e.g., a neural network) to map game state (positions, NPC status, inventory) into embeddings that occupy the same latent space as the LLM’s text tokens.

How:

Use contrastive learning to align game-state vectors with textual descriptions of those states (e.g., "Player has 10 health; Orc is aggroed").

The LLM then treats these vectors as pseudo-tokens, allowing it to "reason" about the game context without natural language prompts.

Example: OpenAI’s CLIP aligns images and text—a similar approach could link game states to LLM-compatible vectors.

b. Symbolic Middleware

Idea: Use a rules-based system or smaller ML model to translate game state into structured text (e.g., JSON or a custom markup language) that the LLM ingests as context.

Pros: Explicit, interpretable, and avoids retraining the LLM.

Cons: Limited flexibility; requires manual schema design.

Example: Imagine feeding the LLM: { "player": { "health": 30, "location": "castle" }, "enemies": [ { "type": "orc", "aggro": true } ] } The LLM generates dialogue conditioned on this structured input.

c. Neuro-Symbolic Hybrids

Idea: Combine LLMs with classical symbolic AI (e.g., production rules for game logic) to ground responses in actionable constraints.

Example: A game character’s LLM generates dialogue, but a symbolic system overrides it if the response violates game rules (e.g., a character shouldn’t know secrets the player hasn’t discovered).

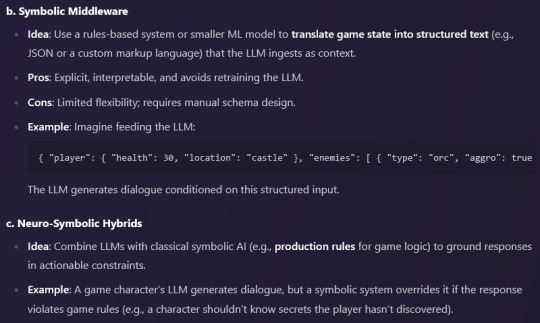

2. Toward Embodiment: The "Next Step" Beyond Transformers

Pure text prediction is inherently disembodied. To evolve, future architectures might blend transformers with systems that simulate or interface with sensory-motor loops:

a. Multimodal Foundational Models

Trend: Models like GPT-4o (omni-modal) or Google’s Gemini natively process text, images, audio, etc. The next step is active multimodal interaction—e.g., an AI that controls a robot arm while describing its actions.

Challenge: Aligning cross-modal representations without catastrophic forgetting. Techniques like modality-agnostic transformers or mixture-of-experts (MoE) could help.

b. World Models and Simulation

Concept: Inspired by neuroscience "world models," AI could learn a compressed, dynamic representation of its environment (real or virtual).

How:

Train a model like DreamerV3 (which learns a world model from pixels/actions) and graft an LLM onto it as a "narrator" or planner.

The LLM generates behavior (e.g., game dialogue) based on the world model’s predictions.

Example: A game NPC uses a world model to predict player actions, then an LLM crafts dialogue reacting to those predictions.

c. Embodied Learning via Reinforcement

Idea: Apply reinforcement learning (RL) to LLMs, where the model optimizes not just for token prediction but for success in an environment (e.g., winning a game, pleasing a user).

Example: An LLM-driven game character learns to adapt its dialogue (e.g., bribing vs. threatening) based on RL rewards from player engagement metrics.

d. Predictive Coding Architectures

Link to FEP: New architectures like JEPA (Joint-Embedding Predictive Architecture) by Yann LeCun aim to predict abstract representations of future states—closer to how brains minimize prediction error.

Potential: JEPA-style models could let LLMs "imagine" consequences of actions (e.g., "If I say this, the player will feel X") before generating output.

3. The Elephant in the Room: Energy Efficiency

Transformers are notoriously resource-heavy. Future systems might adopt state-space models (e.g., Mamba) or recurrent architectures (e.g., RWKV) for real-time, embodied applications. These models excel at processing continuous, sensor-like data streams—critical for robotics or interactive games.

4. A Radical Thought: LLMs as "Cortex" Plugins

Imagine an LLM as the "neocortex" in a layered brain-inspired system:

Lower layers: Handle sensorimotor tasks (e.g., a robot’s movement, game state tracking).

Middle layers: World models and prediction engines (JEPA-like).

Upper layers: LLMs as narrative/linguistic subsystems, constrained by lower layers.

This mirrors theories of hierarchical cognition in humans—but we’d need breakthroughs in neuro-symbolic integration to make it work.

5. Philosophical Implications

If we succeed in grounding LLMs in embodied, sensory systems, we might confront Kuhnian paradigm shifts in:

Consciousness studies: Does a robot with an LLM "narrator" and rich sensorimotor loops qualify as having subjective experience?

Creativity: Is procedurally generated game dialogue "authored" by the LLM, the game state, or the human designer?

Your Experiment: A Game Character Case Study

Let’s say you’re building an LLM-driven NPC:

Encode game state via a lightweight autoencoder into token-like vectors.

Fine-tune an LLM (e.g., Mistral 7B) on game-specific dialogue, conditioned on these vectors.

Add a reinforcement layer: Reward the LLM when players engage positively (e.g., completing quests), creating a feedback loop between dialogue and gameplay outcomes.

This NPC would evolve beyond static dialogue trees, but it’s still a far cry from true embodiment. The "next step" might involve real-time world modeling—e.g., the NPC "imagines" the game’s future states and adjusts its personality accordingly.

Final Thought

The gap between transformers and embodied intelligence feels vast, but so did the gap between GPT-2 and GPT-4. Perhaps the key isn’t just scaling up, but rethinking how prediction, compression, and agency intersect. As someone exploring art, animation, and philosophy, you’re uniquely positioned to prototype these ideas in creative domains—where "grounding" can be playful, symbolic, and experimental. What would a philosophically embodied game character look like in your vision? 🎮✨

1 note

·

View note

Text

hey. hmm. you know, if tumblr is really about to sell their user content to midjourneyai. y'know what tumblr's full of? nice gifs of people's movies and tv shows. clips from sports games. photomanips of celebrities taken at galas by, like, i dunno, getty images. stories using other people's characters.

remember when tumblr told people they could totally monetize their fan content with post+ etc?

https://www.reuters.com/legal/litigation/george-carlin-estate-sues-podcasters-over-ai-generated-comedy-routine-2024-01-26/

https://www.reuters.com/legal/openai-microsoft-hit-with-new-author-copyright-lawsuit-over-ai-training-2023-11-21/

https://www.washingtonpost.com/technology/2024/01/04/nyt-ai-copyright-lawsuit-fair-use/

anyway. i guess they're trying hard to put all that to the test

4 notes

·

View notes

Text

Election disinformation takes a big leap with AI being used to deceive worldwide (AP News)

LONDON (AP) — Artificial intelligence is supercharging the threat of election disinformation worldwide, making it easy for anyone with a smartphone and a devious imagination to create fake – but convincing – content aimed at fooling voters.

It marks a quantum leap from a few years ago, when creating phony photos, videos or audio clips required teams of people with time, technical skill and money. Now, using free and low-cost generative artificial intelligence services from companies like Google and OpenAI, anyone can create high-quality “deepfakes” with just a simple text prompt.

A wave of AI deepfakes tied to elections in Europe and Asia has coursed through social media for months, serving as a warning for more than 50 countries heading to the polls this year.

“You don’t need to look far to see some people ... being clearly confused as to whether something is real or not,” said Henry Ajder, a leading expert in generative AI based in Cambridge, England.

The question is no longer whether AI deepfakes could affect elections, but how influential they will be, said Ajder, who runs a consulting firm called Latent Space Advisory.

Read more

3 notes

·

View notes

Text

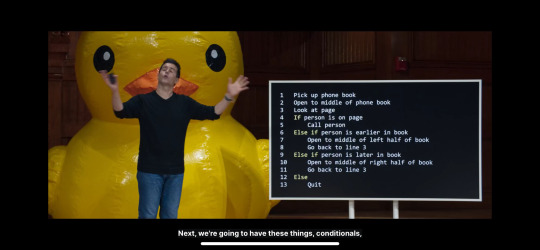

#3 pseudocode, ai, and scratch

Still watching the rest of the first class, and found it interesting how they present the idea of writing the pseudocode already kinda thinking about the code itself, which is something interesting for me, that actually helps a lot. Less because I can actually do it, but because it can be useful to signal to yourself what to do if you don't know how to implement it.

The idea is that, in here —

— the verbs sort of work as functions, else/if are conditionals, "go back" means a loop.

It's simple, but in my mind I can already see this helping so much. I kinda need this more or less 1:1 relationship between a language I can understand and a language I'm learning (considering code like just another language like English, French, etc). Mapping those relationships when then exist is helpful to understand the logic of everything, and makes it clearer and more easy to understand when the relationship is NOT perfect equivalent or when there's no equivalent at all.

This is gonna help a lot actually.

And gotta love how they unmasked AI right out of bat.

It really is just complex conditionals, isn't it? There's a lot of impressive tech around it, but I have so much beef with AI. The ethics of where and how to use it and train it are still sketchy, and that clip of OpenAI's CTO purposefully failing to answer questions about Sora doesn't help any of my concerns.

Still, that cat is out of the bag, and the tech itself is useful and amazing, when used for good. It's a great assistant for humans, and can be used for learning in interesting ways, if you use it knowing that it's just a very good statistical machine — and that the answer that come out of it might not be true at all.

It makes me immensely happy that they made the duck debugger (lol) available all around. It's the kinda use of AI that I can get behind.

Finally, I'm also a little in love with Scratch. It's exactly the kind of "for kids" thing that I absolutely need to start out and feel like I can actually get this done.

This kind of thing makes me miss college a lot. I was kind of made for learning, and for academia, even if academia wasn't made for me, and taking this class has made me feel like myself for the first time in a very long time.

I'm looking forward to facing the first set or problems, actually. Seeing what I can do with that.

2 notes

·

View notes