#Oracle to GCP PostgreSQL


Oracle to GCP PostgreSQL Migration Strategies With Newt Global Expertise

When you migrate Oracle to GCP PostgreSQL, it's crucial to understand the differences between the two database systems. PostgreSQL offers an open-source environment with a rich set of features and active community support, making it an attractive alternative to Oracle. During the migration, tools like Google Cloud Database Migration Service and Ora2Pg can help automate schema conversion and data transfer, reducing manual effort and the risk of errors. This blog explores best practices, the advantages of this migration, and how it positions businesses for ongoing success.

Seamless Oracle to GCP PostgreSQL Migration

When planning to migrate Oracle to GCP PostgreSQL, careful planning is fundamental to a successful transition. First, conduct a comprehensive assessment of your current Oracle database environment. Identify the data, applications, and dependencies that need to be migrated. This assessment helps you understand the complexity and scope of the migration.

Next, focus on schema compatibility. Oracle and PostgreSQL have different data types and functionality, so it's vital to map Oracle data types to their PostgreSQL equivalents and address any incompatibilities.

Another critical consideration is the volume of data to be migrated. Large datasets may require more time and resources, so plan accordingly to minimize downtime and disruption.

Choosing the right migration tools is also important. Tools like Google Cloud Database Migration Service, Ora2Pg, and pgLoader can automate and streamline the migration process while helping preserve data integrity and consistency. Additionally, test and validate thoroughly after migration to confirm that the new PostgreSQL database functions correctly and meets performance expectations. Finally, plan for optimization and tuning in the new environment to achieve optimal performance.
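
As a rough illustration of the data-type mapping step, the Python sketch below converts a few common Oracle column types to PostgreSQL equivalents. The mapping table, the `map_oracle_type` function name, and the integer-width cut-offs are assumptions chosen for illustration. They resemble common conventions, similar in spirit to the rules Ora2Pg applies, but they are not Ora2Pg's exact behaviour, so review each mapping against your own schema.

```python
import re

# Illustrative Oracle -> PostgreSQL mappings (an assumption, not Ora2Pg's exact rules).
_SIMPLE_TYPES = {
    "VARCHAR2": "varchar",
    "NVARCHAR2": "varchar",
    "CHAR": "char",
    "NCHAR": "char",
    "CLOB": "text",
    "NCLOB": "text",
    "BLOB": "bytea",
    "RAW": "bytea",
    "DATE": "timestamp(0)",  # Oracle DATE also stores a time of day
    "BINARY_FLOAT": "real",
    "BINARY_DOUBLE": "double precision",
}

# Matches e.g. "NUMBER", "NUMBER(10)", "NUMBER(10,2)", "VARCHAR2(100)".
_TYPE_RE = re.compile(r"^\s*([A-Z0-9_]+)\s*(?:\(\s*(\d+)\s*(?:,\s*(\d+)\s*)?\))?\s*$")


def map_oracle_type(oracle_type: str) -> str:
    """Suggest a PostgreSQL type for a simple Oracle column type string."""
    m = _TYPE_RE.match(oracle_type.upper())
    if m is None:
        raise ValueError(f"unrecognised type: {oracle_type!r}")
    base, precision, scale = m.groups()

    if base == "NUMBER":
        if precision is None:
            return "numeric"  # unconstrained NUMBER keeps arbitrary precision
        p, s = int(precision), int(scale or 0)
        if s > 0:
            return f"numeric({p},{s})"
        # Integer-valued NUMBER(p): choose the smallest integer type that always fits.
        if p <= 4:
            return "smallint"
        if p <= 9:
            return "integer"
        if p <= 18:
            return "bigint"
        return f"numeric({p})"

    if base not in _SIMPLE_TYPES:
        raise ValueError(f"no mapping defined for {base}")
    pg_type = _SIMPLE_TYPES[base]
    # Character types keep their declared length; LOB/binary types do not need one.
    if precision is not None and pg_type in ("varchar", "char"):
        return f"{pg_type}({precision})"
    return pg_type
```

The sketch raises ValueError for types it does not recognise, such as TIMESTAMP WITH TIME ZONE or VARCHAR2(100 CHAR), instead of guessing. That keeps unmapped columns visible for manual review.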

By addressing these key considerations, organizations can effectively manage the complexities of migrating Oracle to GCP PostgreSQL.

Best Practices for Future-Proofing Your Migration

1. Incremental Migration: Consider migrating in phases, beginning with non-critical data. This approach minimizes risk and makes troubleshooting and adjustments easier.

2. Comprehensive Documentation: Maintain detailed documentation of the migration process, including configuration settings, scripts used, and any issues encountered.

3. Continuous Monitoring and Maintenance: Implement robust monitoring tools to track database performance, resource utilization, and potential issues. Schedule regular maintenance tasks such as updating statistics and vacuuming tables.
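
To make the incremental approach concrete, here is a small Python sketch that groups tables into migration phases. It places non-critical tables first and, within each group, smaller tables before larger ones. The `TableInfo` fields, the `plan_phases` helper, and the smallest-first ordering are hypothetical choices for illustration and are not part of any migration tool.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class TableInfo:
    name: str
    critical: bool   # hypothetical business-criticality flag from your assessment
    size_gb: float


def _chunk(names: List[str], size: int) -> List[List[str]]:
    return [names[i:i + size] for i in range(0, len(names), size)]


def plan_phases(tables: Iterable[TableInfo], tables_per_phase: int) -> List[List[str]]:
    """Group tables into migration phases: non-critical first, smallest first."""
    if tables_per_phase < 1:
        raise ValueError("tables_per_phase must be at least 1")
    tables = list(tables)
    non_critical = sorted((t for t in tables if not t.critical), key=lambda t: t.size_gb)
    critical = sorted((t for t in tables if t.critical), key=lambda t: t.size_gb)
    # Critical tables never share a phase with non-critical ones.
    return (_chunk([t.name for t in non_critical], tables_per_phase)
            + _chunk([t.name for t in critical], tables_per_phase))
```

Keeping critical tables in their own phases means that any issue found in the early, low-risk phases can be fixed before production-critical data moves.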

GCP PostgreSQL: Powering Growth through Seamless Integration

Migrating to GCP PostgreSQL offers several key advantages that make it a forward-looking choice:

Open-Source Innovation: PostgreSQL is a leading open-source database known for its robustness, feature richness, and active community. It continuously evolves with new features and advancements.

Integration with Google Ecosystem: GCP PostgreSQL integrates seamlessly with other Google Cloud services, such as BigQuery for analytics, AI and machine learning tools, and Kubernetes for container orchestration.

Cost Management: Google Cloud's pay-as-you-go pricing model supports better cost management and budgeting than traditional on-premises solutions.

Evolving with GCP PostgreSQL: Strategic Migration Insights with Newt Global

In conclusion, migrating Oracle to GCP PostgreSQL represents a strategic evolution for businesses, supported by Newt Global's expertise and partnership. This transition goes beyond mere database migration: after migration, thorough testing and continuous optimization are crucial to fully leverage PostgreSQL's capabilities and ensure high performance. By migrating Oracle to GCP PostgreSQL, organizations can reduce the total cost of ownership and benefit from an open-source environment that integrates smoothly with other Google Cloud services. This positions businesses to respond to market demands, drive innovation, and achieve greater operational efficiency. Ultimately, migrating Oracle to GCP PostgreSQL through Newt Global not only addresses current database needs but also lays the foundation for future growth and sustained competitiveness in an increasingly digital world.

Thanks for reading.

For More Information, Visit Our Website: https://newtglobal.com/


IT Staff Augmentation Services | Staff Augmentation Company

In today’s fast-paced digital world, technology evolves rapidly—and so does the need for top IT talent. Companies are constantly seeking efficient, scalable, and cost-effective ways to expand their tech teams without bearing the long-term burden of hiring full-time employees. This is where IT staff augmentation services come into play.

At Versatile IT Solutions, we offer flexible and customized IT staff augmentation to meet your short-term or long-term project demands. With 12+ years of industry experience, we help companies of all sizes find the right talent, fast—without compromising quality.

What Is IT Staff Augmentation?

IT Staff Augmentation is a strategic outsourcing model that allows you to hire skilled tech professionals on-demand to fill temporary or project-based roles within your organization. This model helps bridge skill gaps, scale teams quickly, and improve operational efficiency without the complexities of permanent hiring.

Whether you need software developers, QA engineers, UI/UX designers, DevOps experts, or cloud specialists, Versatile IT Solutions has a ready pool of vetted professionals to meet your unique business needs.

Why Choose Versatile IT Solutions?

Versatile IT Solutions stands out as a reliable staff augmentation company because of our deep understanding of technology, rapid talent deployment capabilities, and commitment to quality.

✅ Key Highlights:

12+ Years of Experience in IT consulting and workforce solutions

300+ Successful Client Engagements across the USA, UK, UAE, and India

Pre-vetted Tech Talent in various domains and technologies

Flexible Engagement Models: Hourly, monthly, or project-based

Fast Onboarding & Deployment within 24–72 hours

Compliance-Ready staffing for international standards

We don’t just provide resumes—we deliver professionals who are culture-fit, project-ready, and aligned with your objectives.

Our IT Staff Augmentation Services

We offer comprehensive staff augmentation solutions that allow companies to hire qualified IT professionals on demand. Some of our key offerings include:

1. Contract Developers

Hire experienced developers skilled in technologies like Java, Python, PHP, Node.js, React, Angular, and more to strengthen your software development lifecycle.

2. Dedicated Project Teams

Get entire project teams—including developers, testers, designers, and project managers—for end-to-end execution.

3. Cloud & DevOps Engineers

Need help with infrastructure or deployment? Augment your IT team with certified AWS, Azure, or Google Cloud professionals.

4. QA & Automation Testing Experts

Our testing professionals ensure product reliability with both manual and automated testing capabilities.

5. UI/UX Designers

Enhance your product’s user experience with creative UI/UX professionals skilled in tools like Figma, Sketch, and Adobe XD.

6. ERP & CRM Specialists

Staff your enterprise solutions with SAP, Salesforce, and Microsoft Dynamics experts.

Technologies We Support

We cater to a wide array of platforms and technologies:

Front-End: React.js, Angular, Vue.js

Back-End: Node.js, .NET, Java, Python, PHP

Mobile: Android, iOS, Flutter, React Native

Cloud: AWS, Azure, GCP

DevOps: Docker, Kubernetes, Jenkins, Ansible

Database: MySQL, MongoDB, PostgreSQL, Oracle

ERP/CRM: SAP, Salesforce, Microsoft Dynamics

Engagement Models

We offer flexibility with our hiring models to best suit your project and budget requirements:

Hourly Basis – Ideal for short-term needs

Monthly Contracts – Great for ongoing or long-term projects

Dedicated Teams – For businesses needing focused delivery from a committed team

Whether you want to scale up quickly for a new project or need niche expertise to complement your internal team, our adaptable models ensure smooth onboarding and integration.

Benefits of IT Staff Augmentation

Hiring through a trusted staff augmentation partner like Versatile IT Solutions comes with numerous advantages:

🔹 Cost-Effective Resource Allocation

🔹 No Long-Term Hiring Commitments

🔹 Access to Global Talent Pool

🔹 Faster Time-to-Market

🔹 Reduced Overhead Costs

🔹 Control Over Project Workflow

Instead of spending months on hiring and training, you can deploy top tech talent within days and keep your business moving forward.

Client Success Story

“We needed a team of skilled backend developers for a critical fintech project. Versatile delivered high-quality professionals within a week. They were proactive, collaborative, and technically strong.” — CTO, US-Based Fintech Company

“Thanks to Versatile's staff augmentation services, we were able to reduce our time to market by 40%. Their resources seamlessly integrated with our in-house team.” — Head of Product, SaaS Startup

Ready to Augment Your IT Team?

If you're struggling with hiring delays, talent shortages, or capacity issues, Versatile IT Solutions is here to help. We offer customized IT staff augmentation services that let you scale smarter, faster, and more efficiently.

📌 Explore our Staff Augmentation Services 📌 Need expert advice or want to get started?



Java Cloud Development Company

Looking for a reliable Java cloud development company? Associative in Pune, India offers scalable, secure, and enterprise-grade cloud solutions using Java and modern frameworks.

In today's fast-paced digital landscape, cloud-native applications are no longer a luxury—they're a necessity. Java, with its robustness, portability, and security, remains a preferred language for developing enterprise-grade cloud solutions. If you're searching for a Java cloud development company that blends experience, innovation, and scalability, Associative, based in Pune, India, is your trusted technology partner.

Why Choose Java for Cloud Development?

Java has long been recognized for its platform independence, scalability, and extensive ecosystem. When paired with cloud platforms like Amazon Web Services (AWS) and Google Cloud Platform (GCP), Java enables businesses to build highly resilient, distributed systems with ease.

Benefits of using Java for cloud-based applications:

Object-oriented, secure, and stable

Strong community support

Excellent performance for backend services

Seamless integration with cloud services and databases

Compatibility with containerization tools like Docker and Kubernetes

Associative – Your Expert Java Cloud Development Company

At Associative, we specialize in building robust and scalable Java-based cloud solutions tailored to your business needs. With a proven track record across multiple industries, our team leverages frameworks like Spring Boot, cloud platforms like AWS and GCP, and robust database solutions like Oracle and MySQL to deliver end-to-end cloud applications.

Our Java Cloud Development Services Include:

Cloud-native application development using Java and Spring Boot

Migration of legacy Java applications to cloud platforms

API development & integration for scalable microservices

Serverless architecture & deployment on AWS Lambda and GCP Cloud Functions

Containerization with Docker & orchestration using Kubernetes

Database integration with Oracle, MySQL, and PostgreSQL

Continuous Integration and Continuous Deployment (CI/CD) pipelines

Cloud security and compliance implementation

Industries We Serve

We provide cloud-based Java solutions to various industries including:

Fintech and Banking

E-commerce and Retail

Healthcare and Education

Logistics and Supply Chain

Real Estate and Hospitality

Why Clients Trust Associative

Location Advantage: Based in Pune, India – a growing tech hub

Cross-Technology Expertise: Java, Spring Boot, AWS, GCP, Oracle

Agile Delivery: Scalable, flexible, and cost-effective solutions

End-to-End Services: From planning to deployment and support

Certified Developers: Skilled professionals in cloud and Java technologies

Let’s Build the Future on Cloud with Java

If you're looking to digitally transform your business through cloud computing and need a reliable Java cloud development company, Associative is here to help. Whether it's migrating your legacy system, developing cloud-native applications, or building microservices, we bring the technology and expertise to accelerate your growth.


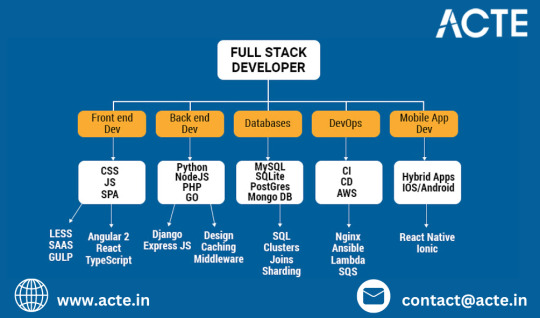

Essential Skills and Technologies for Full Stack Developers in 2024

As we move deeper into the digital age, the role of a full stack developer has never been more crucial. These professionals are the backbone of modern software development, combining expertise in both front-end and back-end technologies to build complete web applications.

For those looking to master full stack development, enrolling in a reputable Full Stack Developer Training in Pune can provide the essential skills and knowledge needed to navigate this dynamic landscape effectively.

To thrive as a full stack developer in 2024, you'll need a robust set of skills that cover everything from user interfaces to server management. Here’s a detailed look at the key competencies you’ll need to succeed.

1. Front-End Development: Crafting User Interfaces

The front-end is the face of your application, where user interaction takes place. A solid understanding of HTML, CSS, and JavaScript is foundational, but in 2024, that’s just the beginning.

Advanced JavaScript Frameworks and Libraries: Mastering frameworks like React.js, Angular, or Vue.js is essential for creating dynamic and responsive user experiences efficiently.

Responsive Web Design: With the continued dominance of mobile browsing, proficiency in CSS frameworks such as Bootstrap and Tailwind CSS is critical for developing mobile-first, responsive designs that work seamlessly across devices.

Version Control: Mastering Git and using platforms like GitHub or GitLab is indispensable for managing code versions and collaborating effectively, whether working individually or as part of a team.

2. Back-End Development: The Core of Application Functionality

The back-end is where the heavy lifting happens, powering the application’s core logic and operations.

Server-Side Programming Languages: Expertise in languages and runtimes like JavaScript (Node.js), Python, Ruby, Java, and C# remains vital. The choice often depends on the specific needs of your project.

Web Frameworks: Familiarity with frameworks like Express.js (Node.js), Django (Python), Spring (Java), and Ruby on Rails (Ruby) enables you to build and manage back-end systems efficiently.

API Development: Understanding how to develop and integrate RESTful APIs is essential for ensuring smooth communication between the front-end and back-end, particularly in data-rich applications.

Security: Implementing robust security measures, such as OAuth, JWT (JSON Web Tokens), and role-based access control, is critical to protecting user data and ensuring secure transactions.
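
Here is a minimal Python sketch of role-based access control, assuming a hypothetical in-memory role table. In a real back end, the user's roles would usually come from a verified identity source such as a JWT claim or an OAuth provider. This sketch does not verify tokens. It only shows the permission check.

```python
from enum import Enum, auto
from typing import Iterable


class Permission(Enum):
    READ = auto()
    WRITE = auto()
    DELETE = auto()


# Hypothetical role table; production systems typically load this from a database or policy file.
ROLE_PERMISSIONS = {
    "viewer": {Permission.READ},
    "editor": {Permission.READ, Permission.WRITE},
    "admin": {Permission.READ, Permission.WRITE, Permission.DELETE},
}


def is_allowed(roles: Iterable[str], permission: Permission) -> bool:
    """Grant access if any of the user's roles includes the permission; unknown roles grant nothing."""
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)
```

Unknown roles map to an empty set, so access is denied by default. This means a typo in a role name cannot accidentally grant access.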

3. Database Management: Handling Data Efficiently

Effective data management is crucial for the success of any application.

Relational Databases: Proficiency in SQL and experience with relational databases like MySQL, PostgreSQL, or Oracle are necessary for managing structured data.

NoSQL Databases: With the increasing importance of unstructured data, familiarity with NoSQL databases like MongoDB or Cassandra is becoming more valuable.

Database Design and Optimization: Strong skills in designing databases, writing efficient queries, and optimizing performance are key to managing complex data environments. Here’s where getting certified with the Top Full Stack Online Certification can help a lot.

4. DevOps and Deployment: Keeping Applications Running Smoothly

In today’s development landscape, understanding DevOps practices is essential for maintaining and deploying applications.

CI/CD Pipelines: Mastery of Continuous Integration/Continuous Deployment tools like Jenkins, Travis CI, or GitLab CI helps automate testing and deployment, ensuring a smooth development workflow.

Containerization: Proficiency in Docker and orchestration tools like Kubernetes is essential for managing applications in cloud environments, ensuring they run consistently across different platforms.

Cloud Services: Knowledge of cloud platforms like AWS, Azure, or Google Cloud Platform (GCP) is crucial for deploying and scaling applications in a cost-effective and flexible manner.

5. API Development and Integration: Connecting the Dots

APIs play a pivotal role in connecting different components of an application and integrating with external services.

GraphQL: Learning GraphQL offers a more efficient and flexible approach to data querying, which is especially beneficial in complex web applications.

Microservices Architecture: Understanding how to build applications using microservices allows for better scalability and modularity, enabling different services to be updated and deployed independently.

6. Version Control and Collaboration: Working Together

Effective collaboration is key in modern development environments, and version control systems are the foundation.

Version Control: Mastery of Git is essential for managing code changes, coordinating with team members, and tracking the progress of a project over time.

Project Management Tools: Familiarity with tools like JIRA, Trello, or Asana helps in organizing tasks, managing workflows, and ensuring that projects stay on track.

7. Testing and Quality Assurance: Ensuring Robust Applications

Quality assurance is crucial to delivering reliable software that meets user expectations.

Unit Testing: Writing unit tests using frameworks like Jest (JavaScript), JUnit (Java), or PyTest (Python) ensures that each component of your application works as expected.

Integration Testing: Ensuring that different parts of the application work together as intended is vital for maintaining overall system integrity.

End-to-End Testing: Tools like Selenium, Cypress, or Puppeteer allow you to test entire workflows, simulating real user interactions to catch issues before they impact the user.
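
For example, a unit test for a small pricing helper might look like the sketch below. The `apply_discount` function is hypothetical. The tests use Python's standard-library `unittest` module so they run without extra installs. PyTest can also collect and run `unittest.TestCase` classes.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Return the price after a percentage discount, rounded to cents."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (100 - percent) / 100, 2)


class ApplyDiscountTests(unittest.TestCase):
    def test_regular_discount(self):
        self.assertEqual(apply_discount(200, 10), 180.0)

    def test_zero_discount_keeps_price(self):
        self.assertEqual(apply_discount(59.99, 0), 59.99)

    def test_out_of_range_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(100, 120)
```

If you save this as test_pricing.py, you can run it with `python -m unittest test_pricing` or `pytest test_pricing.py`.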

8. Soft Skills: The Human Element of Development

Beyond technical prowess, soft skills are essential for a well-rounded full stack developer.

Problem-Solving: The ability to troubleshoot and resolve complex issues is crucial in any development environment.

Communication: Clear and effective communication is key to collaborating with team members, stakeholders, and clients.

Time Management: Balancing multiple tasks and meeting deadlines requires strong organizational skills and time management.

Adaptability: The tech landscape is ever-changing, and a successful developer must be willing to learn and adapt to new technologies and methodologies.

9. Staying Ahead of the Curve: Emerging Technologies

Keeping up with new and emerging technologies is vital for staying competitive in the job market.

WebAssembly: Knowledge of WebAssembly can enhance performance for web applications, particularly those requiring heavy computational tasks.

AI/ML Integration: Incorporating machine learning models and AI features into web applications is becoming more common, and understanding these technologies can give you a competitive edge.

Blockchain: With the growing interest in decentralized applications (dApps), blockchain technology is becoming increasingly relevant in certain industries.

Conclusion

In 2024, the role of a full stack developer demands a diverse skill set that spans both front-end and back-end development, as well as a deep understanding of emerging technologies. Success in this field requires continuous learning, adaptability, and a commitment to staying ahead of the curve. By mastering these essential skills and technologies, you’ll be well-equipped to build and maintain complex, high-performing applications that meet the demands of today’s digital landscape.



🚀 10X Your Coding Skills with Learn24x – Apply Now! 🚀

Looking to master the most in-demand tech skills? At Learn24x, we offer expert-led training across a wide range of courses to help you excel in your tech career:

🔹 Full Stack Development: Java, Python, .Net, MERN, MEAN, PHP

🔹 Programming Languages: Java, Python, .Net, PHP

🔹 Web & Mobile Development: Angular, ReactJS, VueJS, React Native, Flutter, Ionic, Android

🔹 Cloud & DevOps: AWS, Azure, GCP DevOps

🔹 Database Technologies: MongoDB, MySQL, Oracle, SQL Server, IBM Db2, PostgreSQL

🔹 Testing: Manual & Automation Testing, ETL Testing

🔹 Data & Business Intelligence: Power BI, Data Science, Data Analytics, AI, ETL Developer

🔹 Web Design & Frontend: HTML5, CSS3, Bootstrap5, JavaScript, jQuery, TypeScript

🔹 Digital Marketing

🌐 Learn online, gain hands-on experience, and unlock career opportunities with personalized guidance and job placement support!

📞 +91 80962 66265

🌐 https://www.learn24x.com/

Apply today and accelerate your tech journey with Learn24x! 💻

#Learn24x #TechSkills #FullStackDevelopment #DataScience #CloudDevOps #DigitalMarketing #WebDevelopment #AI #Python #Java #CareerGrowth #Programming #Testing #FrontendDevelopment #ReactJS #CloudComputing #Internship #JobPlacement #UpskillNow #TechCareers #CodingCourses #SoftwareDevelopment



GCP Database Migration Service Boosts PostgreSQL migrations

GCP database migration service

GCP Database Migration Service (DMS) simplifies migrating data to Google Cloud databases for new workloads. DMS offers continuous migrations from MySQL, PostgreSQL, and SQL Server to Cloud SQL, and from PostgreSQL to AlloyDB for PostgreSQL. It also migrates Oracle workloads to Cloud SQL for PostgreSQL and AlloyDB to modernise them.

This blog post discusses ways to speed up migrations of PostgreSQL workloads to Cloud SQL and AlloyDB.

Large-scale database migration challenges

The main purpose of Database Migration Service is to move databases smoothly with minimal downtime. With large production workloads, migration speed is crucial to that experience. For PostgreSQL sources, slow migrations can cause:

The destination takes a long time to catch up with the source once replication begins.

Long-running copy operations hold back VACUUM on the source, risking transaction ID wraparound.

WAL accumulates on the source, increasing its disk usage.

Boost migrations

To speed up migrations, you can fine-tune settings that help avoid the concerns above. The following options apply to Cloud SQL and AlloyDB destinations and fall into three categories:

Parallel initial load and change data capture (CDC) with DMS

Source and target PostgreSQL parameters

Machine and network settings

Let's examine each in detail.

Parallel initial load and CDC with DMS

Google's new DMS functionality sets up multiple pglogical subscriptions between the source and destination databases, so data migrates in parallel streams during both the initial load and CDC.

Database Migration Service’s UI and Cloud SQL APIs default to OPTIMAL, which balances performance and source database load. You can increase migration speed by selecting MAXIMUM, which delivers the maximum dump speeds.

Based on your setting:

DMS calculates the optimal number of subscriptions (the receiving side of pglogical replication) per database, based on database and instance-size information.

To balance replication set sizes among subscriptions, tables are assigned to distinct replication sets based on their size.

Each subscription connection copies its data in parallel and then proceeds to CDC.

In Google’s experience, MAXIMUM mode speeds migration multifold compared to MINIMAL / OPTIMAL mode.

The MAXIMUM setting delivers the fastest speeds, but if the source is already under load, it may slow application performance, so check source resource usage before choosing this option.
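
DMS does not document exactly how it assigns tables to replication sets. The Python sketch below therefore uses a common stand-in, a largest-first greedy heuristic that always gives the next-largest table to the currently least-loaded subscription. The `assign_tables` name and its inputs are hypothetical. The sketch shows why balancing by size works, and also why it cannot help when one table dominates.

```python
import heapq
from typing import Dict, List


def assign_tables(table_sizes: Dict[str, float], num_subscriptions: int) -> List[List[str]]:
    """Greedy largest-first assignment of tables to replication sets."""
    if num_subscriptions < 1:
        raise ValueError("num_subscriptions must be at least 1")
    # Min-heap of (total assigned size, subscription index): the least-loaded set pops first.
    heap = [(0.0, i) for i in range(num_subscriptions)]
    heapq.heapify(heap)
    sets: List[List[str]] = [[] for _ in range(num_subscriptions)]
    for name, size in sorted(table_sizes.items(), key=lambda kv: kv[1], reverse=True):
        total, idx = heapq.heappop(heap)
        sets[idx].append(name)
        heapq.heappush(heap, (total + size, idx))
    return sets
```

For example, with sizes {"a": 100, "b": 60, "c": 50, "d": 10} and two subscriptions, the sets become ["a", "d"] and ["b", "c"], and each holds 110. If one table held almost all the data, however, the set that received it would always finish last, because a single table is never split.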

Configure source and target PostgreSQL parameters.

CDC and initial load can be optimised with these database options. The suggestions have a range of values, which you must test and set based on your workload.

Target instance fine-tuning

These destination database configurations can be fine-tuned.

max_wal_size: Set this in range of 20GB-50GB

The max_wal_size setting limits how large WAL can grow between automatic checkpoints. A higher value reduces checkpoint frequency, which leaves more resources for the migration. With the default max_wal_size, DMS load can trigger checkpoints every few seconds. To avoid this, set max_wal_size between 20GB and 50GB, depending on the machine tier. Higher values improve migration speed, especially during the initial load. AlloyDB manages checkpoints automatically, so this parameter is not needed there. After migration, adjust the value to fit production workload requirements.

pglogical.synchronous_commit : Set this to off

As the name implies, setting pglogical.synchronous_commit to off lets commits be acknowledged before their WAL records are flushed to disk. The flush then happens according to the wal_writer_delay setting. This asynchronous commit speeds up CDC DML changes but reduces durability: the last few asynchronous commits may be lost if PostgreSQL crashes.

wal_buffers : Set 32–64 MB in 4 vCPU machines, 64–128 MB in 8–16 vCPU machines

wal_buffers sets the amount of shared memory used for WAL data that has not yet been written to disk. Smaller wal_buffers values increase commit frequency, so increasing them helps the initial load. For targets with more vCPUs, you can set it as high as 256MB.

maintenance_work_mem: Suggested value of 1GB / size of biggest index if possible

PostgreSQL maintenance operations such as VACUUM, CREATE INDEX, and ALTER TABLE ADD FOREIGN KEY use maintenance_work_mem, and a database session runs only one of these operations at a time. Before CDC begins, DMS migrates the initial load data and then rebuilds indexes and constraints on the destination, so a larger maintenance_work_mem speeds up that rebuild. Increase this value beyond the 64 MB default; past tests with 1 GB yielded good results. If possible, set it close to the size of the largest index to be replicated on the destination. After migration, reset this parameter to its default value to avoid affecting application query processing.

max_parallel_maintenance_workers: Proportional to CPU count

After data migration, DMS uses pg_restore to recreate secondary indexes on the destination, and it chooses a value for pg_restore's --jobs option based on the target machine configuration. To speed up these CREATE INDEX calls, set max_parallel_maintenance_workers on the destination so that indexes can be built in parallel. The default is 2, but you can raise it according to the destination instance's CPU count and memory. After migration, reset this parameter to its default value to avoid affecting application query processing.

max_parallel_workers: Set in proportion to max_worker_processes

The max_parallel_workers flag sets the maximum number of parallel workers the system supports. The default value is 8. Setting it above max_worker_processes has no effect, because parallel workers are taken from that pool. max_parallel_workers should be equal to or greater than max_parallel_maintenance_workers.
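
The relationships between these worker settings can be checked mechanically. This Python sketch flags the two conditions described above: max_parallel_workers above max_worker_processes, and max_parallel_maintenance_workers above max_parallel_workers. The function name is hypothetical. Keep in mind that max_worker_processes is also shared with other background workers, including pglogical's replication workers, so leave headroom beyond the parallel worker count.

```python
from typing import List


def check_parallel_settings(max_worker_processes: int,
                            max_parallel_workers: int,
                            max_parallel_maintenance_workers: int) -> List[str]:
    """Return warnings for worker settings that cannot take full effect."""
    warnings = []
    if max_parallel_workers > max_worker_processes:
        # Parallel workers are drawn from the max_worker_processes pool.
        warnings.append(
            f"max_parallel_workers ({max_parallel_workers}) exceeds "
            f"max_worker_processes ({max_worker_processes}); the excess has no effect"
        )
    if max_parallel_maintenance_workers > max_parallel_workers:
        # Maintenance workers are also limited by max_parallel_workers.
        warnings.append(
            f"max_parallel_maintenance_workers ({max_parallel_maintenance_workers}) exceeds "
            f"max_parallel_workers ({max_parallel_workers})"
        )
    return warnings
```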

autovacuum: Off

If there is a lot of data to catch up on during the CDC phase, turn off autovacuum on the destination until replication lag is low. To speed up a one-time manual VACUUM before promoting the instance, set max_parallel_maintenance_workers to the Cloud SQL instance's vCPU count (for example, 4) and maintenance_work_mem to 10GB or higher; manual VACUUM uses maintenance_work_mem. Turn autovacuum back on after migration.
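
This Python sketch combines the target-side suggestions. It takes a vCPU count and, optionally, the size of the largest index, and returns a set of migration-time settings. The wal_buffers and maintenance_work_mem rules follow the ranges given above. Three parts are assumptions: the vCPU cut-offs for max_wal_size, treating 5 to 7 vCPUs like 8 to 16, and the helper's name. Values are written in postgresql.conf style. Cloud SQL and AlloyDB flags may expect different units, so check each flag's documentation before applying anything, and test against your own workload.

```python
from typing import Dict, Optional


def suggest_target_flags(vcpus: int,
                         largest_index_mb: Optional[int] = None,
                         alloydb: bool = False) -> Dict[str, str]:
    """Suggest migration-time settings for the destination instance (a sketch, not DMS logic)."""
    if vcpus < 1:
        raise ValueError("vcpus must be at least 1")

    # wal_buffers: 32-64 MB up to 4 vCPUs, 64-128 MB for 8-16, up to 256 MB beyond that.
    if vcpus <= 4:
        wal_buffers_mb = 64
    elif vcpus <= 16:
        wal_buffers_mb = 128
    else:
        wal_buffers_mb = 256

    # maintenance_work_mem: at least 1 GB, or the largest index size if that is bigger.
    maintenance_mb = max(1024, int(largest_index_mb or 0))

    flags = {
        "wal_buffers": f"{wal_buffers_mb}MB",
        "maintenance_work_mem": f"{maintenance_mb}MB",
        # Proportional to CPU count; max_worker_processes must also be at least max_parallel_workers.
        "max_parallel_maintenance_workers": str(vcpus),
        "max_parallel_workers": str(max(8, vcpus)),
        "pglogical.synchronous_commit": "off",  # faster CDC, weaker durability
        "autovacuum": "off",                    # re-enable after migration
    }
    if not alloydb:
        # AlloyDB manages checkpoints itself; for Cloud SQL pick 20-50 GB by tier (cut-offs assumed).
        flags["max_wal_size"] = "20GB" if vcpus <= 4 else ("32GB" if vcpus <= 16 else "50GB")
    return flags
```

All of these are migration-time values. As the text notes, reset max_wal_size, maintenance_work_mem, and max_parallel_maintenance_workers and re-enable autovacuum once the migration is complete.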

Source instance configurations for fine tuning

Finally, for source instance fine tuning, consider these configurations:

shared_buffers: Set to 60% of RAM

The shared_buffers parameter sets how much memory the database server uses for shared memory buffers. Increase shared_buffers to 60% of the source PostgreSQL database's RAM to improve initial load performance and buffer SELECTs.

Adjust machine and network settings

Another factor in faster migrations is machine and network configuration. Larger source and destination configurations (RAM, CPU, disk I/O) speed up migrations.

Here are some methods:

Consider a large machine tier for the destination instance when migrating with DMS, then downsize it to a lower tier after migration but before promoting the instance. Downsizing requires a restart. Because it happens before promotion, source downtime is usually unaffected.

Network throughput is limited by the number of vCPUs: each VM has a network egress cap on write throughput that depends on its machine type. Within that cap, disk throughput scales at 0.48 MBps per GB of storage, and disk IOPS scales at 30 per GB. Choose Cloud SQL instances with more vCPUs, and increase disk size to gain throughput and IOPS.
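
The per-GB figures above make it easy to estimate what a given disk size provides. In this Python sketch, `egress_cap_mbps` is a hypothetical input that stands in for the machine type's network egress limit, which you would look up for your chosen tier. The function name is also illustrative.

```python
from typing import Optional, Tuple

THROUGHPUT_MBPS_PER_GB = 0.48  # disk throughput per provisioned GB, from the text
IOPS_PER_GB = 30               # disk IOPS per provisioned GB, from the text


def disk_limits(disk_gb: float, egress_cap_mbps: Optional[float] = None) -> Tuple[float, int]:
    """Estimate (throughput in MBps, IOPS) for a disk of the given size."""
    throughput = disk_gb * THROUGHPUT_MBPS_PER_GB
    if egress_cap_mbps is not None:
        # Write throughput can't exceed the VM's network egress cap.
        throughput = min(throughput, egress_cap_mbps)
    return round(throughput, 2), int(disk_gb * IOPS_PER_GB)
```

For example, a 500 GB disk gives roughly 240 MBps and 15,000 IOPS before any egress cap applies. This is why provisioning extra disk can speed up a migration even when the data itself is much smaller.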

Google’s experiments show that private IP migrations are 20% faster than public IP migrations.

Size initial storage based on the migration workload’s throughput and IOPS, not just the source database size.

The number of vCPUs in the target Cloud SQL instance determines Index Rebuild parallel threads. (DMS creates secondary indexes and constraints after initial load but before CDC.)

Final thoughts and limitations

DMS may not improve speed much if one huge table holds most of the data in the database being migrated. Because of pglogical constraints, parallelism currently works at the table level, so a single table's data cannot be split across streams. Future updates are expected to address this limitation.

Do not enable automated backups during migration. DDL changes on the source are not replicated, so avoid them while the migration is running.

Several practices help optimise DMS migrations: fine-tuning source and destination instance configurations, choosing suitable machine and network configurations, and monitoring each step of the workflow. Following these best practices and addressing potential issues early makes faster DMS migrations possible.

Read more on govindhtech.com



Cutting-Edge Software Development Services - MedRec Technologies

Comprehensive Software Development Solutions

At MedRec Technologies, we pride ourselves on being a leading provider of top-tier software development services. Our dedicated teams of experienced developers, designers, and analysts are committed to delivering exceptional solutions tailored to meet the unique needs of our clients. Our services span a wide array of technologies and industries, ensuring we can cater to any requirement you may have.

Frontend Development

Our team of skilled frontend developers specializes in creating engaging, responsive, and user-friendly interfaces. Leveraging the latest technologies, we ensure your web applications provide a seamless user experience.

AngularJS & BackboneJS: Robust frameworks for dynamic web applications.

JavaScript & React: The core language of the web and a leading library for interactive UIs.

UI/UX Design: Crafting visually appealing and intuitive designs.

Backend Development

We offer robust backend solutions that power your applications, ensuring scalability, security, and performance.

C & C++: High-performance system programming.

C# & .NET: Comprehensive development ecosystem for enterprise solutions.

Python: Versatile language for rapid development and data analysis.

Ruby on Rails: Efficient framework for scalable web applications.

PHP & Java: Popular languages for dynamic web content and enterprise applications.

Database Management

Our database experts ensure your data is efficiently stored, retrieved, and managed, providing a solid foundation for your applications.

MySQL, MongoDB, PostgreSQL: Reliable and scalable database solutions.

Amazon Aurora, Oracle, MS SQL: Enterprise-grade database services.

ClickHouse, MariaDB: High-performance, open-source databases.

Cloud Computing

We provide comprehensive cloud services, enabling your business to leverage scalable and cost-effective IT resources.

Amazon AWS, Google Cloud Platform (GCP), Microsoft Azure: Leading cloud platforms offering a wide range of services.

Private Cloud: Customizable cloud solutions for enhanced security and control.

Containerization & Orchestration: Docker, Kubernetes, and more for efficient application deployment and management.

CI/CD and Configuration Management

Streamline your development and deployment processes with our CI/CD and configuration management services.

Jenkins, GitLab, GitHub: Automate your software delivery pipeline.

Ansible, Chef, Puppet: Ensure consistent and reliable system configurations.

Artificial Intelligence and Machine Learning

Harness the power of AI and machine learning to transform your business operations and drive innovation.

Deep Learning & Data Science: Advanced analytics and predictive modeling.

Computer Vision & Natural Language Processing: Enhance automation and user interaction.

Blockchain Technologies

Embrace the future of secure and decentralized applications with our blockchain development services.

Smart Contracts & dApps: Automate transactions and processes securely.

Crypto Exchange & Wallets: Develop secure platforms for digital assets.

NFT Marketplace: Create, buy, and sell digital assets on a decentralized platform.

Internet of Things (IoT)

Connect your devices and gather valuable data with our end-to-end IoT solutions.

Smart Homes & Telematics: Enhance living and transportation with connected technology.

IIoT & IoMT: Industrial and medical IoT solutions for improved efficiency and care.

Industry-Specific Solutions

We understand the unique challenges and opportunities within various industries. Our solutions are tailored to meet the specific needs of your sector.

Healthcare and Life Sciences

Revolutionize patient care and streamline operations with our AI and IoMT solutions.

AIaaS & IoMTaaS: Advanced analytics and connected medical devices.

Telemedicine & Remote Monitoring: Improve access to care and patient outcomes.

Banking and Finance

Secure your transactions and innovate your financial services with our blockchain and AI solutions.

Blockchain for Secure Transactions: Enhance security and transparency.

AI for Predictive Analytics: Improve decision-making and customer service.

Travel and Hospitality

Enhance guest experiences and optimize operations with our technology-driven solutions.

VR Tours & Chatbots: Engage customers with immersive experiences and efficient service.

IoT-Enabled Devices: Automate and personalize guest services.

Logistics and Shipping

Optimize your supply chain and unlock new business opportunities with our comprehensive logistics solutions.

XaaS (Everything-as-a-Service): Flexible and scalable solutions for logistics and supply chain management.

Our Commitment to Excellence

With over a decade of experience in crafting exceptional software, we have a proven track record of success. Our clients trust us to deliver high-quality solutions that drive their digital transformation and business growth.

10+ Years of Experience: Delivering top-tier software development services.

7.5+ Years Client Retention: Building long-term partnerships.

100+ Successful Projects: Supporting startups and enterprises alike.

Strict NDA and IP Protection: Ensuring confidentiality and security.

Conclusion

Partner with MedRec Technologies to accelerate your digital transformation. Our expert teams are ready to deliver innovative, scalable, and secure software solutions tailored to your unique needs. Contact us today to learn more about how we can help your business thrive in the digital age.

Relational Databases - How to Choose | SQL Tutorial | SQL for Beginners. When learning SQL, you first nee...

FY2019 IaaS Workshop @ NTT Communications (@iwashi86, 2019.4.15)

Days 3-5 (three days): build a web app with the same specification using IaaS, CaaS, and FaaS. The app itself is not the point of this training. The point is: How do you make full use of the cloud? How do you build a service (customer value)? The cloud is a powerful weapon for that.

Today's (IaaS) schedule: a lecture of about 1-1.5 h on the cloud and IaaS (note: a lot of material is covered quickly, so look up anything you are curious about separately), then hands-on work for all the remaining time: implementing IaaS cloud design patterns and withstanding simple chaos engineering.

Lecture outline: general cloud knowledge; IaaS; learning through the design of an Instagram-like app; chaos engineering.

What is the cloud in the first place? NIST's definition of cloud computing: https://www.ipa.go.jp/files/000025366.pdf (a little dated these days, and a bit formal...)

The contrast is on-premises, where you own your servers in-house. With the cloud you don't own them; you acquire resources on demand when you need them. Cost: on-premises means investing based on forecasts of the future; the cloud means paying only for what you use (which can actually be more expensive if you use a lot).

Cloud providers: GCP / Google; AWS / Amazon Web Services; Azure / Microsoft; ECL 2.0 / NTT Com.

Cloud categories (1): IaaS (Infrastructure as a Service) is the most basic. It is important to understand it as the foundation that supports the others, and some functions are well suited to it. It rents out VMs, networks, and storage. PaaS (Platform as a Service) manages everything except the app and middleware; Heroku and Google App Engine are well known.

Cloud categories (2): CaaS (Container as a Service, covered tomorrow) provides a platform for running containers; the mainstream is managed Kubernetes, offered by each vendor as GKE / EKS / AKS. FaaS (Function as a Service, covered the day after tomorrow) provides an execution environment for functions, e.g. AWS Lambda and Google Cloud Functions. SaaS (Software as a Service): GitHub, for example.

Comparing the categories by layer.

Figure: a stack of layers (data center, physical server, virtualization, OS, container, runtime, app, function, data, network, storage) compared across on-prem, hosting, and IaaS. On-prem: you do everything, even build the data center (our company does in fact build many data centers). Hosting: you borrow servers inside a data center. IaaS: the user handles the OS and above.
Layer comparison, continued (same stack of layers): with CaaS, the user handles containers and above; with PaaS, you build only the app; with FaaS, you build only the functions; with SaaS, you use only the features you want. Each has strengths and weaknesses: moving from on-prem toward SaaS, freedom goes from high to low, and so does the effort required.

Reference: for deciding which to use, the CNCF white paper is recommended: https://github.com/cncf/wg-serverless
What IaaS is good at: migrating existing systems; requirements tied to a specific OS or kernel; workloads that need GPUs; protocols other than HTTP/S.

With IaaS, you click through the GUI or call an API, and a VM starts with network and storage attached. Because you can design the entire network, freedom is very high; on the other hand, it is also easy to produce a poor design (one that fails to make full use of the cloud).

So what should you do? Use something that is already well known (or similar concepts): Cloud Design Patterns (CDP). Note: this is the AWS version, but it applies almost unchanged to other clouds: http://aws.clouddesignpattern.org/index.php/%E3%83%A1%E3%82%A4%E3%83%B3%E3%83%9A%E3%83%BC%E3%82%B8. It was popular around 2013-2015 (many systems built this way are probably still running).

Imagine an Instagram-like web app: first, fetch the HTML/JavaScript/CSS; user registration and login authentication; posting, editing, and viewing images and comments, all of which naturally need to be stored; push notifications to the user's app. We want to build this. How?

If you were doing it in a university lab (one VM running App + DB): set up a single server (VM); a web server (nginx); logic in Rails, Django, or similar; data stored in MySQL or PostgreSQL; recover when it goes down, driven by complaints; take an occasional RDB backup, since losing everything would be bad.

Key concept when doing this professionally: avoid SPOFs. A SPOF (Single Point Of Failure) is a single component whose failure takes the whole system down. Example solutions: redundancy, and automatic recovery when something goes down. Note: not every SPOF has to be eliminated; some are accepted based on cost-effectiveness.

IaaS x web app x CDP. The initial configuration is a VM running App + RDB. First, make the overwhelmingly important DB redundant: App, RDB (Primary), RDB (Secondary). If the Primary goes down, promote the Secondary to Primary and switch the connection target. This is failover. (The Master/Slave terminology is used less these days because "Slave" is not a great word.) The details vary by type of DB and product.

Next, make the App redundant as well. Reference: scale out / scale in, i.e. adding or removing App instances.

How do you distribute requests across the Apps? There are two representative approaches, and the result looks like this: two Apps, RDB (Primary) and RDB (Secondary), load balancers (LB), and DNS round robin. This is the most basic form when using IaaS.

Going further with managed services: App and App behind DNS round robin*, a cloud-provided LB, and a cloud-provided DB. *Whether round robin is used depends on the cloud; GCP handles it with a single IP address using IP anycast. References: Cloud Load Balancing (GCP managed LB); Cloud SQL (GCP managed RDB).

Pitfalls when making things redundant:
Consider login: (1) a user logs in to App (Rails) A; (2) the session is cached on that server; (3) the next GET is routed to App (Rails) B; (4) B has no cached session, so the request fails.

Two typical countermeasures: (1) Sticky sessions: use the load balancer or similar to always connect the user to the same app server that issued the session. (2) Move session state out of the app: store sessions in a KVS or similar. If you want to take advantage of the cloud, choose this one, because it makes scaling in and out easier.

Example of moving the session out: (1) login; (2) the session is written out to a KVS or similar; (3) GET; (4) the other app server fetches the session from there. See CDP: State Sharing pattern: http://aws.clouddesignpattern.org/index.php/CDP:State_Sharing%E3%83%91%E3%82%BF%E3%83%BC%E3%83%B3

Key concept: the crux of scaling out is how to move state out of the servers and keep it contained. (This concept remains important in the container era.)

Where should a photo posted by a user be stored? (1) App, (2) RDB, (3) KVS, (4) Other. Option (1), the App, is an example of state that has not been moved out or contained; it is NG because, depending on which server handles the request, the photo may not exist. Option (4) is common, usually object storage; for example, storing photos in the object storage of Cloudn, our company's cloud.

The hassle of redundancy. There are many things to make redundant: RDB (MySQL/Postgres/Oracle/...), KVS (Redis/...), LB. Doing all of it yourself is very hard: Will failover really work? What happens to connections? These parts don't lead directly to customer value; for Instagram, they are not the essence. And it is unclear whether the value (hypothesis) will resonate with customers in the first place.

That is why each cloud provider offers managed services: RDB: AWS RDS, GCP CloudSQL; KVS: AWS ElastiCache, GCP Cloud DataStore; LB: AWS ALB/NLB/CLB, GCP LB (various other SQL-capable DBs are omitted). They automatically handle security patch updates, redundancy, and backups. What matters is concentrating your resources on the core value of the service or product (skip what you can skip).

How far should you go with redundancy? Units of redundancy: HDD/SSD (RAID), server, rack, data center / Availability Zone, region, cloud. In the end, decide based on the characteristics of the service or product.
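The login pitfall from the slides above can be reproduced in a few lines. This is a minimal Python sketch, not code from the workshop: two hypothetical app servers stand in for the Rails instances, a plain dict stands in for an external KVS such as Redis, and the load balancer is simulated by sending the login to one server and the next request to the other.

```python
import uuid

class AppServer:
    """One app instance; sessions live in local memory unless a shared store is passed in."""

    def __init__(self, name, session_store=None):
        self.name = name
        self.sessions = session_store if session_store is not None else {}

    def login(self, user):
        sid = str(uuid.uuid4())
        self.sessions[sid] = {"user": user}   # write the session (local or shared store)
        return sid

    def get(self, sid):
        session = self.sessions.get(sid)
        if session is None:
            return "401 not logged in"
        return f"200 hello {session['user']} (served by {self.name})"

def simulate(shared_store):
    kvs = {} if shared_store else None        # the dict plays the role of an external KVS
    app_a = AppServer("app-a", kvs)
    app_b = AppServer("app-b", kvs)
    sid = app_a.login("alice")                # (1) login is handled by app-a
    return app_b.get(sid)                     # (3) the next GET is routed to app-b

print(simulate(shared_store=False))   # session exists only in app-a's memory
print(simulate(shared_store=True))    # both instances read the same store
```

With local sessions the second request fails, which is the situation in steps (3) and (4) of the slides; with the shared store either instance can serve the user, so instances can be added or removed freely.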
Scale out/in or scale up/down? Recap: scaling out/in adds or removes App instances. Scaling up means adding CPUs or memory; scaling down means removing them. Note: today the mainstream approach is to make good use of scaling out (scaling up/down is still used; choose according to the situation).

12 Factor Apps: https://12factor.net/ja/

Reference, the twelve factors: I. One codebase tracked in version control, many deploys. II. Explicitly declare and isolate dependencies. III. Store config in environment variables. IV. Treat backing services as attached resources. V. Strictly separate build, release, and run stages. VI. Execute the app as one or more stateless processes. VII. Export services via port binding. VIII. Scale out via the process model. IX. Maximize robustness with fast startup and graceful shutdown. X. Keep development, staging, and production as similar as possible. XI. Treat logs as event streams. XII. Run admin tasks as one-off processes.

These are a little hard to picture, so for AWS, for example: https://www.slideshare.net/AmazonWebServicesJapan/the-twelvefactor-appaws and https://codezine.jp/article/detail/10402

Going back a bit. Recap of the Instagram-like web app: fetch the HTML/JavaScript/CSS; user registration and login; posting, editing, and viewing images and comments, which must be stored; push notifications to the user's app. ⇒ Sending a large volume of notifications at once can overload processing so that it can no longer keep up...

Distribute the work by putting a queue in between: the App generates the tasks to send (Produce) and puts them on the Queue; delivery Apps take tasks from the Queue and deliver them (Consume).

Chaos engineering: https://www.oreilly.com/ideas/chaos-engineering

What is chaos engineering? "Chaos Engineering is the discipline of experimenting on a distributed system in order to build confidence in the system's capability to withstand turbulent conditions in production." In other words: in a distributed system, a discipline of experimentation and verification for gaining confidence that the system can withstand turbulent, production-like conditions.

What does it involve?
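(The best-known example) randomly killing production VMs; causing failures at the region or data center level; increasing latency for certain traffic for a period of time; making a function throw exceptions at random; skewing the clocks between systems; causing I/O errors in device drivers; maxing out a cluster's CPU.

Why do this? Because learning the weaknesses in the system that reduce customer value, and fixing them, increases the system's reliability. (There are tons of things you cannot understand until you actually cause failures...)

Prerequisites for applying chaos engineering: Can you answer a simple question? "Can your system recover from real-world events such as service failures or sudden spikes in network latency?" If the answer is no, there is work to do first. Can you monitor the state of your system, and have you defined its Steady State (normal state)?

Recap: in the hands-on we will only get a taste, experiencing a small part of chaos engineering with the best-known technique: randomly killing production VMs.

In the real world there is much more to think about. For example: What does operations actually involve? What if it goes down even though it is redundant? An API you depend on becomes unstable... A vulnerability is found in a library you use? Come to think of it, what does monitoring actually involve? ⇒ There are endless topics, so learn them through books, online materials, and hands-on work.

(Reference) Some cloud learning resources and books I personally recommend: 入門 監視 (Japanese edition of Practical Monitoring): https://www.oreilly.co.jp/books/9784873118642/ ; AWS Black Belt series: https://www.slideshare.net/AmazonWebServicesJapan ; Google Cloud OnAir: https://cloudplatformonline.com/onair-japan.html

Summary of today's talk: What is the cloud? What is IaaS? How to build an Instagram-like app: use CDP and use managed services. Chaos engineering. That's all! (Questions welcome!)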
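The queue slide in the deck (the App produces tasks, delivery Apps consume them) can be sketched with Python's standard `queue` and `threading` modules. This is an illustrative in-process stand-in, not the workshop's implementation: it assumes a bounded in-memory queue in place of a managed message queue and a list append in place of actually sending push notifications; `max_pending` and `num_workers` are hypothetical tuning knobs.

```python
import queue
import threading

def deliver_all(notifications, num_workers=3, max_pending=10):
    """Producer/consumer sketch: enqueue notification tasks and let workers drain them."""
    tasks = queue.Queue(maxsize=max_pending)   # bounded: the producer waits when it is full
    delivered = []
    lock = threading.Lock()

    def worker():
        while True:
            item = tasks.get()                 # Consume: take the next task from the queue
            if item is None:                   # sentinel meaning "no more work"
                break
            with lock:
                delivered.append(item)         # stand-in for sending a push notification

    threads = [threading.Thread(target=worker) for _ in range(num_workers)]
    for t in threads:
        t.start()
    for n in notifications:                    # Produce: one task per push notification
        tasks.put(n)
    for _ in threads:                          # one sentinel per worker so every thread exits
        tasks.put(None)
    for t in threads:
        t.join()
    return delivered

sent = deliver_all([f"push-{i}" for i in range(100)], num_workers=4)
print(len(sent))
```

Because the queue is bounded, the producer blocks when `max_pending` tasks are waiting instead of overwhelming the delivery workers. In a real deployment the queue would be a separate managed service, so producers and consumers can be scaled independently.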

Achieve Greater Agility: The Critical Need to Migrate Oracle to GCP PostgreSQL

Migrating from Oracle to GCP PostgreSQL is increasingly crucial for future-proofing your database infrastructure. As organizations strive for greater agility and cost efficiency, GCP PostgreSQL offers a compelling open-source alternative to proprietary Oracle databases. This migration not only addresses the high licensing costs associated with Oracle but also provides greater scalability and flexibility. GCP PostgreSQL integrates seamlessly with Google Cloud's suite of services, including advanced analytics and machine learning tools, enabling businesses to derive powerful data insights and drive innovation. The move to PostgreSQL also supports modern cloud-native applications, ensuring compatibility with evolving technologies and development practices. Additionally, GCP PostgreSQL offers strong performance, reliability, and security features, which are critical in an era of growing data volumes and stringent compliance requirements. Embracing this migration positions organizations to take advantage of cutting-edge cloud capabilities, streamline operations, and reduce the total cost of ownership. As data management and analytics remain central to strategic decision-making, migrating to GCP PostgreSQL equips businesses with a robust, scalable platform to adapt to future demands and maintain a competitive edge.

Future Usage and Considerations

Scalability and Performance

Vertical and Horizontal Scaling: GCP PostgreSQL supports both vertical scaling (increasing instance size) and horizontal scaling (adding more instances, such as read replicas).

Performance Tuning: Continuously monitor and tune queries, indexing strategies, and resource allocation.
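One common way to use horizontally added instances is to send writes to the primary and spread reads across read replicas. The minimal Python sketch below assumes that setup; the hostnames are hypothetical, and classifying statements by their first keyword is a deliberate simplification rather than how any particular driver or proxy does it.

```python
import itertools

class ReplicaRouter:
    """Route writes to the primary and plain reads round-robin across read replicas."""

    def __init__(self, primary, replicas):
        self.primary = primary
        # itertools.cycle gives an endless round-robin over the replica list
        self._replicas = itertools.cycle(replicas) if replicas else None

    def route(self, sql):
        words = sql.split()
        first = words[0].upper() if words else ""
        is_plain_read = first == "SELECT" and "FOR UPDATE" not in sql.upper()
        if is_plain_read and self._replicas is not None:
            return next(self._replicas)
        # Writes, locking reads, and anything unrecognized go to the primary.
        return self.primary

router = ReplicaRouter("pg-primary:5432", ["pg-replica-1:5432", "pg-replica-2:5432"])
for stmt in ["SELECT * FROM orders", "SELECT 1",
             "UPDATE orders SET status = 'paid'", "SELECT * FROM orders FOR UPDATE"]:
    print(router.route(stmt), "<-", stmt)
```

Read replicas are updated asynchronously and can lag behind the primary, so reads that must see a just-committed write should also be sent to the primary.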

Integration with GCP Services

BigQuery: Integrate with BigQuery for advanced analytics and data warehousing solutions.

AI and Machine Learning: Use GCP's AI and machine learning services to build predictive models and gain insights from your data.

Security and Compliance

IAM: Use Identity and Access Management for fine-grained access control.

Encryption: Ensure data at rest and in transit is encrypted using GCP's encryption services.

Compliance: Meet industry-specific compliance requirements using GCP's compliance frameworks and tools.

Cost Management

Cost Monitoring: Utilize GCP's cost management tools to monitor and optimize spending.

Auto-scaling: Implement auto-scaling to ensure resources are used efficiently, reducing costs.

High Availability and Disaster Recovery

Backup and Restore: Implement automated backups and regular restore testing.

Disaster Recovery: Plan and test a disaster recovery strategy to ensure business continuity.

Redefine Your Database Environment: Key Reasons to Migrate Oracle to GCP PostgreSQL

Companies need to migrate from Oracle to GCP PostgreSQL to address the high licensing costs and scalability limitations of Oracle databases. GCP PostgreSQL offers a cost-effective, open-source alternative with seamless integration into Google Cloud's ecosystem, providing advanced analytics and machine learning capabilities. This migration enables businesses to reduce operational expenses, improve scalability, and use modern cloud services for greater innovation. Additionally, PostgreSQL's flexibility and strong community support ensure long-term sustainability and adaptability, making it a strategic choice for companies looking to future-proof their database infrastructure while optimizing performance and reducing costs.

Transformative Migration: Essential Reasons to Migrate Oracle to GCP PostgreSQL

Migrating from Oracle to GCP PostgreSQL is a crucial step for businesses looking to optimize their database infrastructure. GCP PostgreSQL integrates seamlessly with Google Cloud's ecosystem, enabling organizations to harness advanced analytics, machine learning, and other cutting-edge technologies.

As companies move to GCP PostgreSQL, they gain access to powerful scalability tools, including vertical and horizontal scaling, which ensure that their database can grow with their needs. Integration with GCP services such as BigQuery and AI tools improves data analysis capabilities and drives innovation. Moreover, GCP PostgreSQL's strong security features, including IAM and encryption, together with support for industry compliance standards, safeguard data integrity and privacy.

By migrating to GCP PostgreSQL, businesses not only reduce operational costs but also position themselves to leverage modern cloud capabilities effectively. This migration supports better performance, high availability, and efficient cost management through auto-scaling and monitoring tools. Embracing this change ensures that organizations remain competitive and adaptable in a rapidly evolving technological landscape.

Thanks For Reading

For More Information, Visit Our Website: https://newtglobal.com/

Everything you should know about Anthos- Google’s multi-cloud platform

What is Anthos?

Google recently announced the general availability of Anthos, an enterprise hybrid and multi-cloud platform. It is designed to let users run applications not only on-premises and on Google Cloud but also on other providers such as Amazon Web Services and Microsoft Azure. Anthos marks the tech giant's official entry into the battle for the data center. Anthos is different from other public cloud services: it is not a single product but an umbrella brand for various services aligned with the themes of application modernization, cloud migration, hybrid cloud, and multi-cloud management.

Despite the extensive coverage at Google Cloud Next and, of course, the general availability, the Anthos announcement was confusing. The documentation is sparse, and the service is not fully integrated with the self-service console. Except for the hybrid connectivity and multi-cloud application deployment, not much is known about this new technology from Google.

Building Blocks of Anthos

1. Google Kubernetes Engine –

Kubernetes Engine is the central command-and-control center of Anthos. Customers use the GKE control plane to manage distributed infrastructure running in Google's cloud, in on-premises data centers, and on other cloud platforms.

2. GKE On-prem –

Google is delivering a Kubernetes-based software platform that is consistent with GKE. Customers can deploy it on any compatible hardware, and Google will manage the platform, treating it as a logical extension of GKE, from upgrading Kubernetes versions to applying the latest updates. Note that GKE On-prem runs as a virtual appliance on top of VMware vSphere 6.5. Support for other hypervisors, such as Hyper-V and KVM, is in progress.

3. Istio –

This technology enables federated network management across the platform. Istio acts as the service mesh that connects the various components of applications deployed across the data center, GCP, and other clouds. It integrates with software-defined networks such as VMware NSX, Cisco ACI, and, of course, Google's own Andromeda. Customers with existing investments in network appliances can also integrate Istio with their load balancers and firewalls.

4. Velostrata –

Google acquired this cloud migration technology in 2018 and extended it for Kubernetes. Velostrata delivers two significant capabilities: streaming on-prem physical or virtual machines to create replicas in GCE instances, and converting existing VMs into Kubernetes applications (Pods).

This is the industry’s first physical-to-Kubernetes (P2K) migration tool built by Google. This capability is available as Anthos Migrate, which is still in beta.

5. Anthos Config Management –

Kubernetes is an extensible, policy-driven platform. Because Anthos customers have to deal with multiple Kubernetes deployments running across a variety of environments, Google attempts to simplify configuration management through Anthos. Anthos Config Management can maintain deployment artifacts, configuration settings, network policies, secrets, and passwords, and apply that configuration to one or more clusters. It also serves as a version-controlled, secure, central repository for everything related to policy and configuration.

6. Stackdriver –

Stackdriver brings observability to Anthos infrastructure and applications. Customers can track the state of clusters running within Anthos along with the health of the applications deployed in each managed cluster. It acts as the centralized monitoring, logging, tracing, and observability platform.

7. GCP Cloud Interconnect –

Any hybrid cloud platform is incomplete without high-speed connectivity between the enterprise data center and the cloud infrastructure. While connecting the data center with the cloud, cloud interconnect can deliver speeds up to 100Gbps. Customers can also use Telco networks offered by Equinix, NTT Communications, Softbank and others for extending their data center to GCP.

8. GCP Marketplace –

Google has created a catalog of ISV and open source applications that can run on Kubernetes. Customers can deploy applications such as the Cassandra database and GitLab in Anthos with a one-click installer. Eventually, Google may offer a private catalog of apps maintained by internal IT.
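The core idea behind Anthos Config Management (item 5 above), keeping many clusters in line with one version-controlled source of truth, can be illustrated as a diff between desired and actual state. The Python sketch below is a toy model only: it does not use any Anthos API, and the cluster names and configuration keys are hypothetical. It computes, per cluster, which settings are missing or have drifted and would need to be re-applied.

```python
def plan_changes(desired, clusters):
    """Return, per cluster, the settings that must be (re)applied to match `desired`."""
    plan = {}
    for cluster_name, actual in clusters.items():
        # A setting needs applying if it is missing or its value has drifted.
        plan[cluster_name] = {
            key: value for key, value in desired.items() if actual.get(key) != value
        }
    return plan

# Desired state, as it might be stored in a central repository (hypothetical keys).
desired = {"network-policy": "deny-all", "cpu-quota": "20"}
clusters = {
    "gke-us-central": {"network-policy": "deny-all", "cpu-quota": "20"},
    "onprem-vsphere": {"network-policy": "allow-all"},
}
for name, changes in plan_changes(desired, clusters).items():
    print(name, changes or "in sync")
```

A real reconciler would also have to decide what to do with settings that exist on a cluster but not in the repository; this sketch ignores them.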

Greenfield vs. Brownfield Applications

The central theme of Anthos is application modernization. Google conceives a future where all enterprise applications will run on Kubernetes.

To that end, it invested in technologies such as Velostrata that perform in-place conversion of VMs to containers. Google built a plug-in for VMware vRealize to convert existing VMs into Kubernetes Pods. Even stateful workloads such as PostgreSQL and MySQL can be migrated and deployed as StatefulSets in Kubernetes. In typical Google style, the company is downplaying the migration of on-prem VMs to cloud VMs, even though Velostrata's original offering was all about VMs.

Customers using traditional business applications such as SAP, Oracle Financials, and PeopleSoft can continue to run them in on-prem VMs or choose to migrate them to Compute Engine VMs. Anthos can provide interoperability between VMs and containerized apps running in Kubernetes. With Anthos, Google wants all your modern, microservices-based (greenfield) applications running in Kubernetes, while existing VMs (brownfield) are migrated to containers. Applications running on non-x86 architectures and legacy apps will continue to run on physical or virtual machines.

Google’s Kubernetes Landgrab

When Docker started to gain traction among developers, Google realized it was the right time to release Kubernetes to the world. It also moved fast to offer the industry's first managed Kubernetes service in the public cloud. Although there are now various managed Kubernetes offerings, GKE is still the best platform to run microservices.

With a detailed understanding of Kubernetes and also the substantial investments it made, Google wants to assert its claim in the brave new world of containers and microservices. The company wants enterprises to leapfrog from VMs to Kubernetes to run their modern applications.

Read more at- https://solaceinfotech.com/blog/everything-you-should-know-about-anthos-googles-multi-cloud-platform/

Java Full Stack Development Company

Looking for a trusted Java Full Stack Development Company? Associative in Pune, India delivers expert solutions with Java, Spring Boot, Oracle, React, and more.

In the ever-evolving digital world, businesses need robust, scalable, and secure applications that can adapt to rapid market changes. At Associative, a leading Java Full Stack Development Company in Pune, India, we specialize in delivering end-to-end software solutions powered by Java technologies and modern front-end frameworks.

Why Choose Java Full Stack Development?

Java remains a cornerstone of enterprise-level development due to its reliability, performance, and vast ecosystem. Combining Java with cutting-edge front-end technologies like React.js or Angular allows businesses to build dynamic, responsive, and secure web and mobile applications. Full stack development ensures smooth integration between backend and frontend, providing a seamless user experience.

Our Full Stack Java Expertise Includes:

Backend Development using Java, Spring Boot, Hibernate, and RESTful APIs

Frontend Development with React.js, HTML5, CSS3, and JavaScript

Database Integration with Oracle, MySQL, and PostgreSQL

DevOps & Deployment on cloud platforms like AWS and GCP

Agile Project Delivery with continuous integration and scalable architecture

What Sets Associative Apart?

As a full-service software company, Associative has built a reputation for excellence across multiple domains:

Proven Expertise in Java, React.js, Node.js, and modern frameworks

Mobile-First Development for Android and iOS

Custom Web Solutions including E-commerce, CMS, and ERP systems

Experience with Popular Platforms such as Magento, WordPress, Drupal, and Shopify

End-to-End Digital Services from development to SEO and digital marketing

Advanced Technologies like Web3, Blockchain, and Cloud Computing

Industries We Serve:

E-commerce

Education & LMS

Healthcare

FinTech

Travel & Hospitality

Gaming & Entertainment

Your Trusted Java Full Stack Partner in India

At Associative, we believe in delivering quality-driven solutions tailored to your unique business needs. Whether you need a custom enterprise application or a scalable web platform, our Java full stack development team is here to help you succeed.

AWS/Azure/GCP Service Comparison (2019.05)

from https://qiita.com/hayao_k/items/906ac1fba9e239e08ae8?utm_campaign=popular_items&utm_medium=feed&utm_source=popular_items

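Introduction

This list maps each service in this AWS service list to the corresponding service on each cloud.

Services that Azure/GCP offer but AWS does not may be missing.

Some entries may be subjective, and in some cases the services do not match exactly; please bear with me.

Offerings provided as Microsoft/Google products, such as Office 365 and G Suite, are shown in parentheses ( ).

Physical devices and tools such as SDKs are not listed.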

Analytics

Category | AWS | Azure | GCP
Data lake queries | Amazon Athena | Azure Data Lake Analytics | Google BigQuery
Search | Amazon CloudSearch | Azure Search | -
Hadoop cluster deployment | Amazon EMR | HDInsight / Azure Databricks | Cloud Dataproc
Elasticsearch cluster deployment | Amazon Elasticsearch Service | - | -
Stream processing | Amazon Kinesis | Azure Event Hubs | Cloud Dataflow
Kafka cluster deployment | Amazon Managed Streaming for Kafka | - | -
DWH | Amazon Redshift | Azure SQL Data Warehouse | Google BigQuery
BI service | Amazon QuickSight | (Power BI) | (Google Data Studio)
Workflow orchestration | AWS Data Pipeline | Azure Data Factory | Cloud Composer
ETL | AWS Glue | Azure Data Factory | Cloud Data Fusion
Data lake construction | AWS Lake Formation | - | -
Data catalog | AWS Glue | Azure Data Catalog | Cloud Data Catalog

Application Integration

Category | AWS | Azure | GCP
Building distributed applications | AWS Step Functions | Azure Logic Apps | -
Message queue | Amazon Simple Queue Service | Azure Queue Storage | -
Pub/Sub | Amazon Simple Notification Service | Azure Service Bus | Cloud Pub/Sub
ActiveMQ deployment | Amazon MQ | |
GraphQL | AWS AppSync | - | -
Event delivery | Amazon CloudWatch Events | Event Grid | -

Blockchain

Category | AWS | Azure | GCP
Network creation and management | Amazon Managed Blockchain | Azure Blockchain Service | -
Ledger database | Amazon Quantum Ledger Database | - | -
Application building | - | Azure Blockchain Workbench | -

Business Applications

Category | AWS | Azure | GCP
Alexa | Alexa for Business | - | -
Online meetings | Amazon Chime | (Office 365) | (G Suite)
Email | Amazon WorkMail | (Office 365) | (G Suite)

Compute

Category | AWS | Azure | GCP
Virtual machines | Amazon EC2 | Azure Virtual Machines | Compute Engine
Autoscaling | Amazon EC2 Auto Scaling | Virtual Machine Scale Sets | Autoscaling
Container orchestrator | Amazon Elastic Container Service | Service Fabric | -
Kubernetes | Amazon Elastic Container Service for Kubernetes | Azure Kubernetes Service | Google Kubernetes Engine
Container registry | Amazon Elastic Container Registry | Azure Container Registry | Container Registry
VPS | Amazon Lightsail | - | -
Batch computing | AWS Batch | Azure Batch | -
Web application runtime | AWS Elastic Beanstalk | Azure App Service | App Engine
Function as a Service | AWS Lambda | Azure Functions | Cloud Functions
Serverless application repository | AWS Serverless Application Repository | - | -
VMware environment deployment | VMware Cloud on AWS | Azure VMware Solutions | -
On-premises deployment | AWS Outposts | Azure Stack | Cloud Services Platform
Hybrid cloud | - | - | Anthos
Running stateless HTTP containers | - | - | Cloud Run

Cost Management

Use case | AWS | Azure | GCP
Visualizing usage | AWS Cost Explorer | Azure Cost Management | -
Budget management | AWS Budgets | Azure Cost Management | -
Reserved Instance management | Reserved Instance Reporting | Azure Cost Management | -
Usage reports | AWS Cost & Usage Report | Azure Cost Management | -

Customer Engagement

Use case | AWS | Azure | GCP
Contact center | Amazon Connect | - | Contact Center AI
Personalized engagement | Amazon Pinpoint | Notification Hubs | -
Sending and receiving email | Amazon Simple Email Service | - | -

Database

Use case | AWS | Azure | GCP
MySQL | Amazon RDS for MySQL / Amazon Aurora | Azure Database for MySQL | Cloud SQL for MySQL
PostgreSQL | Amazon RDS for PostgreSQL / Amazon Aurora | Azure Database for PostgreSQL | Cloud SQL for PostgreSQL
Oracle | Amazon RDS for Oracle | - | -
SQL Server | Amazon RDS for SQL Server | SQL Database | Cloud SQL for SQL Server
MariaDB | Amazon RDS for MariaDB | Azure Database for MariaDB | -
NoSQL | Amazon DynamoDB | Azure Cosmos DB | Cloud Datastore / Cloud Bigtable
In-memory cache | Amazon ElastiCache | Azure Cache for Redis | Cloud Memorystore
Graph database | Amazon Neptune | Azure Cosmos DB (API for Gremlin) | -
Time-series database | Amazon Timestream | - | -
MongoDB | Amazon DocumentDB (with MongoDB compatibility) | Azure Cosmos DB (API for MongoDB) | -
Globally distributed relational database | - | - | Cloud Spanner
Real-time database | - | - | Cloud Firestore
Database deployable at the edge | - | Azure SQL Database Edge | -

Developer Tools

Use case | AWS | Azure | GCP
Managing development projects | AWS CodeStar | Azure DevOps | -
Git repositories | AWS CodeCommit | Azure Repos | Cloud Source Repositories
Continuous build and test | AWS CodeBuild | Azure Pipelines | Cloud Build
Continuous deployment | AWS CodeDeploy | Azure Pipelines | Cloud Build
Pipelines | AWS CodePipeline | Azure Pipelines | Cloud Build
Work tracking | - | Azure Boards | -
Package registry | - | Azure Artifacts | -
Test plan management | - | Azure Test Plans | -
IDE | AWS Cloud9 | (Visual Studio Online) | -
Distributed tracing | AWS X-Ray | Azure Application Insights | Stackdriver Trace

End User Computing

Use case | AWS | Azure | GCP
Desktops | Amazon WorkSpaces | Windows Virtual Desktop | -
Application streaming | Amazon AppStream 2.0 | - | -
Storage | Amazon WorkDocs | (Office 365) | (G Suite)
Access to internal applications | Amazon WorkLink | Azure AD Application Proxy | -

Internet of Things

Use case | AWS | Azure | GCP
Connecting devices to the cloud | AWS IoT Core | Azure IoT Hub | Cloud IoT Core
Deploying to the edge | AWS Greengrass | Azure IoT Edge | Cloud IoT Edge
Running arbitrary functions from a device | AWS IoT 1-Click | - | -
Device analytics | AWS IoT Analytics | Azure Stream Analytics / Azure Time Series Insights | -
Device security management | AWS IoT Device Defender | - | -
Device management | AWS IoT Device Management | Azure IoT Hub | Cloud IoT Core
Detecting events from devices | AWS IoT Events | - | -
Collecting data from industrial equipment | AWS IoT SiteWise | - | -
Building IoT applications | AWS IoT Things Graph | Azure IoT Central | -
Location services | - | Azure Maps | Google Maps Platform
Modeling the physical world | - | Azure Digital Twins

Machine Learning

Use case | AWS | Azure | GCP
Building machine learning models | Amazon SageMaker | Azure Machine Learning Service | Cloud ML Engine
Natural language processing | Amazon Comprehend | Language Understanding | Cloud Natural Language
Building chatbots | Amazon Lex | Azure Bot Service | (Dialogflow)
Text-to-Speech | Amazon Polly | Speech Services | Cloud Text-to-Speech
Image recognition | Amazon Rekognition | Computer Vision | Cloud Vision
Translation | Amazon Translate | Translator Text | Cloud Translation
Speech-to-Text | Amazon Transcribe | Speech Services | Cloud Speech-to-Text
Recommendations | Amazon Personalize | - | Recommendations AI
Time-series forecasting | Amazon Forecast | - | -
Document text extraction | Amazon Textract | - | -
Inference acceleration | Amazon Elastic Inference | - | -
Building training datasets | Amazon SageMaker Ground Truth | - | -
Custom vision models | - | Custom Vision | Cloud AutoML Vision
Custom speech models | - | Custom Speech | -
Custom language models | Amazon Comprehend | - | Cloud AutoML Natural Language
Custom translation models | - | - | Cloud AutoML Translation

Management & Governance

Use case | AWS | Azure | GCP
Monitoring | Amazon CloudWatch | Azure Monitor | Google Stackdriver
Provisioning and managing resources | AWS CloudFormation | Azure Resource Manager | Cloud Deployment Manager
Activity tracking | AWS CloudTrail | Azure Activity Log
Recording and auditing resource configuration changes | AWS Config | - | -
Deploying configuration management services | AWS OpsWorks (Chef/Puppet) | - | -
Managing IT service catalogs | AWS Service Catalog | - | Private Catalog
Infrastructure visibility and control | AWS Systems Manager | - | -
Performance and security optimization | AWS Trusted Advisor | Azure Advisor | -
Health status of the services you use | AWS Personal Health Dashboard | Azure Resource Health | -
Setting up accounts that comply with standards | AWS Control Tower | Azure Policy | -
License management | AWS License Manager | - | -
Reviewing and improving workloads | AWS Well-Architected Tool | - | -
Managing multiple accounts | AWS Organizations | Subscription + RBAC | -
Disaster recovery | - | Azure Site Recovery | -
Browser-based shell | AWS Systems Manager Session Manager | Cloud Shell | Cloud Shell

Media Services

Use case | AWS | Azure | GCP
Media transcoding | Amazon Elastic Transcoder / AWS Elemental MediaConvert | Azure Media Services - Encoding | (Anvato)
Live video processing | AWS Elemental MediaLive | Azure Media Services - Live and On-demand Streaming | (Anvato)
Video packaging and delivery | AWS Elemental MediaPackage | Azure Media Services | (Anvato)
Storage for video files | AWS Elemental MediaStore | - | -
Targeted ad insertion | AWS Elemental MediaTailor | - | -

Migration & Transfer

Use case | AWS | Azure | GCP
Migration tracking | AWS Migration Hub | - | -
Migration assessment | AWS Application Discovery Service | Azure Migrate | -
Database migration | AWS Database Migration Service | Azure Database Migration Service | -
Data transfer from on-premises | AWS DataSync | - | -
Server migration | AWS Server Migration Service | Azure Site Recovery | -
Bulk data migration | Snow Family | Azure Data Box | Transfer Appliance
SFTP | AWS Transfer for SFTP | - | -
Cloud-to-cloud data transfer | - | - | Cloud Storage Transfer Service

Mobile

Use case | AWS | Azure | GCP
Building and deploying mobile/web applications | AWS Amplify | Mobile Apps | (Firebase)
Application testing | AWS Device Farm | (Xamarin Test Cloud) | (Firebase Test Lab)

Networking & Content Delivery

Use case | AWS | Azure | GCP
Virtual network | Amazon Virtual Private Cloud | Azure Virtual Network | Virtual Private Cloud
API management | Amazon API Gateway | API Management | Cloud Endpoints / Apigee
CDN | Amazon CloudFront | Azure CDN | Cloud CDN
DNS | Amazon Route 53 | Azure DNS | Cloud DNS
Private connectivity | Amazon VPC PrivateLink | Virtual Network Service Endpoints | Private Access Options for Services
Service mesh | AWS App Mesh | Azure Service Fabric Mesh | Traffic Director
Service discovery | AWS Cloud Map | - | -
Dedicated connection | AWS Direct Connect | ExpressRoute | Cloud Interconnect
Global load balancer | AWS Global Accelerator | Azure Traffic Manager | Cloud Load Balancing
Hub-and-spoke network connectivity | AWS Transit Gateway | - | -
Network performance monitoring | - | Network Watcher | -

Security, Identity & Compliance

Use case | AWS | Azure | GCP
Identity management | AWS Identity and Access Management | Azure Active Directory | Cloud IAM
Hierarchical data store | Amazon Cloud Directory | - | -
Application identity management | Amazon Cognito | Azure Mobile Apps | -
Threat detection | Amazon GuardDuty | Azure Security Center | Cloud Security Command Center
Server security assessment | Amazon Inspector | Azure Security Center | Cloud Security Command Center
Discovering and protecting sensitive data | Amazon Macie | Azure Information Protection | -
Access to compliance reports | AWS Artifact | (Service Trust Portal) | -
SSL/TLS certificate management | AWS Certificate Manager | App Service Certificates | Google-managed SSL certificates
Hardware security module | AWS CloudHSM | Azure Dedicated HSM | Cloud HSM
Active Directory | AWS Directory Service | Azure Active Directory | Managed Service for Microsoft Active Directory
Centralized firewall rule management | AWS Firewall Manager | - | -
Key creation and management | AWS Key Management Service | Azure Key Vault | Cloud Key Management Service
Secrets management | AWS Secrets Manager | Azure Key Vault | -
Centralized security information management | AWS Security Hub | Azure Sentinel | -
DDoS protection | AWS Shield | Azure DDoS Protection | Cloud Armor
Single sign-on | AWS Single Sign-On | Azure Active Directory B2C | Cloud Identity
WAF | AWS WAF | Azure Application Gateway | Cloud Armor

Storage

Use case | AWS | Azure | GCP
Object storage | Amazon S3 | Azure Blob | Cloud Storage
Block storage | Amazon EBS | Disk Storage | Persistent Disk
File storage (NFS) | Amazon Elastic File System | Azure NetApp Files | Cloud Filestore
File storage (SMB) | Amazon FSx for Windows File Server | Azure Files | -
File system for HPC | Amazon FSx for Lustre | Azure FXT Edge Filer | -
Archive storage | Amazon S3 Glacier | Storage archive access tier | Cloud Storage Coldline
Centralized backup management | AWS Backup | Azure Backup | -
Hybrid storage | AWS Storage Gateway | Azure StorSimple | -

Other

Use case | AWS | Azure | GCP
Creating AR/VR content | Amazon Sumerian | - | -
Game server hosting | Amazon GameLift | - | -
Game engine | Amazon Lumberyard | - | -
Robotics | AWS RoboMaker | - | -
Satellite ground station | AWS Ground Station | - | -



Cloud DBA

Role: Cloud DBA
Location: Atlanta, GA
Type: Contract

Job Description:
Primary Skills: NoSQL, Oracle, and Cloud Spanner.

Job Requirements:
• Bachelor's degree (Computer Science preferred) with 5+ years' experience as a DBA managing databases (Cassandra, MongoDB, Amazon DynamoDB, Google Cloud Bigtable, Google Cloud Datastore, etc.) in a Linux environment.
• Experience in a technical database engineering role, such as software development, release management, deployment engineering, site operations, or technical operations.
• Production DBA experience with NoSQL databases.
• Actively monitor existing databases to identify performance issues, and give application teams guidance and oversight to remediate them.
• Excellent troubleshooting/problem-solving and communication skills.
• Scripting/programming experience (UNIX shell, Python, etc.) is highly desirable.

What will make you stand out:
• Experience with other Big Data technologies is a plus.
• Experience with Oracle or PostgreSQL database administration is a plus.
• Knowledge of Continuous Integration/Continuous Delivery.
• Linux system administration.

Roles and Responsibilities:
• Responsible for database deployments and for monitoring capacity and performance and solving issues.
• Experience with backup/recovery, sizing and space management, tuning, and diagnostics; proficient in administering multiple databases.
• Enable and integrate monitoring, auditing, and alerting systems for databases with the existing monitoring infrastructure.
• Work with development teams to evaluate new database technologies and provide SME guidance.
• Responsible for system performance and reliability.
• Occasional off-shift on-call availability to resolve production issues or assist in production deployments.
• Thorough knowledge of the UNIX operating system and shell scripting.
• Good experience with databases such as Cassandra, MongoDB, Amazon DynamoDB, Google Cloud Bigtable, and Google Cloud Datastore in a Linux environment.
• Perform independent security reviews of critical databases across the organization to ensure they are aligned with Equifax Global Security Policies, Access Control Standards, and industry-standard methodologies.
• Experience with cloud technologies such as AWS, GCP, and Azure is required.
• Ensure the databases are highly available, have sufficient capacity in place, and are fully resilient across multiple data centers and cloud architectures.
• Uphold high standards, learn and adopt new methodologies, and tackle new challenges in everyday work. We want our DBA to stay at the forefront of technology, constantly seeking to improve the performance and uptime of the database systems.
• Handle NoSQL databases in all SDLC environments, including installation, configuration, backup, recovery, replication, and upgrades.

Reference: Cloud DBA jobs from Latest listings added - cvwing http://cvwing.com/jobs/technology/cloud-dba_i13145


Introducing Google Cloud Datastream's New Stream Recovery Features

In the complex, constantly changing world of data replication, pipelines can break. Restarting replication with minimal impact on data integrity requires several manual steps, which can only begin once the cause and timing of the failure have been determined.

With Datastream's new stream recovery capability, you can immediately resume data replication after events such as a database failover or an extended network outage, with little to no data loss.

Consider a financial company that uses Datastream to replicate transaction data from its operational database to BigQuery for analytics. After a hardware failure on the primary database instance, a planned failover to a replica takes place. Because the original source is no longer available, Datastream's replication pipeline breaks. Stream recovery lets replication continue from the failover database instance, preventing the loss of transaction data.
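To make the recovery idea concrete, here is a toy Python model of position-based resumption. It is only a sketch under stated assumptions: it is not Datastream's API or internals, and `ChangeEvent`, `Source`, `Stream`, `read_from`, and `recover` are hypothetical names. It assumes the replica exposes the same change-log positions as the primary (as with GTID-style or SCN-based positions), so the stream can pick up exactly where its checkpoint left off instead of re-copying everything.

```python
from dataclasses import dataclass, field


@dataclass
class ChangeEvent:
    # Hypothetical change record: a log position (think SCN/LSN/GTID) plus a payload.
    position: int
    row: str


@dataclass
class Source:
    # Hypothetical source database exposing its change log.
    name: str
    log: list[ChangeEvent]
    available: bool = True

    def read_from(self, position: int) -> list[ChangeEvent]:
        """Return all change events after `position`; fail if the source is down."""
        if not self.available:
            raise ConnectionError(f"{self.name} is unavailable")
        return [e for e in self.log if e.position > position]


@dataclass
class Stream:
    # Rows written to the destination (standing in for a BigQuery table).
    destination: list[str] = field(default_factory=list)
    # Last position written to the destination.
    checkpoint: int = 0

    def replicate(self, source: Source) -> None:
        for event in source.read_from(self.checkpoint):
            self.destination.append(event.row)
            self.checkpoint = event.position  # advance only after the write

    def recover(self, failover: Source) -> None:
        # Resume from the saved checkpoint on the failover instance.
        # Assumes log positions are identical on primary and replica.
        self.replicate(failover)


# Scenario: three transactions replicate normally.
shared_log = [ChangeEvent(i, f"txn-{i}") for i in range(1, 4)]
primary = Source("primary", list(shared_log))
stream = Stream()
stream.replicate(primary)

# Two more transactions reach the replica, then the primary fails
# before the stream has read them.
later = [ChangeEvent(4, "txn-4"), ChangeEvent(5, "txn-5")]
primary.log.extend(later)
replica = Source("replica", shared_log + later)
primary.available = False

try:
    stream.replicate(primary)
except ConnectionError:
    stream.recover(replica)

print(stream.destination)  # ['txn-1', 'txn-2', 'txn-3', 'txn-4', 'txn-5']
print(stream.checkpoint)   # 5
```

The key design choice is that the checkpoint only advances after a row is written, so resuming from it neither skips nor duplicates events, provided the failover instance shares the primary's position numbering.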