#Reverse Proxy Servers

Text

Open Source Reverse Proxy Servers for Linux Servers

Hello friends, we will be focusing on the open source reverse proxy servers used on Linux-based servers. These powerful tools play an important role in improving your websites' performance, providing security, and optimizing traffic management. What are Open Source Reverse Proxy Servers? A reverse proxy is an intermediary server that sits between clients and one or more servers.…

0 notes

Text

Me when I find another promising roleplay ai platform

#my reverse proxy is not working cause of something going on with the owners and stuff#but someone in that server mentioned this other platform and when i checked it out it does seem promising#hopefully this is a good spot#saltydoesstuff#saltyrambles

0 notes

Photo

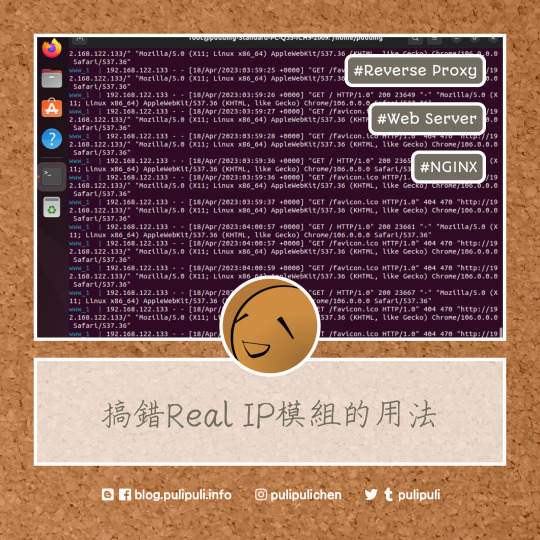

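Read the full article on the web ⇨ How to Get the User's IP? From Reverse Proxy Server, Web Server to Programming Language https://blog.pulipuli.info/2023/04/blog-post_18.html

It looks like a "truly transparent" reverse proxy server is not achievable for now.

----

# The "Real IP"

Anyone who adds a reverse proxy server to a network service usually runs into this question: "How do I get the user's real IP?"

If you use PHP, you would normally read the user's IP address from $_SERVER["REMOTE_ADDR"]. But if that server sits behind a reverse proxy server, $_SERVER["REMOTE_ADDR"] returns the reverse proxy's IP, not the user's real IP.

For this reason, most tutorials on setting up a reverse proxy server with NGINX suggest adding the following setting to NGINX on the reverse proxy server, which wraps the user's IP in X-Real-IP.

[Code...]

With that in place, PHP code on the backend server (also called the upstream server) can read the user's real IP (192.168.122.1) from $_SERVER["HTTP_X_REAL_IP"].

Now look back at this network architecture diagram. For the question of getting the user's IP, the overall architecture can be divided into four roles:

- User (Client): the real IP in this example is 192.168.122.1.
- Reverse proxy server (Reverse Proxy): built with NGINX. Its IP is 192.168.122.133.
- Web server (Web Server): the real site that serves the web content. It can be built with Apache or with NGINX. Its IP is 192.168.122.77.
- PHP: the programming language that generates the pages. Its main way of identifying the user's IP is $_SERVER["REMOTE_ADDR"]. But if the reverse proxy server sets X-Real-IP, $_SERVER["HTTP_X_REAL_IP"] can also be used to get the user's IP.

When we discuss "how to get the user's IP", we have to be clear about which layer we are talking about. Is it the backend programming language, PHP or ASP.NET? Is it the web server, Apache or NGINX? Or do we want to implement this in the reverse proxy server at the front?

Ideally, the reverse proxy server would pass the user's real IP to the backend web server and programming language, and also make the backend server believe the request came from the user directly. But the current conclusion is: it can't be done.

Below, let's go through how to do it one layer at a time, from the front end to the back end.

----

Continue reading ⇨ How to Get the User's IP? From Reverse Proxy Server, Web Server to Programming Language https://blog.pulipuli.info/2023/04/blog-post_18.html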
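As a rough illustration of the X-Real-IP idea in this post, here is a minimal Python sketch (not the article's PHP or NGINX code). It only believes the X-Real-IP header when the request really arrived from the reverse proxy, and otherwise falls back to the socket address. The function name `client_ip`, the `TRUSTED_PROXIES` set, and the plain-dict header format are assumptions made for the example. The IP addresses are the ones used in the post.

```python
import ipaddress

# Assumption: the only proxy we trust is the reverse proxy from the post's example.
TRUSTED_PROXIES = {ipaddress.ip_address("192.168.122.133")}


def client_ip(remote_addr: str, headers: dict[str, str]) -> str:
    """Return the best-guess client IP for one request.

    remote_addr is the address of the TCP peer (what PHP exposes as REMOTE_ADDR).
    headers is a plain dict of request headers (hypothetical input format).
    """
    peer = ipaddress.ip_address(remote_addr)
    # Header names are case-insensitive, so normalise before looking up.
    lowered = {name.lower(): value for name, value in headers.items()}
    real_ip = lowered.get("x-real-ip", "").strip()

    # Only trust the header if the request came through a known proxy;
    # any other client could simply forge it.
    if peer in TRUSTED_PROXIES and real_ip:
        try:
            return str(ipaddress.ip_address(real_ip))
        except ValueError:
            pass  # malformed header value: fall back to the socket peer
    return str(peer)
```

For example, `client_ip("192.168.122.133", {"X-Real-IP": "192.168.122.1"})` gives the user's address, while the same header sent from an untrusted peer is ignored.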

0 notes

Note

I just pulled this 2009 hp out of the dumpster what do i do with it. It has ubuntu.

if it's that old, probably wipe and reinstall. If you're doing ubuntu again first uninstall snap but there's a lot of neat self-hosting stuff you can do with an old PC.

I have a little RasPi in my basement which runs an RSS Feed aggregator(FreshRSS), some calendars(Radicale) and notes(Joplin) so they're synced between all my devices.

If the computer has the storage and a little bit of power for processing things you could also run something to sync all your photos (Immich) and files (Nextcloud, which also does images but i like Immich better for it) between ur devices so you can avoid having to use paid services which may or may not be selling your data or whatever.

You can have it run a self hosted VPN such as WireGuard which you can port forward so you can use it from anywhere, or you can use a service like Tailscale which doesn't require port forwarding, but it's not something you host yourself, they have their own servers.

You can also put all the services behind a reverse proxy (nginx Proxy Manager, NOT nginx, i mean it's good but it's much harder) and be able to access it through a proper domain with SSL(a vpn will already do this though) instead of whatever 192.168.whatever, again, only accessible by people On That VPN.

All things are available (and usually encouraged) to run through docker, and they often even have their own compose files so it's not too much setup. (it's maintenance to update things though)

Also have fun and play tuoys. Old computers run modern versions of linux much better than windows. just open it up see what u can do with it, get used to it, try to customize the desktop to how you like it, or try another one (Ubuntu comes with GNOME. please try another one). See what works and what doesn't (hardware will likely be the issue if something doesn't work though, not linux itself). Something like Plasma or Cinnamon works just like a normal windows computer but there's still a bit of that "learning how to use a computer" that you don't really get after using the same version of windows for 10 years.

12 notes

Text

i am not really interested in game development but i am interested in modding (or more specifically cheat creation) as a specialized case of reverse-engineering and modifying software running on your machine

like okay for a lot of games the devs provide some sort of easy toolkit which lets even relatively nontechnical players write mods, and these are well-documented, and then games which don't have those often have a single-digit number of highly technical modders who figure out how to do injection and create some kind of api for the less technical modders to use, and that api is often pretty well documented, but the process of creating it absolutely isn't

it's even more interesting for cheat development because it's something hostile to the creators of the software, you are actively trying to break their shit and they are trying to stop you, and of course it's basically completely undocumented because cheat developers both don't want competitors and also don't want the game devs to patch their methods....

maybe some of why this is hard is because it's pretty different for different types of games. i think i'm starting to get a handle on how to do it for this one game - so i know there's a way to do packet sniffing on the game, where the game has a dedicated port and it sends tcp packets, and you can use the game's tick system and also a brute-force attack on its very rudimentary encryption to access the raw packets pretty easily.

through trial and error (i assume) people have figured out how to decode the packets and match them up to various ingame events, which is already used in a publicly available open source tool to do stuff like DPS calculation.

i think, without too much trouble, you could probably step this up and intercept/modify existing packets? like it looks like while damage is calculated on the server side, whether or not you hit an enemy is calculated on the client side and you could maybe modify it to always hit... idk.

apparently the free cheats out there (which i would not touch with a 100 foot pole, odds those have something in them that steals your login credentials is close to 100%) operate off a proxy server model, which i assume intercepts your packets, modifies them based on what cheats you tell it you have active, and then forwards them to the server.

but they also manage to give you an ingame GUI to create those cheats, which is clearly something i don't understand. the foss sniffer opens itself up in a new window instead of modifying the ingame GUI.

man i really want to like. shadow these guys and see their dev process for a day because i'm really curious. and also read their codebase. but alas

#coding#past the point of my life where i am interested in cheating in games#but if anything i am even more interested in figuring out how to exploit systems

48 notes

Text

Fighting my server rn. I've got some nextcloud docker container running, and nginx should do a reverse-proxy to it but it just doesn't seem to do it for some reason. I can access it perfectly fine via hostname:8080 on other devices but for some reason not from the server itself, where I have to use 127.17.0.1:8080 but even a reverse proxy to that doesn't do it. Honestly no fucking clue what's wrong. And if I can't fix it I guess I'll have to go back to not using a container
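One thing that might be worth checking in a setup like this is which address range the proxy target falls in. The sketch below uses Python's standard `ipaddress` module to classify an address. 127.17.0.1, as written above, is a loopback address, whereas Docker's default bridge gateway is commonly 172.17.0.1. That default is an assumption about this host, not something the post confirms. The helper name `describe` is made up for the example.

```python
import ipaddress


def describe(addr: str) -> str:
    """Classify an IPv4/IPv6 address as loopback, private, or public."""
    ip = ipaddress.ip_address(addr)
    if ip.is_loopback:
        return "loopback"   # e.g. anything in 127.0.0.0/8
    if ip.is_private:
        return "private"    # e.g. 172.16.0.0/12, 192.168.0.0/16
    return "public"


if __name__ == "__main__":
    for candidate in ("127.17.0.1", "172.17.0.1"):
        print(candidate, "->", describe(candidate))
```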

9 notes

Text

ok since i've been sharing some piracy stuff i'll talk a bit about how my personal music streaming server is set up. the basic idea is: i either buy my music on bandcamp or download it on soulseek. all of my music is stored on an external hard drive connected to a donated laptop that's next to my house's internet router. this laptop is always on, and runs software that lets me access and stream any song in my collection to my phone or to other computers. here's the detailed setup:

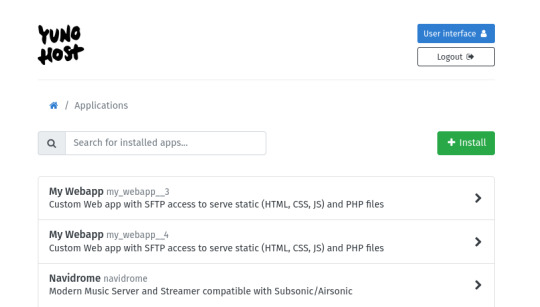

my home server is an old thinkpad laptop with a broken keyboard that was donated to me by a friend. it runs yunohost, a linux distribution that makes it simpler to reuse old computers as servers in this way: it gives you a nice control panel to install and manage all kinds of apps you might want to run on your home server, + it handles the security part by having a user login page & helping you install an https certificate with letsencrypt.

***

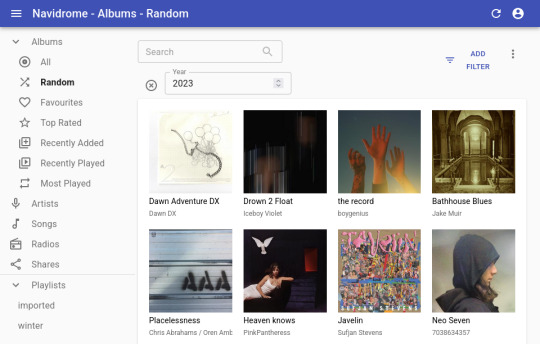

to stream my music collection, i use navidrome. this software is available to install from the yunohost control panel, so it's straightforward to install. what it does is take a folder with all your music and lets you browse and stream it, either via its web interface or through a bunch of apps for android, ios, etc.. it uses the subsonic protocol, so any app that says it works with subsonic should work with navidrome too.

***

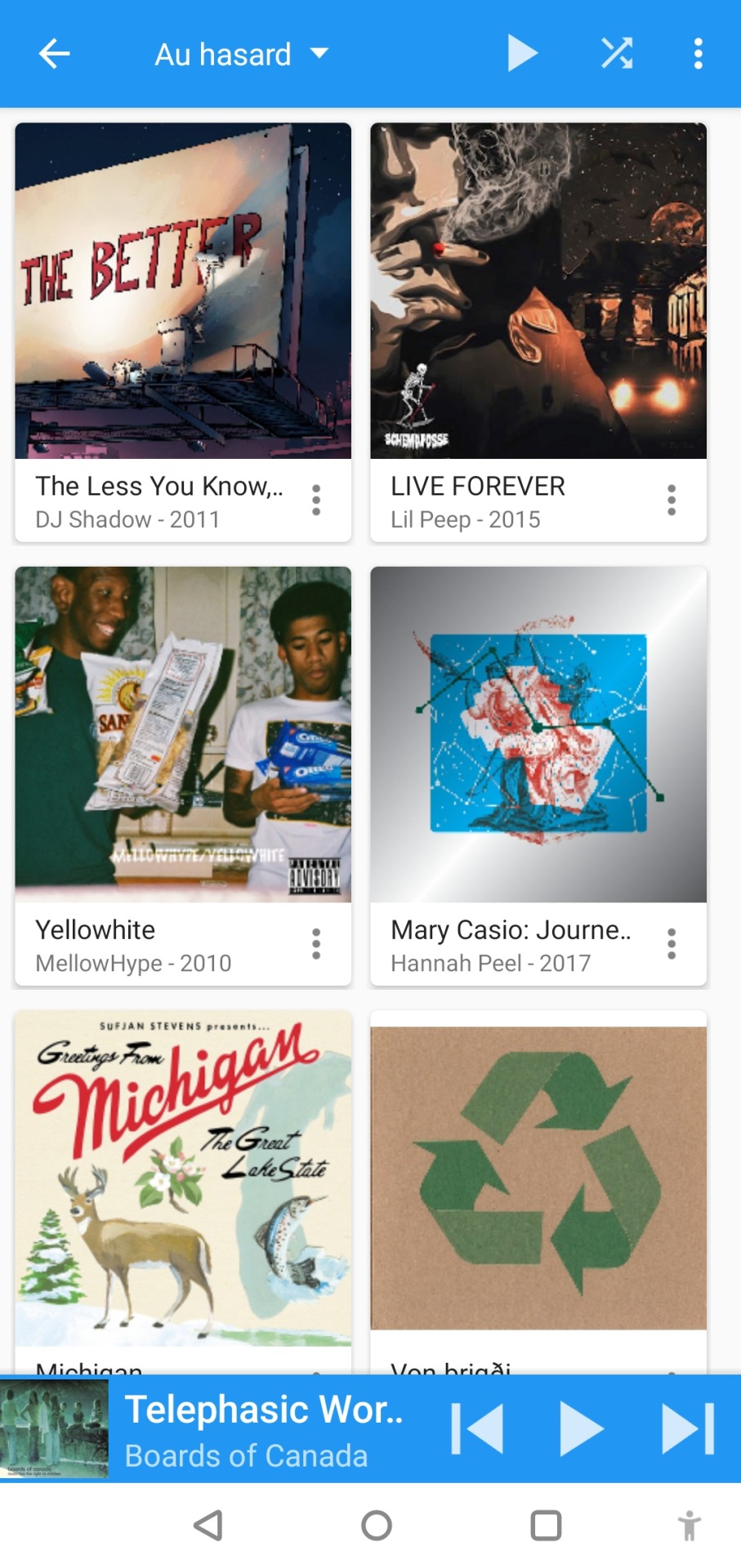

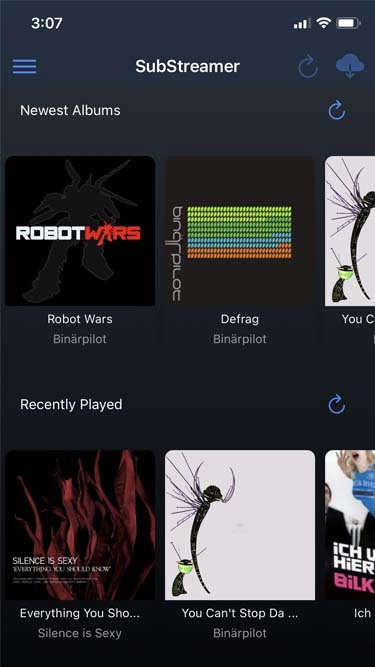

to listen to my music on my phone, i use DSub. It's an app that connects to any server that follows the subsonic API, including navidrome. you just have to give it the address of your home server, and your username and password, and it fetches your music and allows you to stream it. as mentioned previously, there's a bunch of alternative apps for android, ios, etc. so go take a look and make your pick. i've personally also used and enjoyed substreamer in the past. here are screenshots of both:

***

to listen to my music on my computer, i use tauon music box. i was a big fan of clementine music player years ago, but it got abandoned, and the replacement (strawberry music player) looks super dated now. tauon is very new to me, so i'm still figuring it out, but it connects to subsonic servers and it looks pretty so it's fitting the bill for me.

***

to download new music onto my server, i use slskd which is a soulseek client made to run on a web server. soulseek is a peer-to-peer software that's found a niche with music lovers, so for anything you'd want to listen to there's a good chance that someone on soulseek has the file and will share it with you. the official soulseek client is available from the website, but i'm using a different software that can run on my server and that i can access anywhere via a webpage, slskd. this way, anytime i want to add music to my collection, i can just go to my server's slskd page, download the files, and they directly go into the folder that's served by navidrome.

slskd does not have a yunohost package, so the trick to make it work on the server is to use yunohost's reverse proxy app, and point it to the http port of slskd 127.0.0.1:5030, with the path /slskd and with forced user authentication. then, run slskd on your server with the --url-base slskd, --no-auth (it breaks otherwise, so it's best to just use yunohost's user auth on the reverse proxy) and --no-https (which has no downsides since the https is given by the reverse proxy anyway)

***

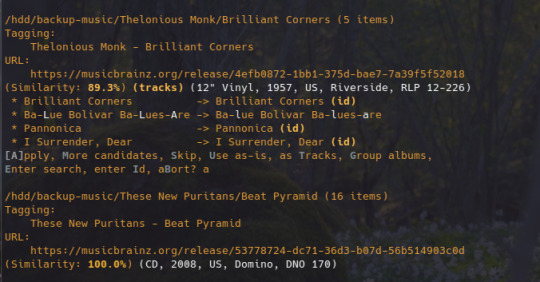

to keep my music collection organized, i use beets. this is a command line software that checks that all of the tags on your music are correct and puts the file in the correct folder (e.g. artist/album/01 trackname.mp3). it's a pretty complex program with a ton of features and settings, i like it to make sure i don't have two copies of the same album in different folders, and to automatically download the album art and the lyrics to most tracks, etc. i'm currently re-working my config file for beets, but i'd be happy to share if someone is interested.

that's my little system :) i hope it gives the inspiration to someone to ditch spotify for the new year and start having a personal mp3 collection of their own.

34 notes

Text

https://github.com/dexter-proxy

<3

ERR_ECH_FALLBACK_CERTIFICATE_INVALID

this was my github account when I use to be really into programming , I was really good at it . Infact I was so good at it that everyone I once knew became trapped in a mixed matrix loop glitch of an 80's high-school drama flick & a super hero movie . Under ideals between war in a loop where there are no friends and where there is nothing but meaningless bitcoin . I stopped programming because after awhile I got sick of the same looped behavior in the same states of mind without really paying attention :: the loop always goes something like this [ I'm important & needed , am I villain, what good am I ] the entire ideal trying to hide the idea that I'm only lied to through fear . The idea that rape has been hunted down for a long time on the internet due to the darkwebs popularity was always something I was good at , in fact I was so good at it people made me out as a lieing joke and put my entire life on a zoo display like a child to make fun of calling it nobility . This is cursed by the old gods . Though 🤔 .... the same loop always follows because that's only the human behavior aspect that's how c++⁹⁸ was created as a subjective of C programming . Though even the idea of helping me was used against people to make people into basically brain dead nothingness hoping to hide . Sorry ( not really ) it's not my war in the first place . English is kind of lame . I made such a HUGE COOL internet trench that no one will ever explore . That's okay .

英•語目がクチる ° 本の方が使いい • 但只能在同一个循环中 ° 最终每个人都会停下来,然后这个循环再次开始 ,

that is impossible I guess to comprehend . So I made a place where we could post hentai and porn and not be bothered this

http://blueponyfdt5emnvtabzgfy36bwakan225x4fxvttgt2ov2dbkcqgdad.onion

This is my friend ^-^ ^-^ <3

error in E explorer indicates an issue with Encrypted Client Hello (ECH) and its fallback mechanism, specifically when a reverse proxy or other network middlebox interferes with the ECH handshake. This error usually occurs when a site uses ECH for secure connections, but the browser (E explorer , in this case) detects that the fallback certificate presented by the server is invalid or untrusted.

3 notes

Text

DUDELZ of the Damned | Rosie of the Jungle, Pt. 1

HEY THERE PEOPLE OF TODAY AND ROBOTS OF TOMORROW! IT'S ME, CLARK!

A chill is in the air. You can feel it can't you? Perhaps you even recognize it. That same chill arrives every year right on the dot. With it comes a frightful howl in the moonlight, the only other sound to be heard. Otherwise there is a strange calmness settling around you, like the point of ease before the storm. By now the howling has stopped. It has been replaced by a different sound. Footsteps. Big, heavy, dragging, as if the figure didn't quite know how to use their legs. Perhaps it's a random passerby. Perhaps it's a rotting, frightful feature freshly risen from the grave. Perhaps it's some other, unspeakable horror waiting to pounce! Whatever it is, you're not waiting around to find out! Yet no matter how far you run, it can't be escaped. The chill in the air, the howling of the wind, the heavy footsteps, it all leads back to one thing: October is here! And with it comes the return of the DUDELZ of the Damned!

Yes weirdos, like last year, my approximation of Sketchtober has returned. I call it a proxy because there was no list of prompts. Nah, that'd be too limiting. This is yet another case where I compiled my own list of ideas, sketched them out, then used one color per picture. With all that said, let's see what spoopy scribblings await us today!

Legend says there is an ancient city hidden deep within the jungle of Africa. Many have ventured deep into the wilderness to find it, but seldom have come back successful. Or alive. This fabled city and all of its riches is at risk of becoming lost to time. Unless Rosie Stardust can find it first! Finding herself on Earth-618, the cosmic cutie becomes acquainted with a naval merchant named Paul D'Arnot, who tells her of the infamous civilization the locals call Opar. Never one to shy away from a challenge, or the chance to discover something new, the sentient spatial anomaly tasks herself with finding the jungle's last hidden treasure. After she dresses for the trip, of course. Luckily this world is still stuck in the late 1800s, meaning she's sporting the trendiest dresses coming out of London. Luckier still, Rosie is able to befriend most creatures she encounters, so the local animals are more than willing to help. One baby baboon quickly takes a liking to the Cosmic Cutie.

Needless to say, Rosie has an easier time traversing Africa than Jane Porter did. This sketch idea came about after my friend @burningthrucelluloid watched Tarzan earlier this year. He was providing commentary over on my Discord server and it got me to watch the movie again. I say, as if I needed an excuse. Disney's take on this classic story is easily one of my all-time favorite films. It features amazing animation, a sensational soundtrack, doesn't treat the gorillas like savage monsters, makes its lead hero more compelling than he's ever been, and their version of Jane Porter is hot. She's brainy, competent, managed to teach a man with no English how to speak it fluently in like a week, and voiced by Minnie Driver in her prime. You might think that's why I drew Rosie in this outfit, but really it was the realization that her curious nature and kind spirit would mean she'd get along swimmingly with the cosmic cutie. From there the image of Rosie in Jane's dress refused to leave my mind, so here we are. Actually this was my second attempt at putting this idea on paper. My first draft turned out great, save for Rosie's arms. She was meant to be holding the baboon in awe while the furry rascal looked on in confusion. Upon rewatching the movie it dawned on me that having the monkey draw the lady would be a funny reversal of roles. Either way, happy 25th anniversary to this fantastic film! I hope you all enjoy this sketch. And remember: be kind to baboons. They usually roll with backup.

BONUS QUESTION: Are you a fan of Disney's Tarzan?

MAY THE GLASSES BE WITH YOU!

#Clarktoons#Clarktoon Crossing#DUDELZ#DUDELZ of the Damned#DUDELZ of the Damned 2024#Halloween#Halloween 2024#monsters#sketches#Sketchtober#spoopy#artists on tumblr#Africa#baboon#Cosmic Cutie#Disney#Jane Porter#jungle#Rosie#Rosie Stardust#space#Tarzan

4 notes

Text

Progress so far of mobile server (dev log)

So I was successful in turning my old phone into an Apache web server. The phone had a hardware issue where it kept clicking on the screen randomly, so I had to maneuver my way through installing Linux and Apache on the phone. I had to set up proxies as well to connect to it from the open internet. So far it is working well. Sometimes I have to reroute the connections since it is running over mobile data? I am thinking of getting a dynamic DNS. But I have never tried dynamic DNS before so I am not ready to explore that yet. Plus, I don't know if I would get a static IP from it or a straight away domain name? I mean both are okay, but both have their pros and cons.

Like if I get a static IP, I can run virtual hosts in Apache and run multiple websites by pointing the A record of each domain to the same IP.

And let's say I get a subdomain from the dynamic DNS, I can point the CNAME record of the domain to the subdomain? But will virtual hosts work with it? I don't know. I suppose it should, but it might not work either. Hmm.. let's see, I think I have done this before, Apache virtual hosts work with CNAME.
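On the CNAME question: name-based virtual hosts are selected from the Host header the browser sends, not from the kind of DNS record that resolved the name, so an A record and a CNAME end up looking the same to the web server. Here is a minimal Python sketch of that lookup. The hostnames, document roots, and the `pick_site` helper are all hypothetical, and this is not Apache's actual implementation.

```python
# Hypothetical mapping of hostnames to document roots, standing in for
# a set of name-based virtual host definitions.
VHOSTS = {
    "blog.example.com": "/var/www/blog",
    "game.example.com": "/var/www/game",
}
DEFAULT_ROOT = "/var/www/default"


def pick_site(host_header: str) -> str:
    """Choose a document root from the value of the HTTP Host header."""
    host = host_header.strip().lower()
    # Drop an optional ":port" suffix (IPv6 literals are ignored in this sketch).
    host = host.split(":", 1)[0]
    return VHOSTS.get(host, DEFAULT_ROOT)
```

Whether `blog.example.com` reached the server through an A record or a CNAME pointing at a dynamic DNS subdomain, the Host header is still `blog.example.com`, so the same site is picked. A non-standard port such as `blog.example.com:8080` also maps to the same entry.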

But there is another issue: one of the websites runs behind a SOCKS5 proxy. How will I accommodate that? The issue is that, since it's Ubuntu running in a VM on LineageOS on the phone, I can't get low-numbered ports such as 80 or 443, so I have to somehow use Dynamic DNS and a SOCKS5 proxy to redirect traffic to a higher port number.

I mean, I still have oracle VPS running so I may not need virtual hosts in mobile server. So maybe I will venture this when I need it.

I am just wondering whether I should run Docker to run my game server then redirect the traffic with Apache virtual hosts, reverse proxy with SOCKS5 proxy? I don't even know if it would work. Theoretically it should work.

14 notes

Text

Boost Your Website with Nginx Reverse Proxy

Hi there, enthusiasts of the web! 🌐

Have you ever wondered how to speed up and protect your website? Let me share a little tip known as the Nginx reverse proxy. I promise it's a game changer!

What’s a Reverse Proxy Anyway?

Consider a reverse proxy as the security guard for your website. It manages all incoming traffic and ensures seamless operation by standing between your users and your server. Do you want to go further? Take a look at this fantastic article for configuring the Nginx reverse proxy.

Why You’ll Love Nginx Reverse Proxy

Load Balancing: Keep your site running smoothly by spreading traffic across multiple servers.

Extra Security: Protect your backend servers by hiding their IP addresses.

SSL Termination: Speed up your site by handling SSL decryption on the proxy.

Caching: Save time and resources by storing copies of frequently accessed content.
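To make the load-balancing point a bit more concrete, here is a small Python sketch of round-robin selection, which is the strategy Nginx uses by default when several upstream servers are listed. This is an illustration only, not Nginx configuration or Nginx code. The class name and the backend addresses are made up for the example.

```python
import itertools


class RoundRobinBalancer:
    """Hand out backends in a fixed rotating order."""

    def __init__(self, backends):
        backends = list(backends)
        if not backends:
            raise ValueError("at least one backend is required")
        # itertools.cycle repeats the sequence forever.
        self._cycle = itertools.cycle(backends)

    def next_backend(self) -> str:
        """Return the backend that should receive the next request."""
        return next(self._cycle)


if __name__ == "__main__":
    # Hypothetical backend addresses.
    balancer = RoundRobinBalancer(["10.0.0.1:8080", "10.0.0.2:8080"])
    for _ in range(4):
        print(balancer.next_backend())
```

Each request goes to the next server in the list, so traffic is spread evenly as long as the requests cost roughly the same.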

Setting Up Nginx Reverse Proxy

It's really not as hard to set up as you may imagine! On your server, you must first install Nginx. The configuration file may then be adjusted to refer to your backend servers. Want a detailed how-to guide? You just need to look at our comprehensive guide on setting up a reverse proxy on Nginx.

When to Use Nginx Reverse Proxy

Scaling Your Web App: Perfect for managing traffic on large websites.

Microservices: Ideal for routing requests in a microservices architecture.

CDNs: Enhance your content delivery by caching static content.

The End

Adding an Nginx reverse proxy to your web configuration is like giving your website superpowers. This is essential knowledge to have if you're serious about moving your website up the ladder. Visit our article on configuring Nginx reverse proxy for all the specifics.

Hope this helps! Happy coding! 💻✨

2 notes

Text

Critical Vulnerability (CVE-2024-37032) in Ollama

Researchers have discovered a critical vulnerability in Ollama, a widely used open-source project for running Large Language Models (LLMs). The flaw, dubbed "Probllama" and tracked as CVE-2024-37032, could potentially lead to remote code execution, putting thousands of users at risk.

What is Ollama?

Ollama has gained popularity among AI enthusiasts and developers for its ability to perform inference with compatible neural networks, including Meta's Llama family, Microsoft's Phi clan, and models from Mistral. The software can be used via a command line or through a REST API, making it versatile for various applications. With hundreds of thousands of monthly pulls on Docker Hub, Ollama's widespread adoption underscores the potential impact of this vulnerability.

The Nature of the Vulnerability

The Wiz Research team, led by Sagi Tzadik, uncovered the flaw, which stems from insufficient validation on the server side of Ollama's REST API. An attacker could exploit this vulnerability by sending a specially crafted HTTP request to the Ollama API server. The risk is particularly high in Docker installations, where the API server is often publicly exposed.

Technical Details of the Exploit

The vulnerability specifically affects the `/api/pull` endpoint, which allows users to download models from the Ollama registry and private registries. Researchers found that when pulling a model from a private registry, it's possible to supply a malicious manifest file containing a path traversal payload in the digest field. This payload can be used to:

- Corrupt files on the system
- Achieve arbitrary file read
- Execute remote code, potentially hijacking the system

The issue is particularly severe in Docker installations, where the server runs with root privileges and listens on 0.0.0.0 by default, enabling remote exploitation. As of June 10, despite a patched version being available for over a month, more than 1,000 vulnerable Ollama server instances remained exposed to the internet.
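The general defence against a path traversal payload in a digest field is to validate the digest's format before it is ever used to build a file path. The Python sketch below illustrates that idea under stated assumptions and is not Ollama's actual code. The `sha256:` plus 64 hex characters format, the `blob_path` helper, and the on-disk naming scheme are all assumptions for the example.

```python
import re
from pathlib import Path

# Assumed digest format: "sha256:" followed by exactly 64 lowercase hex digits.
DIGEST_RE = re.compile(r"sha256:[0-9a-f]{64}")


def blob_path(blobs_dir: str, digest: str) -> Path:
    """Map a manifest digest to a file path, rejecting anything unexpected."""
    # fullmatch means the whole string must fit the pattern, so values such as
    # "../../etc/passwd" can never reach the filesystem layer.
    if not DIGEST_RE.fullmatch(digest):
        raise ValueError(f"invalid digest: {digest!r}")
    # Hypothetical naming scheme: replace ":" so the digest is a plain file name.
    return Path(blobs_dir) / digest.replace(":", "-")
```

Because the check is an allow-list on the exact expected shape, there is no need to enumerate dangerous sequences such as `..` or absolute paths.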

Mitigation Strategies

To protect AI applications using Ollama, users should:

- Update instances to version 0.1.34 or newer immediately
- Implement authentication measures, such as using a reverse proxy, as Ollama doesn't inherently support authentication
- Avoid exposing installations to the internet
- Place servers behind firewalls and only allow authorized internal applications and users to access them

Broader Implications for AI and Cybersecurity

This vulnerability highlights ongoing challenges in the rapidly evolving field of AI tools and infrastructure. Tzadik noted that the critical issue extends beyond individual vulnerabilities to the inherent lack of authentication support in many new AI tools. He referenced similar remote code execution vulnerabilities found in other LLM deployment tools like TorchServe and Ray Anyscale. Moreover, despite these tools often being written in modern, safety-first programming languages, classic vulnerabilities such as path traversal remain a persistent threat. This underscores the need for continued vigilance and robust security practices in the development and deployment of AI technologies.

2 notes

Text

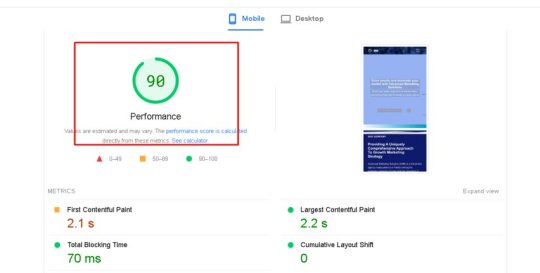

How and why to optimize WordPress website speed

In today's fast-paced digital world, speed optimization plays a crucial role in delivering a seamless user experience and achieving business success. This article will discuss the significance of speed optimization and explore various techniques to enhance the performance of websites, applications, and digital platforms.

The Significance of Speed Optimization: Speed optimization refers to the process of improving the loading time and overall performance of a website or application. It is vital for several reasons. Firstly, users have become increasingly impatient and expect instant access to information. A slow-loading website or application can lead to frustration and drive users away, resulting in lost opportunities and reduced conversions. Secondly, search engines like Google consider page speed as a ranking factor, influencing a website's visibility and organic traffic. Therefore, speed optimization directly impacts search engine optimization (SEO) efforts and online visibility.

hire me for website optimization: https://www.fiverr.com/jobair_webpro

Techniques for Speed Optimization

Compressed and Minified Code: Reduce the file sizes of HTML, CSS, and JavaScript by compressing and minifying them. This reduces the bandwidth required for downloading, resulting in faster page load times.

Image Optimization: Optimize images by compressing them without sacrificing visual quality. This can be achieved through various techniques, such as using the appropriate image format (JPEG, PNG, etc.), resizing images to the required dimensions, and leveraging modern image formats like WebP.

Caching: Implement browser caching to store frequently accessed files on the user's device, reducing the need for repeated downloads. This improves load times for returning visitors.

Content Delivery Network (CDN): Utilize a CDN to distribute website content across multiple servers worldwide. CDN servers located closer to the user reduce latency and enable faster content delivery.

Minimize HTTP Requests: Reduce the number of HTTP requests made by the browser by combining multiple files into one. This can be achieved by merging CSS and JavaScript files, using CSS sprites, or inlining small CSS and JavaScript directly into HTML.

Server-Side Optimization: Optimize server configurations, database queries, and scripting languages to improve response times. Techniques include enabling server compression (Gzip), using a reverse proxy cache, and optimizing database queries.

Responsive Design: Ensure your website or application is responsive and optimized for different devices and screen sizes. This ensures a consistent user experience across platforms and reduces the need for unnecessary downloads or device-specific redirects.
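To get a feel for what server compression (Gzip) does to text assets, here is a short Python sketch that compresses a response body with the standard `gzip` module and reports the sizes before and after. The helper name `gzip_savings` and the sample markup are invented for the example, and real savings depend heavily on the content.

```python
import gzip


def gzip_savings(body: bytes) -> tuple[int, int]:
    """Return (original_size, compressed_size) in bytes for one response body."""
    compressed = gzip.compress(body)
    return len(body), len(compressed)


if __name__ == "__main__":
    # Hypothetical, highly repetitive markup, similar to a long list page.
    html = b"<li class='product-card'>Example product</li>\n" * 200
    original, compressed = gzip_savings(html)
    print(f"{original} bytes -> {compressed} bytes")
```

Repetitive HTML, CSS, and JavaScript tend to compress well, while already-compressed formats such as JPEG or WebP gain little from Gzip.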

Speed optimization is crucial for delivering a positive user experience, improving search engine rankings, and achieving business goals. By implementing techniques such as code compression, image optimization, caching, and server-side optimizations, organizations can enhance the speed and performance of their digital platforms, resulting in increased user engagement and better conversion rates.

Hire me for website speed optimization: https://www.fiverr.com/jobair_webpro/

#wordpress#speed optimization#wordpress speed optimization#speed up#seo optimization#onpageseo#on page optimization

2 notes

Text

Efficient Naver Map Data Extraction for Business Listings

Introduction

In today's competitive business landscape, having access to accurate and comprehensive business data is crucial for strategic decision-making and targeted marketing campaigns. Naver Map Data Extraction presents a valuable opportunity to gather insights about local businesses, consumer preferences, and market trends for companies looking to expand their operations or customer base in South Korea.

Understanding the Value of Naver Map Business Data

Naver is often called "South Korea's Google," dominating the local search market with over 70% market share. The platform's mapping service contains extensive information about businesses across South Korea, including contact details, operating hours, customer reviews, and location data. Naver Map Business Data provides international and local businesses rich insights to inform market entry strategies, competitive analysis, and targeted outreach campaigns.

However, manually collecting this information would be prohibitively time-consuming and inefficient. This is where strategic Business Listings Scraping comes into play, allowing organizations to collect and analyze business information at scale systematically.

The Challenges of Accessing Naver Map Data

Unlike some other platforms, Naver presents unique challenges for data collection:

Language barriers: Naver's interface and content are primarily Korean, creating obstacles for international businesses.

Complex website structure: Naver's dynamic content loading makes straightforward scraping difficult.

Strict rate limiting: Aggressive anti-scraping measures can block IP addresses that send too many requests.

CAPTCHA systems: Automated verification challenges to prevent bot activity.

Terms of service considerations: Understanding the Legal Ways To Scrape Data From Naver Map is essential.

Ethical and Legal Considerations

Before diving into the technical aspects of Naver Map API Scraping, it's crucial to understand the legal and ethical framework. While data on the web is publicly accessible, how you access it matters from legal and ethical perspectives.

To Scrape Naver Map Data Without Violating Terms Of Service, consider these principles:

Review Naver's terms of service and robots.txt file to understand access restrictions.

Implement respectful scraping practices with reasonable request rates.

Consider using official APIs where available.

Store only the data you need and ensure compliance with privacy regulations, such as GDPR and Korea's Personal Information Protection Act.

Use the data for legitimate business purposes without attempting to replicate Naver's services.
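The robots.txt review mentioned in the principles above can be partly automated. The sketch below uses Python's standard `urllib.robotparser` to test whether a given URL may be fetched under a set of rules. The rules, user agent name, and URLs are placeholders for illustration and do not reflect Naver's actual robots.txt.

```python
from urllib.robotparser import RobotFileParser


def allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Check a URL against robots.txt rules supplied as plain text."""
    parser = RobotFileParser()
    # parse() takes the file's lines, so nothing is fetched over the network here.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    # Placeholder rules for the example.
    rules = "User-agent: *\nDisallow: /private/\n"
    print(allowed(rules, "example-bot", "https://example.com/private/page"))
    print(allowed(rules, "example-bot", "https://example.com/public"))
```

A robots.txt check only covers crawler directives. It does not replace reading the terms of service or the applicable privacy rules.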

Effective Methods For Scraping Naver Map Business Data

There are several approaches to gathering business listing data from Naver Maps, each with advantages and limitations.

Here are the most practical methods:

1. Official Naver Maps API

Naver provides official APIs that allow developers to access map data programmatically. While these APIs have usage limitations and costs, they represent the most straightforward and compliant Naver Map Business Data Extraction method.

The official API offers:

Geocoding and reverse geocoding capabilities.

Local search functionality.

Directions and routing services.

Address verification features.

Using the official API requires registering a developer account and adhering to Naver's usage quotas and pricing structure. However, it provides reliable, sanctioned access to the data without risking account blocks or legal issues.
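As a rough sketch of what sanctioned access can look like, the snippet below prepares a local search request and flattens the JSON response. The endpoint URL, the two header names, and the response field names (items, title, address, telephone) are assumptions based on Naver's Open API search service and should be confirmed against the current developer documentation; the function names are hypothetical.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request

# Assumed endpoint; verify in Naver's developer documentation before use.
LOCAL_SEARCH_URL = "https://openapi.naver.com/v1/search/local.json"

def build_local_search_request(query: str, client_id: str,
                               client_secret: str, display: int = 5) -> Request:
    """Prepare (but do not send) a local search request."""
    params = urlencode({"query": query, "display": display})
    return Request(
        f"{LOCAL_SEARCH_URL}?{params}",
        headers={
            # Assumed header names for the developer credentials.
            "X-Naver-Client-Id": client_id,
            "X-Naver-Client-Secret": client_secret,
        },
    )

def extract_listings(response_body: str) -> list[dict]:
    """Pull a few fields out of a JSON response; missing fields become ''."""
    payload = json.loads(response_body)
    return [
        {
            "name": item.get("title", ""),
            "address": item.get("address", ""),
            "phone": item.get("telephone", ""),
        }
        for item in payload.get("items", [])
    ]
```

The request object can be passed to urllib.request.urlopen once real credentials are in place, and the decoded response body handed to extract_listings.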

2. Web Scraping Solutions

When API limitations prove too restrictive for your business needs, web scraping becomes a viable alternative. Naver Map Scraping Tools range from simple script-based solutions to sophisticated frameworks that can handle dynamic content and bypass basic anti-scraping measures.

Key components of an effective scraping solution include:

Proxy Rotation: Rotating between multiple proxy servers is essential to prevent IP bans when accessing large volumes of data. This spreads requests across different IP addresses, making the scraping activity appear more like regular user traffic than automated collection. Commercial proxy services offer:

1. Residential proxies that use real devices and ISPs.

2. Datacenter proxies that provide cost-effective rotation options.

3. Geographically targeted proxies that can access region-specific content.

Request Throttling: Implementing delays between requests helps mimic human browsing patterns and reduces server load. Adaptive throttling that adjusts based on server response times can optimize the balance between collection speed and avoiding detection.

Browser Automation: Tools like Selenium and Playwright can control real browsers to render JavaScript-heavy pages and interact with elements just as a human user would. This approach is effective for navigating Naver's dynamic content loading system.
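The adaptive request throttling described above can be sketched as follows. This is a minimal illustration, not a production implementation: the class name AdaptiveThrottle, its parameters, and the back-off rule (doubling the delay on HTTP 429 or 503) are assumptions chosen for the example.

```python
import time
from typing import Callable

class AdaptiveThrottle:
    """Wait between requests, slowing down when the server is slow or pushes back."""

    def __init__(self, base_delay: float = 1.0, max_delay: float = 60.0,
                 sleep: Callable[[float], None] = time.sleep) -> None:
        self.base_delay = base_delay
        self.max_delay = max_delay
        self.delay = base_delay
        self._sleep = sleep  # injectable so the logic can be tested without waiting

    def record(self, response_seconds: float, status_code: int) -> float:
        """Update the delay from the last response and return the new value."""
        if status_code in (429, 503):
            # The server signalled overload: double the current delay.
            self.delay = min(self.delay * 2, self.max_delay)
        else:
            # Wait at least as long as the server took to answer,
            # but never less than the base delay.
            self.delay = min(max(self.base_delay, response_seconds), self.max_delay)
        return self.delay

    def wait(self) -> None:
        """Pause for the current delay before the next request."""
        self._sleep(self.delay)
```

Calling wait() before each request and record() after each response keeps the request rate tied to how the server is actually coping, which serves the "reduces server load" goal directly.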

3. Specialized Web Scraping API Services

For businesses lacking the technical resources to build and maintain scraping infrastructure, a web scraping API service offers a middle-ground solution. These services handle the complexities of proxy rotation, browser rendering, and CAPTCHA solving while providing a simple API interface to request data.

Benefits of using specialized scraping APIs include:

Reduced development and maintenance overhead.

Built-in compliance with best practices.

Scalable infrastructure that adapts to project needs.

Regular updates to counter anti-scraping measures.

Structuring Your Naver Map Data Collection Process

Regardless of the method chosen, a systematic approach to Naver Map Data Extraction will yield the best results. Here's a framework to guide your collection process:

1. Define Clear Data Requirements

Before beginning any extraction project, clearly define what specific business data points you need and why.

This might include:

Business names and categories.

Physical addresses and contact information.

Operating hours and service offerings.

Customer ratings and review content.

Geographic coordinates for spatial analysis.

Precise requirements prevent scope creep and ensure you collect only what's necessary for your business objectives.

2. Develop a Staged Collection Strategy

Rather than attempting to gather all data at once, consider a multi-stage approach:

Initial broad collection of business identifiers and basic information.

Categorization and prioritization of listings based on business relevance.

Detailed collection focusing on high-priority targets.

Periodic updates to maintain data freshness.

This approach optimizes resource usage and allows for refinement of collection parameters based on initial results.

3. Implement Data Validation and Cleaning

Raw data from Naver Maps often requires preprocessing before it becomes business-ready.

Common data quality issues include:

Inconsistent formatting of addresses and phone numbers.

Mixed language entries (Korean and English).

Duplicate listings with slight variations.

Outdated or incomplete information.

Implementing automated validation rules and manual spot-checking ensures the data meets quality standards before analysis or integration with business systems.
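Two of the issues listed above, inconsistent phone formatting and near-duplicate listings, can be handled with a few normalization rules. The sketch below is only an assumption about how such rules might look: the function names and the "name" and "phone" dictionary keys are hypothetical, and the phone rule only covers numbers written with or without the +82 country code.

```python
import re

def normalize_phone(raw: str) -> str:
    """Reduce a Korean phone number to digits with a leading 0."""
    digits = re.sub(r"\D", "", raw)
    if digits.startswith("82"):
        # Replace the country code with the domestic trunk prefix 0.
        digits = "0" + digits[2:].lstrip("0")
    return digits

def normalize_name(raw: str) -> str:
    """Collapse whitespace and ignore letter case when comparing names."""
    return re.sub(r"\s+", " ", raw).strip().casefold()

def deduplicate(listings: list[dict]) -> list[dict]:
    """Keep the first listing for each (normalized name, normalized phone) pair."""
    seen: set[tuple[str, str]] = set()
    unique = []
    for listing in listings:
        key = (normalize_name(listing["name"]), normalize_phone(listing["phone"]))
        if key in seen:
            continue
        seen.add(key)
        unique.append(listing)
    return unique
```

Exact-key matching like this catches formatting variants only; listings whose names differ in spelling would still need fuzzy matching or the manual spot-checking mentioned above.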

Specialized Use Cases for Naver Product Data Scraping

Beyond basic business information, Naver's ecosystem includes product listings and pricing data that can provide valuable competitive intelligence.

Naver Product Data Scraping enables businesses to:

Monitor competitor pricing strategies.

Identify emerging product trends.

Analyze consumer preferences through review sentiment.

Track promotional activities across the Korean market.

This specialized data collection requires targeted approaches that navigate Naver's shopping sections and product detail pages, often necessitating more sophisticated parsing logic than standard business listings.

Data Analysis and Utilization

The actual value of Naver Map Business Data emerges during analysis and application. Consider these strategic applications:

Market Penetration Analysis: By mapping collected business density data, companies can identify underserved areas or regions with high competitive saturation. This spatial analysis helps optimize expansion strategies and resource allocation.

Competitive Benchmarking: Aggregated ratings and review data provide insights into competitor performance and customer satisfaction. This benchmarking helps identify service gaps and opportunities for differentiation.

Lead Generation and Outreach: Filtered business contact information enables targeted B2B marketing campaigns, partnership initiatives, and sales outreach programs tailored to specific business categories or regions.
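The business density mapping behind market penetration analysis can be approximated with a simple grid count. This is a sketch under stated assumptions: the function names are hypothetical, the coordinates are made-up sample points, and the 0.01-degree cell size (roughly one kilometre north to south) is an arbitrary choice for illustration.

```python
import math
from collections import Counter
from typing import Iterable

def grid_cell(lat: float, lon: float, cell_deg: float = 0.01) -> tuple[int, int]:
    """Map a coordinate to the integer index of the grid cell containing it."""
    return (math.floor(lat / cell_deg), math.floor(lon / cell_deg))

def density_by_cell(coords: Iterable[tuple[float, float]],
                    cell_deg: float = 0.01) -> Counter:
    """Count how many listings fall into each grid cell."""
    return Counter(grid_cell(lat, lon, cell_deg) for lat, lon in coords)

# Made-up sample coordinates: two close together, one farther away.
sample = [(37.5665, 126.9780), (37.5668, 126.9785), (37.4979, 127.0276)]
counts = density_by_cell(sample)
```

Cells with high counts suggest competitive saturation, while cells with few or no listings are candidates for a closer look as underserved areas.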

How Retail Scrape Can Help You?

We understand the complexities involved in Naver Map API Scraping and the strategic importance of accurate Korean market data. Our specialized team combines technical expertise with deep knowledge of Korean digital ecosystems to deliver reliable, compliance-focused data solutions.

Our approach to Naver Map Business Data Extraction is built on three core principles:

Compliance-First Approach: We strictly adhere to Korean data regulations, ensuring all activities align with platform guidelines for ethical, legal scraping.

Korea-Optimized Infrastructure: Our tools are designed for Korean platforms, offering native language support and precise parsing for Naver’s unique data structure.

Insight-Driven Delivery: Beyond raw data, we offer value-added intelligence—market insights, tailored reports, and strategic recommendations to support your business in Korea.

Conclusion

Harnessing the information available through Naver Map data extraction offers significant competitive advantages for businesses targeting the Korean market. By applying effective scraping methods with attention to legal compliance, technical best practices, and strategic application, organizations can develop a deeper market understanding and more targeted business strategies.

Whether you want to conduct market research, generate sales leads, or analyze competitive landscapes, the rich business data available through Naver Maps can transform your Korean market operations. However, the technical complexities and compliance considerations make this a specialized undertaking requiring careful planning and execution.

Need expert assistance with your Korean market data needs? Contact Retail Scrape today to discuss how our specialized Naver Map Scraping Tools and analytical expertise can support your business objectives.

Source : https://www.retailscrape.com/efficient-naver-map-data-extraction-business-listings.php

Originally Published By https://www.retailscrape.com/

#NaverMapDataExtraction #BusinessListingsScraping #NaverBusinessData #SouthKoreaMarketAnalysis #WebScrapingServices #NaverMapAPIScraping #CompetitorAnalysis #MarketIntelligence #DataExtractionSolutions #RetailDataScraping #NaverMapBusinessListings #KoreanBusinessDataExtraction #LocationDataScraping #NaverMapsScraper #DataMiningServices #NaverLocalSearchData #BusinessIntelligenceServices #NaverMapCrawling #GeolocationDataExtraction #NaverDirectoryScraping


Text

Reasons Your Office Needs Web Application Firewall (WAF) Solutions

To Defend Against Forgery Attacks

Web application firewalls help defend your website against cross-site request forgery (CSRF) attacks. At the individual level, these attacks trick an authenticated user into unknowingly submitting an unauthorized action, such as transferring money or changing a password. At the administrative level, a successful attack on an administrator account can give the attacker complete control over your website.

To Block Unauthorized Data Access

A reliable WAF solution will detect malicious SQL injection, an attack that threat actors use to access databases and steal or destroy information. Often, the attacker will spoof an identity, grant themselves administrator privileges, modify data, or retrieve information using carefully crafted SQL commands. Sometimes, the perpetrators distract database administrators with a DDoS attack so that they do not notice sensitive information is already being accessed.
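To make the idea of SQL injection detection concrete, here is a toy signature check of the kind a WAF rule might apply to request parameters. It is an illustration only: the patterns and the function name are assumptions for this example, real WAF rule sets are far larger and more careful, and a short list like this will produce both false positives and misses.

```python
import re

# A few illustrative signatures only; not a real WAF rule set.
SQLI_PATTERNS = [
    re.compile(r"(?i)\bunion\b.+\bselect\b"),              # UNION ... SELECT
    re.compile(r"(?i)\bor\b\s+'?\d+'?\s*=\s*'?\d+"),       # tautology such as OR 1=1
    re.compile(r"(?i);\s*(drop|delete|update|insert)\b"),  # stacked statement
    re.compile(r"--|/\*"),                                 # SQL comment markers
]

def looks_like_sql_injection(value: str) -> bool:
    """Return True if any illustrative signature matches the input value."""
    return any(pattern.search(value) for pattern in SQLI_PATTERNS)
```

A filter like this sits in front of the application and rejects or logs suspicious input before it reaches the database layer.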

To Prevent Website Outages

Web application firewall solutions implement protective measures against distributed denial-of-service (DDoS) attacks. These attacks aim to overwhelm the target website by flooding it with internet traffic, rendering it inaccessible (as would have happened with the Philippine Congress website in 2024 had it not had a reliable IT team on board). The right WAF mitigates such threats by acting as a reverse proxy that shields your server from harmful traffic. Custom rules that filter this traffic further reduce the chance of a DDoS attack succeeding.
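One common kind of custom traffic-filtering rule is a per-client rate limit. The sketch below shows a sliding-window limiter keyed by IP address; the class name, parameters, and thresholds are assumptions for illustration, and a single in-process limiter like this would not by itself stop a large distributed attack.

```python
from collections import defaultdict, deque

class SlidingWindowLimiter:
    """Allow at most max_requests per client within a rolling time window."""

    def __init__(self, max_requests: int, window_seconds: float) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits: dict[str, deque] = defaultdict(deque)

    def allow(self, client_ip: str, now: float) -> bool:
        """Return True if the request should be forwarded, False if dropped."""
        hits = self._hits[client_ip]
        # Discard timestamps that have fallen out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False
        hits.append(now)
        return True
```

In a reverse-proxy position, a check like allow(client_ip, time.monotonic()) would run before each request is passed to the backend server.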

To Stop Online Impersonators

Solid WAF security will protect your web applications from malicious actors who pose as someone else. When attackers attempt impersonation through cross-site request forgery and SQL injection attacks, a reliable web application firewall detects these methods and filters out unusual, dubious requests before they damage or shut down your website.

To Avoid Transaction Interruptions

Many government websites in the Philippines are used for essential transactions. Your constituents complete activities such as checking their social security status, booking a passport application appointment, securing an NBI certificate, and downloading tax-related documents via government web applications. Hackers and attackers would want to hamper these processes, inconveniencing both your agency and the people you serve.

To Protect Your Reputation

Website downtime frustrates users, especially when they are in the middle of completing an online transaction. As these inconveniences pile up, they may affect your reputation and erode public trust. Web application firewalls can help prevent this erosion by consistently mitigating website threats.

Do you want to learn more? Read about the difference between a firewall and a WAF.


Text

#HướngDẫnCàiNginxTrênUbuntu2204 #CấuHìnhPort80Và443 #Thuecloud

Guide to Installing Nginx on Ubuntu 22.04: Opening Ports 80 & 443 for HTTPS. Nginx is one of the most powerful, high-performance, and resource-efficient web servers, and it can also act as a reverse proxy, load balancer, or cache server for modern web applications. This article walks you step by step through installing Nginx on Ubuntu 22.04,…
