#SQL Coalesce

Text

Top 6 Things to Know About SQL COALESCE

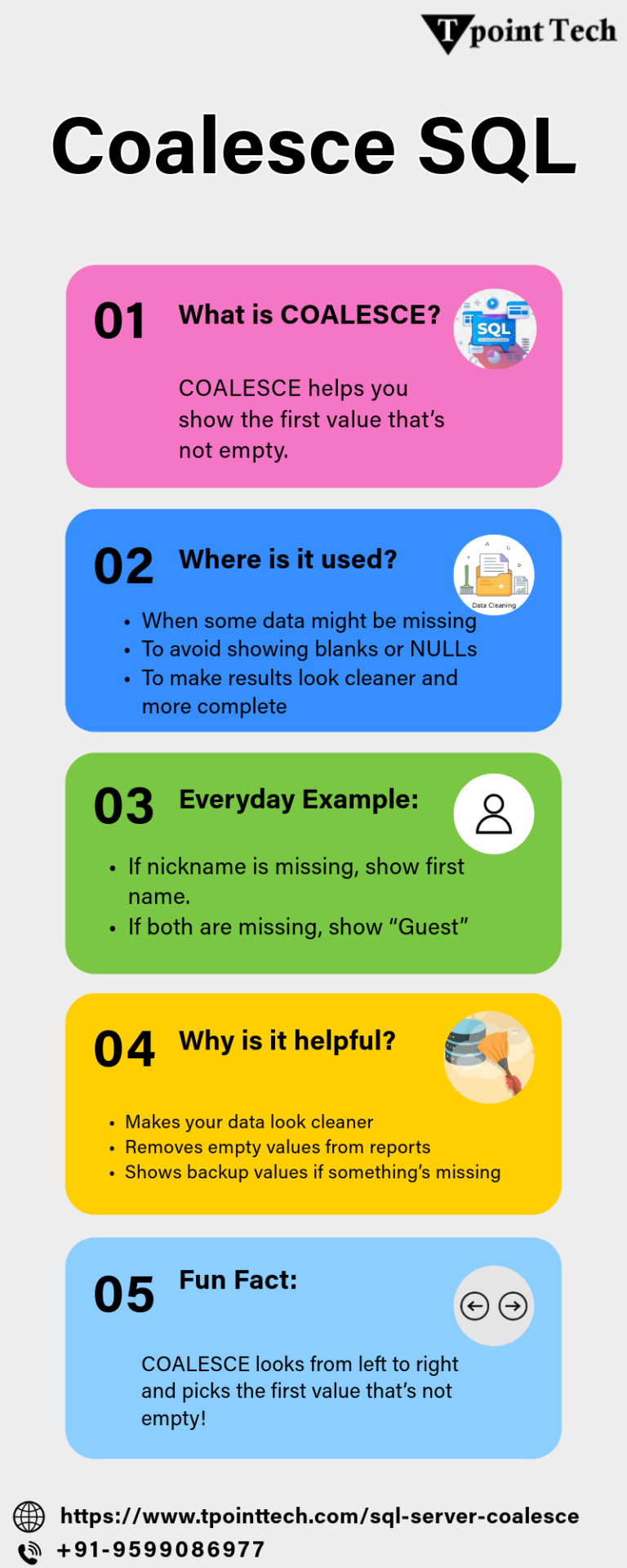

This infographic breaks down the SQL COALESCE function, a powerful tool for handling NULL values in your queries. Discover how the COALESCE function returns the first non-null value from a list, enhancing data readability and reliability. With syntax examples, use cases, and tips, this guide helps simplify your SQL workflow, ensuring cleaner and more consistent results in your database operations.

CONTACT INFORMATION

Email: [email protected]

Phone No. : +91-9599086977

Website: https://www.tpointtech.com/sql-server-coalesce

Location: G-13, 2nd Floor, Sec-3, Noida, UP, 201301, India

0 notes

Text

The foundation of database administration is Structured Query Language (SQL), and SQL Server COALESCE is a particularly potent feature in this field. Let's explore:

https://madesimplemssql.com/sql-server-coalesce/

Please follow us on FB: https://www.facebook.com/profile.php?id=100091338502392

OR

Join our Group: https://www.facebook.com/groups/652527240081844

3 notes

Text

5 Ultimate Industry Trends That Define the Future of Data Science

Data science is a field in constant motion, a dynamic blend of statistics, computer science, and domain expertise. Just when you think you've grasped the latest tool or technique, a new paradigm emerges. As we look towards the immediate future and beyond, several powerful trends are coalescing to redefine what it means to be a data scientist and how data-driven insights are generated.

Here are 5 ultimate industry trends that are shaping the future of data science:

1. Generative AI and Large Language Models (LLMs) as Co-Pilots

This isn't just about data scientists using Gen-AI; it's about Gen-AI augmenting the data scientist themselves.

Automated Code Generation: LLMs are becoming increasingly adept at generating SQL queries, Python scripts for data cleaning, feature engineering, and even basic machine learning models from natural language prompts.

Accelerated Research & Synthesis: LLMs can quickly summarize research papers, explain complex concepts, brainstorm hypotheses, and assist in drafting reports, significantly speeding up the research phase.

Democratizing Access: By lowering the bar for coding and complex analysis, LLMs enable "citizen data scientists" and domain experts to perform more sophisticated data tasks.

Future Impact: Data scientists will shift from being pure coders to being "architects of prompts," validators of AI-generated content, and experts in fine-tuning and integrating LLMs into their workflows.

2. MLOps Maturation and Industrialization

The focus is shifting from building individual models to operationalizing entire machine learning lifecycles.

Production-Ready AI: Organizations realize that a model in a Jupyter notebook provides no business value. MLOps (Machine Learning Operations) provides the practices and tools to reliably deploy, monitor, and maintain ML models in production environments.

Automated Pipelines: Expect greater automation in data ingestion, model training, versioning, testing, deployment, and continuous monitoring.

Observability & Governance: Tools for tracking model performance, data drift, bias detection, and ensuring compliance with regulations will become standard.

Future Impact: Data scientists will need stronger software engineering skills and a deeper understanding of deployment environments. The line between data scientist and ML engineer will continue to blur.

3. Ethical AI and Responsible AI Taking Center Stage

As AI systems become more powerful and pervasive, the ethical implications are no longer an afterthought.

Bias Detection & Mitigation: Rigorous methods for identifying and reducing bias in training data and model outputs will be crucial to ensure fairness and prevent discrimination.

Explainable AI (XAI): The demand for understanding why an AI model made a particular decision will grow, driven by regulatory pressure (e.g., EU AI Act) and the need for trust in critical applications.

Privacy-Preserving AI: Techniques like federated learning and differential privacy will gain prominence to allow models to be trained on sensitive data without compromising individual privacy.

Future Impact: Data scientists will increasingly be responsible for the ethical implications of their models, requiring a strong grasp of responsible AI principles, fairness metrics, and compliance frameworks.

4. Edge AI and Real-time Analytics Proliferation

The need for instant insights and local processing is pushing AI out of the cloud and closer to the data source.

Decentralized Intelligence: Instead of sending all data to a central cloud for processing, AI models will increasingly run on devices (e.g., smart cameras, IoT sensors, autonomous vehicles) at the "edge" of the network.

Low Latency Decisions: This enables real-time decision-making for applications where milliseconds matter, reducing bandwidth constraints and improving responsiveness.

Hybrid Architectures: Data scientists will work with complex hybrid architectures where some processing happens at the edge and aggregated data is sent to the cloud for deeper analysis and model retraining.

Future Impact: Data scientists will need to understand optimization techniques for constrained environments and the challenges of deploying and managing models on diverse hardware.

5. Democratization of Data Science & Augmented Analytics

Data science insights are becoming accessible to a broader audience, not just specialized practitioners.

Low-Code/No-Code (LCNC) Platforms: These platforms empower business analysts and domain experts to build and deploy basic ML models without extensive coding knowledge.

Augmented Analytics: AI-powered tools that automate parts of the data analysis process, such as data preparation, insight generation, and natural language explanations, making data more understandable to non-experts.

Data Literacy: A greater emphasis on fostering data literacy across the entire organization, enabling more employees to interpret and utilize data insights.

Future Impact: Data scientists will evolve into mentors, consultants, and developers of tools that empower others, focusing on solving the most complex and novel problems that LCNC tools cannot handle.

The future of data science is dynamic, exciting, and demanding. Success in this evolving landscape will require not just technical prowess but also adaptability, a strong ethical compass, and a continuous commitment to learning and collaboration.

0 notes

Text

Ensuring Data Accuracy with Cleaning

Ensuring data accuracy with cleaning is an essential step in data preparation. Here’s a structured approach to mastering this process:

1. Understand the Importance of Data Cleaning

Data cleaning is crucial because inaccurate or inconsistent data leads to faulty analysis and incorrect conclusions. Clean data ensures reliability and robustness in decision-making processes.

2. Common Data Issues

Identify the common issues you might face:

Missing Data: Null or empty values.

Duplicate Records: Repeated entries that skew results.

Inconsistent Formatting: Variations in date formats, currency, or units.

Outliers and Errors: Extreme or invalid values.

Data Type Mismatches: Text where numbers should be or vice versa.

Spelling or Casing Errors: Variations like “John Doe” vs. “john doe.”

Irrelevant Data: Data not needed for the analysis.

3. Tools and Libraries for Data Cleaning

Python: Libraries like pandas, numpy, and pyjanitor.

Excel: Built-in cleaning functions and tools.

SQL: Using TRIM, COALESCE, and other string functions.

Specialized Tools: OpenRefine, Talend, or Power Query.
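As a rough illustration of the SQL option above, the following sketch combines TRIM, LOWER, NULLIF, and COALESCE to normalize a name column. It runs against an in-memory SQLite database through Python's standard `sqlite3` module; the `customers` table, its columns, and the `'unknown'` placeholder are assumptions made up for this example.

```python
import sqlite3

def clean_names(rows):
    """Trim and lowercase names; replace NULL or blank names with 'unknown'."""
    conn = sqlite3.connect(":memory:")
    # Hypothetical table used only for this illustration.
    conn.execute("CREATE TABLE customers (id INTEGER, name TEXT)")
    conn.executemany("INSERT INTO customers VALUES (?, ?)", rows)
    cleaned = conn.execute(
        """
        SELECT id,
               -- NULLIF turns an empty string into NULL so COALESCE can catch it
               COALESCE(NULLIF(LOWER(TRIM(name)), ''), 'unknown') AS name
        FROM customers
        ORDER BY id
        """
    ).fetchall()
    conn.close()
    return cleaned

raw_rows = [(1, "  John Doe "), (2, "john doe"), (3, None), (4, "   ")]
print(clean_names(raw_rows))
```

After cleaning, rows 1 and 2 carry the same value, so a later duplicate check can match them.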

4. Step-by-Step Process

a. Assess Data Quality

Perform exploratory data analysis (EDA) using summary statistics and visualizations.

Identify missing values, inconsistencies, and outliers.

b. Handle Missing Data

Imputation: Replace with mean, median, mode, or predictive models.

Removal: Drop rows or columns if too much of their data is missing or the field is non-critical.

c. Remove Duplicates

Use functions like drop_duplicates() in pandas to clean redundant entries.

d. Standardize Formatting

Convert all text to lowercase/uppercase for consistency.

Standardize date formats, units, or numerical scales.

e. Validate Data

Check against business rules or constraints (e.g., dates in a reasonable range).

f. Handle Outliers

Remove or adjust values outside an acceptable range.

g. Data Type Corrections

Convert columns to appropriate types (e.g., float, datetime).
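Steps b through g can be sketched in a few lines of plain Python. This is a minimal illustration using only the standard library, not a production pipeline; the record layout (`name`, `age`, `signup`), the 0 to 120 age range, and the choice of median imputation are all assumptions made for the example.

```python
from datetime import datetime
from statistics import median

def clean_records(records):
    """Clean a list of dicts that have 'name', 'age', and 'signup' keys."""
    cleaned, seen = [], set()
    for rec in records:
        # d. standardize formatting: trim and lowercase the name
        name = (rec.get("name") or "").strip().lower()
        if not name:
            continue  # b. removal: drop rows missing a critical field
        # g. data type correction: age as float, or None if absent or invalid
        try:
            age = float(rec["age"]) if rec.get("age") not in (None, "") else None
        except ValueError:
            age = None
        # e/f. validation and outliers: treat out-of-range ages as missing
        if age is not None and not 0 <= age <= 120:
            age = None
        signup = datetime.strptime(rec["signup"], "%Y-%m-%d").date()
        key = (name, signup)
        if key in seen:
            continue  # c. remove duplicates
        seen.add(key)
        cleaned.append({"name": name, "age": age, "signup": signup})
    # b. imputation: fill missing ages with the median of the known ages
    known = [r["age"] for r in cleaned if r["age"] is not None]
    fill = median(known) if known else None
    for r in cleaned:
        if r["age"] is None:
            r["age"] = fill
    return cleaned

sample_records = [
    {"name": " John Doe ", "age": "34", "signup": "2024-01-05"},
    {"name": "john doe", "age": "34", "signup": "2024-01-05"},   # duplicate after cleaning
    {"name": "Ann Lee", "age": "", "signup": "2024-02-10"},      # missing age
    {"name": "Bo Chan", "age": "430", "signup": "2024-03-01"},   # out-of-range age
    {"name": "Cy Diaz", "age": "40", "signup": "2024-03-02"},
    {"name": None, "age": "22", "signup": "2024-03-03"},         # missing name
]
for row in clean_records(sample_records):
    print(row)
```

Keeping the steps in one function makes the cleaning order explicit and repeatable, which is the point of the automation step that follows.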

5. Automate and Validate

Automation: Use scripts or pipelines to clean data consistently.

Validation: After cleaning, cross-check data against known standards or benchmarks.

6. Continuous Improvement

Keep a record of cleaning steps to ensure reproducibility.

Regularly review processes to adapt to new challenges.

0 notes

Text

Coding, Databases, and Deployment: Essentials of Full-Stack Web Development

In our modern digital era, the realm of web development has become an inseparable part of our daily lives. Whether you're exploring your favourite online store, connecting with friends via social media, or accessing vital information, it's all thanks to the marvel of web applications. Behind the user-friendly interfaces that we often take for granted, there lies a web development symphony, where coding, databases, and deployment come together harmoniously. In this article, we will delve into the fundamental elements that make up the world of web development, examining the art of coding, the magic of database management, and how they coalesce to bring forth the websites and web applications we use on a daily basis.

The Art of Crafting Code for Web Development:

Coding is the very essence of web development, giving life to the functionality and appearance of websites and web applications. When we talk about "full-stack," it means that developers tackle both the front-end and back-end aspects of a project.

Front-End Choreography: This is what captivates users' senses and engages them directly. Front-end development encompasses HTML, CSS, and JavaScript, the trio responsible for defining the structure, style, and interactivity of web pages. Front-end developers are the artists who craft visually appealing and user-friendly interfaces.

Back-End Wizardry: This segment delves into the server-side of web applications. Back-end developers utilize programming languages such as Python, Ruby, PHP, or Node.js to construct the logic, manage database interactions, and orchestrate server operations that empower the front-end. Their mission is to ensure the secure and efficient processing of data.

The Magic of Database Management in Web Development:

Databases serve as the backbone of dynamic web applications, functioning as repositories that store, organize, and retrieve data, making it accessible to users and the application itself.

A Tapestry of Databases: Web developers work with a spectrum of database types, including relational databases like MySQL and PostgreSQL, NoSQL databases such as MongoDB and Cassandra, and in-memory databases like Redis. The choice of database depends on the specific requirements of the project at hand.

Artful Data Modelling: Designing the structure of the database is a crucial endeavour. This involves sculpting tables, defining relationships, and enforcing constraints to ensure the efficient storage and retrieval of data.

The Language of Database Querying: Developers employ query languages, such as SQL for relational databases or specialized queries for NoSQL databases, to engage with the database. This allows them to insert, update, delete, and retrieve data based on user requests.
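The four query operations mentioned above (insert, update, delete, retrieve) might look like this against a relational database. The sketch uses Python's built-in `sqlite3` module with an in-memory database; the `users` table and the example addresses are hypothetical.

```python
import sqlite3

def run_crud_demo():
    """Insert, update, delete, and read rows in a throwaway SQLite database."""
    conn = sqlite3.connect(":memory:")
    # Data modelling: a table with a primary key and constraints.
    conn.execute(
        "CREATE TABLE users ("
        "id INTEGER PRIMARY KEY, email TEXT UNIQUE NOT NULL, name TEXT)"
    )
    # Insert (parameter placeholders keep user input out of the SQL text).
    conn.execute("INSERT INTO users (email, name) VALUES (?, ?)", ("ada@example.com", "Ada"))
    conn.execute("INSERT INTO users (email, name) VALUES (?, ?)", ("bob@example.com", "Bob"))
    # Update.
    conn.execute("UPDATE users SET name = ? WHERE email = ?", ("Ada L.", "ada@example.com"))
    # Delete.
    conn.execute("DELETE FROM users WHERE email = ?", ("bob@example.com",))
    # Retrieve.
    remaining = conn.execute("SELECT id, email, name FROM users ORDER BY id").fetchall()
    conn.close()
    return remaining

print(run_crud_demo())
```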

The Grand Finale - Deployment: Once the coding and database management are finely tuned, the ultimate act is deployment. Deployment is the process of making your web application accessible to the public, and there are several steps involved in this performance:

Selecting a Hosting Stage: Developers select a hosting environment based on the technology stack in use. Popular choices include cloud platforms like AWS, Azure, or Google Cloud, as well as shared hosting services.

Setting the Stage: The web server, database server, and any other necessary components are configured to ensure they can gracefully handle the web application's traffic and security requirements.

The Choreography of Continuous Integration and Continuous Deployment (CI/CD): Automation takes center stage with CI/CD pipelines. This ensures that code changes are meticulously tested and deployed seamlessly, mitigating the risk of errors in the production environment.

The Grand Encore - Scaling: As your application gains popularity and grows, it may demand a larger infrastructure to handle increased traffic. This involves the graceful art of load balancing, adding more servers, and optimizing database performance.

In Conclusion:

Full-stack web development is a multi-faceted domain that beautifully marries the art of coding for web development, the magic of database management, and the intricacies of deployment. It is the synergy of these essential components that breathes life into web applications. By mastering these skills, web developers have the power to craft robust, user-friendly, and powerful websites and web applications that elevate the online experience for users across the globe. Whether you aspire to be a web developer or simply wish to gain insight into the inner workings of the websites you use, understanding these essentials is a pivotal step on the path to becoming a proficient web developer.

#Database Management in Web Development#coding for web development#attitude academy#enrollnow#learnwithattitudeacademy#bestcourse#attitude tally academy#full stack development course in yamuna vihar

0 notes

Text

Mastering the COALESCE Function in SQL: Handling Null Values with Elegance

COALESCE is a SQL function that returns the first non-null value in a list of expressions. It is commonly used to provide a default value when working with nullable columns or expressions in SQL queries. Understanding and using COALESCE can make your SQL queries more robust and readable and improve the overall quality of your database interactions.
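A small sketch of that behaviour, run through Python's `sqlite3` module: COALESCE walks its arguments left to right and returns the first one that is not NULL. The `people` table, its columns, and the `'Unknown'` default are assumptions for the example.

```python
import sqlite3

def display_names(rows):
    """Return nickname if present, else full_name, else the literal 'Unknown'."""
    conn = sqlite3.connect(":memory:")
    # Hypothetical table with two nullable columns.
    conn.execute("CREATE TABLE people (nickname TEXT, full_name TEXT)")
    conn.executemany("INSERT INTO people VALUES (?, ?)", rows)
    result = [
        row[0]
        for row in conn.execute(
            "SELECT COALESCE(nickname, full_name, 'Unknown') FROM people ORDER BY rowid"
        )
    ]
    conn.close()
    return result

people = [("Ace", "Alice Smith"), (None, "Bob Jones"), (None, None)]
print(display_names(people))
```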

0 notes

Text

Handling NULL

MySQL's COALESCE() function lets you handle NULL values and replace them with whatever the user defines. For example:

COALESCE(NULL,'Indefinido');

It is especially useful with functions like CONCAT(), which returns NULL if any of its arguments is NULL. For example:

CONCAT('CONCAT con ',COALESCE(NULL,'NULL'));
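The same NULL propagation can be reproduced without a MySQL server. The sketch below is an analogue, not MySQL itself: it uses SQLite's `||` concatenation operator (via Python's `sqlite3`), which also yields NULL when either operand is NULL. The string literals are made up for the example.

```python
import sqlite3

def concat_demo():
    """Show NULL propagation in concatenation and the COALESCE workaround."""
    conn = sqlite3.connect(":memory:")

    def scalar(sql):
        return conn.execute(sql).fetchone()[0]

    # Concatenating with NULL gives NULL (Python sees it as None).
    without_coalesce = scalar("SELECT 'CONCAT with ' || NULL")
    # Wrapping the nullable operand in COALESCE keeps the rest of the string.
    with_coalesce = scalar("SELECT 'CONCAT with ' || COALESCE(NULL, 'NULL')")
    conn.close()
    return without_coalesce, with_coalesce

print(concat_demo())
```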

0 notes

Text

COALESCE in SQL

COALESCE in SQL is a powerful function used to handle NULL values by returning the first non-null value from a list of expressions. It’s perfect for ensuring data completeness in reports and queries. Commonly used in SELECT statements, COALESCE SQL helps create cleaner outputs by replacing missing data with default or fallback values.

0 notes

Text

The foundation of database administration is Structured Query Language (SQL), and SQL Server COALESCE is a particularly potent feature in this field. Check all the details in the article below:

https://madesimplemssql.com/sql-server-coalesce/

Please follow our FB page: https://www.facebook.com/profile.php?id=100091338502392

0 notes

Text

SQL Server Essentials Series

SQL is an essential part of SQL administration and SQL development. A quick overview of the basics can take you a long way in a database career and make you more comfortable using powerful SQL features.

This article introduces the basic and important features of SQL in SQL Server. This guide demonstrates how SQL Server works, starting from creating tables, defining relationships, and…

View On WordPress

#DBAM#DBMS#De-Normalization#Normalization#RDBMS#SQL#SQL Coalesce#SQL delete#SQL Insert#SQL Join#SQL Like#SQL Order#SQL Union#SQL Update#What is SQL?

0 notes

Text

MAX() can be used for matching

I found something interesting here: they used the SQL function MAX() to search for the record that matches the WHERE condition. If no record matches, MAX() returns NULL, so you can use COALESCE() to replace it with 'XX':

select coalesce(max(country_code), 'XX') from postage_table where country_code = 'JP';
 coalesce
----------
 JP
(1 row)

select coalesce(max(country_code), 'XX') from postage_table where country_code = 'US';
 coalesce
----------
 US
(1 row)

select coalesce(max(country_code), 'XX') from postage_table where country_code = 'CN';
 coalesce
----------
 XX
(1 row)
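The trick works because an aggregate without GROUP BY always returns exactly one row, and MAX() over zero matching rows is NULL. Here is a minimal reproduction using Python's `sqlite3` rather than the PostgreSQL session shown in the post; the table contents (`JP` and `US` only) are an assumption inferred from the output.

```python
import sqlite3

def lookup_country(code, known_codes=("JP", "US")):
    """Return the code if it exists in postage_table, otherwise 'XX'."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE postage_table (country_code TEXT)")
    conn.executemany(
        "INSERT INTO postage_table VALUES (?)", [(c,) for c in known_codes]
    )
    # MAX() over an empty match set yields one row containing NULL,
    # which COALESCE then replaces with the fallback value.
    row = conn.execute(
        "SELECT COALESCE(MAX(country_code), 'XX') "
        "FROM postage_table WHERE country_code = ?",
        (code,),
    ).fetchone()
    conn.close()
    return row[0]

for code in ("JP", "US", "CN"):
    print(code, "->", lookup_country(code))
```

Without MAX(), the third query would return zero rows and COALESCE would never be evaluated.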

0 notes

Text

Which SQL Functions Are Available in NetSuite Saved Searches?

Numeric Functions

Examples

ABS( {amount} )

ACOS( 0.35 )

ASIN( 1 )

ATAN( 0.2 )

ATAN2( 0.2, 0.3 )

BITAND( 5, 3 )

CEIL( {today}-{createddate} )

COS( 0.35 )

COSH( -3.15 )

EXP( {rate} )

FLOOR( {today}-{createddate} )

LN( 20 )

LOG( 10, 20 )

MOD( 3:56 pm-{lastmessagedate},7 )

NANVL( {itemisbn13}, '' )

POWER( {custcoldaystoship},-.196 )

REMAINDER( {transaction.totalamount}, {transaction.amountpaid} )

ROUND( ( {today}-{startdate} ), 0 )

SIGN( {quantity} )

SIN( 5.2 )

SINH( 3 )

SQRT( POWER( {taxamount}, 2 ) )

TAN( -5.2 )

TANH( 3 )

TRUNC( {amount}, 1 )

Character Functions Returning Character Values

Examples

CHR( 13 )

CONCAT( {number},CONCAT( '_',{line} ) )

INITCAP( {customer.companyname} )

LOWER( {customer.companyname} )

LPAD( {line},3,'0' )

LTRIM( {companyname},'-' )

REGEXP_REPLACE( {name}, '^.*:', '' )

REGEXP_SUBSTR( {item},'[^:]+$' )

REPLACE( {serialnumber}, '&', ',' )

RPAD( {firstname},20 )

RTRIM( {paidtransaction.externalid},'-Invoice' )

SOUNDEX( {companyname} )

SUBSTR( {transaction.salesrep},1,3 )

TRANSLATE( {expensecategory}, ' ', '+' )

TRIM ( BOTH ',' FROM {custrecord_assetcost} )

UPPER( {unit} )

Character Functions Returning Number Values

Examples

ASCII( {taxitem} )

INSTR( {messages.message}, 'cspdr3' )

LENGTH( {name} )

REGEXP_INSTR ( {item.unitstype}, '\d' )

TO_NUMBER( {quantity} )

Datetime Functions

Examples

ADD_MONTHS( {today},-1 )

LAST_DAY( {today} )

MONTHS_BETWEEN( SYSDATE,{createddate} )

NEXT_DAY( {today},'SATURDAY' )

ROUND( TO_DATE( '12/31/2014', 'mm/dd/yyyy' )-{datecreated} )

TO_CHAR( {date}, 'hh24' )

TO_DATE( '31.12.2011', 'DD.MM.YYYY' )

TRUNC( {today},'YYYY' )

Also see Sysdate in one of the example sections below.

NULL-Related Functions

Examples

COALESCE( {quantitycommitted}, 0 )

NULLIF( {price}, 0 )

NVL( {quantity},'0' )

NVL2( {location}, 1, 2 )
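The four NULL-related functions differ only in which argument they return and when. The plain-Python models below are a sketch of the standard SQL semantics, with `None` standing in for NULL; they are not NetSuite code, and `safe_ratio` is a hypothetical helper added to show why NULLIF is useful.

```python
def coalesce(*values):
    """First argument that is not None, or None if all are None."""
    return next((v for v in values if v is not None), None)

def nvl(value, default):
    """default when value is None, otherwise value."""
    return default if value is None else value

def nvl2(value, if_not_null, if_null):
    """if_not_null when value is present, otherwise if_null."""
    return if_null if value is None else if_not_null

def nullif(a, b):
    """None when a equals b, otherwise a."""
    return None if a == b else a

def safe_ratio(amount, quantity):
    """Divide without a zero-division error by turning a 0 divisor into None."""
    divisor = nullif(quantity, 0)
    return None if divisor is None else amount / divisor

print(coalesce(None, 0))         # quantity committed is missing
print(nvl(None, "0"))
print(nvl2("Warehouse A", 1, 2))
print(nvl2(None, 1, 2))
print(safe_ratio(100.0, 0))
print(safe_ratio(100.0, 4))
```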

Decode

Examples

DECODE( {systemnotes.name}, {assigned},'T','F' )

Sysdate

Examples

TO_DATE( SYSDATE, 'DD.MM.YYYY' )

or

TO_CHAR( SYSDATE, 'mm/dd/yyyy' )

See also TO_DATE and TO_CHAR in the Datetime Functions.

Case

Examples

CASE {state}

WHEN 'NY' THEN 'New York'

WHEN 'CA' THEN 'California'

ELSE {state}

END

or

CASE

WHEN {quantityavailable} > 19 THEN 'In Stock'

WHEN {quantityavailable} > 1 THEN 'Limited Availability'

WHEN {quantityavailable} = 1 THEN 'The Last Piece'

WHEN {quantityavailable} IS NULL THEN 'Discontinued'

ELSE 'Out of Stock'

END

Analytic and Aggregate Functions

Examples

DENSE_RANK ( {amount} WITHIN GROUP ( ORDER BY {AMOUNT} ) )

or

DENSE_RANK( ) OVER ( PARTITION BY {name} ORDER BY {trandate} DESC )

KEEP( DENSE_RANK LAST ORDER BY {internalid} )

RANK( ) OVER ( PARTITION by {tranid} ORDER BY {line} DESC )

or

RANK ( {amount} WITHIN GROUP ( ORDER BY {amount} ) )

1 note

Text

Pyspark fhash

For Spark jobs, prefer using Dataset/DataFrame over RDD, as Dataset and DataFrame include several optimization modules that improve the performance of Spark workloads. In PySpark, use DataFrame over RDD, as Datasets are not supported in PySpark applications.

Spark RDD is a building block of Spark programming. Even when we use DataFrame/Dataset, Spark internally uses RDDs to execute operations and queries, but in an efficient and optimized way: it analyzes your query and creates the execution plan thanks to Project Tungsten and the Catalyst optimizer. Using RDD directly leads to performance issues because Spark doesn't know how to apply these optimization techniques, and RDDs serialize and deserialize the data when it is distributed across a cluster (repartition and shuffling).

Since a Spark/PySpark DataFrame internally stores data in a binary format, there is no need to serialize and deserialize data when it is distributed across a cluster, so you see a performance improvement.

Spark Dataset/DataFrame includes Project Tungsten, which optimizes Spark jobs for memory and CPU efficiency. Tungsten is a Spark SQL component that provides increased performance by rewriting Spark operations in bytecode at runtime. Tungsten improves performance by focusing on jobs close to bare metal CPU and memory efficiency.

Because a DataFrame is a columnar format that contains additional metadata, Spark can perform certain optimizations on a query. Before your query is run, a logical plan is created using the Catalyst Optimizer, and then it is executed using the Tungsten execution engine.

What is Catalyst?

Catalyst Optimizer is an integrated query optimizer and execution scheduler for Spark Datasets/DataFrames. It is where Spark improves the speed of your code execution by logically improving it. Catalyst Optimizer can refactor complex queries and decides the order of query execution using rule-based and cost-based optimization.

Additionally, if you want type safety at compile time, prefer using Dataset. For example, if you refer to a field that doesn't exist in your code, a Dataset generates a compile-time error, whereas a DataFrame compiles fine but returns an error at run time.

When you want to reduce the number of partitions, prefer coalesce(): it is an optimized version of repartition() that moves less data across partitions, which generally performs better on larger datasets. Note: Use repartition() when you want to increase the number of partitions.

This yields the output Repartition size : 4. repartition() redistributes data from all partitions, which is a full shuffle and therefore a very expensive operation when dealing with billions or trillions of records.

Tuning the partition size can improve the performance of a Spark application.
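The difference between repartition() and coalesce() described in this post can be pictured with a toy model in plain Python. This is not Spark code and does not reflect Spark's actual implementation; partitions are modelled as lists, and both function names are made up for the illustration. The idea it shows: a full shuffle reassigns every record, while coalescing only merges whole existing partitions.

```python
def repartition_model(partitions, n):
    """Full shuffle: every record is dealt out again across n new partitions."""
    records = [r for part in partitions for r in part]
    new_parts = [[] for _ in range(n)]
    for i, record in enumerate(records):
        new_parts[i % n].append(record)  # round-robin assignment
    return new_parts

def coalesce_model(partitions, n):
    """Merge whole partitions into n groups; records that shared a partition stay together."""
    if n >= len(partitions):
        # In this model, as in Spark, coalescing cannot increase the partition count.
        return [list(part) for part in partitions]
    new_parts = [[] for _ in range(n)]
    for i, part in enumerate(partitions):
        new_parts[i * n // len(partitions)].extend(part)
    return new_parts

parts = [[1, 2], [3, 4], [5, 6], [7, 8], [9, 10], [11, 12]]
print(repartition_model(parts, 4))  # records from each original partition are scattered
print(coalesce_model(parts, 3))     # original partitions are merged pairwise
```

In the coalesced result each original partition ends up inside exactly one new partition, which is why fewer records need to move.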

0 notes

Text

Google doc merge cell commande

For more info, see Data sources you can use for a mail merge.

For more info, see Mail merge: Edit recipients.

For more info on sorting and filtering, see Sort the data for a mail merge or Filter the data for a mail merge.

Connect and edit the mailing list

Connect to your data source. The Excel spreadsheet to be used in the mail merge is stored on your local machine.

Changes or additions to your spreadsheet are completed before it's connected to your mail merge document in Word.

For more information, see Prepare your Excel data source for mail merge in Word. For example, to address readers by their first name in your document, you'll need separate columns for first and last names.

All data to be merged is present in the first sheet of your spreadsheet.

Data entries with percentages, currencies, and postal codes are correctly formatted in the spreadsheet so that Word can properly read their values.

In case you need years as well, you'll have to create the formula in the neighboring column since JOIN works with one column at a time: JOIN (', ',FILTER (C:C,A:AE2)) So, this option equips Google Sheets with a few functions to combine multiple rows into one based on duplicates.

We hope this tutorial will help you learn how to merge cells in Google Docs. Select the cells you want to merge, go to Format on the toolbar on top, then press Table and Merge cells here. This lets you create a single 'master' document (the template) from which you can generate many similar documents, each customized with the data being merged.

A mail merge takes values from rows of a spreadsheet or other data source and inserts them into a template document.

Data manipulation language (DML) statements in Google Standard SQL: INSERT statement, DELETE statement, TRUNCATE TABLE statement, UPDATE statement, MERGE.

Make sure: Column names in your spreadsheet match the field names you want to insert in your mail merge.

Method 2: There is also another way to merge cells in a table in Google Docs. Performing Mail Merge with the Google Docs API. the diffusion model checkpoint file to (and/or load from) your Google Drive. This SQL keywords reference contains the reserved words in SQL.

Here are some tips to prepare your Excel spreadsheet for a mail merge. For issues, join the Disco Diffusion Discord or message us on twitter at. The app allows users to create and edit files online while collaborating with other users in real-time.
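The mail-merge idea described in this post (one template, one output document per data row) can be sketched with Python's standard library. This is only an illustration of the concept, not the Word or Google Docs API; the column names, template text, and sample rows are invented for the example.

```python
import csv
import io
from string import Template

def mail_merge(template_text, csv_text):
    """Produce one filled-in document per CSV row."""
    template = Template(template_text)
    rows = csv.DictReader(io.StringIO(csv_text))
    # substitute() raises KeyError if a $field has no matching column,
    # mirroring the advice that column names must match the merge fields.
    return [template.substitute(row) for row in rows]

template_text = "Dear $first_name $last_name, your order $order_id has shipped."
csv_text = (
    "first_name,last_name,order_id\n"
    "Ada,Lovelace,1001\n"
    "Alan,Turing,1002\n"
)
for letter in mail_merge(template_text, csv_text):
    print(letter)
```

Separate `first_name` and `last_name` columns are what allow the template to address a reader by first name alone.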

String Functions: ASCII CHAR_LENGTH CHARACTER_LENGTH CONCAT CONCAT_WS FIELD FIND_IN_SET FORMAT INSERT INSTR LCASE LEFT LENGTH LOCATE LOWER LPAD LTRIM MID POSITION REPEAT REPLACE REVERSE RIGHT RPAD RTRIM SPACE STRCMP SUBSTR SUBSTRING SUBSTRING_INDEX TRIM UCASE UPPER

Numeric Functions: ABS ACOS ASIN ATAN ATAN2 AVG CEIL CEILING COS COT COUNT DEGREES DIV EXP FLOOR GREATEST LEAST LN LOG LOG10 LOG2 MAX MIN MOD PI POW POWER RADIANS RAND ROUND SIGN SIN SQRT SUM TAN TRUNCATE

Date Functions: ADDDATE ADDTIME CURDATE CURRENT_DATE CURRENT_TIME CURRENT_TIMESTAMP CURTIME DATE DATEDIFF DATE_ADD DATE_FORMAT DATE_SUB DAY DAYNAME DAYOFMONTH DAYOFWEEK DAYOFYEAR EXTRACT FROM_DAYS HOUR LAST_DAY LOCALTIME LOCALTIMESTAMP MAKEDATE MAKETIME MICROSECOND MINUTE MONTH MONTHNAME NOW PERIOD_ADD PERIOD_DIFF QUARTER SECOND SEC_TO_TIME STR_TO_DATE SUBDATE SUBTIME SYSDATE TIME TIME_FORMAT TIME_TO_SEC TIMEDIFF TIMESTAMP TO_DAYS WEEK WEEKDAY WEEKOFYEAR YEAR YEARWEEK

Advanced Functions: BIN BINARY CASE CAST COALESCE CONNECTION_ID CONV CONVERT CURRENT_USER DATABASE IF IFNULL ISNULL LAST_INSERT_ID NULLIF SESSION_USER SYSTEM_USER USER VERSION

SQL Server Functions

Google Sheets is a spreadsheet program included as part of the free, web-based Google Docs.

0 notes

Text

Toad for sql server null

When writing a query, you frequently need to retrieve data from a range of dates such as the last week, month, or quarter. Identifying the actual date range to use is time consuming, confusing, and is often error prone.

Note: The date range format is specific to Toad. If you want to view the syntax of the date range so you can copy it to another application, click the date range link in the Query tab, as illustrated in the following:

You need to create a query that retrieves a list of the orders that were placed last year. In the past, you hard coded the dates to create the following statement:

WHERE (sales.ord_date BETWEEN ' 00:00:00'

With Toad, you simply select Last year to dynamically insert the correct SQL. This ensures that your query is valid regardless of the date. The following displays the revised query:

WHERE (sales.ord_date = '' /*Last year*/ )

The criteria is empty and contains two single quotes without a space. Toad inserts the correct SQL between these quotes when you execute the query.

The following date range commands are available and can also be used in the Editor:

Select a column with a date data type from a table in the Diagram tab.

Select the Where Condition field below the date column and click.

Review the following for additional information:

Calendar: Select the type of calendar to use for the date range values. If you select a calendar type other than Gregorian, you can click to edit the selected calendar.

Review the following for additional information about the Calendar Editor:

Fiscal: For the Fiscal calendar type, you can select Normal, or two four week periods and one 5 week period that can be defined as 4/4/5, 5/4/4, or 4/5/4.

Academic or Custom: For the Period name, updating the name updates the name value in the Date Range Values list. If you select a Custom calendar, you can customize the dates. Click to view the dates. If you click this for the Fiscal or Academic calendars, you are prompted to revert back to the original version.

Add additional columns and complete the query.

Tip: You can save this Query Builder file (.tsm) and click Automate to schedule query execution, generate a report of the results, and email the report to colleagues. See Automate Tasks for more information.

When querying a SQL Server database, there may be times where you don't want null values to be returned in your result set. And there may be times where you do want them returned. But there may also be times where you do want them returned, but as a different value. That's what the ISNULL() function is for.

ISNULL() is a T-SQL function that allows you to replace NULL with a specified value of your choice. Here's a basic query that returns a small result set:

SELECT TaskCode AS Result

We can see that there are three rows that contain null values. If we didn't want the null values to appear as such, we could use ISNULL() to replace null with a different value. Like this:

SELECT ISNULL(TaskCode, 'N/A') AS Result

We could also replace it with the empty string:

SELECT ISNULL(TaskCode, '') AS Result

Note that ISNULL() requires that the second argument is of a type that can be implicitly converted to the data type of the first argument. That's because it returns the result using the data type of the first argument.

There are some T-SQL functions where null values are eliminated from the result set. In such cases, null values won't be returned at all. While this might be a desirable outcome in some cases, in other cases it could be disastrous, depending on what you need to do with the data once it's returned.

One example of such a function is STRING_AGG(). This function allows you to return the result set as a delimited list. However, it also eliminates null values from the result set. So if we use this function with the above sample data, we'd end up with three results instead of six:

SELECT STRING_AGG(TaskCode, ', ') AS Result

So the three rows containing null values aren't returned. In many cases, this is a perfect result, as our result set isn't cluttered up with null values. However, this could also cause issues, depending on what the data is going to be used for.

Therefore, if we want to retain the rows with null values, we can use ISNULL() to replace the null values with another value:

SELECT STRING_AGG(ISNULL(TaskCode, 'N/A'), ', ') AS Result

cat123, N/A, N/A, pnt456, rof789, N/A

The COALESCE() Function
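The way a string aggregate drops NULLs, and how replacing them first keeps every row, can be tried out without SQL Server. The sketch below is an analogue in SQLite (through Python's `sqlite3`): `group_concat` stands in for STRING_AGG() and COALESCE for ISNULL(), since SQLite has no two-argument ISNULL(). The `Tasks` table name and `TaskId` column are assumptions; the source text does not show them.

```python
import sqlite3

def aggregate_task_codes():
    """Aggregate TaskCode with and without replacing NULLs first."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE Tasks (TaskId INTEGER PRIMARY KEY, TaskCode TEXT)")
    conn.executemany(
        "INSERT INTO Tasks (TaskCode) VALUES (?)",
        [("cat123",), (None,), (None,), ("pnt456",), ("rof789",), (None,)],
    )
    # NULL values are silently skipped by the aggregate.
    skipped = conn.execute(
        "SELECT group_concat(TaskCode, ', ') FROM Tasks"
    ).fetchone()[0]
    # Replacing NULL before aggregating keeps all six rows.
    kept = conn.execute(
        "SELECT group_concat(COALESCE(TaskCode, 'N/A'), ', ') FROM Tasks"
    ).fetchone()[0]
    conn.close()
    return skipped, kept

skipped, kept = aggregate_task_codes()
print(skipped)
print(kept)
```

SQLite does not guarantee the order of items in `group_concat` output, so the exact ordering of the printed lists should not be relied on.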

1 note
