#SQL Server maximum value


Finding the Maximum Value Across Multiple Columns in SQL Server

To find the maximum value across multiple columns in SQL Server 2022, you can use several approaches depending on your requirements and the structure of your data. Here are a few methods to consider:

1. Using CASE Statement or IIF

You can use a CASE statement or IIF function to compare columns within a row and return the highest value. This method is straightforward but can get cumbersome with…
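As a rough illustration of the CASE approach, here is a minimal sketch run through Python's built-in sqlite3 module rather than SQL Server itself. The `scores` table and its `q1`, `q2`, `q3` columns are hypothetical, and the columns are assumed to contain no NULLs; the CASE expression itself is standard SQL and should carry over to SQL Server largely unchanged.

```python
import sqlite3

# In-memory database with a hypothetical table of three comparable columns.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE scores (id INTEGER PRIMARY KEY, q1 INTEGER, q2 INTEGER, q3 INTEGER)"
)
conn.executemany(
    "INSERT INTO scores VALUES (?, ?, ?, ?)",
    [(1, 10, 25, 7), (2, 40, 5, 40), (3, 3, 2, 9)],
)

# Compare the columns within each row and return the largest one.
# Assumes the columns are NOT NULL; NULL handling would need extra branches.
ROW_MAX_SQL = """
SELECT id,
       CASE
           WHEN q1 >= q2 AND q1 >= q3 THEN q1
           WHEN q2 >= q3 THEN q2
           ELSE q3
       END AS max_score
FROM scores
ORDER BY id
"""
row_max = conn.execute(ROW_MAX_SQL).fetchall()
print(row_max)
```

Each extra column adds more comparisons to every branch, which is why this pattern becomes cumbersome as the column count grows.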


#comparing multiple columns SQL#SQL CROSS APPLY technique#SQL Server aggregate functions#SQL Server date comparison#SQL Server maximum value


Structured Query Language (SQL): A Comprehensive Guide

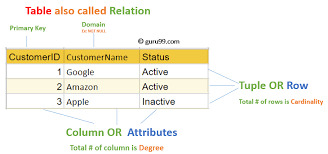

Structured Query Language, commonly called SQL (pronounced "ess-que-ell" or sometimes "sequel"), is the standard language for managing and manipulating relational databases. Developed in the early 1970s by IBM researchers Donald D. Chamberlin and Raymond F. Boyce, SQL has since become the dominant language for database systems around the world.

Structured query language commands with examples

Today, virtually every major relational database management system (RDBMS), such as MySQL, PostgreSQL, Oracle, SQL Server, and SQLite, uses SQL as its core query language.

What is SQL?

SQL is a domain-specific language used to:

Retrieve data from a database.

Insert, update, and delete data.

Create and modify database structures (tables, indexes, views).

Manage access permissions and security.

Perform data analytics and reporting.

In simple terms, SQL allows users to communicate with databases to store and retrieve structured data.

Key Characteristics of SQL

Declarative Language: SQL focuses on what to do, not how to do it. For instance, when you write SELECT * FROM users, you don’t need to tell SQL how to fetch the data; it figures that out.

Standardized: SQL has been standardized by organizations like ANSI and ISO, with most database systems implementing the core language and adding their own extensions.

Relational Model-Based: SQL is designed to work with tables (also called relations) in which data is organized in rows and columns.

Core Components of SQL

SQL can be broken down into several major categories of commands, each with specific functions.

1. Data Definition Language (DDL)

DDL commands are used to define or modify the structure of database objects like tables, schemas, indexes, and so on.

Common DDL commands:

CREATE: To create a new table or database.

ALTER: To modify an existing table (add or remove columns).

DROP: To delete a table or database.

TRUNCATE: To delete all rows from a table but preserve its structure.

Example:

CREATE TABLE employees (
    id INT PRIMARY KEY,
    name VARCHAR(100),
    salary DECIMAL(10,2)
);

2. Data Manipulation Language (DML)

DML commands are used for data operations such as inserting, updating, or deleting records.

Common DML commands:

SELECT: Retrieve data from one or more tables.

INSERT: Add new records.

UPDATE: Modify existing records.

DELETE: Remove records.

Example:

INSERT INTO employees (id, name, salary)
VALUES (1, 'Alice Johnson', 75000.00);

3. Data Query Language (DQL)

Some experts separate SELECT from DML and treat it as its own category: DQL.

Example:

SELECT name, salary FROM employees WHERE salary > 60000;

This command retrieves names and salaries of employees earning more than 60,000.
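To see the DDL, DML, and DQL statements working together, here is a minimal sketch that runs them against an in-memory SQLite database through Python's standard sqlite3 module. It assumes an `employees` table with `id`, `name`, and `salary` columns; the second employee, "Bob Lee", is a made-up row added only so the filter has something to exclude.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# DDL: define the table structure.
conn.execute(
    "CREATE TABLE employees (id INT PRIMARY KEY, name VARCHAR(100), salary DECIMAL(10,2))"
)

# DML: insert rows (the second row is hypothetical sample data).
conn.executemany(
    "INSERT INTO employees (id, name, salary) VALUES (?, ?, ?)",
    [(1, "Alice Johnson", 75000.00), (2, "Bob Lee", 52000.00)],
)

# DQL: retrieve only the employees earning more than 60,000.
high_paid = conn.execute(
    "SELECT name, salary FROM employees WHERE salary > 60000"
).fetchall()
print(high_paid)
```

Only Alice Johnson passes the filter, since the hypothetical second row falls below the threshold.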

4. Data Control Language (DCL)

DCL commands handle permissions and access control.

Common DCL commands:

GRANT: Give access to users.

REVOKE: Remove access.

Example:

GRANT SELECT, INSERT ON employees TO john_doe;

5. Transaction Control Language (TCL)

TCL commands manage transactions to ensure data integrity.

Common TCL commands:

BEGIN: Start a transaction.

COMMIT: Save changes.

ROLLBACK: Undo changes.

SAVEPOINT: Set a savepoint inside a transaction.

Example:

BEGIN;
UPDATE employees SET salary = salary * 1.10;
COMMIT;
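The difference between COMMIT and ROLLBACK is easiest to see side by side. The sketch below uses Python's sqlite3 module in autocommit mode so that BEGIN, COMMIT, and ROLLBACK are issued explicitly; the `employees` table and its single row are assumptions made for the illustration.

```python
import sqlite3

# isolation_level=None puts the connection in autocommit mode,
# so transactions are controlled only by the explicit statements below.
conn = sqlite3.connect(":memory:", isolation_level=None)
conn.execute("CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, salary REAL)")
conn.execute("INSERT INTO employees VALUES (1, 'Alice Johnson', 75000.0)")

# A raise that is rolled back leaves the data untouched.
conn.execute("BEGIN")
conn.execute("UPDATE employees SET salary = salary * 1.10")
conn.execute("ROLLBACK")
after_rollback = conn.execute("SELECT salary FROM employees").fetchone()[0]

# The same raise, committed this time, becomes permanent.
conn.execute("BEGIN")
conn.execute("UPDATE employees SET salary = salary * 1.10")
conn.execute("COMMIT")
after_commit = conn.execute("SELECT salary FROM employees").fetchone()[0]

print(after_rollback, round(after_commit, 2))
```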

SQL Clauses and Syntax Elements

WHERE: Filters rows.

ORDER BY: Sorts results.

GROUP BY: Groups rows sharing a property.

HAVING: Filters groups.

JOIN: Combines rows from two or more tables.

Example with JOIN:

SELECT employees.name, departments.name
FROM employees
JOIN departments ON employees.dept_id = departments.id;
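The other clauses in the list (WHERE, GROUP BY, HAVING, ORDER BY) are often combined in a single query. The following sketch shows one way they fit together, using Python's sqlite3 module and a hypothetical `employees` table with made-up rows: WHERE filters rows before grouping, and HAVING filters the groups afterwards.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, dept_id INTEGER, salary REAL)"
)
conn.executemany(
    "INSERT INTO employees VALUES (?, ?, ?, ?)",
    [
        (1, "Alice", 10, 75000.0),
        (2, "Bob", 10, 65000.0),
        (3, "Carol", 20, 50000.0),
        (4, "Dan", 20, 54000.0),
        (5, "Eve", 30, 90000.0),
    ],
)

# WHERE filters individual rows, GROUP BY forms one group per department,
# HAVING keeps only groups with at least two members, ORDER BY sorts the result.
dept_stats = conn.execute(
    """
    SELECT dept_id, COUNT(*) AS headcount, AVG(salary) AS avg_salary
    FROM employees
    WHERE salary > 40000
    GROUP BY dept_id
    HAVING COUNT(*) >= 2
    ORDER BY avg_salary DESC
    """
).fetchall()
print(dept_stats)
```

Department 30 drops out because it has only one employee, which is the kind of condition WHERE cannot express on its own.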

Types of Joins in SQL

INNER JOIN: Returns records with matching values in both tables.

LEFT JOIN: Returns all records from the left table, and matched records from the right.

RIGHT JOIN: Opposite of LEFT JOIN.

FULL JOIN: Returns all records when there is a match in either table.

SELF JOIN: Joins a table to itself.
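A small side-by-side comparison makes the INNER versus LEFT distinction concrete. This is a sketch using Python's sqlite3 module; the `employees` and `departments` tables and their rows are hypothetical, with one employee deliberately left without a department.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE departments (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, dept_id INTEGER);
    INSERT INTO departments VALUES (1, 'Engineering'), (2, 'Sales'), (3, 'Legal');
    INSERT INTO employees VALUES (1, 'Alice', 1), (2, 'Bob', 2), (3, 'Carol', NULL);
    """
)

# INNER JOIN keeps only employees that have a matching department.
inner_rows = conn.execute(
    """
    SELECT e.name, d.name
    FROM employees AS e
    INNER JOIN departments AS d ON e.dept_id = d.id
    ORDER BY e.id
    """
).fetchall()

# LEFT JOIN keeps every employee; missing departments come back as NULL (None).
left_rows = conn.execute(
    """
    SELECT e.name, d.name
    FROM employees AS e
    LEFT JOIN departments AS d ON e.dept_id = d.id
    ORDER BY e.id
    """
).fetchall()

print(inner_rows)
print(left_rows)
```

Carol appears only in the LEFT JOIN result, paired with None, because she has no matching department row.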

Subqueries and Nested Queries

A subquery is a query inside another query.

Example:

SELECT name FROM employees
WHERE salary > (SELECT AVG(salary) FROM employees);

This finds employees who earn above the average salary.
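Here is a runnable sketch of that above-average query, using Python's sqlite3 module with a hypothetical `employees` table whose four rows are invented for the example. The inner query is evaluated to a single number, which the outer query then uses as its threshold.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, salary INTEGER)")
conn.executemany(
    "INSERT INTO employees (name, salary) VALUES (?, ?)",
    [("Alice", 75000), ("Bob", 52000), ("Carol", 61000), ("Dan", 80000)],
)

# The inner SELECT computes the average salary (67000 for this sample data).
average = conn.execute("SELECT AVG(salary) FROM employees").fetchone()[0]

# The outer SELECT compares each row against the subquery's result.
above_average = [
    row[0]
    for row in conn.execute(
        """
        SELECT name FROM employees
        WHERE salary > (SELECT AVG(salary) FROM employees)
        ORDER BY name
        """
    )
]
print(average, above_average)
```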

Functions in SQL

SQL includes built-in functions for performing calculations and formatting:

Aggregate Functions: SUM(), AVG(), COUNT(), MAX(), MIN()

String Functions: UPPER(), LOWER(), CONCAT()

Date Functions (dialect-specific): NOW() and CURDATE() in MySQL, DATEADD() in SQL Server

Conversion Functions: CAST(), CONVERT()
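A few of these functions can be tried out with Python's sqlite3 module, as in the sketch below. The `employees` table and its rows are hypothetical. Because function availability varies by database, the example sticks to functions SQLite is known to support and uses the standard `||` operator for concatenation instead of CONCAT().

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, salary INTEGER)")
conn.executemany(
    "INSERT INTO employees (name, salary) VALUES (?, ?)",
    [("Alice", 75000), ("Bob", 52000), ("Carol", 61000)],
)

# Aggregate functions collapse many rows into one summary row.
totals = conn.execute(
    "SELECT COUNT(*), SUM(salary), MIN(salary), MAX(salary), AVG(salary) FROM employees"
).fetchone()

# String and conversion functions operate on each row individually.
labels = conn.execute(
    """
    SELECT UPPER(name), LOWER(name), name || ':' || CAST(salary AS TEXT)
    FROM employees
    ORDER BY id
    """
).fetchall()

print(totals)
print(labels[0])
```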

Indexes in SQL

An index is used to speed up searches.

Example:

CREATE INDEX idx_name ON employees(name);

Indexes help improve the performance of queries involving large amounts of data.
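One way to check whether an index is actually being used is to ask the database for its query plan. The sketch below does this with SQLite's `EXPLAIN QUERY PLAN` through Python's sqlite3 module; the `employees` table and its generated rows are hypothetical, and other databases expose query plans through their own commands. The `query_plan` helper is introduced only for this illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, salary REAL)")
conn.executemany(
    "INSERT INTO employees (name, salary) VALUES (?, ?)",
    [(f"employee_{i}", 50000 + i) for i in range(1000)],
)


def query_plan(sql, params=()):
    """Return SQLite's plan description for a statement as one string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    # The human-readable detail is the fourth column of each plan row.
    return " | ".join(row[3] for row in rows)


lookup = "SELECT id, salary FROM employees WHERE name = ?"

plan_before = query_plan(lookup, ("employee_42",))  # full table scan expected
conn.execute("CREATE INDEX idx_name ON employees(name)")
plan_after = query_plan(lookup, ("employee_42",))   # should now mention idx_name

print(plan_before)
print(plan_after)
```

The exact wording of the plan text differs between SQLite versions, so it is safer to look for the index name than to compare the full string.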

Views in SQL

A view is a virtual table created by a query.

Example:

CREATE VIEW high_earners AS
SELECT name, salary FROM employees WHERE salary > 80000;

Views are beneficial for:

Security (hide certain columns)

Simplifying complex queries

Reusability
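The sketch below illustrates two of these points with Python's sqlite3 module: a view exposes only the columns it selects, and it reflects the underlying table at query time rather than storing a copy. The `employees` table, the sensitive `ssn` column, and all rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, salary INTEGER, ssn TEXT);
    INSERT INTO employees (name, salary, ssn) VALUES
        ('Alice', 75000, '000-00-0001'),
        ('Bob', 85000, '000-00-0002');
    CREATE VIEW high_earners AS
        SELECT name, salary FROM employees WHERE salary > 80000;
    """
)

# The view exposes only name and salary; the ssn column stays hidden.
cursor = conn.execute("SELECT * FROM high_earners ORDER BY name")
view_columns = [column[0] for column in cursor.description]
before = cursor.fetchall()

# New rows in the base table show up in the view automatically.
conn.execute("INSERT INTO employees (name, salary, ssn) VALUES ('Dan', 95000, '000-00-0004')")
after = conn.execute("SELECT * FROM high_earners ORDER BY name").fetchall()

print(view_columns, before, after)
```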

Normalization in SQL

Normalization is the process of organizing data to reduce redundancy. It involves breaking a database into multiple related tables and defining foreign keys to link them.

1NF: No repeating groups.

2NF: No partial dependency.

3NF: No transitive dependency.
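As a small illustration of the idea, the sketch below stores each department name once in its own table and links employees to it with a foreign key, using Python's sqlite3 module. The schema and rows are hypothetical. Note that SQLite only enforces foreign keys after `PRAGMA foreign_keys = ON`; other databases enforce them by default.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite-specific: enable enforcement
conn.executescript(
    """
    CREATE TABLE departments (
        id INTEGER PRIMARY KEY,
        name TEXT NOT NULL UNIQUE
    );
    CREATE TABLE employees (
        id INTEGER PRIMARY KEY,
        name TEXT NOT NULL,
        dept_id INTEGER NOT NULL REFERENCES departments(id)
    );
    INSERT INTO departments VALUES (1, 'Engineering'), (2, 'Sales');
    INSERT INTO employees VALUES (1, 'Alice', 1), (2, 'Bob', 1), (3, 'Carol', 2);
    """
)

# The department name lives in one place, so a rename is a single-row update.
conn.execute("UPDATE departments SET name = 'Platform Engineering' WHERE id = 1")

# The foreign key rejects employees that point at a non-existent department.
try:
    conn.execute("INSERT INTO employees VALUES (4, 'Dan', 99)")
    fk_rejected = False
except sqlite3.IntegrityError:
    fk_rejected = True

renamed = conn.execute(
    """
    SELECT e.name, d.name
    FROM employees AS e
    JOIN departments AS d ON e.dept_id = d.id
    ORDER BY e.id
    """
).fetchall()
print(renamed, fk_rejected)
```

In a denormalized table with the department name repeated on every employee row, the same rename would have to touch every affected row.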

SQL in Real-World Applications

Web Development: Most web apps use SQL to manage users, sessions, orders, and content.

Data Analysis: SQL is widely used in data analytics platforms like Power BI, Tableau, and even Excel (through Power Query).

Finance and Banking: SQL handles transaction logs, audit trails, and reporting systems.

Healthcare: Managing patient records, treatment histories, and billing.

Retail: Inventory systems, sales analysis, and customer data.

Government and Research: For storing and querying large datasets.

Popular SQL Database Systems

MySQL: Open-source and widely used in web apps.

PostgreSQL: Advanced features and standards compliance.

Oracle DB: Commercial, highly scalable, enterprise-grade.

SQL Server: Microsoft’s relational database.

SQLite: Lightweight, file-based database used in mobile and desktop apps.

Limitations of SQL

SQL can be verbose and complex for certain operations.

Not ideal for unstructured data (NoSQL databases like MongoDB are better suited).

Vendor-specific extensions can reduce portability.


Move Ahead with Confidence: Microsoft Training Courses That Power Your Potential

Why Microsoft Skills Are a Must-Have in Modern IT

Microsoft technologies power the digital backbone of countless businesses, from small startups to global enterprises. From Microsoft Azure to Power Platform and Microsoft 365, these tools are essential for cloud computing, collaboration, security, and business intelligence. As companies adopt and scale these technologies, they need skilled professionals to configure, manage, and secure their Microsoft environments. Whether you’re in infrastructure, development, analytics, or administration, Microsoft skills are essential to remain relevant and advance your career.

The good news is that Microsoft training isn’t just for IT professionals. Business analysts, data specialists, security officers, and even non-technical managers can benefit from targeted training designed to help them work smarter, not harder.

Training That Covers the Full Microsoft Ecosystem

Microsoft’s portfolio is vast, and Ascendient Learning’s training spans every major area. If your focus is cloud computing, Microsoft Azure training courses help you master topics like architecture, administration, security, and AI integration. Popular courses include Azure Fundamentals, Designing Microsoft Azure Infrastructure Solutions, and Azure AI Engineer Associate preparation.

For business professionals working with collaboration tools, Microsoft 365 training covers everything from Teams Administration to SharePoint Configuration and Microsoft Exchange Online. These tools are foundational to hybrid and remote work environments, and mastering them improves productivity across the board.

Data specialists can upskill through Power BI, Power Apps, and Power Automate training, enabling low-code development, process automation, and rich data visualization. These tools are part of the Microsoft Power Platform, and Ascendient’s courses teach how to connect them to real-time data sources and business workflows.

Security is another top concern for today’s organizations, and Microsoft’s suite of security solutions is among the most robust in the industry. Ascendient offers training in Microsoft Security, Compliance, and Identity, as well as courses on threat protection, identity management, and secure cloud deployment.

For developers and infrastructure specialists, Ascendient also offers training in Windows Server, SQL Server, PowerShell, DevOps, and programming tools. These courses provide foundational and advanced skills that support software development, automation, and enterprise system management.

Earn Certifications That Employers Trust

Microsoft certifications are globally recognized credentials that validate your expertise and commitment to professional development. Ascendient Learning’s Microsoft training courses are built to prepare learners for certifications across all levels, including Microsoft Certified: Fundamentals, Associate, and Expert tracks.

These certifications improve your job prospects and help organizations meet compliance requirements, project demands, and client expectations. Many professionals who pursue Microsoft certifications report higher salaries, faster promotions, and broader career options.

Enterprise Solutions That Scale with Your Goals

For organizations, Ascendient Learning offers end-to-end support for workforce development. Training can be customized to match project timelines, technology adoption plans, or compliance mandates. Whether you need to train a small team or launch a company-wide certification initiative, Ascendient Learning provides scalable solutions that deliver measurable results.

With Ascendient’s Customer Enrollment Portal, training coordinators can easily manage enrollments, monitor progress, and track learning outcomes in real-time. This level of insight makes it easier to align training with business strategy and get maximum value from your investment.

Get Trained. Get Certified. Get Ahead.

In today’s fast-changing tech environment, Microsoft training is a smart step toward lasting career success. Whether you are building new skills, preparing for a certification exam, or guiding a team through a technology upgrade, Ascendient Learning provides the tools, guidance, and expertise to help you move forward with confidence.

Explore Ascendient Learning’s full catalog of Microsoft training courses today and take control of your future, one course, one certification, and one success at a time.

For more information, visit: https://www.ascendientlearning.com/it-training/microsoft


How to resolve CXPACKET wait in SQL Server

CXPACKET:

Happens when a parallel query runs and some threads are slower than others.

This wait type is common in highly parallel environments.

How to resolve CXPACKET wait in SQL Server

Adjust the MAXDOP Setting:

The MAXDOP (Maximum Degree of Parallelism) setting controls the number of processors used for parallel query execution. Reducing the MAXDOP value can help reduce CXPACKET waits.

You…


5th Gen Intel Xeon Scalable Processors Boost SQL Server 2022

5th Gen Intel Xeon Scalable Processors

While speed and scalability have always been essential to databases, contemporary databases also need to serve AI and ML applications at higher performance levels. Databases should enable real-time decision-making, which is now far more widespread, along with ever-faster queries. Databases and the infrastructure that powers them are usually among the first things a business needs to modernize in order to support analytics. This post demonstrates the substantial speed benefits of running SQL Server 2022 on 5th Gen Intel Xeon Scalable Processors.

OLTP/OLAP Performance Improvements with 5th gen Intel Xeon Scalable processors

The HammerDB benchmark uses New Orders per minute (NOPM) throughput to quantify OLTP performance. Figure 1 illustrates OLTP gains of up to 48.1% NOPM when comparing 5th Gen Intel Xeon processors to 4th Gen Intel Xeon processors, while Online Analytical Processing (OLAP) shows up to 50.6% faster queries.

The enhanced CPU efficiency of the 5th gen Intel Xeon processors, demonstrated by its 83% OLTP and 75% OLAP utilization, is another advantage. When compared to the 5th generation of Intel Xeon processors, the prior generation requires 16% more CPU resources for the OLTP workload and 13% more for the OLAP workload.

The Value of Faster Backups

Faster backups improve uptime, simplify data administration, and enhance security, among other things. Backups up to 2.72x and 3.42x faster for idle and peak loads, respectively, are possible when running SQL Server 2022 Enterprise Edition on an Intel Xeon Platinum processor with Intel QAT.

The longest Intel QAT backup values for 5th Gen Intel Xeon Scalable Processors occur because the Gold version includes fewer backup cores than the Platinum model, which provides some perspective for the comparisons.

With an emphasis on attaining near-real-time latencies, optimizing query speed, and delivering the full potential of scalable warehouse systems, SQL Server 2022 offers a number of new features. It’s even better when it runs on 5th gen Intel Xeon Processors.

Solution snapshot for SQL Server 2022 running on 4th Gen Intel Xeon Scalable CPUs: industry-leading performance, security, and a modern data platform.

SQL Server 2022

The well-known performance and dependability of 5th Gen Intel Xeon Scalable Processors can greatly improve the performance of your SQL Server 2022 database.

The following tutorial will examine crucial elements and tactics to maximize your setup:

Hardware Points to Consider

Choose a processor: Choose Intel Xeon with many cores and fast clock speeds. Choose models with Intel Turbo Boost and Intel Hyper-Threading Technology for greater performance.

Memory: Have enough RAM for your database size and workload. Sufficient RAM enhances query performance and lowers disk I/O.

Storage: To reduce I/O bottlenecks, choose high-performance storage options like SSDs or fast HDDs with RAID setups.

Modification of Software

Database Design: Make sure your query execution plans, indexes, and database schema are optimized. To guarantee effective data access, evaluate and improve your design on a regular basis.

Configuration Settings: Match the SQL Server 2022 configuration options, such as max worker threads, max server memory, and I/O-related settings, to your workload and hardware capabilities.

Query tuning: To find performance bottlenecks and improve queries, use tools like SQL Server Management Studio or SQL Server Profiler. Consider techniques such as parameterization, indexing, and query hints.

Features Exclusive to Intel

Use Intel Turbo Boost Technology to dynamically raise clock speeds for high-demanding tasks.

With Intel Hyper-Threading Technology, you may run many threads on a single core, which improves performance.

Intel QuickAssist Technology (QAT): Enhance database performance by speeding up encryption and compression/decompression operations.

Optimization of Workload

Workload balancing: To prevent resource congestion, divide workloads among several instances or servers.

Partitioning: To improve efficiency and management, split up huge tables into smaller sections.

Indexing: To expedite the retrieval of data, create the proper indexes. Columnstore indexes are a good option for workloads involving analysis.

Observation and Adjustment

Performance monitoring: Track key performance indicators (KPIs) and pinpoint areas for improvement with tools like SQL Server Performance Monitor.

Frequent Tuning: Keep an eye on and adjust your database on a regular basis to accommodate shifting hardware requirements and workloads.

SQL Server 2022 Pricing

SQL Server 2022 cost depends on edition and licensing model. SQL Server 2022 has three main editions:

SQL Server 2022 Standard

Description: For small to medium organizations that need basic database functions for data and application management.

Licensing

Per core: ~$3,586 per two-core pack.

Server + CAL (Client Access License): ~$931 per server, ~$209 per CAL.

Features: Basic data management, analytics, reporting, integration, and limited virtualization.

SQL Server 2022 Enterprise

Description: Designed for large companies with significant workloads, extensive feature requirements, and high scalability and performance needs.

Licensing

Per core: ~$13,748 per two-core pack.

Features: High availability, in-memory performance, business intelligence, machine learning, and unlimited virtualization.

SQL Server 2022 Express

Use: Free, lightweight edition for tiny applications, learning, and testing.

License: Free.

Features: Basic capability, 10 GB databases, restricted memory and CPU.

Models for licensing

Per Core: Recommended for big, high-demand situations with processor core-based licensing.

Server + CAL (Client Access License): For smaller environments, each server needs a license and each connecting user/device needs a CAL.
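To make the trade-off between the two Standard licensing models concrete, here is a rough back-of-the-envelope sketch using the approximate figures quoted above. It rests on two assumptions that are not stated in this post: that the ~$3,586 per-core figure is the price of a two-core pack, and that at least four cores must be licensed. Actual quotes vary by reseller and agreement, so treat the output as illustrative only.

```python
import math

# Approximate list prices quoted in this post (USD).
STANDARD_TWO_CORE_PACK = 3586  # assumption: per-core licenses are priced per two-core pack
STANDARD_SERVER = 931
STANDARD_CAL = 209
MIN_LICENSED_CORES = 4         # assumption: minimum number of cores that must be licensed


def per_core_cost(cores):
    """Cost of licensing a server under the Per Core model."""
    licensed_cores = max(cores, MIN_LICENSED_CORES)
    packs = math.ceil(licensed_cores / 2)
    return packs * STANDARD_TWO_CORE_PACK


def server_cal_cost(users, servers=1):
    """Cost of licensing under the Server + CAL model."""
    return servers * STANDARD_SERVER + users * STANDARD_CAL


def break_even_users(cores):
    """Smallest user count at which Server + CAL costs at least as much as Per Core."""
    gap = per_core_cost(cores) - STANDARD_SERVER
    return math.ceil(gap / STANDARD_CAL)


print(per_core_cost(4), server_cal_cost(10), break_even_users(4))
```

Under these assumptions, a four-core server with a few dozen users or fewer is cheaper on Server + CAL, while larger or unknown user counts tend to favor Per Core.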

In brief

Faster databases can help firms meet their technical and business objectives because they are the main engines for analytics and transactions. Greater business continuity may result from those databases’ faster backups.

Read more on govindhtech.com

#5thGen#IntelXeonScalableProcessors#IntelXeon#BoostSQLServer2022#IntelXeonprocessors#intel#4thGenIntelXeonprocessors#SQLServer#Software#HardwarePoints#OLTP#OLAP#technology#technews#news#govindhtech


Transitioning from Tableau to Power BI - A Comprehensive Guide

The ability to extract actionable insights from vast datasets is a game-changer. Data visualization platforms like Tableau and Power BI empower organizations to transform raw data into meaningful visualizations, enabling stakeholders to make informed decisions. However, as businesses evolve and their data needs change, they may need to migrate from Tableau to Power BI to unlock new functionalities, enhance efficiency, or align with their broader IT infrastructure.

Understanding the Importance of Switching:

Transitioning from Tableau to Power BI is not just about swapping one tool for another; it's a strategic move with far-reaching implications. Several factors underscore the importance of Tableau to Power BI migration:

Cost Considerations:

The financial aspect often plays a significant role in decision-making. Tableau's licensing model can be a considerable expense for organizations, particularly for larger deployments. Conversely, Power BI offers flexible pricing options, including free tiers for individual users and cost-effective subscription plans for enterprises, making it a financially attractive alternative.

Integration with Existing Infrastructure:

Power BI's seamless integration with Azure, Office 365, and other Microsoft products is a compelling proposition for organizations entrenched in the Microsoft ecosystem. This integration fosters interoperability, streamlines data management processes, and promotes collaboration across departments, aligning with the organization's broader IT strategy.

Enhanced Analytics and Visualization Capabilities:

While Tableau is renowned for its advanced analytics features, Power BI distinguishes itself with its user-friendly interface and robust data visualization platforms. Power BI's integration with Excel, SQL Server, and other Microsoft applications empowers users to easily create interactive dashboards, reports, and data visualizations, democratizing data access and analysis across the organization.

Simplified Setup and Maintenance:

The ease of deployment and maintenance is another crucial consideration. Power BI's intuitive interface, comprehensive documentation, and robust community support simplify the migration process, minimizing downtime and disruption to operations. Organizations familiar with Microsoft products will find Power BI's setup process relatively straightforward, further expediting the transition.

Key Considerations for Migration from Tableau to Power BI:

Before embarking on the migration journey, organizations must carefully assess various factors to ensure a seamless transition:

Data Compatibility: Evaluate the compatibility of existing data sources with Power BI to pre-empt any compatibility issues during the migration process. Conduct thorough testing to verify data integrity and identify any potential challenges.

Training and Support: Invest in comprehensive training programs to equip users with the skills and knowledge to effectively leverage Power BI. Consider engaging external support services to address technical challenges and provide ongoing assistance during and after the migration.

Customization Needs: Assess the organization's customization requirements and ascertain whether Power BI can accommodate them effectively. Collaborate with experts to tailor Power BI to business needs and optimize its functionality to drive maximum value.

Unlocking the Benefits of Migration from Tableau to Power BI:

Migrating from Tableau to Power BI offers a multitude of benefits for organizations seeking to harness the full potential of their data:

Cost Savings: By transitioning to Power BI, organizations can realize substantial cost savings through more economical licensing options and streamlined data management processes, enabling them to reallocate resources more efficiently.

Integration with Microsoft Ecosystem: Power BI's seamless integration with other Microsoft products facilitates data sharing, collaboration, and workflow automation, fostering operational efficiency and productivity gains across the organization.

User-Friendly Interface: Power BI's intuitive interface empowers users of all skill levels to create compelling visualizations, dashboards, and reports, democratizing data access and analysis and promoting data-driven decision-making at every level of the organization.

Advanced Analytics Capabilities: Leverage Power BI's advanced analytics tools, including machine learning capabilities and predictive analytics, to uncover hidden insights, identify trends, and drive innovation, enabling organizations to stay ahead of the curve in an increasingly competitive landscape.

Best Practices for a Smooth Transition:

Successfully moving from Tableau to Power BI requires careful planning, execution, and optimization. Here are some best practices to follow through the process:

Assess The Requirements: Conduct a thorough analysis of the organization's data visualization needs, technical requirements, and strategic objectives to inform migration strategy and prioritize key milestones.

Develop a Migration Plan: Create a detailed migration plan delineating key milestones, timelines, resource requirements, and stakeholder responsibilities to ensure a structured and coordinated approach to the migration process.

Test and Validate: Before migrating critical data and workflows, conduct rigorous testing and validation to identify and address potential issues, errors, or compatibility challenges, minimizing the risk of disruptions during the migration.

Provide Comprehensive Training: Invest in comprehensive training programs to equip users with the skills, knowledge, and confidence to leverage Power BI effectively and maximize its value across the organization. Offer ongoing support and resources to facilitate continuous learning and skill development.

Monitor and Optimize: Continuously monitor the migration process, user adoption rates, and performance metrics to identify areas for improvement, optimization, and ongoing support. Solicit feedback from stakeholders to address concerns and ensure alignment with business objectives.

Migrating from Tableau to Power BI represents an opportunity for organizations to unlock new capabilities, drive innovation, and gain a competitive edge in today's data-driven landscape. While the migration journey may present challenges, with proper planning, execution, and support, organizations can successfully navigate these challenges and realize Power BI's full potential. Nous specializes in helping organizations seamlessly migrate from Tableau to Power BI. The team of experts offers comprehensive migration services, including strategy development, technical implementation, user training, and ongoing support, to ensure a smooth and successful transition.

#data analytics solutions#data visualization#power bi migration#microsoft power bi#power bi services#tableau#data migration


SAP Basis Expert

The Backbone of SAP Systems: Understanding the SAP Basis Expert

SAP systems are the technological lifelines powering countless modern businesses. Behind that smooth functionality lies a crucial figure: the SAP Basis expert. These professionals hold the keys to optimizing and maintaining those complex SAP environments upon which businesses depend.

What is SAP Basis?

SAP Basis is the technical foundation of any SAP implementation. It involves the core administration and configuration of SAP systems, including:

Installation and Configuration: Setting up the SAP landscape and tailoring it to business needs.

Database Management: Ensuring the SAP database (Oracle, SQL Server, etc.) is running smoothly.

System Monitoring and Performance Tuning: Keeping an eye on the system’s health and optimizing performance for peak efficiency.

User and Security Management: Securely adding new users and managing their permissions.

Transport Management: Handling the movement of changes and customizations between SAP environments (development, testing, production).

Troubleshooting: Proactively identifying and resolving technical issues.

Why Do You Need an SAP Basis Expert?

Think of the SAP Basis expert as your SAP environment’s doctor. Here’s why their expertise is vital:

Smooth Operations: They ensure seamless day-to-day operations within the SAP system keeping mission-critical applications running optimally.

Proactive Problem Solving: They prevent breakdowns and downtime by spotting potential issues early.

Performance Optimization: Constant analysis and tuning help maintain system speed and efficiency, especially during high-load situations.

Security: They uphold a strong security posture, implementing safeguards against unauthorized access or data breaches.

Business Agility: Basis experts quickly adapt SAP environments to business needs like expansions and updates.

Essential Skills of an SAP Basis Expert

A successful SAP Basis expert usually possesses a robust mix of skills:

SAP Technical Knowledge: Deep understanding of SAP architecture, ABAP programming, and NetWeaver components.

Operating Systems: Expertise in Linux, Windows, and other platforms commonly hosting SAP systems.

Database Administration: Strong database skills (Oracle, SQL Server, HANA, etc.) to handle the underlying data structures.

Troubleshooting and Problem-Solving: The ability to analyze logs, diagnose issues, and implement rapid solutions.

Communication and Collaboration: Clear documentation skills and the ability to collaborate effectively with cross-functional teams.

Finding the Right SAP Basis Expert

When seeking an SAP Basis expert, you’ve got options:

In-House: Large companies may maintain their own team of SAP Basis specialists for dedicated support.

SAP Consulting Firms: Engaging a reputable firm provides access to seasoned experts as needed.

Freelance SAP Basis Consultants: Independent specialists bring flexibility and can be cost-effective for project-based or ongoing needs.

The Takeaway

The SAP Basis expert is a quiet force that keeps complex business systems humming. Their expertise ensures your SAP investments deliver maximum value and remain a reliable engine that drives your enterprise forward. Investing in the right SAP Basis talent is an investment in your business’s overall success.


You can find more information about SAP BASIS in this SAP BASIS Link

Conclusion:

Unogeeks is the No.1 IT Training Institute for SAP BASIS Training. Anyone Disagree? Please drop in a comment

You can check out our other latest blogs on SAP BASIS here – SAP BASIS Blogs

You can check out our Best In Class SAP BASIS Details here – SAP BASIS Training

Follow & Connect with us:

———————————-

For Training inquiries:

Call/Whatsapp: +91 73960 33555

Mail us at: [email protected]

Our Website ➜ https://unogeeks.com

Follow us:

Instagram: https://www.instagram.com/unogeeks

Facebook:https://www.facebook.com/UnogeeksSoftwareTrainingInstitute

Twitter: https://twitter.com/unogeek

#Unogeeks #training #Unogeekstraining


Web Application Penetration Testing Checklist

Web-application penetration testing, or web pen testing, is a way for a business to test its own software by mimicking cyber attacks in order to find and fix vulnerabilities before the software is made public. As such, it involves more than simply shaking the doors and rattling the digital windows of your company's online applications. It uses a methodical approach employing known, commonly used attacks and tools to test web apps for potential vulnerabilities. In the process, it can also uncover programming mistakes and faults and assess the application's overall exposure to issues such as buffer overflows, input validation flaws, code execution, authentication bypass, SQL injection, CSRF, and XSS.

Penetration Types and Testing Stages

Penetration testing can be performed at various points during application development and by various parties including developers, hosts and clients. There are two essential types of web pen testing:

Internal: Tests are done on the enterprise's network while the app is still relatively secure and can reveal LAN vulnerabilities and susceptibility to an attack by an employee.

External: Testing is done outside via the Internet, more closely approximating how customers, and hackers, would encounter the app once it is live.

The earlier in the software development stage that web pen testing begins, the more efficient and cost effective it will be. Fixing problems as an application is being built, rather than after it's completed and online, will save time, money and potential damage to a company's reputation.

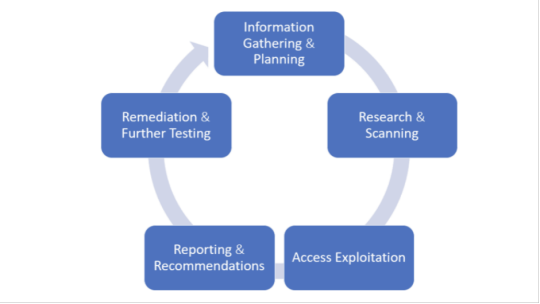

The web pen testing process typically includes five stages:

1. Information Gathering and Planning: This comprises forming goals for testing, such as what systems will be under scrutiny, and gathering further information on the systems that will be hosting the web app.

2. Research and Scanning: Before mimicking an actual attack, a lot can be learned by scanning the application's static code. This can reveal many vulnerabilities. In addition to that, a dynamic scan of the application in actual use online will reveal additional weaknesses, if it has any.

3. Access and Exploitation: Using a standard array of hacking attacks ranging from SQL injection to password cracking, this part of the test will try to exploit any vulnerabilities and use them to determine if information can be stolen from or unauthorized access can be gained to other systems.

4. Reporting and Recommendations: At this stage a thorough analysis is done to reveal the type and severity of the vulnerabilities, the kind of data that might have been exposed and whether there is a compromise in authentication and authorization.

5. Remediation and Further Testing: Before the application is launched, patches and fixes will need to be made to eliminate the detected vulnerabilities. And additional pen tests should be performed to confirm that all loopholes are closed.

Information Gathering

1. Retrieve and analyze the robots.txt file using a tool such as GNU Wget.

2. Examine the software version, database details, technical error components and bugs revealed by error codes by requesting invalid pages.

3. Use techniques such as reverse DNS queries, DNS zone transfers and web-based DNS searches.

4. Perform directory-style searching and vulnerability scanning, and probe for URLs, using tools such as Nmap and Nessus.

5. Identify the entry points of the application using Burp Proxy, OWASP ZAP, TamperIE, WebScarab or Tamper Data.

6. Using traditional fingerprinting tools such as Nmap and Amap, perform TCP/ICMP and service fingerprinting.

7. Test for recognized file types, extensions and directories by requesting common file extensions such as .ASP, .EXE, .HTML and .PHP.

8. Examine the source code of the pages accessible from the application front end.

9. Social media platforms often help in gathering information. GitHub links and domain name searches can also reveal more about the target. OSINT tools can provide a great deal of information on the target.
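As an illustration of step 1, the following is a minimal Python sketch of what "analyzing" a retrieved robots.txt can mean in practice: listing the paths the site asks crawlers to avoid, which are often worth a closer look. The function name `disallowed_paths` and the sample content are hypothetical, and the sketch works on text that has already been downloaded (for example with Wget) rather than fetching anything itself.

```python
def disallowed_paths(robots_txt: str) -> list[str]:
    """Return the Disallow paths listed in a robots.txt body, in file order."""
    paths = []
    for raw_line in robots_txt.splitlines():
        # Drop trailing comments and surrounding whitespace.
        line = raw_line.split("#", 1)[0].strip()
        if not line or ":" not in line:
            continue
        field, value = line.split(":", 1)
        # Only non-empty Disallow entries are of interest here.
        if field.strip().lower() == "disallow" and value.strip():
            paths.append(value.strip())
    return paths


# Hypothetical robots.txt content, used only for demonstration.
SAMPLE = """\
User-agent: *
Disallow: /admin/
Disallow: /backup/   # old dumps
Allow: /public/
"""

print(disallowed_paths(SAMPLE))
```

Only test hosts you are authorized to assess; a Disallow entry is a hint about site structure, not proof of a vulnerability.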

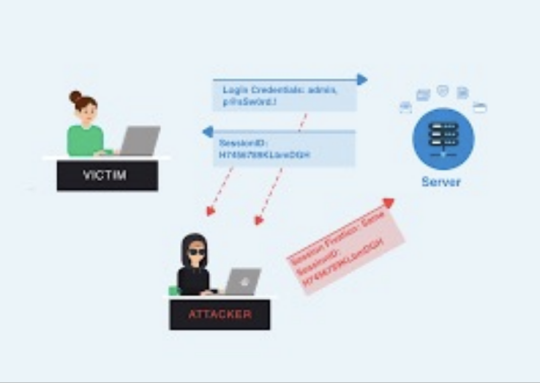

Authentication Testing

1. Check whether it is possible to “reuse” the session after logout. Verify the user session idle timeout.

2. Verify whether any sensitive information remains stored in the browser cache/storage.

3. Check and try to reset the password by social engineering, cracking secret questions and guessing.

4. Verify whether a “Remember my password” mechanism is implemented by checking the HTML code of the log-in page.

5. Check whether hardware devices communicate directly and independently with the authentication infrastructure using an additional communication channel.

6. Test CAPTCHA for authentication vulnerabilities.

7. Verify whether any weak security questions/answers are presented.

8. A successful SQL injection could lead to the loss of customer trust, and attackers can steal personally identifiable information (PII) such as phone numbers, addresses and credit card details. Placing a web application firewall in front of the application can filter out malicious SQL queries in the traffic.

Authorization Testing

1. Test role and privilege manipulation to access resources.

2. Test for path traversal by performing input vector enumeration and analyzing the input validation functions present in the web application.

3. Test for cookie and parameter tampering using web spider tools.

4. Test for HTTP request tampering and check whether it can be used to gain illegal access to reserved resources.
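When analyzing input validation functions for path traversal (item 2), it helps to have a reference for what a sound check looks like. The sketch below is one hedged example in Python: it normalizes the requested path and confirms the result stays under a base directory. The function name `stays_within` and the example paths are hypothetical, and the check is purely lexical, so it does not resolve symbolic links.

```python
import posixpath


def stays_within(base_dir: str, user_path: str) -> bool:
    """Return True if user_path, joined to base_dir, stays inside base_dir."""
    base = posixpath.normpath(base_dir)
    # normpath collapses "." and ".." segments; join discards base
    # entirely when user_path is absolute, which the check below catches.
    candidate = posixpath.normpath(posixpath.join(base, user_path))
    # Compare against "base/" so that "/var/www/app2" is not mistaken
    # for a child of "/var/www/app".
    prefix = base if base.endswith("/") else base + "/"
    return candidate == base or candidate.startswith(prefix)


# Typical traversal probes a tester might submit (hypothetical values).
for probe in ["images/logo.png", "../../etc/passwd", "/etc/passwd",
              "images/../../app2/secret.txt"]:
    print(probe, stays_within("/var/www/app", probe))
```

A validation routine that only strips `../` once, or that compares string prefixes without a trailing separator, will usually accept at least one of these probes.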

Configuration Management Testing

1. Check directory and file enumeration, review server and application documentation, and check the application admin interfaces.

2. Analyze the web server banner and perform network scanning.

3. Verify the presence of old documentation, backups and referenced files such as source code, passwords and installation paths.

4. Verify the ports associated with SSL/TLS services using Nmap and Nessus.

5. Review the OPTIONS HTTP method using Netcat and Telnet.

6. Test HTTP methods and XST (cross-site tracing) for exposure of legitimate users' credentials.

7. Perform application configuration management tests to review the information in source code, log files and default error codes.

Session Management Testing

1. Check the URLs in the restricted area to test for CSRF (Cross-Site Request Forgery).

2. Test for exposed session variables by inspecting encryption and reuse of session tokens, proxies and caching.

3. Collect a sufficient number of cookie samples, analyze the cookie generation algorithm and try to forge a valid cookie in order to perform an attack.

4. Test cookie attributes using intercepting proxies such as Burp Proxy and OWASP ZAP, or traffic-intercepting tools such as Tamper Data.

5. Test for session fixation, which can be used to steal a user session (session hijacking).
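The cookie attribute check in item 4 can also be scripted once a Set-Cookie header has been captured through a proxy. The following Python sketch reports which of the commonly recommended attributes (Secure, HttpOnly, SameSite) are absent. The function name `missing_cookie_flags` and the sample header are hypothetical, and the list of expected attributes is an assumption about what the test plan requires.

```python
def missing_cookie_flags(set_cookie_header: str) -> list[str]:
    """Return the recommended attributes missing from a Set-Cookie value."""
    # The first segment is name=value; the remaining ones are attributes.
    parts = [part.strip() for part in set_cookie_header.split(";")]
    attributes = {
        part.split("=", 1)[0].strip().lower() for part in parts[1:] if part
    }
    expected = ["secure", "httponly", "samesite"]
    return [flag for flag in expected if flag not in attributes]


# Hypothetical header value copied from an intercepted response.
captured = "SESSIONID=abc123; Path=/; HttpOnly"
print(missing_cookie_flags(captured))
```

An empty result means all three attributes are present; it says nothing about whether the session token itself is predictable, which item 3 covers.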

Data Validation Testing

1. Perform source code analysis for JavaScript coding errors.

2. Perform union query SQL injection testing, standard SQL injection testing and blind SQL injection testing, using tools such as sqlninja, sqldumper and SQL Power Injector.

3. Analyze the HTML code and test for stored XSS using tools such as XSS Proxy, Backframe, Burp Proxy, OWASP ZAP and XSS Assistant.

4. Perform LDAP injection testing for sensitive information about users and hosts.

5. Perform IMAP/SMTP injection testing for access to the backend mail server.

6. Perform XPath injection testing for access to confidential information.

7. Perform XML injection testing to learn about the XML structure.

8. Perform code injection testing to identify input validation errors.

9. Perform buffer overflow testing for stack and heap memory information and application control flow.

10. Test for HTTP splitting and smuggling for cookies and HTTP redirect information.
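To show what the standard SQL injection test in item 2 is probing for, here is a small self-contained Python sketch using the standard sqlite3 module with an in-memory database. The table, the data and the function names `lookup_unsafe` and `lookup_safe` are hypothetical; the point is only the contrast between string concatenation and a parameterized query.

```python
import sqlite3


def build_db() -> sqlite3.Connection:
    """Create a throwaway in-memory database with two demo users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "a-secret"), ("bob", "b-secret")],
    )
    return conn


def lookup_unsafe(conn: sqlite3.Connection, name: str) -> list[str]:
    # Vulnerable: user input is concatenated straight into the SQL text.
    query = "SELECT secret FROM users WHERE name = '" + name + "'"
    return [row[0] for row in conn.execute(query)]


def lookup_safe(conn: sqlite3.Connection, name: str) -> list[str]:
    # Parameterized: the driver passes the value separately from the SQL.
    query = "SELECT secret FROM users WHERE name = ?"
    return [row[0] for row in conn.execute(query, (name,))]


conn = build_db()
payload = "nobody' OR '1'='1"  # classic tautology probe
print("unsafe:", lookup_unsafe(conn, payload))
print("safe:  ", lookup_safe(conn, payload))
```

With the concatenated query the payload turns the WHERE clause into a tautology and every row is returned; with the parameterized query the same payload is treated as a literal name and matches nothing.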

Denial of Service Testing

1. Send a large number of requests that perform database operations and observe any slowdown and error messages. A continuous ping command will also serve the purpose. A script that opens browsers in an indefinite loop will also help mimic a DDoS attack scenario.

2. Perform manual source code analysis and submit inputs of varying lengths to the application.

3. Test for SQL wildcard attacks to gather application information. Enterprise networks should choose a DDoS attack prevention service to protect their network.

4. Test user-specified object allocation to see whether there is a maximum number of objects the application can handle.

5. Enter an extremely large number in an input field that the application uses as a loop counter. Also check your company's DDoS attack downtime cost.

6. Use a script to automatically submit an extremely long value to the server so that the request is logged.
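For items 2 and 6, a tester needs a set of inputs of varying lengths. One possible approach, sketched below in Python, is to generate strings whose lengths sit on and around powers of two, since buffer and field limits often fall on those boundaries. The generator name `length_probes` and its parameters are hypothetical, and the choice of powers of two is an assumption rather than something the checklist prescribes.

```python
from typing import Iterator


def length_probes(max_power: int = 16, filler: str = "A") -> Iterator[str]:
    """Yield strings with lengths 2**k - 1, 2**k and 2**k + 1 for k <= max_power."""
    seen = set()
    for power in range(max_power + 1):
        for length in (2**power - 1, 2**power, 2**power + 1):
            # Neighbouring powers overlap (e.g. 2 = 2**0 + 1 = 2**1),
            # so skip lengths that were already produced.
            if length not in seen:
                seen.add(length)
                yield filler * length


# Show the lengths generated for a small exponent range.
print([len(value) for value in length_probes(max_power=3)])
```

Each generated value would then be submitted to the field under test while response times and error messages are recorded; run such tests only against systems you are authorized to load.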

Conclusion:

Web applications present a unique and potentially vulnerable target for cyber criminals. The goal of most web apps is to make services and products accessible to customers and employees. But it is critical that web applications do not make it easier for criminals to break into systems. Making a proper plan based on the information gathered, and executing it over multiple iterations, will reduce vulnerabilities and risk to a great extent.

Text

Business intelligence Dashboards Saudi Arabia

Business intelligence (BI) is a set of data management solutions that use a company's past and current data to support real-time decisions. BI is a type of software that harnesses the power of data within an organization. It offers different ways to sort, compare and review data so that companies can make smart, real-time decisions. BI helps in better planning and understanding of the data, improves the accuracy of business strategies, improves sales forecasting, sharpens the focus on business operations and improves decision making. Adding business intelligence software creates a positive effect that spreads to all parts of the company. It's not just about improving access to the data in your firm; it's about whether that data is being used to improve profitability.

Business intelligence dashboards are visual displays of data that support data analysis. Dashboards are the most popular tool in BI analytics, letting us customize strategically the information we want to see. Resemble Systems is a top business intelligence provider with a local presence in India, Saudi Arabia, UAE and Qatar, offering Enterprise Portfolio Management and Project Management database solutions and digital transformation services that help customers increase efficiency while optimizing business processes. Resemble Systems follows a closed approach in identifying customers' business problems and addresses those challenges and pain points with unique, cost-effective and secure solutions that ensure business value and maximum ROI. Power BI is a business analytics tool that delivers insights throughout the organization. It connects to hundreds of data sources, simplifies data prep and drives ad hoc analysis. Users can produce reports, then publish them for the organization to access on the web and mobile devices.

At Resemble Systems, we create personalized dashboards that give clients a unique, 360-degree view of their business, with measurement across the enterprise and built-in governance and security. We help clients with reporting and business intelligence dashboards presented visually on the SharePoint Online platform. We use Power BI and PowerPivot and integrate with Excel, SQL Server and third-party data sources such as Oracle and SAP.

Text

Understanding Important Differences Between Laravel Vs CodeIgniter

Laravel and CodeIgniter are both modern PHP frameworks for software development. Each offers flexibility through a structured coding pattern and leaves scope for building applications that perform better. The security features of both Laravel and CodeIgniter respond quickly to security violations. Laravel's syntax is expressive and elegant. The differences between Laravel and CodeIgniter are as follows:

Essential differences between Laravel vs CodeIgniter

Support for PHP 7

As a major release of the server-side programming language, PHP 7 comes with several new features and improvements. The new features let programmers improve the performance of web applications and reduce memory consumption. Both Laravel and CodeIgniter support version 7 of PHP. But several programmers have highlighted issues they faced while developing and testing CodeIgniter applications on PHP 7.

Built-in Modules

Most developers split big and complex web applications into a number of small modules to simplify and speed up the development process. Laravel is designed with built-in modularity features. It allows developers to divide a project into small modules through bundles. They can further reuse the modules across multiple projects. But CodeIgniter is not designed with built-in modularity features. It requires CodeIgniter developers to create and maintain modules by additionally using Modular Extensions.

Support for Databases

Both PHP frameworks support an array of databases including MySQL, PostgreSQL, Microsoft BI and MongoDB. However, in the fight of Laravel vs CodeIgniter, CodeIgniter additionally supports a plethora of databases including Oracle, Microsoft SQL Server, IBM DB2, OrientDB and JDBC-compatible databases. Hence, CodeIgniter supports a greater number of databases than Laravel.

Database Schema Development

Despite supporting several popular databases, CodeIgniter does not provide any specific features to simplify database schema migration. The database-agnostic migrations feature provided by Laravel, however, makes it simpler for programmers to alter and share the database schema of the application without rewriting complicated code. The developer can further develop the database schema of the application easily by combining database-agnostic migrations with the schema builder provided by Laravel.
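To make the idea of a schema migration concrete, here is a minimal, framework-neutral sketch in Python using the standard sqlite3 module. It is not Laravel's migration API. The `MIGRATIONS` list, the `schema_migrations` bookkeeping table and the `migrate` function are hypothetical names chosen for illustration: each schema change is recorded as a versioned step, and only steps that have not yet been applied are run.

```python
import sqlite3

# Ordered, versioned schema changes (hypothetical example schema).
MIGRATIONS = [
    (1, "CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)"),
    (2, "ALTER TABLE users ADD COLUMN email TEXT"),
]


def migrate(conn: sqlite3.Connection) -> list[int]:
    """Apply pending migrations and return the versions that were run."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS schema_migrations "
        "(version INTEGER PRIMARY KEY)"
    )
    applied = {row[0] for row in conn.execute(
        "SELECT version FROM schema_migrations")}
    newly_applied = []
    for version, statement in MIGRATIONS:
        if version in applied:
            continue  # already run on this database
        conn.execute(statement)
        conn.execute(
            "INSERT INTO schema_migrations (version) VALUES (?)", (version,))
        newly_applied.append(version)
    conn.commit()
    return newly_applied


conn = sqlite3.connect(":memory:")
print(migrate(conn))  # first run applies every step
print(migrate(conn))  # second run finds nothing pending
```

Because the applied versions are stored in the database itself, the same script can be shared between developers and run safely more than once, which is the property the paragraph above attributes to migrations.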

Eloquent ORM

Unlike CodeIgniter, Laravel lets developers take advantage of Eloquent ORM. They can use the object-relational mapper (ORM) to work with a variety of databases more efficiently through its Active Record implementation. Eloquent ORM further allows users to interact with databases directly through a specific model for each database table. They can even use the model to accomplish common tasks like inserting new records and running database queries.

Built-in Template Engine

Laravel comes with a simple but robust template engine, Blade. The Blade template engine enables PHP programmers to optimize the presentation of the web application by enhancing and managing views. But CodeIgniter does not come with a built-in template engine. Developers need to integrate the framework with robust template engines like Smarty to accomplish common tasks and boost the performance of the website.

REST API Development

The RESTful controllers provided by Laravel allow developers to build a variety of REST APIs without investing extra time and effort. They can simply set the restful property to true in the RESTful controller to build custom REST APIs without writing extra code. But CodeIgniter does not provide any specific features to simplify the development of REST APIs. Users have to write extra code to create custom REST APIs while developing web applications with CodeIgniter.

Routing

The routing options provided by both PHP frameworks work similarly. But the features provided by Laravel let developers route requests in an easy yet efficient way. Programmers can take advantage of Laravel's routing feature to define most routes for a web application in a single file. Each basic Laravel route accepts a single URI and a closure. However, users still have the option to register a route that responds to multiple HTTP verbs.
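The routing model described above, one URI plus one closure per route with the option of answering several HTTP verbs, can be illustrated with a small language-neutral sketch. The Python below is not Laravel code and does not reproduce its API; the `Router` class and its `add` and `dispatch` methods are hypothetical names used only to show the idea of a single route table.

```python
from typing import Callable, Dict, Iterable, Tuple


class Router:
    """A toy route table mapping (HTTP verb, URI) pairs to closures."""

    def __init__(self) -> None:
        self._routes: Dict[Tuple[str, str], Callable[[], str]] = {}

    def add(self, verbs: Iterable[str], uri: str,
            handler: Callable[[], str]) -> None:
        # Registering one handler under several verbs mirrors a route
        # that responds to multiple HTTP verbs.
        for verb in verbs:
            self._routes[(verb.upper(), uri)] = handler

    def dispatch(self, verb: str, uri: str) -> str:
        handler = self._routes.get((verb.upper(), uri))
        if handler is None:
            return "404 Not Found"
        return handler()


# All routes for the application are declared in one place.
router = Router()
router.add(["GET"], "/", lambda: "home")
router.add(["GET", "POST"], "/contact", lambda: "contact form")

print(router.dispatch("GET", "/"))
print(router.dispatch("POST", "/contact"))
print(router.dispatch("DELETE", "/contact"))
```

Keeping every route in one table is what makes it easy to see, at a glance, which URIs an application exposes and which verbs each one accepts.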

HTTPS Support

Most web developers opt for the HTTPS protocol so that the application can send and receive sensitive information securely. Laravel lets programmers define custom HTTPS routes. Developers also have the option to create a distinct URL for each HTTPS route. Laravel further keeps the data transmission secure by automatically adding the https:// protocol before the URL. But CodeIgniter does not support HTTPS fully. Programmers have to use URL helpers to keep the data transmission secure by generating paths.

Authentication

The authentication class provided by Laravel makes it simpler for developers to implement authentication and authorization. The extensible and customizable class further lets users keep the web application secure by implementing comprehensive user login and protecting routes with filters. However, CodeIgniter does not come with such built-in authentication features. Developers have to authenticate and authorize users by writing custom CodeIgniter extensions.

Unit Testing

Laravel scores over other PHP frameworks in the category of unit testing. It enables programmers to check the application code thoroughly and continuously with PHPUnit. In addition to being a widely used unit testing tool, PHPUnit comes with a variety of out-of-the-box extensions. With CodeIgniter, by contrast, programmers have to use additional unit testing tools to evaluate the quality of application code throughout the development process.

Learning Curve

Unlike Laravel, CodeIgniter has a small footprint. But Laravel provides more features and tools than CodeIgniter. The additional features make Laravel complex. Hence, beginners have to put in additional time and effort to learn all the features of Laravel and use it efficiently. Beginners find it simpler to learn and use CodeIgniter within a short period of time.

Community Support

Both are open source PHP frameworks. Each framework is also backed by a large community. But numerous web developers have said that members of the Laravel community are more active than members of the CodeIgniter community. Developers often find it simpler to get online help and quick solutions while developing web applications with Laravel.

Developers still need to evaluate the features of Laravel vs CodeIgniter according to the precise needs of each project to choose the best PHP framework.

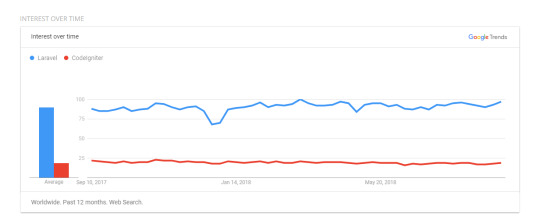

Statistically, Laravel appears to be more popular than CodeIgniter by a wide margin. This is supported by SitePoint's 2015 survey results, where Laravel was ranked the most popular PHP framework across a massive 7800 entries. CodeIgniter, according to the survey, follows at number 4. Users also report that Laravel is more marketable since clients have often heard about the framework previously, giving Laravel a greater market value than CodeIgniter.

Some would claim that popularity or market share is not reason enough to pick one framework over another, and that is a strong point. A good developer should examine the overall features, performance and functionality that matter to their particular web application before adopting ANY framework.

Between Laravel and CodeIgniter, well-seasoned developers will find that they can make use of several great features if they opt for Laravel, although it requires a thorough command of the MVC architecture as well as a strong grip on OOP (Object Oriented Programming) concepts.

Conclusion

If you are looking to create a resilient and maintainable application, Laravel is a good choice. The documentation is accurate, the community is large and you can develop full-featured, complex web applications. There are still many developers in the PHP community who prefer CodeIgniter for developing small to medium applications in a simple development environment. Keeping in mind the pros and cons of each with respect to the specific project, you can reach the right decision. Read Full Article on: https://www.cyblance.com/laravel/understanding-important-differences-between-laravel-vs-codeigniter/

#laravel development company#laravel development#laravel developer#laravel development services#laravel development company india#laravel application development#laravel web development#php laravel developer#laravel web development company#laravel application development company#laravel web development services#best laravel development company#laravel web developer#laravel web application development company#remote laravel developer#laravel framework development#laravel app development#dedicated laravel developer#laravel website development#remote laravel#certified laravel developer#laravel development india

Text

Business Analyst Finance Domain Sample Resume

This is just a sample Business Analyst resume for freshers as well as experienced job seekers in the Finance domain, for business analyst or systems analyst roles. It is only a sample, so please use it for reference only; do not copy the client names or job duties for your own resume. Always make your own resume with genuine experience.

Name: Justin Megha

Ph no: XXXXXXX

your email here.

Business Analyst, Business Systems Analyst

SUMMARY

• Accomplished in Business Analysis, System Analysis, Quality Analysis and Project Management with extensive experience in business products, operations and Information Technology in the capital markets space, specializing in Finance such as Trading, Fixed Income, Equities, Bonds, Derivatives (Swaps, Options, etc.) and Mortgage, with sound knowledge of a broad range of financial instruments.
• 11+ years of proven track record as a value-adding, delivery-focused, project-hardened professional with hands-on expertise spanning System Analysis, Architecting Financial Applications, Data Warehousing, Data Migrations, Data Processing, ERP applications, SOX Implementation and Process Compliance Projects.
• Accomplishments in analysis of large-scale business systems, Project Charters, Business Requirement Documents, Business Overview Documents, Authoring Narrative Use Cases, Functional Specifications and Technical Specifications, data warehousing, reporting and testing plans.
• Expertise in creating UML-based Modelling views like Activity / Use Case / Data Flow / Business Flow / Navigational Flow / Wire Frame diagrams using Rational Products & MS Visio.
• Proficient as a long-time liaison between business and technology, with competence in the full System Development Life Cycle (SDLC) with Waterfall, Agile and RUP methodologies, IT Auditing and SOX Concepts, as well as broad cross-functional experience leveraging multiple frameworks.
• Extensively worked with the On-site and Off-shore Quality Assurance Groups by assisting the QA team to perform Black Box / GUI / Functionality / Regression / System / Unit / Stress / Performance testing and UATs.
• Facilitated change management across the entire process from project conceptualization to testing through project delivery, Software Development & Implementation Management in diverse business & technical environments, with demonstrated leadership abilities.

EDUCATION

Post Graduate Diploma (in Business Administration), USA
Master's Degree (in Computer Applications)
Bachelor's Degree (in Commerce)

TECHNICAL SKILLS

Documentation Tools: UML, MS Office (Word, Excel, Power Point, Project), MS Visio, Erwin

SDLC Methodologies: Waterfall, Iterative, Rational Unified Process (RUP), Spiral, Agile

Modeling Tools: UML, MS Visio, Erwin, Power Designer, Metastorm ProVision

Reporting Tools: Business Objects XI R2, Crystal Reports, MS Office Suite

QA Tools: Quality Center, Test Director, Win Runner, Load Runner, QTP, Rational Requisite Pro, Bugzilla, Clear Quest

Languages: Java, VB, SQL, HTML, XML, UML, ASP, JSP

Databases & OS: MS SQL Server, Oracle 10g, DB2, MS Access on Windows XP / 2000, Unix

Version Control: Rational Clear Case, Visual Source Safe

PROFESSIONAL EXPERIENCE

SERVICE MASTER, Memphis, TN June 08 - Till Date

Senior Business Analyst

Terminix has approximately 800 customer service agents that reside in our branches in addition to approximately 150 agents in a centralized call center in Memphis, TN. Terminix customer service agents receive approximately 25 million calls from customers each year. Many of these customer's questions are not answered or their problems are not resolved on the first call. Currently these agents use an AS/400 based custom developed system called Mission to answer customer inquiries into branches and the Customer Communication Center. Mission - Terminix's operation system - provides functionality for sales, field service (routing & scheduling, work order management), accounts receivable, and payroll. This system is designed modularly and is difficult to navigate for customer service agents needing to assist the customer quickly and knowledgeably. The amount of effort and time needed to train a customer service representative using the Mission system is high. This combined with low agent and customer retention is costly.

Customer Service Console enables Customer Service Associates to provide consistent, enhanced service experience, support to the Customers across the Organization. CSC is aimed at providing easy navigation, easy learning process, reduced call time and first call resolution.

Responsibilities

• Assisted in creating Project Plan, Road Map.
• Designed Requirements Planning and Management document.
• Performed Enterprise Analysis and actively participated in buying Tool Licenses.
• Identified subject-matter experts and drove the requirements gathering process through approval of the documents that convey their needs to management, developers, and quality assurance team.
• Performed technical project consultation, initiation, collection and documentation of client business and functional requirements, solution alternatives, functional design, testing and implementation support.
• Performed Requirements Elicitation, Analysis, Communication, and Validation according to Six Sigma Standards.
• Captured Business Process Flows and Reengineered Process to achieve maximum outputs.
• Captured As-Is Process, designed To-Be Process and performed Gap Analysis.
• Developed and updated functional use cases and conducted business process modeling (ProVision) to explain business requirements to development and QA teams.
• Created Business Requirements Documents, Functional and Software Requirements Specification Documents.
• Performed Requirements Elicitation through Use Cases, one-to-one meetings, Affinity Exercises, SIPOCs.
• Gathered and documented Use Cases, Business Rules, created and maintained Requirements/Test Traceability Matrices.

Client: The Dun & Bradstreet Corporation, Parsippany, NJ May' 2007 - Oct' 2007

Profile: Sr. Financial Business Analyst/ Systems Analyst.

Project Profile (1): D&B is the world's leading source of commercial information and insight on businesses. The Point of Arrival Project and the Data Maintenance (DM) Project are the future applications that the company will transition to, providing an effective and efficient report generation system that lets D&B's clients purchase reports about companies they are trying to do business with.

Project Profile (2): The overall purpose of this project was to build a Self Awareness System (SAS) through which the business community could buy SAS products, along with a payment system for SAS. The system would provide a certain combination of products (reports) for a Self Monitoring report as a foundation for managing a company's credit.

Responsibilities:

• Conducted Gap Analysis and documented the current state and future state, after understanding the Vision from the Business Group and the Technology Group.
• Conducted interviews with Process Owners, Administrators and Functional Heads to gather audit-related information and facilitated meetings to explain the impacts and effects of SOX compliance.
• Played an active and lead role in gathering, analyzing and documenting the Business Requirements, the business rules and Technical Requirements from the Business Group and the Technology Group.
• Co-authored and prepared graphical depictions of Narrative Use Cases, created UML Models such as Use Case Diagrams, Activity Diagrams and Flow Diagrams using MS Visio throughout the Agile methodology.
• Documented the Business Requirement Document to get a better understanding of the client's business processes for both projects using the Agile methodology.
• Facilitated JRP and JAD sessions and brainstorming sessions with the Business Group and the Technology Group.
• Documented the Requirement Traceability Matrix (RTM) and conducted UML Modelling such as creating Activity Diagrams and Flow Diagrams using MS Visio.
• Analysed test data to detect significant findings and recommended corrective measures.
• Co-managed the Change Control process for the entire project by facilitating group meetings, one-on-one interview sessions and email correspondence with work stream owners to discuss the impact of Change Requests on the project.
• Worked with the Project Lead in setting realistic project expectations and in evaluating the impact of changes on the organization, planned accordingly and conducted project-related presentations.
• Coordinated with the offshore QA Team members to explain and develop the Test Plans, Test Cases, Test and Evaluation strategy and methods for unit testing, functional testing and usability testing.

Environment: Windows XP/2000, SOX, Sharepoint, SQL, MS Visio, Oracle, MS Office Suite, Mercury ITG, Mercury Quality Center, XML, XHTML, Java, J2EE.

GATEWAY COMPUTERS, Irvine, CA, Jan 06 - Mar 07

Business Analyst

At Gateway, a leading computer, laptop and accessory manufacturer, was involved in two projects:

Order Capture Application: The objective of this project was to develop various mediums of sales with a centralized catalog. This project involved wide exposure to Requirement Analysis and to creating, executing and maintaining Test Plans and Test Cases. Mentored and trained staff about Tech Guide & Company Standards.

Gateway Reporting System: Developed with Business Objects running against an Oracle data warehouse with Sales, Inventory and HR Data Marts. This DW serves the different needs of Sales Personnel and Management. Was involved in its development, which utilized Full Client reports and Web Intelligence to deliver analytics to the Contract Administration group and Pricing groups. The reporting data mart included Wholesaler Sales, Contract Sales and Rebates data.

Responsibilities:

• Product Manager for Enterprise Level Order Entry Systems - Phone, B2B, Gateway.com and Cataloging System.
• Modeled the Sales Order Entry process to eliminate bottleneck process steps using ERWIN.
• Adhered to and practiced RUP for implementing the software development life cycle.
• Gathered Requirements from different sources like Stakeholders, Documentation, Corporate Goals, Existing Systems, and Subject Matter Experts by conducting Workshops, Interviews, Use Cases, Prototypes, Reading Documents, Market Analysis, Observations.
• Created Functional Requirement Specification documents, which include UML Use Case diagrams, Scenarios, activity and work flow diagrams and data mapping. Process and Data modeling with MS Visio.
• Worked with the Technical Team to create Business Services (Web Services) that the Application could leverage using SOA, to create System Architecture and CDM for a common order platform.
• Designed Payment Authorization (Credit Card, Net Terms, and PayPal) for the transaction/order entry systems.
• Implemented A/B Testing and Customer Feedback Functionality on Gateway.com.
• Worked with the DW and ETL teams to create Order Entry Systems Business Objects reports (Full Client, Web I).
• Worked in a cross-functional team of Business, Architects and Developers to implement new features.
• Program Managed Enterprise Order Entry Systems - Development and Deployment Schedule.
• Developed and maintained User Manuals and the Application Documentation Manual on the SharePoint tool.
• Created Test Plans and Test Strategies to define the Objective and Approach of testing.
• Used Quality Center to track and report system defects and bug fixes.
• Wrote modification requests for the bugs in the application and helped developers to track and resolve the problems.
• Developed and Executed Manual, Automated Functional, GUI, Regression and UAT Test Cases using QTP.
• Gathered, documented and executed Requirements-based, Business process (workflow/user scenario) and Data-driven test cases for User Acceptance Testing.
• Created Test Matrix, used Quality Center for Test Management, to track and report system defects and bug fixes.
• Performed Load and Stress Testing and analyzed Performance and Response Times.
• Designed approach and developed visual scripts in order to test client and server side performance under various conditions to identify bottlenecks.
• Created / developed SQL Queries (TOAD) with several parameters for Backend/DB testing.
• Conducted meetings for project status, issue identification, parent task review and Progress Reporting.

AMC MORTGAGE SERVICES, CA, USA Oct 04 - Dec 05

Business Analyst

The primary objective of this project is to replace the existing Internal Facing Client / Server Applications with a Web enabled Application System, which can be used across all the Business Channels. This project involves wide exposure towards Requirement Analysis, Creating, Executing and Maintaining of Test plans and Test Cases. Demands understanding and testing of Data Warehouse and Data Marts, thorough knowledge of ETL and Reporting, Enhancement of the Legacy System covered all of the business requirements related to Valuations from maintaining the panel of appraisers to ordering, receiving, and reviewing the valuations.

Responsibilities:

• Gathered, analyzed, validated, managed and documented the stated Requirements.
• Interacted with users for verifying requirements, managing the change control process and updating existing documentation.
• Created Functional Requirement Specification documents that include UML Use Case diagrams, scenarios, activity diagrams and data mapping.
• Provided End User Consulting on Functionality and Business Process.
• Acted as a client liaison to review priorities and manage the overall client queue.
• Provided consultation services to clients, technicians and internal departments on basic to intricate functions of the applications.
• Identified business directions & objectives that may influence the required data and application architectures.
• Defined and prioritized business requirements, determined which business subject areas provide the most needed information, and prioritized and sequenced implementation projects accordingly.
• Provided relevant test scenarios for the testing team.
• Worked with the test team to develop system integration test scripts and ensure the testing results correspond to the business expectations.
• Used Test Director, QTP and Load Runner for Test Management, Functional, GUI, Performance and Stress Testing.
• Performed Data Validation, Data Integration and Backend/DB testing using SQL Queries manually.
• Created Test input requirements and prepared the test data for data-driven testing.
• Mentored and trained staff about Tech Guide & Company Standards.
• Set up and coordinated Onsite and Offshore teams, and conducted Knowledge Transfer sessions for the offshore team.

Lloyds Bank, UK Aug 03 - Sept 04

Business Analyst

Lloyds TSB is a leader in Business, Personal and Corporate Banking, and a noted financial provider for millions of customers, with the financial resources to meet and manage their credit needs and to help them achieve their financial goals.

The project involved an Applicant Information System, Loan Appraisal and Loan Sanction, Legal, Disbursements, Accounts, MIS and Report Modules of a Housing Finance System and Enhancements for their Internet Banking.

Responsibilities:

Translated stakeholder requirements into various documentation deliverables such as functional specifications, use cases, workflow/process diagrams, and data flow/data model diagrams.

Produced functional specifications and led weekly meetings with developers and business units to discuss outstanding technical issues and deadlines that had to be met.

Coordinated project activities between clients, internal groups, and information technology, including project portfolio management and project pipeline planning.

Provided functional expertise to developers during the technical design and construction phases of the project.

Documented and analyzed business workflows and processes, and presented the studies to the client for approval.

Participated in Universe development across the planning, designing, building, distribution, and maintenance phases.

Designed and developed Universes by defining joins and cardinalities between the tables.

Created UML use case and activity diagrams for the interaction between the report analyst and the reporting systems.

Successfully implemented BPR and achieved improved performance and reduced time and cost.

Developed test plans and scripts; performed client testing for routine to complex processes to ensure proper system functioning.

Worked closely with UAT testers and end users during system validation and User Acceptance Testing to expose functionality and business logic problems that unit testing and system testing had missed.

Participated in integration, system, regression, performance, and UAT testing using TD, WR, and Load Runner.

Participated in defect review meetings with the team members.

Worked closely with the project manager to record, track, prioritize, and close bugs.

Used CVS to maintain versions between the various stages of the SDLC.

Client: A.G. Edwards, St. Louis, MO May 2005 - Feb 2006

Profile: Sr. Business Analyst/System Analyst

Project Profile: A.G. Edwards is a full-service, trading-based brokerage firm offering Internet-based futures, options, and forex brokerage. This site allows users (financial representatives) to trade online. The main features of the site were: users can open a new account online to trade equities, bonds, derivatives, and forex with the trading system, using DTCC's applications as a clearing house agent. The user gets real-time streaming quotes for the currency pairs they selected, their current position in the forex market, a summary of work orders, payments and current money balances, P&L accounts, and available trading power, all continuously updating in real time via live quotes. The site also lets users place, change, and cancel an Entry Order, place a Market Order, and place, modify, delete, or close a Stop Loss Limit on an open position.

Responsibilities:

Gathered business requirements pertaining to trading, equities, and fixed income instruments such as bonds; converted them into functional requirements using the RUP methodology; and authored them in a Business Requirement Document (BRD).

Designed and developed all narrative use cases and conducted UML modeling, creating use case diagrams, process flow diagrams, and activity diagrams using MS Visio.

Implemented the entire Rational Unified Process (RUP) methodology of application development with its various workflows, artifacts, and activities.

Developed business process models in RUP to document existing and future business processes.

Established a business analysis methodology around the Rational Unified Process.

Analyzed user requirements, attended Change Request meetings to document changes, and implemented procedures to test changes.

Assisted in developing project timelines, deliverables, and strategies for effective project management.

Evaluated existing practices of storing and handling important financial data for compliance.

Helped develop the test strategy and assisted in developing test scenarios, test conditions, and test cases.

Partnered with the technical areas in the research and resolution of system and User Acceptance Testing (UAT).


Text

RMAN QUICK LEARN– FOR THE BEGINNERS