#Techdirt


Text

There’s something important to understand about innovation. It doesn’t actually happen in a vacuum. The reason Silicon Valley became Silicon Valley wasn’t because a bunch of genius inventors happened to like California weather. It was because of a complex web of institutions that made innovation possible: courts that would enforce contracts (but not non-competes, allowing ideas to spread quickly and freely across industries), universities that shared research, a financial system that could fund new ideas, and laws that let people actually try those ideas out. And surrounding it all: a fairly stable economy, stability in global markets and (more recently) a strong belief in a global open internet.

And now we’re watching Musk, Trump, and their allies destroy these foundations. They operate under the dangerous delusion of the “great man” theory of innovation: the false belief that revolutionary changes come solely from lone geniuses, rather than from the complex interplay of open systems, diverse perspectives, and stable institutions that actually drives progress.

The reality has always been much messier. Innovation happens when lots of different people can try lots of different ideas. When information flows freely. When someone can start a company without worrying that the government will investigate them for criticizing an oligarch. When diverse perspectives can actually contribute to the conversation. You know, all the things that are currently under attack.

But you need a stable economy and stable infrastructure to make that work. And you need an openness to ideas and collaboration and (gasp) diversity to actually get the most out of people.

259 notes


Text

Mike Masnick: The AT Protocol is a technological poison pill that sabotages enshittification. Even the potential of competition serves to protect against Bluesky following Twitter's trajectory.

88 notes



Text

I don't know what TechDirt is or who Karl Bode is, but his editorial / article on why Vice Media (and all these other similar whatevers) failed is worth considering, at least:

They were run into the ground by "brunchlords," which is a fun term new-to-me for rich kids with flashy degrees from flashy schools where they made flashy connections that got them these flashy jobs, all while they are in fact utterly dull untested incompetent wealth-vampires, who are eternally insulated from any of the negative consequences of their idiotic, trend-based, should-probably-be-criminal mishandling of actual businesses.

They also think of journalism as nothing more than a tool of self-promotion and for generating revenue from whatever shady actor shows up to throw money at them. And they treat the people they employ, and what they produce, exactly like that.

According to this guy, good for-profit journalism, such as it ever was, is mostly the collapsing garbage heap it is now because it was squeezed to death by petulant manbabies who never should have been allowed to handle it.

I think this is certainly a big reason why these kinds of outlets are all tumbling down; there are others, and there are lots of people to blame. And I certainly think some of the workaday, boots-on-the-ground journalists themselves ARE partially responsible for this, because they said yes to things because of personal financial desperation that shot their supposed journalistic integrity in the feet. Journalism thrives only when journalists are trusted, and over the last 20+ years, lots of us have had good reason to not trust a lot of these people.

But that just fed into this other stuff.

Again, it is worth considering.

Edit: Slightly softer rendition of the same information, from The Guardian

2 notes


Text

#Jynxfaery#Techdirt#easthetic#faery kei#club kid#clubhouse#faery aesthetic#cybergoth#faery kin#cyberspace#cyber girl#youtube

2 notes


Text

Techdirt and wired are our only hope

1 note


Text

Albuquerque will try to build a new soccer stadium for the 12th time thanks to support from fake groups

Only a few months have passed since I last wrote about the mayor of Albuquerque and his never-ending quest to get a new stadium built for the local soccer franchise, New Mexico United (NMU). To summarize briefly, the mayor has spent the last 3 years begging public officials to give NMU upwards of $50 million in taxpayer money for a new stadium. After city councilors rejected this idea, the…


#Albuquerque#Albuquerque Hispano Chamber#Astroturf#Balloon Fiesta Park#Buffalo Bills#Center Square#Greater Albuquerque Chamber of Commerce#Industrial Revenue Bonds#Mayor#New Mexico#New Mexico United#SantaFeNewMexican.com#TechDirt

0 notes

Text

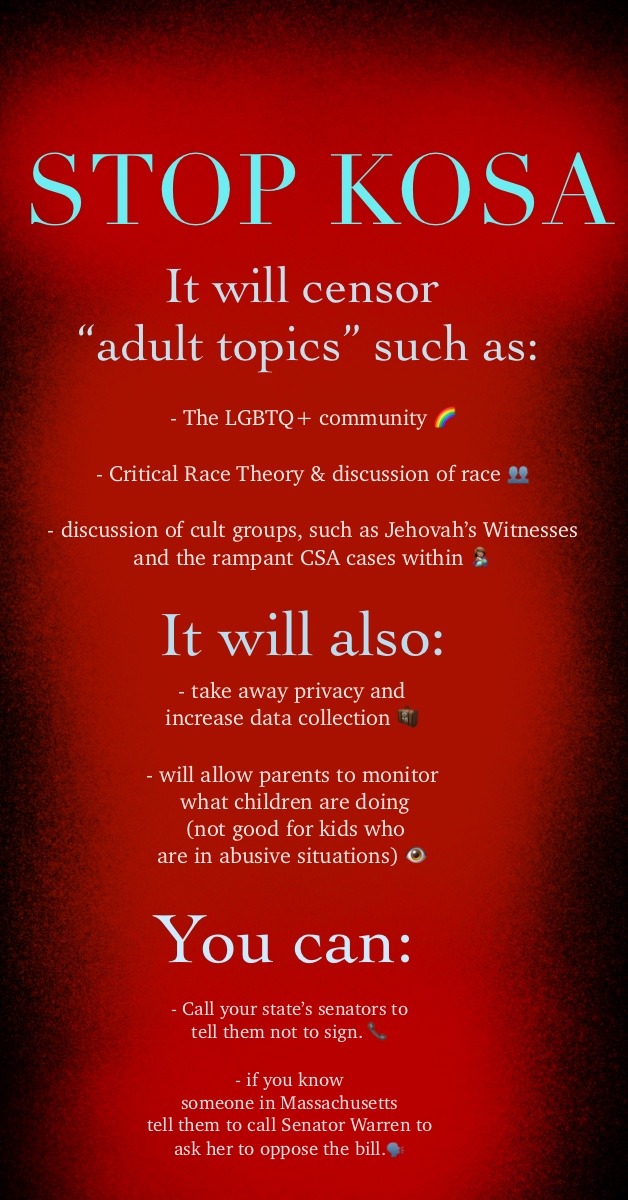

Please do what you can to stop this bill from being passed, and please share this message where you can. It's going to be very bad for pretty much everyone on the Internet if this bill is passed. If you're skeptical: There are resources online with more information about the KOSA bill, including articles written by lawmakers explaining why this bill is a terrible idea. There is even a Techdirt article by Mike Masnick titled "Marsha Blackburn Makes It Clear: KOSA Is Designed To Silence Trans People," in which he describes and posts a video of Senator Marsha Blackburn (who co-wrote the bill) explicitly stating that this bill would be used to target the trans community. So please share this in your stories, with your family and friends, and call your Senators to tell them not to support this bill. And if you know anyone in Massachusetts, tell them to call Senator Warren to ask her to withdraw her support of the bill. Thank you for your time and consideration.

EDIT - UPDATE:

Please read the post I linked below; it pertains to the status of the KOSA bill. Things are not looking good right now.

UPDATE #2:

Please look at this post!

#lgbtq#lgbtq+#lgbtqia#lgbtqplus#lgbtq community#lgbtqia2s+#lgbt#critical race theory#blacklivesmatter#black lives matter#race#exjw#ex jw#ex jehovah's witness#ex mormon#ex catholic#ex christian#ex cult#apostate#religious trauma#religion#stop kosa#kosa bill#kosa act#kosa#kids online safety act#internet censorship#censorship#us politics#politics

536 notes


Text

He’s not a famous name in the wider world, but copyright lawyer Mark Lemley is equal parts revered and feared within certain tech circles. Techdirt recently described him as a “LeBron James/Michael Jordan”-level legal thinker. A professor at Stanford, counsel at an IP-focused law firm in the Bay Area, and one of the 10 most-cited legal scholars of all time, Lemley is exactly the kind of person Silicon Valley heavyweights want on their side. Meta, however, has officially lost him.

Earlier this month, Lemley announced he was no longer going to defend the tech giant in Kadrey v. Meta, a lawsuit filed by a group of authors who allege the tech giant violated copyright law by training its AI tools on their books without their permission. The fact that he quit is a big deal. I wondered if it had something to do with how the case was going—but then I checked social media.

Lemley said on LinkedIn and Bluesky that he still believes Meta should win the lawsuit, and he wasn’t bowing out because of the merits of the case. Instead, he’d “fired” Meta because of what he characterized as the company and its CEO Mark Zuckerberg’s “descent into toxic masculinity and Neo-Nazi madness.” The move came on the heels of major policy shifts at Meta, including changes to its hateful conduct rules that now allow users to call gay and trans people “mentally ill.”

In a phone conversation, Lemley explained what motivated his decision to quit, and where he sees the broader legal landscape on AI and copyright going, including his suspicion that OpenAI may settle with The New York Times.

This interview has been edited for clarity and length.

Kate Knibbs: Could you go into more detail about how you arrived at your decision to quit representing Meta? What was the deciding factor?

Mark Lemley: I am very troubled by the direction in which the country is going, and I am particularly troubled that a number of folks in the tech industry seem to be willing to go along with it, no matter how extreme it gets. A number of policy changes struck me as things that I would not personally want to be associated with, from the full-throated endorsement of Trump, to the systematic cutting-back on protections for LGBTQ people, to the elimination of DEI programs. All of this is a pattern, I think, that seems to be following what we saw with Elon Musk a couple of years ago. We've seen where that path leads, and it's not somewhere good. Mark Zuckerberg is, of course, free to do whatever he wants to do, but I decided that that wasn't something I wanted to be associated with.

Did Meta make an effort to keep you? Did Zuckerberg say anything to you?

I’ve not had any conversation with Mark Zuckerberg, ever. But any internal conversations that were had is something I probably should not talk about.

Especially right now, it’s apparent that Zuckerberg isn’t the only tech mogul aligning himself with Trump. As you mentioned, Elon Musk comes to mind. But there are a lot of very powerful people in Silicon Valley who are pivoting hard towards MAGA policies. Do you have a list, now, of people you’d say no to representing? How are you approaching this?

I did think Zuckerberg and Musk have been particularly egregious in their behavior. But one of the nice things about being in the position I'm in—having a full-time job teaching rather than practicing law—is that I have probably greater freedom than a lot of people to say I don't need to take that money. Do I have a list? No, absolutely not.

But if you decide that the thing to do with your brand is to associate it with moves towards fascism, that is a decision that ought to have consequences. One of the challenges that a lot of people have is they don't feel that they can speak up, because it's going to cost them personally. So I think it's all the more important for people who can bear that cost to do so.

What has the reaction been like?

When I made this as a personal decision, I decided I should say something about it on social media, both because I thought it was important to explain why I was doing it, and also to explain that it wasn't a function of anything in the case, or my views about the case. I had no idea what I was in for, in terms of the reaction. It's been quite remarkable and overwhelmingly positive. There are plenty of trolls who think I'm an idiot and a libtard. But so far, no death threats, which is a welcome improvement from the past.

Have you heard from people who might follow in your footsteps?

This struck such a nerve, and there are obviously a lot of people who feel that they don't have the power to tell Meta or anyone else to go away, or to stand up for things that they think, and that's unfortunate.

I know your position remains that Meta is still in the right in its AI copyright disputes. But are there any cases in which you think the plaintiffs have a stronger argument?

The strongest arguments are the ones where the output of a work ends up being substantially similar to a particular copyrighted input. Most of the time, when that happens, it happens by accident or because they didn't do a good enough job trying to fix the problems that lead to it. But sometimes, it might be unavoidable. Turns out, it’s hard to purge all references to Mickey Mouse from your AI dataset, for instance. If people want to try to generate a Mickey Mouse image, it's often possible to do something that looks like Mickey Mouse. So there are a set of issues that might create copyright problems, but they're mostly not the ones currently being litigated.

The one exception to that is the UMG v. Anthropic case, because at least early on, earlier versions of Anthropic would generate the song lyrics for songs in the output. That's a problem. The current status of that case is they've put safeguards in place to try to prevent that from happening, and the parties have sort of agreed that, pending the resolution of the case, those safeguards are sufficient, so they're no longer seeking a preliminary injunction.

At the end of the day, the harder question for the AI companies is not "Is it legal to engage in training?" It’s "What do you do when your AI generates output that is too similar to a particular work?"

Do you expect the majority of these cases to go to trial, or do you see settlements on the horizon?

There may well be some settlements. Where I expect to see settlements is with big players who either have large swaths of content or content that's particularly valuable. The New York Times might end up with a settlement, and with a licensing deal, perhaps where OpenAI pays money to use New York Times content.

There's enough money at stake that we're probably going to get at least some judgments that set the parameters. The class-action plaintiffs, my sense is they have stars in their eyes. There are lots of class actions, and my guess is that the defendants are going to be resisting those and hoping to win on summary judgment. It's not obvious that they go to trial. The Supreme Court in the Google v. Oracle case nudged fair-use law very strongly in the direction of being resolved on summary judgment, not in front of a jury. I think the AI companies are going to try very hard to get those cases decided on summary judgment.

Why would it be better for them to win on summary judgment versus a jury verdict?

It's quicker and it's cheaper than going to trial. And AI companies are worried that they're not going to be viewed as popular, that a lot of people are going to think, "Oh, you made a copy of the work; that should be illegal," and not dig into the details of the fair-use doctrine.

There have been lots of deals between AI companies and media outlets, content providers, and other rights holders. Most of the time, these deals appear to be more about search than foundational models, or at least that’s how it’s been described to me. In your opinion, is licensing content to be used in AI search engines—where answers are sourced by retrieval augmented generation or RAG—something that’s legally obligatory? Why are they doing it this way?

If you're using retrieval augmented generation on targeted, specific content, then your fair-use argument gets more challenging. It's much more likely that AI-generated search is going to generate text taken directly from one particular source in the output, and that's much less likely to be a fair use. I mean, it could be—but the risky area is that it’s much more likely to be competing with the original source material. If instead of directing people to a New York Times story, I give them my AI prompt that uses RAG to take the text straight out of that New York Times story, that does seem like a substitution that could harm the New York Times. Legal risk is greater for the AI company.

What do you want people to know about the generative AI copyright fights that they might not already know, or they might have been misinformed about?

The thing that I hear most often that's wrong as a technical matter is this concept that these are just plagiarism machines. All they're doing is taking my stuff and then grinding it back out in the form of text and responses. I hear a lot of artists say that, and I hear a lot of lay people say that, and it's just not right as a technical matter. You can decide if generative AI is good or bad. You can decide it's lawful or unlawful. But it really is a fundamentally new thing we have not experienced before. The fact that it needs to train on a bunch of content to understand how sentences work, how arguments work, and to understand various facts about the world doesn't mean it's just kind of copying and pasting things or creating a collage. It really is generating things that nobody could expect or predict, and it's giving us a lot of new content. I think that's important and valuable.

39 notes


Quote

This isn’t about politics — it’s about the systematic dismantling of the very infrastructure that made American innovation possible. For those in the tech industry who supported this administration thinking it would mean less regulation or more “business friendly” policies: you’ve catastrophically misread the situation (which many people tried to warn you about). While overregulation (which, let’s face it, we didn’t really have) can be bad, it’s nothing compared to the destruction of the stable institutional framework that allowed American innovation to thrive in the first place.

Why Techdirt Is Now A Democracy Blog (Whether We Like It Or Not)

26 notes


Text

The person who started Techdirt posted an article about the content moderation cycle. Basically, people don't like moderation, so they start a site without moderation, then realize they need moderation, and then people get mad about moderation again and the cycle starts over.

And yet, people don't realize this is happening again.

I just saw someone make a really accurate point about why right-wing social media sites fail, and why Twitter will inevitably fall now that it's rapidly becoming a right-wing exclusive social media site. It also explains why right-wingers are so pissed about the mass migration to Bluesky:

MAGAts want an audience they can indoctrinate and radicalize. Normal people who don't care much about politics are not going to go on explicitly right-wing sites like Truth Social, but they will go onto sites like Twitter and Youtube and can be radicalized there.

MAGAts are, at their core, bullies and abusers. They want to have a steady supply of punching bags they can hurt. Of course there's infighting on sites like Gab and Rumble, but it's not the same. This is why they get so upset when someone blocks them; they claim it's about "free speech" (lol) but in reality they're just butthurt that one of their victims has cut off their ability to harass them. MAGAts are losing their minds over the Bluesky migration because Bluesky has much better blocking/moderation practices than Twitter (where Elon has stated his intent to remove or at least weaken the block button). So yeah, the conservative reaction to the ongoing Twitter exodus literally is just "how dare you leave and not let me use you as a punching bag?!?!!"

#i hate republicans for being hypocrites on free speech#techdirt is great for independent tech stuff#site founder named the Streisand effect#social media allows us to stay in our own reality

538 notes


Text

Mike Masnick explains Why Techdirt Is Now A Democracy Blog (Whether We Like It Or Not). “Right now, the story that matters most is how the dismantling of American institutions threatens everything else we cover.”

24 notes


Text

At long last, a meaningful step to protect Americans' privacy

This Saturday (19 Aug), I'm appearing at the San Diego Union-Tribune Festival of Books. I'm on a 2:30PM panel called "Return From Retirement," followed by a signing:

https://www.sandiegouniontribune.com/festivalofbooks

Privacy raises some thorny, subtle and complex issues. It also raises some stupid-simple ones. The American surveillance industry's shell-game is founded on the deliberate confusion of the two, so that the most modest and sensible actions are posed as reductive, simplistic and unworkable.

Two pillars of the American surveillance industry are credit reporting bureaux and data brokers. Both are unbelievably sleazy, reckless and dangerous, and neither faces any real accountability, let alone regulation.

Remember Equifax, the company that doxed every adult in America and was given a mere wrist-slap, and now continues to assemble nonconsensual dossiers on every one of us, without any material oversight improvements?

https://memex.craphound.com/2019/07/20/equifax-settles-with-ftc-cfpb-states-and-consumer-class-actions-for-700m/

Equifax's competitors are no better. Experian doxed the nation again, in 2021:

https://pluralistic.net/2021/04/30/dox-the-world/#experian

It's hard to overstate how fucking scummy the credit reporting world is. Equifax invented the business in 1899, when, as the Retail Credit Company, it used private spies to track queers, political dissidents and "race mixers" so that banks and merchants could discriminate against them:

https://jacobin.com/2017/09/equifax-retail-credit-company-discrimination-loans

As awful as credit reporting is, the data broker industry makes it look like a paragon of virtue. If you want to target an ad to "Rural and Barely Making It" consumers, the brokers have you covered:

https://pluralistic.net/2021/04/13/public-interest-pharma/#axciom

More than 650,000 of these categories exist, allowing advertisers to target substance abusers, depressed teens, and people on the brink of bankruptcy:

https://themarkup.org/privacy/2023/06/08/from-heavy-purchasers-of-pregnancy-tests-to-the-depression-prone-we-found-650000-ways-advertisers-label-you

These companies follow you everywhere, including to abortion clinics, and sell the data to just about anyone:

https://pluralistic.net/2022/05/07/safegraph-spies-and-lies/#theres-no-i-in-uterus

There are zillions of these data brokers, operating in an unregulated wild west industry. Many of them have been rolled up into tech giants (Oracle owns more than 80 brokers), while others merely do business with ad-tech giants like Google and Meta, who are some of their best customers.

As bad as these two sectors are, they're even worse in combination – the harms data brokers (sloppy, invasive) inflict on us when they supply credit bureaux (consequential, secretive, intransigent) are far worse than the sum of the harms of each.

And now for some good news. The Consumer Financial Protection Bureau, under the leadership of Rohit Chopra, has declared war on this alliance:

https://www.techdirt.com/2023/08/16/cfpb-looks-to-restrict-the-sleazy-link-between-credit-reporting-agencies-and-data-brokers/

They've proposed new rules limiting the trade between brokers and bureaux, under the Fair Credit Reporting Act, putting strict restrictions on the transfer of information between the two:

https://www.cnn.com/2023/08/15/tech/privacy-rules-data-brokers/index.html

As Karl Bode writes for Techdirt, this is long overdue and meaningful. Remember all the handwringing and chest-thumping about TikTok funneling Americans' data to the Chinese military? China doesn't need TikTok to get that data – it can buy it from data-brokers. For peanuts.

The CFPB action is part of a muscular style of governance that is characteristic of the best Biden appointees, who are some of the most principled and competent in living memory. These regulators have scoured the legislation that gives them the power to act on behalf of the American people and discovered an arsenal of action they can take:

https://pluralistic.net/2022/10/18/administrative-competence/#i-know-stuff

Alas, not all the Biden appointees have the will or the skill to pull this trick off. The corporate Dems' darlings are mired in #LearnedHelplessness, convinced that they can't – or shouldn't – use their prodigious powers to step in to curb corporate power:

https://pluralistic.net/2023/01/10/the-courage-to-govern/#whos-in-charge

And it's true that privacy regulation faces stiff headwinds. Surveillance is a public-private partnership from hell. Cops and spies love to raid the surveillance industries' dossiers, treating them as an off-the-books, warrantless source of unconstitutional personal data on their targets:

https://pluralistic.net/2021/02/16/ring-ring-lapd-calling/#ring

These powerful state actors reliably intervene to hamstring attempts at privacy law, defending the massive profits raked in by data brokers and credit bureaux. These profits, meanwhile, can be mobilized as lobbying dollars that work lawmakers and regulators from the private sector side. Caught in the squeeze between powerful government actors (the true "Deep State") and a cartel of filthy rich private spies, lawmakers and regulators are frozen in place.

Or, at least, they were. The CFPB's discovery that it had the power all along to curb commercial surveillance follows on from the FTC's similar realization last summer:

https://pluralistic.net/2022/08/12/regulatory-uncapture/#conscious-uncoupling

I don't want to pretend that all privacy questions can be resolved with simple, bright-line rules. It's not clear who "owns" many classes of private data – does your mother own the fact that she gave birth to you, or do you? What if you disagree about such a disclosure – say, if you want to identify your mother as an abusive parent and she objects?

But there are so many stupid-simple privacy questions. Credit bureaux and data-brokers don't inhabit any kind of grey area. They simply should not exist. Getting rid of them is a project of years, but it starts with hacking away at their sources of profits, stripping them of defenses so we can finally annihilate them.

I'm kickstarting the audiobook for "The Internet Con: How To Seize the Means of Computation," a Big Tech disassembly manual to disenshittify the web and make a new, good internet to succeed the old, good internet. It's a DRM-free book, which means Audible won't carry it, so this crowdfunder is essential. Back now to get the audio, Verso hardcover and ebook:

http://seizethemeansofcomputation.org

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/08/16/the-second-best-time-is-now/#the-point-of-a-system-is-what-it-does

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by-sa/3.0/deed.en

#pluralistic#privacy#data brokers#cfpb#consumer finance protection bureau#regulation#regulatory nihilism#regulatory capture#trustbusting#monopoly#antitrust#private public partnerships from hell#deep state#photocopier kickers#rohit chopra#learned helplessness#equifax#credit reporting#credit reporting bureaux#experian

310 notes


Text

Good article from TechDirt on how Heritage Foundation/Action is LYING about KOSA. Good source to read through and share on social media and send to Democrats.

Just another reason you should oppose KOSA.

#pro choice#pro abortion#fuck pro lifers#reproductive freedom#reproductive rights#kosa bill#fuck kosa#anti censorship

47 notes


Text

If you are concerned about AI reading your fanfics, lock your fics so they are viewable only to logged-in AO3 users.

Found out FanFiction.net’s privacy policy prevents AI from scraping it. As a user of that site, which is primarily where I post, I’m pretty happy about this.

But I’ve heard AO3 isn’t as secure, so I won’t be moving my fics there until I know for sure they are safe from the thieves.

17 notes
