#Walmart with Python and BeautifulSoup

How to Extract Product Data from Walmart with Python and BeautifulSoup

This tutorial explains how to extract product data from Walmart with Python and BeautifulSoup. Get the best Walmart product data scraping services from iWeb Scraping at affordable prices.

Web Scraping Walmart: Extracting Inventory and Sales Insights

Introduction

In today's digital commerce, data is power. Brands, marketers, analysts, and savvy shoppers all want up-to-date information on inventory levels, sales performance, and pricing trends, especially at retail giants like Walmart. Because Walmart's online store lists millions of products from thousands of suppliers across its enormous global footprint, Web Scraping Walmart has become a useful way to build actionable business intelligence.

In this article, you'll learn how to extract Walmart inventory data and scrape Walmart sales insights, along with effective tools, ethical considerations, and real-world applications of the technique. Whether you are a data enthusiast, an e-commerce entrepreneur, or a competitive intelligence professional, this guide explains how Web Scraping Walmart inventory data works.

Why Scrape Walmart?

Before discussing the “how,” an explanation of the “why” is in order. Walmart has one of the largest and most complicated retail ecosystems in the world, covering everything from grocery supplies to electronics, apparel, and automotive supplies.

Here’s why Walmart is a goldmine of data:

1. Dynamic Pricing:

Walmart frequently updates its prices to stay competitive. Tracking these changes gives businesses a competitive edge.

2. Inventory Monitoring:

Understanding stock levels across regions helps brands optimize supply chains.

3. Sales Trends:

By analyzing pricing and availability over time, you can deduce sales performance.

4. Product Launches & Discontinuations:

Stay ahead of the curve by identifying when new items are introduced or discontinued.

5. Competitor Benchmarking:

Monitor your competitors' products listed on Walmart to shape your own strategies.

Understanding the Basics of Web Scraping

At its core, Web Scraping Walmart means programmatically extracting data from Walmart's public-facing website. Unlike manually copying data, web scraping automates the process, pulling large volumes of data quickly and efficiently.

The process typically involves:

● Sending HTTP requests to Walmart pages.

● Parsing the HTML responses to locate and extract data.

● Storing the extracted data in databases or spreadsheets for analysis.

Common data points include:

● Product names

● Prices

● Stock availability

● Ratings and reviews

● Shipping information

● Category hierarchies

Tools such as Python libraries (BeautifulSoup, Scrapy) and browser automation tools that can drive headless browsers (Selenium, Puppeteer) are widely used in this domain.

Extracting Walmart Inventory Data: A Closer Look

What is Inventory Data?

Inventory data refers to the available stock levels of products listed on Walmart’s platform. Monitoring inventory levels can reveal:

● Product demand patterns

● Stock-out situations

● Overstock scenarios

● Seasonal fluctuations

How to Scrape Walmart Inventory Data

Step 1: Identify Product URLs

First, you need a list of Walmart product URLs. These can be gathered by scraping category pages, search results, or using Walmart’s sitemap (if accessible).

Step 2: Inspect the Web Page Structure

Use browser developer tools to inspect the product page. Walmart usually displays inventory status like:

● “In Stock”

● “Out of Stock”

● “Only X left!”

These cues are often embedded in specific HTML tags or within dynamically loaded JSON embedded in scripts.
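When the status lives in JSON embedded in a script tag, it can be read without rendering the page. The following is a minimal sketch, assuming the data sits in a script element with the id `__NEXT_DATA__` and that the JSON has the keys shown; both the id and the key names are assumptions for illustration, so inspect the live page to find the real ones.

```python
import json

from bs4 import BeautifulSoup

# Hypothetical page fragment; a real payload is far larger and its
# structure may differ from this sample.
SAMPLE_HTML = """
<html><body>
<script id="__NEXT_DATA__" type="application/json">
{"props": {"product": {"name": "Example TV", "availabilityStatus": "IN_STOCK"}}}
</script>
</body></html>
"""


def extract_embedded_json(html, script_id="__NEXT_DATA__"):
    """Return the parsed JSON from the script tag with the given id, or None."""
    soup = BeautifulSoup(html, "html.parser")
    tag = soup.find("script", id=script_id)
    if tag is None or not tag.string:
        return None
    return json.loads(tag.string)


data = extract_embedded_json(SAMPLE_HTML)
# The key path below matches only the sample above (assumed structure).
status = data["props"]["product"]["availabilityStatus"]
print(status)
```

Returning None when the tag is missing lets the caller fall back to HTML parsing or a rendered page instead of crashing.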

Step 3: Extract Inventory Data

Here’s a basic Python snippet using BeautifulSoup:

    import requests
    from bs4 import BeautifulSoup

    url = 'https://www.walmart.com/ip/your-product-id'
    headers = {'User-Agent': 'Mozilla/5.0'}
    response = requests.get(url, headers=headers)
    soup = BeautifulSoup(response.text, 'html.parser')

    # The class name may not match Walmart's current markup, so guard against None.
    status_tag = soup.find('div', {'class': 'prod-ProductOffer-oosMsg'})
    inventory_status = status_tag.text.strip() if status_tag else 'Unknown'
    print(inventory_status)

Note: Walmart uses dynamic content loading. You might need Selenium to fully render JavaScript-heavy pages.

Step 4: Automate and Scale

Once you have a working script, scale it to hundreds or thousands of products. Respect Walmart’s terms of service and implement polite scraping practices:

● Use proxy rotation

● Add delays between requests

● Handle errors gracefully
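The delay and error-handling practices above can be sketched as a small wrapper around whatever function performs the request. This is only an illustration: `fetch_with_retries` and `crawl` are hypothetical helper names, and the `fetch` argument stands in for your own request function (for example one built on requests).

```python
import random
import time


def fetch_with_retries(fetch, url, max_attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url), retrying with exponential backoff plus jitter on failure."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fetch(url)
        except Exception as exc:
            if attempt == max_attempts:
                raise  # give up after the last attempt
            delay = base_delay * 2 ** (attempt - 1) + random.uniform(0, 0.5)
            print(f"Attempt {attempt} failed ({exc}); retrying in {delay:.1f}s")
            sleep(delay)


def crawl(urls, fetch, pause=2.0, sleep=time.sleep):
    """Fetch each URL in turn, pausing between requests; failed URLs map to None."""
    results = {}
    for url in urls:
        try:
            results[url] = fetch_with_retries(fetch, url, sleep=sleep)
        except Exception:
            results[url] = None  # record the failure and keep going
        sleep(pause)  # fixed delay between requests to avoid hammering the server
    return results
```

Passing `sleep` as a parameter keeps the functions easy to test without real waiting.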

Insights You Can Derive

● Regional Stock Levels: Cross-reference inventory status by changing ZIP codes.

● Stock Movements: Track over time to detect restocks or shortages.

● Supplier Performance: Detect patterns in stock availability by supplier or brand.
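Stock movements can be derived from a series of status observations. Here is a minimal sketch, assuming each observation is a (timestamp, status) pair using the status strings quoted earlier; `stock_events` is a hypothetical helper name and the sample history is made up.

```python
def stock_events(history):
    """Given (timestamp, status) pairs in time order, return restock and stock-out events."""
    events = []
    for (_, previous), (timestamp, current) in zip(history, history[1:]):
        if previous == "In Stock" and current == "Out of Stock":
            events.append((timestamp, "stock-out"))
        elif previous == "Out of Stock" and current == "In Stock":
            events.append((timestamp, "restock"))
    return events


# Made-up observations for one product.
history = [
    ("2024-01-01", "In Stock"),
    ("2024-01-02", "Out of Stock"),
    ("2024-01-03", "Out of Stock"),
    ("2024-01-04", "In Stock"),
]
print(stock_events(history))
```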

Scrape Walmart Sales Insights: Unlocking Patterns

Sales insights go beyond just pricing and availability. By scraping Walmart sales insights, you’re aiming to infer:

● Sales velocity

● Pricing strategies

● Promotional impacts

● Consumer preferences

Step 1: Monitor Price Changes

Frequent price drops followed by "out of stock" status can signal high sales velocity. Track price changes over time to build a price history.

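    # The class name may not match Walmart's current markup, so guard against None.
    price_tag = soup.find('span', {'class': 'price-characteristic'})
    price = price_tag.get('content') if price_tag else None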

Step 2: Analyze Ratings and Reviews

Products with increasing reviews and ratings are likely top sellers. Scrape timestamps of reviews to map review velocity.
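Review velocity can be approximated by counting reviews per week once the timestamps are parsed into dates. A small sketch with made-up dates; `reviews_per_week` is a hypothetical helper name.

```python
from collections import Counter
from datetime import date


def reviews_per_week(review_dates):
    """Count reviews per ISO (year, week), sorted chronologically."""
    counts = Counter(tuple(d.isocalendar())[:2] for d in review_dates)
    return sorted(counts.items())


# Made-up review dates for one product.
review_dates = [
    date(2024, 1, 1),
    date(2024, 1, 3),
    date(2024, 1, 8),
    date(2024, 1, 9),
    date(2024, 1, 10),
]
print(reviews_per_week(review_dates))
```

A rising count from week to week suggests growing sales, although reviews lag purchases and only a fraction of buyers leave one.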

Step 3: Track "Best Seller" and "Popular Pick" Badges

Walmart often marks hot-selling products with badges. These are reliable indicators of high sales performance.

Step 4: Monitor Inventory Depletion

A rapid decrease in stock across multiple regions often signals a spike in sales.

Step 5: Utilize Historical Tracking

Record inventory levels and prices over days, weeks, or months to predict sales cycles and promotional windows.
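One simple way to keep such a history is a timestamped snapshot table. The sketch below uses SQLite from the Python standard library; the table name, column names, and sample values are assumptions chosen for illustration.

```python
import sqlite3


def init_db(conn):
    """Create the snapshot table if it does not exist yet."""
    conn.execute(
        """CREATE TABLE IF NOT EXISTS snapshots (
               product_id TEXT, observed_at TEXT, price REAL, status TEXT)"""
    )


def record_snapshot(conn, product_id, observed_at, price, status):
    """Store one observation of a product's price and stock status."""
    conn.execute(
        "INSERT INTO snapshots VALUES (?, ?, ?, ?)",
        (product_id, observed_at, price, status),
    )
    conn.commit()


def price_history(conn, product_id):
    """Return (observed_at, price) rows for a product in time order."""
    rows = conn.execute(
        "SELECT observed_at, price FROM snapshots "
        "WHERE product_id = ? ORDER BY observed_at",
        (product_id,),
    )
    return rows.fetchall()


conn = sqlite3.connect(":memory:")  # use a file path to persist between runs
init_db(conn)
record_snapshot(conn, "12345", "2024-01-01", 199.0, "In Stock")
record_snapshot(conn, "12345", "2024-01-08", 179.0, "In Stock")
print(price_history(conn, "12345"))
```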

Step 6: Advanced Data Enrichment

Enhance your scraped data with external sources:

● Social media mentions

● Advertising campaigns

● Seasonal demand forecasts

Challenges in Web Scraping Walmart

While Web Scraping Walmart is a powerful approach, it comes with its challenges:

1. Dynamic Content:

Walmart heavily uses JavaScript for rendering product details. Tools like Selenium or Puppeteer are essential for accurate scraping.

2. Anti-Scraping Measures:

Rate limiting, CAPTCHA challenges, and IP bans are common. Use ethical scraping tactics to mitigate risks.

3. Data Structure Variability:

Walmart constantly updates its website design. Regularly update your scrapers to adapt to changes.

4. Legal Considerations:

Always review Walmart’s terms of service and consult legal guidance. Ethical data scraping focuses on publicly accessible data and respects fair use.

Ethical Considerations: Responsible Web Scraping

When it comes to Web Scraping Walmart inventory data, ethics and legality must be at the forefront of your strategy.

● Respect Robots.txt:

Check Walmart’s robots.txt file to understand which parts of the site are disallowed for automated access.

● Avoid Server Overload:

Implement throttling and delay mechanisms to avoid overwhelming Walmart’s servers.

● Use Proxies and User Agents:

Rotate IP addresses and use realistic user-agent headers to mimic human browsing behavior.

● Data Usage:

Use scraped data responsibly. Avoid using it for malicious activities or data resale.
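The robots.txt check mentioned above can be automated with Python's standard library. In this sketch the rules are a made-up sample and `my-research-bot` is a hypothetical user agent; for real use, load the live file rather than relying on this sample.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for illustration only; they are not Walmart's actual rules.
SAMPLE_RULES = """\
User-agent: *
Disallow: /account/
Disallow: /cart
""".splitlines()

parser = RobotFileParser()
parser.parse(SAMPLE_RULES)


def is_allowed(url, user_agent="my-research-bot"):
    """Return True if the parsed rules permit this user agent to fetch the URL."""
    return parser.can_fetch(user_agent, url)


print(is_allowed("https://www.walmart.com/ip/123"))
print(is_allowed("https://www.walmart.com/cart"))
```

To check the live file instead, call `parser.set_url("https://www.walmart.com/robots.txt")` followed by `parser.read()` in place of `parser.parse(...)`.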

Real-World Applications

E-commerce Competitor Analysis

Brands selling on Walmart can benchmark their products against competitors on:

● Pricing strategies

● Stock levels

● Promotions and discounts

Dynamic Pricing Models

Use Walmart’s pricing patterns to dynamically adjust your own prices across multiple platforms.

Inventory Forecasting

By continuously extracting Walmart inventory data, brands can predict demand fluctuations and optimize supply chains accordingly.

Marketplace Research

Entrepreneurs looking to enter Walmart Marketplace can use sales insights to identify high-demand, low-competition niches.

Ad Spend Optimization

Link sales velocity with advertising spend to measure ROI on Walmart-sponsored ads.

Tools & Technologies for Walmart Web Scraping

Let’s explore the best tools for Web Scraping Walmart data efficiently.

Python Libraries

● Requests:

For sending HTTP requests.

● BeautifulSoup:

For parsing HTML.

● Selenium:

For handling dynamic content and automation.

● Scrapy:

A robust scraping framework with built-in throttling and proxy support.

Headless Browsers

● Puppeteer (Node.js):

Excellent for scraping modern JavaScript-heavy websites.

● Playwright:

Supports multiple browsers and is fast and reliable.

Proxy Services

● Bright Data

● Oxylabs

● ScraperAPI

Data Storage & Visualization

● Pandas and SQL databases for storing data.

● Power BI, Tableau, or Google Data Studio (now Looker Studio) for visualizing insights.

Automating & Scaling Your Scraping Projects

When scaling your Web Scraping Walmart efforts, consider:

● Task Scheduling:

Use cron jobs or cloud functions.

● Distributed Scraping:

Deploy your scraper on cloud platforms like AWS Lambda, Google Cloud Functions, or Azure Functions.

● Data Pipelines:

Automate data cleaning, transformation, and loading (ETL) processes.

● Dashboarding:

Build real-time dashboards to monitor inventory and sales trends.

The Future of Data Extraction from Walmart

With advancements in AI and machine learning, the future of extracting Walmart inventory data and scraping Walmart sales insights looks even more promising.

● Predictive Analytics:

Predict out-of-stock scenarios before they happen.

● Sentiment Analysis:

Combine scraped reviews with AI to understand consumer sentiment.

● Automated Alerts:

Receive real-time notifications for inventory or price changes.

● AI-Powered Scrapers:

Use natural language processing to adapt to page structure changes.

Conclusion

Walmart web scraping offers vast opportunities to gain critical insights into inventory and sales, allowing firms to base their decisions on data. From inventory data to sales insights, scraping Walmart makes it possible to discover patterns, predict trends, and formulate better strategies.

Know More : https://www.crawlxpert.com/blog/Web-Scraping-Walmart-Extracting-Inventory-and-Sales-Insights

How to Scrape Product Reviews from eCommerce Sites?

Know More>>https://www.datazivot.com/scrape-product-reviews-from-ecommerce-sites.php

Introduction

In the digital age, eCommerce sites have become treasure troves of data, offering insights into customer preferences, product performance, and market trends. One of the most valuable data types available on these platforms is product reviews. Scraping product review data from eCommerce sites can provide businesses with detailed customer feedback, helping them enhance their products and services. This blog will guide you through the process of scraping review data from eCommerce sites, exploring the tools, techniques, and best practices involved.

Why Scrape Product Reviews from eCommerce Sites?

Scraping product reviews from eCommerce sites is essential for several reasons:

Customer Insights: Reviews provide direct feedback from customers, offering insights into their preferences, likes, dislikes, and suggestions.

Product Improvement: By analyzing reviews, businesses can identify common issues and areas for improvement in their products.

Competitive Analysis: Scraping reviews from competitor products helps in understanding market trends and customer expectations.

Marketing Strategies: Positive reviews can be leveraged in marketing campaigns to build trust and attract more customers.

Sentiment Analysis: Understanding the overall sentiment of reviews helps in gauging customer satisfaction and brand perception.

Tools for Scraping eCommerce Sites Reviews Data

Several tools and libraries can help you scrape product reviews from eCommerce sites. Here are some popular options:

BeautifulSoup: A Python library designed to parse HTML and XML documents. It generates parse trees from page source code, enabling easy data extraction.

Scrapy: An open-source web crawling framework for Python. It provides a powerful set of tools for extracting data from websites.

Selenium: A web testing library that can be used for automating web browser interactions. It's useful for scraping JavaScript-heavy websites.

Puppeteer: A Node.js library that gives a higher-level API to control Chromium or headless Chrome browsers, making it ideal for scraping dynamic content.

Steps to Scrape Product Reviews from eCommerce Sites

Step 1: Identify Target eCommerce Sites

First, decide which eCommerce sites you want to scrape. Popular choices include Amazon, eBay, Walmart, and Alibaba. Ensure that scraping these sites complies with their terms of service.

Step 2: Inspect the Website Structure

Before scraping, inspect the webpage structure to identify the HTML elements containing the review data. Most browsers have built-in developer tools that can be accessed by right-clicking on the page and selecting "Inspect" or "Inspect Element."

Step 3: Set Up Your Scraping Environment

Install the necessary libraries and tools. For example, if you're using Python, you can install BeautifulSoup, Scrapy, and Selenium using pip:

    pip install beautifulsoup4 requests scrapy selenium

Step 4: Write the Scraping Script

Here's a basic example of how to scrape product reviews from an eCommerce site using BeautifulSoup and requests:
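A minimal sketch of such a script follows. The tag and class names (`review`, `review-author`, `review-rating`, `review-text`) are hypothetical placeholders and the sample HTML is made up; replace them with the elements you identified in Step 2.

```python
import requests
from bs4 import BeautifulSoup


def fetch_html(url):
    """Download a page and return its HTML, raising on HTTP errors."""
    headers = {"User-Agent": "Mozilla/5.0"}
    response = requests.get(url, headers=headers, timeout=10)
    response.raise_for_status()
    return response.text


def parse_reviews(html):
    """Extract author, rating, and text from each review block (assumed markup)."""
    soup = BeautifulSoup(html, "html.parser")
    reviews = []
    for block in soup.find_all("div", class_="review"):
        author = block.find("span", class_="review-author")
        rating = block.find("span", class_="review-rating")
        text = block.find("p", class_="review-text")
        reviews.append({
            "author": author.get_text(strip=True) if author else None,
            "rating": rating.get_text(strip=True) if rating else None,
            "text": text.get_text(strip=True) if text else None,
        })
    return reviews


# Made-up markup standing in for a real product page.
SAMPLE_HTML = """
<div class="review">
  <span class="review-author">Alex</span>
  <span class="review-rating">5</span>
  <p class="review-text">Great battery life.</p>
</div>
<div class="review">
  <span class="review-author">Sam</span>
  <p class="review-text">Camera could be better.</p>
</div>
"""

print(parse_reviews(SAMPLE_HTML))
```

For a live page, call `parse_reviews(fetch_html(url))` with the product URL you are permitted to scrape.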

Step 5: Handle Pagination

Most eCommerce sites paginate their reviews. You'll need to handle this to scrape all reviews. This can be done by identifying the URL pattern for pagination and looping through all pages:
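A pagination loop might look like the sketch below. It assumes a hypothetical `?page=N` URL pattern and stops at the first page that yields no reviews; the fetch and parse functions are passed in, so any implementation can be plugged in. The demo uses fake pages instead of real requests.

```python
def scrape_all_pages(base_url, fetch_page, parse_reviews, max_pages=50):
    """Collect reviews from base_url?page=1, 2, ... until a page yields none."""
    all_reviews = []
    for page in range(1, max_pages + 1):
        html = fetch_page(f"{base_url}?page={page}")
        reviews = parse_reviews(html)
        if not reviews:
            break  # an empty page is treated as the end of the reviews
        all_reviews.extend(reviews)
    return all_reviews


# Fake pages standing in for real responses (example.com is a placeholder).
FAKE_PAGES = {
    "https://example.com/reviews?page=1": ["review a", "review b"],
    "https://example.com/reviews?page=2": ["review c"],
}

collected = scrape_all_pages(
    "https://example.com/reviews",
    fetch_page=lambda url: url,                       # pretend the URL is the HTML
    parse_reviews=lambda html: FAKE_PAGES.get(html, []),
)
print(collected)
```

The `max_pages` cap guards against sites that keep returning the last page for out-of-range page numbers.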

Step 6: Store the Extracted Data

Once you have extracted the reviews, store them in a structured format such as CSV, JSON, or a database. Here's an example of how to save the data to a CSV file:
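The sketch below writes review dictionaries as CSV using the standard library. The field names and sample reviews are assumptions for illustration, and the demo writes to an in-memory buffer rather than a file.

```python
import csv
import io


def save_reviews_csv(reviews, file_obj, fieldnames=("author", "rating", "text")):
    """Write review dictionaries to an open text file as CSV with a header row."""
    writer = csv.DictWriter(file_obj, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(reviews)


# Made-up reviews for the demo.
reviews = [
    {"author": "Alex", "rating": "5", "text": "Great battery life"},
    {"author": "Sam", "rating": "3", "text": "Camera could be better"},
]

buffer = io.StringIO()
save_reviews_csv(reviews, buffer)
print(buffer.getvalue())
```

To write a real file, pass `open("reviews.csv", "w", newline="", encoding="utf-8")` as `file_obj` inside a `with` block.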

Step 7: Use a Reviews Scraping API

For more advanced needs or if you prefer not to write your own scraping logic, consider using a Reviews Scraping API. These APIs are designed to handle the complexities of scraping and provide a more reliable way to extract eCommerce review data.

Step 8: Best Practices and Legal Considerations

Respect the site's terms of service: Ensure that your scraping activities comply with the website’s terms of service.

Use polite scraping: Implement delays between requests to avoid overloading the server. This is known as "polite scraping."

Handle CAPTCHAs and anti-scraping measures: Be prepared to handle CAPTCHAs and other anti-scraping measures. Using services like ScraperAPI can help.

Monitor for changes: Websites frequently change their structure. Regularly update your scraping scripts to accommodate these changes.

Data privacy: Ensure that you are not scraping any sensitive personal information and respect user privacy.

Conclusion

Scraping product reviews from eCommerce sites can provide valuable insights into customer opinions and market trends. By using the right tools and techniques, you can efficiently extract and analyze review data to enhance your business strategies. Whether you choose to build your own scraper using libraries like BeautifulSoup and Scrapy or leverage a Reviews Scraping API, the key is to approach the task with a clear understanding of the website structure and a commitment to ethical scraping practices.

By following the steps outlined in this guide, you can successfully scrape product reviews from eCommerce sites and gain the competitive edge you need to thrive in today's digital marketplace. Trust Datazivot to help you unlock the full potential of review data and transform it into actionable insights for your business. Contact us today to learn more about our expert scraping services and start leveraging detailed customer feedback for your success.

A Beginner's Guide: What You Need To Know About Product Review Scraping

In the world of online shopping, knowing what customers think about products is crucial for businesses to beat their rivals. Product review scraping is a magic tool that helps businesses understand what customers like or don't like about products. It's like opening a treasure chest of opinions, ratings, and stories from customers, all with just a few clicks. With product review scraping, you can automatically collect reviews from big online stores like Amazon or Walmart, as well as from special review websites like Yelp or TripAdvisor.

To start scraping product reviews, you need the right tools. Libraries such as BeautifulSoup and Scrapy parse and crawl web pages, and browser automation frameworks such as Selenium handle pages that load their content dynamically. These tools help beginners explore websites, grab the information they need, and deal with tricky cases like pages that change constantly.

What is Product Review Scraping?

The process of scraping product reviews involves collecting data from various internet sources, including e-commerce websites, forums, social media, and review platforms. Product review scraping can be compared to having a virtual robot that navigates through the internet to gather various opinions on different products from people. Picture yourself in the market for a new phone, seeking opinions from others before making a purchase. Instead of reading every review yourself, you can use a tool or program to do it for you.

The task requires checking multiple websites, such as Amazon or Best Buy, to collect user reviews and compile all comments and ratings for the particular phone. It's like having a super-fast reader that can read thousands of reviews in a very short time. Once all the reviews are collected, you can compare them to see if people generally like the phone or if there are common complaints. For example, you might find that many people praise the battery life while some complain about the camera quality. This method eliminates the need to read through each review individually to determine which features of the product are great and which ones are not so great.
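As a rough illustration of that comparison, the sketch below counts how many collected reviews mention each product aspect. The reviews and aspect keywords are made up, `tally_aspects` is a hypothetical helper name, and simple substring matching is only a starting point, not real sentiment analysis.

```python
from collections import Counter


def tally_aspects(reviews, aspects):
    """Count how many reviews mention each aspect keyword (case-insensitive)."""
    counts = Counter()
    for review in reviews:
        text = review.lower()
        for aspect in aspects:
            if aspect in text:
                counts[aspect] += 1
    return counts


# Made-up reviews for one phone.
reviews = [
    "The battery life is great",
    "Great battery, poor camera quality",
    "Camera is blurry",
]
print(tally_aspects(reviews, ["battery", "camera"]))
```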

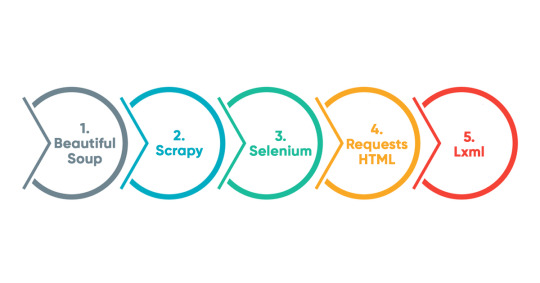

Tools to Scrape Product Reviews

These Python libraries make it easy to gather product reviews from numerous websites, allowing businesses to gain valuable insights from customer feedback. The best tool depends on your requirements and preferences, as each has distinct strengths and purposes.

The popular Python tools for scraping product reviews are:

Beautiful Soup

It's like having a magic tool that helps you read and understand web pages. With Beautiful Soup, you can easily find and collect information from websites, which makes it a popular choice for scraping product reviews from ecommerce websites.

Scrapy

Scrapy acts as a super-fast spider that crawls through websites to collect data. It is well suited to scraping product reviews from several websites because it can handle large numbers of web pages and extract the information you want.

Selenium

Selenium is like a virtual robot that can click on buttons, fill out forms, and interact with websites just like a real person would. This makes it handy for extracting product reviews from websites that make extensive use of JavaScript.

Requests-HTML

Imagine asking a website for information, like asking a friend for help. That's what Requests-HTML does - it lets you make requests to websites and easily find the data you're looking for in the response.

Lxml

Lxml is like a super-powered magnifying glass for web pages. It is a fast library for extracting information from HTML and XML documents, making it valuable for scraping product reviews.

What are the Benefits of Product Review Scraping?

Product review scraping captures customer opinions and product mentions that are scattered across the web. This benefits businesses in several ways:

Understanding the Market

By collecting feedback from different sources, a company can learn what buyers are saying about the products in its market. This helps it determine which products attract customers and how to market them.

Checking out Competitors

Businesses can study the reviews of similar products to see how they compare. This shows where leading competitors do well or fall short, and how the business can improve its own products.

Listening to Customers

Reviews convey, in customers' own words, what their experience with a product was like. This makes it easier for businesses to identify the strengths and weaknesses of their products and marketing campaigns.

Keeping an Eye on Prices

Review texts often mention whether a product feels overpriced or like a good deal. This informs the prices businesses set on their products, helping keep customers happy and ensuring they get value for their money.

Protecting their Reputation

By monitoring reviews, businesses can respond to negative comments and demonstrate that they value their customers' viewpoints. This helps them protect their reputation and earn customers' trust.

Overall, product review scraping is a convenient approach that allows companies to gather useful feedback, make the right decisions, and retain their strong positions.

What are the Challenges of Product Review Scraping?

Data Quality

When scraping product reviews, it's essential to make sure that the information gathered is accurate and reliable by using expert web scraping services. However, reviews often contain typos, slang, or unclear language, which can make it hard to understand what customers are saying. When analyzing the data, this might result in mistakes or misinterpretations.

Website Changes

Websites that host reviews frequently update their layout or structure. This can cause problems for scraping tools because they may no longer be able to find and collect the reviews in the same way. Businesses need to constantly monitor and update their scraping methods to keep up with these changes.

Legal and Ethical Issues

Scraping data from websites without permission can raise legal and ethical concerns. Numerous websites include terms of service that forbid scraping, and doing so without authorization could infringe upon copyright laws. Moreover, collecting personal data without consent can lead to privacy issues.

Anti-Scraping Measures

Some websites use measures like CAPTCHA challenges or blocking IP addresses to prevent automated scraping. These measures can make it difficult to collect the data needed for analysis.

Volume and Scale

Collecting and processing large amounts of review data from multiple sources can be challenging, even when using ecommerce data scraping services. Significant computing resources and technical expertise are necessary, and large workloads can make the scraping process run more slowly. It is crucial to have efficient techniques for organizing, storing, and interpreting large amounts of data.

Review Spam and Bias

Review platforms may contain fake or biased reviews, which can skew the analysis results. Methods for removing spam and recognizing authentic reviews must be developed to guarantee the accuracy of the analysis.

Multilingual Data

When scraping product reviews from ecommerce websites and international websites, businesses may encounter reviews in different languages. This raises issues with linguistic variety and translation. Language hurdles and cultural variations must be carefully taken into account when correctly understanding and interpreting evaluations written in several languages.

Dynamic Content

Reviews often contain dynamic content such as images, videos, or emojis. This content may be too complex for traditional scraping approaches to collect correctly. Effective dynamic content extraction and analysis require sophisticated techniques.

Why Perform Product Review Scraping?

Product review scraping involves using special tools or software to gather information from various places on the internet where people leave reviews about products. This information can come from online stores like Amazon, review websites, social media platforms, or forums.

Continue reading https://www.reviewgators.com/know-about-product-review-scraping.php

How to Extract Product Data from Walmart with Python and BeautifulSoup

Walmart is a leading retailer with both online and physical stores around the world. With a large product portfolio and $519.93 billion in net sales, Walmart dominates the retail market and also offers ample data that can be used to gain insights into product portfolios, customer behavior, and market trends.

In this tutorial, we will extract product data from Walmart and store it in a SQL database. We use Python to scrape the website. The package used for the scraping exercise is BeautifulSoup. We also use Selenium, which lets us interact with Google Chrome.

Scrape Walmart Product Data

The first step is importing all the required libraries. Once the packages are imported, we set up the scraper's flow. To modularize the code, we first investigated the URL structure of Walmart product pages. A URL is the address of a web page and uniquely identifies that page.

In this example, we made a list of page URLs within Walmart's electronics department, along with a list of names for the different product categories. We will use the names later to label the tables or datasets.

You may add or remove subcategories for any major product category. All you need to do is go to the subcategory page and copy its URL. That address is common to all the products listed on the page. You can do this for most product categories. In the given image, we showed categories such as Toys and Food for the demo.

We stored the URLs in a list because it makes data processing in Python much easier. Once all the lists are ready, we can move on to writing the scraper.

We also wrote a loop to automate the extraction, although it can be run for just one category and subcategory. Suppose we wish to extract data for only one subcategory, such as TVs in the 'Electronics' category. Later on, we will show how to scale the code to all the subcategories.

Here, setting pg=0 makes sure that we extract data for only the first URL in the list 'url_sets' (Python lists are zero-indexed), i.e. only for the first subcategory in the main category. The next step is to decide how many listing pages you wish to open and scrape. For this demo, we extract data from the first 10 pages.

Then, we loop through the full length of the top_n list, i.e. 10 times, opening each listing page and scraping its complete structure as HTML. This is like inspecting the elements of a web page and copying the resulting HTML code. We added one restriction: only the part of the HTML that lies inside the 'body' tag is scraped and stored as an object, because the relevant product data is found only within the page's HTML body.

This object can be used to pull the relevant data for the products listed on the active page. We identified that the tag holding product data is a 'div' tag with the class 'search-result-gridview-item-wrapper'. In the next step, we therefore used the find_all function to collect all occurrences of that class. We stored this data in a temporary object named 'codelist'.

After that, we built the URLs of the individual products. We observed that every product page URL begins with the base string 'https://walmart.com/ip'. The unique identifier is appended after this string. It is the same string value that was scraped from the 'search-result-gridview-item-wrapper' items saved above. In this step, we therefore loop through the temporary object codelist to construct the complete URL of each product page.
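The extraction and URL construction described above can be sketched on a made-up listing fragment. The class name, the `data-id` attribute, and the base URL come from this tutorial; the sample HTML and the `product_urls` helper are illustrative, and Walmart's current markup may differ.

```python
from bs4 import BeautifulSoup

BASE_URL = "https://walmart.com/ip"

# Made-up listing fragment; the duplicate id shows why the codes are de-duplicated.
SAMPLE_LISTING = """
<div class="search-result-gridview-item-wrapper" data-id="111"></div>
<div class="search-result-gridview-item-wrapper" data-id="222"></div>
<div class="search-result-gridview-item-wrapper" data-id="111"></div>
"""


def product_urls(listing_html):
    """Return one product page URL per unique product code found in the listing."""
    soup = BeautifulSoup(listing_html, "html.parser")
    items = soup.find_all("div", {"class": "search-result-gridview-item-wrapper"})
    codes = sorted({item["data-id"] for item in items if item.has_attr("data-id")})
    return [f"{BASE_URL}/{code}" for code in codes]


print(product_urls(SAMPLE_LISTING))
```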

With this URL, we can scrape product-level data. For this demo, we collected the unique product code, product name, product page URL, product description, the name of the parent category in which the product is placed, the name of the active subcategory in which the product is placed on the website (called the active breadcrumb), product price, star rating, number of ratings or reviews, and other products that Walmart's site recommends as similar or related. You may customize this list according to your convenience.

The code then opens each individual product page using the constructed URLs and scrapes the product attributes listed above. Once you are satisfied with the list of attributes being pulled, the last step for the scraper is to combine all the product data for the subcategory into a single data frame. The complete code at the end of this post includes this step.

A data frame called 'df' holds all the data for products on the first 10 pages of the chosen subcategory. You may either write the data to CSV files or send it to a SQL database. If you need to export it to a MySQL database in a table named 'product_info', you can use the export step at the end of the complete code below.

You need to provide the SQL database credentials; once you do, Python connects your working environment to the database and pushes the dataset directly into it as a SQL table. In the export code, if a table with that name already exists, the new data replaces the existing table. You can change the script to avoid this: the if_exists option accepts 'fail', 'append', or 'replace'.
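The three if_exists options can be tried safely against an in-memory SQLite database before pointing the script at MySQL. This is a sketch with made-up rows; SQLite is swapped in here only so the example runs without database credentials.

```python
import sqlite3

import pandas as pd

conn = sqlite3.connect(":memory:")
# Made-up rows using two of the column names from this tutorial.
df = pd.DataFrame({"Product_code": ["111", "222"], "Product_price": [199.0, 349.0]})

# 'replace' drops any existing table and writes the data frame.
df.to_sql("product_info", conn, if_exists="replace", index=False)

# 'append' adds the rows to the existing table.
df.to_sql("product_info", conn, if_exists="append", index=False)
row_count = int(pd.read_sql("SELECT COUNT(*) AS n FROM product_info", conn)["n"].iloc[0])
print("Rows after append:", row_count)

# 'fail' raises ValueError because the table already exists.
try:
    df.to_sql("product_info", conn, if_exists="fail", index=False)
    failed = False
except ValueError:
    failed = True
print("'fail' raised an error:", failed)
```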

This is the basic code structure, which can be improved by adding exception handling for missing data or slow-loading pages. If you choose to loop the code over different subcategories, the complete code looks like this:

    import time

    import pandas as pd
    import sqlalchemy
    from bs4 import BeautifulSoup
    from selenium import webdriver
    from selenium.webdriver.chrome.service import Service
    from selenium.webdriver.common.by import By

    # Path to the Chrome driver executable; adjust for your machine.
    CHROMEDRIVER_PATH = 'C:/Drivers/chromedriver.exe'

    url_sets = ["https://www.walmart.com/browse/tv-video/all-tvs/3944_1060825_447913",
                "https://www.walmart.com/browse/computers/desktop-computers/3944_3951_132982",
                "https://www.walmart.com/browse/electronics/all-laptop-computers/3944_3951_1089430_132960",
                "https://www.walmart.com/browse/prepaid-phones/1105910_4527935_1072335",
                "https://www.walmart.com/browse/electronics/portable-audio/3944_96469",
                "https://www.walmart.com/browse/electronics/gps-navigation/3944_538883/",
                "https://www.walmart.com/browse/electronics/sound-bars/3944_77622_8375901_1230415_1107398",
                "https://www.walmart.com/browse/electronics/digital-slr-cameras/3944_133277_1096663",
                "https://www.walmart.com/browse/electronics/ipad-tablets/3944_1078524"]

    categories = ["TVs", "Desktops", "Laptops", "Prepaid_phones", "Audio", "GPS",
                  "soundbars", "cameras", "tablets"]

    # number of pages per category
    top_n = ["1", "2", "3", "4", "5", "6", "7", "8", "9", "10"]

    # base URL for product page
    url1 = "https://walmart.com/ip"

    # Data headers; rows are collected across all categories
    WLMTData = [["Product_code", "Product_name", "Product_description", "Product_URL",
                 "Breadcrumb_parent", "Breadcrumb_active", "Product_price",
                 "Rating_Value", "Rating_Count", "Recommended_Prods"]]


    def get_body_html(url, wait=0):
        """Open the URL in Chrome and return the inner HTML of the document body."""
        driver = webdriver.Chrome(service=Service(CHROMEDRIVER_PATH))  # Selenium 4 style
        try:
            driver.get(url)
            time.sleep(wait)  # give late-loading content time to render
            return driver.find_element(By.TAG_NAME, "body").get_attribute("innerHTML")
        finally:
            driver.quit()  # always close the browser


    def text_or(tag, default):
        """Return the tag's text, or a default when the tag was not found."""
        return tag.text if tag is not None else default


    # scraper
    for pg in range(len(url_sets)):
        url_category = url_sets[pg]
        print("Category:", categories[pg])
        final_results = []
        # extract product codes from each listing page within the sub-category
        for page in top_n:
            print("Page number within category:", page)
            url_cat = url_category + "?page=" + page
            soupBody_cat = BeautifulSoup(get_body_html(url_cat), "html.parser")
            for tmp in soupBody_cat.find_all('div', {'class': 'search-result-gridview-item-wrapper'}):
                final_results.append(tmp['data-id'])

        # save final set of results as a list
        codelist = list(set(final_results))
        print("Total number of prods:", len(codelist))

        for i, item_wlmt in enumerate(codelist):
            print(i)
            url2 = url1 + "/" + item_wlmt
            try:
                print("Requesting URL: " + url2)
                body = get_body_html(url2, wait=3)
                print("Getting data from DOM ...")
                soupBody = BeautifulSoup(body, "html.parser")  # parse the inner HTML
                # These class names reflect the page layout at the time of writing
                # and may need updating.
                h1ProductName = soupBody.find("h1", {"class": "prod-ProductTitle prod-productTitle-buyBox font-bold"})
                divProductDesc = soupBody.find("div", {"class": "about-desc about-product-description xs-margin-top"})
                liProductBreadcrumb_parent = soupBody.find("li", {"data-automation-id": "breadcrumb-item-0"})
                liProductBreadcrumb_active = soupBody.find("li", {"class": "breadcrumb active"})
                spanProductPrice = soupBody.find("span", {"class": "price-group"})
                spanProductRating = soupBody.find("span", {"itemprop": "ratingValue"})
                spanProductRating_count = soupBody.find("span", {"class": "stars-reviews-count-node"})

                # exceptions: substitute defaults for missing elements
                if spanProductRating is None or spanProductRating_count is None:
                    rating_value = 0.0
                    rating_count = "0 ratings"
                else:
                    rating_value = spanProductRating.text
                    rating_count = spanProductRating_count.text

                # Recommended products
                reco_prods = []
                for tmp in soupBody.find_all('a', {'class': 'tile-link-overlay u-focusTile'}):
                    reco_prods.append(tmp['data-product-id'])
                if len(reco_prods) == 0:
                    reco_prods = ["Not available"]

                # A missing product name raises here and the product is skipped.
                WLMTData.append([item_wlmt, h1ProductName.text,
                                 text_or(divProductDesc, "Not Available"), url2,
                                 text_or(liProductBreadcrumb_parent, "Not Available"),
                                 text_or(liProductBreadcrumb_active, "Not Available"),
                                 text_or(spanProductPrice, "NA"),
                                 rating_value, rating_count,
                                 ", ".join(reco_prods)])  # one SQL column cannot hold a list
            except Exception as e:
                print(str(e))

    # save final result as dataframe (the first row of WLMTData holds the column names)
    df = pd.DataFrame(WLMTData[1:], columns=WLMTData[0])

    # Export dataframe to SQL
    database_username = 'ENTER USERNAME'
    database_password = 'ENTER USERNAME PASSWORD'
    database_ip = 'ENTER DATABASE IP'
    database_name = 'ENTER DATABASE NAME'
    database_connection = sqlalchemy.create_engine(
        'mysql+mysqlconnector://{0}:{1}@{2}/{3}'.format(
            database_username, database_password, database_ip, database_name))
    df.to_sql(con=database_connection, name='product_info', if_exists='replace', index=False)

You can always extend this code to customize the scraper. For example, the scraper above handles missing data in attributes such as price, description, or reviews. Data may be missing for many reasons: the product is out of stock or sold out, the data was entered incorrectly, or the product is too new to have any ratings yet.
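The handling of missing attributes described above can be sketched as a small helper. This is a minimal illustration, assuming BeautifulSoup is installed; the `text_or_default` helper, the sample HTML, and its class names are hypothetical and not taken from Walmart's live pages.

```python
from bs4 import BeautifulSoup

# Hypothetical product snippet: it has a title and a price but no rating.
SAMPLE_HTML = """
<div class="product">
  <h1 class="title">Example 43-inch TV</h1>
  <span class="price">$248.00</span>
</div>
"""


def text_or_default(soup, tag, css_class, default="Not Available"):
    """Return the stripped text of the first match, or a default if absent."""
    node = soup.find(tag, class_=css_class)
    return node.get_text(strip=True) if node is not None else default


soup = BeautifulSoup(SAMPLE_HTML, "html.parser")
record = {
    "title": text_or_default(soup, "h1", "title"),
    "price": text_or_default(soup, "span", "price", default="NA"),
    "rating": text_or_default(soup, "span", "rating", default="0.0"),
}
print(record)
```

Routing every lookup through one helper keeps the fallback values in a single place, so a product without ratings still produces a complete row.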

Because page structures change, you will need to update the scraper whenever the website's layout is revised so that it keeps working. The scraper above gives you a base template for a Python scraper for Walmart.

Want to extract data for your business? Contact iWeb Scraping, your data scraping professional!

In today's world, shoppers are no longer confused about where to seek and purchase specific goods. E-Commerce platforms like Amazon and Walmart are popular among millennial shoppers because they make it simple to compare and locate the best product values in a short amount of time.

Pricing is at the top of the list of factors that affect a customer's purchasing choice. While shoppers are debating which products to buy, e-commerce companies are busy deciding what to charge for each product.

In the internet economy, Amazon has controlled the game of pricing policies. Due to its smart technique of gathering pricing information, Amazon has become the unchallenged winner.

Amazon's distinctive pricing technique can be explained by two factors: how frequently prices change and how they are revised. In 2013, Amazon changed the prices of 40 million products in a single day, and this number more than doubled the following year. Amazon's competitors couldn't even come close to matching this figure.

Why Price Comparison is Necessary?

Price comparison websites and e-commerce sites of various sizes and types use price scraping. It may be a simple Chrome plugin, a Python program that scrapes information from competitor e-commerce sites like Walmart or BestBuy, or a full-fledged data scraping service.

Retailers must continuously perform price analysis for various reasons. For one, competitors will sell the same products at lower prices and offer various discounts. Amazon was able to overcome this hurdle by adopting site scraping and constant price monitoring. So, let's figure out what Amazon's secret formula is.

Amazon uses sophisticated price scraping to keep track of competitors and offer products at competitive prices. According to some reports, Amazon makes more than 2.5 million price changes a day. Amazon used big data analytics to become the leader in this kind of focused market research, and it was able to leverage web scraping for a variety of business purposes.

The sales teams were able to forecast consumer patterns and, as a result, impact client preferences. Amazon increased its annual revenues by 25% by using price optimization strategies and a huge amount of information collected from users.

If you're a marketer, e-commerce price scraping can help you gain an advantage in online retailing. Businesses must collect massive volumes of data from a variety of competing e-commerce platforms. The extracted data is saved in a database or as a local file on your PC.

Knowing your competitor's merchandising strategy can help you develop and enhance your own. You can use web scraping and multiple analytical techniques to figure out which of your unique products set you apart from your competitors. A well-rounded internet marketing strategy involves keeping tabs on your competitor's entire catalog and tracking which brands are added, removed, or out of stock.
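Tracking what is added, removed, or out of stock comes down to comparing two snapshots of a catalog. The sketch below assumes each snapshot is a dictionary mapping a product identifier to an in-stock flag; the `diff_catalog` function and the SKU values are made up for illustration.

```python
def diff_catalog(previous, current):
    """Compare two {sku: in_stock} snapshots of a competitor's catalog."""
    prev_skus, curr_skus = set(previous), set(current)
    return {
        "added": sorted(curr_skus - prev_skus),
        "removed": sorted(prev_skus - curr_skus),
        # Listed in both snapshots, in stock before, out of stock now.
        "went_out_of_stock": sorted(
            sku for sku in prev_skus & curr_skus
            if previous[sku] and not current[sku]
        ),
    }


# Made-up snapshots from two consecutive scraping runs.
yesterday = {"SKU-1": True, "SKU-2": True, "SKU-3": True}
today = {"SKU-2": False, "SKU-3": True, "SKU-4": True}

changes = diff_catalog(yesterday, today)
print(changes)
```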

How to Make Amazon Data Work?

Making business decisions is a difficult task. Business teams are having a hard time figuring out how to gain a competitive advantage. Dealers must recognize the advantages of harvesting Amazon pricing and product data. Comparing and tracking it will also assist you in developing a more competitive marketing plan. Competitor price monitoring can provide a company an unfair advantage when it comes to providing a product at an affordable price.

Assume you used a price scraper tool to scrape data. The information is then loaded into a database and analyzed. Using tools like Power BI, Tableau, or the open-source Metabase, you can get excellent insight into what it takes to find the optimal price.
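As a rough illustration of the "load into a database, then analyze" step, the sketch below uses Python's built-in `sqlite3` module with an in-memory database. The table layout, product names, and prices are invented for the example; a BI tool would run similar queries against a real database.

```python
import sqlite3

# Made-up scraped rows: (product, seller, price in USD).
rows = [
    ("Laptop A", "Amazon", 499.99),
    ("Laptop A", "Walmart", 479.00),
    ("Laptop A", "BestBuy", 489.50),
    ("Tablet B", "Amazon", 199.00),
    ("Tablet B", "Walmart", 209.99),
]

conn = sqlite3.connect(":memory:")  # in-memory database for the demo
conn.execute("CREATE TABLE prices (product TEXT, seller TEXT, price REAL)")
conn.executemany("INSERT INTO prices VALUES (?, ?, ?)", rows)

# Lowest and average competitor price per product.
summary = conn.execute(
    "SELECT product, MIN(price), AVG(price) "
    "FROM prices GROUP BY product ORDER BY product"
).fetchall()
conn.close()

for product, lowest, average in summary:
    print(f"{product}: lowest {lowest:.2f}, average {average:.2f}")
```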

When expanding the pricing intelligence solution over various product categories, the coverage and efficiency of your price scraping tool are crucial. These analytics tools will also assist you in enhancing your product's search engine rating. The effectiveness of your ongoing marketing plan will be determined by an evaluation of Amazon customer reviews.

How to Use Amazon Scraped Product Data and Pricing Information?

Amazon holds a massive amount of data, so let us help you develop an effective competitive price analysis solution. To begin, you must make a few fundamental assumptions. For example, you'll need to decide on the data source (which will be Amazon and a couple of its competitors) as well as the sub-categories. The refresh frequency of each sub-category must be defined independently.
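One way to define a refresh frequency per sub-category is a small configuration table plus a check for which entries are due. The sub-category names and intervals below are hypothetical placeholders, not recommendations.

```python
from datetime import datetime, timedelta

# Hypothetical sub-categories and how often each should be re-scraped.
REFRESH_INTERVALS = {
    "laptops": timedelta(hours=6),
    "televisions": timedelta(hours=12),
    "headphones": timedelta(days=1),
}


def due_for_refresh(last_scraped, now):
    """Return the sub-categories whose refresh interval has elapsed."""
    return sorted(
        name
        for name, interval in REFRESH_INTERVALS.items()
        if now - last_scraped[name] >= interval
    )


now = datetime(2024, 1, 2, 12, 0)
last_scraped = {
    "laptops": datetime(2024, 1, 2, 5, 0),      # 7 hours ago
    "televisions": datetime(2024, 1, 2, 3, 0),  # 9 hours ago
    "headphones": datetime(2024, 1, 1, 11, 0),  # 25 hours ago
}
due = due_for_refresh(last_scraped, now)
print(due)
```

Fast-moving categories can then be scraped often without re-fetching slow-moving ones on every run.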

You should also be aware of any anti-scraping techniques that your source websites use. As a result, you have complete control over the amount of price information you scrape. Furthermore, understanding e-commerce data can help you extract accurate facts. Precise data will make the process go more smoothly.

The first stage is to focus all of this effort in one direction. You get to choose the way you want to go, which increases the efficiency of the procedure. After you've extracted the information, you might need some feedback.

You can scrape Amazon data with open-source software like BeautifulSoup or utilize a service like Retailgator to receive updated data.

The structure of the source website determines the data extraction process altogether. You begin by sending a request to the site, which responds with an HTML file. This file contains the information that you must parse. Many tools can be used to create web scrapers.
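The request, response, and parse cycle can be sketched as two small functions. This is an assumption-laden example: `fetch_html` uses the standard library's `urllib`, `parse_products` uses BeautifulSoup, and the `item`, `name`, and `price` class names are hypothetical. The demo parses an inline sample instead of calling a live site.

```python
from urllib.request import Request, urlopen

from bs4 import BeautifulSoup


def fetch_html(url, user_agent="Mozilla/5.0"):
    """Send a GET request and return the response body as text."""
    request = Request(url, headers={"User-Agent": user_agent})
    with urlopen(request, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


def parse_products(html):
    """Extract (name, price) pairs using hypothetical class names."""
    soup = BeautifulSoup(html, "html.parser")
    results = []
    for item in soup.find_all("div", class_="item"):
        name = item.find("span", class_="name")
        price = item.find("span", class_="price")
        if name is not None and price is not None:
            results.append((name.get_text(strip=True), price.get_text(strip=True)))
    return results


# Offline demo: a stand-in for the HTML a real response would contain.
sample = """
<div class="item"><span class="name">Mouse</span><span class="price">$9.99</span></div>
<div class="item"><span class="name">Keyboard</span><span class="price">$24.50</span></div>
"""
products = parse_products(sample)
print(products)
```

Against a live page, `parse_products(fetch_html(url))` would chain the two steps, with the class names adjusted to that site's markup.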

At Retailgator, we deliver web scraping services in the required format.

Request for a Quote!!

Scraping Grocery Apps for Nutritional and Ingredient Data

Introduction

As health trends gain momentum, consumers are paying close attention to nutrition and want accurate ingredient and nutritional information. Grocery applications provide detailed information about food products, but collecting and comparing this data manually takes an inordinate amount of time. Scraping grocery applications for nutritional and ingredient data gives every stakeholder, whether customers, businesses, or researchers, an automated and fast way to obtain that information.

This blog discusses the importance of scraping nutritional data from grocery applications, how it works technically, the major challenges, and best practices for extracting reliable information. Whether for tracking diets, regulatory purposes, or customized shopping, nutritional data scraping is extremely valuable.

Why Scrape Nutritional and Ingredient Data from Grocery Apps?

1. Health and Dietary Awareness

Consumers rely on nutritional and ingredient data scraping to monitor calorie intake, macronutrients, and allergen warnings.

2. Product Comparison and Selection

Web scraping nutritional and ingredient data helps to compare similar products and make informed decisions according to dietary needs.

3. Regulatory & Compliance Requirements

Companies require nutritional and ingredient data extraction to be compliant with food labeling regulations and ensure a fair marketing approach.

4. E-commerce & Grocery Retail Optimization

Web scraping nutritional and ingredient data is used by retailers for better filtering, recommendations, and comparative analysis of similar products.

5. Scientific Research and Analytics

Nutritionists and health professionals use scraped nutritional data for research in diet planning, food safety, and trends in consumer behavior.

How Web Scraping Works for Nutritional and Ingredient Data

1. Identifying Target Grocery Apps

Popular grocery apps with extensive product details include:

Instacart

Amazon Fresh

Walmart Grocery

Kroger

Target Grocery

Whole Foods Market

2. Extracting Product and Nutritional Information

Scraping grocery apps involves making HTTP requests to retrieve HTML data containing nutritional facts and ingredient lists.

3. Parsing and Structuring Data

Using Python tools like BeautifulSoup, Scrapy, or Selenium, structured data is extracted and categorized.

4. Storing and Analyzing Data

The cleaned data is stored in JSON, CSV, or databases for easy access and analysis.
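A minimal sketch of the storage step, using only the standard library. The two records and their field names are invented for the example, and the CSV is written to an in-memory buffer so the snippet leaves no files behind.

```python
import csv
import io
import json

# Made-up cleaned records.
records = [
    {"product": "Oat Milk", "calories": 120, "sugar_g": 7.0},
    {"product": "Granola Bar", "calories": 190, "sugar_g": 11.5},
]

# JSON keeps nested or optional fields without extra work.
json_text = json.dumps(records, indent=2)

# CSV suits flat, tabular records that will be opened in a spreadsheet.
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=["product", "calories", "sugar_g"])
writer.writeheader()
writer.writerows(records)
csv_text = buffer.getvalue()

print(json_text)
print(csv_text)
```

To write to disk instead, the same writer can be given a file opened with `open(path, "w", newline="")`.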

5. Displaying Information for End Users

Extracted nutritional and ingredient data can be displayed in dashboards, diet tracking apps, or regulatory compliance tools.

Essential Data Fields for Nutritional Data Scraping

1. Product Details

Product Name

Brand

Category (e.g., dairy, beverages, snacks)

Packaging Information

2. Nutritional Information

Calories

Macronutrients (Carbs, Proteins, Fats)

Sugar and Sodium Content

Fiber and Vitamins

3. Ingredient Data

Full Ingredient List

Organic/Non-Organic Label

Preservatives and Additives

Allergen Warnings

4. Additional Attributes

Expiry Date

Certifications (Non-GMO, Gluten-Free, Vegan)

Serving Size and Portions

Cooking Instructions
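The field groups above can be captured in a single record type. The sketch below is one possible layout using a dataclass; the `NutritionRecord` name, the field names, and the chosen units are assumptions for illustration, and only a subset of the listed fields is shown.

```python
from dataclasses import asdict, dataclass, field
from typing import List, Optional


@dataclass
class NutritionRecord:
    # Product details
    product_name: str
    brand: str
    category: str
    # Nutritional information (per serving); None means "not listed"
    calories: Optional[float] = None
    carbs_g: Optional[float] = None
    protein_g: Optional[float] = None
    fat_g: Optional[float] = None
    sugar_g: Optional[float] = None
    sodium_mg: Optional[float] = None
    # Ingredient data
    ingredients: List[str] = field(default_factory=list)
    allergens: List[str] = field(default_factory=list)
    # Additional attributes
    serving_size: Optional[str] = None
    certifications: List[str] = field(default_factory=list)


record = NutritionRecord(
    product_name="Almond Granola",
    brand="Example Foods",
    category="snacks",
    calories=210,
    sugar_g=9.0,
    ingredients=["oats", "almonds", "honey"],
    allergens=["tree nuts"],
    certifications=["Non-GMO"],
)
print(asdict(record))
```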

Challenges in Scraping Nutritional and Ingredient Data

1. Anti-Scraping Measures

Many grocery apps implement CAPTCHAs, IP bans, and bot detection mechanisms to prevent automated data extraction.

2. Dynamic Webpage Content

JavaScript-based content loading complicates extraction without using tools like Selenium or Puppeteer.

3. Data Inconsistency and Formatting Issues

Different brands and retailers display nutritional information in varied formats, requiring extensive data normalization.
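As one small example of normalization, amounts written as "1.5 g", "140mg", or "2 KG" can be converted to a single unit. The sketch handles only these three units and returns `None` for anything it does not recognize; the `to_grams` helper is hypothetical.

```python
import re

# Conversion factors to grams for the units handled in this sketch.
TO_GRAMS = {"g": 1.0, "mg": 0.001, "kg": 1000.0}

# A number, optional whitespace, then one of the known units.
AMOUNT = re.compile(r"^\s*(\d+(?:\.\d+)?)\s*(mg|kg|g)\s*$", re.IGNORECASE)


def to_grams(text):
    """Convert strings such as '1.5 g' or '140mg' to grams, or None."""
    match = AMOUNT.match(text)
    if match is None:
        return None
    number = float(match.group(1))
    unit = match.group(2).lower()
    # Round to avoid floating-point noise such as 0.14000000000000001.
    return round(number * TO_GRAMS[unit], 6)


for raw in ["1.5 g", "140mg", "2 KG", "about a cup"]:
    print(raw, "->", to_grams(raw))
```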

4. Legal and Ethical Considerations

Ensuring compliance with data privacy regulations and robots.txt policies is essential to avoid legal risks.

Best Practices for Scraping Grocery Apps for Nutritional Data

1. Use Rotating Proxies and Headers

Changing IP addresses and user-agent strings prevents detection and blocking.

2. Implement Headless Browsing for Dynamic Content

Selenium or Puppeteer ensures seamless interaction with JavaScript-rendered nutritional data.

3. Schedule Automated Scraping Jobs

Frequent scraping ensures updated and accurate nutritional information for comparisons.

4. Clean and Standardize Data

Using data cleaning and NLP techniques helps resolve inconsistencies in ingredient naming and formatting.

5. Comply with Ethical Web Scraping Standards

Respecting robots.txt directives and seeking permission where necessary ensures responsible data extraction.
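Checking robots.txt before fetching a URL can be done with the standard library's `urllib.robotparser`. The robots.txt content, the `example.com` URLs, and the `my-nutrition-bot` user agent below are hypothetical; a real check would load the file from the target site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, parsed offline for the demo.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Disallow: /account/
Crawl-delay: 5
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_allowed(url, user_agent="my-nutrition-bot"):
    """Return True if the parsed rules permit this user agent to fetch the URL."""
    return parser.can_fetch(user_agent, url)


print(is_allowed("https://example.com/grocery/oat-milk"))
print(is_allowed("https://example.com/checkout/cart"))
print(parser.crawl_delay("my-nutrition-bot"))
```

For a live site, `parser.set_url(...)` followed by `parser.read()` would fetch the real file in place of the inline string.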

Building a Nutritional Data Extractor Using Web Scraping APIs

1. Choosing the Right Tech Stack

Programming Language: Python or JavaScript

Scraping Libraries: Scrapy, BeautifulSoup, Selenium

Storage Solutions: PostgreSQL, MongoDB, Google Sheets

APIs for Automation: CrawlXpert, Apify, Scrapy Cloud

2. Developing the Web Scraper

A Python-based scraper using Scrapy or Selenium can fetch and structure nutritional and ingredient data effectively.

3. Creating a Dashboard for Data Visualization

A user-friendly web interface built with React.js or Flask can display comparative nutritional data.

4. Implementing API-Based Data Retrieval

Using APIs ensures real-time access to structured and up-to-date ingredient and nutritional data.

Future of Nutritional Data Scraping with AI and Automation

1. AI-Enhanced Data Normalization

Machine learning models can standardize nutritional data for accurate comparisons and predictions.

2. Blockchain for Data Transparency

Decentralized food data storage could improve trust and traceability in ingredient sourcing.

3. Integration with Wearable Health Devices

Future innovations may allow direct nutritional tracking from grocery apps to smart health monitors.

4. Customized Nutrition Recommendations

With the help of AI, grocery applications will be able to offer personalized meal planning based on nutritional and ingredient data gathered from the web.

Conclusion

Automated web scraping of grocery applications for nutritional and ingredient data provides consumers, businesses, and researchers with accurate dietary information. Not just a tool for price-checking, web scraping touches all aspects of modern-day nutritional analytics.

If you are looking for an advanced nutritional data scraping solution, CrawlXpert is your trusted partner. We provide web scraping services that scrape, process, and analyze grocery nutritional data. Work with CrawlXpert today and let web scraping drive your nutritional and ingredient data for better decisions and business insights!

Know More : https://www.crawlxpert.com/blog/scraping-grocery-apps-for-nutritional-and-ingredient-data

A Step-by-Step Guide to Web Scraping Walmart Grocery Delivery Data

Introduction

In today's data-driven marketplace, businesses need real-time access to grocery delivery data to drive pricing strategy and to track market changes and competitor activity. Walmart Grocery Delivery, one of the giants of online grocery retail, exposes this data, including product details, prices, availability, and delivery time slots. Scraping Walmart Grocery Delivery data can give a business detailed intelligence on consumer behavior, pricing fluctuations, and changes in inventory.

This guide covers everything you need to know about web scraping Walmart Grocery Delivery data, from tools and techniques to the challenges and best practices involved. We'll also explore why CrawlXpert offers a reliable way to collect large-scale data on Walmart.

1. What is Walmart Grocery Delivery Data Scraping?

Walmart Grocery Delivery data scraping is the collection of product and delivery information from Walmart's online grocery delivery service. It involves accessing the site's HTML content programmatically and processing it to extract key data points.

Key Data Points You Can Extract:

Product Listings: Names, descriptions, categories, and specifications.

Pricing Data: Current price, original price, and promotional discounts.

Delivery Information: Availability, delivery slots, and estimated delivery times.

Stock Levels: In-stock, out-of-stock, or limited availability status.

Customer Reviews: Ratings, review counts, and customer feedback.

2. Why Scrape Walmart Grocery Delivery Data?

Scraping Walmart Grocery Delivery data provides valuable insights and enables data-driven decision-making for businesses. Here are the primary use cases:

a) Competitor Price Monitoring

Track Pricing Trends: Extracting Walmart’s pricing data enables you to track price changes over time.

Competitive Benchmarking: Compare Walmart’s pricing with other grocery delivery services.

Dynamic Pricing: Adjust your pricing strategies based on real-time competitor data.

b) Market Research and Consumer Insights

Product Popularity: Identify which products are frequently purchased or promoted.

Seasonal Trends: Track pricing and product availability during holiday seasons.

Consumer Sentiment: Analyze reviews to understand customer preferences.

c) Inventory and Supply Chain Optimization

Stock Monitoring: Identify frequently out-of-stock items to detect supply chain issues.

Demand Forecasting: Use historical data to predict future demand and optimize inventory.

d) Enhancing Marketing and Promotions

Targeted Advertising: Leverage scraped data to create personalized marketing campaigns.

SEO Optimization: Enrich your website with detailed product descriptions and pricing data.

3. Tools and Technologies for Scraping Walmart Grocery Delivery Data

To efficiently scrape Walmart Grocery Delivery data, you need the right combination of tools and technologies.

a) Python Libraries for Web Scraping

BeautifulSoup: Parses HTML and XML documents for easy data extraction.

Requests: Sends HTTP requests to retrieve web page content.

Selenium: Automates browser interactions, useful for dynamic pages.

Scrapy: A Python framework designed for large-scale web scraping.

Pandas: For data cleaning and storing scraped data into structured formats.

b) Proxy Services to Avoid Detection

Bright Data: Reliable IP rotation and CAPTCHA-solving capabilities.

ScraperAPI: Automatically handles proxies, IP rotation, and CAPTCHA solving.

Smartproxy: Provides residential proxies to reduce the chances of being blocked.

c) Browser Automation Tools

Playwright: Automates browser interactions for dynamic content rendering.

Puppeteer: A Node.js library that controls a headless Chrome browser.

d) Data Storage Options

CSV/JSON: Suitable for smaller-scale data storage.

MongoDB/MySQL: For large-scale structured data storage.

Cloud Storage: AWS S3, Google Cloud, or Azure for scalable storage.

4. Building a Walmart Grocery Delivery Scraper

a) Install the Required Libraries

First, install the necessary Python libraries:

pip install requests beautifulsoup4 selenium pandas

b) Inspect Walmart’s Website Structure

Open Walmart Grocery Delivery in your browser.

Right-click → Inspect → Select Elements.

Identify product containers, pricing, and delivery details.

c) Fetch the Walmart Delivery Page

import requests
from bs4 import BeautifulSoup

url = 'https://www.walmart.com/grocery'
headers = {'User-Agent': 'Mozilla/5.0'}
response = requests.get(url, headers=headers)
soup = BeautifulSoup(response.content, 'html.parser')

d) Extract Product and Delivery Data

products = soup.find_all('div', class_='search-result-gridview-item')
data = []
for product in products:
    try:
        title = product.find('a', class_='product-title-link').text
        price = product.find('span', class_='price-main').text
        availability = product.find('div', class_='fulfillment').text
        data.append({'Product': title, 'Price': price, 'Delivery': availability})
    except AttributeError:
        # Skip products that are missing any of the three fields.
        continue

5. Bypassing Walmart’s Anti-Scraping Mechanisms

Walmart uses anti-bot measures like CAPTCHAs and IP blocking. Here are strategies to bypass them:

a) Use Proxies for IP Rotation

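Rotating IP addresses reduces the risk of being blocked.

# The target URL is HTTPS, so the proxy must also be registered under the 'https' key.
proxies = {
    'http': 'http://user:pass@proxy-server:port',
    'https': 'http://user:pass@proxy-server:port',
}
response = requests.get(url, headers=headers, proxies=proxies)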

b) Use User-Agent Rotation

import random

user_agents = [
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64)',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7)'
]
headers = {'User-Agent': random.choice(user_agents)}

c) Use Selenium for Dynamic Content

from selenium import webdriver

options = webdriver.ChromeOptions()
options.add_argument('--headless')
driver = webdriver.Chrome(options=options)
driver.get(url)
# Stored as `html` so the `data` list of scraped products is not overwritten.
html = driver.page_source
driver.quit()
soup = BeautifulSoup(html, 'html.parser')

6. Data Cleaning and Storage

Once you’ve scraped the data, clean and store it:

import pandas as pd

df = pd.DataFrame(data)
df.to_csv('walmart_grocery_delivery.csv', index=False)
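The snippet above stores the rows as scraped. A cleaning pass before storage might look like the sketch below, which trims whitespace and converts price strings to numbers. The `parse_price` helper and the sample rows are hypothetical, and only the standard library is used.

```python
import re

# First number in the string, allowing thousands separators and decimals.
PRICE = re.compile(r"\d+(?:,\d{3})*(?:\.\d+)?")


def parse_price(text):
    """Return the first number in a price string as a float, or None."""
    match = PRICE.search(text or "")
    if match is None:
        return None
    return float(match.group(0).replace(",", ""))


# Made-up rows in the same shape as the scraped `data` list.
raw = [
    {"Product": " Whole Milk, 1 gal ", "Price": "$3.48"},
    {"Product": "Organic Eggs", "Price": "Now $4.97"},
    {"Product": "Bananas", "Price": "Out of stock"},
]
cleaned = [
    {"Product": row["Product"].strip(), "Price": parse_price(row["Price"])}
    for row in raw
]
print(cleaned)
```

Rows whose price could not be parsed keep a `None` value, which pandas would treat as missing when the list is loaded into a DataFrame.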

7. Why Choose CrawlXpert for Walmart Grocery Delivery Data Scraping?

While building your own Walmart scraper is possible, it comes with challenges, such as handling CAPTCHAs, IP blocking, and dynamic content rendering. This is where CrawlXpert excels.

Key Benefits of CrawlXpert:

Accurate Data Extraction: CrawlXpert provides reliable and comprehensive data extraction.

Scalable Solutions: Capable of handling large-scale data scraping projects.

Anti-Scraping Evasion: Uses advanced techniques to bypass CAPTCHAs and anti-bot systems.

Real-Time Data: Access fresh, real-time data with high accuracy.

Flexible Delivery: Data delivery in multiple formats (CSV, JSON, Excel).

Conclusion

Scraping data from Walmart Grocery Delivery and analyzing prices, trends, and consumer preferences can show any business the value behind Walmart Grocery Delivery. But the tools and techniques matter little if you run into trouble with Walmart's strong anti-scraping measures. Using a well-known service such as CrawlXpert helps ensure consistent, correct, and compliant data extraction.

Know More : https://www.crawlxpert.com/blog/web-scraping-walmart-grocery-delivery-data

How To Scrape Walmart for Product Information Using Python

In the ever-expanding world of e-commerce, Walmart is one of the largest retailers, offering a wide variety of products across numerous categories. If you're a data enthusiast, researcher, or business owner, you might find it useful to scrape Walmart for product information such as prices, product descriptions, and reviews. In this blog post, I'll guide you through the process of scraping Walmart's website using Python, covering the tools and libraries you'll need as well as the code to get started.

Why Scrape Walmart?

There are several reasons you might want to scrape Walmart's website:

Market research: Analyze competitor prices and product offerings.

Data analysis: Study trends in consumer preferences and purchasing habits.

Product monitoring: Track changes in product availability and prices over time.

Business insights: Understand what products are most popular and how they are being priced.

Tools and Libraries

To get started with scraping Walmart's website, you'll need the following tools and libraries:

Python: The primary programming language we'll use for this task.

Requests: A Python library for making HTTP requests.

BeautifulSoup: A Python library for parsing HTML and XML documents.

Pandas: A data manipulation library to organize and analyze the scraped data.

First, install the necessary libraries:

pip install requests beautifulsoup4 pandas

How to Scrape Walmart

Let's dive into the process of scraping Walmart's website. We'll focus on scraping product information such as title, price, and description.

1. Import Libraries

First, import the necessary libraries:

import requests
from bs4 import BeautifulSoup
import pandas as pd

2. Define the URL

You need to define the URL of the Walmart page you want to scrape. For this example, we'll use a sample search results URL:

url = "https://www.walmart.com/search/?query=laptop"

You can replace the URL with the one you want to scrape.

3. Send a Request and Parse the HTML

Next, send an HTTP GET request to the URL and parse the HTML content using BeautifulSoup:

response = requests.get(url)
soup = BeautifulSoup(response.text, "html.parser")

4. Extract Product Information

Now, let's extract the product information from the HTML content. We will focus on extracting product titles, prices, and descriptions.

Here's an example of how to do it:

# Create lists to store the scraped data
product_titles = []
product_prices = []
product_descriptions = []

# Find the product containers on the page
products = soup.find_all("div", class_="search-result-gridview-item")

# Loop through each product container and extract the data
for product in products:
    # Extract the title
    title = product.find("a", class_="product-title-link").text.strip()
    product_titles.append(title)

    # Extract the price
    price = product.find("span", class_="price-main-block").find("span", class_="visuallyhidden").text.strip()
    product_prices.append(price)

    # Extract the description, falling back to "N/A" when it is missing
    description_tag = product.find("span", class_="price-characteristic")
    description = description_tag.text.strip() if description_tag else "N/A"
    product_descriptions.append(description)

# Create a DataFrame to store the data
data = {
    "Product Title": product_titles,
    "Price": product_prices,
    "Description": product_descriptions
}
df = pd.DataFrame(data)

# Display the DataFrame
print(df)

In the code above, we loop through each product container and extract the title, price, and description of each product. The data is stored in lists and then converted into a Pandas DataFrame for easy data manipulation and analysis.

5. Save the Data

Finally, you can save the extracted data to a CSV file or any other desired format:

df.to_csv("walmart_products.csv", index=False)

Conclusion

Scraping Walmart for product information can provide valuable insights for market research, data analysis, and more. By using Python libraries such as Requests, BeautifulSoup, and Pandas, you can extract data efficiently and save it for further analysis. Remember to use this information responsibly and abide by Walmart's terms of service and scraping policies.

How to Extract Product Data from Walmart with Python and BeautifulSoup

In the vast world of e-commerce, accessing and analyzing product data is a crucial aspect for businesses aiming to stay competitive. Whether you're a small-scale seller or a large corporation, having access to comprehensive product information can significantly enhance your decision-making process and marketing strategies.

Walmart, being one of the largest retailers globally, offers a treasure trove of product data. Extracting this data programmatically can be a game-changer for businesses looking to gain insights into market trends, pricing strategies, and consumer behavior. In this guide, we'll explore how to harness the power of Python and BeautifulSoup to scrape product data from Walmart's website efficiently.

Why BeautifulSoup and Python?

BeautifulSoup is a Python library designed for quick and easy data extraction from HTML and XML files. Combined with Python's simplicity and versatility, it becomes a potent tool for web scraping tasks. By utilizing these tools, you can automate the process of retrieving product data from Walmart's website, saving time and effort compared to manual data collection methods.

Setting Up Your Environment

Before diving into the code, you'll need to set up your Python environment. Ensure you have Python installed on your system, along with the BeautifulSoup and Requests libraries, both of which the script below uses. You can install them using pip, Python's package installer, by executing the following command:

pip install requests beautifulsoup4

Scraping Product Data from Walmart

Now, let's walk through a simple script to scrape product data from Walmart's website. We'll focus on extracting product names, prices, and ratings. Below is a basic Python script to achieve this:

import requests
from bs4 import BeautifulSoup


def scrape_walmart_product_data(url):
    # Send a GET request to the URL
    response = requests.get(url)
    # Parse the HTML content
    soup = BeautifulSoup(response.text, 'html.parser')
    # Find all product containers
    products = soup.find_all('div', class_='search-result-gridview-items')
    # Iterate over each product
    for product in products:
        # Extract product name
        name = product.find('a', class_='product-title-link').text.strip()
        # Extract product price
        price = product.find('span', class_='price').text.strip()
        # Extract product rating
        rating = product.find('span', class_='stars-container')['aria-label'].split()[0]
        # Print the extracted data
        print(f"Name: {name}, Price: {price}, Rating: {rating}")


# URL of the Walmart search page
url = 'https://www.walmart.com/search/?query=laptop'
scrape_walmart_product_data(url)

Conclusion

In this tutorial, we've demonstrated how to extract product data from Walmart's website using Python and BeautifulSoup. By automating the process of data collection, you can streamline your market research efforts and gain valuable insights into product trends, pricing strategies, and consumer preferences.

However, it's essential to be mindful of Walmart's terms of service and use web scraping responsibly and ethically. Always check for any legal restrictions or usage policies before scraping data from a website.

With the power of Python and BeautifulSoup at your fingertips, you're equipped to unlock the wealth of product data available on Walmart's platform, empowering your business to make informed decisions and stay ahead in the competitive e-commerce landscape. Happy scraping!

How To Make The Right Choice Of Web Scrapers To Collect Grocery Store Prices Data

In today's fast-paced world, gathering data on grocery store prices is essential for consumers and businesses. Thankfully, with the advent of web scraping techniques, obtaining real-time and accurate pricing information from multiple grocery stores has become more accessible than ever.

The rise of online grocery delivery platforms has transformed how people shop for groceries, fueled by digital advancements and improved logistics. Major players like Walmart, Publix, Target, and Amazon Fresh are experiencing unprecedented growth, with annual revenue projected to grow by 20% from 2021 to 2031.

Businesses scrape grocery delivery data from market leaders to gain a competitive edge. By leveraging grocery data scraping services, established and aspiring grocery delivery companies can better understand the market landscape and make informed decisions. This article explores how to choose web scrapers to collect grocery store prices and unlock valuable insights for smarter shopping decisions and competitive analysis.

List of Data Fields

Store/Grocer Name

Address

City

State

Zip

Geo coordinates

Product Name

Product Image

Product SKU

Product Category

Product Description

Product Specifications

Product Price

Discounted Price

Best offers

Services Available
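One way to keep these fields consistent across stores is to give each scraped item a fixed record shape. The sketch below is only an illustration: the class name GroceryProductRecord, the field types, and the choice of which fields are optional are assumptions, and the sample values are placeholders rather than real data.

```python
from dataclasses import dataclass, field
from decimal import Decimal
from typing import List, Optional, Tuple


@dataclass
class GroceryProductRecord:
    # Store details
    store_name: str
    address: str
    city: str
    state: str
    zip_code: str
    # Product details that every record is assumed to carry
    product_name: str
    product_price: Decimal
    # Fields that a given page may not expose
    geo_coordinates: Optional[Tuple[float, float]] = None
    product_image: Optional[str] = None
    product_sku: Optional[str] = None
    product_category: Optional[str] = None
    product_description: Optional[str] = None
    product_specifications: Optional[str] = None
    discounted_price: Optional[Decimal] = None
    best_offers: List[str] = field(default_factory=list)
    services_available: List[str] = field(default_factory=list)

    def effective_price(self) -> Decimal:
        """Return the discounted price when one exists, else the list price."""
        if self.discounted_price is not None:
            return self.discounted_price
        return self.product_price


# Placeholder values for illustration only
record = GroceryProductRecord(
    store_name="Example Grocer",
    address="123 Main St",
    city="Springfield",
    state="IL",
    zip_code="62701",
    product_name="Whole Milk, 1 gal",
    product_price=Decimal("3.99"),
    discounted_price=Decimal("3.49"),
)
print(record.effective_price())
```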

Why Is Grocery Delivery Data Scraping Important?

Competitive Advantage: Grocery delivery data scraping is a perfect tool to help businesses access real-time information about competitor pricing, product offerings, and promotions. Using this knowledge, companies can fine-tune their strategies to stand out.

Market Intelligence: Scraping data from various grocery delivery platforms provides valuable market intelligence. Businesses can analyze consumer preferences, track trends, and identify emerging opportunities, enabling them to make informed decisions based on data-driven insights.

Price Optimization: With access to pricing data from different platforms, companies can optimize their pricing strategies. They can adjust prices dynamically, ensuring competitiveness while maintaining profitability and responding swiftly to market fluctuations.

Customer Insights: Businesses can understand consumer sentiments and preferences by scraping customer reviews and feedback. It helps tailor products and services to meet customer expectations, improving customer satisfaction and loyalty.

Inventory Management: Grocery delivery data scraping services aid in effective inventory management. Businesses can monitor product demand and popularity to ensure the right products are stocked in the right quantities. This minimizes stockouts and excess inventory, optimizing supply chain efficiency.

How to Select the Right Scraper for Scraping Grocery Store Prices?

Selecting the proper scraper for scraping grocery store prices involves considering various factors to ensure efficient and accurate data extraction. Here's a step-by-step guide to help you make the best choice:

Identify Your Requirements: Determine the data you need to scrape from grocery store websites. Are you interested in product names, prices, availability, or other details? Understanding your requirements will guide your choice of scraper.

Choose the Right Programming Language: Popular web scraping libraries are available in different programming languages. Python is highly popular for web scraping, with libraries like BeautifulSoup and Scrapy. JavaScript options include Cheerio and Puppeteer. Choose a language you are comfortable with that best suits your project needs.

Ease of Use: Look for a user-friendly scraper that is easy to set up. A user-friendly scraper can save you time and effort in the development process.

Performance and Efficiency: Consider the efficiency of the scraper in terms of speed and resource usage. Efficient scrapers can handle large volumes of data without straining your system.

Support for JavaScript Rendering: Some websites use JavaScript to load content dynamically. If the grocery store websites you target use JavaScript rendering, consider a scraper that can handle it, like Puppeteer.

Robustness and Error Handling: A good scraper should be robust and capable of handling errors gracefully. Look for error-handling mechanisms that prevent the scraper from crashing if it encounters unexpected situations.

Data Parsing and Extraction: Ensure that the grocery data scraper can accurately parse and extract the specific data you need. It should be able to locate relevant elements on the website and extract information in a structured format.

Compliance with Website Policies: Choose a scraper that allows you to respect the terms of service and robots.txt files of the grocery store websites you are scraping. Respecting website policies is essential to avoid potential legal issues.

Community and Support: Check if the scraper has an active community and reliable support channels. It can be beneficial if you encounter any issues during the scraping process.

Scalability: If you plan to scrape data from multiple grocery store websites or expand your scraping efforts, consider a scalable scraper that can handle increased data loads.

Documentation and Tutorials: Ensure the scraper has comprehensive documentation and tutorials to guide you through the scraping process and troubleshoot common issues.
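The point above about respecting robots.txt can be sketched with Python's standard library. This is a minimal illustration, not a complete compliance check: the robots.txt content, the grocer.example URLs, and the user agent name example-price-bot are all made up for the example, and a site's terms of service still need to be read separately.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt content. A real scraper would load the site's own
# file with RobotFileParser.set_url(...) followed by read().
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /account/
Disallow: /checkout/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Build a parser from robots.txt text that is already in memory."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def is_allowed(parser: RobotFileParser, url: str,
               user_agent: str = "example-price-bot") -> bool:
    """Return True if the rules permit this user agent to fetch the URL."""
    return parser.can_fetch(user_agent, url)


parser = build_parser(SAMPLE_ROBOTS_TXT)
print(is_allowed(parser, "https://grocer.example/search?q=milk"))
print(is_allowed(parser, "https://grocer.example/account/orders"))
```

Calling is_allowed before each request lets the scraper skip disallowed paths instead of discovering the restriction after the fact.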

Role of a Scraper in Scraping Grocery Store Price Data

The role of a scraper in scraping grocery store price data is pivotal in gathering and extracting valuable pricing information from various online grocery store platforms. Here are the key roles and functions that a scraper plays in this context:

Data Extraction: The primary role of a scraper is to automatically access grocery store websites, navigate through the pages, and extract relevant pricing data. It locates specific HTML elements containing product names, prices, and other relevant details.

Real-Time Data: A scraper enables the collection of real-time pricing data from multiple grocery stores. It allows businesses to stay up-to-date with current market prices and respond swiftly to changes in pricing strategies.

Efficiency and Scale: Web scrapers can quickly process large amounts of data from numerous websites. This scalability is crucial in gathering comprehensive pricing information from various products and stores.

Competitive Analysis: A scraper enables businesses to perform detailed competitive analysis by collecting pricing data from competitor websites. It includes monitoring competitor prices, promotions, and product assortments, providing valuable insights for pricing strategies.

Price Comparison: Scraper-based data extraction allows businesses to compare prices of the same products across different grocery stores. This information helps consumers find the best deals and savings.

Trend Analysis: Scraped pricing data helps analyze pricing trends over time. Businesses can identify seasonal fluctuations, pricing patterns, and trends to adjust their strategies accordingly.

Forecasting and Inventory Management: Price data extracted from grocery store websites is helpful in forecasting product demand and planning inventory levels. It helps businesses optimize inventory management, reducing costs and wastage.

Personalization: With pricing data from various stores, businesses can effectively customize their offerings and promotions based on regional preferences and target customer segments.

Market Insights: The collected pricing data provides valuable market insights, enabling businesses to make data-driven decisions, set competitive prices, and enhance overall market understanding.

Streamlined Decision-Making: By automating data extraction, scrapers streamline the decision-making process for businesses. The availability of accurate and timely pricing information enables quicker responses to market changes.

Market Research: Pricing data scraped from grocery store websites becomes a valuable resource for market research, enabling businesses to identify emerging trends and opportunities.
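The price comparison role described above can be sketched in a few lines once prices have been scraped. The function name cheapest_store_per_product, the tuple layout, and the sample stores and prices are all hypothetical; the sketch also assumes that product names have already been normalized so the same item matches across stores.

```python
from decimal import Decimal
from typing import Dict, Iterable, Tuple

# Each observation is (store, product, price)
Observation = Tuple[str, str, Decimal]


def cheapest_store_per_product(
    observations: Iterable[Observation],
) -> Dict[str, Tuple[str, Decimal]]:
    """Map each product to the (store, price) pair with the lowest price."""
    best: Dict[str, Tuple[str, Decimal]] = {}
    for store, product, price in observations:
        current = best.get(product)
        # Keep the first store seen when two stores tie on price
        if current is None or price < current[1]:
            best[product] = (store, price)
    return best


# Made-up prices for illustration only
sample = [
    ("Store A", "Whole Milk 1 gal", Decimal("3.99")),
    ("Store B", "Whole Milk 1 gal", Decimal("3.79")),
    ("Store A", "Eggs 12 ct", Decimal("2.49")),
    ("Store B", "Eggs 12 ct", Decimal("2.99")),
]

for product, (store, price) in cheapest_store_per_product(sample).items():
    print(f"{product}: {store} at ${price}")
```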

Conclusion: A scraper is crucial in collecting, processing, and providing actionable pricing data from grocery store websites. By leveraging this information, businesses can make informed decisions, optimize pricing strategies, and gain a competitive advantage in the dynamic online grocery market.

In today's world, shoppers are no longer confused about where to find and purchase specific goods. E-commerce platforms like Amazon and Walmart are popular among millennial shoppers because they make it simple to compare products and locate the best value in a short amount of time.

Pricing is at the top of the list of factors that affect a customer's purchasing choice. While shoppers are debating which products to buy, e-commerce companies are busy deciding what to charge for each product.

In the internet economy, Amazon has dominated the pricing game. Thanks to its smart technique for gathering pricing information, Amazon has become the unchallenged winner.

Amazon's distinctive pricing technique can be explained by two factors: price change frequency and price change revisions. In 2013, Amazon changed the prices of 40 million products in a single day, and this number more than doubled the following year. Amazon's competitors couldn't even come close to matching this figure.

Why Is Price Comparison Necessary?

Price comparison websites and e-commerce sites of various sizes and types use price scraping. It may take the form of a simple Chrome plugin, a Python program that scrapes information from competitor e-commerce sites like Walmart or BestBuy, or a full-fledged data scraping service.

Retailers must continuously perform price analysis for various reasons. For one, competitors may sell the same products at lower prices and offer various discounts. Amazon overcame this hurdle by adopting site scraping and constant price monitoring. So, let's figure out what Amazon's secret formula is.

Amazon uses sophisticated price scraping to keep track of competitors and offer products at competitive prices. By some accounts, Amazon makes more than 2.5 million price changes a day. Amazon used big data analytics to become a leader in this kind of focused market research, and it was able to leverage web scraping for a variety of business purposes.