#Extract Product Data

Etsy is an e-commerce website focused on handmade, vintage, and craft products. Its listings span a wide price range and many categories, including toys, bags, home decor, jewelry, clothing, and craft supplies and tools.

Etsy's annual revenue in 2019 was about $818.79 million, and its marketplace revenue that year was about $593.65 million. As of 2019, Etsy had around 2.5 million active sellers and about 45.7 million active buyers. About 83% of Etsy sellers are women.

#Etsy Web Data#Etsy Web Data Scraping#Etsy Web Data Scraping Services#Extract Product Data From Etsy Website#Extract Product Data

This tutorial explains how to extract product data from Walmart with Python and BeautifulSoup. Get the best Walmart product data scraping services from iWeb Scraping at affordable prices.

What Are the Qualifications for a Data Scientist?

In today's data-driven world, the role of a data scientist has become one of the most coveted career paths. With businesses relying on data for decision-making, understanding customer behavior, and improving products, the demand for skilled professionals who can analyze, interpret, and extract value from data is at an all-time high. If you're wondering what qualifications are needed to become a successful data scientist, how DataCouncil can help you get there, and why a data science course in Pune is a great option, this blog has the answers.

The Key Qualifications for a Data Scientist

To succeed as a data scientist, a mix of technical skills, education, and hands-on experience is essential. Here are the core qualifications required:

1. Educational Background

A strong foundation in mathematics, statistics, or computer science is typically expected. Most data scientists hold at least a bachelor’s degree in one of these fields, with many pursuing higher education such as a master's or a Ph.D. A data science course in Pune with DataCouncil can bridge this gap, offering the academic and practical knowledge required for a strong start in the industry.

2. Proficiency in Programming Languages

Programming is at the heart of data science. You need to be comfortable with languages like Python, R, and SQL, which are widely used for data analysis, machine learning, and database management. A comprehensive data science course in Pune will teach these programming skills from scratch, ensuring you become proficient in coding for data science tasks.

3. Understanding of Machine Learning

Data scientists must have a solid grasp of machine learning techniques and algorithms such as regression, clustering, and decision trees. By enrolling in a DataCouncil course, you'll learn how to implement machine learning models to analyze data and make predictions, an essential qualification for landing a data science job.

4. Data Wrangling Skills

Raw data is often messy and unstructured, and a good data scientist needs to be adept at cleaning and processing data before it can be analyzed. DataCouncil's data science course in Pune includes practical training in tools like Pandas and NumPy for effective data wrangling, helping you develop a strong skill set in this critical area.

5. Statistical Knowledge

Statistical analysis forms the backbone of data science. Knowledge of probability, hypothesis testing, and statistical modeling allows data scientists to draw meaningful insights from data. A structured data science course in Pune offers the theoretical and practical aspects of statistics required to excel.

6. Communication and Data Visualization Skills

Being able to explain your findings in a clear and concise manner is crucial. Data scientists often need to communicate with non-technical stakeholders, making tools like Tableau, Power BI, and Matplotlib essential for creating insightful visualizations. DataCouncil’s data science course in Pune includes modules on data visualization, which can help you present data in a way that’s easy to understand.

7. Domain Knowledge

Apart from technical skills, understanding the industry you work in is a major asset. Whether it’s healthcare, finance, or e-commerce, knowing how data applies within your industry will set you apart from the competition. DataCouncil's data science course in Pune is designed to offer case studies from multiple industries, helping students gain domain-specific insights.

Why Choose DataCouncil for a Data Science Course in Pune?

If you're looking to build a successful career as a data scientist, enrolling in a data science course in Pune with DataCouncil can be your first step toward reaching your goals. Here’s why DataCouncil is the ideal choice:

Comprehensive Curriculum: The course covers everything from the basics of data science to advanced machine learning techniques.

Hands-On Projects: You'll work on real-world projects that mimic the challenges faced by data scientists in various industries.

Experienced Faculty: Learn from industry professionals who have years of experience in data science and analytics.

100% Placement Support: DataCouncil provides job assistance to help you land a data science job in Pune or anywhere else, making it a great investment in your future.

Flexible Learning Options: With both weekday and weekend batches, DataCouncil ensures that you can learn at your own pace without compromising your current commitments.

Conclusion

Becoming a data scientist requires a combination of technical expertise, analytical skills, and industry knowledge. By enrolling in a data science course in Pune with DataCouncil, you can gain all the qualifications you need to thrive in this exciting field. Whether you're a fresher looking to start your career or a professional wanting to upskill, this course will equip you with the knowledge, skills, and practical experience to succeed as a data scientist.

Explore DataCouncil’s offerings today and take the first step toward a rewarding career in data science!

Looking for the best data science course in Pune? DataCouncil offers comprehensive data science classes in Pune designed to equip you with the skills to excel in this booming field. Our data science course in Pune covers everything from data analysis to machine learning, at competitive fees. We offer job-oriented programs with placement support, as well as online data science training in Pune, to help take your career to new heights!

Market Research with Web Data Solutions – Dignexus

How to Extract Amazon Product Prices Data with Python 3

Web scraping automates the collection of data from websites. In this blog, we will create an Amazon product data scraper that extracts product prices and details. We will build this simple extractor with SelectorLib and Python and run it from the console.
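Whichever extractor you use (the post builds one with SelectorLib), prices typically come back as display text such as "$1,299.99" rather than as numbers. Below is a minimal standard-library sketch of a hypothetical `parse_price` helper that cleans those strings before you compare or store them. It assumes US-style formatting, with a comma as the thousands separator and a dot as the decimal point. It takes the first number it finds, so a range like "$24.99 - $39.99" yields the lower bound.

```python
import re
from decimal import Decimal
from typing import Optional

# First run of digits, allowing comma thousands separators and an optional decimal part.
_PRICE_RE = re.compile(r"\d[\d,]*(?:\.\d+)?")


def parse_price(text: Optional[str]) -> Optional[Decimal]:
    """Convert scraped price text such as '$1,299.99' into Decimal('1299.99').

    Returns None when the text is missing or contains no number
    (for example, an 'unavailable' placeholder). Assumes US-style formatting.
    """
    if not text:
        return None
    match = _PRICE_RE.search(text)
    if match is None:
        return None
    # Drop thousands separators; Decimal avoids float rounding on currency values.
    return Decimal(match.group(0).replace(",", ""))
```

`Decimal` is used instead of `float` so that sums and comparisons of prices do not pick up binary rounding errors.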

#webscraping#data extraction#web scraping api#Amazon Data Scraping#Amazon Product Pricing#ecommerce data scraping#Data EXtraction Services

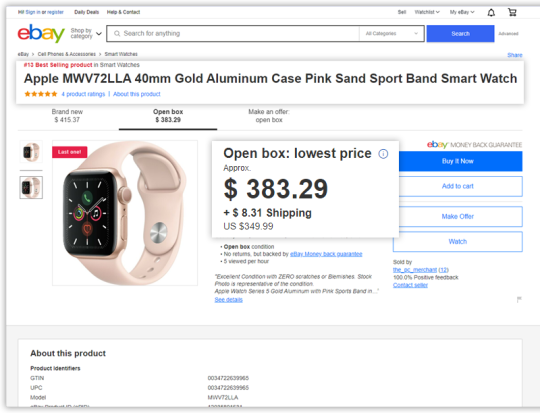

Scrape eBay Product Data | eBay Product Data Scraper

Scrape eBay product data such as item names, prices, bid information, images, and payment options using Retailgators. Download the scraped data in CSV, Excel, or JSON format.
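Exporting scraped records to CSV and JSON needs only Python's standard library. The sketch below assumes each record is a dict whose keys are hypothetical names mirroring the fields listed above. List values, such as several payment options, are joined into one CSV cell but kept as lists in JSON. A CSV file opens directly in Excel; writing a native .xlsx file would need a third-party package such as openpyxl.

```python
import csv
import io
import json

# Hypothetical column names for the fields listed above.
FIELDS = ["item_name", "price", "bid_info", "image_url", "payment_options"]


def to_csv(records):
    """Serialize a list of record dicts to CSV text with a header row."""
    buffer = io.StringIO()
    # extrasaction="ignore" skips unexpected keys; missing keys become empty cells.
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, extrasaction="ignore")
    writer.writeheader()
    for record in records:
        # CSV cells are flat, so join list values such as several payment options.
        row = {k: "; ".join(v) if isinstance(v, list) else v for k, v in record.items()}
        writer.writerow(row)
    return buffer.getvalue()


def to_json(records):
    """Serialize the same records to pretty-printed JSON, keeping lists as lists."""
    return json.dumps(records, indent=2, ensure_ascii=False)
```

To save the output, write `to_csv(records)` to a file opened with `newline=""`, which stops Python from doubling the CSV line endings. Write `to_json(records)` to a file with a `.json` extension.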

#Scrape eBay Product Data#eBay Data Scraping#Extract eBay Data#eBay Data Extraction#eBay Product Data Scraping

#Web Scraping ASDA Liquor Products and Prices#Scrape Liquor Prices from ASDA#ASDA Alcohol Price Scraping Services#Extract Liquor Pricing Data from ASDA#Web Scraping ASDA Alcohol Prices#Extract Asda Alcohol and Liquor Price Data

How to Automate Document Processing for Your Business: A Step-by-Step Guide

Managing documents manually is one of the biggest time drains in business today. From processing invoices and contracts to organizing customer forms, these repetitive tasks eat up hours every week. The good news? Automating document processing is simpler (and more affordable) than you might think.

In this easy-to-follow guide, we’ll show you step-by-step how to automate document processing in your business—saving you time, reducing errors, and boosting productivity.

What You’ll Need

A scanner (if you still have paper documents)

Document processing software (like AppleTechSoft’s Document Processing Solution)

Access to your business’s document workflows (invoices, forms, receipts, etc.)

Step 1: Identify Documents You Want to Automate

Start by making a list of documents that take up the most time to process. Common examples include:

Invoices and bills

Purchase orders

Customer application forms

Contracts and agreements

Expense receipts

Tip: Prioritize documents that are repetitive and high volume.

Step 2: Digitize Your Paper Documents

If you’re still handling paper, scan your documents into digital formats (PDF, JPEG, etc.). Most modern document processing tools work best with digital files.

Quick Tip: Use high-resolution scans (300 DPI or more) for accurate data extraction.
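To check whether an existing scan meets the 300 DPI guideline, divide the image's pixel dimensions by the page's physical size in inches. The sketch below uses a US Letter page (8.5 by 11 inches) as its default. The function names and defaults are illustrative assumptions, not part of any particular scanning tool.

```python
def effective_dpi(pixel_width, pixel_height, page_width_in, page_height_in):
    """Lower of the horizontal and vertical resolutions, in dots per inch."""
    return min(pixel_width / page_width_in, pixel_height / page_height_in)


def required_pixels(page_width_in=8.5, page_height_in=11.0, dpi=300):
    """Minimum image size (width, height) in pixels for a page scanned at `dpi`."""
    return round(page_width_in * dpi), round(page_height_in * dpi)


def meets_ocr_guideline(pixel_width, pixel_height,
                        page_width_in=8.5, page_height_in=11.0, min_dpi=300):
    """True if the scan resolves at least `min_dpi` in both directions."""
    return effective_dpi(pixel_width, pixel_height, page_width_in, page_height_in) >= min_dpi
```

At 300 DPI, a US Letter page works out to 8.5 × 300 = 2,550 by 11 × 300 = 3,300 pixels, so `required_pixels()` returns `(2550, 3300)`. A 1,700 by 2,200 pixel image of the same page is only 200 DPI and fails the check.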

Step 3: Choose a Document Processing Tool

Look for a platform that offers:

OCR (Optical Character Recognition) to extract text from scanned images

AI-powered data extraction to capture key fields like dates, names, and totals

Integration with your accounting software, CRM, or database

Security and compliance features to protect sensitive data

AppleTechSoft’s Document Processing Solution ticks all these boxes and more.

Step 4: Define Your Workflow Rules

Tell your software what you want it to do with your documents. For example:

Extract vendor name, date, and amount from invoices

Automatically save contracts to a shared folder

Send expense reports directly to accounting

Most tools offer an easy drag-and-drop interface or templates to set these rules up.
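Rules like these can be sketched in a few lines of Python. The example below is only an illustration, not AppleTechSoft's product or API. It assumes OCR has already produced plain text with labeled lines ("Vendor:", "Date:", "Total:"), and its regular expressions and destination folder names are hypothetical. Any invoice with a missing field goes to a review queue instead of to accounting.

```python
import re

# Hypothetical patterns for a simple, labeled invoice layout; real invoices vary widely.
# [ \t]* (not \s*) keeps a blank value from spilling onto the next line.
INVOICE_PATTERNS = {
    "vendor": re.compile(r"^Vendor:[ \t]*(.+)$", re.MULTILINE),
    "date": re.compile(r"^Date:[ \t]*(\d{4}-\d{2}-\d{2})$", re.MULTILINE),
    "amount": re.compile(r"^Total:[ \t]*\$?([\d,]+\.\d{2})$", re.MULTILINE),
}

# Hypothetical routing table: document type -> destination folder.
ROUTES = {
    "invoice": "accounting/invoices",
    "contract": "shared/contracts",
    "expense_report": "accounting/expenses",
}


def extract_fields(text, patterns):
    """Return {field: value}, with None for any field whose pattern did not match."""
    fields = {}
    for name, pattern in patterns.items():
        match = pattern.search(text)
        fields[name] = match.group(1).strip() if match else None
    return fields


def route(doc_type):
    """Look up the destination for a document type; unknown types go to manual review."""
    return ROUTES.get(doc_type, "review/unclassified")


def process_invoice(text):
    """Extract invoice fields and pick a destination, flagging incomplete extractions."""
    fields = extract_fields(text, INVOICE_PATTERNS)
    missing = [name for name, value in fields.items() if value is None]
    destination = "review/needs-attention" if missing else route("invoice")
    return destination, fields
```

Keeping the patterns and routes in plain dictionaries mirrors the template idea above. Adding a new document type means adding entries rather than new code.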

Step 5: Test Your Automation

Before going live, test the workflow with sample documents. Check if:

Data is extracted accurately

Documents are routed to the right folders or apps

Any errors or mismatches are flagged

Tweak your settings as needed.
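One way to run this check on a batch of sample documents is to compare what the tool extracted against values you verified by hand. The sketch below assumes both are plain dicts keyed by field name; the field names in the usage section are hypothetical. It reports each mismatch along with an overall field-level accuracy.

```python
def find_mismatches(extracted, expected):
    """List every field where the extracted value differs from the hand-checked value."""
    problems = []
    for name, want in expected.items():
        got = extracted.get(name)
        if got is None:
            problems.append(f"{name}: missing (expected {want!r})")
        elif got != want:
            problems.append(f"{name}: got {got!r}, expected {want!r}")
    return problems


def field_accuracy(samples):
    """Fraction of expected fields extracted exactly, across (extracted, expected) pairs."""
    total = matched = 0
    for extracted, expected in samples:
        for name, want in expected.items():
            total += 1
            matched += extracted.get(name) == want
    return matched / total if total else 1.0


if __name__ == "__main__":
    samples = [
        ({"vendor": "ACME", "amount": "1,250.00"}, {"vendor": "ACME", "amount": "1,250.00"}),
        ({"vendor": "ACME", "amount": "1,205.00"}, {"vendor": "ACME", "amount": "1,250.00"}),
    ]
    for extracted, expected in samples:
        for problem in find_mismatches(extracted, expected):
            print("MISMATCH:", problem)
    print(f"Field accuracy: {field_accuracy(samples):.0%}")
```

With the two samples above, three of four fields match, so the script prints one amount mismatch and a field accuracy of 75%.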

Step 6: Go Live and Monitor

Once you’re confident in your workflow, deploy it for daily use. Monitor the automation for the first few weeks to ensure it works as expected.

Pro Tip: Set up alerts for any failed extractions or mismatches so you can quickly correct issues.

Bonus Tips for Success

Regularly update your templates as your document formats change

Train your team on how to upload and manage documents in the system

Schedule periodic reviews to optimize and improve your workflows

Conclusion

Automating document processing can transform your business operations—from faster invoicing to smoother customer onboarding. With the right tools and a clear plan, you can streamline your paperwork and focus on what matters most: growing your business.

Ready to get started? Contact AppleTechSoft today to explore our Document Processing solutions.

#document processing#business automation#workflow automation#AI tools#paperless office#small business tips#productivity hacks#digital transformation#AppleTechSoft#business technology#OCR software#data extraction#invoicing automation#business growth#time saving tips

🚀 The ChatGPT Desktop App is Changing the Game! 🤯💻

Imagine having an AI assistant that can:

✅ Reply to emails in seconds 📧⏩

✅ Generate high-quality images with DALL-E 🎨🤩

✅ Summarize long content instantly 📖📜

✅ Write HTML/CSS code from screenshots 💻💡

✅ Translate text across multiple languages 🌍🗣️

✅ Extract text from images easily 📷📝

✅ Analyze large datasets from Excel/CSV files 📊📈

👉 This app is designed to save you time.

#ChatGPT #ChatGPTDesktopApp #AIProductivity #dalle #TechT

#AI automation#AI content creation#AI email management#AI for business#AI productivity tool#AI social media engagement#automatic code generation#ChatGPT benefits#ChatGPT coding#ChatGPT content summarization#ChatGPT desktop app#ChatGPT email replies#ChatGPT features#ChatGPT for professionals#ChatGPT tools for professionals.#ChatGPT uses#content summarization#DALL-E image generation#data analysis with AI#simplify daily tasks#smart translation#social media automation#text extraction from images

💼 Unlock LinkedIn Like Never Before with the LinkedIn Profile Explorer!

Need to extract LinkedIn profile data effortlessly? Meet the LinkedIn Profile Explorer by Dainty Screw—your ultimate tool for automated LinkedIn data collection.

✨ What This Tool Can Do:

• 🧑‍💼 Extract names, job titles, and company details.

• 📍 Gather profile locations and industries.

• 📞 Scrape contact information (if publicly available).

• 🚀 Collect skills, education, and more from profiles!

💡 Perfect For:

• Recruiters sourcing top talent.

• Marketers building lead lists.

• Researchers analyzing career trends.

• Businesses creating personalized outreach campaigns.

🚀 Why Choose the LinkedIn Profile Explorer?

• Accurate Data: Scrapes reliable and up-to-date profile details.

• Customizable Searches: Target specific roles, industries, or locations.

• Time-Saving Automation: Save hours of manual work.

• Scalable for Big Projects: Perfect for bulk data extraction.

🔗 Get Started Today:

Simplify LinkedIn data collection with one click: LinkedIn Profile Explorer

🙌 Whether you’re hiring, marketing, or researching, this tool makes LinkedIn data extraction fast, easy, and reliable. Try it now!

Tags: #LinkedInScraper #ProfileExplorer #WebScraping #AutomationTools #Recruitment #LeadGeneration #DataExtraction #ApifyTools

#LinkedIn scraper#profile explorer#apify tools#automation tools#lead generation#data scraper#data extraction tools#data scraping#100 days of productivity#accounting#recruiting

When self-described “ocean custodian” Boyan Slat took the stage at TED 2025 in Vancouver this week, he showed viewers a reality many of us are already heartbreakingly familiar with: There is a lot of trash in the ocean.

“If we allow current trends to continue, the amount of plastic that’s entering the ocean is actually set to double by 2060,” Slat said in his TED Talk, which will be published online at a later date.

Plus, once plastic is in the ocean, it accumulates in “giant circular currents” called gyres, which Slat said operate much like a bathtub drain: plastic can enter these currents but cannot leave.

That’s how we get enormous build-ups like the Great Pacific Garbage Patch, a giant collection of plastic pollution in the ocean that is roughly twice the size of Texas.

As the founder and CEO of The Ocean Cleanup, Slat’s goal is to return our oceans to their original, clean state before 2040. To accomplish this, two things must be done.

First: Stop more plastic from entering the ocean. Second: Clean up the “legacy” pollution that is already out there and doesn’t go away by itself.

And Slat is well on his way.

Pictured: Kingston Harbour in Jamaica. Photo courtesy of The Ocean Cleanup Project

When Slat’s first TEDx Talk went viral in 2012, he was able to organize research teams to create the first-ever map of the Great Pacific Garbage Patch. From there, they created a technology to collect plastic from the most garbage-heavy areas in the ocean.

“We imagined a very long, u-shaped barrier … that would be pushed by wind and waves,” Slat explained in his Talk.

This barrier would act as a funnel to collect garbage and be emptied out for recycling.

But there was a problem.

“We took it out in the ocean, and deployed it, and it didn’t collect plastic,” Slat said, “which is a pretty important requirement for an ocean cleanup system.”

Soon after, this first system broke in two. But a few days later, his team was already back at the drawing board.

From here, they added vessels that would tow the system forward, allowing it to sweep a larger area and move more methodically through the water. Mesh attached to the barrier would gather plastic and guide it to a retention area, where it would be extracted and loaded onto a ship for sorting, processing, and recycling.

It worked.

“For 60 years, humanity had been putting plastic into the ocean, but from that day onwards, we were also taking it back out again,” Slat said, with a video of the technology in action playing on screen behind him.

To applause, he said: “It’s the most beautiful thing I’ve ever seen, honestly.”

Over the years, Ocean Cleanup has scaled up this cleanup barrier, now measuring almost 2.5 kilometers — or about 1.5 miles — in length. And it cleans up an area of the ocean the size of a football field every five seconds.

Pictured: The Ocean Cleanup's System 002 deployed in the Great Pacific Garbage Patch. Photo courtesy of The Ocean Cleanup

The system is designed to be safe for marine life, and once plastic is brought to land, it is recycled into new products, like sunglasses, accessories for electric vehicles, and even Coldplay’s latest vinyl record, according to Slat.

These products fund the continuation of the cleanup. The next step of the project is to use drones to target areas of the ocean that have the highest plastic concentration.

In September 2024, Ocean Cleanup predicted the Patch would be cleaned up within 10 years.

However, on April 8, Slat estimated “that this fleet of systems can clean up the Great Pacific Garbage Patch in as little as five years’ time.”

With ongoing support from MCS, a Netherlands-based Nokia company, Ocean Cleanup can quickly scale its reliable, real-time data and video communication to best target the problem.

It’s the largest ocean cleanup in history.

But what about the plastic pollution coming into the ocean through rivers across the world? Ocean Cleanup is working on that, too.

To study plastic pollution in other waterways, Ocean Cleanup attached AI cameras to bridges, measuring the flow of trash in dozens of rivers around the world, creating the first global model to predict where plastic is entering oceans.

“We discovered: Just 1% of the world’s rivers are responsible for about 80% of the plastic entering our oceans,” Slat said.

His team found that coastal cities in middle-income countries were primarily responsible, as people living in these areas have enough wealth to buy things packaged in plastic, but governments can’t afford robust waste management infrastructure.

Ocean Cleanup now tackles those 1% of rivers to capture the plastic before it reaches oceans.

Pictured: Interceptor 007 in Los Angeles. Photo courtesy of The Ocean Cleanup

“It’s not a replacement for the slow but important work that’s being done to fix a broken system upstream,” Slat said. “But we believe that tackling this 1% of rivers provides us with the only way to rapidly close the gap.”

To clean up plastic waste in rivers, Ocean Cleanup has implemented technology called “interceptors,” which include solar-powered trash collectors and mobile systems in eight countries worldwide.

In Guatemala, an interceptor captured 1.4 million kilograms (or over 3 million pounds) of trash in under two hours. Now, this kind of collection happens up to three times a week.

“All of that would have ended up in the sea,” Slat said.

Now, interceptors are being brought to 30 cities around the world, targeting waterways that bring the most trash into our oceans. GPS trackers also mimic the flow of the plastic to help strategically deploy the systems for the most impact.

“We can already stop up to one-third of all the plastic entering our oceans once these are deployed,” Slat said.

And as soon as he finished his Talk on the TED stage, Slat was told that TED’s Audacious Project would be funding the deployment of Ocean Cleanup’s efforts in those 30 cities as part of the organization’s next cohort of grantees.

While it is unclear how much support Ocean Cleanup will receive from the Audacious Project, Head of TED Chris Anderson told Slat: “We’re inspired. We’re determined in this community to raise the money you need to make that 30-city project happen.”

And Slat himself is determined to clean the oceans for good.

“For humanity to thrive, we need to be optimistic about the future,” Slat said, closing out his Talk.

“Once the oceans are clean again, it can be this example of how, through hard work and ingenuity, we can solve the big problems of our time.”

-via GoodGoodGood, April 9, 2025

#ocean#oceans#plastic#plastic pollution#ocean cleanup#ted talks#boyan slat#climate action#climate hope#hopepunk#pollution#environmental issues#environment#pacific ocean#rivers#marine life#good news#hope

An AliExpress scraper is an automated software tool that scrapes AliExpress product data in a very short time, offering the best solution for AliExpress data scraping.

How to Extract Product Data from Walmart with Python and BeautifulSoup

In the vast world of e-commerce, accessing and analyzing product data is a crucial aspect for businesses aiming to stay competitive. Whether you're a small-scale seller or a large corporation, having access to comprehensive product information can significantly enhance your decision-making process and marketing strategies.

Walmart, being one of the largest retailers globally, offers a treasure trove of product data. Extracting this data programmatically can be a game-changer for businesses looking to gain insights into market trends, pricing strategies, and consumer behavior. In this guide, we'll explore how to harness the power of Python and BeautifulSoup to scrape product data from Walmart's website efficiently.

Why BeautifulSoup and Python?

BeautifulSoup is a Python library designed for quick and easy data extraction from HTML and XML files. Combined with Python's simplicity and versatility, it becomes a potent tool for web scraping tasks. By utilizing these tools, you can automate the process of retrieving product data from Walmart's website, saving time and effort compared to manual data collection methods.

Setting Up Your Environment

Before diving into the code, you'll need to set up your Python environment. Ensure you have Python installed on your system, along with the BeautifulSoup library and the requests library, which the script uses to download pages. You can install both with pip, Python's package installer, by running the following command:

```bash
pip install requests beautifulsoup4
```

Scraping Product Data from Walmart

Now, let's walk through a simple script to scrape product data from Walmart's website. We'll focus on extracting product names, prices, and ratings. Below is a basic Python script to achieve this:

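```python
import requests
from bs4 import BeautifulSoup

# The class names below come from the original tutorial and reflect an older
# Walmart page layout; inspect the live page and update them if nothing is found.
HEADERS = {"User-Agent": "Mozilla/5.0 (compatible; product-research-script)"}


def text_or_none(element):
    """Return the stripped text of a tag, or None if the tag was not found."""
    return element.get_text(strip=True) if element else None


def scrape_walmart_product_data(url):
    # Send a GET request; many sites reject requests without a browser-like User-Agent
    response = requests.get(url, headers=HEADERS, timeout=30)
    response.raise_for_status()  # stop early on HTTP errors such as 403 or 404

    # Parse the HTML content
    soup = BeautifulSoup(response.text, "html.parser")

    # Find all product containers
    products = soup.find_all("div", class_="search-result-gridview-items")

    for product in products:
        name = text_or_none(product.find("a", class_="product-title-link"))
        price = text_or_none(product.find("span", class_="price"))

        # The rating's aria-label is assumed to start with the numeric score
        stars = product.find("span", class_="stars-container")
        label = stars.get("aria-label", "") if stars else ""
        rating = label.split()[0] if label else None

        print(f"Name: {name}, Price: {price}, Rating: {rating}")


if __name__ == "__main__":
    # URL of the Walmart search page
    url = "https://www.walmart.com/search/?query=laptop"
    scrape_walmart_product_data(url)
```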

Conclusion

In this tutorial, we've demonstrated how to extract product data from Walmart's website using Python and BeautifulSoup. By automating the process of data collection, you can streamline your market research efforts and gain valuable insights into product trends, pricing strategies, and consumer preferences.

However, it's essential to be mindful of Walmart's terms of service and use web scraping responsibly and ethically. Always check for any legal restrictions or usage policies before scraping data from a website.
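Checking a site's robots.txt is one concrete part of scraping responsibly, and Python's standard library handles it with `urllib.robotparser`. The sketch below parses a robots.txt body you have already downloaded. It reports whether a URL may be fetched and how long to wait between requests. The helper names and the sample rules in the tests are made up for illustration; they are not Walmart's actual file. robots.txt does not replace reading the site's terms of service.

```python
from urllib.robotparser import RobotFileParser


def build_parser(robots_txt):
    """Parse the text of a robots.txt file that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def may_fetch(parser, user_agent, url):
    """True if the parsed rules allow this user agent to fetch the URL."""
    return parser.can_fetch(user_agent, url)


def polite_delay(parser, user_agent, default_seconds=2.0):
    """Seconds to wait between requests: the site's Crawl-delay if set, else a default."""
    delay = parser.crawl_delay(user_agent)
    return float(delay) if delay is not None else default_seconds
```

To check a live site instead, `RobotFileParser("https://www.walmart.com/robots.txt")` followed by `.read()` downloads and parses the file in one step. Pausing for `polite_delay(...)` seconds (for example with `time.sleep`) between page requests keeps the scraper's load on the site low.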

With the power of Python and BeautifulSoup at your fingertips, you're equipped to unlock the wealth of product data available on Walmart's platform, empowering your business to make informed decisions and stay ahead in the competitive e-commerce landscape. Happy scraping!

#Scrape Amazon Products data#Amazon Products data scraper#Amazon Products data scraping#Amazon Products data collection#Amazon Products data extraction#Youtube

E-commerce data scraping provides detailed information on market dynamics, prevailing patterns, pricing data, competitors’ practices, and challenges. Scrape e-commerce data such as products, pricing, deals and offers, customer reviews, ratings, text, links, seller details, images, and more. Collect e-commerce data from any dynamic website to gain an edge in a competitive market. Boost your business growth, increase revenue, and improve efficiency with Lensnure's custom e-commerce web scraping services. Our team of highly qualified and experienced professionals specializes in web data scraping.

#ecommerce data scraping#product data scraping#ecommerce web scraping#product data extraction#Lensnure Solutions

Extracting Shopify product data efficiently can give your business a competitive edge. In just a few minutes, you can gather essential information such as product names, prices, descriptions, and inventory levels. Start by using web scraping tools like Python with libraries such as BeautifulSoup or Scrapy, which allow you to automate the data collection process. Alternatively, consider using user-friendly no-code platforms that simplify the extraction without programming knowledge. This valuable data can help inform pricing strategies, product listings, and inventory management, ultimately driving your eCommerce success.
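Many Shopify storefronts expose a public `/products.json` endpoint that returns catalog data as JSON, which is often simpler than parsing HTML with BeautifulSoup. The sketch below assumes that endpoint is enabled on the target store. It also assumes each product has a `title` and a `variants` list whose entries carry `title`, `price`, and `available` keys; verify both against a real response. Exact inventory counts may not be included. The function names are hypothetical.

```python
import json
from urllib.request import Request, urlopen


def fetch_products_page(store_url, page=1, limit=250):
    """Download one page of a store's public products.json (assumed to be enabled)."""
    url = f"{store_url.rstrip('/')}/products.json?limit={limit}&page={page}"
    request = Request(url, headers={"User-Agent": "Mozilla/5.0 (catalog research script)"})
    with urlopen(request, timeout=30) as response:
        return response.read().decode("utf-8")


def flatten_products(payload):
    """Turn a products.json payload into one row per variant (name, option, price, stock flag)."""
    data = json.loads(payload)
    rows = []
    for product in data.get("products", []):
        for variant in product.get("variants", []):
            rows.append({
                "product": product.get("title"),
                "variant": variant.get("title"),
                "price": variant.get("price"),
                "available": variant.get("available"),
            })
    return rows
```

For example, `flatten_products(fetch_products_page("https://example-store.com"))` returns rows ready to write to CSV. Assuming the store honors the `page` parameter, increment it until a page comes back with no products.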

#extract Shopify websites#scrape Shopify product data#extract product data from Shopify websites#Data Scraping
