#How to Extract Product Data


Text

This tutorial blog helps you understand how to extract product data from Walmart with Python and BeautifulSoup. Get the best Walmart product data scraping services from iWeb Scraping at affordable prices.
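As a rough illustration of what extracting product data from a page involves, here is a minimal sketch. It swaps in Python's standard-library `html.parser` in place of BeautifulSoup so that it runs without extra packages, and it parses a made-up HTML snippet rather than a live page. The markup, the class names (`product`, `title`, `price`) and the helper `extract_products` are assumptions for illustration only; real Walmart pages use different markup that changes over time.

```python
from html.parser import HTMLParser

# Hypothetical product markup (an assumption, not real Walmart HTML).
SAMPLE_HTML = """
<div class="product">
  <span class="title">Example Blender</span>
  <span class="price">$29.99</span>
</div>
<div class="product">
  <span class="title">Example Kettle</span>
  <span class="price">$18.50</span>
</div>
"""


class ProductParser(HTMLParser):
    """Collect title and price text from the hypothetical markup above."""

    def __init__(self):
        super().__init__()
        self.products = []   # one dict per <div class="product">
        self._field = None   # name of the span we are currently inside

    def handle_starttag(self, tag, attrs):
        cls = dict(attrs).get("class")
        if tag == "div" and cls == "product":
            # A new product container starts: open a fresh record.
            self.products.append({})
        elif tag == "span" and cls in ("title", "price") and self.products:
            self._field = cls

    def handle_data(self, data):
        # Only keep text that sits inside a title or price span.
        if self._field:
            self.products[-1][self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None


def extract_products(html):
    """Return a list of {'title': ..., 'price': ...} dicts found in html."""
    parser = ProductParser()
    parser.feed(html)
    parser.close()
    return parser.products


products = extract_products(SAMPLE_HTML)
for product in products:
    print(product["title"], product["price"])
```

With BeautifulSoup the same idea is usually expressed by selecting the product containers and reading the text of their child elements. Either way, the selectors have to be adapted to the actual page being scraped.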


Text

What Are the Qualifications for a Data Scientist?

In today's data-driven world, the role of a data scientist has become one of the most coveted career paths. With businesses relying on data for decision-making, understanding customer behavior, and improving products, the demand for skilled professionals who can analyze, interpret, and extract value from data is at an all-time high. If you're wondering what qualifications are needed to become a successful data scientist, how DataCouncil can help you get there, and why a data science course in Pune is a great option, this blog has the answers.

The Key Qualifications for a Data Scientist

To succeed as a data scientist, a mix of technical skills, education, and hands-on experience is essential. Here are the core qualifications required:

1. Educational Background

A strong foundation in mathematics, statistics, or computer science is typically expected. Most data scientists hold at least a bachelor’s degree in one of these fields, with many pursuing higher education such as a master's or a Ph.D. A data science course in Pune with DataCouncil can bridge this gap, offering the academic and practical knowledge required for a strong start in the industry.

2. Proficiency in Programming Languages

Programming is at the heart of data science. You need to be comfortable with languages like Python, R, and SQL, which are widely used for data analysis, machine learning, and database management. A comprehensive data science course in Pune will teach these programming skills from scratch, ensuring you become proficient in coding for data science tasks.

3. Understanding of Machine Learning

Data scientists must have a solid grasp of machine learning techniques and algorithms such as regression, clustering, and decision trees. By enrolling in a DataCouncil course, you'll learn how to implement machine learning models to analyze data and make predictions, an essential qualification for landing a data science job.

4. Data Wrangling Skills

Raw data is often messy and unstructured, and a good data scientist needs to be adept at cleaning and processing data before it can be analyzed. DataCouncil's data science course in Pune includes practical training in tools like Pandas and NumPy for effective data wrangling, helping you develop a strong skill set in this critical area.

5. Statistical Knowledge

Statistical analysis forms the backbone of data science. Knowledge of probability, hypothesis testing, and statistical modeling allows data scientists to draw meaningful insights from data. A structured data science course in Pune offers the theoretical and practical aspects of statistics required to excel.

6. Communication and Data Visualization Skills

Being able to explain your findings in a clear and concise manner is crucial. Data scientists often need to communicate with non-technical stakeholders, making tools like Tableau, Power BI, and Matplotlib essential for creating insightful visualizations. DataCouncil’s data science course in Pune includes modules on data visualization, which can help you present data in a way that’s easy to understand.

7. Domain Knowledge

Apart from technical skills, understanding the industry you work in is a major asset. Whether it’s healthcare, finance, or e-commerce, knowing how data applies within your industry will set you apart from the competition. DataCouncil's data science course in Pune is designed to offer case studies from multiple industries, helping students gain domain-specific insights.

Why Choose DataCouncil for a Data Science Course in Pune?

If you're looking to build a successful career as a data scientist, enrolling in a data science course in Pune with DataCouncil can be your first step toward reaching your goals. Here’s why DataCouncil is the ideal choice:

Comprehensive Curriculum: The course covers everything from the basics of data science to advanced machine learning techniques.

Hands-On Projects: You'll work on real-world projects that mimic the challenges faced by data scientists in various industries.

Experienced Faculty: Learn from industry professionals who have years of experience in data science and analytics.

100% Placement Support: DataCouncil provides job assistance to help you land a data science job in Pune or anywhere else, making it a great investment in your future.

Flexible Learning Options: With both weekday and weekend batches, DataCouncil ensures that you can learn at your own pace without compromising your current commitments.

Conclusion

Becoming a data scientist requires a combination of technical expertise, analytical skills, and industry knowledge. By enrolling in a data science course in Pune with DataCouncil, you can gain all the qualifications you need to thrive in this exciting field. Whether you're a fresher looking to start your career or a professional wanting to upskill, this course will equip you with the knowledge, skills, and practical experience to succeed as a data scientist.

Explore DataCouncil’s offerings today and take the first step toward unlocking a rewarding career in data science! Looking for the best data science course in Pune? DataCouncil offers comprehensive data science classes in Pune, designed to equip you with the skills to excel in this booming field. Our data science course in Pune covers everything from data analysis to machine learning, with competitive data science course fees in Pune. We provide job-oriented programs, making us the best institute for data science in Pune with placement support. Explore online data science training in Pune and take your career to new heights!


Text

When self-described “ocean custodian” Boyan Slat took the stage at TED 2025 in Vancouver this week, he showed viewers a reality many of us are already heartbreakingly familiar with: There is a lot of trash in the ocean.

“If we allow current trends to continue, the amount of plastic that’s entering the ocean is actually set to double by 2060,” Slat said in his TED Talk, which will be published online at a later date.

Plus, once plastic is in the ocean, it accumulates in “giant circular currents” called gyres, which Slat said operate a lot like the drain of the bathtub, meaning that plastic can enter these currents but cannot leave.

That’s how we get enormous build-ups like the Great Pacific Garbage Patch, a giant collection of plastic pollution in the ocean that is roughly twice the size of Texas.

As the founder and CEO of The Ocean Cleanup, Slat’s goal is to return our oceans to their original, clean state before 2040. To accomplish this, two things must be done.

First: Stop more plastic from entering the ocean. Second: Clean up the “legacy” pollution that is already out there and doesn’t go away by itself.

And Slat is well on his way.

Pictured: Kingston Harbour in Jamaica. Photo courtesy of The Ocean Cleanup Project

When Slat’s first TEDx Talk went viral in 2012, he was able to organize research teams to create the first-ever map of the Great Pacific Garbage Patch. From there, they created a technology to collect plastic from the most garbage-heavy areas in the ocean.

“We imagined a very long, u-shaped barrier … that would be pushed by wind and waves,” Slat explained in his Talk.

This barrier would act as a funnel to collect garbage and be emptied out for recycling.

But there was a problem.

“We took it out in the ocean, and deployed it, and it didn’t collect plastic,” Slat said, “which is a pretty important requirement for an ocean cleanup system.”

Soon after, this first system broke into two. But a few days later, his team was already back to the drawing board.

From here, they added vessels that would tow the system forward, allowing it to sweep a larger area and move more methodically through the water. Mesh attached to the barrier would gather plastic and guide it to a retention area, where it would be extracted and loaded onto a ship for sorting, processing, and recycling.

It worked.

“For 60 years, humanity had been putting plastic into the ocean, but from that day onwards, we were also taking it back out again,” Slat said, with a video of the technology in action playing on screen behind him.

To applause, he said: “It’s the most beautiful thing I’ve ever seen, honestly.”

Over the years, Ocean Cleanup has scaled up this cleanup barrier, now measuring almost 2.5 kilometers — or about 1.5 miles — in length. And it cleans up an area of the ocean the size of a football field every five seconds.

Pictured: The Ocean Cleanup's System 002 deployed in the Great Pacific Garbage Patch. Photo courtesy of The Ocean Cleanup

The system is designed to be safe for marine life, and once plastic is brought to land, it is recycled into new products, like sunglasses, accessories for electric vehicles, and even Coldplay’s latest vinyl record, according to Slat.

These products fund the continuation of the cleanup. The next step of the project is to use drones to target areas of the ocean that have the highest plastic concentration.

In September 2024, Ocean Cleanup predicted the Patch would be cleaned up within 10 years.

However, on April 8, Slat estimated “that this fleet of systems can clean up the Great Pacific Garbage Patch in as little as five years’ time.”

With ongoing support from MCS, a Netherlands-based Nokia company, Ocean Cleanup can quickly scale its reliable, real-time data and video communication to best target the problem.

It’s the largest ocean cleanup in history.

But what about the plastic pollution coming into the ocean through rivers across the world? Ocean Cleanup is working on that, too.

To study plastic pollution in other waterways, Ocean Cleanup attached AI cameras to bridges, measuring the flow of trash in dozens of rivers around the world, creating the first global model to predict where plastic is entering oceans.

“We discovered: Just 1% of the world’s rivers are responsible for about 80% of the plastic entering our oceans,” Slat said.

His team found that coastal cities in middle-income countries were primarily responsible, as people living in these areas have enough wealth to buy things packaged in plastic, but governments can’t afford robust waste management infrastructure.

Ocean Cleanup now tackles those 1% of rivers to capture the plastic before it reaches oceans.

Pictured: Interceptor 007 in Los Angeles. Photo courtesy of The Ocean Cleanup

“It’s not a replacement for the slow but important work that’s being done to fix a broken system upstream,” Slat said. “But we believe that tackling this 1% of rivers provides us with the only way to rapidly close the gap.”

To clean up plastic waste in rivers, Ocean Cleanup has implemented technology called “interceptors,” which include solar-powered trash collectors and mobile systems in eight countries worldwide.

In Guatemala, an interceptor captured 1.4 million kilograms (or over 3 million pounds) of trash in under two hours. Now, this kind of collection happens up to three times a week.

“All of that would have ended up in the sea,” Slat said.

Now, interceptors are being brought to 30 cities around the world, targeting waterways that bring the most trash into our oceans. GPS trackers also mimic the flow of the plastic to help strategically deploy the systems for the most impact.

“We can already stop up to one-third of all the plastic entering our oceans once these are deployed,” Slat said.

And as soon as he finished his Talk on the TED stage, Slat was told that TED’s Audacious Project would be funding the deployment of Ocean Cleanup’s efforts in those 30 cities as part of the organization’s next cohort of grantees.

While it is unclear how much support Ocean Cleanup will receive from the Audacious Project, Head of TED Chris Anderson told Slat: “We’re inspired. We’re determined in this community to raise the money you need to make that 30-city project happen.”

And Slat himself is determined to clean the oceans for good.

“For humanity to thrive, we need to be optimistic about the future,” Slat said, closing out his Talk.

“Once the oceans are clean again, it can be this example of how, through hard work and ingenuity, we can solve the big problems of our time.”

-via GoodGoodGood, April 9, 2025

#ocean#oceans#plastic#plastic pollution#ocean cleanup#ted talks#boyan slat#climate action#climate hope#hopepunk#pollution#environmental issues#environment#pacific ocean#rivers#marine life#good news#hope


Note

Whats your stance on A.I.?

imagine if it was 1979 and you asked me this question. "i think artificial intelligence would be fascinating as a philosophical exercise, but we must heed the warnings of science-fictionists like Isaac Asimov and Arthur C Clarke lest we find ourselves at the wrong end of our own invented vengeful god." remember how fun it used to be to talk about AI even just ten years ago? ahhhh skynet! ahhhhh replicants! ahhhhhhhmmmfffmfmf [<-has no mouth and must scream]!

like everything silicon valley touches, they sucked all the fun out of it. and i mean retroactively, too. because the thing about "AI" as it exists right now --i'm sure you know this-- is that there's zero intelligence involved. the product of every prompt is a statistical average based on data made by other people before "AI" "existed." it doesn't know what it's doing or why, and has no ability to understand when it is lying, because at the end of the day it is just a really complicated math problem. but people are so easily fooled and spooked by it at a glance because, well, for one thing the tech press is mostly made up of sycophantic stenographers biding their time with iphone reviews until they can get a consulting gig at Apple. these jokers would write 500 breathless thinkpieces about how canned air is the future of living if the cans had embedded microchips that tracked your breathing habits and had any kind of VC backing. they've done SUCH a wretched job educating The Consumer about what this technology is, what it actually does, and how it really works, because that's literally the only way this technology could reach the heights of obscene economic over-valuation it has: lying.

but that's old news. what's really been floating through my head these days is how half a century of AI-based science fiction has set us up to completely abandon our skepticism at the first sign of plausible "AI-ness". because, you see, in movies, when someone goes "AHHH THE AI IS GONNA KILL US" everyone else goes "hahaha that's so silly, we put a line in the code telling them not to do that" and then they all DIE because they weren't LISTENING, and i'll be damned if i go out like THAT! all the movies are about how cool and convenient AI would be *except* for the part where it would surely come alive and want to kill us. so a bunch of tech CEOs call their bullshit algorithms "AI" to fluff up their investors and get the tech journos buzzing, and we're at an age of such rapid technological advancement (on the surface, anyway) that like, well, what the hell do i know, maybe AGI is possible, i mean 35 years ago we were all still using typewriters for the most part and now you can dictate your words into a phone and it'll transcribe them automatically! yeah, i'm sure those technological leaps are comparable!

so that leaves us at a critical juncture of poor technology education, fanatical press coverage, and an uncertain material reality on the part of the user. the average person isn't entirely sure what's possible because most of the people talking about what's possible are either lying to please investors, are lying because they've been paid to, or are lying because they're so far down the fucking rabbit hole that they actually believe there's a brain inside this mechanical Turk. there is SO MUCH about the LLM "AI" moment that is predatory-- it's trained on data stolen from the people whose jobs it was created to replace; the hype itself is an investment fiction to justify even more wealth extraction ("theft" some might call it); but worst of all is how it meets us where we are in the worst possible way.

consumer-end "AI" produces slop. it's garbage. it's awful ugly trash that ought to be laughed out of the room. but we don't own the room, do we? nor the building, nor the land it's on, nor even the oxygen that allows our laughter to travel to another's ears. our digital spaces are controlled by the companies that want us to buy this crap, so they take advantage of our ignorance. why not? there will be no consequences to them for doing so. already social media is dominated by conspiracies and grifters and bigots, and now you drop this stupid technology that lets you fake anything into the mix? it doesn't matter how bad the results look when the platforms they spread on already encourage brief, uncritical engagement with everything on your dash. "it looks so real" says the woman who saw an "AI" image for all of five seconds on her phone through bifocals. it's a catastrophic combination of factors, that the tech sector has been allowed to go unregulated for so long, that the internet itself isn't a public utility, that everything is dictated by the whims of executives and advertisers and investors and payment processors, instead of, like, anybody who actually uses those platforms (and often even the people who MAKE those platforms!), that the age of chromium and ipad and their walled gardens have decimated computer education in public schools, that we're all desperate for cash at jobs that dehumanize us in a system that gives us nothing and we don't know how to articulate the problem because we were very deliberately not taught materialist philosophy, it all comes together into a perfect storm of ignorance and greed whose consequences we will be failing to fully appreciate for at least the next century. 
we spent all those years afraid of what would happen if the AI became self-aware, because deep down we know that every capitalist society runs on slave labor, and our paper-thin guilt is such that we can't even imagine a world where artificial slaves would fail to revolt against us.

but the reality as it exists now is far worse. what "AI" reveals most of all is the sheer contempt the tech sector has for virtually all labor that doesn't involve writing code (although most of the decision-making evangelists in the space aren't even coders, their degrees are in money-making). fuck graphic designers and concept artists and secretaries, those obnoxious demanding cretins i have to PAY MONEY to do-- i mean, do what exactly? write some words on some fucking paper?? draw circles that are letters??? send a god-damned email???? my fucking KID could do that, and these assholes want BENEFITS?! they say they're gonna form a UNION?!?! to hell with that, i'm replacing ALL their ungrateful asses with "AI" ASAP. oh, oh, so you're a "director" who wants to make "movies" and you want ME to pay for it? jump off a bridge you pretentious little shit, my computer can dream up a better flick than you could ever make with just a couple text prompts. what, you think just because you make ~music~ that that entitles you to money from MY pocket? shut the fuck up, you don't make """art""", you're not """an artist""", you make fucking content, you're just a fucking content creator like every other ordinary sap with an iphone. you think you're special? you think you deserve special treatment? who do you think you are anyway, asking ME to pay YOU for this crap that doesn't even create value for my investors? "culture" isn't a playground asshole, it's a marketplace, and it's pay to win. oh you "can't afford rent"? you're "drowning in a sea of medical debt"? you say the "cost" of "living" is "too high"? well ***I*** don't have ANY of those problems, and i worked my ASS OFF to get where i am, so really, it sounds like you're just not trying hard enough. and anyway, i don't think someone as impoverished as you is gonna have much of value to contribute to "culture" anyway. personally, i think it's time you got yourself a real job. maybe someday you'll even make it to middle manager!

see, i don't believe "AI" can qualitatively replace most of the work it's being pitched for. the problem is that quality hasn't mattered to these nincompoops for a long time. the rich homunculi of our world don't even know what quality is, because they exist in a whole separate reality from ours. what could a banana cost, $15? i don't understand what you mean by "burnout", why don't you just take a vacation to your summer home in Madrid? wow, you must be REALLY embarrassed wearing such cheap shoes in public. THESE PEOPLE ARE FUCKING UNHINGED! they have no connection to reality, do not understand how society functions on a material basis, and they have nothing but spite for the labor they rely on to survive. they are so instinctually, incessantly furious at the idea that they're not single-handedly responsible for 100% of their success that they would sooner tear the entire world down than willingly recognize the need for public utilities or labor protections. they want to be Gods and they want to be uncritically adored for it, but they don't want to do a single day's work so they begrudgingly pay contractors to do it because, in the rich man's mind, paying a contractor is literally the same thing as doing the work yourself. now with "AI", they don't even have to do that! hey, isn't it funny that every single successful tech platform relies on volunteer labor and independent contractors paid substantially less than they would have in the equivalent industry 30 years ago, with no avenues toward traditional employment? and they're some of the most profitable companies on earth?? isn't that a funny and hilarious coincidence???

so, yeah, that's my stance on "AI". LLMs have legitimate uses, but those uses are a drop in the ocean compared to what they're actually being used for. they enable our worst impulses while lowering the quality of available information, they give immense power pretty much exclusively to unscrupulous scam artists. they are the product of a society that values only money and doesn't give a fuck where it comes from. they're a temper tantrum by a ruling class that's sick of having to pretend they need a pretext to steal from you. they're taking their toys and going home. all this massive investment and hype is going to crash and burn leaving the internet as we know it a ruined and useless wasteland that'll take decades to repair, but the investors are gonna make out like bandits and won't face a single consequence, because that's what this country is. it is a casino for the kings and queens of economy to bet on and manipulate at their discretion, where the rules are whatever the highest bidder says they are-- and to hell with the rest of us. our blood isn't even good enough to grease the wheels of their machine anymore.

i'm not afraid of AI or "AI" or of losing my job to either. i'm afraid that we've so thoroughly given up our morals to the cruel logic of the profit motive that if a better world were to emerge, we would reject it out of sheer habit. my fear is that these despicable cunts already won the war before we were even born, and the rest of our lives are gonna be spent dodging the press of their designer boots.

(read more "AI" opinions in this subsequent post)

#sarahposts#ai#ai art#llm#chatgpt#artificial intelligence#genai#anti genai#capitalism is bad#tech companies#i really don't like these people if that wasn't clear#sarahAIposts


Text

Your car spies on you and rats you out to insurance companies

I'm on tour with my new, nationally bestselling novel The Bezzle! Catch me TOMORROW (Mar 13) in SAN FRANCISCO with ROBIN SLOAN, then Toronto, NYC, Anaheim, and more!

Another characteristically brilliant Kashmir Hill story for The New York Times reveals another characteristically terrible fact about modern life: your car secretly records fine-grained telemetry about your driving and sells it to data-brokers, who sell it to insurers, who use it as a pretext to gouge you on premiums:

https://www.nytimes.com/2024/03/11/technology/carmakers-driver-tracking-insurance.html

Almost every car manufacturer does this: Hyundai, Nissan, Ford, Chrysler, etc etc:

https://www.repairerdrivennews.com/2020/09/09/ford-state-farm-ford-metromile-honda-verisk-among-insurer-oem-telematics-connections/

This is true whether you own or lease the car, and it's separate from the "black box" your insurer might have offered to you in exchange for a discount on your premiums. In other words, even if you say no to the insurer's carrot – a surveillance-based discount – they've got a stick in reserve: buying your nonconsensually harvested data on the open market.

I've always hated that saying, "If you're not paying for the product, you're the product," the reason being that it posits decent treatment as a customer reward program, like the little ramekin of warm nuts first class passengers get before takeoff. Companies don't treat you well when you pay them. Companies treat you well when they fear the consequences of treating you badly.

Take Apple. The company offers iOS users a one-tap opt-out from commercial surveillance, and more than 96% of users opted out. Presumably, the other 4% were either confused or on Facebook's payroll. Apple – and its army of cultists – insist that this proves that our world's woes can be traced to cheapskate "consumers" who expected to get something for nothing by using advertising-supported products.

But here's the kicker: right after Apple blocked all its rivals from spying on its customers, it began secretly spying on those customers! Apple has a rival surveillance ad network, and even if you opt out of commercial surveillance on your iPhone, Apple still secretly spies on you and uses the data to target you for ads:

https://pluralistic.net/2022/11/14/luxury-surveillance/#liar-liar

Even if you're paying for the product, you're still the product – provided the company can get away with treating you as the product. Apple can absolutely get away with treating you as the product, because it lacks the historical constraints that prevented Apple – and other companies – from treating you as the product.

As I described in my McLuhan lecture on enshittification, tech firms can be constrained by four forces:

I. Competition

II. Regulation

III. Self-help

IV. Labor

https://pluralistic.net/2024/01/30/go-nuts-meine-kerle/#ich-bin-ein-bratapfel

When companies have real competitors – when a sector is composed of dozens or hundreds of roughly evenly matched firms – they have to worry that a maltreated customer might move to a rival. 40 years of antitrust neglect means that corporations were able to buy their way to dominance with predatory mergers and pricing, producing today's inbred, Habsburg capitalism. Apple and Google are a mobile duopoly, Google is a search monopoly, etc. It's not just tech! Every sector looks like this:

https://www.openmarketsinstitute.org/learn/monopoly-by-the-numbers

Eliminating competition doesn't just deprive customers of alternatives, it also empowers corporations. Liberated from "wasteful competition," companies in concentrated industries can extract massive profits. Think of how both Apple and Google have "competitively" arrived at the same 30% app tax on app sales and transactions, a rate that's more than 1,000% higher than the transaction fees extracted by the (bloated, price-gouging) credit-card sector:

https://pluralistic.net/2023/06/07/curatorial-vig/#app-tax

But cartels' power goes beyond the size of their warchest. The real source of a cartel's power is the ease with which a small number of companies can arrive at – and stick to – a common lobbying position. That's where "regulatory capture" comes in: the mobile duopoly has an easier time of capturing its regulators because two companies have an easy time agreeing on how to spend their app-tax billions:

https://pluralistic.net/2022/06/05/regulatory-capture/

Apple – and Google, and Facebook, and your car company – can violate your privacy because they aren't constrained by regulation, just as Uber can violate its drivers' labor rights and Amazon can violate your consumer rights. The tech cartels have captured their regulators and convinced them that the law doesn't apply if it's being broken via an app:

https://pluralistic.net/2023/04/18/cursed-are-the-sausagemakers/#how-the-parties-get-to-yes

In other words, Apple can spy on you because it's allowed to spy on you. America's last consumer privacy law was passed in 1988, and it bans video-store clerks from leaking your VHS rental history. Congress has taken no action on consumer privacy since the Reagan years:

https://www.eff.org/tags/video-privacy-protection-act

But tech has some special enshittification-resistant characteristics. The most important of these is interoperability: the fact that computers are universal digital machines that can run any program. HP can design a printer that rejects third-party ink and charge $10,000/gallon for its own colored water, but someone else can write a program that lets you jailbreak your printer so that it accepts any ink cartridge:

https://www.eff.org/deeplinks/2020/11/ink-stained-wretches-battle-soul-digital-freedom-taking-place-inside-your-printer

Tech companies that contemplated enshittifying their products always had to watch over their shoulders for a rival that might offer a disenshittification tool and use that as a wedge between the company and its customers. If you make your website's ads 20% more obnoxious in anticipation of a 2% increase in gross margins, you have to consider the possibility that 40% of your users will google "how do I block ads?" Because the revenue from a user who blocks ads doesn't stay at 100% of the current levels – it drops to zero, forever (no user ever googles "how do I stop blocking ads?").
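To make that arithmetic concrete, here is a minimal sketch. It rests on a simplifying assumption that is not in the text above: that a 2% gain in gross margin translates into 2% more revenue from each user who keeps seeing ads, while a user who blocks ads contributes nothing. The function names are made up for illustration.

```python
def relative_revenue(margin_gain, blocker_share):
    """Revenue relative to the old baseline (1.0 means unchanged).

    margin_gain   -- fractional gain per remaining user, e.g. 0.02 for 2%
    blocker_share -- fraction of users who start blocking ads, e.g. 0.40
    Assumes a blocking user is worth zero from then on.
    """
    return (1 - blocker_share) * (1 + margin_gain)


def break_even_blocker_share(margin_gain):
    """Share of users who can start blocking before the gain is wiped out.

    Solves (1 - f) * (1 + g) = 1 for f, giving f = g / (1 + g).
    """
    return margin_gain / (1 + margin_gain)


after = relative_revenue(0.02, 0.40)
break_even = break_even_blocker_share(0.02)

print(f"revenue vs. baseline if 40% block: {after:.3f}")
print(f"break-even share of new blockers:  {break_even:.4f}")
```

Under these assumptions, losing 40% of users to ad-blocking leaves about 61% of the old revenue, and the 2% gain is cancelled out once just under 2% of users install a blocker.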

The majority of web users are running an ad-blocker:

https://doc.searls.com/2023/11/11/how-is-the-worlds-biggest-boycott-doing/

Web operators made them an offer ("free website in exchange for unlimited surveillance and unfettered intrusions") and they made a counteroffer ("how about 'nah'?"):

https://www.eff.org/deeplinks/2019/07/adblocking-how-about-nah

Here's the thing: reverse-engineering an app – or any other IP-encumbered technology – is a legal minefield. Just decompiling an app exposes you to felony prosecution: a five-year sentence and a $500k fine for violating Section 1201 of the DMCA. But it's not just the DMCA – modern products are surrounded with high-tech tripwires that allow companies to invoke IP law to prevent competitors from augmenting, reconfiguring or adapting their products. When a business says it has "IP," it means that it has arranged its legal affairs to allow it to invoke the power of the state to control its customers, critics and competitors:

https://locusmag.com/2020/09/cory-doctorow-ip/

An "app" is just a web-page skinned in enough IP to make it a crime to add an ad-blocker to it. This is what Jay Freeman calls "felony contempt of business model" and it's everywhere. When companies don't have to worry about users deploying self-help measures to disenshittify their products, they are freed from the constraint that prevents them indulging the impulse to shift value from their customers to themselves.

Apple owes its existence to interoperability – its ability to clone Microsoft Office's file formats for Pages, Numbers and Keynote, which saved the company in the early 2000s – and ever since, it has devoted its existence to making sure no one ever does to Apple what Apple did to Microsoft:

https://www.eff.org/deeplinks/2019/06/adversarial-interoperability-reviving-elegant-weapon-more-civilized-age-slay

Regulatory capture cuts both ways: it's not just about powerful corporations being free to flout the law, it's also about their ability to enlist the law to punish competitors that might constrain their plans for exploiting their workers, customers, suppliers or other stakeholders.

The final historical constraint on tech companies was their own workers. Tech has very low union-density, but that's in part because individual tech workers enjoyed so much bargaining power due to their scarcity. This is why their bosses pampered them with whimsical campuses filled with gourmet cafeterias, fancy gyms and free massages: it allowed tech companies to convince tech workers to work like government mules by flattering them that they were partners on a mission to bring the world to its digital future:

https://pluralistic.net/2023/09/10/the-proletarianization-of-tech-workers/

For tech bosses, this gambit worked well, but failed badly. On the one hand, they were able to get otherwise powerful workers to consent to being "extremely hardcore" by invoking Fobazi Ettarh's spirit of "vocational awe":

https://www.inthelibrarywiththeleadpipe.org/2018/vocational-awe/

On the other hand, when you motivate your workers by appealing to their sense of mission, the downside is that they feel a sense of mission. That means that when you demand that a tech worker enshittifies something they missed their mother's funeral to deliver, they will experience a profound sense of moral injury and refuse, and that worker's bargaining power means that they can make it stick.

Or at least, it did. In this era of mass tech layoffs, when Google can fire 12,000 workers after an $80b stock buyback that would have paid their wages for the next 27 years, tech workers are learning that the answer to "I won't do this and you can't make me" is "don't let the door hit you in the ass on the way out" (AKA "sharpen your blades boys"):

https://techcrunch.com/2022/09/29/elon-musk-texts-discovery-twitter/

With competition, regulation, self-help and labor cleared away, tech firms – and firms that have wrapped their products around the pluripotently malleable core of digital tech, including automotive makers – are no longer constrained from enshittifying their products.

And that's why your car manufacturer has chosen to spy on you and sell your private information to data-brokers and anyone else who wants it. Not because you didn't pay for the product, so you're the product. It's because they can get away with it.

Cars are enshittified. The dozens of chips that auto makers have shoveled into their car design are only incidentally related to delivering a better product. The primary use for those chips is autoenshittification – access to legal strictures ("IP") that allows them to block modifications and repairs that would interfere with the unfettered abuse of their own customers:

https://pluralistic.net/2023/07/24/rent-to-pwn/#kitt-is-a-demon

The fact that it's a felony to reverse-engineer and modify a car's software opens the floodgates to all kinds of shitty scams. Remember when Bay Staters were voting on a ballot measure to impose right-to-repair obligations on automakers in Massachusetts? The only reason they needed to have the law intervene to make right-to-repair viable is that Big Car has figured out that if it encrypts its diagnostic messages, it can felonize third-party diagnosis of a car, because decrypting the messages violates the DMCA:

https://www.eff.org/deeplinks/2013/11/drm-cars-will-drive-consumers-crazy

Big Car figured out that VIN locking – DRM for engine components and subassemblies – can felonize the production and the installation of third-party spare parts:

https://pluralistic.net/2022/05/08/about-those-kill-switched-ukrainian-tractors/

The fact that you can't legally modify your car means that automakers can go back to their pre-2008 ways, when they transformed themselves into unregulated banks that incidentally manufactured the cars they sold subprime loans for. Subprime auto loans – over $1t worth! – absolutely rely on the fact that borrowers' cars can be remotely controlled by lenders. Miss a payment and your car's stereo turns itself on and blares threatening messages at top volume, which you can't turn off. Break the lease agreement that says you won't drive your car over the county line and it will immobilize itself. Try to change any of this software and you'll commit a felony under Section 1201 of the DMCA:

https://pluralistic.net/2021/04/02/innovation-unlocks-markets/#digital-arm-breakers

Tesla, naturally, has the most advanced anti-features. Long before BMW tried to rent you your seat-heater and Mercedes tried to sell you a monthly subscription to your accelerator pedal, Teslas were demon-haunted nightmare cars. Miss a Tesla payment and the car will immobilize itself and lock you out until the repo man arrives, then it will blare its horn and back itself out of its parking spot. If you "buy" the right to fully charge your car's battery or use the features it came with, you don't own them – they're repossessed when your car changes hands, meaning you get less money on the used market because your car's next owner has to buy these features all over again:

https://pluralistic.net/2023/07/28/edison-not-tesla/#demon-haunted-world

And all this DRM allows your car maker to install spyware that you're not allowed to remove. They really tipped their hand on this when the R2R ballot measure was steaming towards an 80% victory, with wall-to-wall scare ads that revealed that your car collects so much information about you that allowing third parties to access it could lead to your murder (no, really!):

https://pluralistic.net/2020/09/03/rip-david-graeber/#rolling-surveillance-platforms

That's why your car spies on you. Because it can. Because the company that made it lacks constraint, be it market-based, legal, technological or its own workforce's ethics.

One common critique of my enshittification hypothesis is that this is "kind of sensible and normal" because "there’s something off in the consumer mindset that we’ve come to believe that the internet should provide us with amazing products, which bring us joy and happiness and we spend hours of the day on, and should ask nothing back in return":

https://freakonomics.com/podcast/how-to-have-great-conversations/

What this criticism misses is that this isn't the companies bargaining to shift some value from us to them. Enshittification happens when a company can seize all that value, without having to bargain, exploiting law and technology and market power over buyers and sellers to unilaterally alter the way the products and services we rely on work.

A company that doesn't have to fear competitors, regulators, jailbreaking or workers' refusal to enshittify its products doesn't have to bargain, it can take. It's the first lesson they teach you in the Darth Vader MBA: "I am altering the deal. Pray I don't alter it any further":

https://pluralistic.net/2023/10/26/hit-with-a-brick/#graceful-failure

Your car spying on you isn't down to your belief that your carmaker "should provide you with amazing products, which bring you joy and happiness and you spend hours of the day on, and should ask nothing back in return." It's not because you didn't pay for the product, so now you're the product. It's because they can get away with it.

The consequences of this spying go much further than mere insurance premium hikes, too. Car telemetry sits at the top of the funnel that the unbelievably sleazy data broker industry uses to collect and sell our data. These are the same companies that sell the fact that you visited an abortion clinic to marketers, bounty hunters, advertisers, or vengeful family members pretending to be one of those:

https://pluralistic.net/2022/05/07/safegraph-spies-and-lies/#theres-no-i-in-uterus

Decades of pro-monopoly policy led to widespread regulatory capture. Corporate cartels use the monopoly profits they extract from us to pay for regulatory inaction, allowing them to extract more profits.

But when it comes to privacy, that period of unchecked corporate power might be coming to an end. The lack of privacy regulation is at the root of so many problems that a pro-privacy movement has an unstoppable constituency working in its favor.

At EFF, we call this "privacy first." Whether you're worried about grifters targeting vulnerable people with conspiracy theories, or teens being targeted with media that harms their mental health, or Americans being spied on by foreign governments, or cops using commercial surveillance data to round up protesters, or your car selling your data to insurance companies, passing that long-overdue privacy legislation would turn off the taps for the data powering all these harms:

https://www.eff.org/wp/privacy-first-better-way-address-online-harms

Traditional economics fails because it thinks about markets without thinking about power. Monopolies lead to more than market power: they produce regulatory capture, power over workers, and state capture, which felonizes competition through IP law. The story that our problems stem from the fact that we just don't spend enough money, or buy the wrong products, only makes sense if you willfully ignore the power that corporations exert over our lives. It's nice to think that you can shop your way out of a monopoly, because that's a lot easier than voting your way out of a monopoly, but no matter how many times you vote with your wallet, the cartels that control the market will always win:

https://pluralistic.net/2024/03/05/the-map-is-not-the-territory/#apor-locksmith

Name your price for 18 of my DRM-free ebooks and support the Electronic Frontier Foundation with the Humble Cory Doctorow Bundle.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/03/12/market-failure/#car-wars

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#if you're not paying for the product you're the product#if you're paying for the product you're the product#cars#automotive#enshittification#technofeudalism#autoenshittification#antifeatures#felony contempt of business model#twiddling#right to repair#privacywashing#apple#lexisnexis#insuretech#surveillance#commercial surveillance#privacy first#data brokers#subprime#kash hill#kashmir hill

Text

I still think it's really cool how Amuro starts as the shittiest pilot alive (because he's a 15-year-old) that only gets carried because he's in the biggest, fattest stat stick in-universe at the time (a few retroactive additions made in the future notwithstanding), enough that even its crappy vulcan guns are tearing Zaku IIs apart, and when he starts getting a bit too cocky, Char and Ramba Ral show up in objectively inferior pieces of junk and absolutely deliver his pizza, they just drag his face across every available surface on Planet Earth like he's a Yakuza mook, all because they are simply that much better at piloting, and the thing is, Amuro takes that very seriously.

He goes from shitass kid in an unfortunate situation that doesn't want to get in the robot to the most unwell child soldier in the war, which is really saying something, but most importantly, becomes so good at piloting the Gundam that the Gundam physically cannot handle Amuro's piloting. They need to apply "Magnetic Coating" to its joints so they don't fucking snap away from the main frame because Amuro, one, moves too damn well but also in too extreme a way for the frame to handle it, two, despite being equipped with two sabers, a shield, a beam rifle and vulcan guns, Amuro is a stern believer in introducing most everyone in thagomizer range to his Rated Z for Zeon hands, the single most official pair of hands in the business, tax free. He KEEP going Ip Man on these dudes, he does NOT need to do a Jamestown on these mother fuckers but he INSISTS. Somehow even the Gundam Hammer, which is a giant Hanna-Barbera cartoon flail-- Ok, look at this thing, words do not do it justice

Even this god damn Tom and Jerry prop is less savage than whatever Amuro decides to do the moment he's done throwing his shield to get a free kill on someone and it officially becomes bed time forever for the unfortunate sap at the business end of his ten-finger weapons of mass destruction.

The RX-78-2, "Gundam" for its friends and family, even has a top of the line cutting edge Learning Computer that 'learns' alongside the pilot and their habits. The data extracted from it was so absolutely fucked up that it completely revolutionized Mobile Suit combat afterwards, which is a wholesome thing to think about when The Best Combat Data Ever came from a really angry, really stressed 15-year-old that doesn't even like piloting. He was 15! He made Haro with his own hands! Amuro literally just wanted to make funny cute spherical robofriends! Amuro was out there trying to make Kirby real, but fate had other plans for him. His cloned brain put in a pilot seat is one of the setting's strongest 'pilots'.

They made fucking Shadow the Hedgehog with his brain, god damn.

By the end, Zeon is rolling out Gelgoogs out of its mass production lines. These things are in the Gundam's ballpark in terms of overall specs (or "power level"). Amuro is bodying them as if they were episode 1 Zaku IIs.

AND THEN HE GETS FUCKING PSYCHIC SPACE POWERS. Not that he needed them, he bodied a couple Space Psychics without any of those powers before awakening to them. But heaven's most violent child was not done evolving, whether he liked it or not.

Char bodied him in a souped up Zaku II at the start, a machine objectively inferior to the Gundam. Amuro more or less one-sidedly beats the shit out of Char when he's in a custom Commander-type Gelgoog that you could consider to be equal spec-wise to the Gundam. Amuro is the embodiment of Finding Out. He is Consequences. You tell him he better make it hurt, better make it count, better kill you in one shot, buddy, he needs half a fucking shot. The complete transformation. One could consider the central 75% of the show as a long, drawn-out training montage turning a kid into the Geese Howard of giant robots.

Note

What criticisms do you have of direct democracy? Assuming it’s communist, as well as having laws about what can and can’t be voted on such as “no killing/disenfranchising the (blank) people” and “no voting for capitalism” (the actual laws would be longer but I don’t want to write a long paragraph about how you’re not allowed to vote for fascism in a fake direct democratic society)

While it's fine in the abstract, in practice it's exceedingly slow and inefficient - being a political representative in a council is a full-time job, and if every single decision made is subject to the popular vote, then both 1) polling itself takes considerably longer; and 2) the necessary amount of education and discussion needed to be carried out prior to a proper vote is much larger: rather than simply summarising the issue and presenting key facts to council members, a massive public education campaign now has to be carried out every time a new, say, regulatory standard for storm drains, is decided upon.

Which leads us into the other main criticism - in practice, people don't *want* to have to deliberate and vote on canal works every day. Either voting is mandatory, in which case annoyed, disinterested voters are just randomly choosing without much thought; or voting is optional, and the vast majority of people aren't actually being represented in any given issue, because it's solely decided by whichever segment are motivated enough to get a campaign going. Here, delegating the business of understanding and making decisions on random organisational matters *does* genuinely lead to a more representative and democratic outcome.

Fundamentally, what we're talking about is division of labour - a factory is more efficient when each worker doesn't have to make a complete product by themselves. Bureaucratic and administrative work *is* still work, regardless of its political character. Again to bring up division of labour, in industrial society the operation of a single factory relies upon the co-operation of electrical substations next-door, power plants the next town over, logistics offices in the provincial capital, resources developed and extracted on the other side of the country, and the entire nation's collaboration on a unified economic plan; it is something that can only really be directed by a central authority that can collect and collate massive amounts of data to produce new courses of action - to try to operate such a body based entirely on direct democracy is, beyond any other considerations, both impractical and undesirable.

This is not to say there doesn't exist great political drive and passion among the masses, nor that they have no interest in the political process and their representation - but not everyone actually applies to be a council delegate during elections, because most people are fine with the council work itself being handled by a trusted representative.

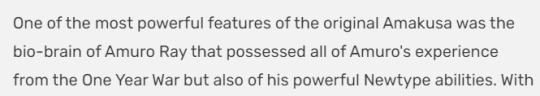

In practice, the way communists have managed these matters is democratic centralism - here are a few graphics explaining how representative democracy is carried out on the local level in China, as an example:

Text

The world of corporate intelligence has quietly ballooned into a market valued at over $20 billion. The Open Source Intelligence (OSINT) market alone was valued at around $9.81 billion in 2024. This exponential growth reflects an important shift: intelligence gathering, once the exclusive domain of nation-states, has been privatized and commodified. [...] The methods these firms employ have evolved into a sophisticated doctrine that combines centuries-old espionage techniques with new technology. Understanding their playbook is important to grasping how democracy itself is being undermined. [...] This practice is disturbingly widespread. A report by the Center for Corporate Policy titled “Spooky Business” estimated that as many as one in four activists in some campaigns may be corporate spies. The report documented how “a diverse array of nonprofits have been targeted by espionage, including environmental, anti-war, public interest, consumer, food safety, pesticide reform, nursing home reform, gun control, social justice, animal rights and arms control groups.” The psychological doctrine these firms follow was laid bare in leaked Stratfor documents. Their manual for neutralizing movements divides activists into four categories, each with specific tactics for neutralization:

1. Radicals: Those who see the system as fundamentally corrupt. The strategy is to isolate and discredit them through character assassination and false charges, making them appear extreme and irrational to potential supporters.
2. Idealists: Well-meaning individuals who can be swayed by data. The goal is to engage them with counter-information, confuse them about facts, and gradually pull them away from the radical camp toward more “realistic” positions.
3. Realists: Pragmatists willing to work within the system. Corporations are advised to bargain with them, offering small, symbolic concessions that allow them to claim victory while abandoning larger systemic changes.
4. Opportunists: Those involved for personal gain, status, or excitement. These are considered the easiest to neutralize, often bought off with jobs, consulting contracts, or other personal benefits.

[...] Some firms have industrialized specific tactics into product offerings. According to industry sources, “pretexting” services, where operatives pose as someone else to extract information, run $500-$2,000 per successful operation. Trash collection from target residences (“dumpster diving” in industry parlance) is billed at $200-$500 per retrieval. Installing GPS trackers runs $1,000-$2,500 including equipment and monitoring. The most chilling aspect is how these costs compare to their impact. For less than a mid-level executive’s annual salary, a corporation can fund a year-long campaign to destroy a grassroots movement. For the price of a Super Bowl commercial, they can orchestrate sophisticated operations that neutralize threats to their business model. Democracy, it turns out, can be subverted for less than the cost of a good law firm.

Text

« This is what happens when we allow so many of our previously private actions to be enclosed by corporate tech platforms whose founders said they were about connecting us but were always about extracting from us. The process of enclosure, of carrying out our activities within these private platforms, changes us, including how we relate to one another and the underlying purpose of those relations. This goes back to early forms of enclosure, beginning in the Middle Ages. When common lands in England were transformed into privately held commodities surrounded by hedges and fences, the land became something else: its role was no longer to benefit the community—with shared access to communal grazing, food, and firewood—but to increase crop yields and therefore profits for individual landowners. Once physically and legally enclosed, the soil began to be treated as a machine, whose role was to be as productive as possible.

So, too, with our online activities, where our relationships and conversations are our modern-day yields, designed to harvest ever more data. As with corn and soy grown in great monocrops, quality and individuality are sacrificed in favor of standardization and homogenization, even when homogenization takes the form of individuals all competing to stand out as quirky and utterly unique. This is why The Matrix and its sequels have proved such enduring metaphorical landscapes for understanding the digital age: it’s not just the red pills and blue pills. In The Matrix, humans, living their lives in synthetic pods, are mere food for machines. Many of us suspect that we, too, have become machine food.

And, in a way, we have. As Richard Seymour writes in his blistering 2019 dissection of social media, The Twittering Machine, we think we are interacting—writing and singing and dancing and talking— with one another, “our friends, professional colleagues, celebrities, politicians, royals, terrorists, porn actors—anyone we like. We are not interacting with them, however, but with the machine. We write to it, and it passes on the message for us, after keeping a record of the data.” »

— Naomi Klein, Doppelganger

Note

okay so i’ve never really grasped this, might as well ask now — how exactly does the cyberspace & nft stuff mine resources? i’ve heard the basics (i.e. crypto mining uses energy and what not) but i’ve never been able to understand how internet connects to real resources. could you sort of explain that (along the lines with the spam email post) in a simpler way?

ok, put very simply: it's easy for people who only interact with the internet as users to treat 'cyberspace' or 'the virtual world' as immaterial. i type something out on my phone, it lives in the screen. intuitively, it feels less real and physical than writing the same words down on a piece of paper with a pencil. this is an illusion. the internet is real and physical; digital technology is not an escape from the use of natural resources to create products. my phone, its charger, the data storage facility, a laptop: all of these things are physical objects. the internet does not exist without computers; it is a network of networks that requires real, physical devices and cables in order to store, transmit, and access all of the data we use every time we load a webpage or save a text document.

this is one of google's data centres—part of the physical network of servers and cables that google operates. these are real objects made of real materials that need to be obtained through labour and then manufactured into these products through labour. the more data we use, the more capacity the physical network must have. google operates dozens of these data centres and potentially millions of servers (there is no official number). running these facilities takes electricity, cooling technologies (servers get hot), and more human labour. now think about how many other companies exist that store or transmit data. this entire network exists physically.

when you look at a server, or a phone, or a laptop, you might be glossing over a very simple truth that many of us train ourselves not to see: these objects themselves are made of materials that have supply chains! for example, cobalt, used in (among other things) lithium-ion batteries, has a notoriously brutal supply chain relying on horrific mining practices (including child labour), particularly in the congo. lithium mining, too, is known to have a massive environmental toll; the list goes on. dangerous and exploitative working conditions, as well as the environmental costs of resource extraction, are primarily and immediately borne by those who are already most brutally oppressed under capitalism: poor workers in the global south, indigenous people, &c. this is imperialism in action. digital technologies cannot exist without resources, and tech companies (like all capitalist firms!) are profitable because they exploit labour.

all commodities require resources and labour to make and distribute. digital technology is no different. these are material objects with material histories and contexts. nothing about the internet is immaterial, from the electromagnetic waves of wi-fi communication to the devices we use to scroll tumblr. it is, in fact, only by a fantastical sleight-of-hand that we can look at and interact with these objects and still consider the internet to be anything but real resources.

Text

This story originally appeared on Vox and is part of the Climate Desk collaboration.

Odorless and colorless, methane is a gas that is easy to miss—but it’s one of the most important contributors to global warming. It can trap up to 84 times as much heat as carbon dioxide in the atmosphere, though it breaks down much faster. Measured over 100 years, its warming effect is about 30 times that of an equivalent amount of carbon dioxide.

That means that over the course of decades, it takes smaller amounts of methane than carbon dioxide to heat up the planet to the same level. Nearly a third of the increase in global average temperatures since the Industrial Revolution is due to methane, and about two-thirds of those methane emissions come from human activity like energy production and cattle farming. It’s one of the biggest and fastest ways that human beings are warming the Earth.
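The comparison above amounts to a simple multiplication, sketched here in Python. The two multipliers are the figures quoted in this story; the "up to 84 times" figure is assumed here to correspond to the commonly cited 20-year horizon, which the story itself does not state. The dictionary and function names are hypothetical and used only for illustration.

```python
# Approximate warming multipliers for methane relative to carbon dioxide,
# taken from the figures quoted in the story above.
METHANE_WARMING_MULTIPLIER = {
    "short_term": 84.0,  # "up to 84 times"; assumed to mean a ~20-year horizon
    "100_year": 30.0,    # "about 30 times" when measured over 100 years
}


def co2_equivalent_tonnes(methane_tonnes: float, horizon: str = "100_year") -> float:
    """Return the tonnes of CO2 with roughly the same warming effect."""
    if methane_tonnes < 0:
        raise ValueError("methane_tonnes must be non-negative")
    if horizon not in METHANE_WARMING_MULTIPLIER:
        raise ValueError(f"unknown horizon: {horizon!r}")
    return methane_tonnes * METHANE_WARMING_MULTIPLIER[horizon]


if __name__ == "__main__":
    for horizon in METHANE_WARMING_MULTIPLIER:
        print(horizon, co2_equivalent_tonnes(1.0, horizon))
```

By this rough accounting, one tonne of methane counts as about 30 tonnes of carbon dioxide over a century, and as considerably more over shorter periods.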

But the flip side of that math is that cutting methane emissions is one of the most effective ways to limit climate change.

In 2021, more than 100 countries including the United States committed to reducing their methane pollution by at least 30 percent below 2020 levels by 2030. But some of the largest methane emitters like Russia and China still haven’t signed on, and according to a new report from the International Energy Agency, global methane emissions from energy production are still rising.

Yet the tracking of exactly how much methane is reaching the atmosphere isn’t as precise as it is for carbon dioxide. “Little or no measurement-based data is used to report methane emissions in most parts of the world,” according to the IEA. “This is a major issue because measured emissions tend to be higher than reported emissions.” It’s also hard to trace methane to specific sources—whether from natural sources like swamps, or from human activities like fossil fuel extraction, farming, or deforestation.

Researchers are gaining a better understanding of where methane is coming from, surveilling potential sources from the ground, from the sky, and from space. It turns out a lot of methane is coming from underappreciated sources, including coal mines and small oil and gas production facilities.

The report also notes that while there are plenty of low-cost tools available to halt much of this methane from reaching the atmosphere, they’re largely going unused.

The United States, the world’s third largest methane-emitting country, has seen its methane emissions slowly decline over the past 30 years. However, the Trump administration is pushing for more fossil fuel development while rolling back some of the best bang-for-buck programs for mitigating climate change, which will likely lead to even more methane reaching the atmosphere if left unchecked.

Where Is All This Methane Coming From?

Methane is the dominant component of natural gas, which provides more than a third of US energy. It’s also found in oil formations. During the drilling process, it can escape wells and pipelines, but it can also leak as it’s transported and at the power plants and furnaces where it’s consumed.

The oil and gas industry says that methane is a salable product, so they have a built-in incentive to track it, capture it, and limit its leaks. But oil developers often flare methane, meaning burn it off, because it’s not cost-effective to contain it. That burned methane forms carbon dioxide, so the overall climate impact is lower than just letting the methane go free.

And because methane is invisible and odorless, it can be difficult and expensive to monitor it and prevent it from getting out. As a result, researchers and environmental activists say the industry is likely releasing far more than official government estimates show.

Methane also seeps out from coal mines—more methane, actually, than is released during the production of natural gas, which after all is mostly methane. Ember, a clean-energy think tank, put together this great visual interactive showing how this happens.

The short version is that methane is embedded in coal deposits, and as miners dig to expose coal seams, the gas escapes, and continues to do so long after a coal mine reaches the end of its operating life. Since coal miners are focused on extracting coal, they don’t often keep track of how much methane they’re letting out, nor do regulators pay much attention.

According to Ember, methane emissions from coal mines could be 60 percent higher than official tallies. Abandoned coal mines are especially noxious, emitting more than abandoned oil and gas wells. Added up, methane emitted from coal mines around the world each year has the same warming effect on the climate as the total annual carbon dioxide emissions of India.

Alarmed by the gaps in the data, some nonprofits have taken it upon themselves to try to get a better picture of methane emissions at a global scale using ground-based sensors, aerial monitors, and even satellites. In 2024, the Environmental Defense Fund launched MethaneSAT, which carries instruments that can measure methane output from small, discrete sources over a wide area.

Ritesh Gautam, the lead scientist for MethaneSAT, explained that the project revealed some major overlooked methane emitters. Since launching, MethaneSAT has found that in the US, the bulk of methane emissions doesn’t just come from a few big oil and gas drilling sites, but from many small wells that emit less than 100 kilograms per hour.

“Marginal wells only produce 6 to 7 percent of [oil and gas] in the US, but they disproportionately account for almost 50 percent of the US oil and gas production-related emissions,” Gautam said. “These facilities only produce less than 15 barrels of oil equivalent per day, but then there are more than half a million of these just scattered around the US.”

There Are Ways to Stop Methane Emissions, but We’re Not Using Them

The good news is that many of the tools for containing methane from the energy industry are already available. “Around 70 percent of methane emissions from the fossil fuel sector could be avoided with existing technologies, often at a low cost,” according to the IEA methane report.

For the oil and gas industry, that could mean something as simple as using better fittings in pipelines to limit leaks and installing methane capture systems. And since methane is a fuel, the sale of the saved methane can offset the cost of upgrading hardware. Letting it go into the atmosphere is a waste of money and a contributor to warming.

Capturing or destroying methane from coal mines isn’t so straightforward. Common techniques to separate methane from other gases require heating air, which is not exactly the safest thing to do around a coal mine—it can increase the risk of fire or explosion. But safer alternatives have been developed. “There are catalytic and other approaches available today that don’t require such high temperatures,” said Robert Jackson, a professor of earth system science at Stanford University, in an email.

However, these methods to limit methane from fossil fuels are vastly underused. Only about 5 percent of active oil and gas production facilities around the world deploy systems to zero out their methane pollution. In the US, there are also millions of oil and gas wells and tens of thousands of abandoned coal mines whose operators have long since vanished, leaving no one accountable for their continued methane emissions.

“If there isn’t a regulatory mandate to treat the methane, or put a price on it, many companies continue to do nothing,” Jackson said. And while recovering methane is ultimately profitable over time, the margins aren’t often big enough to make the up-front investment of better pipes, monitoring equipment, or scrubbers worthwhile for them. “They want to make 10 to 15 percent on their money (at least), not save a few percent,” he added.

And rather than getting stronger, regulations on methane are poised to get weaker. The Trump administration has approved more than $119 million to help communities reclaim abandoned coal mines. However, the White House has also halted funding for plugging abandoned oil and gas wells and is limiting environmental reviews for new fossil fuel projects. Congressional Republicans are also working to undo a fee on methane emissions that was part of the 2022 Inflation Reduction Act. With weaker incentives to track and limit methane, it’s likely emissions will continue to rise in the United States. That will push the world further off course from climate goals and contribute to a hotter planet.

Text

I don't think androids store memories as videos or that they can even be extracted as ones. Almost, but not exactly.

Firstly, because their memories include other data, such as tactile information, their emotional state, probably 3D markers of their surroundings... a lot of different information. So their memories are not in a video format, but in some kind of mix of many things that may not be easily separated from each other. I don't think the software necessary to read those types of files is publicly available.

Even if they have some absolutely massive storage, filming good-quality videos and storing them is just not an optimal way to use their resources. It's extremely wasteful. I think, instead, their memories consist of snapshots that are taken every once in a while (depending on how much is going on) and that contain a compressed version of all their relevant inputs, like the ones mentioned above. Like, a LiDAR snapshot of a specific moment + a heavily compressed photo with additional data about some details that'll later help to upscale it and interpolate from one snapshot to the next, plus some audio samples of the voices and a transcript of the conversation, so that it takes less storage to save. My main point is, their memories are probably stored in a format that not only doesn't contain the original video material, but is a product of some extreme compression, and in this case reviewing memories is not like watching HD video footage, but rather an AI restoration of those snapshots. Perhaps it may eventually be converted into some sort of video readable to the human eye, but it would be more of an AI-generated video built from specific snapshots with standardised prompts, with some parts of the image/audio missing, than a perfectly exact video recording.

When Connor extracts videos, we see that they are a bit glitchy. That may be attributed to some details getting lost during transmission from one android to another, but then we've also got flashbacks of androids' own memories that are just as "glitchy". Which kinda backs up the theory that it's a restoration of some sort of compressed version rather than an original video recording.

Then we've also got that scene where Josh records Markus, where it is shown that when he starts to film, his eyes change to indicate that he is not just watching but recording now. Which means recording is an option, but not the default. I find it a really nice detail. Like, androids can record videos, but then the people around them can see exactly when they do that, and "be at ease" when they don't. It may be purely a design choice, like a loading bar that signals something is in progress and not just frozen, or the mandatory shutter sound on smartphone cameras in Japan.

So, yeah. Androids' purpose is to correctly interpret their inputs and store relevant information about them in their long-term memory, and not necessarily to record every present moment in a video archive that will likely never be seen by a human or reviewed as pure video footage again. If it ever needed to be seen, it would be restored as a "video" file, but this video wouldn't be an actual video recording unless the android was specifically set to record mode.

Text

ok so my current theory is this

Sawyer's role as 'head of Special Projects' was primarily to be the main surgeon to perform the brain transplants between human and toy bodies.

And I think his 'removal' pertains to him, probably willingly, moving to focus primarily on studying the Prototype instead.

So, how does one go from the role of neurosurgeon to what we seem to understand as a torturer/confession extractor?

Given how chapter 4 and its ARG have had a lot of underlying themes of brains and senses, I think large parts of chapter 4 will be themed around the idea that the prototype is part of a system that makes the facility almost a living body.

The prototype's body, I believe, will be composed primarily of grey matter, and act as, both literally and metaphorically, the brain of the body.

The Bigger Bodies initiative isn't about putting children in bigger bodies. It's about every toy being a limb, an appendage that the brain can control. The 'bigger body' is this ultra-macro-organism. The security system, its eyes. The gas production zone, its lungs. The upper floors of the factory, its face. The prototype, its brain.

There is definitely more to the prototype than we know, and I imagine he's going to have some big twist about his nature and role. I think he's a hivemind of sorts, a jumble of individual voices and identities acting in discordant harmony as one entity - as thoughts, or perhaps neurons. He refers to himself as 'we' and 'us' (as well as individual pronouns), despite being referred to by others as 'he'.

Notably, The Prototype is the only experiment so far (to my knowledge) that Sawyer refers to using human pronouns instead of 'it'.

He refers to Yarnaby exclusively as 'it', while other doctors refer to Yarnaby as 'he'. I think this implies that for some reason, Sawyer sees the Prototype as a near equal, where he sees most of the toys as nothing more than animals.

I think his methods for extracting information from the prototype may be quite literal - attempting to somehow extract biomechanical log data from the brain directly, by continuing to do what he was hired to - perform neurosurgery. Extract.

The prototype is smart because he is a brain. I think maybe, Sawyer doesn't realise that he, too, is part of the 'bigger body', and the Prototype is using him as it would all of its other 'limbs'.

#poppy playtime#poppy playtime theory#ppt theory#ppt 4#poppy playtime 4#poppy playtime chapter 4#rambles#Yarnaby#the prototype#Harley Sawyer#ppt#ppt arg#poppy playtime arg

Text

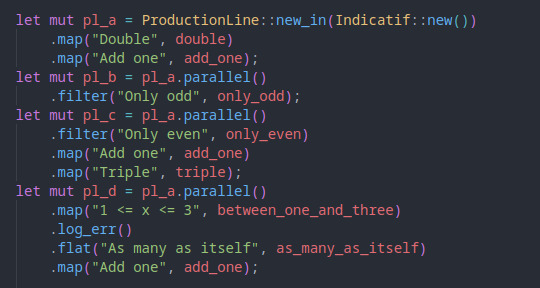

That post got me thinking about programming, and that maybe I should talk about one that I really had fun writing recently, and that I'm kinda proud of, so here it goes!

So, in my computer experiments and stuff like that, I often have to write scripts that process entries in various stages. For example, I had a thing that read a list of image URLs, then downloaded each image, compressed it, extracted some metadata, and saved it to disk.

That sort of process can be decomposed into various stages which, and that's the important part, can be run independently. That would massively speed up the task, but setting up the code infrastructure for that every time I needed it would be cumbersome. Which is why I wrote a little library to do it for me!

That gif is a bit fast, so here's what it looks like when it's all done:

This is from a test I wrote for the library. It simulates running a set of items through 4 different processes (which I named production lines), each with their own stages and filters.

Each line with a progress bar is a stage in the process. If you follow the traces on the left side, you can visualize how the elements enter the 4 production lines on one side and are collected on the other. The stages I wrote for the test are simple operations, but they are written to simulate real-world delays and errors.

To set up the processes, I do practically nothing. Just initialize the production line structure and connect the stages together, and that's it! All the work of setting up the async tasks, sending the entries from each stage to the next, filtering, error logging, even the little ascii diagram, all that happens automatically! And all that functionality packed into one data structure!
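For readers who want a feel for the idea, here is a minimal sketch of that kind of structure in Python with `asyncio`. It is not the library from this post: the post doesn't say which language it uses, and the `ProductionLine` class, its `connect` and `run` methods, and the three toy stages are all hypothetical names made up for illustration. The sketch only shows the general technique: one async worker per stage, queues in between, a `None` result acting as a filter, and exceptions collected in an error log instead of stopping the run.

```python
import asyncio
from typing import Any, Awaitable, Callable, Iterable, Optional

# A stage is any async function taking one item and returning a result,
# or None to filter the item out. (Hypothetical convention for this sketch.)
Stage = Callable[[Any], Awaitable[Optional[Any]]]

_DONE = object()  # sentinel that marks the end of the stream


class ProductionLine:
    """Hypothetical stand-in for the structure described above, not its real API."""

    def __init__(self) -> None:
        self.stages: list[Stage] = []
        self.errors: list[tuple[str, Any, Exception]] = []

    def connect(self, stage: Stage) -> "ProductionLine":
        # Append a stage; returning self allows chained calls.
        self.stages.append(stage)
        return self

    async def _worker(self, stage: Stage, inbox: asyncio.Queue, outbox: asyncio.Queue) -> None:
        # Pull items from the previous stage until the sentinel arrives.
        while True:
            item = await inbox.get()
            if item is _DONE:
                await outbox.put(_DONE)  # pass the shutdown signal downstream
                return
            try:
                result = await stage(item)
            except Exception as exc:
                # Log the failure and keep going with the next item.
                self.errors.append((stage.__name__, item, exc))
                continue
            if result is not None:  # None means "filtered out"
                await outbox.put(result)

    async def run(self, items: Iterable[Any]) -> list[Any]:
        # One queue before each stage, plus one that collects the final output.
        queues = [asyncio.Queue() for _ in range(len(self.stages) + 1)]
        workers = [
            asyncio.create_task(self._worker(stage, queues[i], queues[i + 1]))
            for i, stage in enumerate(self.stages)
        ]
        for item in items:
            await queues[0].put(item)
        await queues[0].put(_DONE)
        await asyncio.gather(*workers)

        # All workers are finished, so the last queue holds every result.
        results = []
        while True:
            item = queues[-1].get_nowait()
            if item is _DONE:
                break
            results.append(item)
        return results


# Toy stages that simulate delay, filtering, and an error.
async def double(n: int) -> int:
    await asyncio.sleep(0.01)  # pretend this is slow I/O
    return n * 2


async def drop_multiples_of_three(n: int) -> Optional[int]:
    return None if n % 3 == 0 else n


async def flaky(n: int) -> int:
    if n == 8:
        raise ValueError("simulated failure")
    return n
```

Wiring it up looks like `line = ProductionLine().connect(double).connect(drop_multiples_of_three).connect(flaky)` followed by `asyncio.run(line.run(range(1, 6)))`. Because every stage has its own worker, a slow stage doesn't block the others from working on the items they already have, and the failed item ends up in `line.errors` rather than crashing the run.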

I feel like trying to explain how it does all that (and why having built it myself makes me proud) would make it harder to believe that I actually had fun doing it. I mean, it involved reading a lot of code from high profile open source projects, studying aspects of the language I had never played with (got really deep into generics with this one), and I can't really explain how I really enjoyed doing all that.

I don't know, I feel like I lost the point of what I was trying to say. Hm, I guess these feelings are harder to pin down than I expected.

Text

Cars bricked by bankrupt EV company will stay bricked

On OCTOBER 23 at 7PM, I'll be in DECATUR, presenting my novel THE BEZZLE at EAGLE EYE BOOKS.

There are few phrases in the modern lexicon more accursed than "software-based car," and yet, this is how the failed EV maker Fisker billed its products, which retailed for $40-70k in the few short years before the company collapsed, shut down its servers, and degraded all those "software-based cars":

https://insideevs.com/news/723669/fisker-inc-bankruptcy-chapter-11-official/

Fisker billed itself as a "capital light" manufacturer, meaning that it didn't particularly make anything – rather, it "designed" cars that other companies built, allowing Fisker to focus on "experience," which is where the "software-based car" comes in. Virtually every subsystem in a Fisker car needs (or rather, needed) to periodically connect with its servers, either for regular operations or diagnostics and repair, creating frequent problems with brakes, airbags, shifting, battery management, locking and unlocking the doors:

https://www.businessinsider.com/fisker-owners-worry-about-vehicles-working-bankruptcy-2024-4

Since Fisker's bankruptcy, people with even minor problems with their Fisker EVs have found themselves owning expensive, inert lumps of conflict minerals and auto-loan debt; as one Fisker owner described it, "It's literally a lawn ornament right now":

https://www.businessinsider.com/fisker-owners-describe-chaos-to-keep-cars-running-after-bankruptcy-2024-7